Abstract

Radar-based human behavior recognition has significant value in IoT application scenarios such as smart healthcare and intelligent security. However, the existing unimodal perception architecture is susceptible to multipath effects, which can lead to feature drift, and the issue of limited cross-scenario generalization ability has not been effectively addressed. Although Wi-Fi sensing technology has emerged as a promising research direction due to its widespread device applicability and privacy protection, its drawbacks, such as low signal resolution and weak anti-interference ability, limit behavior recognition accuracy. To address these challenges, this paper proposes a dynamic adaptive behavior recognition method based on the complementary fusion of radar and Wi-Fi signals. By constructing a cross-modal spatiotemporal feature alignment module, the method achieves heterogeneous signal representation space mapping. A dynamic weight allocation strategy guided by attention is adopted to effectively suppress environmental interference and improve feature discriminability. Experimental results show that, on a cross-environment behavior dataset, the proposed method achieves an average recognition accuracy of 94.8%, which is a significant improvement compared to the radar unimodal domain adaptation method.

1. Introduction

Human Activity Recognition (HAR) is a core topic in the field of intelligent perception, involving multiple disciplines such as machine vision, pattern recognition, and artificial intelligence. It has significant application value in scenarios such as healthcare [1], intelligent driving [2], and security monitoring [3].

HAR refers to the analysis and recognition of human actions and postures to determine and identify an individual’s identity and behavioral intent. The current mainstream sensing modalities include optical sensing, radar detection, and Wi-Fi signal analysis. Optical sensor-based methods rely on visual feature extraction, but their accuracy drops by more than 40% under low-light or rainy and foggy weather conditions, and there is a risk of privacy leakage. Radar technology utilizes the reflection properties of electromagnetic waves for non-contact sensing, which is not affected by lighting conditions or weather and can penetrate obstacles [4]. However, signal aliasing caused by multipath effects can lead to distortion of time-frequency domain features. The new sensing technology based on Wi-Fi Channel State Information (CSI) builds a monitoring system using commercial routers, and through analyzing changes in signal propagation paths, it achieves activity recognition. This technology has outstanding advantages in terms of device ubiquity and privacy security [5]. However, due to the wavelength characteristics, its spatial resolution is two to three orders of magnitude lower than that of millimeter-wave radar and is susceptible to multipath interference in non-line-of-sight scenarios. These inherent flaws make single-modality systems face significant challenges in complex environments.

Specifically, radar and Wi-Fi exhibit different performance limitations in behavior recognition. Radar systems can capture fine movements through micro-Doppler features [6], but their Frequency-Modulated Continuous Wave (FMCW) signals suffer from multipath superposition in complex reflective environments. Experiments have shown that in typical office scenarios, signal aliasing caused by metal furniture increases the entropy of the time-frequency spectrogram for fall detection, significantly raising the misjudgment rate [7]. Wi-Fi sensing, by contrast, is capable of non-line-of-sight monitoring due to its signal wavelength characteristics, but the time-varying volatility of CSI can blur the phase changes caused by subtle movements. In the same experimental environment, Wi-Fi’s recognition accuracy for micro-movements such as waving or finger tapping is significantly lower than that of radar systems [8]. Therefore, a single sensing source is insufficient to meet the robustness requirements of complex scenarios.

Recent research has found that radar and Wi-Fi signals exhibit multidimensional physical complementarity in behavior recognition [9]. In terms of spatial coverage, radar, with its line-of-sight propagation characteristics, can accurately capture the movement trajectory of a target, while Wi-Fi, through multipath reflection effects, overcomes physical obstructions, enhancing detection coverage in non-line-of-sight scenarios. On the time-frequency feature level, radar’s micro-Doppler effect can resolve high-speed dynamic features at the 0.1 m/s scale, while Wi-Fi, based on CSI phase fluctuation characteristics, shows significantly enhanced sensitivity to low-frequency movements in the 0.5–2 Hz range. This temporal and spatial feature complementarity provides the physical foundation for constructing an adaptive fusion framework, making it possible to overcome the limitations of single-source systems through cross-modal feature collaboration.

Based on the above characteristics, this study proposes a spatiotemporal collaborative radar-Wi-Fi cross-modal behavior recognition method that breaks through the limitations of single-modal sensing through signal fusion. The main contributions are summarized as follows:

- Development of a multimodal spatiotemporal synchronization acquisition system. A joint sensing platform based on hardware synchronization triggering is designed, integrating millimeter-wave radar and Wi-Fi to construct a heterogeneous signal acquisition system with unified spatiotemporal references.

- Construction of a multiscene cross-modal benchmark dataset. Radar and Wi-Fi CSI data for five common behaviors are collected in three typical indoor scenes: office, laboratory, and lounge, providing a multidimensional dataset for cross-modal algorithm research.

- Proposal of a spatiotemporal collaborative dual-stream fusion network. A dual-branch deep neural network is built using radar micro-Doppler time-frequency spectrograms and Wi-Fi CSI phase gradients. A feature alignment module based on attention gating is designed to achieve adaptive fusion of cross-modal spatiotemporal features. The behavior recognition accuracy reaches 94.8% in complex scenarios.

2. Theoretical Basis

2.1. Wi-Fi Perception and Channel State Information Mechanism

2.1.1. Basic Principles of Wi-Fi Communication

Wi-Fi (Wireless Fidelity) technology is built upon the IEEE 802.11 protocol family and utilizes Orthogonal Frequency Division Multiplexing (OFDM) to achieve high-speed wireless communication [10]. At the physical layer, modern Wi-Fi devices generally support Multiple Input Multiple Output (MIMO) technology, which enhances channel capacity through spatial diversity. The equipment used in this study supports the 802.11n standard and operates in the 2.4 GHz frequency band. It features three transmit antennas and three receive antennas, forming a 3 × 3 MIMO channel with nine transmit-receive antenna links.

Channel State Information (CSI), as a characteristic at the physical layer, represents the propagation characteristics of the signal from the transmitter to the receiver. Compared to the traditional Received Signal Strength Indicator (RSSI), CSI provides more granular channel response characteristics. According to Maxwell’s theory of electromagnetic fields, when electromagnetic waves encounter obstacles—such as a human body—during propagation, phenomena like reflection, diffraction, and scattering occur, resulting in multipath propagation effects. This effect can be described by the following mathematical model:

$$H(f,t) = \sum_{l=1}^{L} a_l(t)\,e^{-j2\pi f \tau_l(t)}$$

Here, $H(f,t)$ represents the time-varying channel frequency response, while $a_l(t)$ and $\tau_l(t)$ represent the attenuation coefficient and propagation delay of the $l$-th path, respectively, and $L$ is the total number of multipaths. Human behavior causes dynamic changes in $a_l(t)$ and $\tau_l(t)$ by altering the geometric characteristics of the propagation paths, which provides the physical foundation for behavior recognition based on CSI.

2.1.2. Physical Connotation and Mathematical Representation of CSI

In an OFDM system, CSI can be decomposed into amplitude and phase components. For the $k$-th subcarrier, its CSI value can be represented as follows:

$$H(f_k) = |H(f_k)|\,e^{j\angle H(f_k)}$$

Here, $|H(f_k)|$ represents the amplitude response, and $\angle H(f_k)$ represents the phase response.

To address the linear phase shift caused by carrier frequency offset (CFO) and the non-linear phase distortion resulting from sampling clock offset (SCO), a phase gradient method is employed for feature extraction. The common phase errors are eliminated by using the phase differences between adjacent subcarriers as follows:

$$\Delta\phi_k = \angle H(f_{k+1}) - \angle H(f_k)$$

where $\angle H(f_k)$ is the measured phase of the $k$-th subcarrier.

This processing method effectively preserves the phase variation characteristics associated with human body movements while suppressing the noise interference caused by hardware differences in the device [11].
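As a minimal sketch of the phase gradient idea, the snippet below computes wrapped phase differences between adjacent subcarriers and checks that a phase offset common to all subcarriers cancels out. The four-subcarrier sample, the 1.3 rad offset, and the function name `adjacent_phase_differences` are illustrative assumptions, not values or code from this study.

```python
import cmath


def adjacent_phase_differences(csi_row):
    """Return the wrapped phase difference between each pair of adjacent subcarriers."""
    diffs = []
    for current, following in zip(csi_row, csi_row[1:]):
        # The angle of following * conj(current) equals phase(following) - phase(current),
        # wrapped into (-pi, pi], so no explicit unwrapping is needed here.
        diffs.append(cmath.phase(following * current.conjugate()))
    return diffs


# Hypothetical 4-subcarrier CSI sample with unit amplitude.
true_phases = [0.10, 0.25, 0.45, 0.50]
clean = [cmath.rect(1.0, p) for p in true_phases]

# The same sample after a common hardware phase offset of 1.3 rad (assumed value).
offset = [h * cmath.exp(1j * 1.3) for h in clean]

clean_diff = adjacent_phase_differences(clean)
offset_diff = adjacent_phase_differences(offset)
```

Both lists agree up to floating-point error, which is why the differenced phase is insensitive to offsets shared by all subcarriers.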

2.1.3. CSI Signal Processing Flow

The raw CSI data must undergo the following standardized preprocessing flow [12]:

- Outlier removal: the Hampel filter is used to detect anomalies, with a window length set to 20 sampling points.

- Wavelet denoising: the sym4 wavelet base is used for 5-level decomposition, with soft-thresholding applied to high-frequency noise.

- Phase unwrapping: a linear transformation is applied to eliminate phase jumping phenomena.

- Time-frequency analysis: time-frequency features are extracted using Continuous Wavelet Transform (CWT), with the Morlet function selected as the mother wavelet.

$$\psi(t) = \pi^{-1/4}\,e^{j\omega_0 t}\,e^{-t^2/2}$$

Here, the center frequency $\omega_0$ is set to 6 to balance the time-frequency resolution.
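The outlier-removal step of the flow above can be sketched as follows. This is a plain Hampel filter under stated assumptions: the 3-sigma decision threshold and the synthetic amplitude trace are illustrative choices, and only the 20-sample window length comes from the text.

```python
from statistics import median


def hampel_filter(samples, window=20, n_sigmas=3.0):
    """Replace outliers with the local median (window is the total window length)."""
    half = window // 2
    scale = 1.4826  # makes the MAD a consistent estimate of sigma for Gaussian data
    cleaned = list(samples)
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        neighbourhood = samples[lo:hi]
        med = median(neighbourhood)
        mad = median(abs(x - med) for x in neighbourhood)
        if abs(samples[i] - med) > n_sigmas * scale * mad:
            cleaned[i] = med
    return cleaned


# Synthetic CSI amplitude trace with one spike (assumed data).
amplitude = [1.0, 1.1, 0.9, 1.0, 9.0, 1.05, 0.95, 1.0, 1.1, 0.9]
filtered = hampel_filter(amplitude, window=20)
```

Only the spike at index 4 is replaced by the local median; the remaining samples pass through unchanged.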

2.1.4. Impact Mechanism of Multipath Effects on Behavior Recognition

According to the Fresnel zone theory, human movements dynamically alter the propagation path length of electromagnetic waves [13], resulting in phase variations as follows:

$$\Delta\phi = \frac{2\pi\,\Delta d}{\lambda}$$

Here, $\Delta d$ is the change in propagation path length and $\lambda$ represents the signal wavelength (12.5 cm for 2.4 GHz). During intense movements, such as falling, $\Delta d$ can reach several tens of centimeters, generating significant phase gradient change patterns. By establishing a mapping relationship between multipath variations and kinematic parameters, fine-grained behavior recognition can be achieved [14].
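A short worked example may help make the scale concrete. It assumes the standard relation between path-length change and phase, with the 12.5 cm wavelength from the text; the 25 cm path change and the function name are illustrative.

```python
import math


def phase_shift_rad(path_change_m, wavelength_m=0.125):
    """Phase change (rad) produced by a change in propagation path length."""
    return 2.0 * math.pi * path_change_m / wavelength_m


# A 25 cm path-length change at 2.4 GHz spans two full wavelengths.
fall_shift = phase_shift_rad(0.25)
cycles = fall_shift / (2.0 * math.pi)
```

A path change of several tens of centimeters therefore wraps the phase more than once, which is consistent with the need for phase unwrapping in the preprocessing flow.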

2.2. Millimeter-Wave Radar Perception and Micro-Doppler Effect

2.2.1. Working Principle of Frequency-Modulated Continuous Wave Radar

Millimeter-wave radar achieves target detection by emitting Frequency-Modulated Continuous Wave (FMCW) signals. Its working principle is based on the electromagnetic wave reflection characteristics and the Doppler effect [15]. The experiment uses the AWR1843 radar module, which operates in the 77 GHz frequency band with a bandwidth of 4 GHz, meeting the precision requirements for detecting human micro-movements. The mathematical model for the transmitted signal can be expressed as

$$s_T(t) = A_T \cos\left[2\pi\left(f_0 t + \frac{B}{2T}t^2\right)\right], \quad 0 \le t \le T$$

Here, $f_0$ represents the starting frequency (76.5 GHz), $B$ is the frequency sweep bandwidth, and $T$ is the sweep period. When the signal encounters a moving target, the echo signal will produce a time delay $\tau$ and Doppler frequency shift $f_d$ as follows:

$$s_R(t) = A_R \cos\left[2\pi\left(f_0 (t-\tau) + \frac{B}{2T}(t-\tau)^2 + f_d t\right)\right]$$

The intermediate frequency (IF) signal is extracted through a mixer as follows (neglecting the small $\tau^2$ term):

$$s_{IF}(t) \approx A_{IF} \cos\left[2\pi\left(\left(\frac{B}{T}\tau - f_d\right)t + f_0\tau\right)\right]$$

Here, $\tau$ is the time delay, which determines the target distance, and the Doppler frequency shift $f_d$ reflects the radial velocity, providing kinematic parameters for behavior recognition.

2.2.2. Micro-Doppler Effect Mechanism

When the target has a micro-motion component (such as human joint movement), the radar echo will exhibit a frequency modulation phenomenon, known as the micro-Doppler feature [16]. According to kinematic principles, the micro-Doppler frequency shift of the $i$-th body segment can be expressed as:

$$f_{mD,i}(t) = -\frac{2}{\lambda}\,\frac{dR_i(t)}{dt}$$

Here, $R_i(t)$ represents the time-varying distance from the body part to the radar and $\lambda$ is the radar wavelength, so that motion toward the radar produces a positive shift. For typical walking actions, the micro-Doppler frequency shift caused by arm swinging can reach ±200 Hz, while a falling motion can produce a sudden frequency shift of 300–500 Hz.
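The relation between radial speed and Doppler shift can be checked numerically with a small sketch. The 77 GHz carrier comes from the text; the 0.4 m/s limb speed and the function name are hypothetical values chosen for illustration.

```python
C = 299_792_458.0  # speed of light in m/s


def micro_doppler_hz(radial_speed_mps, carrier_hz=77e9):
    """Magnitude of the Doppler shift for a scatterer moving radially."""
    wavelength = C / carrier_hz
    return 2.0 * radial_speed_mps / wavelength


arm_swing = micro_doppler_hz(0.4)   # hypothetical 0.4 m/s limb speed
```

At this carrier the wavelength is just under 4 mm, so even slow limb motion maps to shifts of a few hundred hertz.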

Time-frequency analysis is the core method for extracting micro-Doppler features. The Short-Time Fourier Transform (STFT) is used to generate the time-frequency map as follows:

$$\mathrm{STFT}(t,f) = \int_{-\infty}^{\infty} s_{IF}(u)\,w(u-t)\,e^{-j2\pi f u}\,du$$

Here, $s_{IF}(u)$ is the IF signal and $w(\cdot)$ is the Hann window function, with the window length set to 50 ms to balance time-frequency resolution. By applying a three-dimensional convolutional neural network (3D CNN) for feature learning on the time-frequency map, the differences in motion patterns for different behaviors can be effectively captured.
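A minimal NumPy sketch of a Hann-windowed STFT is given below. The 50 ms window follows the text; the 2 kHz sampling rate, the 50% overlap, and the synthetic 200 Hz tone are assumptions made only so the example is self-contained.

```python
import numpy as np


def stft_magnitude(signal, fs, window_s=0.05, overlap=0.5):
    """Magnitude STFT with a Hann window; returns (times, freqs, magnitude)."""
    n_win = int(round(window_s * fs))
    hop = max(1, int(n_win * (1 - overlap)))
    window = np.hanning(n_win)
    starts = list(range(0, len(signal) - n_win + 1, hop))
    frames = np.stack([signal[s:s + n_win] * window for s in starts])
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    freqs = np.fft.fftshift(np.fft.fftfreq(n_win, d=1.0 / fs))
    times = (np.array(starts) + n_win / 2) / fs
    return times, freqs, np.abs(spectrum).T  # shape: (freq bins, time frames)


fs = 2000.0                              # assumed sampling rate in Hz
t = np.arange(0, 1.0, 1.0 / fs)
tone = np.exp(2j * np.pi * 200.0 * t)    # synthetic 200 Hz Doppler tone
times, freqs, mag = stft_magnitude(tone, fs)
peak_hz = freqs[np.argmax(mag[:, 0])]
```

With a 50 ms window the frequency bins are 20 Hz apart, which illustrates the time-frequency trade-off mentioned above: a longer window would sharpen frequency resolution at the cost of temporal detail.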

2.2.3. Radar Signal Processing Flow

The raw ADC data must undergo the following multi-stage processing to transform it into a time-frequency feature map [17]:

- Range dimension FFT: perform a 2048-point FFT for each frequency sweep cycle, achieving range resolution $\Delta R = c/(2B)$ (about 3.75 cm for $B$ = 4 GHz).

- Doppler dimension FFT: perform FFT on 64 consecutive frequency sweep cycles, achieving velocity resolution $\Delta v = \lambda/(2NT)$, where $N = 64$ is the number of sweeps and $T$ is the sweep period.

- Constant False Alarm Rate (CFAR) detection: use the greatest-of cell-averaging (GO-CFAR) algorithm to suppress background noise.

- Phase compensation: correct phase errors based on target tracking results.

- Time-frequency map generation: perform Short-Time Fourier Transform (STFT) on a 2-s data segment to generate a 256 × 256 pixel time-frequency map.
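The resolutions quoted in the first two steps follow from standard FMCW relations, sketched below. The 4 GHz bandwidth, 77 GHz band, and 64 sweeps come from the text; the 500 µs sweep period is an assumed placeholder, since the text does not state it.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz):
    """Range resolution of an FMCW radar: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def velocity_resolution(carrier_hz, n_chirps, chirp_period_s):
    """Velocity resolution: wavelength / (2 * N * T)."""
    wavelength = C / carrier_hz
    return wavelength / (2.0 * n_chirps * chirp_period_s)


delta_r = range_resolution(4e9)                    # 4 GHz sweep from the text
delta_v = velocity_resolution(77e9, 64, 500e-6)    # 500 us sweep period is assumed
```

Under these assumptions the range resolution is about 3.75 cm and the velocity resolution about 0.06 m/s; a different sweep period would scale the latter proportionally.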

3. Related Work

3.1. Radar-Based Cross-Scene Human Activity Recognition

Radar-based HAR has evolved significantly through advances in micro-Doppler analysis and deep learning. Pioneering work by Chen et al. [18] established the theoretical foundation of micro-Doppler signatures, demonstrating their discriminative power for complex activities. C Yu et al. [19] combined millimeter-wave radar with nested CNN architectures for human interaction recognition, emphasizing joint kinematic feature extraction.

Despite these advancements, environmental sensitivity remains a critical challenge [20]: trained models depend on the environment in which they were trained, making it difficult to deploy the system across different settings. To address this issue, several researchers have developed learning strategies based on Unsupervised Domain Adaptation (UDA) frameworks [21,22,23], which use labeled source-domain data together with unlabeled target-domain data to reduce the distribution differences across domains. For example, Wang et al. [24] used a max–min adversarial strategy and center alignment strategy to eliminate feature shifts from the source domain to the target domain. Liang et al. [25] proposed the Source Hypothesis Transfer (SHOT) method, which reduces data discrepancies between different environments by extracting environment-independent features. These methods have made significant progress in specific scenarios; however, relying solely on millimeter-wave radar for cross-scene recognition has inherent limitations due to the impact of device heterogeneity. When there are abrupt changes in scene parameters, single-modal features are prone to domain shifts.

3.2. Wi-Fi Signal-Based Human Activity Recognition

Wi-Fi sensing has gained momentum due to device ubiquity and privacy preservation [26]. Chen et al. [27] conducted a comprehensive comparison between RSSI and CSI modalities, demonstrating that CSI’s subcarrier-level granularity enables higher accuracy in non-line-of-sight (NLoS) scenarios through controlled experiments in cluttered environments. This superiority stems from CSI’s capability to capture multipath variations caused by limb movements [28]. The frontier of Wi-Fi HAR lies in fine-grained motion decoding. Hao et al. [29] pioneered the WiPg system that extracts phase gradient features from CSI streams, achieving millimeter-level sensitivity to finger movements through differential phase processing.

Nevertheless, inherent physical constraints persist. Our experiments confirm that Wi-Fi’s wavelength characteristics (2.4 GHz) limit its sensitivity to sub-meter movements, with phase jumps occurring during abrupt motions like falls. Traditional approaches using SVM/LSTM [30] struggle with fine-grained temporal dynamics, as CSI fluctuations exhibit non-stationary patterns across environments. These limitations motivate the exploration of multimodal solutions.

3.3. Multimodal Fusion for Robust HAR

Emerging research recognizes the complementary nature of heterogeneous sensors. In autonomous driving, Z Wei et al. [31] demonstrated that radar-vision fusion improves detection robustness through cross-modal feature corroboration. For HAR, Huang et al. [32] explored radar-point cloud fusion yet neglected ubiquitous Wi-Fi signals.

The above analysis indicates that the single Wi-Fi modality has physical limitations in terms of spatiotemporal resolution and dynamic response. Therefore, a cross-modal collaboration is necessary to achieve performance breakthroughs. Wi-Fi, with its signal ubiquity, can achieve coarse localization of large-scale targets, while millimeter-wave radar, through micro-Doppler spectrograms, can resolve fine details of movements. The complementary spatiotemporal features of both can form an orthogonal and complete behavior representation space, effectively addressing the recognition failure of single-modal systems in complex scenarios.

4. Multimodal Fusion Method Design

4.1. System Architecture Overview

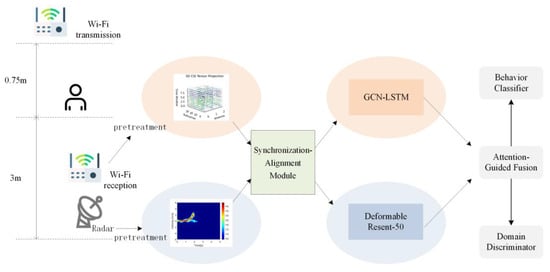

The fusion system architecture of this study is shown in Figure 1. The core objective is to enhance the robustness of human activity recognition in complex scenarios through the complementary nature of heterogeneous data from millimeter-wave radar and Wi-Fi CSI. The system hardware deployment adopts a distributed sensing model, where the FMCW radar and Wi-Fi router are arranged in a non-line-of-sight layout to cover the target activity area, ensuring the capture of multi-angle information. After preprocessing the raw radar echo signals and Wi-Fi CSI data, time synchronization is achieved through a hardware-triggered synchronization mechanism. These data are then fed into a parallel feature extraction network to generate modality-specific semantic features. Finally, through a scene-adaptive multimodal fusion strategy, robust behavior classification results across scenes are output. The process is as follows:

Figure 1.

Radar-Wi-Fi fusion system architecture.

- Front-end heterogeneous signal analysis: the radar signal is processed using STFT to generate time-frequency spectrograms, and Wi-Fi CSI undergoes phase calibration to eliminate carrier frequency offsets.

- Dual-branch feature extraction: the radar branch uses an improved ResNet-50 to extract spatial-frequency domain features, while the Wi-Fi branch uses LSTM to capture temporal dynamic patterns.

- Prior-guided feature fusion: attention-weighted fusion based on Wi-Fi semantic constraints, combined with domain adversarial training, enhances cross-domain generalization.

4.2. Multimodal Data Collection and Synchronization

4.2.1. Radar Time-Frequency Feature Construction

The FMCW radar echo signal is mixed and passed through a low-pass filter to obtain the intermediate frequency signal $s_{IF}(t)$, where the Doppler frequency $f_d$ reflects the radial velocity of the target. A Short-Time Fourier Transform (STFT) is applied to $s_{IF}(t)$ to generate the time-frequency spectrogram as follows:

$$S(t,f) = \left|\int_{-\infty}^{\infty} s_{IF}(u)\,w(u-t)\,e^{-j2\pi f u}\,du\right|^2$$

In the formula, $w(\cdot)$ represents the Hann window function. To suppress multipath interference, the following background clutter removal algorithm based on Principal Component Analysis (PCA) is used:

$$\tilde{S}(t,f) = S(t,f) - \hat{S}_{bg}(t,f), \qquad \hat{S}_{bg} = U_k \Sigma_k V_k^{\top}$$

where $\hat{S}_{bg}$ is the result of reconstructing the principal components after performing truncated Singular Value Decomposition (SVD) on the static background time-frequency matrix (retaining the first $k = 3$ principal components).
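The background subtraction described above can be sketched with NumPy as follows. The rank-3 truncation comes from the text; the 64 × 64 grid, the synthetic rank-2 clutter, and the function name `remove_static_clutter` are assumptions for illustration and do not reproduce the authors' implementation.

```python
import numpy as np


def remove_static_clutter(spectrogram, background, k=3):
    """Subtract the rank-k approximation of a static-background spectrogram."""
    u, s, vt = np.linalg.svd(background, full_matrices=False)
    background_k = (u[:, :k] * s[:k]) @ vt[:k, :]
    return spectrogram - background_k


rng = np.random.default_rng(0)
# Hypothetical rank-2 static clutter on a 64 x 64 time-frequency grid.
clutter = rng.normal(size=(64, 2)) @ rng.normal(size=(2, 64))
target = np.zeros((64, 64))
target[30:34, 10:50] = 5.0        # synthetic moving-target ridge
observed = clutter + target
cleaned = remove_static_clutter(observed, clutter, k=3)
```

Because the synthetic clutter has rank 2, the rank-3 reconstruction captures it completely and only the target ridge remains. Real backgrounds are not exactly low rank, so some residual clutter would be expected in practice.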

4.2.2. Wi-Fi CSI Dynamic Calibration

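The received CSI is modeled as $H(f_k,t)=\sum_{l=1}^{L} a_l(t)\,e^{-j2\pi f_k\tau_l(t)}$, where $a_l(t)$ and $\tau_l(t)$ represent the attenuation and delay of the $l$-th path. To address the CSI phase distortion problem, linear phase compensation is applied to eliminate carrier frequency offset (CFO) and environmental drift as follows:

$$\hat{\phi}_k(t) = \phi_k(t) - \beta k - \phi_0$$

where $\phi_k(t)$ is the measured phase of the $k$-th subcarrier, $\phi_0$ is the phase offset of the reference subcarrier, and the slope $\beta$ is estimated through static scene sampling. The subcarrier selection criterion based on Kullback-Leibler divergence is:

$$D_{KL}\left(P_k^{act}\,\|\,P_k^{sta}\right) = \sum_{x} P_k^{act}(x)\,\log\frac{P_k^{act}(x)}{P_k^{sta}(x)}$$

where $P_k^{act}$ and $P_k^{sta}$ denote the distributions of the $k$-th subcarrier during activity and in the static scene, respectively. The top eight subcarriers with the maximum Kullback-Leibler divergence are retained to form the optimized CSI feature vector $\mathbf{x}_{CSI}$.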
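One possible reading of the selection criterion is sketched below: each subcarrier is scored by the KL divergence between its amplitude histogram during activity and in a static scene, and the highest-scoring subcarriers are kept. The direction of the divergence, the histogram binning with add-one smoothing, and all data values are assumptions rather than details given in the text.

```python
import math


def histogram(values, edges):
    """Normalised histogram with add-one smoothing so no bin is empty."""
    counts = [1.0] * (len(edges) - 1)
    for v in values:
        for b in range(len(edges) - 1):
            last = b == len(edges) - 2
            if edges[b] <= v < edges[b + 1] or (last and v == edges[-1]):
                counts[b] += 1.0
                break
    total = sum(counts)
    return [c / total for c in counts]


def kl_divergence(p, q):
    """Discrete KL divergence D(p || q) for two strictly positive histograms."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def rank_subcarriers(activity, static, edges, top_n):
    """activity/static: dict mapping subcarrier index to amplitude samples."""
    scores = {
        k: kl_divergence(histogram(activity[k], edges), histogram(static[k], edges))
        for k in activity
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


edges = [0.0, 1.0, 2.0, 3.0, 4.0]
static = {0: [1.5] * 20, 1: [1.5] * 20, 2: [1.5] * 20}
activity = {
    0: [1.5] * 20,                   # unaffected by motion
    1: [0.5, 1.5, 2.5, 3.5] * 5,     # strongly affected
    2: [1.5] * 10 + [2.5] * 10,      # moderately affected
}
selected = rank_subcarriers(activity, static, edges, top_n=2)
```

Subcarrier 1, whose amplitude distribution changes most under motion, ranks first, followed by subcarrier 2; the unaffected subcarrier 0 is dropped.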

4.2.3. Multimodal Synchronization

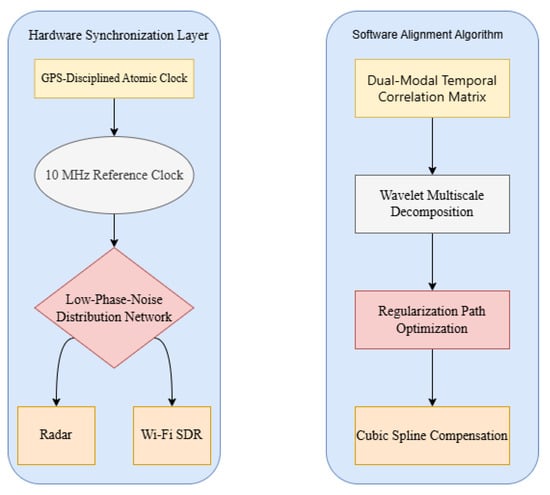

This work constructs a hardware-algorithm collaborative two-level synchronization framework, as shown in Figure 2. The specific implementation is as follows:

Figure 2.

Multimodal synchronized streaming pipeline architecture diagram.

- (1)

- Hardware Synchronization Layer

A GPS-disciplined atomic clock is used to generate a 10 MHz reference clock. A low-phase-noise distribution network drives the phase-locked loops of the millimeter-wave radar and Wi-Fi SDR. Device synchronization is achieved using nanosecond trigger pulses, with time reference alignment error ≤ 1 μs.

- (2)

- Software Alignment Algorithm

To address the residual errors in channel transmission delays, an improved Multi-Scale Dynamic Time Warping (MS-DTW) algorithm is proposed, and the process is shown in Table 1.

Table 1. Multimodal synchronized streaming pipeline.
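For orientation, the sketch below shows classic single-scale dynamic time warping between two short energy envelopes. It is not the improved MS-DTW algorithm proposed here, whose multi-scale details are not reproduced in this text; the function name and the two synthetic sequences are assumptions.

```python
def dtw_path(a, b):
    """Classic DTW between two 1-D sequences; returns (cost, warping path)."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from the end to recover which samples were matched.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = [(cost[i - 1][j - 1], i - 1, j - 1),
                 (cost[i - 1][j], i - 1, j),
                 (cost[i][j - 1], i, j - 1)]
        _, i, j = min(steps)
    path.reverse()
    return cost[n][m], path


radar_energy = [0, 0, 1, 3, 1, 0, 0]
wifi_energy = [0, 1, 3, 1, 0, 0, 0]   # same event, one sample earlier
total_cost, path = dtw_path(radar_energy, wifi_energy)
```

The warping path pairs the radar peak at index 3 with the Wi-Fi peak at index 2, which is the kind of residual offset a software alignment stage is meant to absorb after hardware triggering.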

4.3. Feature Extraction and Adaptive Encoding

4.3.1. Radar Spatiotemporal Feature Extraction

Deformable Convolution (Deformable Conv) and the Channel-Temporal Attention Module (CTAM) are embedded in the ResNet-50 backbone network [33] to enhance the modeling ability for non-rigid human motion. The output feature representation of CTAM is:

$$F' = M_c(F)\otimes F, \qquad F_{out} = M_t(F')\otimes F'$$

where $F$ is the input feature map, $M_c$ and $M_t$, respectively, learn channel and temporal correlations, and $\otimes$ represents element-wise multiplication. The motion feature enhancement loss is introduced as:

$$\mathcal{L}_{motion} = \left\| \Phi(F_{out}) - F_{flow} \right\|_2^2$$

where $\Phi$ is the optical flow feature projection network and $F_{flow}$ is the corresponding motion feature, which constrains the consistency between radar features and motion dynamics.

4.3.2. Wi-Fi Behavior Semantic Encoding

A gated spatiotemporal graph convolutional network (GST-GCN) is designed to model the spatial correlation of CSI signals as follows:

$$H^{(l+1)} = \sigma\left(D^{-1/2} A D^{-1/2} H^{(l)} W^{(l)}\right) \odot G^{(l)}$$

where $A$ is the adaptive adjacency matrix between subcarriers, $D$ is the degree matrix, and $\odot\, G^{(l)}$ represents gated fusion. The subcarrier graph structure is optimized using the following spectral clustering algorithm:

$$A_{ij} = \begin{cases} \exp\left(-\dfrac{\|x_i - x_j\|^2}{2\sigma_g^2}\right), & \mathrm{CMI}(x_i, x_j) > \theta \\ 0, & \text{otherwise} \end{cases}$$

Here, $x_i, x_j$: CSI feature vectors of the $i$-th and $j$-th subcarriers, selected through KL-divergence screening.

$\mathrm{CMI}(x_i, x_j)$: Conditional Mutual Information (CMI) between subcarrier pairs, measuring their statistical dependency in behavior recognition tasks.

$\sigma_g$: bandwidth parameter of the Gaussian kernel, optimized through grid search experiments.

$\theta$: adaptive mutual information threshold, selected based on the mutual information distribution of training subcarrier pairs to retain significantly correlated connections.

4.4. Prior Knowledge Guided Decision Fusion

4.4.1. Environmental Perception Feature Modulation

A scene attribute parsing network is constructed, which outputs a $d$-dimensional scene semantic vector $s$. Radar feature modulation is achieved through Conditional Batch Normalization (CBN) as follows:

$$\hat{F}_r = \gamma \odot \frac{F_r - \mu}{\sigma} + \beta$$

$F_r$: raw feature vector extracted by the radar branch, output from Deformable ResNet-50.

$\mu, \sigma$: mean and standard deviation of the feature batch, calculated through standard batch normalization.

$\gamma, \beta$: modulation parameters obtained via linear transformation of scene semantic vectors generated by the scene attribute parsing network as follows:

$$[\gamma;\ \beta] = W s + b$$

Here, $W$ is the learnable weight matrix, and $b$ denotes the bias term.
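A minimal NumPy sketch of conditional batch normalization is shown below, assuming a single linear layer that maps the scene vector to a concatenated scale and shift. The shapes, the `eps` stabilizer, the random inputs, and the function name are illustrative assumptions, not the trained network.

```python
import numpy as np


def conditional_batch_norm(features, scene, weight, bias, eps=1e-5):
    """features: (batch, C); scene: (batch, d); weight: (d, 2C); bias: (2C,)."""
    mu = features.mean(axis=0, keepdims=True)
    sigma = features.std(axis=0, keepdims=True)
    normalised = (features - mu) / (sigma + eps)   # eps avoids division by zero
    gamma, beta = np.split(scene @ weight + bias, 2, axis=1)
    return gamma * normalised + beta


rng = np.random.default_rng(0)
radar_features = rng.normal(loc=3.0, scale=2.0, size=(8, 4))
scene_vectors = rng.normal(size=(8, 3))

# With zero weights the modulation reduces to a fixed scale and shift.
weight = np.zeros((3, 8))
bias = np.concatenate([np.ones(4), np.zeros(4)])   # gamma = 1, beta = 0
modulated = conditional_batch_norm(radar_features, scene_vectors, weight, bias)
```

In this degenerate setting the output is ordinary batch normalization; non-zero weights let each sample's scene vector rescale and shift the radar channels individually.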

4.4.2. Adversarial Domain-Invariant Fusion

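A Gradient Reversal Layer (GRL) is introduced after the feature fusion layer to jointly optimize the classification loss and the domain adversarial loss as follows:

$$\mathcal{L} = \mathcal{L}_{cls}(p_c, y) + \lambda\,\mathcal{L}_{adv}(p_d, d)$$

where the GRL reverses the sign of the adversarial gradient flowing back to the feature extractor.

$p_c$: the behavior category probability distribution output by the classifier, where $c \in \{1, \dots, 5\}$ corresponds to five behavior categories.

$p_d$: the domain label probability distribution output by the discriminator, where $d \in \{0, 1\}$ denotes the source or target domain.

$\lambda$: the dynamic adversarial weight, which linearly increases from 0 to 1 following a curriculum learning strategy. Feature distribution alignment is constrained by the Wasserstein distance as follows:

$$W(P_s, P_t) = \sup_{\|f\|_{L} \le 1}\ \mathbb{E}_{x \sim P_s}[f(x)] - \mathbb{E}_{x \sim P_t}[f(x)]$$

where $P_s$ and $P_t$ are the source and target feature distributions and the supremum is taken over 1-Lipschitz functions $f$.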
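The linear curriculum for the adversarial weight can be sketched as a plain function of the epoch index. The 100-epoch default follows the training length stated in this paper; the optional warm-up parameter and both function names are assumptions, and the gradient reversal itself is not shown because it requires an autograd framework.

```python
def adversarial_weight(epoch, total_epochs=100, warmup_epochs=0):
    """Linear ramp of the adversarial weight from 0 to 1 after an optional warm-up."""
    if epoch <= warmup_epochs:
        return 0.0
    span = (total_epochs - 1) - warmup_epochs
    return min(1.0, (epoch - warmup_epochs) / span)


def total_loss(cls_loss, adv_loss, epoch, **schedule):
    # Only the scalar weighting is shown; the gradient reversal itself
    # needs an autograd framework and is omitted from this sketch.
    return cls_loss + adversarial_weight(epoch, **schedule) * adv_loss
```

For example, `adversarial_weight(99)` returns 1.0 at the last epoch, while `adversarial_weight(50, warmup_epochs=50)` is still 0.0, which would correspond to a schedule that delays the adversarial term.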

5. Experiments and Results Analysis

5.1. Dataset Construction

To verify the cross-scene robustness of the multimodal fusion framework, this study constructs a human activity dataset covering three typical indoor environments. Five human activity types—walking, sitting, standing up, picking up objects, and falling—are selected from three different scenes: laboratory, lounge, and office. Each activity is performed twenty times by six participants (three males and three females, with heights ranging from 165 to 185 cm) in each scene, totaling 1800 samples.

5.2. Multimodal Data Collection and Processing

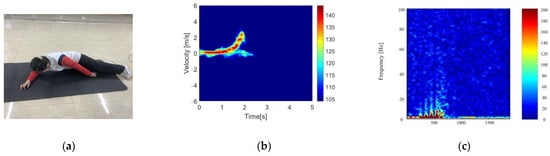

The TI IWR6843 millimeter-wave radar (Texas Instruments, Dallas, USA) was used to collect raw intermediate frequency signals, with a starting frequency of 77 GHz, a frequency modulation slope of 60 GHz/s, and a frame period of 50 ms. Time-frequency spectrograms are generated using Short-Time Fourier Transform (STFT). A dual-node sensing system is constructed using a TP-Link N750 router (TP-Link Technologies Co., Ltd., Shenzhen, China), and CSI data for 114 subcarriers are extracted via the Atheros CSI Tool. The signals are received by a three-antenna array at the receiver to form a sensing link. Linear phase compensation is applied to eliminate carrier frequency offset and clock drift, and the 34 most sensitive subcarriers are selected based on KL divergence, yielding a 3 × 34 × 10 tensor. After data processing, the micro-Doppler feature maps and CSI spectrograms are shown in Figure 3.

Figure 3. Multimodal synchronized streaming pipeline architecture diagram. (a) Fall. (b) Micro-Doppler signatures. (c) CSI spectrum.

5.3. Evaluation Protocol

5.3.1. Definition of Evaluation Metrics

To systematically evaluate cross-scene recognition performance, the following standardized protocol is adopted:

- (1) Metrics: macro-averaged accuracy across scenes is used as the primary metric to mitigate sample imbalance, calculated by averaging per-task accuracies.

- (2) Confusion Matrix: visualizes misclassification patterns between activity classes to evaluate discriminability for similar actions.

- (3) Statistical Significance Test: a paired t-test is applied to verify performance differences between the proposed method and baselines at significance level $\alpha$. Bonferroni correction is used to control the family-wise error rate.
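The primary metric and the Bonferroni adjustment reduce to simple arithmetic, sketched here. The per-task accuracies are placeholder numbers, not results from this study, and the significance level of 0.05 with four comparisons is an assumed example.

```python
def macro_accuracy(per_task_accuracy):
    """Unweighted mean of per-task accuracies (each task counts equally)."""
    return sum(per_task_accuracy.values()) / len(per_task_accuracy)


def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison significance threshold under Bonferroni correction."""
    return alpha / n_comparisons


# Placeholder accuracies for two hypothetical transfer tasks.
example = {"Lab->Lounge": 90.0, "Lounge->Lab": 100.0}
average = macro_accuracy(example)

# Assumed example: alpha = 0.05 with four baseline comparisons.
threshold = bonferroni_threshold(0.05, 4)
```

Averaging per task rather than per sample keeps a task with many test windows from dominating the score.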

5.3.2. Data Splitting & Validation

Cross-scene partition: for each transfer task (e.g., Lab → Lounge), 80% of source scene samples are for training, 20% are for validation, and all target scene samples are for testing.

Subject independence: Leave-One-Subject-Out (LOSO) cross-validation prevents subject overlap between training and testing sets.

Temporal processing: sliding windows (2 s length, 50% overlap) segment continuous activities to avoid data leakage.
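The splitting protocol can be illustrated with a small sketch that enumerates the ordered scene pairs and the window boundaries. The 2 s length and 50% overlap come from the text; the 100 Hz sampling rate and the helper name are assumptions.

```python
from itertools import permutations


def sliding_windows(n_samples, fs, window_s=2.0, overlap=0.5):
    """Return (start, end) sample indices of fixed-length overlapping windows."""
    size = int(window_s * fs)
    hop = int(size * (1.0 - overlap))
    return [(start, start + size) for start in range(0, n_samples - size + 1, hop)]


SCENES = ["Lab", "Lounge", "Office"]
transfer_tasks = list(permutations(SCENES, 2))   # ordered (source, target) pairs

# A 6 s recording sampled at an assumed 100 Hz.
windows = sliding_windows(600, fs=100)
```

Three scenes give six ordered source-to-target tasks, and the 6 s recording yields five windows of 200 samples each.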

5.3.3. Implementation Details

The proposed dual-stream network is implemented using PyTorch 1.12.1. For the radar branch, we initialize Deformable ResNet-50 with pre-trained weights on ImageNet and fine-tune it with a batch size of 32. The Wi-Fi branch uses Xavier initialization for the GCN-LSTM layers with a batch size of 64. All experiments are conducted on an NVIDIA RTX 3090 GPU.

Optimization: AdamW optimizer with initial learning rates of $3 \times 10^{-4}$ (radar) and $1 \times 10^{-3}$ (Wi-Fi), reduced by a factor of 0.1 after 50 epochs.

Regularization: weight decay ($1 \times 10^{-4}$), DropPath (probability = 0.2), and label smoothing (α = 0.1).

Data augmentation: radar spectrograms are augmented with random time warping (±10% length) and frequency masking (2 bands). Wi-Fi CSI sequences undergo random phase jittering (±5°) and subcarrier dropout (10% probability).

Early stopping: training halts if validation accuracy plateaus for 15 epochs.
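The step learning-rate schedule and the early-stopping rule can be sketched in framework-independent Python. The drop after 50 epochs, the factor of 0.1, and the patience of 15 come from the text; the class and function names are hypothetical, and treating a plateau as "no strict improvement" is an assumption.

```python
def learning_rate(epoch, base_lr, drop_epoch=50, factor=0.1):
    """Step schedule: multiply the base rate by `factor` from `drop_epoch` onward."""
    return base_lr * factor if epoch >= drop_epoch else base_lr


class EarlyStopping:
    """Stop when validation accuracy has not improved for `patience` epochs."""

    def __init__(self, patience=15):
        self.patience = patience
        self.best = float("-inf")
        self.stale_epochs = 0

    def step(self, val_accuracy):
        """Record one epoch; return True when training should stop."""
        if val_accuracy > self.best:
            self.best = val_accuracy
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience


# Short demonstration with a reduced patience of 3 epochs.
stopper = EarlyStopping(patience=3)
decisions = [stopper.step(acc) for acc in [0.5, 0.6, 0.6, 0.6, 0.6]]
```

In the demonstration the stop signal fires on the fifth epoch, after three consecutive epochs without improvement over the best accuracy of 0.6.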

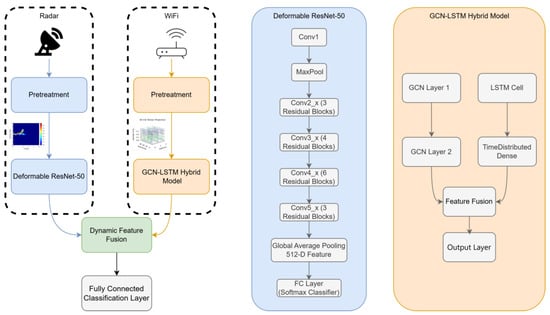

5.4. Model Architecture

The network adopts a dual-branch dynamic fusion architecture as shown in Figure 4. The radar branch uses Deformable ResNet-50 as the backbone, with a 256 × 256 time-frequency spectrogram as input. It employs deformable convolutions to adaptively extract micro-Doppler features, outputting a 512-dimensional feature vector. The Wi-Fi branch constructs a GCN-LSTM hybrid model, mapping the 3 × 34 × 10 dimensional CSI tensor into a spatiotemporal graph. After three layers of graph convolution to aggregate spatial features, the data is fed into a bidirectional LSTM to capture temporal dependencies, ultimately outputting a 512-dimensional semantic vector. The multimodal fusion uses a conditional batch normalization mechanism, dynamically modulating the feature contributions with attention weights $\gamma$ and $\beta$ generated by the Wi-Fi branch as follows:

$$F_{fused} = \gamma \odot \frac{F_r - \mu}{\sigma} + \beta$$

where $F_r$ is the radar feature vector and $\mu$, $\sigma$ are its batch mean and standard deviation.

Figure 4. Dual-branch dynamic fusion architecture. The radar branch employs Deformable ResNet-50, while the Wi-Fi branch utilizes a GCN-LSTM hybrid model, with feature fusion achieved through an attention module.

The training phase adopts a progressive domain adversarial strategy: during the first 50 epochs, the domain discriminator is frozen, and only the feature extractor and classifier are optimized; during the remaining 50 epochs, adversarial loss is introduced with dynamic weights. The AdamW optimizer is used, with an initial learning rate of $3 \times 10^{-4}$, weight decay of $1 \times 10^{-4}$, DropPath of 0.2, and label smoothing of 0.1. The total training consists of 100 epochs.

5.5. Experiment Results Analysis

This experiment uses human activity data measured in three different scenes: laboratory, lounge, and office. In this context, the domains correspond to different scenes. Since there are three scenes, a total of six transfer tasks are considered. Recognition accuracy in the target domain is used as the evaluation metric for the transfer performance of each method. Each task is run three times. The evaluation results for different methods in different adaptation tasks are shown in Table 2. Single-modal baselines, Dynamic Adversarial Adaptation Network (DAAN) [34], Deep Subdomain Adaptation Network (DSAN) [35], and Batch Nuclear-norm Maximization (BNM) [36] are used as comparison methods. The bold numbers in the table represent the optimal values in each task.

Table 2. Recognition accuracy (%) for different methods in different adaptation tasks.

From Table 2, it can be seen that single-modal methods suffer from perception defects and domain sensitivity. Radar relies on the Doppler effect to estimate motion speed, so multipath reflections introduce false velocity components. Wi-Fi suffers from phase instability: sudden movements such as falls are prone to CSI phase jumps, which further exacerbates classification ambiguity.

Experimental results validate the physical limitations of unimodal systems. As shown in Table 2, in the Lab → Lounge task, radar-only accuracy is 72.3%, while Wi-Fi is only 65.1%. This performance gap originates from the following inherent differences in sensing principles:

- Radar physical constraints: Doppler-based velocity perception generates false components in metal-furnished environments (e.g., offices). Multipath interference increases time-frequency spectrogram entropy, raising the fall detection error rate to 21.3%. This results from aliasing between target reflections and multipath components in NLoS conditions.

- Wi-Fi phase vulnerability: sudden movements (e.g., falls) cause CSI phase gradients exceeding π/2, inducing phase jumps.

Domain adaptation methods can alleviate scene differences by aligning distributions in the latent space, but they are limited by the bottleneck of single-modal information. DAAN uses a global domain adversarial strategy but is not sensitive to coupling shifts between multimodal domains, leading to confusion of key action features in some scenes. DSAN enhances fine-grained adaptation through subdomain alignment but does not model the modality complementarity, resulting in accumulated errors. BNM enhances target domain discriminability through nuclear norm maximization, but it does not fully utilize the complementary features across modalities.

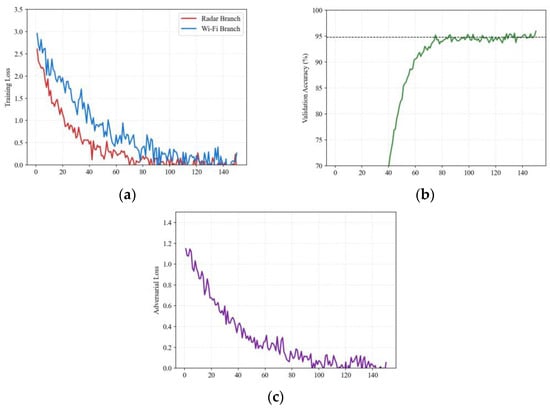

To further validate the training dynamics of our multimodal fusion framework, Figure 5 presents detailed learning curves across different training phases. Figure 5a shows the training loss curves of the two branches. The radar branch (red) converges faster because of the structural regularity of micro-Doppler spectrograms, reaching a stable loss (≈0.8) by epoch 40. The Wi-Fi branch (blue) exhibits higher volatility caused by CSI phase noise, stabilizing at a loss of ≈1.2 after 60 epochs. Figure 5b presents the cross-scene validation accuracy. The model achieves 90% accuracy at epoch 35 and converges to 94.8% (dashed line) by epoch 80, with a standard deviation below 0.9% across the six transfer tasks. The sigmoidal growth pattern is consistent with the effectiveness of our curriculum learning strategy. Figure 5c shows the domain adversarial loss dynamics. The high initial loss (1.4 at epoch 0) indicates significant domain discrepancy. Through adversarial training, the loss decreases exponentially to 0.3 at epoch 100, demonstrating successful cross-domain feature alignment.

Figure 5.

Learning curves across different training phases. (a) Training loss curves of radar and Wi-Fi branches. (b) Cross-scene validation accuracy. (c) Domain adversarial loss evolution.

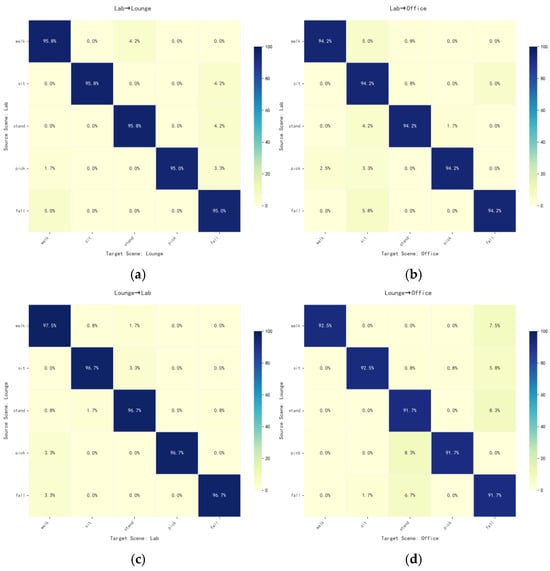

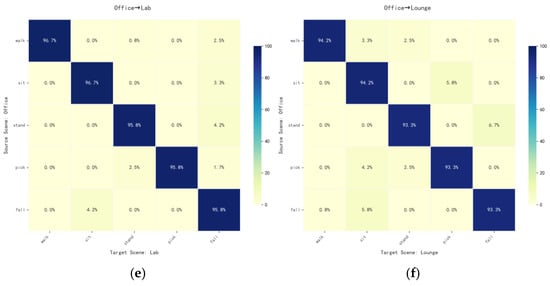

Our method complements radar’s micro-motion sensitivity with Wi-Fi’s wide coverage, so that radar signal attenuation can be compensated by the phase gradient features of Wi-Fi. The dynamic fusion mechanism, which combines cross-modal complementarity with scene adaptability, overcomes the physical limitations of single sensors and yields cross-environment robustness. The transfer results in different scenes are shown in Figure 6. The overall accuracy of our method remains between 92.1% and 96.9%. The confusion matrices show that falls are most often confused with sitting and standing actions, which may be caused by overlapping time-frequency features between the sitting-to-ground phase and the fall-prevention buffering actions in the acceleration signal. In addition, scene transfer did not cause systematic spreading of misclassification: no cross-category confusion was observed for pairs such as walking–sitting and standing–picking. This suggests that the multimodal feature extraction module can effectively decouple core motion patterns.
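The idea of shifting weight between modalities can be sketched with a generic softmax gate. This is an illustrative stand-in under stated assumptions, not the fusion module of this paper: the scalar reliability scores, the function names, and the convex combination of feature vectors are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(radar_feat, wifi_feat, radar_score, wifi_score):
    """Blend two equal-length feature vectors with softmax weights.

    The scores are assumed to come from some attention module (not
    modeled here); a higher score gives that modality more weight.
    """
    if len(radar_feat) != len(wifi_feat):
        raise ValueError("feature vectors must have equal length")
    w_radar, w_wifi = softmax([radar_score, wifi_score])
    fused = [w_radar * r + w_wifi * w for r, w in zip(radar_feat, wifi_feat)]
    return fused, (w_radar, w_wifi)


# Equal scores give equal weights; a low radar score (for example, under
# strong attenuation) shifts the weight toward the Wi-Fi features.
fused_equal, weights_equal = fuse([1.0, 0.0], [0.0, 1.0], 0.0, 0.0)
fused_shift, weights_shift = fuse([1.0, 0.0], [0.0, 1.0], -2.0, 1.0)
print(weights_equal)
print(weights_shift)
```

Because the weights are non-negative and sum to one, the fused vector always stays between the two modality vectors, which keeps the gate easy to inspect.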

Figure 6.

Confusion matrix for different scenes. (a) Lab → Lounge. (b) Lab → Office. (c) Lounge → Lab. (d) Lounge → Office. (e) Office → Lab. (f) Office → Lounge.
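A confusion matrix of this kind can be summarized with a few helper functions, sketched below. The label order and the counts are placeholders chosen for illustration only; they are not the values behind Figure 6. Rows are assumed to be true classes and columns predicted classes.

```python
def per_class_recall(matrix):
    """Recall per class; rows are true labels, columns are predictions."""
    recalls = []
    for i, row in enumerate(matrix):
        total = sum(row)
        recalls.append(row[i] / total if total else 0.0)
    return recalls


def overall_accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


def most_confused_pair(matrix):
    """Return (true_index, predicted_index, count) of the largest
    off-diagonal cell, or (None, None, 0) if there are no errors."""
    best = (None, None, 0)
    for i, row in enumerate(matrix):
        for j, count in enumerate(row):
            if i != j and count > best[2]:
                best = (i, j, count)
    return best


LABELS = ["walk", "sit", "stand", "pick", "fall"]  # hypothetical label order
# Placeholder counts for illustration only; NOT the paper's results.
EXAMPLE = [
    [50, 0, 0, 0, 0],
    [0, 48, 1, 0, 1],
    [0, 1, 49, 0, 0],
    [0, 0, 0, 50, 0],
    [0, 4, 2, 0, 44],
]

true_idx, pred_idx, count = most_confused_pair(EXAMPLE)
print(f"most confused: {LABELS[true_idx]} -> {LABELS[pred_idx]} ({count})")
```

Inspecting the largest off-diagonal cell is one simple way to surface a dominant confusion such as falls being mistaken for sitting.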

The results above show that our method achieves an average accuracy of 94.8% across the six cross-scene transfer tasks, a significant improvement over single-modal methods and advanced domain adaptation methods. Statistical tests further validate the significance of the improvement, indicating that the method extracts and discriminates behavior features with high fidelity in complex environments.

6. Conclusions

This study improves cross-scene human activity recognition by exploiting the physical complementarity between radar and Wi-Fi signals. The experiments show that fusing the spatial information of the Wi-Fi modality with the micro-Doppler motion features of radar makes the two modalities complementary in space, time, and coverage range. A joint interference suppression mechanism for environmental disturbances significantly improves the overall recognition accuracy in complex scenes. The approach overcomes inherent physical limitations of single modalities and provides new insights for the interdisciplinary integration of wireless sensing and computer vision. Future work can develop lightweight synchronization architectures and implement hardware-level time alignment on FPGA to further reduce system latency.

Author Contributions

Conceptualization, Y.S. and L.Q.; methodology, Y.S.; software, Z.C.; validation, Z.C., Y.S. and L.Q.; formal analysis, Z.C.; investigation, Z.C.; resources, L.Q.; data curation, Z.C.; writing—original draft preparation, Z.C.; writing—review and editing, L.Q.; visualization, Z.C.; supervision, Y.S.; project administration, Y.S.; funding acquisition, L.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Fundamental Research Funds for Universities of Liaoning Province under Grant LJ222410143071.

Data Availability Statement

Due to privacy and ethical restrictions, we are unable to provide access to the data used in this study. This decision was made to protect the confidentiality and privacy of the study participants. We understand that access to data is important for scientific progress, but we believe that protecting the privacy of our participants is paramount. We apologize for any inconvenience this may cause and hope that our findings will still be valuable to the scientific community.

Acknowledgments

The authors sincerely appreciate the constructive feedback provided by the reviewers, whose rigorous evaluation and insightful suggestions were instrumental in guiding methodological refinements and enabling experimental breakthroughs. The critical perspectives highlighted by the reviewers significantly strengthened the validity and scope of this work. Additionally, we extend our gratitude to all members of the research team for their active engagement in fruitful discussions, which fostered a collaborative environment and enriched the analytical framework. Special thanks are owed to colleagues who contributed technical expertise and conceptual critiques during weekly meetings. This collective intellectual synergy not only resolved key challenges but also inspired innovative approaches to domain adaptation. The combined support from reviewers and the research group has profoundly enhanced the quality and impact of this study, laying a robust foundation for future investigations.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cardillo, E.; Caddemi, A. A review on biomedical MIMO radars for vital sign detection and human localization. Electronics 2020, 9, 1497.

- Cardillo, E.; Caddemi, A. Radar range-breathing separation for the automatic detection of humans in cluttered environments. IEEE Sens. J. 2020, 21, 14043–14050.

- Jung, J.; Lim, S.; Kim, B.K.; Lee, S. CNN-Based Driver Monitoring Using Millimeter-Wave Radar Sensor. IEEE Sens. Lett. 2021, 5, 3500404.

- Qiao, X.; Feng, Y.; Shan, T.; Tao, R. Person identification with low training sample based on micro-doppler signatures separation. IEEE Sens. J. 2022, 22, 8846–8857.

- Ding, E.; Zhang, Y.; Xin, Y.; Zhang, L.; Huo, Y.; Liu, Y. A robust and device-free daily activities recognition system using Wi-Fi signals. KSII Trans. Internet Inf. Syst. (TIIS) 2020, 14, 2377–2397.

- Björklund, S.; Petersson, H.; Nezirovic, A.; Guldogan, M.B.; Gustafsson, F. Millimeter-wave radar micro-Doppler signatures of human motion. In Proceedings of the 2011 12th International Radar Symposium (IRS), Leipzig, Germany, 7–9 September 2011; pp. 167–174.

- Yao, Y.; Liu, C.; Zhang, H.; Yan, B.; Jian, P.; Wang, P.; Du, L.; Chen, X.; Han, B.; Fang, Z. Fall detection system using millimeter-wave radar based on neural network and information fusion. IEEE Internet Things J. 2022, 9, 21038–21050.

- Moghaddam, M.G.; Shirehjini, A.A.N.; Shirmohammadi, S. A wifi-based method for recognizing fine-grained multiple-subject human activities. IEEE Trans. Instrum. Meas. 2023, 72, 2520313.

- Ma, Y.; Zhou, G.; Wang, S. WiFi sensing with channel state information: A survey. ACM Comput. Surv. (CSUR) 2019, 52, 1–36.

- Wang, Y.; Wu, K.; Ni, L.M. Wifall: Device-free fall detection by wireless networks. IEEE Trans. Mob. Comput. 2016, 16, 581–594.

- Zhang, J.; Tang, Z.; Li, M.; Fang, D.; Nurmi, P.; Wang, Z. CrossSense: Towards cross-site and large-scale WiFi sensing. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018; pp. 305–320.

- Yousefi, S.; Narui, H.; Dayal, S.; Ermon, S.; Valaee, S. A survey on behavior recognition using WiFi channel state information. IEEE Commun. Mag. 2017, 55, 98–104.

- Ratnam, V.V.; Chen, H.; Chang, H.-H.; Sehgal, A.; Zhang, J. Optimal preprocessing of WiFi CSI for sensing applications. IEEE Trans. Wirel. Commun. 2024, 23, 10820–10833.

- Wang, F.; Song, Y.; Zhang, J.; Han, J.; Huang, D. Temporal unet: Sample level human action recognition using wifi. arXiv 2019, arXiv:1904.11953.

- Cardillo, E.; Li, C.; Caddemi, A. Radar-based monitoring of the worker activities by exploiting range-Doppler and micro-Doppler signatures. In Proceedings of the 2021 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), Rome, Italy, 7–9 June 2021; pp. 412–416.

- Li, X.; Qiu, Y.; Deng, Z.; Liu, X.; Huang, X. Lightweight Multi-Attention Enhanced Fusion Network for Omnidirectional Human Activity Recognition with FMCW Radar. IEEE Internet Things J. 2024, 12, 5755–5768.

- Sun, C.; Wang, S.; Lin, Y. Omnidirectional Human Behavior Recognition Method Based on Frequency-Modulated Continuous-Wave Radar. J. Shanghai Jiaotong Univ. (Sci.) 2024, 1–9.

- Chen, V.C.; Tahmoush, D.; Miceli, W.J. Radar Micro-Doppler Signatures; IET: London, UK, 2014.

- Yu, C.; Xu, Z.; Yan, K.; Chien, Y.-R.; Fang, S.-H.; Wu, H.-C. Noninvasive human activity recognition using millimeter-wave radar. IEEE Syst. J. 2022, 16, 3036–3047.

- Jiang, W.; Miao, C.; Ma, F.; Yao, S.; Wang, Y.; Yuan, Y.; Xue, H.; Song, C.; Ma, X.; Koutsonikolas, D. Towards environment independent device free human activity recognition. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018; pp. 289–304.

- Liang, J.; Hu, D.; Feng, J.; He, R. DINE: Domain Adaptation from Single and Multiple Black-box Predictors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 8003–8013.

- Liu, X.; Yoo, C.; Xing, F.; Oh, H.; El Fakhri, G.; Kang, J.-W.; Woo, J. Deep unsupervised domain adaptation: A review of recent advances and perspectives. APSIPA Trans. Signal Inf. Process. 2022, 11, e25.

- Wilson, G.; Cook, D.J. A survey of unsupervised deep domain adaptation. ACM Trans. Intell. Syst. Technol. (TIST) 2020, 11, 1–46.

- Wang, J.; Zhao, Y.; Ma, X.; Gao, Q.; Pan, M.; Wang, H. Cross-Scenario Device-Free Activity Recognition Based on Deep Adversarial Networks. IEEE Trans. Veh. Technol. 2020, 69, 5416–5425.

- Liang, J.; Hu, D.; Feng, J. Do We Really Need to Access the Source Data? Source Hypothesis Transfer for Unsupervised Domain Adaptation. In Proceedings of the 37th International Conference on Machine Learning, Vienna, Austria, 13–18 July 2020; pp. 6028–6039.

- Mosharaf, M.; Kwak, J.B.; Choi, W. WiFi-Based Human Identification with Machine Learning: A Comprehensive Survey. Sensors 2024, 24, 6413.

- Chen, J.; Huang, X.; Jiang, H.; Miao, X. Low-cost and device-free human activity recognition based on hierarchical learning model. Sensors 2021, 21, 2359.

- Ding, X.; Jiang, T.; Zhong, Y.; Huang, Y.; Li, Z. Wi-Fi-based location-independent human activity recognition via meta learning. Sensors 2021, 21, 2654.

- Hao, Z.; Niu, J.; Dang, X.; Qiao, Z. WiPg: Contactless action recognition using ambient wi-fi signals. Sensors 2022, 22, 402.

- Wang, F.; Li, Z.; Han, J. Continuous user authentication by contactless wireless sensing. IEEE Internet Things J. 2019, 6, 8323–8331.

- Wei, Z.; Zhang, F.; Chang, S.; Liu, Y.; Wu, H.; Feng, Z. Mmwave radar and vision fusion for object detection in autonomous driving: A review. Sensors 2022, 22, 2542.

- Huang, X.; Tsoi, J.K.; Patel, N. mmWave radar sensors fusion for indoor object detection and tracking. Electronics 2022, 11, 2209.

- Theckedath, D.; Sedamkar, R. Detecting affect states using VGG16, ResNet50 and SE-ResNet50 networks. SN Comput. Sci. 2020, 1, 79.

- Yu, C.; Wang, J.; Chen, Y.; Huang, M. Transfer Learning with Dynamic Adversarial Adaptation Network. In Proceedings of the 2019 IEEE International Conference on Data Mining (ICDM), Beijing, China, 8–11 November 2019; pp. 778–786.

- Zhu, Y.; Zhuang, F.; Wang, J.; Ke, G.; He, Q. Deep Subdomain Adaptation Network for Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 1713–1722.

- Cui, S.; Wang, S.; Zhuo, J.; Li, L.; Tian, Q. Towards Discriminability and Diversity: Batch Nuclear-norm Maximization under Label Insufficient Situations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3941–3950.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).