Enhancing Machine Learning Techniques in VSLAM for Robust Autonomous Unmanned Aerial Vehicle Navigation

Abstract

1. Introduction

2. Related Work

- SOFT visual odometry achieves an error of roughly 0.8% of the distance traveled, so localization can be accomplished without the more computationally demanding bundle adjustment.

- Since the threads in charge of mapping and odometry are independent of one another, the mapping thread does not block the visual odometry thread, which results in a more consistent processing time for incoming frames. Furthermore, the algorithm is deterministic, meaning it consistently yields the same output for the same input, in contrast to ORB-SLAM2.

- Like ORB-SLAM2, SOFT keypoints are employed for loop closure, which results in a highly dependable, straightforward, and efficient system that achieves subpixel precision. Although SOFT keypoints are not rotation invariant, the authors demonstrated on publicly available datasets that loop closure happens frequently enough in practice, so this disadvantage has minimal bearing on the outcome.

- After obtaining the image, estimate the local displacement relative to the active keyframe.

- Update the current keyframe or create a new one. If the keyframe is updated, the stereo depth data are probabilistically fused into it. If a new keyframe is created, the old depth map is propagated to the new one.

- Update the global map using pose graph optimization. An optimization library performs the optimization, and a distance metric is used to look for edges in the graph.

3. VSLAM Techniques for UAV Mapping: Difficulties and Experimental Setup

- Front-end algorithms process sensor data to estimate the camera’s pose and extract 3D map points from the environment.

- Back-end algorithms refine the camera trajectory and map through optimization techniques, ensuring consistency across the entire system.

3.1. Experimental Setup and Environment

- CPU: Intel® Core™ i7-11800H @ 2.30GHz (8 cores, 16 threads); Intel Corporation, Santa Clara, CA, USA.

- GPU: NVIDIA® GeForce RTX™ 3060 Laptop GPU with 6 GB GDDR6 VRAM; NVIDIA Corporation, Santa Clara, CA, USA.

- RAM: 32 GB DDR4 3200 MHz; Kingston Technology, Fountain Valley, CA, USA.

- Storage: 1 TB NVMe SSD; Samsung Electronics Co., Ltd., Suwon, Republic of Korea.

- System Architecture: 64-bit.

- Operating System: Windows 11 Pro, Version 23H2; Microsoft Corporation, Redmond, WA, USA.

- Software Environment:

- MATLAB: Version R2022a;

- Programming Language: MATLAB, with custom scripts for adaptive parameter tuning, feature extraction, and trajectory evaluation.

3.2. Database Description

3.3. Map Initialization

3.3.1. Detect and Extract Features

- Keypoint: This denotes the 2D position of a feature within the image. Some keypoints also store auxiliary information, such as scale and orientation, to support invariance to viewpoint or image resolution changes.

- Descriptor: A descriptor is a numerical vector characterizing the visual content around a keypoint. It is designed to ensure that similar-looking regions produce descriptors close in vector space, enabling robust feature matching across images.

- scaleFactor: This parameter determines the factor by which the image is resized at each scale level when constructing the image pyramid for feature detection. A smaller scale factor will lead to more levels in the pyramid, resulting in finer-scale features being detected, but it will also increase computation time. Conversely, a larger scale factor will reduce the number of pyramid levels and may miss smaller-scale features but will reduce computation time.

- numLevels: This parameter specifies the number of levels in the image pyramid used for feature detection. Each level represents a scaled version of the original image. More levels allow for the detection of features at different scales but also increase computational complexity. Typically, a higher number of levels lets features be detected over a wider range of scales.

- numPoints (or nFeatures): This parameter determines the maximum number of keypoints (feature points) to be detected in the image. The ORB feature detector aims to detect a fixed number of keypoints, and numPoints controls this number.
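To make the interaction between scaleFactor and numLevels concrete, the following minimal Python sketch lists the image size at each pyramid level. It assumes the common convention that level k is the original image downscaled by scaleFactor^k; the function name `pyramid_level_sizes` is hypothetical, and the defaults (1.2 and 8) are the fixed ORB-SLAM settings listed in the comparison table of this article.

```python
def pyramid_level_sizes(width, height, scale_factor=1.2, num_levels=8):
    """Return the (width, height) of every pyramid level.

    Assumption: level k is the original image downscaled by scale_factor ** k.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    sizes = []
    for level in range(num_levels):
        scale = scale_factor ** level
        sizes.append((round(width / scale), round(height / scale)))
    return sizes


# A 640 x 480 input with the fixed ORB-SLAM settings.
for level, size in enumerate(pyramid_level_sizes(640, 480)):
    print(level, size)
```

A scale factor closer to 1 shrinks the image more slowly, so more levels are needed to reach the same smallest image size, which is the source of the extra computation described above.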

- Select a pixel p in the image and denote its brightness as I_p.

- Set a threshold T (for example, 20% of I_p).

- Take the pixel p as the center and select the 16 pixels on a circle with a radius of 3.

- If there are N consecutive points on the selected circle whose brightness is greater than I_p + T or less than I_p − T, then the central pixel p can be considered a feature point. The value of N is usually 12, 11, or 9.

- Iterate through the above four steps on each pixel.
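The segment test described in the steps above can be sketched in plain Python as follows. This is an illustrative sketch rather than the authors' implementation: the image is assumed to be a list of rows of grayscale values, the names `is_fast_corner` and `detect_fast` are hypothetical, and the threshold is taken as 20% of the center brightness as in the example value above.

```python
# Offsets (dx, dy) of the 16 pixels on a circle of radius 3 around the candidate.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(image, x, y, ratio=0.2, n=12):
    """Segment test: True if n consecutive circle pixels are all brighter
    than I_p + T or all darker than I_p - T, with T = ratio * I_p."""
    i_p = image[y][x]
    t = ratio * i_p
    labels = []
    for dx, dy in CIRCLE:
        value = image[y + dy][x + dx]
        if value > i_p + t:
            labels.append(1)       # brighter than the center
        elif value < i_p - t:
            labels.append(-1)      # darker than the center
        else:
            labels.append(0)       # similar to the center
    for sign in (1, -1):
        run = 0
        # Walk the circle twice so that runs wrapping around the start are found.
        for label in labels + labels:
            run = run + 1 if label == sign else 0
            if run >= n:
                return True
    return False


def detect_fast(image, ratio=0.2, n=12):
    """Apply the segment test to every pixel at least 3 pixels from the border."""
    height, width = len(image), len(image[0])
    return [(x, y)
            for y in range(3, height - 3)
            for x in range(3, width - 3)
            if is_fast_corner(image, x, y, ratio, n)]
```

Production detectors usually add a quick pre-test on a few circle pixels and non-maximum suppression; both are omitted here to keep the sketch close to the listed steps.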

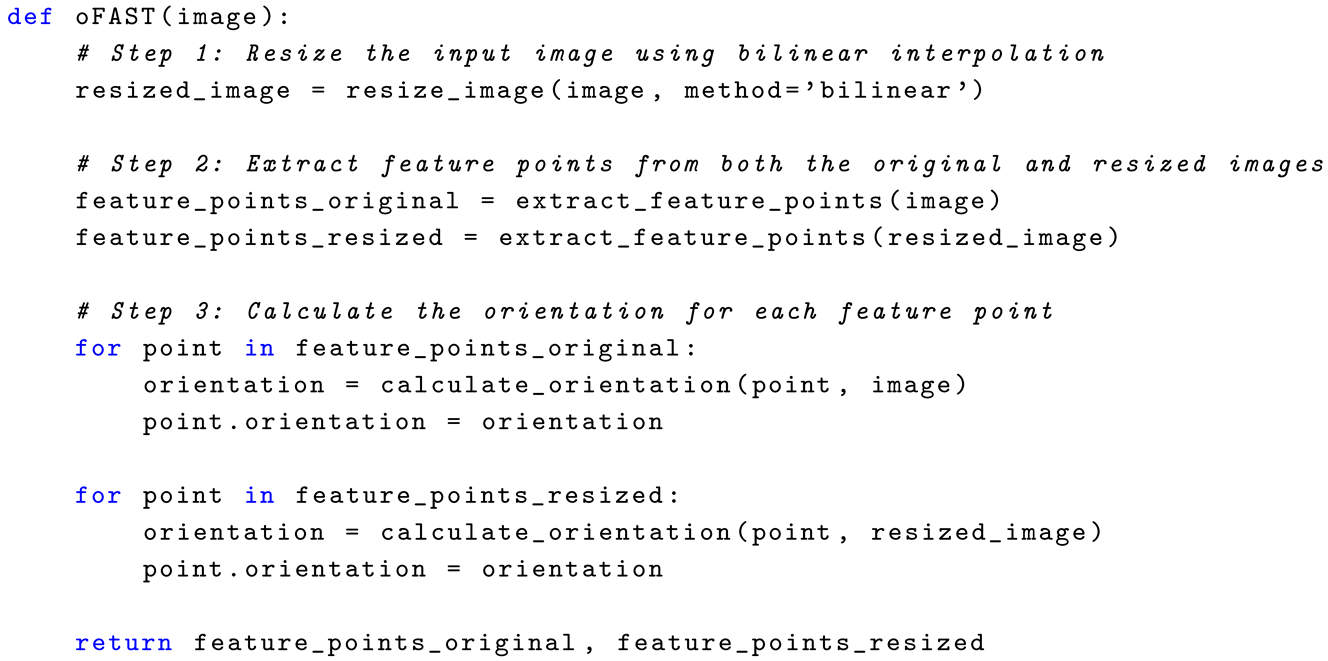

- Begin by resizing the input image using bilinear interpolation.

- Next, extract feature points from both the original and the resized images.

- Finally, calculate the orientation for each feature point.
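The orientation step can be illustrated with the intensity centroid method used by ORB. The sketch below is an assumption-laden illustration, not the article's own equation: it computes the first-order moments m10 and m01 over a circular patch and returns atan2(m01, m10). The function name `intensity_centroid_angle` and the default patch radius are hypothetical.

```python
import math


def intensity_centroid_angle(image, cx, cy, radius=3):
    """Orientation (radians) of the patch centered at (cx, cy).

    Moments are taken relative to the patch center:
    m10 = sum(dx * I), m01 = sum(dy * I), angle = atan2(m01, m10).
    """
    m10 = 0.0
    m01 = 0.0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dx * dx + dy * dy > radius * radius:
                continue  # keep the patch circular
            value = image[cy + dy][cx + dx]
            m10 += dx * value
            m01 += dy * value
    return math.atan2(m01, m10)
```

The angle points from the patch center toward its brighter side, so a patch that is brighter on the right yields an angle near 0, and one that is brighter toward increasing row index yields an angle near π/2.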

- The first step considers the circular patch defined in oFAST. Within this patch, M pairs of points are selected according to a Gaussian distribution.

- Next, to achieve rotation invariance, these pairs of points are rotated by the angle calculated using Equation (3). After rotation, each of the M pairs consists of two points together with the intensity values at those points.

- Finally, an operator is defined as follows:
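As an illustration of the steps above, the sketch below samples M point pairs from a Gaussian distribution, rotates them by the keypoint angle, and applies the standard BRIEF binary test (bit = 1 if the first point is darker than the second). The operator used in the article is not reproduced in this text, so the test direction, the standard deviation (half the patch radius), and the names `sample_pairs` and `steered_brief` are assumptions.

```python
import math
import random


def sample_pairs(m, patch_radius, seed=0):
    """Draw m point pairs from a Gaussian, keeping only points inside the patch."""
    rng = random.Random(seed)
    sigma = patch_radius / 2.0  # assumption: spread tied to the patch size

    def sample_point():
        while True:
            x, y = rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)
            if x * x + y * y <= patch_radius * patch_radius:
                return (x, y)

    return [(sample_point(), sample_point()) for _ in range(m)]


def steered_brief(image, cx, cy, angle, pairs):
    """Binary descriptor: rotate each pair by `angle`, then compare intensities."""
    c, s = math.cos(angle), math.sin(angle)

    def intensity(point):
        px, py = point
        rx = c * px - s * py  # rotated x offset
        ry = s * px + c * py  # rotated y offset
        return image[cy + round(ry)][cx + round(rx)]

    return [1 if intensity(a) < intensity(b) else 0 for a, b in pairs]
```

Because the sampling pattern is rotated with the keypoint orientation, the same patch seen under a rotated view produces the same bit string, up to interpolation effects.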

- Input Image: The process begins with a grayscale input image of size 640 × 480 pixels.

- Extend Image (Make Border): Borders are added to the image to prevent losing features near the edges during detection.

- Gaussian Blur: A Gaussian blur is applied to reduce noise and enhance feature stability.

- Feature Detector (FAST): The FAST algorithm is applied to detect corner-like feature points.

- Feature Points Check: If too few feature points are found, the algorithm adjusts the parameters, for example by lowering the detection threshold, and retries the detection process.

- Compute Orientation: Once feature points are detected, their orientation is computed using the intensity centroid method, allowing for rotation invariance.

- Compute Descriptor: Using the BRIEF descriptor (rotated to align with the orientation), a binary descriptor is computed for each feature point.

- Output Data: The computed key points and descriptors from Level 1 are prepared for output unless further processing in Level 2 is required.

- Level 2: Scaled Image Processing:

- Resize Image: If needed, the original image is resized (typically downscaled) to allow detection at a different scale.

- Extend Image (Make Border): The resized image is padded with borders, just like in Level 1.

- Gaussian Blur: The resized image is blurred to reduce noise.

- Feature Detector (FAST): The FAST detector is applied again to identify feature points in the resized image.

- Feature Points Check: If the detection is insufficient, adaptive parameters are adjusted, and detection is retried.

- Compute Orientation: Orientation of detected points is computed as in Level 1.

- Compute Descriptor: BRIEF descriptors are computed for the oriented key points in the resized image.

- Scale Recovery: The key points detected in the resized image are scaled back to match the original image dimensions.

- Extract Feature Points Using Adaptive Threshold: The feature points from both levels are filtered using adaptive thresholds to ensure quality and consistency.

- Output Data: Finally, all the valid key points and descriptors from both levels are merged and output for further tasks such as matching or object recognition.
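The control flow of the two-level pipeline above (detect, retry with a lower threshold when too few points are found, rescale the Level 2 points, merge) can be sketched as follows. The detector is passed in as a callback so the sketch stays independent of any particular library; the function names, the threshold step of 5, and the lower bound of 5 are hypothetical values chosen for illustration. Border extension, blurring, orientation, and descriptor computation are omitted.

```python
def detect_with_retry(detect, image, threshold, min_points,
                      min_threshold=5, step=5):
    """Call detect(image, threshold); lower the threshold until enough
    points are found or the lower bound is reached."""
    points = detect(image, threshold)
    while len(points) < min_points and threshold - step >= min_threshold:
        threshold -= step
        points = detect(image, threshold)
    return points, threshold


def recover_scale(points, scale):
    """Map keypoints found in the resized image back to original coordinates."""
    return [(x * scale, y * scale) for x, y in points]


def two_level_keypoints(detect, level1_image, level2_image, scale,
                        threshold=20, min_points=100):
    """Merge keypoints from the original image and its downscaled copy.

    `scale` is the factor by which level2_image was downscaled.
    """
    points1, _ = detect_with_retry(detect, level1_image, threshold, min_points)
    points2, _ = detect_with_retry(detect, level2_image, threshold, min_points)
    return points1 + recover_scale(points2, scale)
```

Any detector with the signature `detect(image, threshold) -> list of (x, y)` can be plugged in, for example a FAST segment test.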

Listing 1. Pseudocode 1: oFAST Algorithm.

Listing 2. Pseudocode 2: Orientation Calculation Function.

Listing 3. Pseudocode 3: Steered BRIEF Function.

Listing 4. Pseudocode 4: Robust ORB Feature Matching and Parameter Adaptation.

- Adaptation Mechanism for Parameter Tuning:

- The algorithm dynamically adjusts the parameters related to ORB feature detection (scaleFactor, numLevels, and numPoints) when the number of feature matches is insufficient.

- Specifically, when the number of matched features between two frames is less than the predefined threshold (minMatches), the parameters are modified as follows:

- scaleFactor: This value is reduced by 0.1. Reducing scaleFactor increases the sensitivity of the feature detector, enabling it to capture smaller features and finer details.

- numLevels: This value is incremented by 0.2. Increasing the number of pyramid levels helps capture features at different scales, which can improve detection in challenging or varied visual scenes.

- numPoints: The number of keypoints is increased by 100. This ensures that more features are detected in each frame, increasing the likelihood of finding sufficient matches.

- These parameters are adjusted iteratively, and the process stops if the number of matches reaches the threshold (minMatches) or if the parameters reach certain limits (e.g., scaleFactor cannot go below 1.0, numLevels cannot exceed 20).

- Criteria for Adjustment:

- The adjustment process is triggered by a lack of sufficient matches between the features of the current frame and the reference frame. If the algorithm detects that the match count falls below the threshold (minMatches), it tries to refine the feature detection by modifying the detection parameters.

- The criteria for adjusting these parameters are purely based on the feature matching success rate. If the matching fails to meet the threshold, the algorithm adapts the parameters to increase the chance of successful matching.

- Algorithm for Adaptation: The adjustment algorithm follows these basic steps:

- Initial Feature Matching: Extract features from the current and reference frames.

- Match Counting: Count the number of matches found.

- Check if Matches are Sufficient: If the number of matches is below minMatches, proceed to adjust the parameters.

- Parameter Adjustment: Reduce scaleFactor, increase numLevels, and increase numPoints.

- Re-evaluate Matching: Re-run feature detection and matching with adjusted parameters.

- Stop Condition: The process stops when the matches meet the threshold or the parameters reach predefined limits.
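The adaptation loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: feature extraction and matching are hidden behind a hypothetical callback `count_matches(scale_factor, num_levels, num_points)`, the step sizes (0.1, 0.2, 100) and limits (1.0, 20) are the ones quoted in the text, and the starting values are the fixed ORB-SLAM settings from the comparison table. A real detector needs an integer level count, so the callback would have to round `num_levels` before using it.

```python
def adapt_orb_parameters(count_matches, min_matches,
                         scale_factor=1.2, num_levels=8, num_points=600,
                         min_scale_factor=1.0, max_num_levels=20):
    """Re-run matching with adjusted parameters until enough matches are
    found or a parameter limit is reached."""
    while True:
        matches = count_matches(scale_factor, num_levels, num_points)
        if matches >= min_matches:
            converged = True
            break
        # Candidate values for the next attempt (rounded to avoid float drift).
        next_scale = round(scale_factor - 0.1, 10)
        next_levels = round(num_levels + 0.2, 10)
        if next_scale < min_scale_factor or next_levels > max_num_levels:
            converged = False  # limits reached without enough matches
            break
        scale_factor = next_scale
        num_levels = next_levels
        num_points += 100
    return {
        "scale_factor": scale_factor,
        "num_levels": num_levels,
        "num_points": num_points,
        "matches": matches,
        "converged": converged,
    }
```

Returning a `converged` flag lets the caller distinguish a frame that reached minMatches from one that merely exhausted the parameter range, which matters when deciding whether tracking can continue.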

3.3.2. Feature Matching

3.3.3. Initializing Place Recognition Database

3.4. Tracking

3.5. Loop Closure

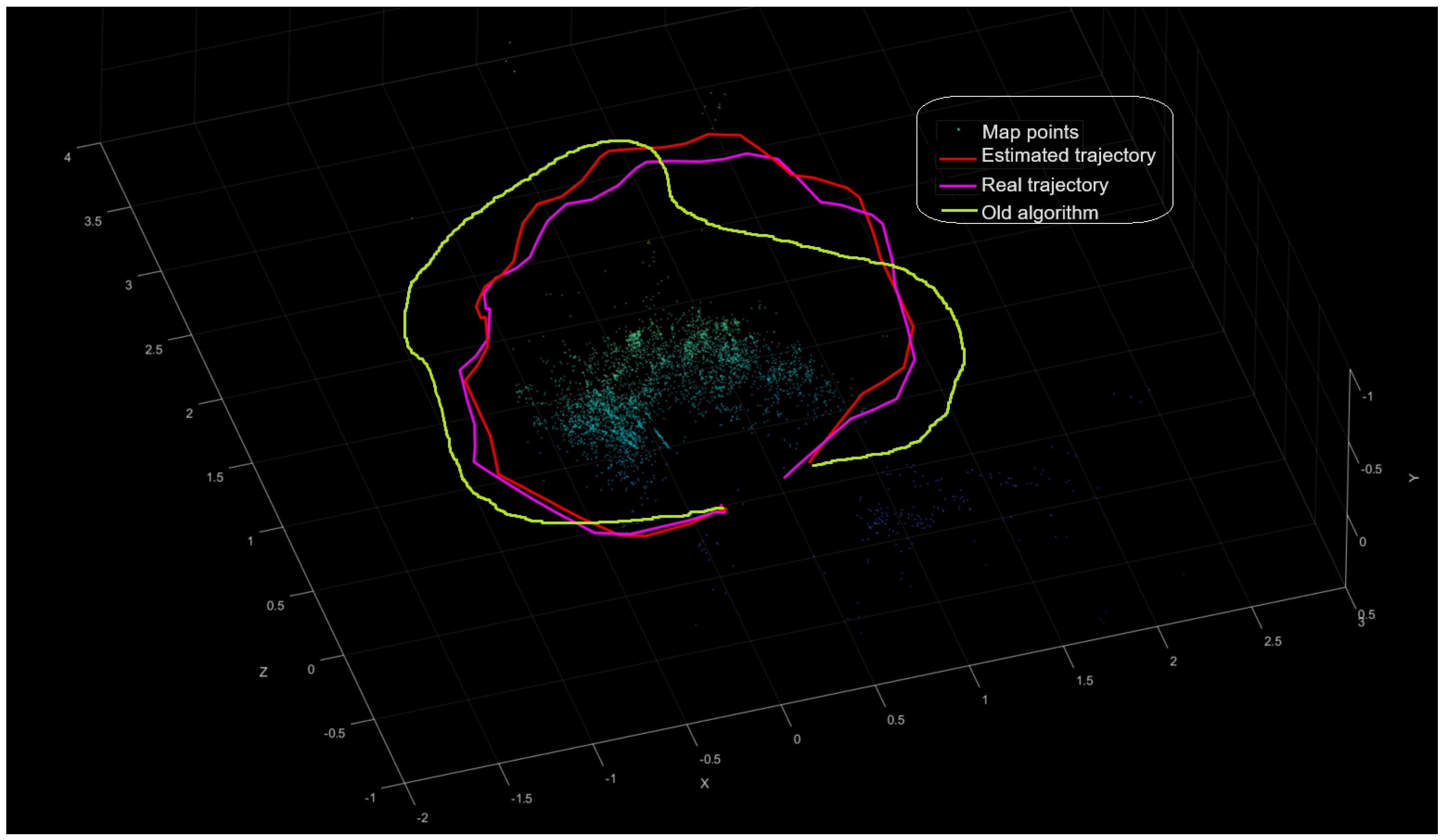

3.6. Compare with the Actual Camera Trajectory

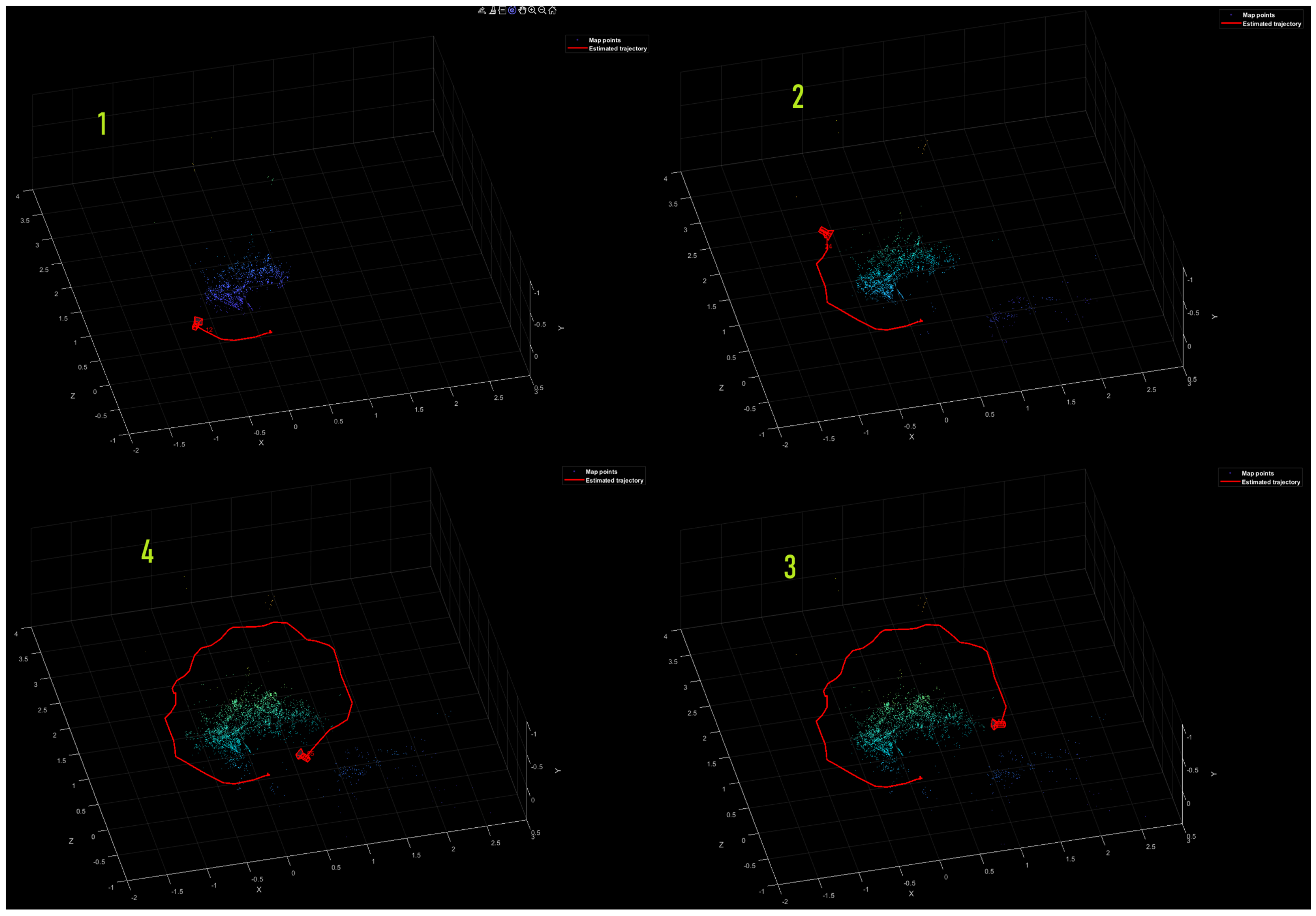

4. Results and Analysis

Result Comparison with an Existing Adaptive Method

5. Conclusions

Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VSLAM | Visual Simultaneous Localization and Mapping |
| UAV | Unmanned Aerial Vehicle |
| ORB | Oriented FAST and Rotated BRIEF |
| GNSS | Global Navigation Satellite System |
| AVs | Autonomous Vehicles |
| LIDAR | Light Detection and Ranging |
| IMU | Inertial Measurement Unit |
| SfM | Structure from Motion |
| PTAM | Parallel Tracking and Mapping |
| VINS | Visual–Inertial System |
| SOFT | Stereo Odometry Algorithm Relying on Feature Tracking |
| CNN | Convolutional Neural Network |
| DTAM | Dense Tracking and Mapping |
| TSDF | Truncated Signed Distance Function |
| ICP | Iterative Closest Point |
| GPU | Graphics Processing Unit |
| SVO | Semi-Direct Visual Odometry |
| LSD | Large-Scale Direct |
| RANSAC | Random Sample Consensus |
| FPGA | Field-Programmable Gate Array |
| oFAST | Oriented Features from Accelerated Segment Test |
| BRIEF | Binary Robust Independent Elementary Features |
| DSO | Direct Sparse Odometry |

| Condition | RMSE to the Estimated Trajectory for Adapted ORB-SLAM | RMSE for the ORB-SLAM Fixed Parameters | Passed or Failed |
|---|---|---|---|
| Performance Time | 03 min 15.56 s | 02 min 19.59 s | |
| Daylight | RMSE: 0.20123 | RMSE: 2.0697 | Adaptive: 🗸 ORB-SLAM: 🗸 |
| Extra Room Light | RMSE: 0.20123 | RMSE: 2.0697 | Adaptive: 🗸 ORB-SLAM: 🗸 |
| Full Shading | RMSE: 0.20123 | RMSE: 3.280 | Adaptive: 🗸 ORB-SLAM: X |
| Half Shading | RMSE: 0.20123 | RMSE: 2.5112 | Adaptive: 🗸 ORB-SLAM: 🗸 |

| Algorithm | Scale Factor | Num Levels | Num Points | Threshold | RMSE | Performance Time (min, s) |
|---|---|---|---|---|---|---|
| ORB-SLAM | 1.2 | 8 | 600 | 40 | 2.0697 | 02 min 19.59 s |
| Proposed Method | adaptive | adaptive | adaptive | adaptive | 0.20123 | 03 min 15 s |
| ORB-SLAM2 | 1.5 | 8 | 1000 | 60 | 0.4523 | 02 min 15 s |
| VINS-Mono | 1.7 | 7 | 900 | 60 | 0.4745 | 02 min 55 s |
| FORB-SLAM | 1.3 | 7 | 856 | 60 | 0.4512 | 02 min 15 s |
| ORB-SLAM3 | 1.5 | 9 | 650 | 60 | 0.4756 | 02 min 12 s |
| DSO | / | / | / | / | 0.3218 | 02 min 18 s |
| Test1 (ORB-SLAM) | 1.4 | 9 | 800 | 50 | 2.012 | 03 min 10 s |
| Test2 (ORB-SLAM) | 1.5 | 10 | 1000 | 55 | 1.9987 | 04 min 15 s |
| Test3 (ORB-SLAM) | 1.6 | 11 | 1200 | 60 | 1.898 | 03 min 50 s |
| Test4 (ORB-SLAM) | 1.3 | 12 | 1400 | 65 | 1.65 | 05 min 01 s |
| Test5 (ORB-SLAM) | 1.1 | 7 | 1600 | 70 | 1.82 | 04 min 08 s |
| Test6 (ORB-SLAM) | 1 | 6 | 2000 | 75 | 1.1154 | 02 min 40 s |
| Test7 (ORB-SLAM) | 0.9 | 13 | 2200 | 85 | 0.9987 | 02 min 56 s |
| Test8 (ORB-SLAM) | 0.8 | 15 | 2400 | 100 | 0.5548 | 02 min 50 s |

| Aspect | Traditional ORB-SLAM | Proposed Adaptive ORB-SLAM |
|---|---|---|
| Parameter Selection | Fixed values set manually before execution. | Adaptive parameters adjusted dynamically during runtime. |
| Robustness in Dynamic Environments | Struggles with changing lighting conditions, motion noise, and low-texture scenes. | Adapts automatically to different conditions, improving tracking stability. |
| Feature Extraction | Uses a fixed number of key points per frame. | Adjusts the number of key points dynamically based on scene complexity. |
| Computational Efficiency | Faster processing due to fixed parameters but less adaptive. | Slightly higher computational cost but significantly more accurate. |
| Tracking Performance | May lose tracking when key points are insufficient. | Re-extracts features dynamically, maintaining tracking accuracy. |
| Loop Closure and Mapping | Susceptible to drift due to static parameter settings. | More robust map generation with adaptive keyframe selection. |
| Real-time Applicability | Requires manual tuning for different environments. | Self-adjusting mechanism makes it suitable for real-time applications. |
| RMSE (Accuracy of Estimated Trajectory) | Higher RMSE due to parameter limitations. | Lower RMSE due to adaptive tuning. |

| Feature | DynaSLAM | Proposed Method (Adaptive ORB-SLAM) |
|---|---|---|
| Approach to Dynamic Environments | Removes moving objects using motion segmentation | Dynamically adjusts feature extraction and tracking parameters for better performance |
| Performance in Low-Light Conditions | Poor, as motion segmentation is sensitive to lighting and suffers from high noise | More robust, as adaptive parameter tuning maintains performance in low-light scenarios |
| Effect on Generated Maps | Removes moving objects, which can lead to the loss of essential scene details | Does not remove elements but enhances the ability to track and adapt to them |
| Computational Cost | High due to motion detection and inpainting (background reconstruction) | Lower, as it focuses on improving parameters instead of removing objects |
| Real-Time Performance | Relatively slow, due to complex image processing operations | Slower but more efficient in real time, making it suitable for UAVs |
| Best Suited For | Environments with large moving objects, such as pedestrians and vehicles | Low-light or low-texture environments, where feature extraction is more challenging |
| Reliability in Poor Lighting Conditions | Low, since poor lighting affects motion detection | High, as the method relies on feature analysis rather than motion segmentation |
| RMSE (Accuracy of Estimated Trajectory) | Higher RMSE due to parameter limitations | Lower RMSE due to adaptive tuning |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rostum, H.; Vásárhelyi, J. Enhancing Machine Learning Techniques in VSLAM for Robust Autonomous Unmanned Aerial Vehicle Navigation. Electronics 2025, 14, 1440. https://doi.org/10.3390/electronics14071440
