Abstract

The proliferation of Internet of Things (IoT) devices has introduced significant challenges in cybersecurity, particularly in the realm of intrusion detection. While effective, traditional centralized machine learning approaches often compromise data privacy and scalability due to the need for data aggregation. In this study, we propose a federated learning framework for near-real-time intrusion detection in IoT environments. Federated learning enables decentralized model training across multiple devices without exchanging raw data, thereby preserving privacy and reducing communication overhead. Our approach builds upon a previously proposed hybrid model, which combines a machine learning model deployed on IoT devices with a second-level cloud-based analysis. This previous work required all data to be passed to the cloud in aggregate form, limiting security. We extend this model to incorporate federated learning, allowing for distributed training while maintaining high accuracy and privacy. We evaluate the performance of our federated-learning-based model against a traditional centralized model, focusing on accuracy retention, training efficiency, and privacy preservation. Our experiments utilize actual attack data partitioned across multiple nodes. The results demonstrate that this hybrid federated learning approach not only offers significant advantages in terms of data privacy and scalability but also retains the competitive accuracy of the previous model. This paper also explores the integration of federated learning with cloud-based infrastructure, leveraging platforms such as Databricks and Google Cloud Storage. We discuss the challenges and benefits of implementing federated learning in a distributed environment, including the use of Apache Spark and MLlib for scalable model training. The results show that all the algorithms used maintain an excellent identification accuracy (98% for logistic regression, 97% for SVM, and 100% for Random Forest). We also report a very short training time (less than 11 s on a single machine). The very low application time reported previously is also confirmed (0.16 s for 1,697,851 packets). Our findings highlight the potential of federated learning as a viable solution for enhancing cybersecurity in IoT ecosystems, paving the way for further research in privacy-preserving machine learning techniques.

1. Introduction

The Internet of Things (IoT) is increasingly being integrated into many aspects of daily life, with intelligent services and applications powered by Artificial Intelligence (AI). Traditionally, AI techniques require processing that may not be feasible in realistic IoT scenarios due to the scale of modern IoT networks. Additionally, there are growing concerns about data privacy. In IoT infrastructures, attack and anomaly detection are well-known challenges. As IoT infrastructure becomes more prevalent, threats and attacks on these systems are growing proportionally. Recently, a hybrid approach for detecting cyber attacks on IoT devices has been proposed, which involves applying a machine learning model implemented directly on the IoT device, along with a second-level analysis (training) performed in the cloud. While this approach is effective, it still requires centralized data collection, where data must be aggregated in a single location before model training, and AI functions and data are placed in a cloud server for data learning and modeling [1].

In the broader context of the IoT, federated learning (FL) has emerged as a promising solution for building intelligent and privacy-enhanced IoT systems. FL is a distributed collaborative AI approach that enables intelligent IoT applications by allowing AI training at distributed IoT devices without the need for data sharing. FL is a decentralized approach to model training that preserves data privacy. It enables machine learning models to be trained across multiple decentralized devices or servers holding local data samples without exchanging them. This method contrasts with traditional centralized machine learning techniques, where data must be aggregated in a single location before model training [2,3,4].

The core principle of FL is to keep data localized, addressing growing concerns over privacy and data security. This is particularly relevant in an era where data protection regulations like the General Data Protection Regulation (GDPR) in Europe [5] and similar laws worldwide impose strict guidelines on data handling. By training models directly on the devices where data are generated—whether smartphones, IoT devices, or hospital systems—FL reduces the need for data to travel over networks, minimizing the risk of data breaches and ensuring compliance with privacy laws.

However, this decentralized approach presents unique challenges. The heterogeneity of data across devices can skew model training, the fragmentation may cause communication overhead, and malicious actors may influence the model, either by providing bad updates or by attempting to extract sensitive information from the model updates themselves. Techniques such as differential privacy [6] are integrated into FL frameworks to enhance privacy further by adding noise to the model updates.

A schematic comparison between centralized learning and FL is reported in Table 1.

Table 1.

Comparison between centralized learning and federated learning.

The heterogeneity of IoT devices introduces a trade-off between scalability, accuracy, and privacy in the context of FL. IoT devices vary widely in computational capabilities, ranging from low-power sensors with limited CPUs (e.g., microcontrollers) to more powerful gateways (e.g., Raspberry Pi). This variability impacts their ability to perform local model training. Less powerful devices may take longer to complete a training epoch or may be unable to handle complex models, leading to delays in synchronization with the central server. Some devices might need to simplify their local models, causing discrepancies between local and global models and impacting the convergence time of the global model and the local accuracy compared to the aggregated model.

Here, we extend our previous hybrid model for detecting cyber attacks on IoT devices to incorporate FL, allowing for distributed training while maintaining high accuracy and privacy. The key to our approach is that learning is carried out in a federated and distributed way, not on the IoT devices themselves but on homogeneous resources in the cloud. IoT devices themselves will eventually only apply the obtained models.

To evaluate the new hybrid model in the federated learning framework, we selected the logistic regression (LR), Support Vector Machines (SVM), and Random Forest (RF) methods, which have already been used in previous work [1]. These models were chosen for their computational efficiency, their proven efficacy in intrusion detection, and the compatibility of the trained models with the resource-constrained, heterogeneous nature of IoT devices. LR offers a lightweight, interpretable baseline ideal for low-power devices and rapid aggregation in FL [7]. SVM provides robust classification capabilities for complex intrusion patterns, adaptable to federated training with moderate resource demands [8]. RF, an ensemble method, enhances robustness against non-IID data distributions, a key challenge in our heterogeneous IoT environment [9]. Together, these models enable a comprehensive analysis of FL’s performance under varying conditions, aligning with our goal of maintaining near-real-time detection while addressing privacy and scalability.

We evaluated the performance of our FL-based model against a traditional centralized model, focusing on accuracy retention, training efficiency, and privacy preservation. We conducted FL experiments using the cybersecurity Kitsune Network Attack dataset [10], focusing on the challenges and benefits of decentralized model training. The original dataset was divided into multiple partitions, simulating a distributed data scenario. The dataset was then prepared for training, and machine learning algorithms were used to implement an FL approach.

Related Works

Network intrusion detection has garnered significant research interest in recent years [11,12,13], with machine learning methods increasingly being integrated into frameworks for detecting intrusions in IoT-based environments [14,15]. Hybrid approaches, combining centralized and decentralized techniques, have also emerged to address specific challenges in these settings [16].

The concept of federated learning was formally introduced by Google researchers in 2016 [17], establishing a paradigm for training machine learning models across distributed datasets without centralizing sensitive data. This seminal work sparked a dynamic research field, with applications spanning mobile device personalization, such as Google’s Gboard for privacy-preserving keyboard predictions [3], to secure medical data analysis [18].

Several studies align with aspects of our hybrid FL approach. For example, Hamdi [19] proposed an FL-based intrusion detection system for Supervisory Control and Data Acquisition (SCADA) environments, demonstrating that blending centralized and federated learning mitigates performance degradation in FL. Cao et al. [20] developed a dual-key black-box aggregation method for FL, employing Paillier threshold homomorphic encryption (TPHE) to secure model parameters during transmission and aggregation through a two-stage decryption process. Gu and Wang [21] explored the effects of diverse training paradigms, including hybrid strategies, on FL performance, highlighting their potential to enhance robustness. Similarly, Albogami [22] introduced a federated hybrid deep belief network for time-series data from IoT edge devices, showcasing a complex model tailored to decentralized settings.

While these works share similarities with our research—such as hybrid architectures or security enhancements—they differ in scope and implementation. Furthermore, our approach uniquely leverages Databricks [23] for scalable model training, Google Cloud [24] for efficient aggregation and deployment, and Fivetran [25] for seamless data integration, creating a cohesive framework optimized for near-real-time intrusion detection. This combination not only addresses data heterogeneity and system scalability but also ensures practical deployment in distributed environments, distinguishing our contribution from prior efforts.

2. Materials and Methods

To implement our FL approach, we required a robust infrastructure capable of handling distributed data storage, providing computational resources and enabling collaborative development. For this reason, we leveraged Databricks as our primary computing environment and Google Cloud Storage (GCS) for data management. This setup provided a scalable, efficient, and privacy-preserving way to implement our experiments.

2.1. Databricks

Databricks is a cloud-based data analytics and machine learning platform built on top of Apache Spark [26]. It provides an optimized environment for big data processing, supporting distributed computing for large-scale data analytics and machine learning tasks. With its collaborative workspace, Databricks enables multiple researchers and engineers to work simultaneously on data science projects, making it an ideal platform for our FL implementation.

The key advantages of using Databricks for our study include scalability, high-performance computing, seamless integration with cloud storage, and a collaborative environment. Databricks provides an autoscaling cluster management system, allowing dynamic allocation of processing power based on workload demand. The platform offers powerful CPUs and GPUs, essential for efficiently running complex machine learning models. Additionally, Databricks can easily connect with cloud services like Google Cloud Storage, facilitating efficient data access and management.

2.2. Google Cloud Storage

Given FL’s decentralized nature, a key challenge was determining where to store the datasets used for training. For this purpose, we used Google Cloud Storage (GCS), a highly scalable and secure object storage solution. GCS allowed us to store and manage large volumes of data efficiently while ensuring accessibility for the Databricks platform.

Google Cloud Storage provides several advantages, such as scalability, security, seamless integration, and high availability. It supports large datasets, making it suitable for machine learning applications with high data demands. GCS offers encryption and access control mechanisms, ensuring that sensitive data remain protected during storage and transfer. Moreover, GCS integrates smoothly with Databricks, allowing direct access to stored data for analysis and model training.

2.3. Fivetran

To facilitate data ingestion from Google Cloud Storage into Databricks, we utilized Fivetran, a data integration service that automates the process of moving data across platforms. Fivetran enabled a seamless and efficient data pipeline, ensuring that the datasets stored in GCS could be easily accessed and processed within Databricks without manual intervention.

The benefits of using Fivetran included automated data movement, real-time data updates, and minimal maintenance. Fivetran simplifies the ingesting and syncing of data between platforms, ensuring that data remain up-to-date across different systems. The service automates schema changes and optimizes data synchronization, reducing the need for manual adjustments.

2.4. Flower Federated Learning Framework

Various frameworks are available for implementing FL, each with distinct features and use cases. After evaluating some prominent frameworks, which are described in Appendix A, we selected FLWR (Flower) for our project due to its simplicity, scalability, and compatibility with various machine learning libraries [27], including TensorFlow 2.17, PyTorch 2.6.0, and Scikit-learn, making it highly adaptable. Flower simplifies FL implementation by providing built-in server–client communication protocols and customizable strategies. Its modular design allowed us to efficiently integrate FL into our cloud-based infrastructure while maintaining flexibility in model development.

2.5. Data, Preprocessing, and Integration with Hugging Face

From the Kitsune Network Attack data, containing nine different network attacks on a commercial IP-based surveillance system and an IoT network, we selected the OS Scan dataset, which includes 1,697,851 packets. We split it into a training set (80%) and a validation set (20%). We uploaded the dataset to Hugging Face [28], a platform that facilitates easy sharing and loading of datasets. Our dataset originally consisted of two CSV tables, and FLWR can also work with CSV files, but using Hugging Face is recommended as it provides better integration and flexibility.
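The split and the partitioning of the data across nodes can be sketched as follows. This is a minimal illustration rather than the code used in the study: the helper names `train_validation_split` and `iid_partitions`, the fixed seed, the shuffled equal-sized (IID) partitioning, and the choice of 25 partitions are all assumptions made for the example.

```python
import random


def train_validation_split(num_rows, train_fraction=0.8, seed=42):
    """Shuffle row indices and split them into training and validation sets."""
    indices = list(range(num_rows))
    random.Random(seed).shuffle(indices)
    cut = int(num_rows * train_fraction)
    return indices[:cut], indices[cut:]


def iid_partitions(indices, num_partitions):
    """Deal indices round-robin so partition sizes differ by at most one row."""
    return [indices[i::num_partitions] for i in range(num_partitions)]


# 1,697,851 packets as in the OS Scan dataset; 25 partitions is an assumed value.
train_idx, val_idx = train_validation_split(1_697_851)
partitions = iid_partitions(train_idx, num_partitions=25)
print(len(train_idx), len(val_idx), len(partitions))
```

Because the indices are shuffled before being dealt out, every partition sees roughly the same class mix. A non-IID scenario would need a different partitioning rule.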

2.6. Federated Learning Implementation Workflow

The integration of Databricks, Google Cloud Storage, and Fivetran enabled an efficient FL workflow, which proceeded as follows:

- Data Storage: The original dataset was uploaded to Google Cloud Storage.

- Data Ingestion: Fivetran was used to automate data transfer from Google Cloud Storage to Databricks, ensuring a streamlined data pipeline.

- Cluster Configuration: A Databricks cluster was provisioned, and appropriate computing resources (CPUs and GPUs) were selected based on workload requirements.

- FL Execution: Using Apache Spark and MLlib, the Apache Spark machine learning library, we implemented an FL training process, where each node processed its local dataset without sharing raw data.

- Model Aggregation: After local training, updates were aggregated centrally to refine the global model while preserving data privacy.

- Evaluation: The final model was assessed to compare its performance against a traditionally centralized training approach.

This setup allowed us to explore the feasibility and benefits of FL in a cloud environment, ensuring privacy preservation and scalability. By leveraging Databricks for computation, Google Cloud Storage for distributed data handling, and Fivetran for automated data ingestion, we successfully implemented a decentralized machine learning workflow, laying the foundation for further experimentation and refinement.

A schematic representation of the federated learning workflow components is reported in Figure 1.

Figure 1.

Schematic representation of the federated learning workflow components.

2.7. Evaluation Parameters

For each method we evaluated the following parameters:

Accuracy, i.e., the ratio of correctly classified instances among the total number, as shown in Equation (1):

Accuracy = (TP + TN)/(P + N) = (TP + TN)/(TP + TN + FP + FN)

where TP (true positive) is the number of malicious packets correctly identified, TN (true negative) is the number of normal instances correctly identified, FP (false positive) is the number of normal instances incorrectly identified as malicious, and FN (false negative) is the number of undetected malicious packets.

Precision, i.e., the rate of elements that are classified as positive and are actually positive. It is obtained by dividing correctly classified anomalies (TP) by the total number of positive instances (FP + TP), as shown in Equation (2).

Precision = TP/(TP + FP)

Recall (sensitivity, or true positive rate), i.e., the correctly classified attacks (TP) divided by the total number of attacks (TP + FN); see Equation (3).

Recall = TP/(TP + FN)

F1-score, i.e., the harmonic mean of precision and recall, as shown in Equation (4).

F1-Score = 2 ∗ (Recall ∗ Precision)/(Recall + Precision)

False positive rate, i.e., the proportion of normal instances that are classified as positive, as shown in Equation (5).

FP rate = FP/(TN + FP)

False negative rate, i.e., the number of false negatives divided by the number of all samples that are actually positive, as shown in Equation (6).

FN rate = FN/(TP + FN)
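The six metrics above follow directly from the four confusion-matrix counts. The sketch below shows one way to compute them. The function name `detection_metrics` and the counts passed to it are hypothetical and serve only to exercise the formulas.

```python
def detection_metrics(tp, tn, fp, fn):
    """Compute Equations (1)-(6) from confusion-matrix counts.

    Assumes every denominator is non-zero; no zero-division guard is included.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * recall * precision / (recall + precision),
        "fp_rate": fp / (tn + fp),
        "fn_rate": fn / (tp + fn),
    }


# Made-up counts, not results from the study.
metrics = detection_metrics(tp=90, tn=880, fp=20, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Note that the false negative rate equals one minus the recall, so the two can be cross-checked against each other.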

3. Results

3.1. Logistic Regression

We began by realizing a federated implementation of a logistic regression model [29]. We started with the example code provided by FLWR and made the following modifications: dataset loading, feature and class configuration, and model parameters. We adjusted the number of features and classes to match our dataset, specifically 115 features and two classes. We configured the logistic regression model to use the L2 penalty and set the number of local epochs to five.

Once the basic logistic regression model was working, we extended the implementation to include the following additional features: logging, model saving, and a custom strategy. We added logging to track the training process, including metrics such as accuracy and loss. After each run, we saved the model’s weights and configuration in JSON format. To facilitate logging and accuracy tracking, we created a custom strategy based on Federated Averaging (FedAvg) [7], modifying the strategy to extract and save results after each round.
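The aggregation rule at the heart of Federated Averaging is a weighted mean of the client parameters, with each client weighted by its number of training samples. The sketch below illustrates that rule in plain Python. It does not use the Flower API and is not the custom strategy from the repository; the function name `fedavg` and the three clients are hypothetical.

```python
def fedavg(client_params, client_sizes):
    """Average parameter vectors, weighting each client by its sample count."""
    total = sum(client_sizes)
    num_params = len(client_params[0])
    return [
        sum(params[j] * n for params, n in zip(client_params, client_sizes)) / total
        for j in range(num_params)
    ]


# Three hypothetical clients, each holding [w1, w2, bias] of a logistic regression model.
client_params = [[0.2, -1.0, 0.5], [0.4, -0.8, 0.3], [0.0, -1.2, 0.7]]
client_sizes = [100, 300, 100]
global_params = fedavg(client_params, client_sizes)
print(global_params)
```

The second client holds three times as much data as the others, so the global parameters are pulled towards its values.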

Our final experimental setup included the following parameters: number of classes (2), number of features (115), number of supernodes (25), local epochs (5), penalty (L2), and server rounds (3). This configuration allowed us to evaluate the performance of FL in a realistic scenario, balancing computational efficiency with model accuracy. This setup is summarized in Table 2.

Table 2.

Logistic regression parameter setup.

By implementing these changes, we successfully trained a logistic regression model using FLWR. The addition of logging and model saving provided valuable insights into the training process, enabling us to fine-tune the model and improve its performance. The custom strategy ensured that we could track accuracy and other metrics, making it easier to analyze the results and compare different configurations.

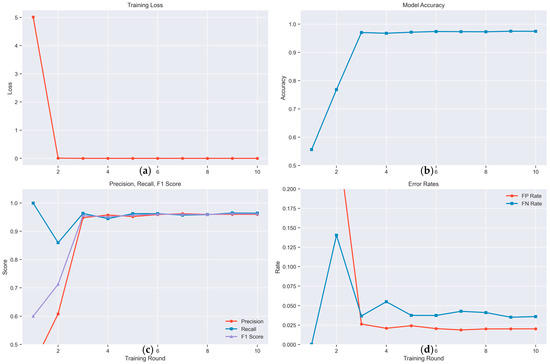

The final accuracy was 0.9783, as reported in Table 4. Figure 2 summarizes the FL logistic regression evaluation parameters over 50 training rounds.

Figure 2.

This dashboard reports the FL logistic regression results over 50 training rounds: (a) training loss; (b) model accuracy; (c) precision, recall, F1 score; (d) error rates. In each panel, the abscissa reports the training round.

3.2. Support Vector Machines Using Stochastic Gradient Descent

Then, we realized a federated implementation of a Support Vector Machine (SVM) [30], also implementing the Stochastic Gradient Descent (SGD) solver [31]. This approach provided a different way of fitting the SVM model, with memory efficiency, flexibility, and regularization advantages. SGD is, in fact, particularly well suited for large datasets and high-dimensional feature spaces, as it processes data in small batches. We selected an appropriate loss function (hinge loss for SVM). SGD supports elastic-net regularization, which combines L1 (Lasso) and L2 penalties to prevent overfitting and improve model generalization.

We configured the SGD classifier with the hinge loss function, tuned the regularization strength and strategy, set the maximum number of iterations to a high value to avoid stopping before convergence, and let the learning rate η be computed dynamically with the ‘optimal’ schedule, according to Equation (7):

η = 1/(α * (t + t0))

where α is the regularization parameter, which balances the trade-off between fitting the training data and keeping model weights small, t is the current time step or iteration number, and t0 is an initial offset or scaling factor, which adjusts the starting learning rate and its decay rate. This setup is summarized in Table 3.
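To make the decay in Equation (7) concrete, the sketch below evaluates the learning rate at a few iteration counts. The function name `optimal_learning_rate` and the values of α and t0 are illustrative assumptions, not the settings used in the experiments.

```python
def optimal_learning_rate(alpha, t, t0):
    """Equation (7): eta = 1 / (alpha * (t + t0))."""
    return 1.0 / (alpha * (t + t0))


# alpha and t0 are arbitrary illustrative values, not those used in the study.
alpha, t0 = 1e-4, 1000.0
schedule = [optimal_learning_rate(alpha, t, t0) for t in (0, 1000, 9000)]
print(schedule)
```

The rate falls hyperbolically with t: a larger t0 lowers the starting rate and flattens the early decay, while a larger α scales the whole schedule down.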

Table 3.

Support Vector Machines using Stochastic Gradient Descent parameter setup.

The SGD-based implementation evidenced several key benefits: faster training performance, competitive accuracy, and scalability. The training process was significantly faster compared to traditional solvers, especially for large datasets. While the evaluation metrics were slightly lower than those achieved with the linear model, the results were still competitive, highlighting the importance of careful hyperparameter tuning. The memory-efficient nature of SGD allowed us to handle larger feature spaces without running into computational limitations.

The final accuracy for the SVM model was 0.9746, as reported in Table 4. Figure 3 summarizes the FL SVM + SGD evaluation parameters over 10 training rounds.

Table 4.

FL results (on the validation set) for federated logistic regression, Support Vector Machines using Stochastic Gradient Descent, and Random Forest.

Figure 3.

This dashboard reports the FL Support Vector Machines using Stochastic Gradient Descent results on 10 training rounds: (a) training loss; (b) model accuracy; (c) precision, recall, F1 score; (d) error rates. In each panel the abscissa reports the training round.

3.3. Random Forest

RF is an ensemble learning method that constructs multiple decision trees during training and outputs the mode of the classes (classification) or the mean prediction (regression) of the individual trees. In an FL setting, the key challenge is to train these trees across distributed nodes without sharing raw data. Instead, only model updates (e.g., tree structures, split points, or leaf values) are shared with a central server or among nodes [32].

The main steps for implementing FL with RF were as follows:

- Local Training: Each node trains a subset of decision trees on its local data.

- Model Aggregation: The central server aggregates the trees from all nodes to create a global RF model.

- Model Distribution: The global model is sent back to the nodes for further training or inference.

In FL, each node trains a subset of decision trees independently. The number of trees trained per node depends on the total number of trees in the RF and the number of participating nodes. For example, if the global RF consists of 100 trees and there are 10 nodes, each node trains 10 trees.
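The allocation arithmetic, together with one simple way of combining the resulting trees, can be sketched as follows. Pooling all trees and taking a majority vote is an assumption made for illustration (the aggregation techniques actually considered are those in Appendix B), and the helper names `trees_per_node` and `majority_vote` are hypothetical.

```python
from collections import Counter


def trees_per_node(total_trees, num_nodes):
    """Split the forest evenly; the first nodes absorb any remainder."""
    base, extra = divmod(total_trees, num_nodes)
    return [base + (1 if i < extra else 0) for i in range(num_nodes)]


def majority_vote(tree_predictions):
    """Return the most common class label predicted by the pooled trees."""
    return Counter(tree_predictions).most_common(1)[0][0]


allocation = trees_per_node(total_trees=100, num_nodes=10)  # ten trees on each node
label = majority_vote([1, 0, 1, 1, 0])  # three of five trees vote for class 1
print(allocation, label)
```

When the number of trees is not a multiple of the number of nodes, this sketch gives one extra tree to the first nodes. Other policies, such as weighting by local dataset size, are equally possible.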

The training process occurs in three phases:

- Bootstrap Sampling: Each node performs bootstrap sampling on its local data to create subsets for training individual trees, ensuring diversity among the trees.

- Feature Selection: At each split in a decision tree, a random subset of features is considered. This randomness helps reduce overfitting and improves generalization.

- Tree Construction: Based on the local data, each node constructs its trees using standard decision tree algorithms (e.g., CART).
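The first two phases can be sketched with the standard library alone. The helper names are hypothetical, and the square-root rule for the number of features considered at a split is a common default assumed here, not a setting taken from the study. Tree construction itself is omitted.

```python
import math
import random


def bootstrap_sample(num_rows, rng):
    """Draw num_rows row indices with replacement from the local data."""
    return [rng.randrange(num_rows) for _ in range(num_rows)]


def random_feature_subset(num_features, rng):
    """Pick about sqrt(num_features) distinct features to consider at a split."""
    k = max(1, int(math.sqrt(num_features)))
    return sorted(rng.sample(range(num_features), k))


rng = random.Random(0)
rows = bootstrap_sample(num_rows=1000, rng=rng)  # 1000 local rows is an assumed size
features = random_feature_subset(num_features=115, rng=rng)  # 115 features as in the dataset
print(len(set(rows)), features)
```

Sampling with replacement leaves some rows out of each bootstrap sample and repeats others, which is what makes the trees on a node differ from one another.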

Then, aggregating decision trees from multiple nodes into a global RF is a critical step in FL. As reported in Appendix B, several techniques can be used, each with its own advantages and limitations. In the same way, pruning is essential to control the complexity of the global RF and improve its efficiency. Several pruning techniques can be applied, as reported in Appendix C. Furthermore, implementing FL with RF presents several challenges and limitations, for example:

- Communication Overhead: RF models can be large, especially when the number of trees is high. Transmitting these models between nodes and the central server can incur significant communication overhead. Compression techniques (e.g., quantization, sparsification) can mitigate this issue but may affect model accuracy.

- Tree Alignment: Aggregating trees from different nodes requires aligning their structures, which is computationally expensive. Mismatched tree structures can lead to suboptimal global models.

- Data Heterogeneity: In FL, data across nodes are often non-IID (non-independent and identically distributed). This heterogeneity can lead to biased or inconsistent trees, reducing the global model’s performance.

- Privacy Concerns: While FL preserves data privacy by not sharing raw data, model updates (e.g., tree structures) can still leak information. Techniques like differential privacy can mitigate this risk but may degrade model performance.

- Scalability: As the number of nodes increases, the complexity of aggregating and managing the global RF grows. Scalability becomes a significant concern, especially for large-scale deployments.

Several advanced techniques can be employed to address the challenges mentioned above, as reported in Appendix D.

Given this intrinsic complexity, we ran the experiments with a minimal number of trees. Although the results were excellent, as reported in Table 4, we believe the implementation needs further refinement.

3.4. Implementation Repository

To make the experiments reproducible, a repository was created to contain the implementations described [33]. This repository contains implementations of federated learning using Flower (FLWR) for the different machine learning algorithms used. Each implementation aimed to enable multiple clients to collaborate in model training without directly sharing their data, preserving privacy. Each method has its own customized aggregation strategy.

The repository is divided into three main directories, each implementing a different Machine Learning algorithm in a federated setting. Each folder includes its own README with more details on the specific implementation.

We opted to use Databricks in each implementation, so the code we have developed is fully compatible with Databricks, and the current setup can be seamlessly migrated to a distributed environment.

The default configuration is local, meaning that all resources run on a single machine. While this setup is sufficient for testing and development, it is not ideal for large-scale FL tasks. In a local environment, the computational load is limited to the capabilities of a single machine, which can lead to slower training times and inefficiencies when dealing with large datasets or complex models.

Moving to a distributed Databricks environment significantly improves performance. In such a setup, each node has its own machine, and computations can be performed simultaneously across multiple nodes. This parallelism drastically reduces training times and allows us to scale our experiments effortlessly. For instance, in theory, training could be completed in just a second, depending on the number of nodes and the complexity of the model. However, even in the default local configuration, the training time is less than 11 s.

Moreover, Databricks allows us to run the same code on multiple virtual machines without changing the core logic. The only modification required is in the configuration file, specifically the pyproject.toml file, where we define the IP addresses of the various clients. The ability to modify only the configuration file (i.e., pyproject.toml) without changing the core code simplifies the process of scaling and adapting the system to different use cases. During training, all metrics are saved in a CSV file for better analysis of the results. It is worth noting that the results show that the overall performance is stable with respect to the data distribution.

In our FL setup, near-real-time intrusion detection is achievable in 0.16 s, including latency. Full FL training cycles push the latency above 5 s and are therefore better suited to periodic updates than to continuous detection.

Details on the transition from a local setup to a distributed Databricks environment are reported in Appendix E.

4. Discussion

The experimental results indicate that federated learning not only offers significant advantages in terms of data privacy and scalability but also retains competitive accuracy. The three models tested offer a spectrum of complexity and strengths: LR for simplicity and speed, SVM for discriminative power, and RF for robustness and ensemble learning. Moreover, our implementation demonstrates the flexibility and control that FLWR provides for federated learning tasks.

By starting with a simple logistic regression model and gradually extending the framework, we built a robust and scalable solution for decentralized machine learning.

Using SGD as a solver provided a flexible and efficient way to fit SVM models. By leveraging its capabilities, we improved computational performance. However, the results also underscored the need for careful tuning of hyperparameters, such as the learning rate and regularization strength, to achieve optimal performance.

FL with Random Forest (RF) is a challenging yet promising approach for decentralized machine learning. As an ensemble method, RF requires careful consideration of aggregation techniques, pruning methods, and scalability. However, while challenges like communication overhead, data heterogeneity, and privacy concerns exist, advanced techniques like hybrid learning, secure multi-party computation (SMPC), and distributed pruning can mitigate these issues. By leveraging these methods, federated RF can be effectively applied to real-world scenarios, enabling privacy-preserving and scalable machine learning.

It is worth highlighting that the adopted hybrid strategy, i.e., a machine learning model deployed on IoT devices with a second-level federated-based analysis (learning), makes it possible to overcome the heterogeneity and limited computing resources of IoT devices. While the final rules reside on the individual IoT units, they can be learned on homogeneous, high-performance units.

We also addressed the integration of federated learning with a cloud-based infrastructure, leveraging platforms such as Databricks and Google Cloud Storage. Our setup is functional, and transitioning to a distributed Databricks environment unlocks significant performance improvements and scalability. With minimal changes to the configuration, we can run our FL experiments on multiple machines simultaneously, achieving faster training times and greater flexibility. In fact, by running our FL experiments on Databricks with a distributed configuration, we can achieve the following benefits:

- Faster Training Times: Parallel processing across multiple nodes reduces the time required for model training.

- Scalability: The same code can be run on any number of virtual machines, allowing us to scale experiments effortlessly.

- Cost Efficiency: Databricks’ pay-as-you-go model ensures that we only pay for the resources we use, making it a cost-effective solution for large-scale experiments.

- Flexibility: The ability to modify only the configuration file (e.g., pyproject.toml) without changing the core code simplifies the process of scaling and adapting the system to different use cases.

The combination of Databricks, Google Cloud, and Fivetran appears preferable for federated learning when we need a scalable, enterprise-ready solution with automated data integration, robust processing, and seamless cloud infrastructure. It excels in scenarios requiring end-to-end management, compliance, and collaboration (e.g., cross-silo FL in regulated industries), outshining narrower FL frameworks in versatility and operational efficiency. Lighter frameworks might suffice for simpler or highly specialized FL needs, but this stack offers a powerful, integrated alternative for complex, real-world applications.

Moreover, in FL, raw data stay on local devices or silos, and only model updates are shared with a central server for aggregation. However, these updates can still leak sensitive information about individual data. Differential privacy (DP) mitigates this by adding controlled noise to the updates, ensuring that the output of the aggregation process does not reveal too much about any single participant’s data [34]. Databricks’ Apache Spark framework allows for distributed computation of model updates, where DP noise (e.g., Gaussian or Laplace) can be applied either locally (on participant nodes) or centrally (during aggregation). Google Cloud provides access to libraries like TensorFlow Privacy and Google’s Differential Privacy library, which implement DP-SGD (Stochastic Gradient Descent with Differential Privacy). Fivetran’s hybrid deployment model allows for data transformations (e.g., anonymization or noise addition) to occur on-premises before model training begins.
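
As an illustration of the noise-addition step, the sketch below clips a model update to a maximum L2 norm and then adds Gaussian noise, in the style of DP-SGD. It is a simplified example under stated assumptions: the names `clip_norm` and `noise_multiplier` and their values are illustrative, and the calibration of the noise to a formal privacy budget is not shown.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale the update so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize_update(update, clip_norm, noise_multiplier, rng):
    """Clip the update, then add Gaussian noise to every coordinate."""
    sigma = noise_multiplier * clip_norm
    return [v + rng.gauss(0.0, sigma) for v in clip_update(update, clip_norm)]


rng = random.Random(42)
raw_update = [3.0, 4.0]                   # L2 norm 5
clipped = clip_update(raw_update, 1.0)    # rescaled to L2 norm 1
noisy = privatize_update(raw_update, 1.0, 1.1, rng)
```

Clipping bounds the influence of any single participant, so the noise scale can be chosen relative to `clip_norm`. Applying `privatize_update` on each participant corresponds to the local variant, whereas applying the noise once to the aggregated update corresponds to the central variant.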

The Databricks, Google Cloud, and Fivetran stack is prone to adversarial attacks in FL due to its decentralized trust model, integration complexity, and reliance on participant honesty—issues inherent to FL rather than unique to these tools. Specific vulnerabilities include Databricks’ cluster security, Google Cloud’s aggregation exposure, and Fivetran’s pipeline risks. However, this stack also offers powerful mitigation tools (e.g., DP, secure aggregation, anomaly detection) that, when implemented, can make it more resilient than many FL frameworks.

Advanced cryptographic techniques such as secure multi-party computation (SMPC) [35] and homomorphic encryption (HE) [36] can significantly enhance the security of FL. These methods address adversarial attacks (such as poisoning, inference, and Byzantine threats) by enabling privacy-preserving computation and aggregation without exposing raw data or model updates. SMPC and HE can fortify our Databricks–Google Cloud–Fivetran FL setup against adversarial attacks by ensuring privacy and integrity during aggregation; this will be the subject of future work.
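
To give an intuition of how aggregation can proceed without exposing individual updates, the following toy sketch uses pairwise additive masking over integers modulo a prime. It is not part of our implementation and is far from a complete protocol: the modulus, the single seeded generator standing in for pairwise key agreement, and the integer-encoded updates are all simplifying assumptions, and dropouts or malicious participants are not handled.

```python
import random

MODULUS = 2**31 - 1  # illustrative prime modulus


def mask_updates(updates, seed=0):
    """For each client pair (i, j), client i adds a shared mask and j subtracts it."""
    rng = random.Random(seed)  # stands in for pairwise agreed secrets
    n, dim = len(updates), len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            for k in range(dim):
                m = rng.randrange(MODULUS)
                masked[i][k] = (masked[i][k] + m) % MODULUS
                masked[j][k] = (masked[j][k] - m) % MODULUS
    return masked


def aggregate(masked):
    """Sum the masked updates; the pairwise masks cancel out."""
    dim = len(masked[0])
    return [sum(u[k] for u in masked) % MODULUS for k in range(dim)]


# Integer-encoded updates from three hypothetical clients.
updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
masked = mask_updates(updates)
total = aggregate(masked)
```

The server only sees the masked vectors, which look random individually, yet their sum equals the sum of the original updates because every mask is added once and subtracted once.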

Author Contributions

Conceptualization, S.R. and G.R.; methodology, G.R. and T.I.; software, T.I.; validation, S.R., G.R., and T.I.; formal analysis, T.I.; investigation, G.R. and T.I.; resources, S.R.; data curation, T.I.; writing—original draft preparation, S.R. and G.R.; writing—review and editing, S.R.; visualization, G.R.; supervision, S.R.; project administration, S.R.; funding acquisition, S.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The Kitsune Network Attack dataset containing nine different network attacks on a commercial IP-based surveillance system and an IoT network is freely available at http://archive.ics.uci.edu/dataset/516/kitsune+network+attack+dataset (accessed on 1 March 2025).

Acknowledgments

The authors wish to thank L. Rampone for carefully reading the manuscript and the anonymous referees whose comments helped improve the first version of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AI | Artificial intelligence |

| DP | Differential privacy |

| FLWR | Flower |

| FL | Federated learning |

| GCS | Google Cloud Storage |

| GDPR | General Data Protection Regulation |

| HE | Homomorphic encryption |

| IoT | Internet of Things |

| LR | Logistic regression |

| RF | Random Forest |

| SMPC | Secure multi-party computation |

| SGD | Stochastic Gradient Descent |

| SVM | Support Vector Machine |

Appendix A. Federated Frameworks

Appendix A.1. TensorFlow Federated (TFF)

TensorFlow Federated (TFF) is an open-source framework developed by Google that allows machine learning researchers to simulate and implement FL algorithms using TensorFlow. It provides two key APIs: Federated Core (FC), for building custom FL algorithms, and Federated Learning (FL), for deploying common strategies. TFF is well integrated with TensorFlow but has a steep learning curve and limited flexibility outside TensorFlow-based models.

Appendix A.2. PySyft

PySyft is a privacy-preserving machine learning framework developed by OpenMined. It enables secure and private computations using techniques such as differential privacy and secure multi-party computation. While powerful, PySyft focuses more on privacy-preserving computations than on FL specifically.

Appendix A.3. Federated AI Technology Enabler (FATE)

FATE is an industrial-level FL framework designed for large-scale applications, particularly in the financial and healthcare industries. It supports secure model training across different organizations. However, it requires significant setup and is not as flexible for general-purpose FL research.

Appendix A.4. Flower (FLWR)

FLWR (Flower) is a lightweight, flexible FL framework designed for ease of use and scalability. It supports multiple machine learning libraries, including TensorFlow, PyTorch, and Scikit-learn, making it highly adaptable. Flower simplifies FL implementation by providing built-in server–client communication protocols and customizable strategies.

After evaluating these frameworks, we selected FLWR due to its simplicity, scalability, and compatibility with various machine learning libraries. Its modular design allowed us to efficiently integrate FL into our cloud-based infrastructure while maintaining flexibility in model development.

Appendix B. Federated Random Forest Aggregation Techniques

Aggregating decision trees from multiple nodes into a global RF is a critical step in FL. Several techniques can be used, each with its advantages and limitations.

Appendix B.1. Tree Aggregation

The most straightforward approach is to aggregate all trees from all nodes into a single global RF. The central server collects the trees and combines them into a unified model. This method is simple to implement but can lead to a large global model, especially if the number of nodes is high.
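
A minimal sketch of this union-style aggregation follows. Trees are represented as plain Python callables that map a feature vector to a class label, which is an illustrative simplification rather than the data structure of any specific library.

```python
from collections import Counter


def aggregate_forests(local_forests):
    """Concatenate the trees of every node into one global forest."""
    return [tree for forest in local_forests for tree in forest]


def forest_predict(forest, x):
    """Majority vote over all trees in the forest."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]


# Hypothetical decision stumps trained on two nodes.
node_a = [lambda x: int(x[0] > 0.5), lambda x: int(x[1] > 0.5)]
node_b = [lambda x: int(x[0] > 0.3)]

global_forest = aggregate_forests([node_a, node_b])
```

The size of the global forest is the sum of the local forest sizes, which is why this approach scales poorly with the number of nodes.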

Appendix B.2. Weighted Aggregation

In weighted aggregation, trees from different nodes are assigned weights based on the size or quality of their local datasets. For example, nodes with more data or higher data quality contribute more to the global model. This approach can improve model performance but requires additional computation to determine the weights.
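
The sketch below illustrates one possible weighting scheme, in which each node's vote is proportional to its share of the training samples and is split evenly among its trees. The functions and the toy forests are illustrative assumptions; quality-based weights could be substituted for the size-based ones.

```python
from collections import defaultdict


def size_weights(sample_counts):
    """Weight each node by its fraction of the total number of samples."""
    total = sum(sample_counts)
    return [n / total for n in sample_counts]


def weighted_forest_predict(forests, weights, x):
    """Weighted vote: a node's weight is shared equally among its trees."""
    scores = defaultdict(float)
    for forest, weight in zip(forests, weights):
        for tree in forest:
            scores[tree(x)] += weight / len(forest)
    return max(scores, key=scores.get)


# Node A holds 900 samples and one tree; node B holds 100 samples and two trees.
forest_a = [lambda x: 1]
forest_b = [lambda x: 0, lambda x: 0]
weights = size_weights([900, 100])
prediction = weighted_forest_predict([forest_a, forest_b], weights, [0.0])
```

In this example an unweighted majority vote would return class 0 (two trees against one), whereas the size-weighted vote follows the node that holds most of the data.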

Appendix B.3. Pruning-Based Aggregation

Pruning techniques can be applied to reduce the size of the global RF. After aggregating all trees, the central server prunes redundant or less essential trees to create a more compact model. This reduces the computational cost of inference but may slightly degrade performance.

Appendix B.4. Federated Averaging for Random Forest

Federated Averaging (FedAvg) can be adapted for RF by averaging the parameters of the decision trees. However, this is non-trivial because decision trees are non-parametric models. One approach is to average the split points and leaf values of trees with similar structures. This requires aligning trees from different nodes, which can be computationally expensive.
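
As a hedged illustration, the sketch below applies the FedAvg rule, i.e., a sample-count-weighted mean of parameter vectors, to two decision stumps that already share the same structure. The encoding of a stump as `[threshold, left leaf value, right leaf value]` is an assumption made for this example, and the tree-alignment step is not shown.

```python
def fed_avg(client_params, client_sizes):
    """Average parameter vectors, weighting each client by its sample count."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(params[k] * size for params, size in zip(client_params, client_sizes)) / total
        for k in range(dim)
    ]


# Two structurally identical stumps on the same feature:
# [threshold, left leaf value, right leaf value].
stump_a = [1.0, 0.0, 1.0]     # trained on 100 samples
stump_b = [3.0, 0.25, 0.75]   # trained on 300 samples

global_stump = fed_avg([stump_a, stump_b], [100, 300])
```

The same function applies unchanged to the coefficient vectors of parametric models such as logistic regression or a linear SVM, where no alignment is required.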

Appendix C. Federated Random Forest Pruning Techniques

Pruning is essential to controlling the complexity of the global RF and improving its efficiency. Several pruning techniques can be applied in an FL setting.

Appendix C.1. Cost-Complexity Pruning

Cost-complexity pruning removes subtrees that contribute little to the model’s accuracy. This technique can be applied locally on each node before sending the trees to the central server. However, global pruning after aggregation is often more effective.

Appendix C.2. Error-Based Pruning

Error-based pruning removes trees that have high error rates on a validation set. In FL, the central server can evaluate the performance of each tree on a held-out validation set and prune the worst-performing trees.
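
A minimal sketch of this procedure is given below, assuming that trees are callables returning a class label and that the server holds a small labeled validation set; the parameter `keep` (the number of trees retained) is an illustrative choice.

```python
def prune_by_error(forest, val_x, val_y, keep):
    """Keep the `keep` trees with the lowest validation error."""
    def error(tree):
        wrong = sum(1 for x, y in zip(val_x, val_y) if tree(x) != y)
        return wrong / len(val_y)

    return sorted(forest, key=error)[:keep]


# Three hypothetical trees of decreasing quality.
good = lambda x: int(x[0] > 0.5)        # validation error 0.0
constant = lambda x: 0                  # validation error 0.5
inverted = lambda x: 1 - int(x[0] > 0.5)  # validation error 1.0

val_x = [[0.1], [0.9], [0.8], [0.2]]
val_y = [0, 1, 1, 0]
pruned = prune_by_error([inverted, constant, good], val_x, val_y, keep=2)
```

Instead of a fixed `keep`, an error threshold could be used, so that the size of the pruned forest adapts to the quality of the received trees.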

Appendix C.3. Feature Importance Pruning

Trees that rely on less important features can be pruned to reduce model complexity. Feature importance can be calculated using metrics like Gini importance or mean decrease in impurity. This technique is particularly useful when the global model becomes too large.
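
One way to turn this idea into a rule is to score each tree by the mean global importance of the features it splits on and to drop trees below a threshold. The sketch below follows this assumption; the feature names, importance values, and the `min_score` threshold are hypothetical.

```python
def prune_by_feature_importance(trees, importance, min_score):
    """Keep trees whose mean feature importance is at least min_score.

    trees: list of (tree_id, features_used) pairs.
    importance: mapping from feature name to global importance.
    """
    kept = []
    for tree_id, features in trees:
        score = sum(importance.get(f, 0.0) for f in features) / len(features)
        if score >= min_score:
            kept.append(tree_id)
    return kept


# Hypothetical global importances (e.g., mean decrease in impurity).
importance = {"pkt_rate": 0.6, "jitter": 0.3, "ttl": 0.1}
trees = [
    ("t1", ["pkt_rate", "jitter"]),  # mean importance 0.45
    ("t2", ["ttl"]),                 # mean importance 0.10
    ("t3", ["jitter", "ttl"]),       # mean importance 0.20
]
kept = prune_by_feature_importance(trees, importance, min_score=0.25)
```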

Appendix D. Advanced Techniques for Federated Random Forest

Appendix D.1. Hybrid Federated Learning

A hybrid approach combines centralized and FL. For example, a subset of nodes can share their data with the central server to train a base model, while the remaining nodes perform FL. This approach balances privacy and performance.

Appendix D.2. Secure Multi-Party Computation (SMPC)

SMPC allows nodes to collaboratively train a model without revealing their local data. This technique can be applied to aggregate trees securely, ensuring privacy while maintaining model accuracy.

Appendix D.3. Distributed Pruning

Pruning can be performed in a distributed manner, where each node prunes its local trees before sending them to the central server. This reduces the communication overhead and computational cost of global pruning.

Appendix D.4. Adaptive Tree Construction

Nodes can adaptively construct trees based on the global model’s performance. For example, nodes with poor local models can train additional trees to improve the global model’s accuracy.

Appendix E. Distributed Environment Software Setup

To transition from a local setup to a distributed Databricks environment, the following changes are necessary:

- Update pyproject.toml: This file must be modified to include the IP addresses of all client machines participating in the FL process. This ensures that the server can communicate with each client effectively.

- Cluster Configuration: In Databricks, a cluster must be configured with the appropriate number of worker nodes. Each node will act as a client, running its own instance of the training process.

- Resource Allocation: Each node must have sufficient computational resources (CPU, GPU, and memory) to handle its share of the workload.

- Network Configuration: All nodes must be able to communicate with each other and with the server without network bottlenecks.

References

- Morfino, V.; Rampone, S. Towards Near-Real-Time Intrusion Detection for IoT Devices using Supervised Learning and Apache Spark. Electronics 2020, 9, 444.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated Learning: Strategies for Improving Communication Efficiency. arXiv 2016, arXiv:1610.05492.

- Hard, A.; Rao, K.; Mathews, R.; Beaufays, F.; Augenstein, S.; Eichner, H.; Kiddon, C.; Ramage, D. Federated Learning for Mobile Keyboard Prediction. arXiv 2018, arXiv:1811.03604.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated Learning for Internet of Things: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

- Goddard, M. The EU general data protection regulation (GDPR): European regulation that has a global impact. Int. J. Mark. Res. 2017, 59, 703–705.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep Learning with Differential Privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Aguera y Arcas, B. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 20–22 April 2017; Volume 54, pp. 1273–1282.

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated Optimization in Heterogeneous Networks. In Proceedings of the Machine Learning and Systems 2 (MLSys 2020), Austin, TX, USA, 2–4 March 2020.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and Applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

- Mirsky, Y.; Doitshman, T.; Elovici, Y.; Shabtai, A. Kitsune: An Ensemble of Autoencoders for Online Network Intrusion Detection. In Proceedings of the Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 18–21 February 2018; Available online: https://archive.ics.uci.edu/dataset/516/kitsune+network+attack+dataset (accessed on 1 March 2025).

- Li, Y.; Li, Z.; Li, M. A comprehensive survey on intrusion detection algorithms. Comput. Electr. Eng. 2025, 121, 109863.

- Zhou, W.; Xia, C.; Wang, T.; Liang, X.; Lin, W.; Li, X.; Zhang, S. HIDIM: A novel framework of network intrusion detection for hierarchical dependency and class imbalance. Comput. Secur. 2025, 148, 104155.

- Lin, W.; Xia, C.; Wang, T.; Zhao, Y.; Xi, L.; Zhang, S. Input and Output Matter: Malicious Traffic Detection with Explainability. IEEE Netw. 2024, 39, 259–267.

- Najafimehr, M.; Zarifzadeh, S.; Mostafavi, S. DDoS attacks and machine-learning-based detection methods: A survey and taxonomy. Eng. Rep. 2023, 5, e12697.

- Alqudhaibi, A.; Albarrak, M.; Jagtap, S.; Williams, N.; Salonitis, K. Securing industry 4.0: Assessing cybersecurity challenges and proposing strategies for manufacturing management. Cyber Secur. Appl. 2025, 3, 100067.

- Bebortta, S.; Barik, S.C.; Sahoo, L.K.; Mohapatra, S.S.; Kaiwartya, O.; Senapati, D. Hybrid Machine Learning Framework for Network Intrusion Detection in IoT-Based Environments. Lect. Notes Netw. Syst. 2024, 1, 573–585.

- Konečný, J.; McMahan, B.; Ramage, D.; Richtárik, P. Federated Optimization: Distributed Machine Learning for On-Device Intelligence. arXiv 2016, arXiv:1610.02527.

- Taha, K. Machine learning in biomedical and health big data: A comprehensive survey with empirical and experimental insights. J. Big Data 2025, 12, 61.

- Hamdi, N. A hybrid learning technique for intrusion detection system for smart grid. Sustain. Comput. Inform. Syst. 2025, 46, 101102.

- Cao, S.; Liu, S.; Yang, Y.; Du, W.; Zhan, Z.; Wang, D.; Zhang, W. A hybrid and efficient Federated Learning for privacy preservation in IoT devices. Ad Hoc Netw. 2025, 170, 103761.

- Gu, Y.; Wang, J.; Zhao, S. HT-FL: Hybrid Training Federated Learning for Heterogeneous Edge-Based IoT Networks. IEEE Trans. Mob. Comput. 2025, 24, 2817–2831.

- Albogami, N.N. Intelligent deep federated learning model for enhancing security in internet of things enabled edge computing environment. Sci. Rep. 2025, 15, 4041.

- Databricks Data Intelligence Platform. Available online: https://www.databricks.com/ (accessed on 1 March 2025).

- Google Cloud Storage. Available online: https://cloud.google.com/storage?hl=en (accessed on 1 March 2025).

- Fivetran Data Integration. Available online: https://www.fivetran.com/learn/data-integration (accessed on 1 March 2025).

- Apache Spark. Available online: https://spark.apache.org/ (accessed on 1 March 2025).

- Flower Federated Learning Framework. Available online: https://flower.ai/ (accessed on 1 March 2025).

- Hugging Face. Available online: https://huggingface.co/ (accessed on 1 March 2025).

- Hosmer, D.W., Jr.; Lemeshow, S.; Sturdivant, R.X. Applied Logistic Regression, 3rd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28.

- Bottou, L. Stochastic Gradient Learning in Neural Networks. Proc. Neuro-Nîmes 1991, 91, 12.

- Bodynek, M.; Leiser, F.; Thiebes, S.; Sunyaev, A. Applying Random Forests in Federated Learning: A Synthesis of Aggregation Techniques. In Proceedings of the Wirtschaftsinformatik, Paderborn, Germany, 18–21 September 2023; Volume 46. Available online: https://aisel.aisnet.org/wi2023/46 (accessed on 1 March 2025).

- Implementation Code Repository. Available online: https://github.com/n3pt7un/Federated-Learning-LR_RF (accessed on 26 March 2025).

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical Secure Aggregation for Privacy-Preserving Machine Learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security (CCS ’17), Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

- Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and Open Problems in Federated Learning. Found. Trends Mach. Learn. 2021, 14, 1–210.

- Phong, L.T.; Aono, Y.; Hayashi, T.; Wang, L.; Moriai, S. Privacy-Preserving Deep Learning via Additively Homomorphic Encryption. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1333–1345.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).