Abstract

This study explores the integration of cross-modal Artificial-Intelligence-Generated Content (AIGC) into the Unity3D game engine, with the aim of improving the diversity and coherence of images generated for game art. The proposed theoretical framework covers the incorporation of generated visuals into Unity3D and introduces a Generative Adversarial Network (GAN) in which both the Generator and the Discriminator are built on a Transformer model, which handles sequential data and long-range dependencies well. A cross-modal attention module dynamically computes attention weights between text descriptions and generated images, so that the influence of each modality can be adjusted in real time to improve the quality and variety of the outputs. In the experiments, the model achieves an Inception Score of 8.95 and a Fréchet Inception Distance (FID) of 20.1, indicating high diversity and image quality. In user surveys, participants rated the outputs highly for both adherence to the text prompts and visual appeal. The model also produces a wide range of styles with detailed and varied aesthetics. Although training takes longer, the gains in quality and diversity are of substantial practical value. The method shows considerable potential for Unity3D development, improving both development efficiency and the visual fidelity of game assets.

1. Introduction

The rapid growth of the digital entertainment industry has intensified competition in gaming [1,2,3], where visual appeal is a decisive factor in capturing players' attention and distinguishing titles in a saturated market [4,5]. However, creating game art assets involves high production costs and long timelines, which constrain the creative scope of development teams and delay product launches [6,7]. In response, developers increasingly rely on artificial intelligence (AI) to assist with, or fully automate, game art creation [8].

Recent advances in computing power, together with the evolution of deep learning algorithms, have driven the wide adoption of AI in creative sectors [9,10,11]. Beyond generating large numbers of high-quality design options at unprecedented speed, AI can refine its outputs by adapting to learned user preferences [12]. In game development, AI applications have moved beyond basic physics simulation into more complex areas such as character sculpting and environment design [13,14]. These advances open new ways to overcome long-standing problems in traditional game art production.

Recent research, both international and domestic, has examined AI's growing role in game art creation, but much of it remains confined to isolated pipelines that handle a single modality or one fixed conversion, such as text to images or audio to video. More general cross-modal processing, in which diverse inputs such as text or sound are converted into different content forms such as animations or images, is still at an early stage [15,16]. Cross-modal Artificial-Intelligence-Generated Content (AIGC) promises new possibilities for game art creation that extend beyond the boundaries of traditional media [17].

This study centers on the deep integration of cross-modal AIGC technology within the Unity3D engine, aiming to mitigate challenges prevalent in game art production. Unity3D serves as the primary development platform in this study, selected for its extensive application in game development and its advantages in cross-platform compatibility, resource allocation, and real-time rendering optimization. Compared to Unreal Engine, Unity3D features a well-established development ecosystem with broad plugin support. The adoption of C# as the core programming language further reduces the complexity of implementation, facilitating the seamless integration of AI models within interactive environments. Additionally, the flexibility of Unity3D in hybrid 2D and 3D rendering enhances the adaptability of AIGC, providing a versatile framework for procedural content generation.

While Unreal Engine demonstrates strengths in high-fidelity rendering and advanced global illumination techniques, its elevated hardware requirements introduce limitations for lightweight deployment scenarios, constraining the practical integration of AIGC in resource-constrained environments. As an open-source alternative, Godot offers extensive customization, making it particularly suited for experimental applications and niche game development. However, limitations in high-quality 3D rendering and AI model integration impose challenges in AIGC-driven game asset generation. Blender, primarily designed for 3D modeling and animation, supports AI-assisted artistic workflows through Python (version 3.8) scripting but lacks the real-time interaction capabilities necessary for dynamic procedural content generation in game development.

Thanks to its efficient management of computational resources, scalable architecture, and real-time rendering capabilities, Unity3D is well suited to implementing the cross-modal AIGC framework proposed in this study. The platform supports dynamic adaptation, real-time style transfer, and the integration of Generative Adversarial Network (GAN)-based artistic content into interactive game environments, improving both visual fidelity and content diversity. By applying state-of-the-art AI algorithms, this study pursues several objectives: reducing repetitive manual work, accelerating asset production, broadening creative possibilities, and substantially lowering production costs. Automating asset creation with AIGC can also give small teams and independent developers access to high-quality artistic resources. To meet these objectives, deep learning frameworks, specifically Transformer architectures and GANs, are deployed and trained to process and generate multimodal content.

The primary contributions and innovations of this study are encapsulated in the following key aspects:

- (1) A cross-modal AIGC framework is developed, leveraging a Transformer–GAN architecture that integrates GANs with Transformer models. This architecture enhances both the diversity and global coherence of generated images, addressing limitations in conventional unimodal generative methods.

- (2) A cross-modal attention mechanism is introduced, enabling dynamic computation of attention weights between textual descriptions and generated images. This mechanism facilitates precise semantic alignment between input prompts and generated content, significantly improving fidelity and variation in generated outputs.

- (3) Deep learning model integration within the Unity3D game engine is achieved through the Barracuda inference framework, ensuring the real-time execution of AI-driven content generation. This approach enables on-device inference with low-latency processing, optimizing efficiency for interactive game environments.

- (4) An asynchronous computation pipeline is implemented to optimize the cross-modal attention mechanism, preventing interference with the main rendering thread during gameplay. This optimization enhances system responsiveness, ensuring seamless real-time content adaptation without compromising interactivity or rendering performance.

2. Research Theory

2.1. Literature Review

With the rapid evolution of AI technologies, a growing number of studies have examined AI's transformative potential in game development, particularly in game art creation [18]. Early work focused mainly on single-modality data, chiefly image generation and text processing [19]. For instance, Özçift et al. (2021) [20] described the Cycle-Consistent Generative Adversarial Network (CycleGAN), which performs unsupervised image-to-image translation, such as converting photographs of horses into zebras. Such technology is promising for game development because it can render scenes and characters in a range of artistic styles. Similarly, Gu et al. (2022) [21] used the Style-based Generative Adversarial Network (StyleGAN), known for producing high-fidelity facial images, which can help create realistic character models that enhance gaming experiences. In text processing, Erdem et al. (2022) [22] discussed Bidirectional Encoder Representations from Transformers (BERT), whose bidirectional Transformer architecture brought substantial gains on natural-language-understanding tasks. This technology is central to building game dialogue systems that support more natural and immersive interactions with Non-Player Characters (NPCs).

Cross-modal AIGC has since become a prominent research topic. The field aims to enable richer and more diverse applications by processing and generating multiple data types, including text, images, and audio. For example, Goel et al. (2022) [23] built on Contrastive Language–Image Pretraining (CLIP), which is pre-trained on large collections of text–image pairs and performs well on cross-modal tasks such as image classification and text–image retrieval; CLIP is also widely used to guide text-to-image generation. Such capabilities are valuable for game development because they make it possible to create game scenes or characters from textual descriptions alone. Zhang et al. (2023) [24] presented a multimodal generation model that combines text and image inputs to produce game assets in specific artistic styles, opening new ways to create content that matches a distinct aesthetic and deepens player immersion. In addition, Kaźmierczak et al. (2024) [25] developed a cutscene sequencer for educational and cultural games in Unity3D, which helps developers create cinematic interludes that enrich storytelling.

Despite the remarkable progress of cross-modal AIGC across various domains, its use in game art creation is still at an early stage. Several studies have applied these technologies to game development to improve efficiency and content quality. For example, Huang and Yuan (2023) [26] used GANs for terrain generation, producing high-quality textures that make game environments more realistic. Värtinen et al. (2022) [27] used a Transformer model to generate quest descriptions procedurally for role-playing video games, making NPC dialogue more fluid and varied. Li et al. (2023) [28] described applications of AI across different aspects of game content interfaces, pointing to further research at this intersection of technology and creativity. Work in adjacent areas is also relevant. Zhang et al. (2025) [29] analyzed the main sources of label noise in real-world environments, offering guidance on selecting methods for image generation and cross-modal tasks. Zhang et al. (2025) [30] constructed the first dataset tailored to infrared small-sample target detection by integrating three widely used infrared datasets, and their methodology offers useful ideas for improving model efficiency and generation quality.

Despite these insights, existing studies tend to focus on isolated tasks and rarely support the full game development process [31,32,33]. Integrating cross-modal AIGC into existing game development tools therefore remains an important open problem. Although the potential of cross-modal AIGC in game art creation is large, several challenges remain. First, the quality and diversity of generated content must improve substantially to meet the standards of the game industry. Second, training and inference efficiency must be optimized to support the real-time generation that modern games require. Third, user interaction and personalized content generation need further study, since richer customization options can make games more interactive and enjoyable. This study aims to address these gaps, with particular emphasis on keeping the artistic style of generated content consistent.

2.2. Theoretical Framework

To apply cross-modal AIGC effectively to game art creation, this study proposes a multi-stage theoretical framework that spans the entire workflow, from requirement analysis to performance evaluation. This end-to-end coverage is intended to ensure that the generated game assets are of high quality and integrate smoothly into the game environment. Figure 1 shows the components of the framework.

Figure 1.

Theoretical framework for the integration of cross-modal AIGC technology and the Unity3D game engine.

As shown in Figure 1, the framework consists of five main components: requirement analysis, data processing, model design and training, system integration, and performance evaluation. First, the requirements of game developers and players for game art creation were gathered through surveys and interviews to identify their expectations of, and concerns about, AI-generated content. For example, developers may need generated assets to integrate smoothly into existing game environments, whereas players may want personalized and interactive experiences. The bottlenecks in the current game development workflow were also examined to identify where AI can help. Tasks such as terrain generation, character design, and prop creation involve many repetitive steps and therefore stand to benefit from automation.

Next, a variety of data was collected from publicly available datasets and organized, including textual descriptions, hand-drawn sketches, and images of existing game characters and environments. These curated data served as the basis for training the generative model. Using natural language processing and computer vision techniques, the data were cleaned, annotated, and standardized before training. For instance, text data were tokenized, stemmed, and stripped of stop words, whereas images were resized and converted to a common color space.

In the model design and training stage, a deep learning model suited to the task was selected and trained on the preprocessed data, with its parameters optimized through supervised or unsupervised learning to improve both the quality and the diversity of the generated content. For example, pre-training on large collections of text–image pairs enables the model to produce high-quality game assets.

For system integration, a Unity3D plugin was developed to embed the trained model in the Unity3D editor. The plugin provides a user-friendly interface through which developers can call the model and generate game assets from simple text prompts or uploaded images. Real-time generation and interactive features in Unity3D also let players trigger content generation; for instance, a player can create new characters or scenes in real time by entering descriptive text.

Finally, the generated content was evaluated for consistency of artistic style, rendering of fine details, and compatibility with the game environment. Expert reviews and user testing were used to determine whether the generated assets fit the game's overall artistic vision. For the expert evaluation, five industry professionals with substantial experience in game art design and visual effects compared AI-generated assets with traditionally crafted ones, focusing on quality, stylistic consistency, and visual effects. The assets were rated against predefined criteria, including image clarity, detail representation, and coherence of artistic style, on a scale from 1 to 5, where 1 means the asset fails to meet the specified requirements and 5 means it meets them exceptionally well. After the assessment, the experts discussed their ratings and offered recommendations for improving the generative model.

A comparative study involving 50 game players was conducted to assess user reactions. Participants were split into two groups: one group interacted with traditionally handcrafted visual content, while the other engaged with AI-generated visuals. Data collection occurred through questionnaires and interviews, with the aim of evaluating player satisfaction with the visual content, perceptions of game immersion, and preferences for image quality and artistic style.

The questionnaire focused on the following areas:

- (1) Satisfaction with Visual Content: participants gave an overall rating of the visual elements, including image quality, stylistic coherence, and consistency with the game's atmosphere.

- (2) Game Immersion: the influence of AI-generated visuals on player immersion and on the overall gaming experience was measured.

- (3) Image Quality and Style Preferences: preferences regarding image details, color schemes, and artistic style were explored, and AI-generated visuals were compared with traditional ones.

- (4) Content Generation Efficiency: players' perceptions of the efficiency and time requirements of AI-generated content were assessed, with particular attention to their acceptance of AI-driven changes in visual production.

The demographic information of the participants is outlined in Table 1.

Table 1.

Demographic distribution of game players.

Player feedback is essential for assessing the real-world impact of AI-generated content, particularly on game immersion and player satisfaction. Combining expert evaluations with player feedback gives a more complete picture of the quality, efficiency, and cost-effectiveness of AI-generated content. The findings highlight the advantages of AI-generated content over traditional handcrafted assets, particularly in production efficiency and cost savings, and support the integration of AI-generated content into game development practice.

Together, this theoretical framework and these technical methods provide a structured pathway for applying cross-modal AIGC to game art creation. The framework covers every stage from data processing to model training and places strong emphasis on combining user needs with technical specifications, so that the generated assets meet practical requirements. In addition, real-time generation and interactive features make games more interactive and engaging, opening new possibilities for game development.

2.3. Key Technologies

To understand and generate cross-modal data, this study adopts deep learning architectures suited to such data, chiefly Transformers and GANs, both customized and optimized for the project's requirements. Two public datasets were used in the data preprocessing phase: Flickr30K and MS-COCO Caption. Both contain large numbers of images paired with textual descriptions, which makes them well suited to text-to-image generation, and their existing organization allows images and captions to be fed directly into model training. Flickr30K contains 31,783 images, each with five descriptive sentences, whereas MS-COCO Caption is much larger, with hundreds of thousands of images, each described by multiple sentences. Figure 2 and Figure 3 show the preprocessing workflows for text and image data, respectively.

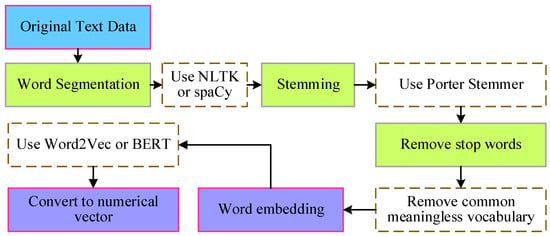

Figure 2.

Text data preprocessing process.

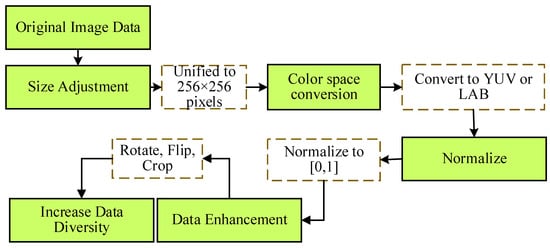

Figure 3.

Image data preprocessing process.

Text data were first tokenized with spaCy (version 3.6.0), which splits the text into words or phrases so that each token can be handled individually during feature extraction. The Porter Stemmer algorithm then reduced words to their root forms, which shrinks the vocabulary and makes learning more efficient. Common stop words, such as “the” and “is”, were removed so that the model could focus on meaningful vocabulary, improving the relevance of the generated content. Finally, the processed text was converted into numerical vectors with a pre-trained word embedding model, which maps each token to a dense, high-dimensional vector that the model can process.
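The text pipeline described above can be sketched in plain Python. This is a minimal, dependency-free illustration under several assumptions: a regular-expression tokenizer stands in for spaCy, a crude suffix stripper stands in for the Porter stemmer (it is not the Porter algorithm), the stop-word list is a small hypothetical sample, and `toy_embedding` is a hypothetical hash-based placeholder for a pre-trained embedding table. Stop words are filtered before stemming here so the list can be matched against surface forms.

```python
import re
import zlib

# Small hypothetical stop-word sample (spaCy's built-in list is far larger).
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "in", "with", "to"}

# Crude suffix stripper used as a stand-in; this is NOT the Porter algorithm.
SUFFIXES = ("ing", "ed", "es", "s")


def tokenize(text):
    """Lower-case the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def crude_stem(word):
    """Strip the first matching suffix, keeping a stem of at least three letters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def toy_embedding(token, dim=8):
    """Hypothetical deterministic pseudo-embedding seeded from a CRC32 hash.

    Stands in for a lookup in a pre-trained embedding table.
    """
    state = zlib.crc32(token.encode("utf-8"))
    vector = []
    for _ in range(dim):
        state = (1103515245 * state + 12345) % 2**31  # linear congruential step
        vector.append(state / 2**31 - 0.5)  # map to [-0.5, 0.5)
    return vector


def preprocess(text, dim=8):
    """Tokenize, drop stop words, stem, and embed each remaining token."""
    tokens = [t for t in tokenize(text) if t not in STOP_WORDS]
    stems = [crude_stem(t) for t in tokens]
    return stems, [toy_embedding(s, dim) for s in stems]


stems, vectors = preprocess("The garden is blooming with flowers")
print(stems)  # ['garden', 'bloom', 'flower']
```

In a real pipeline the stand-ins would be replaced by spaCy's tokenizer, an actual Porter stemmer, and a trained embedding table; the order of operations is the only part this sketch is meant to convey.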

During image preprocessing, all images were resized to a uniform resolution (for example, 256 × 256 pixels) for input to the model. They were then converted from the RGB color space to other color spaces that emphasize specific features, which helps the model capture the color information in each image. In this study, images were converted to YUV or CIELAB (Lab) to improve the model's handling of color, which is particularly important in game art creation. YUV separates luminance (Y) from chrominance (U and V), so the model can learn brightness and contrast independently of color, which improves the representation of detail. Lab, by contrast, is designed to align more closely with human color perception, so it captures subtle color variations more precisely and improves both color saturation and visual consistency. During training, this conversion reduced redundant color information, made the model more sensitive to color detail, and ultimately produced better images.
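A minimal sketch of the resizing and color-space steps follows, assuming images are held as nested lists of RGB tuples with channels in [0, 1]. It uses nearest-neighbour resizing and the analog YUV equations with BT.601 luma weights; the function names are hypothetical, the study's actual interpolation method is not specified, and the Lab conversion (which requires an intermediate XYZ transform) is omitted.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of an image stored as rows of (R, G, B) tuples."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def rgb_to_yuv(r, g, b):
    """Analog YUV with BT.601 luma weights; r, g, b are in [0, 1]."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance (brightness)
    u = 0.492 * (b - y)  # blue-difference chrominance
    v = 0.877 * (r - y)  # red-difference chrominance
    return y, u, v


def image_to_yuv(image):
    """Apply rgb_to_yuv to every pixel."""
    return [[rgb_to_yuv(*px) for px in row] for row in image]


tiny = [[(1.0, 1.0, 1.0), (1.0, 0.0, 0.0)],
        [(0.0, 0.0, 1.0), (0.0, 0.0, 0.0)]]
yuv = image_to_yuv(resize_nearest(tiny, 4, 4))  # 256 x 256 in the study; 4 x 4 here
```

A neutral pixel (equal R, G, B) maps to zero chrominance, which is what lets a model treat brightness and color separately in this representation.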

Next, pixel values were normalized to the range [0, 1], which stabilizes training and speeds up convergence. Finally, data augmentation through rotation, flipping, and cropping was used to increase data diversity and prevent overfitting, improving the model's ability to generalize across scenarios.
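The normalization and augmentation steps can be sketched as below. Assumptions: 8-bit input channels, a 50% chance of each random flip or 90° rotation, and a fixed-size random crop; the probabilities and helper names are illustrative, not the study's settings.

```python
import random


def normalize(image):
    """Scale 8-bit channel values (0-255) to floats in [0, 1]."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in image]


def hflip(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def random_crop(image, size, rng):
    """Cut a size x size window at a random position."""
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - size)
    left = rng.randint(0, w - size)
    return [row[left:left + size] for row in image[top:top + size]]


def augment(image, crop_size, rng):
    """Randomly flip and/or rotate, then take a random crop."""
    if rng.random() < 0.5:
        image = hflip(image)
    if rng.random() < 0.5:
        image = rotate90(image)
    return random_crop(image, crop_size, rng)


rng = random.Random(0)  # seeded so the augmentation is reproducible
pixels = [[(x * 32, y * 32, 0) for x in range(8)] for y in range(8)]
sample = augment(normalize(pixels), crop_size=4, rng=rng)
```

Seeding the random generator makes each augmented batch reproducible, which helps when comparing training runs.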

The GAN in this framework consists of a Generator and a Discriminator. The Generator produces images from the given text descriptions, while the Discriminator judges whether these images are real or generated, which drives the adversarial training process. To strengthen the link between text and image information, a cross-modal attention module is embedded in the Generator. This module computes the similarity between textual and visual features and derives attention weights from it, steering the Generator toward images that match the description more precisely. The cross-modal attention weights are computed as follows:

$$A = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right), \qquad \mathrm{Context} = AV \tag{1}$$

Equation (1) calculates the attention weights $A$ between text and visual features. In the cross-modal attention mechanism, these weights link the two modalities and adjust the image generation process in response to the input text.

$Q$ (Query) represents features extracted from the text input. A deep learning model transforms the text into vector representations, which act as “queries” that guide image generation by highlighting the relevant characteristics.

$K$ (Key) and $V$ (Value) are derived from the visual features; each query is compared with every key to measure how well the corresponding visual region matches the text.

$d_k$ denotes the dimensionality of the key vectors $K$. Dividing the dot product by $\sqrt{d_k}$ keeps the scores in a moderate range as the feature dimension grows, which prevents the softmax from saturating and avoids numerical instability.

Softmax normalizes the scores so that, for each query, the attention weights over all visual positions sum to 1. This distributes the model's focus across the image, concentrating it on the regions that correspond to the input text.

Once the attention weights are determined, they are multiplied by the visual features $V$ to obtain the final contextual information (Context). These weighted visual features carry the semantic content of the text description and supply the model with relevant, detailed visual information for image generation.
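The scaled dot-product form softmax(QKᵀ/√d_k)·V described above can be checked with a small, dependency-free implementation, assuming text features as queries and visual features as keys and values. Matrices are plain nested lists and the function names are hypothetical; a real model would use a tensor library and learned projections.

```python
import math


def softmax(xs):
    """Numerically stable softmax over one row of scores."""
    m = max(xs)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def cross_modal_attention(q, k, v):
    """Scaled dot-product attention with text queries and visual keys/values.

    q: N_t x d_k text features; k: N_v x d_k visual keys; v: N_v x d_v visual values.
    Returns (weights A, N_t x N_v, and Context, N_t x d_v).
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # Q K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    context = matmul(weights, v)  # A V
    return weights, context


q = [[1.0, 0.0], [0.0, 1.0]]                   # two text tokens
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]       # three visual positions
v = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weights, context = cross_modal_attention(q, k, v)
```

Each row of `weights` sums to 1, and because `v` is the identity matrix here, each row of `context` equals the corresponding row of attention weights, which makes the weighting easy to inspect.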

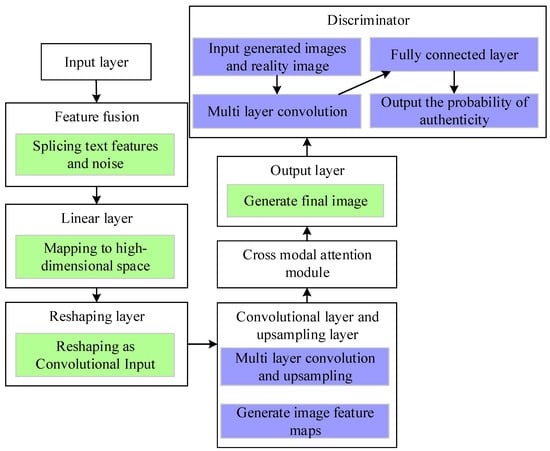

Figure 4 presents the GAN architecture with the integrated cross-modal attention module.

Figure 4.

GAN structure incorporating cross-modal attention module.

Figure 4 shows a GAN architecture with an integrated cross-modal attention module, designed to create high-quality game art resources from detailed textual descriptions. The system takes two inputs: text features and a noise vector. The text features are derived from the description, while the noise vector introduces variation into the generated image. A typical user input might be “a spring garden with blooming flowers, sunlight filtering through the leaves, and a classical-style pavilion in the distance”. A Transformer-based text encoder processes this input and extracts salient features such as “spring”, “garden”, “sunlight”, “leaves”, and “classical-style pavilion”, which are then converted into high-dimensional vectors for further processing.

The feature fusion layer concatenates the text features with the noise vector to form a single input vector; the randomly sampled noise adds diversity to the generated images. A linear layer then maps the concatenated vector to a higher-dimensional space, preparing the input for the subsequent convolutional operations and giving the network capacity to capture more intricate patterns. The reshaping layer converts this high-dimensional vector into a set of feature maps, which provide the initial structural framework for image generation. These feature maps pass through several convolutional and upsampling layers: the convolutional layers extract local features, while the upsampling layers progressively increase spatial resolution, building up the basic outline of the image.
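The fusion, projection, reshaping, and upsampling steps can be sketched as follows. This is an untrained illustration under stated assumptions: the linear layer uses random Gaussian weights, the feature sizes (16-dimensional text features, 8-dimensional noise, four 4 × 4 maps) are arbitrary, nearest-neighbour upsampling stands in for whatever upsampling the study uses, and the convolutional layers are omitted. All function names are hypothetical.

```python
import random


def fuse(text_feat, noise):
    """Feature fusion: concatenate text features and the noise vector."""
    return list(text_feat) + list(noise)


def linear(x, weights, bias):
    """Fully connected layer y = W x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def reshape(vec, channels, h, w):
    """Reshape a flat vector into `channels` feature maps of size h x w."""
    if len(vec) != channels * h * w:
        raise ValueError("vector length does not match channels * h * w")
    size = h * w
    return [[vec[c * size + y * w: c * size + (y + 1) * w] for y in range(h)]
            for c in range(channels)]


def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling: repeat every value along both axes."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def initial_feature_maps(text_feat, noise, channels, h, w, rng):
    """Fuse inputs, project with an untrained random linear layer, reshape, upsample."""
    x = fuse(text_feat, noise)
    out_dim = channels * h * w
    weights = [[rng.gauss(0.0, 0.1) for _ in x] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    maps = reshape(linear(x, weights, bias), channels, h, w)
    return [upsample2x(m) for m in maps]


rng = random.Random(42)
text_feat = [rng.uniform(-1.0, 1.0) for _ in range(16)]  # stand-in for encoder output
noise = [rng.gauss(0.0, 1.0) for _ in range(8)]          # z sampled from N(0, I)
maps = initial_feature_maps(text_feat, noise, channels=4, h=4, w=4, rng=rng)
print(len(maps), len(maps[0]), len(maps[0][0]))  # 4 8 8
```

Sampling a different `noise` vector for the same `text_feat` changes the projected feature maps, which is the mechanism by which one description can yield several distinct images.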

To make the generated images more consistent with their text descriptions, the cross-modal attention module computes the similarity between the text features and the features extracted from the generated image and produces a set of attention weights. These weights indicate the importance of different regions or attributes, allowing the model to weight the feature maps of the generated image accordingly. The model can therefore concentrate on the key elements of the description, yielding images that are both accurate and rich in detail. The weighted feature maps then pass to the output layer, which produces the final image. The Discriminator evaluates this image against real images and outputs a probability score indicating how likely the image is to be real. Using this feedback, the Generator iteratively updates its parameters, improving image quality until its outputs are difficult to distinguish from the real images in the training data.
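The text does not state which adversarial loss is used. As an assumption, the sketch below shows the common binary cross-entropy formulation with the non-saturating generator loss, computed from discriminator probabilities; a conditional variant would also feed the text features to the Discriminator. Function names are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0.

    d_real, d_fake: lists of discriminator probabilities in (0, 1).
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: minimise -log D(G(z))."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# When D cannot tell real from fake (p = 0.5), its loss is 2 ln 2.
print(round(discriminator_loss([0.5], [0.5]), 4))  # 1.3863
```

The non-saturating form is usually preferred over minimising log(1 - D(G(z))) because it gives the Generator stronger gradients early in training, when the Discriminator easily rejects its samples.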

This process shows how deep learning can be applied to cross-modal data processing, especially in game art generation. The cross-modal attention mechanism enables the model to produce high-quality images that closely match their text descriptions, which is important when creating art resources for game development. With simple text inputs, developers can quickly generate game art assets that meet their requirements, improving both development efficiency and creative flexibility.

The cross-modal attention mechanism dynamically adjusts the relationship between the generated image and its text description, which makes the model easier to integrate into Unity3D. Combined with GANs, it allows game art assets to be generated quickly for specific in-game scenarios and real-time requirements. For instance, when a game scene changes through lighting adjustments or scene transitions, the model can rapidly produce characters, environments, or props that match the updated text descriptions or image features, removing the labor-intensive manual adjustments required by traditional methods. This real-time adaptability speeds up the production of high-quality art assets and shortens the development cycle. In Unity3D, text input is passed to the model through a dedicated interface and controls the style and details of the generated visuals, so the generated content can be steered precisely with text prompts and stays consistent with them.

For real-time operation of the deep learning model within Unity3D, the Barracuda inference framework is used. Barracuda supports the deployment of a range of neural network models and runs inference directly inside Unity3D. The trained Transformer–GAN model is converted into a format that Barracuda can load (Barracuda imports models in the ONNX format), enabling local inference without external server support. This integration speeds up image generation and keeps response latency low, meeting the strict real-time demands of game development.

In the Unity3D environment, an asynchronous computation pipeline keeps the cross-modal attention mechanism responsive in real time. When a text description is received, the pipeline computes the attention weights between text and image independently of the main thread's rendering tasks. Generation can therefore run concurrently with other game operations, so inference latency does not stall rendering and gameplay remains smooth. For asset management, Unity3D's AssetDatabase and Prefab system are integrated with the generated image content: generated images are automatically stored as game assets and managed through the Prefab system, which simplifies access and reuse by developers. This integration reduces the repetitive labor traditionally involved in art creation and improves the reusability and adaptability of resources.
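The off-main-thread pattern described above can be illustrated outside Unity. In Unity itself this would be written in C# (for example with async tasks or coroutines). The Python sketch below uses only the standard library's `concurrent.futures` to show the idea: inference is submitted to a worker thread, and the main loop keeps producing frames and polls for completion instead of blocking. `generate_asset` and `run_frames` are hypothetical names, and the sleep merely stands in for model inference.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor


def generate_asset(prompt: str) -> str:
    """Hypothetical stand-in for model inference; sleeps to mimic latency."""
    time.sleep(0.25)  # roughly the per-image inference time reported in Section 3.2
    return f"texture for: {prompt}"


def run_frames(prompt: str, frame_time: float = 1 / 60):
    """Keep 'rendering' frames while generation runs on a worker thread."""
    frames_rendered = 0
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending: Future = pool.submit(generate_asset, prompt)
        # The main loop never waits on inference; it only checks whether it finished.
        while not pending.done():
            time.sleep(frame_time)  # placeholder for one frame of game work
            frames_rendered += 1
        result = pending.result()
    return result, frames_rendered


asset, frames = run_frames("castle in a fantasy world")
print(asset)        # texture for: castle in a fantasy world
print(frames >= 1)  # True
```

The design choice is the same in either language: the render loop owns the main thread, and generated content is picked up only once the worker signals completion.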

The cross-modal attention mechanism is implemented with a multi-head attention structure consisting of eight attention heads. This configuration lets the model extract features from distinct representation subspaces, improving its ability to capture relationships between text and images at multiple levels. To keep the computation efficient, the standard scaled dot-product attention formulation is used. Its computational cost is governed mainly by the number of image regions and text tokens and by the dimensionality of the input features; for a fixed feature dimensionality, the number of heads determines how that dimensionality is divided among parallel attention maps.
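With eight heads, the feature dimension is split into eight subspaces, and each head computes its own attention map between image regions and text tokens. The NumPy sketch below is illustrative only. The projection matrices are random placeholders rather than trained weights, the function names are hypothetical, and image regions act as queries over text tokens. The helper `attention_score_macs` counts multiply-accumulates in the two attention matrix products (QKᵀ and weights·V). It shows that, for a fixed model width, this count depends on the numbers of regions and tokens and on the width, not on the head count.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def multi_head_cross_attention(queries, keys_values, w_q, w_k, w_v, w_o, num_heads=8):
    """Image queries (R, d) attend to text tokens (L, d) with num_heads heads."""
    r, d = queries.shape
    l = keys_values.shape[0]
    assert d % num_heads == 0, "model width must be divisible by the head count"
    d_head = d // num_heads

    # Project, then split the feature dimension into subspaces: (heads, n, d_head).
    q = (queries @ w_q).reshape(r, num_heads, d_head).transpose(1, 0, 2)
    k = (keys_values @ w_k).reshape(l, num_heads, d_head).transpose(1, 0, 2)
    v = (keys_values @ w_v).reshape(l, num_heads, d_head).transpose(1, 0, 2)

    # Each head computes its own (R, L) attention map in its own subspace.
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head), axis=-1)
    heads = weights @ v  # (heads, R, d_head)

    # Concatenate heads back to (R, d) and mix them with the output projection.
    merged = heads.transpose(1, 0, 2).reshape(r, d)
    return merged @ w_o, weights


def attention_score_macs(num_queries, num_keys, d_model, num_heads):
    """Multiply-accumulates for QK^T plus weights @ V, summed over all heads."""
    d_head = d_model // num_heads
    return num_heads * 2 * num_queries * num_keys * d_head


rng = np.random.default_rng(0)
d = 64
w_q, w_k, w_v, w_o = (rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(4))
image_regions = rng.normal(size=(16, d))
text_tokens = rng.normal(size=(7, d))
out, attn = multi_head_cross_attention(image_regions, text_tokens, w_q, w_k, w_v, w_o)
print(out.shape, attn.shape)  # (16, 64) (8, 16, 7)
print(attention_score_macs(16, 7, 64, 1) == attention_score_macs(16, 7, 64, 8))  # True
```

The projections add a further cost proportional to d² per region or token, which likewise does not depend on how many heads the width is split into.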

3. Experiments and Analysis

3.1. Experimental Design

To rigorously assess the performance of the proposed GAN structure with an integrated cross-modal attention module for generating game art, a series of experiments was carried out on the previously mentioned public datasets. These datasets span multiple styles and environments, from fantasy realms to modern cityscapes and historical settings, so that the training data were diverse enough to support broad generalization. The evaluation combined technical and subjective metrics. The technical metrics were the Inception Score (IS) and the Fréchet Inception Distance (FID), which measure the diversity and quality of the generated images. In the context of game art generation, IS reflects how diverse and how clearly recognizable the produced images are (higher is better), while FID quantifies the similarity between the feature distributions of generated and real images (lower is better). Diversity holds particular importance in game development, as art assets must cover a broad range of styles and visual effects to suit varying scenes, characters, and interactive requirements. Image quality, in turn, plays a critical role in player immersion and the overall gaming experience, so generated images must sustain high levels of realism and artistic coherence.
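For reference, both metrics can be computed from network outputs in a few lines. This NumPy sketch is a generic illustration, not the evaluation code used in this study. It assumes the class probabilities (for IS) and the feature means and covariances (for FID) have already been obtained, conventionally from an Inception-v3 network, and it omits the usual split-averaging for IS. Reference FID implementations usually call `scipy.linalg.sqrtm`. The sketch instead uses the equivalent trace identity Tr((Σ₁Σ₂)^{1/2}) = Σᵢ √λᵢ(Σ₁Σ₂).

```python
import numpy as np


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS = exp(E_x[KL(p(y|x) || p(y))]) from an (N, K) matrix of class probabilities."""
    marginal = probs.mean(axis=0, keepdims=True)  # p(y), averaged over generated images
    kl = (probs * (np.log(probs + eps) - np.log(marginal + eps))).sum(axis=1)
    return float(np.exp(kl.mean()))


def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    """FID = ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^{1/2}) for Gaussian feature statistics."""
    diff = mu1 - mu2
    # Tr((S1 S2)^{1/2}) is the sum of square roots of the eigenvalues of S1 S2,
    # which are real and non-negative for covariance matrices (up to round-off).
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)


# Confident predictions spread evenly over 10 classes give the maximum IS of 10.
print(round(inception_score(np.eye(10)), 4))  # 10.0

# Identical feature statistics give an FID of zero.
rng = np.random.default_rng(0)
feats = rng.normal(size=(500, 8))
mu, sigma = feats.mean(axis=0), np.cov(feats, rowvar=False)
print(abs(frechet_distance(mu, sigma, mu, sigma)) < 1e-8)  # True
```

The two toy cases mark the ends of each scale: IS is bounded above by the number of classes, and FID reaches zero only when the generated and real feature statistics coincide.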

In addition, user survey scores were collected to gauge how well the generated content matched the provided textual descriptions and to capture its overall aesthetic appeal and the user experience. The proposed model was benchmarked against several leading image generation architectures: the basic GAN, the Conditional GAN (CGAN), the Deep Convolutional GAN (DCGAN), StyleGAN, and the recent image generation methods of references [34,35]. Reference [34] presents a GAN-based approach for controlling human pose generation in images, aimed at video content creation. Reference [35] introduces a multimodal deep generative model utilizing the Metropolis–Hastings algorithm, exploring the mechanisms of information transfer and communication across different modalities. These models were selected to underscore the distinct advantages of incorporating cross-modal attention in generating highly detailed and contextually consistent game art. The comparative analysis highlights the superior performance of the proposed method in image generation quality, detail retention, and textual alignment, particularly within the realm of game art creation.

The experiments were conducted on a workstation equipped with an NVIDIA RTX 3090 GPU. All models were implemented in PyTorch (version 1.9.0), while auxiliary Python (version 3.8) libraries such as NumPy and Pandas were used for data manipulation and result analysis. Hyperparameters were tuned over multiple trial runs. A learning rate of 0.0002 was adopted with a batch size of 64, and training was capped at 200 epochs to achieve a robust learning curve while avoiding both overfitting and undertraining. The batch size was chosen by comparing several values in initial experiments: 64 offered the best compromise between computational efficiency and convergence speed, whereas smaller batches produced unstable gradient estimates and larger batches consumed excessive memory and slowed training. The epoch count was chosen with convergence and overfitting in mind: after 200 epochs, the model showed stable performance and strong generalization without significant overfitting, indicating that training was sufficient. The training time for each phase was approximately 6 h for text feature extraction, 18 h for image generation and feature fusion, and 8 h for model optimization and evaluation. The model was trained from scratch, without transfer learning.
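The reported training settings can be gathered into a small configuration object, which also makes the step arithmetic explicit. This is an illustrative sketch: `TrainingConfig` and `PHASE_HOURS` are hypothetical names, and the dataset size of 10,000 samples is a placeholder, since the number of training samples is not given here. The step count assumes the final partial batch is kept (no `drop_last`).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    learning_rate: float = 2e-4  # 0.0002, as reported
    batch_size: int = 64
    epochs: int = 200

    def steps_per_epoch(self, num_samples: int) -> int:
        # The last, partial batch still counts as one optimizer step.
        return math.ceil(num_samples / self.batch_size)

    def total_steps(self, num_samples: int) -> int:
        return self.epochs * self.steps_per_epoch(num_samples)


# Reported approximate wall-clock time per training phase, in hours.
PHASE_HOURS = {
    "text feature extraction": 6,
    "image generation and feature fusion": 18,
    "model optimization and evaluation": 8,
}

cfg = TrainingConfig()
print(cfg.steps_per_epoch(10_000))  # 157 (10,000 is a hypothetical dataset size)
print(cfg.total_steps(10_000))      # 31400
print(sum(PHASE_HOURS.values()))    # 32
```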

3.2. Experimental Results and Analysis

To demonstrate the capabilities of the proposed model, several representative textual descriptions were chosen and corresponding images were generated. Figure 5, Figure 6 and Figure 7 show examples of game scenes generated by the model.

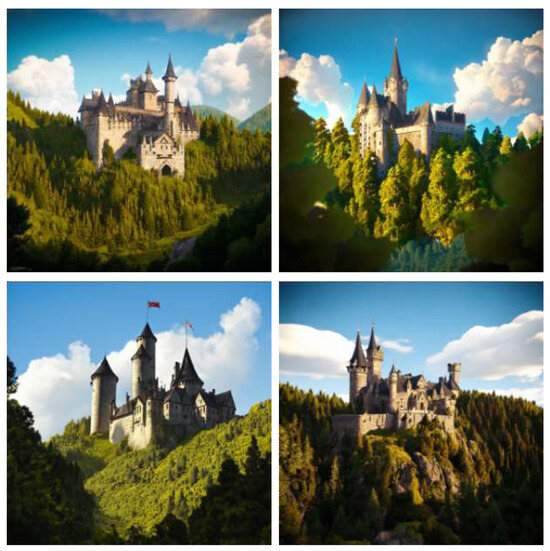

Figure 5.

Castle in a fantasy world.

Figure 6.

Nightscape of a modern city.

Figure 7.

Ancient battlefield.

The images in Figure 5, Figure 6 and Figure 7 show clear, coherent compositions with refined detail and a balanced arrangement of scene elements. The model's ability to render both the overall layout and the fine details of each scene is especially apparent in these examples. In the “castle in a fantasy world” scene, for instance, the fortress rises imposingly atop a mountain, and the dense forest surrounding its base and the clouds floating above are depicted with considerable precision, lending the scene a strong sense of realism. This fidelity, particularly in compositional balance and detailing, is attributed largely to the cross-modal attention mechanism, which extracts the key content of the textual description and injects it into the image generation process. The model also produces images across a broad range of genres and settings: from dreamlike fantasy worlds to detailed urban nightscapes, the generated visuals remain rich and varied. It reliably reproduces the key elements and details of each scene across diverse artistic styles. In the “ancient battlefield” scene, for instance, warriors fighting across a vast plain, with horses galloping in the distance, convey the dynamism and depth of the scene. This precision, from the overall composition down to fine detail, gives each generated image a sense of realism and vividness.

Despite consistent alignment with text prompts in most cases, the model encounters limitations with complex or ambiguous descriptions, which may lead to misalignment between the generated content and original input. To address these failures, several strategies were employed. Analysis of common failure patterns revealed that issues arose from ambiguous terminology, insufficiently detailed descriptions, or vague language in the input text. When abstract or subjective concepts were involved, the model occasionally produced vague or imprecise imagery. This challenge can be mitigated by enhancing the clarity and specificity of the text descriptions.

Further adjustments were made to the handling of text encoding to address these cases. For example, incorporating more detailed contextual information allowed complex descriptions to be interpreted better, while varying the descriptive texts during training improved adaptability to complex language structures. The cross-modal attention mechanism also played a vital role: by tuning the number of attention heads and refining the processing strategy, the model captured key textual information more reliably, reducing biases in the generated results and improving overall alignment with the input descriptions.

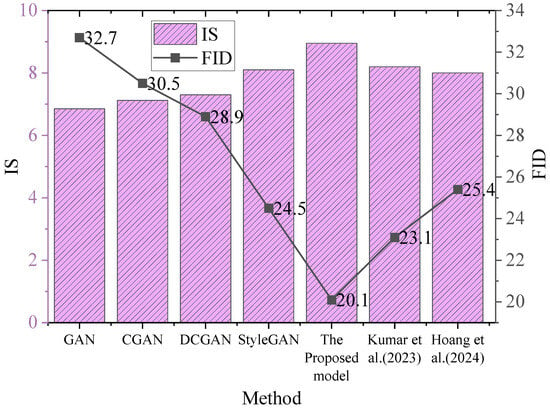

Figure 8 compares the IS and FID of the different models, reflecting the quality and diversity of the images each one generates.

Figure 8.

Performance of different models in terms of IS and FID (Kumar et al. (2023) [34], Hoang et al. (2024) [35]).

As depicted in Figure 8, the proposed model surpassed traditional methods such as GAN, CGAN, DCGAN, and StyleGAN in both Inception Score (IS) and Fréchet Inception Distance (FID), and also showed clear advantages over the recent methods in the literature [34,35]. Specifically, the method in [34] achieved an IS of 8.2 and an FID of 23.1, while the approach in [35] attained an IS of 8.0 and an FID of 25.4. Although these methods performed well in image quality and diversity, the proposed model outperformed both.

With an IS of 8.95 and an FID of 20.1, the proposed model shows significant improvements in the diversity and quality of generated images. The higher IS indicates stronger performance in both image quality and diversity, and it invites comparison with other models, particularly those reported in the literature. Although the proposed model exceeded GAN, CGAN, DCGAN, and StyleGAN in IS, this improvement is not without challenges and may reflect a trade-off between image diversity and generation quality; further optimization may cause the score to plateau rather than keep rising. The cross-modal attention mechanism and the generative network architecture contributed substantially to the gain in image quality. In terms of FID, the model achieved a substantial reduction relative to both the traditional methods and those presented in the literature, reflecting design choices aimed at narrowing the distance between generated and real images. The combination of cross-modal attention and the Transformer architecture strengthened text–image alignment, producing images that are richer in detail, more consistent, and more diverse. These advancements have considerable practical implications for game art creation.
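To put the reported gaps in proportion, the relative improvements can be computed directly from the IS and FID values given above. The sketch only does arithmetic on those published numbers. `relative_gain` is a hypothetical helper that expresses each gain as a percentage of the baseline's value, with the sign flipped for FID because lower is better.

```python
# IS (higher is better) and FID (lower is better) as reported in Section 3.2.
PROPOSED = {"IS": 8.95, "FID": 20.1}
BASELINES = {
    "Kumar et al. [34]": {"IS": 8.2, "FID": 23.1},
    "Hoang et al. [35]": {"IS": 8.0, "FID": 25.4},
}


def relative_gain(ours: float, theirs: float, higher_is_better: bool) -> float:
    """Improvement of `ours` over `theirs` as a percentage of the baseline value."""
    delta = ours - theirs if higher_is_better else theirs - ours
    return 100.0 * delta / theirs


for name, scores in BASELINES.items():
    is_gain = relative_gain(PROPOSED["IS"], scores["IS"], higher_is_better=True)
    fid_gain = relative_gain(PROPOSED["FID"], scores["FID"], higher_is_better=False)
    print(f"{name}: IS +{is_gain:.1f}%, FID -{fid_gain:.1f}%")
# Kumar et al. [34]: IS +9.1%, FID -13.0%
# Hoang et al. [35]: IS +11.9%, FID -20.9%
```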

While methods presented in the literature [34,35] have made strides in improving image generation quality, their focus remains primarily on the image generation process itself. In contrast, the proposed approach, which integrates cross-modal attention, addresses the interplay between textual and visual content, ensuring both semantic consistency and visual diversity throughout the generation process. This integration not only elevates image quality but also offers an efficient and adaptable solution for real-time game art generation and rapid prototyping.

To further assess the model’s effectiveness, a statistical significance analysis compared the IS and FID of the various models using t-tests. The results, presented in Table 2, show a significant difference in both IS and FID between the traditional GAN and the proposed model (p < 0.001), reflecting a marked increase in IS and a considerable reduction in FID. The comparison between CGAN and the proposed model also yielded a significant difference, reinforcing the advantages of the proposed approach in generating diverse, high-quality images. Overall, the proposed model consistently outperformed the comparison models on both metrics, with clear advantages in image quality, diversity, and the match between generated and real images. These differences support the effectiveness of the proposed method, particularly in image quality, richness of detail, and diversity.
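The section does not state which t-test variant was used or how many runs each score was averaged over. As one common choice for comparing two models' scores across repeated runs, Welch's unequal-variance t-test can be sketched with the standard library. The samples below are toy numbers, not data from this study, and `welch_t_test` is a hypothetical helper. The p-value additionally needs the Student t distribution, for example via `scipy.stats.ttest_ind(a, b, equal_var=False)`, which is left out to keep the sketch dependency-free.

```python
import math
from statistics import mean, variance


def welch_t_test(a, b):
    """Welch's t statistic and Welch–Satterthwaite degrees of freedom for two samples."""
    va = variance(a) / len(a)  # squared standard error of the mean of a
    vb = variance(b) / len(b)  # squared standard error of the mean of b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Toy per-run scores for two models, for illustration only.
t, df = welch_t_test([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(round(t, 4), round(df, 4))  # -3.6742 4.0
```

Welch's variant avoids assuming that both models' scores have the same variance across runs, which is rarely guaranteed when comparing different architectures.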

Table 2.

Statistical significance analysis.

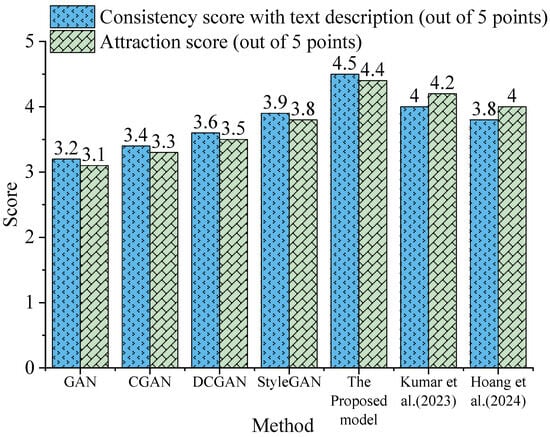

The results of the user survey scores are shown in Figure 9.

Figure 9.

User survey scores (Kumar et al. (2023) [34], Hoang et al. (2024) [35]).

As illustrated in Figure 9, the proposed model exhibited notable superiority over traditional models, such as GAN, CGAN, DCGAN, and StyleGAN, in both text description consistency and visual appeal scores, also surpassing the methods from the literature [34,35]. Specifically, the method outlined in study [34] achieved a consistency score of 4.0 and an appeal score of 4.2, while the approach in study [35] resulted in a consistency score of 3.8 and an appeal score of 4.0. Although these models demonstrated strong visual appeal, a distinct gap was observed when compared to the proposed model.

The proposed model achieved consistency and appeal scores of 4.5 and 4.4, respectively, a substantial improvement. It excelled in particular in consistency, aligning the generated images closely with the input text descriptions, so that the images reflected the semantic content of the text while maintaining a high level of visual appeal. This result is attributed mainly to the cross-modal attention mechanism, which dynamically adjusts attention weights while processing the relationship between text and image. As a result, the generated images capture the details specified in the text more accurately, avoid monotonous outputs, and show greater diversity and richness.

The methods presented in studies [34,35] improve text consistency and visual appeal, but they focus primarily on the visual quality of the generated images. In contrast, the proposed model balances text consistency and visual appeal throughout the generation process and performs better on both dimensions. This balance is particularly significant in game art creation, where generated images must not only adhere to creative textual descriptions but also possess substantial visual appeal and artistic quality.

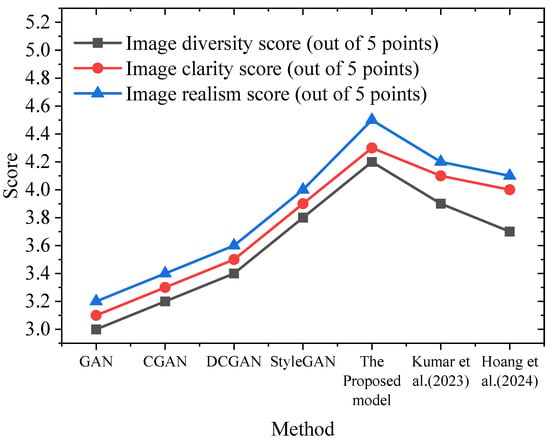

In summary, the proposed model shows clear advantages in both the text consistency and the visual appeal of the generated images. When integrating text descriptions with visual content, the model aligns the generated images precisely with the textual descriptions while preserving high-quality visual effects. This capability enhances the model’s practical value, especially in fields such as game art creation and content generation. The image diversity scores are shown in Figure 10.

Figure 10.

Image diversity scores (Kumar et al. (2023) [34], Hoang et al. (2024) [35]).

As depicted in Figure 10, the proposed model outperformed traditional methods such as GAN, CGAN, DCGAN, and StyleGAN, along with the approaches outlined in the literature [34,35], across the image diversity, clarity, and realism metrics. Specifically, the method in study [34] achieved an image diversity score of 3.9, an image clarity score of 4.1, and an image realism score of 4.2, while the method in study [35] achieved a diversity score of 3.7, clarity score of 4.0, and realism score of 4.1. Despite these methods showing strong performance in terms of visual effects, a clear gap remains when compared to the proposed model.

The model attained an image diversity score of 4.2, clarity score of 4.3, and realism score of 4.5, highlighting substantial improvements. Particularly, the model excelled in realism, generating images that not only exhibited high clarity but also realistically replicated natural visual effects. This enhancement proves crucial in practical applications, particularly in game art creation, where images must present high-quality details while maintaining visual alignment with real-world environments.

The methods introduced in the literature [34,35] showed notable strengths in image clarity and realism; however, their relatively lower diversity scores suggest less richness and variability in the generated images than the proposed approach achieves. The combination of the cross-modal attention mechanism and a Transformer-based architecture not only improves clarity and realism but also substantially increases image diversity, so that each generated image presents distinct visual effects and artistic styles.

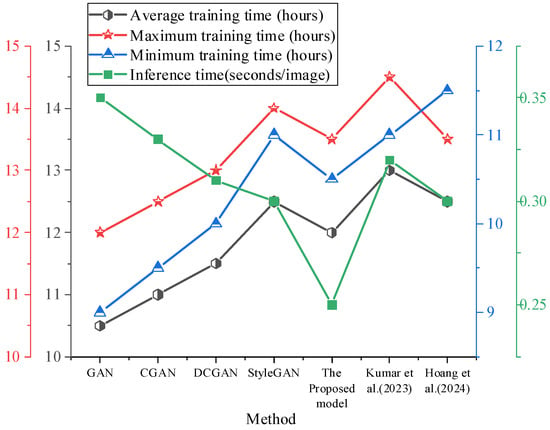

The proposed model demonstrates superior performance in terms of image diversity, clarity, and realism when evaluated against traditional GAN-based techniques and recent state-of-the-art approaches. Through architectural optimization and enhanced cross-modal information integration, the model generates artistically expressive and diverse game images, further advancing the practical application of GANs in game art creation. Figure 11 provides an analysis of the training times across the different models, presenting the average, maximum, and minimum durations, alongside a comparative evaluation of inference times to offer a comprehensive assessment of the performance during both the training and inference stages.

Figure 11.

Analysis of model training time (Kumar et al. (2023) [34], Hoang et al. (2024) [35]).

As illustrated in Figure 11, the proposed model maintained a training time distribution comparable to the other methods, with an average duration of 12 h, a maximum of 13.5 h, and a minimum of 10.5 h, demonstrating stable computational requirements. Although the training time slightly exceeded that of traditional GANs and their variants, this increase is closely associated with the model’s complexity and the integration of the cross-modal attention mechanism, which enhances the quality of the generated results.

In terms of inference time, the proposed model achieved a processing speed of 0.25 s per image, demonstrating greater computational efficiency compared to traditional GAN-based methods such as GAN and CGAN, which generally require longer inference times. The method presented in [34] recorded an inference time of 0.32 s per image, slightly higher than that of the proposed model, further highlighting the advantage in computational efficiency during inference. This improvement is particularly critical in applications requiring frequent image generation, such as game development and real-time content creation.
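The per-image inference times translate directly into throughput and into the number of rendered frames that elapse while one image is generated. The 60 fps target below is an assumption chosen for illustration. At that rate, a single 0.25 s generation spans about 15 frames, which is why generation has to run off the main thread rather than inside one frame.

```python
# Per-image inference times reported in Section 3.2, in seconds.
INFERENCE_S = {"proposed": 0.25, "Kumar et al. [34]": 0.32}
FRAME_RATE = 60  # assumed target frame rate, frames per second

for name, seconds in INFERENCE_S.items():
    throughput = 1.0 / seconds              # images generated per second
    frames_spanned = seconds * FRAME_RATE   # frames that elapse during one generation
    print(f"{name}: {throughput:.1f} images/s, ~{frames_spanned:.1f} frames at {FRAME_RATE} fps")
# proposed: 4.0 images/s, ~15.0 frames at 60 fps
# Kumar et al. [34]: 3.1 images/s, ~19.2 frames at 60 fps
```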

Although the method in [35] achieved an inference time comparable to that of the proposed model, its training duration was slightly longer, suggesting higher computational demands during training. In contrast, the proposed model maintained balanced training efficiency while showing clear advantages in inference performance. Despite a marginally longer training time, its inference speed enables rapid, high-quality image generation, making the model well suited to real-time applications that demand prompt responses, including game development and real-time image synthesis.

The cross-modal AIGC approach introduced in this study extends beyond Unity3D, demonstrating adaptability to alternative game engines such as Unreal Engine and Godot. Unity3D serves as the primary platform due to its robust plugin ecosystem, including Barracuda for neural network inference, and its efficient resource management framework. When adapting this method to other engines, considerations must account for their compatibility with deep learning inference frameworks. Unreal Engine 5, for instance, integrates advanced rendering technologies such as Nanite and Lumen, offering high-fidelity visualization but potentially necessitating further optimizations for lightweight AI model inference, such as leveraging Unreal Engine’s Blueprints in conjunction with its Python API. Meanwhile, Godot, with its GDExtension support for C++ and Python, provides extension capabilities, though its AI toolchain remains less developed relative to Unity3D and Unreal Engine, requiring additional modifications to accommodate the Transformer–GAN architecture for both inference and training.

When applying this approach across different engines under consistent experimental conditions, variations in outcomes arise due to multiple factors. Unreal Engine’s advanced lighting computation enhances the visual fidelity of generated AIGC content but incurs additional computational overhead. Unity3D, in contrast, exhibits greater efficiency in lightweight AI inference, offering advantages in real-time dynamic adjustments of AIGC-generated content. Performance disparities also emerge in real-time interactivity, with Godot and Blender exhibiting comparatively lower responsiveness. Additionally, Unity3D’s C#-based development environment facilitates more efficient iteration and integration, whereas Unreal Engine and Godot demand supplementary adaptation efforts to achieve seamless AIGC module integration.

4. Conclusions

In conclusion, this study achieves significant gains in technical metrics, user experience, and image diversity, largely owing to the introduction of a cross-modal attention module. This mechanism handles the complexities of multimodal information fusion effectively: by dynamically modulating the weights assigned to different modalities, the model exploits textual information throughout the generation process and yields images that closely match user expectations. This adaptive mechanism not only improves the quality of the generated images but also strengthens the model’s generalization and adaptability. Although the training duration is substantial, this investment is considered worthwhile, as it gives the model stronger learning capability and greater precision in image generation. In practice, high-quality image generation is a critical asset for game development and other creative domains. Game developers can use the model to generate sophisticated game scenes and characters rapidly, streamlining development and raising the overall quality of the games produced. The benefits are particularly pronounced in projects built on the Unity3D engine. The model is also applicable to other creative fields, including advertising design and film special effects, where it can serve as a capable image generation tool.

Future research endeavors will focus on further refining model architecture and training methodologies to curtail training time and bolster training efficiency. Several key aspects will be addressed to optimize the existing framework. First, reducing the network size will be explored to enhance training efficiency and accelerate inference speed. Second, model compression techniques such as knowledge distillation and pruning will be investigated to lower computational costs while maintaining the quality and diversity of the generated images. Lastly, strategies for improving inference efficiency will be examined, including optimizing convolutional operations, eliminating redundant computations, and applying quantization techniques to reduce both storage and computational demands. These refinements will not only expedite the training process but also enhance real-time generation capabilities and scalability for large-scale content creation. Furthermore, extending the model’s capabilities to encompass a wider array of image generation tasks—such as portraiture and landscape painting—will aid in affirming its applicability and generalization potential across various disciplines. Through these initiatives, the overarching goal remains the enhancement of the model’s performance and practicality, ultimately offering robust support for game development and an array of other creative industries.
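Of the compression routes listed, post-training quantization is the simplest to illustrate. The NumPy sketch below shows symmetric per-tensor int8 quantization of a single random weight matrix. It is a generic textbook technique rather than a method from this study, the function names are hypothetical, and production toolchains typically add refinements such as per-channel scales and calibration data.

```python
import numpy as np


def quantize_int8(weights: np.ndarray):
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = float(np.abs(weights).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(size=(256, 256)).astype(np.float32)
q, scale = quantize_int8(w)
err = np.abs(dequantize(q, scale) - w).max()
print(w.nbytes // q.nbytes)     # 4: int8 storage is a quarter of float32
print(err <= scale / 2 + 1e-5)  # True: rounding error is at most half a step
```

The fourfold storage reduction is exact. The accuracy cost depends on how the rounding error, bounded by half a quantization step per weight, propagates through the network, and it would need to be measured against the IS and FID baselines.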

Author Contributions

Q.L.: Conceptualization, validation, investigation, writing—original draft preparation, writing—review and editing, visualization. J.L.: software, formal analysis, data curation, writing—original draft preparation, writing—review and editing, supervision. W.H.: methodology, resources, writing—original draft preparation, writing—review and editing, project administration. All authors have read and agreed to the published version of the manuscript.

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

- AlZoubi, O.; AlMakhadmeh, B.; Yassein, M.B.; Mardini, W. Detecting naturalistic expression of emotions using physiological signals while playing video games. J. Ambient. Intell. Humaniz. Comput. 2021, 14, 1133–1146.

- Sekhavat, Y.A.; Azadehfar, M.R.; Zarei, H.; Roohi, S. Sonification and interaction design in computer games for visually impaired individuals. Multimed. Tools Appl. 2022, 81, 7847–7871.

- von Gillern, S.; Stufft, C. Multimodality, learning and decision-making: Children’s metacognitive reflections on their engagement with video games as interactive texts. Literacy 2023, 57, 3–16.

- Itzhak, N.B.; Franki, I.; Jansen, B.; Kostkova, K.; Wagemans, J.; Ortibus, E. An individualized and adaptive game-based therapy for cerebral visual impairment: Design, development, and evaluation. Int. J. Child-Comput. Interact. 2022, 31, 100437.

- Delmas, M.; Caroux, L.; Lemercier, C. Searching in clutter: Visual behavior and performance of expert action video game players. Appl. Ergon. 2021, 99, 103628.

- Anantrasirichai, N.; Bull, D. Artificial intelligence in the creative industries: A review. Artif. Intell. Rev. 2021, 55, 589–656.

- Zhang, W.; Shankar, A.; Antonidoss, A. Modern art education and teaching based on artificial intelligence. J. Interconnect. Netw. 2021, 22, 2141005.

- Lv, Z. Generative artificial intelligence in the metaverse era. Cogn. Robot. 2023, 3, 208–217.

- Lai, G.; Leymarie, F.F.; Latham, W. On mixed-initiative content creation for video games. IEEE Trans. Games 2022, 14, 543–557.

- Mikalonytė, E.S.; Kneer, M. Can Artificial Intelligence make art?: Folk intuitions as to whether AI-driven robots can be viewed as artists and produce art. ACM Trans. Hum.-Robot Interact. 2022, 11, 43.

- Vinchon, F.; Lubart, T.; Bartolotta, S.; Gironnay, V.; Botella, M.; Bourgeois-Bougrine, S.; Burkhardt, J.-M.; Bonnardel, N.; Corazza, G.E.; Glăveanu, V.; et al. Artificial Intelligence & Creativity: A manifesto for collaboration. J. Creat. Behav. 2023, 57, 472–484.

- Caramiaux, B.; Alaoui, S.F. “Explorers of Unknown Planets”: Practices and Politics of Artificial Intelligence in Visual Arts. Proc. ACM Hum.-Comput. Interact. 2022, 6, 477.

- Abbott, R.; Rothman, E. Disrupting creativity: Copyright law in the age of generative artificial intelligence. Fla. L. Rev. 2023, 75, 1141. [Google Scholar]

- Leichtmann, B.; Hinterreiter, A.; Humer, C.; Streit, M.; Mara, M. Explainable artificial intelligence improves human decision-making: Results from a mushroom picking experiment at a public art festival. Int. J. Hum.–Comput. Interact. 2023, 40, 4787–4804. [Google Scholar] [CrossRef]

- Zhang, B.; Romainoor, N.H. Research on artificial intelligence in new year prints: The application of the generated pop art style images on cultural and creative products. Appl. Sci. 2023, 13, 1082. [Google Scholar] [CrossRef]

- Sunarya, P.A. Machine learning and artificial intelligence as educational games. Int. Trans. Artif. Intell. 2022, 1, 129–138. [Google Scholar]

- Wagan, A.A.; Khan, A.A.; Chen, Y.L.; Yee, P.L.; Yang, J.; Laghari, A.A. Artificial intelligence-enabled game-based learning and quality of experience: A novel and secure framework (B-AIQoE). Sustainability 2023, 15, 5362. [Google Scholar] [CrossRef]

- Lu, Y.; Liu, J.; Lv, L.; Gao, X.; Chen, W.; Zhang, Y. Re-EnGAN: Unsupervised image-to-image translation based on reused feature encoder in CycleGAN. IET Image Process. 2022, 16, 2219–2227. [Google Scholar] [CrossRef]

- de Souza, V.L.T.; Marques, B.A.D.; Batagelo, H.C.; Gois, J.P. A review on generative adversarial networks for image generation. Comput. Graph. 2023, 114, 13–25. [Google Scholar] [CrossRef]

- Özçift, A.; Akarsu, K.; Yumuk, F.; Söylemez, C. Advancing natural language processing (NLP) applications of morphologically rich languages with bidirectional encoder representations from transformers (BERT): An empirical case study for Turkish. Automatika 2021, 62, 226–238. [Google Scholar] [CrossRef]

- Gu, J.; Meng, X.; Lu, G.; Hou, L.; Minzhe, N.; Liang, X.; Yao, L.; Huang, R.; Zhang, W.; Jiang, X.; et al. Wukong: A 100 million large-scale chinese cross-modal pre-training benchmark. Adv. Neural Inf. Process. Syst. 2022, 35, 26418–26431. [Google Scholar]

- Erdem, E.; Kuyu, M.; Yagcioglu, S.; Frank, A.; Parcalabescu, L.; Plank, B.; Babii, A.; Turuta, O.; Erdem, A.; Calixto, L.; et al. Neural natural language generation: A survey on multilinguality, multimodality, controllability and learning. J. Artif. Intell. Res. 2022, 73, 1131–1207. [Google Scholar] [CrossRef]

- Goel, S.; Bansal, H.; Bhatia, S.; Rossi, R.; Vinay, V.; Grover, A. CyCLIP: Cyclic contrastive language-image pretraining. Adv. Neural Inf. Process. Syst. 2022, 35, 6704–6719.

- Zhang, W.; Cui, Y.; Zhang, K.; Wang, Y.; Zhu, Q.; Li, L.; Liu, T. A static and dynamic attention framework for multi turn dialogue generation. ACM Trans. Inf. Syst. 2023, 41, 15.

- Kaźmierczak, R.; Skowroński, R.; Kowalczyk, C.; Grunwald, G. Creating Interactive Scenes in 3D Educational Games: Using Narrative and Technology to Explore History and Culture. Appl. Sci. 2024, 14, 4795.

- Huang, Y.-L.; Yuan, X.-F. StyleTerrain: A novel disentangled generative model for controllable high-quality procedural terrain generation. Comput. Graph. 2023, 116, 373–382.

- Värtinen, S.; Hämäläinen, P.; Guckelsberger, C. Generating role-playing game quests with GPT language models. IEEE Trans. Games 2022, 16, 127–139.

- Li, C.; Wang, Y.; Zhou, Z.; Wang, Z.; Mardani, A. Digital finance and enterprise financing constraints: Structural characteristics and mechanism identification. J. Bus. Res. 2023, 165, 114074.

- Zhang, R.; Cao, Z.; Huang, Y.; Yang, S.; Xu, L.; Xu, M. Visible-Infrared Person Re-identification with Real-world Label Noise. IEEE Trans. Circuits Syst. Video Technol. 2025, 1-1.

- Zhang, R.; Yang, B.; Xu, L.; Huang, Y.; Xu, X.; Zhang, Q.; Jiang, Z.; Liu, Y. A Benchmark and Frequency Compression Method for Infrared Few-Shot Object Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5001711.

- Gao, R. AIGC Technology: Reshaping the Future of the Animation Industry. Highlights Sci. Eng. Technol. 2023, 56, 148–152.

- Ren, Q.; Tang, Y.; Lin, Y. Digital Art Creation under AIGC Technology Innovation: Multidimensional Challenges and Reflections on Design Practice, Creation Environment and Artistic Ecology. Comput. Artif. Intell. 2024, 1, 1–12.

- Deng, J. Governance Prospects for the Development of Generative AI Film Industry from the Perspective of Community Aesthetics. Stud. Art Arch. 2024, 3, 153–162.

- Kumar, L.; Singh, D.K. Pose image generation for video content creation using controlled human pose image generation GAN. Multimed. Tools Appl. 2023, 83, 59335–59354.

- Hoang, N.L.; Taniguchi, T.; Hagiwara, Y.; Taniguchi, A. Emergent communication of multimodal deep generative models based on Metropolis-Hastings naming game. Front. Robot. AI 2024, 10, 1290604.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).