Abstract

Software-defined networking (SDN) is becoming a predominant architecture for managing diverse networks. However, recent research has shown that SDN architectures are susceptible to cyberattacks, which increases their security challenges. Many researchers have used machine learning (ML) and deep learning (DL) classifiers to mitigate cyberattacks in SDN architectures. Because SDN datasets can suffer from class imbalance, the classification accuracy of predictive classifiers is undermined. Therefore, this research conducts a comparative analysis of the impact of oversampling and principal component analysis (PCA) techniques on ML and DL classifiers using publicly available SDN datasets. This approach combines mitigating class imbalance with maintaining classification performance when data dimensionality is reduced. First, oversampling techniques are used to balance the classes of the SDN datasets. Then, the classification performance of the ML and DL classifiers is evaluated and compared to observe the effectiveness of each oversampling technique on each classifier. Finally, PCA is applied to the balanced datasets, and the classifiers’ performance is evaluated and compared again. The results demonstrated that Random Oversampling outperformed the other balancing techniques. Furthermore, the XGBoost and Transformer classifiers were the models most sensitive to the oversampling and PCA algorithms. In addition, macro and weighted averages of the evaluation metrics were calculated to show the impact of imbalanced classes on each classifier.

1. Introduction

Security remains a significant challenge when deploying software-defined networking (SDN), despite its benefits [1]. Migrating networks that contain a variety of conventional devices to SDN increases system complexity, and many issues need to be resolved, including the single point of failure created by the centralized controller [2]. The problem becomes more serious if attackers take over the central controller, because they then gain unauthorized access to, and control of, the network. Several cyberattacks pose a risk to SDN, but the distributed denial-of-service (DDoS) attack is one of the main threats because it can target all of the interconnected layers: control, application, and data [3]. DDoS attacks can be grouped into two types according to the protocol level they target: network/transport-layer attacks and application-layer attacks. The communication links between the data layer and the control layer can also be targeted by a DDoS attack. Several researchers have proposed a backup controller to limit the damage of a DDoS attack, but the backup controller is itself still susceptible to DDoS [4]. Intrusion detection systems (IDSs) are a standard solution for attack detection in networks; however, conventional IDSs can struggle when the network supports the Internet of Things (IoT) alongside traditional protocols and uses SDN [5]. Because of these limitations of traditional IDSs, recent intrusion detection research has turned to machine learning (ML) and its subset, deep learning (DL), to detect advanced attacks [6]. Researchers have explored ML for network intrusion detection [7], botnet attack detection [8], and malware detection [9]. An important feature of SDN is its ability to differentiate between control and data traffic [10]. This feature makes it possible to carry out complex jobs with less human intervention and at low implementation cost [11].

One significant challenge that researchers face is the imbalanced data fed to intrusion detection systems in SDN. The IDS is an important tool for detecting attacks and abnormal activities in a network. In SDN, the distribution of network traffic and attacks can create a data imbalance that may reduce the efficacy of ML-based IDSs [12]. Most intrusion datasets have severe class imbalance ratios. For example, the InSDN dataset [13] covers many cyberattacks, such as DDoS, probe, and DoS attacks. A large class imbalance can degrade a learning algorithm’s ability to generalize to the minority classes [14]. Imbalanced data refers to a dataset in which one class, e.g., regular traffic, outnumbers another class, such as malicious traffic. Since malicious activity occurs only occasionally, it is typically the minority class in SDN-based IDS, as the majority of the traffic in the network is normal [12]. To cope with imbalanced data, resampling techniques can be used, including oversampling the minority class and undersampling the majority class. Methods of this kind include random undersampling and the synthetic minority oversampling technique (SMOTE) [12]. An effective way to learn from unbalanced data is to oversample the minority classes before training. SMOTE [15] oversamples the minority class by generating synthetic samples along the line segments that join each minority class sample to any of its k nearest minority class neighbors. Class imbalance remains an evolving challenge in the field even though it was first identified decades ago [14]. The problem is addressed in two ways [16]. The first works at the data level by balancing the dataset before classification, and the second works at the classification level by using imbalance-aware classification techniques. To achieve balanced learning, the first method adds instances to or removes instances from the training dataset, changing its size. The second method uses models that do not change an unbalanced dataset; instead, they learn from it directly [14]. Class imbalance has been studied using the most common intrusion detection datasets, such as UNSW-NB15 [17] and NSL-KDD [18], but it has not been tackled in publicly available SDN intrusion datasets. The InSDN dataset can be considered one of the earliest publicly accessible intrusion detection datasets created on an SDN testbed. Like other intrusion detection datasets, it suffers from imbalanced data. The classification performance of ML/DL classifiers becomes unstable when the training datasets are imbalanced.
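
The interpolation step that SMOTE relies on can be illustrated with a minimal, standard-library sketch. It is not the reference implementation from [15] or from any library: the function name `smote_sketch`, its parameters, and the toy feature values are assumptions made for illustration, and details such as per-sample quotas are omitted.

```python
import math
import random


def smote_sketch(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating between minority samples.

    For each synthetic point: pick a minority sample, pick one of its k nearest
    minority neighbours, and place the new point at a random position on the
    line segment joining the two.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by Euclidean distance to the base sample.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: math.dist(base, p))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Toy minority class with two features (values are made up for illustration).
minority_samples = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.3), (1.1, 1.1)]
new_points = smote_sketch(minority_samples, n_new=6)
print(len(new_points))
```

Because every synthetic point lies between two existing minority samples, the new data stay inside the region already occupied by the minority class, which is the main difference from simply duplicating rows.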

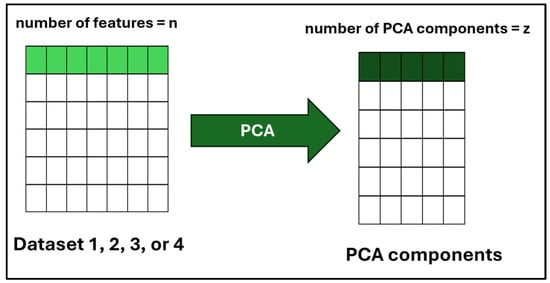

Balancing the classes of an imbalanced dataset with an oversampling technique, such as Random Oversampling, increases the size of the data. PCA can therefore be used to reduce the dimensionality of the dataset [19].
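
To make the roles of the two steps concrete, PCA can be written as follows. This is the standard textbook formulation rather than notation taken from this paper; the symbols $n$ (number of rows), $d$ (number of features), $k$ (number of retained components), $X_c$, $C$, and $W_k$ are introduced here for illustration only.

$$
\begin{aligned}
X_c &= X - \mathbf{1}\,\bar{x}^{\top}, \qquad X \in \mathbb{R}^{n \times d},\\
C &= \frac{1}{n-1}\, X_c^{\top} X_c, \qquad C\,w_j = \lambda_j w_j, \quad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_d \ge 0,\\
Z &= X_c W_k, \qquad W_k = [\,w_1, \dots, w_k\,] \in \mathbb{R}^{d \times k}, \quad k \le d.
\end{aligned}
$$

Oversampling increases $n$ (more rows), whereas PCA replaces the $d$ original features with $k$ components. The share of variance retained is $\sum_{j=1}^{k} \lambda_j \big/ \sum_{j=1}^{d} \lambda_j$.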

Therefore, it is essential to study the challenges of imbalanced classes of SDN datasets when using ML/DL classifiers. In addition, there is a need to explore the impact of using oversampling techniques and PCA to mitigate class imbalance issues of SDN datasets on the performance of ML/DL classifiers.

This paper presents a comparative study to analyze the influence of oversampling techniques and PCA on the classification performance of several ML and DL classifiers. The study utilizes four publicly available IDS datasets in the SDN environment with imbalanced classes to examine the effectiveness of oversampling techniques, PCA, and various ML and DL models on classification performance. The contribution of this study can be summarized as follows:

- Applying oversampling techniques, including Random Oversampling, synthetic minority oversampling technique (SMOTE), and adaptive synthetic sampling (ADASYN), and analyzing their results on public imbalanced SDN datasets.

- Investigating how integrating oversampling techniques with PCA affects classification performance on the datasets.

- Addressing the challenge of class imbalance issues in SDN datasets, not limited to the InSDN dataset, which is heavily used in the literature.

- Comparing and evaluating the classification performance of several ML and DL models before using oversampling techniques, after using oversampling techniques, using PCA, and without using PCA to present a comprehensive analysis of these methods for classifying cyberattacks in the SDN environment. The test accuracy and evaluation metrics (precision, recall, and F1-score) were computed to show in-depth analyses of the results.

- Comparing the computational time (in seconds) of executing each oversampling technique and PCA to evaluate their computational cost.
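
The difference between macro and weighted averages of the evaluation metrics mentioned above can be seen in a small standard-library sketch. The function names and the toy labels are assumptions for illustration; the paper does not state how its metrics were implemented.

```python
def per_class_scores(y_true, y_pred):
    """Return {label: (precision, recall, f1, support)} for every true label."""
    scores = {}
    for label in sorted(set(y_true)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[label] = (precision, recall, f1, tp + fn)
    return scores


def macro_and_weighted_f1(y_true, y_pred):
    """Macro: plain mean over classes. Weighted: mean weighted by class support."""
    scores = per_class_scores(y_true, y_pred)
    total = sum(s[3] for s in scores.values())
    macro = sum(s[2] for s in scores.values()) / len(scores)
    weighted = sum(s[2] * s[3] for s in scores.values()) / total
    return macro, weighted


# Made-up labels: 8 benign flows and 2 attack flows; one attack is missed.
y_true = ["benign"] * 8 + ["attack"] * 2
y_pred = ["benign"] * 8 + ["attack", "benign"]
macro_f1, weighted_f1 = macro_and_weighted_f1(y_true, y_pred)
print(f"macro F1 = {macro_f1:.3f}, weighted F1 = {weighted_f1:.3f}")
# prints: macro F1 = 0.804, weighted F1 = 0.886
```

The macro average treats both classes equally, so the weak minority-class score pulls it down, while the weighted average is dominated by the majority class. Reporting both makes the effect of imbalance visible.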

The rest of the paper is organized as follows: Section 2 discusses the related works, Section 3 provides background information on SDN architecture, Section 4 explains the methodology used for conducting this research, Section 5 presents and discusses the findings, and Section 6 concludes the study.

2. Related Works

The fast advancement of SDN has significantly improved network management and control. However, this progress has been accompanied by an increase in attacks, which has made the IDS an important part of securing the SDN environment. Several researchers have proposed methods, including ML, optimization algorithms, and DL, to improve the performance of IDSs. This section reviews recent developments in IDS research, covering methodologies, datasets, and outcomes. Table 1 summarizes the related works, giving the publication year, methods, datasets, and key findings of each article.

Authors in [20] proposed an intrusion detection system to identify probe attacks using a grey wolf optimizer and light gradient boosting machine classifier. They utilized the InSDN dataset, which can be considered a benchmark dataset in SDN, to train and test the IDS that they proposed. When compared with other IDSs, the proposed IDS presented optimal performance while detecting probe attacks in SDN. Their proposed model achieved an accuracy of 99.8%, which outperforms other IDS models in the literature.

Researchers in [21] created DL-based IDS for detecting and mitigating network intrusions. Their proposed model has a number of phases, starting with the data augmentation approach called deep convolutional generative adversarial networks to tackle the imbalance data issue. Then, the feature extraction method is used to extract data input using an approach based on CenterNet. After gathering efficient features, the Slime Mold Algorithm (SMA) with ResNet152V2-based DL is used to categorize the assaults in the InSDN dataset. When the intrusion on the network is identified, the proposed model of defense is triggered to quickly return to normal network connectivity. Lastly, the authors have conducted many experiments to validate the algorithms, and their findings show that they have proposed a system that can detect and mitigate intrusion efficiently. According to their results, they achieved an accuracy of 99.65%.

The paper in [22] concentrated on using real-time flow-based characteristics to identify intrusions in SDN as early as feasible. In addition to facilitating early intrusion detection, the authors aimed to identify intrusions using fewer packets per flow, which is helpful when an attack flow comprises only a few packets. They showed that machine learning algorithms work well when trained offline on a dataset, but that accuracy drops by about 25% when fewer packets are used to produce the features for the ML model. In their experiments, random forest (RF) outperformed a DL algorithm when applied to a publicly accessible SDN intrusion detection dataset. The authors achieved an accuracy of 98.72% with the random forest algorithm on the InSDN dataset. They also noted that they did not take steps to overcome the class imbalance present in the dataset.

The study in [23] used a genetic algorithm (GA) to select features, with a correlation coefficient as the fitness function. To evaluate dependence on the target variable, mutual information (MI) was used to rank the features. To detect threats in IoT networks, a hybrid DNN algorithm was trained using the selected optimal features. The hybrid DNN combines bidirectional long short-term memory, CNN, and bi-gated recurrent units. In addition to an ablation study, the effectiveness of the suggested model was compared with a number of baseline DL models. The model’s efficacy was evaluated using three important datasets: InSDN, UNSW-NB15, and CICIoT 2023, which include a variety of attack scenarios. With lower resource use, the suggested model outperforms existing models in terms of detection time and accuracy, achieving an accuracy of 98.66%.

The researchers in [24] proposed an approach that includes a synthetic data augmentation technique (S-DATE) and a diverse self-ensemble model (D-SEM) based on the particle swarm optimizer (PSO). Their approach introduced a dataset called M-En that combines three datasets from different network architectures: InSDN, IoTID-20, and UNSW-NB15. S-DATE is then used to overcome the imbalanced data distribution in the M-En dataset, improving the detection rate for both regular and anomalous traffic and facilitating better convergence of the model. They provide an ensemble model called PSO-D-SEM that uses the diversity that PSO offers to manage the complicated nature of M-En networks. Each model was trained on a part of the M-En dataset and then combined in PSO-D-SEM to increase overall performance. The test results demonstrate the advantages of their approach, with an accuracy of 98.9%. They further employed a statistical t-test to highlight the significance of the suggested PSO-D-SEM method relative to state-of-the-art approaches.

The paper in [25] proposed a two-phase method for detecting DDoS attacks in SDN based on multidimensional characteristics. First, a coarse-grained identification of DDoS attacks inside the network was conducted by analyzing the traffic data collected from the ports of the SDN switches. Then, multidimensional features were extracted from the traffic passing through suspected switches by building a multidimensional deep convolutional classifier (MDDCC) with CNN and wavelet decomposition. A simple classifier can then precisely identify attack instances based on these multidimensional features. Lastly, the origin of attacks in SDN networks can be effectively detected and isolated by combining graph theory with restricted techniques. The experimental findings show that the suggested approach, which uses very little statistical data, is capable of promptly and precisely detecting SDN network threats. The authors evaluated their model using public and generated datasets and achieved better accuracy than traditional detection techniques. The technique reduces the effect of attacks by isolating the compromised nodes and ensuring that genuine traffic continues to flow normally, even during network intrusions. This method protects the efficiency and security of the network by improving detection abilities and offering a barrier against an increased number of cyber threats. The MDDCC achieved an accuracy of 99.24% on the InSDN dataset.

The authors in [26] proposed a federated learning approach (FedLAD) used to counter attacks in SDN, particularly in multi-controller architectures. They used datasets called InSDN, CICDDoS2019, and CICDoS2017 to explore the effectiveness of the proposed model, and the results showed an accuracy of over 98%. Additionally, the FedLAD protocol’s capacity to identify DDoS assaults with high accuracy and low resource consumption is demonstrated by its assessment using traffic in real-time in SDN. The paper presented a method for using FL to identify DDoS attacks in large-scale SDN. They achieved a 99.86% binary classification accuracy rate when applied to the InSDN. Furthermore, they achieved an accuracy of 98.07% in FedLAD binary classification when applied to the InSDN.

The work in [27] presented a deep learning threat hunting framework (DLTHF) to defend SD-IoT data and identify attack vectors. First, an unsupervised feature extraction module was created that filters and converts dataset values into a protected format using the suggested long short-term memory contractive sparse AutoEncoder method in combination with data perturbation-driven encoding and normalization-driven scaling. Second, a Threat Detection System (TDS) that uses Multi-head Self-attention-based Bidirectional Recurrent Neural Networks (MhSaBiGRNN) was developed to identify various kinds of attacks from the encoded data. Specifically, in this TDS approach each event is examined and given a self-learned weight according to its level of significance. They achieved an accuracy of 99.97% with DLTHF binary classification and 99.88% with DLTHF multi-class classification on the InSDN dataset.

In [28], the authors concentrated on designing robust DL models to counteract dynamically emerging threat vectors. To provide a comprehensive IDS, they constructed an approach to compare two models, including a hybrid CNN-LSTM architecture and a Transformer encoder architecture. In particular, they aimed at the controller for SDN, which is a critical component. For the training and evaluation of the models that they developed, they used the InSDN dataset. They employed accuracy, F1 score, recall, and precision for evaluation. The Transformer model using 48 features obtained an accuracy rate of 99.02%, while the CNN-LSTM model achieved an accuracy of 99.01%.

The research in [29] presented an enhanced framework (SDN-ML-IoT) that works as an intrusion detection and prevention system (IDPS) for more effective detection and real-time mitigation of DDoS attacks. To secure Internet of Things devices in the smart home from DDoS attacks, they employed SDN-ML-IoT, which applies ML in an SDN context. They used a one-versus-rest (OvR) technique with several ML algorithms: logistic regression (LR), random forest (RF), naive Bayes (NB), and k-nearest neighbors (kNN). They compared their study with other relevant research. The authors achieved the highest accuracy, 99.99%, with the RF algorithm, and according to their findings, the framework can mitigate DDoS attacks in 3 s or less.

Table 1.

Summary of related works.

| Research | Year | Methods | Dataset | Key Findings |
|---|---|---|---|---|
| [20] | 2023 | GWO and LightGBM | InSDN | An accuracy of 99.8%. |
| [21] | 2024 | DCGAN, CenterNet-based approach, and ResNet152V2 with Slime Mold Algorithm (SMA) | InSDN | An accuracy of 99.65%. |
| [22] | 2023 | RF | InSDN | An accuracy of 98.72%. |
| [23] | 2024 | GA, MI, bidirectional long short-term memory, CNN, and bi-gated recurrent units | InSDN, UNSW-NB15, and CICIoT 2023 | An accuracy of 98.66%. |
| [24] | 2024 | S-DATE and D-SEM based on PSO | InSDN, IoTID-20, and UNSW-NB15 | An accuracy of 98.9%. |
| [25] | 2024 | MDDCC with wavelet decomposition and convolutional neural networks | InSDN | An accuracy of 99.24%. |
| [26] | 2025 | Federated learning approach (FedLAD) | InSDN, CICDDoS2019, and CICDoS2017 | An accuracy of 98.07% in FedLAD binary classification. |
| [27] | 2025 | DLTHF, long short-term memory contractive sparse AutoEncoder, and MhSaBiGRNN | InSDN | An accuracy of 99.97% with binary classification and 99.88% with multi-class classification. |
| [28] | 2024 | CNN-LSTM and Transformer encoder | InSDN | An accuracy of 99.02% with the Transformer and 99.01% with the CNN-LSTM. |
| [29] | 2024 | OvR technique with LR, RF, NB, and kNN | InSDN | The highest accuracy, 99.99%, was achieved with RF. |

Most of the reviewed studies had to deal with data imbalance, which results in biased models that have difficulty identifying minority attack types. Inconsistent feature selection frequently leads to higher computing costs or suboptimal model performance. Most researchers have clearly used the InSDN dataset as the benchmark dataset for the SDN environment, but relying on a single SDN dataset raises concerns about how well these systems generalize to different or novel attacks.

3. SDN Architecture

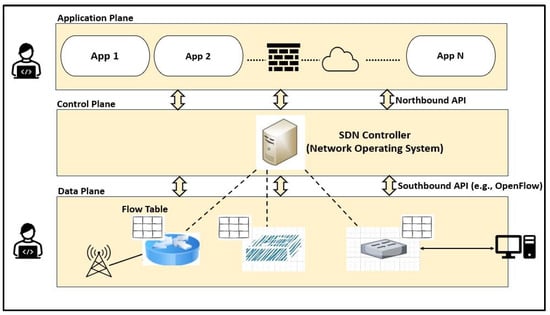

The general architecture of SDN comprises the data (or infrastructure), control, and application layers. These layers communicate through northbound and southbound application programming interfaces (APIs); in other words, these interfaces are used to exchange information between the layers. Nevertheless, these APIs remain closely tied to the forwarding components of the underlying physical or virtual infrastructure, such as switches, filters, and routers. In SDN, the control functionality is separated from the forwarding elements: the data layer comprises the data forwarding elements, whereas the control layer comprises controllers that act as a network operating system. Put simply, SDN is a revolutionary network management design that separates the control plane from the data forwarding plane in order to centralize management. To manage computer network architecture centrally and dynamically, SDN integrates numerous network tools, which empowers network managers to respond rapidly to the needs of the business [30,31,32,33].

In the data layer, SDN switches and routers are responsible for transporting packets between locations, while the control layer decides how they must travel across the network. Briefly, the primary advantage of SDN is the decoupling of the control layer from the data layer, which allows data forwarding devices to merely implement the forwarding function [34].

To clarify, SDN is a novel technology used for managing networks, offering a diverse set of applications that aims to extract as much logic from the hardware as possible. The fundamental objective is to be able to reduce the dependency on hardware and consolidate all of the network’s “intelligence” into a single controller as opposed to distributing it across all routers and/or switches. This separation of the control plane and data forwarding plane is intended to improve efficiency by lowering process durations in intermediary nodes through the use of multiple flow protocols, most commonly OpenFlow. For instance, packet forwarding in network devices is made easier by detaching those two layers. SDNs are designed to improve software applications and lessen reliance on hardware, which increases network intelligence [35].

As above, the general architecture of the SDN, as shown in Figure 1, is composed of three conceptual planes or layers [31,36,37,38,39]:

Data Plane: Also known as the forwarding plane or infrastructure layer. This layer contains physical and virtual networking switches, routers, and networking equipment that oversee forwarding data and are in charge of data routing and statistics storage. An OpenFlow switch has programmable tables that outline a course of action for each packet connected to a certain flow. The control plane can dynamically configure a flow table. In an SDN network, a switch typically searches its flow table whenever a new packet arrives to ascertain the output port. A new packet can be discarded, flooded across all end nodes, forwarded to a selected end node, or transmitted to the network controller when it enters a switch. The switches participating in this connection maintain statistical data for each flow that the control plane can access.

Control Plane: This layer is made up of at least one SDN controller. The major responsibility of this plane is to preserve a logically consolidated view that enables applications to make inferences about the behavior and characteristics of the network. The control plane supplies an application programming interface (API) to build applications and monitor the state of the network using the open interface with the devices. This layer can handle the data gathered by switches at the data plane to coordinate traffic behavior, such as flow statistics. Moreover, this plane can give tools for fault detection, make choices regarding current traffic allocations, and enforce QoS regulations since it has a global network perspective. Typically, the control plane is conceptually centralized but physically spread among controller devices.

Application Plane: In this top layer, all the features and services are defined. The application plane hosts and executes the applications that operate across the network infrastructure and are not part of the control plane. These programs often involve some level of human interaction when making changes to network components, such as the network’s routing behavior. Examples of network applications on this layer include network visualization, network provisioning, and path reservation. SDN applications take advantage of the separation of application functionality from the network equipment, together with the logically centralized nature of network control, to express their intended goals and policies directly and centrally, without being bound by the specifics of how the underlying infrastructure is implemented and how its state is distributed.
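
The data-plane behavior described above (look up the flow table, then forward, flood, drop, or hand the packet to the controller) can be sketched as a toy model. This is not the OpenFlow protocol or any controller API: `FlowEntry`, `ToySwitch`, the field names, and the IP addresses are hypothetical, and real switches also use priorities, timeouts, and wildcard matching.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlowEntry:
    match: dict                      # header fields that must all match
    action: str                      # "forward", "flood", or "drop"
    out_port: Optional[int] = None   # only meaningful for "forward"
    packet_count: int = 0            # per-flow statistic the controller can read


class ToySwitch:
    def __init__(self):
        self.flow_table = []          # would be populated by the control plane
        self.sent_to_controller = []  # packets that missed every entry

    def handle_packet(self, packet):
        """Return (action, out_port) for a packet given as a dict of header fields."""
        for entry in self.flow_table:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                entry.packet_count += 1
                return entry.action, entry.out_port
        # Table miss: the packet is handed to the controller for a decision.
        self.sent_to_controller.append(packet)
        return "to_controller", None


switch = ToySwitch()
switch.flow_table.append(FlowEntry({"dst_ip": "10.0.0.2"}, "forward", out_port=3))
switch.flow_table.append(FlowEntry({"src_ip": "10.0.0.66"}, "drop"))

print(switch.handle_packet({"src_ip": "10.0.0.1", "dst_ip": "10.0.0.2"}))   # ('forward', 3)
print(switch.handle_packet({"src_ip": "10.0.0.66", "dst_ip": "10.0.0.9"}))  # ('drop', None)
print(switch.handle_packet({"src_ip": "10.0.0.5", "dst_ip": "10.0.0.7"}))   # ('to_controller', None)
```

The `packet_count` field stands in for the per-flow statistics that the control plane can read. Counters of this kind are typically the raw material for flow-level features in SDN intrusion datasets.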

Every one of the three layers interacts and depends on the others. The main benefit of the SDN design is that it gives users a comprehensive perspective of all the network applications available, thereby turning the network into a “smart” system. In addition, since SDN is a virtual network overlay, there is no requirement that these components be situated in the same physical location [13].

The southbound interface oversees forwarding processes, event alerts, statistical reporting, and network capacity advertising. In essence, it gives a controller the ability to specify how the network’s hardware should behave. The northbound interface offers abstractions that keep applications independent of the programming language and the controller platform. Stated differently, high-level abstract programming languages are critical to simplifying programming in SDN. Network engineers use language abstractions such as Frenetic [40], Pyretic [41], Procera [42], and NetCore [43] for application development so that they do not have to deal with the intricacies of southbound APIs. For instance, REST northbound APIs are supported by both the FloodLight and OpenDaylight SDN controllers. One commonality among the four abstract programming languages named above is their ability to work with the NOX/POX controllers to translate a few high-level rules into complex OpenFlow instructions [44].

The desire for a new network architectural design arose because existing fixed network infrastructures, with their dependence on established protocols, could no longer manage the dramatically rising volume of traffic and its service requirements without resorting to over-provisioning, which is both expensive and ineffective. Furthermore, network operators’ flexibility was severely constrained by their reliance on suppliers that built specialized hardware for different uses. As a result, the innovation potential was limited by both vendor dependency and long-established procedures. To solve this, the SDN paradigm in communication networks separates control (control plane) from forwarding (data plane). Using open protocols like OpenFlow, applications run on a logically centralized controller to manage dynamically how routers and switches act in the network [45].

Figure 1.

SDN architecture.

Fortunately, the latest solutions in this area do not need large changes to the forwarding switches and routers, making them appealing not just to the academic community but also to the networking industry. For example, OpenFlow-enabled devices can readily coexist with conventional Ethernet devices, allowing for gradual adoption (i.e., without disrupting existing networks) [46,47]. To improve security, OpenFlow version 1.5.1 suggested header encryption; however, the security settings are currently optional and are anticipated to be fixed in future protocol versions [48].

4. Methodology

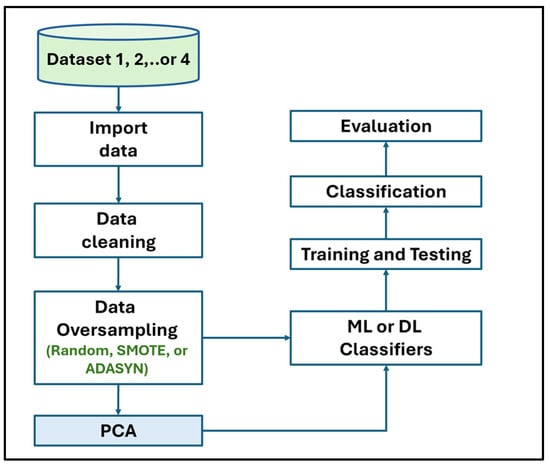

In this study, we conducted a series of experiments to compare the impact of three oversampling algorithms and PCA on several ML and DL models using four SDN datasets, as shown in Figure 2. To the best of our knowledge, most publicly available SDN datasets suffer from class imbalance. Therefore, three oversampling techniques, namely Random Oversampling, SMOTE, and ADASYN, were used to balance the classes of the SDN datasets. We also studied the effect of applying PCA to the data after oversampling. We trained several ML and DL classifiers, such as decision tree (DT), random forest (RF), XGBoost, Transformer, and deep neural network (DNN), and compared their classification performance under these techniques.

The workflow outlines a comparative analysis process for evaluating different datasets using ML and DL classifiers. All datasets were specific to the SDN environment. In the data import step, the selected datasets were loaded for processing. In the cleaning stage, preprocessing operations such as handling missing values and removing duplicate rows were carried out to guarantee data quality. We used the data.dropna() function provided by Python libraries to remove missing values, which are represented as NaN, from the datasets. This is useful for cleaning tasks such as handling incomplete datasets. Missing values can negatively affect ML or DL classifiers by reducing accuracy or introducing bias. In the context of SDNs, such values might occur because of data packet loss or incomplete logging, which makes effective preprocessing necessary. Datasets usually include noisy and unreliable data that might affect training and analysis. Furthermore, datasets used in attack detection exhibit high dimensionality, which complicates the extraction of information during training. Therefore, eliminating inconsistent data helps the classifiers generalize better.
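
The cleaning stage can be illustrated with a standard-library stand-in for the two operations named above. In a pandas workflow these would correspond to `data.dropna()` followed by `drop_duplicates()`; the helper name `drop_missing_and_duplicates`, the column names, and the sample rows below are assumptions made for illustration.

```python
import math


def drop_missing_and_duplicates(rows):
    """Remove rows containing None/NaN, then remove exact duplicate rows."""

    def is_missing(value):
        return value is None or (isinstance(value, float) and math.isnan(value))

    cleaned, seen = [], set()
    for row in rows:
        if any(is_missing(v) for v in row.values()):
            continue  # same effect as dropping a row that contains NaN
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # exact duplicate of an earlier row
        seen.add(key)
        cleaned.append(row)
    return cleaned


# Made-up flow records; the second has a missing value, the third is a duplicate.
rows = [
    {"pktperflow": 45.0, "protocol": "UDP", "label": 0},
    {"pktperflow": float("nan"), "protocol": "TCP", "label": 1},
    {"pktperflow": 45.0, "protocol": "UDP", "label": 0},
    {"pktperflow": 900.0, "protocol": "ICMP", "label": 1},
]
cleaned = drop_missing_and_duplicates(rows)
print(len(rows), "->", len(cleaned))
```

Dropping rows is the simplest policy and matches the description in the text. It is worth checking how many rows per class are lost, because removing incomplete records can itself shift the class balance.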

Next, the data oversampling (or data balancing) stage addresses the class imbalance issue. Applying PCA is then an optional step to reduce the dimensionality of the dataset; it retains the components that capture most of the variance in the data while discarding redundant information. For modeling, the dataset was split into two sets, training (80%) and testing (20%), to train the ML and DL classifiers and evaluate their performance. Each trained model then predicted the class labels for the testing data. In the classification and evaluation phases, the model’s accuracy, precision, recall, and F1-score were computed to ensure a thorough assessment of its ability to classify data samples. Together, these procedures support the development of robust models adapted to the unique challenges of SDN environments.
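
The balancing and splitting steps can be sketched with the standard library alone. The code follows the order given in the text (balance first, then split 80/20) and leaves out PCA and the classifiers. The function names, the seed, and the toy data are assumptions; the text does not say which library calls were used, although imbalanced-learn's `RandomOverSampler` and scikit-learn's `train_test_split` are common choices for these steps.

```python
import random
from collections import Counter


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen rows of smaller classes until all classes match the largest."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_bal, y_bal = list(X), list(y)
    for label, count in counts.items():
        pool = [x for x, lab in zip(X, y) if lab == label]
        for _ in range(target - count):
            X_bal.append(rng.choice(pool))
            y_bal.append(label)
    return X_bal, y_bal


def train_test_split_80_20(X, y, seed=0):
    """Shuffle the rows and keep 80% for training and 20% for testing."""
    rng = random.Random(seed)
    indices = list(range(len(X)))
    rng.shuffle(indices)
    cut = int(0.8 * len(indices))
    train, test = indices[:cut], indices[cut:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test])


# Toy data: 8 rows of class 0 and 2 rows of class 1, one feature each.
X = [[float(i)] for i in range(10)]
y = [0] * 8 + [1] * 2
X_bal, y_bal = random_oversample(X, y)
X_train, y_train, X_test, y_test = train_test_split_80_20(X_bal, y_bal)
print(Counter(y_bal), len(X_train), len(X_test))
```

With this ordering, copies of the same minority row can land in both the training and the testing set, which is worth keeping in mind when interpreting test scores obtained after oversampling.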

Figure 2.

Comparative analysis workflow.

A dataset is a structured collection of data that may be used for different purposes. It is the foundation for all the operations, strategies, and models that developers apply to analyze it [49]. Datasets typically consist of a number of data points organized into a single table with rows and columns: each row is one data point, while the columns give the characteristics (features) of the data. In an SDN dataset, the columns represent flow features, such as the source IP, whereas each row represents a unique data point or instance. Labeled datasets usually contain a column with labels that categorize the class of each row. They are commonly used in machine learning, specifically supervised learning, while unlabeled datasets are typically used with unsupervised learning. Developers use datasets to gather insights, make informed decisions, and train algorithms. A data imbalance problem arises when the number of training samples belonging to some classes is higher than that of other classes. In other words, class imbalance happens when there is a notable difference between two classes of the target variable, with one represented by many instances and the other by a few. Using an unbalanced dataset may result in classification or prediction bias [50,51].

Learning a classifier from an unbalanced dataset is a crucial and difficult problem in supervised learning, and class imbalance is a prevalent and persistent issue in classification and data mining. The term “class-imbalanced data” refers to data with an unequal distribution of classes, that is, data in which the number of samples in each class is not roughly equal. For instance, in binary classification, the data are said to be “imbalanced” if 80% of the samples belong to class A, whereas only 20% belong to class B [52]. By convention, the class with the most examples is known as the majority or negative class, while the class with the fewest examples is known as the minority or positive class [53].

4.1. Datasets Overview

SDN has specific characteristics, including its dynamic nature, centralized control, and the prevalence of certain attack types. Compared with IoT datasets, for example, SDN datasets focus on cyberattacks targeting centralized network behaviors, while IoT datasets emphasize cyberattacks aimed at diverse devices. Therefore, understanding these characteristics helps researchers design optimal solutions when developing IDSs for the SDN environment.

In this study, we used four publicly available datasets specific to the SDN network: the DDoS SDN dataset [54], SDN Intrusion Detection [55], LR-HR DDoS 2024 Dataset for SDN-Based Networks [56], and the InSDN dataset [57]. They are referred to as Datasets 1, 2, 3, and 4, respectively, as shown in Table 2.

Table 2.

Datasets used in this study.

4.1.1. Dataset 1

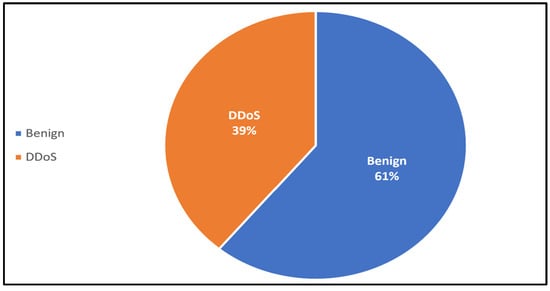

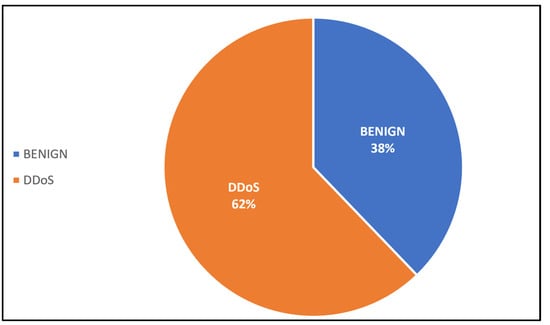

The first dataset includes benign network traffic and DDoS attack traffic, allowing for a straightforward comparison between normal and malicious activities. This binary classification dataset contains three categorical and twenty numeric features (including the Label). Some of these features are packetins, pktperflow, byteperflow, Protocol, port_no, and tot_kbps. The minority class represents the anomalous (DDoS) traffic, and its proportion is higher than in typical anomaly datasets, which often have much smaller anomaly proportions. Therefore, this distribution can help models learn both classes more effectively than more extreme imbalances would. Table 3 and Figure 3 illustrate the distribution of these data instances.

Table 3.

Distribution of classes in Dataset 1.

| Category | Rows | Class Distribution (%) |
|---|---|---|
| Benign | 63,335 | 61% |
| DDoS | 40,504 | 39% |

Figure 3.

Proportion of classes in Dataset 1.

4.1.2. Dataset 2

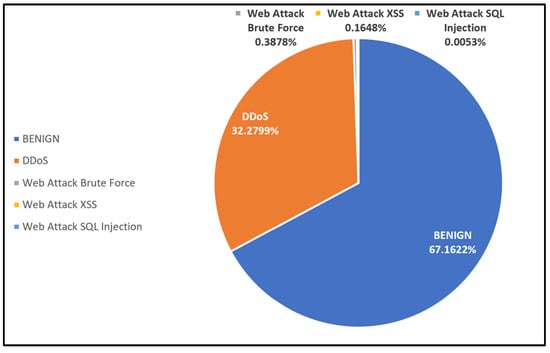

The second dataset includes five categories: benign network traffic, DDoS attack, Web Attack Brute Force, Web Attack Cross-Site Scripting (XSS), and Web Attack Structured Query Language (SQL) Injection. It contains 79 features and 1,048,083 instances after cleaning the data. This multiclass dataset is heavily imbalanced, with the benign class comprising the majority and SQL Injection being extremely rare. Among the attack classes, DDoS attacks dominate predictions due to their higher frequency compared to Web attacks. Table 4 lists the distribution of these data instances, and Figure 4 shows the proportions of the dataset’s classes. In addition, Destination Port, Flow Duration, Total Fwd Packets, Total Backward Packets, Total length of Fwd Packets, and Total length of Bwd Packets are examples of features within this dataset.

Table 4.

Distribution of classes in Dataset 2.

Figure 4.

Proportions of classes in Dataset 2.

4.1.3. Dataset 3

The third dataset is extensive, including both low-rate and high-rate DDoS attacks in SDN environments. This dataset represents real-world settings, containing 22 features that were extracted from controller and network traffic, and contains 113,407 instances after cleaning the data. Some of the features of this dataset are flow_duration, protocol, srcport, dstport, byte_count, Tot Bwd Pkts, and TotLen Fwd Pkts. Table 5 and Figure 5 present the distribution of these data instances.

Table 5.

Distribution of classes in Dataset 3.

| Category | Rows | Class Distribution (%) |
|---|---|---|
| Benign | 42,899 | 38% |
| DDoS | 70,508 | 62% |

Figure 5.

Proportions of classes in Dataset 3.

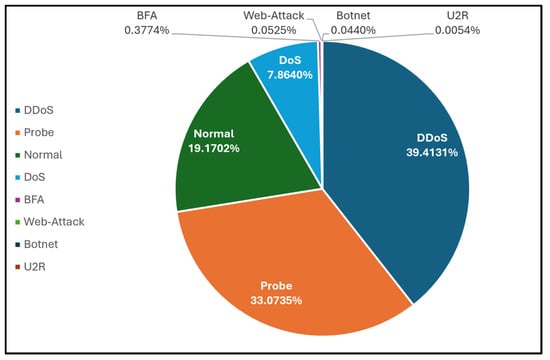

4.1.4. Dataset 4

The fourth dataset contains seven categories of attacks. Furthermore, the regular traffic in the produced data includes many common application services, such as SSL, DNS, FTP, Email, HTTPS, HTTP, and SSH. This dataset was created using four virtual machines (VMs). The total number of dataset instances is 343,939, which is reduced to 186,560 instances after cleaning the data. This dataset has more than 80 features, such as Flow-id, Protocol, Duration, Src-IP, Dst-IP, TotLen-Fwd-Pkts, TotLen-Bwd-Pkts, SYN-Flg-Cnt, Flow-Byts/s, and Number of packets. Table 6 and Figure 6 show the distribution of these data instances.

Table 6.

Distribution of classes in Dataset 4.

| Category | Rows | Class Distribution (%) |
|---|---|---|
| DDoS | 73,529 | 39.4131% |
| Probe | 61,702 | 33.0735% |
| Benign | 35,764 | 19.1702% |
| DoS | 14,671 | 7.8640% |
| Brute Force Attacks | 704 | 0.3774% |
| Web-Attack | 98 | 0.0525% |
| BOTNET | 82 | 0.0440% |
| U2R | 10 | 0.0054% |

Figure 6.

Proportions of classes in Dataset 4.
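As a worked check of the distribution above, the following sketch recomputes the class shares of Dataset 4 from the row counts in Table 6 and derives the ratio between the largest and smallest classes. The variable names and the imbalance ratio itself are illustrative additions, not quantities reported in the study.

```python
# Row counts per class, copied from Table 6 (Dataset 4 after cleaning).
counts = {
    "DDoS": 73529,
    "Probe": 61702,
    "Benign": 35764,
    "DoS": 14671,
    "Brute Force Attacks": 704,
    "Web-Attack": 98,
    "BOTNET": 82,
    "U2R": 10,
}

total = sum(counts.values())

# Share of each class as a percentage of all instances.
shares = {name: 100 * n / total for name, n in counts.items()}

# Ratio between the largest and the smallest class.
imbalance_ratio = max(counts.values()) / min(counts.values())

for name, share in shares.items():
    print(f"{name:20s} {share:8.4f}%")
print("total instances:", total)
print(f"largest/smallest class ratio: {imbalance_ratio:.1f}")
```

The counts add up to the 186,560 cleaned instances stated for Dataset 4, and the largest class is several thousand times larger than the smallest one.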

Class imbalance can negatively impact classifier performance by biasing the learning process toward the majority class. In highly imbalanced datasets, such as Dataset 2, the benign class constitutes around 67.16% of the data, while some classes, such as Web Attack SQL Injection, represent only 0.0053% of the dataset. This significant disparity in class distribution means that the classifier favors the dominant class (benign) while neglecting the rare classes. As a result, the classifier may struggle to detect less frequent attacks, such as Web Attack XSS and Web Attack SQL Injection. To address this issue, oversampling techniques for minority classes can be applied to help the classifier focus more on underrepresented categories.

Principal component analysis (PCA) is a standard approach that re-expresses a given data collection in a more relevant basis while reducing its dimensionality. When evaluating high-dimensional data, lowering the number of features while maintaining the distinctiveness of each class is essential for analysis [58,59]. Figure 7 illustrates the PCA process that was used to reduce the dimensionality of the datasets. As discussed earlier, each dataset has n features. After applying PCA, the resulting dataset has a reduced number of features, called PCA components, denoted by z. In our case, the number of PCA components was 10.

Figure 7.

PCA overview.
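The reduction from n features to z = 10 components can be sketched with NumPy alone. This is a minimal illustration and not the study's code: the experiments used the Scikit-Learn implementation, while the function name `pca_reduce`, the random toy data, and the decision to center (but not standardize) the features are assumptions made here.

```python
import numpy as np


def pca_reduce(X, n_components=10):
    """Project X (samples x n features) onto its first n_components principal axes."""
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)  # PCA operates on mean-centered data

    # Rows of Vt are the principal axes, ordered by decreasing singular value.
    _, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]

    Z = X_centered @ components.T  # samples x z
    explained_ratio = singular_values**2 / np.sum(singular_values**2)
    return Z, explained_ratio[:n_components]


# Toy data (made up): 200 samples with n = 22 features.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 22))
Z, explained_ratio = pca_reduce(X, n_components=10)
print(Z.shape)
print(explained_ratio.round(3))
```

The second return value gives the fraction of variance captured by each retained component, which is one way to judge how much information a fixed choice such as z = 10 keeps.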

4.2. Oversampling Algorithms

In data analysis, there are two primary methods for randomly resampling an unbalanced dataset: undersampling, which removes instances from the majority class, and oversampling, which adds instances of the minority class to the training dataset by copying them with replacement. Undersampling strategies may drop data that are essential for classifier training, whereas oversampling algorithms do not remove samples from the majority class and thus preserve the original data from loss of information [60]. The main reason for adopting oversampling techniques here is the limited number of dataset instances. When the dataset contains a limited number of samples, applying undersampling techniques significantly reduces the dataset size, leading to the loss of necessary information from the majority class and to lower accuracy because the classifier may not learn sufficient patterns [52].

In this research, three oversampling strategies were used: Random Oversampling, SMOTE, and ADASYN.

4.2.1. Random Oversampling

Random Oversampling is a useful technique for dealing with class imbalance and enhancing performance. Using this technique, original samples from the minority class are duplicated until the distribution of classes is balanced. It is easy to use because it randomly duplicates existing samples without adding any new data, while the other two strategies produce new synthetic data to supplement genuine instances. In other words, Random Oversampling augments the training data with multiple copies of selected minority class samples. Oversampling can be performed repeatedly.

We consider the following:

- Nmaj as the number of majority class samples.

- Nmin as the number of minority class samples.

To balance the classes, we need to increase the number of minority class samples to match that of the majority class, as shown in Equation (1):

Number of duplicated samples needed = Nmaj − Nmin (1)

4.2.2. SMOTE

Instead of oversampling with replacement, Chawla and colleagues suggested an oversampling strategy that balances the dataset by producing synthetic samples for the minority class [15]. SMOTE has since undergone several improvements and is a widely recognized approach for mitigating the effects of class imbalance in classifier construction [61]. This method synthesizes data using feature space similarity between minority samples [52]. For each minority instance, the k-nearest neighbors (kNN) of the same class are computed, and a subset of these neighbors is selected at random according to the oversampling rate. New synthetic instances are generated between the minority instance and its selected neighbors. Consequently, the minority class’s decision region extends further into the majority class area, which helps avoid the overfitting issue [62].

The kNN algorithm is one of the most widely used anomaly detection techniques since it not only has a strong theoretical foundation but also provides high accuracy, low retraining costs, and realism and supports incremental learning [63]. kNN is a supervised regression and classification technique that makes a local approximation of the target function and postpones all computation until a prediction is requested. An unlabeled item is classified by computing its distance from the labeled objects in the training set, identifying its k-nearest neighbors, and assigning the class label that predominates among these neighbors. The fundamental drawback of nearest neighbor-based solutions is that kNN cannot accurately label the traffic if the regular traffic data or the attack traffic does not have close enough neighbors. Complex calculations may also be needed to determine the distance between each pair of items [64,65]. The formula for creating a new synthetic sample in SMOTE is shown in Equation (2):

New Sample = Original Sample + λ × (Nearest Neighbor − Original Sample) (2)

where λ is a random number between 0 and 1.
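The SMOTE interpolation step, in which a synthetic sample is placed at a random position between a minority sample and one of its k nearest minority neighbors, can be sketched as follows. This is a simplified NumPy illustration rather than the imblearn implementation used in the experiments; the function name `smote_sample`, the value k = 5, and the toy data are assumptions.

```python
import numpy as np


def smote_sample(X_min, k=5, n_new=10, seed=42):
    """Create n_new synthetic minority samples by interpolating toward neighbors."""
    X_min = np.asarray(X_min, dtype=float)
    rng = np.random.default_rng(seed)

    # Pairwise Euclidean distances between minority samples.
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]  # k nearest per sample

    # Pick a base sample and one of its k neighbors for every new point.
    base = rng.integers(0, len(X_min), size=n_new)
    chosen = neighbors[base, rng.integers(0, k, size=n_new)]

    lam = rng.random((n_new, 1))  # lambda drawn uniformly from [0, 1)
    return X_min[base] + lam * (X_min[chosen] - X_min[base])


# Toy minority class (made up): 20 samples with 3 features.
rng = np.random.default_rng(0)
X_min = rng.normal(size=(20, 3))
synthetic = smote_sample(X_min, k=5, n_new=10)
print(synthetic.shape)
```

Because each synthetic point lies on the segment between two existing minority samples, it never falls outside the range of values already observed for the minority class.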

4.2.3. ADASYN

The fundamental concept behind ADASYN is to use a weighted distribution for different minority class instances according to their learning difficulty. This means that minority class instances that are more challenging to learn generate more synthetic data than easier instances. Consequently, the ADASYN method enhances learning in two ways: (1) by mitigating the bias caused by the class imbalance and (2) by adaptively moving the classification decision boundary toward the challenging cases [10].

In fact, this algorithm builds on the methodology of SMOTE by giving the challenging minority class instances near the decision boundary more weight to address the problem of overlapping between classes.

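The formula is presented in Equation (3):

New Sample = Original Sample + λ × (Nearest Neighbor − Original Sample) (3)

where λ is a random number between 0 and 1, and the number of synthetic samples generated for each minority sample is adjusted based on the density of majority samples around it.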
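The adaptive part of ADASYN, deciding how many synthetic samples each minority instance should receive, can be sketched as below. The sketch follows the usual description of the algorithm (the share of majority-class points among the k nearest neighbors of each minority point, normalized across the minority class) for a two-class case; the function name `adasyn_allocation`, the parameter `beta`, and the toy points are assumptions and not taken from the study.

```python
import numpy as np


def adasyn_allocation(X, y, minority_label, k=5, beta=1.0):
    """Return how many synthetic samples each minority instance should generate."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    is_min = y == minority_label
    n_min, n_maj = int(is_min.sum()), int((~is_min).sum())
    n_total_new = (n_maj - n_min) * beta  # samples needed to balance

    # Distances from every minority point to every point in the dataset.
    dists = np.linalg.norm(X[is_min][:, None, :] - X[None, :, :], axis=2)
    dists[np.arange(n_min), np.flatnonzero(is_min)] = np.inf  # skip self
    neighbors = np.argsort(dists, axis=1)[:, :k]

    # Difficulty: fraction of the k neighbors that belong to the majority class.
    difficulty = (~is_min)[neighbors].mean(axis=1)
    if difficulty.sum() == 0:
        return np.zeros(n_min, dtype=int)
    weights = difficulty / difficulty.sum()
    return np.rint(weights * n_total_new).astype(int)


# Toy data (made up): ten majority points on a line, one minority point
# sitting among them, and four minority points in a distant cluster.
majority = [(0.1 * i, 0.0) for i in range(10)]
minority = [(0.45, 0.05), (10.0, 10.0), (10.1, 10.0), (10.0, 10.1), (10.1, 10.1)]
X = np.array(majority + minority)
y = np.array([0] * 10 + [1] * 5)
allocation = adasyn_allocation(X, y, minority_label=1, k=3)
print(allocation)
```

In this toy case, only the minority point surrounded by majority points has majority neighbors, so the whole budget of new samples is assigned to it, while the well-separated cluster receives none.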

Algorithm 1 presents the procedure for applying an oversampling technique. The input file, ImbalancedDataset, is the CSV file that contains the class-imbalanced dataset. In our case, this can be Dataset 1, 2, 3, or 4. The output of the algorithm is the BalancedDataset, which contains a dataset with balanced classes. Features and labels are first separated so that the oversampling technique generates samples based solely on the feature values. This step prevents errors, as oversampling techniques generally do not operate on labels. Then, an oversampling technique (SMOTE, ADASYN, or Random Oversampling) is applied to balance the dataset by creating new samples for the minority class. The parameter random_state is the seed of the random number generator used by the oversampling technique and is set to 42, as is a common practice in research [66]. Fixing this value ensures that the same data points are generated each time the code runs.

After generating new samples, they are recombined with their corresponding labels to create a complete dataset. This phase guarantees that the dataset is in the required format for ML/DL classifiers, which need both features and labels for training and evaluation. The output is a balanced dataset with evenly distributed class labels, improving classifier performance and fairness in training.

| Algorithm 1: Applying an Oversampling Technique | |
|---|---|
| Input: | |
| 1: | ImbalancedDataset |
| Output: | |
| 2: | BalancedDataset |
| Procedure: | |
| 3: | Separate features and label from ImbalancedDataset |
| 4: | Apply the Oversampling technique (random_state = 42) |
| 5: | Combine Oversampled data with label |
| 6: | Save the balanced dataset to BalancedDataset |
| End Algorithm | |
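Steps 3 to 6 of Algorithm 1 can be sketched end to end using only the Python standard library, with Random Oversampling as the balancing step. This is an illustration under stated assumptions, not the study's code: the experiments relied on the imblearn samplers, whereas the function name `oversample_csv`, the label column name, and the in-memory toy CSV are introduced here for demonstration.

```python
import csv
import io
import random
from collections import Counter


def oversample_csv(src, dst, label_column="Label", random_state=42):
    """Follow steps 3 to 6 of Algorithm 1 using Random Oversampling."""
    reader = csv.DictReader(src)
    rows = list(reader)

    # Step 3: separate features and label.
    features = [{k: v for k, v in row.items() if k != label_column} for row in rows]
    labels = [row[label_column] for row in rows]

    # Step 4: duplicate randomly chosen rows of every smaller class until it
    # matches the largest class; the seed makes the result repeatable.
    rng = random.Random(random_state)
    counts = Counter(labels)
    target = max(counts.values())
    for cls, count in counts.items():
        candidates = [i for i, label in enumerate(labels) if label == cls]
        for i in rng.choices(candidates, k=target - count):
            features.append(features[i])
            labels.append(cls)

    # Steps 5 and 6: recombine features with labels and save the result.
    writer = csv.DictWriter(dst, fieldnames=reader.fieldnames)
    writer.writeheader()
    for feature_row, label in zip(features, labels):
        writer.writerow({**feature_row, label_column: label})
    return Counter(labels)


# Tiny in-memory stand-in for ImbalancedDataset (values are made up).
src = io.StringIO(
    "pktperflow,byteperflow,Label\n"
    + "10,500,Benign\n" * 6
    + "900,40000,DDoS\n" * 2
)
dst = io.StringIO()
balanced_counts = oversample_csv(src, dst)
print(balanced_counts)
```

With real files, `src` and `dst` would be opened file handles. Swapping the duplication loop for SMOTE or ADASYN changes only step 4, while the surrounding steps stay the same.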

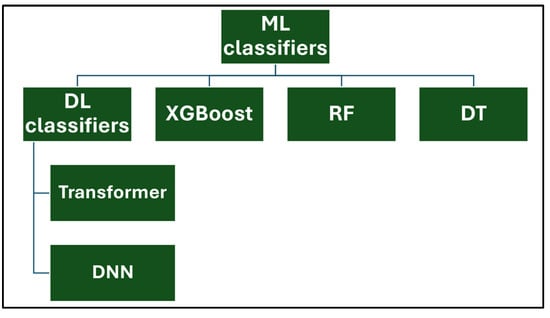

4.3. ML and DL Classifiers

ML and its subset, DL classifiers, are critical tools for data analysis, pattern detection, and prediction. These models are meant to uncover patterns in datasets, making them useful for applications such as anomaly detection. Their capacity to learn and adapt from data improves predictive accuracy, driving innovation across sectors. In this paper, we trained five well-known classifiers, including decision tree (DT), random forest (RF), XGBoost, Transformer, and deep neural network (DNN), as shown in Figure 8. The objective is to compare the impact of the three oversampling techniques, with and without PCA, on the aforementioned classifiers.

Figure 8.

Used ML/DL classifiers.

The selection of these classifiers was based on their diverse attributes and suitability for the functionalities of this field. DT and RF are interpretable classifiers that efficiently handle structured data and facilitate identifying critical decision patterns. XGBoost is a robust boosting algorithm known for its superior handling of imbalanced datasets, which is vital in our experiments. Transformer classifiers capture sequential dependencies in network traffic, rendering them suitable for detecting sophisticated attacks. DNNs leverage hierarchical feature extraction to facilitate deep pattern recognition in complex datasets. This selection ensures a balance between traditional tree-based classifiers and advanced DL approaches, optimizing both interpretability and prediction efficacy. Furthermore, traditional ML classifiers (e.g., DT, RF) are computationally less expensive but may not generalize well to complex attack patterns. In contrast, DL classifiers (e.g., Transformers, DNNs) tend to achieve higher accuracy but require more resources, computation, and training time. High-complexity models enhance detection but demand more memory, limiting real-time applicability.

4.3.1. Decision Tree

Decision tree (DT) is extensively utilized in classification problems and facilitates decision-making using a tree-like model composed of decisions and their consequences. DT is a common supervised learning classification approach that works with categorical or numerical data in IDS. A trained DT evaluates several packet features to determine the class of a sample. An optimum DT stores the most data with the fewest number of levels. This approach offers a clear classification method, is interpretable and simple to apply, and frequently allows for improved generalization by post-construction pruning, making it a popular model in intrusion detection. The classification procedure simply involves going from the tree’s root to the leaves with a new sample and selecting the path at each intermediate node based on the parameters determined during the training phase [59,67].

4.3.2. Random Forest

One of the popular and powerful ML classifiers is the random forest (RF) algorithm. This algorithm operates by generating a number of trees during the training phase to predict whether a sample is benign or anomalous. The final class prediction is produced according to a majority vote of each tree’s class predictions. Each tree is built by taking a random subset of the dataset and considering a random subset of features at each split. This randomness creates heterogeneity among individual trees. This technique improves prediction accuracy and helps to prevent the overfitting seen in individual decision trees. Moreover, it predicts error rates more precisely than decision trees [68].

4.3.3. XGBoost

XGBoost, or Extreme Gradient Boosting, is a systematic and advanced machine learning method known for its superior prediction accuracy. This algorithm, proposed by Chen and Guestrin, is based on ensemble techniques. It uses depth-first search to create trees and improve gradients using parallel processing. XGBoost improves accuracy by efficiently implementing the stochastic gradient boosting technique. It has a built-in procedure for determining feature relevance and selecting features based on numerous criteria. The system uses decision trees to predict outcomes. The regularization penalty term helps to prevent bias and overfitting during the training phase [67,69].

4.3.4. Transformer

A Transformer model is a fast DL model that handles input sequences in parallel, making it extremely efficient for training and inference. This model is a neural network based on the attention mechanism, which was primarily employed to enable translation models to evaluate the significance of individual words in the source language when predicting a single word in the target language, resulting in an integrated encoder-decoder model. This approach creates the encoder and decoder without RNNs by leveraging the self-attention method for the source and target language texts, respectively, in which distinct attention layers instruct the model to concentrate on useful words and ignore words that are not relevant in the texts. Nowadays, Transformer-based architectures are utilized for numerous applications, such as computer vision, time series predictions, and natural language processing (NLP) [70,71].

4.3.5. DNN

A deep neural network (DNN) is an advanced model of an artificial neural network (ANN) that has two or more hidden layers for data processing and analyzing between the input and output. DNNs are capable of simulating complex non-linear interactions, much like shallow artificial neural networks. The primary function of a neural network is to accept a collection of inputs, execute more sophisticated computations on them, and output results to solve real-world problems such as categorization. Every layer in DNN consists of one or more artificial nodes or neurons in such a way that these nodes are interconnected from layer to layer. This model has two advantages: its ability to learn features automatically and its scalability. Furthermore, the information is processed and propagated through DNN in a feed-forward fashion, that is, throughout the hidden layers from the input to the output layers [72,73].

Table 7 lists each classifier’s parameters along with their values and descriptions. These parameters were used in the experiments conducted in this study.

In XGBoost, the n_estimators parameter, which is the number of boosting iterations, is set to 50 to speed up training and lower memory usage. The maximum depth, max_depth, is kept at its default value of 6. The learning rate, learning_rate, is set to 0.1, as decreasing this number helps reduce overfitting and improves generalization [74]. The subsample and colsample_bytree parameters are set to the common value of 0.8 [75]. The random_state is set to 42 to ensure reproducibility. The eval_metric is set to mlogloss when using multiclass classification and logloss when using binary classification.

In RF, the n_estimators parameter is set to 100 [76], its default value, and max_depth is set to 10, which is common in practice. The minimum number of samples required to split an internal node in each decision tree, min_samples_split, is set to 10 to reduce overfitting and improve generalization. The minimum number of samples required to be in a leaf node, min_samples_leaf, is set to 5 to reduce variance and overfitting [77]. The max_features parameter, which sets the number of features that are randomly selected for each split in the decision trees, is kept at sqrt, its default value. The parameters shared by DT and RF have the same values.
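The RF and DT settings described above map directly onto Scikit-Learn constructor arguments, as the following sketch shows. The hyperparameter values come from Table 7, while the synthetic data from `make_classification`, the 60/40 class weights, and the seed used for the split are assumptions made only to keep the example runnable.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Hyperparameters as listed in Table 7.
rf = RandomForestClassifier(
    n_estimators=100,
    max_depth=10,
    min_samples_split=10,
    min_samples_leaf=5,
    max_features="sqrt",
    random_state=42,
)
dt = DecisionTreeClassifier(
    max_depth=10,
    min_samples_split=10,
    min_samples_leaf=5,
    random_state=42,
)

# Synthetic stand-in for an SDN dataset (not one of Datasets 1 to 4).
X, y = make_classification(
    n_samples=500, n_features=20, weights=[0.6, 0.4], random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Train each classifier and record its accuracy on the held-out 20%.
scores = {}
for name, model in (("RF", rf), ("DT", dt)):
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)
print(scores)
```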

For the Transformer model, the embedding layer is set to Linear (input_dim -> 128), as the dataset contains numerical features. The encoder layers parameter is set to 3 to speed up training. The hidden dimension is set to 128, the number of heads is set to 8, the feedforward dimension is set to 512, and the number of layers is set to 3, as these parameters are efficient choices for tabular datasets. The loss function, learning rate, and batch size are set to default values [78]. Adam optimizer is used. Epochs are set to 10 to speed training and avoid unnecessary training of the model.

In DNN, the dense parameter of the input layer is set to 128 neurons, and ReLU is used as an activation function. These values are common with tabular datasets. The dropout of layer 1 is set to 0.3, and the dropout of layer 2 is set to 0.2 to maintain the generality of the model and reduce overfitting. For the output layer, the activation function is softmax, as it is commonly used with multiclass classification. The number of Epochs is set to 20. The batch size is set to 32, as it is the default [79].

Table 7.

Classifiers parameters.

| Classifier | Parameter | Value | Description |
|---|---|---|---|
| XGBoost | n_estimators | 50 | The number of boosting rounds. Higher values can improve performance but increase computation time. |
| | max_depth | 6 | Maximum depth of each tree. Limits complexity to avoid overfitting. |
| | learning_rate | 0.1 | Step size shrinkage to prevent overfitting and improve generalization. |
| | subsample | 0.8 | Fraction of training samples used per boosting round to prevent overfitting. |
| | colsample_bytree | 0.8 | Fraction of features used per tree to enhance diversity. |
| | random_state | 42 | Ensures reproducibility by setting a seed for the random number generator. |
| | eval_metric | mlogloss | Evaluation metric used to measure model performance in multi-class classification. |
| RF | n_estimators | 100 | Number of trees in the forest. Higher values improve performance but increase computation time. |
| | max_depth | 10 | Maximum depth of each tree. Limits overfitting by restricting tree depth. |
| | min_samples_split | 10 | Minimum number of samples required to split an internal node. Higher values prevent unnecessary splits. |
| | min_samples_leaf | 5 | Minimum number of samples required in a leaf node. Prevents learning noise by ensuring meaningful leaf nodes. |
| | max_features | sqrt | Number of features considered at each split. 'sqrt' selects the square root of total features to reduce overfitting. |
| | random_state | 42 | Ensures reproducibility by setting a seed for the random number generator. |
| DT | max_depth | 10 | Maximum depth of each tree. Limits overfitting by restricting tree depth. |
| | min_samples_split | 10 | Minimum number of samples required to split an internal node. Higher values prevent unnecessary splits. |
| | min_samples_leaf | 5 | Minimum number of samples required in a leaf node. Prevents learning noise by ensuring meaningful leaf nodes. |
| | random_state | 42 | Ensures reproducibility by setting a seed for the random number generator. |
| Transformer | Embedding Layer | Linear (input_dim -> 128) | Embedding layer maps input features to a 128-dimensional space. |
| | Encoder Layers | 3 | Transformer encoder applies attention mechanisms across input features. |
| | Hidden Dimension (d_model) | 128 | Hidden dimension size used in Transformer encoder layers. |
| | Number of Heads (nhead) | 8 | Number of attention heads in the Transformer model. |
| | Feedforward Dimension | 512 | Dimension of the feedforward network within each Transformer encoder layer. |
| | Number of Layers | 3 | Number of Transformer encoder layers stacked sequentially. |
| | Optimizer | Adam | Adam optimizer is used for training the model. |
| | Loss Function | CrossEntropyLoss | Cross-entropy loss function is used for classification tasks. |
| | Batch Size | 32 | Number of samples per batch during training. |
| | Epochs | 10 | Number of complete passes through the dataset. |
| | Learning Rate | 0.001 | Step size for adjusting weights during training. |
| DNN | Input Layer | Dense(128, activation = 'relu') | Input layer with 128 neurons using ReLU activation function. |
| | Hidden Layer 1 | Dense(64, activation = 'relu') | First hidden layer has 64 neurons and ReLU activation. |
| | Dropout 1 | Dropout(0.3) | Dropout layer with a rate of 0.3 to prevent overfitting. |
| | Hidden Layer 2 | Dense(64, activation = 'relu') | Second hidden layer has 64 neurons and ReLU activation. |
| | Dropout 2 | Dropout(0.2) | Dropout layer with a rate of 0.2 to further reduce overfitting. |
| | Output Layer | Dense(y_train.shape[1], activation = 'softmax') | Output layer with softmax activation to classify multiple classes. |
| | Optimizer | Adam | Adam optimizer is used for efficient learning. |
| | Loss Function | categorical_crossentropy | Categorical cross-entropy loss function for multi-class classification. |
| | Epochs | 20 | Number of times the model will go through the training dataset. |
| | Batch Size | 32 | Number of samples per batch during training. |

5. Results and Discussion

This section presents and discusses the findings of this work. A comparison study was conducted to demonstrate the impact of using oversampling techniques: Random Oversampling, SMOTE, and ADASYN on the performance of DNN, Transformer, XGBoost, RF, and DT classifiers on four SDN datasets. The original dataset, which refers to a dataset without applying oversampling techniques, was also included in this study to compare it with the oversampling techniques. In addition, this study analyzes the performance of using PCA along with these oversampling techniques to evaluate its effectiveness on classification performance. Moreover, computational cost analysis using oversampling and PCA techniques was provided in this study.

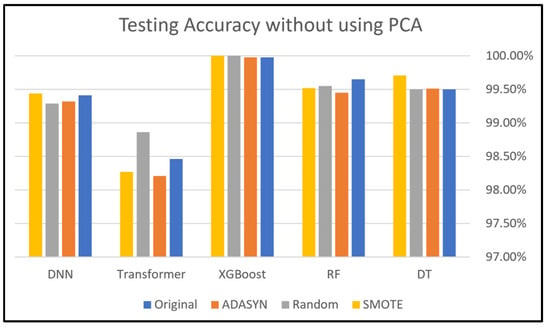

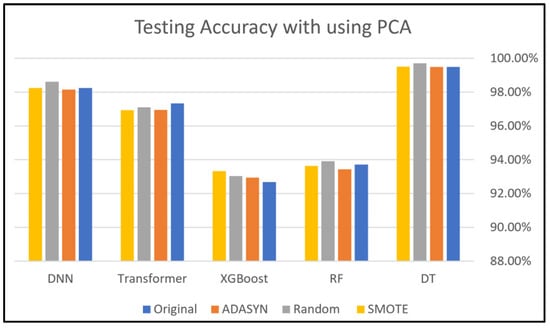

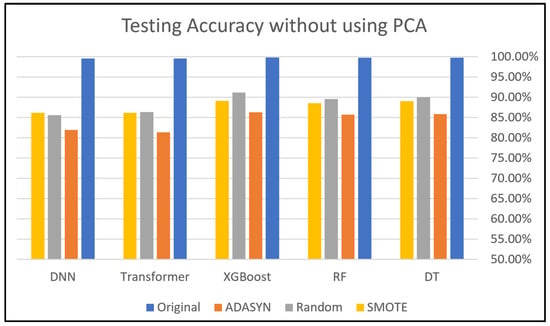

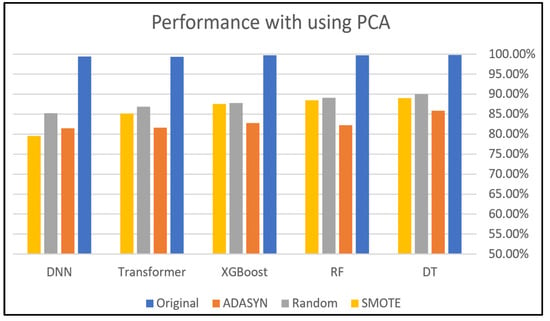

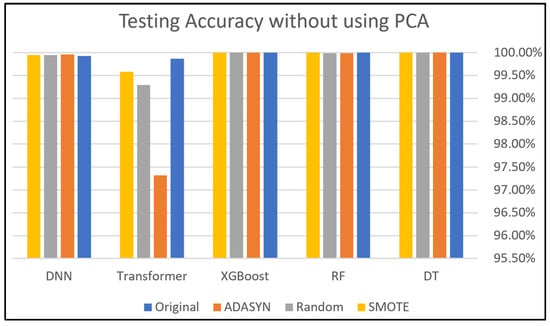

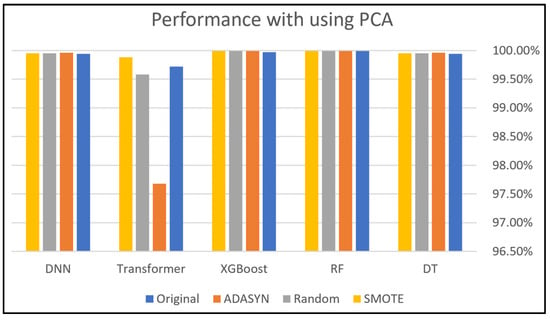

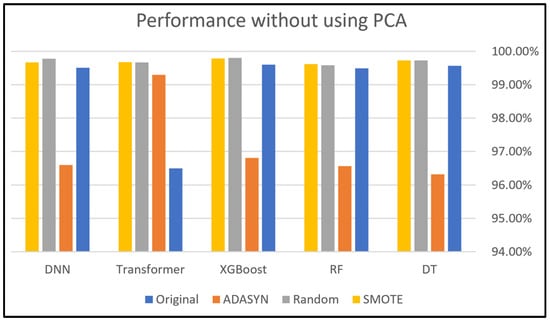

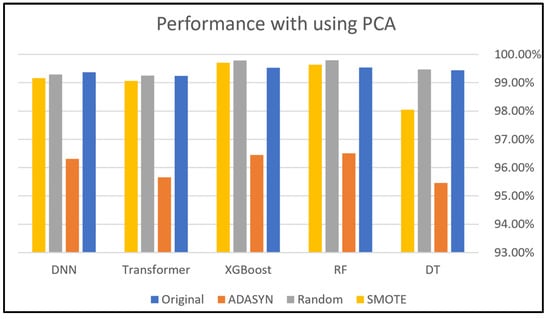

Sections 5.1 to 5.4 present the test accuracy results organized by dataset, from Dataset 1 to Dataset 4. The results, calculated using Equation (4) [80], are shown in Figures 9 to 16.

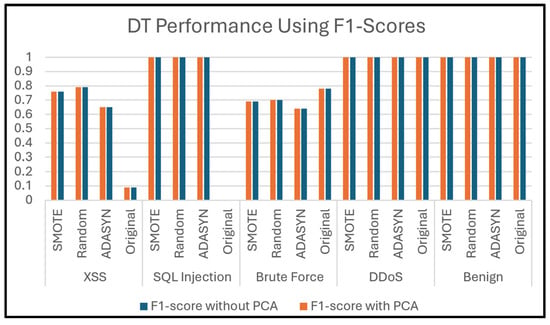

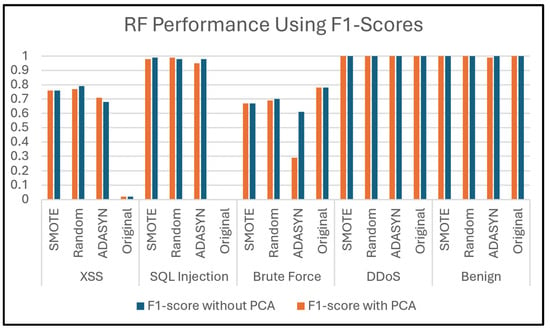

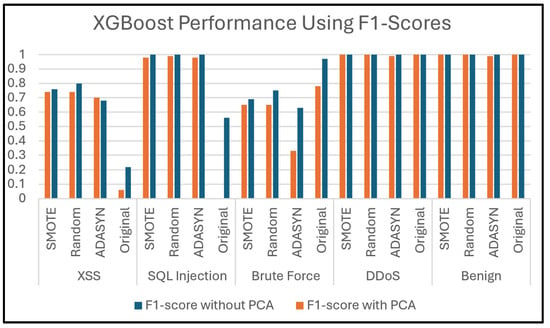

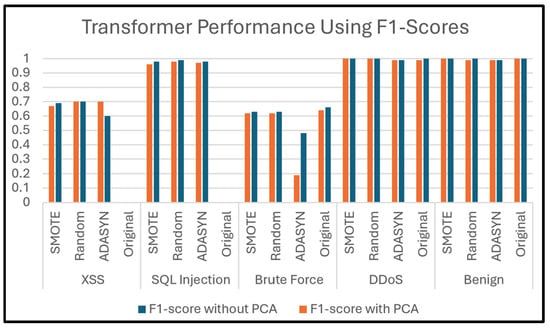

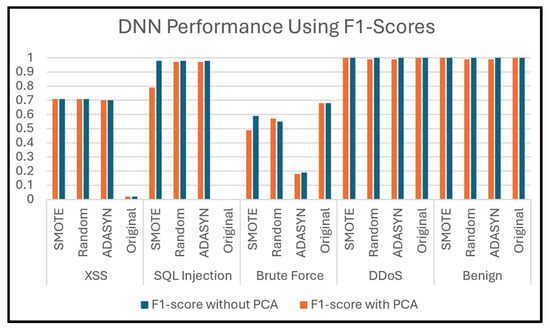

The evaluation metrics results are discussed in Sections 5.5 to 5.9, organized by classifier (DT, RF, XGBoost, Transformer, and DNN). Tables 8 to 12 list the calculated evaluation metrics: precision, recall, and F1-score, using Equations (5) to (7) [80].
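To make the difference between macro and weighted averages concrete, the following sketch computes accuracy and per-class precision, recall, and F1-score with their standard definitions, which are assumed here to correspond to Equations (4) to (7). The function names, the class names, and the toy predictions are illustrative and do not reproduce results from the study.

```python
from collections import Counter


def per_class_metrics(y_true, y_pred):
    """Return {class: (precision, recall, f1, support)} from two label lists."""
    support = Counter(y_true)
    metrics = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics[cls] = (precision, recall, f1, support[cls])
    return metrics


def averages(metrics):
    """Macro average treats classes equally; weighted average uses class support."""
    n_classes = len(metrics)
    total = sum(m[3] for m in metrics.values())
    macro = tuple(sum(m[i] for m in metrics.values()) / n_classes for i in range(3))
    weighted = tuple(sum(m[i] * m[3] for m in metrics.values()) / total for i in range(3))
    return macro, weighted


# Toy case: a classifier that labels every flow as benign.
y_true = ["Benign"] * 95 + ["SQL Injection"] * 5
y_pred = ["Benign"] * 100
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
macro, weighted = averages(per_class_metrics(y_true, y_pred))
print("accuracy:", accuracy)
print("macro (precision, recall, F1):", macro)
print("weighted (precision, recall, F1):", weighted)
```

In this toy case the accuracy and the weighted recall are both high because the majority class dominates them, while the macro recall drops to one half because the minority class is never detected. This is why reporting both averages helps reveal the effect of class imbalance.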

Section 5.10 evaluates the performance of each classifier when predicting attack types.

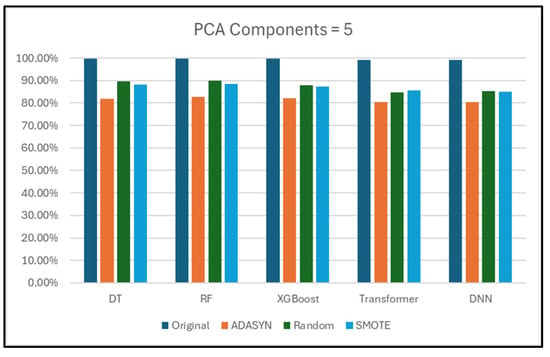

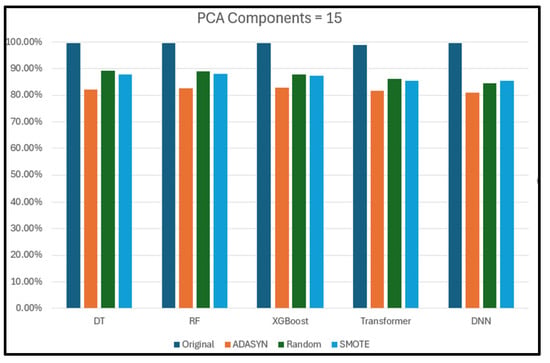

Section 5.11 compares the performance of each classifier when using different numbers of PCA components.

Section 5.13 delves into the limitations encountered in the research.

Lastly, Section 5.14 highlights the lessons learned and their implications.

The experiments in this research were conducted on a personal computer equipped with an NVIDIA® GeForce RTX™ 4090 GPU with 24 GB of memory. The GPU was designed by NVIDIA, a manufacturer located in Santa Clara, CA, USA.

The processor is an Intel Core i9 14900K running at 3.20 GHz. It was manufactured by Intel Corporation, also located in Santa Clara, CA, USA. The computer is equipped with 32 GB of RAM and 1 TB of Hard Disk storage [81].

Regarding software, the Python programming language (Version 3.11.9), which runs on the Jupyter platform, was used. The imblearn library [82] was used to provide oversampling techniques: Random Oversampling, SMOTE, and ADASYN. The library Scikit-Learn [83] was used to implement the PCA algorithm [81].

5.1. Findings of Dataset 1

This subsection presents the evaluation of applying five classifiers (DNN, Transformer, XGBoost, RF, and DT) to Dataset 1. Figure 9 and Figure 10 compare the accuracies of these classifiers with and without PCA, demonstrating the impact of different class-balancing techniques on each classifier’s performance. XGBoost shows the best performance with all oversampling techniques used in this study, which indicates its robust performance even without applying PCA. In addition, the DNN, RF, and DT classifiers show similar performance across all oversampling techniques, producing good results. However, the Transformer recorded the lowest performance compared to other classifiers with every oversampling technique.

Without PCA, the XGBoost classifier had the best overall performance, with accuracy close to 100%. The DT and DNN classifiers achieved their best performance when applying the SMOTE technique, while they performed slightly less effectively with the other two techniques. The accuracy of the Transformer classifier was good, and its best accuracy was achieved with the Random Oversampling technique. PCA sometimes improves performance, particularly in models like DT. This can be attributed to PCA reducing dimensionality and noise, which makes it easier for ML/DL models to learn significant patterns. The DNN and RF models show great stability and resilience; however, the XGBoost model was heavily impacted when using PCA, as shown in Figure 10, which is the opposite of the scenario in Figure 9. This sensitivity is reflected in its performance, which varies with data preprocessing and PCA. The variability in accuracy across oversampling techniques indicates that XGBoost is highly influenced by data distribution.

The primary strength of XGBoost lies in its capability to refine predictions from the errors of previous steps, incorporating both a convex loss function and a penalty term that addresses model complexity. The implementation of this dual approach serves to mitigate the risk of overfitting while concurrently maintaining the robustness of the model.

As a gradient-boosting algorithm, its iterative error optimization process amplifies the impact of even minor changes in the input data. This makes it highly responsive to synthetic instances provided by oversampling. XGBoost functions by incrementally incorporating new trees that forecast the residuals generated by the preceding trees. The iterative process enables the model to continuously refine its predictions. The algorithm employs a gradient descent method to minimize the loss function, which is essential for enhancing the accuracy of the predictions. Moreover, PCA may contribute to this sensitivity by removing features that XGBoost relies on for accurate residual predictions. This can lead to instability, especially when combined with oversampling techniques that provide synthetic data points.
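The residual-fitting loop described above can be sketched with plain gradient boosting on a single feature. This is a deliberately simplified illustration and not XGBoost itself: it uses decision stumps and a squared-error loss, and it omits the regularization term and second-order information that XGBoost adds. The function names and the toy data are assumptions, while the number of rounds and the learning rate mirror the values in Table 7.

```python
import numpy as np


def fit_stump(x, residual):
    """Find the single threshold split on x that best fits the residuals."""
    best = None
    for threshold in np.unique(x)[:-1]:  # keep both sides non-empty
        left = residual[x <= threshold]
        right = residual[x > threshold]
        error = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or error < best[0]:
            best = (error, threshold, left.mean(), right.mean())
    _, threshold, left_value, right_value = best
    return lambda q: np.where(q <= threshold, left_value, right_value)


def boost(x, y, n_rounds=50, learning_rate=0.1):
    """Add one stump per round, each trained on the current residuals."""
    prediction = np.full_like(y, y.mean(), dtype=float)
    losses = []
    for _ in range(n_rounds):
        residual = y - prediction  # negative gradient of the squared error
        stump = fit_stump(x, residual)
        prediction = prediction + learning_rate * stump(x)
        losses.append(float(np.mean((y - prediction) ** 2)))
    return prediction, losses


# Toy data (made up): a noisy step function of one feature.
rng = np.random.default_rng(42)
x = rng.uniform(0, 1, size=200)
y = (x > 0.5).astype(float) + rng.normal(scale=0.1, size=200)
prediction, losses = boost(x, y)
print(f"first-round loss: {losses[0]:.4f}, last-round loss: {losses[-1]:.4f}")
```

Because every new stump is fitted to what the previous ones left unexplained, changes in the training data, such as added synthetic points or features removed by PCA, alter the residuals at each round and propagate through all later trees.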

These findings also highlight the necessity of carefully selecting class-balancing approaches and dimensionality reduction strategies for optimizing model performance.

Figure 9.

Dataset 1 analysis without using PCA.

Figure 10.

Dataset 1 analysis using PCA.

5.2. Findings for Dataset 2

Figure 11 and Figure 12 depict the effects of data balancing and PCA techniques on the same classifiers using Dataset 2. Before any data balancing technique is applied, the performance of all classifiers is close to 100%. With this dataset, none of the five classifiers maintained the testing accuracy observed in the original scenario once the balancing techniques were applied; the accuracy did not exceed 90%, except for the XGBoost model without PCA. This result might be caused by the nature of this dataset and the scarcity of data instances in some classes, as previously shown in Table 4. Furthermore, the original data may contain information that directly contributes to the model’s performance and that becomes less effective when oversampling techniques are applied.

Random Oversampling outperformed the other two balancing techniques, both with and without PCA, because SMOTE and ADASYN balance a dataset by generating synthetic data, which can introduce synthetic noise that misleads the classifier. Random Oversampling balances a dataset by duplicating samples from the minority class, which avoids synthetic noise. Random Oversampling can lead to overfitting, but techniques such as cross-validation and PCA can help address this issue.
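The difference between duplication and synthetic generation can be sketched as follows. This is a simplified illustration and not the library implementation used in the experiments: the SMOTE-like function interpolates toward the single nearest minority neighbor (real SMOTE selects among k nearest neighbors), and the sample points and function names are hypothetical.

```python
import random


def random_oversample(minority, target_size, rng):
    """Balance by duplicating existing minority samples; no new values are created."""
    extra = [rng.choice(minority) for _ in range(target_size - len(minority))]
    return minority + extra


def smote_like(minority, target_size, rng):
    """Balance by interpolating between a minority sample and its nearest minority neighbor."""
    synthetic = []
    while len(minority) + len(synthetic) < target_size:
        base = rng.choice(minority)
        # Nearest other minority point by squared Euclidean distance (k = 1, an assumption).
        neighbor = min((p for p in minority if p is not base),
                       key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        gap = rng.random()  # position along the segment between base and neighbor
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, neighbor)))
    return minority + synthetic


# Hypothetical minority class with three samples, to be grown to six.
minority = [(1.0, 1.0), (1.5, 2.0), (3.0, 1.0)]
rng = random.Random(0)
duplicated = random_oversample(minority, 6, rng)
interpolated = smote_like(minority, 6, rng)
```

Every point in `duplicated` already exists in the original data, whereas `interpolated` contains feature values that were never observed; if those fall in a region that overlaps another class, they act as the synthetic noise mentioned above.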

The success of the oversampling techniques varies according to the model’s effectiveness and the dataset’s particular properties. While ADASYN may cause early performance reductions, it can eventually lead to more adaptable synthetic samples. ADASYN also showed lower performance, at close to 80% with the Transformer and DNN models, likely due to the complexity it introduces in the data.

Figure 11.

Dataset 2 analysis without using PCA.

Figure 12.

Dataset 2 analysis using PCA.

5.3. Findings for Dataset 3

Regarding Dataset 3, without PCA, as shown in Figure 13, the accuracy ranges from 97.32% to 100%, a broader range of performance, which may imply that classifiers without PCA are more sensitive to fluctuations when using data balancing strategies. With PCA, as shown in Figure 14, the accuracy ranges from 97.68% to 100%, suggesting that PCA helps maintain slightly higher overall performance by reducing dimensionality and noise. Moreover, the accuracy results with PCA were excellent, exceeding 99.50% in all cases except for the Transformer model.

Both graphs illustrate that the Transformer classifier experiences a clear drop in performance when utilizing ADASYN, reaching the lowest position among all classifiers and approaches. This shows that the Transformer model is extremely sensitive to ADASYN. This sensitivity could be attributed to the nature of the classifier itself: the Transformer may rely heavily on the original data distribution, so any alteration (such as oversampling with ADASYN) can cause performance instability. As a context-aware model, the Transformer learns long-range dependencies, and alterations can introduce inconsistencies that disrupt these dependencies. Additionally, the classifier’s complexity might render it more susceptible to the inconsistencies introduced by these techniques. Specifically, ADASYN generates new samples for underrepresented data, which could introduce more inconsistencies and further affect the classifier’s performance. Therefore, the Transformer’s sensitivity arises from its dependence on the original data patterns and its limited compatibility with the changes introduced by ADASYN. Furthermore, this classifier relies on contextual relationships, and when PCA reduces dimensionality, critical dependencies may be altered, impacting performance. Hyperparameter tuning to mitigate overfitting is one way to address this issue.

The utilization of PCA has no meaningful impact on the performance of most classifiers in Dataset 3. This could be attributed to the oversampling technique on Dataset 3, where there was no clear variance in the data, and it is known that PCA captures the highest variance in the data [80]. However, in this case, PCA contributes to slightly improving the Transformer classifier and provides more stable performance across models.
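The statement that PCA captures the directions of highest variance can be made concrete with a small two-feature sketch. It computes the share of total variance carried by the first principal component directly from the eigenvalues of the 2×2 covariance matrix. The two point sets and the function name are hypothetical and are not drawn from the SDN datasets.

```python
import math


def explained_variance_ratio_2d(points):
    """Share of total variance captured by the first principal component of 2-D data."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for _, y in points) / (n - 1)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points) / (n - 1)
    # Closed-form eigenvalues of the symmetric covariance matrix [[var_x, cov_xy], [cov_xy, var_y]].
    half_trace = (var_x + var_y) / 2
    spread = math.sqrt(((var_x - var_y) / 2) ** 2 + cov_xy ** 2)
    largest, smallest = half_trace + spread, half_trace - spread
    return largest / (largest + smallest)


# Strongly correlated features: one direction carries almost all of the variance.
correlated = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 8.0), (5.0, 9.8)]
# Equal, uncorrelated spread: no direction dominates.
uncorrelated = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]

ratio_correlated = explained_variance_ratio_2d(correlated)
ratio_uncorrelated = explained_variance_ratio_2d(uncorrelated)
```

For the correlated set the first component carries nearly all of the variance, while for the uncorrelated set it carries only half. When no direction clearly dominates, as suggested above for the oversampled Dataset 3, projecting onto the leading components changes little about what the classifier can learn.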

Figure 13.

Dataset 3 analysis without using PCA.

Figure 14.

Dataset 3 analysis using PCA.

5.4. Findings for Dataset 4

Figure 15 and Figure 16 show that PCA did not enhance or sustain high performance across models when paired with data balancing techniques. The Transformer classifier demonstrated the lowest performance of all classifiers on the original dataset, which can be attributed to its sensitivity to redundant features in the original dataset when PCA is not used. Irrelevant features may hinder the Transformer model’s ability to learn patterns effectively. Additionally, for this classifier to function at its best, it requires descriptive and well-structured features.

It is noteworthy that ADASYN was the least effective of the three balancing strategies across all models, especially with the Transformer model. This does not mean that its performance was poor; it describes only its effectiveness relative to the other two strategies. The performance of the Transformer when using ADASYN fell below 96% with PCA, after exceeding 99% without PCA. Random Oversampling and SMOTE were more effective than ADASYN in Figure 15 and Figure 16, which may be related to ADASYN’s need for neighborhood computations and adaptive synthetic sample creation. Random Oversampling is also computationally less costly since it requires only simple duplication.

Figure 15.

Dataset 4 analysis without using PCA.

Figure 16.

Dataset 4 analysis using PCA.

Overall, according to all previous figures, the results sometimes show a slight improvement in the performance of the ML and DL classifiers using PCA, with data balancing techniques such as Random Oversampling and SMOTE. The performance of the majority of models is maintained by the use of PCA. This comparison, however, emphasizes the necessity of using adequate preprocessing and dimensionality reduction strategies to obtain the best model performance. It is worth mentioning that the performance of oversampling techniques varies depending on the nature of the dataset, the classifier used, and the complexity of the dataset. A comparative analysis of oversampling techniques was conducted to evaluate their varying performances in the context of SDN security. Each oversampling technique handles the creation of synthetic samples differently, which influences their effectiveness. Generally, SMOTE performs better than ADASYN in high-dimensional datasets since it minimizes excessive noise while enhancing synthetic diversity. Data from SDN security typically involves high-dimensional features that affect the effectiveness of oversampling techniques. Random Oversampling is simpler but risks overfitting. Although ADASYN is adaptive, it can degrade performance in complex or noisy data. Simpler classifiers may benefit more from Random Oversampling or SMOTE, as they do not rely on complex representations. Tree-based classifiers like RF and XGBoost generally benefit from oversampling techniques that add diversity, like SMOTE and ADASYN.

This research enhances the validity of the experiments and improves the reliability of the findings by calculating evaluation metrics that include precision, recall, and F1-score. Table 8, Table 9, Table 10, Table 11 and Table 12 record the macro average (M) and weighted average (W) values of each of these metrics, which are computed using Equations (8) and (9) [80]. Here, N refers to the number of classes in a dataset, metric_i refers to the evaluation metric (precision, recall, or F1-score) for class i, and support_i refers to the number of samples in class i.
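For reference, the two averages are commonly written in the following standard form, using N for the number of classes and a subscript i for the per-class metric and support. The equations below are reconstructed from the definitions in the text; their exact typesetting, and the assignment of the numbers (8) and (9), are assumptions.

```latex
\begin{align}
\text{Macro average} &= \frac{1}{N} \sum_{i=1}^{N} \text{metric}_i \tag{8} \\
\text{Weighted average} &= \frac{\sum_{i=1}^{N} \text{metric}_i \times \text{support}_i}{\sum_{i=1}^{N} \text{support}_i} \tag{9}
\end{align}
```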

Macro average calculates the simple average of the metric for each class individually without considering the number of samples for each class. It is useful for highlighting how the classifier performs on all classes equally. However, the weighted average multiplies each metric by the proportion of samples in the class relative to the total samples to provide a realistic view of classifier performance by emphasizing larger classes.
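A short sketch shows how the two averages diverge on an imbalanced dataset and coincide on a balanced one. The per-class recall values and class sizes are hypothetical and serve only to illustrate the arithmetic.

```python
def macro_average(per_class_metric):
    """Unweighted mean over classes: every class counts equally."""
    return sum(per_class_metric) / len(per_class_metric)


def weighted_average(per_class_metric, support):
    """Mean over classes weighted by the number of samples in each class."""
    total = sum(support)
    return sum(m * s for m, s in zip(per_class_metric, support)) / total


# Hypothetical two-class case: the majority class is recalled perfectly,
# the minority class is mostly missed.
recall = [1.00, 0.20]

imbalanced_support = [990, 10]
macro_imbalanced = macro_average(recall)
weighted_imbalanced = weighted_average(recall, imbalanced_support)

balanced_support = [500, 500]
macro_balanced = macro_average(recall)
weighted_balanced = weighted_average(recall, balanced_support)
```

With the imbalanced support, the macro average is about 0.60 while the weighted average is about 0.99, because the large class dominates the weighted value. With equal support the two values are identical, which matches the pattern reported in the tables after oversampling.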

5.5. Evaluation of DT

Table 8 shows the evaluation metrics using a macro average and a weighted average for the DT classifier. The macro average evaluation for all metrics of the classifier was low using the Original Dataset 2 in both scenarios: with and without PCA. However, the performance for the same dataset was excellent when calculating the weighted average. This indicates that the performance of a classifier on imbalanced datasets can produce significant differences between macro average and weighted average metrics. After applying oversampling techniques, it is observed that the values of all evaluation metrics using macro average and weighted average become identical, which is expected since the dataset classes were balanced. Additionally, it is observed that PCA often maintains the performance of the DT classifier.

Table 8.

DT evaluation metrics report.

| Oversampling Technique | Dataset | Without PCA: Precision (M) | Without PCA: Precision (W) | Without PCA: Recall (M) | Without PCA: Recall (W) | Without PCA: F1-Score (M) | Without PCA: F1-Score (W) | With PCA: Precision (M) | With PCA: Precision (W) | With PCA: Recall (M) | With PCA: Recall (W) | With PCA: F1-Score (M) | With PCA: F1-Score (W) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original | 1 | 0.99 | 1.00 | 1.00 | 0.99 | 0.99 | 0.99 | 0.99 | 1.00 | 1.00 | 0.99 | 0.99 | 0.99 |
| Original | 2 | 0.67 | 1.00 | 0.59 | 1.00 | 0.58 | 1.00 | 0.67 | 1.00 | 0.59 | 1.00 | 0.58 | 1.00 |
| Original | 3 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Original | 4 | 0.92 | 1.00 | 0.99 | 1.00 | 0.94 | 1.00 | 0.88 | 0.99 | 0.81 | 0.99 | 0.84 | 0.99 |
| ADASYN | 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| ADASYN | 2 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 |
| ADASYN | 3 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| ADASYN | 4 | 0.97 | 0.97 | 0.96 | 0.96 | 0.96 | 0.96 | 0.96 | 0.96 | 0.95 | 0.95 | 0.95 | 0.95 |
| Random | 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Random | 2 | 0.91 | 0.91 | 0.90 | 0.90 | 0.90 | 0.90 | 0.91 | 0.91 | 0.90 | 0.90 | 0.90 | 0.90 |
| Random | 3 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Random | 4 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| SMOTE | 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| SMOTE | 2 | 0.90 | 0.90 | 0.89 | 0.89 | 0.89 | 0.89 | 0.90 | 0.90 | 0.89 | 0.89 | 0.89 | 0.89 |
| SMOTE | 3 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| SMOTE | 4 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 0.98 | 0.98 | 0.98 | 0.98 | 0.98 | 0.98 |

5.6. Evaluation of RF

Table 9 provides an analysis of the findings of the RF classifier. When PCA is applied, there is a noticeable decline in classifier performance with Dataset 1 for all oversampling techniques. Without PCA, this dataset has distinctive features that make classification nearly perfect. Reducing dimensions with PCA likely discards some of these critical features, leading to a loss of important patterns. Additionally, Dataset 2 consistently shows lower performance than the other datasets across all of these techniques.

Table 9.

RF evaluation metrics report.

| Oversampling Technique | Dataset | Without PCA: Precision (M) | Without PCA: Precision (W) | Without PCA: Recall (M) | Without PCA: Recall (W) | Without PCA: F1-Score (M) | Without PCA: F1-Score (W) | With PCA: Precision (M) | With PCA: Precision (W) | With PCA: Recall (M) | With PCA: Recall (W) | With PCA: F1-Score (M) | With PCA: F1-Score (W) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original | 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 0.93 | 0.94 | 0.94 | 0.94 | 0.93 | 0.94 |
| Original | 2 | 0.74 | 1.00 | 0.58 | 1.00 | 0.56 | 1.00 | 0.73 | 1.00 | 0.60 | 1.00 | 0.56 | 1.00 |
| Original | 3 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Original | 4 | 0.89 | 0.99 | 0.83 | 0.99 | 0.85 | 0.99 | 0.88 | 1.00 | 0.83 | 1.00 | 0.85 | 1.00 |
| ADASYN | 1 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.94 | 0.94 | 0.93 | 0.93 | 0.93 | 0.93 |
| ADASYN | 2 | 0.86 | 0.86 | 0.86 | 0.86 | 0.85 | 0.86 | 0.85 | 0.85 | 0.82 | 0.82 | 0.79 | 0.79 |
| ADASYN | 3 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| ADASYN | 4 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 | 0.96 | 0.96 |
| Random | 1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 0.94 | 0.94 | 0.94 | 0.94 | 0.94 | 0.94 |
| Random | 2 | 0.90 | 0.90 | 0.90 | 0.90 | 0.89 | 0.89 | 0.89 | 0.89 | 0.89 | 0.89 | 0.89 | 0.89 |
| Random | 3 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |