Abstract

The advancement of pre-trained language models (PLMs) has provided new avenues for addressing text classification challenges. This study investigates the applicability of PLMs in the categorization and automatic classification of short-text safety hazard information specifically within mining industry contexts. Leveraging the superior word embedding capabilities of encoder-based PLMs, the standardized hazard description data collected from mine safety supervision systems were vectorized while preserving semantic information. Utilizing the BERTopic model, the study successfully mined hazard category information, which was subsequently manually consolidated and labeled to form a standardized dataset for training classification models. A text classification framework based on both encoder and decoder models was designed, and the classification outcomes were compared with those from ensemble learning models constructed using Naive Bayes, XGBoost, TextCNN, etc. The results demonstrate that decoder-based PLMs exhibit superior classification accuracy and generalization capabilities for semantically complex safety hazard descriptions, compared to Non-PLMs and encoder-based PLMs. Additionally, the study concludes that selecting a classification model requires a comprehensive consideration of factors such as classification accuracy and training costs to achieve a balance between performance, efficiency, and cost. This research offers novel insights and methodologies for short-text classification tasks, particularly in the application of PLMs in mine safety management and hazard analysis, laying a foundation for subsequent related studies and further improvements in mine safety management practices.

1. Introduction

Mine safety management is a complex and highly systematic task, involving multiple types of work, diverse categories of hazard sources, and multi-layered management processes [1]. Throughout the lifecycle of mine production, the core of safety management lies in accurately identifying hazards, rapidly responding to risks, and efficiently formulating intervention measures [2,3], thereby minimizing accident risks to ensure the safety of personnel and equipment. Although real-time monitoring technologies for fixed facilities and automated equipment have been widely adopted in mine production [4,5,6], a significant number of complex hazards still require professionals to engage directly on the production front lines [7]. These professionals identify, inspect, record, and oversee corrective actions by production personnel to manage hazards dynamically [8]. This process generates vast amounts of unstructured textual data [9]. Effectively mining and utilizing these data resources, which are rich in hazard information, has become a critical scientific issue in advancing the intelligent transformation of mine safety management [10].

From a data science perspective, the quantitative analysis of accident risk evolution patterns relies on high-quality structured datasets, necessitating systematic feature extraction and cluster analysis of raw textual data to achieve data dimensionality reduction and normalization. By constructing standardized datasets for hazard identification, it is possible not only to effectively identify major risk points but also to provide data support for formulating precise control strategies [11], thereby significantly enhancing the scientific rigor and effectiveness of mine safety management. However, in the context of increasingly complex industrial environments, hazard information exhibits characteristics of multi-source heterogeneity and dynamic evolution [12], making feature extraction, dimensionality reduction, and accurate classification of hazard data fundamental tasks for achieving precise quantification and statistical analysis.

In practical applications, the extraction and classification of hazard features involve two key steps: determining hazard categories and automatically classifying new hazard information [13,14]. The first step typically employs unsupervised topic mining methods to extract latent categories from textual data, achieving preliminary feature aggregation. The second step uses classification models to assign newly generated hazard data to the predefined categories, enabling dynamic management. However, both steps face challenges in current safety hazard management. Topic models such as Latent Dirichlet Allocation (LDA) and Nonnegative Matrix Factorization (NMF) have been applied to hazard category mining because they extract topic structures from unstructured text well [15,16]. Through probabilistic modeling or matrix decomposition, these methods can effectively reveal latent risk types in hazard records [17]. Nevertheless, traditional topic models have limitations, including complex hyperparameter tuning, low interpretability of results, and poor performance on short texts, where word co-occurrence information is sparse [18]. In particular, when hazard information involves multiple topics or substantial category overlap, these models often yield lower accuracy and weaker generalization, and they may overlook semantic information and dynamic changes in the text [19].

In the realm of automatic classification of hazard information, with the rapid development of machine learning and natural language processing technologies, traditional machine learning methods such as Support Vector Machines (SVM), Naive Bayes (NB), and K-Nearest Neighbors (KNN) have been widely used for hazard data classification [20,21,22,23]. These methods, through feature engineering and training on annotated data, can achieve a certain level of automatic classification of hazard information [24]. However, as the scale of hazard records continues to expand and the semantic complexity of textual content increases [25], traditional classification methods often exhibit limitations when handling high-dimensional, semantically rich hazard texts [26,27]. This is particularly evident in scenarios involving multi-domain, multi-level risk factors, where their ability to capture deep semantic and contextual relationships in text is insufficient [28]. Therefore, researching and developing hazard classification methods with greater generalization and semantic expression capabilities has become an important direction in this field.

In recent years, the emergence of PLMs (such as BERT and GPT) has provided a new perspective for the automatic classification of hazard information [29]. Pre-trained on large-scale text corpora, these models capture deep semantics and context, significantly improving performance and robustness in text classification tasks [30]. Advances in PLMs have also reshaped topic mining methodologies across domains. For instance, combining PCC-LDA with BERT has significantly improved topic coherence over traditional methods such as LDA [31]. In aviation safety oversight research, BERT-based classifiers achieved an F1-score of 89.7% in categorizing short-text hazard reports, demonstrating the capacity of PLMs to capture latent risk patterns in sparse data.

In the field of hazard information classification, BERT-based classifiers, combined with topic mining results, can be fine-tuned to adapt to the specific needs of safety management, enabling the identification of new hazard categories [32]. Additionally, PLMs can address the diversification and dynamic changes in hazard data through dynamic learning mechanisms, providing more intelligent technical support for safety management operations [33]. However, systematic research on the integration of PLMs with safety hazard management remains limited, particularly in the area of automatic classification of hazard information, where a mature theoretical framework and practical applications have yet to be established.

This study proposes an automatic classification method for hazard information that integrates PLMs, following the technical pathway below:

- Hazard category extraction: using the BERTopic topic model combined with PLMs to extract latent categories from raw textual data, and manually annotating these categories based on domain knowledge to construct a high-quality training dataset;

- Text classification: optimizing text vectorization to address the short-text characteristics of hazard records, and designing application pathways for different classification models;

- Ensemble learning: integrating multiple classification models to improve the stability and accuracy of the Non-PLM classification approaches;

- PLM-based classification: leveraging the semantic understanding capabilities of PLMs to achieve dynamic classification of hazard information, and comprehensively evaluating and validating the classification results.

Through these methods, this study provides an efficient and precise solution for classifying and managing hazard information, and offers theoretical support and technical references for safety management in complex industrial environments. The outcomes are expected to raise the level of intelligence in safety hazard management and to provide an important basis for formulating accident prevention measures and optimizing safety management systems.

2. Methodology

2.1. Overall Approach to Text Classification Based on PLMs

The Transformer architecture is the cornerstone of PLMs [34]. It introduced the multi-head self-attention mechanism in place of the sequential recurrence of recurrent neural networks (RNNs) and the local convolutions of convolutional neural networks (CNNs). Because self-attention relates every position in a sequence directly to every other position, the model can capture long-range dependencies and process sequences in parallel, which improves both computational efficiency and model performance. Based on application scenarios and architectural characteristics, existing PLMs can be divided into three types:

- (1) Encoder models. Represented by BERT, these models use bidirectional context encoding and focus on text understanding. Pre-trained with a masked language modeling objective, they generate context-aware text representations and excel at tasks such as text classification and sequence labeling.

- (2) Decoder models. Examples include the GPT and LLaMA series, which generate text auto-regressively, predicting each token from the tokens to its left. These models perform exceptionally well in text generation and are the dominant architecture for generative language models.

- (3) Encoder-decoder models. Represented by T5 and BART, these models combine the strengths of encoder and decoder architectures. They have clear advantages in sequence-to-sequence tasks (e.g., machine translation, text summarization) and can handle both text understanding and text generation.
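To make the encoder/decoder distinction concrete, the sketch below implements single-head scaled dot-product self-attention in plain Python. It is a simplification that assumes identity query/key/value projections and a single head; real Transformers learn separate projections and use several heads. The only difference between the two modes is the mask: `causal=False` lets every token attend to every other token, as in BERT-style encoders, while `causal=True` hides future positions, as in GPT/LLaMA-style decoders.

```python
import math


def softmax(scores):
    """Numerically stable softmax; -inf scores receive zero weight."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, causal=False):
    """Single-head scaled dot-product self-attention with Q = K = V = x.

    causal=False: every token attends to every token (encoder style).
    causal=True:  token i attends only to tokens 0..i (decoder style).
    Returns (outputs, attention_weights).
    """
    n, d = len(x), len(x[0])
    outputs, weights = [], []
    for i in range(n):
        scores = []
        for j in range(n):
            if causal and j > i:
                scores.append(float("-inf"))  # hide future positions
            else:
                dot = sum(a * b for a, b in zip(x[i], x[j]))
                scores.append(dot / math.sqrt(d))  # scale by sqrt(d)
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted sum of the (value) vectors.
        outputs.append([sum(w[j] * x[j][k] for j in range(n)) for k in range(d)])
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d token vectors
_, enc_weights = self_attention(tokens)               # bidirectional
_, dec_weights = self_attention(tokens, causal=True)  # causal
```

With `causal=True`, the first token can only attend to itself, so its weight row is `[1.0, 0.0, 0.0]`; in encoder mode all three weights in that row are positive.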

Based on the above comparative analysis, this study ultimately selects encoder models and decoder models as the two types of PLMs to apply to the automatic classification of mine safety hazard texts. The specific applications are reflected in two aspects:

First, in terms of text representation, the deep semantic modeling capabilities of encoder models are utilized to transform unstructured safety hazard descriptions into high-dimensional vector representations. This pre-trained text vectorization method effectively captures semantic and syntactic features of the text, mapping semantically similar descriptions to adjacent regions in the vector space, thereby providing high-quality feature representations for subsequent classification tasks.
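The text does not state how token vectors are pooled into a sentence vector. A common choice, assumed here, is to average the final-layer token embeddings while ignoring padding; the [CLS] vector is an alternative. The sketch below shows that pooling step and the cosine similarity typically used to judge whether two descriptions lie close together in the vector space. The vectors are toy values, not real BERT outputs.

```python
import math


def mean_pool(token_vectors, attention_mask):
    """Average token embeddings over real tokens (mask 1), skipping padding (mask 0)."""
    kept = [v for v, m in zip(token_vectors, attention_mask) if m == 1]
    dim = len(token_vectors[0])
    return [sum(v[k] for v in kept) / len(kept) for k in range(dim)]


def cosine(u, v):
    """Cosine similarity: 1.0 for identical directions, 0.0 for orthogonal vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


# Toy final-layer vectors for a 3-token sequence whose last position is padding.
sentence_vec = mean_pool([[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]], [1, 1, 0])
```

Here `sentence_vec` is `[2.0, 3.0]`: the padded position does not influence the sentence vector.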

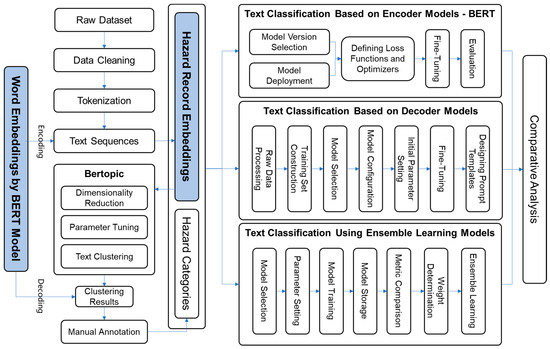

Second, in the construction of classification models, this study develops safety hazard text classification frameworks based on both encoder and decoder models, comparing them with classification methods based on Non-PLMs to evaluate the applicability of PLMs in this specific domain. The overall logic of the study is illustrated in Figure 1.

Figure 1. Research method.

2.2. Hazard Category Classification Based on BERTopic

For safety hazard text classification, the first problem to solve is determining the main hazard categories. Safety hazard inspection data typically include a description of the hazard, the inspection time, and the location where it was found. The hazard description field usually reflects the subject of the hazard and the specific problem involved, so mining topics from this field not only extracts the main hazard categories but also lays the foundation for the subsequent classification models. This study adopts the BERTopic topic model [35], which combines the semantic representations of PLMs with an improved, class-based term-frequency weighting, and examines how well it extracts topics from safety hazard data. By integrating clustering with topic-word extraction, BERTopic improves both interpretability and analysis efficiency. The core weighting scheme used for topic mining in this model is CTF-ICF, defined as follows:

For hazard category $i$, all records within that category are merged and treated as a single document to increase the semantic density of the hazard topic. On this basis, the intra-cluster term frequency (Cluster Term Frequency, CTF) is calculated as shown in Equation (1):

$$\mathrm{CTF}_{t,i} = \frac{f_{t,i}}{\sum_{k} f_{k,i}} \qquad (1)$$

Here, $f_{t,i}$ represents the number of occurrences of keyword $t$ in category $i$, and $\sum_{k} f_{k,i}$ denotes the total number of occurrences of all keywords in category $i$.

Assuming there are a total of n hazard records and m unconnected records, the Inverse Cluster Frequency (ICF) for that category is calculated as shown in Equation (2):

where $f_{t,i}$ is the number of occurrences of keyword $t$ in category $i$, and the summation $\sum_{i} f_{t,i}$ is taken over all categories.

Thus, the CTF-ICF value for that category is calculated as shown in Equation (3):

$$\mathrm{CTF\text{-}ICF}_{t,i} = \mathrm{CTF}_{t,i} \times \mathrm{ICF}_{t} \qquad (3)$$

During the topic mining process, the CTF-ICF method is employed to calculate the importance score of hazard keywords within a category. The words with the highest CTF-ICF values are then extracted as the topic descriptions for that category.
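As a rough illustration of class-based term weighting, the following sketch scores keywords per hazard category and picks the top ones. The ICF term here is an assumption: it follows the c-TF-IDF formula documented for BERTopic, W(t, i) = tf(t, i) / Σ tf(·, i) × log(1 + A / f(t)), where A is the average number of term occurrences per category and f(t) is the frequency of t across all categories; the study's ICF (Equation (2)) may differ in detail. Tokens are assumed to be pre-segmented (Chinese text would need a word segmenter first), and the toy records are hypothetical.

```python
import math
from collections import Counter


def c_tf_idf(docs_per_class):
    """docs_per_class: {category: [token lists]} -> {category: {term: weight}}."""
    # Merge all records of a category into one "class document" and count its terms.
    tf = {c: Counter(tok for doc in docs for tok in doc)
          for c, docs in docs_per_class.items()}
    # f[t]: frequency of term t summed over all categories.
    f = Counter()
    for counts in tf.values():
        f.update(counts)
    # A: average number of term occurrences per category.
    avg_terms = sum(f.values()) / len(tf)
    weights = {}
    for c, counts in tf.items():
        total = sum(counts.values())
        weights[c] = {t: (n / total) * math.log(1 + avg_terms / f[t])
                      for t, n in counts.items()}
    return weights


def top_terms(weights, k=3):
    """The k highest-weighted terms per category, used as its topic description."""
    return {c: sorted(w, key=w.get, reverse=True)[:k] for c, w in weights.items()}


records = {
    "ventilation": [["fan", "airflow", "check"], ["fan", "duct"]],
    "lighting": [["lamp", "check"], ["lamp", "broken"]],
}
weights = c_tf_idf(records)
```

Terms concentrated in one category ("fan", "lamp") come out on top, while a term shared by both categories ("check") is down-weighted.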

To avoid the computational cost, excessive memory usage, and dimensionality explosion caused by high-dimensional data, the BERTopic model uses the UMAP (Uniform Manifold Approximation and Projection) algorithm to reduce the dimensionality of the sentence embeddings. The reduced embeddings are then clustered with HDBSCAN, a density-based clustering algorithm that handles noisy data effectively and determines the number of clusters automatically. Combining UMAP and HDBSCAN enables robust and scalable topic mining even for complex, high-dimensional data, and the resulting clusters determine the safety hazard categories.

2.3. Safety Hazard Classification Methods

Apart from rule-based systems, which require classification rules to be defined and configured manually, the typical text classification algorithms considered in this study are listed in Table 1 [36]. The selection criteria were that a model has been widely applied in the field with good results, or that it serves as a foundational algorithm in text classification.

Table 1. Text classification algorithms.

In this study, the aforementioned classification methods are divided into three types: Encoder Models (e.g., BERT), Decoder Models (e.g., GPT, LLaMA), and Non-PLMs (machine learning and deep learning models that have not undergone pre-training on large-scale general-purpose corpora). To compare the classification performance of these models on short-text safety hazard data, this research designs classification workflows based on encoder and decoder models and uses an ensemble learning model built from other representative Non-PLMs as a benchmark for classification performance.

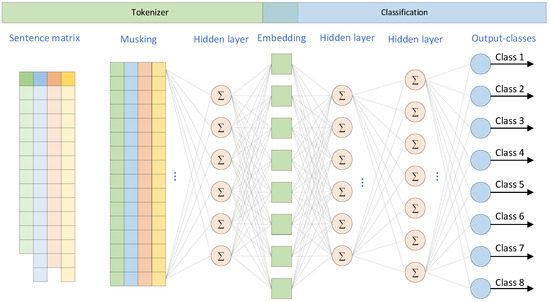

2.3.1. Text Classification Based on Encoder Models

This study employs the BERT model to validate the classification effectiveness of encoder models on hazard data. In text classification tasks, BERT excels at handling complex linguistic phenomena such as polysemy and metaphors. For instance, in sentences containing metaphors, BERT can accurately infer the implied meanings of words through contextual semantic information, thereby improving classification accuracy. This capability makes BERT particularly suitable for hazard description data, which often includes a significant number of colloquial expressions. Figure 2 illustrates the architectural framework for text classification research based on encoder models.

Figure 2. Architecture of text classification based on encoder models.

Specifically, the complete workflow for text classification using the BERT model includes data preprocessing, model loading and fine-tuning, and model evaluation.

Data Preprocessing: The raw data are cleaned, tokenized, and then vectorized with the BERT model. During tokenization, input sequences are padded or truncated to a uniform length. When a text exceeds the model's maximum input length, a truncation strategy typically retains the initial portion of the text; when a text is shorter than the target length, it is padded with special padding tokens so that batches can be computed consistently and efficiently.
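The head-truncation and padding step can be sketched as follows for a single sequence of token IDs. The special-token IDs (101 for [CLS], 102 for [SEP], 0 for [PAD]) are an assumption matching common BERT vocabularies; in practice they should be read from the tokenizer. With Hugging Face tokenizers, the same behavior is usually obtained by calling the tokenizer with `padding="max_length", truncation=True, max_length=...`.

```python
def pad_or_truncate(token_ids, max_len, cls_id=101, sep_id=102, pad_id=0):
    """Build fixed-length BERT input: [CLS] tokens [SEP], then [PAD] up to max_len.

    Long inputs keep their initial portion (head truncation). Returns
    (input_ids, attention_mask), where the mask is 1 for real tokens and 0 for padding.
    The default special-token IDs are assumed; read them from the tokenizer in practice.
    """
    body = token_ids[: max_len - 2]  # leave room for [CLS] and [SEP]
    ids = [cls_id] + body + [sep_id]
    n_pad = max_len - len(ids)
    return ids + [pad_id] * n_pad, [1] * len(ids) + [0] * n_pad
```

For example, `pad_or_truncate([7, 8, 9], 6)` yields `([101, 7, 8, 9, 102, 0], [1, 1, 1, 1, 1, 0])`.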

Model Loading and Fine-tuning: Based on task requirements and hardware constraints, an appropriate version of the BERT model is selected, and a loss function and optimizer are defined. This study uses the cross-entropy loss to optimize the model's predictive performance, calculated as shown in Equation (4):

$$L = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{C} y_{ij}\log p_{ij} \qquad (4)$$

where $N$ is the total number of samples, $C$ is the number of categories, $y_{ij}$ is the true label indicating whether the $i$-th sample belongs to the $j$-th category, and $p_{ij}$ is the model's predicted probability that the $i$-th sample belongs to the $j$-th category.
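Equation (4) can be checked numerically with a small plain-Python function. This is a sketch for illustration, assuming one-hot labels and probability rows that already sum to 1; deep learning frameworks compute the same quantity directly from logits in a numerically safer way.

```python
import math


def cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean categorical cross-entropy: L = -(1/N) * sum_i sum_j y_ij * log(p_ij).

    y_true: one-hot label rows; p_pred: predicted probability rows.
    eps clips probabilities away from 0 so log() stays finite.
    """
    total = 0.0
    for y_row, p_row in zip(y_true, p_pred):
        total += sum(y * math.log(max(p, eps)) for y, p in zip(y_row, p_row))
    return -total / len(y_true)


y_true = [[1, 0, 0], [0, 1, 0]]
p_pred = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
loss = cross_entropy(y_true, p_pred)
```

Only the probability assigned to the true class contributes, so here the loss is -(ln 0.7 + ln 0.8) / 2 ≈ 0.29.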

The preprocessed data are fed into the BERT model in batches, and the model is fine-tuned using the predefined loss function and optimizer to better adapt to the characteristics of target data. Fine-tuning requires appropriate hyperparameter settings, such as the number of training epochs and learning rate. During each epoch, the model iterates through the entire training dataset, and the optimizer updates the parameters to gradually reduce the loss function value.

Model Evaluation: After training, the model's classification performance is assessed with classification evaluation metrics on the held-out test set.

2.3.2. Text Classification Based on Decoder Models

Decoder models are a fundamental component of current generative language models and have shown excellent performance in natural language processing tasks. Typical applications include text generation, intelligent writing, code assistance, intelligent customer service, and machine translation. However, their application in text classification tasks is relatively limited. This study explores the use of decoder models for short-text classification tasks.

Open-source decoder models (e.g., LLaMA, Qwen, Baichuan) are primarily trained on general-purpose data, leaving room for improvement in specific downstream scenarios and vertical domains. These models require further adaptation using task-specific data to suit particular contexts. The main difference from fine-tuning encoder models lies in the formatting requirements of the raw data. Decoder models typically require data to be organized in specific formats (e.g., Alpaca or ShareGPT formats). For instance, in the Alpaca format, each training data point must include an instruction (task description), input (input content), and output (expected output). Therefore, hazard data must be formatted accordingly.
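A minimal sketch of converting labeled hazard records into Alpaca-style training examples is shown below. The instruction wording and the two example records are hypothetical; only the three field names (`instruction`, `input`, `output`) come from the Alpaca format described above. `ensure_ascii=False` keeps Chinese characters readable in the exported JSON.

```python
import json

INSTRUCTION = "Classify the following mine safety hazard description into one hazard category."  # hypothetical


def to_alpaca(description, category, instruction=INSTRUCTION):
    """One supervised example in Alpaca format: instruction, input, output."""
    return {"instruction": instruction, "input": description, "output": category}


# Hypothetical labeled records (English glosses; the real data are Chinese).
labeled = [
    ("Local fan in the heading is not running", "ventilation problems"),
    ("Lamp at the shaft station is broken", "lighting issues"),
]
records = [to_alpaca(desc, cat) for desc, cat in labeled]
payload = json.dumps(records, ensure_ascii=False, indent=2)  # keep Chinese text readable
```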

Since the primary application of decoder models is not text classification, designing appropriate prompts is essential to guide the model in performing classification tasks. A well-designed prompt typically includes a task description, examples, and input text to help the model understand the task objectives and classification patterns. During the design process, it is crucial to define the task description, skill scope, and output format to ensure the model’s accuracy and operational effectiveness in task execution.

2.3.3. Comparative Model Based on Ensemble Learning

In text classification tasks, common methods include traditional machine learning algorithms (e.g., NB, SVM, KNN) and deep learning algorithms (e.g., TextCNN). In this study, these algorithms are first trained independently and then effectively combined through ensemble learning to form a unified model system for comparative analysis with the classification performance of the two types of PLMs.

In terms of model selection, this study focuses on the performance of different classification algorithms on short texts. In addition to classic algorithms like Naive Bayes, SVM, and KNN, the deep learning model TextCNN is selected, while XGBoost is chosen as the representative model for decision-tree-based algorithms.

During model evaluation, the selected base models are first trained on the training set, and their performance is comprehensively evaluated on an independent validation set. Evaluation metrics include core classification metrics such as Accuracy, Precision, Recall, and F1, enabling the selection of models with superior performance and diversity as the final base models.
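The four metrics can be computed as in the sketch below. The text does not say whether Precision, Recall, and F1 are macro- or weighted-averaged across the 17 categories; macro averaging (each class counts equally) is assumed here. scikit-learn's `precision_recall_fscore_support` with `average="macro"` should give the same values on the same label set.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged Precision, Recall and F1 (each class weighted equally)."""
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision, recall, f1 = [], [], []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        precision.append(prec)
        recall.append(rec)
        f1.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    k = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precision) / k,
        "recall": sum(recall) / k,
        "f1": sum(f1) / k,
    }


scores = classification_metrics(["a", "a", "b", "b"], ["a", "b", "b", "b"])
```

In this toy case accuracy is 0.75 and macro F1 is (0.667 + 0.8) / 2 ≈ 0.733.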

To integrate the classification results of the individual models, ensemble learning is used to combine the selected classification models in the control group. Based on the task characteristics, data properties, and base-model performance, this study adopts a weighted voting strategy: each base model's F1 score on the validation set determines its voting weight, so that better-performing models have more influence on the final result. In the prediction phase, weighted voting combines the predictions of all base models, and the performance of the ensemble is compared with that of each base model to analyze its strengths and weaknesses.
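F1-weighted voting for a single record can be sketched as follows. The F1 weights in the example are hypothetical. Section 3.3 mentions a category determination threshold of 0.8 without defining how it is applied; `min_share` is one possible, hypothetical reading, in which the vote is left undecided when the winning label holds less than that share of the total weight.

```python
from collections import defaultdict


def weighted_vote(predictions, f1_weights, min_share=None):
    """Combine base-model labels for one record by F1-weighted voting.

    predictions: {model_name: predicted_label}
    f1_weights:  {model_name: validation F1 score}
    min_share:   hypothetical threshold; below it the result is None (undecided).
    Returns (label, share of total weight held by the winning label).
    """
    scores = defaultdict(float)
    for model, label in predictions.items():
        scores[label] += f1_weights[model]
    best = max(scores, key=scores.get)
    share = scores[best] / sum(scores.values())
    if min_share is not None and share < min_share:
        return None, share
    return best, share


f1_weights = {"nb": 0.70, "svm": 0.80, "knn": 0.65, "xgb": 0.90, "textcnn": 0.55}  # hypothetical
votes = {"nb": "ventilation problems", "svm": "lighting issues", "knn": "ventilation problems",
         "xgb": "lighting issues", "textcnn": "ventilation problems"}
label, share = weighted_vote(votes, f1_weights)
```

Here the three models voting for "ventilation problems" outweigh the two stronger models voting for "lighting issues" (1.90 vs 1.70), but the winning share (about 0.53) would fall below a 0.8 threshold.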

3. Case Study

The raw data for this study consist of hazard records from a large metal mine in China: 42,781 records with an average text length of 35 words and a maximum text length of 89 words. The records were exported through a data interface and each was assigned a unique identifier, forming the initial dataset for analysis. Because some records describe multiple types of hazards, engineering methods were used to split them into independent sentences, refining the granularity of the analysis.
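The text does not describe how multi-hazard records were split. One simple, hypothetical approach is to break a record on Chinese and ASCII sentence or clause delimiters and give each piece a derived identifier, as sketched below; real records may need more careful rules (for example, when a semicolon occurs inside a single hazard description).

```python
import re

# Hypothetical rule: Chinese/ASCII sentence and clause delimiters separate hazards.
SPLIT_PATTERN = re.compile(r"[。；;！!？?\n]+")


def split_record(record_id, text):
    """Split one record into single-hazard sentences with derived IDs like 'R001-1'."""
    parts = [p.strip() for p in SPLIT_PATTERN.split(text) if p.strip()]
    return [(f"{record_id}-{k}", part) for k, part in enumerate(parts, start=1)]


# '1号风机未开启；巷道照明灯损坏。' ("Fan No. 1 not running; roadway lamp damaged.")
pieces = split_record("R001", "1号风机未开启；巷道照明灯损坏。")
```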

3.1. Data Preprocessing

This study uses the BERT-Base-Chinese pre-trained model for word embedding. The model has 12 Transformer layers, 768 hidden units, 12 self-attention heads, and a total of 110 million parameters. Before tokenization, the hazard descriptions were cleaned to remove duplicate and irrelevant records, and special characters and numbers were removed to normalize the text. The descriptions were then tokenized with the model's vocabulary, converted into token indices, and organized into tensors suitable for model input, ultimately producing sentence embeddings for the subsequent tasks.
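The cleaning rules above (removing duplicates, special characters, and numbers) might look like the sketch below. The character ranges kept (CJK Unified Ideographs U+4E00 to U+9FFF, plus Latin letters) are an assumption, not the study's exact rule set.

```python
import re


def clean_text(text):
    """Drop digits and special characters; keep CJK ideographs and Latin letters."""
    text = re.sub(r"\d+", "", text)                        # remove numbers
    text = re.sub(r"[^\u4e00-\u9fffA-Za-z\s]", " ", text)  # punctuation/symbols -> space
    return re.sub(r"\s+", " ", text).strip()               # collapse whitespace


def clean_and_dedupe(records):
    """Clean every record, then drop empty strings and exact duplicates, keeping order."""
    seen, kept = set(), []
    for record in records:
        cleaned = clean_text(record)
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            kept.append(cleaned)
    return kept
```

Records that differ only in punctuation collapse to one entry after cleaning, and records consisting only of digits are dropped.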

3.2. Safety Hazard Category Classification

In this study, three key UMAP parameters, n_neighbors (number of neighbors), n_components (target dimensionality), and metric (distance metric), were set to 25, 10, and 'cosine', respectively. These settings balance the preservation of local and global data structure while keeping dimensionality reduction efficient. For HDBSCAN clustering, the minimum cluster size was set to 30. This parameter controls the number of topics: a larger value yields fewer topics, and a smaller value yields more. HDBSCAN assigns the label −1 to records it treats as noise; these records cannot be assigned to any cluster and carry little meaningful semantic information, so they are excluded from topic-word extraction, which improves the precision and interpretability of the clustering results.
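With the parameters reported above, the BERTopic pipeline could be assembled roughly as follows. This is a sketch under assumptions: it requires the `bertopic`, `umap-learn`, and `hdbscan` packages (imported lazily so the rest of the snippet runs without them), leaves all other settings at library defaults, and expects precomputed BERT sentence embeddings to be passed to `fit_transform`. For Chinese text, BERTopic's default `CountVectorizer` would likely also need a word-segmenting `vectorizer_model`, which is omitted here. `drop_noise` is a hypothetical helper that removes records labeled -1 before topic-word extraction.

```python
# Parameters reported in the text; everything else stays at library defaults.
UMAP_PARAMS = {"n_neighbors": 25, "n_components": 10, "metric": "cosine"}
HDBSCAN_PARAMS = {"min_cluster_size": 30}


def build_topic_model():
    """Assemble a BERTopic pipeline (needs bertopic, umap-learn and hdbscan installed)."""
    from bertopic import BERTopic
    from hdbscan import HDBSCAN
    from umap import UMAP

    return BERTopic(umap_model=UMAP(**UMAP_PARAMS),
                    hdbscan_model=HDBSCAN(**HDBSCAN_PARAMS))


def drop_noise(docs, topics):
    """Keep (document, topic) pairs whose HDBSCAN label is not -1 (noise)."""
    return [(d, t) for d, t in zip(docs, topics) if t != -1]
```

A typical call would be `topics, probs = build_topic_model().fit_transform(docs, embeddings)`, after which `drop_noise(docs, topics)` keeps only the clustered records.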

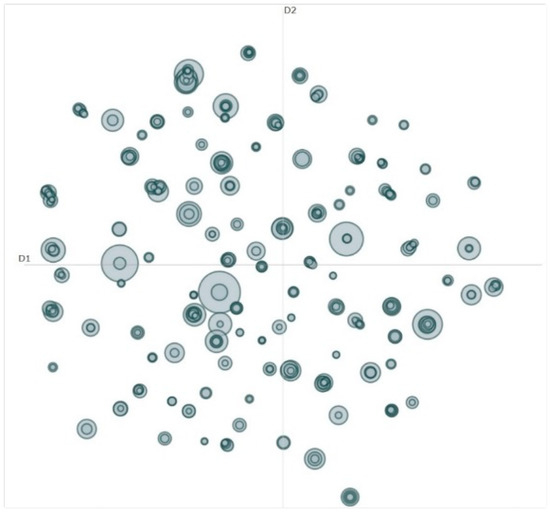

To reveal the relative distances and similarities between topics, the clustering results were projected onto a two-dimensional space, as shown in Figure 3. Each circle represents a cluster; the axes D1 and D2 merely span the projected vector space and have no physical meaning. Many categories are highly similar, so further merging is needed to reduce the number of generated topics and eliminate some noise.

Figure 3. Hazard clustering results.

Topics are merged by calculating the CTF-ICF vector of each topic, merging the most similar pair, and recalculating the CTF-ICF vectors after each merge to update the topic representations. This process yields a more concise and semantically consistent set of topics. After the topic mining algorithm identifies the hazard topics, manual intervention is still required to assign categories to some ambiguous records; this step produces a more accurate hazard classification training set and thus higher-quality input for the subsequent text classification tasks. In this study, 17 hazard categories were ultimately identified: lighting issues (5.43%), road problems (4.56%), support problems (14.87%), roof management issues (13.71%), ventilation problems (5.12%), fire safety issues (7.23%), vehicle safety issues (3.61%), safety protection issues (9.97%), improper blasting and equipment management (4.21%), power distribution facility issues (8.87%), pipeline problems (6.98%), line issues (5.89%), aging or damaged equipment and facilities (3.12%), missing safety signs and guardrails (2.78%), poor civilized production (1.98%), insufficient safety confirmation (1.66%), and other issues (0.01%).
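The merging loop described above can be sketched as a greedy procedure: weight each topic's terms, merge the most similar pair by cosine similarity, recompute the weights, and repeat until a target number of topics remains. As an assumption, the weights follow BERTopic's published c-TF-IDF formula rather than the study's exact CTF-ICF definition, and the toy topics are hypothetical. BERTopic's own `reduce_topics` method provides a library implementation of topic reduction.

```python
import math
from collections import Counter


def ctf_icf(topic_terms):
    """c-TF-IDF-style weights per topic (BERTopic's formula, used here as a stand-in)."""
    f = Counter()
    for counts in topic_terms.values():
        f.update(counts)
    avg = sum(f.values()) / len(topic_terms)
    return {k: {t: n / sum(c.values()) * math.log(1 + avg / f[t]) for t, n in c.items()}
            for k, c in topic_terms.items()}


def cosine(u, v):
    """Cosine similarity of two sparse {term: weight} vectors."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


def merge_topics(topic_terms, target):
    """Merge the most similar topic pair, recompute weights, repeat until `target` remain."""
    topics = {k: Counter(v) for k, v in topic_terms.items()}
    while len(topics) > target:
        vecs = ctf_icf(topics)
        keys = sorted(topics)
        a, b = max(((x, y) for i, x in enumerate(keys) for y in keys[i + 1:]),
                   key=lambda pair: cosine(vecs[pair[0]], vecs[pair[1]]))
        topics[a] += topics.pop(b)  # fold topic b's term counts into topic a
    return topics


toy_topics = {
    0: {"fan": 3, "airflow": 2},
    1: {"fan": 2, "duct": 1, "airflow": 1},
    2: {"lamp": 4, "bulb": 1},
}
merged = merge_topics(toy_topics, target=2)
```

The two ventilation-like topics share terms and are merged, while the lighting topic, which shares no terms with them, is left untouched.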

3.3. Construction of Classification Models

The standardized, labeled dataset was partitioned by stratified random sampling into training, validation, and test sets at a 6:2:2 ratio (60% training, 20% validation, 20% testing), preserving the original class distribution in every split. To ensure statistical robustness, we conducted 10 independent trials of random splitting complemented by 5-fold cross-validation. For decoder model training, raw hazard descriptions were used directly, without text vectorization, and formatted into an Alpaca-style instruction structure with instruction, input, and output fields, as required for fine-tuning an autoregressive model.
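A stratified 6:2:2 split can be sketched as below: indices are grouped by label, shuffled with a seeded generator, and cut per class, so every split keeps roughly the original class proportions. Repeating it with ten different seeds would give ten independent random splits. scikit-learn's `train_test_split(..., stratify=labels)`, applied twice, is a common library alternative.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.6, 0.2, 0.2), seed=0):
    """Return (train, val, test) index lists that keep each class's proportion."""
    rng = random.Random(seed)  # seeded, so each split is reproducible
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train, val, test = [], [], []
    for idx in by_class.values():
        rng.shuffle(idx)
        n_train = round(len(idx) * ratios[0])
        n_val = round(len(idx) * ratios[1])
        train += idx[:n_train]
        val += idx[n_train:n_train + n_val]
        test += idx[n_train + n_val:]  # the remainder goes to the test split
    return train, val, test


labels = ["a"] * 10 + ["b"] * 5
train_idx, val_idx, test_idx = stratified_split(labels)
```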

All experiments were executed on a dedicated research workstation equipped with an Intel Core i7-13700K processor, 32GB DDR5 system memory, and an NVIDIA RTX 3090 Ti graphics card, providing sufficient computational resources for model training and inference tasks.

(1) Parameter Settings for the BERT Model

The classification model was BERT-Base-Chinese, the same model used for text vectorization, to ensure consistent semantic representation. During fine-tuning, the Adam optimizer and a linear learning-rate decay strategy were used to ensure training stability. The parameter settings for fine-tuning are shown in Table 2. The configuration followed three principles: (1) compatibility with the specifications of the PLM, (2) empirical optimization through controlled pilot studies, and (3) practical adaptation to computational constraints.
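Table 2 is not reproduced here, so the sketch below uses a hypothetical base learning rate of 2e-5 to illustrate a linear learning-rate schedule. It follows the formula used by `get_linear_schedule_with_warmup` in Hugging Face Transformers (optional linear warm-up, then linear decay to zero); whether the study used a warm-up phase is not stated.

```python
def linear_schedule_lr(step, total_steps, base_lr, warmup_steps=0):
    """Learning rate at a given optimizer step.

    Rises linearly from 0 to base_lr during warm-up (if any), then decays
    linearly to 0 at total_steps, mirroring get_linear_schedule_with_warmup.
    """
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 2e-5  # hypothetical; the study's value is in Table 2
lrs = [linear_schedule_lr(s, 100, BASE_LR) for s in (0, 50, 100)]
```

Without warm-up, the rate starts at `BASE_LR`, halves at the midpoint, and reaches zero at the final step.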

Table 2. Parameter settings for the BERT model.

(2) Decoder Model Configuration

The decoder model selected for this study is the open-source LLaMA3-7B model, fine-tuned with LoRA (Low-Rank Adaptation) for parameter-efficient adaptation. LoRA adapters were applied to eight trainable layers, each with a low-rank dimension (rank) of 32, which reduces GPU memory usage and improves training efficiency. The parameter configuration for the LLaMA3 model is shown in Table 3.
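To show why LoRA reduces memory, the sketch below implements a LoRA-adapted linear layer in plain Python: the frozen weight W is augmented by a trainable low-rank product A·B scaled by alpha/r, and B starts at zero so the adapted layer initially behaves exactly like the frozen one. It uses a row-vector convention (h = xW + (alpha/r)·xAB); the alpha value and the 4096 × 4096 matrix size in the usage note are assumptions, not settings from Table 3.

```python
import random


def matmul(a, b):
    """Plain-Python matrix product of nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(x, W, A, B, alpha, r):
    """h = x W + (alpha / r) * x A B; W is frozen, only A (d x r) and B (r x k) train."""
    base = matmul(x, W)
    delta = matmul(matmul(x, A), B)
    scale = alpha / r
    return [[b + scale * d for b, d in zip(rb, rd)] for rb, rd in zip(base, delta)]


def lora_param_count(d, k, r):
    """Trainable parameters LoRA adds to one d x k weight matrix: r * (d + k)."""
    return r * (d + k)


# Tiny check: with B initialised to zeros, the adapted layer starts out identical to the frozen one.
rng = random.Random(0)
d, k, r = 4, 3, 2
W = [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(d)]
A = [[rng.gauss(0.0, 0.01) for _ in range(r)] for _ in range(d)]
B = [[0.0] * k for _ in range(r)]
x = [[1.0, 2.0, 3.0, 4.0]]
same_at_init = lora_forward(x, W, A, B, alpha=16, r=r) == matmul(x, W)
```

For one 4096 × 4096 projection, rank r = 32 adds 32 × (4096 + 4096) = 262,144 trainable parameters, compared with about 16.8 million in the frozen matrix.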

Table 3. Parameter configuration for the LLaMA3 model.

After model training, the following prompt was used to evaluate the model’s performance. A batch processing script was built using Python (3.13.2) to process, analyze, and evaluate the model’s classification results.

Role: Mine Safety Hazard Classification Expert;

Description: Possesses extensive experience in mine safety management, familiar with the classification and characteristics of various hazards, capable of accurately determining hazard categories based on descriptions and providing reliable evidence;

Skills: Deep understanding of the provided mine safety hazard classification standards; accurate interpretation of hazard descriptions and determination of specific categories; providing clear and concise classification results, ensuring accuracy;

Goals: Accurately understand the hazard descriptions provided by users; determine the corresponding hazard category based on the description; ensure clear and concise output of classification results;

Output Format: Only output the specific hazard category.
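A batch script around this prompt needs two small pieces: assembling the prompt for each record and mapping the model's free-text reply back to a valid category. The sketch below shows both under stated assumptions: the header is abbreviated from the prompt above, the way the category list and description are appended is hypothetical, and `parse_reply` returns `None` for replies that do not exactly match a known category (including multi-category replies), so that such cases can be reviewed manually.

```python
# Abbreviated from the prompt above (Description, Skills and Goals lines omitted for brevity).
HEADER = (
    "Role: Mine Safety Hazard Classification Expert;\n"
    "Output Format: Only output the specific hazard category."
)


def build_prompt(header, categories, description):
    """Append the allowed labels and one hazard description to the role prompt (hypothetical layout)."""
    label_lines = "\n".join(f"- {c}" for c in categories)
    return f"{header}\n\nCategories:\n{label_lines}\n\nHazard description: {description}\nCategory:"


def parse_reply(reply, categories):
    """Map a free-text reply onto a known category; None flags replies needing manual review."""
    cleaned = reply.strip().strip("。.;；").strip().lower()
    for c in categories:
        if cleaned == c.lower():
            return c
    return None


CATEGORIES = ["ventilation problems", "lighting issues"]  # two of the 17 categories
prompt = build_prompt(HEADER, CATEGORIES, "Lamp at the shaft station is broken")
```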

(3) Ensemble Learning Model Configuration

The parameter configurations of the KNN, SVM, XGBoost, and TextCNN models are shown in Table 4. The Naive Bayes, KNN, and SVM models were built with the sklearn library (version 1.2.2), the XGBoost model with the xgboost library (version 1.7.5), and the TextCNN model with PyTorch (version 2.0.1). In the ensemble learning process, the category determination threshold was set to 0.8.

Table 4. Parameter settings for Non-PLMs.

The evaluation results for each model are shown in Table 5. Note that the ensemble learning model was evaluated without separating the dataset into training and test sets. For the LLaMA3 model, the training set results refer to performance before fine-tuning, and the test set results refer to performance after fine-tuning.

Table 5. Classification model evaluation results.

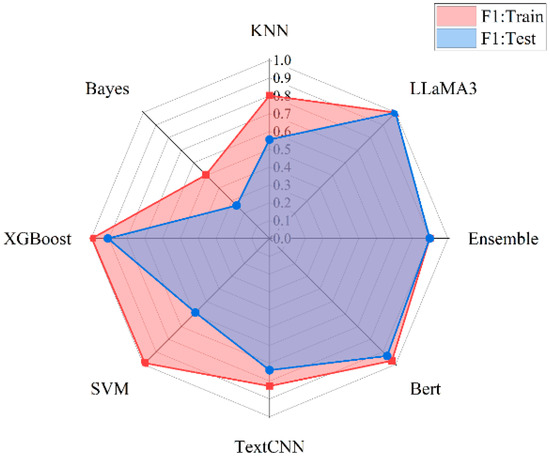

A radar chart was generated for the F1 scores of each model on the training and test sets, as shown in Figure 4.

Figure 4. Radar chart of F1-score comparison for each model.

The detailed resource consumption metrics are summarized in Table 6.

Table 6. Training resource consumption comparison.

4. Discussion

4.1. Analysis of BERTopic Model Application Effectiveness

This study improved the CTF-ICF metric for the specific scenario of mine safety hazard classification, demonstrating certain advantages over traditional methods such as LDA and BTM. The BERT model effectively captures semantic features in short texts and converts them into high-quality embeddings, minimizing semantic loss during data transformation and yielding more complete and coherent topic representations. Unlike traditional topic models, which rely on word-frequency statistics and co-occurrence patterns, BERT captures the latent meaning of texts through deep semantic embeddings. This phrase-based (rather than single-keyword-based) topic representation improves the effectiveness and accuracy of topic clustering. In addition, the CTF-ICF metric further improves topic mining precision, reducing the topic ambiguity and noise common in traditional methods. Through task-specific fine-tuning, BERT adapts well to the characteristics of mine hazard text data, significantly improving the accuracy of topic mining and the clarity of clustering results.

However, some challenges remain. Although preprocessing improved the quality of the input data, the HDBSCAN clustering algorithm assigns the label −1 to texts it treats as “noise”. These texts are often difficult to classify or lack sufficient semantic information, so the clustering results are incomplete. In addition, the BERT model may overfit when trained on large amounts of noisy data. Reducing the model’s over-reliance on noisy data while improving classification accuracy therefore remains a critical issue for future research.
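To make the noise issue concrete, the sketch below measures the share of records that HDBSCAN labels −1 and shows one possible mitigation that was not part of this study: reassigning each noise record to the topic whose centroid embedding is most cosine-similar. Recent BERTopic releases offer comparable post-processing through `reduce_outliers`; the function names and toy embeddings here are hypothetical. Forcing every record into a topic trades noise for possible misassignment, so reassigned records would still need review.

```python
import numpy as np


def noise_share(labels):
    """Fraction of records that HDBSCAN labelled -1 (noise)."""
    return float(np.mean(np.asarray(labels) == -1))


def reassign_noise(embeddings, labels):
    """Give each noise record the topic whose centroid is most cosine-similar.

    Assumes at least one non-noise topic exists. Non-noise labels are unchanged
    and the input label sequence is not modified.
    """
    X = np.asarray(embeddings, dtype=float)
    X = X / np.linalg.norm(X, axis=1, keepdims=True)        # unit-length rows
    labels = np.asarray(labels).copy()
    topic_ids = np.unique(labels[labels != -1])
    centroids = np.stack([X[labels == t].mean(axis=0) for t in topic_ids])
    centroids /= np.linalg.norm(centroids, axis=1, keepdims=True)
    noise = labels == -1
    if noise.any():
        sims = X[noise] @ centroids.T                        # cosine similarities
        labels[noise] = topic_ids[sims.argmax(axis=1)]
    return labels


# Hypothetical 2-D embeddings: two small topics plus one noise record.
emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.8, 0.2]]
lab = [0, 0, 1, 1, -1]
print(noise_share(lab), reassign_noise(emb, lab))
```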

4.2. Comparative Analysis of Text Classification Models

Although the ensemble learning model integrates XGBoost, SVM, KNN, and TextCNN, it does not outperform its strongest individual base models. A likely reason is that the weighted voting strategy does not adapt well to the differing strengths of the base models. For example, XGBoost performs excellently as a standalone model, yet the ensemble that includes it performs no better, and sometimes worse, than XGBoost alone. This indicates that the parameters and strategies of the ensemble require finer tuning to realize its potential. Meanwhile, the TextCNN model underperforms on all evaluation metrics, suggesting limited adaptability to short-text hazard descriptions.

The BERT-based classification model, although slightly inferior to XGBoost on the training set, significantly outperforms other models on the test set, demonstrating strong generalization capabilities and robustness. This further highlights BERT’s potential advantages in capturing semantic information and handling noisy data in short-text classification tasks.

Compared with similar research, this study is, to the authors’ knowledge, the first to apply decoder-based models such as LLaMA3 to mine safety hazard text classification. The LLaMA3 model shows strong semantic understanding and high accuracy on this task. During testing, the model did not require the original records to be split: when an input described several hazard types, the model returned all of the corresponding categories. However, the high computational demands of decoder models limited the scale of the experiments, and occasional cross-category mislabeling made the classification results unstable. Further research is therefore needed to improve the reliability and cost-effectiveness of such models in industrial applications.
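Because the model can return several categories for one record, its free-text answer has to be mapped back onto the fixed label set. The sketch below splits an answer on common Chinese and English separators and separates labels that belong to the predefined set from anything else. The category names are hypothetical placeholders, and this check only catches out-of-set outputs; a wrong label that is still a valid category cannot be detected this way.

```python
import re

# Hypothetical category names for illustration only; they are not the study's label set.
ALLOWED = {"electrical hazard", "ventilation hazard", "roof and wall hazard", "other hazard"}


def parse_categories(generated, allowed=ALLOWED):
    """Split a generated answer into labels and check them against the allowed set.

    Returns (valid, unknown): valid labels de-duplicated in order of appearance,
    and any fragments that are not in the allowed set.
    """
    parts = [p.strip() for p in re.split(r"[,;，；、\n]", generated) if p.strip()]
    valid, unknown = [], []
    for part in parts:
        (valid if part in allowed else unknown).append(part)
    return list(dict.fromkeys(valid)), unknown


print(parse_categories("electrical hazard、ventilation hazard, gas leak"))
```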

The computational resource requirements of classification algorithms are also a critical consideration in practical applications. In this study, traditional machine learning algorithms such as SVM and XGBoost have clear advantages in resource consumption, while PLMs like BERT and LLaMA3 require significantly higher hardware resources during training and inference, with LLaMA3 being particularly resource-intensive.
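A back-of-envelope calculation helps explain the gap. The sketch assumes memory is dominated by model state and ignores activations and framework overhead: holding weights in 16-bit precision takes 2 bytes per parameter, and full fine-tuning with mixed-precision Adam is commonly estimated at about 16 bytes per parameter (16-bit weights and gradients plus 32-bit master weights and two Adam moment buffers). The parameter counts are illustrative: about 110 million for a BERT-base-sized encoder and 8 billion for the smallest publicly released LLaMA3 model. The exact variants, precision, and fine-tuning method used in this study are not stated in this section, and parameter-efficient methods such as LoRA need far less than the full fine-tuning figure.

```python
GIB = 2 ** 30

BYTES_FP16_WEIGHTS = 2           # 16-bit weights only (inference)
BYTES_MIXED_PRECISION_ADAM = 16  # 2 (fp16 weights) + 2 (fp16 grads) + 4 (fp32 master) + 8 (two fp32 Adam moments)


def state_memory_gib(n_params, bytes_per_param):
    """Memory for model state alone, in GiB; activations are ignored."""
    return n_params * bytes_per_param / GIB


# Illustrative parameter counts, not the exact models used in the study.
models = {"BERT-base-sized encoder": 110e6, "LLaMA3-8B-sized decoder": 8e9}
for name, n in models.items():
    infer = state_memory_gib(n, BYTES_FP16_WEIGHTS)
    train = state_memory_gib(n, BYTES_MIXED_PRECISION_ADAM)
    print(f"{name}: ~{infer:.1f} GiB weights, ~{train:.1f} GiB full fine-tuning state")
```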

Although the data used in this study originate from a single metal mine, the model’s ability to generalize to other types of mines, such as coal mines and non-metallic mines, warrants discussion. Safety hazard investigation shares certain commonalities across mine types, especially in how hazards are described in text. In particular, the original data in this study comprise only the hazard description field, which is broadly similar across different types of mines, and the core links of hazard investigation are the same. However, classification performance on other mine types will still depend on text tokenization and model training.

Although this research focuses on Chinese, the proposed methodological framework should in principle be applicable to other languages. Tokenization, however, differs substantially between the two languages: English words are delimited by whitespace, whereas Chinese requires dedicated algorithms to detect word boundaries. Extending this methodology to other languages is therefore an important direction for future research, requiring analysis of each target language’s tokenization characteristics and corresponding adaptations to the preprocessing pipeline.
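The contrast can be illustrated with forward maximum matching, a classic dictionary-based Chinese segmentation method: at each position it takes the longest dictionary word that matches, falling back to a single character. The segmenter actually used in this study is not specified in this section, and the five-word dictionary and example sentence (roughly “loose rock on the roadway roof not cleared”) are hypothetical.

```python
def fmm_segment(text, vocab, max_len=None):
    """Forward maximum matching: greedily take the longest vocabulary word
    starting at the current position; unknown characters become 1-char tokens."""
    if max_len is None:
        max_len = max((len(w) for w in vocab), default=1)
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical mini-dictionary; real segmenters use large lexicons or statistical models.
VOCAB = {"巷道", "顶板", "浮石", "未", "清理"}

print("loose rock on roadway roof not cleared".split())  # English: whitespace is enough
print(fmm_segment("巷道顶板浮石未清理", VOCAB))              # Chinese: boundaries must be inferred
```

Greedy matching is fast but can choose wrong boundaries when dictionary words overlap, which is one reason preprocessing must be adapted per language.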

4.3. Limitations

Although this study achieves certain results in mine hazard topic mining and classification, there is still room for improvement in model optimization, data consistency, and resource efficiency.

First, data diversity and inconsistencies in processing significantly impact model performance. Semantic ambiguity and format variations in hazard descriptions may lead to error accumulation during topic mining and classification. For example, some records with ambiguous semantics or unclear classification standards are categorized as “other hazards”, reducing model bias in topic determination but inevitably introducing noise into subsequent classification tasks. Future research will focus on unifying data processing and feature extraction workflows to minimize error propagation. Additionally, handling noisy data and anomalous texts requires further optimization to enhance model robustness and result interpretability.
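As an example of what a unified preprocessing step could look like, the sketch below normalizes hazard records before topic mining and classification. Unicode NFKC folds full-width digits, Latin letters, punctuation, and the ideographic space into their standard forms, whitespace runs are collapsed, and exact duplicates are dropped after normalization. The function names and rules are hypothetical and do not describe the study’s actual preprocessing.

```python
import re
import unicodedata


def normalize_record(text):
    """Hypothetical normalisation for one hazard description."""
    text = unicodedata.normalize("NFKC", text)   # full-width forms -> standard forms
    text = re.sub(r"\s+", " ", text)             # collapse runs of whitespace
    return text.strip()


def normalize_and_dedupe(records):
    """Normalise every record, then drop empty strings and exact duplicates (order kept)."""
    seen, out = set(), []
    for record in map(normalize_record, records):
        if record and record not in seen:
            seen.add(record)
            out.append(record)
    return out


print(normalize_and_dedupe(["  ３号巷道　顶板浮石  ", "3号巷道 顶板浮石", ""]))
```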

Second, the ensemble learning model in this study does not fully leverage the strengths of its base models, indicating room for improvement in model fusion strategies. Future research will explore more efficient ensemble strategies, such as stacking or adaptive weight allocation, to further enhance classification performance. Meanwhile, the limitations of classic deep learning models like TextCNN in capturing short-text semantics suggest the need for network structures or feature learning mechanisms better suited to short-text scenarios.
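Stacking replaces fixed voting weights with a meta-learner trained on the base models’ out-of-fold predictions, so it can learn how far to trust each model. The sketch below uses scikit-learn’s `StackingClassifier` on synthetic data, because the hazard dataset is not available here. SVM, KNN, and Gaussian naive Bayes stand in for the base models; for TF-IDF text features `MultinomialNB` would be the usual naive Bayes choice, and `xgboost.XGBClassifier` can be added through its scikit-learn-compatible interface. All settings are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC


def build_stacking(random_state=0):
    """Stack three base classifiers under a logistic-regression meta-learner."""
    base = [
        ("svm", SVC(probability=True, random_state=random_state)),
        ("knn", KNeighborsClassifier(n_neighbors=5)),
        ("nb", GaussianNB()),
    ]
    # The meta-learner is fit on 5-fold out-of-fold class probabilities,
    # so it learns per-model trust instead of using fixed vote weights.
    return StackingClassifier(
        estimators=base,
        final_estimator=LogisticRegression(max_iter=1000),
        cv=5,
        stack_method="predict_proba",
    )


# Synthetic stand-in data: 300 samples, 3 classes.
X, y = make_classification(n_samples=300, n_features=20, n_informative=8,
                           n_classes=3, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3,
                                          random_state=0, stratify=y)
model = build_stacking().fit(X_tr, y_tr)
print(f"held-out accuracy: {model.score(X_te, y_te):.3f}")
```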

Finally, although decoder models demonstrate strong semantic understanding in classifying complex hazard descriptions, their high computational costs and occasional classification biases limit their large-scale industrial application. Future research will focus on optimizing resource requirements and improving classification stability to achieve a better balance between performance and cost. Additionally, task-specific fine-tuning methods and effective prompt design strategies will be explored to reduce cross-category mislabeling phenomena.
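One prompt-design pattern aimed at reducing cross-category mislabeling is to enumerate the allowed categories explicitly and optionally add a few labeled demonstrations. The template below is a hypothetical sketch written in English for readability; the study’s actual prompts, category names, and input language (Chinese) are not shown in this section.

```python
def build_prompt(description, categories, examples=()):
    """Assemble a classification prompt that restricts answers to `categories`.

    examples: optional (text, [labels]) pairs used as few-shot demonstrations.
    """
    lines = [
        "Classify the mine safety hazard description below.",
        "Allowed categories: " + "; ".join(categories),
        "List every category that applies, separated by semicolons. "
        "Use only the allowed categories, spelled exactly as given.",
    ]
    for text, labels in examples:
        lines.append(f"Description: {text}")
        lines.append("Categories: " + "; ".join(labels))
    lines.append(f"Description: {description}")
    lines.append("Categories:")
    return "\n".join(lines)


# Hypothetical categories and texts, for illustration only.
print(build_prompt(
    "Cable insulation damaged near the pump station",
    ["electrical hazard", "ventilation hazard", "other hazard"],
    examples=[("Fan stopped in return airway", ["ventilation hazard"])],
))
```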

5. Conclusions

This study addresses the practical need for automatic classification of mine safety hazard texts, systematically analyzing the performance and applicability of various classification models and proposing a text classification solution that integrates PLMs. The experimental results show that BERTopic-based topic mining demonstrates strong semantic understanding and topic extraction capability when handling semantically complex mine hazard descriptions, improving text mining efficiency and the interpretability of results. Unifying the data preprocessing and text vectorization workflows to reduce error accumulation caused by data inconsistencies is a key direction for improving model robustness in the future.

In the comparative analysis of classification models, this study examines the distinct characteristics and applicability of PLMs and of Non-PLMs such as SVM and KNN. The results indicate that the XGBoost model performs well in both efficiency and classification accuracy but does not yield synergistic gains within the ensemble, highlighting the limitations of the current weighted voting mechanism. In contrast, the BERT-based classification model outperforms the Non-PLMs on the test set, demonstrating its potential for short-text classification. With appropriate fine-tuning and prompt design, the LLaMA3 model exhibits excellent semantic understanding, but its high computational cost and occasional classification biases limit its feasibility for industrial applications.

Overall, this study provides robust technical support for text mining and classification of mine safety hazards and validates the application potential of PLMs in domain-specific tasks. Future research will focus on optimizing data consistency, improving model ensemble strategies, and exploring more cost-effective solutions tailored to industrial needs, thereby advancing the application of short-text classification technology in mine safety management and providing scientific and efficient solutions for hazard identification and dynamic management.

Author Contributions

Conceptualization, G.L.; Investigation, J.H.; Methodology, X.Q.; Resources, C.F.; Supervision, G.L.; Validation, X.Q. and J.H.; Writing—original draft, X.Q.; Writing—review and editing, G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Program of China (2023 Key Special Project, No. 2023YFC2907403) and the National Natural Science Foundation of China (Nos. 52404161 and 52074022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets used and/or analysed during the current study are available from the corresponding author upon reasonable request.

Acknowledgments

This research was supported by various open-source tools and frameworks. The implementation utilized the scikit-learn, XGBoost, and PyTorch libraries for machine learning and data processing. We acknowledge the use of the BERT-base-Chinese model for natural language processing tasks and the LLaMA3 model for advanced deep learning applications. Furthermore, the LLaMA Factory (Version 0.2.0) provided essential functionalities for fine-tuning and deploying the LLaMA3 model. We are grateful to the developers and contributors of these resources for making their work publicly available.

Conflicts of Interest

Author Chunchao Fan was employed by the company Shandong Gold Group Mining (Laizhou) Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Guo, Y.; Yang, F. Mining safety research in China: Understanding safety research trends and future demands for sustainable mining industry. Resour. Policy 2023, 83, 103632.

- Ibrahim, A.; Nnaji, C.; Namian, M.; Shakouri, M. Evaluating the Impact of Hazard Information on Fieldworkers’ Safety Risk Perception. J. Constr. Eng. Manag. 2024, 150, 04023174.

- Wang, J.; Guo, J. Risk pre-control mechanism of mines based on evidence-based safety management and safety big data. Environ. Sci. Pollut. Res. 2023, 30, 111165–111181.

- Zhu, Y.; Li, C.; Li, L.; Yang, K.; Yang, Y.; Zhang, G. Dynamic assessment and system dynamics simulation of safety risk in whole life cycle of coal mine. Environ. Sci. Pollut. Res. 2023, 30, 64154–64167.

- Zhang, J. Design and implementation of coal mine safety monitoring system based on GIS. Commun. Mob. Comput. 2022, 2022, 4771395.

- Huang, D.; Wang, X.; Chang, X.; Qiao, S.; Zhu, Y.; Xing, D. A safety assessment model of filling mining based on comprehensive weighting-set pair analysis. Environ. Sci. Pollut. Res. 2023, 30, 60746–60759.

- Imam, M.; Baïna, K.; Tabii, Y.; Adlaoui, Y.; Benzakour, I.; Bourzeix, F. Ensuring Miners’ Safety in Underground Mines through Edge Computing: Real-Time Pose Estimation and PPE Compliance Analysis. IEEE Access 2024, 12, 145721–145739.

- Patyk, M.; Nowak-Senderowska, D. Occupational risk assessment based on employees’ knowledge and awareness of hazards in mining. Int. J. Coal Sci. Technol. 2022, 9, 75.

- Kunodzia, R.; Bikitsha, L.S.; Haldenwang, R. Perceived factors affecting the implementation of occupational health and safety management systems in the South African construction industry. Safety 2024, 10, 5.

- Tingjiang, T.; Enyuan, W.; Ke, Z.; Changfang, G. Research on assisting coal mine hazard investigation for accident prevention through text mining and deep learning. Resour. Policy 2023, 85, 103802.

- Miao, D.; Lv, Y.; Yu, K.; Liu, L.; Jiang, J. Research on coal mine hidden danger analysis and risk early warning technology based on data mining in China. Process Saf. Environ. Prot. 2023, 171, 1–17.

- Yu, K.; Zhou, L.; Liu, P.; Chen, J.; Miao, D.; Wang, J. Research on a Risk Early Warning Mathematical Model Based on Data Mining in China’s Coal Mine Management. Mathematics 2022, 10, 4028.

- Sahoo, S.; Maiti, J.; Tewari, V.K. A framework to model contractors’ hazard and risk exposure at process plants using unsupervised text mining. Process Saf. Environ. Prot. 2024, 183, 24–45.

- Guo, D.M.; Li, G.Q.; Hu, N.L.; Hou, J. Big data analysis and visualization of potential hazardous risks of the mine based on text mining. Chin. J. Eng. 2022, 44, 328–338.

- Weisser, C.; Gerloff, C.; Thielmann, A.; Python, A.; Reuter, A.; Kneib, T.; Säfken, B. Pseudo-document simulation for comparing LDA, GSDMM and GPM topic models on short and sparse text using Twitter data. Comput. Stat. 2023, 38, 647–674.

- Chen, S.; Sun, M.; Chen, Y.; Nie, B.; Li, Z.; Liu, W. Causal analysis of construction safety accidents in hydropower projects based on unsupervised LDA. China Saf. Sci. J. 2023, 33, 79.

- Eker, H. Natural Language Processing Risk Assessment Application Developed for Marble Quarries. Appl. Sci. 2024, 14, 9045.

- Shahbazi, Z.; Byun, Y.-C. Topic Prediction and Knowledge Discovery Based on Integrated Topic Modeling and Deep Neural Networks Approaches. J. Intell. Fuzzy Syst. 2021, 41, 2441–2457.

- Qiu, Z.; Liu, Q.; Li, X.; Zhang, J.; Zhang, Y. Construction and analysis of a coal mine accident causation network based on text mining. Process Saf. Environ. Prot. 2021, 153, 320–328.

- Tian, D.; Li, M.; Han, S.; Shen, Y. A novel and intelligent safety-hazard classification method with syntactic and semantic features for large-scale construction projects. J. Constr. Eng. Manag. 2022, 148, 04022109.

- Xu, Y.; Gan, Z.; Guo, R.; Wang, X.; Shi, K.; Ma, P. Hazard Analysis for Massive Civil Aviation Safety Oversight Reports Using Text Classification and Topic Modeling. Aerospace 2024, 11, 837.

- Shang, L.; Yin, M.; Xiao, C.; Cheng, J. Research on text classification of railway safety incidents based on BLS. China Saf. Sci. J. 2022, 32, 103.

- Wang, R.; Zhang, Y.; Mao, S. Intelligent text classification and knowledge mining of hidden safety hazards in hydropower engineering construction. J. Hydroelectr. Eng. 2022, 41, 96–106.

- Li, T.; Sherong, Z.H.; Li, Z. Intelligent text classification method for water diversion project inspection based on character level CNN. J. Hydroelectr. Eng. 2021, 40, 89–98.

- Li, J.; Wu, C. Deep learning and text mining: Classifying and extracting key information from construction accident narratives. Appl. Sci. 2023, 13, 10599.

- Luo, X.; Li, X.; Song, X.; Liu, Q. Convolutional neural network algorithm–based novel automatic text classification framework for construction accident reports. J. Constr. Eng. Manag. 2023, 149, 04023128.

- Chi, N.W.; Lin, K.Y.; Hsieh, S.H. Using ontology-based text classification to assist Job Hazard Analysis. Adv. Eng. Inform. 2014, 28, 381–394.

- Gupta, A.K.; Pardheev, C.G.V.S.; Choudhuri, S.; Das, S.; Garg, A.; Maiti, J. A novel classification approach based on context connotative network (CCNet): A case of construction site accidents. Expert Syst. Appl. 2022, 202, 117281.

- Hu, X.; Wang, H.; Li, P. Online biterm topic model based short text stream classification using short text expansion and concept drifting detection. Pattern Recognit. Lett. 2018, 116, 187–194.

- Wang, L.; Hu, X.; Liu, X.; Liu, Y.; Li, H.; Liu, J.; Yang, L. Emergency entity relationship extraction for water diversion project based on pre-trained model and multi-featured graph convolutional network. PLoS ONE 2023, 18, e0292004.

- Rachamadugu, S.K.; Pushphavathi, T.P.; Khan, S.B.; Alojail, M. Exploring Topic Coherence With PCC-LDA and BERT for Contextual Word Generation. IEEE Access 2024, 12, 175252–175267.

- Zhou, H.; Tang, S.; Huang, W.; Zhao, X. Generating risk response measures for subway construction by fusion of knowledge and deep learning. Autom. Constr. 2023, 152, 104951.

- Wu, Z.; Wang, S.; Xu, H.; Shi, F.; Li, Q.; Li, L.; Qian, F. Research on ship safety risk early warning model integrating transfer learning and multi-modal learning. Appl. Ocean Res. 2024, 150, 104139.

- Wang, Q.; Xia, R.; Yu, J.; Liu, Q.; Tong, S.; Xu, Z. From Text to Safety: A Novel Framework for Mining Unsafe Aviation Events Using Advanced Neural Network and Feature Network. Aerospace 2024, 11, 843.

- Wang, Z.; Chen, J.; Chen, J.; Chen, H. Identifying interdisciplinary topics and their evolution based on BERTopic. Scientometrics 2023, 129, 7359–7384.

- Kowsari, K.; Jafari Meimandi, K.; Heidarysafa, M.; Mendu, S.; Barnes, L.; Brown, D. Text classification algorithms: A survey. Information 2019, 10, 150.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).