Artificial Intelligence as the Next Frontier in Cyber Defense: Opportunities and Risks

Abstract

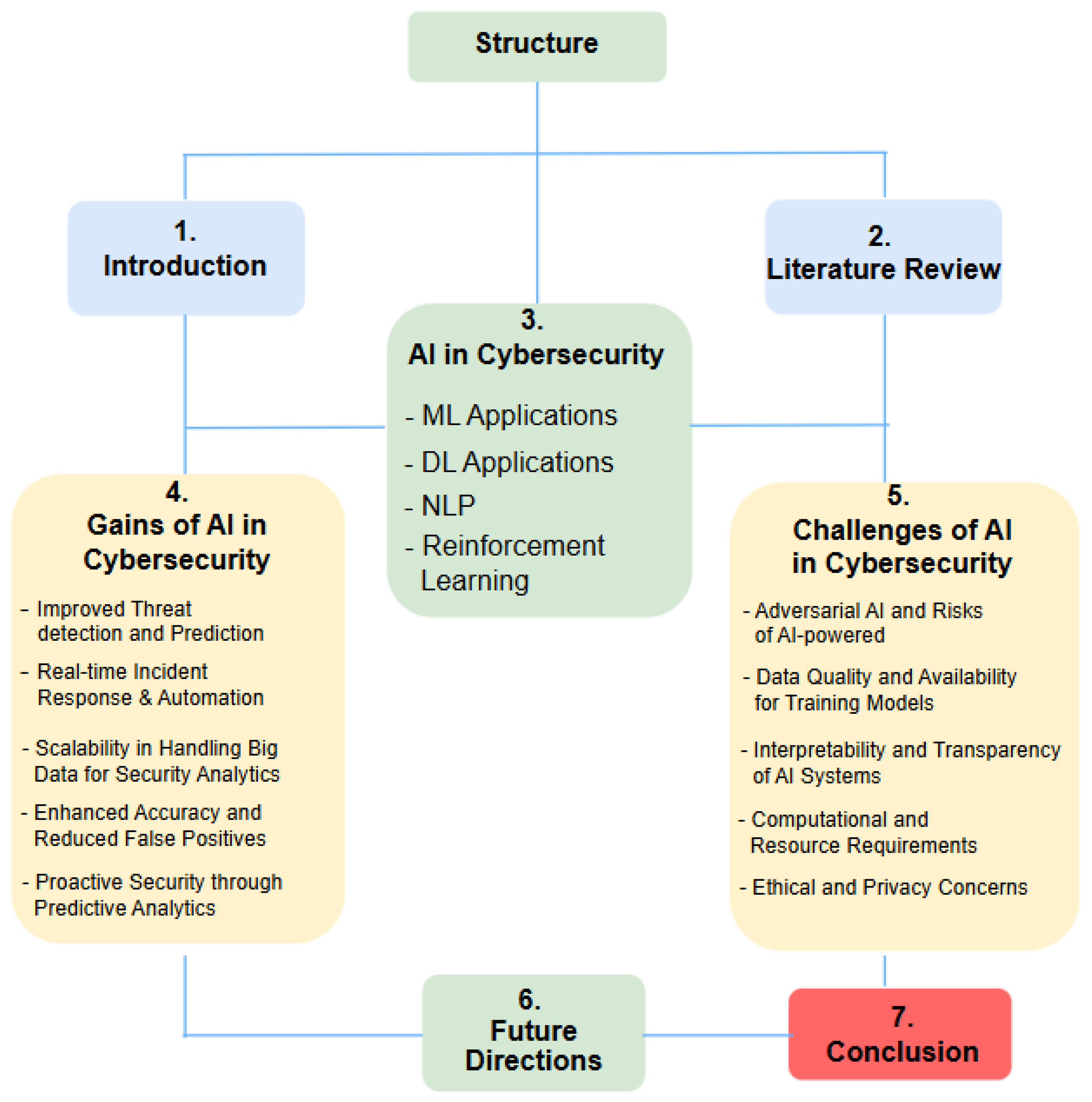

1. Introduction

- We present a unified, technically rigorous synthesis of the major AI paradigms (ML, DL, NLP, and RL), highlighting how each contributes to contemporary cybersecurity defense mechanisms.

- A structured mapping between AI model families and cybersecurity tasks is developed, clarifying how architectural characteristics, data modalities, and learning objectives align with functions such as intrusion detection, malware classification, phishing defense, anomaly detection, and autonomous cyber response.

- We offer a critical analysis of the practical strengths and limitations of AI-driven security systems, examining issues such as adversarial robustness, model interpretability, data scarcity, scalability, and computational overhead, thereby revealing the operational constraints that influence real-world adoption.

- Key research challenges and emerging directions are identified, including explainable and trustworthy AI, privacy-preserving learning models, multimodal threat intelligence fusion, and robust evaluation methodologies to guide future developments in AI-based cybersecurity.

- Strategic and governance considerations required for responsible deployment of AI in cybersecurity environments are articulated, emphasizing the importance of ethical design, regulatory alignment, and risk-aware system management.

2. Literature Review

3. Artificial Intelligence Applications in Cybersecurity

3.1. Machine Learning Applications in Cybersecurity

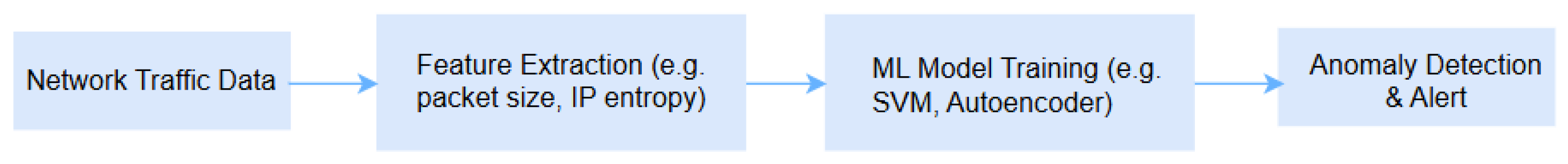

3.1.1. Anomaly Detection

3.1.2. Malware Classification

3.1.3. Phishing Detection

3.2. Deep Learning Applications in Cybersecurity

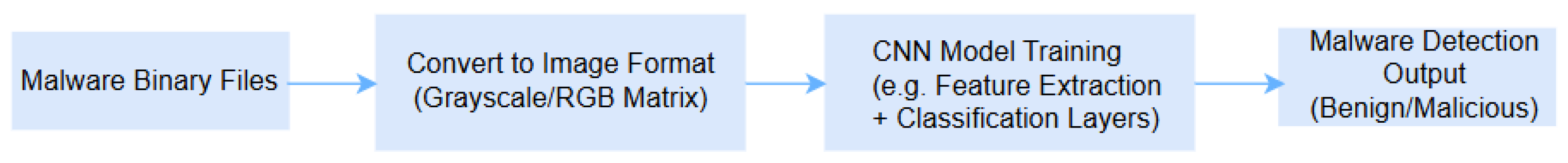

3.2.1. Image-Based Malware Detection

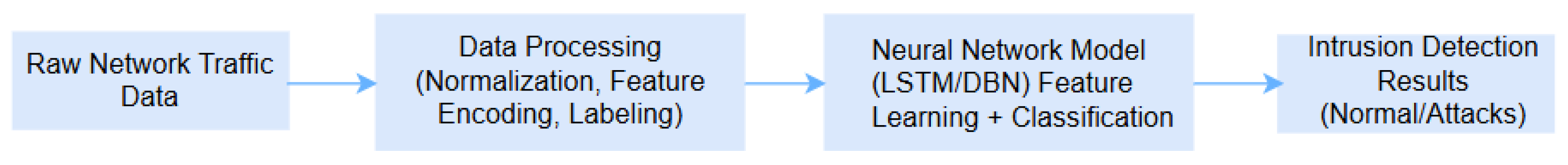

3.2.2. Intrusion Detection Using Neural Networks

3.3. Natural Language Processing Applications in Cybersecurity

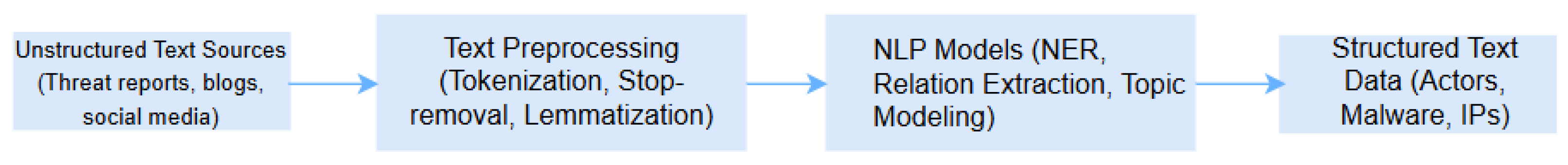

3.3.1. NLP for Threat Intelligence Extraction

3.3.2. Analyzing Unstructured Security Data

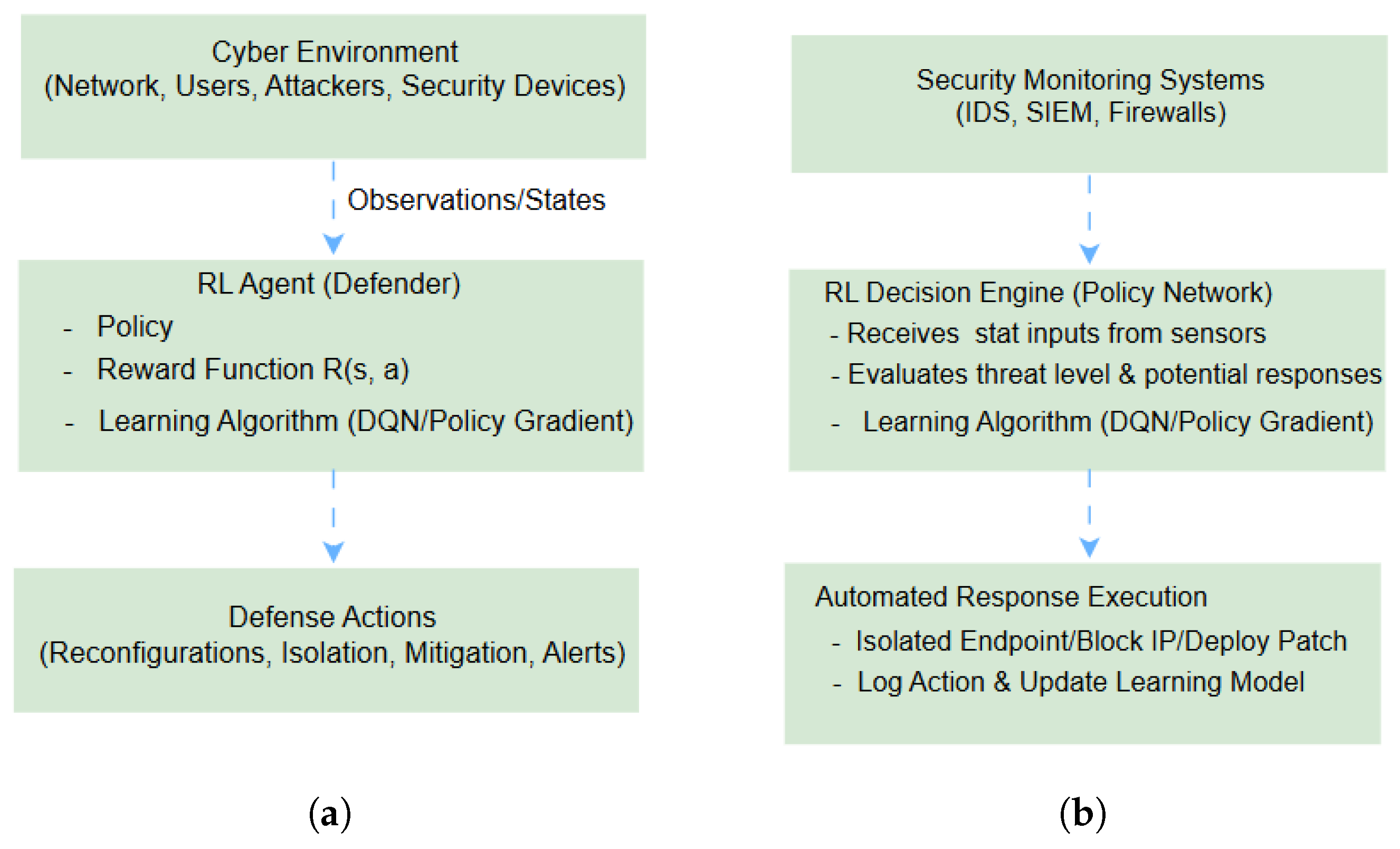

3.4. Reinforcement Learning Applications in Cybersecurity

3.4.1. Adaptive Defense Mechanisms

3.4.2. Automated Response Systems

4. Gains of AI for Cybersecurity

4.1. Improved Threat Detection and Prediction

4.1.1. Data-Driven Threat Detection

4.1.2. Predictive Threat Modeling

4.2. Real-Time Incident Response and Automation

4.2.1. Intelligent Automation of Incident Response

4.2.2. Reinforcement Learning for Adaptive Responses

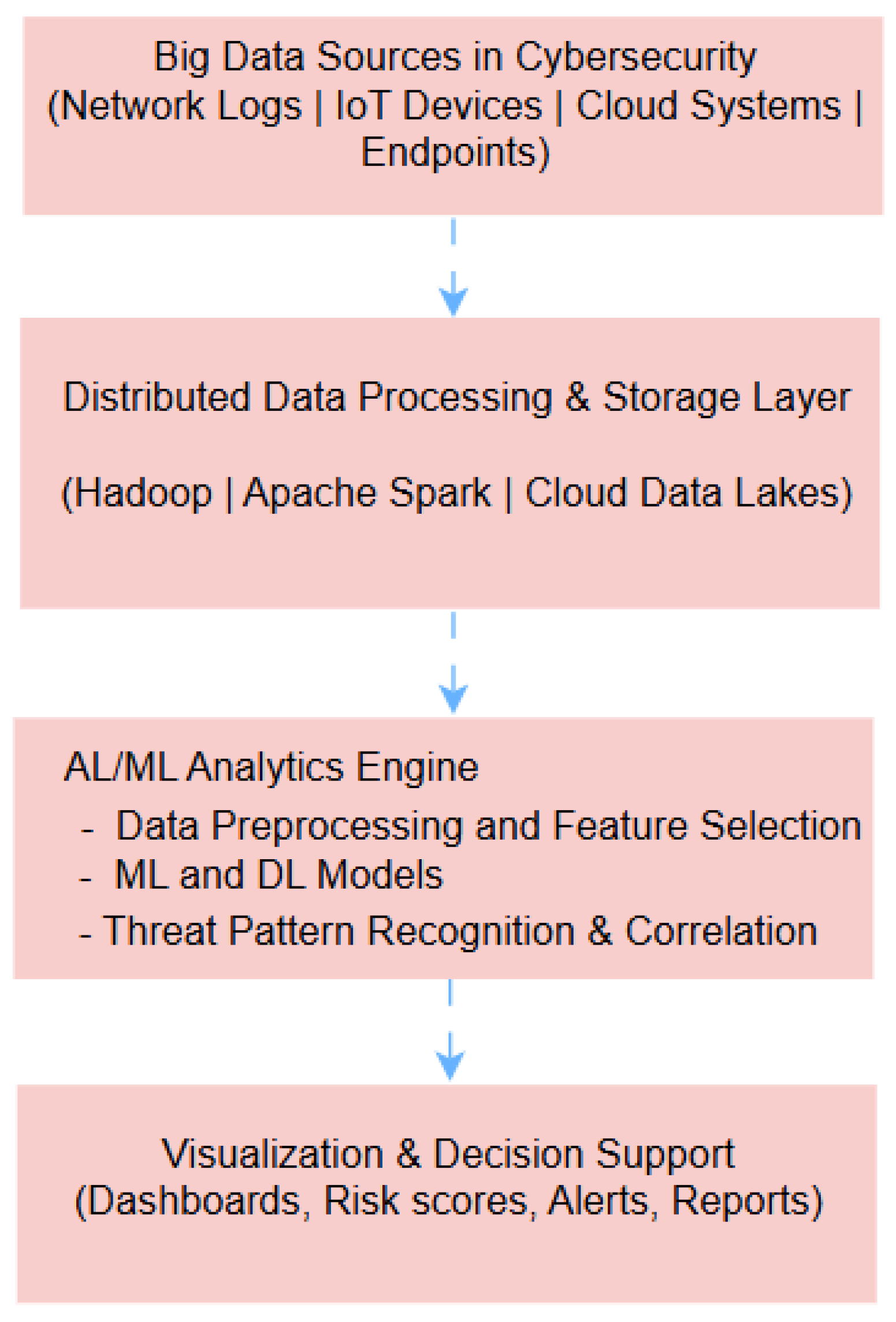

4.3. Scalability in Handling Big Data for Security Analytics

4.3.1. AI and Big Data Convergence in Cybersecurity

4.3.2. Real-Time and Distributed Security Analytics

4.4. Enhanced Accuracy and Reduced False Positives

4.4.1. AI-Powered Precision in Detection

4.4.2. Contextual Correlation and Adaptive Learning

4.4.3. Quantitative Gains and Practical Impact

4.5. Proactive Security Through Predictive Analytics

4.5.1. Predictive Threat Modeling and Risk Forecasting

4.5.2. Early Threat Detection and Incident Prevention

4.5.3. Predictive Maintenance and Vulnerability Management

4.5.4. Strategic and Operational Benefits

5. Challenges, Limitations, and Future Directions

5.1. Challenges and Limitations

5.1.1. Adversarial AI and Risks of AI-Powered Attacks

5.1.2. Data Quality and Availability of Training Models

5.1.3. Interpretability and Transparency of AI Systems

5.1.4. Computational and Resource Requirements

5.1.5. Ethical and Privacy Concerns

6. Future Directions

6.1. Explainable AI (XAI) for Trustworthy Cybersecurity

6.2. Integration of AI with Blockchain and IoT Security

6.3. Autonomous Security Systems for Critical Infrastructures

6.4. Policy and Governance Frameworks for AI in Cybersecurity

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| APTs | Advanced Persistent Threats |
| CNNs | Convolutional Neural Networks |
| CTI | Cyber Threat Intelligence |
| DBN | Deep Belief Network |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| DQN | Deep Q-Network |
| EDR | Endpoint Detection and Response |
| IDS | Intrusion Detection System |
| IoT | Internet of Things |
| IoC | Indicators of Compromise |
| IPS | Intrusion Prevention System |
| kNNs | k-Nearest Neighbors |
| LDA | Latent Dirichlet Allocation |
| LSTM | Long Short-Term Memory |
| MARL | Multi-Agent Reinforcement Learning |
| ML | Machine Learning |
| NER | Named Entity Recognition |
| NLP | Natural Language Processing |
| PKI | Public Key Infrastructure |
| RNN | Recurrent Neural Network |
| RL | Reinforcement Learning |
| SDNs | Software-Defined Networks |
| SIEM | Security Information and Event Management |
| SOAR | Security Orchestration, Automation and Response |
| SOC | Security Operations Center |
| SVM | Support Vector Machine |
| TTP | Tactics, Techniques, and Procedures |
| UEBA | User and Entity Behavior Analytics |
| XAI | Explainable Artificial Intelligence |

References

1. Mohamed, N. Current Trends in AI and ML for Cybersecurity: A State-of-the-Art Survey. Cogent Eng. 2023, 10, 2272358.

2. Apruzzese, G.; Laskov, P.; Montes de Oca, E.; Mallouli, W.; Brdalo Rapa, L.; Grammatopoulos, A.V.; Di Franco, F. The Role of Machine Learning in Cybersecurity. Digit. Threat. 2023, 4, 8.

3. Yu, J.; Shvetsov, A.V.; Hamood Alsamhi, S. Leveraging Machine Learning for Cybersecurity Resilience in Industry 4.0: Challenges and Future Directions. IEEE Access 2024, 12, 159579–159596.

4. Miranda-García, A.; Rego, A.Z.; Pastor-López, I.; Sanz, B.; Tellaeche, A.; Gaviria, J.; Bringas, P.G. Deep Learning Applications on Cybersecurity: A Practical Approach. Neurocomputing 2024, 563, 126904.

5. Macas, M.; Wu, C.; Fuertes, W. Adversarial Examples: A Survey of Attacks and Defenses in Deep Learning-Enabled Cybersecurity Systems. Expert Syst. Appl. 2024, 238, 122223.

6. Fard, N.E.; Selmic, R.R.; Khorasani, K. A Review of Techniques and Policies on Cybersecurity Using Artificial Intelligence and Reinforcement Learning Algorithms. IEEE Technol. Soc. Mag. 2023, 42, 57–68.

7. Oh, S.H.; Kim, J.; Nah, J.H.; Park, J. Employing Deep Reinforcement Learning to Cyber-Attack Simulation for Enhancing Cybersecurity. Electronics 2024, 13, 555.

8. Alawida, M.; Mejri, S.; Mehmood, A.; Chikhaoui, B.; Isaac Abiodun, O. A Comprehensive Study of ChatGPT: Advancements, Limitations, and Ethical Considerations in Natural Language Processing and Cybersecurity. Information 2023, 14, 462.

9. Soori, M.; Dastres, R.; Arezoo, B.; Jough, F.K.G. Intelligent Robotic Systems in Industry 4.0: A Review. J. Adv. Manuf. Sci. Technol. 2024, 4, 2024007.

10. Kolosnjaji, B.; Xiao, H.; Xu, P.; Zarras, A. Artificial Intelligence for Cybersecurity: Develop AI Approaches to Solve Cybersecurity Problems in Your Organization; Packt Publishing Ltd.: Birmingham, UK, 2024; p. 356.

11. Jafarizadeh, S.; Bour, H.; Soldani, D. AI-Driven Authentication Anomaly Detection for Modern Telco and Enterprise Networks. In Proceedings of the 2025 IEEE 50th Conference on Local Computer Networks (LCN), Sydney, Australia, 14–16 October 2025; pp. 1–6.

12. Putra, H.; Mulyono, B.E.; Winarna, A.; Prakoso, L.Y. The Role of Artificial Intelligence (AI) in Addressing Cyber Threats. Indones. J. Interdiscip. Res. Sci. Technol. 2025, 3, 193–200.

13. Yadav, I.; Shekhawat, V.; Gautam, K.; Soni, G.K.; Yadav, R. Artificial Intelligence for Cybersecurity: Emerging Techniques, Challenges, and Future Trends. In Proceedings of the 2025 3rd International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), Erode, India, 6–8 August 2025; pp. 1176–1180.

14. Chen, G.; Yuan, Q. Application and Existing Problems of Computer Network Technology in the Field of Artificial Intelligence. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Computer Engineering (ICAICE), Hangzhou, China, 5–7 November 2021; pp. 139–142.

15. Nikolskaia, K.Y.; Naumov, V.B. The Relationship between Cybersecurity and Artificial Intelligence. In Proceedings of the 2021 International Conference on Quality Management, Transport and Information Security, Information Technologies (IT&QM&IS), Yaroslavl, Russia, 6–10 September 2021; pp. 94–97.

16. Admass, W.S.; Munaye, Y.Y.; Diro, A.A. Cyber Security: State of the Art, Challenges and Future Directions. Cyber Secur. Appl. 2024, 2, 100031.

17. Glerean, E. Fundamentals of Secure AI Systems with Personal Data. 2025. Available online: https://elearning-2024.it.auth.gr/pluginfile.php/540242/mod_label/intro/spe-training-on-ai-and-data-protection-technical_en.pdf (accessed on 23 October 2025).

18. Karn, A.L.; Ghanimi, H.M.; Iyengar, V.; Siddiqui, M.S.; Alharbi, M.G.; Alroobaea, R.; Yousef, A.; Sengan, S. Applying the Defense Model to Strengthen Information Security with Artificial Intelligence in Computer Networks of the Financial Services Sector. Sci. Rep. 2025, 15, 30292.

19. Nabi, F.; Zhou, X. Enhancing Intrusion Detection Systems through Dimensionality Reduction: A Comparative Study of Machine Learning Techniques for Cyber Security. Cyber Secur. Appl. 2024, 2, 100033.

20. Shevchuk, R.; Martsenyuk, V. Neural Networks toward Cybersecurity: Domain Map Analysis of State-of-the-Art Challenges. IEEE Access 2024, 12, 81265–81280.

21. Mohamed, N. Artificial Intelligence and Machine Learning in Cybersecurity: A Deep Dive into State-of-the-Art Techniques and Future Paradigms. Knowl. Inf. Syst. 2025, 67, 6969–7055.

22. Stamp, M.; Jureček, M. Machine Learning, Deep Learning and AI for Cybersecurity; Springer Nature: Cham, Switzerland, 2025.

23. Ali, R.; Ali, A.; Iqbal, F.; Hussain, M.; Ullah, F. Deep Learning Methods for Malware and Intrusion Detection: A Systematic Literature Review. Secur. Commun. Netw. 2022, 2959222.

24. Bennetot, A.; Donadello, I.; El Qadi El Haouari, A.; Dragoni, M.; Frossard, T.; Wagner, B.; Sarranti, A.; Tulli, S.; Trocan, M.; Chatila, R.; et al. A practical tutorial on explainable AI techniques. ACM Comput. Surv. 2024, 57, 1–44.

25. Dhinakaran, D.; Sankar, S.M.; Selvaraj, D.; Raja, S.E. Privacy-preserving data in IoT-based cloud systems: A comprehensive survey with AI integration. arXiv 2024, arXiv:2401.00794.

26. Pineda, V.G.; Valencia-Arias, A.; Giraldo, F.E.L.; Zapata-Ochoa, E.A. Integrating artificial intelligence and quantum computing: A systematic literature review of features and applications. Int. J. Cogn. Comput. Eng. 2025, 7, 26–39.

27. Menon, U.V.; Kumaravelu, V.B.; Kumar, C.V.; Rammohan, A.; Chinnadurai, S.; Venkatesan, R.; Hai, H.; Selvaprabhu, P. AI-powered IoT: A survey on integrating artificial intelligence with IoT for enhanced security, efficiency, and smart applications. IEEE Access 2025, 13, 50296–50339.

28. Protic, D.; Gaur, L.; Stankovic, M.; Rahman, M.A. Cybersecurity in Smart Cities: Detection of Opposing Decisions on Anomalies in the Computer Network Behaviour. Electronics 2022, 11, 3718.

29. Nelson, T.; O’Brien, A.; Noteboom, C. Machine Learning Applications in Malware Classification: A Meta-Analysis Literature Review. Int. J. Cybern. Inform. 2023, 12, 113–124.

30. Alhasan, A.E. Enhancing Real-Time Phishing Detection with AI: A Comparative Study of Transformer Models and Convolutional Neural Networks. 2025. Available online: https://www.diva-portal.org/smash/record.jsf?pid=diva2:1983139 (accessed on 23 October 2025).

31. Catal, C.; Giray, G.; Tekinerdogan, B.; Kumar, S.; Shukla, S. Applications of Deep Learning for Phishing Detection: A Systematic Literature Review. Knowl. Inf. Syst. 2022, 64, 1457–1500.

32. Chaudhari, P.D.; Gudadhe, A. Deep Learning Approach and Its Application in the Cybersecurity Domain. In Proceedings of the 2025 International Conference on Machine Learning and Autonomous Systems (ICMLAS), Prawet, Thailand, 10–12 March 2025; pp. 520–524.

33. Nguyen, T.T.; Reddi, V.J. Deep Reinforcement Learning for Cyber Security. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 3779–3795.

34. Son, T.T.; Lee, C.; Le-Minh, H.; Aslam, N.; Raza, M.; Long, N.Q. An Evaluation of Image-Based Malware Classification Using Machine Learning. In Advances in Computational Collective Intelligence; Communications in Computer and Information Science; Hernes, M., Wojtkiewicz, K., Szczerbicki, E., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 1287, pp. 125–138.

35. Ioannou, L.; Fahmy, S.A. Network Intrusion Detection Using Neural Networks on FPGA SoCs. In Proceedings of the 2019 29th International Conference on Field Programmable Logic and Applications (FPL), Barcelona, Spain, 9–13 September 2019; pp. 232–238.

36. Vasques, X. Machine Learning Theory and Applications: Hands-on Use Cases with Python on Classical and Quantum Machines; John Wiley & Sons: Hoboken, NJ, USA, 2024.

37. Hasanov, I.; Virtanen, S.; Hakkala, A.; Isoaho, J. Application of Large Language Models in Cybersecurity: A Systematic Literature Review. IEEE Access 2024, 12, 176751–176778.

38. Arazzi, M.; Arikkat, D.R.; Nicolazzo, S.; Nocera, A.; KA, R.R.; Conti, M. NLP-Based Techniques for Cyber Threat Intelligence. Comput. Sci. Rev. 2025, 58, 100765.

39. Hanks, C.; Maiden, M.; Ranade, P.; Finin, T.; Joshi, A. Recognizing and Extracting Cybersecurity Entities from Text. In Proceedings of the Workshop on Machine Learning for Cybersecurity, International Conference on Machine Learning, Guangzhou, China, 2–4 December; pp. 1–7.

40. Koschmider, A.; Aleknonytė-Resch, M.; Fonger, F.; Imenkamp, C.; Lepsien, A.; Apaydin, K.; Harms, M.; Janssen, D.; Langhammer, D.; Ziolkowski, T.; et al. Process Mining for Unstructured Data: Challenges and Research Directions. In Modellierung 2024; Gesellschaft für Informatik e.V.: Bonn, Germany, 2024; pp. 1–18.

41. Chhetri, B.; Namin, A.S. The Application of Transformer-Based Models for Predicting Consequences of Cyber Attacks. In Proceedings of the 2025 IEEE 49th Annual Computers, Software, and Applications Conference (COMPSAC), Toronto, ON, Canada, 8–11 July 2025; pp. 523–532.

42. Zade, N.; Mate, G.; Kishor, K.; Rane, N.; Jete, M. NLP Based Automated Text Summarization and Translation: A Comprehensive Analysis. In Proceedings of the 2024 2nd International Conference on Sustainable Computing and Smart Systems (ICSCSS), Coimbatore, India, 10–12 July 2024; pp. 528–531.

43. Scheponik, T.; Sherman, A.T. LDA Topic Analysis of a Cybersecurity Textbook. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020), Online, 16–20 November 2020; Available online: https://par.nsf.gov/biblio/10160115 (accessed on 26 March 2025).

44. Cengiz, E.; Gök, M. Reinforcement Learning Applications in Cyber Security: A Review. Sak. Univ. J. Sci. 2023, 27, 481–503.

45. Afolalu, O.; Tsoeu, M.S. Enterprise Networking Optimization: A Review of Challenges, Solutions, and Technological Interventions. Future Internet 2025, 17, 133.

46. Hammad, A.A.; Jasim, F.T. Adaptive Cyber Defense Using Advanced Deep Reinforcement Learning Algorithms: A Real-Time Comparative Analysis. J. Comput. Theor. Appl. 2025, 2, 523–535.

47. Jaber, A. Transforming Cybersecurity Dynamics: Enhanced Self-Play Reinforcement Learning in Intrusion Detection and Prevention System. In Proceedings of the 2024 IEEE International Systems Conference (SysCon), Montreal, QC, Canada, 15–18 April 2024; pp. 1–8.

48. Afshar, R.R.; Zhang, Y.; Vanschoren, J.; Kaymak, U. Automated Reinforcement Learning: An Overview. arXiv 2022, arXiv:2201.05000.

49. Zhou, Z.; Liu, G.; Tang, Y. Multi-Agent Reinforcement Learning: Methods, Applications, Visionary Prospects, and Challenges. IEEE Trans. Intell. Veh. 2024, 9, 8190–8211.

50. Diana, L.; Dini, P.; Paolini, D. Overview on intrusion detection systems for computers networking security. Computers 2025, 14, 87.

51. Ajeesh, A.; Mathew, T. Enhancing Network Security: A Comparative Analysis of Deep Learning and Machine Learning Models for Intrusion Detection. In Proceedings of the 2024 International Conference on E-mobility, Power Control and Smart Systems (ICEMPS), Thiruvananthapuram, India, 8–20 April 2024; pp. 1–6.

52. Danish, M. Enhancing Cyber Security Through Predictive Analytics: Real-Time Threat Detection and Response. Int. J. Adv. Comput. Sci. Appl. 2025, 16, 38–49.

53. Kinyua, J.; Awuah, L. AI/ML in Security Orchestration, Automation and Response: Future Research Directions. Intell. Autom. Soft Comput. 2021, 28, 527–545.

54. Maleh, Y.; Shojafar, M.; Alazab, M.; Baddi, Y. Machine Intelligence and Big Data Analytics for Cybersecurity Applications; Studies in Computational Intelligence; Springer International Publishing: Cham, Switzerland, 2021; Volume 919.

55. Seabra, A.; Lifschitz, S. Advancing Polyglot Big Data Processing Using the Hadoop Ecosystem. arXiv 2025, arXiv:2504.14322.

56. Wang, J.; Hu, M.; Li, N.; Al-Ali, A.; Suganthan, P.N. Incremental Online Learning of Randomized Neural Network with Forward Regularization. arXiv 2024, arXiv:2412.13096.

57. Yurdem, B.; Kuzlu, M.; Gullu, M.K.; Catak, F.O.; Tabassum, M. Federated Learning: Overview, Strategies, Applications, Tools and Future Directions. Heliyon 2024, 10, e38137.

58. Berkani, M.R.A.; Chouchane, A.; Himeur, Y.; Ouamane, A.; Miniaoui, S.; Atalla, S.; Mansoor, W.; Al-Ahmad, H. Advances in Federated Learning: Applications and Challenges in Smart Building Environments and Beyond. Computers 2025, 14, 124.

59. Mehmood, M.K.; Arshad, H.; Alawida, M.; Mehmood, A. Enhancing Smishing Detection: A Deep Learning Approach for Improved Accuracy and Reduced False Positives. IEEE Access 2024, 12, 137176–137193.

60. Gonsalves, C. Contextual Assessment Design in the Age of Generative AI. J. Learn. Dev. High. Educ. 2025, 34, 1–14.

61. Tendikov, N.; Rzayeva, L.; Saoud, B.; Shayea, I.; Azmi, M.H.; Myrzatay, A.; Alnakhli, M. Security Information Event Management Data Acquisition and Analysis Methods with Machine Learning Principles. Results Eng. 2024, 22, 102254.

62. Osman, M.; He, J.; Zhu, N.; Mokbal, F.M.M. An Ensemble Learning Framework for the Detection of RPL Attacks in IoT Networks Based on the Genetic Feature Selection Approach. Ad Hoc Netw. 2024, 152, 103331.

63. Verma, A.; Ranga, V. Evaluation of Network Intrusion Detection Systems for RPL Based 6LoWPAN Networks in IoT. Wirel. Pers. Commun. 2019, 108, 1571–1594.

64. Sharma, M.; Elmiligi, H.; Gebali, F.; Verma, A. Simulating Attacks for RPL and Generating Multiclass Dataset for Supervised Machine Learning. In Proceedings of the 2019 IEEE 10th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 17–19 October 2019; pp. 20–26.

65. Johora, F.T.; Khan, M.S.I.; Kanon, E.; Rony, M.A.T.; Zubair, M.; Sarker, I.H. A Data-Driven Predictive Analysis on Cyber Security Threats with Key Risk Factors. arXiv 2024, arXiv:2404.00068.

66. Landauer, M.; Skopik, F.; Stojanović, B.; Flatscher, A.; Ullrich, T. A Review of Time-Series Analysis for Cyber Security Analytics: From Intrusion Detection to Attack Prediction. Int. J. Inf. Secur. 2025, 24, 3.

67. Dass, S.; Datta, P.; Namin, A.S. Attack Prediction Using Hidden Markov Model. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 12–16 July 2021; pp. 1695–1702.

68. Khan, M.Z.A.; Khan, M.M.; Arshad, J. Anomaly Detection and Enterprise Security Using User and Entity Behavior Analytics (UEBA). In Proceedings of the 2022 3rd International Conference on Innovations in Computer Science & Software Engineering (ICONICS), Karachi, Pakistan, 14–15 December 2022; pp. 1–9.

69. Dwivedi, R.; Dave, D.; Naik, H.; Singhal, S.; Omer, R.; Patel, P.; Qian, B.; Wen, Z.; Shah, T.; Morgan, G.; et al. Explainable AI (XAI): Core Ideas, Techniques, and Solutions. ACM Comput. Surv. 2023, 55, 1–33.

70. Tian, Z.; Cui, L.; Liang, J.; Yu, S. A Comprehensive Survey on Poisoning Attacks and Countermeasures in Machine Learning. ACM Comput. Surv. 2023, 55, 1–35.

71. Oprea, A.; Singhal, A.; Vassilev, A. Poisoning Attacks against Machine Learning: Can Machine Learning Be Trustworthy? Computer 2022, 55, 94–99.

72. Aljanabi, M.; Omran, A.H.; Mijwil, M.M.; Abotaleb, M.; El-kenawy, E.-S.M.; Mohammed, S.Y.; Ibrahim, A. Data Poisoning: Issues, Challenges, and Needs. In Proceedings of the 7th IET Smart Cities Symposium (SCS 2023), Online, 3–5 December 2023; pp. 359–363.

73. Zhou, Z.; Zhu, J.; Yu, F.; Li, X.; Peng, X.; Liu, T.; Han, B. Model Inversion Attacks: A Survey of Approaches and Countermeasures. arXiv 2024, arXiv:2411.10023.

74. Brundage, M.; Avin, S.; Clark, J.; Toner, H.; Eckersley, P.; Garfinkel, B.; Dafoe, A.; Scharre, P.; Zeitzoff, T.; Filar, B.; et al. The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation. arXiv 2024, arXiv:1802.07228.

75. Kouper, I.; Stone, S. Data Sharing and Use in Cybersecurity Research. CODATA Sci. J. 2024, 23, 1–19.

76. Mittelstadt, B. Interpretability and Transparency in Artificial Intelligence. In The Oxford Handbook of Digital Ethics; Oxford University Press: Oxford, UK, 2021; pp. 378–410.

77. Lasmar Almada, M.A. Law & Compliance in AI Security & Data Protection; AI and Data Protection Training Module; European Data Protection Supervisor: Brussels, Belgium, 2024; pp. 1–217.

78. Huang, Y.; Arora, C.; Houng, W.C.; Kanij, T.; Madulgalla, A.; Grundy, J. Ethical concerns of generative AI and mitigation strategies: A systematic mapping study. arXiv 2025, arXiv:2502.00015.

79. Kuznetsov, O.; Sernani, P.; Romeo, L.; Frontoni, E.; Mancini, A. On the Integration of Artificial Intelligence and Blockchain Technology: A Perspective about Security. IEEE Access 2024, 12, 3881–3897.

80. Ruzbahani, A.M. AI-Protected Blockchain-Based IoT Environments: Harnessing the Future of Network Security and Privacy. arXiv 2024, arXiv:2405.13847.

81. Paulraj, J.; Raghuraman, B.; Gopalakrishnan, N.; Otoum, Y. Autonomous AI-Based Cybersecurity Framework for Critical Infrastructure: Real-Time Threat Mitigation. arXiv 2025, arXiv:2507.07416.

82. Kumar, S.; Upadhyay, P.; Ramsamy, G. Strengthening AI Governance: International Policy Frameworks, Security Challenges, and Ethical AI Deployment. ITU J. Future Evol. Technol. 2025, 6, 275–285.

| AI Applications | Implementation | Common Techniques | Input Features | Key Outcome | Benefits | References |
|---|---|---|---|---|---|---|
| Machine Learning | Anomaly Detection | SVM, kNN, Autoencoder, Isolation Forest | Network traffic logs, user activity patterns | Detects abnormal network behavior | Identifies zero-day and insider threats | [28] |
| | Malware Classification | Decision Tree, Random Forest, DNN | API calls, file structure, opcode sequences | Classifies benign vs. malicious files | Detects polymorphic and unknown malware | [29] |
| | Phishing Detection | Logistic Regression, Naive Bayes, NLP models | URL structure, email text, HTML features | Detects fraudulent emails and websites | Real-time and adaptive filtering | [30,31] |
| Deep Learning | Image-Based Malware Detection | CNN, Autoencoder | Malware binaries (converted to images) | Classifies malware families or variants | Handles obfuscated malware; no manual feature design | [34] |
| | Intrusion Detection Systems | RNN, LSTM, DBN, DNN | Network traffic, flow records | Identifies normal vs. attack traffic | Learns temporal patterns; adapts to dynamic threats | [32,33,35] |
| Natural Language Processing | Threat Intelligence Extraction | NER, Dependency Parsing, Relation Extraction | Threat feeds, reports, blogs, dark web discussions | Extracted entities (IPs, malware, CVEs, actors) | Automates CTI generation; improves response time | [36,38,39] |
| | Analysis of Unstructured Data | Topic Modeling (LDA), Transformer Models (BERT, GPT) | Logs, reports, social media, forum text | Classified threats, topic clusters, sentiment analysis | Processes large text volumes; identifies emerging threats | [37,40,41,42,43] |
| Reinforcement Learning | Adaptive Defense Mechanisms | Q-Learning, DQN, Policy Gradient | Network state features (traffic alerts) | Dynamic adjustment of defense policies and configurations | Learns optimal policies; adapts to evolving attacks | [44,46,47] |
| | Automated Response Systems | Actor-Critic, Multi-Agent RL, SARSA | Incident data, threat alerts | Autonomous mitigation actions (blocking, isolation) | Reduces response time; minimizes human intervention | [48,49] |

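The anomaly-detection row of the summary table above lists kNN among the common techniques. As a minimal, standard-library sketch (not the method of the cited work), a query vector can be scored by its mean Euclidean distance to its k nearest neighbors in a baseline of traffic assumed to be benign, so that vectors far from every baseline point receive high scores. The function names, the two-dimensional feature vectors, `k`, and the 0.3 threshold are all hypothetical; in practice the features would be normalized and the threshold chosen from the score distribution on held-out benign data.

```python
import math
from typing import List, Sequence

def knn_anomaly_scores(baseline: Sequence[Sequence[float]],
                       queries: Sequence[Sequence[float]],
                       k: int = 3) -> List[float]:
    """Mean distance from each query to its k nearest baseline (assumed benign) points."""
    if not 1 <= k <= len(baseline):
        raise ValueError("k must satisfy 1 <= k <= len(baseline)")
    scores = []
    for q in queries:
        # Brute-force distances are enough for a sketch; large deployments
        # would use a spatial index instead of sorting every distance.
        dists = sorted(math.dist(q, b) for b in baseline)
        scores.append(sum(dists[:k]) / k)
    return scores

def flag_anomalies(scores: Sequence[float], threshold: float) -> List[bool]:
    """Flag scores above a threshold chosen on held-out benign data."""
    return [s > threshold for s in scores]

# Hypothetical, already-normalized features, e.g. (connection rate, outbound volume).
baseline = [(0.10, 0.20), (0.20, 0.10), (0.15, 0.15), (0.12, 0.18)]
queries = [(0.14, 0.16), (0.90, 0.95)]
scores = knn_anomaly_scores(baseline, queries, k=2)
flags = flag_anomalies(scores, threshold=0.3)
print(flags)  # [False, True]
```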
| Feature | Traditional Techniques | AI-Based Techniques | Key Advantages |
|---|---|---|---|
| Detection Approach | Signature/Rule-based | Behavioral/Learning-based | Detects unknown and polymorphic threats |
| Data Handling | Static, predefined | Dynamic, real-time analysis | Scales with big data and IoT networks |
| Adaptability | Low, manual updates | High, autonomous learning | Adapts to evolving threat landscapes |
| Accuracy | Moderate, with false positives | High precision and recall | Reduces alert fatigue |
| Predictive Capability | Minimal | Strong, via ML and DL models | Enables proactive cyber defense |

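The accuracy row of the comparison table above links higher precision and recall to reduced alert fatigue. The standard definitions, computed from a detector's confusion counts, make that link concrete: precision is the share of alerts that are real attacks, recall is the share of attacks that raise alerts, and the false-positive rate is the share of benign events that raise alerts. Because benign events vastly outnumber attacks, even a 0.1% false-positive rate over 1,000,000 benign events produces 1,000 false alerts. The class name and counts below are hypothetical and serve only to exercise the formulas.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # attacks correctly flagged
    fp: int  # benign events wrongly flagged (false alarms)
    tn: int  # benign events correctly passed
    fn: int  # attacks missed

def _ratio(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a denominator is empty.
    return num / den if den else 0.0

def detection_metrics(c: ConfusionCounts) -> Dict[str, float]:
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)   # also called the detection rate
    fpr = _ratio(c.fp, c.fp + c.tn)      # drives alert volume on benign traffic
    f1 = _ratio(2 * precision * recall, precision + recall)
    return {"precision": precision, "recall": recall,
            "false_positive_rate": fpr, "f1": f1}

# Hypothetical counts for one evaluation window.
metrics = detection_metrics(ConfusionCounts(tp=90, fp=10, tn=9890, fn=10))
print({name: round(value, 4) for name, value in metrics.items()})
# {'precision': 0.9, 'recall': 0.9, 'false_positive_rate': 0.001, 'f1': 0.9}
```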
| Area | Manual Response | AI-Driven Response | Key Benefits of AI Integration |
|---|---|---|---|
| Detection Speed | Reactive; delayed by human review | Instantaneous; automated detection | Reduces time to contain breaches |
| Decision Making | Analyst-dependent | Data-driven and autonomous | Removes human bias and fatigue |
| Scalability | Limited to team capacity | Scales with network size | Handles high-volume alerts efficiently |
| Adaptability | Fixed playbooks | Self-learning adaptive models | Adjusts to evolving threats |
| Response Accuracy | Error-prone under stress | Consistent and optimized | Enhances reliability and resilience |

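The adaptability row of the response comparison above contrasts fixed playbooks with self-learning models, and the summary of AI applications lists Q-learning for adaptive defense. The sketch below illustrates only the tabular Q-learning update, in which each estimate Q(s, a) moves toward the target r + γ · max over a′ of Q(s′, a′) at learning rate α. It runs on a deliberately tiny, hypothetical environment: two states (`normal`, `under_attack`), two actions (`monitor`, `block`), a 30% chance that an attack begins while monitoring, and invented rewards that penalize both unblocked attacks and blocking benign traffic. It does not model any system in the cited works; DQN-style methods replace the table with a neural network so that large network-state spaces can be handled.

```python
import random
from typing import Dict, Tuple

# Hypothetical toy environment: states, actions and rewards are illustrative only.
STATES = ("normal", "under_attack")
ACTIONS = ("monitor", "block")
QTable = Dict[Tuple[str, str], float]

def step(state: str, action: str, rng: random.Random) -> Tuple[str, float]:
    """Return (next_state, reward) for the toy environment."""
    if state == "under_attack":
        if action == "block":
            return "normal", 1.0        # attack contained
        return "under_attack", -1.0     # attack continues unchecked
    if action == "block":
        return "normal", -0.5           # false positive: benign traffic disrupted
    # Monitoring benign traffic costs nothing, but an attack may begin.
    return ("under_attack" if rng.random() < 0.3 else "normal"), 0.0

def train_q_table(episodes: int = 500, steps: int = 10, alpha: float = 0.1,
                  gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0) -> QTable:
    rng = random.Random(seed)
    q: QTable = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state = rng.choice(STATES)
        for _ in range(steps):
            # Epsilon-greedy exploration.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward = step(state, action, rng)
            # Off-policy TD target: best estimated value in the next state.
            target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

def greedy_policy(q: QTable) -> Dict[str, str]:
    """Pick the highest-valued action in each state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}

q_table = train_q_table()
print(greedy_policy(q_table))
```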
| Feature | Traditional Security Analytics | AI-Driven Security Analytics | Key Benefits |
|---|---|---|---|
| Data Processing | Limited; static storage-based | Scalable; distributed and parallel | Processes terabytes in real time |
| Adaptability | Manual updates and configuration | Continuous, autonomous learning | Self-optimizing for evolving threats |
| Data Sources | Structured logs only | Structured, semi-structured and unstructured | Broader visibility across platforms |
| Latency | High; batch processing | Low; real-time stream analytics | Rapid detection and mitigation |
| Scalability | Hardware-dependent | Cloud-native and distributed | Elastic scalability across environments |

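The latency row of the analytics comparison above contrasts batch processing with real-time stream analytics. A minimal sketch of the streaming side, assuming each source's events arrive in timestamp order, keeps a sliding time window per key and raises an alert when the event count inside the window exceeds a threshold, for example repeated failed logins from one address. The class name, window length, threshold and sample events are hypothetical, and the addresses are taken from the documentation-only ranges reserved by RFC 5737. Because the state is keyed by source, it can be partitioned across workers, which is one way such a detector can scale horizontally.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

class SlidingWindowRateDetector:
    """Flag a source whose event count in the last `window_s` seconds exceeds `max_events`."""

    def __init__(self, window_s: float, max_events: int) -> None:
        self.window_s = window_s
        self.max_events = max_events
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, source: str, ts: float) -> bool:
        window = self._events[source]
        window.append(ts)
        # Evict timestamps outside (ts - window_s, ts]; assumes per-source time order.
        while window and window[0] <= ts - self.window_s:
            window.popleft()
        return len(window) > self.max_events

# Hypothetical failed-login events: (source address, seconds since start).
events: List[Tuple[str, float]] = [
    ("203.0.113.5", 0.0), ("198.51.100.9", 0.5), ("203.0.113.5", 1.0),
    ("203.0.113.5", 2.0), ("203.0.113.5", 3.0), ("203.0.113.5", 4.0),
    ("198.51.100.9", 30.0),
]
detector = SlidingWindowRateDetector(window_s=10.0, max_events=3)
alerts = [(src, ts) for src, ts in events if detector.observe(src, ts)]
print(alerts)  # [('203.0.113.5', 3.0), ('203.0.113.5', 4.0)]
```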
| Characteristic | Reactive (Traditional) | Proactive (AI-Driven Predictive) | Advantages |
|---|---|---|---|
| Response Timing | Detection after an attack | Before an attack occurs | Early mitigation |
| Data Dependency | Signature and rule-based | Behavior, pattern and trend-based | Broader threat coverage |
| Adaptability | Manual updates | Continuous learning | Dynamic adaptation |
| Incident Prevention | Low | High | Reduced breach frequency |
| Resource Utilization | High (manual triage) | Optimized (automated prioritization) | Cost efficiency |

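The predictive column of the table above relies on behavior and patterns learned from past incidents. One of the simplest such models, offered here as a hedged illustration rather than the approach of any cited work, is a first-order Markov chain over attack stages: transition probabilities are estimated by counting consecutive stage pairs in historical incident sequences, and the most probable successor of the currently observed stage suggests where defensive attention might go next. The stage labels, sequences and function names are hypothetical. A hidden Markov model extends the same idea by treating the true stage as unobserved and inferring it from alerts.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Sequence, Tuple

Transitions = Dict[str, Dict[str, float]]

def fit_transitions(sequences: Iterable[Sequence[str]]) -> Transitions:
    """Maximum-likelihood first-order transition probabilities from stage sequences."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return {
        current: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for current, followers in counts.items()
    }

def predict_next(model: Transitions, current: str) -> Optional[Tuple[str, float]]:
    """Most probable next stage and its probability, or None for an unseen or terminal stage."""
    followers = model.get(current)
    if not followers:
        return None
    stage = max(followers, key=followers.get)
    return stage, followers[stage]

# Hypothetical historical incidents, each an ordered list of observed stages.
history = [
    ["recon", "initial_access", "privilege_escalation", "exfiltration"],
    ["recon", "initial_access", "lateral_movement"],
    ["recon", "initial_access", "privilege_escalation", "lateral_movement"],
]
model = fit_transitions(history)
print(predict_next(model, "initial_access"))  # ('privilege_escalation', 0.6666666666666666)
```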
Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Afolalu, O.; Tsoeu, M.S. Artificial Intelligence as the Next Frontier in Cyber Defense: Opportunities and Risks. Electronics 2025, 14, 4853. https://doi.org/10.3390/electronics14244853

Afolalu O, Tsoeu MS. Artificial Intelligence as the Next Frontier in Cyber Defense: Opportunities and Risks. Electronics. 2025; 14(24):4853. https://doi.org/10.3390/electronics14244853

Chicago/Turabian Style: Afolalu, Oladele, and Mohohlo Samuel Tsoeu. 2025. "Artificial Intelligence as the Next Frontier in Cyber Defense: Opportunities and Risks" Electronics 14, no. 24: 4853. https://doi.org/10.3390/electronics14244853

APA Style: Afolalu, O., & Tsoeu, M. S. (2025). Artificial Intelligence as the Next Frontier in Cyber Defense: Opportunities and Risks. Electronics, 14(24), 4853. https://doi.org/10.3390/electronics14244853