1. Introduction

In industrial production, proper use of safety helmets is fundamental to ensuring occupational safety [1]. However, traditional manual inspections and stationary video surveillance systems suffer from significant monitoring blind spots and high labor costs, compromising their ability to detect safety violations such as failing to wear a helmet properly or consistently. Driven by considerable advances in deep learning [2,3,4], computer vision-based intelligent monitoring systems have emerged as a promising alternative [5]. With continuous improvements in parallel computing platforms, neural network models have evolved from the early LeNet [6] architecture to contemporary deep architectures such as the ResNet series [7], becoming increasingly computationally expensive and storage-intensive. Although these deep networks, which can have hundreds of layers, demonstrate remarkable performance, their high computational complexity and substantial deployment costs make it challenging to deploy them efficiently on the resource-constrained edge devices commonly used in industrial environments. Moreover, most existing industrial monitoring systems treat visual analysis and language-based decision support as separate components, lacking effective synergistic learning mechanisms that could enhance overall system intelligence.

To enhance safety management in smart factories, it is essential to deploy surveillance cameras comprehensively in high-risk areas. Typical safety helmet detection scenarios in an industrial setting can be found in datasets such as the Safety Helmet (Hardhat) Wearing Detect (SHWD) dataset. However, industrial safety monitoring systems must cope with the real-time processing requirements of massive video streams. The substantial computational burden and memory consumption of large deep neural networks often result in insufficient real-time performance and poor economic efficiency. Therefore, there is an urgent need to develop lightweight algorithms that can reduce model complexity while maintaining high monitoring accuracy, particularly for applications in industrial safety and preventive monitoring.

The drive for efficient deployment has spurred the development of diverse techniques to compress models and reduce their computational demands. These include weight quantization [8,9,10,11], low-rank decomposition [12,13,14], knowledge distillation [15,16,17], and pruning [18,19,20,21,22,23]. As a popular compression technique, network pruning achieves a favorable balance between efficiency and performance by strategically reducing parameters and structural complexity. Research has correspondingly shifted toward the design of sophisticated pruning algorithms, exploring pruning criteria [20,21,23], sparsity optimization [22], and dynamic strategies [24]. The development of modern hardware accelerators has also contributed significantly to the real-world deployment of structured pruning methods.

This work introduces Similarity-Aware Channel Pruning (SACP), a structured pruning strategy based on feature similarity that enables efficient, automated channel-level compression. During fine-tuning, SACP identifies redundant channels based on feature similarity and suppresses them by incorporating their L2 norm into the loss function. Through iterative learning, these redundant channels are continuously driven toward zero activation and are then removed entirely by one-shot pruning, achieving efficient model compression while maintaining performance. To address the challenge of multi-scale object detection with varying imaging distances in complex industrial environments, we propose two novel mechanisms, Spatial Feature Fusion (SFF) and Channel Feature Fusion (CFF), which are integrated into the backbone and neck of YOLOv8, respectively, to enhance multi-scale feature representation and improve differentiation of objects at various distances and sizes. Furthermore, for intelligent operational decision-making at the plant level, we develop an LLM-powered assistant framework that integrates Retrieval-Augmented Generation (RAG) with an agent workflow, establishing an intelligent collaboration system in which visual analysis and linguistic reasoning operate synergistically. This system mines and interprets real-time violation data from monitoring streams, supports dynamic querying, and provides actionable insights for safety supervision and production management.

This study makes the following key contributions:

We propose a novel Similarity-Aware Channel Pruning (SACP) method that effectively identifies redundant channels through similarity measurement and suppresses them by incorporating their L2 norm into the loss function, achieving automated compression through iterative suppression and one-shot pruning while preserving model performance.

The proposed Spatial Feature Fusion (SFF) and Channel Feature Fusion (CFF) mechanisms are designed with the objective of robustly identifying objects in the context of significant size and distance variations, thereby elevating detection performance in complex industrial scenes. SFF is incorporated in the backbone to bolster spatial attention, and CFF is placed in the neck for channel-wise feature refinement.

An LLM-powered decision-support framework integrated with Retrieval-Augmented Generation (RAG) and agent workflow is developed, enabling real-time mining and interpretation of violation data for actionable safety supervision and operational guidance.

To assess the performance of our method, we conducted evaluations on multiple benchmark datasets and a specialized dataset for safety helmet detection. This evaluation highlights the method’s superior accuracy, efficiency, and practicality for industrial applications.

This paper begins with a review of related work in Section 2. The proposed methodology is then introduced in Section 3. In Section 4, the method is validated through extensive experiments. The paper concludes with a summary in Section 5.

2. Related Work

The evolution of computer vision has been driven by the continued development of deep convolutional neural networks (CNNs), which deliver state-of-the-art performance across many tasks. However, the significant computational and memory resources required by these models restrict their practicality for large-scale monitoring systems. Additionally, for safety violation recognition tasks with limited object categories, such as safety helmet detection, an excessive number of parameters can lead to overfitting and reduced performance. Model compression is therefore crucial for reducing computational cost, accelerating inference, and lowering memory consumption, so that deep CNNs can be deployed in resource-limited environments. Encouraging results continue to be reported for deep CNNs across a range of computer vision tasks. With iterative advances in deep learning [3,4,5] and continuous improvements in parallel computing platforms, the recognition performance of neural network-based visual detection has reached a level that permits industrial deployment. In safety violation recognition, the engineering application of visual models must weigh several factors, including model size, accuracy, and inference time. As visual models have progressed from the early LeNet designed by LeCun [6] to the ResNet series [7], their growing complexity and deployment costs have limited their use in safety monitoring scenarios, making efficient computation on resource-constrained edge devices difficult. Moreover, because safety violations occur at varying scales, with complex visual features, and across diverse industrial environments, improving the model architecture is often essential for accurate violation detection. To ease model deployment, many studies have proposed model compression methods [8,12,15,18]. Among these techniques, network pruning has recently attracted significant attention for facilitating the engineering deployment of models. By removing network parameters to simplify the architecture, pruning aims to lower computational cost while preserving performance in scenarios with limited violation categories. Many studies have sought more efficient pruning algorithms, focusing on pruning criteria [20,21,23], sparsity optimization [22], and dynamic pruning strategies [24]. Recent advances continue to push the boundaries of efficiency and automation: methods such as AutoSlim [25] and MetaPrune [26] introduce data-driven and meta-learning paradigms to automatically determine pruning policies, achieving notable compression rates with minimal accuracy loss.

Network pruning methods can be broadly categorized as unstructured or structured. The typical pipeline involves training a large model, removing unnecessary parameters and connections, and fine-tuning to recover accuracy. Among unstructured methods, network pruning was first introduced by LeCun et al. [18], who used second-order derivative information to eliminate weights according to their significance. Subsequent weight pruning studies [19] proposed magnitude-based criteria for compressing models. Because unstructured sparsity typically relies on specialized hardware or software libraries to yield speedups, many researchers have proposed structured pruning methods to obtain compact models. Some of these methods introduce redundancy criteria to evaluate the importance of channels. For instance, Li et al. [20] ranked filters by their L1 norm and removed the less important convolutional channels according to a preset pruning rate. Concurrently, lightweight object detection architectures have evolved rapidly. The YOLO series, from YOLOv9 to the latest YOLOv11 [27,28,29], has consistently improved the speed–accuracy trade-off through architectural innovation, providing a strong baseline for real-time object detection.

Furthermore, integrating Large Language Models (LLMs) into decision-support systems has opened new opportunities for intelligent manufacturing applications. Recent studies have demonstrated the effectiveness of multimodal LLMs in educational evaluation frameworks [30] and their potential for personalized learning in early childhood education [31], highlighting the versatility of LLM-based systems across domains. Modern frameworks increasingly leverage Retrieval-Augmented Generation (RAG) [32] to improve the reliability and contextual relevance of LLM-generated content, enabling real-time analysis and actionable interpretation of operational data. RAG augments a conventional LLM with an external knowledge retrieval component, allowing the system to dynamically access and use domain-specific information. This design addresses a key limitation of conventional LLMs that rely solely on parametric knowledge, which may be outdated or insufficiently domain-specific. RAG-based systems are typically evaluated with metrics such as retrieval accuracy and response relevance, which allow meaningful comparisons with other LLM-based decision-support frameworks. In our approach, these metrics are used to ensure that the system maintains high performance while providing reliable decision support. Our work builds on these developments by proposing a unified framework that integrates similarity-aware structured pruning, multi-scale feature fusion, and an LLM-powered agent system, with the aim of improving operational efficiency and decision support in smart manufacturing scenarios.

3. Methods

This section details the proposed framework, which comprises similarity-aware channel pruning (SACP), the spatial and channel feature fusion (SFF and CFF) mechanisms, and a RAG-based LLM decision-support framework.

3.1. Framework of Similarity-Aware Channel Pruning

Existing research has shown that channel pruning often degrades network performance [33]. Many methods compress networks iteratively through cycles of pruning and retraining [19,20], which typically require many training epochs and make it difficult to monitor performance in real time. To address this, we introduce an iterative pruning strategy embedded within a single fine-tuning process. This approach reduces training overhead and enables continuous accuracy supervision, thereby mitigating irreversible performance loss caused by improper pruning.

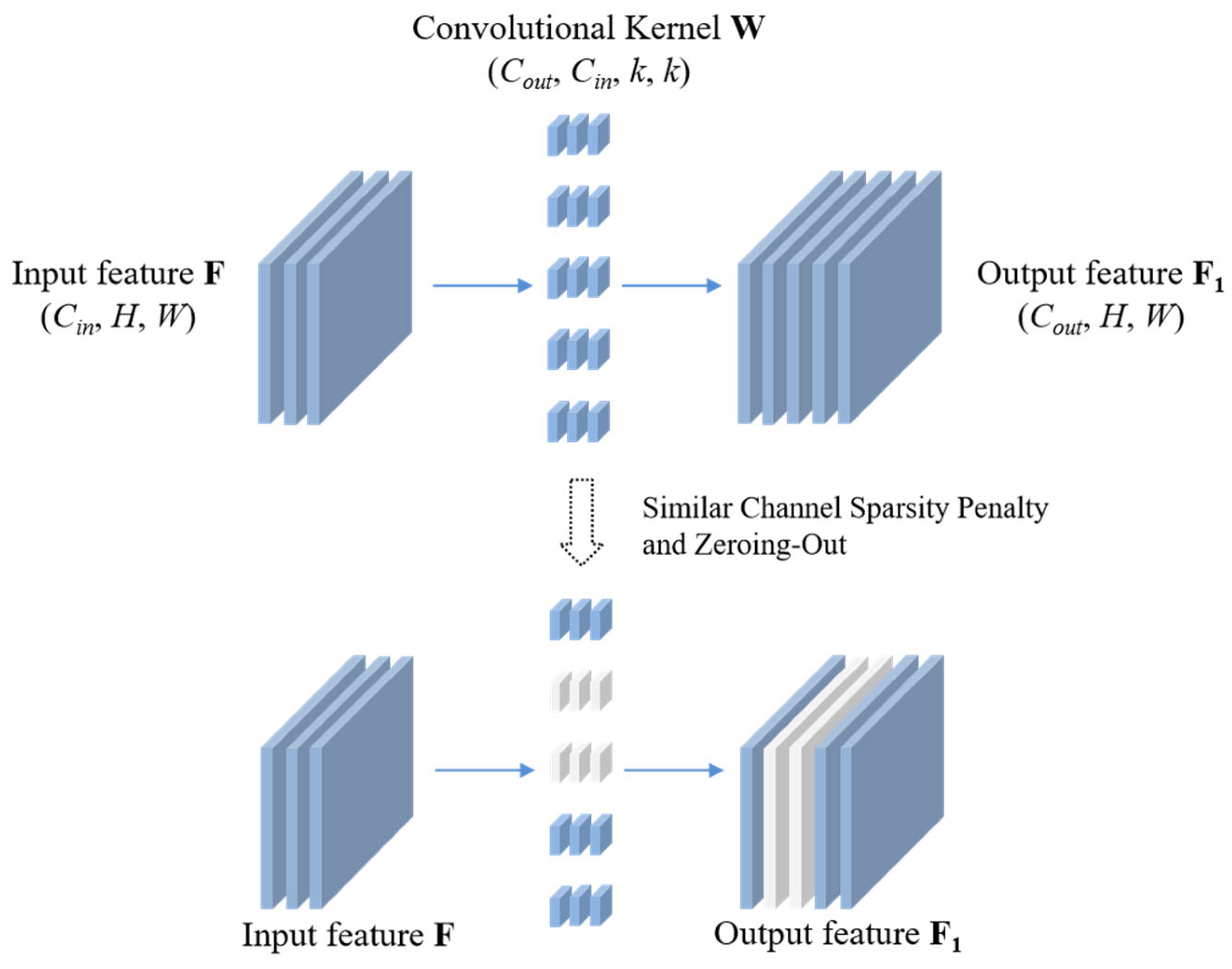

The overall framework of structured network slimming is illustrated in Figure 1. The process begins with an input feature map $F$ of dimensions $(C_{in}, H, W)$, which is processed by a convolutional layer with kernels $\mathbf{W}$ of dimensions $(C_{out}, C_{in}, k, k)$, producing an output feature map $F_1$ of dimensions $(C_{out}, H, W)$. The core of our method is structured sparsity pruning. The white rectangular areas in $F_1$ and $\mathbf{W}$ highlight a group of feature channels, and their corresponding kernels, that are deemed semantically similar. The central part of the figure represents the sparsity constraint (e.g., an L2 norm penalty) applied to these channel groups during training. This constraint penalizes the kernel slices (white areas in $\mathbf{W}$) corresponding to the redundant feature channels (white areas in $F_1$), pushing their collective parameters toward zero. During slimming fine-tuning, we identify redundant feature channels based on their similarity and add the L2 norm of these channels to the loss function. This penalizes the network for producing similar feature representations, suppressing (though not completely zeroing out) the output of redundant channels during training. Through iterative learning, highly similar channels are progressively driven toward zero activation while network stability is maintained. Once the target pruning ratio is reached and the model is stable, we perform one-shot pruning to remove these approximately zeroed channels and their corresponding convolutional filters, followed by a final fine-tuning of the compressed network. This method reduces redundancy while preserving accuracy, avoids repeated pruning–retraining cycles, and allows the compression process to be monitored in real time.

We propose the Similarity-Aware Channel Pruning (SACP) method, which identifies redundant feature channels through similarity measurement and suppresses them by adding their L2 norm to the loss function. The method begins by computing the similarity between feature channels $F_i$ and $F_j$ in each layer using the L2 distance:

$$D(F_i, F_j) = \lVert F_i - F_j \rVert_2 \tag{1}$$

where $F_i$ and $F_j$ denote the feature maps of the $i$-th and $j$-th channels, respectively, and $\lVert F_i - F_j \rVert_2$ is the L2 distance between the two channels. A smaller distance indicates that the channels are more similar, and channels whose distance falls below a threshold $T$ are identified as redundant. The key idea of SACP is to incorporate the L2 norm of these redundant channels directly into the loss function:

$$\mathcal{L} = \mathcal{L}_{task} + \lambda \sum_{i \in I} \lVert F_i \rVert_2 \tag{2}$$

where $I$ is the set of redundant channels, $\mathcal{L}_{task}$ is the standard task-specific loss (e.g., the cross-entropy loss for classification, or the complete detection loss including localization and classification terms for object detection), and $\lambda$ controls the penalty strength. This formulation explicitly penalizes the activation of redundant features during training, gradually driving their outputs toward zero through iterative learning, which maintains training stability while ensuring effective compression.
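To make the similarity test and the penalized loss concrete, here is a minimal plain-Python sketch for a single layer, with each channel's feature map flattened to a list of floats so that it runs without a deep learning framework. The function names are hypothetical, and two details are our assumptions rather than the paper's: the raw (unnormalized) L2 distance is compared directly with T, and when a pair falls below T only the later channel is marked redundant, so that one representative of each near-duplicate group survives. The values T = 0.8 and λ = 0.001 follow Section 4.1.

```python
import math
from itertools import combinations


def l2_distance(a, b):
    """L2 distance between two flattened channel feature maps."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_redundant(channels, threshold):
    """Return the set I of redundant channel indices for one layer.

    Assumption (not fixed by the text): when a pair (i, j) with i < j is
    closer than `threshold`, channel j is marked redundant and channel i
    is kept, so each group of near-duplicates keeps one representative.
    """
    redundant = set()
    for i, j in combinations(range(len(channels)), 2):
        if i in redundant or j in redundant:
            continue
        if l2_distance(channels[i], channels[j]) < threshold:
            redundant.add(j)
    return redundant


def sacp_loss(task_loss, channels, redundant, lam):
    """Task loss plus lam times the summed L2 norms of the redundant channels."""
    penalty = sum(math.sqrt(sum(x * x for x in channels[i])) for i in redundant)
    return task_loss + lam * penalty


# Toy layer with three 2-pixel channels; channel 1 nearly duplicates channel 0.
feats = [[1.0, 0.0], [1.0, 0.1], [0.0, 3.0]]
I = find_redundant(feats, threshold=0.8)  # {1}
loss = sacp_loss(2.0, feats, I, lam=0.001)
```

In an actual training loop, the penalty would be computed on framework tensors (e.g., in PyTorch) so that its gradient flows back into the kernel slices that produce the redundant channels.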

The implementation of SACP involves using this similarity-based penalty criterion in the iterative slimming fine-tuning process to suppress feature channels to near-zero values. The process aims for a pre-defined compression ratio, which is a key hyperparameter representing the target proportion of redundant parameters for removal. When this target ratio and model stability are achieved, a single pruning of the convolutional channels corresponding to these approximately zeroed channels is performed to obtain the final slimmed model.
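The stopping check and the single structured pruning step can be sketched as follows. This is an illustrative sketch, not the authors' code: `eps`, the cutoff for an "approximately zeroed" channel, is a hypothetical value, and filters are represented as opaque list items so that only the indexing logic is shown. Removing output channel c of one convolution also removes input slice c from every filter of the next convolution, which is what makes the pruning structured.

```python
import math


def channel_norm(feature):
    """L2 norm of one flattened channel feature map."""
    return math.sqrt(sum(x * x for x in feature))


def ready_to_prune(features, target_ratio, eps=1e-3):
    """True once at least `target_ratio` of the channels are near zero.

    `eps` is a hypothetical cutoff; the text does not specify one.
    """
    near_zero = sum(channel_norm(f) < eps for f in features)
    return near_zero / len(features) >= target_ratio


def one_shot_prune(features, conv_filters, next_conv_filters, eps=1e-3):
    """Drop near-zero output channels of a conv layer in a single step.

    conv_filters:      one filter per output channel of this layer (C_out items).
    next_conv_filters: filters of the following layer; each is a list of
                       C_out input-channel slices, pruned with the same indices.
    """
    keep = [c for c, f in enumerate(features) if channel_norm(f) >= eps]
    pruned = [conv_filters[c] for c in keep]
    pruned_next = [[filt[c] for c in keep] for filt in next_conv_filters]
    return keep, pruned, pruned_next


feats = [[0.5, 0.5], [0.0, 1e-5], [2.0, 0.0], [0.0, 0.0]]
if ready_to_prune(feats, target_ratio=0.5):
    keep, layer, next_layer = one_shot_prune(
        feats, ["f0", "f1", "f2", "f3"], [["a0", "a1", "a2", "a3"]]
    )
```

In a real network, any per-channel parameters tied to the removed channels, such as BatchNorm scale and shift terms, would be dropped in the same step.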

3.2. Spatial Feature Fusion Mechanism

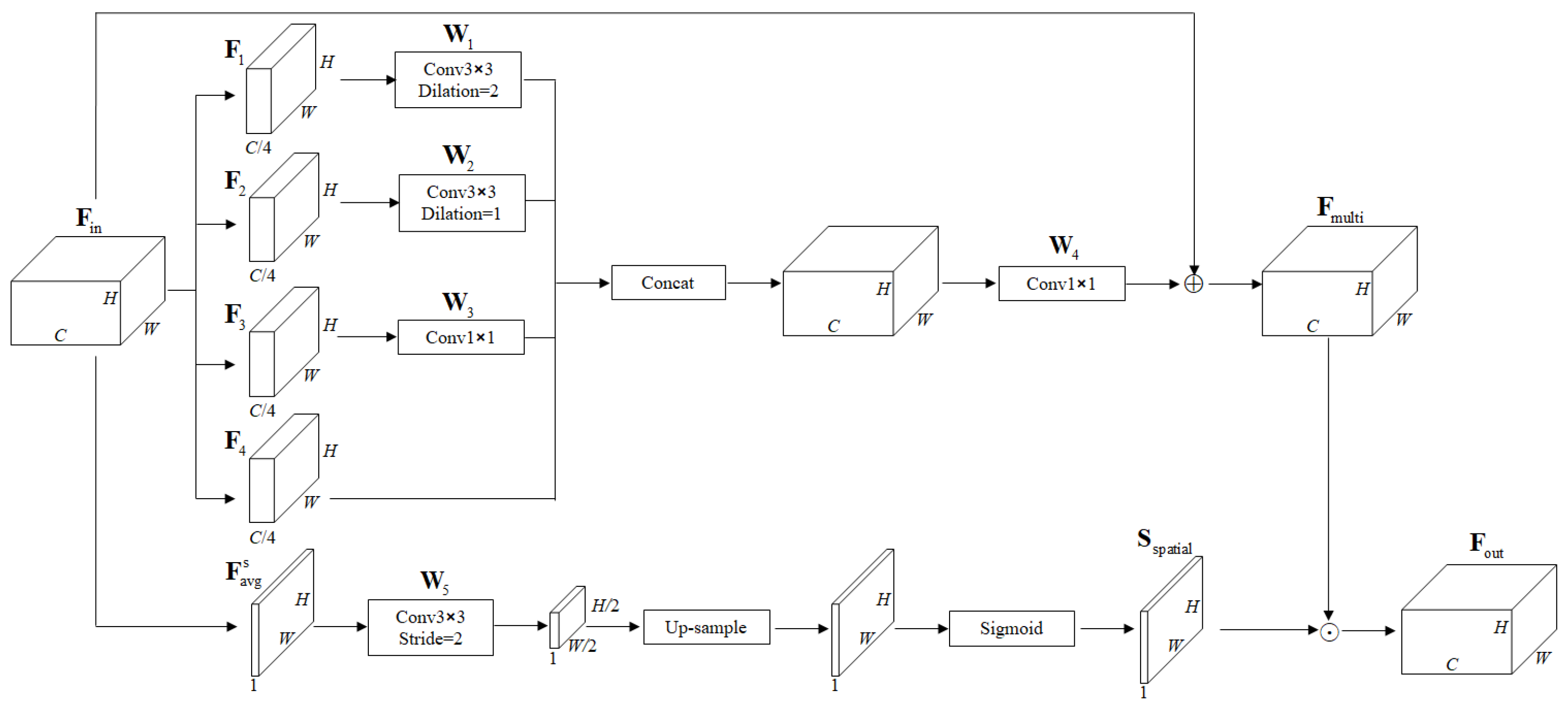

Considering the challenges of multi-scale object detection under varying imaging distances in complex industrial environments, we propose a spatial feature fusion mechanism to enhance multi-scale feature representation and improve detection accuracy for objects of varying sizes. Building on the YOLOv8 architecture, which is well suited to real-time object detection, we integrate a spatial feature fusion (SFF) module to efficiently extract and fuse multi-scale features. The SFF mechanism combines multi-scale feature fusion with spatial attention, enabling the network to adaptively focus on regions of interest across different scales. Multi-scale feature fusion enhances the model’s ability to capture objects of various sizes, particularly small targets, while spatial attention emphasizes salient features by assigning spatially varying weights to pixel locations. This design strengthens feature discriminability and suppresses irrelevant background information, allowing the network to concentrate on critical areas. The SFF mechanism is embedded within the backbone of the YOLOv8 architecture to enhance multi-scale spatial feature extraction, forming a strengthened network referred to as YOLOv8-SFF, as illustrated in Figure 2.

Given the input feature $X \in \mathbb{R}^{C \times H \times W}$, $X$ is split evenly along the channel dimension into four groups $X_1, X_2, X_3, X_4 \in \mathbb{R}^{(C/4) \times H \times W}$. This four-group split enables efficient multi-scale feature extraction by applying convolutional kernels with different receptive fields to different channel groups. Three groups are processed in parallel by kernels of size 1 × 1, 3 × 3, and 3 × 3 with a stride of 2, respectively, to capture point-wise, local, and broader contextual features, while the fourth group preserves the original information. The resulting features are then fused. The multi-scale fused feature is computed as follows:

$$X' = f\big(\mathrm{Concat}\big[f_{1\times1}(X_1),\ f_{3\times3}(X_2),\ f_{3\times3}^{s=2}(X_3),\ X_4\big]\big) \oplus X \tag{3}$$

where $f(\cdot)$ denotes a convolutional operation (the subscript gives the kernel size and the superscript $s=2$ the stride) and $\oplus$ denotes element-wise addition. Average pooling along the channel axis is then used to obtain the spatial descriptor $X_s \in \mathbb{R}^{1 \times H \times W}$.
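The structure of Equation (3) can be sketched in plain Python, treating a feature map as a list of channels and each channel as a flat list of pixels. The branch and fusion functions are hypothetical stand-ins for the learned 1 × 1, 3 × 3, and stride-2 3 × 3 convolutions and the fusing convolution; they must return maps of the same shape as their inputs so that the residual addition is defined. How the stride-2 branch is restored to H × W is not specified in the text, so in this sketch it is left to the branch function.

```python
def split_groups(x, groups=4):
    """Split a feature map (list of channels) evenly along the channel axis."""
    if len(x) % groups:
        raise ValueError("channel count must be divisible by the number of groups")
    size = len(x) // groups
    return [x[g * size:(g + 1) * size] for g in range(groups)]


def add_maps(a, b):
    """Element-wise addition of two feature maps (the residual connection)."""
    return [[u + v for u, v in zip(ca, cb)] for ca, cb in zip(a, b)]


def multiscale_fuse(x, branches, fuse):
    """Three branches on groups 1-3, identity on group 4, channel-wise
    concatenation, a fusing operation, then the residual addition."""
    x1, x2, x3, x4 = split_groups(x, 4)
    outs = [branch(g) for branch, g in zip(branches, (x1, x2, x3))]
    concat = [ch for group in outs for ch in group] + x4
    return add_maps(fuse(concat), x)


def identity(g):
    return g


def double(g):
    # Hypothetical stand-in for a learned convolution branch.
    return [[2.0 * v for v in ch] for ch in g]


x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
y = multiscale_fuse(x, [double, identity, identity], fuse=identity)
```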

The spatial attention score $A_s$ is obtained through a convolutional operation, up-sampling, and a sigmoid activation:

$$A_s = \sigma\big(\mathrm{Up}(f(X_s))\big) \tag{4}$$

where $\sigma$ is the sigmoid function, $\mathrm{Up}(\cdot)$ is the up-sampling function, $f(\cdot)$ denotes a convolutional operation, and $X_s$ is the spatially compressed feature map obtained by applying average pooling along the channel dimension of the fused feature map. This operation aggregates spatial information across all channels to produce a compact spatial descriptor. Element-wise multiplication between the spatial attention score $A_s$ and the features $X'$, $Y = A_s \otimes X'$, yields the corrected output features. The core concept of Equation (3) is multi-scale feature fusion with identity preservation: it processes three feature groups in parallel using kernels with different receptive fields, concatenates the results with the fourth, unmodified group, and fuses them via a convolution $f(\cdot)$. A residual connection ($\oplus$) with the input $X$ preserves information and stabilizes training. The purpose of Equation (4) is to generate a spatially aware attention map: it constructs an attention score for each pixel through a convolutional layer $f(\cdot)$ and up-sampling, and the sigmoid function $\sigma$ normalizes the scores to [0, 1], producing the final attention map $A_s$ that highlights important regions.
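A minimal sketch of the spatial attention step in Equation (4) and the subsequent reweighting, using the same list-of-channels representation. `score_fn` is a hypothetical stand-in for the learned convolution and up-sampling; by default it is the identity, so each pixel's weight is simply the sigmoid of its channel-averaged value.

```python
import math


def sigmoid(z):
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def spatial_attention(x, score_fn=None):
    """Spatial attention followed by reweighting.

    x:        fused feature map as a list of C channels, each a flat
              list of H*W pixel values.
    score_fn: stand-in for the learned convolution + up-sampling that maps
              the pooled map to one score per pixel (identity if None).
    """
    c = len(x)
    pooled = [sum(ch[p] for ch in x) / c for p in range(len(x[0]))]  # mean over channels
    scores = score_fn(pooled) if score_fn else pooled
    attn = [sigmoid(s) for s in scores]                  # one weight per pixel
    out = [[v * a for v, a in zip(ch, attn)] for ch in x]  # same weight in every channel
    return out, attn


out, attn = spatial_attention([[0.0, 2.0], [0.0, -2.0], [0.0, 6.0]])
```

Because the pooled map has a single channel, every channel at a given pixel is scaled by the same weight, which is what distinguishes this step from channel attention.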

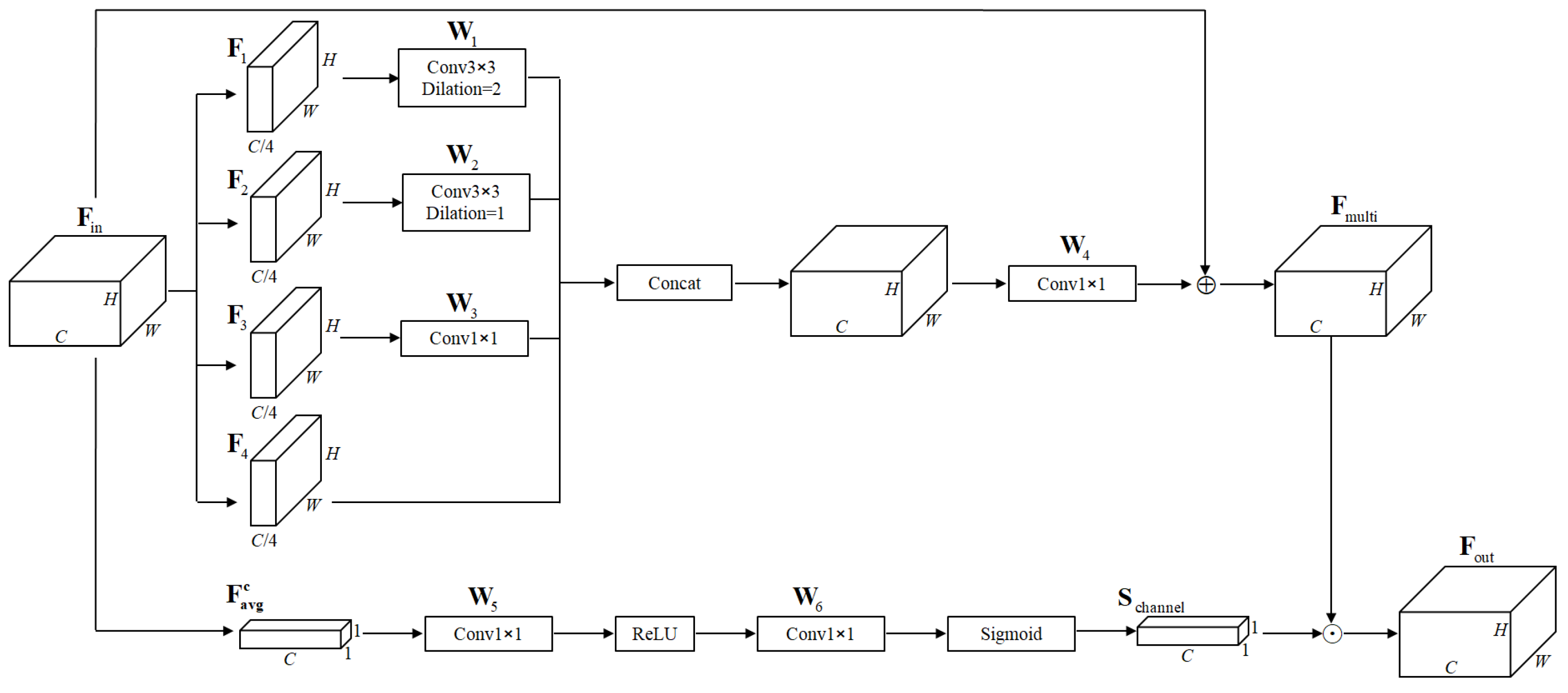

3.3. Channel Feature Fusion Mechanism

Network design typically involves concatenation operations to fuse features from shallow and deep layers, but this approach often overlooks the critical role of feature channels. To address this problem, we designed a channel feature fusion (CFF) mechanism that adaptively allocates weight scores to feature channels, enabling the network to emphasize more informative channels and prevent effective features from being diluted. The CFF mechanism incorporates multi-scale feature fusion and channel attention operations, enhancing the representational capacity of output features after concatenation. Multi-scale feature fusion captures object characteristics across different scales, while the channel attention operation enhances discriminative features and suppresses less relevant ones by assigning varying weights to each output channel. This mechanism is integrated after concatenation operations within the neck structure of the YOLOv8 architecture, forming an enhanced network termed YOLOv8-CFF, as illustrated in Figure 3.

The multi-scale fused feature $X'$ is computed in the same way as in Equation (3). Average pooling along the spatial axes is used to obtain the channel descriptor $X_c \in \mathbb{R}^{C \times 1 \times 1}$. Applying a convolutional operation and an activation function then yields the channel attention score $A_c$:

$$A_c = \sigma\big(f(X_c)\big) \tag{5}$$

where $\sigma$ is the sigmoid function and $f(\cdot)$ denotes a convolutional operation. Element-wise multiplication between the channel attention score $A_c$ and the features $X'$ yields the corrected output features.
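The channel attention branch of CFF differs from the spatial branch mainly in the pooling axis: it averages over pixels to obtain one descriptor per channel, then scales every pixel of a channel by that channel's weight. The sketch below assumes a plain-Python representation (a list of channels, each a flat list of pixels), and `score_fn` is a hypothetical stand-in for the learned convolution.

```python
import math


def sigmoid(z):
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def channel_attention(x, score_fn=None):
    """Channel attention followed by reweighting of each channel."""
    pooled = [sum(ch) / len(ch) for ch in x]            # mean over spatial positions
    scores = score_fn(pooled) if score_fn else pooled  # stand-in for the learned conv
    attn = [sigmoid(s) for s in scores]                 # one weight per channel
    out = [[v * a for v in ch] for ch, a in zip(x, attn)]
    return out, attn


out, attn = channel_attention([[1.0, -1.0], [4.0, 4.0]])
```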

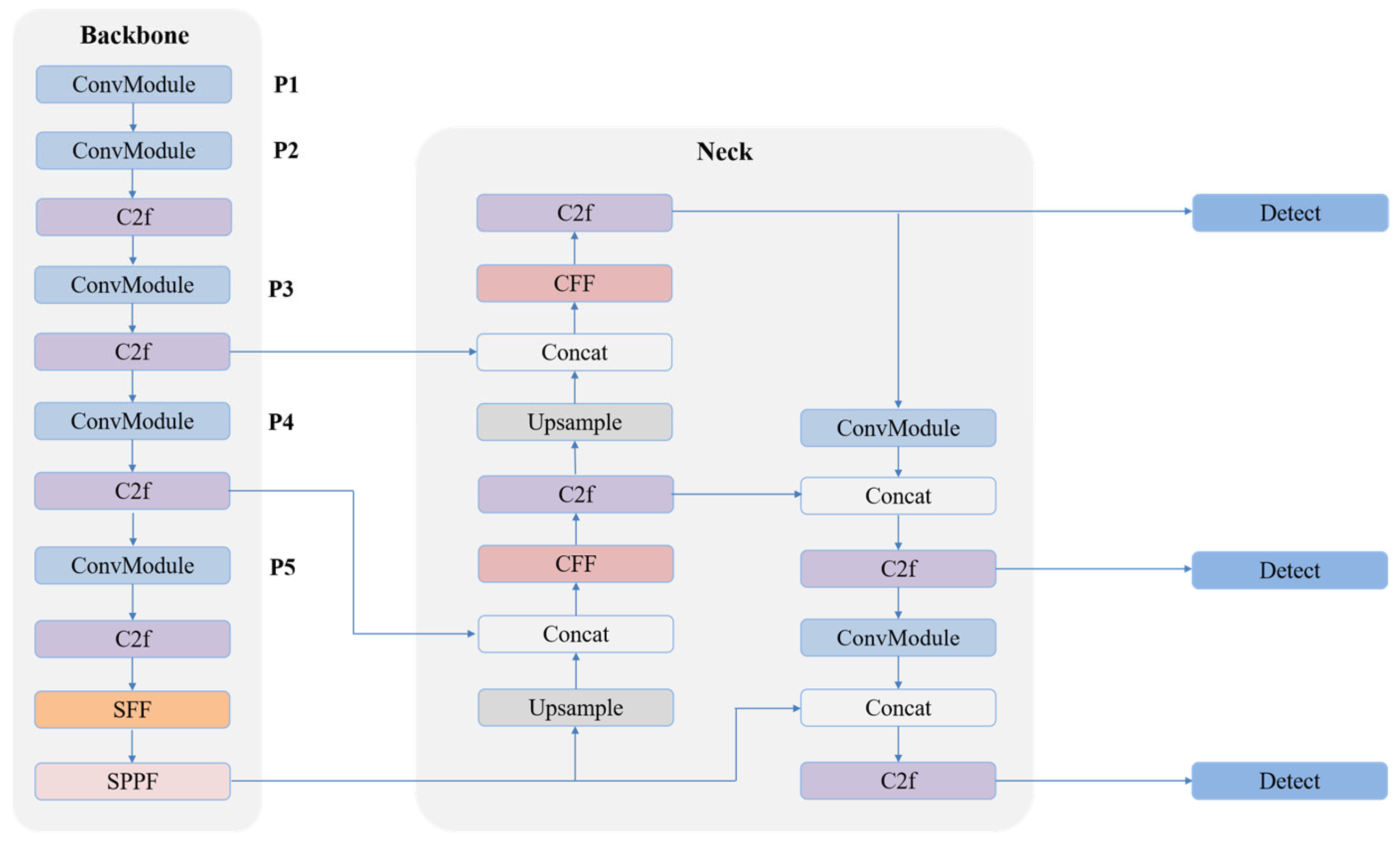

3.4. YOLOv8-SCFF Network Architecture

To comprehensively enhance the multi-scale feature representation capabilities of the detection network, we synergistically integrate the proposed spatial feature fusion (SFF) and channel feature fusion (CFF) mechanisms into the YOLOv8 architecture, constructing a more robust network named YOLOv8-SCFF.

SFF Module Placement: The SFF module is strategically integrated into the deeper layers of the backbone network, specifically after the last C2f module [34] and before the SPPF module. This placement was chosen because the feature maps at this stage exhibit a balanced trade-off between rich semantic information and sufficient spatial resolution. Operating on these mid-resolution feature maps allows the SFF module to efficiently and effectively emphasize salient spatial regions and suppress irrelevant background noise, thereby providing enhanced input for the subsequent SPPF and neck networks.

CFF Module Placement: The CFF module is integrated into the neck network of YOLOv8. It is specifically deployed after the concatenation operations that fuse features from the backbone and the earlier layers of the neck. This placement is crucial as it operates on the concatenated feature maps, performing adaptive channel-wise re-calibration. By assigning optimal weights to channels, the CFF mechanism ensures that more informative features are emphasized and less useful ones are suppressed immediately after fusion, preventing the dilution of critical feature information before the final detection heads.

The combined action of these two modules, with SFF enhancing spatial selectivity in the backbone and CFF refining channel importance in the neck, forms a complementary dual-path enhancement strategy. By boosting multi-scale feature representation, the YOLOv8-SCFF network addresses the challenges of detecting objects of varying sizes in complex industrial scenes, improving detection accuracy while maintaining the efficiency required for real-time application. The overall architecture of YOLOv8-SCFF is illustrated in Figure 4.

3.5. LLM-Powered Decision-Support Framework with RAG and AI Agent

To facilitate intelligent operational decision-making at the plant level, we develop an advanced decision-support framework that integrates Retrieval-Augmented Generation (RAG) with an AI agent workflow. This framework is designed to mine, interpret, and act upon real-time violation data extracted from industrial monitoring streams, thereby supporting dynamic querying, root cause analysis, and actionable safety recommendations. The integration of RAG and AI agent technologies enables the system to provide context-aware, data-driven insights for safety supervision and production management, complementing the automated model compression techniques described in previous sections.

The RAG module is the core of this framework, enhancing a large language model (LLM) by grounding its responses in authoritative, up-to-date knowledge. It operates by first retrieving relevant information from a structured knowledge base—containing safety regulations, historical cases, and operational protocols—based on the semantic similarity of the incoming violation event. The retrieved context and the violation data are then combined in a prompt, enabling the LLM to generate highly accurate, context-specific, and justifiable insights instead of generic or potentially incorrect responses.
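The retrieval step can be illustrated with a toy ranking by cosine similarity. The text does not state which embedding model or vector store is used, so this sketch substitutes simple word-count vectors for real embeddings; the knowledge-base entries and function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in embedding: lowercase word counts (not a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query, knowledge_base, top_k=2):
    """Return the top_k knowledge-base entries most similar to the query."""
    q = embed(query)
    ranked = sorted(knowledge_base, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:top_k]


kb = [
    "Helmets must be worn at all times in zone A.",
    "Forklift speed limit is 10 km/h.",
    "Repeat helmet violations require retraining.",
]
hits = retrieve("worker without helmet in zone A", kb, top_k=2)
```

A production system would replace `embed` with a learned text encoder and an approximate nearest-neighbor index, but the ranking logic stays the same.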

The AI agent is the central reasoning engine that orchestrates the entire process, dynamically bridging real-time operational data with static knowledge. Upon receiving a violation event $V$, it retrieves the latest information from real-time databases and integrates it with contextual static knowledge $K$ retrieved through the RAG module. The agent then invokes the LLM to perform reasoning, which can be formally represented as follows:

$$O = \mathrm{LLM}(K, V) \tag{6}$$

where $K$ denotes the retrieved knowledge, $V$ represents the real-time violation context, and $O$ is the generated output. By integrating real-time behavioral data with retrieved knowledge such as established rules and historical cases, the agent generates comprehensive, evidence-based outputs, including specific violation alerts, tailored corrective measures, and personalized training recommendations. These outputs enable timely and targeted safety interventions while supporting continuous and adaptive safety management.
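The reasoning step described above, in which the LLM is applied to the retrieved knowledge K and the violation context V, amounts to composing both into a single prompt. The prompt wording, the field names, and the `llm` callable below are hypothetical; any text-in, text-out client could be passed in.

```python
from typing import Callable, Dict, List


def build_prompt(knowledge: List[str], violation: Dict[str, str]) -> str:
    """Combine retrieved knowledge K and violation context V into one prompt."""
    k_block = "\n".join(f"- {item}" for item in knowledge)
    v_block = "\n".join(f"{key}: {value}" for key, value in sorted(violation.items()))
    return (
        "You are a plant safety assistant.\n"
        f"Relevant regulations and cases:\n{k_block}\n"
        f"Violation event:\n{v_block}\n"
        "Give an alert, corrective measures, and a training suggestion."
    )


def agent_reason(knowledge: List[str], violation: Dict[str, str],
                 llm: Callable[[str], str]) -> str:
    """Apply the LLM to (K, V); `llm` is a hypothetical text-in/text-out client."""
    return llm(build_prompt(knowledge, violation))


# An echo function stands in for the LLM so the assembled prompt can be inspected.
result = agent_reason(
    ["Helmets must be worn in zone A."],
    {"location": "zone A", "type": "no_helmet"},
    llm=lambda prompt: prompt,
)
```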

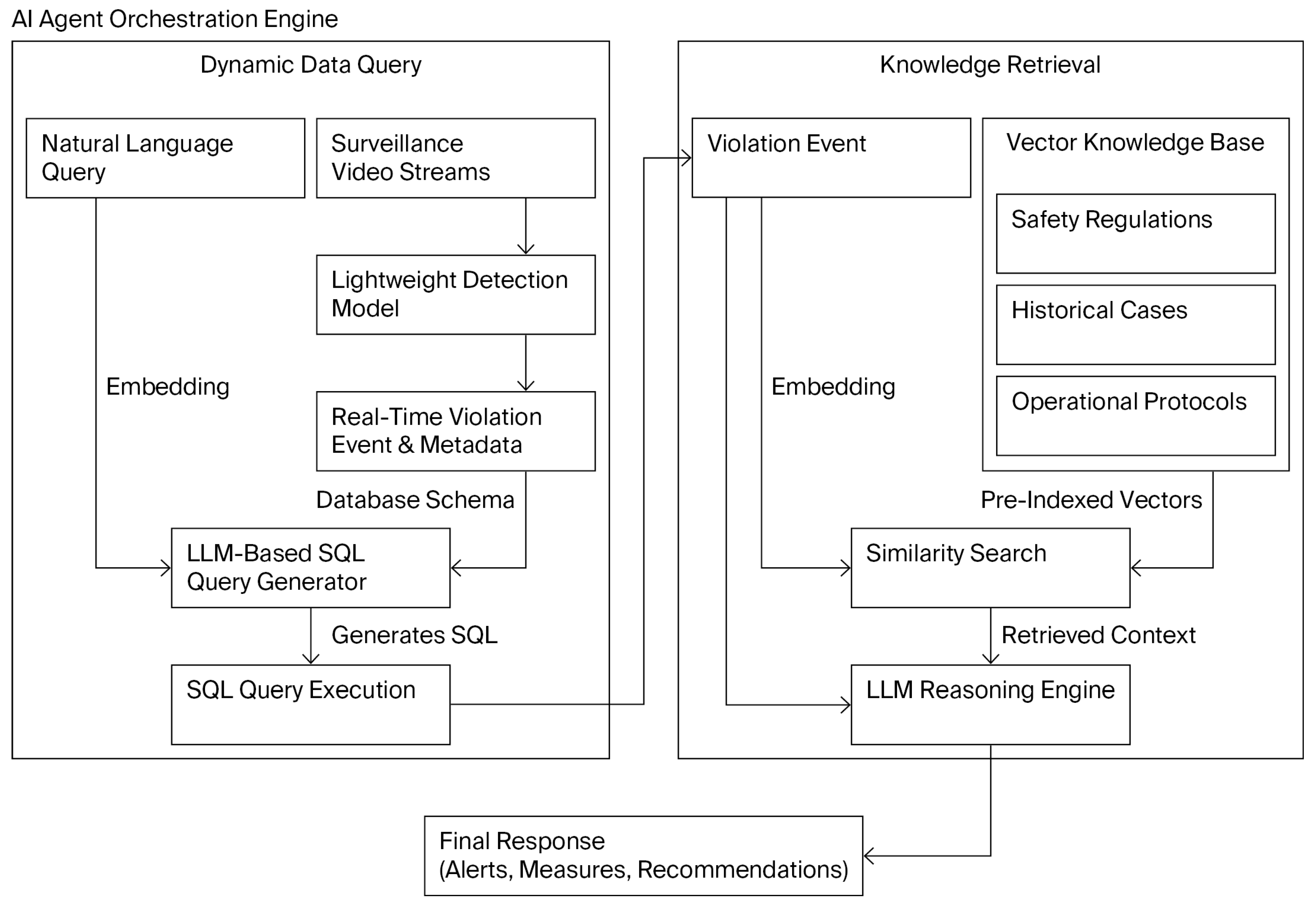

As shown in Figure 5, the framework operates through an integrated workflow: surveillance video streams are continuously processed by our slimmed YOLOv8 model, which is enhanced with SFF and CFF mechanisms for real-time violation detection. The AI agent then coordinates the analysis by first employing an LLM-based SQL query generator to formulate precise queries from the detected events and associated metadata (e.g., time, location, violation type), retrieving relevant records from real-time violation databases. Subsequently, the agent orchestrates the RAG module to obtain contextual knowledge from the structured knowledge base, synthesizing both dynamic and static information to generate actionable outputs, including specific decision results, tailored corrective measures, and personalized training suggestions.
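The SQL query generator in this framework is LLM-based; the sketch below instead hard-codes one parameterized query of the kind such a generator might produce, to show how event metadata (location, violation type, time) maps onto a lookup in a violation database. The table schema and rows are invented for illustration. Binding values through `?` placeholders, rather than pasting them into the SQL string, keeps the metadata from altering the query structure.

```python
import sqlite3


def recent_violations(conn, location, violation_type, since):
    """Fetch prior records matching a detected event (hypothetical schema)."""
    sql = (
        "SELECT ts, location, violation_type FROM violations "
        "WHERE location = ? AND violation_type = ? AND ts >= ? ORDER BY ts"
    )
    return conn.execute(sql, (location, violation_type, since)).fetchall()


# Toy in-memory database; ISO-8601 timestamps compare correctly as strings.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE violations (ts TEXT, location TEXT, violation_type TEXT)")
conn.executemany(
    "INSERT INTO violations VALUES (?, ?, ?)",
    [
        ("2024-05-01T08:00", "zone A", "no_helmet"),
        ("2024-05-02T09:30", "zone A", "no_helmet"),
        ("2024-05-02T10:00", "zone B", "no_helmet"),
    ],
)
rows = recent_violations(conn, "zone A", "no_helmet", "2024-05-02T00:00")
```

When the SQL text itself comes from an LLM, it is prudent to run it over a read-only connection or validate it against an allow-list of statements before execution.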

4. Experiments

In this section, we assess the efficacy of Similarity-Aware Channel Pruning (SACP) through a series of experiments on various benchmark datasets. The experimental settings and configurations are detailed in Section 4.1. Section 4.2 evaluates SACP with iterative slimming fine-tuning on multiple benchmark datasets. Section 4.3 further validates the overall framework through a case analysis, in which the YOLOv8-SCFF model is compressed with SACP before being evaluated on the industrial dataset, and presents comprehensive ablation studies on the proposed YOLOv8-SCFF architecture.

4.1. Experimental Settings

Experiments were conducted on four datasets, CIFAR [35], ImageNet [36], COCO [37], and a safety helmet dataset, to evaluate the performance of the proposed method. CIFAR is a low-resolution image classification dataset consisting of 50,000 training images and 10,000 test images, all with a resolution of 32 × 32. The ImageNet-2012 dataset is a comprehensive image classification resource comprising 1.2 million training images and 50,000 validation images spanning 1000 categories. COCO 2017 is a major open-source vision dataset containing over 200,000 images annotated with 80 object categories. It supports tasks such as object detection and segmentation through detailed annotations. The dataset includes 118k training, 5k validation, and 20k test images, and serves as a key benchmark for model evaluation. The safety helmet dataset contains 11,000 images with an original resolution of 1920 × 1080. It includes bounding box annotations in the PASCAL VOC [38] format for three classes: Helmet, Person, and Head. In the safety helmet dataset, 80% of the samples were allocated to a training–validation set, and the remaining samples were used as the test set to assess model performance. To confirm its generalizability, the proposed method was assessed on several popular networks, including VGG-16, ResNet-50, MobileNetV3, and YOLOv8.
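For the safety helmet dataset, the 80/20 allocation of 11,000 images gives 8,800 training–validation images and 2,200 test images. A minimal sketch of such a split follows; the random shuffle and the fixed seed are assumptions, since the text does not say how samples were allocated.

```python
import random


def train_test_split(items, train_frac=0.8, seed=0):
    """Shuffle with a fixed (assumed) seed and cut at train_frac."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_frac))
    return shuffled[:cut], shuffled[cut:]


train_val, test = train_test_split(range(11000))
```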

Regarding training, for the CIFAR dataset, baseline models were trained from scratch using the SGD optimizer with a weight decay of $10^{-4}$ and a momentum of 0.9. Training was conducted with a batch size of 128 for 300 epochs, starting with an initial learning rate of 0.1, which was divided by 10 at one-third and two-thirds of the total epochs. For the safety helmet dataset, all models were trained with a batch size of 64 and a learning rate of $10^{-2}$. All experiments were implemented in PyTorch v2.5.0 and evaluated on accuracy and resource cost metrics, using an Intel Xeon Silver 4110 CPU @ 2.10 GHz (64 GB RAM) and an NVIDIA GeForce RTX 3090 GPU. For the proposed SACP method, the similarity threshold for identifying redundant channels was set to 0.8, and the regularization coefficient λ, which controls the strength of the similarity-aware penalty in the loss function, was set to 0.001. The pruning ratio was chosen by comparison with other models of similar parameter scale to ensure a fair performance evaluation. During the fine-tuning stage following pruning, a learning rate of 0.001 was used until the model’s performance stabilized.
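The CIFAR learning-rate schedule (initial rate 0.1, divided by 10 at one-third and two-thirds of 300 epochs) corresponds to milestones at epochs 100 and 200. A small helper reproduces it:

```python
def step_lr(epoch, base_lr=0.1, total_epochs=300, factor=0.1):
    """Learning rate divided by 10 at 1/3 and 2/3 of the total epochs."""
    milestones = (total_epochs // 3, 2 * total_epochs // 3)  # (100, 200) for 300 epochs
    drops = sum(epoch >= m for m in milestones)
    return base_lr * factor ** drops
```

In PyTorch, the same schedule can be expressed with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[100, 200], gamma=0.1)`, stepped once per epoch.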

4.2. Performance of SACP

4.2.1. VGG-16

VGG-16 is a well-known convolutional neural network (CNN) for image classification, characterized by its single-branch architecture. In this study, we applied data augmentation and SACP to VGG-16, obtaining models with different parameter scales to evaluate the effectiveness of the proposed method. The experimental results are summarized in Table 1. On CIFAR-10, which has few classes and small images, compressing the model parameters by 76.4% caused no accuracy loss. This indicates that the model contains redundant parameters that are ineffective for such tasks. On CIFAR-100, SACP achieved a compression rate of 93.6% for VGG-16 while preserving accuracy relative to the baseline. In addition, SACP was compared with other typical pruning techniques at different compression ratios and consistently outperformed them. To further assess the effectiveness of the proposed approach, we compared SACP with other pruning methods at various compression ratios on ImageNet. As shown in Table 1c, at the same compression levels, the SACP-based model has a lower test error than models obtained with other pruning techniques. This metric directly reflects the model’s classification error.
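Compression rates such as 76.4% and 93.6% are fractions of parameters removed. The sketch below, using hypothetical layer sizes, shows why pruning the output channels of one convolution also shrinks the next convolution, whose matching input channels disappear. Bias terms are ignored.

```python
def conv_params(c_out, c_in, k):
    """Weight count of a k x k convolution (bias ignored)."""
    return c_out * c_in * k * k


def compression_rate(original, pruned):
    """Fraction of parameters removed by pruning."""
    return 1.0 - pruned / original


# Hypothetical two-layer chain: 3 -> 64 -> 128 channels, 3 x 3 kernels.
original = conv_params(64, 3, 3) + conv_params(128, 64, 3)
# Prune half of the first layer's 64 output channels; the second layer
# loses the matching 32 input channels.
pruned = conv_params(32, 3, 3) + conv_params(128, 32, 3)
rate = compression_rate(original, pruned)
```

Here the first layer shrinks from 1,728 to 864 weights and the second from 73,728 to 36,864, so removing 32 channels halves the parameter count of the pair.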

4.2.2. ResNet-50

We applied SACP to ResNet-50, a typical network that incorporates cross-layer (residual) connections, to obtain slimmer networks and evaluated their performance on the CIFAR and ImageNet datasets. In this process, SACP was integrated with the proposed structured slimming framework to compress ResNet-50 effectively. The data augmentation techniques used during training were the same as those used for VGG-16, ensuring consistency across experiments. We compressed ResNet-50 with different pruning methods, and Table 2 summarizes the results. Table 2a shows that for simple classification tasks, ResNet-50 can be compressed by up to 70.9% with an accuracy loss of only 0.10 percentage points (the test error increases from 21.02% to 21.12%), outperforming other pruning methods at the same compression ratio. The robustness of the proposed method was further assessed on ImageNet, a widely recognized benchmark for large-scale image classification. On this more difficult task, ResNet-50 compressed with SACP also shows less accuracy loss than the other methods at the same compression ratio.
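Residual connections constrain channel pruning: the output width of a bottleneck block must match its shortcut so that the element-wise addition remains valid. This section does not detail how SACP handles that constraint, so the sketch below assumes one common strategy, pruning only the internal channels of each bottleneck while keeping the block's input/output width fixed. It counts convolution weights (ignoring batch normalization and biases) for a last-stage ResNet-50 bottleneck (2048 → 512 → 512 → 2048); the function name `bottleneck_params` is hypothetical.

```python
def bottleneck_params(c_io: int, c_mid: int) -> int:
    """Conv weights of a ResNet bottleneck (1x1 -> 3x3 -> 1x1), no BN or bias.

    c_io:  block input/output width, fixed by the residual addition.
    c_mid: internal width, which can be pruned without touching the shortcut.
    """
    reduce_1x1 = c_io * c_mid
    spatial_3x3 = 9 * c_mid * c_mid
    expand_1x1 = c_mid * c_io
    return reduce_1x1 + spatial_3x3 + expand_1x1


original = bottleneck_params(2048, 512)
pruned = bottleneck_params(2048, 256)   # prune half of the internal channels
print(original, pruned, f"reduction: {1 - pruned / original:.1%}")
```

Because the 3 × 3 convolution's weights scale with the square of the internal width, halving that width removes about 63% of the block's weights even though the shortcut width is unchanged.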

4.2.3. MobileNetV3

To further validate the generalization capability of SACP across diverse architectures, we applied it to MobileNetV3 [41], a network specifically designed for mobile and embedded devices. Unlike the single-path structure of VGG-16 or the cross-layer connections of ResNet-50, MobileNetV3 combines a neural architecture search (NAS)-optimized design with depthwise separable convolutions, inverted residual blocks with linear bottlenecks, and a lightweight Squeeze-and-Excitation (SE) attention mechanism. Because this architecture is already highly compact, it presents a greater challenge for pruning. Experiments were conducted on both the CIFAR and ImageNet datasets to comprehensively evaluate the compression efficacy of SACP on this efficient architecture across scenarios of different complexity. The results are presented in Table 3.
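Why MobileNetV3 leaves less room for pruning can be seen from the parameter count of its depthwise separable convolutions. A standard k × k convolution has k²·C_in·C_out weights, whereas a depthwise separable one has k²·C_in (depthwise) plus C_in·C_out (pointwise 1 × 1) weights. The sketch below compares the two for an illustrative 128-channel, 3 × 3 layer with biases omitted; the widths and function names are assumptions, not values taken from MobileNetV3.

```python
def standard_conv(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out


def depthwise_separable(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights of a k x k depthwise conv (one filter per input channel) + 1x1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


std = standard_conv(128, 128)
sep = depthwise_separable(128, 128)
print(std, sep, f"ratio: {sep / std:.3f}")
```

Here the separable layer needs about 12% of the standard layer's weights (roughly 1/C_out + 1/k²), so much of the redundancy that pruning exploits in VGG-16 has already been designed out.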

As shown in Table 3, SACP achieves a test accuracy of 92.03% with only 3.69 million parameters on CIFAR-10, exceeding DP-Net (90.97%) and closely approaching the baseline MobileNetV3-Large model while using 12.4% fewer parameters. On ImageNet, SACP attains 74.02% top-1 accuracy with 4.13 million parameters, a 20.4% parameter reduction. Notably, it outperforms both the manually designed MobileNetV3-Large 0.75 variant and the widely adopted MobileNetV2 baseline, demonstrating that SACP remains effective even on highly optimized lightweight architectures.

4.3. Performance of YOLOv8-SCFF

4.3.1. Experiments on the COCO Dataset

To further assess the effectiveness of our approach, we evaluated SACP on the YOLOv8 object detection framework. Experiments were conducted on the COCO 2017 dataset, a large-scale benchmark widely used for object detection. Performance was quantified using mean Average Precision (mAP), specifically mAP@0.5:0.95, which averages detection accuracy over IoU thresholds from 0.50 to 0.95 in steps of 0.05. We compared our proposed models, YOLOv8m-SCFF and its pruned version YOLOv8m-SCFF-SACP, with other state-of-the-art (SOTA) detection algorithms. All models were trained using the SGD optimizer with a mini-batch size of 32 and an initial learning rate of 0.01. The experimental results are summarized in Table 4. As shown in Table 4, YOLOv8m-SCFF achieves an mAP@0.5:0.95 of 50.9%, outperforming the baseline YOLOv8m with a comparable parameter count. After SACP is applied, the model attains an mAP of 50.4% with substantially fewer parameters, demonstrating an effective balance between accuracy and model efficiency.
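mAP@0.5:0.95 is the mean of the AP values obtained at ten IoU thresholds (0.50, 0.55, ..., 0.95). The simplified sketch below computes IoU for axis-aligned boxes and averages an AP function over those thresholds. It assumes a hypothetical `ap_at_threshold` callable; the official COCO evaluator additionally performs per-threshold matching of predictions to ground truth and 101-point interpolation of the precision–recall curve, both omitted here.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# COCO thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid floating-point drift)
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_at_threshold):
    """Mean AP over the ten COCO IoU thresholds; ap_at_threshold is hypothetical."""
    return sum(ap_at_threshold(t) for t in COCO_IOU_THRESHOLDS) / len(COCO_IOU_THRESHOLDS)


# Toy case: one prediction and one ground truth, so AP is 1 if matched at threshold t, else 0
gt, pred = (0, 0, 10, 10), (1, 1, 11, 11)
overlap = iou(gt, pred)  # 81 / 119
print(round(overlap, 3), map_50_95(lambda t: 1.0 if overlap >= t else 0.0))
```

With an IoU of about 0.68, the detection counts as correct only at the four thresholds up to 0.65, so it contributes 0.4 to mAP@0.5:0.95 even though it would score perfectly under mAP@0.5. This is why mAP@0.5:0.95 is the stricter localization metric.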

4.3.2. Experiments on Safety Helmet Dataset

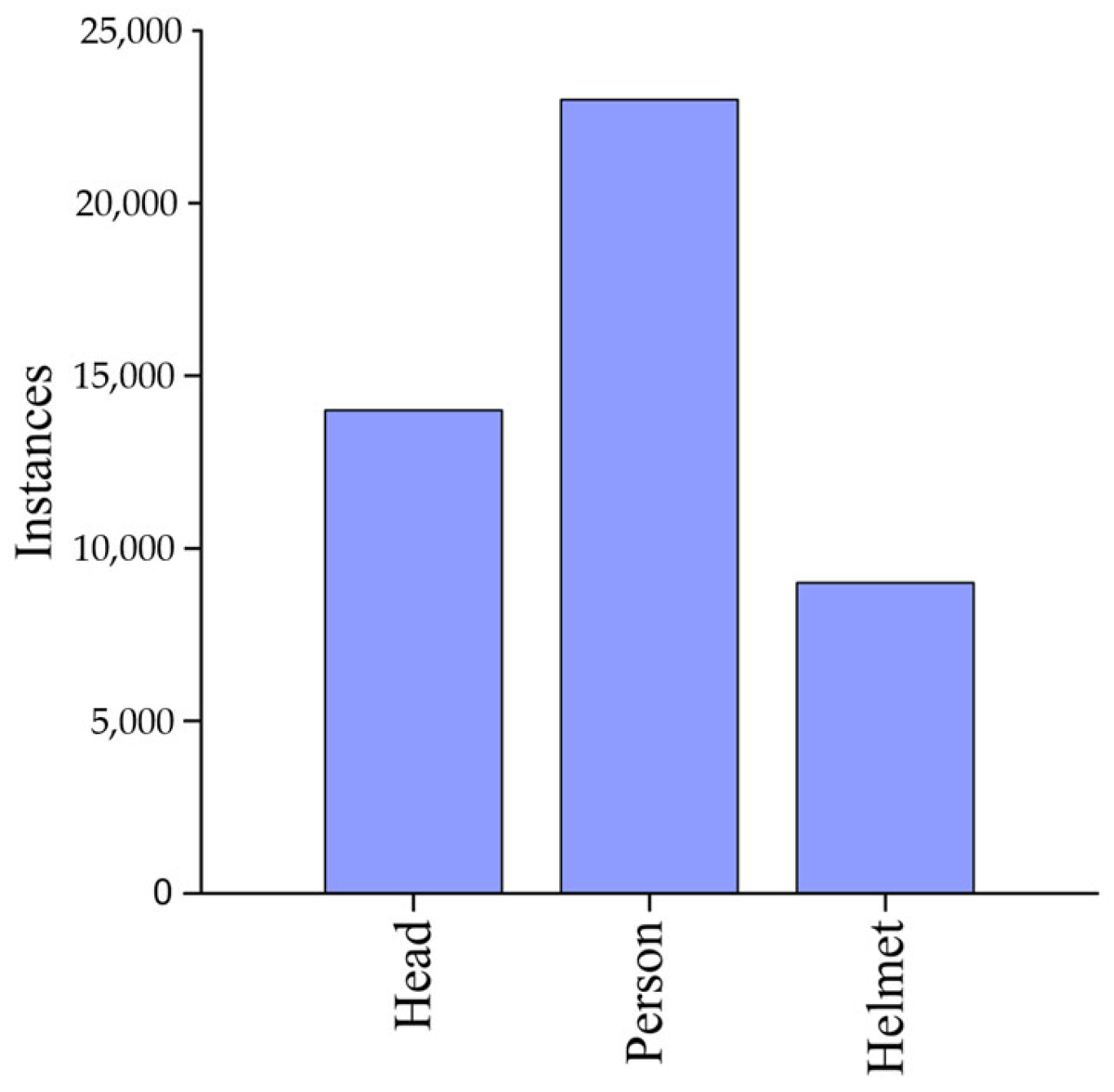

We conducted a series of experiments to evaluate the effectiveness of our proposed method on the safety helmet dataset, which comprises images of helmet usage in various real-world scenarios. The number of instances of each class in the dataset is shown in Figure 6.

In practical deployment, monitoring must run in real time while multiple video streams are processed efficiently. We therefore prioritized reducing the model's parameter count to improve inference efficiency. Detection performance was evaluated using mAP, and the experimental results are summarized in Table 5.

As shown in Table 5, the proposed YOLOv8m-SCFF-SACP model maintains high detection accuracy while achieving a favorable balance between parameter efficiency and inference speed. With an mAP of 96.0% and 22.6 million parameters, it outperforms both YOLOv5m (94.7% mAP with 20.9 million parameters) and YOLOv8m (95.5% mAP with 24.5 million parameters). That our compressed model outperforms the original architectures on the industrial safety helmet dataset indicates that those architectures contain redundant parameters for this application, leaving substantial room for structural optimization. These results show that SACP effectively reduces model size while retaining high precision by eliminating such redundancy. Furthermore, the lower latency of the compressed model underscores its suitability for real-time applications.
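The mAP values in Table 5 are means of per-class AP at a fixed IoU threshold. As a minimal sketch of how one class's AP is obtained, the code below ranks detections by confidence, traces the precision–recall curve, takes the monotone precision envelope, and sums precision over recall increments (all-point interpolation, as in PASCAL VOC 2010 and later). It assumes detections have already been matched to ground truth (e.g., at IoU ≥ 0.5); detection frameworks may sample the curve differently, so exact values can differ slightly. The function name is hypothetical.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at a fixed IoU threshold.

    scores: confidence of each detection.
    is_tp:  whether each detection matched a previously unmatched ground truth.
    num_gt: number of ground-truth objects of this class.
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for i in order:  # walk down the ranked list, one detection at a time
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    # Area under the step curve: precision times each recall increment
    return sum((recalls[j] - recalls[j - 1]) * precisions[j] for j in range(1, len(recalls)))


ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4)
print(ap)
```

In the toy example, the second-ranked detection is a false positive; the envelope lets the later, more precise points compensate, giving AP = 0.25·1.0 + 0.5·0.75 = 0.625. mAP is then the mean of such values over the dataset's classes.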

We systematically evaluated the proposed spatial feature fusion (SFF) and channel feature fusion (CFF) modules integrated into the YOLOv8 framework. As summarized in Table 6, on the safety helmet dataset, incorporating the SFF module into the baseline YOLOv8m model improves mAP@0.5 from 95.5% to 95.8%, while integrating the CFF module yields a larger gain, reaching 96.1% mAP@0.5. Although both fusion mechanisms enhance feature representation, the stronger performance of the CFF module suggests that modeling channel-wise dependencies is particularly beneficial for detecting safety helmets and related objects.
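This section does not specify the internal design of the CFF module. To illustrate what modeling channel-wise dependencies typically involves, the sketch below implements a Squeeze-and-Excitation (SE) style reweighting, the same attention family used in MobileNetV3: global average pooling summarizes each channel, two small fully connected layers produce one gate per channel, and each channel is scaled by its gate. It is a stand-in for the general technique under stated assumptions (plain Python lists, toy hand-set weights, no biases; `se_reweight` is a hypothetical name), not the authors' CFF implementation.

```python
import math


def se_reweight(feature_maps, w_squeeze, w_excite):
    """SE-style channel reweighting (illustrative stand-in, not the CFF module).

    feature_maps: C channels, each a flat list of spatial activations.
    w_squeeze:    (C // r) rows of C weights (reduction FC layer).
    w_excite:     C rows of (C // r) weights (expansion FC layer).
    """
    # Squeeze: global average pooling gives one descriptor per channel
    z = [sum(ch) / len(ch) for ch in feature_maps]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one gate in (0, 1) per channel
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w_squeeze]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_excite]
    # Scale: multiply every activation of a channel by that channel's gate
    scaled = [[g * v for v in ch] for g, ch in zip(gates, feature_maps)]
    return scaled, gates


feats = [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0], [2.0, 2.0, 2.0, 2.0]]
scaled, gates = se_reweight(feats, w_squeeze=[[1.0, 0.0, 1.0]],
                            w_excite=[[1.0], [-1.0], [0.5]])
print([round(g, 3) for g in gates])
```

The gates depend on all channels jointly through the shared hidden layer, which is what lets such a module emphasize informative channels and suppress others.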

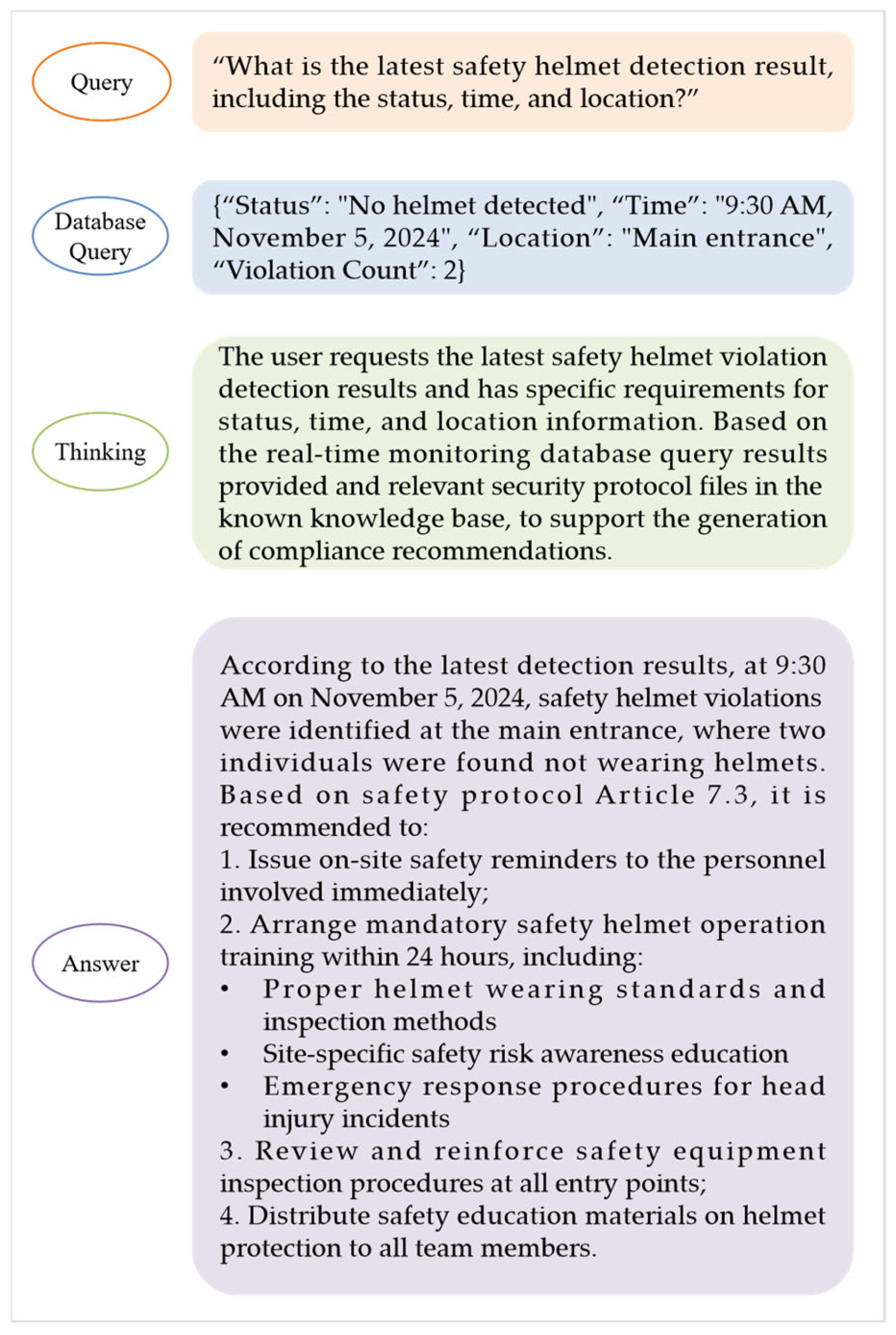

4.4. Proposed RAG and AI Agent in Practice

In our practical deployment, the proposed LLM-powered decision-support framework with RAG and an AI agent was evaluated in a real-world manufacturing environment to assess its operational effectiveness and its impact on safety management. The system processed live video streams with the optimized YOLOv8-based detection model and used the AI agent to coordinate real-time violation events with retrieved contextual knowledge. We observed that the framework effectively translated raw detection alerts into actionable insights, including the identification of violations, suggested corrective measures, and relevant operational training guidelines. Qwen3-14B [45] was used for SQL generation, enabling fluent natural-language-to-query conversion so that safety officers could retrieve structured violation records intuitively, while Qwen3-32B [45] was used in the reasoning phase to synthesize multi-source data robustly. The RAG component, implemented with Milvus [46] and Qwen3-Embedding-4B [47] embeddings, provided high-recall retrieval from safety guidelines and historical cases, grounding model responses in verified knowledge. We conducted experiments on our safety compliance knowledge base (e.g., helmet detection violations and operational safety protocols) and measured retrieval effectiveness for different numbers of retrieved passages (top-k). The results are presented in Table 7.

Table 7 shows that as k increases from 5 to 20, recall improves significantly from 0.200 to 0.600, indicating that the system retrieves relevant safety guidelines and historical cases more comprehensively. Precision decreases from 0.800 to 0.600 as more passages are retrieved; this trade-off is acceptable for safety-critical applications, where high recall is prioritized so that no critical safety information is overlooked. These results confirm that our RAG implementation provides balanced and effective retrieval performance, helping ensure that the AI agent's responses are supported by authoritative knowledge sources.
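Precision@k and recall@k, as reported in Table 7, can be computed as below. The toy ranking is hypothetical and constructed so that its values at k = 5 and k = 20 match the endpoints of Table 7; read as single-query figures, those endpoints are consistent with a query that has 20 relevant passages, of which 4 appear in the top 5 and 12 in the top 20. The actual evaluation may average over many queries, so this is an arithmetic illustration, not a reconstruction of the experiment.

```python
def precision_recall_at_k(ranked_ids, relevant_ids, k):
    """Precision@k = hits / k; recall@k = hits / number of relevant passages."""
    hits = sum(1 for pid in ranked_ids[:k] if pid in relevant_ids)
    return hits / k, hits / len(relevant_ids)


relevant = {f"rel-{i}" for i in range(20)}               # 20 relevant passages (assumed)
ranking = ([f"rel-{i}" for i in range(4)] + ["irr-0"]     # top 5: 4 relevant, 1 not
           + [f"rel-{i}" for i in range(4, 12)]          # ranks 6-20: 8 relevant...
           + [f"irr-{i}" for i in range(1, 8)])          # ...and 7 irrelevant

for k in (5, 20):
    p, r = precision_recall_at_k(ranking, relevant, k)
    print(f"k={k}: precision={p:.3f}, recall={r:.3f}")
```

Recall can only grow with k, while precision falls whenever the newly added passages contain a smaller fraction of relevant items than those already retrieved, which is the trade-off described above.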

This framework directly addresses the significant challenge managers face in extracting target information from large and complex monitoring datasets. The intelligent question-answering system, which combines the proposed RAG, LLM, and AI agent architecture, enables real-time extraction of detailed violation data from the helmet detection system, such as specific times, locations, and contextual monitoring information. By dynamically integrating these real-time events with retrieved knowledge, the system provides management with rapid, accurate judgment criteria and data-driven decision support, transforming raw alerts into operational insights. The workflow of these components is illustrated in Figure 7, which presents the architecture of the real-time helmet detection Q&A system based on RAG and the agent.
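The question-answering flow described above can be sketched as follows. This is a minimal, runnable illustration under loud assumptions: the violation table schema, the toy records, the placeholder guideline passages, and the helpers `generate_sql`, `retrieve_guidelines`, and `answer` are all hypothetical. The LLM and vector-database calls (Qwen3-14B, Milvus, and Qwen3-32B in the deployed system) are replaced by fixed stubs so that only the orchestration logic is shown: a structured lookup and an unstructured retrieval both feed one reasoning step. Python's built-in `sqlite3` module stands in for the production database.

```python
import sqlite3

# Hypothetical schema and toy rows for violation events written by the detector
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE violations (ts TEXT, camera TEXT, zone TEXT, label TEXT, conf REAL)")
conn.executemany("INSERT INTO violations VALUES (?, ?, ?, ?, ?)", [
    ("2025-01-06 08:15:02", "cam-03", "welding bay", "no_helmet", 0.91),
    ("2025-01-06 09:40:27", "cam-03", "welding bay", "no_helmet", 0.88),
    ("2025-01-06 10:05:11", "cam-07", "loading dock", "no_helmet", 0.95),
])


def generate_sql(question: str) -> str:
    """Stub for the text-to-SQL LLM call; returns a fixed query for illustration."""
    return ("SELECT zone, COUNT(*) FROM violations WHERE label = 'no_helmet' "
            "GROUP BY zone ORDER BY COUNT(*) DESC")


def retrieve_guidelines(question: str, k: int = 5) -> list:
    """Stub for top-k vector search over the safety knowledge base."""
    knowledge_base = ["<placeholder guideline passage A>", "<placeholder guideline passage B>"]
    return knowledge_base[:k]


def answer(question: str) -> dict:
    rows = conn.execute(generate_sql(question)).fetchall()  # structured evidence
    passages = retrieve_guidelines(question)                 # unstructured evidence
    # A reasoning LLM would synthesize both sources; here we only assemble its prompt
    prompt = f"Question: {question}\nRecords: {rows}\nGuidelines: {passages}"
    return {"rows": rows, "passages": passages, "prompt": prompt}


result = answer("Which zone had the most helmet violations today?")
print(result["rows"])
```

In a real deployment, model-generated SQL should be validated (for example, restricted to read-only SELECT statements) before execution.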

Compared with conventional safety monitoring systems that combine computer vision with rigid rule-based reasoning, our framework offers several advances through its integrated design. The SACP method enables efficient compression for edge deployment while maintaining real-time performance. The SFF and CFF mechanisms enhance multi-scale detection in complex industrial scenarios. Most significantly, the LLM-powered framework with RAG can adapt to new regulations once they are added to the knowledge base, without retraining models or rewriting hand-coded rules, a substantial improvement over traditional systems achieved through synergistic visual–linguistic collaboration.

Together, these technical innovations yield a system capable of sophisticated data processing, enabling real-time monitoring, identification of potential safety hazards, and prompt preventive action. It improves safety management efficiency through automated violation detection and intelligent alert prioritization, and it speeds up responses to violations through rapid incident analysis. By shifting from reactive monitoring to proactive risk prevention, this approach supports workplace safety compliance and employee protection.

5. Conclusions and Future Work

This paper presents a structured pruning methodology based on feature similarity, termed Similarity-Aware Channel Pruning (SACP), which achieves efficient and automated channel-level compression by identifying redundant channels through similarity measurement and suppressing them via L2 norm regularization in the loss function. Through iterative fine-tuning, redundant channels are driven toward zero activation before one-shot removal, maintaining performance while significantly reducing model complexity. To improve multi-scale object detection in complex industrial settings, we introduced spatial feature fusion (SFF) and channel feature fusion (CFF) mechanisms, which were integrated into the backbone and neck of YOLOv8, respectively, to enhance feature representation and object discrimination across varying sizes and distances. Furthermore, we developed an LLM-powered assistant framework incorporating Retrieval-Augmented Generation (RAG) and an agent-based workflow, which enables real-time mining and interpretation of violation data from monitoring streams, supports natural language querying, and provides actionable insights for safety supervision and operational management. Extensive evaluations on multiple benchmark datasets and a real-world safety helmet dataset validate the efficiency, accuracy, and industrial applicability of the proposed system.
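The SACP procedure summarized above can be illustrated with a minimal sketch. Several details are assumptions not specified in this section: channel similarity is measured as the cosine similarity between flattened per-channel feature responses collected on a calibration batch, channels are scanned greedily in index order, and the L2 penalty is applied to each redundant channel's weights. The threshold of 0.8 and λ = 0.001 match the experimental settings; the function names are hypothetical. In a real network these operations would act on tensors, and the penalty would be added to the task loss during fine-tuning before the near-zero channels are removed in one shot.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def find_redundant(channel_features, threshold=0.8):
    """Greedy pass: a channel is redundant if it is too similar to an already kept channel."""
    kept, redundant = [], []
    for idx, feat in enumerate(channel_features):
        if any(cosine(feat, channel_features[j]) > threshold for j in kept):
            redundant.append(idx)
        else:
            kept.append(idx)
    return kept, redundant


def similarity_penalty(channel_weights, redundant, lam=1e-3):
    """L2 penalty on redundant channels' weights, to be added to the task loss."""
    return lam * sum(w * w for idx in redundant for w in channel_weights[idx])


features = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.1], [3.0, -1.0, 0.0]]  # toy per-channel responses
weights = [[0.5, -0.5], [0.2, 0.1], [1.0, 1.0]]                  # toy per-channel weights
kept, redundant = find_redundant(features)
penalty = similarity_penalty(weights, redundant)
print(kept, redundant, penalty)
```

Channel 1 is almost a scaled copy of channel 0 (cosine similarity above 0.999), so it is flagged and penalized, whereas channel 2 is nearly orthogonal to channel 0 and is kept.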

In future work, we plan to extend the compression framework to more advanced detection architectures and to explore task-aware pruning criteria for better accuracy–efficiency trade-offs. The current pruning strategy may have limited adaptability to transformer-based detection architectures; this is a limitation of the present study that warrants further investigation. We also aim to incorporate a broader set of regulatory documents and operational guidelines into the RAG-enhanced LLM system to support more comprehensive and compliant decision-making. Additional efforts will focus on optimizing cross-platform deployment and improving system adaptability in dynamic industrial environments. Finally, to address practical considerations for industrial deployment, future validation will include specific metrics that quantify real-time performance constraints: end-to-end latency (the inference delay from image input to decision output), throughput (frames processed per second under typical workloads), and memory footprint (both GPU and CPU memory consumption during continuous operation). Establishing these quantitative benchmarks will provide a comprehensive framework for validating the system's readiness for real-world deployment.
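The latency and throughput metrics listed above could be collected with a harness like the one below. It is a sketch under assumptions: `pipeline` is a hypothetical callable covering the full path from one frame to one decision, frames are processed sequentially, and warm-up runs are excluded from timing. For GPU inference with PyTorch, `torch.cuda.synchronize()` should be called after each pipeline call and before reading the timer so that asynchronous kernels are included, and `torch.cuda.max_memory_allocated()` reports peak GPU memory; those calls are omitted here to keep the example dependency-free.

```python
import statistics
import time


def benchmark(pipeline, frames, warmup=5):
    """Per-frame end-to-end latency and overall throughput of `pipeline` (hypothetical)."""
    for frame in frames[:warmup]:
        pipeline(frame)  # warm-up runs are excluded from timing
    latencies = []
    start = time.perf_counter()
    for frame in frames:
        t0 = time.perf_counter()
        pipeline(frame)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    latencies.sort()
    return {
        "mean_ms": 1000 * statistics.mean(latencies),
        # Approximate 95th percentile by index into the sorted latencies
        "p95_ms": 1000 * latencies[int(0.95 * (len(latencies) - 1))],
        "fps": len(frames) / elapsed,
    }


stats = benchmark(lambda frame: sum(frame), [list(range(1000))] * 50)
print(stats)
```

Sequential timing like this measures single-stream latency; throughput with batching or several concurrent video streams would need a separate measurement.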