1. Introduction

Multi-target tracking (MTT) relies on prior information and sensor measurements to estimate the number and states of targets [1] in a monitored region. It has found widespread application in domains including radar monitoring [2], indoor localization [3], biomedical analysis [4], automated fault detection and diagnosis (AFDD) [5], and autonomous driving [6]. Traditional methods fall into two main types. Multiple Hypothesis Tracking (MHT) [7] achieves high-precision multi-target tracking by systematically exploring all association hypotheses between targets and observations. Joint Probabilistic Data Association (JPDA) [8] computes the association probabilities between measurements and targets and fuses the information from different measurements into the target state estimate in a weighted manner. However, in complex tracking environments, traditional multi-target tracking methods often suffer from reduced tracking accuracy and increased computational burden, largely due to factors such as time-varying target numbers and clutter. To meet the demand for precise tracking, random finite set (RFS) theory [9] has been increasingly adopted in multi-target tracking. RFS-based methods model both target states and measurements as random finite sets, which yields good state estimation performance while avoiding complex data association procedures. The Probability Hypothesis Density (PHD) filter [10] is the simplest multi-target RFS filter; the Cardinalized Probability Hypothesis Density (CPHD) filter [11] extends the PHD filter by jointly propagating the PHD and the cardinality distribution. The Multi-target Multi-Bernoulli (MeMBer) filter [9] is another RFS-based approach, which models the multi-target density as a multi-Bernoulli RFS. However, the MeMBer filter tends to overestimate the number of targets, a bias that the Cardinality Balanced MeMBer (CBMeMBer) filter proposed in [12] effectively mitigates. Furthermore, by incorporating labeled RFSs, the generalized labeled multi-Bernoulli (GLMB) filter [13] can jointly estimate target states and trajectories; its simplified version is the labeled multi-Bernoulli (LMB) filter [14].

Unlike filtering algorithms, which use only the current measurements to update the predicted state, smoothing algorithms refine past state estimates using the measurements received after those times. This improves tracking accuracy in scenarios where strict real-time output is not essential. Multi-target Bayesian smoothers are often computationally intractable, so researchers have proposed approximate implementations such as the PHD smoother [15], the CPHD smoother [16], and the MeMBer smoother [17]. Nevertheless, these methods do not explicitly label trajectories, which makes it difficult to associate target states with individual identities. A closed-form expression for the labeled RFS smoother was first derived in [18], but the resulting GLMB smoother is computationally demanding because of its highly complex data association structure. A multi-scan GLMB filter aimed at better state estimation is introduced in [19]; however, its implementation requires solving an NP-hard assignment problem, which incurs high computational costs even with Gibbs sampling. A computationally efficient LMB smoother, whose complexity is linear in the number of targets, is proposed in [20]. A partially smoothed GLMB tracker is proposed in [21], which applies Rauch–Tung–Striebel (RTS) smoothing to the trajectories estimated at each GLMB filtering step. A one-time-step lagged GLMB smoother is further proposed in [22], which achieves better cardinality estimation than LMB filters under the same lag interval.

As monitoring scenarios become more complex, multi-sensor (MS) data fusion becomes essential to mitigate single-sensor (SS) limitations (e.g., restricted field of view, sparse measurements, and instability [23]) and to improve the robustness and precision of multi-target estimation. Multi-sensor fusion systems are categorized as centralized or distributed. Compared with distributed fusion, centralized fusion minimizes information loss and can achieve higher accuracy; in theory, it can attain optimal performance by fully exploiting the available data. In multi-sensor scenarios, the primary challenge lies in reliably associating the data from different sensors. Existing centralized MS RFS filters include the MS-PHD, MS-CPHD, and MS-MeMBer filters. For both the MS-PHD and MS-CPHD filters, evaluating the likelihood of every measurement partition subset against the predicted density during the update is computationally infeasible. Therefore, a greedy partitioning strategy [24] is employed to focus on the measurement partition subsets most relevant to each predicted density. The MS-MeMBer filter proposed in [25] reduces computational complexity by updating each predicted Bernoulli component only with its associated multi-sensor subsets, although its complexity still stems mainly from partitioning the multi-sensor measurements. The GLMB filter (SS-GLMB) can also be applied to multi-sensor scenarios, where it is referred to as the MS-GLMB filter. Notably, the MS-GLMB implementation proposed in [26] has quadratic complexity in the number of targets and linear complexity in the number of measurements.

Nearly all existing RFS smoothers are designed for single-sensor scenarios. This is because, in centralized multi-sensor systems, jointly processing the historical measurements of all sensors leads to exponential growth in computation as the number of sensors and the length of the smoothing window increase. An MS-multi-scan GLMB filter is proposed in [27], which smooths target states from initialization to the current time using the current measurements. However, this approach requires solving large-scale NP-hard multidimensional assignment problems. Although the MS-multi-scan GLMB filter improves estimation accuracy, this cost compromises its ability to smooth while filtering in practical multi-target tracking scenarios.

Here, we first extend the multi-target forward–backward smoothing method to multi-sensor scenarios and propose a centralized multi-sensor generalized labeled multi-Bernoulli smoother (MS-GLMB-S) to improve the accuracy of multi-target state estimation. The implementation of MS-GLMB-S has quadratic complexity in the number of targets and linear complexity in both the number of measurements and the lag interval length. Compared with SS-GLMB-S, MS-GLMB-S not only accommodates multi-sensor scenarios but also extends the one-time-step lag to a multi-time-step lag during smoothing. Relative to MS-GLMB, MS-GLMB-S performs a lagged update on the GLMB filtering density generated by MS-GLMB and converts this filtering density into a GLMB smoothing density. The Bayesian forward–backward smoothing framework in MS-GLMB-S also differs fundamentally from “multi-scan” smoothing frameworks: the MS-multi-scan GLMB filter predicts and updates a multi-scan GLMB density, which amounts to using the measurements at the current time to update the multi-target trajectories from the initial time to the current time. In contrast, MS-GLMB-S uses the “multi-scan” measurements within a lag interval to update a standard GLMB density. The main contributions of this paper are as follows:

- (1) We propose a multi-sensor GLMB smoother based on the standard Bayesian forward–backward smoothing framework. In the forward filtering part, the centralized MS-GLMB filter computes the filtering posterior density. In the backward smoothing part, the weights of the GLMB components are adjusted based on the filtering posterior density and the multi-sensor measurements within a lag interval. We derive expressions for the simplified multi-sensor backward corrector and the multi-target smoothing density.

- (2) We present a computationally practical implementation of MS-GLMB-S using Gibbs sampling. By deriving a time-decoupled form of the smoothing weight, we propose a suboptimal Gibbs sampler for smoothing that allows different sensors to be sampled independently at different time steps within the lag interval. This approach efficiently associates multi-sensor measurements and generates high-weight GLMB components during smoothing.

- (3) We detail the calculation of the smoothing parameters under the linear Gaussian mixture model and show that MS-GLMB-S is also applicable to nonlinear scenarios via the Extended Kalman Filter (EKF). Simulation experiments in both linear and nonlinear scenarios demonstrate the effectiveness and real-time performance of MS-GLMB-S in comparison with SS-GLMB, MS-GLMB, and SS-GLMB-S.

The remainder of this paper is structured as follows: Section 2 presents an overview of RFS-based multi-sensor multi-target tracking. Section 3 introduces the closed-form expression of the multi-sensor backward corrector and the recursion of the proposed MS-GLMB-S. Section 4 details the efficient implementation of MS-GLMB-S. Simulation results and discussion are presented in Section 5, while a summary of this paper is provided in Section 6.

3. Proposed Centralized MS-GLMB-S

The proposed centralized MS-GLMB smoother is based on the Bayesian forward–backward framework. The filtering posterior density is obtained via centralized fusion of all sensor measurements at time . Subsequently, the posterior density at time is updated using each sensor’s stored measurements from time to , resulting in the smoothing density at time . For notational convenience, we use to denote the lag interval , i.e., , where is the lag interval length.

This section outlines the backward recursion of the proposed smoother. In Section 3.1, we derive the multi-sensor backward corrector, and in Section 3.2, we present the calculation of the multi-target smoothing density.

3.1. Multi-Sensor Backward Corrector

The exact expression of the backward corrector is given in [18], but it is difficult to compute. To simplify computation, we neglect target birth and death during the backward process [30], and the multi-sensor backward corrector is formulated as

where

is the joint association map of sensor

from time

to time

,

denotes the set of all

, and

where

is the single target transition density, and the expression for

is presented in (6). Suppose that

, then (16) becomes

where

, and

Because target birth and death are ignored in the backward smoothing process, the label space remains constant (i.e.,

), thereby simplifying the computation of the backward corrector. We then define the key abbreviated notations as follows,

Assuming conditional independence between sensors, the multi-sensor backward corrector is formulated as

More specifically, within the smoothing lag interval, the processes of measurement data generation and target detection for each sensor do not interfere with one another; thus, their backward smoothing processes are also independent, i.e., Equation (19) holds.
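As a minimal sketch of the factorization in (19), assume that each sensor's backward corrector for a given GLMB component has already been evaluated as a positive number (the values below are hypothetical). The multi-sensor corrector is then simply their product; the hypothetical helper below accumulates it in the log domain to avoid numerical underflow when many sensors or lag steps are involved.

```python
import math

def multi_sensor_log_corrector(per_sensor_correctors):
    """Log of the multi-sensor backward corrector for one GLMB component.

    per_sensor_correctors: hypothetical, already-evaluated single-sensor
    backward correctors (positive numbers) over the same lag interval and
    label set. Under conditional independence between sensors, the
    multi-sensor corrector is their product, so their logs are summed.
    """
    return math.fsum(math.log(b) for b in per_sensor_correctors)

# Hypothetical correctors of one component for three sensors.
log_beta = multi_sensor_log_corrector([0.8, 1.5, 0.9])
print(round(math.exp(log_beta), 6))  # 1.08
```

Working with logs keeps the product numerically stable, and the result can be exponentiated only when weights are finally normalized.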

3.2. MS-GLMB-S Recursion

In this subsection, we formulate the multi-target smoothing density using the multi-sensor backward corrector and the multi-target posterior density. According to Proposition 2 in [30], if the multi-target posterior density is a GLMB in the single-sensor case, the smoothing density is also a GLMB. Because the multi-sensor backward corrector has the same structure as its single-sensor counterpart, it follows that if the filtering posterior density in a multi-sensor scenario is a GLMB distribution,

then the multi-target smoothing density is also a GLMB distribution

where

Given the smoothing weight

from time

to time

, the smoothing weight from time

to time

is

where

As can be observed from (25), we have decoupled the expression for the smoothing weights across time, where the influence of each time step within the lag interval on the smoothing weight can be expressed via the scaling factor

. Next, we prove (25): starting with (16), we rearrange it as

Similarly, for multi-sensor scenarios, we have

Given the smoothing weight

from time

to

, the weight at time

t is derived via (22) as

Substituting (24) into (30), we have

In (29), we perform temporal decoupling on

, and substituting it into (31) yields

where

coincides with

in (25), thereby validating its correctness.

We illustrate the time-decoupled calculation of the smoothing weights in Table 1: when the lag interval length is 0, the smoothing weight is equal to the filtering weight

; when the lag interval length is 1, the smoothing weight is the filtering weight multiplied by the scaling factor

; when the lag interval length is 2, the smoothing weight is

multiplied by the scaling factor

(the calculation of the scaling factor is given by (26) and (27)), and so on.
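The recursion summarized in Table 1 can be sketched in a few lines, assuming the scaling factors given by (26) and (27) have already been computed for every GLMB component and lag step (the numbers in the example are hypothetical). Each additional lag step multiplies the current weights by that step's factors, and normalizing yields the smoothing weights; the components themselves are never modified.

```python
def normalize(weights):
    """Scale nonnegative weights so that they sum to one."""
    total = sum(weights)
    return [w / total for w in weights]

def smoothing_weights(filter_weights, scaling_factors):
    """Time-decoupled smoothing weights for lag lengths 0, 1, ..., L.

    filter_weights[h]: filtering weight of GLMB component h.
    scaling_factors[j][h]: hypothetical precomputed scaling factor of
        component h for lag step j + 1.
    Returns a list whose entry l holds the normalized weights for lag length l.
    """
    history = [normalize(filter_weights)]  # lag length 0: filtering weights
    current = list(filter_weights)
    for eta in scaling_factors:
        # Each additional lag step rescales the previous (unnormalized) weights.
        current = [w * e for w, e in zip(current, eta)]
        history.append(normalize(current))
    return history

weights = smoothing_weights([0.6, 0.4], [[1.0, 2.0], [0.5, 1.0]])
print([round(w, 3) for w in weights[2]])  # [0.273, 0.727]
```

Because the factors enter multiplicatively, extending the lag by one step only requires the new step's factors, not a recomputation over the whole interval.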

It should be emphasized that each denotes the GLMB component in the forward filtering process, whereas stands for the GLMB smoothing component in the backward smoothing process. The MS-GLMB-S recursion does not change any GLMB components ; instead, it only adjusts their respective weights. Specifically, the smoothing weight is proportional to the posterior weight scaled by , and is recursively computed via (25).

4. MS-GLMB-S Implementation

As illustrated in Equation (21), each GLMB element generates

smoothing components. As the lag interval length increases, the computational complexity grows exponentially. We therefore present a practical implementation of MS-GLMB-S that truncates the smoothing density. Specifically, in Section 4.1, we re-express the joint association map in multidimensional array form. In Section 4.2, we introduce a suboptimal Gibbs sampling approach with a suboptimal stationary distribution to address the NP-hard multidimensional assignment problem. We then detail the linear Gaussian mixture implementation of MS-GLMB-S in Section 4.3.

4.1. MS Extended Association Map

The extended association map defined as can be generalized to MS cases as , where denotes the number of measurements provided by the sensor . Following this framework, we reformulate the smoothing component using an extended association map.

During backward smoothing, the extended association map for the sensor

at time

is denoted as

, where

means track

is associated with the

-th measurement of the sensor

;

means misdetection of

. Since target birth and death are excluded from backward smoothing, the non-existent case

is omitted. The MS extended association map at time

is formulated as

where

In the previous section, we defined the time window

as the smoothing interval, and the MS extended association map over this interval is formulated as

where

Since target birth and death are not considered during the smoothing process, the label set is

. We enumerate

, and the MS extended association map

can be written as a

matrix

where

denotes a sequence of length

. Let

denote all one-to-one MS extended association maps, and

,

will yield

and

from any

. Exploiting the 1–1 correspondence between

and

, the multi-target smoothing density from (21) is rewritten as follows,

According to (25),

can be written as

This yields a temporally decoupled expression for the multi-target smoothing density, which facilitates the subsequent sampling process.
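To make the array form concrete, the sketch below stores a hypothetical MS extended association map as a nested list indexed by label, lag step, and sensor, with 0 for a misdetection and j ≥ 1 for the j-th measurement; there is no non-existence value because birth and death are excluded. It checks the one-to-one condition, namely that no measurement of a given sensor at a given step is assigned to two labels. The indexing layout and function name are assumptions for illustration, not the paper's exact matrix layout.

```python
def is_valid_association(gamma, num_meas):
    """Check a hypothetical MS extended association array for validity.

    gamma[i][t][s]: association of the i-th label at lag step t for sensor s;
        0 = misdetection, j >= 1 = j-th measurement of sensor s at that step.
    num_meas[t][s]: number of measurements of sensor s at lag step t.
    """
    n_labels, n_steps, n_sensors = len(gamma), len(num_meas), len(num_meas[0])
    for t in range(n_steps):
        for s in range(n_sensors):
            used = set()  # measurements of sensor s at step t already assigned
            for i in range(n_labels):
                j = gamma[i][t][s]
                if j < 0 or j > num_meas[t][s]:
                    return False  # index outside {0, ..., M}
                if j > 0:
                    if j in used:
                        return False  # one measurement claimed by two labels
                    used.add(j)
    return True

# Two labels, two lag steps, two sensors (hypothetical measurement counts).
num_meas = [[2, 1], [1, 3]]
ok = [[[1, 0], [1, 2]], [[2, 1], [0, 3]]]
print(is_valid_association(ok, num_meas))  # True
```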

4.2. GLMB Smoothing Component Truncation

In the previous subsection, each smoothing component

is equivalently represented via a multi-sensor extended association map

, with the smoothing density weights defined by (35). To generate significant smoothing components, we sample

from a distribution

approximately proportional to the smoothing weight

. Given that the smoothing weight has a temporally decoupled structure, this distribution is formulated as

where

and

is selected to be roughly proportional to

. We sample from (37), i.e.,

using a Gibbs sampler. Given the current state

, the components

of the next state

follow the conditional sequence distribution

, where

. As the number of iterations tends to infinity, the distribution of the samples converges to the stationary distribution [31].

For

, the distribution is denoted as

and then substituting (37) into (38) yields

Let

, and

, where

. Following Lemma 1 in [27], (39) can be reformulated as

where

Since each in (37) is chosen to approximate , we set to obtain the optimal stationary distribution, where corresponds to the probability of label being associated with measurements at time .

In this case, the conditional

is a categorical distribution with

categories, leading to exponential growth of computational complexity even with a small number of sensors. To address this, we present a computationally efficient suboptimal stationary distribution through an approximation of

, allowing independent sampling of

from their respective distributions on

. Following Definition 2 in [27], we formalize

as

Since each

must approximate

, we set

, where

,

represents the likelihood that label

corresponds to the measurement

of the sensor

. This approximation is reasonable because the sensor measurements are conditionally independent. Substituting (42) into (36) yields the suboptimal stationary distribution.

Let

, then (42) is rewritten as

where

, and

Note that target birth and death are not considered during smoothing, so the case is excluded. The proposed sampling method supports independent sampling of measurements from each sensor at each frame within the lag interval, and exhibits a computational complexity of , where . The associated sampling steps are presented as pseudocode in Algorithm 1.

Algorithm 1: Suboptimal Gibbs
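Because the pseudocode of Algorithm 1 is not reproduced here, the following is only a minimal sketch of a Gibbs sampler in the same spirit, under stated assumptions: the weights `eta` are hypothetical stand-ins for the per-label, per-step, per-sensor factors in (42) and (43), and the misdetection weight (index 0) is assumed positive so that every conditional has nonzero mass. Each coordinate is redrawn from its categorical conditional with measurements already taken by other labels (same sensor, same step) masked out, which keeps every sample one-to-one. Since the constraint couples labels only within one sensor and one step, the draws for different sensors and steps do not interact.

```python
import random

def freeze(gamma):
    """Hashable copy of a nested association array gamma[i][t][s]."""
    return tuple(tuple(tuple(step) for step in label) for label in gamma)

def suboptimal_gibbs(eta, num_iters, rng=None):
    """Sketch of a Gibbs sampler over MS extended association arrays.

    eta[i][t][s]: hypothetical nonnegative weights over {0, 1, ..., M}, where
        index 0 is a misdetection (assumed > 0) and j >= 1 the j-th measurement
        of sensor s at lag step t, for the i-th label.
    Returns the set of distinct (one-to-one) association arrays visited.
    """
    rng = rng or random.Random(0)
    n_labels, n_steps, n_sensors = len(eta), len(eta[0]), len(eta[0][0])
    # The all-misdetection array is always one-to-one, so start there.
    gamma = [[[0] * n_sensors for _ in range(n_steps)] for _ in range(n_labels)]
    samples = {freeze(gamma)}
    for _ in range(num_iters):
        for i in range(n_labels):
            for t in range(n_steps):
                for s in range(n_sensors):
                    # Measurements of sensor s at step t used by other labels.
                    taken = {gamma[k][t][s] for k in range(n_labels) if k != i} - {0}
                    weights = [0.0 if j in taken else w
                               for j, w in enumerate(eta[i][t][s])]
                    # Draw this coordinate from its categorical conditional.
                    gamma[i][t][s] = rng.choices(range(len(weights)), weights=weights)[0]
        samples.add(freeze(gamma))
    return samples

# Two labels, one lag step, one sensor with two measurements (hypothetical).
eta = [[[[0.1, 0.9, 0.1]]], [[[0.1, 0.1, 0.9]]]]
samples = suboptimal_gibbs(eta, 50)
```

Keeping the distinct samples in a set mirrors the idea of using the sampler only to propose high-weight components, whose exact weights are then evaluated separately.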

4.3. Linear Gaussian Mixture (LGM) Implementation

In this subsection, (23) and (24) are calculated under the LGM framework, where the single-target transition density and likelihood take the form:

where

denotes the Gaussian probability density with mean

and covariance

.

The single-target filtering density is a Gaussian mixture.

Note that (23) and (24) are also Gaussian mixtures.

where

Under the LGM model,

in (21) is Gaussian with mean

and covariance

. Then the calculation for

in (51) is derived as

where

We briefly explain the structures of

,

, and

here:

and

are column stacked vectors;

is a diagonal stacked vector:

The computations for

,

are detailed in Section 4 of [29]. Then

and

in (52) can be expressed as

For systems with nonlinear measurement and motion models, it should be noted that the computation of (54) and (56) incorporates and at each time step. Consequently, the Extended Kalman Filter (EKF) is applicable for computing and , as it utilizes the first-order Taylor series to obtain a linear approximation.
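As a minimal sketch of how such a linearization enters the lag-interval computation, the hypothetical function below accumulates, for one Gaussian component and one sensor, the log-likelihood of the measurements within the lag interval through the prediction-error decomposition of a Kalman filter. This is a swapped-in sequential formulation rather than the stacked-vector form used above; in the linear Gaussian case it gives the same joint Gaussian likelihood, and with Jacobians in place of the model matrices it becomes the EKF approximation. Detection-probability and clutter factors are omitted, and all names are assumptions.

```python
import numpy as np

def innovation_logpdf(y, S):
    """Log of N(y; 0, S) for an innovation y with covariance S."""
    _, logdet = np.linalg.slogdet(S)
    return -0.5 * (y @ np.linalg.solve(S, y) + logdet + len(y) * np.log(2.0 * np.pi))

def lag_log_likelihood(m, P, zs, f, F_jac, h, H_jac, Q, R, residual=None):
    """Log-likelihood of one sensor's measurements over the lag interval.

    m, P: mean and covariance of one Gaussian component at the smoothing time.
    zs: measurements at lag steps 1..L; None marks a misdetection.
    f, h: motion and measurement functions; F_jac, H_jac: their Jacobians.
    residual: optional innovation function, e.g. with bearing wrapping.
    """
    residual = residual or (lambda a, b: a - b)
    loglik = 0.0
    for z in zs:
        F = F_jac(m)            # linearize the motion model at the current mean
        m = f(m)
        P = F @ P @ F.T + Q
        if z is None:           # misdetection at this step: prediction only
            continue
        H = H_jac(m)            # linearize the measurement model at the predicted mean
        S = H @ P @ H.T + R
        y = residual(z, h(m))
        loglik += innovation_logpdf(y, S)
        K = P @ H.T @ np.linalg.inv(S)
        m = m + K @ y
        P = (np.eye(len(m)) - K @ H) @ P
    return loglik
```

When several sensors observe the same step, the update can be applied once per sensor in sequence, which is exact in the linear Gaussian case when the sensor noises are independent.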

Algorithm 2 presents the pseudocode for the proposed MS-GLMB-S. The GLMB components are enumerated as , and the filtering posterior density in (20) is expressed as , where , . Similarly, multi-target smoothing density is expressed as , where and is the predetermined maximum number of smoothing components.

Algorithm 2: Pseudo-code for the proposed MS-GLMB-S

5. Simulation Results

In this section, two numerical experiments demonstrate the effectiveness of MS-GLMB-S. The first experiment evaluates its performance in a linear Gaussian scenario, where position sensors track constant-velocity targets. The second experiment demonstrates its adaptability in a nonlinear scenario, where sensors provide range and bearing measurements and targets follow nonlinear trajectories. All experiments are implemented in MATLAB R2022a on a computer with an AMD Ryzen 9 9950X processor (4.30 GHz).

5.1. Linear Gaussian

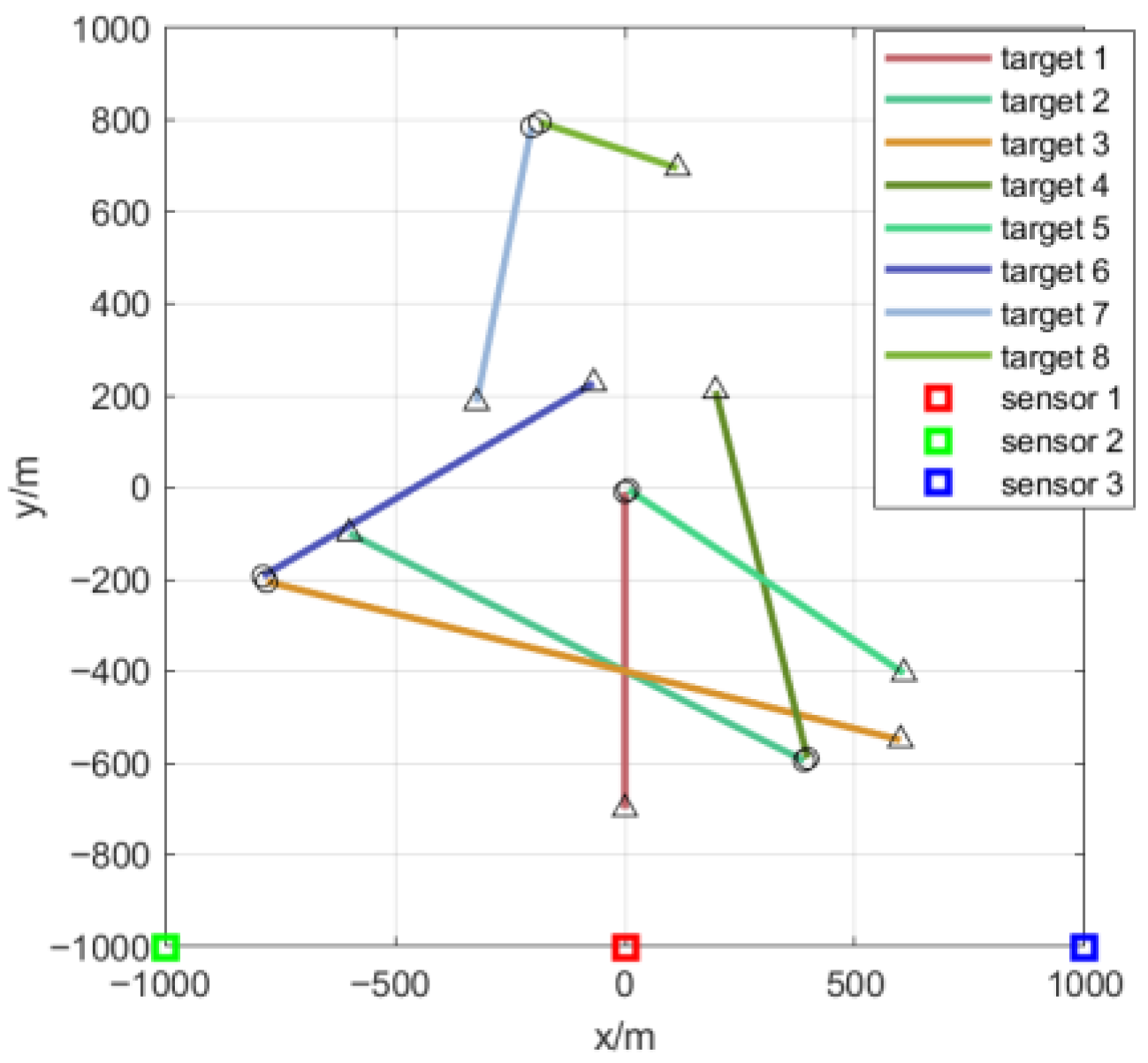

As shown in Figure 1, each target moves at a constant velocity along a straight path. The starting and finishing positions are marked by circles and triangles, respectively, and the locations of the three sensors by squares. In the first scenario, each sensor has a detection range covering the entire area and a sampling rate of 1 Hz, and measures only the target positions.

The target state is denoted as

, where

denotes the position and

denotes the velocity. The measurement from the sensor

is denoted as

.

,

,

, and

are defined as

respectively, where

,

,

,

,

. The target survival probability and detection probability are

and

, while the newborn targets follow an LMB distribution with four components. The clutter follows a Poisson distribution with

.
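Since the numerical parameter values are not reproduced in this text, the sketch below uses hypothetical values (detection probability, noise level, clutter rate, surveillance region) to show how one scan of position-only measurements with Poisson clutter can be generated for each of three sensors under a constant-velocity model. The state ordering [px, vx, py, vy], the sampling period T = 1 s implied by the 1 Hz rate, and the noise-free target propagation are assumptions made for brevity.

```python
import numpy as np

T = 1.0  # sampling period implied by the 1 Hz sampling rate

# Constant-velocity model for the state [px, vx, py, vy] (assumed ordering).
F = np.kron(np.eye(2), np.array([[1.0, T], [0.0, 1.0]]))
H = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.0]])  # position-only measurement matrix

def simulate_scan(states, rng, p_d=0.9, sigma=5.0, clutter_rate=10.0,
                  region=(-1000.0, 1000.0)):
    """One sensor's measurement set for one scan (all values hypothetical)."""
    meas = []
    for x in states:
        if rng.random() < p_d:  # target detected with probability p_d
            meas.append(H @ x + sigma * rng.standard_normal(2))
    for _ in range(rng.poisson(clutter_rate)):  # Poisson number of clutter points
        meas.append(rng.uniform(region[0], region[1], size=2))  # uniform clutter
    return meas

rng = np.random.default_rng(0)
x_next = F @ np.array([0.0, 10.0, 0.0, -5.0])  # noise-free one-step propagation
scans = [simulate_scan([x_next], rng) for _ in range(3)]  # three sensors
```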

Table 2 shows the initial state and duration of each target. Tracking performance is evaluated by the Optimal Sub-pattern Assignment (OSPA) distance [32] and the OSPA⁽²⁾ (OSPA-on-OSPA) distance [33]. The OSPA distance measures the dissimilarity between two finite point sets and combines localization and cardinality errors; a smaller value indicates better multi-target tracking performance, but it does not reflect track label switching errors. Therefore, the OSPA⁽²⁾ distance is also reported: it captures the overall error between the true and estimated trajectory sets across multiple time steps, reflecting the effects of track fragmentation and label switching, and thus evaluates multi-target tracking performance more comprehensively. All subsequent experimental results are averaged over 100 Monte Carlo trials.
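For reference, the OSPA distance between two finite point sets can be sketched as follows. Because the cut-off c and order p used in the experiments are not reproduced in this text, the defaults below are hypothetical, and the brute-force search over assignments is only suitable for small sets (practical implementations use an optimal assignment solver instead).

```python
import itertools
import math

def ospa(X, Y, c=100.0, p=1):
    """OSPA distance between two finite sets of points (lists of tuples).

    c: cut-off distance; p: order. Both defaults are hypothetical choices.
    """
    m, n = len(X), len(Y)
    if m == 0 and n == 0:
        return 0.0
    if m > n:  # OSPA is symmetric; let X be the smaller set
        X, Y, m, n = Y, X, n, m
    if m == 0:
        return float(c)  # pure cardinality error
    best = math.inf
    for perm in itertools.permutations(range(n), m):
        # Localization cost with each pairwise distance capped at c.
        cost = sum(min(c, math.dist(X[i], Y[j])) ** p for i, j in enumerate(perm))
        best = min(best, cost)
    # Unassigned points of the larger set are each penalized by c ** p.
    return ((best + c ** p * (n - m)) / n) ** (1.0 / p)

print(ospa([(0.0, 0.0)], [(3.0, 4.0), (100.0, 100.0)], c=10.0, p=1))  # 7.5
```

In the example, the localization term contributes 5 and the extra estimated point contributes the cut-off 10, averaged over the two points of the larger set.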

We compare the proposed MS-GLMB-S with three existing methods: the GLMB filter (SS-GLMB) [34], the centralized MS-GLMB filter (MS-GLMB), and the GLMB smoother (SS-GLMB-S). Since SS-GLMB and SS-GLMB-S are designed for single-sensor scenarios, we use the measurements from sensor 1 for them. Because the three sensors are of the same type and have identical detection ranges, selecting any one of them does not affect the experimental results. For MS-GLMB and MS-GLMB-S, we use the measurements from all three sensors. Notably, the lag interval length of MS-GLMB-S is also set to 1 in this simulation, matching the one-time-step lag of SS-GLMB-S.

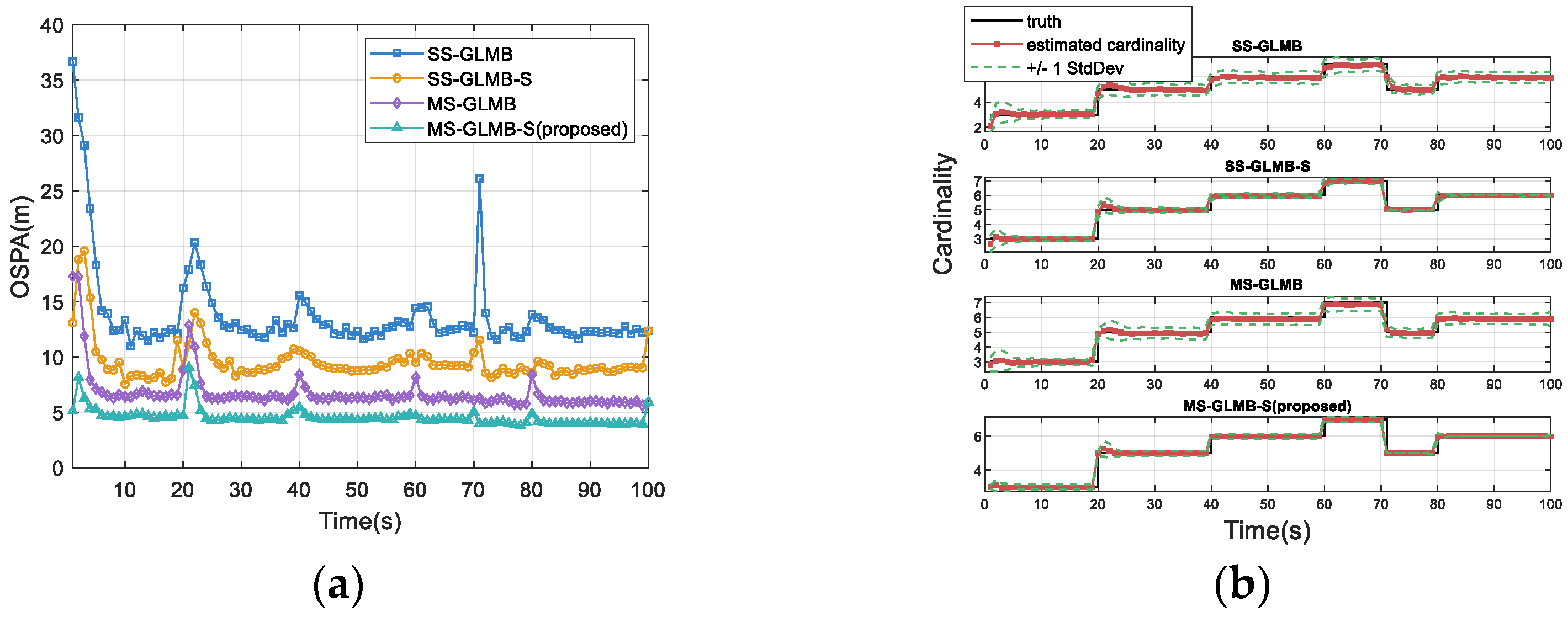

The OSPA distances and cardinality estimates of the four methods are displayed in Figure 2a,b. The proposed MS-GLMB-S clearly outperforms SS-GLMB, SS-GLMB-S, and MS-GLMB. This gain arises because MS-GLMB-S not only uses the measurements from multiple sensors but also uses all measurements within the lag interval to update the previous target states.

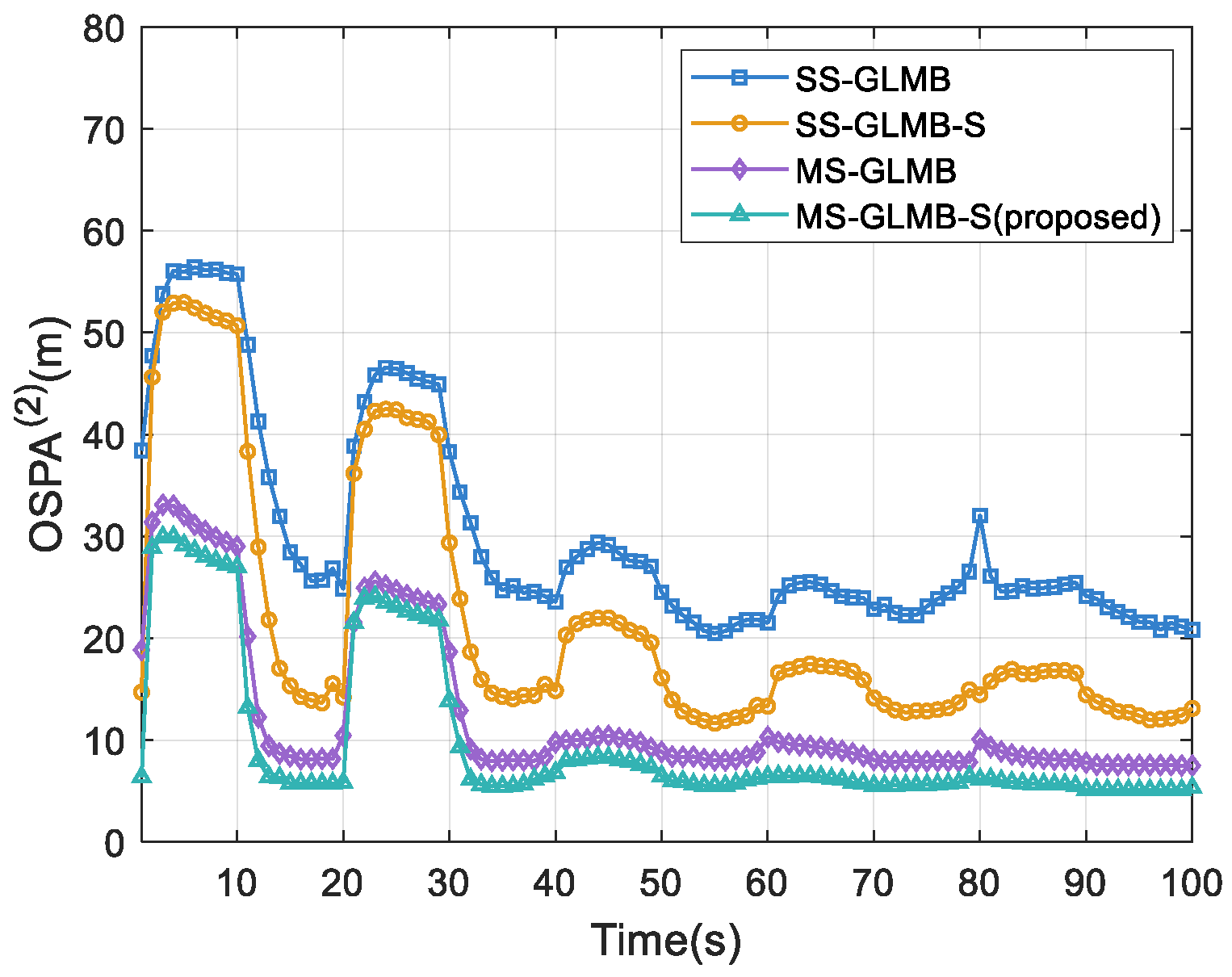

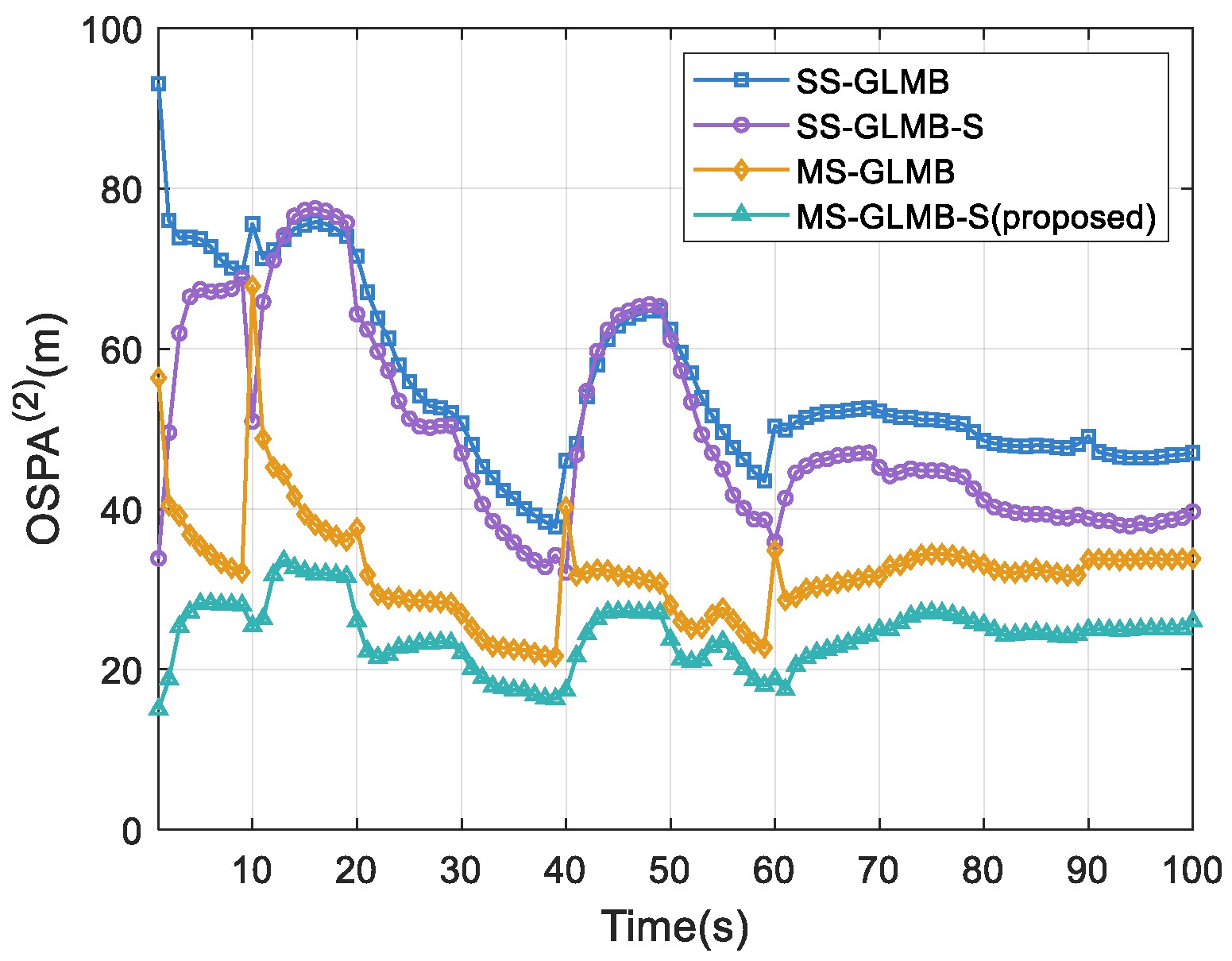

Figure 3 shows that the proposed MS-GLMB-S consistently achieves the smallest OSPA⁽²⁾ distance, which indicates that it has the highest tracking accuracy and effectively reduces the impact of track label switching.

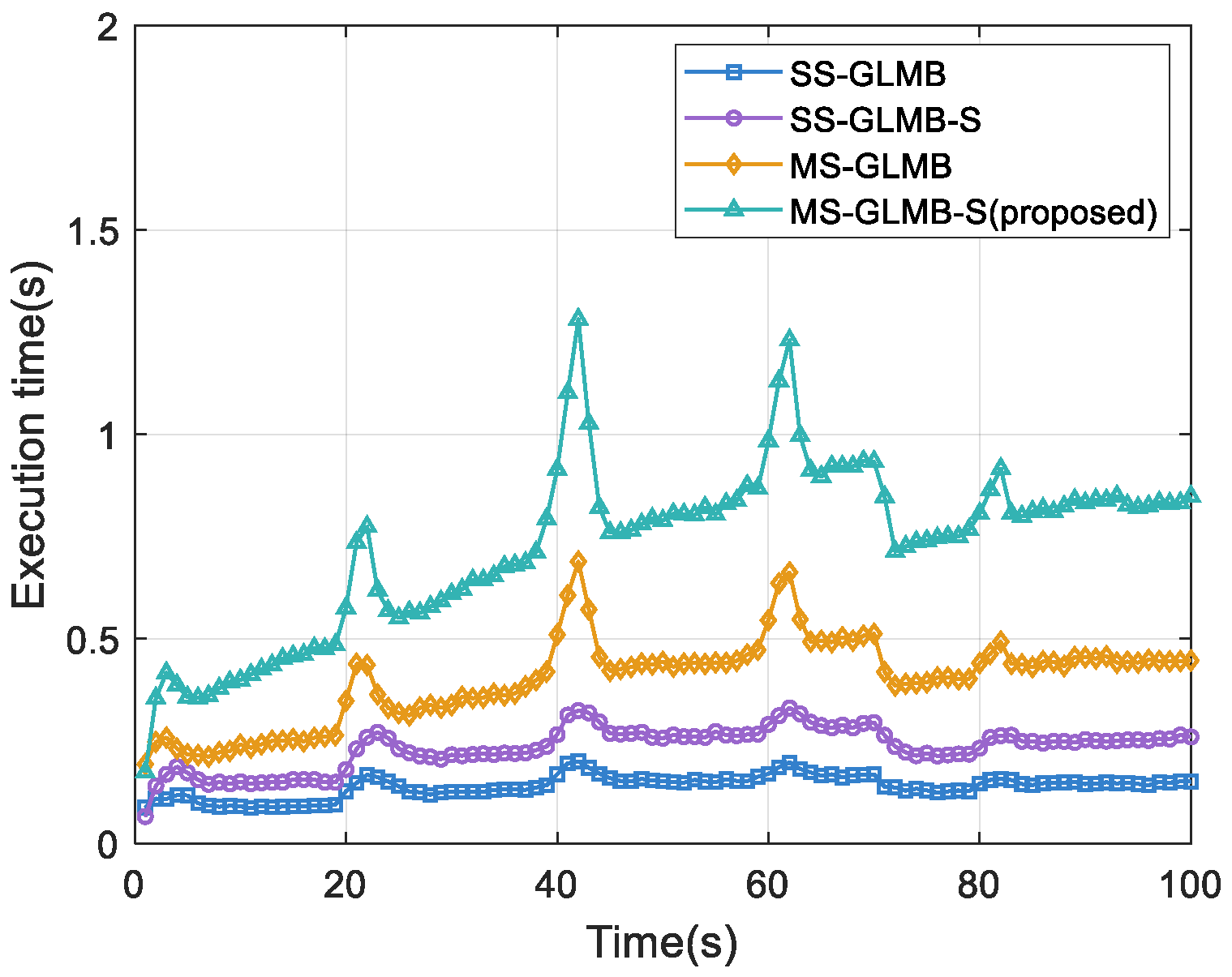

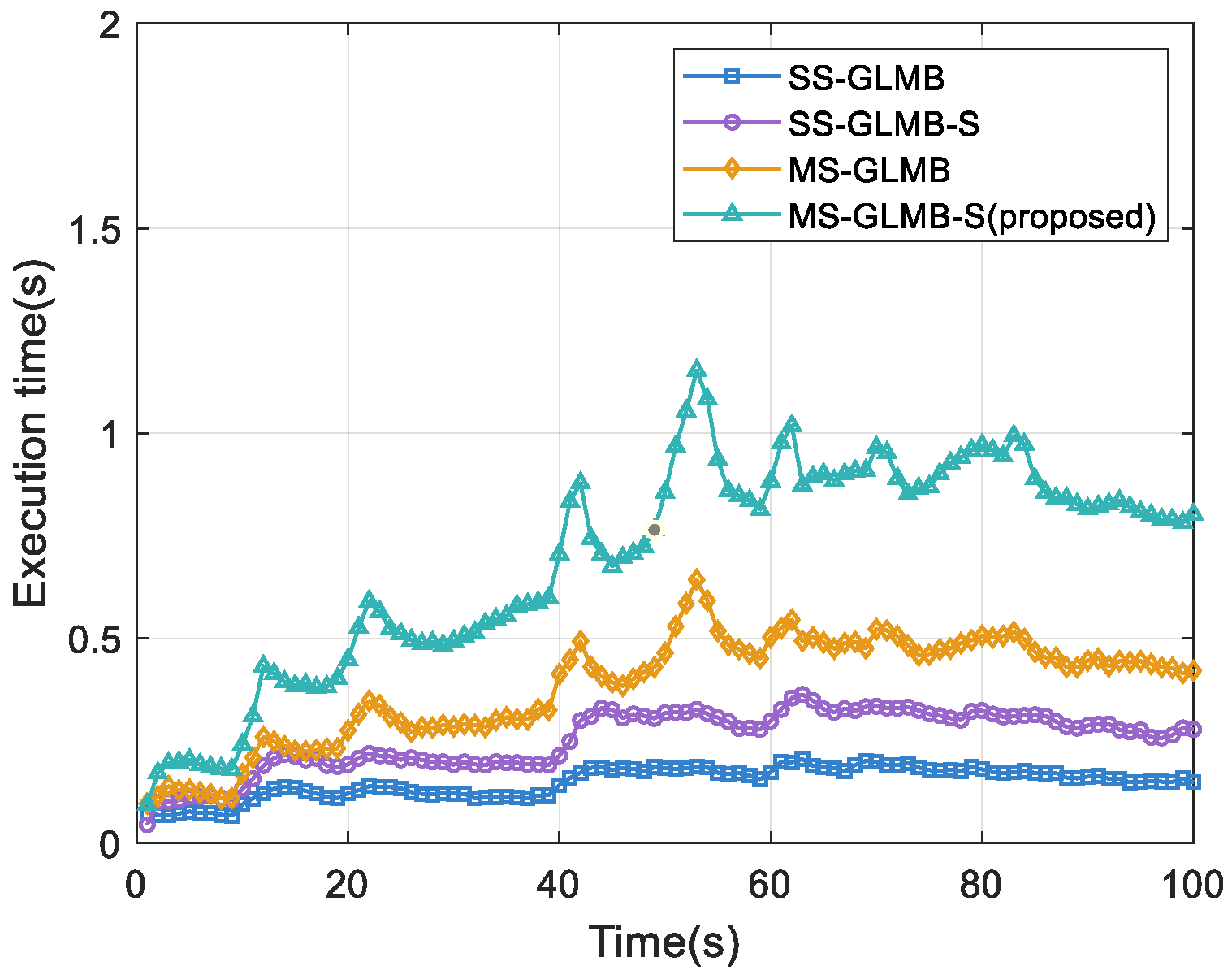

Figure 4 presents the per-frame runtime of the four methods. Among them, MS-GLMB-S has the longest average runtime, as it must process the measurement data from each sensor within the lag interval.

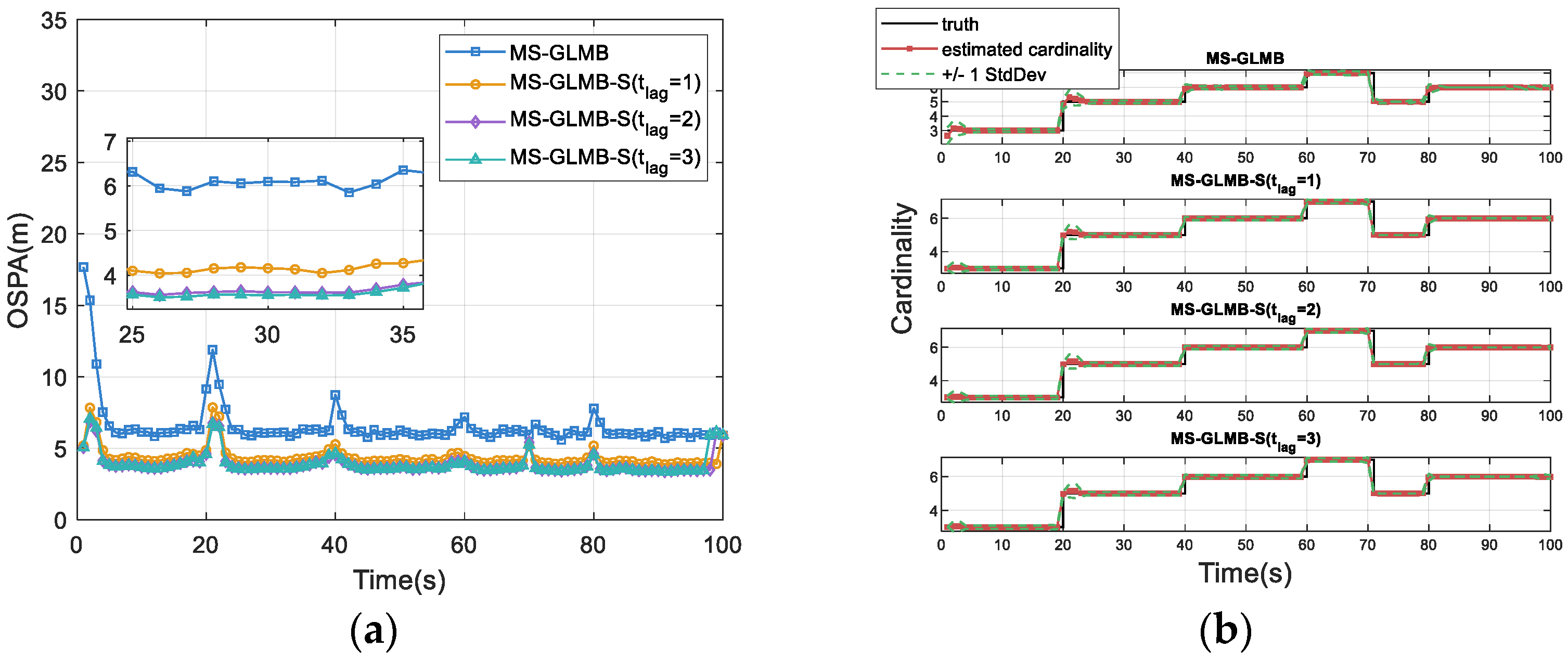

We further analyze the impact of varying lag intervals (

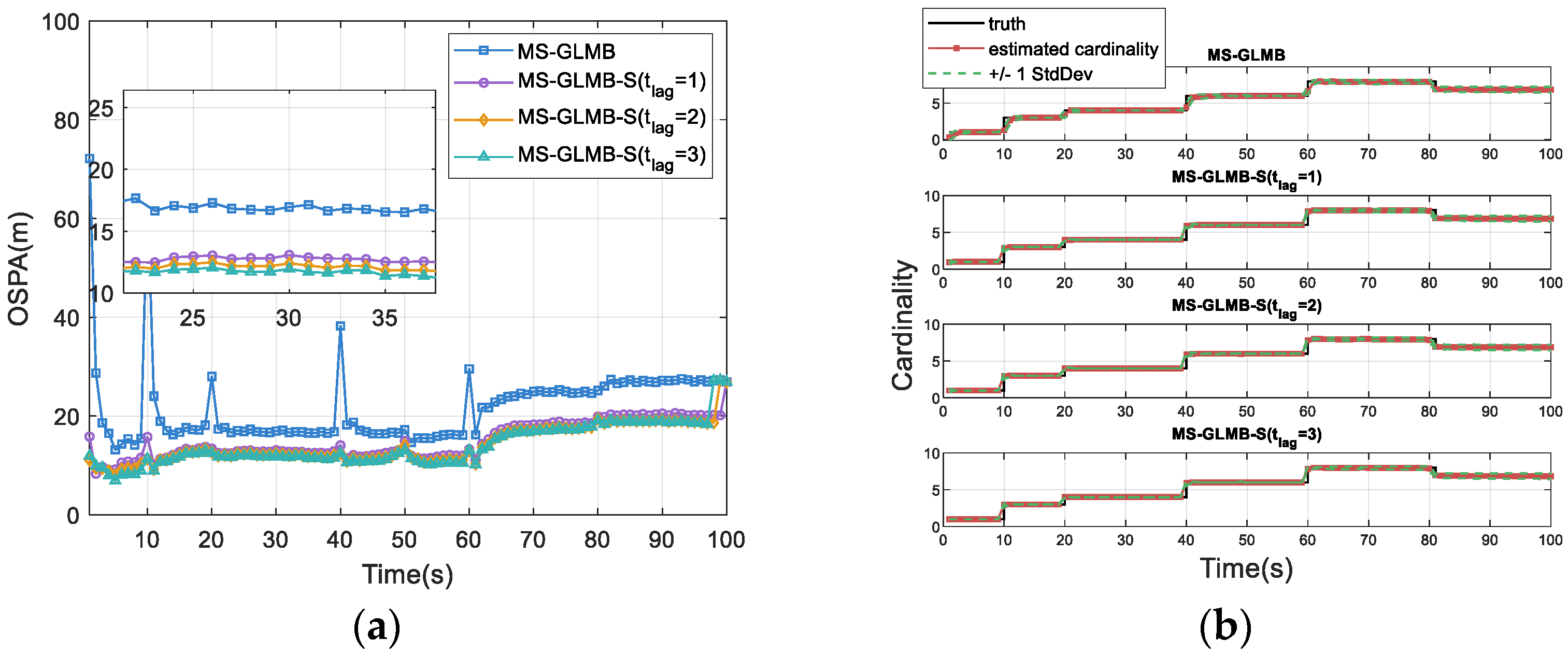

) on the performance of MS-GLMB-S. When the lag interval length is set to 0, MS-GLMB-S reduces to the standard MS-GLMB filter.

Figure 5a shows that the OSPA distance decreases as the lag interval length increases, indicating that MS-GLMB-S achieves better tracking performance with longer lag intervals. Figure 5b shows that this improvement is mainly reflected in a gradual reduction of the OSPA localization component, while the cardinality estimates are nearly identical and closely match the true value. This indicates that increasing the lag interval brings limited improvement in estimating the number of targets but further improves the accuracy of the target position estimates.

We then test the impact of the number of sensors and the lag interval length on the tracking accuracy and real-time performance of MS-GLMB-S; the results are presented in Table 3. Given the sensor sampling rate of 1 Hz, the tracking system is considered to have satisfactory real-time performance if the average runtime per frame is less than 1 s. As shown in Table 3, with 2 sensors, the average runtime per frame is less than 1 s for lag interval lengths of 1, 2, and 3. However, as the lag interval length increases from 2 to 3, the average OSPA distance decreases by only 0.03 m, while the average runtime increases by 0.25 s. With 3 sensors, the tracking accuracy continues to improve as the lag interval length increases. Nevertheless, when the lag interval length is 3, the average runtime per frame exceeds 1 s, which significantly degrades real-time tracking performance.

In summary, increasing the lag interval length improves the performance of MS-GLMB-S, but an excessively large lag is not advisable: it substantially increases computational complexity and undermines real-time capability without bringing a notable further performance gain.

To evaluate the robustness of the proposed MS-GLMB-S, we compute the OSPA and OSPA⁽²⁾ distances under varying

and

. The number of sensors is set to 3, and the lag interval length is set to 1. The results are averaged over 100 Monte Carlo simulations. As shown in Table 4, the impact of decreasing the

on MS-GLMB-S performance is more notable than that of increasing

; nevertheless, neither the OSPA nor the OSPA⁽²⁾ distance changes substantially. These results further confirm that MS-GLMB-S is robust to variations in both

and

.

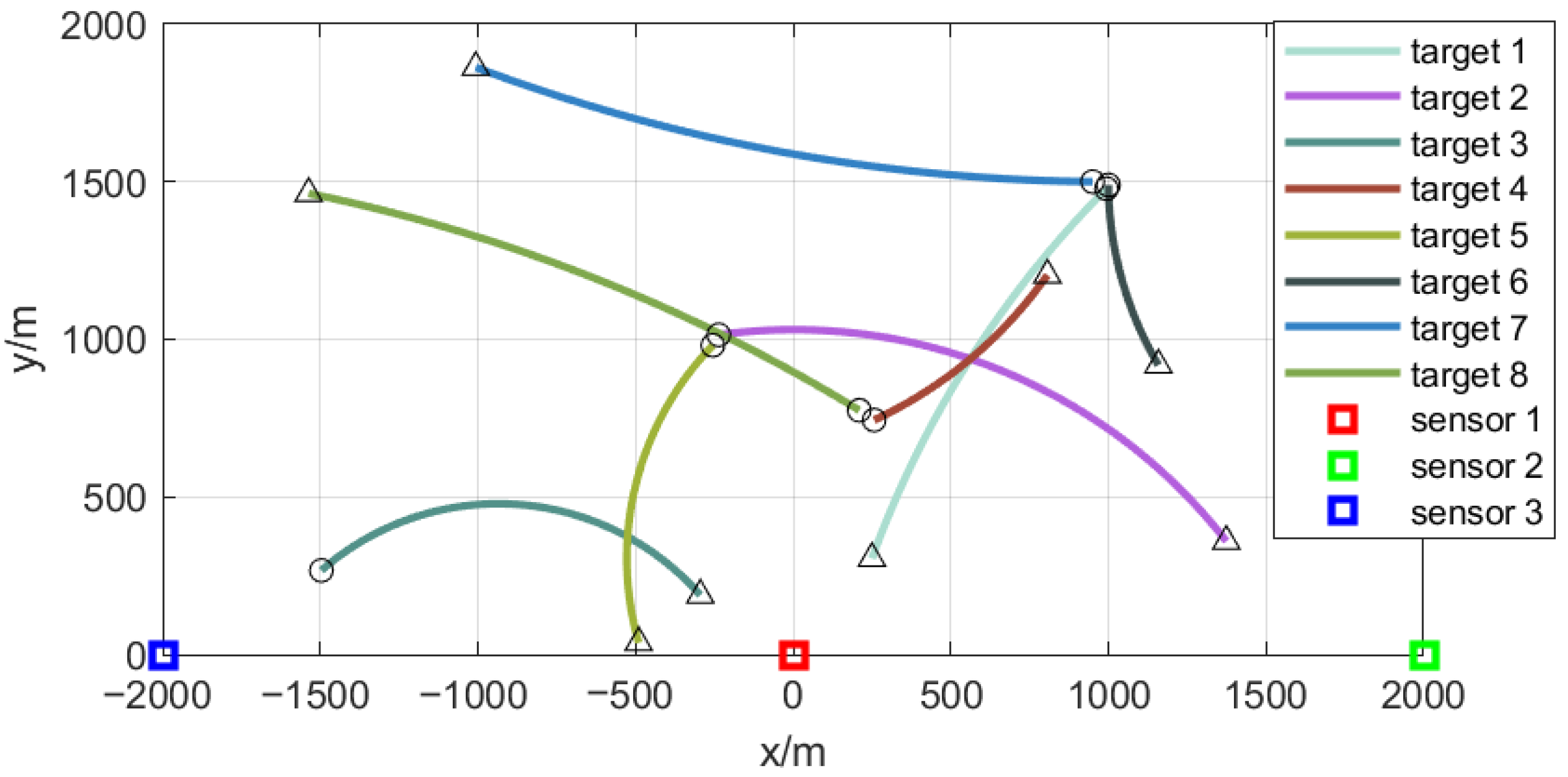

5.2. Nonlinear

As shown in Figure 6, the starting and finishing positions are marked by circles and triangles, respectively, and the locations of the three sensors by squares. Each target follows a nearly coordinated turn (NCT) model, with the state of interest represented by

, where

, and

is the turning rate. The NCT model is expressed as follows.

where

,

,

.
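For reference, a common form of the noise-free part of the coordinated-turn transition is sketched below. Since the equation above is not reproduced here, the state ordering [px, vx, py, vy, ω] and this particular parameterization are assumptions based on the standard coordinated-turn model rather than the paper's exact expression; the process and turn-rate noise terms are omitted.

```python
import math
import numpy as np

def nct_transition(x, T=1.0):
    """Noise-free coordinated-turn propagation of [px, vx, py, vy, omega]."""
    px, vx, py, vy, w = x
    wt = w * T
    if abs(w) < 1e-9:  # straight-line limit as the turn rate tends to zero
        a, b = T, 0.0
    else:
        a, b = math.sin(wt) / w, (1.0 - math.cos(wt)) / w
    c, s = math.cos(wt), math.sin(wt)
    return np.array([
        px + a * vx - b * vy,  # position: integral of the rotating velocity
        c * vx - s * vy,       # velocity rotated by omega * T
        py + b * vx + a * vy,
        s * vx + c * vy,
        w,                     # turn rate carried over (noise omitted)
    ])

quarter_turn = nct_transition(np.array([0.0, 1.0, 0.0, 0.0, math.pi / 2]))
```

The explicit zero-turn branch avoids dividing by ω and reduces the model to constant-velocity motion, which is the correct limit.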

We set and as the turning rate noise. The target survival probability and detection probability are and , while the newborn targets have the form of an LMB distribution with four components.

In the second scenario, each sensor again has a detection range covering the entire area and a sampling rate of 1 Hz, and measures the range and azimuth of targets. Measurements from the sensor

are noisy vectors of range and bearing

.

for the sensors

and

are given as follows.

where

is the position of the sensor

, explicitly given by

,

, and

. Range noise is

and bearing noise is

. The clutter follows a Poisson distribution with

.
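The range-bearing measurement function and its Jacobian, as needed by the EKF linearization described in Section 4.3, can be sketched as follows. The state ordering (positions at entries 0 and 2), the arctan2-based bearing convention, and the helper names are assumptions. Bearing innovations are wrapped to [-π, π) so that angles near ±π are compared correctly.

```python
import numpy as np

def range_bearing(x, sensor_pos):
    """Noise-free range and bearing of a target seen from one sensor.

    x: state whose entries 0 and 2 are the x/y positions (assumed ordering).
    sensor_pos: (u, v) position of the sensor.
    """
    dx, dy = x[0] - sensor_pos[0], x[2] - sensor_pos[1]
    return np.array([np.hypot(dx, dy), np.arctan2(dy, dx)])

def range_bearing_jacobian(x, sensor_pos):
    """Jacobian of range_bearing with respect to the state (EKF linearization)."""
    dx, dy = x[0] - sensor_pos[0], x[2] - sensor_pos[1]
    r2 = dx * dx + dy * dy
    r = np.sqrt(r2)
    H = np.zeros((2, len(x)))
    H[0, 0], H[0, 2] = dx / r, dy / r      # d(range)/d(px), d(range)/d(py)
    H[1, 0], H[1, 2] = -dy / r2, dx / r2   # d(bearing)/d(px), d(bearing)/d(py)
    return H

def wrap_angle(a):
    """Wrap an angle (e.g., a bearing innovation) to [-pi, pi)."""
    return (a + np.pi) % (2.0 * np.pi) - np.pi

z = range_bearing(np.array([3.0, 0.0, 4.0, 0.0]), (0.0, 0.0))
```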

Table 5 illustrates the initial state and duration of each target.

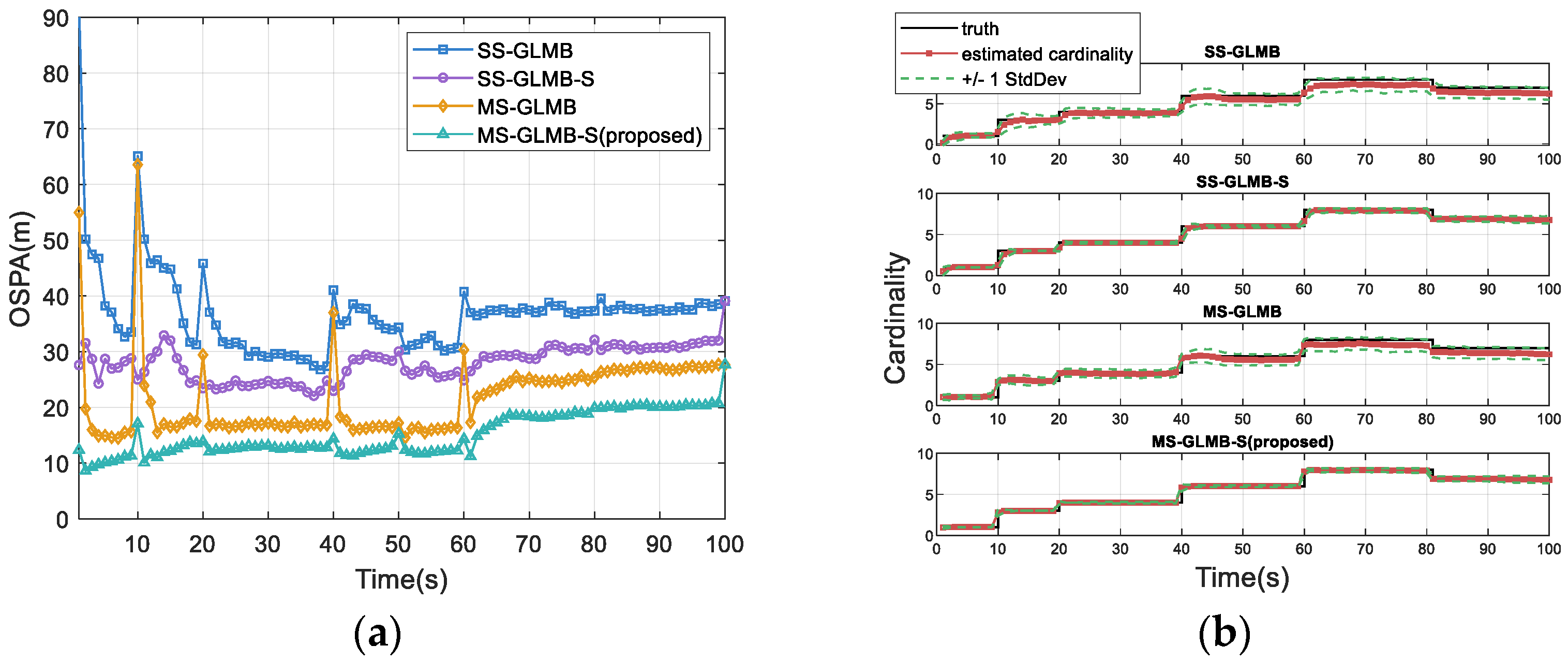

We then compare the proposed MS-GLMB-S with SS-GLMB, MS-GLMB, and SS-GLMB-S in the second scenario. For SS-GLMB and SS-GLMB-S, we use the measurements from sensor 1. Although the sensor measurements in the second experiment differ from those in the first, the three sensors are again of the same type and have identical detection ranges, so selecting any one of them does not affect the experimental results. For MS-GLMB and MS-GLMB-S, we use the measurements from all three sensors. All subsequent experimental results are averaged over 100 Monte Carlo trials.

Figure 7a,b show the OSPA distance and cardinality estimates of the four methods. Although the cardinality estimates of SS-GLMB and SS-GLMB-S deviate significantly after 50 s, MS-GLMB-S maintains a smaller OSPA distance and a more accurate cardinality estimate than the other three methods. This demonstrates that, in nonlinear target motion scenarios, MS-GLMB-S provides significantly higher state estimation accuracy than SS-GLMB, SS-GLMB-S, and MS-GLMB.

Figure 8 presents the OSPA⁽²⁾ distances of the four methods, with MS-GLMB-S achieving a smaller OSPA⁽²⁾ distance than the other three. This indicates that, in nonlinear scenarios, MS-GLMB-S achieves the highest tracking accuracy among the compared methods and effectively reduces the impact of track label switching. Meanwhile, Figure 9 shows that, even in the more challenging nonlinear scenario, the runtime of MS-GLMB-S fluctuates within a range similar to that in the linear scenario.

We then analyze the impact of varying lag intervals on the tracking accuracy and real-time performance of MS-GLMB-S. As illustrated in Figure 10 and Table 6, increasing the lag interval can improve the performance of MS-GLMB-S; however, as discussed in Section 5.1, the lag interval length should not be excessively large.

Overall, the proposed MS-GLMB-S demonstrates robust applicability in scenarios with nonlinear target motion and nonlinear sensor measurements, achieving better tracking performance compared to SS-GLMB, SS-GLMB-S, and MS-GLMB.

6. Conclusions

The centralized multi-sensor GLMB smoother (MS-GLMB-S) proposed in this paper is based on the Bayesian forward–backward framework, which consists of forward filtering and backward smoothing. In the forward filtering part, the filtering posterior density is generated by the centralized MS-GLMB filter. In the backward smoothing part, we derive expressions for the smoothing density and the simplified multi-sensor backward corrector in multi-sensor scenarios. Using the filtering density and the multi-sensor measurements within the lag interval, the weights of the GLMB components are adjusted to the corresponding smoothing weights. Subsequently, a suboptimal Gibbs sampling method for smoothing is introduced to truncate the GLMB smoothing density for an efficient implementation of MS-GLMB-S, allowing different sensors to be sampled independently at different time steps within the lag interval. Additionally, the calculation of the smoothing parameters under the linear Gaussian mixture model is detailed. In both linear and nonlinear simulations, MS-GLMB-S outperforms SS-GLMB, MS-GLMB, and SS-GLMB-S.

Since MS-GLMB-S does not consider target birth and death during the smoothing process, cardinality estimation fluctuates significantly at these moments. Future improvements can incorporate target birth and death to refine the multi-sensor multi-target forward–backward smoothing framework and verify its effectiveness. In addition, the proposed MS-GLMB-S is currently only applicable to multi-sensor scenarios with centralized fusion; future work can further extend its application to multi-sensor scenarios based on distributed fusion.