GCN-MHA Method for Encrypted Malicious Traffic Detection and Classification

Abstract

1. Introduction

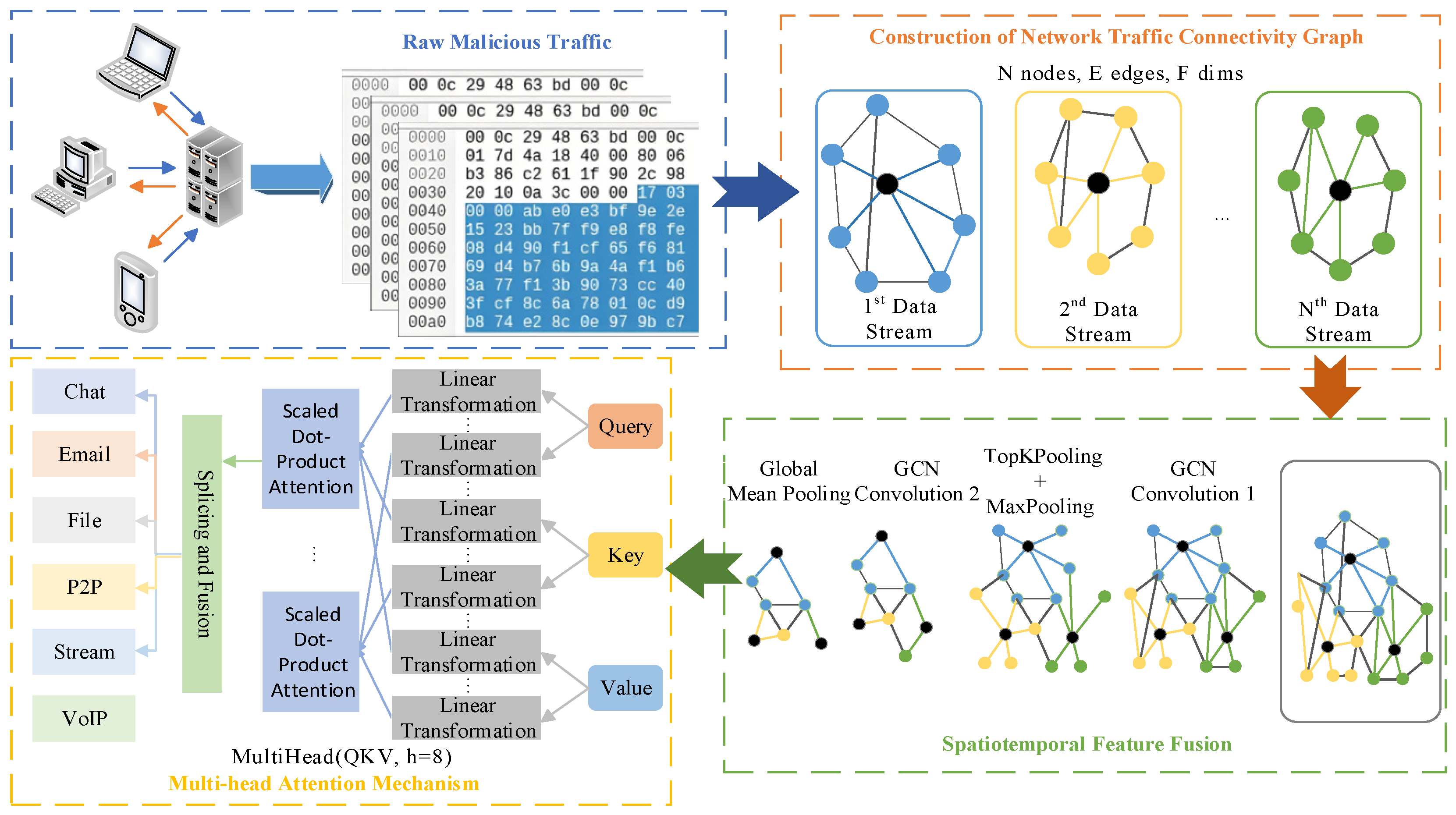

- Graph structure modeling and spatiotemporal feature enhancement: We present a Graph Convolutional Network with Multi-Head Attention (GCN-MHA) for detecting and classifying encrypted malicious traffic. The method builds a graph that connects nodes in the traffic data, giving the model a clear view of how flows relate to each other. This graph helps the network learn spatial patterns and track how these patterns change over time. With these clearer and stronger features, the system achieves more reliable intrusion detection.

- Integration of graph convolution and attention mechanism: We use a graph convolutional neural network to extract basic behavior patterns from each node in the graph. These patterns form clear features that help the model tell normal and malicious traffic apart. Then, a multi-head attention mechanism gives different weights to these features, allowing the system to focus on the ones that matter most. With this approach, the system can recognize network intrusion behavior more accurately.

- Model validation and performance improvement: We tested our model on the ISCX-VPN2016 encrypted traffic dataset. It achieved an accuracy of 98.79% and a recall of 99.24%. Compared with the baseline methods, it performed better on key metrics such as accuracy, recall, and F1-score. These results show that the model is both effective and reliable for detecting encrypted malicious traffic.

2. Research Status

3. GCN-MHA Method

3.1. Connectivity Relationship Graph Construction

| Algorithm 1: Graph Construction and Pruning (complete pseudocode) |
|---|
| Input: Set of PCAP files |
| Output: Undirected graph G = (V, E), node-feature matrix X ∈ ℝ^{\|V\| × 23} |
| 1. Five-tuple flow aggregation /* (srcIP, dstIP, srcPort, dstPort, proto) with ≤30 s timeout */ |
| 2. Extract 23-dimensional statistical features per flow (Table 3) → candidate nodes |
| 3. Edge creation rule: if flow A → B AND reverse flow B → A exists within 30 s, then add undirected edge (A, B) |
| 4. Delete isolated nodes /* degree = 0 */ |
| 5. Preserve sensitive nodes: if TLS version ≤ 1.1 OR SNI hits blacklist, keep the node |
| 6. Return G, X |
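Steps 3 to 5 of Algorithm 1 can be illustrated with a minimal Python sketch. It assumes that each aggregated flow is a node, that an edge joins a flow to its reverse-direction flow when their start times differ by at most 30 s, and that sensitive nodes are kept even when they are isolated. The `Flow` record and the `build_graph` helper are hypothetical names for illustration, not the authors' implementation.

```python
from dataclasses import dataclass

WINDOW_S = 30.0  # pairing window from Algorithm 1


@dataclass(frozen=True)
class Flow:
    """Hypothetical flow record; only the fields this sketch needs."""
    src: str
    dst: str
    start: float                 # flow start time in seconds
    tls_version: float = 1.3
    sni_blacklisted: bool = False


def build_graph(flows):
    """Return (kept_node_ids, edges) for steps 3-5 of Algorithm 1."""
    edges = set()
    for i, a in enumerate(flows):
        for j in range(i + 1, len(flows)):
            b = flows[j]
            is_reverse = a.src == b.dst and a.dst == b.src
            # Step 3: pair a flow with its reverse flow inside the window.
            if is_reverse and abs(a.start - b.start) <= WINDOW_S:
                edges.add((i, j))
    connected = {node for edge in edges for node in edge}
    # Step 5 (assumed to override step 4): keep sensitive nodes regardless of degree.
    sensitive = {
        i for i, f in enumerate(flows)
        if f.tls_version <= 1.1 or f.sni_blacklisted
    }
    # Step 4: every other node with degree 0 is dropped.
    kept = sorted(connected | sensitive)
    return kept, sorted(edges)


flows = [
    Flow("10.0.0.1", "10.0.0.2", start=0.0),
    Flow("10.0.0.2", "10.0.0.1", start=10.0),   # reverse flow within 30 s
    Flow("10.0.0.3", "10.0.0.4", start=0.0),    # isolated, dropped
    Flow("10.0.0.5", "10.0.0.6", start=0.0, tls_version=1.0),  # isolated but sensitive
]
kept, edges = build_graph(flows)
print(kept, edges)
```

The pairwise loop is quadratic in the number of flows, so a real pipeline would likely index flows by endpoint pair before matching.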

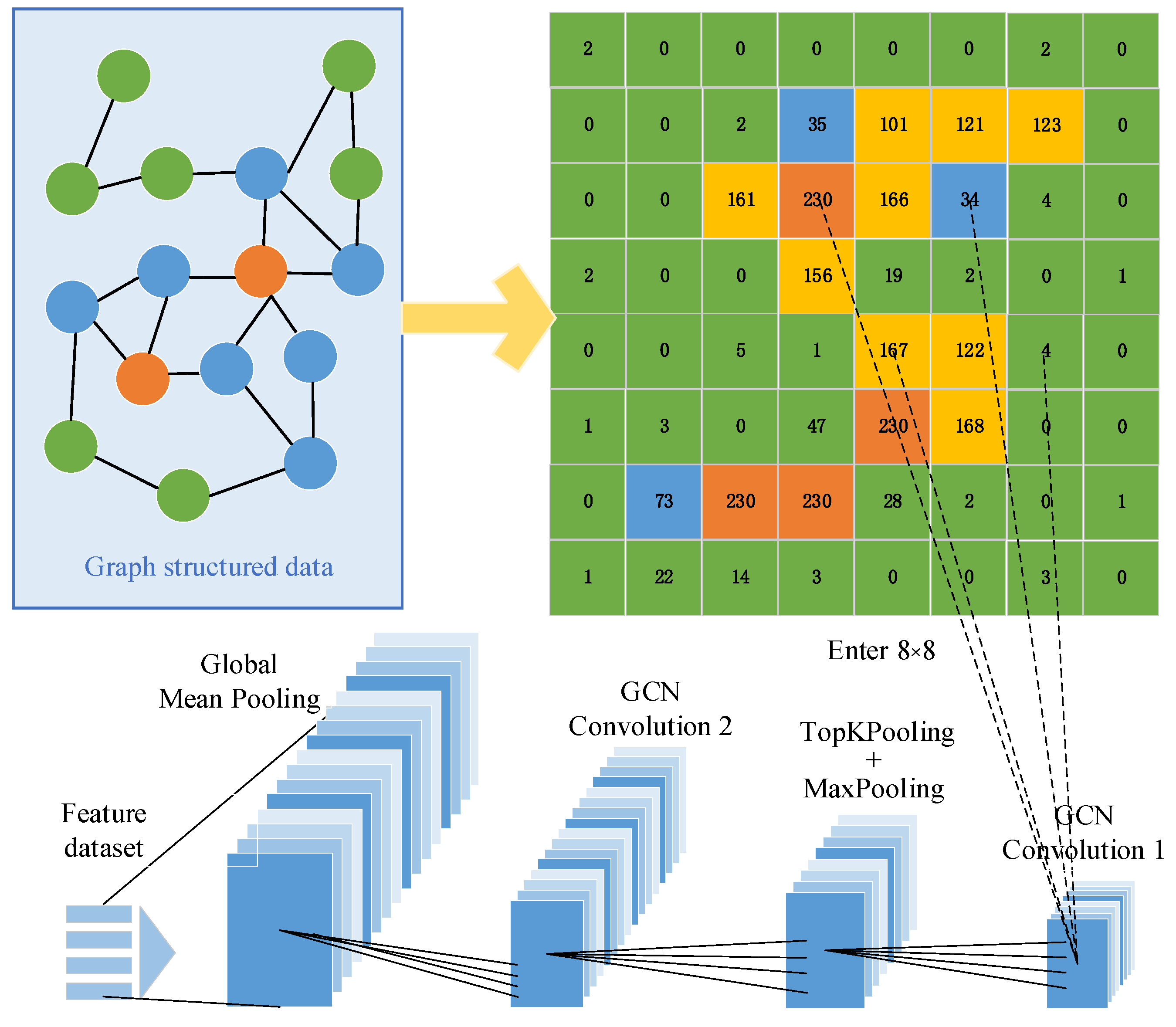

3.2. Spatiotemporal Feature Fusion

3.3. Multi-Head Attention Mechanism Weight Allocation

4. Experimental Analysis

4.1. Experimental Environment and Settings

4.2. Experimental Data Selection

4.3. Experimental Evaluation Metrics

4.4. Experiment and Analysis

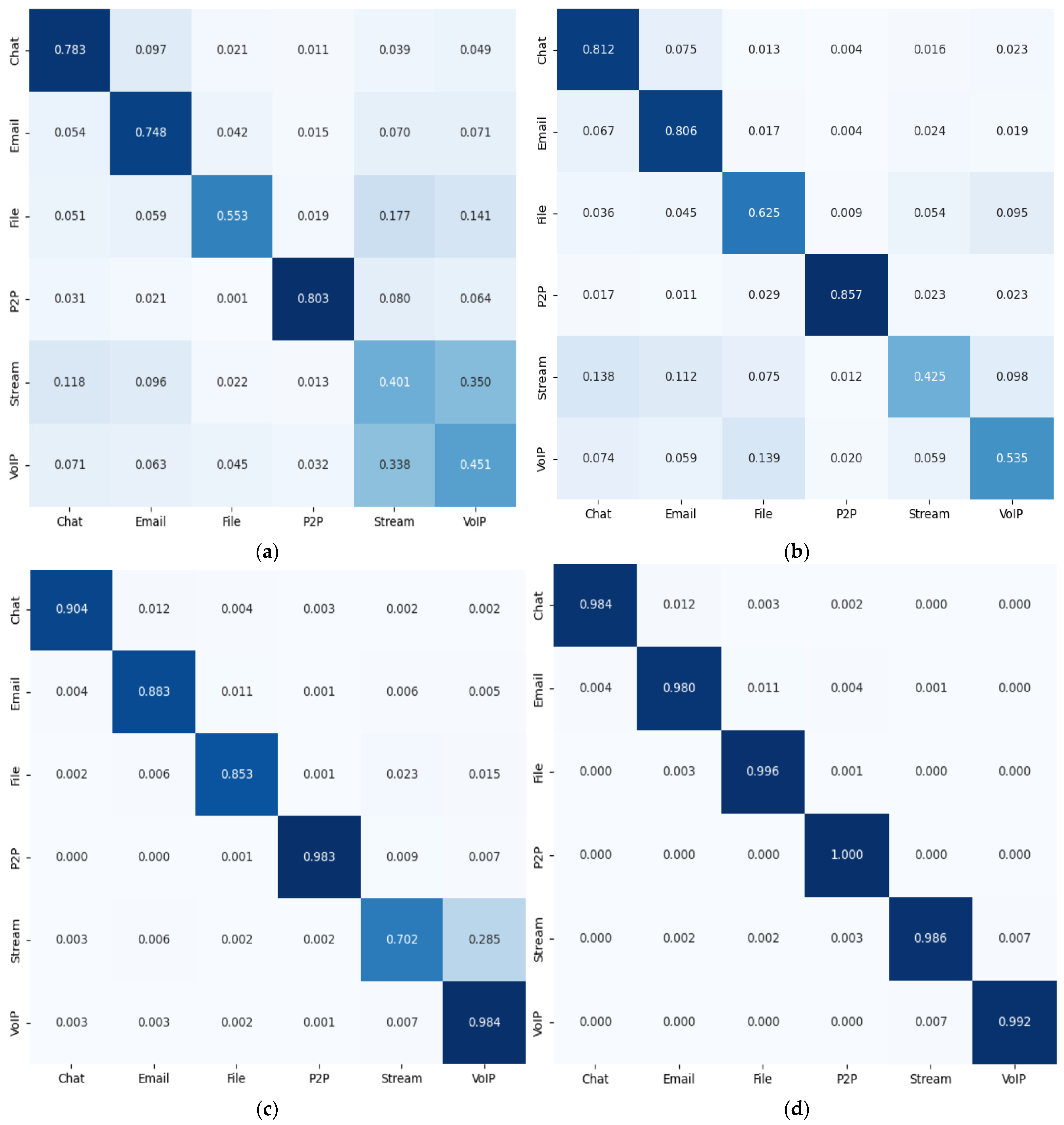

4.4.1. Experimental Results

4.4.2. Ablation Experiment

4.4.3. Performance Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TLS/SSL | Transport Layer Security/Secure Sockets Layer |
| DDoS | Distributed Denial of Service |
| SVM | Support Vector Machine |
| LSTM | Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| GNN | Graph Neural Network |
| MLP | Multi-Layer Perceptron |

References

- Dang, Y.; Li, Q. Research on the Development of Foreign Artificial Intelligence Hotspot Security Technology. Inf. Secur. Commun. Priv. 2024, 12, 1–8.

- Bian, Y.; Zheng, F.; Wang, Y.; Lei, L.; Ma, Y.; Zhou, T.; Dong, J.; Fan, G.; Jing, J. AsyncGBP+: Bridging SSL/TLS and Heterogeneous Computing Power With GPU-Based Providers. IEEE Trans. Comput. 2025, 74, 356–370.

- Aguru, A.; Erukala, S. OTI-IoT: A Blockchain-based Operational Threat Intelligence Framework for Multi-vector DDoS Attacks. ACM Trans. Internet Technol. (TOIT) 2024, 24, 31.

- Mosakheil, J.H.; Yang, K. PKChain: Compromise-Tolerant and Verifiable Public Key Management System. IEEE Internet Things J. 2025, 12, 3130–3144.

- Mei, H.T.; Cheng, G.; Zhu, Y.L.; Zhou, Y.Y. Survey on Tor Passive Traffic Analysis. Ruan Jian Xue Bao/J. Softw. 2025, 36, 253–288.

- Hazman, C.; Guezzaz, A.; Benkirane, S.; Azrour, M. Enhanced IDS with Deep Learning for IoT-Based Smart Cities Security. Tsinghua Sci. Technol. 2024, 29, 929–947.

- Wang, X.; Dai, L.; Yang, G. A network intrusion detection system based on deep learning in the IoT. J. Supercomput. 2024, 80, 24520–24558.

- Dalal, S.; Lilhore, U.K.; Faujdar, N.; Simaiya, S.; Ayadi, M.; Almujally, N.A.; Ksibi, A. Next-generation cyber attack prediction for IoT systems: Leveraging multi-class SVM and optimized CHAID decision tree. J. Cloud Comput. 2023, 12, 137.

- Gou, J.; Li, J.; Chen, C.; Chen, Y.; Lv, Y. Network intrusion detection method based on random forest. Comput. Eng. Appl. 2020, 56, 82–88.

- Bakir, H.; Ceviz, O. Empirical Enhancement of Intrusion Detection Systems: A Comprehensive Approach with Genetic Algorithm-based Hyperparameter Tuning and Hybrid Feature Selection. Arab. J. Sci. Eng. 2024, 49, 13025–13043.

- Wang, Y. Advanced Network Traffic Prediction Using Deep Learning Techniques: A Comparative Study of SVR, LSTM, GRU, and Bidirectional LSTM Models. ITM Web Conf. 2025, 70, 03021.

- L., S.P.; Emmanuel, W.R.S.; Rani, P.A.J. Network traffic classification based-masked language regression model using CNN. Concurr. Comput. Pract. Exp. 2024, 36, e8223.

- Altaf, T.; Wang, X.; Ni, W.; Yu, G.; Liu, R.P.; Braun, R. GNN-Based Network Traffic Analysis for the Detection of Sequential Attacks in IoT. Electronics 2024, 13, 2274.

- Zhao, D.; Yin, Z.; Cui, S.; Lu, Z. Malicious TLS Traffic Detection Based on Graph Representation. J. Inf. Secur. Res. 2024, 10, 209–215. Available online: http://www.sicris.cn/CN/Y2024/V10/I3/209 (accessed on 23 November 2025).

- Deng, H.; Yang, A.; Liu, Y. P2P traffic classification method based on SVM. Comput. Eng. Appl. 2008, 44, 122–126.

- Wang, L.; Feng, H.; Liu, B.; Cui, M.; Zhao, H.; Sun, X. SSL VPN Encrypted Traffic Identification Based on Hybrid Method. Comput. Appl. Softw. 2019, 36, 315–322.

- Shi, L.; Shi, S.; Wen, W. Malicious TLS Traffic Detection Based on Graph Representation. J. Inf. Secur. Res. 2022, 8, 736–750. Available online: http://www.sicris.cn/CN/Y2022/V8/I8/736 (accessed on 23 November 2025).

- Cheng, H.; Xie, J.; Chen, L. CNN-based Encrypted C&C Communication Traffic Identification Method. Comput. Eng. 2019, 45, 31–34+41.

- Xu, H.; Ma, Z.; Yi, H.; Zhang, L. Network Traffic Anomaly Detection Technology Based on Convolutional Recurrent Neural Network. Netinfo Secur. 2021, 21, 54–62.

- Luo, G.; Wang, X.; Dai, J. Random Feature Graph Neural Network for Intrusion Detection in Internet of Things. Comput. Eng. Appl. 2024, 60, 264–273.

- Zheng, J.; Zeng, Z.; Feng, T. GCN-ETA: High-Efficiency Encrypted Malicious Traffic Detection. Secur. Commun. Netw. 2022, 2022, 4274139.

- Chen, J.; Xie, H.; Cai, S.; Song, L.; Geng, B.; Guo, W. GCN-MHSA: A novel malicious traffic detection method based on graph convolutional neural network and multi-head self-attention mechanism. Comput. Secur. 2024, 147, 104083.

- Cai, S.; Tang, H.; Chen, J.; Lv, T.; Zhao, W.; Huang, C. GSA-DT: A Malicious Traffic Detection Model Based on Graph Self-Attention Network and Decision Tree. IEEE Trans. Netw. Serv. Manag. 2025, 22, 2059–2073.

- Yuan, X.; Wan, J.; An, D.; Pei, H. A novel encrypted traffic detection model based on detachable convolutional GCN-LSTM. Sci. Rep. 2025, 15, 27705.

- Xu, H.; Geng, X.; Liu, J.; Lu, Z.; Jiang, B.; Liu, Y. A novel approach for detecting malicious hosts based on RE-GCN in intranet. Cybersecurity 2024, 7, 69.

- Draper-Gil, G.; Lashkari, A.H.; Mamun, M.S.I.; Ghorbani, A.A. Characterization of Encrypted and VPN Traffic Using Time-Related Features. In Proceedings of the 2nd International Conference on Information Systems Security and Privacy ICISSP, Rome, Italy, 19–21 February 2016.

| Literature | Year | Core Technology | Encryption Friendly | Dataset | Main Limitations |
|---|---|---|---|---|---|
| Deng He et al. [15] | 2008 | Linear classifier | × | Self-built P2P dataset | Only MSN P2P, closed scenario |
| Wang Lin et al. [16] | 2019 | Ensemble trees + genetic algorithm optimization | √ | SSL VPN dataset | Single class 92%, no encryption generalization |
| Shi Lin et al. [17] | 2022 | Time series modeling | √ | Linux APT dataset | Needs kernel sandbox, APT-specific |
| Cheng Hua et al. [18] | 2019 | Local spatial feature extraction | √ | Encrypted C&C dataset | C&C vs. Web, binary classification only |
| Xu Hongping et al. [19] | 2021 | CNN + LSTM spatiotemporal fusion | √ | Network anomaly dataset | Fixed-length truncation, small-sample underfitting |
| Luo Guoyu et al. [20] | 2024 | Graph structure + random features | √ | IoT intrusion dataset | Average multi-class performance |
| Zheng et al. [21] | 2022 | GCN | √ | ISCX-VPN2016 | GCN + decision tree, poor interpretability |
| Chen et al. [22] | 2024 | GCN + Single-Head Attention | √ | ISCX-VPN2016 | Flow-level expansion, five-tuple leakage |
| Cai et al. [23] | 2025 | GSA + DT | √ | Network anomaly dataset | No verification on encrypted traffic |
| Yuan et al. [24] | 2025 | GCN-LSTM | √ | IoT intrusion dataset | No solution for small samples |
| Xu et al. [25] | 2024 | RE-GCN | × | Linux APT dataset | Support encrypted traffic detection |
| GCN-MHA | — | GCN-MHA | √ | Multi-headed global |

| Layer | Input Tensor | Output Tensor | Params |
|---|---|---|---|
| Node Feature Input | (N, 1480) | (N, 23) | 23-dimensional feature nodes |
| GCN-1 | (N, 23), Adj (N, N) | (N, 64) | ReLU, dropout = 0.2 |
| Pool-1 | (N, 64) | (N1, 64), N1 = 0.8 N | TopKPooling (k = 0.8 N) |
| GCN-2 | (N1, 64) | (N1, 128) | ReLU, dropout = 0.2 |
| Pool-2 | (N1, 128) | (1, 128) | Global Mean Pooling |
| MHAtt | (1, 128) | (1, 128) | 8 heads, Q/K/V dim 128 split into 16 per head |
| Classifier | (1, 128) | (1, C) | C = 2 (malicious/benign) or 6 (fine-grained categories) |
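The two GCN layers in the table follow the usual graph-convolution pattern: normalize the adjacency matrix, aggregate neighbor features, then apply a learned linear map and ReLU. The sketch below uses plain Python lists for readability and assumes the standard symmetric normalization D^{-1/2}(A + I)D^{-1/2} with self-loops. The hyperparameter table lists symmetric normalization, but the self-loop detail is an assumption, and `sym_normalize` and `gcn_layer` are illustrative names rather than the authors' code.

```python
import math


def sym_normalize(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} for a dense 0/1 adjacency matrix."""
    n = len(adj)
    # Add self-loops so every node also keeps its own features (assumption).
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn_layer(adj_norm, x, w):
    """One propagation step: ReLU(adj_norm @ x @ w) on nested lists."""
    n, f_in, f_out = len(x), len(w), len(w[0])
    # Neighborhood aggregation: weighted sum of neighbor features.
    agg = [[sum(adj_norm[i][k] * x[k][f] for k in range(n))
            for f in range(f_in)] for i in range(n)]
    # Linear map followed by ReLU.
    return [[max(0.0, sum(agg[i][f] * w[f][o] for f in range(f_in)))
             for o in range(f_out)] for i in range(n)]


# Toy path graph 0 - 1 - 2 with one input feature and two output features.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0], [2.0], [3.0]]
weights = [[1.0, -1.0]]
hidden = gcn_layer(sym_normalize(adj), features, weights)
print(hidden)
```

In the architecture above the same step would map 23 input features to 64 in GCN-1 and 64 to 128 in GCN-2; a practical implementation would use sparse tensors rather than nested lists.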

| Num. | Field | Calculation Method |
|---|---|---|
| 0–3 | Packet length statistics | mean/std/min/max |
| 4–7 | Arrival interval | mean/std/min/max |
| 8–11 | TLS fingerprint | Version, SNI length, number of cipher suites, number of extensions |
| 12–14 | TCP flag ratio | FIN/SYN/RST |
| 15–18 | Byte distribution | First 4 moments |
| 19–22 | Window size and TTL | mean/std |
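Indices 0 to 7 of the feature vector are simple summary statistics over a flow's packets. A minimal sketch, assuming packets arrive as (timestamp, length) pairs sorted by time and that the standard deviation is the population form (the table does not say which); the function names are hypothetical:

```python
from statistics import mean, pstdev


def summary(values):
    """mean/std/min/max, in the order used by the feature table."""
    return [mean(values), pstdev(values), min(values), max(values)]


def length_and_interval_features(packets):
    """Features 0-7 for one flow.

    packets: list of (timestamp_seconds, length_bytes), sorted by timestamp.
    """
    lengths = [length for _, length in packets]
    times = [t for t, _ in packets]
    # Inter-arrival gaps; a single-packet flow falls back to one zero gap.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])] or [0.0]
    return summary(lengths) + summary(gaps)


packets = [(0.0, 100), (0.5, 300), (1.5, 200)]
print(length_and_interval_features(packets))
```

The remaining fields (TLS fingerprint, TCP flag ratios, byte-distribution moments, window size and TTL) would be appended in the same way to reach 23 dimensions.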

| Traffic Classification | Content |
|---|---|
| Web Browsing | Chrome and Firefox |
| Email | SMTPS, POP3S and IMAPS |
| Chat | ICQ, AIM, Skype, Facebook and Hangouts |
| Streaming | Vimeo and YouTube |
| File Transfer | Skype, FTPS and SFTP using FileZilla and an external service |
| VoIP | Facebook, Skype and Hangouts voice calls (1 h duration) |
| P2P | uTorrent and Transmission (BitTorrent) |

| Traffic Categories | Subclass | Sample Quantity | Proportion of Total Sample | Data Source |
|---|---|---|---|---|
| Normal traffic | Web Browsing (Chrome/Firefox) | 32,456 | 38.1% | Normal web browsing |
| | Email (SMTPS/POP3S) | 18,723 | 22.0% | Normal email transmission |
| | Chat (Skype/Facebook) | 17,499 | 20.5% | Normal instant messaging |
| | Subtotal | 68,678 | 80.6% | |
| Malicious traffic | Chat | 6523 | 7.66% | Malicious software communicating with the control end |
| | Email | 7312 | 8.58% | Phishing email transmission with malicious attachments |
| | File | 276 | 0.32% | Ransomware sample transmission |
| | P2P | 178 | 0.21% | Collaborative communication between DDoS attack nodes |
| | Stream | 445 | 0.52% | Streaming media transmission that hides malicious code |
| | VoIP | 1781 | 2.09% | Covert voice communication in APT attacks |
| | Subtotal | 16,515 | 19.4% | |
| Total | | 85,193 | 100% | |

| Traffic Category | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Benign flow | 0.987 | 0.982 | 0.987 | 0.985 |
| CHAT | 0.984 | 0.950 | 0.997 | 0.973 |
| EMAIL | 0.980 | 0.950 | 0.996 | 0.972 |
| FILE | 0.996 | 0.915 | 0.931 | 0.923 |
| P2P | 1.000 | 1.000 | 1.000 | 1.000 |
| STREAM | 0.986 | 0.921 | 0.948 | 0.935 |
| VoIP | 0.992 | 0.938 | 0.979 | 0.958 |
| Malicious flow | 0.9879 | 0.9478 | 0.9924 | 0.9696 |

| Traffic Type | Category | Correctly Identified Flows (Detection Result/Total Dataset) | Misclassified Benign Flows | Unidentified Malicious Flows | Accuracy | Recall |
|---|---|---|---|---|---|---|
| Benign Traffic | - | 67,776/68,678 | - | - | 98.69% | - |
| Malicious Traffic | CHAT | 6504/6523 | 341/15,425 | 19/6523 | 98.36% | 99.71% |
| | EMAIL | 7285/7312 | 386/13,122 | 27/7312 | 97.98% | 99.63% |
| | FILE | 257/276 | 24/10,071 | 19/276 | 99.58% | 93.12% |
| | P2P | 178/178 | 0/9849 | 0/178 | 100% | 100% |
| | STREAM | 422/445 | 36/3781 | 23/445 | 98.58% | 94.83% |
| | VoIP | 1744/1781 | 115/16,493 | 37/1781 | 99.17% | 97.92% |
| Overall | | | | | 98.79% | 99.24% |
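The per-class figures can be reproduced from the raw counts. As a worked example, the sketch below recomputes the CHAT row, assuming the usual definitions of accuracy, precision, recall, and F1 and treating the 15,425 benign flows in that row as the negative set. The function name is illustrative.

```python
def binary_metrics(tp, fn, fp, negatives):
    """Accuracy, precision, recall and F1 from one-vs-benign counts.

    tp: malicious flows correctly identified
    fn: malicious flows missed
    fp: benign flows misclassified as malicious
    negatives: total benign flows evaluated against this class
    """
    tn = negatives - fp
    accuracy = (tp + tn) / (tp + fn + negatives)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    return accuracy, precision, recall, f1


# CHAT: 6504 of 6523 malicious flows detected, 341 of 15,425 benign flows misclassified.
acc, prec, rec, f1 = binary_metrics(tp=6504, fn=19, fp=341, negatives=15425)
print(f"accuracy={acc:.4f} precision={prec:.3f} recall={rec:.4f} f1={f1:.3f}")
# accuracy=0.9836 precision=0.950 recall=0.9971 f1=0.973
```

These values match the 98.36% accuracy and 99.71% recall reported for CHAT, together with the 0.950 precision and 0.973 F1-score in the per-category results.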

| Parameter | Value | Remarks |
|---|---|---|
| Input feature dimension | 23 | Raw node feature dimension extracted from PCAP. |
| Hidden dimension GCN-1 | 64 | Expands low-level features into richer representations. |
| Hidden dimension GCN-2 | 128 | Final node-level embedding dimension before pooling. |
| Number of GCN layers | 2 | Balances expressive power and computational cost. |
| GCN dropout | 0.2 | Prevents overfitting during neighborhood aggregation. |
| TopKPooling ratio | 0.8 | Keeps the 80% most informative nodes; removes redundant nodes. |
| Global pooling type | MeanPooling | Produces graph-level representation for classification. |
| Self-attention input dimension | 128 | Matches output dimension of GCN-2. |
| Number of attention heads | 8 | Each head captures different spatiotemporal subspaces. |
| Q/K/V dimension per head | 16 | Ensures (128 = 16 × 8) alignment for stable attention. |
| Classifier output dimension | 2 or 6 | Binary detection or fine-grained malicious traffic classification. |
| Loss function | Cross-Entropy | Stable for both binary and multi-class tasks. |
| Optimizer | Adam | Effective for sparse gradients in GNN models. |
| Initial learning rate | 0.01 | Decays to 1 × 10^-4 through a cosine annealing strategy. |
| Regularization coefficient (L2) | 1 × 10^-5 | Prevents overfitting. |
| Batch size | 4096 | Optimized for the NVIDIA RTX 4090. |
| Epochs | 50 | Maximum training epochs before early stopping. |
| Activation function | ReLU | Enhances non-linear expression. |
| Graph normalization method | Symmetric normalization | Stabilizes graph signal propagation in GCN. |
| Train/Validation split | 80%/20% | Consistent with dataset distribution and cross-validation setup. |
| Cross-validation folds | 10 | Ensures result robustness and statistical reliability. |
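The learning-rate row can be made concrete with the common closed form of cosine annealing. The sketch below assumes the schedule runs over the full 50 epochs without restarts or warm-up, which the table does not state; `cosine_lr` is an illustrative helper, not a library call.

```python
import math

LR_MAX, LR_MIN, EPOCHS = 0.01, 1e-4, 50  # values from the table


def cosine_lr(epoch, lr_max=LR_MAX, lr_min=LR_MIN, total=EPOCHS):
    """Learning rate after `epoch` completed epochs under cosine annealing."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * epoch / total))


for epoch in (0, 25, 50):
    print(epoch, round(cosine_lr(epoch), 6))
```

The rate starts at 0.01, passes the midpoint between the two bounds halfway through training, and ends at 1 × 10^-4. If early stopping triggers before epoch 50, the final rate is higher than the stated minimum.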

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Support Vector Machine (SVM) | 0.923 | 0.924 | 0.867 | 0.895 |
| Random Forest | 0.903 | 0.909 | 0.892 | 0.907 |
| Long Short-Term Memory (LSTM) | 0.863 | 0.884 | 0.853 | 0.868 |
| Convolutional Neural Network (CNN) | 0.910 | 0.910 | 0.905 | 0.910 |
| Convolutional Recurrent Neural Network (CRNN) | 0.970 | 0.958 | 0.977 | 0.967 |
| Graph Neural Network (GNN) | 0.934 | 0.970 | 0.926 | 0.948 |
| GCN-ETA | 0.974 | 0.975 | 0.964 | 0.969 |
| GCN-MHSA | 0.963 | 0.968 | 0.971 | 0.970 |
| GCN-MHA | 0.988 | 0.987 | 0.989 | 0.988 |

| Model/Dataset | ISCX-VPN2016 | USTC-TFC2016 | CIC-Darknet2020 |
|---|---|---|---|
| Support Vector Machine (SVM) | 0.923 | 0.895 | 0.863 |
| Random Forest | 0.903 | 0.873 | 0.79 |
| Long Short-Term Memory (LSTM) | 0.863 | 0.829 | 0.859 |
| Convolutional Neural Network (CNN) | 0.910 | 0.891 | 0.879 |
| Convolutional Recurrent Neural Network (CRNN) | 0.970 | 0.924 | 0.941 |
| Graph Neural Network (GNN) | 0.934 | 0.905 | 0.918 |
| GCN-ETA | 0.974 | 0.941 | 0.952 |
| GCN-MHSA | 0.963 | 0.932 | 0.949 |
| GCN-MHA | 0.988 | 0.961 | 0.973 |

| Model Components | Parameter Quantity | FLOPs | Proportion | Notes |
|---|---|---|---|---|
| Graph convolutional layer (2 layers) | 12.8 | 8.6 | 35.8% | Including node feature aggregation and pooling operations |
| Multi-head attention layer | 28.5 | 12.3 | 51.2% | Q/K/V calculation and fusion of 8-head attention |
| Linear classification layer | 3.2 | 1.6 | 6.7% | Mapping from 512-dimensional features to 6 categories |
| Other (activation/pooling) | 0.5 | 1.5 | 6.3% | ReLU activation, MaxPool and other nonparametric operations |
| Total | 45 | 24 | 100% | - |

| Model | Parameter Quantity | Training Time (Epoch = 50) | Single-Sample Inference Time (ms) | Inference Throughput (Samples/Second) |
|---|---|---|---|---|
| SVM | No parameters | 0.5 h (training) | 85.3 | 11.7 |
| CRNN | 58.5 | 2.2 h | 22.4 | 44.6 |
| GCN-ETA | 51.7 | 2.0 h | 20.1 | 49.8 |
| GCN-MHSA | 62.3 | 2.5 h | 28.7 | 34.8 |
| GCN-MHA | 45.0 | 1.8 h | 15.2 | 65.8 |
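The throughput column appears to be the reciprocal of the single-sample latency (1000 ms divided by the inference time), which would mean unbatched, sequential inference. That reading is inferred from the numbers and is not stated in the table; the short check below recomputes it.

```python
# Single-sample inference time (ms) and reported throughput, copied from the table.
latency_ms = {"SVM": 85.3, "CRNN": 22.4, "GCN-ETA": 20.1, "GCN-MHSA": 28.7, "GCN-MHA": 15.2}
reported = {"SVM": 11.7, "CRNN": 44.6, "GCN-ETA": 49.8, "GCN-MHSA": 34.8, "GCN-MHA": 65.8}

# Samples per second if each sample is processed one after another.
throughput = {name: round(1000.0 / ms, 1) for name, ms in latency_ms.items()}

for name, value in throughput.items():
    print(f"{name}: {value} samples/s (table: {reported[name]})")
```

Under this reading, batched inference on a GPU could yield considerably higher throughput than the figures listed.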

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Wang, S.; Zhang, Z.; Hou, T.; Shen, J.; Wang, P.; Qiu, S.; Ma, L. GCN-MHA Method for Encrypted Malicious Traffic Detection and Classification. Electronics 2025, 14, 4627. https://doi.org/10.3390/electronics14234627
