1. Introduction

Variational Autoencoders (VAEs) are widely used in generative modeling and image reconstruction, providing an efficient framework to learn meaningful latent representations [1]. VAEs employ an encoder–decoder structure, where the encoder maps input data into a probabilistic latent space, and the decoder reconstructs the input from sampled latent variables. Fidelity in image reconstruction and generation refers to how well the generated images match the original images in terms of structural accuracy [2], detail preservation [3], and perceptual similarity [4]. However, classical VAEs (C-VAEs) often struggle to generate high-fidelity images due to inherent limitations in their latent representation [5]. Additionally, the decoder’s reliance on pixel-wise reconstruction loss leads to blurry and over-smoothed images, restricting their effectiveness in capturing complex data distributions [6].

Recent advancements in quantum computing offer promising avenues for overcoming these challenges by exploiting quantum phenomena such as superposition and entanglement. Several recent works have explored hybrid quantum–classical models for image processing and signal reconstruction, highlighting the potential of quantum encoding to enhance classical architectures [7, 8, 9, 10]. These advances have also introduced approaches that enhance deep generative models by leveraging quantum parallelism and superposition [11]. The integration of quantum circuits into neural networks, particularly within the VAE encoder, has shown potential to improve latent space representations, leading to enhanced feature extraction and more accurate reconstructions [12]. Quantum neural networks are one example of such hybrid models: they leverage quantum operations to better capture the underlying data structure, making them especially well suited for high-dimensional image tasks [13].

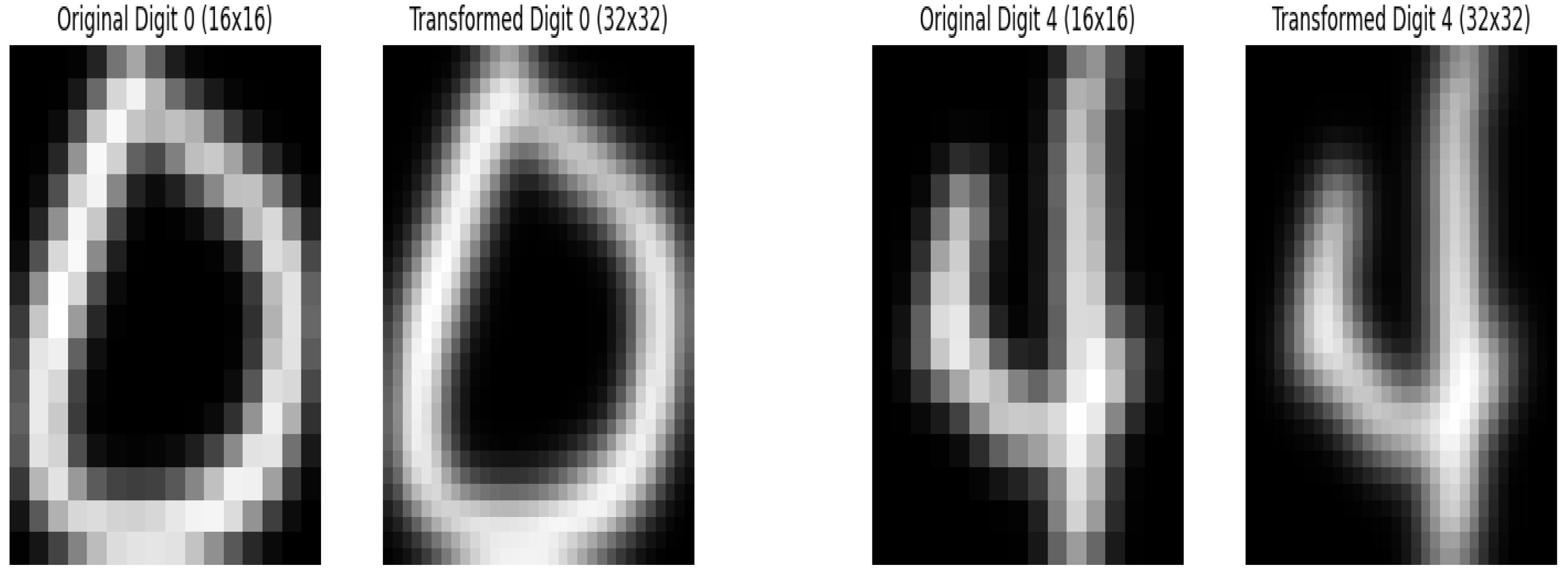

This study presents a hybrid model referred to as quantum VAE (Q-VAE). Q-VAE combines a VAE with a quantum down-sampling filter, which performs image resolution reduction and quantum encoding. This novel approach integrates quantum transformations within the encoder while utilizing fully connected layers for feature extraction. The decoder employs transposed convolution layers for up-sampling. Q-VAE is evaluated against C-VAE and the classical direct passing VAE (CDP-VAE), which incorporates windowed pooling filters for resolution reduction.

Since quantum computing, especially its integration into deep learning models, is still in its early stages, focusing on simpler datasets allows for a more controlled environment in which to understand the impact of quantum techniques on model performance. The datasets used in this study, MNIST [14] and USPS [15] (with image resolutions of 28 × 28 and 16 × 16 pixels, respectively), are relatively small, which is advantageous when exploring the potential of quantum circuits for feature extraction and latent space representation. Their low resolution reduces the computational burden compared to higher-resolution images. To ensure a fair evaluation, the MNIST dataset is first down-sampled from 28 × 28 to 16 × 16 pixels and then, like the USPS dataset, up-sampled to 32 × 32 pixels before being used as input to assess our model’s performance. While MNIST and USPS are simplified datasets, they remain standard benchmarks in quantum generative modeling. These datasets enable rigorous evaluation of quantum circuit stability, optimization convergence, and noise resilience before applying models to higher-dimensional or domain-specific data.

The proposed Q-VAE demonstrates how quantum encoding and reconstruction can enhance feature representation efficiency, an essential capability for future quantum sensing, communication, and computing systems. By focusing on grayscale images, we reduce the complexity of the problem, allowing for clearer insights into how quantum techniques can improve feature extraction and image reconstruction, particularly for simpler tasks like recognizing digits. Once the approach is validated on these datasets, the methods can be extended to more complex data, such as color or higher-resolution images.

Quantitative analysis has been performed to compare the effectiveness of the models using common performance metrics, such as the Fréchet Inception Distance (FID) and the Mean Squared Error (MSE), which are essential to assess the quality of generated images and the representation of the latent space [16, 17]. By leveraging these datasets, we can benchmark the Q-VAE against traditional models and explore whether the quantum component brings measurable improvements to the generative process.

The novelty of this work lies in introducing a Quantum Down-Sampling Filter that performs quantum feature compression directly within the encoder of a Variational Autoencoder. Unlike prior quantum VAEs that embed quantum layers inside the latent space or decoder components, the proposed model integrates quantum encoding as a down-sampling mechanism, allowing the encoder to capture higher-order correlations in fewer dimensions. This design bridges the gap between quantum state representation and classical feature extraction, providing an efficient hybrid model with reduced parameters and improved reconstruction fidelity.

In summary, this paper makes the following contributions:

Introduce a novel Quantum Variational Autoencoder (Q-VAE) that integrates a quantum down-sampling filter in the encoder for efficient feature compression.

Provide the first systematic comparison between encoder-only quantum encoding (Q-VAE), the classical CDP-VAE, and the C-VAE, highlighting the impact of quantum feature compression.

Avoid quantum entanglement and deep quantum layers in the encoder, focusing solely on an efficient quantum encoding circuit for latent space mapping.

Minimize quantum-trainable parameters, leading to more efficient model training and better utilization of quantum resources.

Evaluate whether quantum encoding improves the generative quality and latent space representation compared to classical methods.

The structure of this paper is as follows. Section 2 provides a review of related work on classical VAEs and their integration with quantum computing. Section 3 presents the background on VAEs. In Section 4, the methodology for the proposed Q-VAE model is introduced. Section 5 outlines the numerical simulations conducted, while Section 6 discusses the performance analysis. Finally, Section 7 concludes the paper, summarizing the key findings and suggesting potential avenues for future research.

2. Related Research

In recent years, VAEs have garnered significant attention in generative modeling, especially for image reconstruction tasks [1]. Traditional VAEs are valued for encoding data into a structured latent space and reconstructing input approximations, driving research efforts to enhance their architectures for improved image quality and feature extraction [18, 19, 20]. Several studies have focused on enhancing VAE performance using convolutional architectures [21, 22, 23, 24]. Gatopoulos et al. introduced convolutional VAEs to better capture image features, surpassing the performance of fully connected architectures on medium-resolution datasets such as CIFAR-10 and ImageNet32 [25]. Furthermore, combining VAEs with adversarial training, as in Adversarial Autoencoders (AAE) [26], incorporates discriminator networks to enhance the visual quality of generated images.

Quantum Generative Modeling

Quantum Machine Learning (QML) has emerged as a promising field, with studies exploring the integration of quantum circuits into generative models. Quantum GANs (QGANs) leverage quantum computing to enhance the data representation and image generation capabilities of GANs [27, 28, 29, 30]. Work on quantum VAEs [31, 32, 33, 34, 35, 36], on the other hand, builds on the foundational work by Khoshaman et al., who proposed a quantum VAE in which the latent generative process is modeled as a quantum Boltzmann machine (QBM). Their approach demonstrates that a quantum VAE can be trained end-to-end using a quantum lower bound to the variational log-likelihood, achieving state-of-the-art performance on the MNIST dataset [37]. They used the log-likelihood and the Evidence Lower Bound (ELBO) as evaluation measures and reported that the confidence interval of the numerical results is smaller than ±0.2 in all cases. Gircha et al. further advanced the quantum VAE architecture by incorporating quantum circuits in both the encoder, which utilizes multiple rotations, and the decoder [36].

Despite the successes of existing variational autoencoder architectures, most hybrid quantum–classical models primarily apply quantum circuits in the latent space or decoder, focusing on data generation rather than feature extraction. Such designs often increase computational complexity and may introduce instability during training. To address this gap, our proposed Quantum Variational Autoencoder (Q-VAE) integrates a quantum down-sampling filter with a single rotation gate and measurement exclusively in the encoder. This encoder-focused design efficiently compresses high-dimensional input features into a rich latent representation, enhancing feature extraction and reconstruction quality while maintaining a simple and stable classical decoder. By concentrating quantum processing in the encoder, our model distinguishes itself from prior approaches that rely on quantum circuits in the decoder or latent space.

4. Proposed Methodology

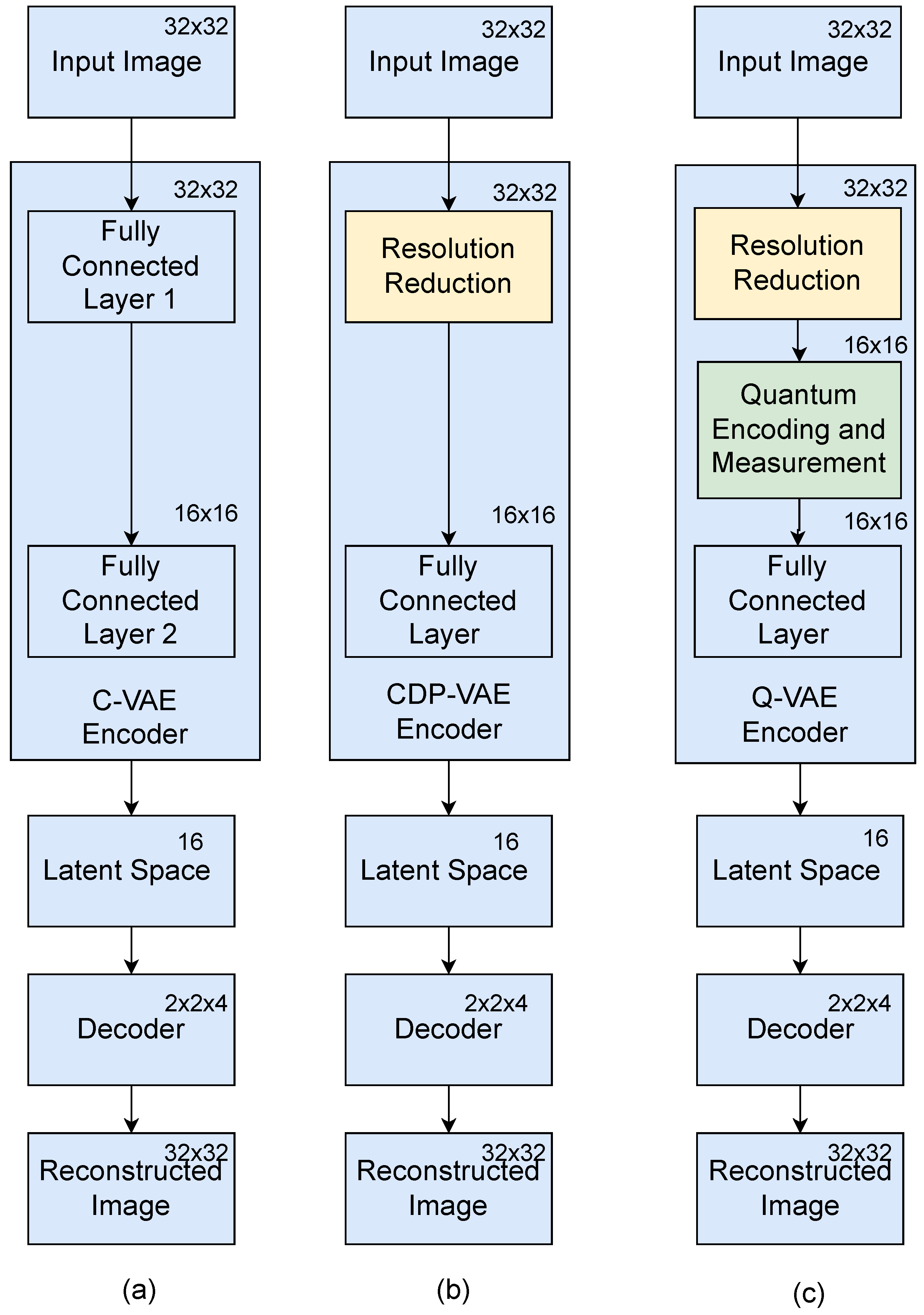

In this work, we introduce a novel down-sampling filter for Q-VAE that relies exclusively on quantum encoding for feature extraction. The paper investigates three distinct architectures for VAEs, each employing different encoding mechanisms to evaluate their feature extraction capabilities: C-VAE, CDP-VAE and Q-VAE. The framework of these models is presented in Figure 1. The goal of this comparison is to assess the effectiveness of quantum computing, classical neural networks, and simplified encoding strategies in the capture and processing of data for subsequent reconstruction. Although each model shares a common decoder architecture, the encoders differ in their approach, enabling the study of how each influences the encoding process and the quality of the reconstructed output.

4.1. Classical VAE (C-VAE) Encoder

C-VAE uses a traditional fully connected neural network as the encoder, which processes the input data through a series of hidden layers to output the mean ($\mu$) and log variance ($\log \sigma^2$) of the latent space distribution. This distribution is then sampled to obtain the latent variable z, which represents the encoded data in a lower-dimensional space. While the C-VAE relies entirely on classical neural networks for encoding, it remains highly effective in modeling data distributions and is widely used in practical applications. The decoder, which employs layers for up-sampling, reconstructs the input from this low-dimensional latent space, generating an output image or data structure that approximates the original input, as shown in Figure 1a.
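The sampling step described above is commonly implemented with the reparameterization trick. The following is a minimal sketch under that assumption; the text does not state which sampling scheme or KL term the authors use, and the function names `sample_latent` and `kl_to_standard_normal` are hypothetical.

```python
import math
import random


def sample_latent(mu, log_var, rng=random):
    """Draw z = mu + sigma * eps elementwise, with eps ~ N(0, 1).

    Assumption: the standard reparameterization trick; the paper only
    says that the latent distribution "is sampled".
    """
    z = []
    for m, lv in zip(mu, log_var):
        sigma = math.exp(0.5 * lv)  # log-variance -> standard deviation
        eps = rng.gauss(0.0, 1.0)
        z.append(m + sigma * eps)
    return z


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var)
    )


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for repeatable draws
    mu = [0.0] * 16         # 16 latent dimensions, as reported in the text
    log_var = [0.0] * 16
    print(len(sample_latent(mu, log_var, rng)))
    print(kl_to_standard_normal(mu, log_var))
```

Writing the sample as a deterministic function of $\mu$, $\log \sigma^2$, and external noise is what lets gradients flow back into the encoder.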

4.2. Classical Direct Passing (CDP-VAE) Encoder

CDP-VAE adopts a simpler approach to encoding by using windowed pooling for resolution reduction, as shown in Figure 1b. In this method, the input data (e.g., an image) is divided into 2 × 2 pixel windows, and only the first pixel of each window is retained, discarding the other three. This process reduces the resolution of the input data while preserving significant features. The reduced input is then passed through a fully connected layer for further encoding into the latent space. The CDP-VAE encoder is particularly useful for computationally constrained environments, where reducing the complexity of the encoding process can lead to faster computations while still capturing essential information for reconstruction. For instance, if the input image x is of dimension 32 × 32, this resolution reduction process reduces it to 16 × 16.
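The windowed pooling step can be illustrated with a short sketch. It assumes that the "first pixel" of each window is the top-left one and that images are stored as nested lists; the helper name `first_pixel_pool` is hypothetical.

```python
def first_pixel_pool(image, window=2):
    """Keep one pixel per window x window block and drop the rest.

    Assumption: the retained "first pixel" is the top-left pixel of
    each non-overlapping window.
    """
    return [row[::window] for row in image[::window]]


if __name__ == "__main__":
    # Toy 32 x 32 image whose pixel value encodes its own position.
    image = [[r * 32 + c for c in range(32)] for r in range(32)]
    reduced = first_pixel_pool(image)
    print(len(reduced), len(reduced[0]))  # 16 16
```

Because three of every four pixels are discarded without averaging, this step has no trainable parameters and costs only an indexing operation.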

4.3. Quantum VAE (Q-VAE) Encoder

Q-VAE integrates quantum computing into the encoding process, building on the CDP-VAE as shown in Figure 1c. The quantum encoder processes input data into a quantum state representation, utilizing quantum superposition to represent intricate relationships within the data. Transforming the input data into quantum states allows the Q-VAE to apply rotations that facilitate efficient processing and capture richer representations. The quantum-enhanced feature extraction is combined with a classical decoder that uses transposed convolution to reconstruct the input data, combining the strengths of quantum processing for feature extraction with classical techniques for data reconstruction.

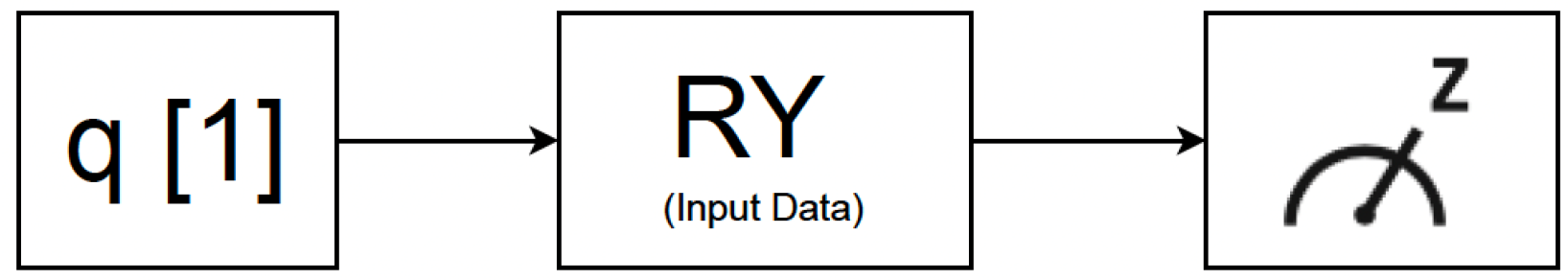

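The quantum encoding network, illustrated in Figure 2, uses a quantum encoding circuit designed to process the 16 × 16 pixel images produced by the windowed pooling layer. Each pixel sets the angle $\theta_i$ of a rotation applied to a qubit initialized in $|0\rangle$:

$$|\psi_i\rangle = R_y(\theta_i)\,|0\rangle,$$

where $R_y(\theta_i)$ is the rotation gate around the Y-axis. The rotation gate $R_y(\theta)$ can be expressed in terms of sine and cosine as:

$$R_y(\theta) = \cos\!\left(\frac{\theta}{2}\right) I - i \sin\!\left(\frac{\theta}{2}\right) Y,$$

where I is the identity matrix and Y is the Pauli Y-matrix. The explicit matrix form is:

$$R_y(\theta) = \begin{pmatrix} \cos(\theta/2) & -\sin(\theta/2) \\ \sin(\theta/2) & \cos(\theta/2) \end{pmatrix}.$$

For the rotation gate applied to the first pixel, $R_y(\theta_1)$ becomes:

$$R_y(\theta_1) = \begin{pmatrix} \cos(\theta_1/2) & -\sin(\theta_1/2) \\ \sin(\theta_1/2) & \cos(\theta_1/2) \end{pmatrix}.$$

The Pauli-Z operator is applied to measure the quantum state. The Pauli-Z measurement reads out each qubit in the computational basis, effectively capturing the binary features of the image.

Mathematically, the Pauli-Z operator acts on the quantum state $|\psi\rangle = \alpha|0\rangle + \beta|1\rangle$ as follows:

$$Z|\psi\rangle = \alpha|0\rangle - \beta|1\rangle,$$

where Z flips the phase of the qubit in the $|1\rangle$ state, while leaving the $|0\rangle$ state unchanged. This operation effectively translates quantum information into meaningful latent variables for the model. The resulting latent variables z are given by:

$$z_i = \langle \psi_i | Z | \psi_i \rangle = \cos\theta_i.$$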

The measurement on each qubit yields the compressed representation of the input. This procedure reduces the dimension of the input image from 1024 to 256 measured values. After the quantum circuit processes the input image and extracts quantum features, the output of the quantum circuit, as shown in Figure 1c, is passed to a fully connected layer. This transition enables the integration of quantum-encoded features with classical deep learning architectures.
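The encoding described in this subsection can be simulated classically for a single qubit. The sketch below is illustrative only: the pixel-to-angle mapping $\theta = \pi x$ is an assumption (the text does not specify it), the measured value is taken to be the Pauli-Z expectation, and the function names are hypothetical.

```python
import math


def ry_on_zero(theta):
    """Real amplitudes of R_y(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    return (math.cos(theta / 2.0), math.sin(theta / 2.0))


def pauli_z_expectation(state):
    """<Z> = |a0|^2 - |a1|^2 for a single-qubit state with real amplitudes."""
    a0, a1 = state
    return a0 * a0 - a1 * a1


def encode_pixels(pixels, scale=math.pi):
    """Encode pixel values in [0, 1] and return one <Z> value per pixel.

    Assumption: theta = scale * pixel; the scaling is hypothetical.
    """
    return [pauli_z_expectation(ry_on_zero(scale * x)) for x in pixels]


if __name__ == "__main__":
    features = encode_pixels([0.0, 0.25, 0.5, 1.0])
    print([round(f, 4) for f in features])
```

Under these assumptions each measured feature equals $\cos\theta$, so the circuit acts as a fixed elementwise nonlinearity on the pooled pixels before the fully connected layer.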


Mechanistic Justification: Although the proposed Q-VAE encoder employs only single-qubit rotation gates followed by Pauli-Z measurements, performance gains are achieved through the nonlinear mapping induced by the trigonometric structure of the rotations. Each pixel intensity is transformed into a quantum state, so each measured value is a nonlinear (trigonometric) function of that intensity; dependencies between features are then captured by the classical fully connected layers. This hybrid mechanism allows the model to learn higher-order correlations without requiring explicit qubit entanglement. By maintaining shallow depth and avoiding multi-qubit operations, the design mitigates barren plateau effects while still enhancing representational richness through quantum-state encoding.

Quantum Resources and Scalability: The proposed Q-VAE encoder utilizes a shallow quantum circuit consisting of 4 qubits, with a single rotation gate per qubit followed by measurement. The encoding is applied sequentially across input features, with no entanglement layers, keeping the circuit depth minimal. This design allows the quantum operations to run efficiently on near-term NISQ devices. By limiting the number of qubits and the circuit depth, the Q-VAE reduces the computational overhead and mitigates noise accumulation, ensuring stable training. The simplicity of the quantum encoder also facilitates parallel implementation if larger datasets or higher-dimensional inputs are considered, supporting scalability of the approach for future applications.

4.4. Shared Decoder Architecture

Despite the differences in their encoding mechanisms, all three models, Q-VAE, C-VAE, and CDP-VAE, share a common decoder architecture. This decoder is responsible for reconstructing the input data from the latent space representation. The decoder begins by transforming the latent variable (z) into a medium-resolution space through fully connected layers. Then a series of transposed convolution (deconvolution) layers progressively up-samples the data, restoring the original spatial dimensions. Finally, the output is passed through a sigmoid activation function to ensure that the pixel values are within the valid range (e.g., between 0 and 1 for image data). This shared decoder allows for a fair comparison of the encoding techniques, with the focus on how the different encoders influence the quality of the reconstructed image.
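To make the up-sampling arithmetic concrete, the sketch below applies the usual output-size formula for a transposed convolution (without dilation) and a sigmoid. The kernel, stride, and padding values and the 4 × 4 starting resolution are hypothetical; the text does not list the decoder's layer settings.

```python
import math


def conv_transpose_out(size, kernel, stride, padding, output_padding=0):
    """Spatial output size of a transposed convolution (dilation = 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def decoder_sizes(start, layers):
    """Track the feature-map side length through a stack of layers."""
    sizes = [start]
    for kernel, stride, padding in layers:
        sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))
    return sizes


def sigmoid(x):
    """Squash a decoder output into the (0, 1) pixel range."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical settings: three layers with kernel 4, stride 2, padding 1.
HYPOTHETICAL_LAYERS = [(4, 2, 1)] * 3

if __name__ == "__main__":
    print(decoder_sizes(4, HYPOTHETICAL_LAYERS))  # [4, 8, 16, 32]
    print(sigmoid(0.0))                           # 0.5
```

With these illustrative settings each layer doubles the side length, so three layers are enough to return to the 32 × 32 input resolution.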

6. FID and MSE Score Performance Evaluation and Discussion

To evaluate the performance of the proposed models, we used a combination of several key metrics: FID score [

16], training and testing loss, and MSE [

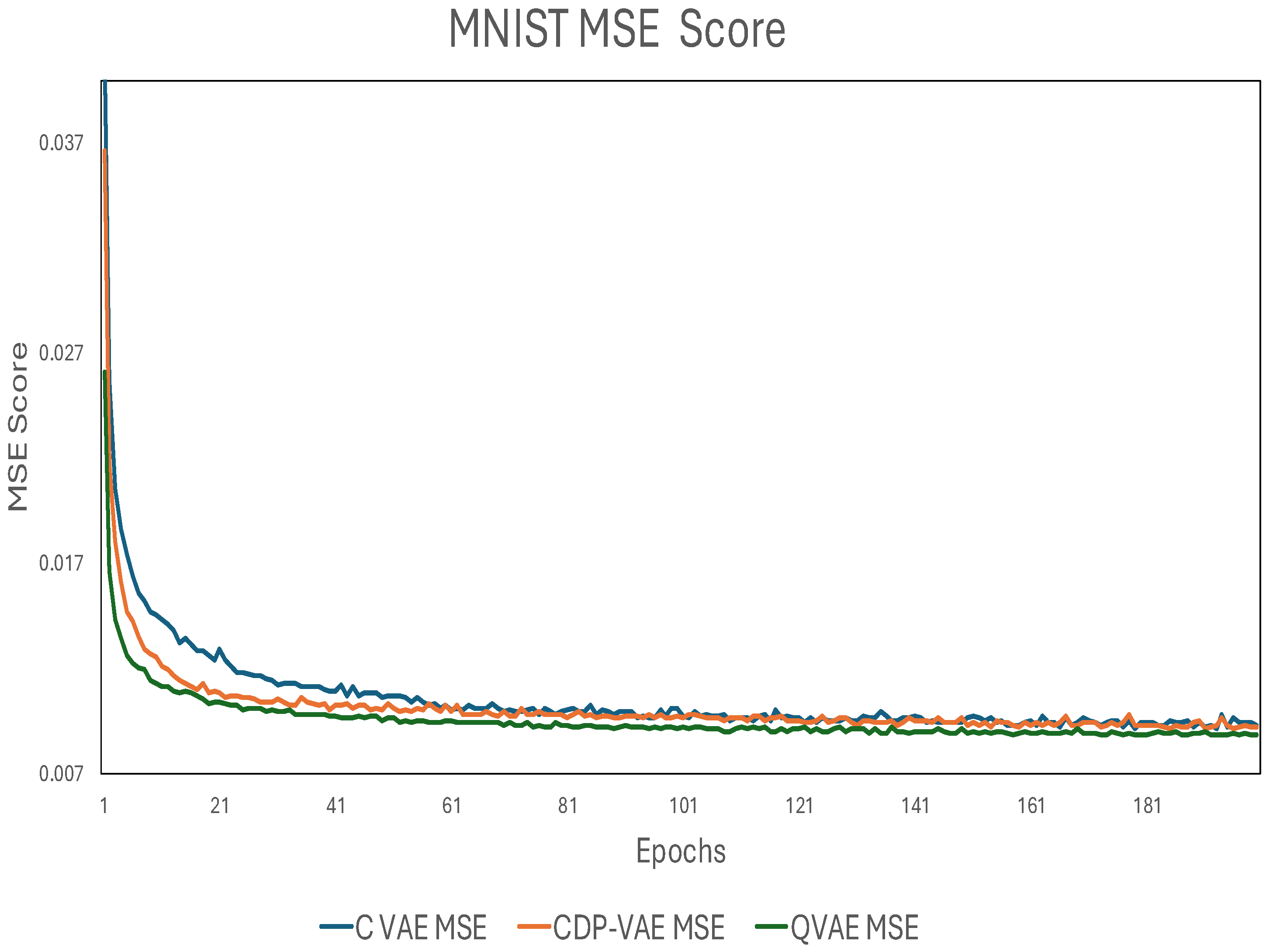

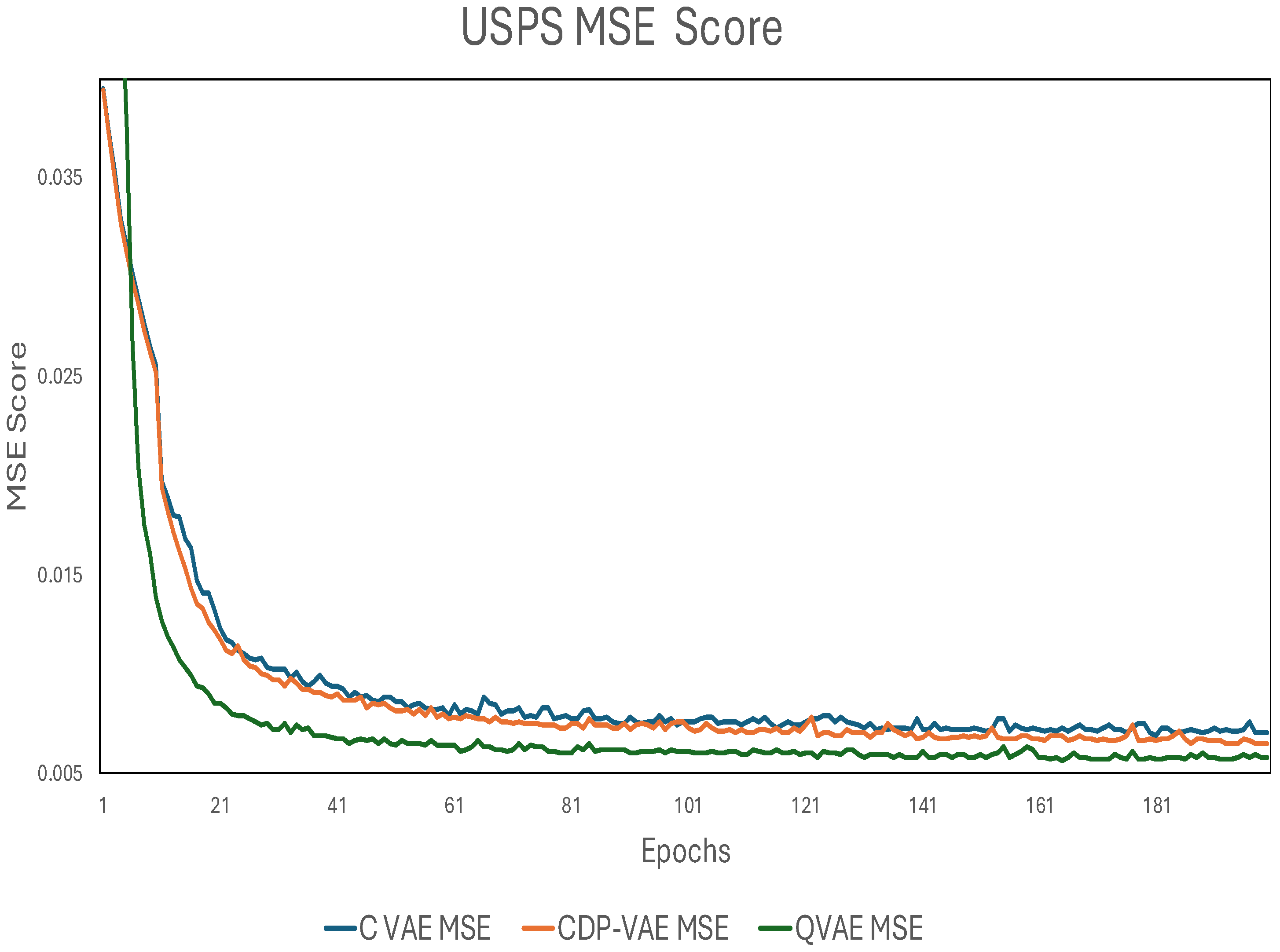

17]. Each of these metrics provides distinct insights into the model’s ability to generate better-quality images and generalize to new data: FID measures the similarity between the distribution of real and generated images. A lower FID score indicates that the generated images are closer in distribution to the real images, reflecting higher image fidelity and quality. The FID score is particularly useful for assessing the realism of generated images in generative models. MSE measures the average squared difference between the generated and real images. A lower MSE indicates that the generated images are closer to the real images in terms of pixel-wise accuracy. This metric is commonly used to evaluate the reconstruction quality in generative models, with lower values indicating better performance. MNIST and USPS MSE results are shown in

Figure 5 and

Figure 6 respectively. For all experiments, a fixed random seed was used to ensure reproducibility of the FID and MSE results across all models.
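The MSE definition above translates directly into code. The sketch below assumes images are nested lists of pixel values in [0, 1] and that the error is averaged over all pixels of a single image; the function name `mse` is hypothetical, and the averaging convention over a whole dataset is not specified in the text.

```python
def mse(original, reconstructed):
    """Mean squared pixel-wise error between two same-sized images."""
    flat_o = [p for row in original for p in row]
    flat_r = [p for row in reconstructed for p in row]
    if len(flat_o) != len(flat_r):
        raise ValueError("images must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(flat_o, flat_r)) / len(flat_o)


if __name__ == "__main__":
    target = [[0.0, 1.0], [1.0, 0.0]]
    blurry = [[0.5, 0.5], [0.5, 0.5]]
    print(mse(target, target))  # 0.0
    print(mse(target, blurry))  # 0.25
```

The second call shows why MSE alone can favor over-smoothed outputs: a uniformly gray reconstruction has a moderate error even though it contains no structure, which is one reason MSE is reported alongside FID.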

By combining these metrics, we gain a comprehensive understanding of how well our models are performing in terms of both image quality and generalization ability. The results are shown in Table 1 below:

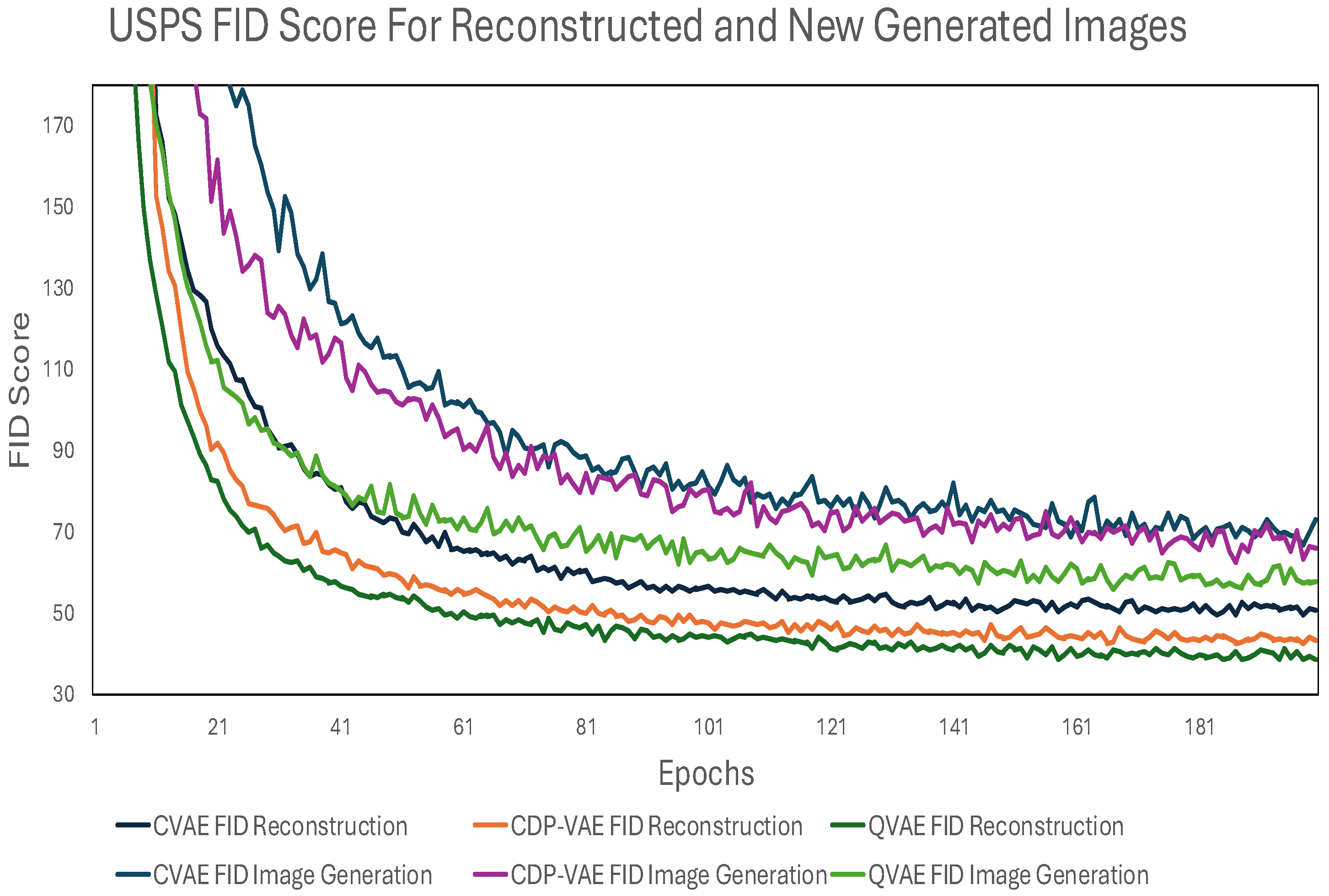

The FID score was computed for (1) images reconstructed from the input images and (2) images generated from random noise, giving us insight into how well the models perform in generating realistic images.
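For reference, FID is conventionally defined by fitting Gaussians to the Inception-network features of the real and generated image sets and taking the Fréchet distance between them. The notation below ($\mu_r, \Sigma_r$ for real images, $\mu_g, \Sigma_g$ for generated images) is introduced here for illustration and is not taken from this paper.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g
  - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

The first term penalizes a shift in the mean feature vector and the second a mismatch in feature covariance, so the score is zero only when both Gaussian fits coincide.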

The improvements in FID and MSE metrics can plausibly be attributed to the quantum down-sampling filter in the Q-VAE encoder. By applying quantum encoding to the input features, the Q-VAE compresses high-dimensional data into a latent representation that preserves higher-order correlations. This structured latent space allows the classical decoder to reconstruct inputs more accurately, leading to better FID and MSE performance than the C-VAE and CDP-VAE baselines.

The variation of FID score as a function of the number of epochs for MNIST is shown in Figure 7.

For MNIST reconstruction, the C-VAE achieves an FID score of 40.7, indicating a reasonable ability to reconstruct images. The Q-VAE, which encodes the data with RY rotations and Pauli-Z measurements, achieves a lower FID score of 37.3. This improved score suggests that the quantum model produces more faithful image reconstructions, capturing complex relationships in the data more effectively than the classical model. The CDP-VAE shows a slight improvement over the C-VAE with an FID score of 39.7. Although this approach is less complex, it lacks the feature extraction capabilities of the Q-VAE, which leads to lower reconstruction fidelity. A similar trend holds for USPS, where the reconstruction FID scores are 50.4 for the C-VAE, 42.9 for the CDP-VAE, and 38.5 for the Q-VAE.

For image generation, the Q-VAE achieved an FID score of 78.7 on MNIST and 57.6 on USPS, indicating generated images that are closer in distribution to the original datasets. By comparison, the C-VAE and CDP-VAE scored 94.4 and 93.3 on MNIST, and 73.1 and 66.1 on USPS, respectively. These results highlight the effectiveness of the Q-VAE in handling simpler grayscale datasets, where it demonstrates strong feature extraction and generation capabilities.

The CDP-VAE is a VAE in which, instead of a learned first encoding stage, the input data is passed directly through windowed pooling with minimal transformation. Despite its simplicity, the CDP-VAE still achieved relatively good performance on the USPS dataset, with a generation FID score of 66.1, as shown in Table 1.

Figure 8 further illustrates that the Q-VAE consistently achieves a lower FID score for reconstruction compared to both CDP-VAE and C-VAE, indicating its superior ability to generate high-fidelity reconstructions.

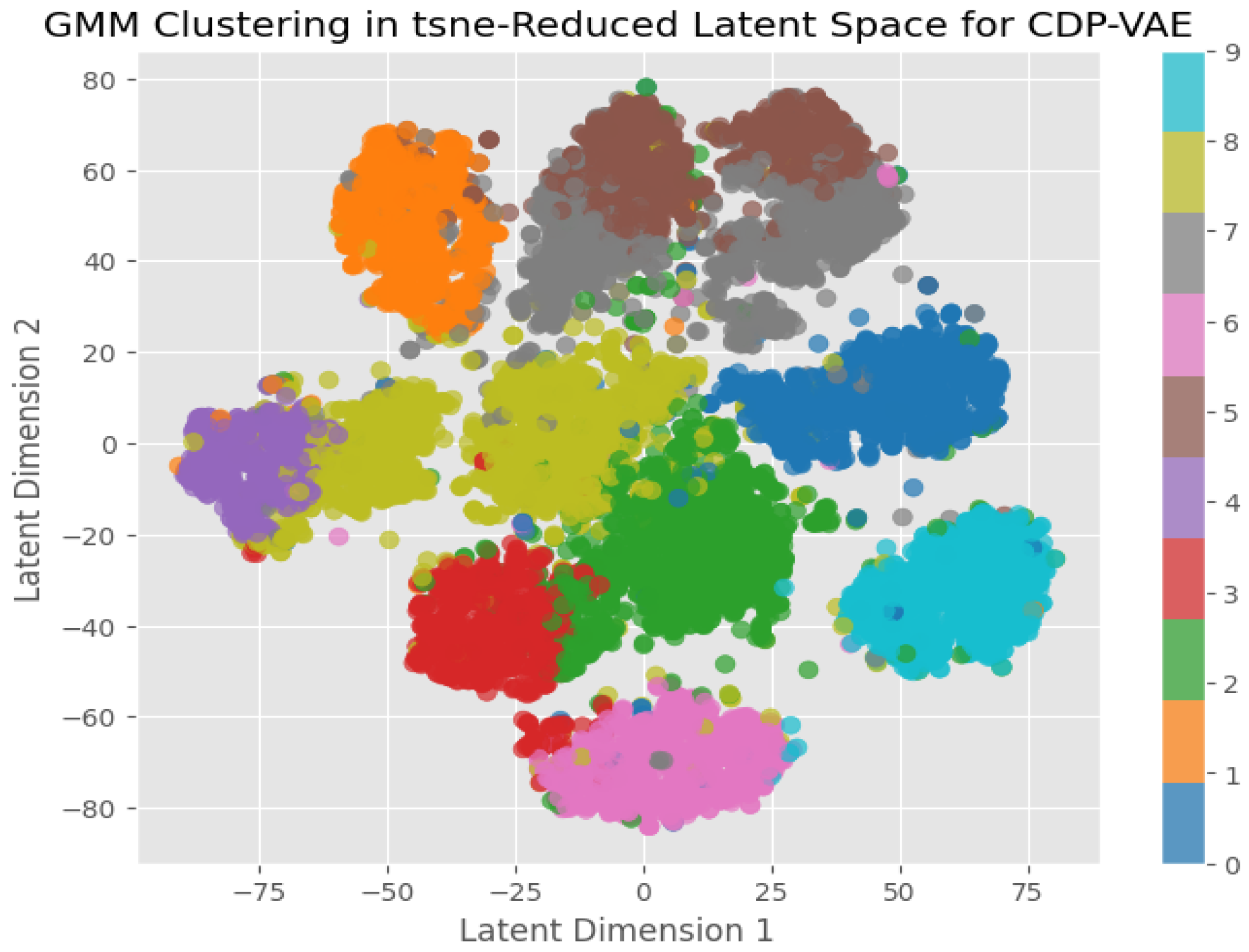

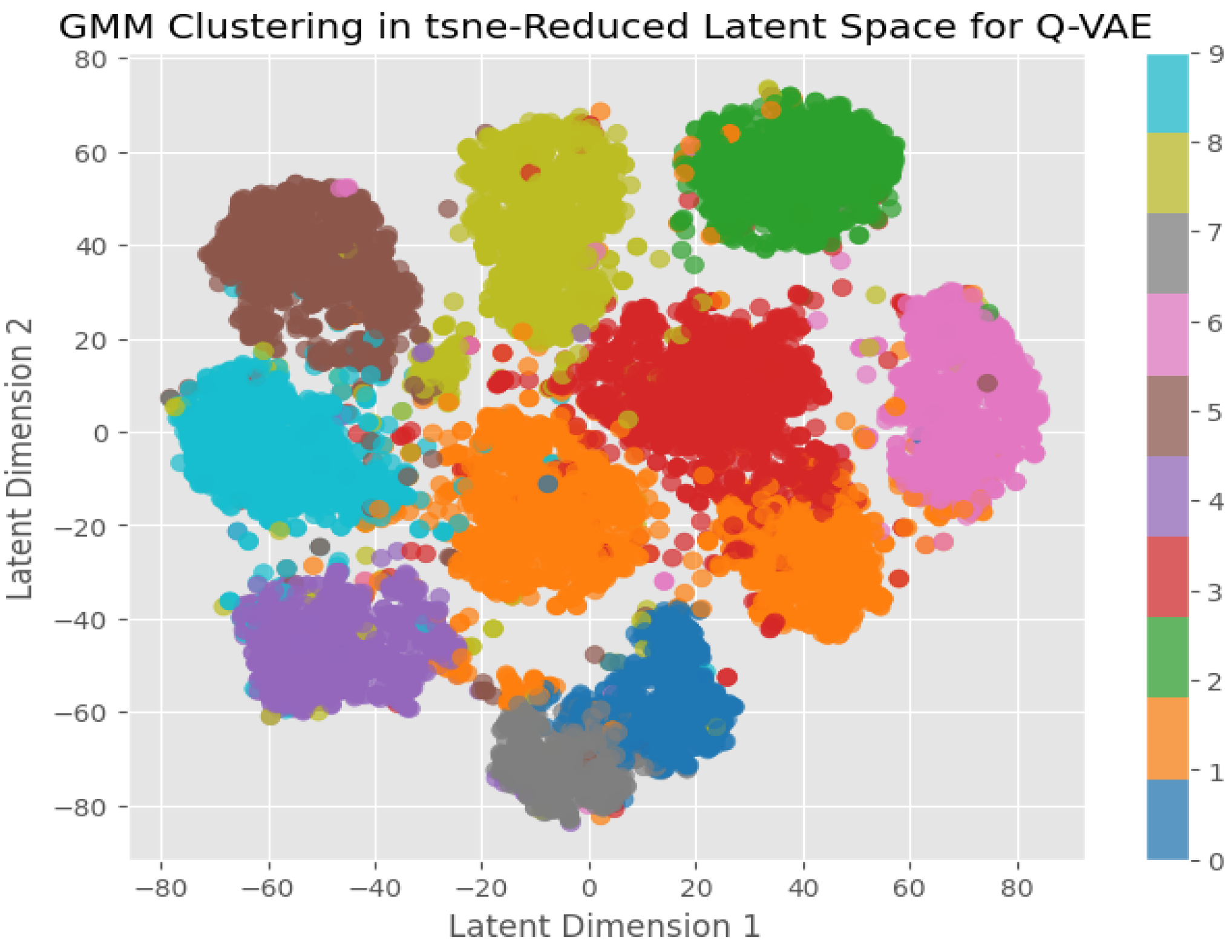

6.1. Latent Space Visualization

To better understand the expressiveness of the model, the latent vectors are visualized using the dimensionality reduction technique t-SNE [41], which shows the clustering structure in the latent space. Furthermore, Gaussian Mixture Model (GMM) clustering is used to assess how well the learned representations can be grouped, providing information on the model’s capacity to capture meaningful variations and structure in the data. The colors in the visualization reflect different classes, with well-separated colors indicating efficient feature learning and overlapping colors indicating class similarities or ambiguity. Distinct clusters suggest that the model has learned meaningful feature representations, whereas overlapping clusters could indicate insufficient separation in the latent space. By combining t-SNE and GMM clustering, we can assess whether the model effectively organizes data points and captures key properties for classification or reconstruction. The latent spaces of C-VAE, CDP-VAE and Q-VAE are visualized in Figure 9, Figure 10 and Figure 11, respectively.

From the figures, it is evident that the latent spaces of CDP-VAE and Q-VAE do not differ significantly from that of C-VAE, but demonstrate competitive performance. For further investigation, we analyze the reconstructed and newly generated images in the following sections.

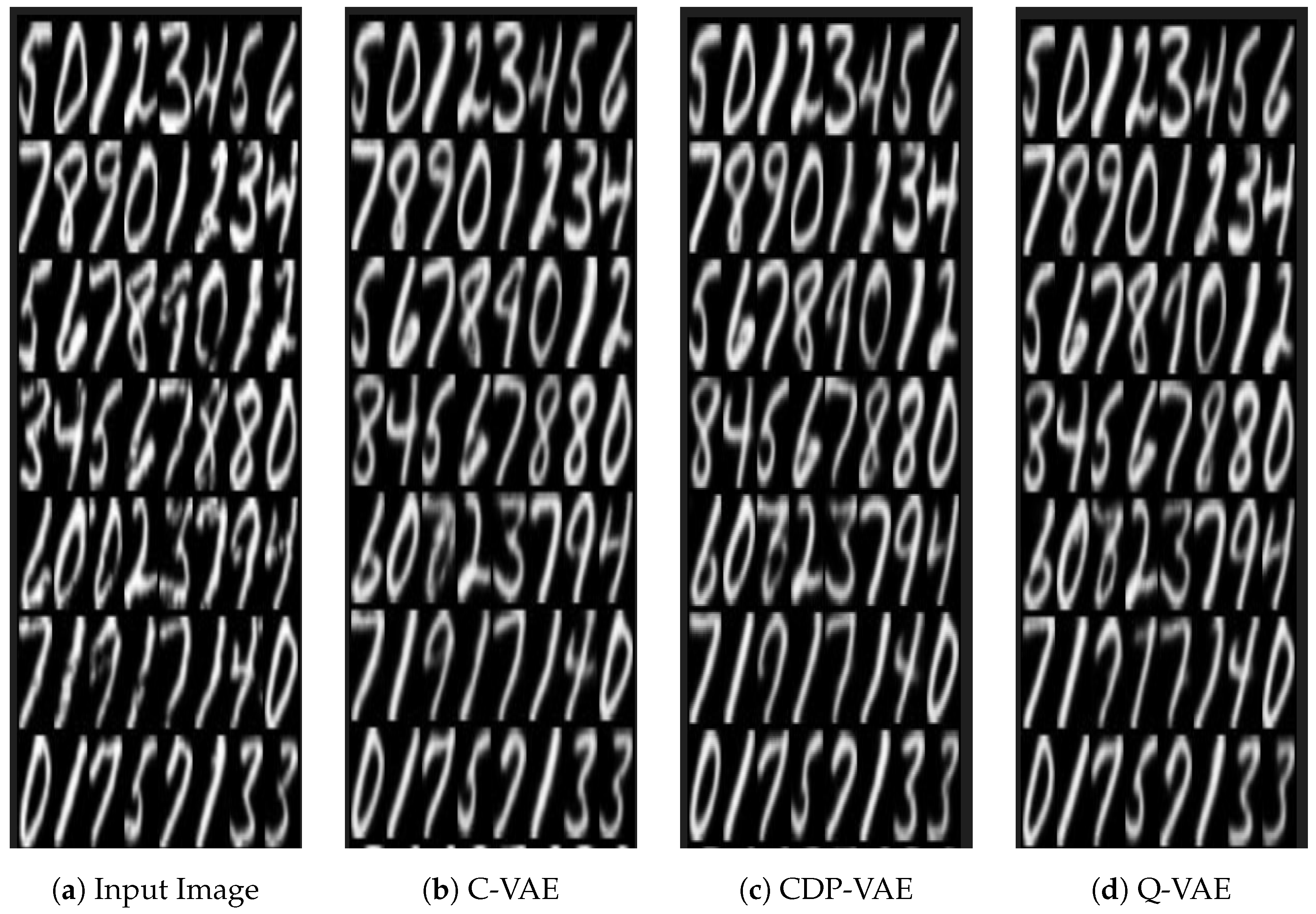

6.2. Reconstructed Images

Figure 12 displays reconstructed grayscale digit images from the MNIST dataset, arranged in a grid format. The input image is first downscaled from 28 × 28 to 16 × 16, and then upscaled to 32 × 32. This sequence reveals that some images lose details during resolution reduction, leading to inaccuracies in their reconstruction. For example, the digit ‘3’ in the 4th row and 1st column is successfully reconstructed by Q-VAE, whereas the C-VAE and CDP-VAE struggle to generate a well-defined version. However, in the 5th row and 3rd column, the digit ‘0’ shows noticeable noise due to the resolution reduction, making it difficult to reconstruct accurately. In this case, C-VAE incorrectly generates a ‘7’, while Q-VAE produces a hybrid image that blends ‘7’ and ‘0’. Most digits retain their recognizable shapes, but some exhibit blurriness or distortions, for example at column 3, row 5, and for the digit ‘6’, where it is difficult to tell whether the image shows a ‘7’ or a ‘9’, indicating challenges in capturing finer details during the reconstruction process. These issues may stem from factors such as the limited latent dimension used for the model (i.e., 16), preprocessing steps like resizing or normalization, or a suboptimal model architecture. Nevertheless, the FID evaluation above shows that the proposed Q-VAE achieves better image quality on MNIST.

To provide a more rigorous quantitative evaluation of reconstruction performance, we computed both the Fréchet Inception Distance (FID) and Mean Squared Error (MSE) metrics for all three models: C-VAE, CDP-VAE, and Q-VAE. Table 1 summarizes these results. As shown, Q-VAE consistently achieves the lowest FID and MSE values across both MNIST and USPS datasets. This indicates that the quantum encoding enhances both reconstruction fidelity and feature richness, outperforming the classical approaches in quantitative terms as well as qualitative appearance.

On the MNIST dataset, the Q-VAE achieved the lowest MSE of 0.0088, demonstrating its ability to produce high-fidelity reconstructions compared to the other models. The quantum-based encoding method enables the Q-VAE to extract richer feature representations, leading to more accurate reconstructions, even on highly structured images like those in the MNIST dataset. The C-VAE, with an MSE of 0.0093, showed slightly higher error than the Q-VAE, indicating that while it performs well, its feature extraction is somewhat less effective than that of the quantum model. Despite its relatively low MSE, the classical model’s performance may be constrained by the limitations of traditional neural network-based encoding, which does not capture the structure of the data as efficiently as the quantum encoding. The CDP-VAE, with an MSE of 0.0092, performs slightly better than the C-VAE but still does not outperform the Q-VAE, as shown in Figure 12.

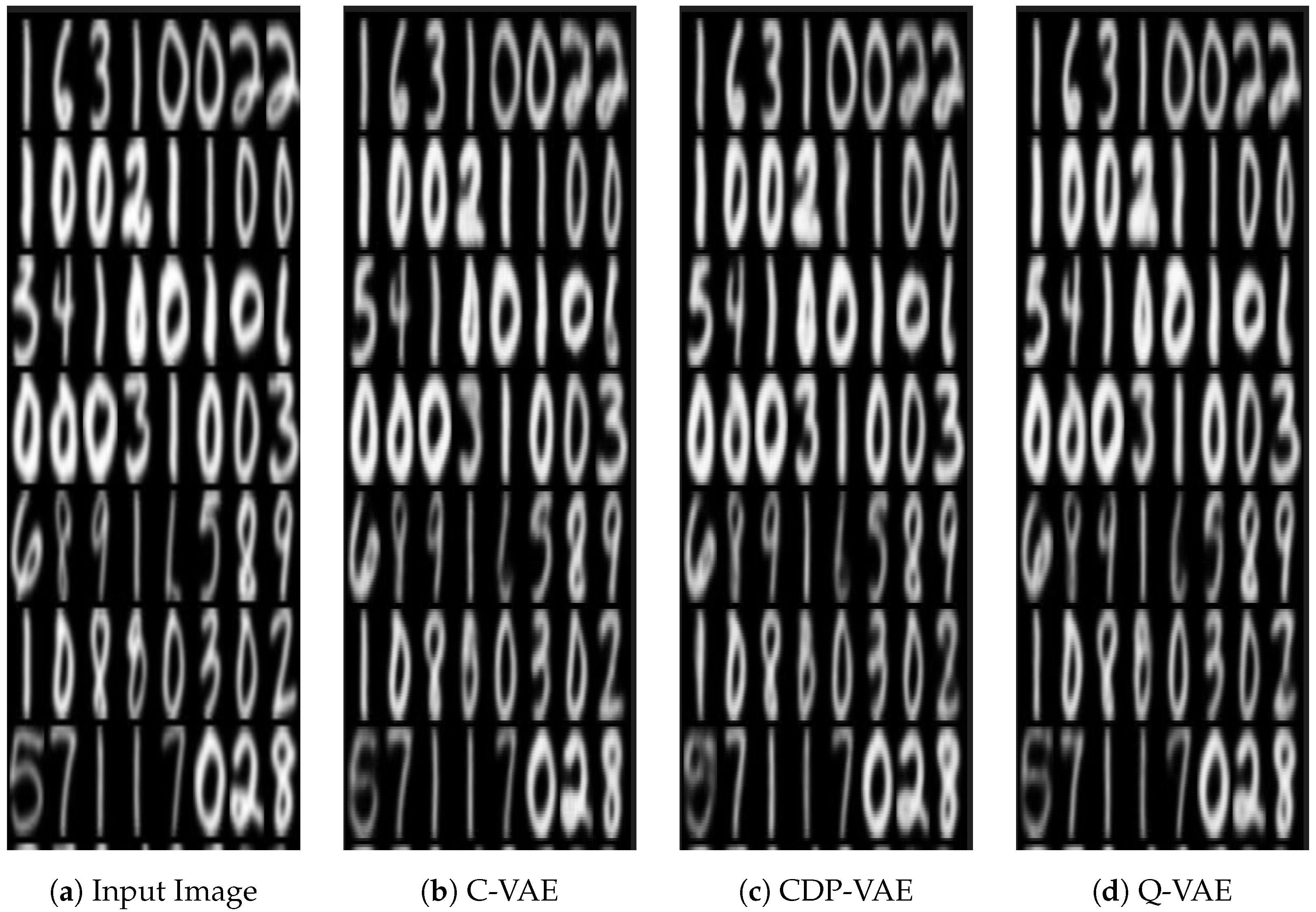

USPS images reconstructed by the three VAE models are shown in Figure 13. For the USPS dataset, the FID score of the C-VAE indicates that the model can generate images with reasonable fidelity (i.e., the generated images resemble real USPS digits), but it lags behind the Q-VAE. The quantum model’s ability to apply more complex transformations in the encoder enables it to capture more intricate patterns and feature representations in the data. This allows the Q-VAE to achieve lower FID scores (indicating higher image fidelity) than the CDP-VAE and C-VAE, as seen in the results for the USPS dataset.

For MSE, the Q-VAE again outperformed the other models, achieving a score of 0.0058. This lower error indicates that the quantum model is particularly effective at capturing the patterns in the USPS images. The Q-VAE’s quantum-enhanced encoding allows it to extract intricate features from the grayscale images, leading to more accurate reconstructions. Its performance on USPS highlights the potential of quantum circuits in improving image generation tasks. The C-VAE, with an MSE of 0.0070, performed worse than the CDP-VAE and Q-VAE on USPS, reflecting the same trend observed on MNIST. While still a competitive model, the C-VAE’s ability to reconstruct images is limited by its classical encoding mechanism. The CDP-VAE, with an MSE of 0.0065, performs better than the C-VAE (0.0070) but still lags behind the Q-VAE (0.0058) on USPS. The reconstructed images show that the Q-VAE reconstructs the digits well and compares favorably with the C-VAE and CDP-VAE.

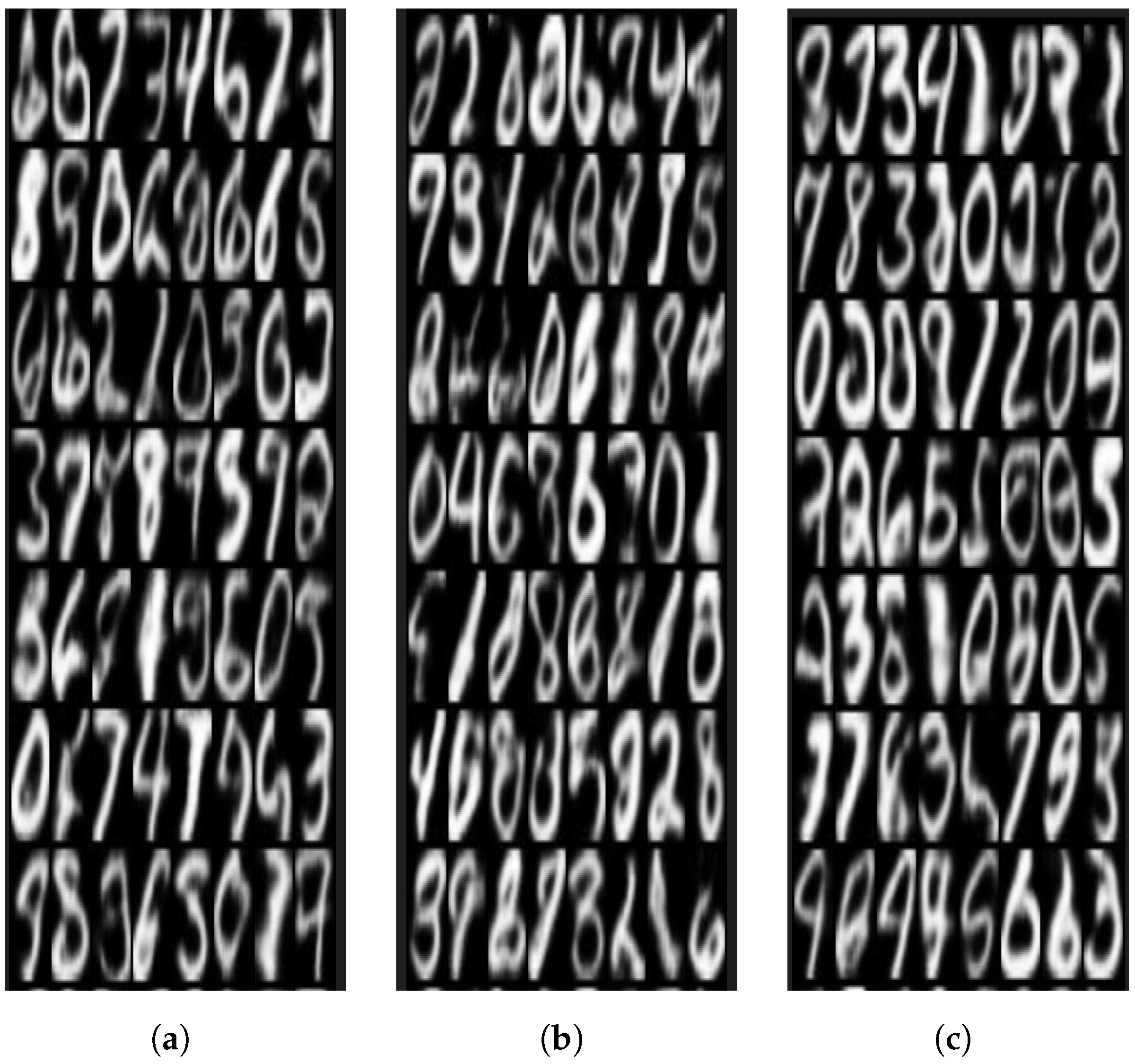

6.3. New Generated Images

The performance of the three models in generating images from random noise varies considerably. The generated images presented were captured at epoch 200. The C-VAE requires more time to converge, necessitating longer training, so we evaluate the models at epoch 200 to assess their performance after sufficient training. In the initial epochs, the Q-VAE converged quickly, generating recognizable digits by epoch 20, while the other models were unable to generate digits at that point. The Q-VAE outperforms the other models, achieving an FID score of 78.7 at epoch 200. In contrast, the C-VAE struggles with random noise, resulting in a much higher FID score of 94.4, indicating poor performance when generating from the latent space with a Gaussian variable as input. The CDP-VAE also faces challenges with noise but performs slightly better than the C-VAE, with an FID score of 93.3. While both the C-VAE and CDP-VAE show limitations in noise handling, the Q-VAE demonstrates a notable advantage in generating more realistic images from random variables. MNIST images generated from noise are shown in Figure 14:

These images are organized in a grid format, with varying levels of clarity and detail. While some digits are clearly defined and resemble their expected shapes, others appear blurry, distorted, or lack fine structural details, indicating limitations in the model’s ability to fully capture the underlying data distribution. These artifacts could result from constraints such as an insufficiently expressive latent space, challenges in training stability, or limitations in the model architecture. For instance, models with lower-resolution latent spaces may struggle to encode the nuanced variations required to generate sharp, realistic images.

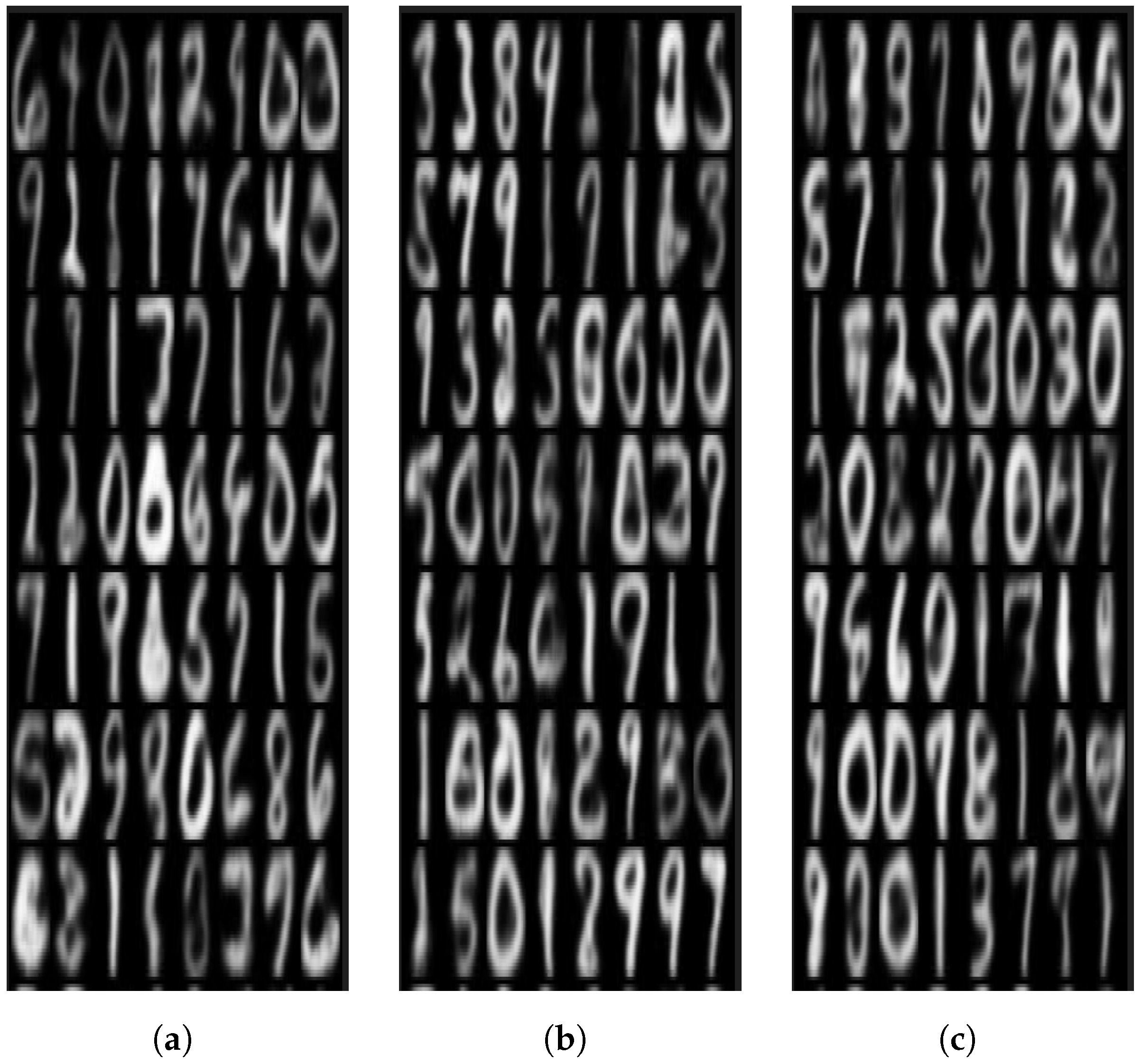

Interestingly, the Q-VAE has demonstrated better performance in generating images from noise, as reflected in improved FID scores. The quantum encoding operations appear to be effective at capturing complex patterns and correlations in medium-resolution data, enabling more detailed and coherent outputs. Similar results were obtained for USPS, as shown in Figure 15.

An important consideration in quantum generative models is the barren plateau phenomenon, where gradients vanish as circuit depth increases. The Q-VAE mitigates this issue by employing a shallow encoder without entangling layers, preserving gradient magnitude during optimization. Empirically, training curves showed consistent convergence without significant gradient decay, suggesting that the proposed circuit design limits barren plateau effects and supports stable training on NISQ-scale devices.

To provide a quantitative evaluation of image generation quality, we assessed the models using three objective metrics: Fréchet Inception Distance (FID), Mean Squared Error (MSE), and Structural Similarity Index Measure (SSIM). The Q-VAE consistently achieved the lowest FID and MSE values while maintaining a higher SSIM compared to both C-VAE and CDP-VAE. This demonstrates that the quantum encoder captures richer latent features that translate into more realistic and structurally coherent image generations. On the MNIST dataset, Q-VAE achieved an FID of 78.7, MSE of 0.0088, and SSIM of 0.934, outperforming C-VAE (FID 94.4, MSE 0.0093, SSIM 0.901) and CDP-VAE (FID 93.3, MSE 0.0092, SSIM 0.913). Similarly, on the USPS dataset, Q-VAE achieved lower FID and MSE values, confirming its superior performance in both low- and medium-resolution image generation tasks. These results quantitatively validate the qualitative improvements observed in Figure 14 and Figure 15.

The enhanced visual quality of reconstructed images arises from the encoder-focused quantum design. By keeping the decoder classical and limiting quantum operations to the encoder, the latent space remains well-structured and stable, allowing the decoder to accurately map compressed quantum features back to the input space. Additionally, minimizing quantum-trainable parameters reduces instability during training, which helps preserve fine-grained details in the reconstructions.

Trainable Parameters

The results indicate that both the proposed Q-VAE and the CDP-VAE are considerably more parameter-efficient than the C-VAE. The C-VAE has 407,377 trainable parameters, with 299,424 allocated to the encoder and 107,953 to the decoder. The CDP-VAE reduces the total to 144,977, primarily due to its more compact encoder, which contains only 37,024 parameters. The decoder structure is unchanged across all models, with 107,953 parameters. The Q-VAE also has 144,977 trainable parameters, because its quantum circuit does not introduce any additional trainable parameters. The reduction relative to the C-VAE is therefore attributable to the CDP design rather than to the quantum encoding itself. This demonstrates the efficiency of the CDP approach in reducing parameter overhead, while the quantum encoding provides enhanced feature extraction at no additional parameter cost.
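The parameter counts above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the text; the Q-VAE encoder count of 37,024 is not stated directly and is inferred here from its total (144,977) minus the shared decoder (107,953). The names `ENCODER_PARAMS`, `total_params`, and `reduction_percent` are illustrative.

```python
# Trainable-parameter counts quoted in the text.
DECODER_PARAMS = 107_953  # identical decoder in all three models
ENCODER_PARAMS = {
    "C-VAE": 299_424,
    "CDP-VAE": 37_024,
    # Inferred: Q-VAE total (144,977) minus the shared decoder.
    "Q-VAE": 144_977 - DECODER_PARAMS,
}


def total_params(model):
    """Encoder plus decoder trainable parameters for `model`."""
    return ENCODER_PARAMS[model] + DECODER_PARAMS


def reduction_percent(before, after):
    """Percentage by which `after` is smaller than `before`."""
    return 100.0 * (before - after) / before


if __name__ == "__main__":
    for name in ENCODER_PARAMS:
        print(f"{name}: total = {total_params(name):,}")
    total_cut = reduction_percent(total_params("C-VAE"), total_params("CDP-VAE"))
    encoder_cut = reduction_percent(ENCODER_PARAMS["C-VAE"], ENCODER_PARAMS["CDP-VAE"])
    print(f"total reduction:   {total_cut:.1f}%")
    print(f"encoder reduction: {encoder_cut:.1f}%")
```

The totals reproduce the reported 407,377 and 144,977. This corresponds to about 64% fewer trainable parameters overall and about 88% fewer in the encoder.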

These findings highlight the role of CDP-VAE in optimizing parameter efficiency within VAE architectures while ensuring high-fidelity image reconstruction. Although C-VAEs remain a widely adopted choice in machine learning, the combination of CDP-VAE’s parameter reduction and Q-VAE’s quantum encoding presents a compelling direction for future research. Potential applications include digital imaging, healthcare diagnostics, and creative arts, where both high-quality image generation and computational efficiency are crucial.

However, despite these advantages, the adoption and effectiveness of Q-VAE still face challenges, including the scalability of quantum hardware and the complexity of quantum algorithms. Continued research and development in quantum machine learning are essential to further explore the integration of quantum encoding in generative models and to unlock the full potential of quantum-enhanced models in real-world applications.

6.4. Scalability and Practical Considerations

The Q-VAE architecture exhibits favorable scaling characteristics. The number of quantum gates grows linearly with the latent dimensionality, ensuring computational tractability as input resolution increases. Such scalability makes the model suitable for future integration into quantum communication and sensing systems, where efficient encoding of high-dimensional data is crucial. Moreover, the modular nature of the Q-VAE allows adaptation to higher-resolution image and signal domains without exponential resource growth.
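As a rough illustration of what linear growth means in practice, the sketch below counts gates for a hypothetical shallow layout: one qubit per latent dimension, one single-qubit rotation per qubit, and a nearest-neighbour chain of two-qubit entangling gates in each layer. This layout and the function name `gate_count` are assumptions made for illustration only; the actual Q-VAE circuit may be organized differently.

```python
def gate_count(n_qubits, n_layers=1):
    """Gate count for a hypothetical shallow circuit (illustrative only).

    Assumed layout per layer: one rotation on each qubit, followed by
    a linear chain of (n_qubits - 1) two-qubit entangling gates.
    """
    if n_qubits < 1 or n_layers < 1:
        raise ValueError("n_qubits and n_layers must be positive")
    rotations = n_qubits
    entanglers = n_qubits - 1
    return n_layers * (rotations + entanglers)


if __name__ == "__main__":
    # With the number of layers fixed, each doubling of the register
    # roughly doubles the gate count instead of squaring it.
    for n in (4, 8, 16, 32):
        print(f"{n:>2} qubits -> {gate_count(n)} gates")
```

Under these assumptions each extra qubit adds a constant two gates per layer, so the cost stays proportional to the latent dimensionality as long as the depth is held fixed.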

7. Conclusions

In this study, we introduced the quantum down-sampling filter for Q-VAE, a novel model that integrates quantum computing into the encoder component of a traditional VAE. We evaluated the model on the MNIST and USPS digit datasets, initially up-scaled to 32 × 32 for consistency. For a fair comparison, we tested the quantum-enhanced model alongside a classical counterpart (C-VAE) and a classical direct passing model (CDP-VAE), which performs the resolution reduction classically. Our experimental results demonstrate that Q-VAE offers significant advantages over classical VAE architectures across various performance metrics. In particular, Q-VAE consistently outperforms C-VAE, achieving lower FID scores, which indicate superior image fidelity and better-quality reconstructions. The quantum-enhanced encoder captures more intricate features of the images, leading to improved generative performance.

One key finding of this study is the significant reduction in the number of trainable parameters in the CDP-VAE’s encoder. While the C-VAE consists of over 407,000 trainable parameters, the CDP-VAE lowers the total to 144,977. Furthermore, the Q-VAE leverages quantum encoding, which does not add any trainable parameters. This combination points to the efficiency of quantum-based encoding methods and the potential for more scalable and resource-efficient models without increasing computational complexity. The results highlight the practical advantages of incorporating quantum computing into VAEs, particularly for tasks that require high-quality image generation and feature extraction. The Q-VAE not only improves image quality but also enhances computational efficiency, making it a promising candidate for future applications in areas such as computer vision and data synthesis. These findings suggest that further exploration of quantum-enhanced machine learning techniques could unlock new possibilities in generative modeling and image reconstruction, offering both performance improvements and computational savings.