Data Traffic Prediction for 5G and Beyond: Emerging Trends, Challenges, and Future Directions: A Scoping Review

Abstract

1. Introduction

2. Related Works

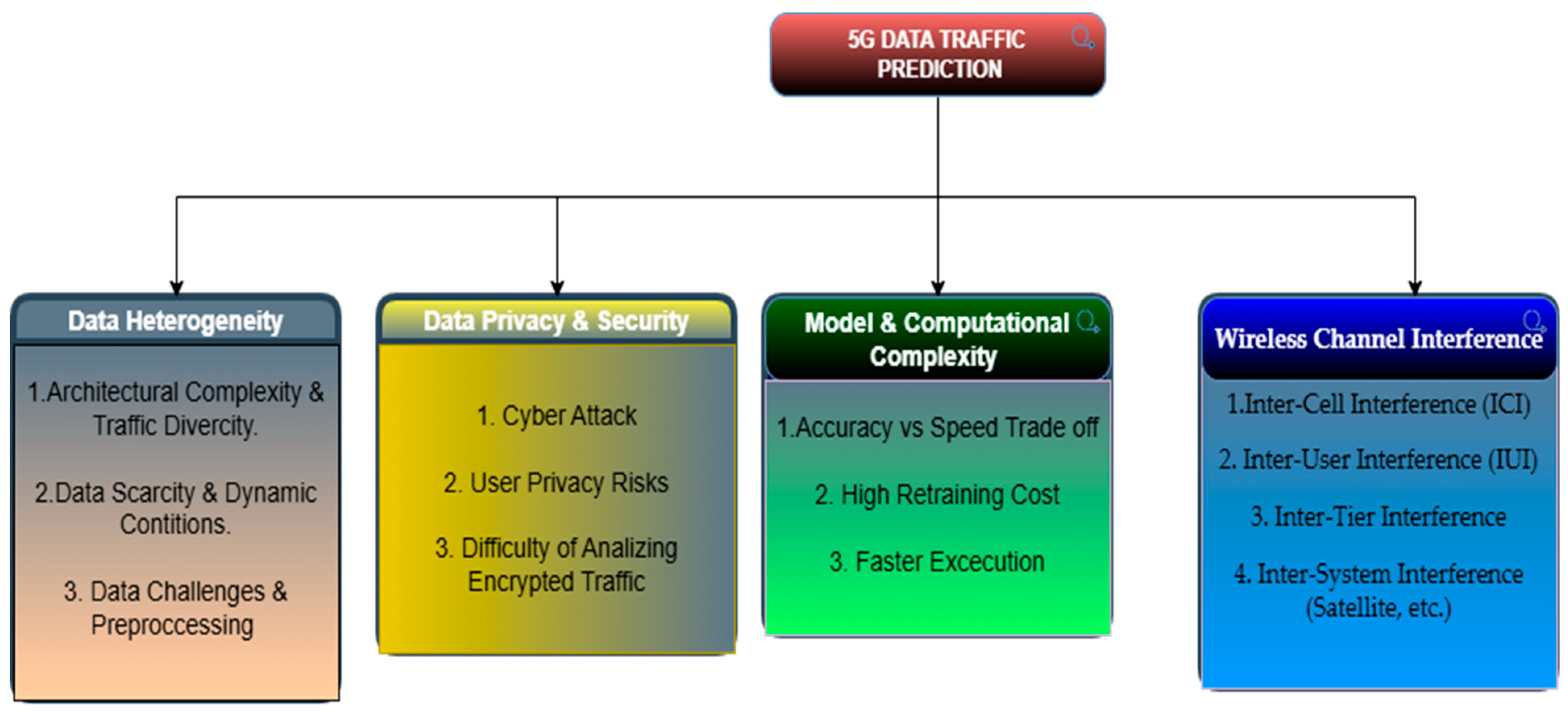

3. Key Challenges for Data Traffic Prediction

3.1. Data Heterogeneity

3.1.1. Architectural Complexity and Traffic Diversity

3.1.2. Data Scarcity and Dynamic Conditions

3.1.3. Data Challenges and Preprocessing

3.2. Data Privacy and Security

3.3. Model and Computational Complexity

3.4. Impact of Wireless Interference on Prediction Accuracy

3.4.1. Interference Classification

3.4.2. Interference Management Techniques

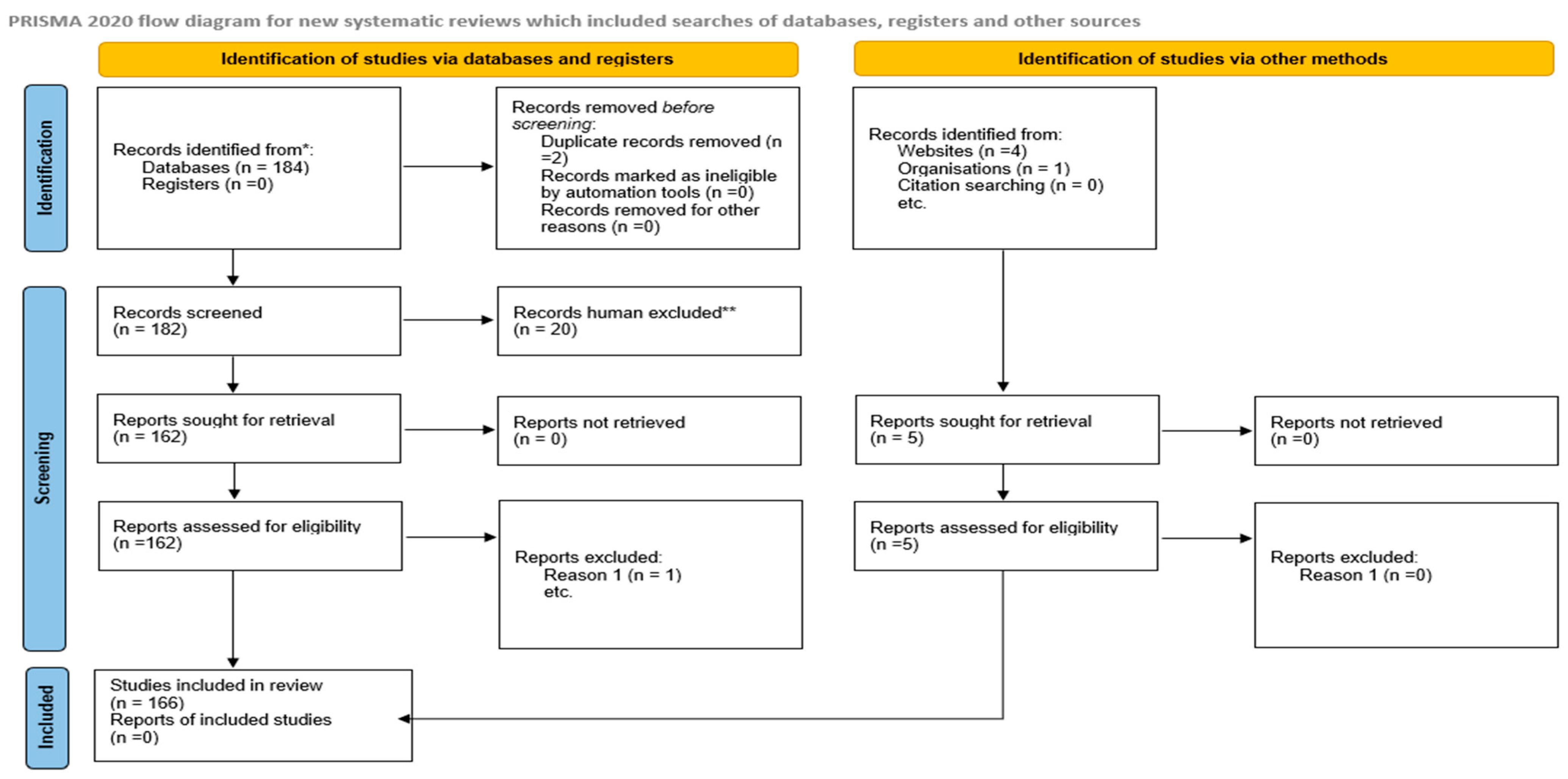

4. Methods

- Type of data traffic (the 5G service category that the traffic prediction model addresses): eMBB, URLLC, mMTC.

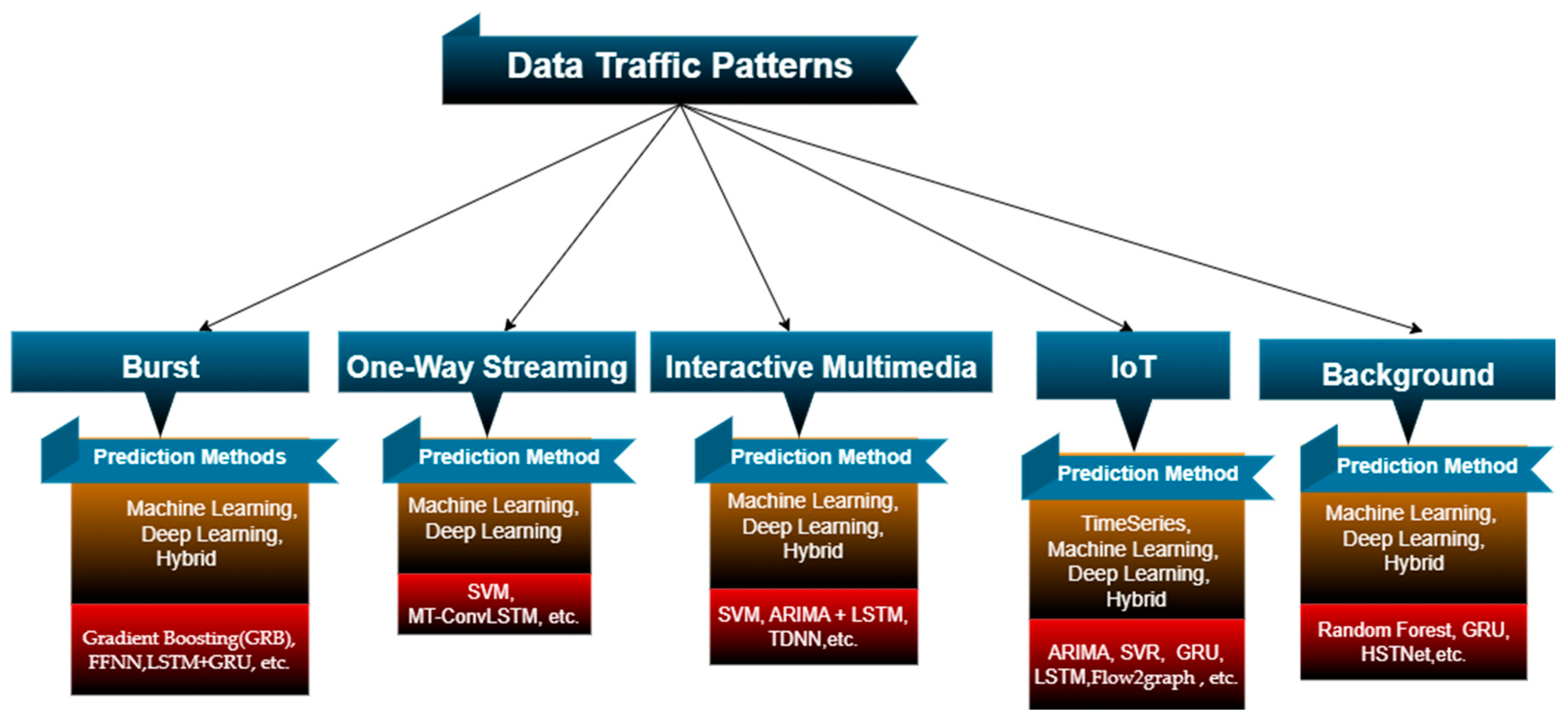

- Data traffic pattern (the intrinsic characteristic or nature of the data flow being analyzed and predicted): burst, one-way streaming, interactive multimedia, IoT, background.

- Dataset characteristics (attributes defining the source and nature of the traffic data used for training and evaluation): network vs. single-user data traffic, time sensitivity, spatial sensitivity, and other characteristics (CDR data, protocols, access technology).

- Predictive models (the fundamental algorithmic approach used for the traffic prediction task): traditional (statistical, time-series) and contemporary (ML, DL, hybrid).

- Evaluation metrics (the measures used to assess the performance and accuracy of the predictive model): MSE, RMSE, MAE, MAPE, R2, etc.
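As an illustration of the evaluation metrics named in the last item, the following minimal sketch computes MSE, RMSE, MAE, MAPE, and R2 with the Python standard library. It uses the common textbook definitions, which are an assumption here; individual studies may normalize or average differently. The function names and the sample `actual` and `predicted` traffic values are hypothetical and are not taken from any reviewed study.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean absolute percentage error (in %); undefined if any actual value is 0."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical traffic volumes (e.g., MB per interval), for illustration only.
actual = [100.0, 200.0, 400.0, 300.0]
predicted = [110.0, 190.0, 380.0, 320.0]

scores = {
    "MSE": mse(actual, predicted),
    "RMSE": rmse(actual, predicted),
    "MAE": mae(actual, predicted),
    "MAPE": mape(actual, predicted),
    "R2": r2(actual, predicted),
}
```

MAPE divides by the actual value, so it is unsuitable for intervals with zero traffic; this is one reason normalized or symmetric variants are often reported alongside it.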

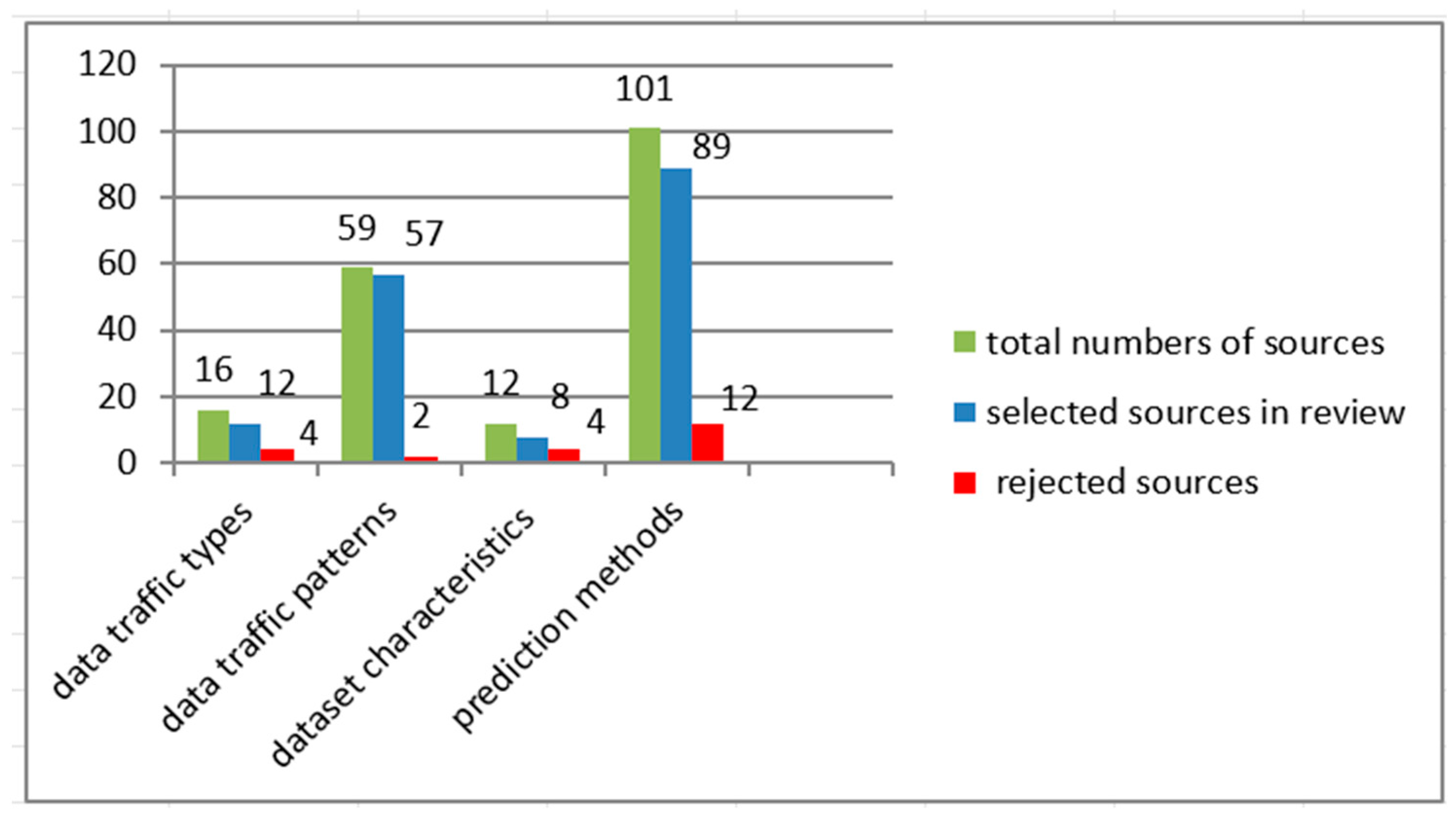

5. Results

- Data traffic types (n = 12),

- Data traffic patterns (n = 57),

- Dataset characteristics (n = 8),

- Prediction methods (n = 89).

5.1. Traffic Categories and Behavioral Patterns in 5G Networks

| Author | Year | Data Traffic Type |
|---|---|---|
| Alsenwi et al. [99] | 2021 | eMBB, URLLC, mMTC |
| Zhang et al. [113] | 2022 | eMBB, URLLC, mMTC |
| Abdelsadek et al. [114] | 2020 | eMBB, URLLC, mMTC |
| Kumar et al. [117] | 2022 | eMBB, URLLC, mMTC |
| Thantharate et al. [118] | 2019 | eMBB, URLLC, mMTC |
| Lykakis et al. [7] | 2023 | eMBB, URLLC, mMTC |
| Siddiqi et al. [107] | 2019 | eMBB, URLLC |
| Hsu et al. [108] | 2022 | eMBB |
| Sohaib et al. [110] | 2023 | eMBB |
| Popovski et al. [109] | 2018 | eMBB, mMTC |
| Ray et al. [111] | 2020 | mMTC |
| Belhadj et al. [112] | 2021 | mMTC |

| Pattern | Data | Characteristics | Method |
|---|---|---|---|
| Burst Data Traffic [119,120,130,131,132,133,134,135,136,137,138,139,140,141,142] | Video Streaming, Online Gaming, Virtual Reality, IoT, Social Media Updates and Email Synchronization | Sudden Surges in Data Transfer Rates, Brief Duration, Irregular Patterns, Variable Packet Sizes, Periodic Data Transmissions, Sporadic Data Flows | Random Forest, Decision Tree, k-Nearest Neighbors, Logistic Regression, Gaussian Processes, LSTM + GRU, Prophet Algorithm + GPR + ADMM, FFNN, Naïve Bayes (NB), Gradient Boosting (GRB) |
| One-Way Streaming Data Traffic [27,121,122,123,124,125,144,145,146] | Video and Audio Live Streaming | Continuous Flow of Data, Robust Bandwidth Requirements, Consistent Data Rates | SVM, ESN, MT-ConvLSTM |
| Interactive Multimedia Data Traffic [100,126,127,128,134,151,152,153,154,155,156,157,159,160,161,162] | Online Games, Virtual and Augmented Reality, Remote Telesurgery, Social Media Chat | Variable Data Rates | SVM, Bayes Net, Naïve Bayes, ARIMA + LSTM, TDNN |
| IoT Data Traffic [129,135,173,174,175,176,177,178,179,180,181,182,183,184,185,186] | Smart Home, Sensors, Vehicles, Devices, etc. | Data Traffic Fluctuations, Small or Large Peak Traffic Volumes | ARIMA, VARMA/SVR, TFVPtime-LSH, GRU, LSTM, FFNN, NARX NN, Flow2graph |
| Background Data Traffic [130,187,188,189,190,192] | System Upgrades, Backups, Social Media Updates and Email Synchronization | Data Traffic Generated Without User Activity, Divided into Light and Heavy Data Traffic, Can Lead to High Signaling Overhead | GASTN, Random Forest, LSTM, GRU, HSTNet |

5.2. Dataset Characteristics in Cellular Network

| Author | Year | Dataset Characteristics |
|---|---|---|
| Shafiq et al. [198] | 2012 | Network vs. Single User Data Traffic |
| Cardona et al. [202] | 2014 | Temporal Sensitivity |
| Zhang et al. [203] | 2012 | Spatial Sensitivity |
| Sun et al. [199] | 2000 | Spatiotemporal Sensitivity |
| Paul et al. [200] | 2011 | Spatiotemporal Sensitivity |
| Wang et al. [201] | 2013 | Spatiotemporal Sensitivity |
| Trinh et al. [196] | 2020 | Other Characteristics |
| Naboulsi [33] | 2015 | Other Characteristics |
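The dataset characteristics in the table above differ mainly in how the same traffic records are grouped. The sketch below, which uses hypothetical CDR-like tuples of `(user_id, cell_id, hour, bytes)`, shows how one set of records yields single-user, temporal (network-level), spatial, and spatiotemporal views. The record layout, identifiers, and values are assumptions made for illustration and do not correspond to any dataset in the reviewed studies.

```python
from collections import defaultdict

# Hypothetical CDR-like records: (user_id, cell_id, hour, bytes).
records = [
    ("u1", "cellA", 0, 500),
    ("u2", "cellA", 0, 1500),
    ("u1", "cellB", 1, 700),
    ("u3", "cellB", 1, 300),
    ("u2", "cellA", 1, 1000),
]


def aggregate(rows, key_fn):
    """Sum the byte counts of all rows that share the same key."""
    totals = defaultdict(int)
    for row in rows:
        totals[key_fn(row)] += row[3]
    return dict(totals)


# Single-user view: total traffic per subscriber.
per_user = aggregate(records, lambda r: r[0])
# Temporal view: network-wide traffic per hour.
per_hour = aggregate(records, lambda r: r[2])
# Spatial view: total traffic per cell.
per_cell = aggregate(records, lambda r: r[1])
# Spatiotemporal view: traffic per (cell, hour) pair.
per_cell_hour = aggregate(records, lambda r: (r[1], r[2]))
```

A prediction model trained on `per_hour` sees only temporal structure, while one trained on `per_cell_hour` can also exploit correlations between cells, at the cost of a larger and sparser input.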

5.3. Current Approaches for Forecasting Cellular Network Data Traffic

5.3.1. Traditional Methods

| Author | Year | Model | Method | Advantages | Disadvantages |
|---|---|---|---|---|---|
| Chen et al. [39] | 2021 | Hidden Markov, Markov Chain, Naive Bayes | Statistical | 1. Low Computational Cost. 2. Excellent for Stationary Data. | 1. Not Useful for Spatiotemporal and Nonstationary Data. 2. Lacks Data Protection Techniques. |

| Author | Year | Model | Method | Advantages | Disadvantages |
|---|---|---|---|---|---|
| Chen et al. [39] | 2021 | GARCH | Non-linear | 1. Low Computational Cost. 2. Useful for Spatiotemporal Data. 3. Good for IoT Data Traffic Pattern, Especially from IoT Sensors. | 1. Low Prediction Accuracy for Heterogeneous Data. 2. Lacks Data Protection Techniques. |
| Chen et al. [39] | 2021 | ARIMA | Linear | | |
| Levine et al. [217] | 1997 | SHADOW CLUSTER | Grouping Method | | |
| Sadek et al. [207] | 2004 | AR | Linear | | |
| Sadek et al. [207] | 2004 | MA | Linear | | |
| Sadek et al. [207] | 2004 | GARMA | Linear | | |
| Tan et al. [206] | 2010 | ARMA | Linear | | |
| Tikunov et al. [205] | 2007 | HOLT-WINTERS | Linear | | |
| Sciancalepore et al. [208] | 2017 | HOLT-WINTERS | Linear | | |
| Hajirahimi et al. [36] | 2019 | ARFIMA | Linear | | |
| Whittaker et al. [211] | 1997 | Kalman Filtering | Linear | | |
| Medhn et al. [209] | 2017 | SARIMA | Linear | | |
| AsSadha et al. [210] | 2017 | FARIMA | Linear | | |
| Mitchell et al. [214] | 2001 | MULTI-CELL + CLASS MODEL | Hybrid | | |
| Mehdi et al. [215] | 2022 | Fuzzy ARIMA | Hybrid | | |
| Tran et al. [216] | 2019 | Holt–Winter’s Mul. Seas. (HWMS) | Hybrid | | |
| Zhou et al. [213] | 2006 | GARCH + ARIMA | Hybrid | | |
| Choi et al. [212] | 2002 | PROBAB. | Probabilistic | | |
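To make the linear time-series family in the table above concrete, the following minimal sketch fits a first-order autoregressive model, x_t = c + phi * x_(t-1), by ordinary least squares and produces a multi-step forecast. It is a generic textbook AR(1) illustration, not the procedure of any cited study; the helper names `fit_ar1` and `forecast_ar1` and the synthetic series are assumptions introduced here.

```python
def fit_ar1(series):
    """Estimate (c, phi) in x_t = c + phi * x_(t-1) by ordinary least squares."""
    lagged = series[:-1]   # x_(t-1)
    current = series[1:]   # x_t
    n = len(lagged)
    mean_lag = sum(lagged) / n
    mean_cur = sum(current) / n
    s_xx = sum((a - mean_lag) ** 2 for a in lagged)
    s_xy = sum((a - mean_lag) * (b - mean_cur) for a, b in zip(lagged, current))
    phi = s_xy / s_xx
    c = mean_cur - phi * mean_lag
    return c, phi


def forecast_ar1(last_value, c, phi, steps):
    """Iterate the fitted recursion forward to obtain `steps` forecasts."""
    forecasts = []
    value = last_value
    for _ in range(steps):
        value = c + phi * value
        forecasts.append(value)
    return forecasts


# Synthetic series built to follow x_t = 2 + 0.5 * x_(t-1) exactly (illustration only).
history = [10.0]
for _ in range(5):
    history.append(2.0 + 0.5 * history[-1])

c_hat, phi_hat = fit_ar1(history)
next_two = forecast_ar1(history[-1], c_hat, phi_hat, steps=2)
```

Because the synthetic series is noise-free, the fit recovers c ≈ 2 and phi ≈ 0.5. Real cellular traffic is noisy and seasonal, which is why the seasonal and hybrid variants listed in the table (SARIMA, Holt-Winters, GARCH + ARIMA) are used in practice.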

5.3.2. Contemporary Methods

| Author | Year | Model | Method | Advantages | Disadvantages |
|---|---|---|---|---|---|
| Khan et al. [147] | 2022 | SVM | Supervised ML | 1. Better Accuracy Than Time Series Methods. 2. Good for All Data Traffic Patterns; for Interactive Multimedia Data Traffic, Excellent Only for Online Chat (WeChat, etc.). 3. Lower Computational Complexity Than Deep Learning Methods. | 1. Not Very Accurate for Interactive Multimedia Data Traffic Patterns Such as Augmented Reality. 2. Less Accurate Than DL and Hybrid Methods. 3. Lacks Data Protection Techniques. |
| Aceto et al. [218] | 2021 | Markov Chains | Supervised ML | | |
| Dash et al. [219] | 2019 | HMM | Supervised ML | | |
| Yue et al. [51] | 2017 | Random Forest | Supervised ML | | |
| Bouzidi et al. [220] | 2018 | ILF | Supervised ML | | |

| Author | Year | Model | Method | Advantages | Disadvantages |
|---|---|---|---|---|---|
| Guo et al. [115] | 2019 | GRU | DL | 1. Better Accuracy Than Statistical and ML Methods. 2. Very Good for All Data Traffic Patterns. 3. Improves QoS and Data Flow Size. | 1. Higher Computational Cost Than Statistical and ML Methods. 2. Lacks Data Protection Techniques. 3. Lower Accuracy Than Hybrid Contemporary Methods. |
| Bega et al. [221] | 2019 | 3D-CNN | DL | | |
| Zhang et al. [49] | 2018 | CNN | DL | | |
| Liang et al. [222] | 2019 | CNN | DL | | |
| Cui et al. [50] | 2014 | ESN | DL | | |
| Nikravesh et al. [223] | 2016 | MLP, MLPWD | DL | | |
| Zhao et al. [265] | 2022 | BP | DL | | |
| Yimeng et al. [226] | 2022 | Transformers | DL | | |
| Pfülb et al. [227] | 2019 | DNN | DL | | |
| Chen et al. [164] | 2018 | LSTM | DL | | |
| Zhou et al. [165] | 2018 | LSTM | DL | | |
| Zhao et al. [166] | 2019 | LSTM | DL | | |
| Trinh et al. [167] | 2018 | LSTM | DL | | |
| Chen et al. [168] | 2019 | LSTM | DL | | |
| Azzouni et al. [169] | 2017 | LSTM | DL | | |
| Dalgkitsis et al. [170] | 2018 | LSTM | DL | | |
| Alawe et al. [171] | 2018 | LSTM | DL | | |
| Xiao et al. [116] | 2018 | LSTM | DL | | |
| Jaffry et al. [224] | 2020 | FFNN | DL | | |
| Gao [56] | 2022 | SLSTM | DL | | |
| Guerra-Gomez et al. [172] | 2020 | TDNN | DL | | |
| Selvamanju et al. [225] | 2022 | DLMTFP | DL | | |

| Author | Year | Model | Method | Advantages | Disadvantages |
|---|---|---|---|---|---|
| Paul et al. [150] | 2019 | k-means + Weiszfeld + LSTM-GRU | Hybrid | 1. Better Accuracy than other Methods, 2. Network Performance Optimization, 3. Quality of Service (QoS) Optimization, 4. Energy Consumption Reduction, 5. Excellent performance Especially in Burst, Interactive Multimedia, IoT (IoT Bursts) and Background Data Traffic. | 1. Lack of Balance Between Accuracy, Data Privacy, and Computational Cost |
| Andreoletti et al. [233] | 2019 | DCRNN | Hybrid | | |
| Pelekanou et al. [234] | 2018 | ILP + LSTM + MLP | Hybrid | | |
| Gong et al. [240] | 2024 | KGDA | Hybrid | | |
| Zang et al. [57] | 2015 | k-means + Wavelet transform + Elman-NN | Hybrid | | |
| Zheng et al. [148] | 2016 | RBMs + NN | Hybrid | | |
| Chen et al. [58] | 2018 | LSTM + CNN | Hybrid | | |
| Fang et al. [237] | 2022 | Wavelet Denoising + Deep Gaussian Process | Hybrid | | |
| Le et al. [52] | 2018 | Naïve Bayes + AR + NN + GP | Hybrid | | |
| Zhang et al. [59] | 2020 | HSTNet | Hybrid | | |
| Dommaraju et al. [250] | 2020 | ECMCRR-MPDNL | Hybrid | | |
| Wang et al. [143] | 2020 | LSTM + GPR | Hybrid | | |
| Gao et al. [251] | 2021 | DRL | Hybrid | | |
| Uyan et al. [257] | 2022 | k-means + n-beans | Hybrid | | |
| Wang et al. [60] | 2019 | DU-AAU | Hybrid | | |
| Xu et al. [94] | 2019 | ADMM + Cross-Validation + GP | Hybrid | | |
| Shawel et al. [229] | 2020 | Double Seasonal ARIMA | Hybrid | 1. Better Accuracy than other Methods, 2. Network Performance Optimization, 3. Quality of Service (QoS) Optimization, 4. Energy Consumption Reduction, 5. Excellent performance Especially in Burst, Interactive Multimedia, IoT (IoT Bursts) and Background Data Traffic. | 1. Lack of Balance Between Accuracy, Data Privacy, and Computational Efficiency |
| Yadav et al. [163] | 2021 | ARIMA + LSTM | Hybrid | | |
| Aldhyani et al. [236] | 2020 | FCM + LSTM + ANFIS | Hybrid | | |
| Li et al. [235] | 2020 | LSTM + CNN | Hybrid | | |
| Alsaade et al. [228] | 2021 | SES-LSTM | Hybrid | | |
| Selvamanju et al. [239] | 2022 | AOADBN-MTP | Hybrid | | |
| Li et al. [238] | 2022 | EEMD + GAN | Hybrid | | |
| Garrido et al. [55] | 2021 | CATP | Hybrid | | |
| Zeb et al. [232] | 2021 | Encoder–Decoder LSTM | Hybrid | | |
| Su et al. [31] | 2024 | Lightweight Hybrid Attention Deep Learning | Hybrid | | |
| Pandey et al. [248] | 2024 | 5GT-GAN-NET | Hybrid | | |
| Huang et al. [249] | 2019 | DQN | Hybrid | | |
| Mehri et al. [241] | 2024 | FLSP | Hybrid | | |
| Nashaat et al. [20] | 2024 | AML-CTP Framework | Hybrid | | |
| Hua et al. [230] | 2018 | CLSTM | Hybrid | | |
| Zhu et al. [254] | 2021 | LR + DNN | Hybrid | | |
| Bouzidi et al. [253] | 2019 | ILP + DRL + LSTM | Hybrid | 1. Better Accuracy than other Methods, 2. Network Performance Optimization, 3. Quality of Service (QoS) Optimization, 4. Energy Consumption Reduction, 5. Excellent performance Especially in Burst, Interactive Multimedia, IoT (IoT Bursts) and Background Data Traffic. | 1. Lack of Balance Between Accuracy, Data Privacy, and Computational Efficiency |
| Zhao et al. [53] | 2020 | STGCN-HO | Hybrid | | |
| Zeng et al. [252] | 2020 | Fusion-transfer + STC-N | Hybrid | | |
| Liu et al. [54] | 2021 | Prophet algorithm + GPR + ADMM | Hybrid | | |
| Jiang et al. [246] | 2024 | CNN-Graph Neural Network (GNN) | Hybrid | | |
| Zorello et al. [255] | 2022 | LR + LSTM + FFNN + MILP | Hybrid | | |
| Nan et al. [256] | 2022 | FedRU | Hybrid | | |
| Zhou et al. [245] | 2024 | Patch-based Neural Network | Hybrid | | |
| Wang et al. [149] | 2017 | GSAE + LSTM | Hybrid | | |
| Zhang et al. [231] | 2017 | SARIMA + top-K + Regression Tree Random Forest | Hybrid | | |
| Cai et al. [242] | 2024 | DBSTGNN-Att | Hybrid | | |
| Hao et al. [243] | 2024 | NCP | Hybrid | | |
| Cao et al. [244] | 2024 | HAN | Hybrid | | |
| Wu et al. [247] | 2024 | CLPREM | Hybrid | | |
| Chen et al. [92] | 2020 | DBLS | Hybrid | | |

5.4. Evaluation Metrics for the Data Traffic Prediction

- “ARMSE (Average Root Mean Square Error)” [231] Equation (3)

- “RRMSE (Relative RMSE)” [53] Equation (4)

- “NMSE (Normalized Mean Square Error)” [50] Equation (5)

- “NRMSE (Normalized Root Mean Square Error)” [228] Equation (6)

- “RE (Relative Error)” [115] Equation (7)

- “MRE (Mean Relative Error)” [225] Equation (8)

- “NMAE (Normalized Mean Absolute Error)” [57] Equation (9)

- “MA (Mean Accuracy)” [58] Equation (12)

- “SMAPE (Symmetric Mean Absolute Percentage Error)” [150] Equation (13)

- “Percentage Tolerance” [219] Equation (15)

- “True Predicted Rate (TPR)” [250] Equation (16)

- “False Positive Rate (FPR)” [250] Equation (17)

- “r (Pearson Coefficient)” [172] Equation (18)

- “R (Spearman’s Correlation Coefficient)” [254] Equation (19)
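Several of the metrics listed above are normalized or correlation-based variants of the basic error measures. Since the referenced equations are not reproduced in this section, the sketch below relies on common conventions that are assumptions here: NRMSE is normalized by the range of the actual values (some authors normalize by the mean instead), SMAPE uses the mean of the absolute actual and predicted values in the denominator, and r is the sample Pearson coefficient. Function names and sample values are hypothetical.

```python
import math


def nrmse(y_true, y_pred):
    """RMSE divided by the range (max - min) of the actual values (assumed convention)."""
    n = len(y_true)
    root_mse = math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)
    return root_mse / (max(y_true) - min(y_true))


def smape(y_true, y_pred):
    """Symmetric MAPE in %, with (|actual| + |predicted|) / 2 as denominator."""
    terms = [abs(p - t) / ((abs(t) + abs(p)) / 2.0) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(terms) / len(terms)


def pearson_r(y_true, y_pred):
    """Sample Pearson correlation between actual and predicted values."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)


# Hypothetical traffic volumes, for illustration only.
actual = [100.0, 200.0, 400.0, 300.0]
predicted = [110.0, 190.0, 380.0, 320.0]

nrmse_value = nrmse(actual, predicted)
smape_value = smape(actual, predicted)
r_value = pearson_r(actual, predicted)
```

Because normalization conventions differ between studies, NRMSE and SMAPE values are only comparable across papers when the same definition is used.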

6. General Discussion of Future Directions

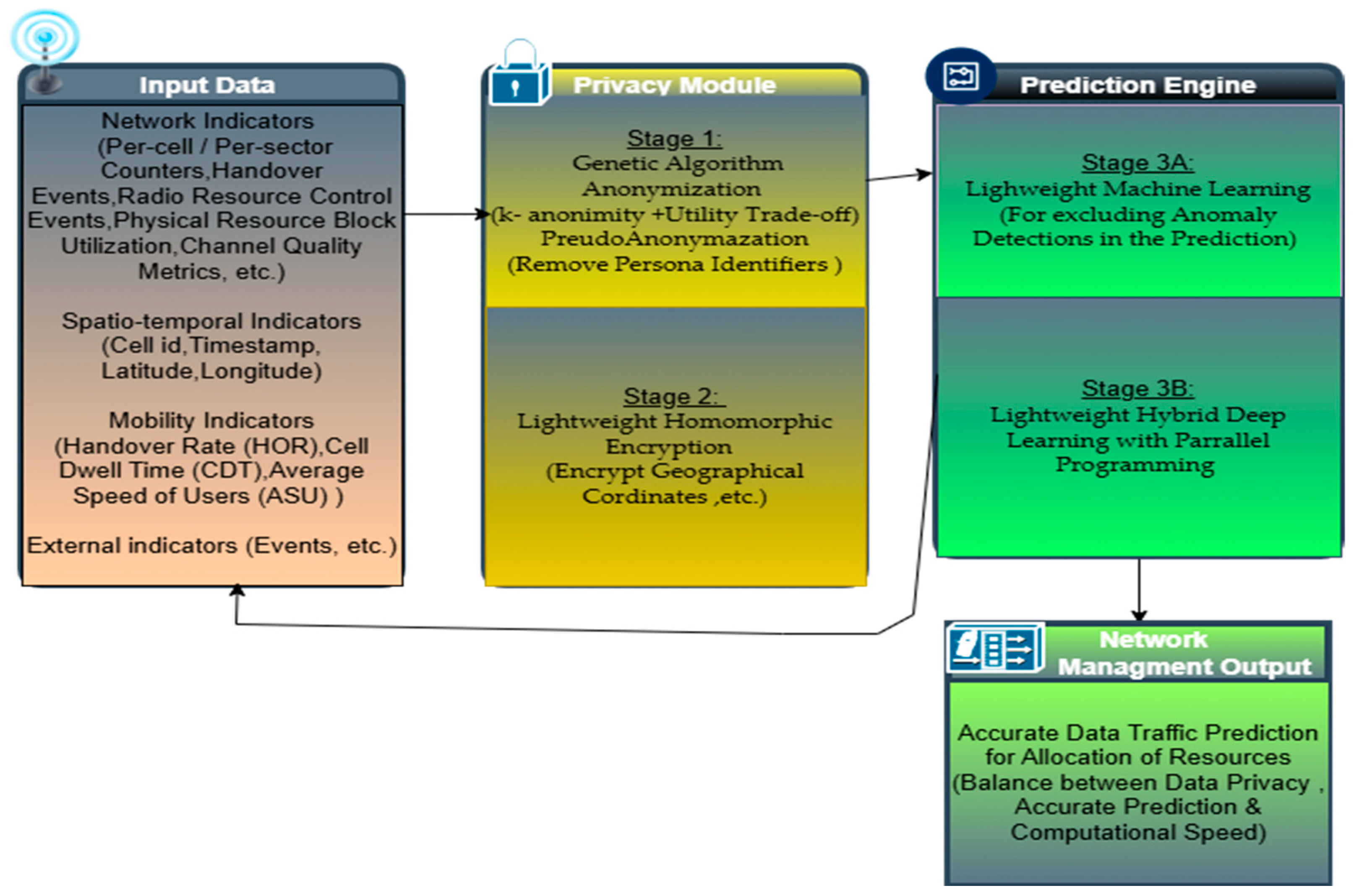

6.1. Framework Overview

6.2. Detailed Methodology

6.3. Validation Plan

7. Discussion and Analysis

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhang, C.; Patras, P.; Haddadi, H. Deep learning in mobile and wireless networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 2224–2287.

- Mohan, R.R.; Vijayalakshmi, K.; Augustine, P.J.; Venkatesh, R.; Nayagam, M.G.; Jegajohi, B. A comprehensive survey of machine learning based mobile data traffic prediction models for 5G cellular networks. In Proceedings of the AIP Conference 2024, Greater Noida, India, 20–21 November 2024; p. 100002.

- Tyokighir, S.S.; Mom, J.; Ukhurebor, K.E.; Igwue, G. New developments and trends in 5G technologies: Applications and concepts. Bull. Electr. Eng. Inform. 2024, 13, 254–263.

- Attaran, M. The impact of 5G on the evolution of intelligent automation and industry digitization. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 5977–5993.

- Pons, M.; Valenzuela, E.; Rodríguez, B.; Nolazco-Flores, J.A.; Del-Valle-Soto, C. Utilization of 5G technologies in IoT applications: Current limitations by interference and network optimization difficulties—A review. Sensors 2023, 23, 3876.

- Klaine, P.V.; Imran, M.A.; Onireti, O.; Souza, R.D. A survey of machine learning techniques applied to self-organizing cellular networks. IEEE Commun. Surv. Tutor. 2017, 19, 2392–2431.

- Lykakis, E.; Kokkinos, E. Data Traffic Prediction in Cellular Networks. In Proceedings of the 4th International Conference in Electronic Engineering, Information Technology & Education (EEITE 2023), Chania, Greece, 15–18 May 2023.

- Pawar, V.; Zade, N.; Vora, D.; Khairnar, V.; Oliveira, A.; Kotecha, K.; Kulkarni, A. Intelligent Transportation System with 5G Vehicle-to-Everything (V2X): Architectures, Vehicular Use Cases, Emergency Vehicles, Current Challenges and Future Directions. IEEE Access 2024, 12, 183937–183960.

- Shafiq, S.; Rahman, M.S.; Shaon, S.A.; Mahmud, I.; Hosen, A.S. A Review on Software-Defined Networking for Internet of Things Inclusive of Distributed Computing, Blockchain, and Mobile Network Technology: Basics, Trends, Challenges, and Future Research Potentials. Int. J. Distrib. Sens. Netw. 2024, 2024, 9006405.

- Alzubaidi, O.T.H.; Hindia, M.N.; Dimyati, K.; Noordin, K.A.; Wahab, A.N.A.; Qamar, F.; Hassan, R. Interference challenges and management in B5G network design: A comprehensive review. Electronics 2022, 11, 2842.

- Navarro-Ortiz, J.; Romero-Diaz, P.; Sendra, S.; Ameigeiras, P.; Ramos-Munoz, J.J.; Lopez-Soler, J.M. A survey on 5G usage scenarios and traffic models. IEEE Commun. Surv. Tutor. 2020, 22, 905–929.

- Bhivgade, A.; Puri, C. 5G Wireless Communication and IoT: Vision, Applications, and Challenges. In Proceedings of the 2024 2nd DMIHER International Conference on Artificial Intelligence in Healthcare, Education and Industry (IDICAIEI), Wardha, India, 29–30 November 2024; pp. 1–6.

- Melikhov, E.O.; Stroganova, E.P. Intelligent Management of Combined Traffic in Promising Mobile Communication Networks. In Proceedings of the 2024 Systems of Signal Synchronization, Generating and Processing in Telecommunications (SYNCHROINFO), Vyborg, Russia, 1–3 July 2024; pp. 1–5.

- Papagiannaki, K.; Taft, N.; Zhang, Z.-L.; Diot, C. Long-Term Forecasting of Internet Backbone Traffic: Observations and Initial Models. In Proceedings of the 22nd Annual Joint Conference of the IEEE Computer and Communications Societies, San Francisco, CA, USA, 1–3 April 2003; pp. 1178–1188.

- Chakraborty, P.; Corici, M.; Magedanz, T. System Failure Prediction within Software 5G Core Networks using Time Series Forecasting. In Proceedings of the 2021 IEEE International Conference on Communications Workshops (ICC Workshops), Montreal, ON, Canada, 14–23 June 2021; pp. 1–7.

- Alzalam, I.; Lipps, C.; Schotten, H.D. Time-Series Forecasting Models for 5G Mobile Networks: A Comparative Study in a Cloud Implementation. In Proceedings of the 2024 15th International Conference on Network of the Future (NoF), Barcelona, Spain, 2–4 October 2024; pp. 54–62.

- Hong, W.-C. Application of seasonal SVR with chaotic immune algorithm in traffic flow forecasting. Neural Comput. Appl. 2012, 21, 583–593.

- Xu, Y.; Xu, W.; Yin, F.; Lin, J.; Cui, S. High-accuracy wireless traffic prediction: A GP-based machine learning approach. In Proceedings of the 2017 IEEE Global Communications Conference (GLOBECOM 2017), Singapore, 4–8 December 2017; pp. 1–6.

- Caiyu, S.; Jinri, W.; Jie, D.; Shanyun, W. Prediction of 5th Generation Mobile Users Traffic Based on Multiple Machine Learning Models. In Proceedings of the 2022 IEEE Conference on Telecommunications, Optics and Computer Science (TOCS), Virtual, 11–12 December 2022; pp. 1174–1179.

- Nashaat, H.; Mohammed, N.H.; Abdel-Mageid, S.M.; Rizk, R.Y. Machine Learning-based Cellular Traffic Prediction Using Data Reduction Techniques. IEEE Access 2024, 12, 58927–58939.

- Vinayakumar, R.; Soman, K.P.; Poornachandran, P. Applying deep learning approaches for network traffic prediction. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 2353–2358.

- Girardin, F.; Vaccari, A.; Gerber, A.; Biderman, A.; Ratti, C. Towards estimating the presence of visitors from the aggregate mobile phone network activity they generate. In Proceedings of the International Conference on Computers in Urban Planning and Urban Management, Hong Kong, China, 16–18 June 2009.

- Aronsson, L.; Bengtsson, A. Machine Learning Applied to Traffic Forecasting. Bachelor’s Thesis, University of Gothenburg, Gothenburg, Sweden, 2019.

- Selvamanju, E.; Shalini, V.B. Machine learning based mobile data traffic prediction in 5g cellular networks. In Proceedings of the 2021 5th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 2–4 December 2021; pp. 1318–1324.

- Guesmi, L.; Mejri, A.; Radhouane, A.; Zribi, K. Advanced Predictive Modeling for Enhancing Traffic Forecasting in Emerging Cellular Networks. In Proceedings of the 2024 15th International Conference on Network of the Future (NoF), Barcelona, Spain, 2–4 October 2024; pp. 209–213.

- Kuber, T.; Seskar, I.; Mandayam, N. Traffic prediction by augmenting cellular data with non-cellular attributes. In Proceedings of the 2021 IEEE Wireless Communications and Networking Conference (WCNC), Nanjing, China, 29 March–1 April 2021; pp. 1–6.

- Bejarano-Luque, J.L.; Toril, M.; Fernandez-Navarro, M.; Gijon, C.; Luna-Ramirez, S. A deep-learning model for estimating the impact of social events on traffic demand on a cell basis. IEEE Access 2021, 9, 71673–71686.

- Zhu, T.; Boada, M.J.L.; Boada, B.L. Adaptive Graph Attention and Long Short-Term Memory-Based Networks for Traffic Prediction. Mathematics 2024, 12, 255.

- Deeban, N.; Bharathi, P.S. A Robust and Efficient Traffic Analysis for 5G Network Based on Hybrid LSTM comparing with XGBoost to Improve Accuracy. In Proceedings of the 2023 International Conference on Artificial Intelligence and Knowledge Discovery in Concurrent Engineering (ICECONF), Chennai, India, 5–7 January 2023; pp. 1–8.

- Dangi, R.; Lalwani, P. A novel hybrid deep learning approach for 5G network traffic control and forecasting. Concurr. Comput. Pract. Exp. 2023, 35, e7596.

- Su, J.; Cai, H.; Sheng, Z.; Liu, A.X.; Baz, A. Traffic prediction for 5G: A deep learning approach based on lightweight hybrid attention networks. Digit. Signal Process. 2024, 146, 104359.

- Naboulsi, D.; Stanica, R.; Fiore, M. Classifying call profiles in large-scale mobile traffic datasets. In Proceedings of the IEEE Conference on Computer Communications (INFOCOM 2014), Toronto, ON, Canada, 27 April–2 May 2014; pp. 1806–1814.

- Naboulsi, D.; Fiore, M.; Ribot, S.; Stanica, R. Large-scale mobile traffic analysis: A survey. IEEE Commun. Surv. Tutor. 2015, 18, 124–161.

- Joshi, M.; Hadi, T.H. A review of network traffic analysis and prediction techniques. arXiv 2015, arXiv:1507.05722.

- Ahad, N.; Qadir, J.; Ahsan, N. Neural networks in wireless networks: Techniques applications and guidelines. J. Netw. Comput. Appl. 2016, 68, 1–27.

- Hajirahimi, Z.; Khashei, M. Hybrid structures in time series modeling and forecasting: A review. Eng. Appl. Artif. Intell. 2019, 86, 83–106.

- Mohammed, A.R.; Mohammed, S.A.; Shirmohammadi, S. Machine learning and deep learning-based traffic classification and prediction in software defined networking. In Proceedings of the 2019 IEEE International Symposium on Measurements & Networking (M&N), Catania, Italy, 8–10 July 2019; pp. 1–6.

- Li, J.; Pan, Z. Network traffic classification based on deep learning. KSII Trans. Internet Inf. Syst. 2020, 14, 4246–4267.

- Chen, A.; Law, J.; Aibin, M. A Survey on Traffic Prediction Techniques Using Artificial Intelligence for Communication Networks. Telecom 2021, 2, 518–535.

- Abbasi, M.; Shahraki, A.; Taherkordi, A. Deep learning for network traffic monitoring and analysis (NTMA): A survey. Comput. Commun. 2021, 170, 19–41.

- Lohrasbinasab, I.; Shahraki, A.; Taherkordi, A.; Delia Jurcut, A. From statistical-to machine learning-based network traffic prediction. Trans. Emerg. Telecommun. Technol. 2022, 33, e4394.

- Wang, X.; Wang, Z.; Yang, K.; Song, Z.; Feng, J.; Zhu, L.; Deng, C. Deep Learning Based Traffic Prediction in Mobile Network-A Survey. Authorea Preprints 2023.

- Ferreira, G.O.; Ravazzi, C.; Dabbene, F.; Calafiore, G.C.; Fiore, M. Forecasting network traffic: A survey and tutorial with open-source comparative evaluation. IEEE Access 2023, 11, 6018–6044.

- Wang, X.; Wang, Z.; Yang, K.; Song, Z.; Bian, C.; Feng, J.; Deng, C. A Survey on Deep Learning for Cellular Traffic Prediction. Intell. Comput. 2024, 3, 0054.

- Sanchez-Navarro, I.; Mamolar, A.S.; Wang, Q.; Calero, J.M.A. 5gtoponet: Real-time topology discovery and management on 5g multi-tenant networks. Future Gener. Comput. Syst. 2021, 114, 435–447.

- ElSawy, H.; Sultan-Salem, A.; Alouini, M.S.; Win, M.Z. Modeling and analysis of cellular networks using stochastic geometry: A tutorial. IEEE Commun. Surv. Tutor. 2016, 19, 167–203.

- Mkocha, K.; Kissaka, M.M.; Hamad, O.F. Trends and Opportunities for Traffic Engineering Paradigms Across Mobile Cellular Network Generations. In Proceedings of the International Conference on Social Implications of Computers in Developing Countries, Dar es Salaam, Tanzania, 1–3 May 2019; pp. 736–750.

- Motlagh, N.H.; Kapoor, S.; Alhalaseh, R.; Tarkoma, S.; Hätönen, K. Quality of Monitoring for Cellular Networks. IEEE Trans. Netw. Serv. Manag. 2021, 19, 381–391.

- Zhang, C.; Zhang, H.; Yuan, D.; Zhang, M. Citywide cellular traffic prediction based on densely connected convolutional neural networks. IEEE Commun. Lett. 2018, 22, 1656–1659.

- Cui, H.; Yao, Y.; Zhang, K.; Sun, F.; Liu, Y. Network traffic prediction based on Hadoop. In Proceedings of the 2014 International Symposium on Wireless Personal Multimedia Communications (WPMC 2014), Sydney, Australia, 7–10 September 2014; pp. 29–33.

- Yue, C.; Jin, R.; Suh, K.; Qin, Y.; Wang, B.; Wei, W. LinkForecast: Cellular link bandwidth prediction in LTE networks. IEEE Trans. Mob. Comput. 2017, 17, 1582–1594.

- Le, L.V.; Sinh, D.; Tung, L.P.; Lin, B.S.P. A practical model for traffic forecasting based on big data, machine-learning, and network KPIs. In Proceedings of the 2018 15th IEEE Annual Consumer Communications & Networking Conference (CCNC 2018), Las Vegas, NV, USA, 12–15 January 2018; pp. 1–4.

- Zhao, S.; Jiang, X.; Jacobson, G.; Jana, R.; Hsu, W.L.; Rustamov, R.; Talasila, M.; Aftab, S.A.; Chen, Y.; Borcea, C. Cellular Network Traffic Prediction Incorporating Handover: A Graph Convolutional Approach. In Proceedings of the 2020 17th Annual IEEE International Conference on Sensing, Communication and Networking (SECON 2020), Como, Italy, 22–25 June 2020; pp. 1–9.

- Liu, C.; Wu, T.; Li, Z.; Wang, B. Individual traffic prediction in cellular networks based on tensor completion. Int. J. Commun. Syst. 2021, 34, 4952.

- Garrido, L.A.; Mekikis, P.V.; Dalgkitsis, A.; Verikoukis, C. Context-aware traffic prediction: Loss function formulation for predicting traffic in 5G networks. In Proceedings of the IEEE International Conference on Communications (ICC 2021), Montreal, ON, Canada, 14–18 June 2021; pp. 1–6.

- Gao, Z. 5G Traffic Prediction Based on Deep Learning. Comput. Intell. Neurosci. 2022, 2022, 3174530.

- Zang, Y.; Ni, F.; Feng, Z.; Cui, S.; Ding, Z. Wavelet transform processing for cellular traffic prediction in machine learning networks. In Proceedings of the 2015 IEEE China Summit and International Conference on Signal and Information Processing (ChinaSIP), Chengdu, China, 12–15 July 2015; pp. 458–462.

- Huang, C.W.; Chiang, C.T.; Li, Q. A study of deep learning networks on mobile traffic forecasting. In Proceedings of the 2017 IEEE 28th Annual International Symposium on Personal Indoor and Mobile Radio Communications (PIMRC 2017), Montreal, ON, Canada, 8–13 October 2017; pp. 1–6.

- Zhang, D.; Liu, L.; Xie, C.; Yang, B.; Liu, Q. Citywide cellular traffic prediction based on a hybrid spatiotemporal network. Algorithms 2020, 13, 20.

- Wang, S.; Li, F.; Ni, H.; Xu, L.; Jing, M.; Yu, J.; Wang, X. Rush Hour Capacity Enhancement in 5G Network Based on Hot Spot Floating Prediction. In Proceedings of the 2019 IEEE International Conferences on Ubiquitous Computing & Communications (IUCC) and Data Science and Computational Intelligence (DSCI) and Smart Computing, Networking and Services (SmartCNS), Shenyang, China, 21–23 October 2019; pp. 657–662.

- Stadler, T.; Oprisanu, B.; Troncoso, C. Synthetic data–anonymisation Groundhog Day. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 1451–1468. [Google Scholar]

- Raghunathan, T.E. Synthetic data. Annu. Rev. Stat. Appl. 2021, 8, 129–140. [Google Scholar] [CrossRef]

- Ayala-Rivera, V.; Portillo-Dominguez, A.O.; Murphy, L.; Thorpe, C. COCOA: A synthetic data generator for testing anonymization techniques. In Proceedings of the International Conference on Privacy in Statistical Databases (PSD 2016), Dubrovnik, Croatia, 14–16 September 2016; pp. 163–177. [Google Scholar]

- Atat, R.; Liu, L.; Chen, H.; Wu, J.; Li, H.; Yi, Y. Enabling cyber-physical communication in 5G cellular networks: Challenges, spatial spectrum sensing, and cyber-security. IET Cyber Phys. Syst. Theory Appl. 2017, 2, 49–54. [Google Scholar] [CrossRef]

- Wang, T. High precision open-world website fingerprinting. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 18–21 May 2020. [Google Scholar]

- Ni, T.; Lan, G.; Wang, J.; Zhao, Q.; Xu, W. Eavesdropping mobile app activity via {Radio-Frequency} energy harvesting. In Proceedings of the 32nd USENIX Security Symposium, Anaheim, CA, USA, 9–11 August 2023; pp. 3511–3528. [Google Scholar]

- Mane, D.T.; Sangve, S.; Upadhye, G.; Kandhare, S.; Mohole, S.; Sonar, S.; Tupare, S. Detection of anomaly using machine learning: A comprehensive survey. Int. J. Emerg. Technol. Adv. Eng. 2022, 12, 134–152. [Google Scholar] [CrossRef]

- Gramaglia, M.; Fiore, M. On the anonymizability of mobile traffic datasets. arXiv 2014, arXiv:1501.0010. [Google Scholar]

- Stenneth, L.; Phillip, S.Y.; Wolfson, O. Mobile systems location privacy: “MobiPriv” a robust k anonymous system. In Proceedings of the 2010 IEEE 6th International Conference on Wireless and Mobile Computing, Networking and Communications, Niagara Falls, ON, Canada, 11–13 October 2010; pp. 54–63. [Google Scholar]

- Sweeney, L. Achieving k-anonymity privacy protection using generalization and suppression. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2002, 10, 571–588. [Google Scholar] [CrossRef]

- Madan, S.; Goswami, P. A privacy preserving scheme for big data publishing in the cloud using k-anonymization and hybridized optimization algorithm. In Proceedings of the 2018 International Conference on Circuits and Systems in Digital Enterprise Technology (ICCSDET), Kottayam, India, 21–22 December 2018; pp. 1–7. [Google Scholar]

- Li, N.; Li, T.; Venkatasubramanian, S. t-closeness: Privacy beyond k-anonymity and l-diversity. In Proceedings of the 2007 IEEE 23rd International Conference on Data Engineering, Istanbul, Turkey, 15–20 April 2007; pp. 106–115. [Google Scholar]

- Machanavajjhala, A.; Kifer, D.; Gehrke, J.; Venkitasubramaniam, M. l-diversity: Privacy beyond k-anonymity. ACM Trans. Knowl. Discov. Data 2007, 1, 3-es. [Google Scholar] [CrossRef]

- Abdrashitov, A.; Spivak, A. Sensor data anonymization based on genetic algorithm clustering with L-Diversity. In Proceedings of the 2016 18th Conference of Open Innovations Association and Seminar on Information Security and Protection of Information Technology (FRUCT-ISPIT), St. Petersburg, Russia, 18–22 April 2016; pp. 3–8. [Google Scholar]

- Poulis, G.; Skiadopoulos, S.; Loukides, G.; Gkoulalas-Divanis, A. Apriori-based algorithms for k^ m-anonymizing trajectory data. Trans. Data Priv. 2014, 7, 165–194. [Google Scholar]

- Medková, J.; Hynek, J. HAkAu: Hybrid algorithm for effective k-automorphism anonymization of social networks. Soc. Netw. Anal. Min. 2023, 13, 63. [Google Scholar] [CrossRef]

- Bolognini, L.; Bistolfi, C. Pseudonymization and impacts of Big (personal/anonymous) Data processing in the transition from the Directive 95/46/EC to the new EU General Data Protection Regulation. Comput. Law Secur. Rev. 2017, 33, 171–181. [Google Scholar] [CrossRef]

- Pawar, A.; Ahirrao, S.; Churi, P.P. Anonymization techniques for protecting privacy: A survey. In Proceedings of the 2018 IEEE Punecon, Pune, India, 30 November–2 December 2018; pp. 1–6. [Google Scholar]

- Rajesh, N.; Abraham, S.; Das, S.S. Personalized trajectory anonymization through sensitive location points hiding. Int. J. Inf. Technol. 2019, 11, 461–465. [Google Scholar] [CrossRef]

- Mano, M.; Ishikawa, Y. Anonymizing user location and profile information privacy-aware mobile services. In Proceedings of the 2nd ACM SIGSPATIAL International Workshop on Location Based Social Networks, San Jose, CA, USA, 2 November 2010; pp. 68–75. [Google Scholar]

- Cheng, M.; Zhao, B.; Su, J. A Real-Time Processing System for Anonymization of Mobile Core Network Traffic. In Proceedings of the Security, Privacy and Anonymity in Computation, Communication and Storage: SpaCCS 2016 International Workshops, TrustData, TSP, NOPE, DependSys, BigDataSPT, and WCSSC, Zhangjiajie, China, 16–18 November 2016; pp. 229–237. [Google Scholar]

- Chaddad, L.; Chehab, A.; Elhajj, I.H.; Kayssi, A. Mobile traffic anonymization through probabilistic distribution. In Proceedings of the 22nd Conference on Innovation in Clouds, Internet and Networks and Workshops (ICIN 2019), Paris, France, 18–21 February 2019; pp. 242–248. [Google Scholar]

- Martinez, E.B.; Ficek, M.; Kencl, L. Mobility data anonymization by obfuscating the cellular network topology graph. In Proceedings of the 2013 IEEE International Conference on Communications (ICC), Budapest, Hungary, 9–13 June 2013; pp. 2032–2036. [Google Scholar]

- Barak, O.; Cohen, G.; Toch, E. Anonymizing mobility data using semantic cloaking. Pervasive Mob. Comput. 2016, 28, 102–112. [Google Scholar] [CrossRef]

- Acar, A.; Aksu, H.; Uluagac, A.S.; Conti, M. A survey on homomorphic encryption schemes: Theory and implementation. ACM Comput. Surv. 2018, 51, 1–35. [Google Scholar] [CrossRef]

- Pulido-Gaytan, L.B.; Tchernykh, A.; Cortés-Mendoza, J.M.; Babenko, M.; Radchenko, G. A survey on privacy-preserving machine learning with fully homomorphic encryption. In Proceedings of the Latin American High Performance Computing Conference (LA-HCC 2020), Cuenca, Ecuador, 2–4 September 2020; pp. 115–129. [Google Scholar]

- Biksham, V.; Vasumathi, D. A lightweight fully homomorphic encryption scheme for cloud security. Int. J. Inf. Comput. Secur. 2020, 13, 357–371. [Google Scholar] [CrossRef]

- Ullah, S.; Li, J.; Chen, J.; Ali, I.; Khan, S.; Hussain, M.T.; Ullah, F.; Leung, V.C. Homomorphic encryption applications for IoT and light-weighted environments: A review. IEEE Internet Things J. 2024, 12, 1222–1246. [Google Scholar] [CrossRef]

- Praveen, R.; Pabitha, P. Improved Gentry–Halevi’s fully homomorphic encryption-based lightweight privacy preserving scheme for securing medical Internet of Things. Trans. Emerg. Telecommun. Technol. 2023, 34, e4732. [Google Scholar] [CrossRef]

- Du, D.; Zhao, W.; Wei, L.; Lu, S.; Wu, X. A lightweight homomorphic encryption federated learning based on blockchain in IoV. In Proceedings of the 2022 IEEE Smartworld, Ubiquitous Intelligence & Computing, Scalable Computing & Communications, Digital Twin, Privacy Computing, Metaverse, Autonomous & Trusted Vehicles (SmartWorld/UIC/ScalCom/DigitalTwin/PriComp/Meta), Haikou, China, 15–18 December 2022; pp. 1001–1007. [Google Scholar]

- Chandrakar, I.; Hulipalled, V.R. Privacy Preserving Big Data mining using Pseudonymization and Homomorphic Encryption. In Proceedings of the 2021 2nd Global Conference for Advancement in Technology (GCAT), Bangalore, India, 1–3 October 2021; pp. 1–4. [Google Scholar]

- Chen, M.; Wei, X.; Gao, Y.; Huang, L.; Chen, M.; Kang, B. Deep-broad Learning System for Traffic Flow Prediction toward 5G Cellular Wireless Network. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC 2020), Limassol, Cyprus, 15–19 June 2020; pp. 940–945. [Google Scholar]

- Ayoubi, S.; Limam, N.; Salahuddin, M.A.; Shahriar, N.; Boutaba, R.; Estrada-Solano, F.; Caicedo, O.M. Machine learning for cognitive network management. IEEE Commun. Mag. 2018, 56, 158–165. [Google Scholar] [CrossRef]

- Xu, Y.; Yin, F.; Xu, W.; Lin, J.; Cui, S. Wireless traffic prediction with scalable Gaussian process: Framework, algorithms, and verification. IEEE J. Sel. Areas Commun. 2019, 37, 1291–1306. [Google Scholar] [CrossRef]

- Diethe, T.; Borchert, T.; Thereska, E.; Balle, B.; Lawrence, N. Continual learning in practice. arXiv 2019, arXiv:1903.05202. [Google Scholar] [CrossRef]

- Kelner, J.M.; Ziółkowski, C. Interference in multi-beam antenna system of 5G network. Int. J. Electron. Telecommun. 2020, 66, 17–23. [Google Scholar] [CrossRef]

- Liu, S.; Wei, Y.; Hwang, S.H. Guard band protection for coexistence of 5G base stations and satellite earth stations. ICT Express 2023, 9, 1103–1109. [Google Scholar] [CrossRef]

- Zaoutis, E.A.; Liodakis, G.S.; Baklezos, A.T.; Nikolopoulos, C.D.; Ioannidou, M.P.; Vardiambasis, I.O. 6G wireless communications and artificial intelligence-controlled reconfigurable intelligent surfaces: From supervised to federated learning. Appl. Sci. 2025, 15, 3252. [Google Scholar] [CrossRef]

- Alsenwi, M.; Tran, N.H.; Bennis, M.; Pandey, S.R.; Bairagi, A.K.; Hong, C.S. Intelligent resource slicing for eMBB and URLLC coexistence in 5G and beyond: A deep reinforcement learning based approach. IEEE Trans. Wirel. Commun. 2021, 20, 4585–4600. [Google Scholar] [CrossRef]

- Ali, M.; Chakraborty, S. Enabling video conferencing in low bandwidth. In Proceedings of the 2022 IEEE 19th Annual Consumer Communications & Networking Conference (CCNC), Virtual, 8–11 January 2022; pp. 487–488. [Google Scholar]

- Soós, G.; Ficzere, D.; Varga, P. User group behavioral pattern in a cellular mobile network for 5G use-cases. In Proceedings of the NOMS 2020–2020 IEEE/IFIP Network Operations and Management Symposium, Budapest, Hungary, 20–24 April 2020; pp. 1–7. [Google Scholar]

- Yang, J.; Qiao, Y.; Zhang, X.; He, H.; Liu, F.; Cheng, G. Characterizing user behavior in mobile internet. IEEE Trans. Emerg. Top. Comput. 2014, 3, 95–106. [Google Scholar] [CrossRef]

- Jiang, S.; Wei, B.; Wang, T.; Zhao, Z.; Zhang, X. Big data enabled user behavior characteristics in mobile internet. In Proceedings of the 2017 9th International Conference on Wireless Communications and Signal Processing (WCSP), Nanjing, China, 11–13 October 2017; pp. 1–5. [Google Scholar]

- Blackburn, J.; Stanojevic, R.; Erramilli, V.; Iamnitchi, A.; Papagiannaki, K. Last call for the buffet: Economics of cellular networks. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking (MobiCom’13), Miami, FL, USA, 30 September–4 October 2013; pp. 111–122. [Google Scholar]

- Halepovic, E.; Williamson, C. Characterizing and modeling user mobility in a cellular data network. In Proceedings of the 2nd ACM International Workshop on Performance Evaluation of Wireless Ad Hoc, Sensor, and Ubiquitous Networks (PE-WASUN’05), Montreal, ON, Canada, 10–13 October 2005; pp. 71–78. [Google Scholar]

- Walelgne, E.A.; Asrese, A.S.; Manner, J.; Bajpai, V.; Ott, J. Understanding data usage patterns of geographically diverse mobile users. IEEE Trans. Netw. Serv. Manag. 2020, 18, 3798–3812. [Google Scholar] [CrossRef]

- Siddiqi, M.A.; Yu, H.; Joung, J. 5G Ultra-Reliable Low-Latency Communication Implementation Challenges and Operational Issues with IoT Devices. Electronics 2019, 8, 981. [Google Scholar] [CrossRef]

- Hsu, Y.H.; Liao, W. eMBB and URLLC Service Multiplexing Based on Deep Reinforcement Learning in 5G and Beyond. In Proceedings of the 2022 IEEE Wireless Communications and Networking Conference (WCNC 2022), Austin, TX, USA, 10–13 April 2022; pp. 1467–1472. [Google Scholar]

- Popovski, P.; Trillingsgaard, K.F.; Simeone, O.; Durisi, G. 5G wireless network slicing for eMBB, URLLC, and mMTC: A communication-theoretic view. IEEE Access 2018, 6, 55765–55779. [Google Scholar] [CrossRef]

- Sohaib, R.M.; Onireti, O.; Sambo, Y.; Swash, R.; Ansari, S.; Imran, M.A. Intelligent Resource Management for eMBB and URLLC in 5G and beyond Wireless Networks. IEEE Access 2023, 11, 65205–65221. [Google Scholar] [CrossRef]

- Ray, S.; Bhattacharyya, B. Machine learning based cell association for mMTC 5G communication networks. Int. J. Mob. Netw. Des. Innov. 2020, 10, 10–16. [Google Scholar] [CrossRef]

- Belhadj, S.; Lakhdar, A.M.; Bendjillali, R.I. Performance comparison of channel coding schemes for 5G massive machine type communications. Indones. J. Electr. Eng. Comput. Sci. 2021, 22, 902. [Google Scholar] [CrossRef]

- Zhang, X.; Liang, K.; Chen, J.J.; Liu, J. Bandwidth Allocation for eMBB and mMTC Slices Based on AI-Aided Traffic Prediction. In Proceedings of the International Conference on Internet of Things as a Service (IoTaaS 2022), Cham, Switzerland, 17–18 November 2022; pp. 117–126. [Google Scholar]

- Abdelsadek, M.Y.; Gadallah, Y.; Ahmed, M.H. Resource allocation of URLLC and eMBB mixed traffic in 5G networks: A deep learning approach. In Proceedings of the GLOBECOM 2020–2020 IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020; pp. 1–6. [Google Scholar]

- Guo, Q.; Gu, R.; Wang, Z.; Zhao, T.; Ji, Y.; Kong, J.; Jue, J.P. Proactive dynamic network slicing with deep learning based short-term traffic prediction for 5G transport network. In Proceedings of the Optical Fiber Communication Conference, San Diego, CA, USA, 3–7 March 2019; p. W3J-3. [Google Scholar]

- Xiao, S.; Chen, W. Dynamic allocation of 5G transport network slice bandwidth based on LSTM traffic prediction. In Proceedings of the 2018 IEEE 9th International Conference on Software Engineering and Service Science (ICSESS 2018), Beijing, China, 23–25 November 2018; pp. 735–739. [Google Scholar]

- Kumar, N.; Ahmad, A. Machine learning-based QoS and traffic-aware prediction-assisted dynamic network slicing. Int. J. Commun. Netw. Distrib. Syst. 2022, 28, 27–42. [Google Scholar] [CrossRef]

- Thantharate, A.; Paropkari, R.; Walunj, V.; Beard, C. DeepSlice: A deep learning approach towards an efficient and reliable network slicing in 5G networks. In Proceedings of the 2019 IEEE 10th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 10–12 October 2019; pp. 762–767. [Google Scholar]

- Kresch, E.; Kulkarni, S. A Poisson based bursty model of internet traffic. In Proceedings of the IEEE 11th International Conference on Computer and Information Technology, Paphos, Cyprus, 31 August–2 September 2011; pp. 255–260. [Google Scholar]

- Xu, Y.; Wang, Z.; Leong, W.K.; Leong, B. An end-to-end measurement study of modern cellular data networks. In Proceedings of the 15th International Conference on Passive and Active Measurement (PAM 2014), Los Angeles, CA, USA, 10–11 March 2014; pp. 34–45. [Google Scholar]

- Uitto, M.; Heikkinen, A. Evaluation of live video streaming performance for low latency use cases in 5G. In Proceedings of the 2021 Joint European Conference on Networks and Communications & 6G Summit (EuCNC/6G Summit), Porto, Portugal, 8–11 June 2021; pp. 431–436. [Google Scholar]

- Qi, Y.; Hunukumbure, M.; Nekovee, M.; Lorca, J.; Sgardoni, V. Quantifying data rate and bandwidth requirements for immersive 5G experience. In Proceedings of the 2016 IEEE International Conference on Communications Workshops (ICC), Kuala Lumpur, Malaysia, 23–27 May 2016; pp. 455–461. [Google Scholar]

- Sharma, L.; Javali, A.; Routray, S.K. An overview of high-speed streaming in 5G. In Proceedings of the 2020 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–28 February 2020; pp. 557–562. [Google Scholar]

- Uitto, M.; Heikkinen, A. Evaluating 5G uplink performance in low latency video streaming. In Proceedings of the 2022 Joint European Conference on Networks and Communications & 6G Summit (EuCNC/6G Summit), Grenoble, France, 7–10 June 2022; pp. 393–398. [Google Scholar]

- Rath, A.; Goyal, S.; Panwar, S. Streamloading: Low-cost high-quality video streaming for mobile users. In Proceedings of the 5th Workshop on Mobile Video (MoVid’13), Oslo, Norway, 27 February 2013; pp. 1–6. [Google Scholar]

- Keshav, K.; Pradhan, A.K.; Srinivas, T.; Venkataram, P. Bandwidth allocation for interactive multimedia in 5G networks. In Proceedings of the 2021 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; pp. 840–845. [Google Scholar]

- Keshvadi, S.; Karamollahi, M.; Williamson, C. Traffic characterization of instant messaging apps: A campus-level view. In Proceedings of the 2020 IEEE 45th Conference on Local Computer Networks (LCN), Sydney, Australia, 16–19 November 2020; pp. 225–232. [Google Scholar]

- Liu, Y.; Guo, L. An empirical study of video messaging services on smartphones. In Proceedings of the Network and Operating System Support on Digital Audio and Video Workshop (NOSSDAV’14), Singapore, 19–21 March 2014; pp. 79–84. [Google Scholar]

- Al-Barrak, A. Internet of Things (IoT). In Proceedings of the 2019 2nd International Conference on Engineering Technology and its Applications (IICETA), Najaf, Iraq, 27–28 August 2019; pp. 1–2. [Google Scholar]

- Baghel, S.K.; Keshav, K.; Manepalli, V.R. An investigation into traffic analysis for diverse data applications on smartphones. In Proceedings of the 2012 National Conference on Communications (NCC), Kharagpur, India, 3–5 February 2012; pp. 1–5. [Google Scholar]

- Lam, S. A New Measure for Characterizing Data Traffic. IEEE Trans. Commun. 1978, 26, 137–140. [Google Scholar] [CrossRef]

- Ephremides, A. On the “Bursty Factor” as a Measure for Characterizing Data Traffic. IEEE Trans. Commun. 1978, 26, 1791–1792. [Google Scholar] [CrossRef]

- Li, H.; Yang, A.X.; Zhao, Y.Q.; Wang, Q. Application of Flash in the Analysis of Making Webpages and Mechanical Motiones. Appl. Mech. Mater. 2014, 496, 2078–2081. [Google Scholar] [CrossRef]

- Lecci, M.; Zanella, A.; Zorzi, M. An ns-3 implementation of a bursty traffic framework for virtual reality sources. In Proceedings of the 2021 Workshop on ns-3 (WNS3 ’21), Kolding, Denmark, 23–24 June 2021; pp. 73–80. [Google Scholar]

- Weerasinghe, T.N.; Balapuwaduge, I.A.; Li, F.Y. Preamble transmission prediction for mMTC bursty traffic: A machine learning based approach. In Proceedings of the 2020 IEEE Global Communications Conference (GLOBECOM 2020), Taipei, Taiwan, 7–11 December 2020; pp. 1–6. [Google Scholar]

- Qian, F.; Wang, Z.; Gao, Y.; Huang, J.; Gerber, A.; Mao, Z.; Sen, S.; Spatscheck, O. Periodic transfers in mobile applications: Network-wide origin, impact, and optimization. In Proceedings of the 21st International Conference on World Wide Web (WWW 2012), Lyon, France, 16–20 April 2012; pp. 51–60. [Google Scholar]

- Falciasecca, G.; Frullone, M.; Riva, G.; Serra, A.M. On the impact of traffic burst on performances of high-capacity cellular systems. In Proceedings of the 40th IEEE Conference on Vehicular Technology (VTC ’90), Orlando, FL, USA, 7–9 May 1990; pp. 646–651. [Google Scholar]

- Chousainov, I.A.; Moscholios, I.; Sarigiannidis, P.; Kaloxylos, A.; Logothetis, M. An analytical framework of a C-RAN supporting bursty traffic. In Proceedings of the 2020 IEEE International Conference on Communications (ICC 2020), Dublin, Ireland, 7–11 June 2020; pp. 1–6. [Google Scholar]

- Jiang, Z.; Chang, L.F.; Shankaranarayanan, N.K. Providing multiple service classes for bursty data traffic in cellular networks. In Proceedings of the IEEE International Conference on Computer Communications (INFOCOM 2000)—19th Annual Joint Conference of the IEEE Computer and Communications Societies, Tel Aviv, Israel, 26–30 March 2000; pp. 1087–1096. [Google Scholar]

- Shah, S.W.H.; Riaz, A.T.; Iqbal, K. Congestion control through dynamic access class barring for bursty MTC traffic in future cellular networks. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 176–181. [Google Scholar]

- Anamuro, C.V.; Lagrange, X. Mobile traffic classification through burst traffic statistical features. In Proceedings of the 97th IEEE Vehicular Technology Conference (VTC 2023), Florence, Italy, 20–23 June 2023; pp. 1–5. [Google Scholar]

- Weerasinghe, T.N.; Balapuwaduge, I.A.; Li, F.Y. Supervised learning-based arrival prediction and dynamic preamble allocation for bursty traffic. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019; pp. 1–6. [Google Scholar]

- Wang, W.; Zhou, C.; He, H.; Wu, W.; Zhuang, W.; Shen, X. Cellular traffic load prediction with LSTM and Gaussian process regression. In Proceedings of the 2020 IEEE International Conference on Communications (ICC 2020), Virtual, 7–11 June 2020; pp. 1–6. [Google Scholar]

- Erman, J.; Gerber, A.; Ramakrishnan, K.K.; Sen, S.; Spatscheck, O. Over the top video: The gorilla in cellular networks. In Proceedings of the 2011 ACM SIGCOMM Conference on Internet Measurement (IMC’11), Berlin, Germany, 2–4 November 2011; pp. 127–136. [Google Scholar]

- Blaszczyszyn, B.; Karray, M.K. Impact of mean user speed on blocking and cuts of streaming traffic in cellular networks. In Proceedings of the 2008 14th European Wireless Conference (EW 2008), Prague, Czech Republic, 22–25 June 2008; pp. 1–7. [Google Scholar]

- Yao, L.; Bao, J.; Ding, F.; Zhang, N.; Tong, E. Research on traffic flow forecast based on cellular signaling data. In Proceedings of the 2021 IEEE International Conference on Smart Internet of Things (SmartIoT), Jeju Island, Republic of Korea, 13–15 August 2021; pp. 193–199. [Google Scholar]

- Khan, S.; Hussain, A.; Nazir, S.; Khan, F.; Oad, A.; Alshehri, M.D. Efficient and reliable hybrid deep learning-enabled model for congestion control in 5G/6G networks. Comput. Commun. 2022, 182, 31–40. [Google Scholar] [CrossRef]

- Zheng, K.; Yang, Z.; Zhang, K.; Chatzimisios, P.; Yang, K.; Xiang, W. Big data-driven optimization for mobile networks toward 5G. IEEE Netw. 2016, 30, 44–51. [Google Scholar] [CrossRef]

- Wang, J.; Tang, J.; Xu, Z.; Wang, Y.; Xue, G.; Zhang, X.; Yang, D. Spatiotemporal modeling and prediction in cellular networks: A big data enabled deep learning approach. In Proceedings of the IEEE Conference on Computer Communications (INFOCOM 2017), Atlanta, GA, USA, 1–4 May 2017; pp. 1–9. [Google Scholar]

- Paul, U.; Liu, J.; Troia, S.; Falowo, O.; Maier, G. Traffic-profile and machine learning based regional data center design and operation for 5G network. J. Commun. Netw. 2019, 21, 569–583. [Google Scholar] [CrossRef]

- Suznjevic, M.; Matijasevic, M. Trends in evolution of the network traffic of massively multiplayer online role-playing games. In Proceedings of the 2015 13th International Conference on Telecommunications (ConTEL), Graz, Austria, 13–15 July 2015; pp. 1–8. [Google Scholar]

- Qi, C.; Zhao, Z.; Li, R.; Zhang, H. Characterizing and modeling social mobile data traffic in cellular networks. In Proceedings of the 2016 IEEE 83rd Vehicular Technology Conference (VTC Spring), Nanjing, China, 15–18 May 2016; pp. 1–5. [Google Scholar]

- Park, J.; Popovski, P.; Simeone, O. Minimizing latency to support VR social interactions over wireless cellular systems via bandwidth allocation. IEEE Wirel. Commun. Lett. 2018, 7, 776–779. [Google Scholar] [CrossRef]

- Prasad, A.; Uusitalo, M.A.; Navrátil, D.; Säily, M. Challenges for enabling virtual reality broadcast using 5G small cell network. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), Barcelona, Spain, 15–18 April 2018; pp. 220–225. [Google Scholar]

- Taleb, T.; Nadir, Z.; Flinck, H.; Song, J. Extremely interactive and low-latency services in 5G and beyond mobile systems. IEEE Commun. Stand. Mag. 2021, 5, 114–119. [Google Scholar] [CrossRef]

- Chen, D.Y.; Lin, P.C.; Chen, K.T. Does online mobile gaming overcharge you for the fun? In Proceedings of the 2013 12th Annual Workshop on Network and Systems Support for Games (NetGames), Denver, CO, USA, 9–10 December 2013; pp. 1–2. [Google Scholar]

- Drajic, D.; Krco, S.; Tomic, I.; Popovic, M.; Zeljkovic, N.; Nikaein, N.; Svoboda, P. Impact of online games and M2M applications traffic on performance of HSPA radio access networks. In Proceedings of the 2012 Sixth International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing (IMIS), Palermo, Italy, 4–6 July 2012; pp. 880–885. [Google Scholar]

- Barba, P.; Stramiello, J.; Funk, E.K.; Richter, F.; Yip, M.C.; Orosco, R.K. Remote telesurgery in humans: A systematic review. Surg. Endosc. 2022, 36, 2771–2777. [Google Scholar] [CrossRef]

- Fiadino, P.; Casas, P.; Schiavone, M.; D’Alconzo, A. Online Social Networks anatomy: On the analysis of Facebook and WhatsApp in cellular networks. In Proceedings of the 2015 IFIP Networking Conference (IFIP Networking), Toulouse, France, 20–22 May 2015; pp. 1–9. [Google Scholar]

- Huang, Q.; Lee, P.P.; He, C.; Qian, J.; He, C. Fine-grained dissection of WeChat in cellular networks. In Proceedings of the 2015 IEEE 23rd International Symposium on Quality of Service (IWQoS), Portland, OR, USA, 15–16 June 2015; pp. 309–318. [Google Scholar]

- Sun, F.; Wang, P.; Zhao, J.; Xu, N.; Zeng, J.; Tao, J.; Song, K.; Deng, C.; Lui, J.C.; Guan, X. Mobile data traffic prediction by exploiting time-evolving user mobility patterns. IEEE Trans. Mob. Comput. 2021, 21, 4456–4470. [Google Scholar] [CrossRef]

- Shafiq, M.; Yu, X.; Laghari, A.A. WeChat text messages service flow traffic classification using machine learning technique. In Proceedings of the 2016 6th International Conference on IT Convergence and Security (ICITCS), Prague, Czech Republic, 26–29 September 2016; pp. 1–5. [Google Scholar]

- Yadav, A.; Singh, H.; Mala, S.; Shankar, A. Recognizing Massive Mobile Traffic Patterns to Understand Urban Dynamics. In Proceedings of the 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence 2021), Noida, India, 28–29 January 2021; pp. 894–898. [Google Scholar]

- Chen, L.; Yang, D.; Zhang, D.; Wang, C.; Li, J. Deep mobile traffic forecast and complementary base station clustering for C-RAN optimization. J. Netw. Comput. Appl. 2018, 121, 59–69. [Google Scholar] [CrossRef]

- Zhou, Y.; Fadlullah, Z.M.; Mao, B.; Kato, N. A deep-learning-based radio resource assignment technique for 5G ultra dense networks. IEEE Netw. 2018, 32, 28–34. [Google Scholar] [CrossRef]

- Zhao, X.; Yang, K.; Chen, Q.; Peng, D.; Jiang, H.; Xu, X.; Shuang, X. Deep learning based mobile data offloading in mobile edge computing systems. Future Gener. Comput. Syst. 2019, 99, 346–355. [Google Scholar] [CrossRef]

- Trinh, H.D.; Giupponi, L.; Dini, P. Mobile traffic prediction from raw data using LSTM networks. In Proceedings of the 29th IEEE Annual International Symposium on Personal Indoor and Mobile Radio Communications (PIMRC 2018), Bologna, Italy, 9–12 September 2018; pp. 1827–1832. [Google Scholar]

- Chen, M.; Miao, Y.; Gharavi, H.; Hu, L.; Humar, I. Intelligent traffic adaptive resource allocation for edge computing-based 5G networks. IEEE Trans. Cogn. Commun. Netw. 2019, 6, 499–508. [Google Scholar] [CrossRef]

- Azzouni, A.; Pujolle, G. A long short-term memory recurrent neural network framework for network traffic matrix prediction. arXiv 2017, arXiv:1705.05690. [Google Scholar] [CrossRef]

- Dalgkitsis, A.; Louta, M.; Karetsos, G.T. Traffic forecasting in cellular networks using the LSTM RNN. In Proceedings of the 22nd Pan-Hellenic Conference on Informatics, Athens, Greece, 29 November–1 December 2018; pp. 28–33. [Google Scholar]

- Alawe, I.; Ksentini, A.; Hadjadj-Aoul, Y.; Bertin, P. Improving traffic forecasting for 5G core network scalability: A machine learning approach. IEEE Netw. 2018, 32, 42–49. [Google Scholar] [CrossRef]

- Guerra-Gomez, R.; Ruiz-Boque, S.; Garcia-Lozano, M.; Bonafe, J.O. Machine learning adaptive computational capacity prediction for dynamic resource management in C-RAN. IEEE Access 2020, 8, 89130–89142. [Google Scholar] [CrossRef]

- Mostafa, A.M. IoT Architecture and Protocols in 5G Environment. In Powering the Internet of Things With 5G Networks; Mohanan, V., Budiarto, R., Aldmour, I., Eds.; IGI Global: Hershey, PA, USA, 2018; pp. 105–130. [Google Scholar]

- Macriga, G.A.; Sakthy, S.S.; Niranjan, R.; Sahu, S. An Emerging Technology: Integrating IoT with 5G Cellular Network. In Proceedings of the 2021 4th International Conference on Computing and Communications Technologies (ICCCT), Chennai, India, 16–17 December 2021; pp. 208–214. [Google Scholar]

- Ardita, M.; Orisa, M. Wi-Fi-Based Internet of Things (IoT) Data Communication Performance in Dense Wireless Network Traffic Conditions. J. Electr. Eng. Mechatron. Comput. Sci. 2021, 4, 31–36. [Google Scholar] [CrossRef]

- Sharma, S.K.; Wang, X. Distributed caching enabled peak traffic reduction in ultra-dense IoT networks. IEEE Commun. Lett. 2018, 22, 1252–1255. [Google Scholar] [CrossRef]

- Finley, B.; Vesselkov, A. Cellular IoT traffic characterization and evolution. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019; pp. 622–627. [Google Scholar]

- Gong, Y.; Zhang, Z.; Wang, K.; Gu, Y.; Wu, Y. IoT-Oriented Single-Transmitter Multiple-Receiver Wireless Charging Systems Using Hybrid Multi-Frequency Pulse Modulation. IEEE Trans. Magn. 2024, 60, 1–6. [Google Scholar] [CrossRef]

- Ma, H.; Tao, Y.; Fang, Y.; Chen, P.; Li, Y. Multi-Carrier Initial-Condition-Index-aided DCSK Scheme: An Efficient Solution for Multipath Fading Channel. IEEE Trans. Veh. Technol. 2025, 74, 15743–15757. [Google Scholar] [CrossRef]

- Alzahrani, R.J.; Alzahrani, A. Survey of traffic classification solution in IoT networks. Int. J. Comput. Appl. 2021, 183, 37–45. [Google Scholar] [CrossRef]

- Khedkar, S.P.; Canessane, R.A.; Najafi, M.L. Prediction of traffic generated by IoT devices using statistical learning time series algorithms. Wirel. Commun. Mob. Comput. 2021, 2021, 5366222. [Google Scholar] [CrossRef]

- Chen, X.; Liu, Y.; Zhang, J. Traffic prediction for Internet of Things through support vector regression model. Internet Technol. Lett. 2022, 5, e336. [Google Scholar] [CrossRef]

- Abdellah, A.R.; Mahmood, O.A.K.; Paramonov, A.; Koucheryavy, A. IoT traffic prediction using multi-step ahead prediction with neural network. In Proceedings of the 2019 11th International Congress on Ultra-Modern Telecommunications and Control Systems and Workshops (ICUMT), Dublin, Ireland, 28–30 October 2019; pp. 1–4. [Google Scholar]

- Wang, R.; Zhang, Y.; Peng, L.; Fortino, G.; Ho, P.H. Time-varying-aware network traffic prediction via deep learning in IoT. IEEE Trans. Ind. Inform. 2022, 18, 8129–8137. [Google Scholar] [CrossRef]

- Lu, Y.; Liu, L.; Panneerselvam, J.; Yuan, B.; Gu, J.; Antonopoulos, N. A GRU-based prediction framework for intelligent resource management at cloud data centres in the age of 5G. IEEE Trans. Cogn. Commun. Netw. 2019, 6, 486–498. [Google Scholar] [CrossRef]

- Hu, C.; Fan, W.; Zeng, E.; Hang, Z.; Wang, F.; Qi, L.; Bhuiyan, M.Z.A. Digital twin-assisted real-time traffic data prediction method for 5G-enabled internet of vehicles. IEEE Trans. Ind. Inform. 2021, 18, 2811–2819. [Google Scholar] [CrossRef]

- Abdelmotalib, A.; Wu, Z.; Zhou, P. Background traffic analysis for social media applications on smartphones. In Proceedings of the 2012 2nd International Conference on Instrumentation, Measurement, Computer, Communication and Control (IMCCC), Harbin, China, 8–10 December 2012; pp. 817–818. [Google Scholar]

- Gupta, S.; Garg, R.; Jain, N.; Naik, V.; Kaul, S. Android phone-based appraisal of app behavior on cell networks. In Proceedings of the 1st International Conference on Mobile Software Engineering and Systems (MobileSoft 2014), Hyderabad, India, 2–3 June 2014; pp. 54–57. [Google Scholar]

- Venkataramani, A.; Kokku, R.; Dahlin, M. TCP Nice: A mechanism for background transfers. In Proceedings of the 5th Symposium on Operating Systems Design and Implementation, Boston, MA, USA, 9–11 December 2002; pp. 329–343. [Google Scholar]

- Liu, C.; Zeng, L.; Shi, J.; Xu, F.; Xiong, G.; Yiu, S.M. Auto-identification of background traffic based on autonomous periodic interaction. In Proceedings of the 2017 IEEE 36th International Performance Computing and Communications Conference (IPCCC), San Diego, CA, USA, 10–12 December 2017; pp. 1–8. [Google Scholar]

- He, K.; Chen, X.; Wu, Q.; Yu, S.; Zhou, Z. Graph attention spatial-temporal network with collaborative global-local learning for citywide mobile traffic prediction. IEEE Trans. Mob. Comput. 2020, 21, 1244–1256. [Google Scholar] [CrossRef]

- Do, Q.H.; Doan, T.T.H.; Nguyen, T.V.A.; Duong, N.T.; Linh, V.V. Prediction of data traffic in telecom networks based on deep neural networks. J. Comput. Sci. 2020, 16, 1268–1277. [Google Scholar] [CrossRef]

- Mehmeti, F.; La Porta, T.F. Resource allocation for improved user experience with live video streaming in 5G. In Proceedings of the 17th ACM Symposium on QoS and Security for Wireless and Mobile Network (Q2SWinet ’21), Alicante, Spain, 22–26 November 2021; pp. 69–78. [Google Scholar]

- Martin, A.; Egaña, J.; Flórez, J.; Montalban, J.; Olaizola, I.G.; Quartulli, M.; Viola, R.; Zorrilla, M. Network resource allocation system for QoE-aware delivery of media services in 5G networks. IEEE Trans. Broadcast. 2018, 64, 561–574. [Google Scholar] [CrossRef]

- Feng, H.; Ma, M. Traffic Prediction over Wireless Networks. In Wireless Network Traffic and Quality of Service Support: Trends and Standards; Lagkas, T.D., Angelidis, P., Georgiadis, L., Eds.; IGI Global: Hershey, PA, USA, 2010; pp. 87–112. [Google Scholar]

- Trinh, H.D. Data Analytics for Mobile Traffic in 5G Networks Using Machine Learning Techniques. Ph.D. Thesis, Polytechnic University of Catalonia, Barcelona, Spain, 2020. [Google Scholar]

- OpenSignal.com. Available online: https://opencellid.org/ (accessed on 5 December 2023).

- Shafiq, M.Z.; Ji, L.; Liu, A.X.; Pang, J.; Wang, J. Characterizing geospatial dynamics of application usage in a 3G cellular data network. In Proceedings of the 2012 IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012; pp. 1341–1349. [Google Scholar]

- Sun, H.; Halepovic, E.; Williamson, C.; Wu, Y. Characterization of CDMA2000 Cellular Data Network Traffic. Networks 2000, 7, 10. [Google Scholar]

- Paul, U.; Subramanian, A.P.; Buddhikot, M.M.; Das, S.R. Understanding traffic dynamics in cellular data networks. In Proceedings of the IEEE INFOCOM 2011, Shanghai, China, 10–15 April 2011; pp. 882–890. [Google Scholar]

- Wang, Y.; Faloutsos, M.; Zang, H. On the usage patterns of multimodal communication: Countries and evolution. In Proceedings of the IEEE INFOCOM 2013, Turin, Italy, 14–19 April 2013; pp. 3135–3140. [Google Scholar]

- Cardona, J.C.; Stanojevic, R.; Laoutaris, N. Collaborative consumption for mobile broadband: A quantitative study. In Proceedings of the 10th ACM International on Conference on Emerging Networking Experiments and Technologies, Sydney, Australia, 2–5 December 2014; pp. 307–318. [Google Scholar]

- Zhang, Y.; Arvidsson, A. Understanding the characteristics of cellular data traffic. In Proceedings of the 2012 ACM SIGCOMM Workshop on Cellular Networks: Operations, Challenges, and Future Design, Helsinki, Finland, 13 August 2012; pp. 13–18. [Google Scholar]

- Li, R.; Zhao, Z.; Zheng, J.; Mei, C.; Cai, Y.; Zhang, H. The learning and prediction of application-level traffic data in cellular networks. IEEE Trans. Wirel. Commun. 2017, 16, 3899–3912. [Google Scholar] [CrossRef]

- Tikunov, D.; Nishimura, T. Traffic prediction for mobile network using Holt-Winter’s exponential smoothing. In Proceedings of the 2007 15th International Conference on Software, Telecommunications and Computer Networks, Split-Dubrovnik, Croatia, 27–29 September 2007; pp. 1–5. [Google Scholar]

- Tan, I.K.; Hoong, P.K.; Keong, C.Y. Towards forecasting low network traffic for software patch downloads: An ARMA model forecast using CRONOS. In Proceedings of the 2010 2nd International Conference on Computer and Network Technology, Bangkok, Thailand, 23–25 April 2010; pp. 88–92. [Google Scholar]

- Sadek, N.; Khotanzad, A. Multi-scale high-speed network traffic prediction using k-factor Gegenbauer ARMA model. In Proceedings of the 2004 IEEE International Conference on Communications, Paris, France, 20–24 June 2004; pp. 2148–2152. [Google Scholar]

- Sciancalepore, V.; Samdanis, K.; Costa-Perez, X.; Bega, D.; Gramaglia, M.; Banchs, A. Mobile traffic forecasting for maximizing 5G network slicing resource utilization. In Proceedings of the IEEE Conference on Computer Communications (INFOCOM 2017), Atlanta, GA, USA, 1–4 May 2017; pp. 1–9.

- Medhn, S.; Seifu, B.; Salem, A.; Hailemariam, D. Mobile data traffic forecasting in UMTS networks based on SARIMA model: The case of Addis Ababa, Ethiopia. In Proceedings of the 2017 IEEE AFRICON, Cape Town, South Africa, 18–20 September 2017; pp. 285–290.

- AsSadhan, B.; Zeb, K.; Al-Muhtadi, J.; Alshebeili, S. Anomaly detection based on LRD behavior analysis of decomposed control and data planes network traffic using SOSS and FARIMA models. IEEE Access 2017, 5, 13501–13519.

- Whittaker, J.; Garside, S.; Lindveld, K. Tracking and predicting a network traffic process. Int. J. Forecast. 1997, 13, 51–61.

- Choi, S.; Shin, K.G. Adaptive bandwidth reservation and admission control in QoS-sensitive networks. IEEE Trans. Parallel Distrib. Syst. 2002, 13, 882–897.

- Zhou, B.; He, D.; Sun, Z. Traffic Modeling and Prediction Using ARIMA/GARCH Model; Springer: Boston, MA, USA, 2006; pp. 101–121.

- Mitchell, K.; Sohraby, K. An analysis of the effects of mobility on bandwidth allocation strategies in multi-class cellular wireless networks. In Proceedings of the IEEE INFOCOM 2001, Anchorage, AK, USA, 22–26 April 2001; Volume 2, pp. 1005–1011.

- Mehdi, H.; Pooranian, Z.; Vinueza Naranjo, P.G. Cloud traffic prediction based on fuzzy ARIMA model with low dependence on historical data. Trans. Emerg. Telecommun. Technol. 2022, 33, e3731.

- Tran, Q.T.; Hao, L.; Trinh, Q.K. Cellular network traffic prediction using exponential smoothing methods. J. Inf. Commun. Technol. 2019, 18, 1–18.

- Levine, D.A.; Akyildiz, I.F.; Naghshineh, M. A resource estimation and call admission algorithm for wireless multimedia networks using the shadow cluster concept. IEEE/ACM Trans. Netw. 1997, 5, 1–12.

- Aceto, G.; Bovenzi, G.; Ciuonzo, D.; Montieri, A.; Persico, V.; Pescapé, A. Characterization and prediction of mobile-app traffic using Markov modeling. IEEE Trans. Netw. Serv. Manag. 2021, 18, 907–925.

- Dash, S.; Maheshwari, S.; Mahapatra, S. Traffic prediction in future mobile networks using hidden Markov model. In Proceedings of the IEICE Smart Wireless Communications (SmartCom 2019), New Jersey, NJ, USA, 4–6 November 2019.

- Bouzidi, E.H.; Luong, D.H.; Outtagarts, A.; Hebbar, A.; Langar, R. Online-based learning for predictive network latency in software-defined networks. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM 2018), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6.

- Bega, D.; Gramaglia, M.; Fiore, M.; Banchs, A.; Costa-Perez, X. DeepCog: Cognitive network management in sliced 5G networks with deep learning. In Proceedings of the IEEE Conference on Computer Communications (INFOCOM 2019), Paris, France, 29 April–2 May 2019; pp. 280–288.

- Liang, D.; Zhang, J.; Jiang, S.; Zhang, X.; Wu, J.; Sun, Q. Mobile traffic prediction based on densely connected CNN for cellular networks in highway scenarios. In Proceedings of the 11th International Conference on Wireless Communications and Signal Processing (WCSP 2019), Xi’an, China, 23–25 October 2019; pp. 1–5.

- Nikravesh, A.Y.; Ajila, S.A.; Lung, C.H.; Ding, W. Mobile network traffic prediction using MLP, MLPWD, and SVM. In Proceedings of the 2016 IEEE International Congress on Big Data (Big Data Congress), San Francisco, CA, USA, 27 June–2 July 2016; pp. 402–409.

- Jaffry, S.; Hasan, S.F. Cellular traffic prediction using recurrent neural networks. In Proceedings of the IEEE 5th International Symposium on Telecommunication Technologies (ISTT), Shah Alam, Malaysia, 9–11 November 2020; pp. 94–98.

- Selvamanju, E.; Shalini, V.B. Deep Learning based Mobile Traffic Flow Prediction Model in 5G Cellular Networks. In Proceedings of the 3rd International Conference on Smart Electronics and Communication (ICOSEC 2022), Trichy, India, 20–22 October 2022; pp. 1349–1353.

- Yimeng, S.; Jianhua, L.; Jian, M.; Yaxing, Q.; Zhe, Z.; Chunhui, L. A Prediction Method of 5G Base Station Cell Traffic Based on Improved Transformer Model. In Proceedings of the 2022 IEEE 4th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 12–14 October 2022; pp. 40–45.

- Pfülb, B.; Hardegen, C.; Gepperth, A.; Rieger, S. A study of deep learning for network traffic data forecasting. In Proceedings of the 28th International Conference on Artificial Neural Networks (ICANN 2019), Munich, Germany, 17–19 September 2019; pp. 497–512.

- Alsaade, F.W.; Al-Adhaileh, M.H. Cellular traffic prediction based on an intelligent model. Mob. Inf. Syst. 2021, 2021, 1–15.

- Shawel, B.S.; Debella, T.T.; Tesfaye, G.; Tefera, Y.Y.; Woldegebreal, D.H. Hybrid Prediction Model for Mobile Data Traffic: A Cluster-level Approach. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN 2020), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Hua, Y.; Zhao, Z.; Liu, Z.; Chen, X.; Li, R.; Zhang, H. Traffic prediction based on random connectivity in deep learning with long short-term memory. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC 2018), Chicago, IL, USA, 27–30 August 2018; pp. 1–6.

- Zhang, S.; Zhao, S.; Yuan, M.; Zeng, J.; Yao, J.; Lyu, M.R.; King, I. Traffic prediction-based power saving in cellular networks: A machine learning method. In Proceedings of the 25th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Redondo Beach, CA, USA, 7–10 November 2017; pp. 1–10.

- Zeb, S.; Rathore, M.A.; Mahmood, A.; Hassan, S.A.; Kim, J.; Gidlund, M. Edge intelligence in softwarized 6G: Deep learning-enabled network traffic predictions. In Proceedings of the 2021 IEEE Globecom Workshops (GC Wkshps), Madrid, Spain, 7–11 December 2021; pp. 1–6.

- Andreoletti, D.; Troia, S.; Musumeci, F.; Giordano, S.; Maier, G.; Tornatore, M. Network traffic prediction based on diffusion convolutional recurrent neural network. In Proceedings of the IEEE Conference on Computer Communications Workshops (INFOCOM 2019), Paris, France, 29 April 2019; pp. 246–251.

- Pelekanou, A.; Anastasopoulos, M.; Tzanakaki, A.; Simeonidou, D. Provisioning of 5G services employing machine learning techniques. In Proceedings of the 2018 International Conference on Optical Network Design and Modeling (ONDM), Dublin, Ireland, 14–17 May 2018; pp. 200–205.

- Li, M.; Wang, Y.; Wang, Z.; Zheng, H. A deep learning method based on an attention mechanism for wireless network traffic prediction. Ad Hoc Netw. 2020, 107, 102258.

- Aldhyani, T.H.; Alrasheedi, M.; Alqarni, A.A.; Alzahrani, M.Y.; Bamhdi, A.M. Intelligent hybrid model to enhance time series models for predicting network traffic. IEEE Access 2020, 8, 130431–130451.

- Fang, Z.; Zhao, R.; Yang, H. 5G Network Traffic Prediction Based on WP-Deep Gaussian. In Proceedings of the 2022 IEEE 4th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 12–14 October 2022; pp. 938–942.

- Li, J.; Li, X. 5G Network Traffic Prediction based on EEMD-GAN. In Proceedings of the 7th International Conference on Cyber Security and Information Engineering, Brisbane, Australia, 23–25 September 2022; pp. 408–412.

- Selvamanju, E.; Shalini, V.B. Archimedes optimization algorithm with deep belief network based mobile network traffic prediction for 5G cellular networks. In Proceedings of the 2022 4th International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 January 2022; pp. 370–376.

- Gong, J.; Li, T.; Wang, H.; Liu, Y.; Wang, X.; Wang, Z.; Deng, C.; Feng, J.; Jin, D.; Li, Y. KGDA: A knowledge graph driven decomposition approach for cellular traffic prediction. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–22.

- Mehri, H.; Chen, H.; Mehrpouyan, H. Cellular Traffic Prediction Using Online Prediction Algorithms. arXiv 2024, arXiv:2405.05239.

- Cai, Z.; Tan, C.; Zhang, J.; Zhu, L.; Feng, Y. DBSTGNN-Att: Dual branch spatio-temporal graph neural network with an attention mechanism for cellular network traffic prediction. Appl. Sci. 2024, 14, 2173.

- Hao, M.; Sun, X.; Li, Y.; Zhang, H. Edge-side cellular network traffic prediction based on trend graph characterization network. IEEE Trans. Netw. Sci. Eng. 2024, 11, 6118–6129.

- Cao, S.; Wu, L.; Zhang, R.; Lu, J.; Wu, D.; Zhang, Z. Hypergraph attention recurrent network for cellular traffic prediction. IEEE Trans. Netw. Serv. Manag. 2024, 22, 1760–1774.

- Zhou, J.; Luo, A.; Zhou, N. Mobile network traffic prediction based on cross-patch feature fusion. In Proceedings of the 2024 4th International Conference on Neural Networks, Information and Communication Engineering (NNICE), Guangzhou, China, 19–21 January 2024; pp. 1006–1010.

- Jiang, W.; Zhang, Y.; Han, H.; Huang, Z.; Li, Q.; Mu, J. Mobile traffic prediction in consumer applications: A multimodal deep learning approach. IEEE Trans. Consum. Electron. 2024, 70, 3425–3435.

- Wu, X.; Wu, C. CLPREM: A real-time traffic prediction method for 5G mobile network. PLoS ONE 2024, 19, e0288296.

- Pandey, C.; Tiwari, V.; Rodrigues, J.J.; Roy, D.S. 5GT-GAN-NET: Internet traffic data forecasting with supervised loss based synthetic data over 5G. IEEE Trans. Mob. Comput. 2024, 23, 10694–10705.

- Huang, C.W.; Chen, P.C. Mobile traffic offloading with forecasting using deep reinforcement learning. arXiv 2019, arXiv:1911.07452.

- Dommaraju, V.S.; Nathani, K.; Tariq, U.; Al-Turjman, F.; Kallam, S.; Patan, R. ECMCRR-MPDNL for Cellular Network Traffic Prediction with Big Data. IEEE Access 2020, 8, 113419–113428.

- Gao, Z.; Yan, S.; Zhang, J.; Han, B.; Wang, Y.; Xiao, Y.; Ji, Y. Deep reinforcement learning-based policy for baseband function placement and routing of RAN in 5G and beyond. J. Light. Technol. 2021, 40, 470–480.

- Zeng, Q.; Sun, Q.; Chen, G.; Duan, H.; Li, C.; Song, G. Traffic prediction of wireless cellular network based on deep transfer learning and cross-domain data. IEEE Access 2020, 8, 172387–172397.

- Bouzidi, E.H.; Outtagarts, A.; Langar, R. Deep reinforcement learning application for network latency management in software defined networks. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM 2019), Waikoloa, HI, USA, 9–13 December 2019; pp. 1–6.

- Zhu, M.; Gu, J.; Shen, T.; Shi, C.; Ren, X. Energy-efficient and QoS guaranteed BBU aggregation in CRAN based on heuristic-assisted deep reinforcement learning. J. Light. Technol. 2021, 40, 575–587.

- Zorello, L.M.M.; Bliek, L.; Troia, S.; Guns, T.; Verwer, S.; Maier, G. Baseband-Function Placement with Multi-Task Traffic Prediction for 5G Radio Access Networks. IEEE Trans. Netw. Serv. Manag. 2022, 19, 5104–5119.

- Nan, J.; Ai, M.; Liu, A.; Duan, X. Regional-union based federated learning for wireless traffic prediction in 5G-Advanced/6G network. In Proceedings of the 2022 IEEE/CIC International Conference on Communications in China (ICCC Workshops), Foshan, China, 11–13 August 2022; pp. 423–427.

- Uyan, U.; Isyapar, M.T.; Ozturk, M.U. 5G Long-Term and Large-Scale Mobile Traffic Forecasting. arXiv 2022, arXiv:2212.10869.

- Cao, B.; Fan, J.; Yuan, M.; Li, Y. Toward accurate energy-efficient cellular network: Switching off excessive carriers based on traffic profiling. In Proceedings of the 31st Annual ACM Symposium on Applied Computing, Pisa, Italy, 4–8 April 2016; pp. 546–551.

- Li, R.; Zhao, Z.; Zhou, X.; Zhang, H. Energy savings scheme in radio access networks via compressive sensing-based traffic load prediction. Trans. Emerg. Telecommun. Technol. 2014, 25, 468–478.

- Peng, C.; Lee, S.B.; Lu, S.; Luo, H.; Li, H. Traffic-driven power saving in operational 3G cellular networks. In Proceedings of the 17th Annual International Conference on Mobile Computing and Networking, Las Vegas, NV, USA, 19–23 September 2011; pp. 121–132.

- Wang, G.; Guo, C.; Wang, S.; Feng, C. A traffic prediction-based sleeping mechanism with low complexity in femtocell networks. In Proceedings of the 2013 IEEE International Conference on Communications Workshops (ICC), Budapest, Hungary, 9–13 June 2013; pp. 560–565.