1. Introduction

Mental illnesses, particularly anxiety and depression, can lead to profound suffering and significantly diminish social functioning and quality of life [1]. According to a 2019 World Health Organization report [2], one in every eight people worldwide is affected by a mental disorder. Given the elevated risk of suicide among those with mental illnesses, early detection and intervention have become critical public health objectives. A critical challenge in the early detection of mental disorders is that the majority of individuals likely experiencing these conditions do not seek professional assistance, despite the availability of mental health services. Consequently, many miss the critical window for timely and effective intervention [2]. Instead, a significant number choose to share their emotions and personal experiences on social media platforms such as Reddit and Twitter. Unlike traditional offline psychotherapy, this form of online expression features a lower barrier to entry and generates substantial volumes of data, creating opportunities for the application of Natural Language Processing (NLP) techniques to identify mental health conditions. Transformer-based pre-trained models, such as BERT, have demonstrated exceptional capabilities in sentiment analysis [3]. Nonetheless, research focusing on the application of these models for mental health analysis remains relatively nascent, particularly in the accurate identification and prediction of users’ mental health statuses. Data annotation in this domain is inherently complex, labor-intensive, and costly. Moreover, machine learning models in this field often grapple with challenges such as insufficient data and class imbalance, which significantly constrain their performance and overall effectiveness.

To address these issues, transfer learning technology has emerged as a powerful approach, allowing models trained on large-scale datasets to adapt to new tasks and greatly reducing the need for extensive annotated data. By leveraging transfer learning, pre-trained models like BERT can be fine-tuned for mental health analysis, achieving high performance and generalization capabilities even with smaller datasets [4]. This study thus employs transfer learning strategies, fine-tuning pre-trained models on a small, annotated dataset, effectively enhancing the model’s performance in mental health classification.

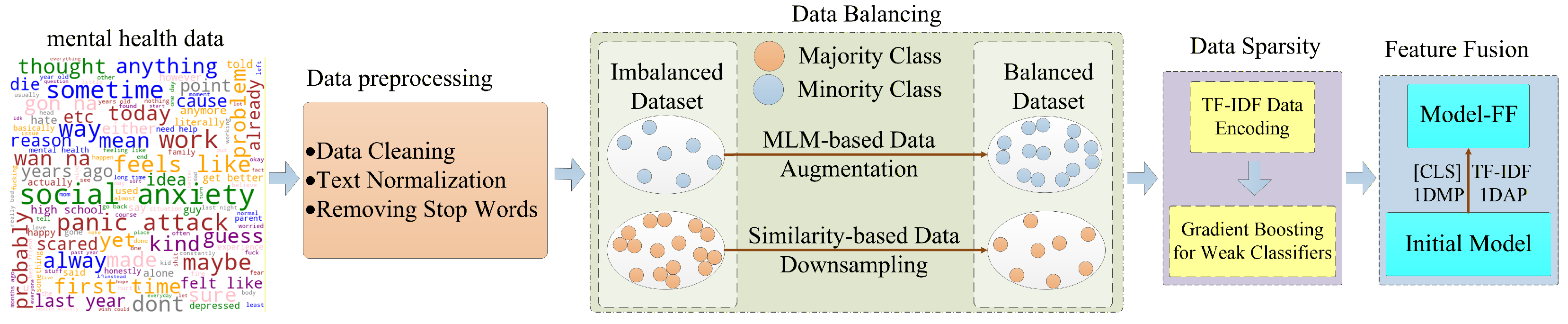

To further improve the model’s classification performance, the study also proposes optimization strategies. To address the class imbalance in mental health datasets, data augmentation and undersampling techniques are employed. Data augmentation generates more samples of the minority class through Masked Language Modeling (MLM) [5], while undersampling reduces the majority class samples, creating a balanced dataset. This approach helps the model avoid overfitting and enhances its ability to recognize minority classes. Additionally, the study improves the model structure by proposing an optimization strategy for multi-feature fusion. Traditional BERT models typically use only the [CLS] vector [5] for classification, but this study introduces a multi-feature fusion layer that integrates local features, global features, and Term Frequency–Inverse Document Frequency (TF-IDF) features. This enables the model to fully utilize the semantic information in the text, thereby further improving classification accuracy.

The methodology proposed in this study presents a novel framework for mental health analysis that establishes a distinct approach from recent large language model solutions like MentaLLaMA [6]. While existing methods primarily rely on model scaling and domain adaptation of large language models, our work introduces fundamental innovations at the feature representation level. The methodology begins with comprehensive data preprocessing and class balancing, followed by the development of an enhanced model through sophisticated feature integration techniques. The primary contributions of this study are outlined as follows:

Innovative Multi-Source Feature Fusion: We introduce a novel feature fusion mechanism that fundamentally differs from single-modality approaches. Unlike MentaLLaMA’s reliance on massive parameter tuning, our method achieves enhanced performance through intelligent integration of local, global, and TF-IDF features, creating a more comprehensive and nuanced representation for mental health classification.

Inherent Interpretability Framework: Our approach embeds explainability directly into the model architecture through attention-based fusion weights, providing transparent decision-making processes. This contrasts with post hoc explanation methods commonly used in transformer frameworks and offers more reliable interpretability for clinical applications compared to black-box large language models.

Computationally Efficient Alternative: The proposed framework demonstrates that strategic feature engineering can achieve competitive performance without the computational overhead of large language models. Our model-agnostic fusion module enhances any pre-trained transformer through efficient feature combination rather than costly parameter updates, making advanced mental health analysis more accessible and deployable.

Robust Learning Methodology: By combining data balancing techniques with gradient boosting optimization, our approach effectively addresses class imbalance and feature sparsity while maintaining strong generalization capabilities across diverse mental health categories.

2. Related Work

Mental health analysis has emerged as a critical subfield within Natural Language Processing (NLP). Early NLP approaches predominantly relied on rule-based systems and sentiment lexicons; however, these methods demonstrated significant limitations in capturing the nuanced and complex emotions associated with mental health signals.

With the advent of machine learning and deep learning, models such as Support Vector Machine (SVM), Random Forest (RF), Recurrent Neural Network (RNN), and Long Short-Term Memory (LSTM) networks have been extensively employed in text classification tasks. While these methods have achieved notable success in specific applications, they exhibit inherent shortcomings. Traditional models, such as SVM and RF, are heavily dependent on manual feature engineering, which can be labor-intensive and prone to bias. On the other hand, sequential models like RNN and LSTM, though capable of learning patterns in sequential data, often struggle to fully capture long-range contextual dependencies and the intricate diversity of sentiment and mental health classifications. These limitations underscore the need for more advanced methods to address the complexity inherent in mental health analysis.

For instance, Jina K et al. [7] analyzed 488,472 posts from 248,537 users in the mental health community on Reddit. Using TF-IDF vectors with an XGBoost classifier, along with Continuous Bag of Words (CBOW) and a convolutional neural network (CNN) classifier, they evaluated users’ mental states. Similarly, to assess the psychological impact of COVID-19, Banna et al. [8] combined LSTM and CNN models to examine Twitter data, revealing insights into the pandemic’s impact on mental health. Tariq S et al. [9] used TF-IDF to extract features from texts and adopted the semi-supervised Co-training approach to construct SVM, RF and Naive Bayes (NB) models to predict mental illness, showing that collaborative training techniques can be highly effective in predicting mental illness. Other researchers have used multinomial NB, multi-layer perceptron and LightGBM to classify Reddit posts [10], highlighting the challenges posed by the informal language, acronyms, slang, and noise typical of social media data. Further, a “one-shot decision approach” has been explored to assess individuals at risk of developing psychological disorders, using an integrated model combining SVM and K-Nearest Neighbors (KNN), with noise label correction methods and intrinsic interpretability to distinguish between depressive symptoms and suicidal thoughts [11]. Another study constructed a deep learning framework based on Bi-LSTM to classify the emotions of COVID-19-related discussions on social media [12], outperforming the traditional LSTM model and other techniques.

In recent years, pre-trained language models based on the Transformer architecture, such as BERT [5] and GPT [13], have provided transformative solutions to longstanding challenges in text analysis. By effectively capturing contextual information, dynamically generating word embeddings, and minimizing the reliance on manual feature engineering, these models have significantly advanced the performance and generalization capabilities of NLP systems, particularly in sentiment analysis.

Models like BERT [5] and GPT [13] exploit the self-attention mechanism inherent to the Transformer architecture to address long-range dependency issues. Unlike the sequential processing paradigm of RNN and LSTM, self-attention enables parallel computation, assigning distinct attention weights to words based on their contextual relevance to other words within the text. This allows the models to accurately capture context in lengthy and complex texts. The incorporation of transfer learning further enhances these models’ utility. Through large-scale pre-training on extensive text corpora, these models develop rich and robust language representations. During fine-tuning, they can rapidly adapt to task-specific requirements, demonstrating strong performance even on datasets characterized by class imbalance. Additionally, ongoing research explores various optimization strategies to refine these models further, broadening their applicability to mental health analysis and related domains.

Dinu A et al. [14] used three pre-trained models, BERT, RoBERTa and XLNet, to train on the SMHD mental health status dataset from Reddit, achieving state-of-the-art performance in post-level classification. Another study collected a dataset of 13 topics on Reddit, with five topics representing mental illnesses and eight representing no mental illness [15]. Results showed that RoBERTa outperformed LSTM and BERT in mental illness analysis. Some studies have also built typologies for social media content based on psychological theory, such as DEPTWEET, a dataset with 40,191 tweets and crowdsourced tags [16]. Using BERT and DistilBERT, it was found that accurate depression diagnosis depends on models’ abilities to understand context and distinguish between different levels of depression severity. Finally, Ameer I et al. [17] used unstructured user data from Reddit to train multiple models across traditional machine learning, deep learning and transfer learning, with RoBERTa achieving the highest classification accuracy and F1 score for mental health analysis. Yang K et al. [6] applied large language models (LLMs) to explainable mental health analysis on social media. Although LLMs did not match the performance of existing supervised methods in zero-shot and few-shot settings, the researchers reframed explainable mental health analysis as a text generation task and constructed the IMHI dataset. Training the LLaMA2 model on the IMHI dataset led to results comparable to the most advanced discriminative methods.

Recent approaches like MentaLLaMA [6] showcase the potential of adapting large language models for mental health analysis. However, this study establishes a distinct path: instead of scaling model size, we enhance performance through feature-level innovation. We propose a portable fusion module that upgrades any pre-trained transformer without costly fine-tuning, while embedding interpretability directly into the fusion process through meaningful attention weights, offering both transparency and efficiency absent in many complex frameworks.

3. Proposed Approach

The dataset used in this study (CounselChat) is a publicly available, pre-anonymized corpus hosted on Kaggle [18]. As no personally identifiable information was accessible and no human subjects were recruited, this study qualifies for exemption from ethical review under the Common Rule (45 CFR 46.104(d)(4)). All analyses adhered to Kaggle’s data use policy and the ACM Code of Ethics. The dataset consists of two primary attributes for each entry: Text and Category. It comprises 310,000 records distributed across eight distinct categories: depression, suicide, anxiety, social anxiety, eating disorders (edanonymous), health anxiety, addiction, and alcoholism. However, the dataset exhibits significant class imbalance, with certain categories containing disproportionately higher numbers of entries than others. This imbalance poses a substantial challenge for accurate analysis and classification. To address these challenges, this study implements a comprehensive methodological framework encompassing data preprocessing, class balancing, feature fusion, and transfer learning, as illustrated in Figure 1. These measures collectively aim to enhance the representational quality of the data and improve model performance across imbalanced categories.

3.1. Data Preprocessing Principles

In Natural Language Processing (NLP), data preprocessing constitutes a critical phase that significantly influences the model’s efficacy and the precision of its outcomes. This study outlines the systematic preprocessing steps undertaken to maintain data integrity and enhance the robustness of the model’s predictions. The preprocessing pipeline comprises the following steps:

3.1.1. Data Cleaning

Removal of long texts: Text entries with excessively high word counts are identified and excluded to ensure consistency in dataset length and quality.

Lowercasing: All text entries are converted to lowercase to establish a uniform data format and eliminate case-sensitive discrepancies.

Elimination of URLs and excessive whitespace: URLs and unnecessary whitespace are removed, as they introduce noise and can adversely affect the model’s training process.

Removal of punctuation and numerical values: Punctuation marks and numbers are stripped from the text, as they typically do not contribute to sentiment or thematic analysis.

3.1.2. Standardization

Stemming: Words are reduced to their root forms, consolidating morphological variants into a unified representation. This simplification minimizes lexical diversity and facilitates more efficient model learning.

Morphological reduction: Similarly to stemming, morphological reduction removes affixes, enabling the model to treat semantically similar or related words as equivalent. This approach enhances the model’s interpretative capability and reduces redundancy in word representations.

3.1.3. Deletion of Stop Words

Elimination of English stop words: Frequently used words such as articles, prepositions, and conjunctions, which contribute little semantic value, are removed. By focusing on the most semantically significant terms, this step highlights key features in the text, improving the model’s focus and analytical performance.

By employing these preprocessing strategies, the study ensures that the data is not only cleaner and more uniform but also optimized for extracting meaningful patterns and improving model accuracy.
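The cleaning and stop-word steps above can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual pipeline: the `STOP_WORDS` set is a deliberately tiny hypothetical list, `clean_text` and `MAX_WORDS` are names introduced here, the 500-word limit is the threshold reported later in Section 4.2, and stemming is omitted because it would require an external library.

```python
import re
import string

# Hypothetical mini stop-word list for illustration only; a real pipeline
# would load a full English stop-word list.
STOP_WORDS = {"a", "an", "the", "and", "or", "but", "in", "on", "at", "of", "to", "is", "i"}

MAX_WORDS = 500  # assumed length threshold (the value reported in Section 4.2)

URL_RE = re.compile(r"https?://\S+|www\.\S+")
PUNCT_DIGIT_RE = re.compile(f"[{re.escape(string.punctuation)}0-9]")


def clean_text(text):
    """Return the cleaned text, or None if the entry exceeds MAX_WORDS."""
    if len(text.split()) > MAX_WORDS:
        return None                           # removal of long texts
    text = text.lower()                       # lowercasing
    text = URL_RE.sub(" ", text)              # strip URLs
    text = PUNCT_DIGIT_RE.sub(" ", text)      # strip punctuation and numbers
    tokens = [t for t in text.split() if t not in STOP_WORDS]  # drop stop words
    return " ".join(tokens)                   # split/join also collapses whitespace
```

URLs are removed before punctuation so that a link is deleted as a whole rather than being broken into word fragments.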

3.2. Data Balancing Mechanism

Imbalanced datasets often bias models toward majority classes, significantly impairing their ability to accurately identify minority categories and reducing overall predictive performance. To address this issue and enhance the model’s detection capabilities, this study employs a combination of data-level optimization strategies, including data augmentation and data balancing techniques.

For data augmentation, the study utilizes the Masked Language Modeling (MLM) method. This approach involves randomly selecting 15% of the words in a sentence, with 80% of the selected words masked, 10% replaced with random words, and 10% left unchanged. The pre-trained BERT model is then employed to predict the masked words, which are subsequently reintegrated into the original sentence to generate new text variants. To ensure data uniqueness, any duplicate variants generated during this process are removed. This technique effectively diversifies the dataset by increasing the representation of minority class samples and introducing linguistic variability, thereby enhancing the model’s generalization capabilities. Furthermore, the introduction of noise and textual variations improves the model’s robustness, enabling it to better capture contextual and semantic relationships and achieve more stable performance on complex classification tasks.
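The selection rule described above (15% of tokens chosen, then an 80/10/10 split) can be sketched as follows. This is an illustrative sketch under stated assumptions: `fill_mask` is a hypothetical callable standing in for the pre-trained BERT predictor, `vocab` is a hypothetical word list used for random replacement, and the function names are introduced here rather than taken from the study.

```python
import random

MASK = "[MASK]"


def corrupt_tokens(tokens, vocab, rng, select_prob=0.15):
    """Apply the 15% selection and 80/10/10 corruption rule to a token list.

    Returns the corrupted tokens and the indices of the selected positions.
    """
    corrupted = list(tokens)
    selected = []
    for i in range(len(tokens)):
        if rng.random() >= select_prob:
            continue                          # position not selected
        selected.append(i)
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random word
        # remaining 10%: leave the token unchanged
    return corrupted, selected


def augment(tokens, vocab, fill_mask, rng, n_variants=5):
    """Generate unique text variants that differ from the original sentence."""
    original = " ".join(tokens)
    variants = set()                          # a set discards duplicate variants
    for _ in range(n_variants):
        corrupted, _selected = corrupt_tokens(tokens, vocab, rng)
        filled = [fill_mask(corrupted, i) if tok == MASK else tok
                  for i, tok in enumerate(corrupted)]
        variant = " ".join(filled)
        if variant != original:
            variants.add(variant)
    return sorted(variants)
```

In practice `fill_mask` would wrap a masked-language-model prediction for position `i`; passing a seeded `random.Random` instance as `rng` keeps the augmentation reproducible.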

To further mitigate the detrimental effects of data imbalance, the study implements a data balancing strategy using similarity-based downsampling for majority class samples. Following the methodology proposed by Reimers et al. [19], this approach calculates the Cosine Similarity between samples. This metric is well-suited for measuring the semantic similarity of high-dimensional text feature vectors, as it effectively captures the inherent semantic relationships between text samples and helps accurately identify and retain representative samples in the dataset. Cosine Similarity scores range from −1 to 1, and samples with a score exceeding 0.75 are deemed redundant, with only one representative sample retained. This strategy reduces the over-representation of majority classes while preserving data diversity, thereby improving the model’s ability to learn balanced decision boundaries across categories.
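A minimal sketch of similarity-based downsampling with the 0.75 threshold is given below. The source does not specify how the retained representative is chosen, so the greedy first-seen rule here is an assumption, as are the function names; the embeddings are assumed to be precomputed sentence vectors.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def downsample(embeddings, threshold=0.75):
    """Greedy deduplication: keep a sample only if its similarity to every
    previously kept sample does not exceed the threshold.

    Returns the indices of the retained samples.
    """
    kept = []
    for i, emb in enumerate(embeddings):
        if all(cosine(emb, embeddings[j]) <= threshold for j in kept):
            kept.append(i)
    return kept


# Two near-duplicate pairs: one representative of each pair is retained.
vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
print(downsample(vectors))  # [0, 2]
```

This pairwise version costs a quadratic number of comparisons in the worst case; for a corpus of the size described here, an approximate nearest-neighbor index would likely be needed.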

3.3. Data Encoding and Data Sparsity

To alleviate the sparsity of TF-IDF features and construct an efficient classifier, this study designs a hybrid TF-IDF-based classifier construction workflow (as shown in Algorithm 1). This workflow integrates feature encoding and gradient boosting strategies, enabling the effective utilization of sparse data while retaining the discriminative power of TF-IDF representations.

The text content is converted into dimension-adjustable TF-IDF word vectors using TfidfVectorizer [20], a widely adopted vectorization technique in text mining and information retrieval. This method transforms document collections into numerical feature representations based on the term frequency–inverse document frequency (TF-IDF) metric, which effectively balances local term importance against global distribution patterns [21].

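The TF-IDF value for a term $t$ in document $d$ is calculated as:

$$\mathrm{TF\text{-}IDF}(t, d) = \mathrm{TF}(t, d) \times \mathrm{IDF}(t)$$

where $\mathrm{TF}(t, d)$ represents the frequency of term $t$ in document $d$, and $\mathrm{IDF}(t)$ quantifies the term’s rarity across the document collection [21].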

TF-IDF assigns higher weights to terms that appear frequently within a specific document but rarely across the entire corpus, thereby identifying terms with strong discriminative power for document classification [22]. This characteristic makes TF-IDF particularly valuable for capturing distinctive lexical patterns in textual data.

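After transformation, the input text is represented as a word vector array $x = [x_1, x_2, \ldots, x_n]$, where $x_i$ denotes the $i$-th word vector. The sparsity $S$ of the resulting representation is defined as:

$$S = \frac{N_{\mathrm{zero}}(x)}{N_{\mathrm{total}}(x)}$$

where $N_{\mathrm{total}}(x)$ indicates the total number of elements in $x$, and $N_{\mathrm{zero}}(x)$ represents the count of zero elements in $x$ [23].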
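To make the TF-IDF weighting and the sparsity measure concrete, the following sketch computes both on a three-document toy corpus. It assumes raw term counts for TF and the unsmoothed $\log(N/\mathrm{df})$ form for IDF; scikit-learn's TfidfVectorizer uses a smoothed IDF and L2 normalization by default, so its numbers would differ. The function names and the toy corpus are introduced here for illustration.

```python
import math
from collections import Counter


def tfidf_matrix(docs):
    """Return (vocabulary, rows) where rows[d][j] = tf(t_j, d) * idf(t_j).

    Assumes whitespace tokenization, raw counts for TF, and
    idf(t) = log(N / df(t)) without smoothing.
    """
    tokenized = [doc.split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(docs)
    doc_freq = Counter(tok for toks in tokenized for tok in set(toks))
    idf = {tok: math.log(n_docs / doc_freq[tok]) for tok in vocab}
    rows = []
    for toks in tokenized:
        tf = Counter(toks)
        rows.append([tf[tok] * idf[tok] for tok in vocab])
    return vocab, rows


def sparsity(rows):
    """Fraction of zero entries in the feature matrix."""
    total = sum(len(row) for row in rows)
    zeros = sum(1 for row in rows for value in row if value == 0)
    return zeros / total


docs = ["sad tired sad", "tired happy", "anxious tired"]
vocab, rows = tfidf_matrix(docs)
print(vocab)           # ['anxious', 'happy', 'sad', 'tired']
print(sparsity(rows))  # 0.75
```

The term "tired" occurs in every document, so its IDF is zero and it contributes nothing, while "sad" receives the highest weight in the first document. Even on this toy corpus, three quarters of the entries are zero, which illustrates why sparsity grows quickly with vocabulary size.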

| Algorithm 1 Hybrid TF-IDF-Based Classifier Construction for Addressing Data Sparsity. Proposed approaches for data sparsity. |
| 1. Procedure Input: ▷ Preprocessed dataset |
| 2. Output |
| 3. for t in range(): ▷ Gradient classifier |
| 4. if |
| 5. |
| 6. |
| 7. |
| 8. return |
| 9. for in range(): ▷ Building classifier |
| 10. |
| 11. |
| 12. |
| 13. |
| 14. |
| 15. return |

This study selects TF-IDF as an external feature based on its optimal balance between feature complementarity, computational efficiency, and model adaptability. TF-IDF quantifies term importance from a statistical perspective, effectively compensating for the limitations of pre-trained models in capturing explicit textual features, thereby establishing a powerful complementary relationship. Compared to psychologically-informed linguistic features that rely on external resources or N-gram features that may cause dimensionality issues, TF-IDF can be efficiently computed using only the text data itself, offering notable engineering advantages [20]. Furthermore, its sparse vector representation can be seamlessly integrated into deep learning frameworks via attention mechanisms, enabling efficient information compression and fusion.

3.4. Classifier Design

After obtaining TF-IDF features, we apply gradient boosting [24] with weak classifier models to mitigate sparsity in the feature space. The algorithm follows the standard gradient boosting framework [24,25], where at each iteration $m$, the model is updated by focusing on misclassified samples from previous iterations.

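The iterative construction process of the classifier adheres to the gradient boosting framework, with specific implementation steps detailed in the classifier building phase of Algorithm 1 (Steps 9–15). The pseudo-residuals $r_{im}$ are calculated as the negative gradient of the loss function:

$$r_{im} = -\left[\frac{\partial L(y_i, F(x_i))}{\partial F(x_i)}\right]_{F = F_{m-1}}$$

The optimal step size $\gamma_m$ is determined by minimizing the loss function:

$$\gamma_m = \arg\min_{\gamma} \sum_{i=1}^{n} L\big(y_i, F_{m-1}(x_i) + \gamma h_m(x_i)\big)$$

where, in the standard notation of [24], $L$ is the loss function, $F_{m-1}$ is the ensemble after $m-1$ iterations, and $h_m$ is the weak learner fitted to the pseudo-residuals at iteration $m$. This approach effectively reduces classification errors while maintaining model robustness across training epochs, with the learning rate controlling the contribution of each weak learner to the ensemble [24].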
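The boosting loop can be illustrated with a small self-contained sketch. To keep it short it assumes a squared-error loss and depth-1 regression stumps as weak learners, which is a simplification of the classification setting used in this study; the function names are introduced here. With squared error, the negative gradient is simply the residual, and the line search for the step size has a closed form.

```python
def fit_stump(X, r):
    """Fit a depth-1 regression tree (weak learner) to the residuals r."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            left = [r[i] for i, row in enumerate(X) if row[j] <= thr]
            right = [r[i] for i, row in enumerate(X) if row[j] > thr]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            err = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
            if best is None or err < best[0]:
                best = (err, j, thr, lm, rm)
    if best is None:                  # no valid split: constant predictor
        mean = sum(r) / len(r)
        return lambda row: mean
    _, j, thr, lm, rm = best
    return lambda row: lm if row[j] <= thr else rm


def gradient_boost(X, y, n_rounds=20, learning_rate=0.1):
    """Gradient boosting for the squared loss L = 0.5 * (y - F)^2."""
    f0 = sum(y) / len(y)              # initial constant model
    learners = []

    def predict(row):
        return f0 + sum(learning_rate * g * h(row) for g, h in learners)

    for _ in range(n_rounds):
        preds = [predict(row) for row in X]
        # Pseudo-residuals: negative gradient of the squared loss.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        h = fit_stump(X, residuals)
        hx = [h(row) for row in X]
        # Closed-form line search for the step size under squared loss.
        denom = sum(v * v for v in hx)
        gamma = sum(ri * vi for ri, vi in zip(residuals, hx)) / denom if denom else 0.0
        learners.append((gamma, h))
    return predict
```

For least-squares stumps under squared loss the line search returns a step size of 1, so it is included only to mirror the general formulation; with other losses (for example log-loss for classification) the step size generally differs from 1. The learning rate then shrinks each learner's contribution to the ensemble.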

3.5. Feature Fusion

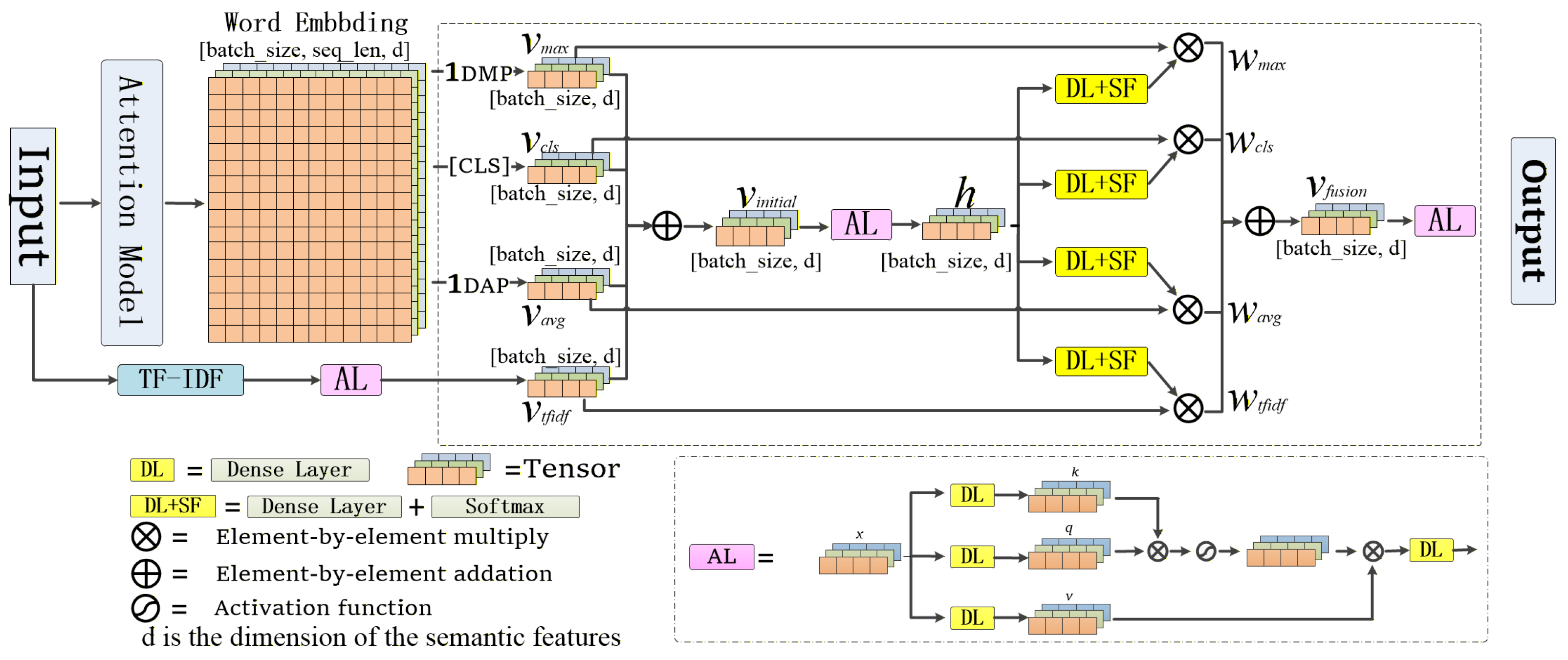

To further improve model performance, this study refines how the pre-trained model is applied to text classification. Traditional pre-trained models such as BERT use only the [CLS] vector of the output hidden layer for classification and do not make full use of global features. To meet the needs of sentiment analysis, this study proposes a multi-feature fusion attention layer that integrates the original features of the pre-trained model, its global features, and TF-IDF (Term Frequency–Inverse Document Frequency) features, which measure the importance of a word within the document set. The resulting fused features are fed into the classifier for prediction. The structure of the multi-feature fusion attention layer is shown in Figure 2. The experimental results show that the performance of the pre-trained models after feature fusion is significantly improved, which verifies the effectiveness of the feature fusion strategy.

The multi-source heterogeneous feature fusion method proposed in this study is based on an attention mechanism, which can adaptively adjust the contribution of different features to the classification task. The specific implementation is as follows:

First, multiple semantic features are extracted from the pre-trained model: the embedding vector corresponding to the [CLS] token in the last hidden layer is extracted as the contextual semantic feature, denoted as $f_{\mathrm{cls}}$; the global average pooling feature, denoted as $f_{\mathrm{avg}}$, is obtained by taking the mean of the last hidden state tensor along the sequence dimension; the global max pooling feature, denoted as $f_{\mathrm{max}}$, is obtained by taking the maximum of the last hidden state tensor along the sequence dimension. These features capture the deep semantic information and contextual relationships of the text.
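The three pooled features can be sketched on a plain nested list standing in for the last hidden state of one input. The sketch assumes the [CLS] token sits at position 0 and that no padding tokens are present; the function and variable names are introduced here for illustration.

```python
def pooled_features(hidden_states):
    """Extract [CLS], mean-pooled, and max-pooled features.

    hidden_states: list of L token vectors (each of length d) from the last
    hidden layer of one sequence, with the [CLS] token at position 0.
    """
    f_cls = list(hidden_states[0])
    columns = list(zip(*hidden_states))          # one tuple per hidden dimension
    f_avg = [sum(col) / len(col) for col in columns]
    f_max = [max(col) for col in columns]
    return f_cls, f_avg, f_max


states = [[1.0, -2.0], [3.0, 0.0], [2.0, 4.0]]   # L = 3 tokens, d = 2
f_cls, f_avg, f_max = pooled_features(states)
print(f_cls, f_max)  # [1.0, -2.0] [3.0, 4.0]
```

With batched, padded inputs, the mean and maximum would normally be computed only over non-padding positions using the attention mask.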

Second, the TF-IDF feature is processed: the term frequency–inverse document frequency is calculated for the input text to obtain a sparse vector $v$ of dimension $d_v$. This sparse vector is mapped to a dense vector, denoted as $f_{\mathrm{tfidf}}$, with the same dimension as the semantic features through a single-head self-attention layer. It provides importance information at the term frequency statistical level. The single-head self-attention layer maps the sparse vector to query vector $Q$, key vector $K$, and value vector $V$ through linear transformation matrices $W_Q$, $W_K$, and $W_V$, and computes the dense vector $f_{\mathrm{tfidf}}$ via the Softmax function:

$$f_{\mathrm{tfidf}} = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d}}\right)V$$

where $d$ is the dimension of the semantic features.

Then, the initial fusion of the four features is performed:

The initial fused features are fed into an attention layer to generate an attention-weighted feature representation:

The feature $h$ generated by the attention layer is input into four independent weight generation modules, each consisting of a fully connected layer and a Softmax activation function, to generate dedicated weight vectors for the corresponding features:

$$w_i = \mathrm{Softmax}\big(\mathrm{FC}_i(h)\big), \quad i = 1, \ldots, 4$$

where $w_i$ is the weight vector for the $i$-th feature $f_i$ (the [CLS], average-pooled, max-pooled, and dense TF-IDF features, respectively). Each $\mathrm{FC}_i$ has independent parameters, enabling the model to learn customized weight distribution patterns for different features, thereby enhancing the model’s expressive power.

The weight vectors are used to perform element-wise re-weighting of each original feature, and the re-weighted features are summed to obtain the final fused feature:

$$f_{\mathrm{fused}} = \sum_{i=1}^{4} w_i \odot f_i$$

where the ⊙ symbol denotes element-wise multiplication, used to achieve fine-grained adjustment at the feature level.

The fused feature retains complete feature information and is input into the classifier. The final category probability distribution is output through the Softmax function.
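The weight generation and re-weighting steps can be sketched as follows. This is a hedged illustration rather than the study's implementation: it assumes the attention output `h` has the same dimension as each feature, represents each weight generation module as a hypothetical `(W, b)` pair for one fully connected layer, and uses function names introduced here.

```python
import math


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def linear(W, b, x):
    """Fully connected layer: returns W @ x + b for list-based inputs."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def fuse(features, h, params):
    """Re-weight each feature element-wise and sum the results.

    features: list of k feature vectors, each of length d
    h:        attention output used to generate the weights (assumed length d)
    params:   list of k (W, b) pairs, one independent module per feature
    """
    d = len(features[0])
    fused = [0.0] * d
    weights = []
    for f, (W, b) in zip(features, params):
        w = softmax(linear(W, b, h))      # dedicated weight vector for f
        weights.append(w)
        for j in range(d):
            fused[j] += w[j] * f[j]       # element-wise product, then sum
    return fused, weights
```

Because each module has its own parameters, the returned `weights` can be inspected per feature, which is the basis for the interpretability claim: a larger weight on a feature's dimensions indicates a larger contribution to the fused representation.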

The model adopts a hierarchical processing architecture that establishes a distinct paradigm from scale-based approaches like MentaLLaMA [6]. Rather than relying on massive parameter tuning of large language models, our feature-centric methodology sequentially performs feature extraction from pre-trained models and statistical methods, followed by attention-based cross-feature interaction for adaptive multi-source fusion, and finally completes classification using the integrated representations. This design draws inspiration from cross-modal fusion principles but implements them within a single modality through a model-agnostic fusion layer that simultaneously enhances expressive power and provides inherent interpretability, achieving performance gains while maintaining computational efficiency and transparency unavailable in resource-intensive LLM solutions.

3.6. Computational Complexity Analysis

To assess the computational efficiency of the proposed feature fusion mechanism, this section analyzes its computational complexity and compares it with current mainstream feature fusion strategies.

Assume the input feature dimension is d, the number of features is k (where k = 4 in this study), and the sequence length is L.

3.6.1. Proposed Multi-Source Attention Fusion Mechanism

The computational cost of our method primarily stems from two parts:

Multi-source Feature Extraction: Extracting the CLS token, average pooling, and max pooling features from the pre-trained model has a time complexity of .

Attention Fusion Layer: This layer first generates weight vectors through independent attention modules, followed by element-wise weighted summation. Its time complexity is dominated by linear transformations, amounting to , where k is the number of features.

Consequently, the overall time complexity of the proposed method is . As it involves only linear projections and element-wise operations, the space complexity is also maintained at a reasonable level of .

3.6.2. Comparison with Mainstream Fusion Strategies

To highlight the efficiency of our method, we compare it with two common fusion strategies:

Simple Concatenation Followed by Attention: This method first concatenates multiple feature vectors and then computes attention on the resulting high-dimensional vector, leading to a high time complexity of . The computational cost increases quadratically with the number of features k.

Convolutional Block Attention Module (CBAM): While generic attention fusion modules like CBAM perform excellently in computer vision, their included channel and spatial attention mechanisms require structural adjustments when adapted to text sequences. Their complexity is , which may not be optimal for computational efficiency in the textual modality.

3.6.3. Comprehensive Analysis

Table 1 below comprehensively compares the complexity and characteristics of different fusion strategies:

The analysis results demonstrate that the proposed method achieves a good balance between computational complexity and feature interaction capability. Compared to the simple concatenation strategy, our method avoids computing attention in ultra-high-dimensional spaces by utilizing independent and parallel weight generation paths, thereby achieving finer-grained feature fusion at a lower computational cost.

4. Results and Analysis

4.1. Experiments Methods

Traditional machine learning methods (e.g., Support Vector Machine, Random Forest, Naive Bayes) require additional feature extraction steps and often struggle to capture contextual nuances in text. Conventional deep learning models likewise tend to face challenges in handling context effectively, limiting their utility for complex text sentiment analysis tasks. This study therefore focuses primarily on pre-trained models with attention mechanisms, which offer enhanced capabilities for handling context in NLP tasks.

Pre-trained models built on the Transformer architecture with an attention mechanism enable parallel processing and are well-suited for capturing long-range dependencies, providing significant advantages over traditional RNN-based models in both performance and stability. Additionally, pre-trained models demonstrate greater resilience when handling data imbalance, particularly through transfer learning and data augmentation. These techniques allow models to effectively capture features of minority class samples, improving accuracy in detecting rare emotional signals, which is essential in mental health analysis. This study uses a variety of pre-trained models based on the Transformer architecture, including:

GPT-2: Pre-trained on a large amount of text data through unsupervised learning; well suited to text generation [13].

BERT: Uses bidirectional pre-trained language representations, which have revolutionized various tasks in the field of NLP [5].

RoBERTa: Improves BERT performance through longer training, larger datasets, and removal of the NSP task [26].

DistilBERT: A lightweight version of BERT with fewer parameters but similar performance [27].

SpanBERT: Improves the model’s ability to recognize text spans by modifying the pre-training task [28].

ALBERT: Reduces BERT memory consumption through parameter sharing and model compression [29].

XLNet: Achieves bidirectional context learning by maximizing the expected likelihood over permutations of the factorization order, making up for BERT’s limitation of ignoring dependencies between masked positions [30].

In addition to the above models, this study also compares the traditional LSTM [31], GRU [32] and the latest MAMBA models [33].

4.2. Experimental Data Preprocessing

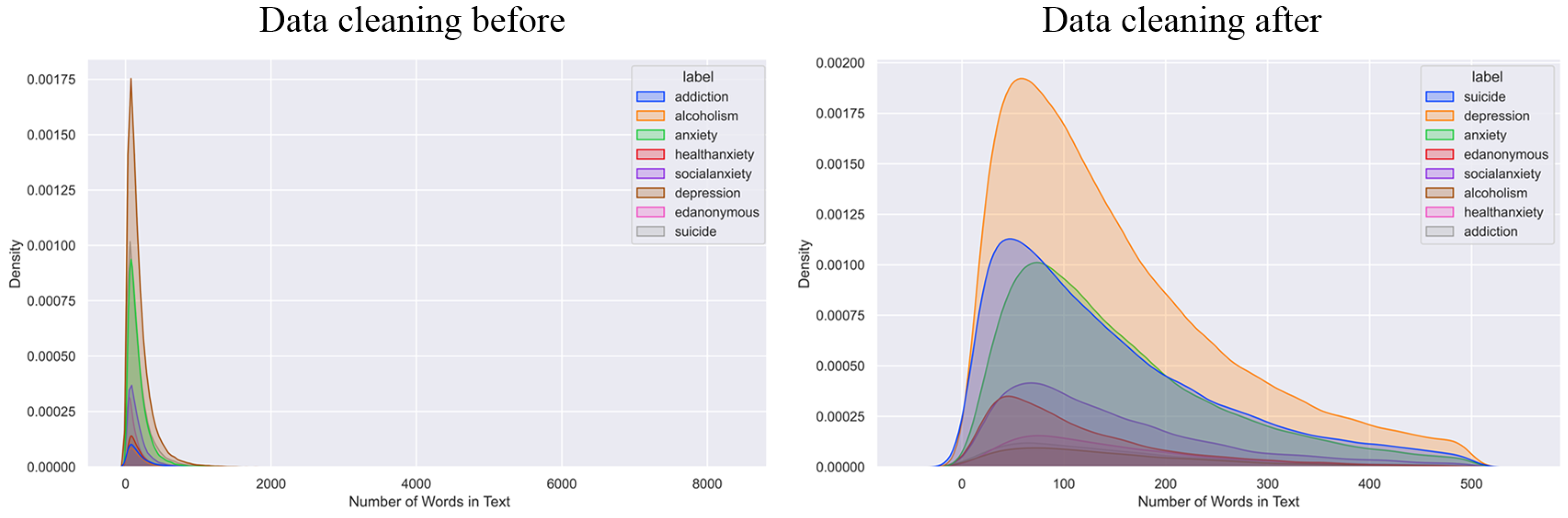

In the data cleaning experiment of Section 3, we plotted a kernel density estimate of the word counts for all categories of text in the original dataset (see Figure 3), which showed that over 99% of the texts had fewer than 1000 words, while very few had a word count between 1000 and 8000. This long-tailed distribution was deemed unsuitable for training, so a threshold of 500 words was established. Eliminating abnormally long text reduces noise and outliers, enabling the model to better capture key data features and improving the efficiency and accuracy of model training.

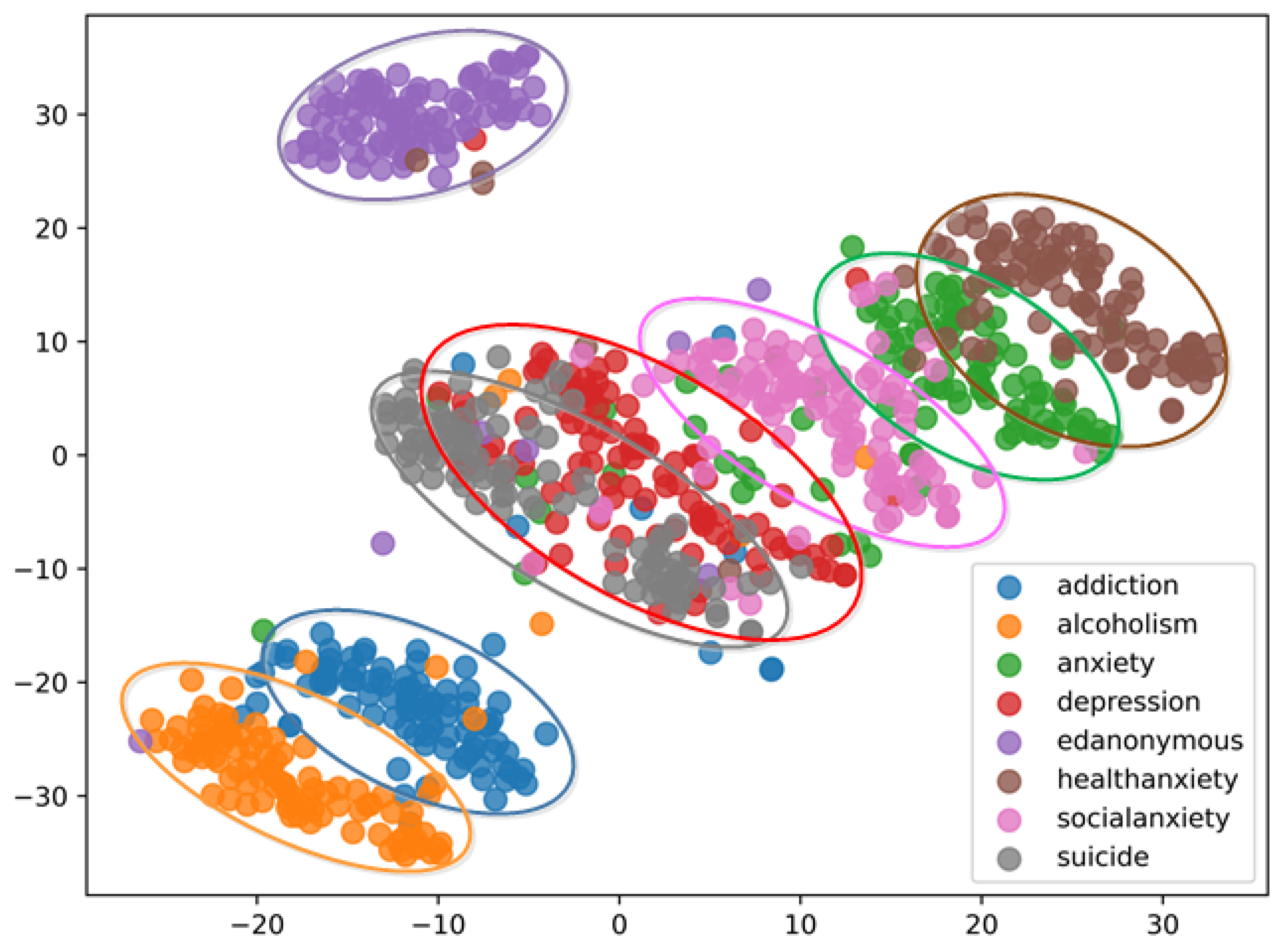

After data preprocessing, this study further investigates the correlations between different data categories. In this study, the original labels were refined to differentiate the categories of “anxiety,” “social anxiety,” and “health anxiety.” Although these three categories share some textual similarities, they exhibit discernible semantic differences. Other categories in the data set also showed notable correlations. For example, individuals with depression may show signs of suicidal ideation, and those with addiction could also struggle with alcoholism. These associations, while significant, are not absolute, as individuals may express and experience emotions uniquely. The goal of this study was to explore deeper correlations between emotional categories, rather than merely implementing simple emotional classifications or assessing mental health status through social media texts.

To investigate these correlations, we randomly selected 100 data samples from each category and converted them to word embeddings [

34,

35] to obtain relevant features. These features were subsequently dimensionally reduced using t-SNE [

36]. Outliers were identified using the IQR (interquartile range [

37]) method, and an optimal ellipse was fitted using the least-squares method, with the remaining data points falling within this ellipse.
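The outlier-removal step can be illustrated with a short sketch. It assumes the IQR rule is applied independently to each axis of the 2-D t-SNE output with the conventional 1.5 × IQR fences; neither detail is stated in the text, and the function names and toy points are hypothetical. The embedding, t-SNE, and ellipse-fitting steps are omitted.

```python
import statistics


def iqr_bounds(values, k=1.5):
    """Return the (lower, upper) fences q1 - k*IQR and q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def remove_outliers_2d(points, k=1.5):
    """Drop 2-D points that fall outside the fences on either axis."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_lo, x_hi = iqr_bounds(xs, k)
    y_lo, y_hi = iqr_bounds(ys, k)
    return [(x, y) for x, y in points if x_lo <= x <= x_hi and y_lo <= y <= y_hi]


# Hypothetical 2-D embedding of one category: a tight cluster plus one stray point.
points = [(0.0, 0.1), (0.2, -0.1), (-0.1, 0.0), (0.1, 0.2), (-0.2, -0.2),
          (0.3, 0.1), (0.0, -0.3), (9.0, 9.0)]
inliers = remove_outliers_2d(points)
```

Only the retained points would then be passed to the least-squares ellipse fit, so that a few stray samples do not inflate the ellipse drawn for a category.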

Figure 4 shows that the data points of the anonymous category formed an isolated cluster, indicating clear distinction from other categories. Analysis of other categories showed a strong correlation between addiction and alcoholism, with weaker associations to other categories. In contrast, the suicide and depression categories exhibited considerable overlap, underscoring a strong correlation. While social anxiety and health anxiety showed relative independence, they were still related to general anxiety. These insights align with previous discussions on category correlation. Furthermore, results from the confusion matrix in subsequent experiments were consistent with the category correlation shown in

Figure 4, as indicated by the false-positive rates.

4.3. Experimental Data Balancing

In the data enhancement and balancing experiments described in

Section 3, we adopted a dual strategy of similarity-based downsampling and data augmentation-based upsampling to balance the dataset, effectively reducing the dominance of majority class samples and achieving a more equitable sample distribution. Specifically, the most prevalent categories saw notable declines in both proportion and quantity—for example, the "depression" class dropped from 39% (108,224 samples) to 25% (75,752 samples), while the "suicide" class decreased from 22% (61,118 samples) to 15% (45,835 samples). In contrast, minority classes experienced significant growth: the "alcoholism" class, originally accounting for 2% (5513 samples), rose to 7% (21,951 samples), and the "healthanxiety" class increased from 3% (8191 samples) to 8% (24,539 samples). Detailed changes in the proportion and quantity of all classes before and after balancing are summarized in

Table 2.

By integrating these two complementary data optimization techniques, the study successfully mitigated issues of model overfitting, particularly concerning majority class samples. Additionally, the improved balance in the dataset significantly enhanced the model’s ability to classify minority class samples, leading to overall improvements in predictive performance and robustness.

4.4. Experimental Setup

4.4.1. Pre-Trained Model Configuration

Due to computational resource limitations, each model configuration was only tested once. To ensure the reliability of the results, we validated the proposed method on seven pre-trained models with different architectures. All experiments were conducted under the same training-validation-test split and hyperparameter settings. All transformer-based pre-trained models (GPT-2, BERT, RoBERTa, DistilBERT, SpanBERT, ALBERT, XLNet) were sourced from the HuggingFace Transformers library, utilizing the base versions of each architecture. The MAMBA model was obtained from its official GitHub [

38] repository. The models and their corresponding tokenizers were loaded using their respective dedicated classes.

Model Specifications

Models and tokenizers were loaded using their specific classes from HuggingFace (e.g., BertModel, RobertaTokenizer)

Base versions were selected for consistency across experiments

Model weights were initialized from pre-trained checkpoints without domain-specific pre-training.

4.4.2. Hyperparameter Configuration

Transformer-based Models (GPT-2, BERT, RoBERTa, DistilBERT, SpanBERT, ALBERT, XLNet) and MAMBA:

Pre-trained Source: HuggingFace Transformers (base versions)

MAMBA: Official GitHub repository

Learning Rate: 1 × 10⁻⁵

Batch Size: 16

Optimizer: Adam with default PyTorch parameters

Loss Function: CrossEntropyLoss

Maximum Epochs: 10

Early Stopping: Enabled, selecting the model with minimum validation loss

LSTM and GRU Models:

Learning Rate: 0.001 (default Adam optimizer in Keras)

Batch Size: 32

Optimizer: Adam with default Keras parameters (learning rate = 0.001, β₁ = 0.9, β₂ = 0.999, ε = 1 × 10⁻⁷)

Loss Function: Categorical crossentropy

Maximum Epochs: 50

Early Stopping: Enabled, based on validation loss minimization
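The two configurations and the early-stopping rule above can be summarized in a small framework-independent sketch. The learning rates, batch sizes, and epoch limits are taken from the lists above. The `patience` parameter, the class and function names, and the example loss curve are assumptions introduced for illustration, since the stopping criterion is described only as selecting the model with minimum validation loss.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    learning_rate: float
    batch_size: int
    max_epochs: int


# Values taken from the hyperparameter lists above.
TRANSFORMER_CFG = TrainConfig(learning_rate=1e-5, batch_size=16, max_epochs=10)
RNN_CFG = TrainConfig(learning_rate=1e-3, batch_size=32, max_epochs=50)


def select_best_epoch(val_losses, patience=3):
    """Return (best_epoch, stopped_epoch) under patience-based early stopping.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs (an assumed criterion); the epoch with
    the minimum validation loss seen so far is the one that is kept.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Hypothetical validation-loss curve for a 10-epoch run.
losses = [0.92, 0.71, 0.64, 0.66, 0.65, 0.67, 0.70, 0.72, 0.75, 0.80]
best, stopped = select_best_epoch(losses, patience=3)
```

With this toy curve, the checkpoint from epoch index 2 is kept and training halts at epoch index 5, well before the 10-epoch limit.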

4.4.3. Data Configuration

Train/Validation/Test Split: 8:1:1 ratio

Maximum Sequence Length: 512 tokens

Evaluation Metrics: Accuracy, Recall, F1-Score
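An 8:1:1 split can be sketched as below. This is an illustration only: it assumes a simple random shuffle with a fixed seed, whereas the text does not say whether the split was stratified by class, and the function name `split_811` and the seed value are hypothetical.

```python
import random


def split_811(samples, seed=42):
    """Shuffle and split samples into train/validation/test at an 8:1:1 ratio."""
    items = list(samples)
    random.Random(seed).shuffle(items)  # fixed seed so every model sees the same split
    n_train = len(items) * 8 // 10
    n_val = len(items) // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder, so no sample is dropped
    return train, val, test


train, val, test = split_811(range(1000))
```

Fixing the seed is one way to obtain the identical data splits across models that the evaluation protocol requires.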

4.4.4. Computational Environment

GPU: NVIDIA RTX 4060 Ti (16 GB VRAM)

CPU: Intel i5-12400

RAM: 32 GB

Python 3.9.18

PyTorch 2.1.2+cu121 (for transformer models)

TensorFlow-GPU 2.10.0 (for LSTM/GRU models)

HuggingFace Transformers library (for pre-trained models)

CUDA 12.1

4.4.5. Training Strategy

Transformer models utilized pre-trained weights from HuggingFace base versions

Consistent fine-tuning approach applied across all transformer models

Fixed learning rate schedule without decay for all models

Model selection based on early stopping with validation loss monitoring

Identical evaluation protocol across all models using the same data splits

The baseline models and the improved versions of some pre-trained models are evaluated on the original and enhanced datasets. Three evaluation metrics were examined: Accuracy, Recall, and F1 Score. Because the class distribution of the data is uneven, Recall and F1 Score are more indicative of the true performance of a model than Accuracy. Their calculation methods are as follows:
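Written per class in terms of true positives (TP), false positives (FP), and false negatives (FN), the standard definitions of these metrics are sketched below. Precision is included only because the F1 score depends on it. How the per-class values are averaged across the eight classes (macro or weighted) is not specified in this section and is left open.

```latex
\begin{align}
\text{Accuracy} &= \frac{\text{number of correctly classified samples}}{\text{total number of samples}} \\
\text{Precision}_c &= \frac{TP_c}{TP_c + FP_c} \\
\text{Recall}_c &= \frac{TP_c}{TP_c + FN_c} \\
\text{F1}_c &= \frac{2 \cdot \text{Precision}_c \cdot \text{Recall}_c}{\text{Precision}_c + \text{Recall}_c}
\end{align}
```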

4.5. Experimental Results

The initial evaluation of the models was conducted on the original dataset, with the results summarized in

Table 3. These outcomes serve as the baseline for comparative analysis in subsequent experiments.

Table 4 presents the evaluation results of the feature fusion models applied to the original dataset. The inclusion of the multi-feature fusion attention layer resulted in significant performance improvements for several models. Notably, the BERT model exhibited substantial gains, with recall increasing from 76.0% to 80.7% and the F1 score rising from 76.6% to 81.0%. Similarly, the SpanBERT model demonstrated marked improvement, with recall increasing from 74.9% to 81.8% and the F1 score improving from 76.7% to 81.9%. For both models, recall and F1 score rose by more than 4 percentage points. In contrast, the performance improvements for the RoBERTa model were more modest, with recall increasing from 81.2% to 82.0% and the F1 score rising from 81.6% to 82.5%. The GPT-2 model, however, experienced a slight decline in performance, with recall decreasing marginally from 80.% to 80.5% and the F1 score dropping from 80.8% to 80.5%. These results highlight the effectiveness of the multi-feature fusion attention layer in enhancing the performance of certain models, particularly BERT and SpanBERT, while also underscoring the varying impact of feature fusion across different architectures.

Additionally, this study investigates the impact of data balancing on model performance. By augmenting minority class data and downsampling majority class data, we enhance the model’s generalization capability and robustness, thereby improving its overall performance.

Table 5 presents the performance of various pre-trained models on the dataset after data augmentation and balancing. A comparison with the results in

Table 3 reveals significant improvements across all models. For instance, the accuracy of the RoBERTa model increased from 0.792 to 0.824, the recall improved from 0.812 to 0.856, and the F1 score rose from 0.816 to 0.857. Similarly, the XLNet model exhibited enhancements, with accuracy increasing from 0.788 to 0.822, recall from 0.801 to 0.854, and F1 score from 0.809 to 0.854. Other models also demonstrated notable performance gains, indicating that the data balancing strategy effectively improves the classification performance of the models. Finally, we evaluated the feature fusion model on a balanced dataset, and the results are shown in

Table 6. All models achieved Accuracy, Recall, and F1 scores above 0.8. Among them, the RoBERTa model performed best, with an accuracy of 0.832, a recall of 0.864, and an F1 score of 0.863, all exceeding 0.83. These results are better than those of the benchmark experiment (

Table 3), the data balancing experiment (

Table 5), and the feature fusion experiment (

Table 4). DistilBERT and XLNet also performed well across all metrics, further validating the effectiveness of feature fusion models on balanced datasets.

The experimental results demonstrate the consistent effectiveness of the proposed feature fusion strategy across multiple pre-trained architectures. Although computational constraints precluded multiple replicate experiments, the observed performance improvements showed remarkable consistency across all seven pre-trained models (see

Table 6). This cross-model stability provides compelling evidence that the performance gains stem from the methodological innovations rather than random variations, establishing a solid empirical foundation for our approach.

The enhanced models not only achieved significant improvements in accuracy, recall, and F1-score on the original dataset but also exhibited superior generalization capabilities and minority class recognition on balanced datasets. Particularly noteworthy is the framework’s robust performance in addressing data imbalance, where the feature fusion mechanism enables more accurate capture of minority class patterns. These findings validate the potential for extending these improvements to broader natural language processing tasks, including sentiment analysis, named entity recognition, and machine translation.

Furthermore, while direct numerical comparison with closed-source implementations like MentaLLaMA is impractical, our approach establishes distinct advantages in computational efficiency and transparency. The framework achieves competitive performance (e.g., BERT-FF F1: 0.855) without requiring massive parameter sets typical of 7B+ LLMs, significantly enhancing accessibility. More importantly, unlike the “black-box” predictions characteristic of many large language models, our architecture provides intrinsic explainability through fusion attention weights—a crucial feature for clinical applications where decision transparency is paramount.

4.6. Analysis of Ablation Study

As evidenced in

Table 7, the ablation study conducted on the augmented dataset consistently validates the efficacy of the proposed feature fusion strategy across diverse model architectures. The incremental integration of TF-IDF and global semantic features reliably contributes to performance gains in most configurations, confirming the complementary nature of statistical and deep semantic representations.

BERT demonstrates the most pronounced sensitivity to feature enrichment, with TF-IDF and global features providing substantial individual improvements of +1.2% and +1.3% in the F1-score, respectively. RoBERTa and DistilBERT exhibit more modest but consistent gains from the complete fusion framework (+0.6% and +0.9% F1-score). Notably, even in the case of ALBERT where individual components showed slight degradation, the full fusion mechanism demonstrated robust integrative capability, nearly recovering baseline performance and highlighting the framework’s adaptability.

The consistent positive trajectory across architectures underscores that the observed improvements are systematic rather than stochastic, with the attention-based fusion mechanism effectively leveraging multi-source information to enhance model discriminability.

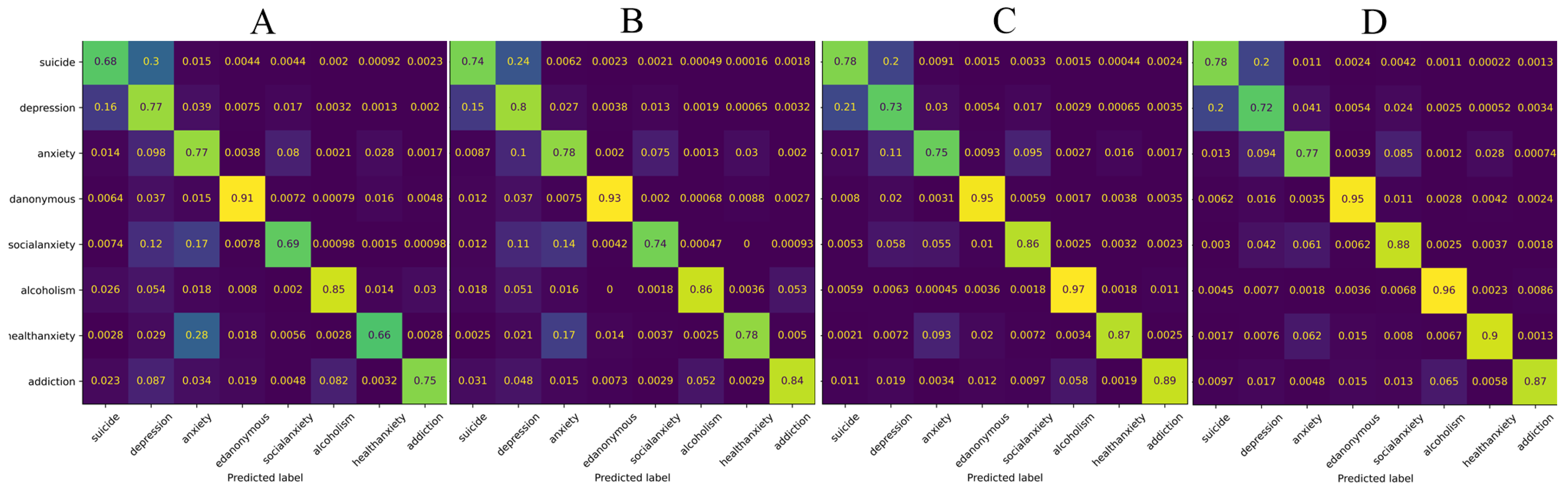

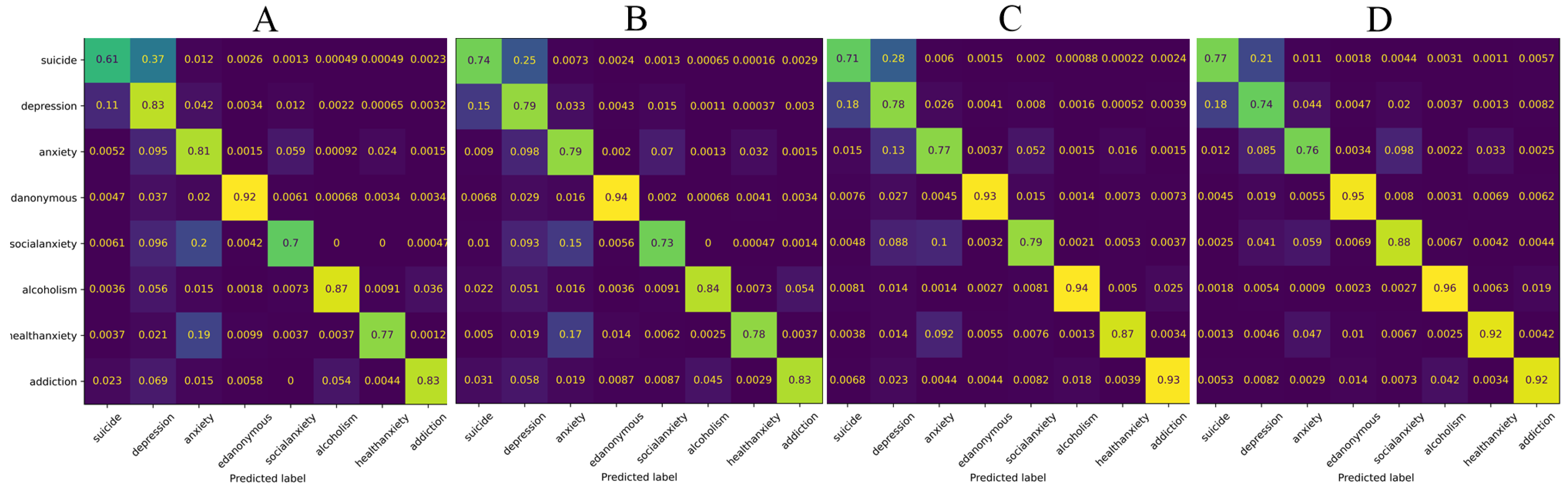

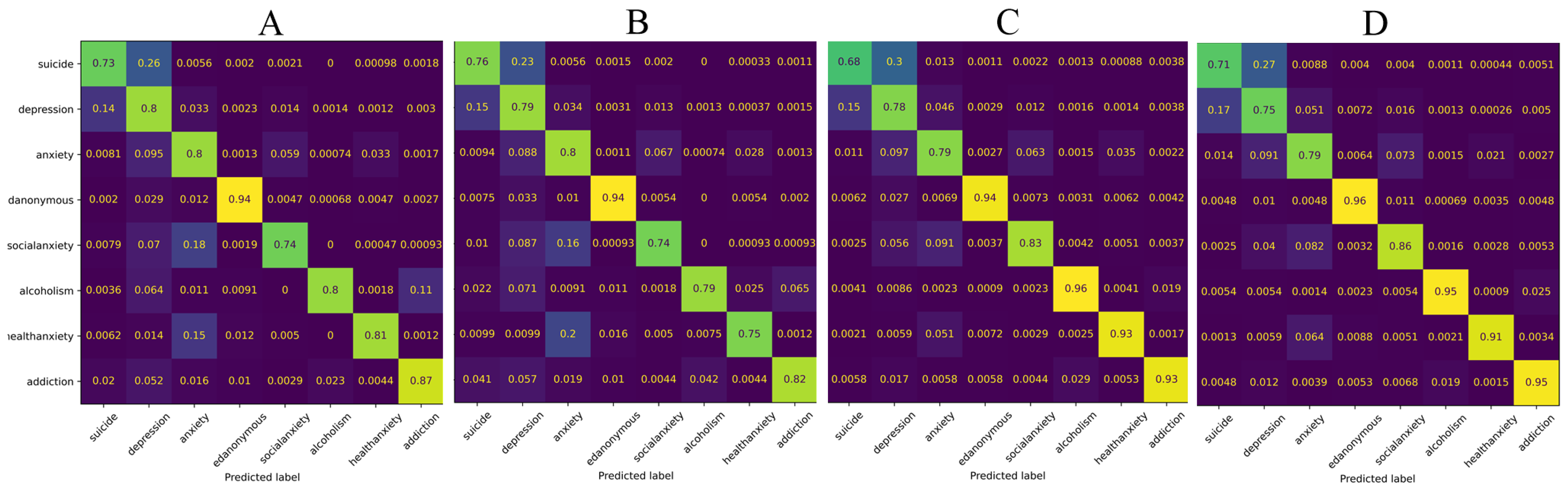

4.7. Model Classification Evaluation

To visualize which categories are most often confused with one another, we analyze the confusion matrices.

Figure 5,

Figure 6 and

Figure 7 show the confusion matrices of BERT, RoBERTa, and DistilBERT in the benchmark experiment (

Table 3), the feature fusion experiment (

Table 4), the data enhancement experiment (

Table 5), and the feature fusion and data enhancement combination experiment (

Table 6), respectively. Through comparative analysis of these confusion matrices, we found some correlation among the eight categories in the dataset used in this study (depression, suicide, anxiety, social anxiety, edanonymous, health anxiety, addiction, alcoholism). Among them, the edanonymous category was the one most often identified correctly by the model, while the confusion between depression and suicide was high, indicating a strong correlation between them. There is also a degree of confusion between suicide and anxiety. Although the direct correlation between health anxiety and social anxiety is not strong, both are correlated with the anxiety category.
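The kind of reading applied to the confusion matrices can be sketched in a few lines. The example assumes rows are true labels and columns are predicted labels. The counts are hypothetical values for three of the eight classes, chosen only to mimic the pattern described above and not taken from the figures, and the function names are illustrative.

```python
def per_class_recall(matrix, labels):
    """Recall per class: diagonal count divided by the row total (rows = true labels)."""
    return {
        label: (row[i] / sum(row) if sum(row) else 0.0)
        for i, (label, row) in enumerate(zip(labels, matrix))
    }


def most_confused(matrix, labels, top=3):
    """Return the `top` off-diagonal (true, predicted, count) cells, largest first."""
    cells = [
        (labels[i], labels[j], matrix[i][j])
        for i in range(len(labels))
        for j in range(len(labels))
        if i != j
    ]
    return sorted(cells, key=lambda c: c[2], reverse=True)[:top]


# Hypothetical counts; rows are true labels, columns are predictions.
labels = ["depression", "suicide", "edanonymous"]
matrix = [
    [70, 28, 2],
    [25, 73, 2],
    [1, 1, 98],
]
recall = per_class_recall(matrix, labels)
pairs = most_confused(matrix, labels, top=2)
```

In this toy matrix the two largest off-diagonal cells are depression predicted as suicide and suicide predicted as depression, while edanonymous has the highest recall, which is the pattern the analysis above reports.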

4.8. Model Classification Error Analysis

We selected some misclassified samples, shown in

Table 8. For instance, in Text 1, the statement “I just wish I could be happy and myself but I can’t” does not explicitly convey “suicide” but rather leans toward expressing “depression.” However, the emotional expression here is subtle, which makes it difficult for the model to accurately capture and classify the underlying sentiment. Such samples, with vague or unclear semantic expressions, often challenge the model’s ability to discern emotions in text, increasing classification difficulty. Similarly, Text 2 contains multiple statements (e.g., “Constant thought of suicide,” “All I want to do is end it,” “Mental institutions don’t help, therapist doesn’t help, medications don’t help,” and “I’m really intent on killing myself”) that clearly indicate suicidal thoughts. Despite this, the text is labeled as “depression,” rather than “suicide.” Such labeling inaccuracies can misguide the model during training, leading it to establish incorrect associations between emotions and categories. These errors directly impact prediction accuracy by confusing the boundaries between distinct emotional states. Additionally, Text 3 reflects “anxiety” (expressed through phrases like “my anxiety attacks insomnia” and “Anxiety has been affecting me academically and socially”), “depression” (e.g., “I have this unexplainable feeling of severe depression”), and even “suicide” (e.g., “wanting to just die and I already contemplate death every day”). Although Text 3 contains multiple emotional expressions, it is assigned only one label in the dataset. This issue, where multiple emotions correspond to a single label, complicates the model’s ability to handle complex emotional expressions. Such labeling disregards the diversity of emotions within the text, limiting the model’s capacity to accurately identify and distinguish different emotional features. 
These issues within the dataset, including unclear semantic expressions, label errors, and cases of multiple emotions assigned to a single label, not only reduce the accuracy of the model’s emotion classification but also hinder its generalization ability, affecting its performance in practical applications. They make it harder for the model to learn the nuanced relationships between emotions and text, especially in complex scenarios involving multiple emotions. To address these problems and improve model performance, more refined labeling and thorough data cleaning are necessary to ensure label accuracy and clarity of emotional expression. However, fine-grained labeling and cleaning of large amounts of text is time-consuming and costly. Manual annotation requires expertise to accurately distinguish between different emotions, and texts containing multiple complex emotions further increase the difficulty and workload of annotation. In addition, manual labeling is susceptible to subjective factors, and different annotators may judge the same text differently, which makes consistent labeling harder to achieve.

5. Conclusions

This study demonstrates the efficacy of employing pre-trained language models for emotional text classification, achieving substantial performance improvements through systematic data preprocessing and feature optimization. By implementing text standardization, noise reduction, and dataset simplification techniques, including lowercase conversion, stop word removal, and stemming, we enhanced the models’ capacity to interpret nuanced emotional expressions.

To address class imbalance challenges, we applied data augmentation and downsampling techniques that significantly improved minority class identification and generalization capabilities. The introduction of a novel feature fusion approach, integrating TF-IDF features with transformer-based representations, further optimized text feature characterization and substantially enhanced emotion classification performance.

Our experimental results validate the proposed methodology. Models such as RoBERTa and BERT, when enhanced with our feature fusion mechanism, achieved significant gains in accuracy, recall, and F1-score on the balanced dataset. More importantly, this work outlines a distinct and valuable research direction. In contrast to the prevailing trend of pursuing performance through model scaling, as exemplified by large language models (LLMs) like MentaLLaMA, our research demonstrates that strategic feature engineering remains a highly viable path for performance enhancement. Our approach offers a set of compelling advantages in specific contexts: it is computationally more efficient, does not require massive parameter sets, and provides inherent explainability through its attention-based fusion mechanism—a feature particularly crucial for sensitive domains like mental health analysis.

Analysis of confusion matrices provided valuable insights into model behavior, revealing semantic correlations between categories and identifying challenges such as ambiguous expressions and labeling inconsistencies. These findings underscore the importance of dataset quality in emotional classification tasks.

Future research will focus on refining dataset labeling processes and exploring the application of our feature fusion framework to broader natural language processing tasks. The demonstrated combination of computational efficiency, transparent decision-making, and robust performance positions our approach as a viable pathway for real-world mental health applications where both accuracy and interpretability are paramount.