A Structure-Aware and Attention-Enhanced Explainable Learning Resource Recommendation Approach for Smart Education Within Smart Cities

Abstract

1. Introduction

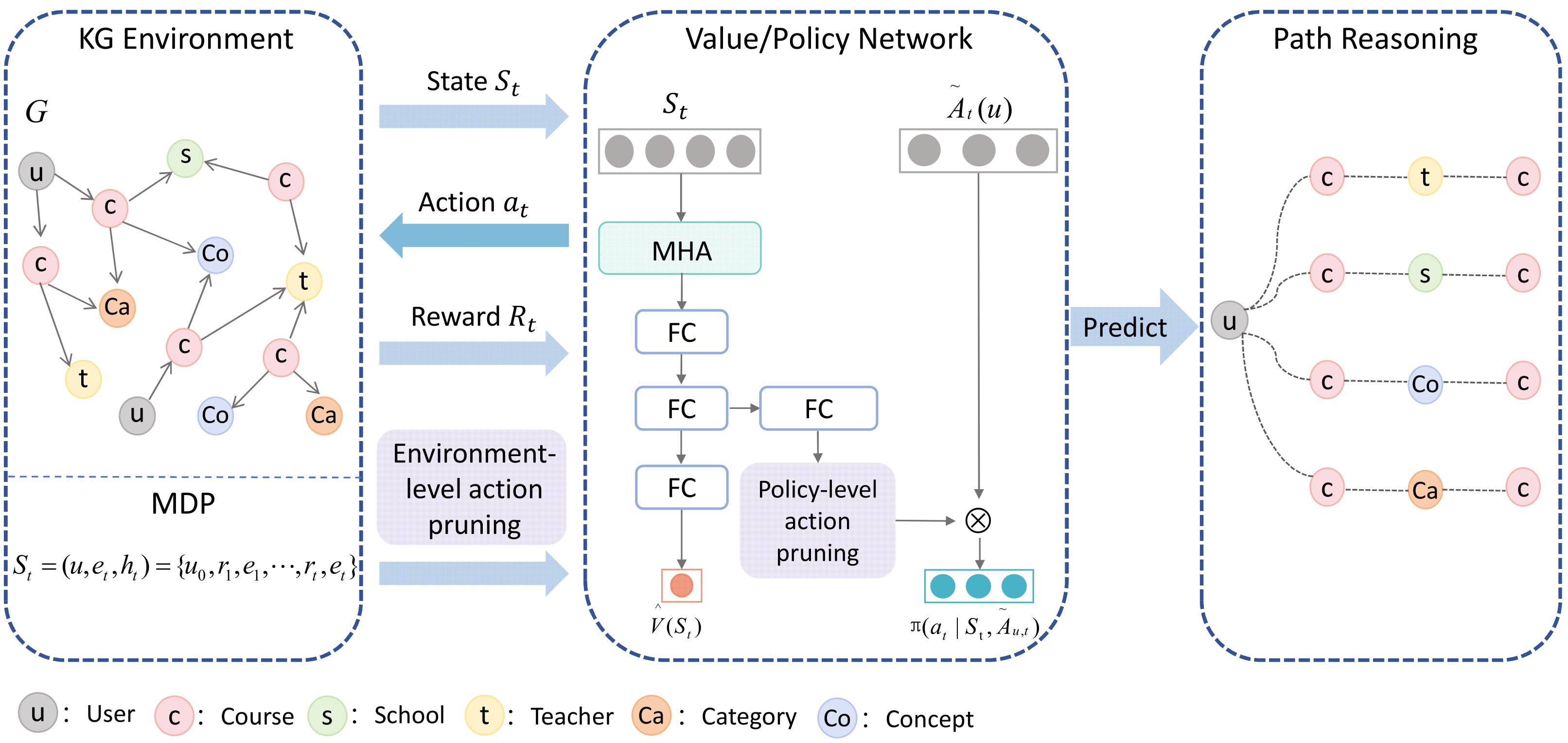

- We employ multi-head attention (MHA) to encode path states and extract critical semantic features. This strengthens the agent’s representation of complex environments and addresses the limitations of existing models in state representation.

- We design a dual-layer action pruning strategy, applied at both the environment and policy levels, to compress the action space and maintain reasoning efficiency without sacrificing recommendation quality.

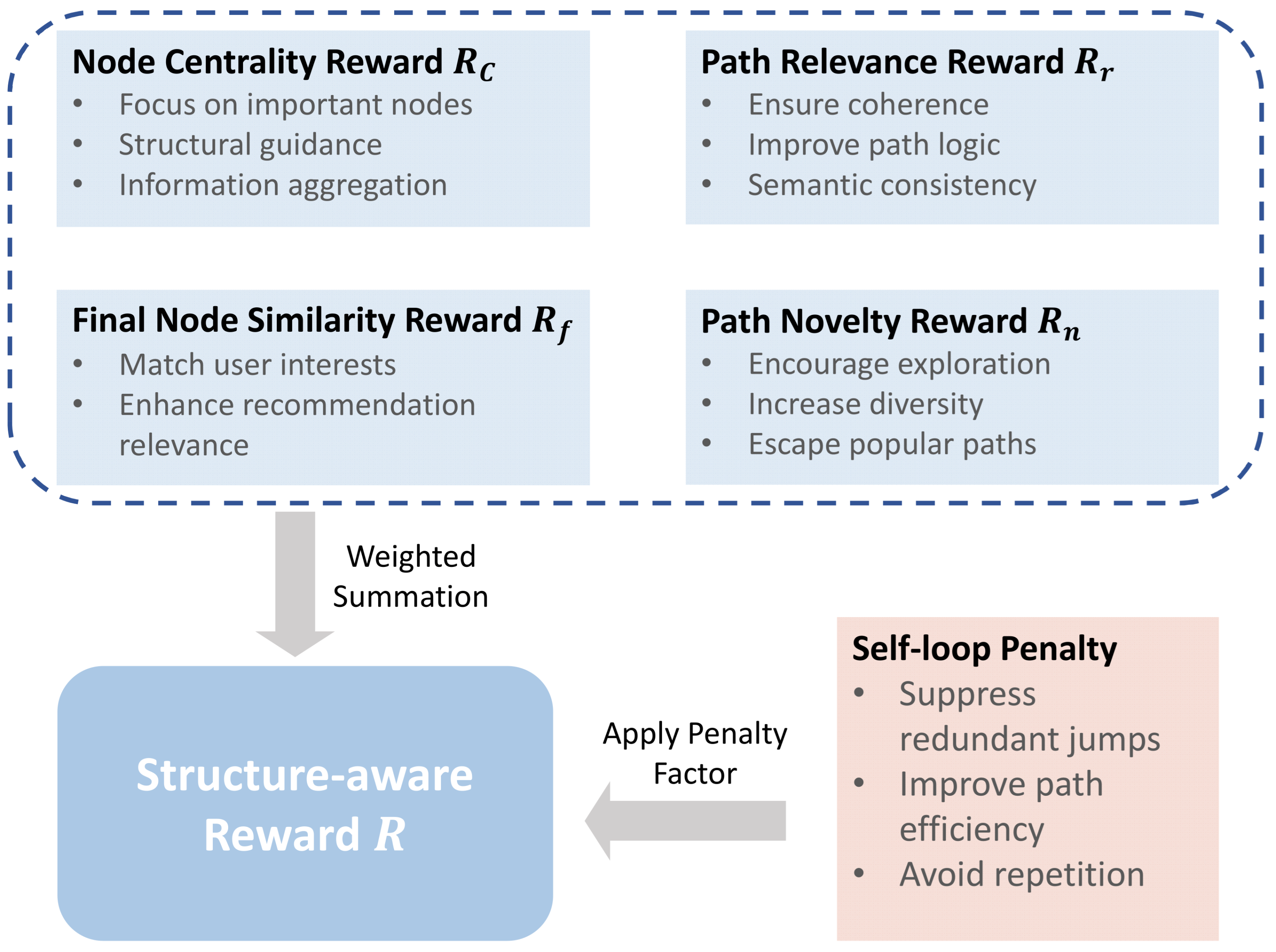

- We develop a structure-aware reward function that integrates structural and semantic information from the knowledge graph, alleviating reward sparsity and steering the agent toward logically consistent and interpretable paths.

- We conduct extensive experiments on two real-world educational datasets, COCO and MoocCube, and demonstrate that StAR outperforms several strong baselines across multiple evaluation metrics, confirming its effectiveness for learning resource recommendation in smart education scenarios.

2. Related Work

3. Methodology

- Environment construction and state initialization. The educational knowledge graph (entities and relations) defines the RL environment; each episode initializes the state from the target learner’s context.

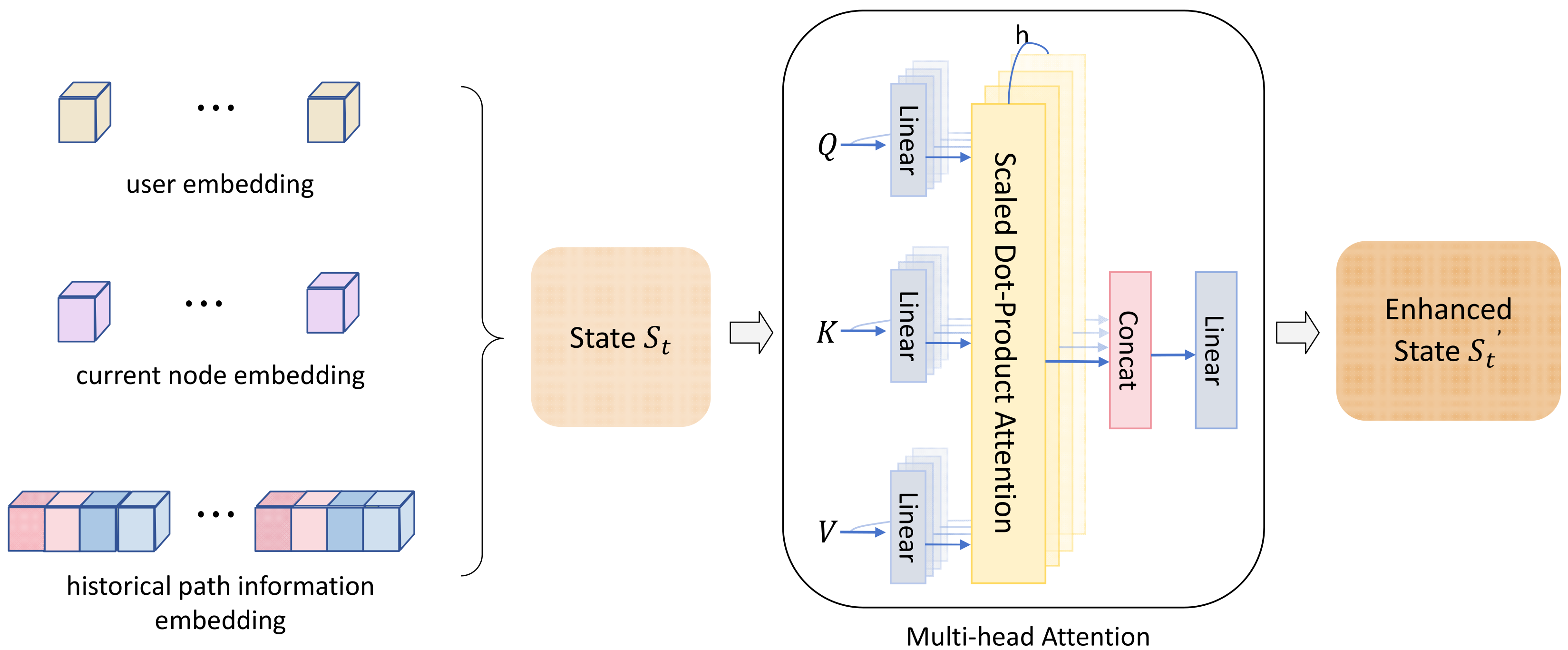

- State representation optimization. An MHA module captures long-range dependencies in the path history and highlights salient semantics, yielding a more expressive state.

- Action space pruning. To mitigate action-space explosion, we first perform environment-level pruning using TransE similarities and stochastic dropout to form an exploratory candidate set, and then apply policy-level Top-K pruning over Actor–Critic scores to obtain the executable action set.

- Reward function design and path exploration. We combine node centrality, path relevance, final-node similarity, and path novelty, plus a mild self-loop penalty, to provide dense, informative guidance for coherent path generation.

3.1. Task Definition

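- State: The state at time $t$ is represented as $s_t = (u, e_t, h_t)$, where $u$ denotes the user, $e_t$ represents the current entity, and $h_t$ encapsulates the historical context.

- Action Space: The action space at time $t$ is defined as $\mathcal{A}_t = \{(r, e) \mid (e_t, r, e) \in \mathcal{G}\}$, the set of outgoing edges of the current entity $e_t$ in the knowledge graph $\mathcal{G}$, where $r$ is a relation type and $e$ is the next entity.

- Reward: The reward function is designed to guide the agent toward high-quality path exploration.

- Policy: The policy $\pi_\theta(a_t \mid s_t)$ governs the selection of action $a_t \in \mathcal{A}_t$ given the state $s_t$.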
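As a minimal sketch of this MDP formulation, the Python code below models the state $s_t = (u, e_t, h_t)$ as a small immutable record and the action space as the outgoing (relation, entity) edges of the current entity. The adjacency-dictionary graph, the entity identifiers (`u1`, `c_python`, ...), and the helper names `actions` and `step` are hypothetical; only the relation names are borrowed from the dataset's relation table.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Action = Tuple[str, str]         # (relation r, next entity e)
Graph = Dict[str, List[Action]]  # hypothetical adjacency: entity -> outgoing edges


@dataclass(frozen=True)
class State:
    user: str                 # u: the target learner
    entity: str               # e_t: entity currently occupied by the agent
    history: Tuple[str, ...]  # h_t: entities visited before e_t, oldest first


def actions(graph: Graph, state: State) -> List[Action]:
    """Action space A_t: every (relation, entity) edge leaving e_t."""
    return list(graph.get(state.entity, []))


def step(state: State, action: Action) -> State:
    """Deterministic transition: move to the chosen entity and extend the history."""
    _, next_entity = action
    return State(state.user, next_entity, state.history + (state.entity,))


# Toy usage on a hypothetical slice of an educational knowledge graph.
graph: Graph = {
    "u1": [("Enrolled", "c_python")],
    "c_python": [("Contain", "k_loops"), ("Belong_to", "cat_cs")],
}
s0 = State(user="u1", entity="u1", history=())
s1 = step(s0, actions(graph, s0)[0])
print(s1.entity, s1.history, actions(graph, s1))
```

Visited entities are deliberately not filtered in `actions`; in the method described here, that filtering belongs to environment-level pruning.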

3.2. State Representation Optimization Based on Multi-Head Attention
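As outlined in the methodology overview, an MHA module encodes the path history into the agent's state. The following is a minimal, dependency-free sketch of that idea under stated assumptions: the path tokens are the user embedding followed by the embeddings of the visited entities, each head applies standard scaled dot-product attention, and the outputs are mean-pooled into one state vector. The class name `MultiHeadStateEncoder`, the random (untrained) projection matrices, and the pooling choice are illustrative assumptions, not the paper's exact architecture.

```python
import math
import random
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product (rows of a times columns of b)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class MultiHeadStateEncoder:
    """Self-attention over path tokens [u, e_0, ..., e_t], mean-pooled to one vector."""

    def __init__(self, dim: int, heads: int, seed: int = 0) -> None:
        if dim % heads != 0:
            raise ValueError("dim must be divisible by heads")
        rng = random.Random(seed)
        self.heads, self.d_head = heads, dim // heads
        # Untrained random projections W_Q, W_K, W_V, W_O (learned in a real model).
        self.wq, self.wk, self.wv, self.wo = (
            [[rng.gauss(0.0, 1.0 / math.sqrt(dim)) for _ in range(dim)] for _ in range(dim)]
            for _ in range(4)
        )

    def encode(self, tokens: Matrix) -> List[float]:
        q, k, v = matmul(tokens, self.wq), matmul(tokens, self.wk), matmul(tokens, self.wv)
        concat: Matrix = [[] for _ in tokens]  # per-token concatenation of head outputs
        for h in range(self.heads):
            lo, hi = h * self.d_head, (h + 1) * self.d_head
            for i, qi in enumerate(q):
                # Scaled dot-product attention of token i over all tokens, head h only.
                scores = [
                    sum(a * b for a, b in zip(qi[lo:hi], kj[lo:hi])) / math.sqrt(self.d_head)
                    for kj in k
                ]
                weights = softmax(scores)
                concat[i].extend(sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(lo, hi))
        out = matmul(concat, self.wo)
        # Mean-pool over tokens to obtain a fixed-size state vector.
        return [sum(col) / len(out) for col in zip(*out)]


rng = random.Random(42)
path_tokens = [[rng.uniform(-1.0, 1.0) for _ in range(8)] for _ in range(4)]  # u, e_0, e_1, e_2
encoder = MultiHeadStateEncoder(dim=8, heads=2)
state = encoder.encode(path_tokens)
print(len(state))
```

Because this sketch adds no positional encoding and mean-pools the outputs, it is invariant to the order of the path tokens (reversing the path yields the same state); adding positional encodings before attention is one common way to make the state order-aware.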

3.3. Dual-Layer Action Pruning Strategy

- Environment-level action pruning: The goal of environment-level pruning is to reduce the action space preliminarily and improve the efficiency of path search. First, previously visited nodes are removed to prevent path loops and redundant transitions. Next, node embeddings learned with the TransE model are used to compute the semantic similarity $\mathrm{sim}(\mathbf{e}_i, \mathbf{e}_j)$ between the current node $i$ and each candidate node $j$, where $\mathbf{e}_i$ and $\mathbf{e}_j$ represent the embedding vectors of node $i$ and node $j$, respectively. Finally, the top-$N$ nodes are selected by similarity score, and a Dropout mechanism randomly samples from them, forming a semantically relevant and exploratory initial action set $\tilde{\mathcal{A}}_t$.

- Policy-level action pruning: Policy-level pruning further sharpens action selection. An Actor-Critic policy network scores every action in the initial candidate set $\tilde{\mathcal{A}}_t$, and the scores are normalized with a softmax:
$$\pi_\theta(a \mid s_t) = \frac{\exp\left(f_\theta(s_t, a)\right)}{\sum_{a' \in \tilde{\mathcal{A}}_t} \exp\left(f_\theta(s_t, a')\right)},$$
where $s_t$ represents the current state, $f_\theta$ denotes the policy network (Actor) that computes the state-action score function, and $\pi_\theta(a \mid s_t)$ indicates the probability of selecting action $a$ under state $s_t$. Finally, the top-$K$ actions with the highest probabilities form the final executable action set $\hat{\mathcal{A}}_t$, reducing interference from low-value actions.

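| Algorithm 1 Pseudocode of the dual-layer action pruning strategy. | |
|---|---|
| Require: User embedding $u$, current state $s_t$, current node $e_t$, candidate action set $\mathcal{A}_t$, historical path $h_t$, entity embedding $\mathbf{e}$, pruning threshold $N$, dropout probability $p$, policy network $f_\theta$, number of actions to retain $K$ | |
| Ensure: Final executable action set $\hat{\mathcal{A}}_t$ | |
| // Environment Layer Pruning | |
| 1: $\tilde{\mathcal{A}}_t \leftarrow \emptyset$ | |
| 2: $S \leftarrow [\,]$ | ▹ Temporary list |
| 3: for $(r, e) \in \mathcal{A}_t$ do | |
| 4: if $e \in h_t$ then | |
| 5: continue | ▹ Remove historical nodes |
| 6: end if | |
| 7: $\sigma \leftarrow \mathrm{sim}(\mathbf{e}_{e_t}, \mathbf{e}_{e})$ | ▹ Compute semantic similarity |
| 8: append $((r, e), \sigma)$ to $S$ | |
| 9: end for | |
| 10: $S_N \leftarrow$ top-$N$ entries of $S$ by $\sigma$ | |
| 11: for $(r, e) \in S_N$ do | |
| 12: if rand() $> p$ then | |
| 13: $\tilde{\mathcal{A}}_t \leftarrow \tilde{\mathcal{A}}_t \cup \{(r, e)\}$ | ▹ Randomly retain to form preliminary action set |
| 14: end if | |
| 15: end for | |
| // Policy Layer Pruning | |
| 16: $P \leftarrow [\,]$ | ▹ Action score list |
| 17: for $a \in \tilde{\mathcal{A}}_t$ do | |
| 18: $z_a \leftarrow f_\theta(s_t, a)$ | ▹ Policy network scoring |
| 19: append $z_a$ to $P$ | |
| 20: end for | |
| 21: Apply softmax normalization to scores in $P$, obtaining $\pi_\theta(a \mid s_t)$ | |
| 22: $\hat{\mathcal{A}}_t \leftarrow$ top-$K$ actions of $\tilde{\mathcal{A}}_t$ by $\pi_\theta(a \mid s_t)$ | |
| 23: return $\hat{\mathcal{A}}_t$ | |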
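Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: cosine similarity stands in for the TransE-based semantic similarity (the exact similarity function is an assumption), `score_fn` is a hypothetical stand-in for the Actor's state-action scoring network, and the toy embeddings and entity names are invented for the example.

```python
import math
import random
from typing import Callable, Dict, List, Sequence, Tuple

Action = Tuple[str, str]  # (relation, next entity)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def environment_prune(current: str, candidates: List[Action], history: Sequence[str],
                      emb: Dict[str, Sequence[float]], top_n: int, drop_p: float,
                      rng: random.Random) -> List[Action]:
    """Steps 1-15: drop visited entities, keep top-N by similarity, then random dropout."""
    visited = set(history)
    scored = [(cosine(emb[current], emb[e]), (r, e)) for r, e in candidates if e not in visited]
    scored.sort(key=lambda item: item[0], reverse=True)
    # Each of the top-N actions survives with probability 1 - drop_p (rand() > p).
    return [action for _, action in scored[:top_n] if rng.random() > drop_p]


def policy_prune(state: Sequence[float], actions: List[Action],
                 score_fn: Callable[[Sequence[float], Action], float],
                 k: int) -> List[Tuple[Action, float]]:
    """Steps 16-23: score actions, softmax-normalize, keep the top-K by probability."""
    if not actions:
        return []
    logits = [score_fn(state, a) for a in actions]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    ranked = sorted(zip(actions, (e / total for e in exps)), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Toy example with hypothetical 2-D embeddings.
emb = {"c1": [1.0, 0.0], "k1": [0.9, 0.1], "k2": [0.0, 1.0], "k3": [0.7, 0.7], "c0": [1.0, 0.2]}
candidates = [("Contain", "k1"), ("Contain", "k2"), ("Contain", "k3"), ("Enrolled", "c0")]
preliminary = environment_prune("c1", candidates, history=["c0"], emb=emb,
                                top_n=2, drop_p=0.0, rng=random.Random(0))


def dot_score(state: Sequence[float], action: Action) -> float:
    # Hypothetical scorer: dot product of the state with the next entity's embedding.
    return sum(x * y for x, y in zip(state, emb[action[1]]))


final = policy_prune([1.0, 0.0], preliminary, dot_score, k=1)
print(preliminary, final)
```

Because the softmax is monotonic, keeping the top-K actions by probability selects the same actions as keeping the top-K by raw score; the normalization matters when the probabilities are also used for sampling or in the policy-gradient loss.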

3.4. Design of Structure-Aware Reward Function
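The reward components listed in the methodology overview (node centrality, path relevance, final-node similarity, path novelty, and a mild self-loop penalty) can be combined as a weighted sum. The sketch below is a hypothetical instantiation: the concrete definition of each term (normalized degree for centrality, mean cosine to the user embedding for relevance, distinct-node ratio for novelty), the uniform weights, and the penalty value 0.1 are assumptions chosen for illustration, not the formulas used by StAR.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def structure_aware_reward(path: List[str], user_vec: Sequence[float],
                           target_vec: Sequence[float], emb: Dict[str, Sequence[float]],
                           degree: Dict[str, int], weights: Dict[str, float],
                           loop_penalty: float = 0.1) -> float:
    """Hypothetical weighted combination of the four structural/semantic terms."""
    max_deg = max(degree.values())
    centrality = sum(degree[n] / max_deg for n in path) / len(path)      # hub-ness of visited nodes
    relevance = sum(cosine(user_vec, emb[n]) for n in path) / len(path)  # semantic fit to the learner
    final_sim = cosine(emb[path[-1]], target_vec)                        # endpoint vs. target
    novelty = len(set(path)) / len(path)                                 # share of distinct nodes
    self_loops = sum(1 for a, b in zip(path, path[1:]) if a == b)       # repeated consecutive nodes
    return (weights["centrality"] * centrality + weights["relevance"] * relevance
            + weights["final_sim"] * final_sim + weights["novelty"] * novelty
            - loop_penalty * self_loops)


def stepwise_rewards(path: List[str], **kwargs) -> List[float]:
    """Dense feedback: score every prefix of the path, not only the finished path."""
    return [structure_aware_reward(path[: t + 1], **kwargs) for t in range(len(path))]


uniform = {"centrality": 0.25, "relevance": 0.25, "final_sim": 0.25, "novelty": 0.25}
emb = {"c1": [1.0, 0.0], "k1": [0.0, 1.0], "c2": [1.0, 1.0]}
ctx = dict(user_vec=[1.0, 0.0], target_vec=[1.0, 1.0], emb=emb,
           degree={"c1": 4, "k1": 2, "c2": 4}, weights=uniform)
print(structure_aware_reward(["c1", "k1", "c2"], **ctx))
print(structure_aware_reward(["c1", "c1", "c2"], **ctx))
print(stepwise_rewards(["c1", "k1", "c2"], **ctx))
```

Scoring every prefix is one simple way to turn a terminal signal into stepwise feedback, and the weight dictionary is the natural knob for a weight study such as the one in the parameter analysis (uniform weights versus other configurations).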

4. Experiments

4.1. Datasets and Experimental Settings

4.2. Performance Evaluation Metrics
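The four Top-10 metrics reported in the result tables (NDCG, Recall, HR, and Precision) are commonly computed per user with binary relevance and then averaged over users. The sketch below uses those textbook definitions; whether the StAR evaluation uses exactly these variants (for example, the NDCG normalization or how users without test items are handled) is an assumption, and the course IDs are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set


def metrics_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 10) -> Dict[str, float]:
    """Binary-relevance NDCG@k, Recall@k, HR@k, and Precision@k for a single user."""
    hits = [1.0 if item in relevant else 0.0 for item in ranked[:k]]
    dcg = sum(h / math.log2(rank + 2) for rank, h in enumerate(hits))
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(min(len(relevant), k)))
    n_hits = sum(hits)
    return {
        "ndcg": dcg / idcg if idcg else 0.0,
        "recall": n_hits / len(relevant) if relevant else 0.0,
        "hr": 1.0 if n_hits > 0 else 0.0,
        "precision": n_hits / k,
    }


def average_percent(per_user: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each metric over users and report it in percent, as in the tables."""
    return {name: 100.0 * sum(m[name] for m in per_user) / len(per_user) for name in per_user[0]}


user_a = metrics_at_k(["c3", "c1", "c7"], {"c1", "c9"}, k=3)
user_b = metrics_at_k(["c2", "c4", "c5"], {"c8"}, k=3)
print(user_a)
print(average_percent([user_a, user_b]))
```

HR only asks whether any relevant item appears in the top K, so it is always at least as large as Precision and is usually the largest of the four numbers, which matches the ordering seen in the result tables.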

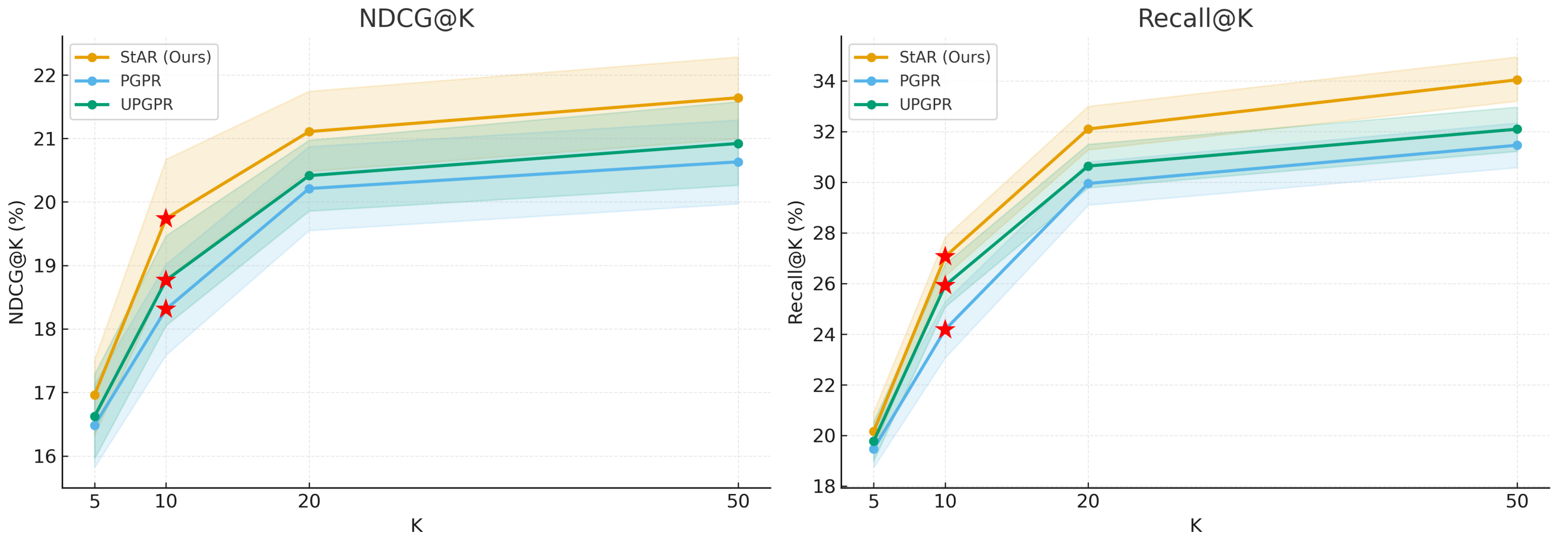

4.3. Results and Analysis

4.4. Ablation Experiment

- Effectiveness of MHA: To evaluate MHA’s effect on state modeling, we conduct an ablation in which MHA is replaced with simple feature concatenation. As shown in Table 5, removing MHA lowers all evaluation metrics on both the COCO and MoocCube datasets, indicating that MHA plays a key role in capturing both local and global dependencies between path nodes and in emphasizing critical information. Compared with the concatenation approach, MHA suppresses noise and sharpens the state representation, thereby improving the action discrimination capability of the policy network. In summary, MHA substantially strengthens state representation and is one of the key modules behind the improved path reasoning quality and recommendation performance of this method. We also examine the MHA module independently by replacing the original MLP encoder in the baseline PGPR framework with MHA, while keeping all other training configurations unchanged, to assess its standalone contribution to model performance. As shown in Table 6, the MHA-enhanced variant achieves consistent gains across all four metrics on both COCO and MoocCube. For example, NDCG improves from 9.13 to 9.74 on COCO and from 18.31 to 19.11 on MoocCube, with similar improvements in Recall, HR, and Precision. These results indicate that MHA captures longer-range semantic dependencies along multi-hop paths and yields more expressive state representations, enabling more accurate policy decisions during graph reasoning.

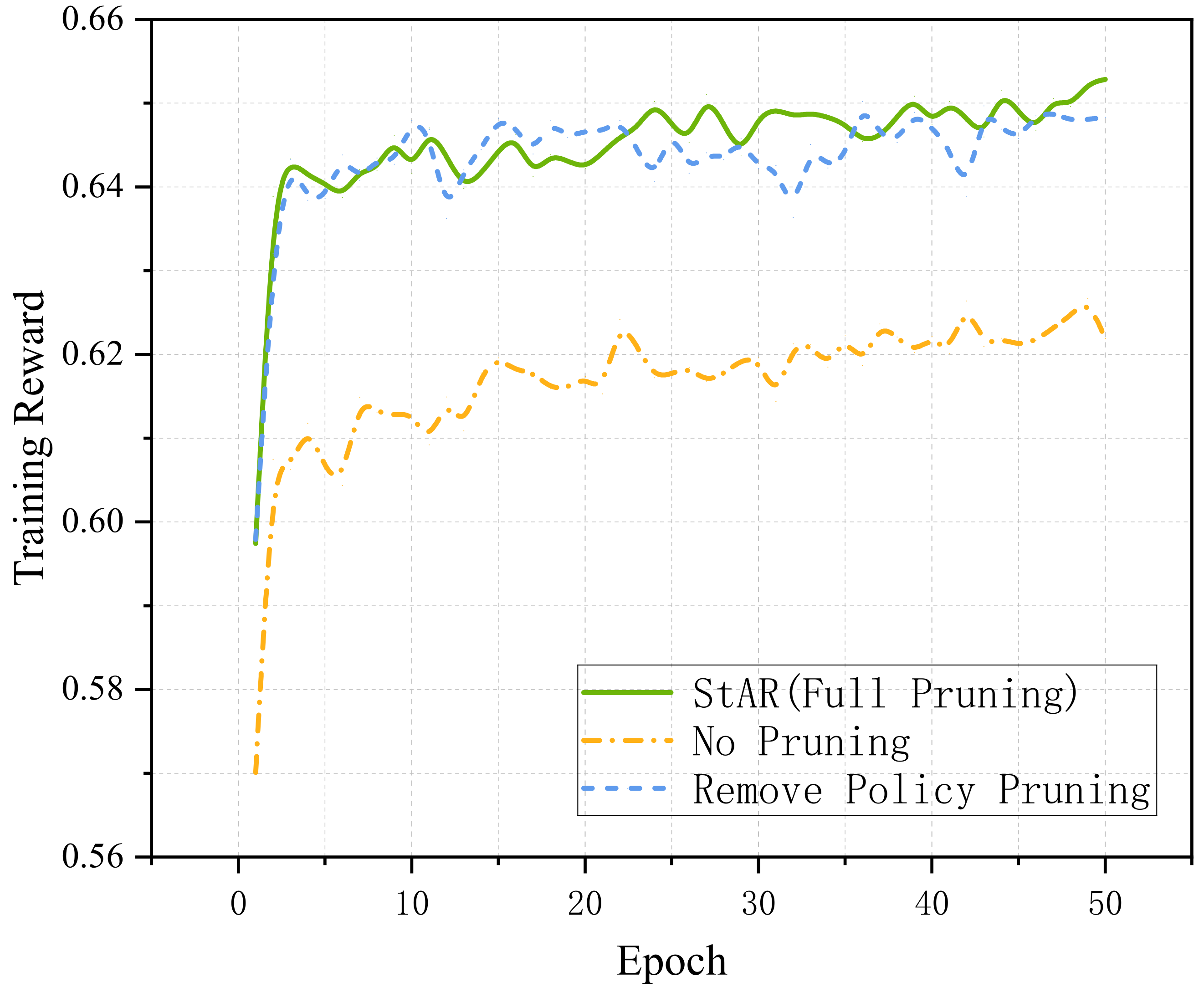

- Effectiveness of Dual-Layer Action Pruning: To systematically evaluate the contribution of the dual-layer action pruning strategy to model performance, we design a series of ablation experiments. In the first (−P_A_P), the policy-layer Top-K pruning is removed and only the environment-layer pruning is retained; this isolates the role of the policy layer in sharpening action discrimination, while the environment layer still keeps the action space small enough to avoid the training instability caused by an excessively large action space. As shown in Table 5, removing the policy-layer pruning lowers all evaluation metrics on both the COCO and MoocCube datasets (e.g., NDCG drops from 10.92 to 9.87 on COCO and from 19.74 to 19.16 on MoocCube), and training convergence becomes noticeably slower. These results indicate that policy-layer pruning enhances the discriminative capability of action selection after coarse filtering by eliminating low-value actions, thereby improving path reasoning efficiency and recommendation accuracy. To further verify the necessity of environment-layer pruning, we conduct a near “no-pruning” experiment (−All_P), in which both max_acts and K are set to 1000 to preserve as many candidate actions as possible. The results on the MoocCube dataset are shown in Figure 5: training time increases markedly, convergence slows down, and recommendation performance deteriorates further, indicating that environment-layer pruning plays a vital role in early-stage action filtering.
Taken together, these experiments demonstrate that the dual-layer pruning strategy, through its “coarse-to-fine” collaborative filtering mechanism, balances action space reduction against recommendation quality and is a key design component for model efficiency and performance. We also examine the dual-layer action pruning (DAP) module independently by integrating both pruning layers into the baseline PGPR framework, forming the complete DAP configuration, to assess its standalone contribution to overall model performance. As shown in Table 7, the enhanced variant (+StAR/DAP) outperforms the baseline PGPR on all four Top-10 metrics on MoocCube (e.g., NDCG rises from 18.31 to 18.88) and improves NDCG on COCO (from 9.13 to 9.33), while Recall, HR, and Precision on COCO remain close to the baseline (13.90 vs. 14.12, 23.86 vs. 24.11, and 2.63 vs. 2.64, respectively). By pruning actions at both the policy-logit level and the environment search stage, DAP suppresses low-value or semantically irrelevant transitions while preserving high-quality candidates. This structured reduction of the action space facilitates more focused exploration and improves reasoning efficiency, with the accuracy gains most evident on MoocCube.

- Effectiveness of the Structure-Aware Reward: To validate the impact of the structure-aware, knowledge-based reward function on recommendation performance, we design a corresponding ablation experiment in which the reward is computed solely from the similarity between the final node and the target node, so that node centrality, path relevance, and path novelty are no longer combined with the final-node similarity. As shown in Table 5, removing the structure-aware reward function lowers all evaluation metrics on both the COCO and MoocCube datasets, indicating that the reward function plays a critical role in guiding the agent to explore effective paths. Compared with traditional endpoint similarity-based rewards, the proposed structure-aware mechanism provides finer-grained, semantically rich feedback throughout path generation, enhancing the logical coherence and personalization of the paths. The structure-aware reward not only mitigates the sparse-reward problem in reinforcement learning-based path reasoning but also supports both the interpretability and the performance of the recommendations, making it a key contributor to the overall performance of the proposed method. We further isolate the contribution of the structure-aware reward (SR) by replacing the cosine reward in the baseline PGPR with SR, while keeping all other training and evaluation settings identical. As shown in Table 8, the enhanced variant (+StAR/SR) outperforms PGPR on all four Top-10 metrics (NDCG, Recall, HR, and Precision) on both COCO and MoocCube; for example, NDCG rises from 9.13 to 10.32 on COCO and from 18.31 to 19.51 on MoocCube. Compared with the sparse terminal rewards in PGPR, SR introduces denser and more informative feedback by integrating path relevance, node centrality, final-node similarity, and path novelty.
This design enables the agent to pursue structurally consistent reasoning paths and facilitates more stable and effective policy learning. To quantitatively verify that the proposed structure-aware reward alleviates the sparse-reward issue, we define the non-zero reward ratio per episode ($R_{\mathrm{nz}}$) as follows:
$$R_{\mathrm{nz}} = \frac{1}{N}\sum_{i=1}^{N} \mathbb{I}\left(\sum_{t=1}^{T_i} \mathbb{I}\left(r_t^{(i)} \neq 0\right) > 0\right),$$
where $N$ denotes the total number of episodes, $T_i$ is the length of the $i$-th episode, $r_t^{(i)}$ is the reward at step $t$ of episode $i$, and $\mathbb{I}(\cdot)$ is the indicator function. This metric measures the proportion of reasoning trajectories that receive at least one non-zero reward signal, reflecting the density of the reward feedback. As shown in Table 9, on the MoocCube dataset StAR reaches a ratio of 0.998, compared with 0.462 for PGPR and 0.225 for UPGPR, indicating substantially denser reward feedback. This finding suggests that the structure-aware reward turns the sparse terminal reward into stepwise feedback, facilitating smoother credit propagation and more stable policy optimization.
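The non-zero reward ratio can be computed from logged per-step rewards as sketched below, following the stated definition (the share of episodes whose reward trace contains at least one non-zero value). The function name and the reward traces are hypothetical toy values, not results from the paper.

```python
from typing import Sequence


def nonzero_reward_ratio(episodes: Sequence[Sequence[float]]) -> float:
    """Share of episodes whose reward trace r_1..r_T contains at least one non-zero value."""
    if not episodes:
        raise ValueError("at least one episode is required")
    hits = sum(1 for rewards in episodes if any(r != 0.0 for r in rewards))
    return hits / len(episodes)


# Hypothetical traces for 3-step episodes.
terminal_binary = [[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
stepwise_dense = [[0.2, 0.1, 0.5], [0.1, 0.0, 0.3], [0.4, 0.2, 0.0]]
print(nonzero_reward_ratio(terminal_binary))  # 0.5
print(nonzero_reward_ratio(stepwise_dense))   # 1.0
```

Because a single non-zero step is enough for an episode to count, this ratio captures how often the agent receives any feedback at all; averaging the per-step share of non-zero rewards instead would measure density within each episode.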

4.5. Parameter Analysis

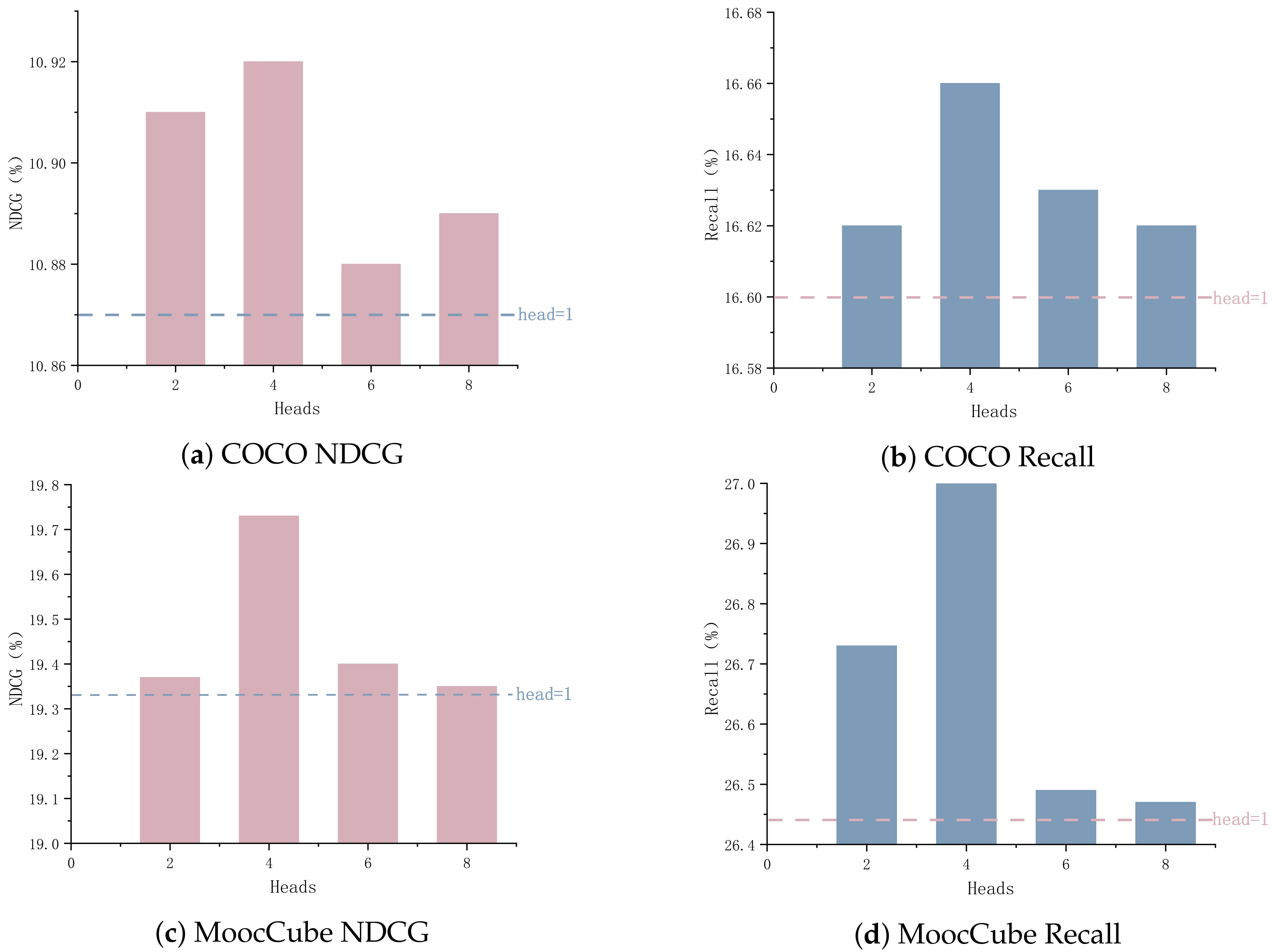

4.5.1. Impact of the Number of Attention Heads

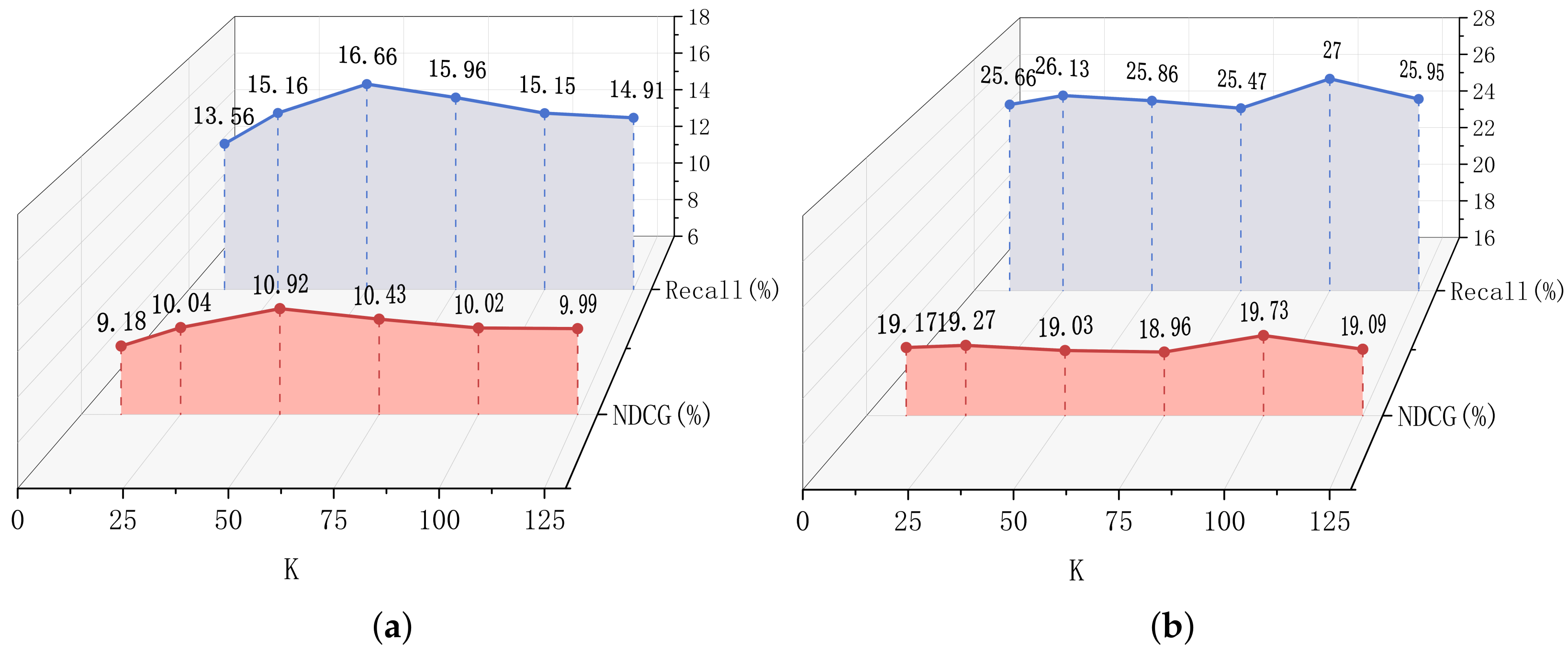

4.5.2. Impact of Top-K Pruning in Policy Layer

4.5.3. Impact of Reward Function Weight

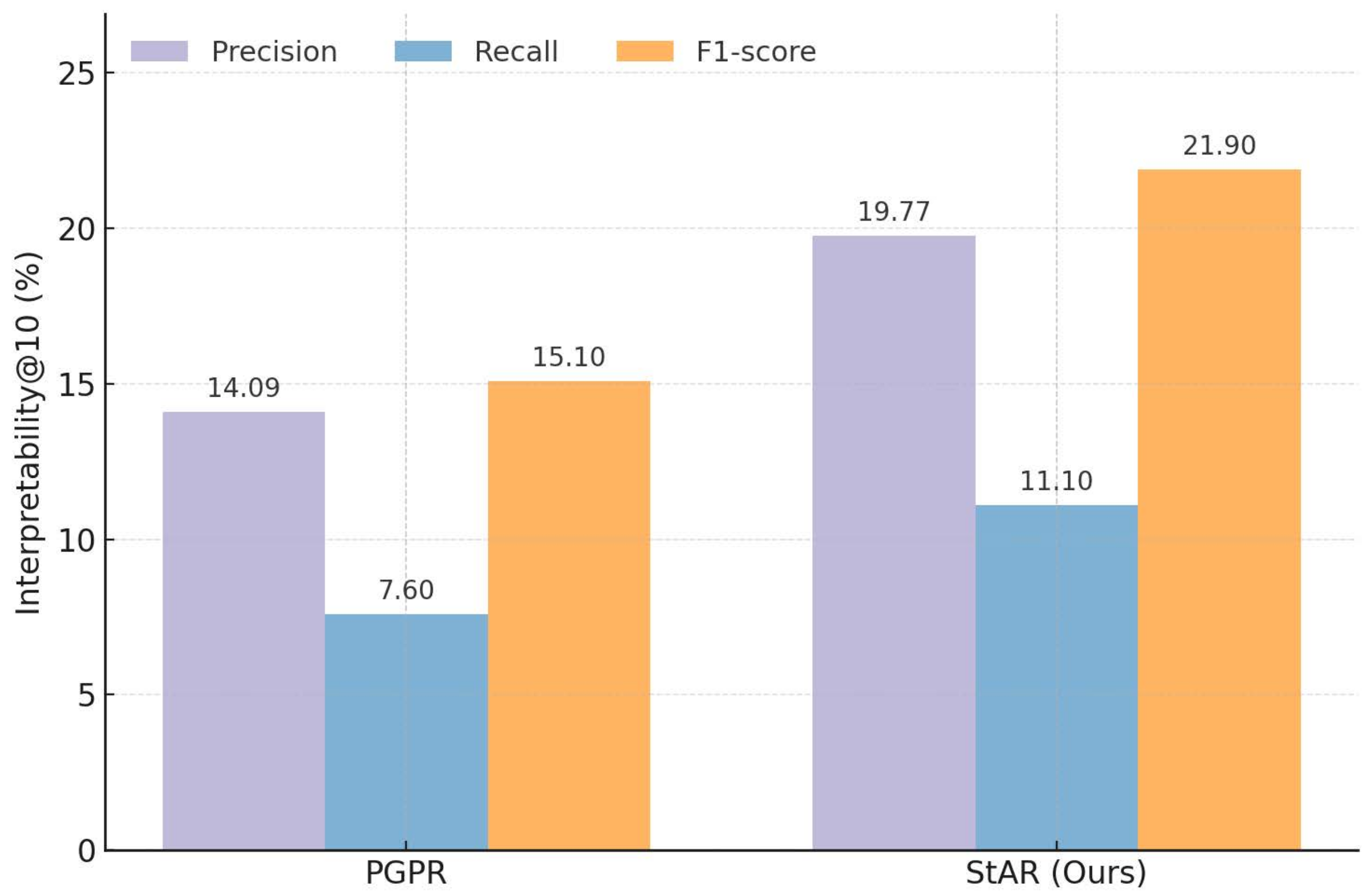

4.6. Interpretability Evaluation

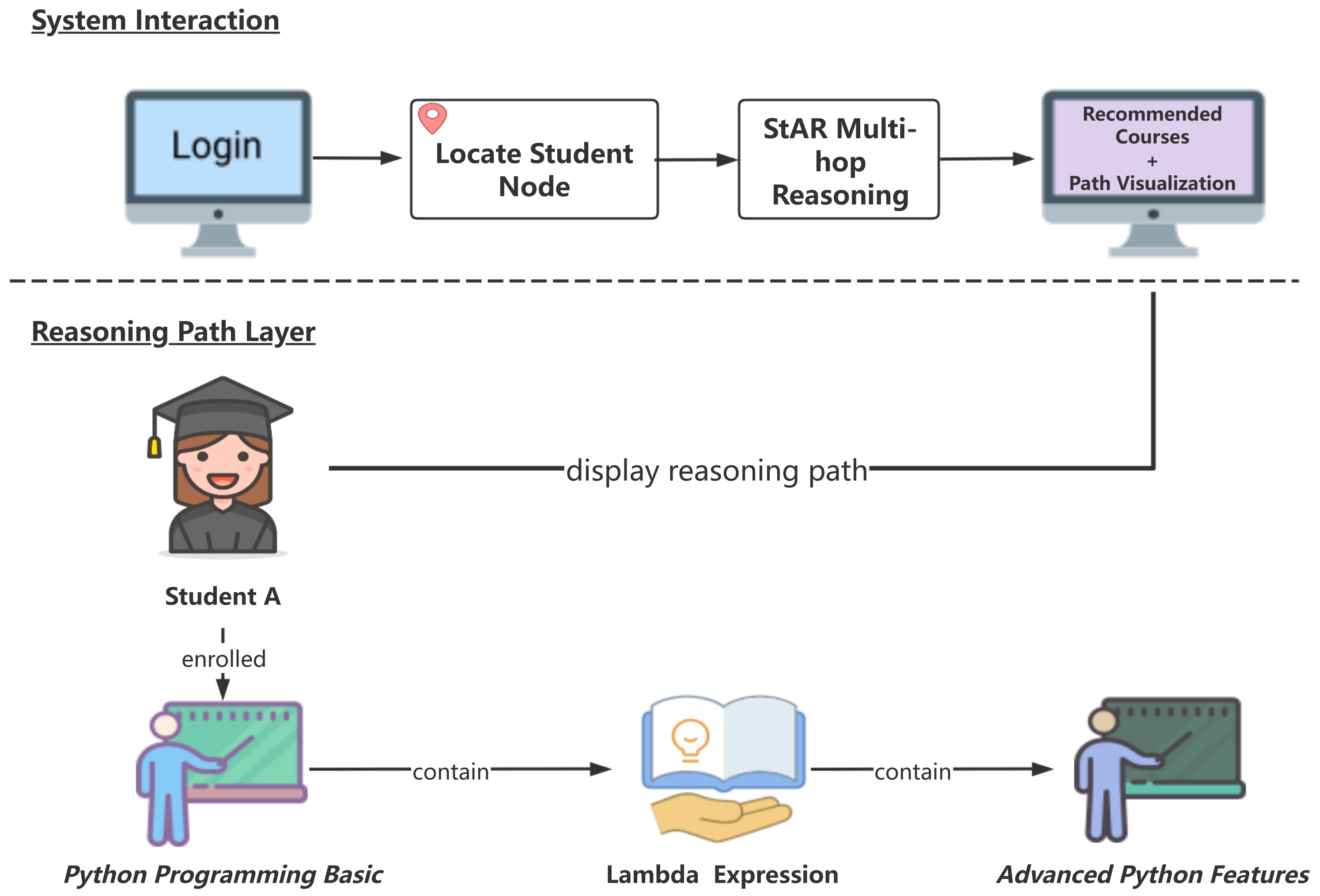

4.7. Case Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Kruhlov, V.; Dvorak, J. Social Inclusivity in the Smart City Governance: Overcoming the Digital Divide. Sustainability 2025, 17, 5735.

- Jiang, S.; Song, H.; Lu, Y.; Zhang, Z. News Recommendation Method Based on Candidate-Aware Long-and Short-Term Preference Modeling. Appl. Sci. 2024, 15, 300.

- Raj, N.S.; Renumol, V. An improved adaptive learning path recommendation model driven by real-time learning analytics. J. Comput. Educ. 2024, 11, 121–148.

- Chen, G.; Chen, P.; Wang, Y.; Zhu, N. Research on the development of an effective mechanism of using public online education resource platform: TOE model combined with FS-QCA. Interact. Learn. Environ. 2024, 32, 6096–6120.

- Maimaitijiang, E.; Aihaiti, M.; Mamatjan, Y. An Explainable AI Framework for Online Diabetes Risk Prediction with a Personalized Chatbot Assistant. Electronics 2025, 14, 3738.

- Koren, Y.; Rendle, S.; Bell, R. Advances in collaborative filtering. In Recommender Systems Handbook; Springer: New York, NY, USA, 2021; pp. 91–142.

- Son, J.; Kim, S.B. Content-based filtering for recommendation systems using multiattribute networks. Expert Syst. Appl. 2017, 89, 404–412.

- Quadrana, M.; Cremonesi, P.; Jannach, D. Sequence-aware recommender systems. ACM Comput. Surv. 2018, 51, 1–36.

- Peng, C.; Xia, F.; Naseriparsa, M.; Osborne, F. Knowledge graphs: Opportunities and challenges. Artif. Intell. Rev. 2023, 56, 13071–13102.

- Xian, Y.; Fu, Z.; Muthukrishnan, S.; De Melo, G.; Zhang, Y. Reinforcement knowledge graph reasoning for explainable recommendation. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 285–294.

- Frej, J.; Shah, N.; Knezevic, M.; Nazaretsky, T.; Käser, T. Finding paths for explainable MOOC recommendation: A learner perspective. In Proceedings of the 14th Learning Analytics and Knowledge Conference, Kyoto, Japan, 18–22 March 2024; pp. 426–437.

- Afsar, M.M.; Crump, T.; Far, B. Reinforcement learning based recommender systems: A survey. ACM Comput. Surv. 2022, 55, 1–38.

- Liu, H.; Cai, K.; Li, P.; Qian, C.; Zhao, P.; Wu, X. REDRL: A review-enhanced Deep Reinforcement Learning model for interactive recommendation. Expert Syst. Appl. 2023, 213, 118926.

- Riedmiller, M.; Hafner, R.; Lampe, T.; Neunert, M.; Degrave, J.; Wiele, T.; Mnih, V.; Heess, N.; Springenberg, J.T. Learning by playing solving sparse reward tasks from scratch. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4344–4353.

- Ma, A.; Yu, Y.; Shi, C.; Zhen, S.; Pang, L.; Chua, T.-S. PMHR: Path-Based Multi-Hop Reasoning Incorporating Rule-Enhanced Reinforcement Learning and KG Embeddings. Electronics 2024, 13, 4847.

- Li, Y.; Lu, L.; Li, X. A hybrid collaborative filtering method for multiple-interests and multiple-content recommendation in E-Commerce. Expert Syst. Appl. 2005, 28, 67–77.

- Deldjoo, Y.; Schedl, M.; Cremonesi, P.; Pasi, G. Recommender systems leveraging multimedia content. ACM Comput. Surv. 2020, 53, 1–38.

- Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Item-based collaborative filtering recommendation algorithms. In Proceedings of the 10th International Conference on World Wide Web, Hong Kong, China, 1–5 May 2001; pp. 285–295.

- Pazzani, M.J.; Billsus, D. Content-based recommendation systems. In The Adaptive Web: Methods and Strategies of Web Personalization; Springer: Berlin/Heidelberg, Germany, 2007; pp. 325–341.

- Sinha, R.; Swearingen, K. The role of transparency in recommender systems. In Proceedings of the CHI’02 Extended Abstracts on Human Factors in Computing Systems, Minneapolis, MN, USA, 20–25 April 2002; pp. 830–831.

- Rendle, S.; Freudenthaler, C.; Schmidt-Thieme, L. Factorizing personalized Markov chains for next-basket recommendation. In Proceedings of the 19th International Conference on World Wide Web, Raleigh, NC, USA, 26–30 April 2010; pp. 811–820.

- Bordes, A.; Usunier, N.; Garcia-Durán, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. In Proceedings of the 27th International Conference on Neural Information Processing Systems (NIPS’13), Lake Tahoe, NV, USA, 5–10 December 2013; Curran Associates Inc.: Red Hook, NY, USA, 2013; pp. 2787–2795.

- Zhang, F.; Yuan, N.J.; Lian, D.; Xie, X.; Ma, W.-Y. Collaborative knowledge base embedding for recommender systems. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 353–362.

- Wang, X.; He, X.; Cao, Y.; Liu, M.; Chua, T.-S. KGAT: Knowledge graph attention network for recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 950–958.

- Wang, X.; Wang, D.; Xu, C.; He, X.; Cao, Y.; Chua, T.-S. Explainable reasoning over knowledge graphs for recommendation. Proc. AAAI Conf. Artif. Intell. 2019, 33, 5329–5336.

- Garcia, F.; Rachelson, E. Markov decision processes. In Markov Decision Processes in Artificial Intelligence; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2013; pp. 1–38.

- Gao, H.; Ma, B. Online Learning Strategy Induction through Partially Observable Markov Decision Process-Based Cognitive Experience Model. Electronics 2024, 13, 3858.

- Zhu, A.; Ouyang, D.; Liang, S.; Shao, J. Step by step: A hierarchical framework for multi-hop knowledge graph reasoning with reinforcement learning. Knowl.-Based Syst. 2022, 248, 108843.

- Zheng, S.; Chen, W.; Wang, W.; Zhao, P.; Yin, H.; Zhao, L. Multi-hop knowledge graph reasoning in few-shot scenarios. IEEE Trans. Knowl. Data Eng. 2023, 36, 1713–1727.

- Tao, S.; Qiu, R.; Ping, Y.; Ma, H. Multi-modal knowledge-aware reinforcement learning network for explainable recommendation. Knowl.-Based Syst. 2021, 227, 107217.

- Su, J.; Huang, J.; Adams, S.; Chang, Q.; Beling, P.A. Deep multi-agent reinforcement learning for multi-level preventive maintenance in manufacturing systems. Expert Syst. Appl. 2022, 192, 116323.

- Lin, Y.; Liu, Y.; Lin, F.; Zou, L.; Wu, P.; Zeng, W.; Chen, H.; Miao, C. A survey on reinforcement learning for recommender systems. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 13164–13184.

- Mu, R.; Marcolino, L.S.; Zhang, Y.; Zhang, T.; Huang, X.; Ruan, W. Reward certification for policy smoothed reinforcement learning. Proc. AAAI Conf. Artif. Intell. 2024, 38, 21429–21437.

- Yu, J.; Luo, G.; Xiao, T.; Zhong, Q.; Wang, Y.; Feng, W.; Luo, J.; Wang, C.; Hou, L.; Li, J. MOOCCube: A large-scale data repository for NLP applications in MOOCs. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3135–3142.

- Dessì, D.; Fenu, G.; Marras, M.; Reforgiato Recupero, D. COCO: Semantic-enriched collection of online courses at scale with experimental use cases. In Trends and Advances in Information Systems and Technologies, Proceedings of the World Conference on Information Systems and Technologies, Naples, Italy, 27–29 March 2018; Springer: Cham, Switzerland, 2018; pp. 1386–1396.

- Fareri, S.; Melluso, N.; Chiarello, F.; Fantoni, G. SkillNER: Mining and mapping soft skills from any text. Expert Syst. Appl. 2021, 184, 115544.

- Tan, J.; Xu, S.; Ge, Y.; Li, Y.; Chen, X.; Zhang, Y. Counterfactual explainable recommendation. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Online, 1–5 November 2021; pp. 1784–1793.

- Chen, C.; Zhang, M.; Liu, Y.; Ma, S. Neural attentional rating regression with review-level explanations. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 1583–1592.

| Relation Type | Semantic Description |
|---|---|
| Enrolled | The user is enrolled in a course (User-Course) |
| Teach | A teacher teaches a course (Teacher-Course) |
| Contain | A course contains certain concepts (Concept-Course) |
| Provide | A school provides a course (School-Course) |
| Belong_to | A course belongs to a category (Category-Course) |

| Metric | COCO | MoocCube |
|---|---|---|
| Users | 17,457 | 6507 |
| Courses | 20,926 | 687 |
| Interactions | 279,792 | 97,223 |
| Avg. Interactions | 16.1 | 14.9 |

| Hyperparameters | Value |
|---|---|
| Optimizer | Adam |
| Learning Rate | 0.001 |
| Entropy Weight | 0.01 |
| Gamma | 0.99 |
| Batch Size | 32 |
| Epoch | 50 |
| Hidden | [512, 256] |
| Max Actions | 250 |

| Dataset | Model | NDCG/% | Recall/% | HR/% | Precision/% |
|---|---|---|---|---|---|
| COCO | Pop | | | | |
| | CFKG | | | | |
| | KGAT | | | | |
| | PGPR | | | | |
| | UPGPR | | | | |
| | StAR | | | | |
| MoocCube | Pop | | | | |
| | CFKG | | | | |
| | KGAT | | | | |
| | PGPR | | | | |
| | UPGPR | | | | |
| | StAR | | | | |

| Model | COCO NDCG | COCO Recall | COCO HR | COCO Precision | MoocCube NDCG | MoocCube Recall | MoocCube HR | MoocCube Precision |
|---|---|---|---|---|---|---|---|---|
| StAR | 10.92 | 16.66 | 27.60 | 3.07 | 19.74 | 27.00 | 44.15 | 5.19 |
| | 9.83 | 14.57 | 24.88 | 2.74 | 19.08 | 25.79 | 42.65 | 4.93 |
| −P_A_P | 9.87 | 14.77 | 25.03 | 2.76 | 19.16 | 26.02 | 43.35 | 5.03 |
| −All_P | 9.77 | 14.60 | 24.87 | 2.72 | 18.87 | 25.41 | 42.40 | 4.89 |
| | 10.16 | 15.31 | 25.83 | 2.87 | 19.49 | 26.55 | 43.89 | 5.16 |

| Model | COCO NDCG | COCO Recall | COCO HR | COCO Precision | MoocCube NDCG | MoocCube Recall | MoocCube HR | MoocCube Precision |
|---|---|---|---|---|---|---|---|---|
| PGPR | 9.13 | 14.12 | 24.11 | 2.64 | 18.31 | 24.17 | 40.08 | 4.70 |
| +StAR/MHA | 9.74 | 14.56 | 24.84 | 2.71 | 19.11 | 25.87 | 42.71 | 5.03 |

| Model | COCO NDCG | COCO Recall | COCO HR | COCO Precision | MoocCube NDCG | MoocCube Recall | MoocCube HR | MoocCube Precision |
|---|---|---|---|---|---|---|---|---|
| PGPR | 9.13 | 14.12 | 24.11 | 2.64 | 18.31 | 24.17 | 40.08 | 4.70 |
| +StAR/DAP | 9.33 | 13.90 | 23.86 | 2.63 | 18.88 | 25.60 | 42.20 | 4.98 |

| Model | COCO NDCG | COCO Recall | COCO HR | COCO Precision | MoocCube NDCG | MoocCube Recall | MoocCube HR | MoocCube Precision |
|---|---|---|---|---|---|---|---|---|
| PGPR | 9.13 | 14.12 | 24.11 | 2.64 | 18.31 | 24.17 | 40.08 | 4.70 |
| +StAR/SR | 10.32 | 15.85 | 26.54 | 2.96 | 19.51 | 26.51 | 43.88 | 5.19 |

| Model | Reward Type | Non-zero reward ratio | Interpretation |
|---|---|---|---|
| UPGPR | Binary (terminal only) | 0.225 | Highly sparse |
| PGPR | Cosine (terminal continuous) | 0.462 | Moderately sparse |
| StAR (ours) | Structure-aware (stepwise dense) | 0.998 | Substantially dense |

| Group | NDCG | Recall | HR | Precision | Description |
|---|---|---|---|---|---|
| A0 | 18.78 | 25.56 | 42.46 | 4.97 | Uniform weights |
| A1 | 19.74 | 27.03 | 44.15 | 5.19 | StAR configuration |
| A2 | 19.37 | 26.50 | 43.72 | 5.07 | Final-similarity only |
| A3 | 19.38 | 26.28 | 43.11 | 5.12 | No novelty |
| A4 | 19.58 | 26.68 | 43.91 | 5.12 | Swap |
| A5 | 19.11 | 25.87 | 42.35 | 4.98 | High novelty |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bu, T.; Zheng, H.; Zhao, F. A Structure-Aware and Attention-Enhanced Explainable Learning Resource Recommendation Approach for Smart Education Within Smart Cities. Electronics 2025, 14, 4561. https://doi.org/10.3390/electronics14234561
