Semantic-Guided Mamba Fusion for Robust Object Detection of Tibetan Plateau Wildlife

Abstract

1. Introduction

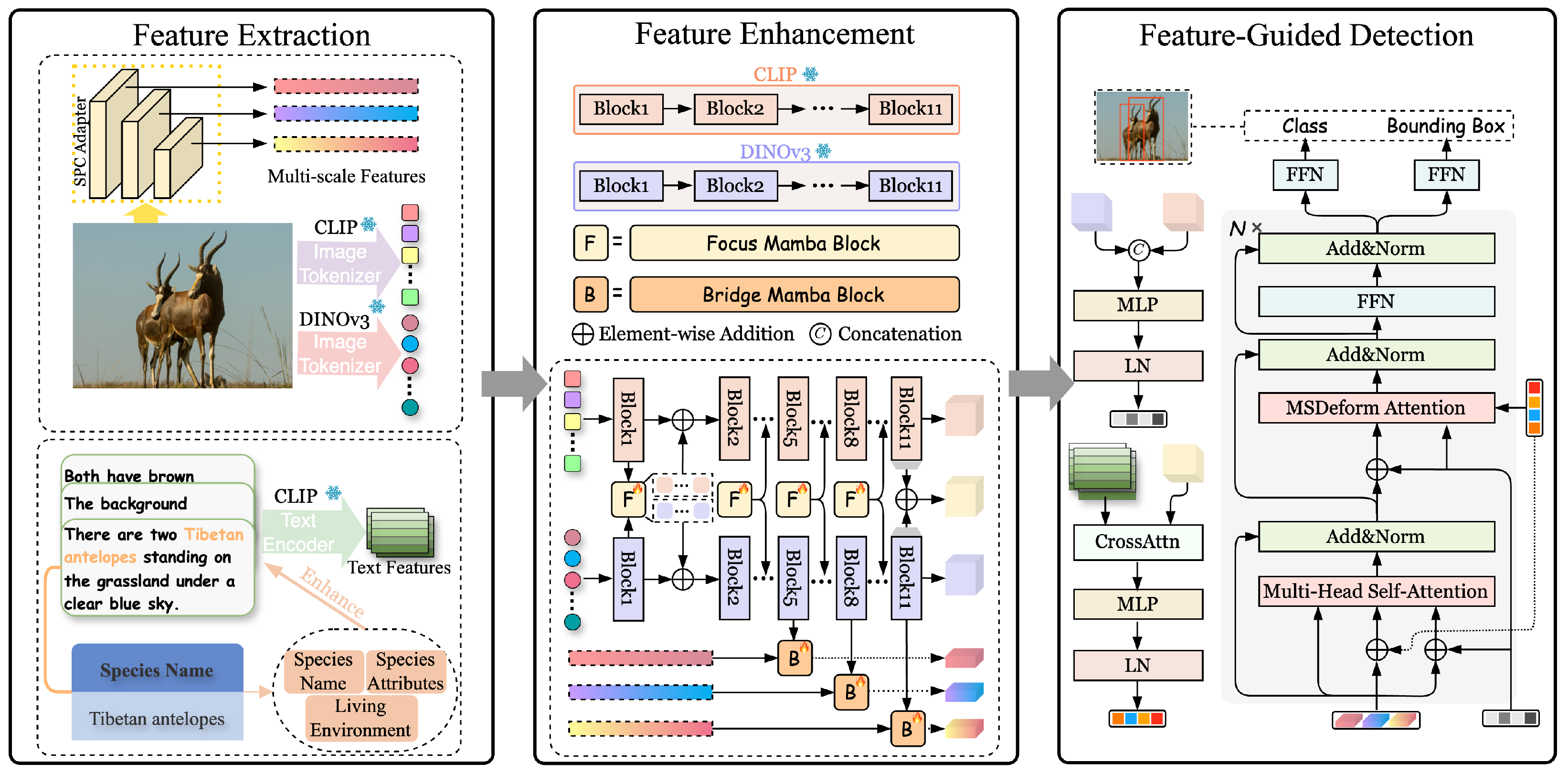

2. Method

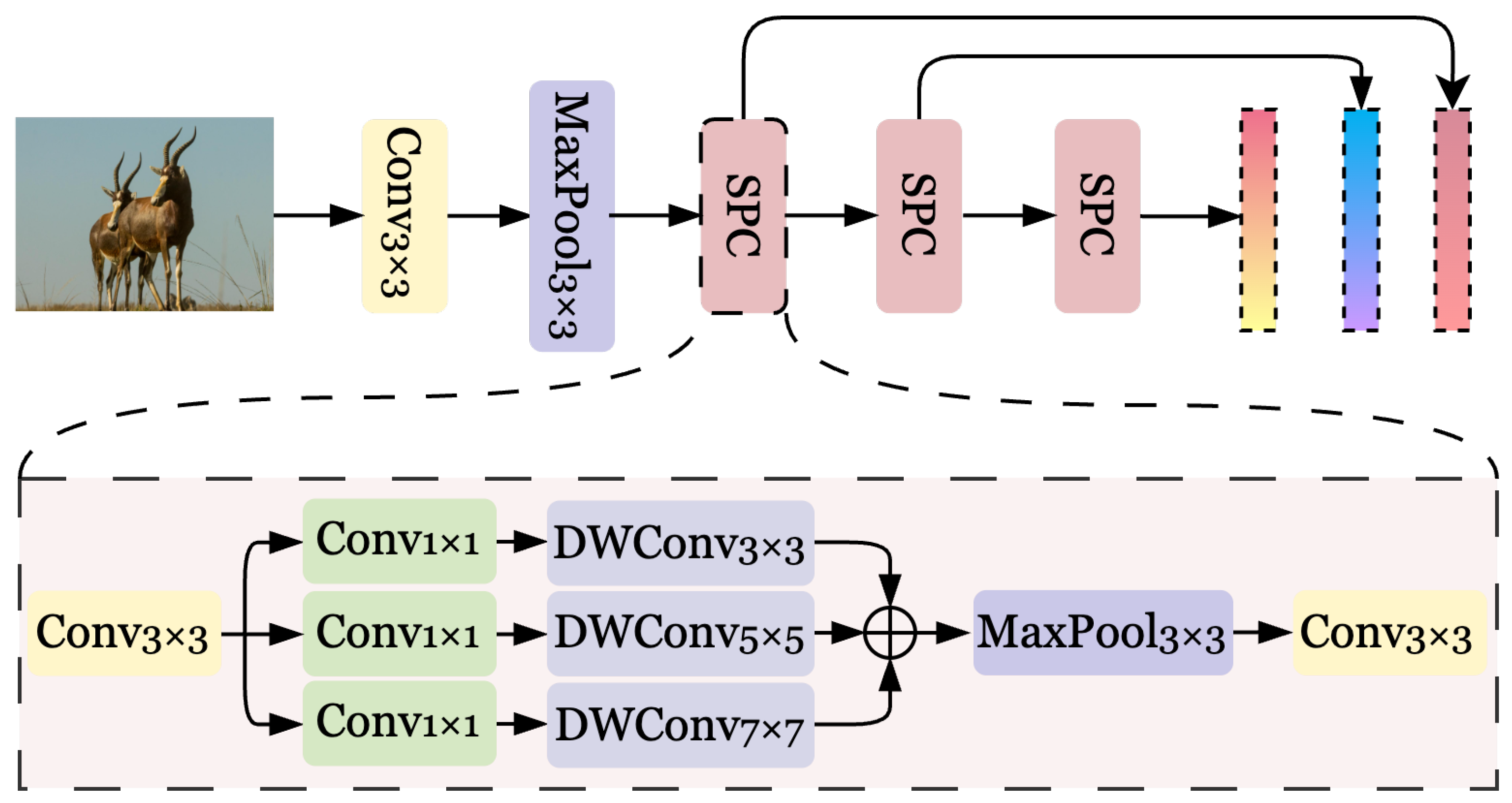

2.1. Feature Extraction

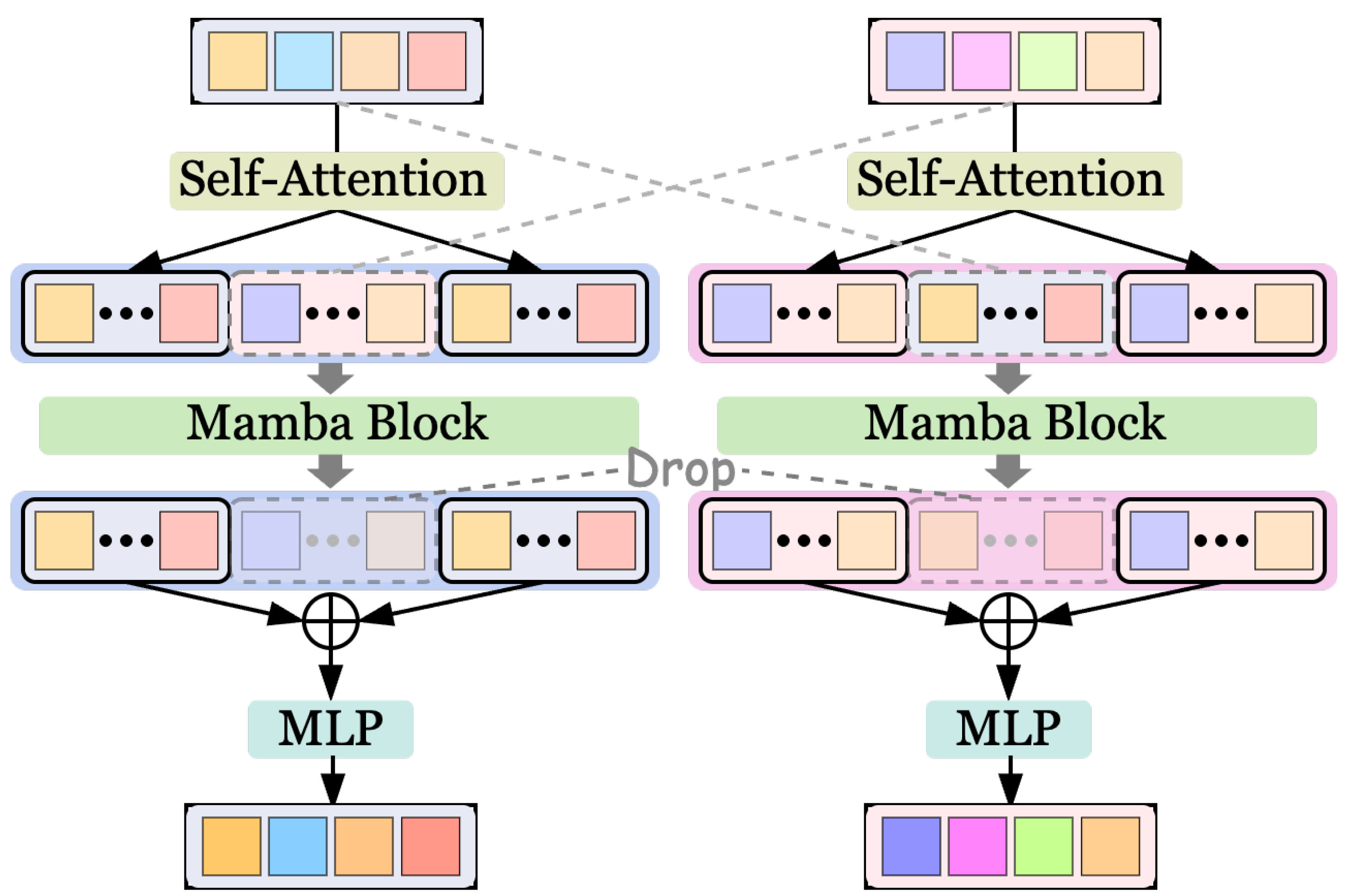

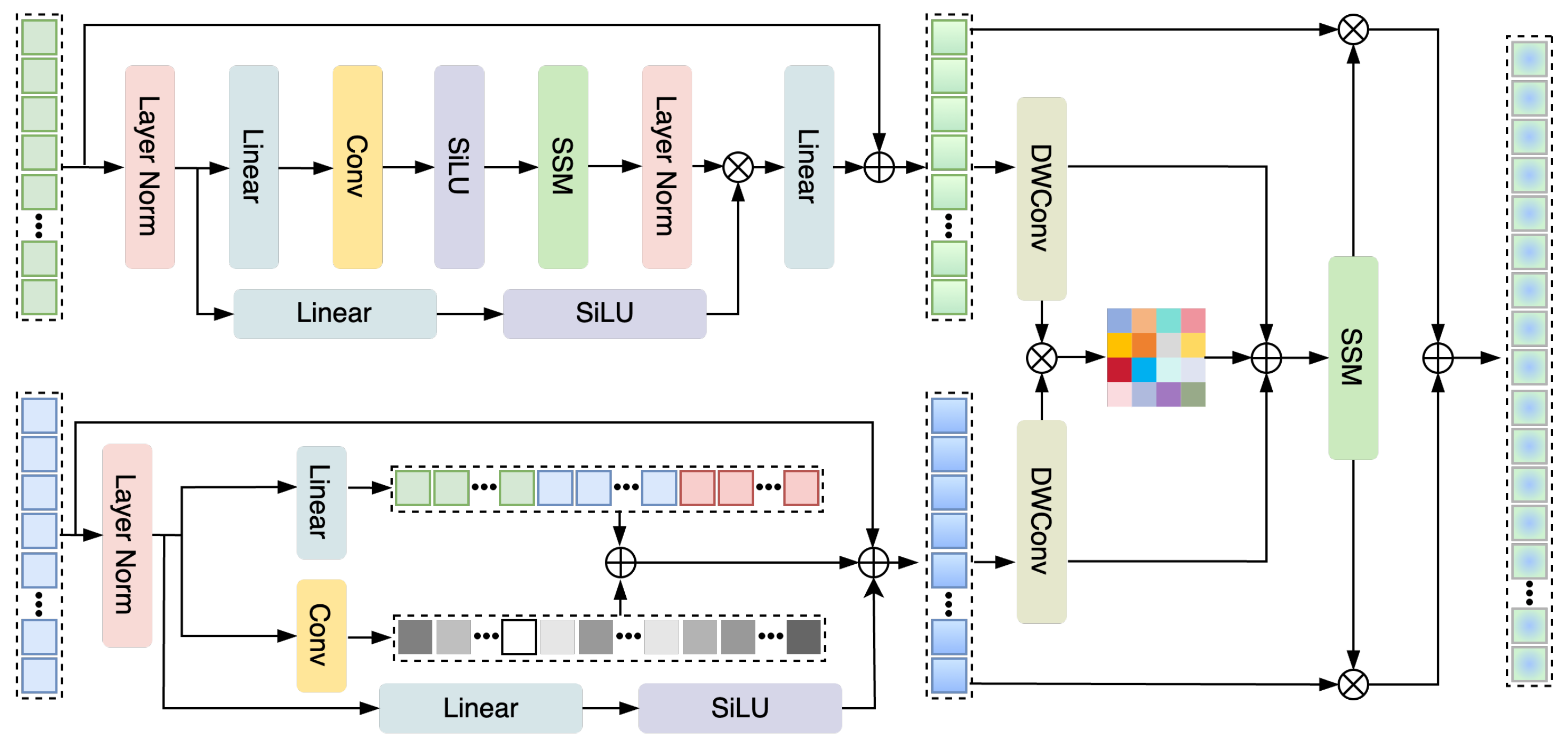

2.2. Feature Enhancement

2.3. Feature-Guided Detection

3. Experiments

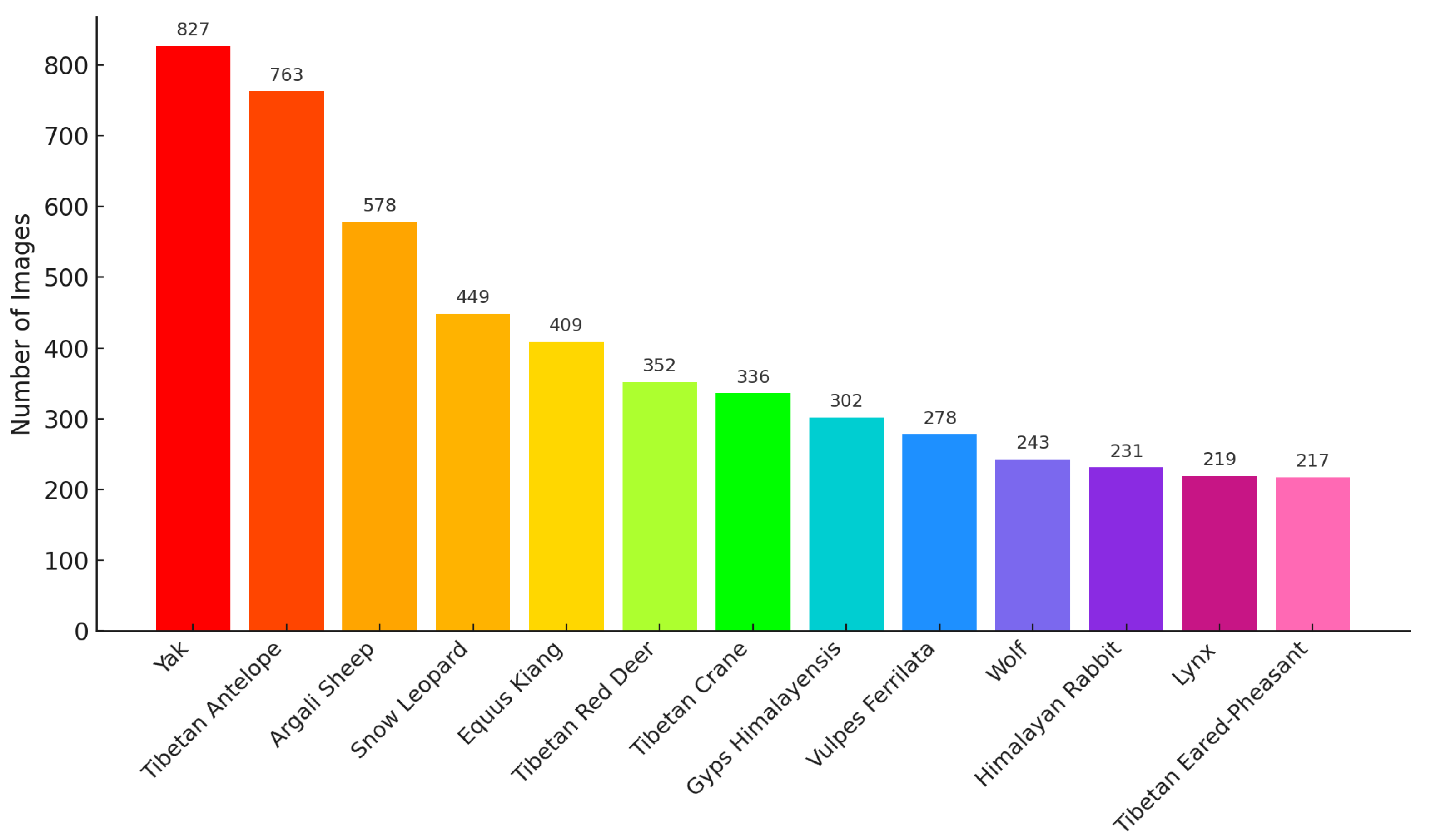

3.1. Dataset

3.2. Experimental Setup

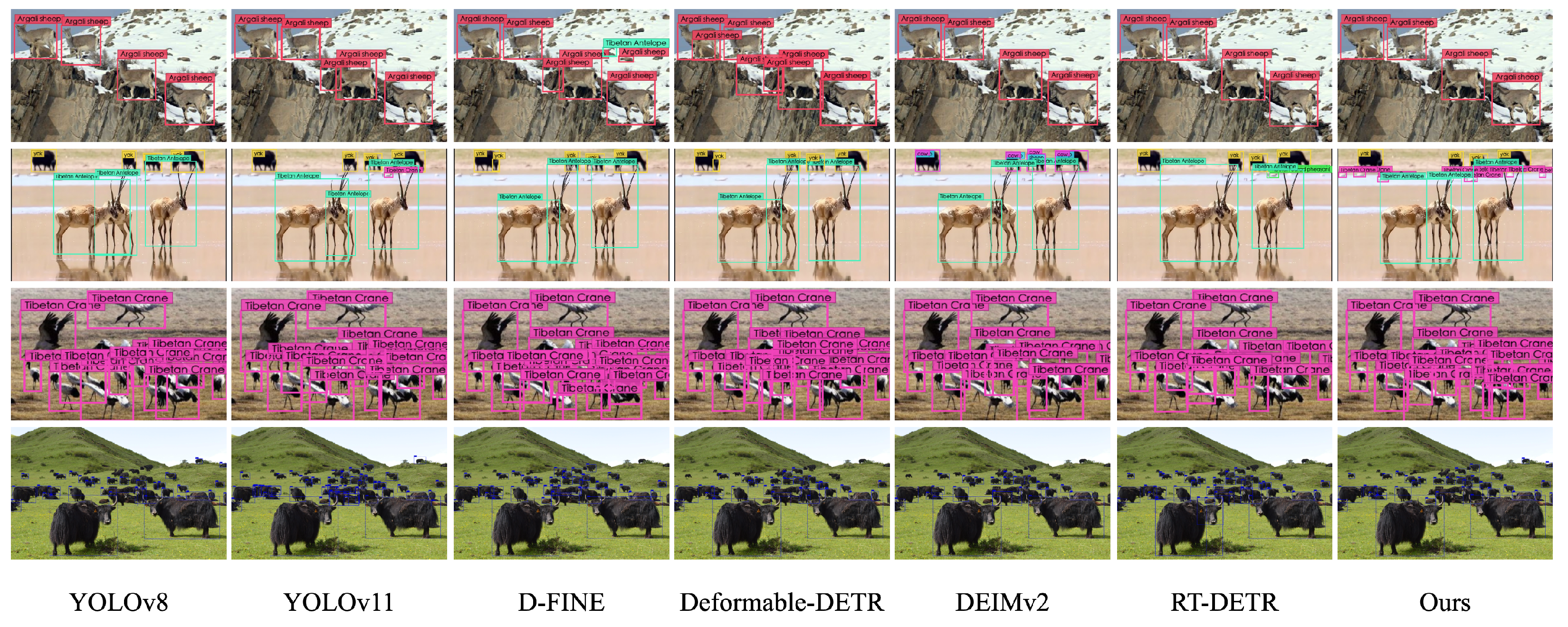

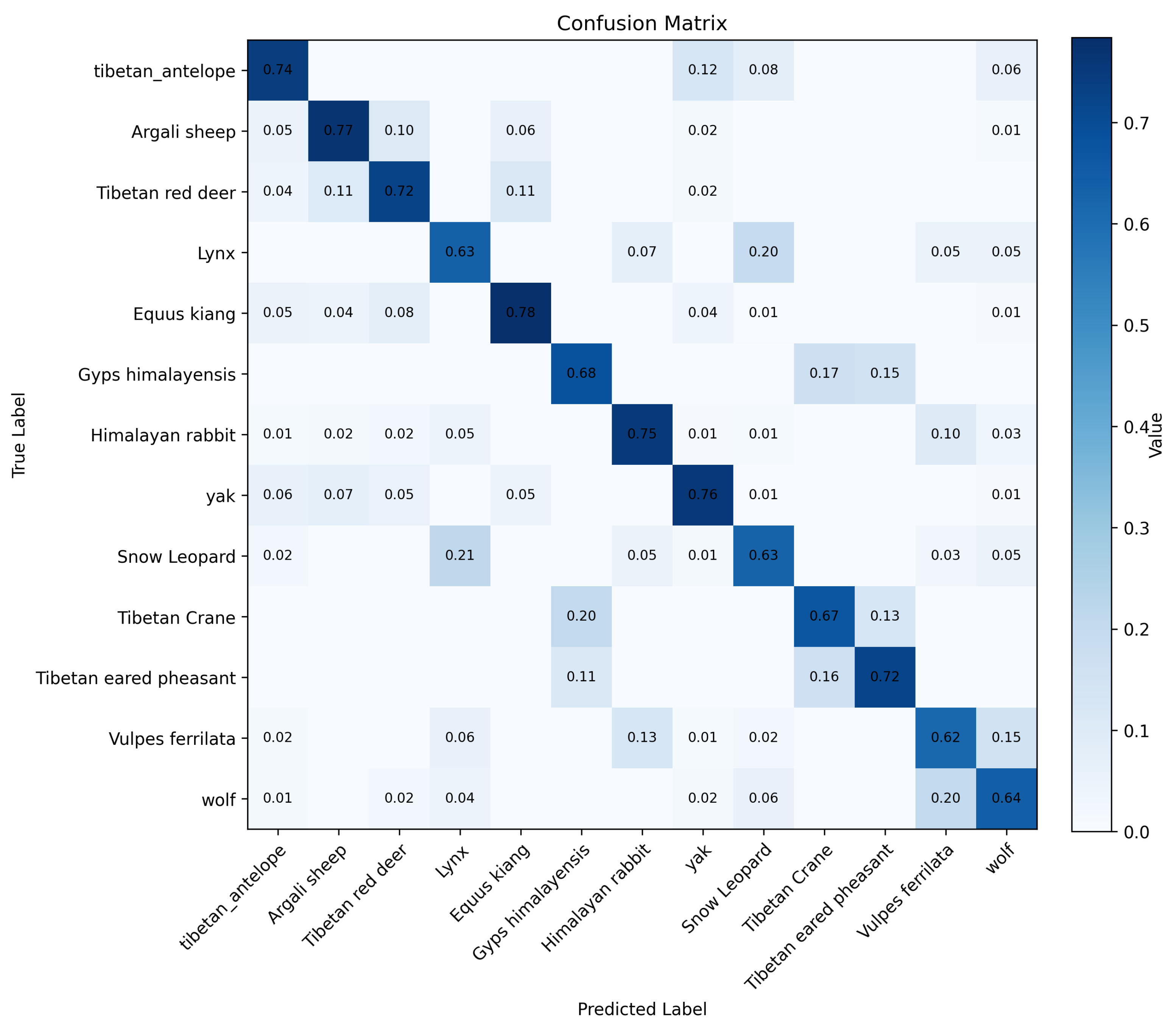

3.3. Comparative Experiments

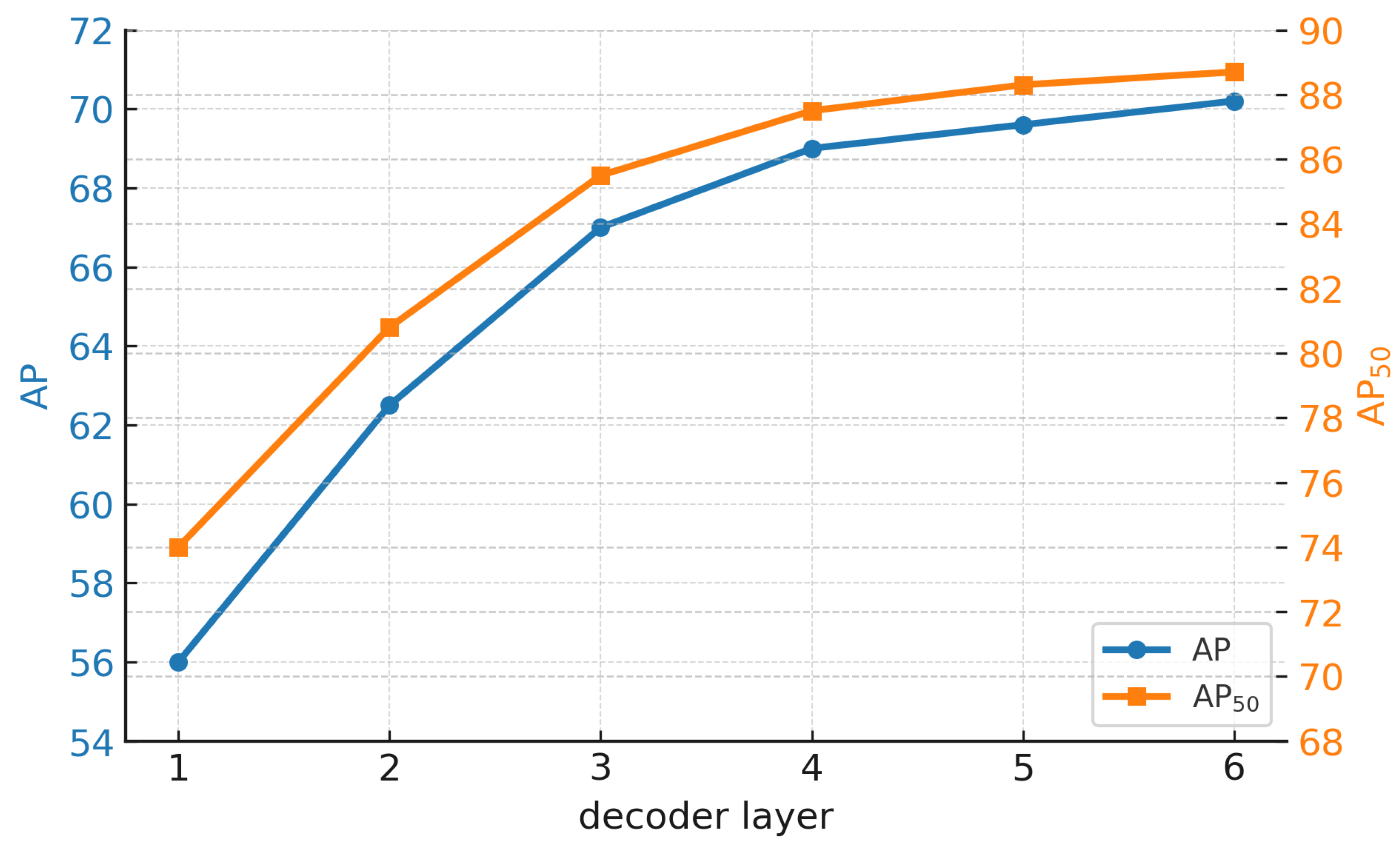

3.4. Ablation Study

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BMB | Bridge Mamba Block |
| DWConv | Depthwise Convolution |
| FFN | Feed-Forward Network |
| FMB | Focus Mamba Block |
| SPC | Spatial Pyramid Convolution |
| SSM | State Space Model |
| VLM | Visual Language Model |

References

1. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

2. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

3. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27 June–2 July 2016; pp. 779–788.

4. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision – ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

5. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

6. Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Tomizuka, M.; Li, L.; Yuan, Z.; Wang, C.; et al. Sparse R-CNN: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 14454–14463.

7. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

8. Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

9. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 16965–16974.

10. Mulero-Pázmány, M.; Hurtado, S.; Barba-González, C.; Antequera-Gómez, M.L.; Díaz-Ruiz, F.; Real, R.; Navas-Delgado, I.; Aldana-Montes, J.F. Addressing significant challenges for animal detection in camera trap images: A novel deep learning-based approach. Sci. Rep. 2025, 15, 16191.

11. Kim, J.I.; Baek, J.W.; Kim, C.B. Hierarchical image classification using transfer learning to improve deep learning model performance for Amazon parrots. Sci. Rep. 2025, 15, 3790.

12. Li, S.; Zhang, H.; Xu, F. Intelligent detection method for wildlife based on deep learning. Sensors 2023, 23, 9669.

13. Ma, Z.; Dong, Y.; Xia, Y.; Xu, D.; Xu, F.; Chen, F. Wildlife real-time detection in complex forest scenes based on YOLOv5s deep learning network. Remote Sens. 2024, 16, 1350.

14. Xian, Y.; Zhao, Q.; Yang, X.; Gao, D. Thangka image object detection method based on improved YOLOv8. In Proceedings of the 2024 13th International Conference on Computing and Pattern Recognition, Tianjin, China, 25–27 October 2024; pp. 214–219.

15. Ke, W.; Liu, T.; Cui, X. IECA-YOLOv7: A Lightweight Model with Enhanced Attention and Loss for Aerial Wildlife Detection. Animals 2025, 15, 2743.

16. Gong, H.; Liu, J.; Li, Z.; Zhu, H.; Luo, L.; Li, H.; Hu, T.; Guo, Y.; Mu, Y. GFI-YOLOv8: Sika deer posture recognition target detection method based on YOLOv8. Animals 2024, 14, 2640.

17. Chen, L.; Li, G.; Zhang, S.; Mao, W.; Zhang, M. YOLO-SAG: An improved wildlife object detection algorithm based on YOLOv8n. Ecol. Inform. 2024, 83, 102791.

18. Xian, Y.; Lee, Y.; Shen, T.; Lan, P.; Zhao, Q.; Yan, L. Enhanced object detection in thangka images using Gabor, wavelet, and color feature fusion. Sensors 2025, 25, 3565.

19. Guo, Z.; He, Z.; Lyu, L.; Mao, A.; Huang, E.; Liu, K. Automatic detection of feral pigeons in urban environments using deep learning. Animals 2024, 14, 159.

20. Jenkins, M.; Franklin, K.A.; Nicoll, M.A.; Cole, N.C.; Ruhomaun, K.; Tatayah, V.; Mackiewicz, M. Improving object detection for time-lapse imagery using temporal features in wildlife monitoring. Sensors 2024, 24, 8002.

21. Wu, Z.; Zhang, C.; Gu, X.; Duporge, I.; Hughey, L.F.; Stabach, J.A.; Skidmore, A.K.; Hopcraft, J.G.C.; Lee, S.J.; Atkinson, P.M.; et al. Deep learning enables satellite-based monitoring of large populations of terrestrial mammals across heterogeneous landscape. Nat. Commun. 2023, 14, 3072.

22. Delplanque, A.; Théau, J.; Foucher, S.; Serati, G.; Durand, S.; Lejeune, P. Wildlife detection, counting and survey using satellite imagery: Are we there yet? GIScience Remote Sens. 2024, 61, 2348863.

23. Brack, I.V.; Ferrara, C.; Forero-Medina, G.; Domic-Rivadeneira, E.; Torrico, O.; Wanovich, K.T.; Wilkinson, B.; Valle, D. Counting animals in orthomosaics from aerial imagery: Challenges and future directions. Methods Ecol. Evol. 2025, 16, 1051–1060.

24. Krishnan, B.S.; Jones, L.R.; Elmore, J.A.; Samiappan, S.; Evans, K.O.; Pfeiffer, M.B.; Blackwell, B.F.; Iglay, R.B. Fusion of visible and thermal images improves automated detection and classification of animals for drone surveys. Sci. Rep. 2023, 13, 10385.

25. Backman, K.; Wood, J.; Brandimarti, M.; Beranek, C.T.; Roff, A. Human inspired deep learning to locate and classify terrestrial and arboreal animals in thermal drone surveys. Methods Ecol. Evol. 2025, 16, 1239–1254.

26. Korkmaz, A.; Agdas, M.T.; Kosunalp, S.; Iliev, T.; Stoyanov, I. Detection of Threats to Farm Animals Using Deep Learning Models: A Comparative Study. Appl. Sci. 2024, 14, 6098.

27. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8; Ultralytics: Frederick, MD, USA, 2023.

28. Mou, C.; Liang, A.; Hu, C.; Meng, F.; Han, B.; Xu, F. Monitoring endangered and rare wildlife in the field: A foundation deep learning model integrating human knowledge for incremental recognition with few data and low cost. Animals 2023, 13, 3168.

29. Simões, F.; Bouveyron, C.; Precioso, F. DeepWILD: Wildlife Identification, Localisation and estimation on camera trap videos using Deep learning. Ecol. Inform. 2023, 75, 102095.

30. Wang, S. Effectiveness of traditional augmentation methods for rebar counting using UAV imagery with Faster R-CNN and YOLOv10-based transformer architectures. Sci. Rep. 2025, 15, 33702.

31. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

32. Li, M.; Yuan, J.; Chen, S.; Zhang, L.; Zhu, A.; Chen, X.; Chen, T. 3DET-mamba: State space model for end-to-end 3D object detection. In Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 9–15 December 2024; pp. 47242–47260.

33. Jin, X.; Su, H.; Liu, K.; Ma, C.; Wu, W.; Hui, F.; Yan, J. UniMamba: Unified Spatial-Channel Representation Learning with Group-Efficient Mamba for LiDAR-based 3D Object Detection. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 1407–1417.

34. Ning, T.; Lu, K.; Jiang, X.; Xue, J. MambaDETR: Query-based Temporal Modeling using State Space Model for Multi-View 3D Object Detection. arXiv 2024, arXiv:2411.13628.

35. You, Z.; Wang, N.; Wang, H.; Zhao, Q.; Wang, J. MambaBEV: An efficient 3D detection model with Mamba2. arXiv 2024, arXiv:2410.12673.

36. Wang, Z.; Li, C.; Xu, H.; Zhu, X.; Li, H. Mamba YOLO: A simple baseline for object detection with state space model. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 8205–8213.

37. Hatamizadeh, A.; Kautz, J. MambaVision: A hybrid Mamba-transformer vision backbone. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 25261–25270.

38. He, J.; Fu, K.; Liu, X.; Zhao, Q. Samba: A Unified Mamba-based Framework for General Salient Object Detection. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 25314–25324.

39. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021; pp. 8748–8763.

40. Siméoni, O.; Vo, H.V.; Seitzer, M.; Baldassarre, F.; Oquab, M.; Jose, C.; Khalidov, V.; Szafraniec, M.; Yi, S.; Ramamonjisoa, M.; et al. DINOv3. arXiv 2025, arXiv:2508.10104.

41. Jocher, G.; Qiu, J. Ultralytics YOLO11; Ultralytics: Frederick, MD, USA, 2024.

42. Huang, S.; Hou, Y.; Liu, L.; Yu, X.; Shen, X. Real-Time Object Detection Meets DINOv3. arXiv 2025, arXiv:2509.20787.

43. Peng, Y.; Li, H.; Wu, P.; Zhang, Y.; Sun, X.; Wu, F. D-FINE: Redefine regression task in DETRs as fine-grained distribution refinement. arXiv 2024, arXiv:2410.13842.

44. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

45. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

| Model | Params (M) | GFLOPs | FPS | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv8-S [27] | 11 | 29 | 247 | 55.0 | 72.9 | 59.8 | 37.7 | 60.0 | 70.1 |
| YOLO11-S [41] | 9 | 22 | 233 | 57.8 | 76.2 | 63.0 | 40.5 | 63.2 | 73.1 |
| D-FINE-S [43] | 10 | 25 | 113 | 60.9 | 79.8 | 66.2 | 44.1 | 66.4 | 76.7 |
| Deformable-DETR [5] | 40 | 173 | 32 | 59.1 | 78.3 | 64.0 | 42.5 | 64.2 | 75.4 |
| DEIMv2-S [42] | 10 | 26 | 77 | 63.4 | 82.2 | 69.3 | 46.2 | 69.1 | 79.5 |
| RT-DETR [9] | 42 | 136 | 121 | 64.9 | 84.0 | 71.2 | 48.4 | 70.8 | 81.1 |
| Ours | 28 | 102 | 68 | 70.2 | 88.7 | 76.8 | 53.1 | 76.4 | 86.5 |
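As a reading aid for the comparison table above, the following minimal sketch computes how far the "Ours" row sits from each baseline in AP and in throughput. The numbers are copied from the Params, GFLOPs, FPS, and first AP columns of that table; the helper names (`ap_gain_over`, `fps_ratio`, `best_baseline`) are hypothetical and are not part of the paper.

```python
# Rows copied from the comparison table above: (params_M, gflops, fps, ap).
rows = {
    "YOLOv8-S": (11, 29, 247, 55.0),
    "YOLO11-S": (9, 22, 233, 57.8),
    "D-FINE-S": (10, 25, 113, 60.9),
    "Deformable-DETR": (40, 173, 32, 59.1),
    "DEIMv2-S": (10, 26, 77, 63.4),
    "RT-DETR": (42, 136, 121, 64.9),
    "Ours": (28, 102, 68, 70.2),
}


def ap_gain_over(baseline: str, model: str = "Ours") -> float:
    """Absolute AP difference in points between `model` and `baseline`."""
    return round(rows[model][3] - rows[baseline][3], 1)


def fps_ratio(baseline: str, model: str = "Ours") -> float:
    """Throughput of `model` relative to `baseline` (1.0 means equal FPS)."""
    return round(rows[model][2] / rows[baseline][2], 2)


def best_baseline() -> str:
    """Name of the highest-AP row other than 'Ours'."""
    return max((name for name in rows if name != "Ours"),
               key=lambda name: rows[name][3])


if __name__ == "__main__":
    for name in rows:
        if name != "Ours":
            print(f"{name:16s} AP gap: +{ap_gain_over(name):.1f}  "
                  f"FPS ratio: {fps_ratio(name):.2f}")
```

Read this way, the table shows the AP lead over the strongest baseline together with the frame-rate cost paid for it, which is the trade-off the FPS column exists to expose.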

| Model | TA | AS | TR | L | EK | GH | HR | Y | SL | TC | TE | VF | W |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8-S [27] | 59.3 | 60.9 | 57.1 | 49.7 | 61.8 | 54.6 | 58.5 | 60.0 | 47.8 | 52.6 | 55.1 | 48.7 | 50.7 |
| YOLO11-S [41] | 61.9 | 63.6 | 60.0 | 52.3 | 64.4 | 57.1 | 61.2 | 62.7 | 50.9 | 55.3 | 57.7 | 50.9 | 52.6 |
| D-FINE-S [43] | 65.1 | 67.0 | 63.3 | 55.2 | 67.9 | 60.2 | 64.3 | 65.9 | 53.7 | 58.4 | 61.1 | 54.2 | 56.0 |
| Deformable-DETR [5] | 63.3 | 64.9 | 61.5 | 53.6 | 66.0 | 58.4 | 62.6 | 64.0 | 52.0 | 56.7 | 59.3 | 52.6 | 54.4 |
| DEIMv2-S [42] | 66.3 | 68.3 | 64.6 | 57.5 | 69.9 | 62.3 | 66.8 | 68.1 | 56.4 | 61.0 | 64.2 | 56.7 | 59.2 |
| RT-DETR [9] | 69.0 | 70.8 | 67.3 | 57.1 | 71.7 | 62.2 | 69.2 | 70.0 | 56.1 | 60.6 | 67.0 | 55.9 | 58.2 |
| Ours | 74.3 | 76.7 | 72.5 | 63.5 | 78.4 | 68.4 | 75.2 | 75.8 | 62.8 | 66.9 | 72.2 | 61.7 | 64.2 |

| Model | Params (M) | GFLOPs | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|
| YOLOv8-S [27] | 11 | 29 | 44.9 | 61.8 | 48.6 | 25.7 | 49.9 | 61.0 |
| YOLO11-S [41] | 9 | 22 | 46.6 | 63.4 | 50.3 | 28.7 | 51.3 | 64.1 |
| D-FINE-S [43] | 10 | 25 | 48.5 | 65.6 | 52.6 | 29.1 | 52.2 | 65.7 |
| Deformable-DETR [5] | 40 | 173 | 46.9 | 65.6 | 51.0 | 29.6 | 50.1 | 61.6 |
| DEIMv2-S [42] | 10 | 26 | 50.9 | 68.3 | 55.1 | 31.4 | 55.3 | 70.3 |
| RT-DETR [9] | 42 | 136 | 53.1 | 71.3 | 57.7 | 34.8 | 58.0 | 70.0 |
| Ours | 28 | 102 | 55.8 | 74.0 | 61.0 | 38.2 | 60.5 | 73.5 |

| Resolution | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| 512 × 512 | 67.8 | 86.0 | 73.4 | 49.2 | 73.1 | 84.7 |
| 640 × 640 | 70.2 | 88.7 | 76.8 | 53.1 | 76.4 | 86.5 |
| 800 × 800 | 70.9 | 89.2 | 77.4 | 55.0 | 76.9 | 87.2 |
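The resolution rows above can be read as two equal steps in pixel count with unequal AP gains. The sketch below, which uses only the resolution and the first AP column from that table, makes the arithmetic explicit; `step_report` is a hypothetical helper name, and pixel count is used here only as an assumed rough proxy for input cost, not as a measured quantity from the paper.

```python
# (side length in pixels, AP) copied from the resolution table above.
results = [(512, 67.8), (640, 70.2), (800, 70.9)]


def step_report(results):
    """For each consecutive pair of resolutions, return a tuple of
    (from_side, to_side, AP gain in points, ratio of pixel counts)."""
    report = []
    for (s0, ap0), (s1, ap1) in zip(results, results[1:]):
        gain = round(ap1 - ap0, 1)
        pixel_ratio = round((s1 * s1) / (s0 * s0), 4)
        report.append((s0, s1, gain, pixel_ratio))
    return report


if __name__ == "__main__":
    for s0, s1, gain, ratio in step_report(results):
        print(f"{s0} -> {s1}: +{gain:.1f} AP for {ratio:.4f}x the pixels")
```

Each step multiplies the pixel count by the same factor, while the second step yields a smaller AP gain than the first.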

| Enabled modules (SPC, FMB, BMB, Text-Guide) | AP | AP50 | AP75 |
|---|---|---|---|
| | 56.2 | 75.0 | 61.4 |
| ✓ | 59.8 | 79.1 | 64.5 |
| ✓ | 60.2 | 79.4 | 64.8 |
| ✓ | 60.5 | 79.8 | 65.1 |
| ✓ ✓ | 63.2 | 82.5 | 68.0 |
| ✓ ✓ | 63.7 | 83.0 | 68.4 |
| ✓ ✓ | 63.4 | 82.8 | 68.1 |
| ✓ ✓ ✓ | 67.1 | 86.4 | 73.3 |
| ✓ ✓ ✓ ✓ | 70.2 | 88.7 | 76.8 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lan, P.; Xian, Y.; Shen, T.; Lee, Y.; Zhao, Q. Semantic-Guided Mamba Fusion for Robust Object Detection of Tibetan Plateau Wildlife. Electronics 2025, 14, 4549. https://doi.org/10.3390/electronics14224549