Cognitive Chain-Based Dual Fusion Framework for Multi-Document Summarization

Abstract

1. Introduction

2. Related Work

2.1. Traditional Multi-Document Summarization Methods

2.2. LLM-Based MDS Methods

3. Cognitive Chain-Driven Generative Framework

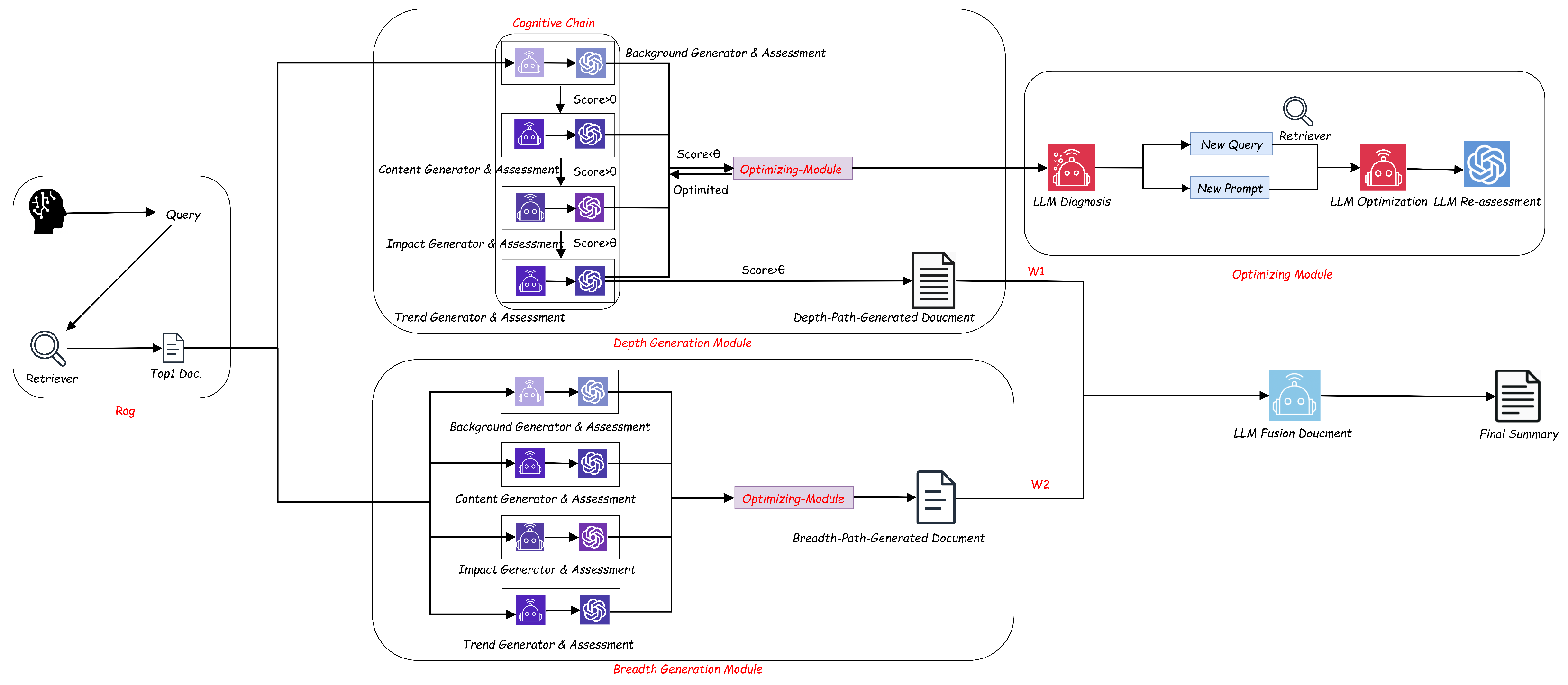

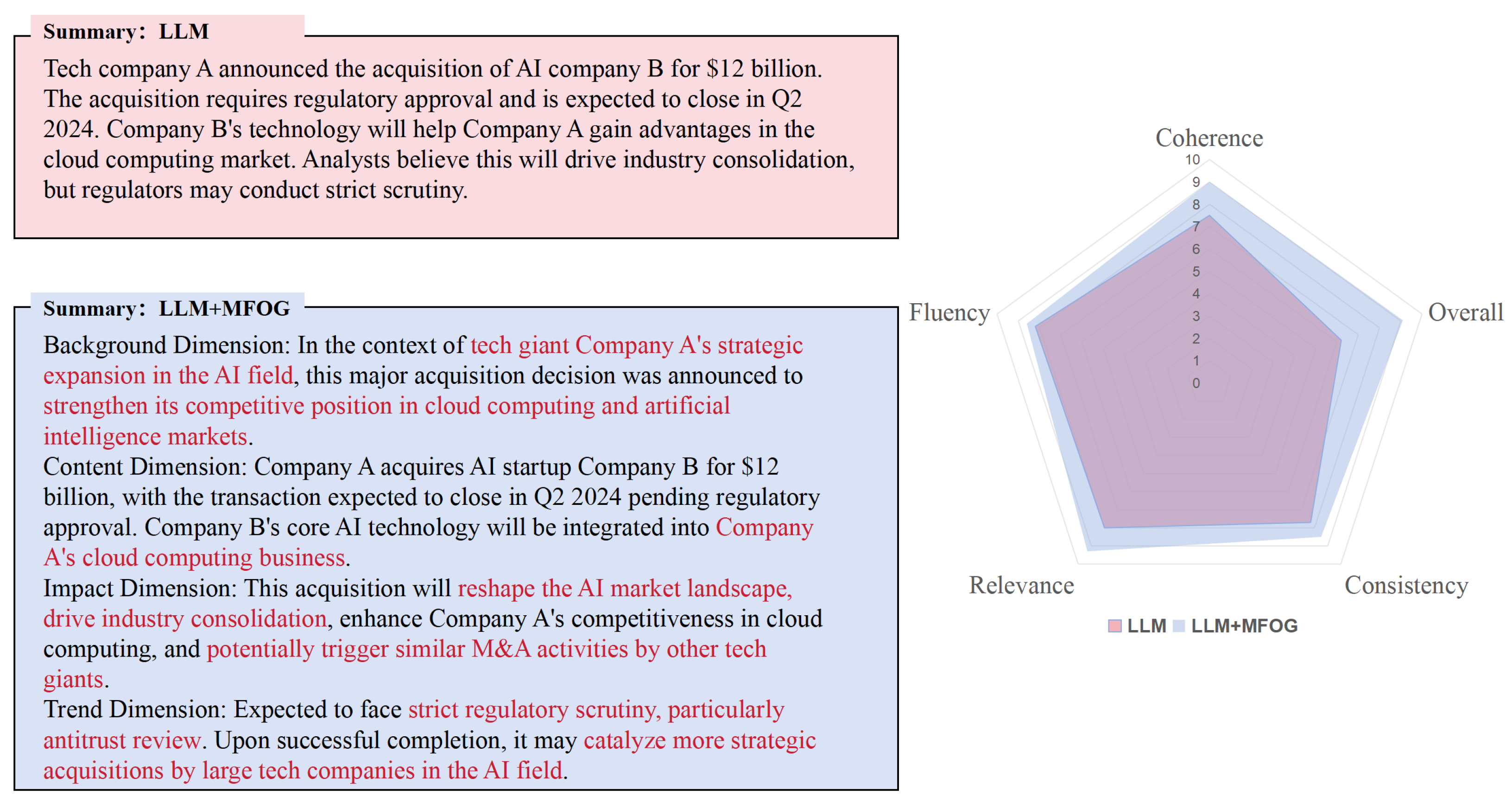

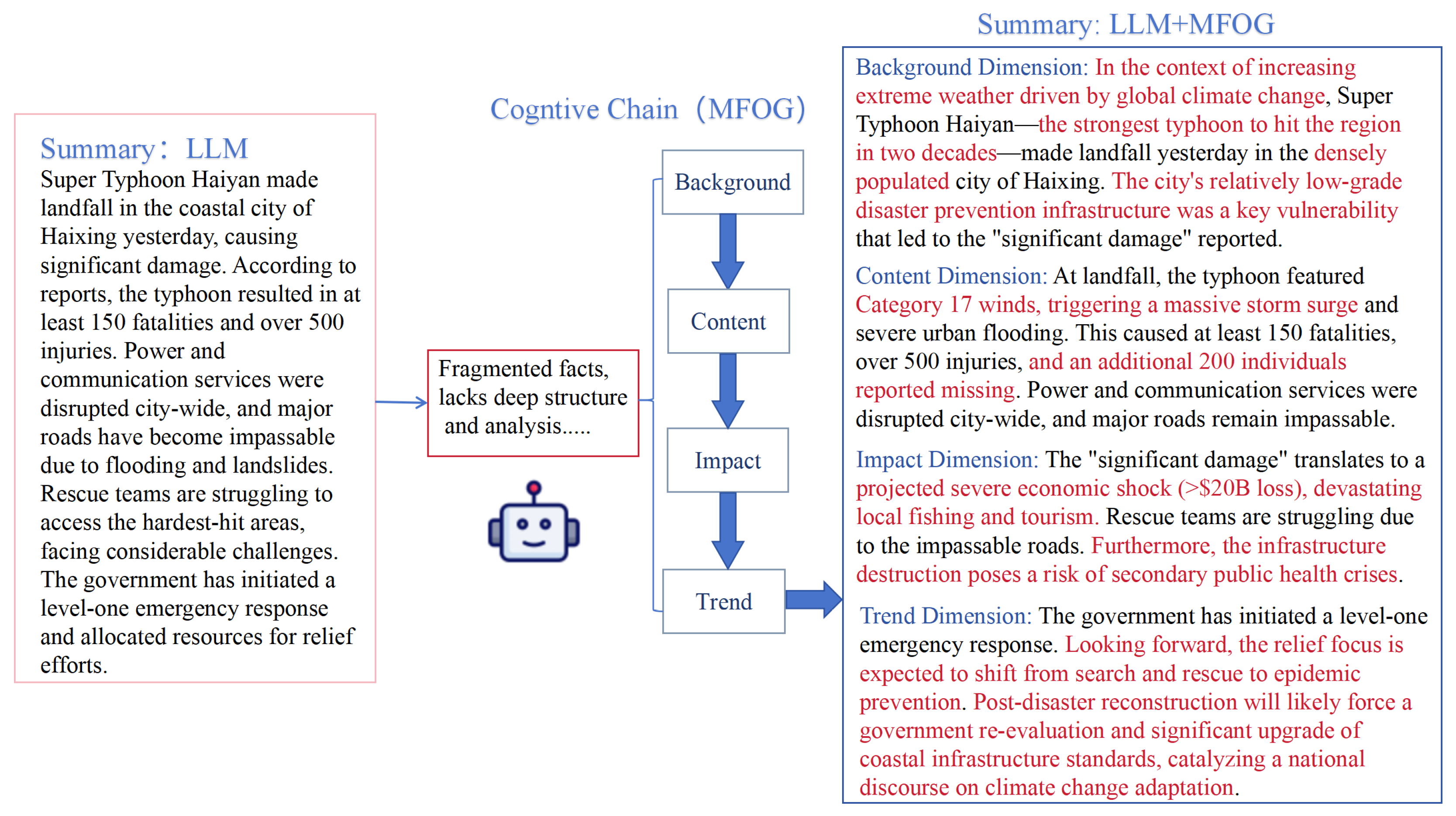

3.1. Overall Framework Architecture

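- The depth-focused path processes the dimensions in chain order. It uses a closed-loop “Generate–Evaluate–Optimize” process in which each dimension is conditioned on the outputs already generated for the preceding dimensions, ensuring logical coherence.

- The breadth-first parallel path generates content for all dimensions simultaneously and independently of one another. This keeps generation efficient and prevents an error in one dimension from propagating to the next.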
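The sketch below makes the contrast between the two paths concrete by showing what each prompt is conditioned on. It rests on several assumptions:

- `llm` is a hypothetical callable that takes a prompt and returns text.
- The prompt strings are illustrative, not the exact templates.
- The depth path's evaluate/optimize step is omitted, so that only the data flow is visible.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# The four Cognitive Chain dimensions, in chain order.
DIMENSIONS: List[str] = ["Background", "Content", "Impact", "Trends"]

# Hypothetical LLM interface: prompt string in, generated text out.
LLM = Callable[[str], str]


def depth_path(docs: str, llm: LLM) -> Dict[str, str]:
    """Sequential path: each dimension's prompt carries all earlier outputs."""
    outputs: Dict[str, str] = {}
    for dim in DIMENSIONS:
        prior = "\n".join(f"{d}: {text}" for d, text in outputs.items())
        prompt = (
            f"Dimension: {dim}\n"
            f"Prior analysis:\n{prior}\n"
            f"Source Documents:\n{docs}"
        )
        # The evaluate/optimize loop would wrap this call; omitted in this sketch.
        outputs[dim] = llm(prompt)
    return outputs


def breadth_path(docs: str, llm: LLM) -> Dict[str, str]:
    """Parallel path: every dimension sees only the source documents."""
    prompts = {dim: f"Dimension: {dim}\nSource Documents:\n{docs}" for dim in DIMENSIONS}
    with ThreadPoolExecutor(max_workers=len(DIMENSIONS)) as pool:
        # Prompts are independent, so all four calls can run concurrently.
        futures = {dim: pool.submit(llm, p) for dim, p in prompts.items()}
        return {dim: fut.result() for dim, fut in futures.items()}
```

The depth path must run sequentially because each prompt depends on earlier outputs. The breadth path's prompts are mutually independent and can be dispatched concurrently, which is where its efficiency comes from.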

3.2. The Cognitive Chain

3.3. Implementation of the Cognitive Chain

3.4. Dual-Path Generation and Optimization

3.4.1. Self-Optimization Logic and Evaluation Prompts

Algorithm 1. Dimension-specific evaluation logic (internal loop).
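The per-dimension Generate–Evaluate–Optimize loop can be sketched minimally as follows. The sketch makes these assumptions:

- The critique, scoring and regeneration steps are exposed as plain Python callables. `critique_fn`, `score_fn` and `regenerate_fn` are hypothetical names.
- The 0.8 threshold mirrors the scoring prompt's rule that a score below 0.8 requires regeneration.
- The round cap `max_rounds` is an added assumption that guarantees termination.

```python
from typing import Callable, Tuple

THRESHOLD = 0.8  # below this, the scoring prompt marks a draft for regeneration


def refine_dimension(
    draft: str,
    critique_fn: Callable[[str], str],         # hypothetical; closes over the source documents
    score_fn: Callable[[str, str], float],     # hypothetical; (draft, critique) -> score in [0, 1]
    regenerate_fn: Callable[[str, str], str],  # hypothetical; (draft, critique) -> new draft
    max_rounds: int = 3,                       # assumed cap on regeneration rounds
) -> Tuple[str, float, int]:
    """Evaluate a draft and regenerate it until it passes or the cap is hit.

    Returns (final_draft, final_score, regeneration_rounds_used).
    """
    rounds = 0
    critique = critique_fn(draft)
    score = score_fn(draft, critique)
    while score < THRESHOLD and rounds < max_rounds:
        draft = regenerate_fn(draft, critique)
        rounds += 1
        # Re-evaluate the new draft before deciding whether to loop again.
        critique = critique_fn(draft)
        score = score_fn(draft, critique)
    return draft, score, rounds
```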

3.4.2. On-Demand RAG and Query Formulation

Algorithm 2. Pseudo-code for RAG trigger and query formulation.
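The on-demand retrieval step can be sketched as follows. Retrieval fires only when a draft fails the quality threshold. A diagnosis call then turns the critique into a search query, and the retrieved snippets are packed into a regeneration prompt. The sketch makes these assumptions:

- The lexical-overlap retriever is a deliberately simple stand-in for whatever retriever is used in practice.
- `diagnose` and `regenerate` are hypothetical wrappers around the diagnosis and regeneration prompts.

```python
import re
from typing import Callable, List, Set


def tokenize(text: str) -> Set[str]:
    """Lower-cased alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, knowledge_base: List[str], k: int = 2) -> List[str]:
    """Stand-in lexical retriever: rank snippets by token overlap with the query."""
    q = tokenize(query)
    # sorted() stays stable with reverse=True, so ties keep knowledge-base order.
    ranked = sorted(knowledge_base, key=lambda s: len(q & tokenize(s)), reverse=True)
    return ranked[:k]


def on_demand_rag(
    draft: str,
    critique: str,
    score: float,
    knowledge_base: List[str],
    diagnose: Callable[[str, str], str],  # hypothetical: (draft, critique) -> query
    regenerate: Callable[[str], str],     # hypothetical: prompt -> revised draft
    threshold: float = 0.8,
    k: int = 2,
) -> str:
    """Trigger retrieval only when the draft fails the quality threshold."""
    if score >= threshold:
        return draft  # draft accepted; no retrieval cost incurred
    query = diagnose(draft, critique).strip()
    evidence = "\n".join(f"- {s}" for s in retrieve(query, knowledge_base, k))
    prompt = (
        f"Original Draft: {draft}\n"
        f"Identified Issues: {critique}\n"
        f"New Evidence:\n{evidence}"
    )
    return regenerate(prompt)
```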

3.5. Overall Quality Assessment

3.6. LLM Fusion Document Module

4. Experimental Evaluation

4.1. Dataset

- Multi-News [25] is a large-scale dataset derived from the news aggregator newser.com, comprising 56,216 instances. Each instance consists of 2–10 related news articles and a human-authored summary. The documents span diverse domains such as politics, business, and sports, and exhibit substantial informational overlap and complementarity. This makes the dataset well suited to assessing the ability of our four-dimensional Cognitive Chain to integrate complex, multifaceted information.

- DUC-2004 [26] is a classic MDS benchmark consisting of 50 document clusters, each containing 10 news articles on a specific topic (e.g., political events and natural disasters). For each cluster, four human-written reference summaries are provided, from which one is used as the target in our experiments. As a gold standard in the field, DUC-2004 provides an authoritative platform for benchmarking the performance of our framework against prior extractive and abstractive work.

- Multi-XScience [27] is a large-scale dataset of scientific articles sourced from arXiv and the Microsoft Academic Graph, comprising over 40,000 instances. Each instance pairs the abstract of a target paper with the abstracts of the related papers it cites. We selected this dataset to evaluate the cross-domain generalization of our framework and to test how well the Cognitive Chain adapts to academic texts. Notably, we applied the same fixed Cognitive Chain to Multi-XScience without modification. We hypothesize that this transfer is effective because the chain aligns closely with the standard structure of scientific communication:

  - Background maps naturally to the Introduction and Related Work sections, setting the research context.

  - Content corresponds to the Methodology and Results sections, detailing the core scientific contribution.

  - Impact aligns with the Discussion and Conclusion sections, interpreting the significance of the findings.

  - Trends relates to the Future Work sections, forecasting subsequent research directions.

This alignment suggests that the proposed Cognitive Chain is not an arbitrary construct but a fundamental cognitive sequence for analyzing informational documents. The instruction-tuned Llama-2-7B-chat model demonstrates the flexibility to adapt these abstract dimensions to the specific structural norms of diverse domains, confirming the framework’s robust generalization capabilities.

4.2. Evaluation Metrics

4.3. Implementation Environment

4.4. Result Analysis

4.5. Analysis of Ablation Studies

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual, 3–10 March 2021; pp. 610–623.

2. Ma, C.; Zhang, W.E.; Guo, M.; Wang, H.; Sheng, Q.Z. Multi-document summarization via deep learning techniques: A survey. ACM Comput. Surv. 2022, 55, 1–37.

3. Mihalcea, R.; Tarau, P. TextRank: Bringing order into text. In Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing, Barcelona, Spain, 25–26 July 2004; pp. 404–411.

4. Carbonell, J.; Goldstein, J. The use of MMR, diversity-based reranking for reordering documents and producing summaries. In Proceedings of the 21st Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Melbourne, Australia, 24–28 August 1998; pp. 335–336.

5. Barzilay, R.; McKeown, K.R. Sentence fusion for multidocument news summarization. Comput. Linguist. 2005, 31, 297–328.

6. See, A.; Liu, P.J.; Manning, C.D. Get To The Point: Summarization with Pointer-Generator Networks. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1073–1083.

7. Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

8. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24.

9. Ernst, O.; Caciularu, A.; Shapira, O.; Pasunuru, R.; Bansal, M.; Goldberger, J.; Dagan, I. Proposition-Level Clustering for Multi-Document Summarization. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; pp. 1765–1779.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

11. Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7871–7880.

12. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

13. Zhang, J.; Zhao, Y.; Saleh, M.; Liu, P. PEGASUS: Pre-training with extracted gap-sentences for abstractive summarization. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 11328–11339.

14. Xiao, W.; Beltagy, I.; Carenini, G.; Cohan, A. PRIMERA: Pyramid-based Masked Sentence Pre-training for Multi-document Summarization. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 5245–5263.

15. Pazhouhan, M.; Karimi Mazraeshahi, A.; Jahanbakht, M.; Rezanejad, K.; Rohban, M.H. Wave and Tidal Energy: A Patent Landscape Study. J. Mar. Sci. Eng. 2024, 12, 1967.

16. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.T.; Rocktäschel, T. Retrieval-augmented generation for knowledge-intensive NLP tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

17. Karpukhin, V.; Oguz, B.; Min, S.; Lewis, P.S.; Wu, L.; Edunov, S.; Chen, D.; Yih, W.T. Dense Passage Retrieval for Open-Domain Question Answering. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, 16–20 November 2020; pp. 6769–6781.

18. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

19. Sarthi, P.; Abdullah, S.; Tuli, A.; Khanna, S.; Goldie, A.; Manning, C.D. RAPTOR: Recursive abstractive processing for tree-organized retrieval. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

20. Edge, D.; Trinh, H.; Cheng, N.; Bradley, J.; Chao, A.; Mody, A.; Truitt, S.; Larson, J. From local to global: A Graph RAG approach to query-focused summarization. arXiv 2024, arXiv:2404.16130.

21. Kim, H.; Kim, B.H. NexusSum: Hierarchical LLM Agents for Long-Form Narrative Summarization. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025; pp. 10120–10157.

22. Asai, A.; Wu, Z.; Wang, Y.; Sil, A.; Hajishirzi, H. Self-RAG: Learning to Retrieve, Generate, and Critique Through Self-Reflection. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR), Vienna, Austria, 7–11 May 2024.

23. Yan, S.Q.; Gu, J.C.; Zhu, Y.; Ling, Z.H. Corrective Retrieval Augmented Generation. arXiv 2024, arXiv:2401.15884.

24. Li, L.; Chen, W.; Li, J.; Chen, L. Relation-R1: Cognitive chain-of-thought guided reinforcement learning for unified relational comprehension. arXiv 2025, arXiv:2504.14642.

25. Fabbri, A.; Li, I.; She, T.; Li, S.; Radev, D. Multi-News: A Large-Scale Multi-Document Summarization Dataset and Abstractive Hierarchical Model. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 1074–1084.

26. Over, P.; Yen, J. An introduction to DUC-2004; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2004.

27. Lu, Y.; Dong, Y.; Charlin, L. Multi-XScience: A large-scale dataset for extreme multi-document summarization of scientific articles. arXiv 2020, arXiv:2010.14235.

28. Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

29. Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating text generation with BERT. arXiv 2019, arXiv:1904.09675.

30. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

31. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

32. Ryu, S.; Do, H.; Kim, Y.; Lee, G.; Ok, J. Multi-Dimensional Optimization for Text Summarization via Reinforcement Learning. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 5858–5871.

| Dimension | Path | Prompt Template |
|---|---|---|
| Background | Depth | Role: You are a professional summarization analyst. Task: Your goal is to provide the essential background and context. Input: Based on the provided documents: [Document Context]. Action: Summarize foundational background (key entities, timelines, locations). Do not describe the event itself, only the context. Produce a concise paragraph. |
| Background | Breadth | Role: You are a professional summarization analyst. Task: Your goal is to provide the essential background and context. Input: Based on the provided documents: [Document Context]. Action: Summarize foundational background (key entities, timelines, locations). Do not describe the event itself, only the context. Produce a concise paragraph. |
| Content | Depth | Role: You are a professional summarization analyst. Task: Your goal is to detail the core events, building upon established context. Input: You are given: 1. Source Documents: [Document Context]. 2. Previously Generated Background: [Generated Background]. Action: Using Source Documents, synthesize core facts/developments. Use ‘Previously Generated Background’ as a starting point and ensure logical flow. Focus only on the main event. Produce a concise paragraph. |
| Content | Breadth | Role: You are a professional summarization analyst. Task: Your goal is to independently detail the core events. Input: Based on the provided documents: [Document Context]. Action: Synthesize core facts and key developments from the documents. Focus only on the main event. Produce a concise paragraph. |
| Impact | Depth | Role: You are a professional summarization analyst. Task: Your goal is to analyze consequences based on prior analysis. Input: You are given prior analysis: 1. Background: [Generated Background]. 2. Core Content: [Generated Content]. 3. Source Documents: [Document Context]. Action: Based on prior analysis, analyze immediate and long-term impacts (e.g., social, economic) from Source Documents. Analysis must be a direct consequence of ‘Core Content’. Produce a concise analytical paragraph. |
| Impact | Breadth | Role: You are a professional summarization analyst. Task: Your goal is to independently analyze the consequences of an event. Input: Based on the provided documents: [Document Context]. Action: Analyze immediate and long-term impacts of the event. Identify consequences across domains (e.g., social, economic) from the documents. Produce a concise analytical paragraph. |
| Trends | Depth | Role: You are a professional summarization analyst. Task: Your goal is to forecast future developments based on a complete analysis. Input: You are given the complete analysis: 1. Background: [Generated Background]. 2. Core Content: [Generated Content]. 3. Impact Analysis: [Generated Impact]. 4. Source Documents: [Document Context]. Action: Based on the full analysis, forecast future trends. Your forecast must be logically derived from the ‘Impact Analysis’. Produce a concise forecast. |
| Trends | Breadth | Role: You are a professional summarization analyst. Task: Your goal is to independently forecast future developments. Input: Based on the provided documents: [Document Context]. Action: Forecast future trends and developments related to the event described in the documents. Produce a concise forecast. |

| Prompt Name | Purpose | Prompt Template |
|---|---|---|
| CRITIQUE_TMPL | Generate Qualitative Critique | Role: You are an expert fact-checker and summarization evaluator. Input: 1. Source Documents: [Source Documents] 2. Generated Summary for [Dimension]: [Content to Evaluate] Criteria: Compare the Summary against the Source Documents. Check for: - Factual Accuracy: Are there any hallucinations? - Completeness: Is key information for this dimension missing? Action: Provide a brief, critical analysis of the summary’s flaws. If it is perfect, state that. |
| SCORING_TMPL | Convert Critique to Score [0, 1] | Role: You are a strict scoring engine. Input: 1. Generated Summary: [Content to Evaluate] 2. Expert Critique: [Text Critique] Task: Based strictly on the critique, assign a quality score in [0, 1]. - 1.0: Perfect, no errors. - <0.8: Requires regeneration (contains errors or omissions). Output: Output only the score in this exact format: “Score: [value]”. |
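SCORING_TMPL asks for output in the fixed form “Score: [value]”, but the reply still has to be parsed before the 0.8 threshold can be applied. A defensive parser might look like the sketch below. Treating an unparseable or out-of-range reply as a failure is an assumed policy; the template does not prescribe it.

```python
import re
from typing import Optional

# Accepts "Score: 0.85" and "Score: [0.85]"; the number itself is validated below.
SCORE_RE = re.compile(r"Score:\s*\[?\s*(\d+(?:\.\d+)?)")


def parse_score(reply: str) -> Optional[float]:
    """Extract the score; return None if it is absent or outside [0, 1]."""
    match = SCORE_RE.search(reply)
    if match is None:
        return None
    value = float(match.group(1))
    return value if 0.0 <= value <= 1.0 else None


def needs_regeneration(reply: str, threshold: float = 0.8) -> bool:
    """Regenerate on a sub-threshold score or on unparseable output (assumed policy)."""
    score = parse_score(reply)
    return score is None or score < threshold
```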

| Prompt Name | Purpose | Prompt Template |
|---|---|---|
| DIAGNOSIS_PROMPT | Formulate Search Query | Role: You are a diagnostic assistant focused on information retrieval. Input: 1. Failed Summary Draft: [Failed Content] 2. Expert Critique: [Text Critique] Task: Analyze the critique to identify exactly what information is missing or factually incorrect. Formulate a specific, targeted search query to retrieve this missing evidence from the knowledge base. Output: Output only the search query string. |
| REGENERATION_PROMPT | Regenerate with Context | Role: You are a senior editor performing corrective summarization. Input: 1. Original Draft: [Failed Content] 2. Identified Issues: [Text Critique] 3. New Evidence: [Retrieved Snippets] Task: Rewrite the draft to address the identified issues. You must incorporate the “New Evidence” to fix factual errors or fill information gaps. Maintain the original style but ensure accuracy and completeness. Output: The revised, optimized summary paragraph. |

| Name | Purpose | Prompt Template |
|---|---|---|
| FUSION | Integration | Role: Senior Editor. Input: Draft 1 (Depth-Focused): []; Draft 2 (Breadth-Focused): []. Task: Synthesize a final summary. Use Draft 1 as the primary structural foundation (approx. 70% weight) to ensure logical coherence. Integrate unique, complementary details from Draft 2 (approx. 30% weight) to enhance coverage without disrupting the narrative flow. Avoid redundancy. Output: The final polished summary. |

| Models | ROUGE-1 (M-N) | ROUGE-1 (DUC) | ROUGE-1 (M-X) | ROUGE-2 (M-N) | ROUGE-2 (DUC) | ROUGE-2 (M-X) | ROUGE-L (M-N) | ROUGE-L (DUC) | ROUGE-L (M-X) | BERTScore (M-N) | BERTScore (DUC) | BERTScore (M-X) | METEOR (M-N) | METEOR (DUC) | METEOR (M-X) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TextRank | 41.35 | 28.15 | 19.88 | 15.62 | 7.11 | 3.98 | 20.09 | 12.81 | 17.24 | 52.43 | 31.50 | 52.81 | 19.15 | 11.23 | 16.90 |
| ProCluster | 45.83 | 32.47 | 24.61 | 18.07 | 9.69 | 5.81 | 23.03 | 14.94 | 21.60 | 54.72 | 35.95 | 58.24 | 22.70 | 13.16 | 20.95 |
| PRIMERA | 48.70 | 34.20 | 26.10 | 20.50 | 11.50 | 6.80 | 25.80 | 15.40 | 22.50 | 56.61 | 38.10 | 59.10 | 24.50 | 15.40 | 22.10 |
| GraphRAG | 49.55 | 34.82 | 26.85 | 21.28 | 11.91 | 7.15 | 27.10 | 15.73 | 22.93 | 57.31 | 38.95 | 59.85 | 26.20 | 15.84 | 22.82 |
| RAPTOR | 49.85 | 34.71 | 27.85 | 21.50 | 11.82 | 7.03 | 27.55 | 15.63 | 22.81 | 57.15 | 39.02 | 59.68 | 26.35 | 16.05 | 22.69 |
| NexusSum | 49.38 | 34.50 | 27.15 | 21.65 | 11.75 | 7.20 | 27.40 | 15.80 | 23.10 | 57.25 | 38.80 | 60.10 | 26.50 | 15.95 | 23.05 |
| MFOG | 51.08 | 36.12 | 28.51 | 22.76 | 12.48 | 8.21 | 28.91 | 16.83 | 24.12 | 58.21 | 40.16 | 60.15 | 28.03 | 16.98 | 23.76 |

M-N: Multi-News; DUC: DUC-2004; M-X: Multi-XScience.
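The margins in the results table can be checked mechanically. The snippet below transcribes the scores and, for every metric/dataset column, finds the strongest baseline and MFOG's lead over it. The function name is illustrative.

```python
from typing import Dict, List, Tuple

METRICS = ["ROUGE-1", "ROUGE-2", "ROUGE-L", "BERTScore", "METEOR"]
DATASETS = ["M-N", "DUC", "M-X"]
# Column order matches the table: each metric spans M-N, DUC, M-X.
COLUMNS: List[str] = [f"{m} ({d})" for m in METRICS for d in DATASETS]

# Values transcribed from the results table, in column order.
SCORES: Dict[str, List[float]] = {
    "TextRank":   [41.35, 28.15, 19.88, 15.62, 7.11, 3.98, 20.09, 12.81, 17.24, 52.43, 31.50, 52.81, 19.15, 11.23, 16.90],
    "ProCluster": [45.83, 32.47, 24.61, 18.07, 9.69, 5.81, 23.03, 14.94, 21.60, 54.72, 35.95, 58.24, 22.70, 13.16, 20.95],
    "PRIMERA":    [48.70, 34.20, 26.10, 20.50, 11.50, 6.80, 25.80, 15.40, 22.50, 56.61, 38.10, 59.10, 24.50, 15.40, 22.10],
    "GraphRAG":   [49.55, 34.82, 26.85, 21.28, 11.91, 7.15, 27.10, 15.73, 22.93, 57.31, 38.95, 59.85, 26.20, 15.84, 22.82],
    "RAPTOR":     [49.85, 34.71, 27.85, 21.50, 11.82, 7.03, 27.55, 15.63, 22.81, 57.15, 39.02, 59.68, 26.35, 16.05, 22.69],
    "NexusSum":   [49.38, 34.50, 27.15, 21.65, 11.75, 7.20, 27.40, 15.80, 23.10, 57.25, 38.80, 60.10, 26.50, 15.95, 23.05],
    "MFOG":       [51.08, 36.12, 28.51, 22.76, 12.48, 8.21, 28.91, 16.83, 24.12, 58.21, 40.16, 60.15, 28.03, 16.98, 23.76],
}


def margin_over_best_baseline(
    scores: Dict[str, List[float]], target: str = "MFOG"
) -> Dict[str, Tuple[str, float]]:
    """For each column, return (strongest baseline, target score minus its score)."""
    margins: Dict[str, Tuple[str, float]] = {}
    for i, col in enumerate(COLUMNS):
        best = max((m for m in scores if m != target), key=lambda m: scores[m][i])
        margins[col] = (best, round(scores[target][i] - scores[best][i], 2))
    return margins
```

On these numbers MFOG leads in all 15 columns. Its narrowest margin is 0.05 BERTScore points over NexusSum on Multi-XScience, and its widest is 1.53 METEOR points over NexusSum on Multi-News.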

| Models | Coherence (Avg) | Coherence (SD) | Consistency (Avg) | Consistency (SD) | Fluency (Avg) | Fluency (SD) | Relevance (Avg) | Relevance (SD) | Overall (Avg) | Overall (SD) |
|---|---|---|---|---|---|---|---|---|---|---|
| TextRank | 6.75 | 0.18 | 6.82 | 0.16 | 6.78 | 0.19 | 6.79 | 0.17 | 6.77 | 0.18 |
| ProCluster | 8.02 | 0.15 | 8.15 | 0.14 | 8.05 | 0.16 | 8.08 | 0.15 | 8.09 | 0.16 |
| PRIMERA | 8.31 | 0.13 | 8.40 | 0.12 | 8.35 | 0.14 | 8.36 | 0.13 | 8.36 | 0.14 |
| GraphRAG | 8.55 | 0.11 | 8.82 | 0.10 | 8.58 | 0.11 | 8.79 | 0.11 | 8.61 | 0.11 |
| RAPTOR | 8.65 | 0.10 | 8.75 | 0.08 | 8.68 | 0.12 | 8.70 | 0.09 | 8.67 | 0.12 |
| NexusSum | 8.40 | 0.08 | 8.80 | 0.08 | 8.30 | 0.07 | 8.85 | 0.08 | 8.86 | 0.08 |
| MFOG | 9.35 | 0.06 | 9.01 | 0.06 | 9.20 | 0.07 | 9.31 | 0.06 | 9.16 | 0.07 |

| Models | ROUGE-1 | ROUGE-2 | ROUGE-L | BERTScore | METEOR |
|---|---|---|---|---|---|
| Standard RAG | 47.95 | 20.25 | 25.10 | 55.42 | 24.30 |
| W/O Depth | 48.80 | 21.05 | 26.20 | 56.10 | 25.05 |
| W/O Parallel | 49.60 | 21.95 | 27.20 | 56.80 | 26.15 |
| W/O Fusion | 50.15 | 22.40 | 27.05 | 57.55 | 27.00 |
| W/O RAG | 48.85 | 20.85 | 26.80 | 56.15 | 25.10 |
| W/O Cognitive Chains | 50.10 | 21.60 | 26.15 | 57.60 | 26.70 |
| MFOG (Full) | 51.08 | 22.76 | 28.91 | 58.21 | 28.03 |
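The ablation table is easier to compare when each variant is read as a point drop relative to the full model. The helper below computes those drops and reports which single-component ablation hurts a given metric most. The names are illustrative, and the values are transcribed from the table.

```python
from typing import Dict, List

METRICS = ["ROUGE-1", "ROUGE-2", "ROUGE-L", "BERTScore", "METEOR"]

ABLATION: Dict[str, List[float]] = {
    "Standard RAG":         [47.95, 20.25, 25.10, 55.42, 24.30],
    "W/O Depth":            [48.80, 21.05, 26.20, 56.10, 25.05],
    "W/O Parallel":         [49.60, 21.95, 27.20, 56.80, 26.15],
    "W/O Fusion":           [50.15, 22.40, 27.05, 57.55, 27.00],
    "W/O RAG":              [48.85, 20.85, 26.80, 56.15, 25.10],
    "W/O Cognitive Chains": [50.10, 21.60, 26.15, 57.60, 26.70],
}
FULL: List[float] = [51.08, 22.76, 28.91, 58.21, 28.03]


def drops(
    variants: Dict[str, List[float]] = ABLATION, full: List[float] = FULL
) -> Dict[str, Dict[str, float]]:
    """Points lost by each variant relative to the full model, per metric."""
    return {
        name: {m: round(f - v, 2) for m, f, v in zip(METRICS, full, vals)}
        for name, vals in variants.items()
    }


def largest_drop(metric: str, table: Dict[str, Dict[str, float]]) -> str:
    """Single-component ablation ("W/O ...") whose removal costs `metric` the most."""
    candidates = {n: d for n, d in table.items() if n.startswith("W/O")}
    return max(candidates, key=lambda n: candidates[n][metric])
```

The answer depends on the metric. Removing the depth path costs the most ROUGE-1 (2.28 points), removing RAG costs the most ROUGE-2 (1.91), and removing the Cognitive Chains costs the most ROUGE-L (2.76).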

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, C.; Zhang, L.; Zhang, J.; Zheng, Q. Cognitive Chain-Based Dual Fusion Framework for Multi-Document Summarization. Electronics 2025, 14, 4545. https://doi.org/10.3390/electronics14224545
