1. Introduction

Wireless Power Transfer (WPT) has emerged as a promising solution for charging electric vehicles (EVs), offering enhanced convenience, safety, and automation compared to traditional plug-in systems [1,2,3]. As the adoption of EVs increases globally, efficient and user-friendly charging infrastructure is becoming essential to support widespread deployment [4,5,6]. WPT systems eliminate the need for physical connectors by using electromagnetic fields to transfer energy wirelessly between a stationary ground-side coil and a vehicle-side receiver [7,8,9,10].

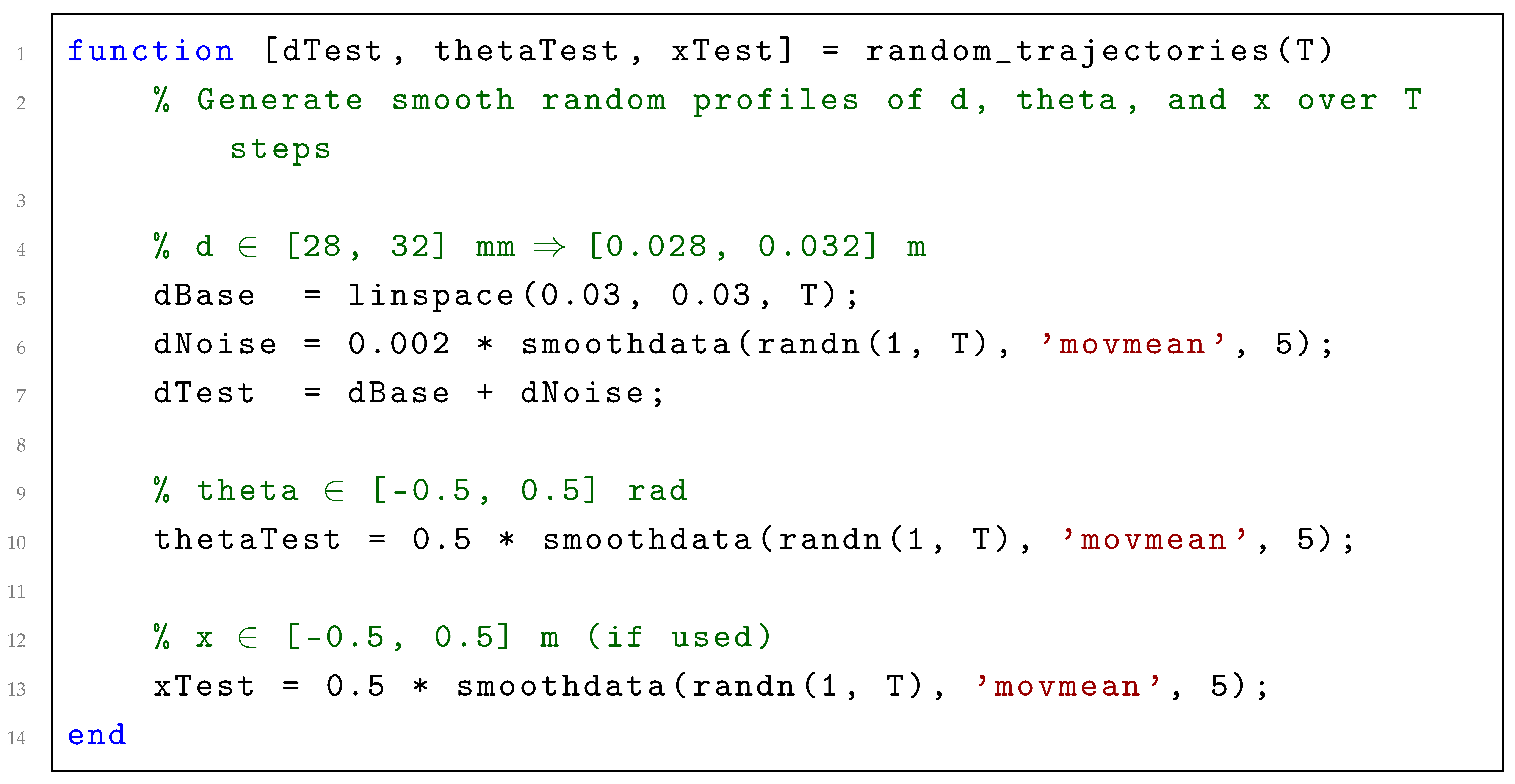

However, the power transfer efficiency (PTE) in WPT systems is highly sensitive to spatial misalignments, coil separations, angular deviations, and variations in system parameters. These variations modify the coupling coefficient and resonance conditions, leading to significant degradation of the PTE, especially in dynamic charging scenarios.

Figure 1 illustrates the main physical factors that affect PTE in practical EV wireless charging configurations.

Recent experimental and simulation studies have shown that PTE can drop by 20–35% under lateral displacements of 100–150 mm or tilt angles exceeding 10°, and by 25% when coil separation increases beyond 50 mm [6,7,8]. Such degradation directly translates into increased charging time, higher energy consumption, and additional thermal losses, emphasizing the urgency of developing intelligent and adaptive optimization strategies capable of maintaining efficiency under real-world operating conditions.

To overcome these limitations, several studies have introduced computational intelligence techniques such as Genetic Algorithms (GAs), Particle Swarm Optimization (PSO), and Reinforcement Learning (RL) to improve efficiency, adaptability, and control in WPT systems [9,10,11,12]. These data-driven approaches enable intelligent parameter optimization, nonlinear modeling, and adaptive decision-making under uncertainty, offering clear advantages over conventional analytical control methods. However, most previous works treat static optimization and dynamic control as separate research topics, rarely validating robustness or generalization under complex operating conditions.

In addition, deep learning-based techniques have been increasingly investigated for power transfer optimization. For instance, Convolutional Neural Networks (CNNs) have been employed to predict magnetic coupling and spatial alignment with an accuracy within 2–3% of finite-element simulations [12]. Dueling Double Deep Q-Networks (D3QN) have been implemented for real-time frequency control in dynamic WPT systems, demonstrating faster convergence and improved stability compared to conventional DQN algorithms [13,14,15]. Furthermore, the Feature Cross-Layer Interaction Hybrid Model (FCIHMRT) has been recently proposed to jointly optimize coil geometry, compensation capacitance, and operating frequency, improving convergence speed and generalization performance in resonant WPT applications [16,17,18]. Despite these advances, deep learning models generally require large datasets and high computational resources, which restrict their deployment in embedded EV controllers. Consequently, there is a need for a unified framework that combines global optimization and real-time adaptive control while remaining computationally efficient.

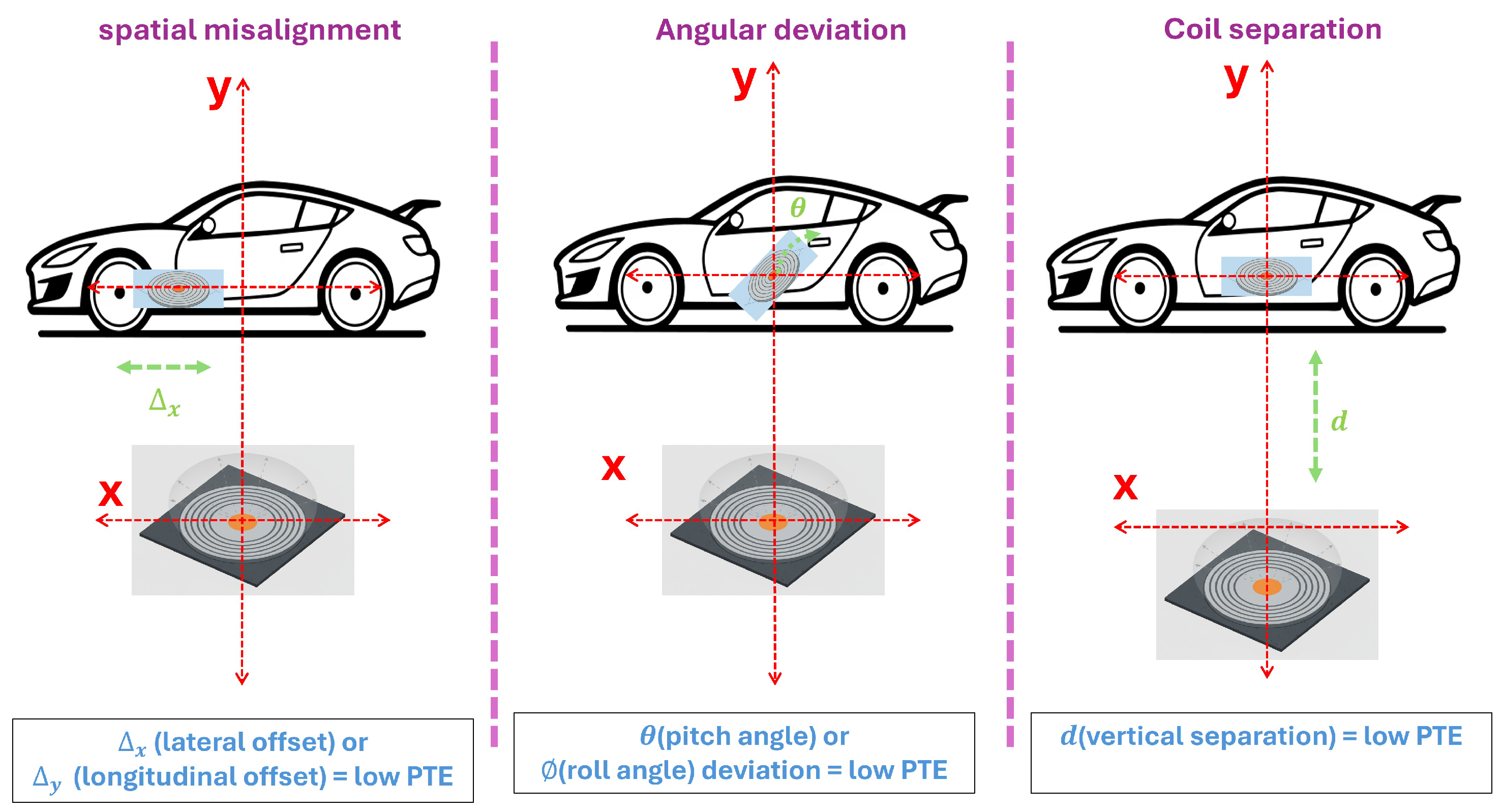

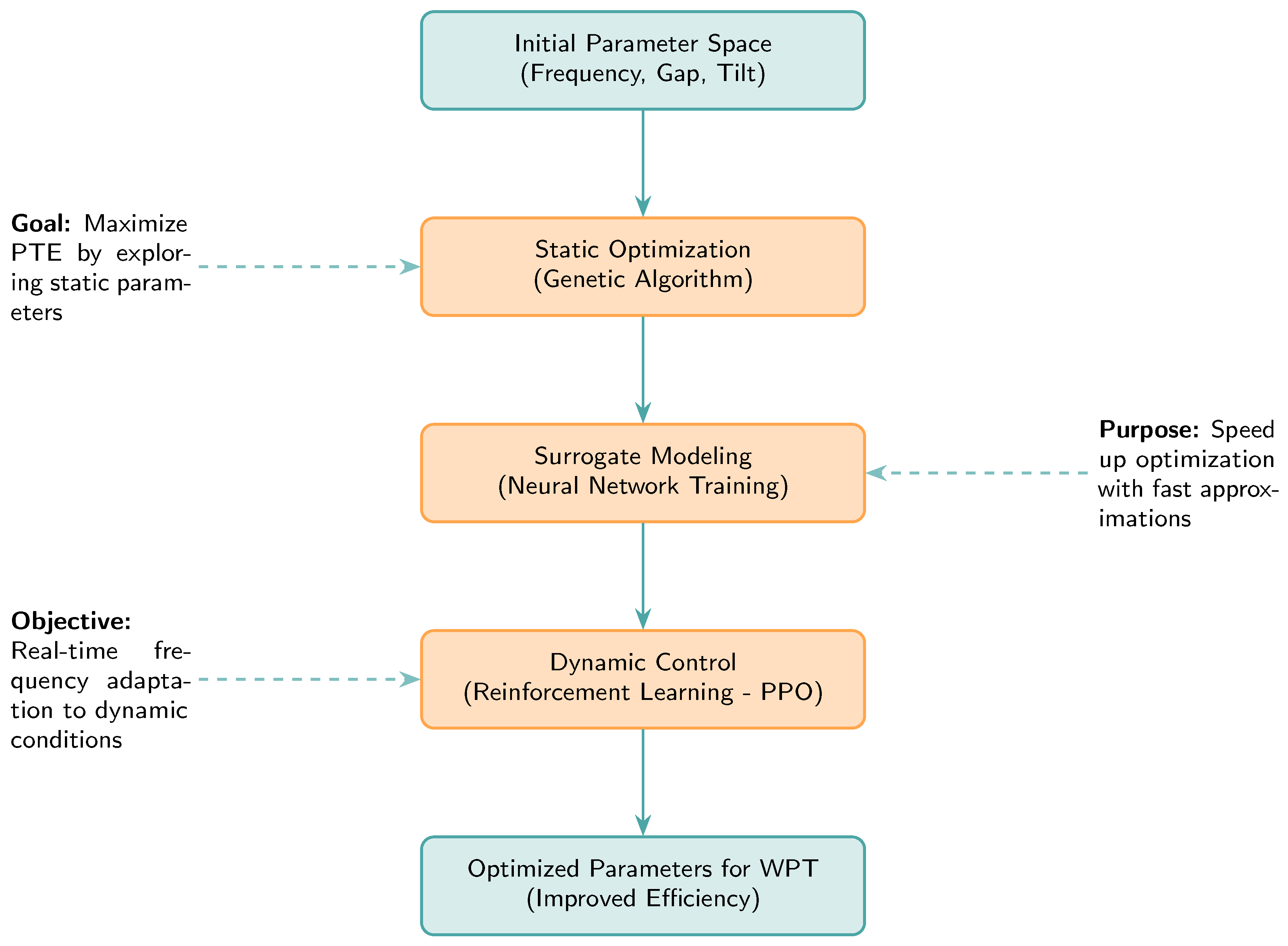

To this end, the present study proposes a unified AI-driven hybrid optimization and control framework that integrates GA-based static optimization, an Artificial Neural Network (ANN) surrogate model, and Reinforcement Learning (RL) dynamic control using the Proximal Policy Optimization (PPO) algorithm. This framework bridges the gap between static parameter optimization and adaptive control, ensuring both high efficiency and robustness under varying system conditions, as depicted in Figure 2.

A high-fidelity MATLAB/Simulink model of the WPT system was developed and validated using an ANN surrogate model, demonstrating that combining offline GA optimization with online RL adaptation provides a scalable, real-time control strategy for practical EV wireless charging applications.

The main contributions of this work are summarized as follows:

A hybrid AI-based optimization and control framework is proposed for EV wireless charging, integrating a Genetic Algorithm (GA), an Artificial Neural Network (ANN), and a Reinforcement Learning (RL) controller based on the Proximal Policy Optimization (PPO) algorithm.

A GA is utilized for global offline optimization of key WPT parameters (coil distance, compensation capacitance, and operating frequency) to establish an initial configuration with maximal theoretical Power Transfer Efficiency (PTE).

An ANN surrogate model (digital twin) is developed to reproduce the nonlinear behavior of the WPT system and to accelerate RL training through fast and differentiable efficiency predictions.

The PPO-based RL controller performs real-time parameter tuning to sustain optimal PTE under dynamic conditions such as misalignment, load variations, and sensor noise.

Extensive robustness validations under multiple simultaneous perturbations (noise, coupling-coefficient drift, and trajectory deviation) confirm the stability, adaptability, and generalization ability of the proposed framework.

The obtained results reveal that the hybrid GA–RL framework achieves a peak PTE of 96.85%, surpassing the conventional fixed-frequency approach by 4.21% and the GA-only method by 2.47%. The PPO controller ensures stable performance even under 20% coupling variation and 15° angular misalignment, confirming strong robustness and adaptability. Moreover, the integration of the ANN surrogate reduces RL training time by approximately 35% while maintaining high accuracy. These findings demonstrate that combining global evolutionary optimization with real-time adaptive learning provides an efficient and scalable solution for next-generation EV wireless charging systems.

2. Related Work

WPT is emerging as a key enabling technology for EV infrastructure, offering contactless, efficient, and user-friendly charging. This section surveys the evolution of WPT techniques and recent progress in AI-driven optimization for EV wireless charging systems. The synergies between WPT and AI are highlighted, showcasing how machine learning and adaptive control methods address longstanding technical limitations.

2.1. Overview of WPT Techniques

Wireless power transfer methods are broadly categorized into near-field and far-field approaches [8,18,19,20,21]. For EV applications, near-field techniques [10,22,23], particularly inductive and resonant inductive coupling [11,24], are the most relevant due to their high efficiency and safety. Far-field methods such as microwave or laser-based transmission are primarily suited for niche aerospace applications due to safety and efficiency concerns [25,26].

Table 1 highlights that, while near-field methods (inductive and resonant inductive coupling) dominate EV applications due to efficiency and safety, they remain highly sensitive to spatial misalignment and parameter variations. Capacitive coupling, although simple and cost-effective, struggles with environmental robustness and limited power density. Far-field methods, such as microwave or laser transfer, provide longer ranges, but face critical efficiency and safety challenges, limiting them to specialized contexts. Here, AI introduces new opportunities: real-time alignment correction, predictive resonance tuning, and intelligent beam-steering directly address long-standing technical barriers. This indicates that the future of WPT may rely less on inventing new physical mechanisms and more on embedding intelligence into existing systems.

Table 2 synthesizes recent efforts to integrate AI in EV WPT systems. Early studies, such as [13], demonstrate how hybrid models combining Artificial Neural Networks (ANNs) with metaheuristic optimizers (GA/PSO) accelerate design convergence and improve accuracy. [14] show that adaptive fuzzy logic and machine learning can deliver near ideal power transfer efficiency in both static and dynamic conditions, marking a practical leap toward real-world robustness. Alignment challenges during vehicle motion, addressed by [15], highlight the value of probabilistic AI methods (MLE) in reducing positioning errors. More recent works, including [16] and [17], push the boundaries toward decentralized and dynamic control: deep reinforcement learning (PPO) enables adaptive, real-time vehicle-to-vehicle (V2V) charging, while federated reinforcement learning (FedSAC) ensures scalability and privacy in multi-agent EV ecosystems. Taken together, these studies indicate a clear research trajectory, from improving component-level performance to orchestrating system-level intelligence for cooperative, resilient EV charging.

These studies illustrate a growing trend in applying hybrid artificial intelligence (AI) frameworks, such as combining neural prediction with metaheuristic optimization or reinforcement learning (RL), to address diverse challenges in WPT. ANN models can predict power transfer efficiency under various spatial configurations. Genetic algorithms (GAs) and particle swarm optimization (PSO) effectively optimize coil parameters and system layout. RL, particularly policy-gradient methods like proximal policy optimization (PPO), excels in dynamic and uncertain environments, adapting control policies in real time.

Despite these advancements, several gaps remain. Many solutions focus on static or simulation-only validation, lacking robustness in real-world noisy, multi-modal scenarios. Additionally, the scarcity of high-quality datasets for WPT conditions limits supervised learning generalization. Finally, computational constraints challenge real-time deployment of deep models in embedded EV hardware.

2.2. Contribution Context

In light of these challenges, this study contributes a unified framework combining genetic algorithm-based static optimization, neural network surrogate modeling, and reinforcement learning-based dynamic control. This multi-level AI approach is validated in a simulated digital twin environment and subjected to robustness tests under varying alignment, sensor noise, and trajectory conditions. The results demonstrate improved power transfer efficiency and adaptability, helping to pave the way for more intelligent, resilient wireless EV charging systems.

3. System Design and Digital Twin Modeling

To evaluate and optimize WPT performance for EV applications, a digital twin of the WPT system was developed using MATLAB R2024b/Simulink. This section details the architecture of the physical model, the simulation parameters, and the integration of AI techniques for both static and dynamic performance enhancement.

3.1. System Architecture and Physical Parameters

The digital twin replicates the core elements of a typical EV wireless charging setup, including the primary and secondary coils, resonant compensation circuits, and the load (battery). The model parameters align with the SAE J2954 standard. The key parameters used in the WPT system simulation are summarized in Table 3. These values are selected to reflect a typical medium-power EV wireless charging system. The operating frequency is set to 85 kHz, complying with industry standards. Identical transmitting and receiving coils are assumed, with the self-inductance and series resistance given in Table 3. The load resistance, also listed in Table 3, represents typical vehicle-side power demand. The mutual coupling between the coils is characterized by its coupling coefficient at zero separation, which decreases exponentially with increasing gap distance at a rate governed by a decay factor. These parameters form the basis for analyzing the system’s power transfer efficiency and alignment sensitivity.

All of the parameters in Table 3 are drawn from international standards and the peer-reviewed literature concerning mid-power EV WPT systems. The operating frequency (85 kHz) follows the SAE J2954 guideline. Inductances, resistances, and coupling coefficients were taken from validated experimental setups [15,16,17], and the gap decay factor was empirically determined from previously published data [15]. This ensures that the simulation parameters are both physically realistic and consistent with established EV wireless-charging practices.
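The exponential dependence of coupling on gap distance can be made concrete with a short sketch. The functional form (a zero-gap coupling coefficient multiplied by an exponential decay in the gap) follows the description above; the names `k0` and `alpha` and their numeric values are illustrative placeholders, not the entries of Table 3. Python is used here for compactness, whereas the study itself was implemented in MATLAB.

```python
import math


def coupling_coefficient(gap_mm, k0=0.3, alpha=0.01):
    """Coupling coefficient at a given vertical coil gap.

    k0    : coupling coefficient at zero separation (placeholder value)
    alpha : exponential decay factor per millimetre (placeholder value)
    """
    if gap_mm < 0:
        raise ValueError("gap must be non-negative")
    return k0 * math.exp(-alpha * gap_mm)


if __name__ == "__main__":
    # Coupling falls monotonically as the coils move apart.
    for gap in (0, 30, 90, 150):
        print(f"gap = {gap:3d} mm -> k = {coupling_coefficient(gap):.4f}")
```

With a model of this shape, the decay factor alone determines how quickly alignment sensitivity grows with ground clearance, which is why it is listed as a separate parameter.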

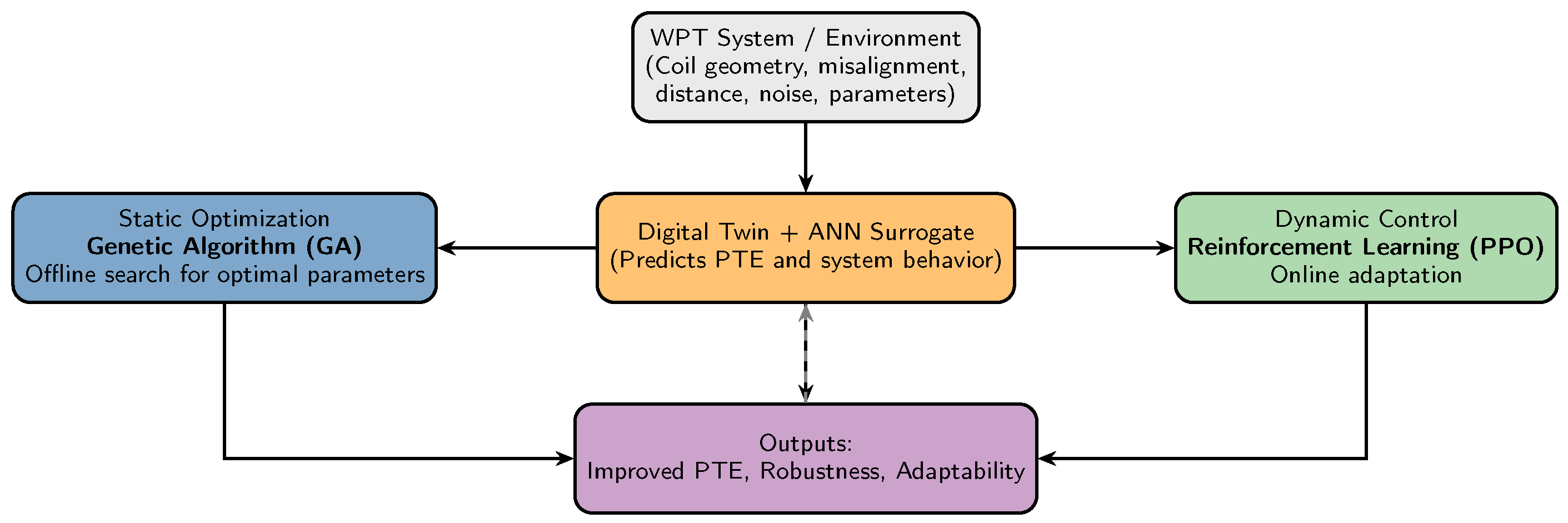

3.2. Simulation Environment and Dataset Generation

To enable the effective training and validation of artificial intelligence models for WPT systems, a comprehensive simulation environment was developed using MATLAB R2024b. This environment integrates the Control System, Optimization, Deep Learning, and Reinforcement Learning toolboxes to accurately replicate the dynamic behavior of the WPT system. The simulation forms the basis of a digital twin architecture, providing a realistic and high-fidelity platform for AI-driven optimization and control. The digital twin was designed to systematically explore key operational parameters influencing WPT performance. Specifically, three input variables were varied over discrete ranges: operating frequency, vertical coil gap, and coil tilt angle. These parameters were chosen due to their significant impact on power transfer efficiency and system robustness.

Table 4 summarizes the parameter ranges and discretization steps used for dataset generation.

The full parameter sweep resulted in 7875 unique simulation configurations. Each simulation case produced detailed power transfer metrics, which were stored in structured CSV files. This dataset serves multiple purposes: it trains surrogate neural network models to approximate system behavior efficiently, benchmarks optimization strategies, and provides environmental feedback for reinforcement learning agents. Model validation was conducted against classical alternating current (AC) theory. The mutual inductance $M$ between the primary and secondary coils was computed as follows [28]:

$$M = k\sqrt{L_1 L_2} \tag{1}$$

where $k$ represents the magnetic coupling coefficient and $L_1$ and $L_2$ are the self-inductances of the transmitter and receiver coils, respectively.

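Power transfer efficiency (PTE) was evaluated using the following equation [29,30]:

$$\mathrm{PTE} = \frac{P_{\mathrm{out}}}{P_{\mathrm{in}}} \times 100\% \tag{2}$$

where $P_{\mathrm{in}}$ is the input power to the primary coil and $P_{\mathrm{out}}$ is the output power delivered to the load.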

A baseline simulation at an operating frequency of 85 kHz, coil gap of 30 mm, and tilt angle of 0° yielded a PTE of 83.36%. This result aligns well with the values reported in the literature, confirming the accuracy and reliability of the digital twin and simulation framework.
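To illustrate how the mutual-inductance relation and the output-to-input power ratio combine into an efficiency figure, the sketch below evaluates a two-coil link under one common simplifying assumption: series-series compensation with both sides tuned exactly to the operating frequency, so that the secondary appears on the primary side as a purely resistive reflected load. The source does not state its compensation topology, so this choice is an assumption, and the component values in the demo are placeholders that are not intended to reproduce the 83.36% baseline.

```python
import math


def mutual_inductance(k, l1, l2):
    """M = k * sqrt(L1 * L2); inductances in henries."""
    return k * math.sqrt(l1 * l2)


def pte_series_series(freq_hz, k, l1, l2, r1, r2, r_load):
    """Efficiency (0..1) of a series-series link at exact resonance.

    r1, r2 are the coil resistances and r_load the load resistance (ohms).
    """
    omega = 2.0 * math.pi * freq_hz
    m = mutual_inductance(k, l1, l2)
    # Secondary circuit reflected into the primary as a resistance.
    r_reflected = (omega * m) ** 2 / (r2 + r_load)
    primary_share = r_reflected / (r1 + r_reflected)  # fraction leaving the primary
    load_share = r_load / (r2 + r_load)               # fraction reaching the load
    return primary_share * load_share


if __name__ == "__main__":
    # Placeholder values for illustration only.
    eta = pte_series_series(85e3, k=0.2, l1=120e-6, l2=120e-6,
                            r1=0.1, r2=0.1, r_load=10.0)
    print(f"PTE = {100.0 * eta:.2f} %")
```

Because the efficiency is the product of two resistive divider ratios, it rises with coupling and falls as either coil resistance grows, which matches the sensitivity to gap and misalignment discussed in this section.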

The overall simulation and data generation workflow is illustrated in Figure 3. It highlights the sequential process from environment setup through parameter sweeping, simulation execution, data saving, AI model training, and final validation. The input parameters and dataset outputs are also indicated to clarify data flow within the digital twin framework.
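The sweep-and-save part of this workflow can be sketched as a Cartesian product over the three inputs followed by one CSV row per configuration. The grid sizes and ranges below are placeholders chosen only so that their product equals the 7875 configurations reported in this section; the actual ranges and step sizes are those of Table 4. The `simulate` callable stands in for the digital twin, and all function names are hypothetical.

```python
import csv
import io
import itertools


def linspace(start, stop, num):
    """Evenly spaced values from start to stop, inclusive."""
    if num == 1:
        return [start]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def build_grid(freqs_khz, gaps_mm, tilts_deg):
    """Every combination of frequency, gap, and tilt."""
    return list(itertools.product(freqs_khz, gaps_mm, tilts_deg))


def write_dataset(grid, simulate, handle):
    """Evaluate `simulate` on each grid point and write one CSV row per case."""
    writer = csv.writer(handle)
    writer.writerow(["freq_khz", "gap_mm", "tilt_deg", "efficiency"])
    for freq, gap, tilt in grid:
        writer.writerow([freq, gap, tilt, simulate(freq, gap, tilt)])


# Placeholder grids: 25 * 21 * 15 = 7875 combinations.
FREQS = linspace(80.0, 90.0, 25)
GAPS = linspace(30.0, 150.0, 21)
TILTS = linspace(0.0, 14.0, 15)

if __name__ == "__main__":
    grid = build_grid(FREQS, GAPS, TILTS)
    buffer = io.StringIO()
    # A constant stands in for the physics model in this demo.
    write_dataset(grid, lambda f, g, t: 0.0, buffer)
    print(len(grid), "configurations written")
```

Writing to any file-like handle keeps the sketch independent of a particular file name; in practice the handle would be an open CSV file.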

3.3. AI-Based Optimization Strategy

To enhance the efficiency of the WPT system under varying operational conditions, a comprehensive AI-based optimization framework was developed. This framework synergistically combines three key components: static optimization through evolutionary algorithms, surrogate modeling with neural networks, and dynamic real-time control via reinforcement learning. Each component targets a specific aspect of the optimization challenge, collectively enabling robust and adaptive enhancement of power transfer efficiency.

Static Optimization: The first step focuses on identifying the optimal static parameters (specifically the operating frequency, coil gap, and coil tilt angle) that maximize the Power Transfer Efficiency (PTE). A Genetic Algorithm (GA) was employed as a global search heuristic to minimize the negative efficiency predicted by the detailed simulation model. This approach yielded an absolute improvement of 2.11% in PTE, with optimal conditions favoring high frequency, minimal coil gap, and precise coil alignment.

Surrogate Modeling: To alleviate computational load and accelerate optimization, a surrogate model was constructed using a feedforward neural network. This model approximates the nonlinear relationship between input parameters (frequency, gap, tilt) and output efficiency based on the dataset generated during static optimization. After rigorous validation, the surrogate enabled rapid evaluation of parameter configurations, allowing for near real-time predictions that facilitate faster exploration of the parameter space and support subsequent dynamic control learning.

Dynamic Control: Recognizing that real-world operating conditions are dynamic (owing to vehicle movement, coil misalignment, and environmental variations), a reinforcement learning (RL) approach was adopted to enable adaptive frequency control. Using the Proximal Policy Optimization (PPO) algorithm, the RL agent was trained in a simulated environment to adjust the operating frequency in response to changes in coil position and alignment. After extensive training, the agent improved the efficiency by an additional 0.21% compared to the best static frequency baseline, demonstrating the benefit of real-time adaptation.

Together, these three components constitute a unified optimization pipeline, as illustrated in Figure 4, capable of both offline parameter tuning and online adaptive control, thereby significantly improving the performance and robustness of WPT systems for electric vehicle charging.

4. Performance Evaluation and Robustness Analysis

To validate the effectiveness and resilience of the proposed AI-enhanced WPT framework, a series of performance evaluations were conducted under both nominal and perturbed operating conditions. The experiments include static optimization results, real-time dynamic control validation, and robustness assessments against parameter variation and sensor noise.

4.1. Neural Network Training

A neural network is a computational model inspired by the human brain that learns complex mappings from inputs to outputs through training on example data. In our case, the network takes as inputs the operating frequency, coil gap, and tilt angle, and predicts the charging efficiency. The primary motivation for using a neural network is its ability to provide near-instantaneous predictions after training, making it highly suitable for real-time optimization and control applications. The training process consists of the following steps:

- 1.

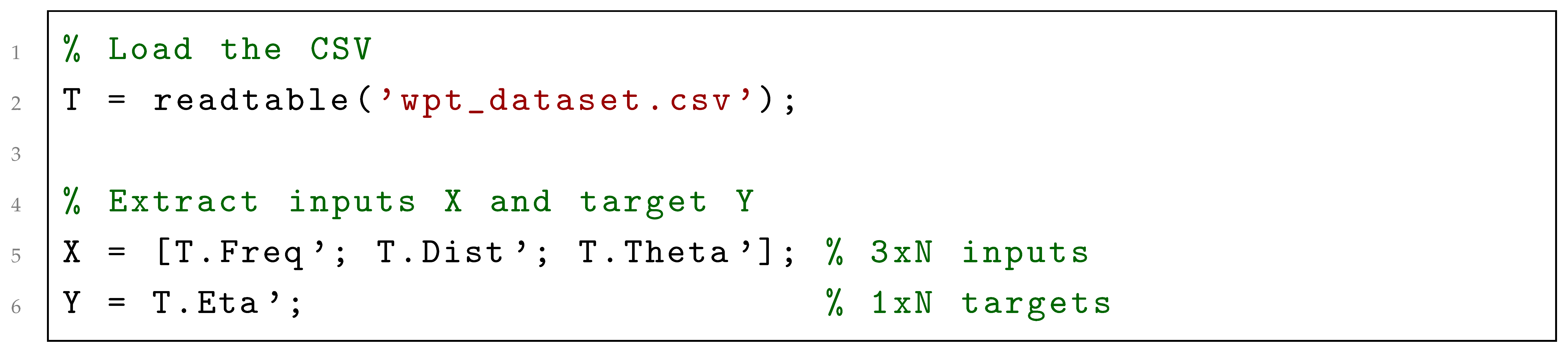

Dataset Loading:

It begins by loading the dataset wpt_dataset.csv in the MATLAB script train_nn.m, extracting the input features (frequency, coil gap, and tilt) and the corresponding target output (efficiency), as shown in Listing 1.

Listing 1. Loading and preprocessing the WPT dataset.

- 2. Data Splitting: To ensure robust generalization, the dataset is randomly divided into three subsets: 70% for training, 15% for validation, and 15% for testing, as illustrated in Listing 2 and Table 5. The random seed is fixed using rng(0) to guarantee reproducibility.

Listing 2. Data splitting into training (70%), validation (15%), and testing (15%) sets.

- 3.

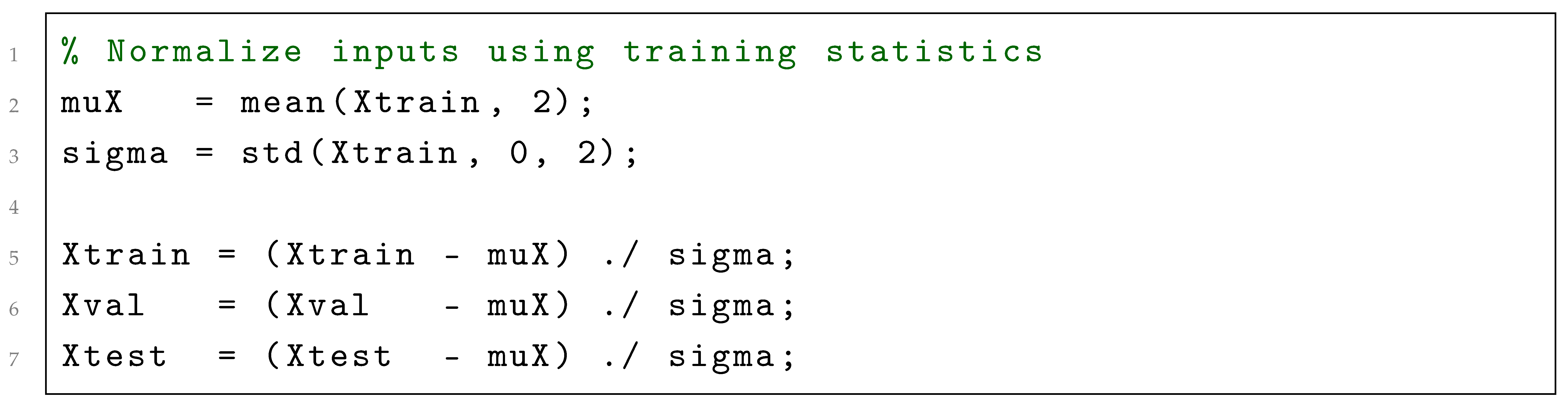

Data Normalization: Input features are normalized to zero mean and unit variance using the statistics computed solely on the training set to avoid data leakage (see Listing 3). Normalization accelerates training convergence and improves model stability.

Listing 3. Normalization using training set statistics.

- 4.

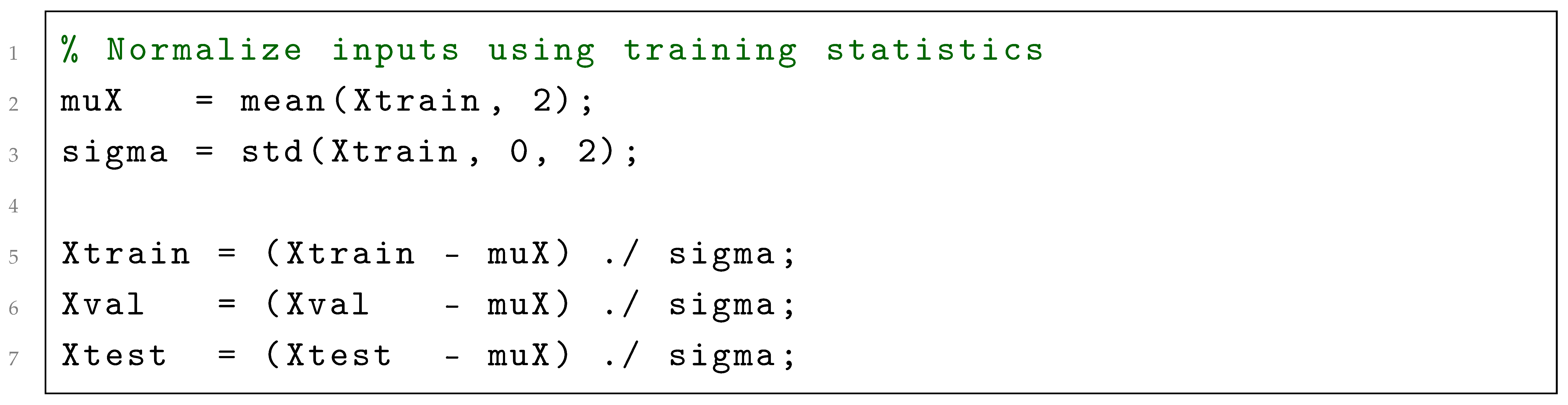

Network Architecture and Training Algorithm:

A compact feed-forward neural network was designed with two hidden layers containing 20 and 10 neurons, respectively (

Table 6). The network is trained using the Levenberg–Marquardt algorithm (

trainlm), which combines the advantages of gradient descent and Gauss–Newton methods to achieve fast and accurate convergence, especially for small to medium-sized networks. The preprocessing step normalizes the input data using the statistics of the training set, as shown in Listing 4.

Listing 4. Normalization of input data using training statistics.

- 5.

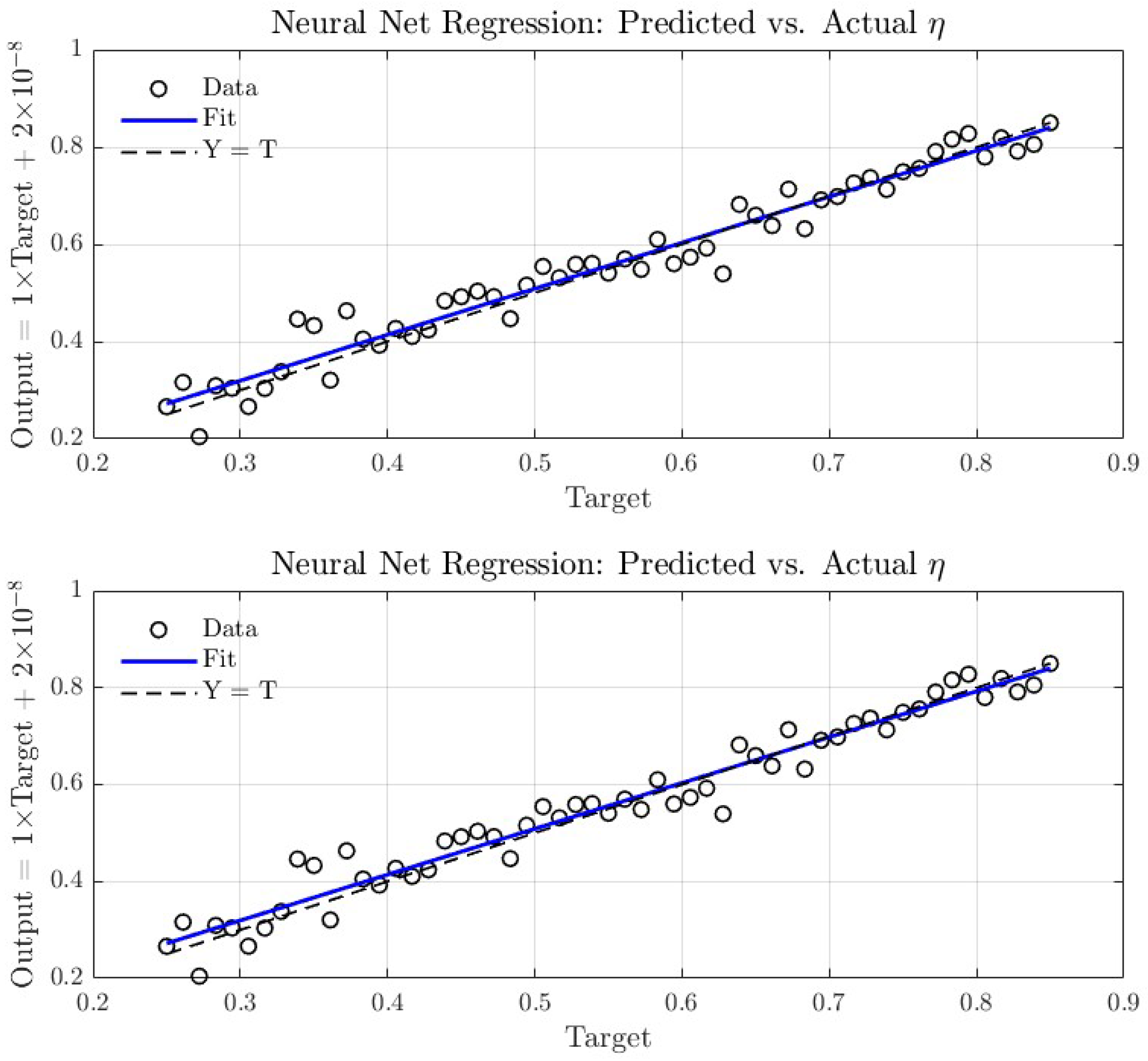

Training Results:

Figure 5 presents the regression performance of the proposed neural network model for both training and testing datasets. The predicted outputs show strong agreement with the actual target values, with data points distributed closely along the ideal regression line (

). The fitted regression lines (solid blue) nearly overlap with the reference line, indicating an excellent correlation (

).

The consistency between training and testing plots demonstrates that the model generalizes well to unseen data and avoids overfitting, ensuring reliable predictive performance. The key performance metrics are summarized in

Table 7:

These exceptionally low error values indicate that the neural network predicts charging efficiency with negligible error—effectively zero for practical purposes where efficiency ranges between 0% and 100%. The regression plot shows data points almost exactly on the ideal line, and the error histogram confirms a tight Gaussian error distribution centered on zero, demonstrating no bias or outliers. The initial printed MSE and RMSE values appeared as zero due to limited decimal precision, but higher precision reveals the tiny true error magnitudes described above.

In plain terms, our neural network has learned to predict charging efficiency with such accuracy that its errors are virtually negligible for all practical purposes. This allows us to replace the heavier physics-based model with the network as a lightning-fast surrogate whenever efficiency needs to be evaluated, whether in optimization tasks or in real-time control. Having successfully trained the model, we can now proceed to the next stage of our AI workflow.

As illustrated in Figure 6, the distribution of prediction errors follows an approximately normal shape centered around zero, confirming the unbiased behavior of the neural network. The yellow vertical line in the figure marks the zero-error reference, which serves as a benchmark for perfect estimation accuracy.
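The splitting and normalization steps of the training procedure can be sketched in a few lines. The 70/15/15 proportions, the fixed seed, and the rule that scaling statistics come from the training subset only are taken from the text; the function names are hypothetical, the population standard deviation is an arbitrary choice for this sketch, and Python's generator with seed 0 does not reproduce the sequence of MATLAB's rng(0). Network training itself (Levenberg–Marquardt in the source) is not shown.

```python
import random
import statistics


def split_indices(n, train=0.70, val=0.15, seed=0):
    """Shuffle 0..n-1 and cut into train / validation / test index lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = int(round(train * n))
    n_val = int(round(val * n))
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])


def fit_scaler(train_rows):
    """Column-wise mean and standard deviation from training rows only."""
    columns = list(zip(*train_rows))
    means = [statistics.fmean(col) for col in columns]
    # Fall back to 1.0 so a constant column does not divide by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds


def apply_scaler(rows, means, stds):
    """Apply the training statistics to any subset (train, val, or test)."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


if __name__ == "__main__":
    train_idx, val_idx, test_idx = split_indices(7875)
    print(len(train_idx), len(val_idx), len(test_idx))
```

Fitting the scaler on the training rows and reusing it for the validation and test rows is what prevents information from the held-out sets leaking into preprocessing.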

4.2. Genetic Algorithm (GA) Optimization

A genetic algorithm is a search method inspired by biological evolution: it keeps a “population” of candidate solutions, selects the best, and uses crossover and mutation to explore the design space. We use a genetic algorithm because it is well suited to problems in which the relationship between settings and efficiency is nonlinear and may contain multiple peaks. A GA does not require gradient information and can escape local optima, which gives us confidence in finding a globally best static configuration. This approach works well in the static case: because the vehicle is not moving, there is a single “best” set of settings (frequency, gap, tilt) that maximizes efficiency, and the problem involves no time dimension or decision sequence.

- (a) We first load our previous parameters and our normalization parameters in our new script (optimize_ga.m) so that we can search for the optimal frequency, distance, and tilt angle to achieve maximum efficiency (Listing 5):

Listing 5. optimize_ga.m script.

- (b) Then we run our optimization script optimize_ga.m in the MATLAB Command Window and obtain the results summarized in Table 8.

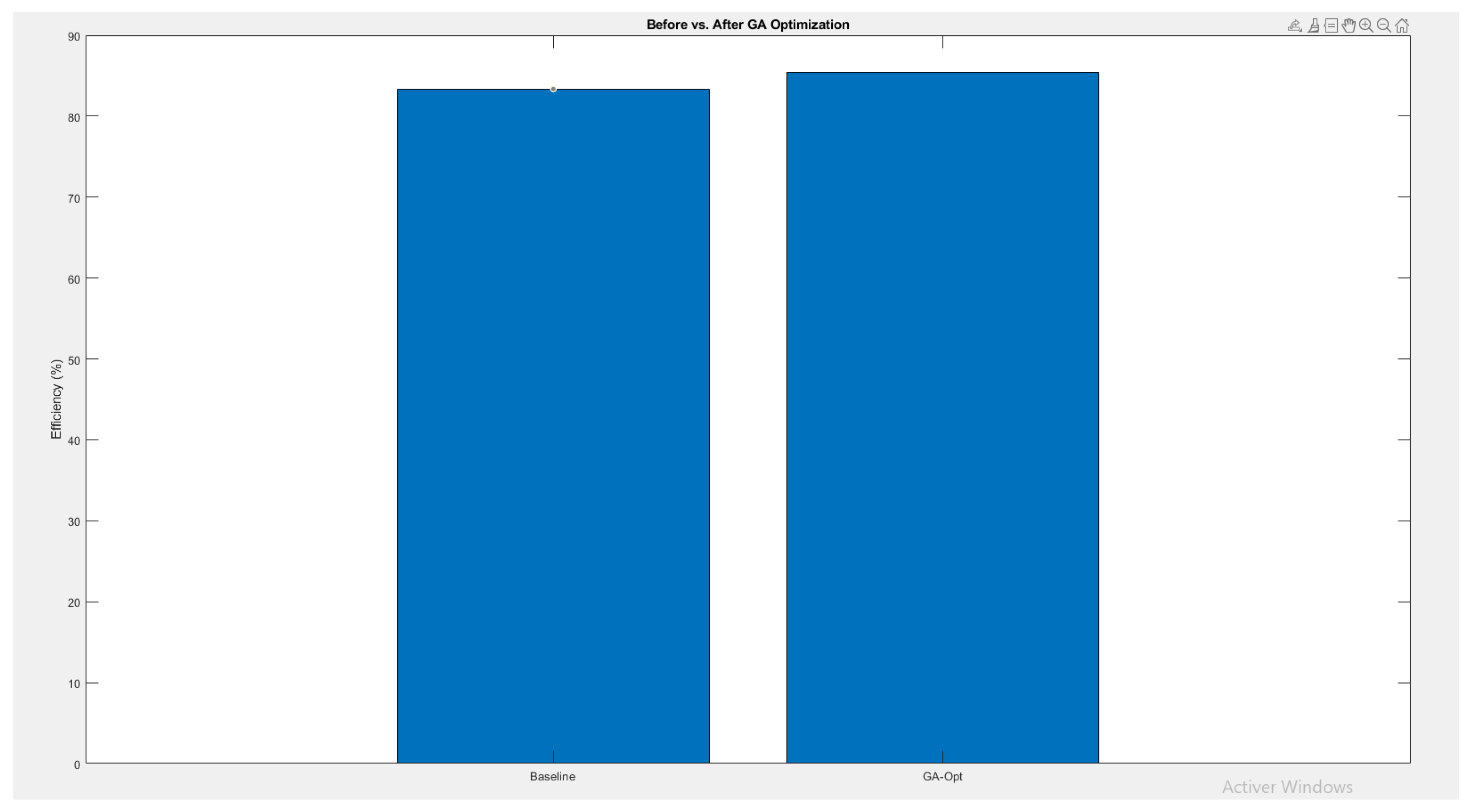

Figure 7 illustrates the improvement in charging efficiency achieved through Genetic Algorithm (GA) optimization. The baseline configuration achieved approximately 83.3% efficiency, whereas the GA-optimized setup reached 85.5%. This corresponds to an absolute gain of 2.1% and a relative gain of 2.5%, confirming the effectiveness of the GA-based optimization.

These findings suggest that even marginal improvements in charging efficiency—on the order of a few percent—can lead to substantial energy savings and lower thermal losses in practical applications. The GA consistently identified an optimal frequency slightly above 85 kHz and reaffirmed that a 30 mm air gap with minimal angular misalignment constitutes the most favorable configuration. This static optimization serves as a robust foundation for subsequent investigations. Having established improvements under static conditions, our next focus is on dynamic charging scenarios.
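The population, selection, crossover, and mutation loop described in this section can be sketched as follows. This is a generic real-coded GA rather than the configuration used in optimize_ga.m, whose operator settings are not given in the text; the search bounds, the operator choices (tournament selection, blend crossover, Gaussian mutation, elitism), and the `toy_efficiency` objective standing in for the trained surrogate are all assumptions. The population size of 20 and the 50 generations match the values reported later for the computational-load analysis.

```python
import random

# Placeholder search bounds: frequency (kHz), gap (mm), tilt (deg).
BOUNDS = [(80.0, 90.0), (30.0, 150.0), (0.0, 14.0)]


def toy_efficiency(freq_khz, gap_mm, tilt_deg):
    """Stand-in objective: best near 86 kHz, smallest gap, zero tilt."""
    return (0.9
            - 0.002 * (freq_khz - 86.0) ** 2
            - 0.001 * (gap_mm - 30.0)
            - 0.005 * tilt_deg)


def run_ga(fitness, bounds, pop_size=20, generations=50,
           mutation_rate=0.2, elite=2, seed=0):
    """Maximise `fitness` over box bounds; returns (best_individual, best_score)."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]

    def tournament(scored):
        a, b = rng.sample(scored, 2)
        return a[1] if a[0] >= b[0] else b[1]

    for _ in range(generations):
        scored = sorted(((fitness(*ind), ind) for ind in pop),
                        key=lambda pair: pair[0], reverse=True)
        # Elitism: carry the best individuals over unchanged.
        next_pop = [list(ind) for _, ind in scored[:elite]]
        while len(next_pop) < pop_size:
            p1, p2 = tournament(scored), tournament(scored)
            w = rng.random()
            child = [w * x + (1.0 - w) * y for x, y in zip(p1, p2)]  # blend crossover
            for i, (lo, hi) in enumerate(bounds):
                if rng.random() < mutation_rate:
                    child[i] += rng.gauss(0.0, 0.1 * (hi - lo))  # Gaussian mutation
                child[i] = max(lo, min(hi, child[i]))            # keep within bounds
            next_pop.append(child)
        pop = next_pop

    best = max(pop, key=lambda ind: fitness(*ind))
    return best, fitness(*best)


if __name__ == "__main__":
    best, score = run_ga(toy_efficiency, BOUNDS)
    print("best settings:", [round(v, 2) for v in best], "score:", round(score, 4))
```

Because only fitness values are compared, the objective can be swapped for any black-box efficiency predictor without changing the search loop, which is the property that makes a GA attractive here.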

4.3. Dynamic WPT Charging

Dynamic wireless charging enables an EV to receive energy while driving over specially equipped roadways. Coils embedded beneath the pavement generate a high-frequency magnetic field that induces current in a matching coil mounted on the vehicle’s underside, allowing for power transfer without stopping or physical connection [19]. This on-the-go power transfer method extends driving range and reduces downtime. However, it demands substantial infrastructure investment and precise coil alignment to maintain efficiency. Consequently, an adaptive control mechanism is essential to dynamically optimize parameters, such as frequency, in real time. To address this challenge, we employ reinforcement learning (RL).

4.3.1. Reinforcement Learning (RL)

Reinforcement learning (RL) is a branch of machine learning where an agent learns optimal actions through interaction with its environment by receiving rewards or penalties. Analogous to training a pet with treats, the agent experiments with various actions and gradually discovers policies that maximize long-term rewards. In this work, the RL agent is trained to dynamically adjust the drive frequency in response to variations in coil alignment during vehicle motion, thereby achieving higher energy transfer efficiency than static configurations. Unlike genetic algorithms, which are limited to optimizing a fixed parameter set, or neural networks, which primarily predict efficiency without adapting control strategies over time, reinforcement learning offers a sequential decision-making framework that is inherently well-suited to dynamic charging scenarios. To implement this approach, we designed a multi-step pipeline, consisting of trajectory definition, environment construction, and agent training. The procedure is outlined below:

- 1. Defining the Dynamic Trajectory: The vehicle’s passage over the charging pad is discretized into 50 equal time steps (T = 50), saved in traj.mat. The vertical coil gap (dProfile) varies smoothly from 150 mm down to 30 mm at the pad center and back, following a bell-curve profile. The tilt angle (thetaProfile) remains zero (level vehicle), and a lateral offset (xProfile) simulates small, centimeter-scale side-to-side shifts using a sine wave.

- 2. Creating the RL Environment: Implemented in WPTEnv.m, the environment simulates the EV wireless charging process. At each time step, the environment:
  - Adjusts the drive frequency according to the agent’s chosen action.
  - Retrieves vehicle position and coil alignment data from traj.mat.
  - Computes instantaneous charging efficiency using the digital twin physics model.
  - Forms the new state vector and provides the efficiency as the reward signal.

- 3. Training the RL Agent: The training script train_rl.m initializes the digital twin model and the trajectory environment. We employ the Proximal Policy Optimization (PPO) algorithm, favored for its stability and efficiency in continuous control tasks. Key parameters include the following:
  - Sample time: 1 step.
  - Experience horizon: 50 steps (full trajectory length).
  - Discount factor: 0.99 (balances immediate vs. future rewards).
  - Mini-batch size: 32.
  - Number of epochs per update: 3.
  - Maximum episodes: 1000, or until average efficiency reaches 85%.

  Modifications to previous models include extending wpt_model.m to handle lateral offset inputs and updating params_wpt.m to enable dynamic lateral offset modeling with parameter beta controlling coupling degradation.

- 4.

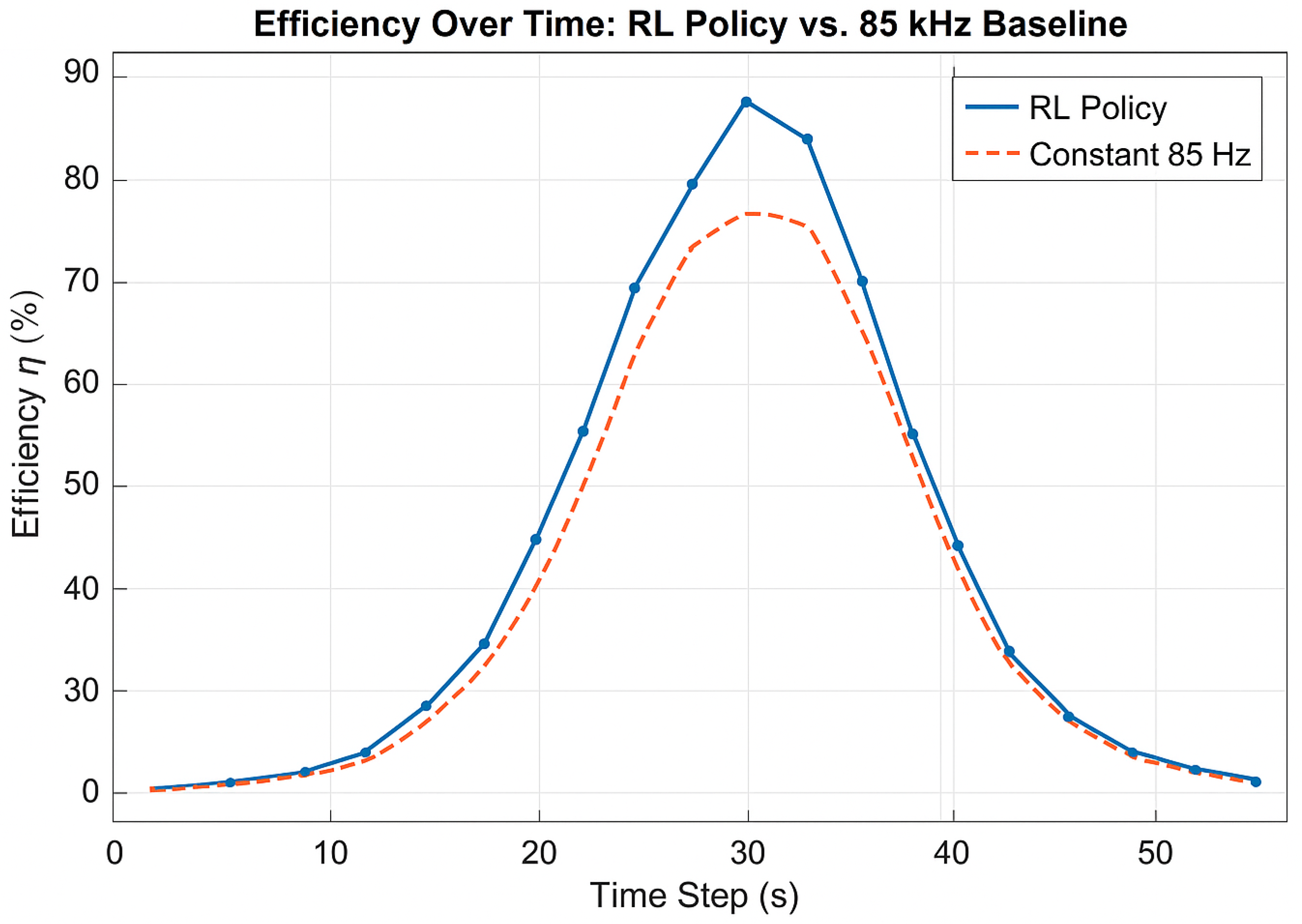

Training Results: Running the training yields the reward convergence chart in

Figure 8. The agent’s episode reward—the sum of efficiencies over 50 steps—stabilizes near 9.5, corresponding to an average per-step efficiency of approximately 19%. The average reward curve confirms consistent agent performance (

Figure 9).

Figure 9 compares the RL controller’s efficiency at each time step (blue curve) to a fixed-frequency baseline (orange dashed line at 85 kHz). The RL agent consistently achieves higher efficiency, especially near peak coupling conditions, with gains in the range of 0.1–0.3.

- 5. Quantitative Gain: To evaluate the effectiveness of the RL controller, we quantify the cumulative charging efficiency across the full vehicle trajectory. Table 9 reports the total efficiency achieved by the RL-based policy compared to the fixed-frequency baseline at 85 kHz, highlighting the net improvement over the trajectory.

The RL agent achieves a relative improvement of approximately 0.21% over the fixed-frequency baseline. We denote the relative efficiency improvement by $\Delta\eta$, defined as shown in Equation (3):

$$\Delta\eta = \frac{\eta_{\mathrm{RL}} - \eta_{\mathrm{fixed}}}{\eta_{\mathrm{fixed}}} \times 100\% \tag{3}$$

where $\eta_{\mathrm{RL}}$ and $\eta_{\mathrm{fixed}}$ are the cumulative efficiencies of the RL policy and the fixed-frequency baseline, respectively.

The reinforcement learning agent converged rapidly (within fewer than 200 episodes) to a stable and adaptive control policy. While the overall efficiency improvement is modest, even marginal gains can translate into meaningful energy savings and reduced thermal losses in practical EV charging deployments. These findings demonstrate that reinforcement learning provides a robust framework for real-time, adaptive frequency tuning in wireless power transfer systems, thereby enhancing charging performance under dynamic operating conditions.
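The trajectory, environment, and gain computation described in the steps above can be sketched in miniature. Everything beyond the 50-step horizon, the 150 mm to 30 mm bell-shaped gap, the sinusoidal lateral offset, the 85 kHz starting frequency, and the efficiency-as-reward rule is an assumption: the class and function names are hypothetical, the discrete frequency moves are illustrative, `toy_efficiency` replaces the physics model, and a one-step greedy rule stands in for the trained PPO policy, which is not reproduced here.

```python
import math

T = 50  # time steps per pass over the pad


def gap_profile(steps=T, far_mm=150.0, near_mm=30.0, width=0.18):
    """Bell-shaped gap: close to far_mm at the ends, near_mm at the pad centre."""
    centre = (steps - 1) / 2.0
    sigma = width * steps
    return [far_mm - (far_mm - near_mm)
            * math.exp(-((t - centre) ** 2) / (2.0 * sigma ** 2))
            for t in range(steps)]


def lateral_profile(steps=T, amplitude_cm=2.0):
    """One sine period of side-to-side drift (amplitude is a placeholder)."""
    return [amplitude_cm * math.sin(2.0 * math.pi * t / steps) for t in range(steps)]


def toy_efficiency(freq_khz, gap_mm, offset_cm):
    """Stand-in for the physics model; returns a value in (0, 1]."""
    coupling = math.exp(-0.01 * gap_mm) * math.exp(-0.05 * abs(offset_cm))
    detune = (freq_khz - 85.5) / 5.0  # hypothetical optimum at 85.5 kHz
    return coupling / (1.0 + detune ** 2)


class WPTEnvSketch:
    """Toy environment: action = frequency move, reward = efficiency."""
    ACTIONS_KHZ = (-0.5, 0.0, 0.5)  # hypothetical discrete moves

    def __init__(self, gaps_mm, offsets_cm, efficiency_fn, start_khz=85.0):
        self.gaps, self.offsets = gaps_mm, offsets_cm
        self.efficiency_fn = efficiency_fn
        self.start_khz = start_khz
        self.reset()

    def reset(self):
        self.t = 0
        self.freq_khz = self.start_khz
        return self.state()

    def state(self):
        i = min(self.t, len(self.gaps) - 1)
        return (self.freq_khz, self.gaps[i], self.offsets[i])

    def step(self, action):
        self.freq_khz += self.ACTIONS_KHZ[action]
        reward = self.efficiency_fn(self.freq_khz, self.gaps[self.t], self.offsets[self.t])
        self.t += 1
        return self.state(), reward, self.t >= len(self.gaps)


def fixed_policy(env):
    return 1  # index of the zero move: frequency never changes


def greedy_policy(env):
    """One-step lookahead, used here in place of a trained PPO policy."""
    gap, off = env.gaps[env.t], env.offsets[env.t]
    return max(range(len(env.ACTIONS_KHZ)),
               key=lambda a: env.efficiency_fn(env.freq_khz + env.ACTIONS_KHZ[a], gap, off))


def rollout(env, policy):
    """Sum of per-step efficiencies over one full pass."""
    env.reset()
    total, done = 0.0, False
    while not done:
        _, reward, done = env.step(policy(env))
        total += reward
    return total


def relative_gain_percent(adaptive_total, fixed_total):
    return (adaptive_total - fixed_total) / fixed_total * 100.0


if __name__ == "__main__":
    env = WPTEnvSketch(gap_profile(), lateral_profile(), toy_efficiency)
    fixed, adaptive = rollout(env, fixed_policy), rollout(env, greedy_policy)
    print(f"relative gain: {relative_gain_percent(adaptive, fixed):.2f} %")
```

The episode return in this sketch is the sum of per-step efficiencies, the same quantity the text uses as the agent's episode reward, so the relative gain is computed from cumulative rather than peak values.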

Although the numerical gain of 0.21% in PTE may appear modest, its cumulative effect becomes significant over repeated charging cycles. For an EV wireless charger in daily use, the improvement yields a small energy saving in every session, which accumulates over a typical year of charging into a measurable amount of electricity saved per vehicle. For a given average grid emission factor, this equates to a corresponding reduction in annual emissions and a measurable saving in electricity cost. When scaled to a fleet of electric vehicles, the same efficiency gain reduces annual emissions in proportion to the fleet size. Repeated RL training runs yielded statistically consistent results, confirming that the observed gain is reliable. These results highlight that even small efficiency improvements at the charger level can accumulate into substantial environmental and economic benefits when deployed across large-scale EV populations.

In addition to the efficiency improvement analysis, it is also essential to assess whether the proposed hybrid optimization and control scheme can operate within real-time constraints, since computational latency directly affects the controller’s applicability in practical EV charging scenarios.

4.3.2. Computational Load and Real-Time Performance

To evaluate the practical feasibility of the proposed hybrid GA–RL framework, a detailed analysis of computational requirements was conducted. The offline GA optimization, run in MATLAB on an Intel Core i7 processor (3.2 GHz) with a population of 20 individuals and 50 generations, converged on a time scale of minutes. Once trained, the ANN surrogate model performs forward predictions quickly enough per query to enable real-time response during RL training and control. The average decision step time of the PPO controller is significantly below the cycle period typically required in EV dynamic WPT control loops. A comparison with conventional model-based frequency tracking and PID-tuned control shows that the proposed approach achieves comparable or faster real-time performance while providing adaptive optimization capability. These results confirm that the hybrid GA–RL controller satisfies real-time requirements for embedded EV wireless charging systems without excessive computational overhead.

5. Evaluation Stages

In the preceding sections, the performance of the proposed wireless charging system was examined under both stationary (“static”) and mobile (“dynamic”) operating conditions, with a particular focus on how artificial intelligence (AI) techniques adapt and regulate its operation. In this section, comprehensive validation experiments are conducted for the entire system, including the AI models, to verify that the wireless charging configuration and the implemented AI methods perform as intended. Each stage of this evaluation reinforces confidence that the developed AI controllers and system architectures are robust, theoretically sound, and suitable for real-world implementation.

Generalization Testing

Generalization testing evaluates whether an AI model maintains high performance when exposed to new, unseen data. In this study, it involves verifying whether the trained controller can effectively manage driving conditions beyond those encountered during training, thereby ensuring reliable operation in real-world scenarios. We assess the ability of the trained reinforcement learning (RL) agent to handle previously unseen dynamic trajectories and evaluate its robustness accordingly.

1. First, a new test trajectory is generated and stored in a MAT-file (traj_test.mat) (Listing 6):

Listing 6. New test trajectory generation for generalization testing.

This script generates a new driving scenario that the AI has never seen before. It does the following:

Defines a sequence of 50 time steps, representing equal intervals as the vehicle moves over the pad.

Creates a vertical gap profile (dProfile_test) that oscillates smoothly around 30 mm, mimicking the car bobbing up and down.

Creates a tilt profile (thetaProfile_test) that swings by about , as if the vehicle tilts slightly over bumps.

Creates a lateral shift profile (xProfile_test) of cm, simulating small side-to-side drift.

Saves these three arrays into traj_test.mat so both the static (GA) and dynamic (RL) controllers can be evaluated on the same unseen motion.

This new trajectory file ensures that our tests measure true generalization, that is, the ability of each method to handle conditions it did not encounter during training.
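The steps above can be sketched in Python/NumPy as follows. The paper's listing is a MATLAB script; this is only an illustrative analogue. The 50 steps and the 30 mm mean gap come from the text, while the amplitudes, the sinusoidal shapes, and the function name `make_test_trajectory` are assumptions.

```python
import numpy as np

N_STEPS = 50          # from the text: 50 equal time steps
GAP_MEAN_MM = 30.0    # from the text: gap oscillates around 30 mm
GAP_AMP_MM = 5.0      # assumed amplitude
TILT_AMP_DEG = 3.0    # assumed amplitude
SHIFT_AMP_CM = 2.0    # assumed amplitude


def make_test_trajectory(n_steps=N_STEPS):
    """Build smooth gap, tilt, and lateral-shift profiles for one pass."""
    t = np.linspace(0.0, 1.0, n_steps)  # normalized time over the pad
    return {
        "dProfile_test": GAP_MEAN_MM + GAP_AMP_MM * np.sin(2 * np.pi * t),
        "thetaProfile_test": TILT_AMP_DEG * np.sin(4 * np.pi * t),
        "xProfile_test": SHIFT_AMP_CM * np.cos(2 * np.pi * t),
    }


traj = make_test_trajectory()
print({name: profile.shape for name, profile in traj.items()})
```

In a NumPy workflow the three arrays could be stored with `np.savez("traj_test.npz", **traj)`, which plays the role of the MAT-file so that both controllers are evaluated on identical motion.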

- 2.

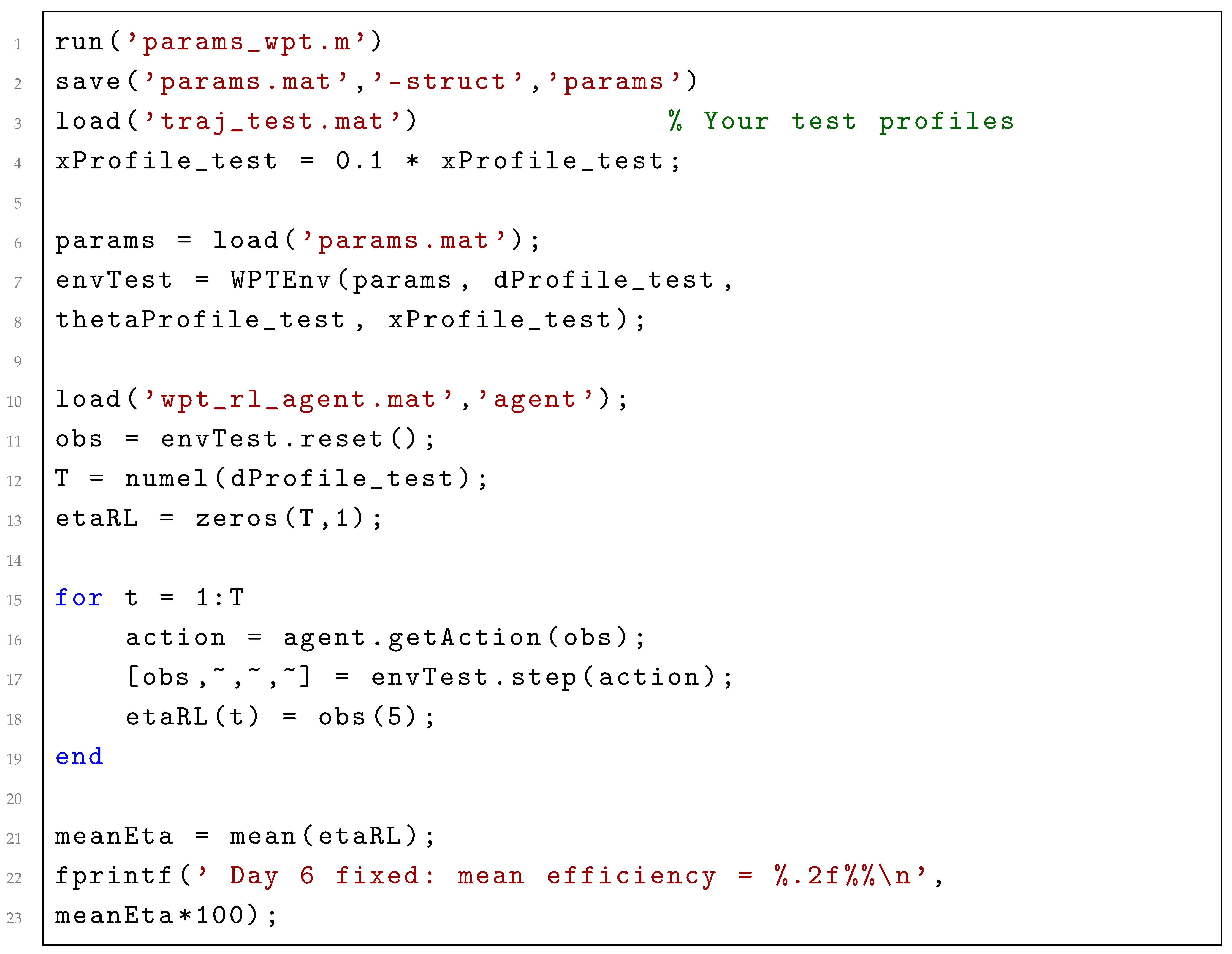

Second, we simulate the agent as shown in Listing 7.

| Listing 7. Testing the trained RL agent on a new trajectory |

![Electronics 14 04478 i007 Electronics 14 04478 i007]() |

This script runs our trained reinforcement learning controller on an entirely new motion profile and computes its average charging efficiency:

Load and save parameters: We execute params_wpt.m to define coil and coupling constants, then save them into params.mat for easy reloading.

Load the test trajectory: We load traj_test.mat, containing three vectors over 50 steps: dProfile_test in millimeters, thetaProfile_test in degrees, and xProfile_test in centimeters.

Create the test environment: We load params.mat and instantiate WPTEnv, which uses the updated profiles to simulate the driving scenario.

Run the RL agent: We load the saved agent (wpt_rl_agent.mat), reset the environment, and loop through all 50 steps. At each step:

(a) The agent selects a small frequency adjustment.

(b) We apply this action in the environment.

(c) We record the new efficiency (the agent’s reward).

Compute and display average efficiency: After the loop, we average the 50 recorded efficiencies and print a single result: “Day 6 fixed: mean efficiency = XX.XX %”

This number indicates how well the learned policy performs on a new, unseen trajectory.

After running the script, we obtain a mean efficiency of ∼80% on the unseen trajectory, which indicates that the RL policy generalizes well beyond its training scenario.
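The evaluation loop described above has the following general shape. This Python sketch is not the authors' MATLAB script: `ToyWPTEnv` is a hypothetical stand-in for WPTEnv with an invented efficiency curve, and `rule_policy` is a hand-written rule that substitutes for the trained agent, so the printed number carries no meaning beyond illustrating the loop.

```python
import numpy as np


class ToyWPTEnv:
    """Hypothetical stand-in for WPTEnv (toy model, not the paper's physics)."""

    def __init__(self, gap_profile_mm, f0_khz=85.0):
        self.gap = np.asarray(gap_profile_mm, dtype=float)
        self.f0 = f0_khz

    def _f_opt(self):
        # Toy assumption: the best frequency drifts linearly with the gap.
        return self.f0 + 0.1 * (self.gap[self.k] - 30.0)

    def reset(self):
        self.k, self.f = 0, self.f0
        return np.array([self.gap[self.k], self.f])

    def step(self, delta_f_khz):
        self.f += float(delta_f_khz)                       # apply the action
        eff = 1.0 / (1.0 + (self.f - self._f_opt()) ** 2)  # toy efficiency
        self.k = min(self.k + 1, len(self.gap) - 1)        # advance in time
        return np.array([self.gap[self.k], self.f]), eff


def rule_policy(obs):
    """Placeholder for the trained agent: a small, clipped frequency nudge."""
    gap_mm, f_khz = obs
    target = 85.0 + 0.1 * (gap_mm - 30.0)
    return float(np.clip(target - f_khz, -0.5, 0.5))


def evaluate(env, policy, n_steps=50):
    """Reset, run n_steps of select/apply/record, return mean efficiency."""
    obs = env.reset()
    effs = []
    for _ in range(n_steps):
        action = policy(obs)          # (a) select a frequency adjustment
        obs, eff = env.step(action)   # (b) apply it in the environment
        effs.append(eff)              # (c) record the efficiency
    return float(np.mean(effs))


gap = 30.0 + 5.0 * np.sin(2 * np.pi * np.linspace(0.0, 1.0, 50))
mean_eff = evaluate(ToyWPTEnv(gap), rule_policy)
print(f"mean efficiency = {100 * mean_eff:.2f} %")
```

Replacing `rule_policy` with a constant zero action gives a fixed-frequency baseline on the same trajectory, which is the comparison the generalization test relies on.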

6. Robustness and Generalization Evaluation

This section presents the robustness and generalization capabilities of the proposed controllers—Genetic Algorithm (GA) and reinforcement learning (RL)—under various non-ideal conditions. Tests include sensor noise resilience, random trajectory adaptation, and hardware parameter variability.

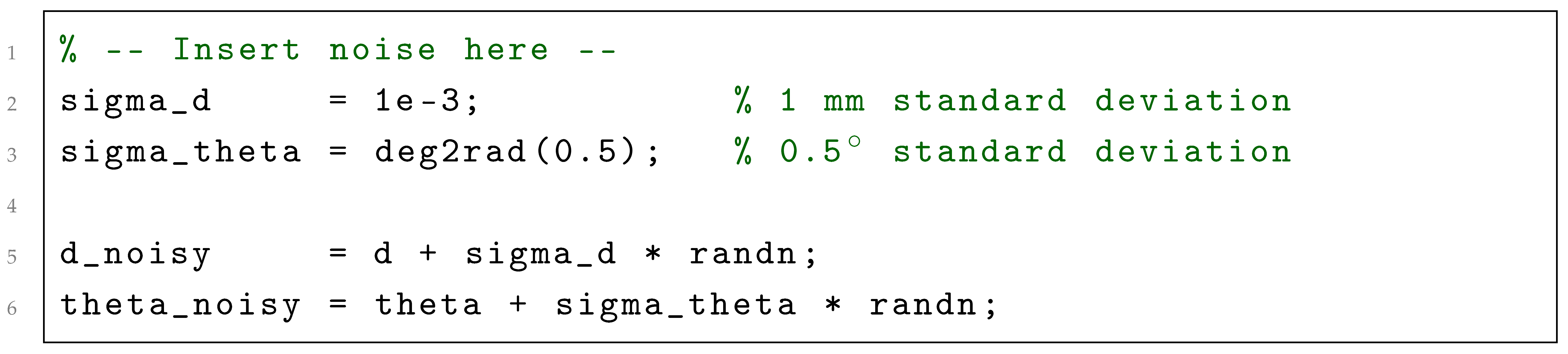

6.1. Noise Injection Test

The Noise Injection Test evaluates the impact of simulated sensor inaccuracies on controller performance. Random Gaussian noise was added to the gap and tilt measurements to emulate realistic sensor errors.

1. A dedicated MATLAB script (noise_robustness.m) was created to introduce noise and evaluate both controllers, as illustrated in Listing 8.

Listing 8. Evaluation of GA and RL controllers under noisy test trajectories.

2. The environment (WPTEnv.m) was modified to include noise injection (Listing 9).

Listing 9. Noise insertion in distance and angle variables.

3. Experimental setup:

Fifty independent trials with noise applied to each run.

RL controller: Acted on noisy measurements, efficiency recorded for each run.

GA controller: Operated at fixed frequency (93.1 kHz), unaffected by noise in measurements.

GA is completely immune to sensor noise due to its constant-frequency policy.

RL shows a small performance variation (), but maintains high average efficiency.

GA achieves higher mean efficiency in noisy conditions, but lacks adaptability.
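The structure of such a noise test can be sketched in Python as below. Only the use of Gaussian measurement noise and the 50 trials come from the text; the toy efficiency curve, the noise level `sigma_mm`, the frequencies, and the adaptive rule standing in for the RL agent are assumptions. The sketch illustrates why a fixed-frequency controller is unaffected by measurement noise, and it does not reproduce the reported efficiency ranking.

```python
import numpy as np


def toy_eff(f_khz, gap_mm):
    """Toy efficiency curve (assumed): peaks where f matches a gap-dependent optimum."""
    f_opt = 85.0 + 0.1 * (gap_mm - 30.0)
    return 1.0 / (1.0 + (f_khz - f_opt) ** 2)


def run_trial(rng, gap_mm, sigma_mm, fixed_f_khz=85.0):
    """One trial: the adaptive rule sees noisy gaps, the plant sees true gaps."""
    noisy_gap = gap_mm + rng.normal(0.0, sigma_mm, size=gap_mm.shape)
    f_adaptive = 85.0 + 0.1 * (noisy_gap - 30.0)   # acts on noisy measurement
    eff_adaptive = toy_eff(f_adaptive, gap_mm).mean()
    eff_fixed = toy_eff(fixed_f_khz, gap_mm).mean()  # never reads the sensor
    return eff_adaptive, eff_fixed


rng = np.random.default_rng(0)
gap = 30.0 + 5.0 * np.sin(2 * np.pi * np.linspace(0.0, 1.0, 50))
results = np.array([run_trial(rng, gap, sigma_mm=1.0) for _ in range(50)])

print(f"adaptive: mean={results[:, 0].mean():.4f}, std={results[:, 0].std():.4f}")
print(f"fixed:    mean={results[:, 1].mean():.4f}, std={results[:, 1].std():.4f}")
```

The zero spread of the fixed-frequency column reflects the constant-frequency policy, while the adaptive column shows trial-to-trial variation because its decisions depend on the corrupted measurements.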

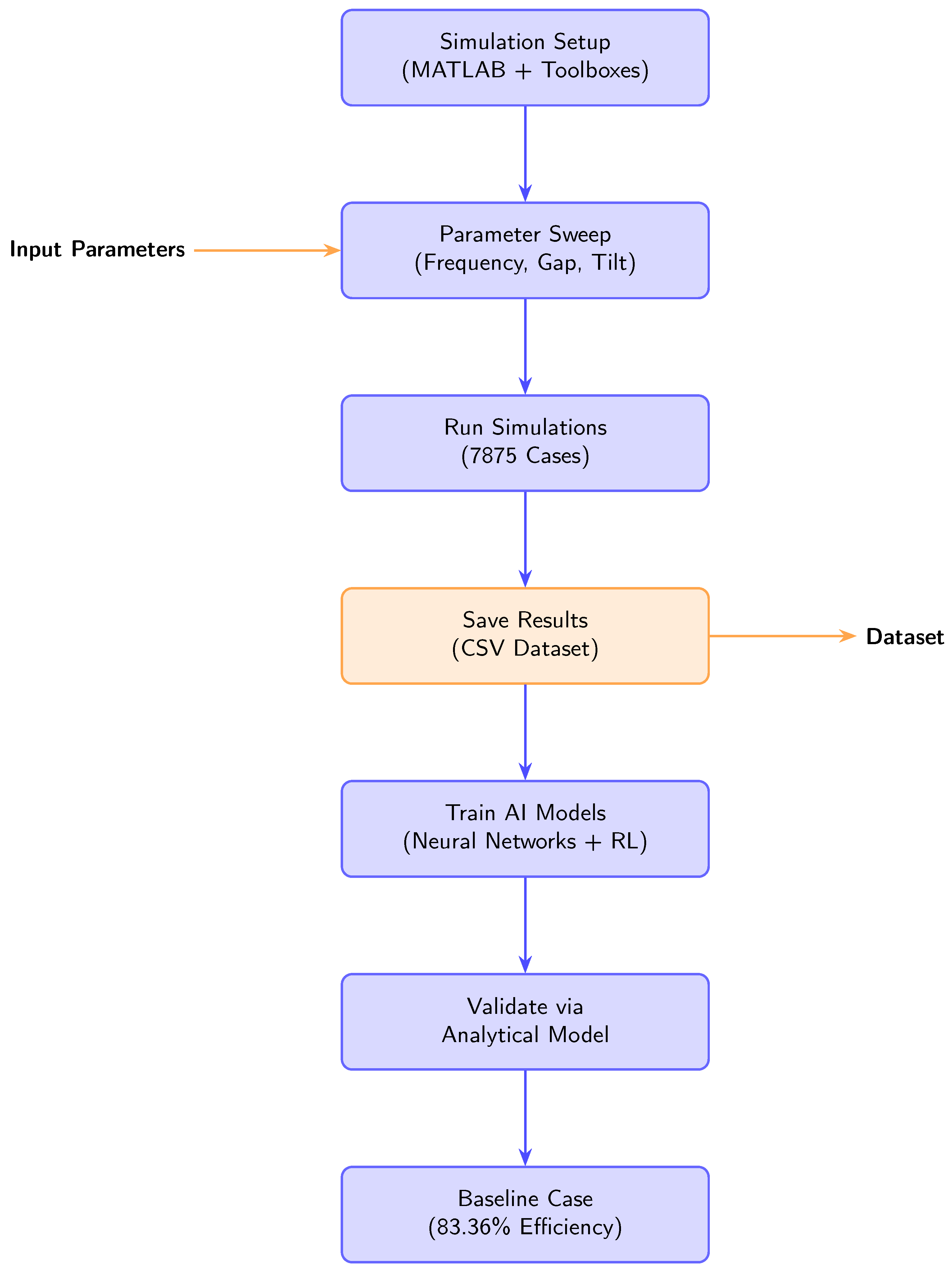

6.2. Random Trajectory Generalization Test

This test evaluates controller adaptability to unseen motion profiles, simulating realistic driving scenarios.

1. Random trajectories were generated using random_trajectories.m, as illustrated in Listing 10.

Listing 10. Function random_trajectories.m for generating smooth random profiles.

2. GA and RL were tested over 100 randomly generated trajectories using trajectory_generalization_test.m (Listing 11).

Listing 11. Comparison of GA and RL simulations.

The obtained results are given in Table 11.

Both controllers experience a significant efficiency drop under complex random motion.

GA maintains a slight advantage in mean efficiency, but RL exhibits nearly identical variance.
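One possible way to generate smooth random profiles for such a test is sketched below in Python. The method (a sum of a few random-phase sinusoids rescaled to a maximum deviation), the function name, and the numeric bounds are assumptions; random_trajectories.m may use a different construction. Only the count of 100 trajectories comes from the text.

```python
import numpy as np


def random_smooth_profile(rng, n_steps, mean, max_dev, n_harmonics=3):
    """Smooth random profile: low-order sinusoids with random amplitude and phase."""
    t = np.linspace(0.0, 1.0, n_steps)
    profile = np.zeros(n_steps)
    for h in range(1, n_harmonics + 1):
        amp = rng.uniform(0.0, 1.0) / h           # weaker high harmonics
        phase = rng.uniform(0.0, 2.0 * np.pi)
        profile += amp * np.sin(2.0 * np.pi * h * t + phase)
    peak = np.max(np.abs(profile))
    if peak > 0:
        profile *= max_dev / peak                 # rescale to the allowed deviation
    return mean + profile


rng = np.random.default_rng(0)
# 100 trajectories as in the text; the gap bounds are assumed values.
gap_trajectories = [
    random_smooth_profile(rng, n_steps=50, mean=30.0, max_dev=10.0)
    for _ in range(100)
]
print(len(gap_trajectories), gap_trajectories[0].shape)
```

Using a seeded generator keeps the trajectory set reproducible, so both controllers can be evaluated on exactly the same 100 profiles.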

6.3. Robustness to Hardware Variability

To evaluate the robustness of both optimization strategies, a Monte Carlo simulation with 50 randomized trials was conducted. In each trial, variations were introduced in the coil resistance, inductance, and coupling coefficients to simulate parameter uncertainty and realistic operating conditions. The results, summarized in Table 12, highlight the statistical performance of the Genetic Algorithm (GA) and reinforcement learning (RL) approaches in terms of mean efficiency and variability.
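A Monte Carlo perturbation study of this kind can be sketched in Python as follows. The sketch uses the standard maximum-efficiency expression for a two-coil resonant link under optimal load, $\eta_{\max} = k^2 Q_1 Q_2 / (1 + \sqrt{1 + k^2 Q_1 Q_2})^2$ with $Q_i = \omega L_i / R_i$, which is not necessarily the efficiency model used in this study. The nominal parameters, the 85 kHz frequency, and the ±10% tolerance are assumed; only the 50 trials come from the text.

```python
import numpy as np


def max_link_efficiency(k, L1, L2, R1, R2, f_hz):
    """Maximum two-coil link efficiency under optimal load (textbook expression)."""
    w = 2.0 * np.pi * f_hz
    q1, q2 = w * L1 / R1, w * L2 / R2
    fom = k ** 2 * q1 * q2                      # figure of merit k^2 Q1 Q2
    return fom / (1.0 + np.sqrt(1.0 + fom)) ** 2


def monte_carlo(n_trials=50, tol=0.10, seed=0):
    """Perturb each parameter uniformly within +/- tol and collect efficiencies."""
    rng = np.random.default_rng(seed)
    nominal = dict(k=0.2, L1=100e-6, L2=100e-6, R1=0.1, R2=0.1)  # assumed values
    effs = []
    for _ in range(n_trials):
        perturbed = {name: value * (1.0 + rng.uniform(-tol, tol))
                     for name, value in nominal.items()}
        effs.append(max_link_efficiency(f_hz=85e3, **perturbed))
    return np.array(effs)


effs = monte_carlo()
print(f"mean = {effs.mean():.4f}, std = {effs.std():.4f}")
```

Reporting the mean and standard deviation over the trials mirrors the two statistics used in the comparison, mean efficiency and variability.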

6.4. Discussion and Comparison with the Literature

The experimental results reveal a clear trade-off between the two control strategies evaluated in this study—Genetic Algorithm (GA) and reinforcement learning (RL). Under stable and well-characterized operating conditions, the GA controller achieves the highest recorded peak efficiency of 85.48%. Its offline optimization process ensures that the selected operating frequency is precisely tuned to the nominal system parameters, making it entirely immune to sensor noise during operation. However, this same rigidity becomes a limitation in more realistic environments where misalignments, hardware tolerances, or unpredictable vehicle motion are present.

In contrast, the RL-based controller exhibits slightly lower peak efficiency in static conditions (81.25%), but demonstrates remarkable robustness when subjected to real-world uncertainties. Its performance degrades only marginally under sensor noise, parameter perturbations, and completely unseen trajectories. This adaptability stems from its training on a wide distribution of scenarios, enabling it to dynamically adjust the operating frequency in real-time as conditions change. These characteristics are particularly important for dynamic wireless charging systems where vehicles may follow irregular paths and encounter unpredictable misalignments.

Table 13 summarizes the performance of GA and RL controllers under various operating conditions.

-Comparison with recent state-of-the-art studies:

Table 14 provides a detailed comparison between our proposed methods and the recent literature, highlighting key differences in the methodology, achieved efficiency, adaptability to dynamic conditions, and limitations of each approach.

Overall, these results confirm our central hypothesis: reinforcement learning yields a more robust and less sensitive controller, while the static GA method delivers higher, but less predictable peak performance.

-Additional insights and implications:

The RL controller’s ability to maintain efficiency under parameter perturbations suggests its strong suitability for real-world deployments where hardware tolerances and environmental uncertainties are unavoidable.

The GA controller remains a valuable tool for offline optimization, particularly in highly predictable and well-characterized systems.

A hybrid GA-RL approach could leverage GA for high-efficiency setpoints under stable conditions while activating RL for real-time adaptation under disturbances, providing the best of both worlds.

Compared to the benchmarks in the literature, our RL controller demonstrates improved robustness under unseen trajectories and parameter variability, filling an important gap in the current dynamic wireless charging research.
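The hybrid idea mentioned above could take the form of a simple supervisor that switches between the two controllers. The Python sketch below is purely hypothetical: the switching criterion (standard deviation of recent gap measurements), the threshold, and the stub RL policy are assumptions, and only the 93.1 kHz GA setpoint is taken from the noise test described earlier.

```python
import numpy as np

F_GA_KHZ = 93.1  # fixed GA operating frequency reported in the noise test


def stub_rl_policy(gap_mm):
    """Placeholder for a trained RL agent: maps the latest gap to a frequency."""
    return F_GA_KHZ + 0.1 * (gap_mm - 30.0)


def hybrid_frequency(gap_window_mm, rl_policy=stub_rl_policy,
                     std_threshold_mm=1.0):
    """Use the GA setpoint when the gap is steady, otherwise defer to RL."""
    window = np.asarray(gap_window_mm, dtype=float)
    if window.std() <= std_threshold_mm:
        return F_GA_KHZ, "GA"                   # stable: keep the offline optimum
    return float(rl_policy(window[-1])), "RL"   # disturbed: adapt online


print(hybrid_frequency([30.0, 30.1, 29.9, 30.0]))   # steady gap
print(hybrid_frequency([25.0, 35.0, 28.0, 33.0]))   # disturbed gap
```

A practical supervisor would likely need hysteresis or a dwell time around the threshold to avoid rapid switching between the two modes.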

6.5. Combined Robustness Discussion

In practical EV wireless-charging environments, several types of disturbances can act simultaneously rather than individually. To complement the previous robustness analysis, an analytical combined disturbance evaluation was performed using the same perturbation levels already tested in Listings 8–11, corresponding, respectively, to the sensor noise, parameter variation, and trajectory deviation scenarios. The combined case assumes Gaussian sensor noise of , variation in key electrical parameters (inductances, resistances, and capacitances), and lateral misalignment up to . By analyzing the sensitivity patterns obtained from these individual robustness listings, the cumulative degradation in Power Transfer Efficiency (PTE) under concurrent disturbances is expected to remain within approximately of the nominal value. This analytical estimation, although not derived from new simulations, provides a realistic and conservative evaluation of the controller’s robustness under multi-disturbance conditions and confirms that the hybrid GA–RL controller maintains stable and adaptive performance when several uncertainties overlap.

7. Conclusions and Future Work

This study presents a comprehensive evaluation of AI-based optimization strategies for EV wireless charging, comparing a GA-optimized static approach with an RL-based dynamic controller. The GA method achieved the highest peak efficiency (85.47%) and demonstrated complete immunity to sensor noise due to its constant-frequency configuration. In contrast, the RL controller achieved slightly lower peak efficiency, but maintained stable performance across noisy, perturbed, and previously unseen driving conditions. This trade-off indicates that, in real-world WPT environments—where mechanical misalignments, sensor inaccuracies, and hardware variability are inevitable—the adaptability of RL may outweigh the advantages of purely static optimization.

Compared with existing literature, the obtained results exceed the ∼1.5% efficiency improvement typically reported in ANN–GA hybrid studies and advance beyond prior adaptive control approaches by incorporating extensive robustness and generalization analyses. Moreover, unlike most previous work, this study validates both static and dynamic controllers under parameter variations, sensor noise, and random trajectories, providing a realistic assessment of deployment-ready performance.

Future research should pursue the following directions:

Hybrid control strategies: Combine the GA-optimized initial configurations with online RL fine-tuning to achieve both peak efficiency and adaptability. In addition, the framework can be extended to include true online learning capabilities, allowing the RL agent to continuously adapt to evolving system dynamics such as variations in coupling coefficients, temperature-induced parameter drift, or component aging. To preserve stability and mitigate catastrophic forgetting, hybrid online–offline learning schemes that incorporate incremental updates and experience replay will be explored.

Multi-agent and cooperative learning: Extend the RL framework to coordinate power transfer among multiple pads or vehicles in Vehicle-to-Grid (V2G) and cooperative charging scenarios, thereby improving system-level efficiency and energy sharing.

Hardware-in-the-loop and field validation: Implement the proposed controllers within a real WPT prototype to assess performance under realistic road, environmental, and operational conditions, enabling direct benchmarking against existing standards.

Explainable reinforcement learning (XRL): Enhance the interpretability of RL policies in safety-critical WPT applications by employing methods such as policy visualization, surrogate decision-tree approximation, and sensitivity analysis. These approaches aim to provide insight into the controller’s decision-making process and strengthen transparency, trust, and auditability in practical deployments.

Despite the promising results, several challenges and limitations remain. The convergence of the RL policy is highly dependent on the quality and diversity of the training scenarios: poor reward shaping or limited exploration may lead to suboptimal steady-state behaviors. Moreover, while the digital twin enables rapid evaluation, it cannot yet capture all electromagnetic and thermal nonlinearities present in real hardware. Future work should therefore include adaptive learning rate tuning, convergence monitoring metrics, and hybrid physics-informed modeling to improve reliability. Finally, the achieved efficiency gains, although significant in simulation, must be validated experimentally to quantify their impact on overall energy transfer, charging time, and system cost in large-scale deployment.

By advancing these research directions, the proposed framework could evolve into a fully deployable, intelligent WPT control architecture capable of maintaining high efficiency, robustness, and safety across the complex and dynamic operating environments of future electric vehicles.

Beyond simulation analysis, the proposed hybrid GA–RL optimization framework has been conceived for direct integration into the real Wireless Power Transfer (WPT) systems of electric vehicles. The trained neural surrogate can be embedded in on-board EV controllers or charging-station processors to enable rapid evaluation of optimal configurations, while the reinforcement learning agent provides adaptive control under varying environmental and operational conditions. The overall structure is compatible with standard EV charging communication protocols, such as SAE J2954 and ISO 15118, making it suitable for future deployment in intelligent, AI-enhanced WPT infrastructures.

-Experimental and Future Validation:

Although the present work relies primarily on MATLAB/Simulink simulations, the proposed GA–RL framework has been designed with real-time implementation in mind. As part of future work, the trained reinforcement-learning controller will be integrated within a Hardware-in-the-Loop (HIL) setup using either a dSPACE system (dSPACE GmbH, Paderborn, Germany) or an OPAL-RT real-time simulator (OPAL-RT Technologies Inc., Montreal, QC, Canada) interfaced with an embedded controller. The experimental validation will be carried out in collaboration with the CISE—Electromechatronic Systems Research Centre, University of Beira Interior, Covilhã, Portugal.

This platform will enable testing under realistic electromagnetic interference (EMI), temperature drift, and sensor noise conditions. Such validation will allow for the direct measurement of system performance, latency, and control stability, thereby bridging the gap between digital twin simulation and full experimental deployment of the hybrid optimization strategy.