E2E-MDC: End-to-End Multi-Modal Darknet Traffic Classification with Conditional Hierarchical Mechanism

Abstract

1. Introduction

- A conditional hierarchical classification mechanism. Unlike conventional independent multi-task learning or hard cascade classification, this paper realizes end-to-end hierarchical learning through a soft conditioning design that allows all classification levels to be optimized jointly. Specifically, the probability distribution of the upper-level classifier (rather than its hard decision) is fed as a conditional input to the lower levels. Because the Softmax output is differentiable, gradients can propagate across levels, and the uncertainty of the upper-level prediction is preserved. With this design, the model achieves a cascade accuracy of 94.90% while keeping the hierarchical violation rate below 0.8%.

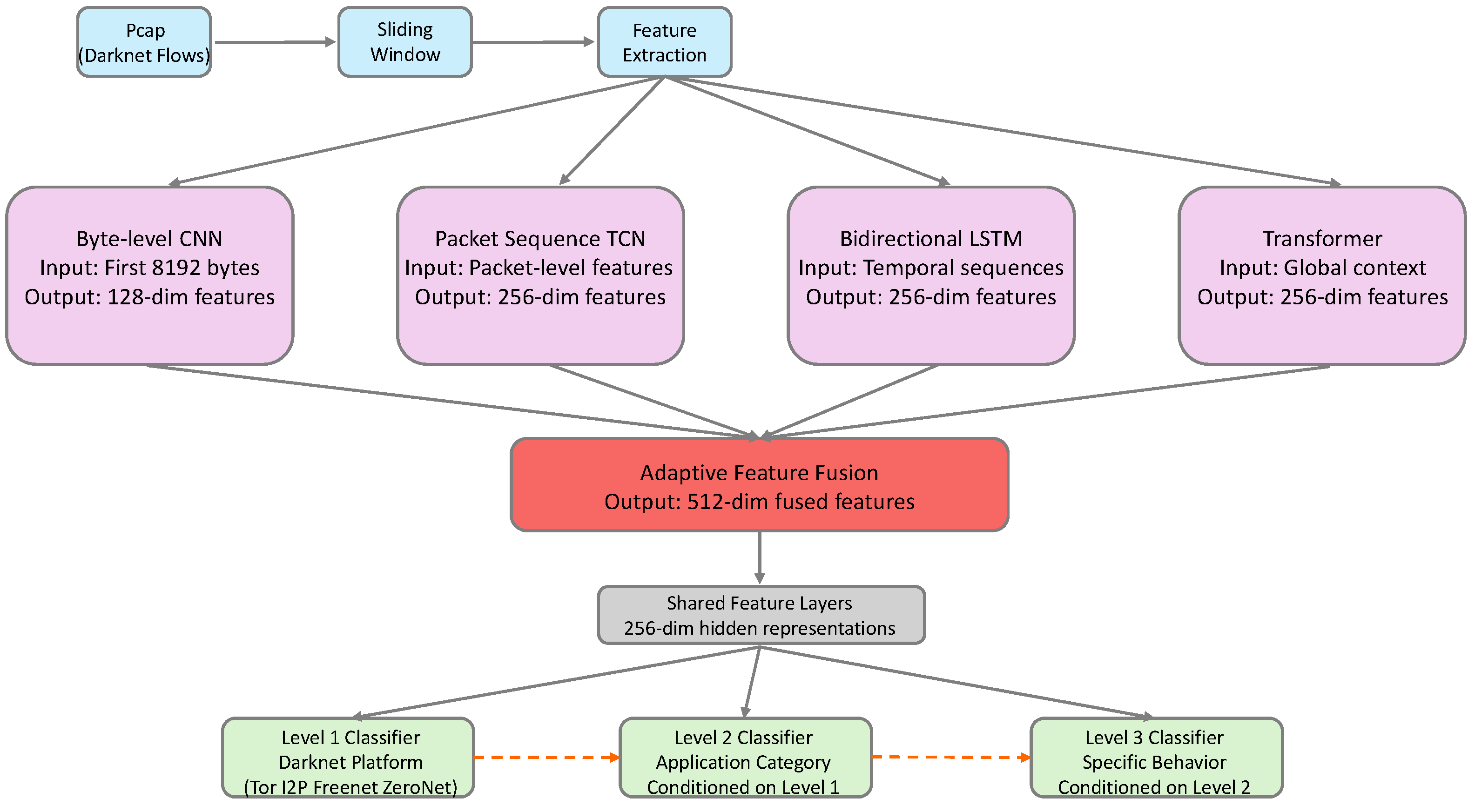

- A four-modal complementary feature extraction architecture tailored to Darknet traffic. Each of the four specialized neural network modules targets a different information dimension of encrypted traffic: (i) a byte-level CNN captures encrypted protocol patterns through learnable byte embeddings; (ii) a temporal convolutional network (TCN) uses exponentially increasing dilation rates (1, 2, 4, 8) to extract multi-scale temporal patterns; (iii) a bidirectional LSTM fuses recurrent features with self-attention outputs through a gating mechanism; and (iv) a Transformer uses a [CLS] token to provide a global sequence representation for traffic classification. Experiments show that this complementary design reaches 95.02% accuracy in Level 3 fine-grained classification.

- A multi-objective joint optimization framework that unifies classification accuracy, hierarchical consistency, and feature diversity in a single end-to-end differentiable loss function. With loss weights of 0.3/0.3/0.4 for classification, 0.1 for consistency, and 0.01 for diversity, the system keeps predictions consistent with the class hierarchy while optimizing the main task, and prevents the model from degenerating into dependence on a single modality.

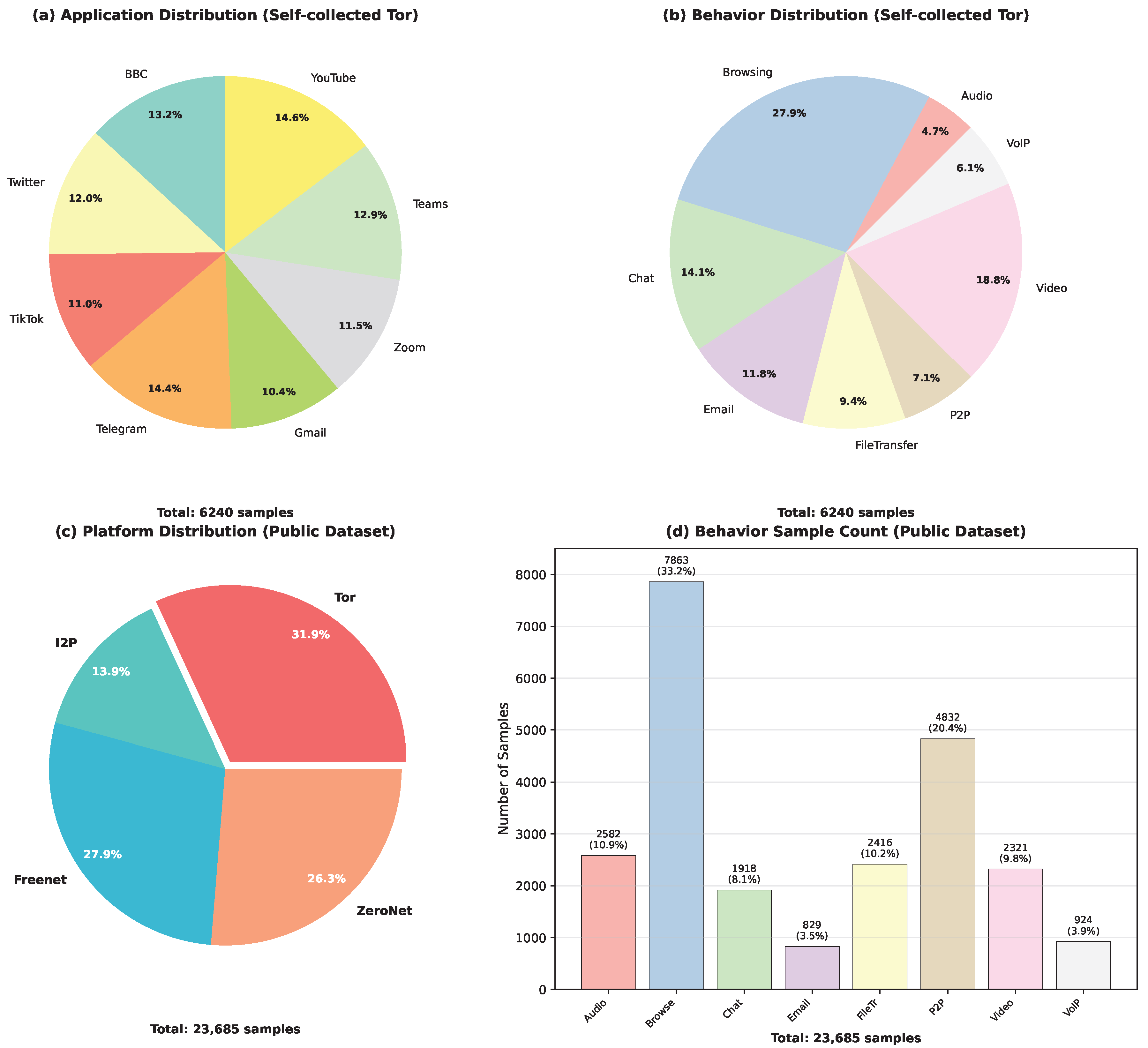

- A fine-grained Tor traffic benchmark dataset containing 6240 annotated samples across 8 behavior categories from 8 mainstream applications (BBC, Twitter, Telegram, etc.). Samples are generated with a sliding window (window size 2000 packets, step size 1500 packets) to preserve the completeness of traffic patterns, providing a new evaluation benchmark for Darknet traffic classification research.

2. Background and Related Work

2.1. Darknet

2.2. Traditional Machine Learning-Based Methods

2.3. Deep Learning-Based Methods

2.4. Multi-Modal Traffic Classification

2.5. Hierarchical Classification Methods

2.6. Summary of Existing Methods

3. Methodology

3.1. Problem Definition

3.2. System Architecture Overview

3.3. Data Preprocessing Pipeline

3.3.1. Sliding Window Mechanism
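The introduction states that samples are cut with a window of 2000 packets and a step of 1500 packets, so consecutive windows overlap by 500 packets. A minimal sketch of this segmentation follows, assuming each flow is a Python sequence of per-packet records and that a trailing window shorter than 2000 packets is discarded (the text does not specify how the tail is handled). The function name `sliding_windows` is hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

WINDOW_SIZE = 2000  # packets per sample (from the paper)
STEP_SIZE = 1500    # stride between consecutive windows (from the paper)


def sliding_windows(packets: Sequence[T],
                    window: int = WINDOW_SIZE,
                    step: int = STEP_SIZE) -> List[Sequence[T]]:
    """Split a packet sequence into overlapping fixed-size windows.

    Assumption: a trailing window shorter than `window` is dropped.
    Consecutive windows overlap by `window - step` packets (500 here).
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [packets[start:start + window]
            for start in range(0, len(packets) - window + 1, step)]


if __name__ == "__main__":
    flow = list(range(5000))  # stand-in for a flow of 5000 packets
    samples = sliding_windows(flow)
    print(len(samples), [s[0] for s in samples])
```

For a 5000-packet flow this yields three windows starting at packets 0, 1500, and 3000; a flow shorter than 2000 packets yields no sample under the assumed tail policy.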

3.3.2. Feature Normalization Strategy

3.4. Multi-Modal Feature Extraction

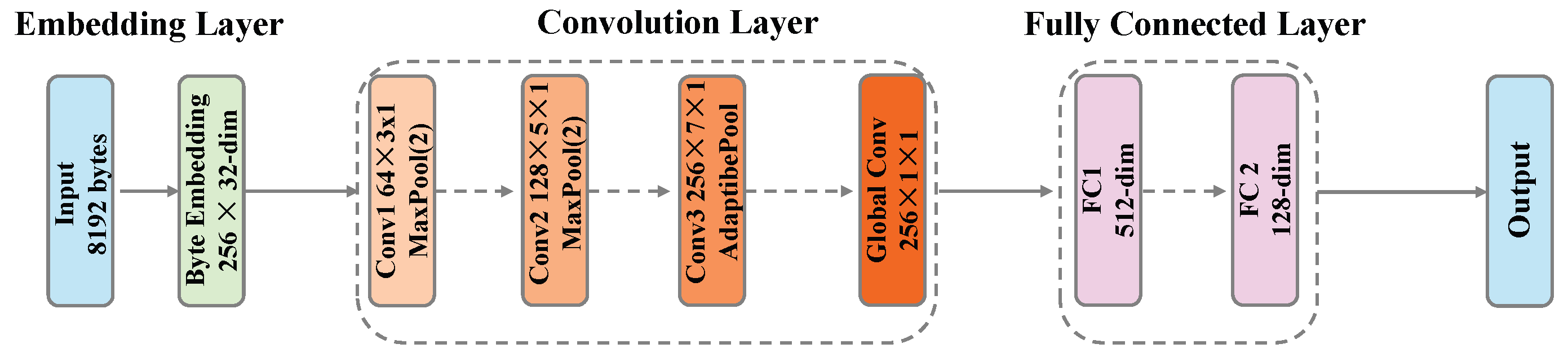

3.4.1. Byte-Level CNN Module

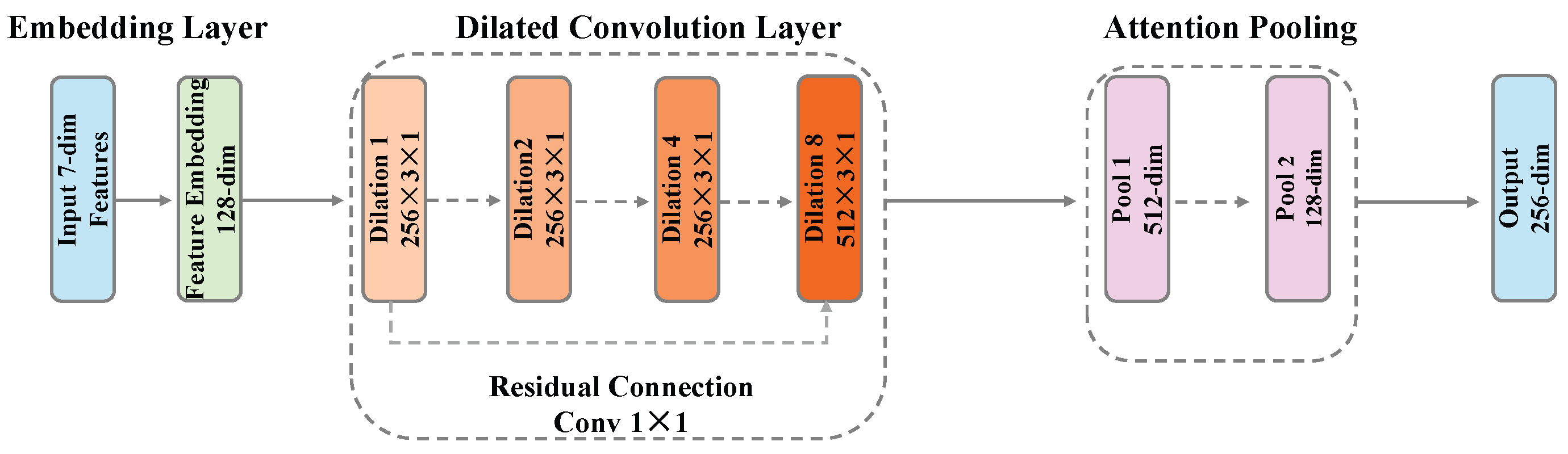

3.4.2. Packet Sequence Temporal Convolutional Network (TCN)

- Layer 1: 256 convolution kernels, dilation rate 1, capturing direct relationships between adjacent packets

- Layer 2: 256 convolution kernels, dilation rate 2, capturing relationships between packets two positions apart (skipping one intermediate packet)

- Layer 3: 256 convolution kernels, dilation rate 4, capturing larger-range temporal patterns

- Layer 4: 512 convolution kernels, dilation rate 8, capturing long-range dependencies
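The dilation schedule above determines how many consecutive packets the TCN can see. The sketch below computes that receptive field and implements a single-channel causal dilated convolution to show what a dilation rate does. The kernel size of 3 and the use of one convolution per layer are assumptions (the text does not state them), and the 256/512 channel counts are not modeled.

```python
from typing import List, Sequence

DILATIONS = (1, 2, 4, 8)  # from the paper
KERNEL_SIZE = 3           # hypothetical: kernel size is not stated in the text


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Receptive field of stacked dilated convolutions (one conv per layer)."""
    return 1 + (kernel_size - 1) * sum(dilations)


def dilated_causal_conv1d(x: Sequence[float], weights: Sequence[float],
                          dilation: int) -> List[float]:
    """Single-channel causal dilated convolution with left zero-padding.

    y[t] = sum_i weights[i] * x[t - i * dilation], with x[t'] = 0 for t' < 0,
    so the output has the same length as the input and never looks ahead.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            idx = t - i * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


if __name__ == "__main__":
    print(receptive_field(KERNEL_SIZE, DILATIONS))  # 31 packets
    # With weights (0, 0, 1) the output at t is x[t - 2 * dilation].
    print(dilated_causal_conv1d([1, 2, 3, 4, 5, 6], [0.0, 0.0, 1.0], dilation=2))
```

Under these assumptions the four layers cover 1 + 2 × (1 + 2 + 4 + 8) = 31 consecutive packets. TCN residual blocks in the style of Bai et al. stack two convolutions per dilation level, which would give 1 + 2 × 2 × 15 = 61 packets instead.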

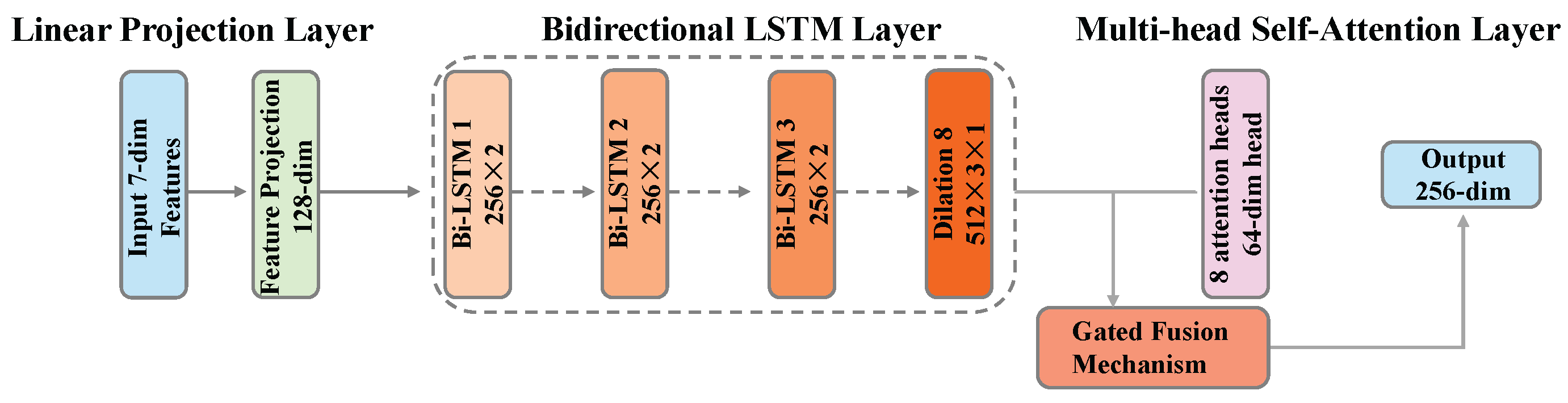

3.4.3. Bidirectional LSTM with Attention Module

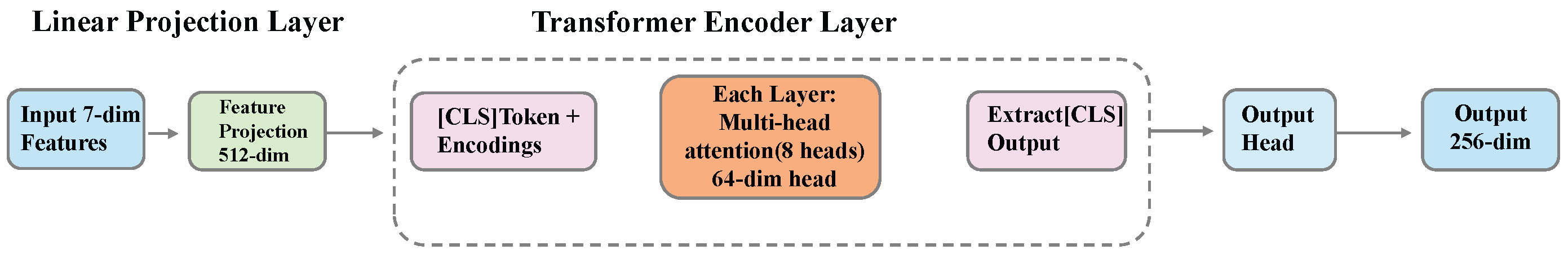
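The introduction says that the BiLSTM module fuses recurrent features with self-attention outputs "through gating mechanisms" but does not give the formula. The sketch below uses one common gated-fusion form, g = sigmoid(W[h; a] + b) and out = g ⊙ h + (1 − g) ⊙ a. This form, and the names `gated_fusion`, `w`, and `b`, are assumptions for illustration only.

```python
import math
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(h: Vector, a: Vector, w: List[Vector], b: Vector) -> Vector:
    """Fuse a BiLSTM state `h` with a self-attention output `a` via a learned gate.

    Assumed form (not confirmed by the text):
        g   = sigmoid(W [h; a] + b)
        out = g * h + (1 - g) * a          (element-wise)
    `w` has one row per output dimension and len(h) + len(a) columns.
    """
    if len(h) != len(a):
        raise ValueError("h and a must have the same dimension")
    concat = h + a  # list concatenation: [h; a]
    out = []
    for j in range(len(h)):
        g = sigmoid(sum(wij * xj for wij, xj in zip(w[j], concat)) + b[j])
        out.append(g * h[j] + (1.0 - g) * a[j])
    return out
```

A gate near 1 keeps the recurrent feature and a gate near 0 keeps the attention output, so the model can choose per dimension and per sample which view to trust.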

3.4.4. Transformer Global Feature Extractor

- Number of attention heads: 8;

- Dimension per head: 64 (total 512 dimensions);

- Feed-forward network dimension: 2048;

- Activation function: GELU;

- Dropout rate: 0.1.
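The configuration above can be captured as a small record, which also checks that 8 heads of 64 dimensions give the stated 512-dimensional model width. The sketch below additionally shows the exact GELU activation and the [CLS] mechanism, in which a special vector is prepended to the token sequence and its final hidden state serves as the sequence summary. The class and function names are hypothetical, and in a trained model the [CLS] vector would be a learned parameter rather than a fixed input.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TransformerConfig:
    num_heads: int = 8        # from the paper
    head_dim: int = 64        # from the paper
    ffn_dim: int = 2048       # from the paper
    dropout: float = 0.1      # from the paper
    activation: str = "gelu"  # from the paper

    @property
    def model_dim(self) -> int:
        # 8 heads x 64 dims per head = 512-dimensional model width
        return self.num_heads * self.head_dim


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def prepend_cls(tokens: List[List[float]], cls_embedding: List[float]) -> List[List[float]]:
    """Prepend a [CLS] vector; its final hidden state summarizes the sequence."""
    if any(len(t) != len(cls_embedding) for t in tokens):
        raise ValueError("token and [CLS] dimensions must match")
    return [list(cls_embedding)] + [list(t) for t in tokens]
```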

3.5. Adaptive Feature Fusion

3.5.1. Attention-Driven Weighting Mechanism

- Byte features: dimensions (expansion projection);

- TCN features: dimensions (semantic alignment);

- LSTM features: dimensions (semantic alignment);

- Transformer features: dimensions (semantic alignment).

- For highly encrypted traffic, byte-level features may contain limited information, making temporal features more important.

- For applications with obvious interaction patterns (such as chat), LSTM and Transformer features may be more discriminative.

- For streaming media applications, statistical features of packet sequences (TCN) may be most critical.
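The adaptive weighting described above can be sketched as a softmax over per-modality scores followed by a weighted sum of the modality features. This sketch assumes all four feature vectors have already been projected to a common dimension and that the scores come from some learned scoring layer, which is hypothetical here and simply passed in as numbers.

```python
import math
from typing import Dict, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_fusion(features: Dict[str, List[float]],
                    scores: Dict[str, float]) -> Tuple[List[float], Dict[str, float]]:
    """Fuse modality features with softmax-normalized attention weights.

    Assumes all modality vectors were already projected to a common dimension,
    and that `scores` comes from a learned scoring layer (hypothetical here).
    """
    names = list(features)
    weights = softmax([scores[n] for n in names])
    dim = len(features[names[0]])
    fused = [0.0] * dim
    for name, w in zip(names, weights):
        for j, v in enumerate(features[name]):
            fused[j] += w * v
    return fused, dict(zip(names, weights))
```

Because the weights are computed per sample, a flow whose TCN score is high (for example, streaming media) receives most of its fused representation from the TCN features, while other flows can lean on different modalities.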

3.5.2. Cross-Modal Attention Mechanism

- Embedding dimension: 256;

- Number of attention heads: 8;

- Dimension per head: 32;

- Dropout rate: 0.1.

- Byte-level features may gain global context by attending to Transformer features;

- LSTM features may enhance understanding of local patterns by attending to TCN features;

- Transformer features may refine identification of specific protocols by attending to byte-level features.
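Each cross-modal pairing above is an instance of scaled dot-product attention in which the queries come from one modality and the keys and values from another. The sketch below shows a single head, softmax(QKᵀ/√d)V. The learned Q/K/V projections, the split into 8 heads of 32 dimensions, and dropout are omitted for clarity, so this illustrates the mechanism rather than the module itself.

```python
import math
from typing import List

Matrix = List[List[float]]

EMBED_DIM = 256                    # from the paper
NUM_HEADS = 8                      # from the paper
HEAD_DIM = EMBED_DIM // NUM_HEADS  # 32, matching the stated per-head dimension


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    `queries` come from one modality (e.g., byte-level tokens) and
    `keys`/`values` from another (e.g., Transformer tokens).
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        attn = softmax(scores)
        out.append([sum(a * v[j] for a, v in zip(attn, values))
                    for j in range(len(values[0]))])
    return out
```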

- First layer: dimensions, with batch normalization, ReLU activation, and 0.3 dropout.

- Second layer: dimensions, with batch normalization, ReLU activation, and 0.2 dropout.

- Third layer: dimensions, outputting the final fused features.

3.6. Conditional Hierarchical Classifier

3.6.1. Hierarchical Structure Design

- First fully connected block: dimensions, followed by batch normalization, ReLU activation, and 0.3 dropout. This layer compresses the fused features into a more compact representation, and its relatively high dropout rate helps prevent overfitting.

- Second fully connected block: dimensions, also equipped with batch normalization, ReLU activation, and 0.3 dropout. This layer further refines features while maintaining dimensionality, learning representations more suitable for hierarchical classification.

- First layer: dimensions, batch normalization + ReLU + 0.3 dropout;

- Second layer: dimensions, outputting classification logits for Level 1.

- Input: [Shared features (256 dim), Level 1 probabilities ( dim)];

- First layer: dimensions, batch normalization + ReLU + 0.3 dropout;

- Second layer: dimensions, outputting classification logits for Level 2.

- Input: [Shared features (256 dim), Level 2 probabilities ( dim)];

- First layer: dimensions, batch normalization + ReLU + 0.3 dropout;

- Second layer: dimensions, outputting classification logits for Level 3.

3.6.2. Conditional Probability Modeling

- Feature-level conditioning: Classification heads at subsequent levels receive the probability distribution from the previous level as additional input, providing explicit conditional information.

- Implicit regularization: Because the classification heads at all levels share the same feature extraction layer, they are softly constrained to learn consistent representations.

- Loss function constraints: During training, we not only optimize classification accuracy at each level but also ensure predictions conform to predefined hierarchical structure through hierarchical consistency loss (detailed in Section 3.7).
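The feature-level conditioning described above can be sketched as follows: the Level 2 head receives the shared features concatenated with the full Level 1 probability vector, and the Level 3 head receives the shared features concatenated with the Level 2 probabilities. In this sketch, single linear layers (`linear_head`, a hypothetical helper) stand in for the two-layer classification heads. Plain Python has no automatic differentiation, so only the data flow is shown; in an autodiff framework, the same wiring lets the Level 3 loss send gradients back into the Level 1 head through the Softmax outputs.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Head = Callable[[Vector], Vector]  # maps an input vector to class logits


def softmax(z: Vector) -> Vector:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def linear_head(weights: List[Vector], bias: Vector) -> Head:
    """Hypothetical stand-in for a classification head (the paper uses two layers)."""
    def head(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return head


def conditional_forward(shared: Vector, head1: Head, head2: Head, head3: Head
                        ) -> Tuple[Vector, Vector, Vector]:
    """Soft conditioning: each lower level sees the upper level's full distribution."""
    p1 = softmax(head1(shared))
    p2 = softmax(head2(shared + p1))  # [shared features; Level 1 probabilities]
    p3 = softmax(head3(shared + p2))  # [shared features; Level 2 probabilities]
    return p1, p2, p3
```

Passing `p1` rather than `argmax(p1)` is the key design choice: a hard decision would block gradients and discard how uncertain the upper level was.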

3.6.3. Inference Strategy

3.7. Loss Function Design

3.7.1. Weighted Classification Loss

3.7.2. Hierarchical Consistency Loss

3.7.3. Feature Diversity Regularization

3.7.4. Total Loss Function
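Only the weights of the total loss are given in this text: 0.3/0.3/0.4 for classification, 0.1 for consistency, and 0.01 for diversity. The per-sample sketch below makes three assumptions. First, the total loss is a plain weighted sum. Second, the three classification weights apply to Levels 1, 2, and 3 in that order. Third, the consistency and diversity terms are treated as precomputed scalars, because their formulas are not reproduced here.

```python
import math
from typing import Sequence

# Weights from the paper's contribution list; the weighted-sum form is assumed.
LEVEL_WEIGHTS = (0.3, 0.3, 0.4)
LAMBDA_CONSISTENCY = 0.1
LAMBDA_DIVERSITY = 0.01


def cross_entropy(probs: Sequence[float], target: int, eps: float = 1e-12) -> float:
    """Negative log-likelihood of the target class under a predicted distribution."""
    return -math.log(max(probs[target], eps))


def total_loss(level_probs: Sequence[Sequence[float]], targets: Sequence[int],
               consistency_loss: float, diversity_loss: float) -> float:
    """L = sum_k w_k * CE_k + 0.1 * L_cons + 0.01 * L_div (per sample)."""
    if len(level_probs) != len(LEVEL_WEIGHTS) or len(targets) != len(LEVEL_WEIGHTS):
        raise ValueError("expected one distribution and one target per level")
    cls = sum(w * cross_entropy(p, t)
              for w, p, t in zip(LEVEL_WEIGHTS, level_probs, targets))
    return cls + LAMBDA_CONSISTENCY * consistency_loss + LAMBDA_DIVERSITY * diversity_loss
```

With uniform predictions over 2, 4, and 8 classes, the classification part equals (0.3 + 0.6 + 1.2) ln 2 = 2.1 ln 2, so the larger Level 3 weight makes fine-grained errors dominate the objective.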

4. Experimental Evaluation and Analysis

4.1. Experimental Setup

4.1.1. Dataset Description

4.1.2. Baseline Methods

- Random Forest [1]: We extract 28-dimensional statistical features including packet size statistics (mean, variance, maximum, minimum), inter-arrival time distribution, packet direction ratio, byte entropy, flow duration, and idle time. The classifier uses n_estimators = 100 and max_depth = 20, following [1] which achieved 98% accuracy on CIC-Darknet2020.

- XGBoost [4]: Using the same 28 statistical features, we apply feature selection to retain the top 30 most discriminative features following [4]. Hyperparameters are n_estimators = 100, learning_rate = 0.1, and max_depth = 6, which are commonly adopted values for gradient boosting in traffic classification.

- SVM: Configured with an RBF kernel (C = 1.0, gamma = 'scale') using the same 28-dimensional feature set as Random Forest.

- DarkDetect [7]: This CNN-LSTM hybrid architecture consists of 3 convolutional layers (64, 128, 256 filters with kernel size 3) followed by 2 bidirectional LSTM layers (256 hidden units per direction). The original method targets binary darknet detection; we extend it to three-level hierarchical classification by adding separate classification heads for Level 1, Level 2, and Level 3. Training follows the unified deep learning setup with architectural parameters preserved from [7].

- DIDarknet [8]: This image-based method converts raw traffic into grayscale images and employs ResNet-50 pretrained on ImageNet. Following [8], we use the first 784 packets to construct traffic images through temporal binning. The pretrained backbone is fine-tuned with learning_rate = while maintaining other settings consistent with the unified setup.

- ODTC (adapted) [21]: As the state-of-the-art multi-modal method for darknet traffic classification, ODTC employs CNN and BiGRU fusion with multi-head attention [21]. The original architecture performs flat classification; we adapt it to hierarchical classification while preserving its core design:

  - CNN module: 3 convolutional layers with filters (kernel size 3), batch normalization, ReLU activation, and max pooling;
  - BiGRU module: 2 bidirectional GRU layers with 128 hidden units per direction, processing packet-level temporal features;
  - Attention fusion: 4-head multi-head attention (dimension 512) to fuse spatial and temporal features;
  - Hierarchical adaptation: We train three independent classifiers ( for each level) on the fused 512-dimensional features. During inference, post-hoc hierarchical constraints ensure predictions satisfy parent-child relationships.

- Stacking Ensemble [16]: Following [16], we implement a two-layer stacking architecture where Random Forest, XGBoost, and a 2-layer neural network (128 → 64 hidden units) serve as base learners. Their predictions are combined through a logistic regression meta-learner. All base learners use the same 28-dimensional statistical features.
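The ODTC adaptation relies on post-hoc hierarchical constraints at inference time. The text does not specify which constraint is used, so the sketch below shows one plausible variant: greedy top-down decoding, in which each level may only choose among the children of the class selected at the level above. The function names and the parent maps `parent2` and `parent3` are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def argmax_over(probs: Sequence[float], allowed: Sequence[int]) -> int:
    """Index in `allowed` with the highest probability (raises if `allowed` is empty)."""
    return max(allowed, key=lambda c: probs[c])


def constrained_decode(p1: Sequence[float], p2: Sequence[float], p3: Sequence[float],
                       parent2: Dict[int, int], parent3: Dict[int, int]) -> Tuple[int, int, int]:
    """Greedy top-down decoding that only allows children of the chosen parent.

    parent2 maps each Level 2 class to its Level 1 parent; parent3 maps each
    Level 3 class to its Level 2 parent. Every chosen parent is assumed to have
    at least one child. This is one assumed variant of a post-hoc constraint.
    """
    c1 = argmax_over(p1, range(len(p1)))
    c2 = argmax_over(p2, [c for c in range(len(p2)) if parent2[c] == c1])
    c3 = argmax_over(p3, [c for c in range(len(p3)) if parent3[c] == c2])
    return c1, c2, c3
```

With p1 = [0.6, 0.4] and p2 = [0.3, 0.2, 0.5], independent argmax decoding would choose Level 1 class 0 but Level 2 class 2, whose parent is class 1. The constrained decoder instead returns a consistent path. Because the three classifiers are still trained independently, such a constraint can only repair inconsistencies after the fact.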

4.1.3. Experimental Environment and Implementation Details

4.1.4. Evaluation Metrics

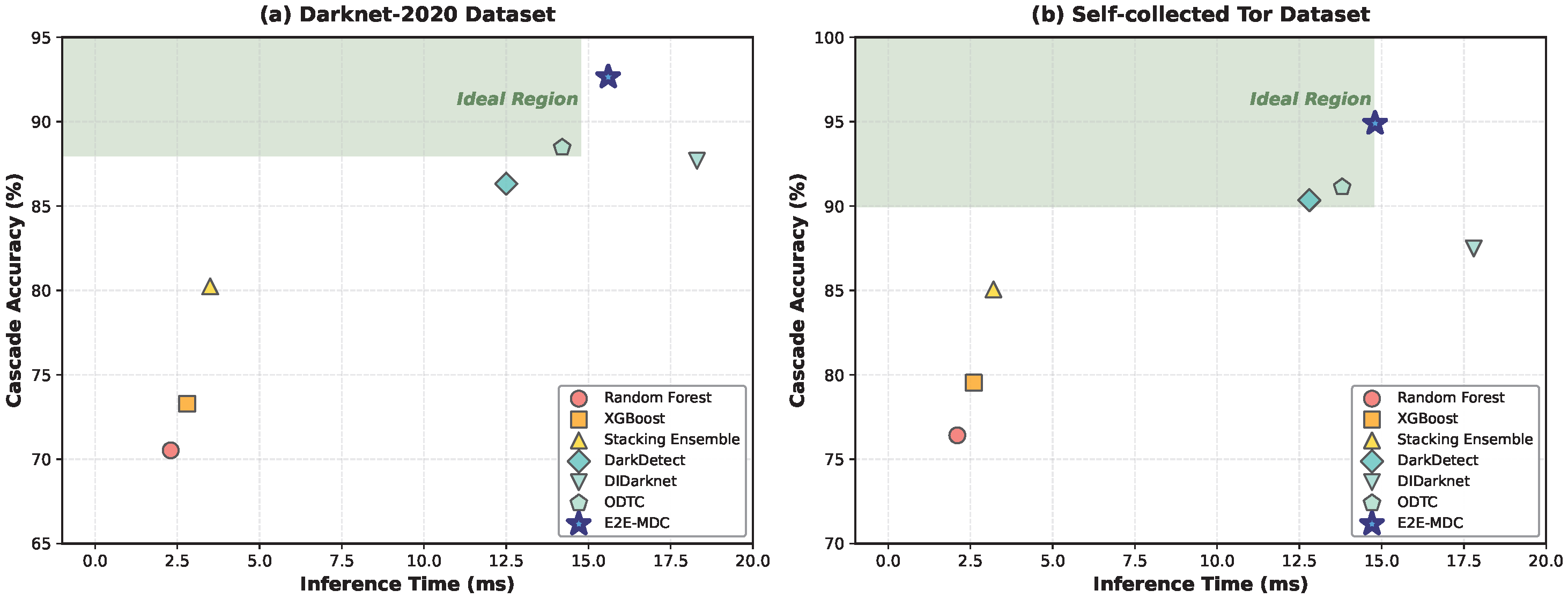
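The introduction quotes two hierarchy-specific metrics: cascade accuracy (94.90%) and hierarchical violation rate (below 0.8%). The sketch below computes both under definitions that are assumptions consistent with the metric names. Cascade accuracy counts a sample as correct only if all three levels are correct. A violation is a predicted path whose Level 2 class is not a child of its Level 1 class, or whose Level 3 class is not a child of its Level 2 class. The parent maps `parent2` and `parent3` are hypothetical inputs.

```python
from typing import Dict, Sequence, Tuple

Path = Tuple[int, int, int]  # (Level 1, Level 2, Level 3) class indices


def cascade_accuracy(preds: Sequence[Path], labels: Sequence[Path]) -> float:
    """Fraction of samples whose predictions are correct at all three levels."""
    hits = sum(1 for p, y in zip(preds, labels) if p == y)
    return hits / len(labels)


def violation_rate(preds: Sequence[Path], parent2: Dict[int, int],
                   parent3: Dict[int, int]) -> float:
    """Fraction of predicted paths that break a parent-child relation."""
    bad = sum(1 for c1, c2, c3 in preds
              if parent2[c2] != c1 or parent3[c3] != c2)
    return bad / len(preds)
```

The two metrics are complementary: a prediction can be hierarchy-consistent yet wrong (it lowers cascade accuracy only), while any violating path is necessarily wrong at one or more levels.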

4.2. Main Experimental Results

4.2.1. Overall Comparison with Baseline Methods

4.2.2. Hierarchical Classification Performance Analysis

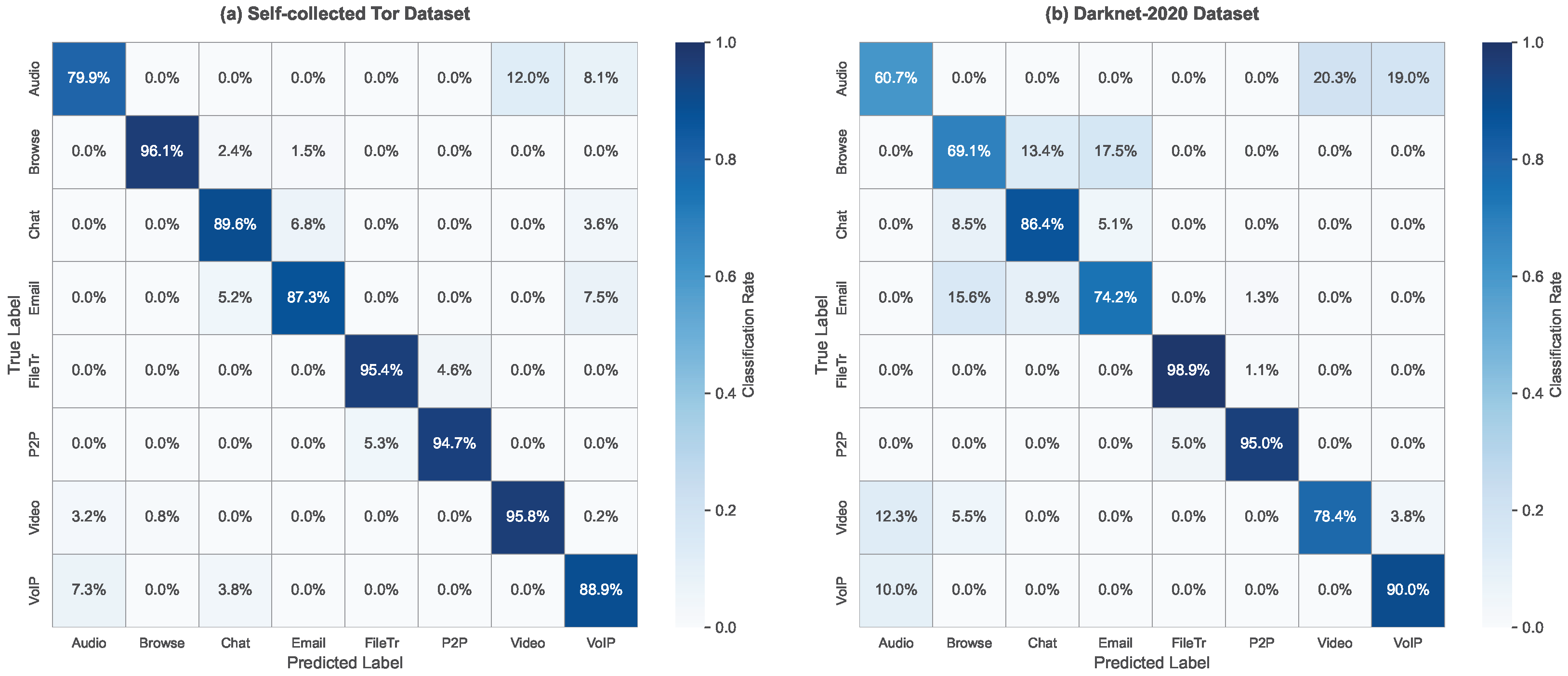

4.2.3. Fine-Grained Classification Results

4.3. Ablation Studies and Sensitivity Analysis

4.3.1. Module Contribution Analysis

4.3.2. Hyperparameter Sensitivity Analysis

4.4. Visualization Analysis

4.4.1. Confusion Matrix Analysis

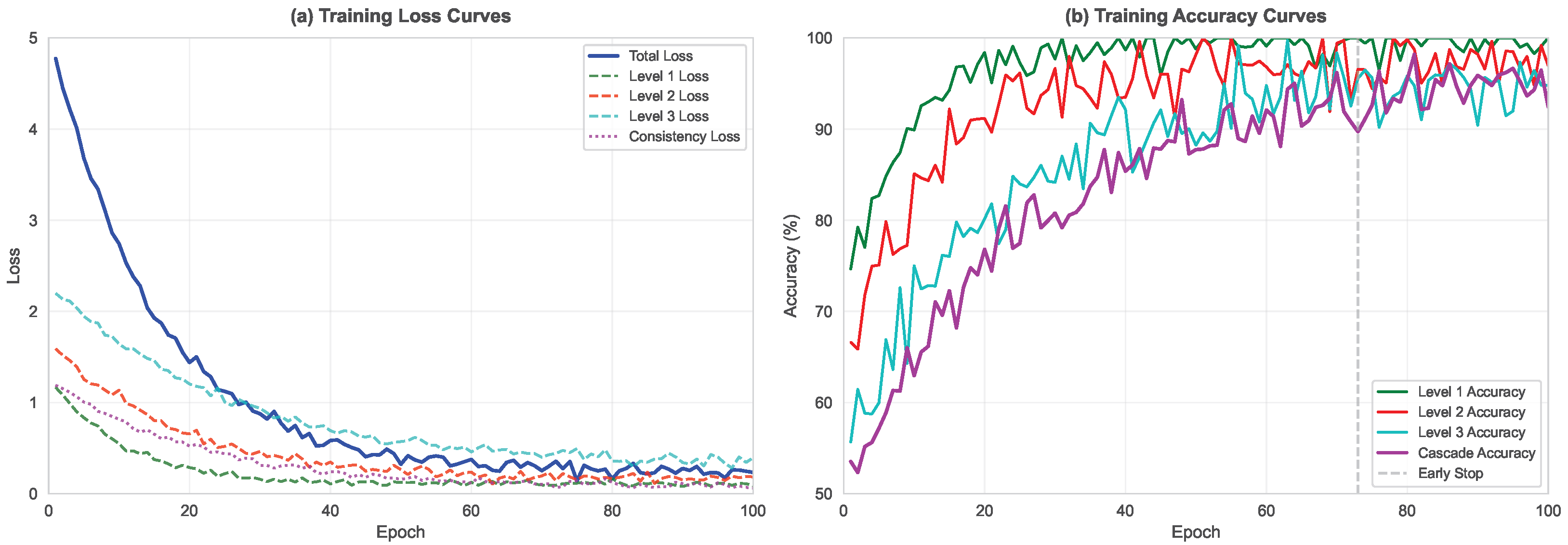

4.4.2. Training Process Visualization

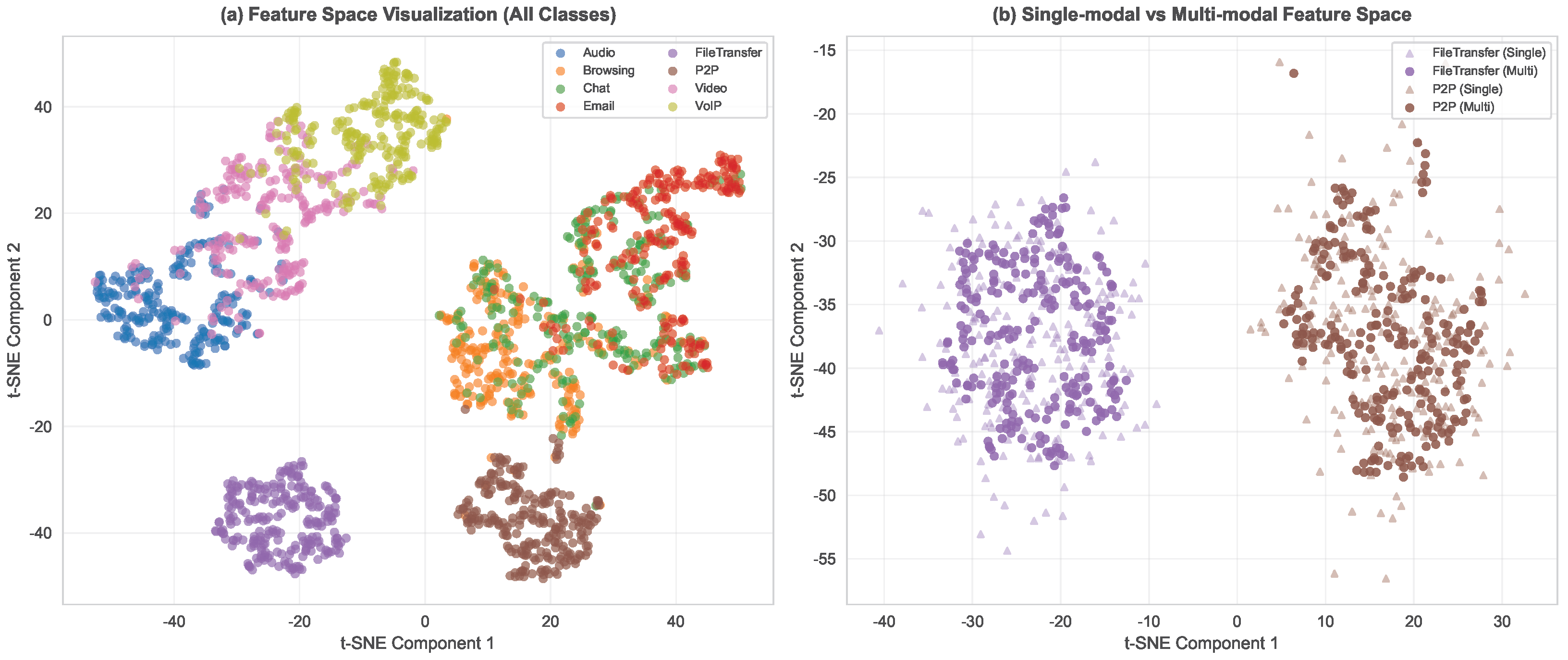

4.4.3. Feature Space Visualization

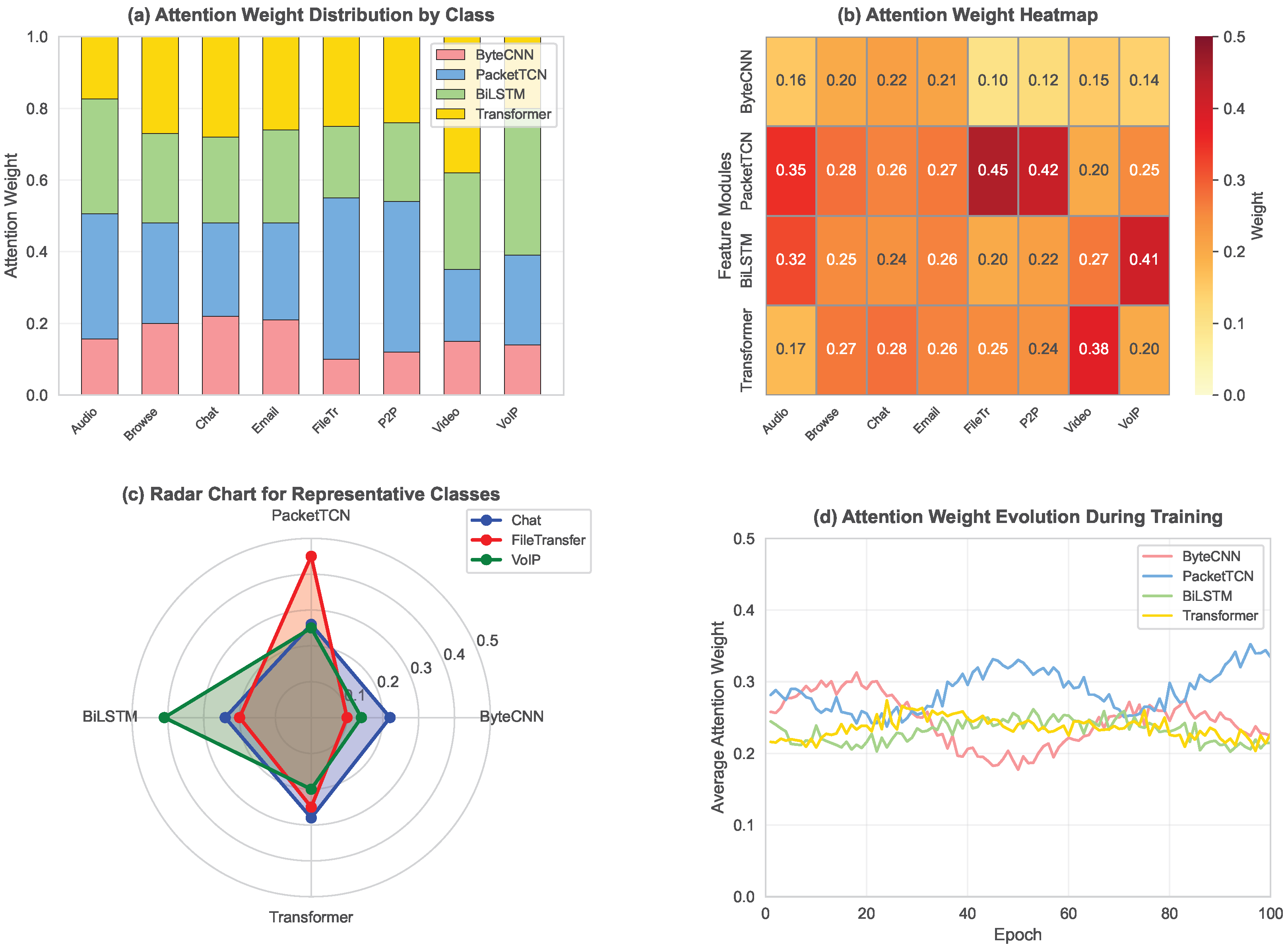

4.4.4. Attention Weight Distribution Visualization

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Rust-Nguyen, N.; Sharma, S.; Stamp, M. Darknet traffic classification and adversarial attacks using machine learning. Comput. Secur. 2023, 127, 103098.

2. Aceto, G.; Ciuonzo, D.; Montieri, A.; Pescapé, A. MIMETIC: Mobile encrypted traffic classification using multimodal deep learning. Comput. Netw. 2019, 165, 106944.

3. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

4. Marim, M.C.; Ramos, P.V.B.; Vieira, A.B.; Galletta, A.; Villari, M.; Oliveira, R.M.; Silva, E.F. Darknet traffic detection and characterization with models based on decision trees and neural networks. Intell. Syst. Appl. 2023, 18, 200199.

5. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

6. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. In Advances in Neural Information Processing Systems 30 (NIPS 2017); Curran Associates: Long Beach, CA, USA, 2017; pp. 3146–3154.

7. Sarwar, M.B.; Hanif, M.K.; Talib, R.; Younas, M.; Sarwar, M.U. DarkDetect: Darknet Traffic Detection and Categorization Using Modified Convolution-Long Short-Term Memory. IEEE Access 2021, 9, 113705–113713.

8. Habibi Lashkari, A.; Kaur, G.; Rahali, A. DIDarknet: A Contemporary Approach to Detect and Characterize the Darknet Traffic using Deep Image Learning. In Proceedings of the 10th International Conference on Communication and Network Security, Tokyo, Japan, 27–29 November 2020; pp. 1–13.

9. Lan, J.; Liu, X.; Li, B.; Li, Y.; Geng, T. DarknetSec: A novel self-attentive deep learning method for darknet traffic classification and application identification. Comput. Secur. 2022, 116, 102663.

10. Hu, Y.; Zou, F.; Li, L.; Yi, P. Traffic Classification of User Behaviors in Tor, I2P, ZeroNet, Freenet. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December 2020–1 January 2021; pp. 418–424.

11. Montieri, A.; Ciuonzo, D.; Bovenzi, G.; Persico, V.; Pescapé, A. A dive into the dark web: Hierarchical traffic classification of anonymity tools. IEEE Trans. Netw. Sci. Eng. 2020, 7, 1043–1054.

12. Dingledine, R.; Mathewson, N.; Syverson, P. Tor: The second-generation onion router. In Proceedings of the 13th USENIX Security Symposium, San Diego, CA, USA, 9–13 August 2004; pp. 303–320.

13. Pudjihartono, N.; Fadason, T.; Kempa-Liehr, A.W.; O’Sullivan, J.M. A review of feature selection methods for machine learning-based disease risk prediction. Front. Bioinform. 2022, 2, 927312.

14. Bolón-Canedo, V.; Alonso-Betanzos, A. Ensembles for feature selection: A review and future trends. Inf. Fusion 2019, 52, 1–12.

15. Song, B.; Chang, Y.; Liao, M.; Wang, Y.; Chen, J.; Wang, N. CDBC: A novel data enhancement method based on improved between-class learning for darknet detection. Math. Biosci. Eng. 2023, 20, 14959–14977.

16. Almomani, A. Darknet traffic analysis and classification system based on modified stacking ensemble learning algorithms. Inf. Syst.-Bus. Manag. 2023, 21, 241–276.

17. Mohanty, H.; Roudsari, A.H.; Lashkari, A.H. Robust stacking ensemble model for darknet traffic classification under adversarial settings. Comput. Secur. 2022, 120, 102830.

18. Shapira, T.; Shavitt, Y. FlowPic: A generic representation for encrypted traffic classification and applications identification. IEEE Trans. Netw. Serv. Manag. 2021, 18, 1218–1232.

19. Dong, C.; Zhang, C.; Lu, Z.; Liu, B.; Jiang, B. CETAnalytics: Comprehensive effective traffic information analytics for encrypted traffic classification. Comput. Netw. 2020, 176, 107258.

20. Lin, P.; Ye, K.; Xu, C.-Z. A novel multimodal deep learning framework for encrypted traffic classification. IEEE Trans. Netw. Serv. Manag. 2022, 19, 2427–2443.

21. Zhai, J.; Sun, H.; Xu, C.; Sun, W. ODTC: An online darknet traffic classification model based on multimodal self-attention chaotic mapping features. Electron. Res. Arch. 2023, 31, 5056–5082.

22. Li, Z.; Bu, L.; Wang, Y.; Ma, Q.; Tan, Y.; Bu, F. Hierarchical Perception for Encrypted Traffic Classification via Class Incremental Learning. Comput. Secur. 2025, 149, 104195.

23. Patil, S.; Dhage, S. Darkweb research: Past, present, and future trends and mapping to sustainable development goals. Heliyon 2023, 9, e22269.

24. Aceto, G.; Ciuonzo, D.; Montieri, A.; Pescapé, A. Mobile encrypted traffic classification using deep learning: Experimental evaluation, lessons learned, and challenges. IEEE Trans. Netw. Serv. Manag. 2019, 16, 445–458.

25. Abu Al-Haija, Q.; Krichen, M.; Abu Elhaija, W. Machine-learning-based darknet traffic detection system for IoT applications. Electronics 2022, 11, 556.

26. Lin, M.; Chen, Q.; Yan, S. Network in network. In Proceedings of the 2nd International Conference on Learning Representations (ICLR), Banff, AB, Canada, 14–16 April 2014.

27. Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.

28. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

29. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

30. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. In Proceedings of the 7th International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

| Method | Level 1 (%) | Level 2 (%) | Level 3 (%) | Cascade Acc (%) | F1-Score (%) | p-Value (vs. Ours) |
|---|---|---|---|---|---|---|
| Random Forest | | | | | | <0.001 |
| XGBoost | | | | | | <0.001 |
| Stacking Ensemble | | | | | | <0.001 |
| DarkDetect | | | | | | <0.001 |
| DIDarknet | | | | | | <0.001 |
| ODTC | | | | | | <0.001 |
| E2E-MDC (Ours) | | | | | | — |

| Method | Level 1 (%) | Level 2 (%) | Level 3 (%) | Cascade Acc (%) | F1-Score (%) | p-Value (vs. Ours) |
|---|---|---|---|---|---|---|

| Random Forest | — | <0.001 | ||||

| XGBoost | — | <0.001 | ||||

| Stacking Ensemble | — | <0.001 | ||||

| DarkDetect | — | <0.001 | ||||

| DIDarknet | — | <0.001 | ||||

| ODTC | — | |||||

| E2E-MDC (Ours) | — |

| Method | Public Dataset | Self-Collected Dataset | ||

|---|---|---|---|---|

| (%) | (%) | (%) | (%) | |

| Random Forest | ||||

| XGBoost | ||||

| Stacking Ensemble | ||||

| DarkDetect (CNN-LSTM) | ||||

| DIDarknet (ResNet-50) | ||||

| ODTC | ||||

| E2E-MDC (Ours) | ||||

| Category | Precision (%) | Recall (%) | F1-Score (%) | Main Confusion Categories |
|---|---|---|---|---|

| Audio | 95.7 | 96.7 | video | |

| Browsing | 78.4 | 90.2 | email, chat | |

| Chat | 97.4 | 86.4 | browsing | |

| Email | 74.2 | 74.2 | browsing | |

| Filetransfer | 97.3 | 98.9 | p2p | |

| P2P | 93.4 | 95.0 | filetransfer | |

| Video | 89.4 | 78.4 | audio, browsing | |

| VoIP | 100.0 | 90.3 | audio |

| Category | Precision (%) | Recall (%) | F1-Score (%) | Main Confusion Categories |
|---|---|---|---|---|

| Audio | 88.7 | 85.3 | video, voip | |

| Browsing | 94.4 | 96.1 | chat, email | |

| Chat | 91.2 | 89.6 | browsing, email | |

| Email | 89.5 | 87.3 | browsing, chat | |

| Filetransfer | 96.8 | 95.4 | p2p | |

| P2P | 95.3 | 94.7 | filetransfer | |

| Video | 93.6 | 95.8 | audio, browsing | |

| VoIP | 92.1 | 88.9 | audio, chat |

| Configuration | Level 3 (%) | Cascade Acc (%) | F1-Score (%) | Params (M) |
|---|---|---|---|---|
| Complete Model | 95.02 | 94.90 | 94.84 | 12.1 |
| w/o ByteCNN | 93.41 | 92.18 | 92.76 | 9.8 |
| w/o PacketTCN | 92.76 | 91.45 | 91.89 | 10.3 |
| w/o BiLSTM | 92.15 | 90.87 | 91.34 | 10.9 |
| w/o Transformer | 93.67 | 92.84 | 93.12 | 9.2 |
| w/o Attention Fusion | 91.83 | 89.76 | 90.45 | 11.8 |
| w/o Conditional Hier. | 89.34 | 84.52 | 88.67 | 11.9 |
| w/o Consistency Loss | 93.28 | 91.34 | 92.41 | 12.1 |
| Independent Classifiers | 88.76 | 79.23 | 87.92 | 12.3 |
| Flat Classification | 91.45 | — | 90.78 | 8.7 |
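To make the ablation arithmetic explicit, the sketch below recomputes each configuration's cascade-accuracy drop relative to the complete model, using only the values in the ablation table. Flat classification is skipped because it has no cascade accuracy. The dictionary and function names are illustrative.

```python
from typing import Dict, Optional, Tuple

# (Level 3 acc %, cascade acc %, F1 %, params in M), copied from the ablation table;
# None marks the cascade entry that does not apply to flat classification.
ABLATION: Dict[str, Tuple[float, Optional[float], float, float]] = {
    "Complete Model": (95.02, 94.90, 94.84, 12.1),
    "w/o ByteCNN": (93.41, 92.18, 92.76, 9.8),
    "w/o PacketTCN": (92.76, 91.45, 91.89, 10.3),
    "w/o BiLSTM": (92.15, 90.87, 91.34, 10.9),
    "w/o Transformer": (93.67, 92.84, 93.12, 9.2),
    "w/o Attention Fusion": (91.83, 89.76, 90.45, 11.8),
    "w/o Conditional Hier.": (89.34, 84.52, 88.67, 11.9),
    "w/o Consistency Loss": (93.28, 91.34, 92.41, 12.1),
    "Independent Classifiers": (88.76, 79.23, 87.92, 12.3),
    "Flat Classification": (91.45, None, 90.78, 8.7),
}


def cascade_drops() -> Dict[str, float]:
    """Cascade-accuracy drop (percentage points) of each ablation vs. the full model."""
    full = ABLATION["Complete Model"][1]
    return {name: round(full - row[1], 2)
            for name, row in ABLATION.items()
            if name != "Complete Model" and row[1] is not None}


if __name__ == "__main__":
    for name, drop in sorted(cascade_drops().items(), key=lambda kv: -kv[1]):
        print(f"{name:<26} -{drop:.2f} pp")
```

The two largest drops come from the hierarchy-related variants: replacing the conditional heads with independent classifiers costs 15.67 points of cascade accuracy, and removing conditional hierarchy costs 10.38 points. The largest single-modality drop is 4.03 points (w/o BiLSTM).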

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Chen, Y.; Ji, Q.; Yu, W.; Ni, L.; Dai, C.; Kang, L.; Luo, J. E2E-MDC: End-to-End Multi-Modal Darknet Traffic Classification with Conditional Hierarchical Mechanism. Electronics 2025, 14, 4457. https://doi.org/10.3390/electronics14224457
