4.1. Experiment Setting

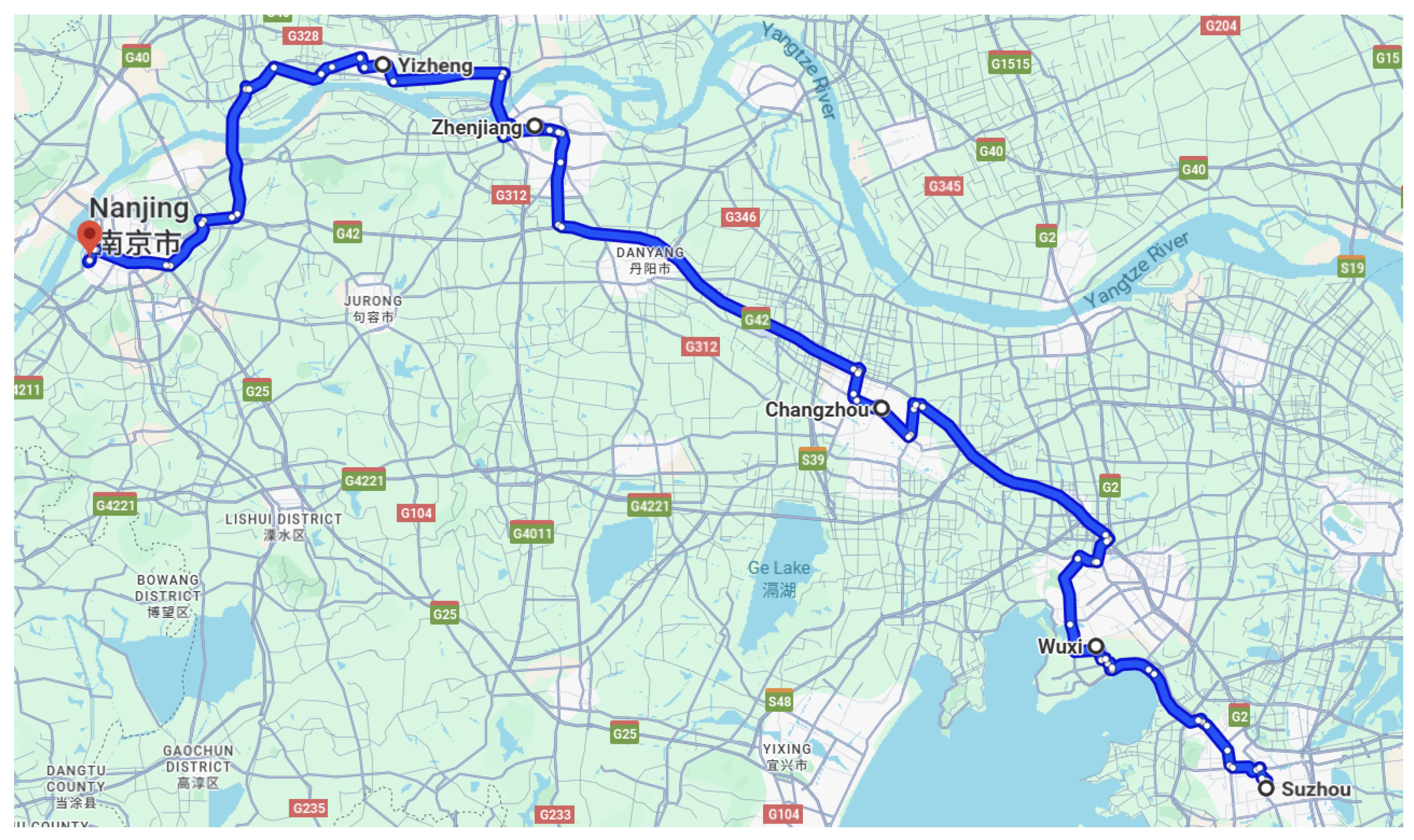

A simulated platoon of five fully charged Tesla EVs travels along a modeled Suzhou–Nanjing route, as shown in Figure 1. The route parameters are derived from realistic geographic and energy consumption data. The average energy-consumption rate is set to

, and each vehicle has a battery capacity of

. Most previous studies use four-vehicle platoons [23]. Here, we set the platoon size to five, which expands the action space by about five times (5! = 120 possible orderings versus 4! = 24) while keeping the computational cost manageable. This setting allows richer positional interactions, especially among mid-platoon vehicles, and creates a more representative dynamic re-sequencing scenario. It also produces a smoother, more statistically meaningful SOC distribution across vehicle positions, making the evaluation of energy balance clearer. In addition, the larger action space encourages more diverse policy exploration during DRL training, improving the generalization of the learned policy. When the platoon size exceeds six, however, the factorial growth of permutation-based actions makes the search space excessively large and the training time impractical. Thus, a platoon size of five provides a practical trade-off between behavioral richness and computational feasibility. All vehicles are assumed to maintain ideal V2V communication for state sharing, so that the analysis can focus on control policy learning and energy coordination.
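The growth argument above can be checked with a few lines of arithmetic. The following is a minimal sketch that assumes each action corresponds to one complete ordering of the platoon; the actual action set used in the experiments may differ (for example, by including a dedicated SOC-ranking action), and the helper name `num_orderings` is illustrative only.

```python
from math import factorial


def num_orderings(platoon_size: int) -> int:
    """Number of distinct vehicle orderings (permutations) of a platoon.

    Assumption: one action = one complete ordering of all vehicles.
    """
    return factorial(platoon_size)


# Compare platoon sizes 4 through 7 with the next smaller platoon.
for n in range(4, 8):
    growth = num_orderings(n) / num_orderings(n - 1)
    print(f"N={n}: {num_orderings(n):>5} orderings "
          f"({growth:.0f}x the N={n - 1} case)")
```

Under this assumption, moving from four to five vehicles multiplies the number of orderings by five, while a seven-vehicle platoon already has 5040 orderings.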

The platoon is allowed to reconfigure its formation at five designated re-sequencing stages between six checkpoints along the route: Suzhou, Wuxi, Changzhou, Zhenjiang, Yizheng, and Nanjing. The total route length is approximately 196.6 miles.

Based on the aerodynamic position parameters reported in ref. [26], the electricity usage reduction rates are set as

Since aerodynamic measurements beyond the third vehicle are not available in the existing literature and the incremental aerodynamic benefit beyond the third position is relatively small, we assume

Thus, the consumption matrix is constructed according to Equation (2) as follows:

The simulation is executed on an NVIDIA GeForce RTX 5090 (32 GB GDDR7) using the parameters listed in Table 1. Before fixing the final reward coefficients, we ran preliminary experiments to explore the typical ranges of the three objectives: SOC standard deviation, minimum remaining SOC, and formation stability. Because both the SOC standard deviation and the minimum remaining SOC naturally fall within [0, 1], the stability metric was normalized to the same scale. A balanced reward configuration was then chosen to maintain stable optimization among the three objectives. To avoid the agent's over-reliance on the SOC-ranking action, the terminal bonus and penalty were kept as small constants.
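To make the role of normalization concrete, the sketch below combines the three objectives into one scalar reward. It is an illustration under stated assumptions, not the reward defined in this paper: the linear form, the weight names `w_fair`, `w_energy`, and `w_stab`, their default values, and the choice to normalize instability by the platoon size are all hypothetical.

```python
def normalize_instability(changed_positions: int, platoon_size: int) -> float:
    """Map a raw count of changed positions to [0, 1].

    Assumption: the raw stability metric counts how many positions change
    occupant at a re-sequencing stage.
    """
    return changed_positions / platoon_size


def weighted_reward(soc_std: float, min_soc: float, instability: float,
                    w_fair: float = 1.0, w_energy: float = 1.0,
                    w_stab: float = 1.0) -> float:
    """Hypothetical linear reward over three terms that all lie in [0, 1].

    Lower SOC deviation and lower instability are rewarded, as is a
    higher minimum remaining SOC.
    """
    for name, value in (("soc_std", soc_std), ("min_soc", min_soc),
                        ("instability", instability)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return -w_fair * soc_std + w_energy * min_soc - w_stab * instability
```

Because every term shares the same [0, 1] scale, the weights directly express the relative importance of the objectives, which is what makes the sensitivity sweeps over the coefficients interpretable.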

4.1.1. Parameter Sensitivity Analysis

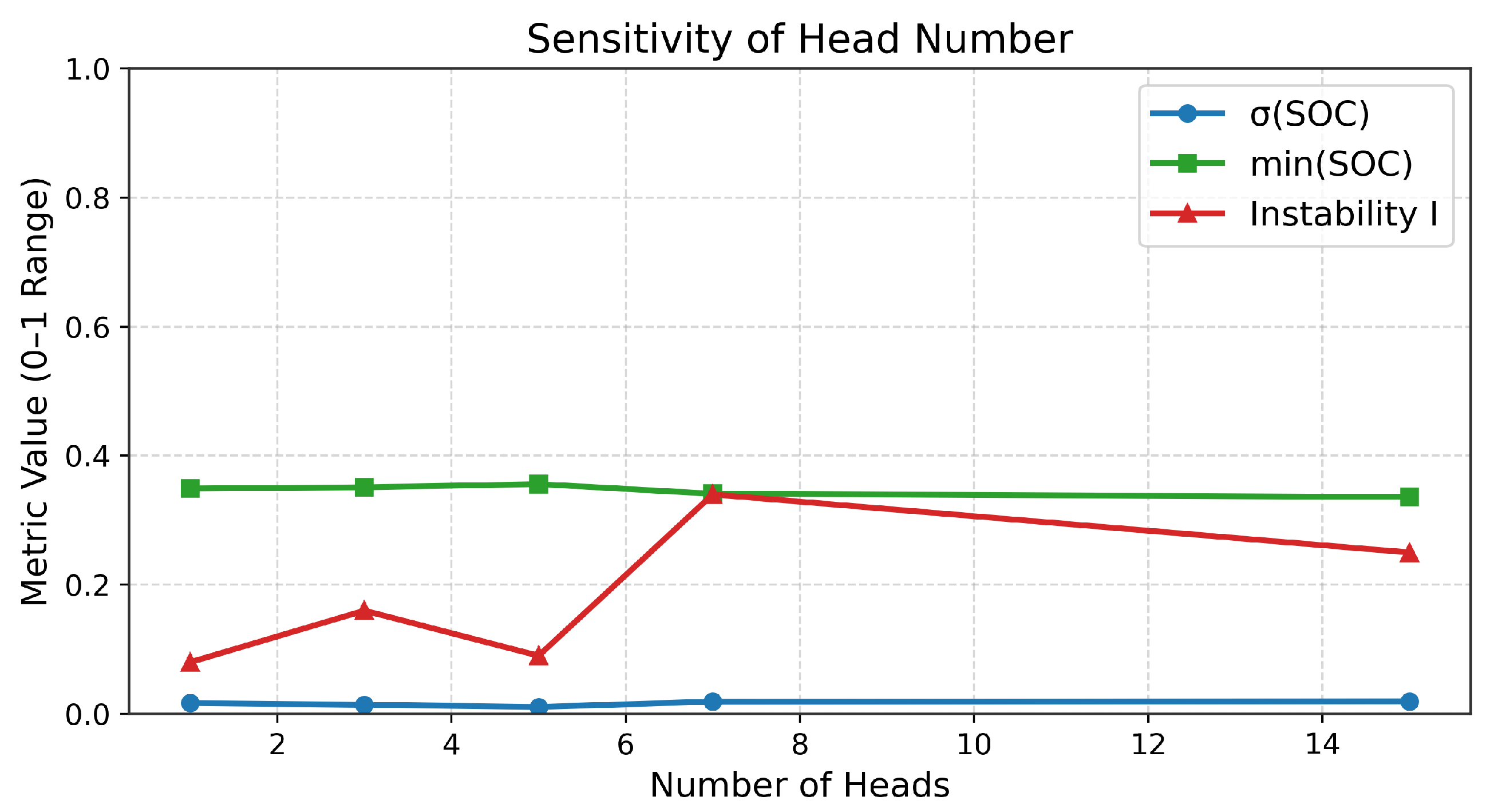

To validate the chosen parameter configuration, sensitivity analyses were performed to assess how key factors influence learning performance. Specifically, we examined the impact of the number of bootstrap heads in the Bootstrapped DQN and of the three reward coefficients: the fairness weight, the energy assurance weight, and the stability weight. The sensitivity tests for the number of heads were conducted over 20,000 training episodes, while those for the reward coefficients were each performed over 5000 episodes. These analyses confirmed that the selected configuration achieves a stable trade-off among fairness, energy assurance, and formation stability.

This sensitivity analysis examines how the number of bootstrap heads affects the learning performance of the Bootstrapped DQN. As shown in Table 2 and Figure 2, increasing the number of heads improves exploration at first, but too many heads may lead to unstable value estimation. The configuration with

achieves balanced performance in terms of SOC deviation, minimum SOC, stability, and average reward. This value is therefore adopted in the main experiments.
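For readers unfamiliar with the role of the heads, the following sketch shows the two head-related mechanisms of a generic Bootstrapped DQN: one head is sampled per episode to drive action selection, and a random mask decides which heads learn from each stored transition. It is a simplified illustration using only the standard library; the head count, the mask probability, and the random Q-values standing in for network outputs are placeholders, not the settings used in this paper.

```python
import random


def sample_active_head(rng: random.Random, num_heads: int) -> int:
    """Pick the head that drives action selection for one episode."""
    return rng.randrange(num_heads)


def bootstrap_mask(rng: random.Random, num_heads: int, keep_prob: float) -> list:
    """Per-transition mask: True means that head trains on the transition."""
    return [rng.random() < keep_prob for _ in range(num_heads)]


def greedy_action(q_values_per_head: list, head: int) -> int:
    """Greedy action with respect to the Q-values of a single head."""
    q = q_values_per_head[head]
    return max(range(len(q)), key=q.__getitem__)


rng = random.Random(0)
num_heads, num_actions = 4, 120  # placeholder sizes for illustration
# Stand-in for the per-head outputs of the Q-network.
q_values = [[rng.gauss(0.0, 1.0) for _ in range(num_actions)]
            for _ in range(num_heads)]

head = sample_active_head(rng, num_heads)
action = greedy_action(q_values, head)
mask = bootstrap_mask(rng, num_heads, keep_prob=0.5)
```

With more heads, the sampled policies become more diverse, but each head sees fewer effective updates under a fixed mask probability, which is one plausible reason why very large head counts can destabilize value estimation.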

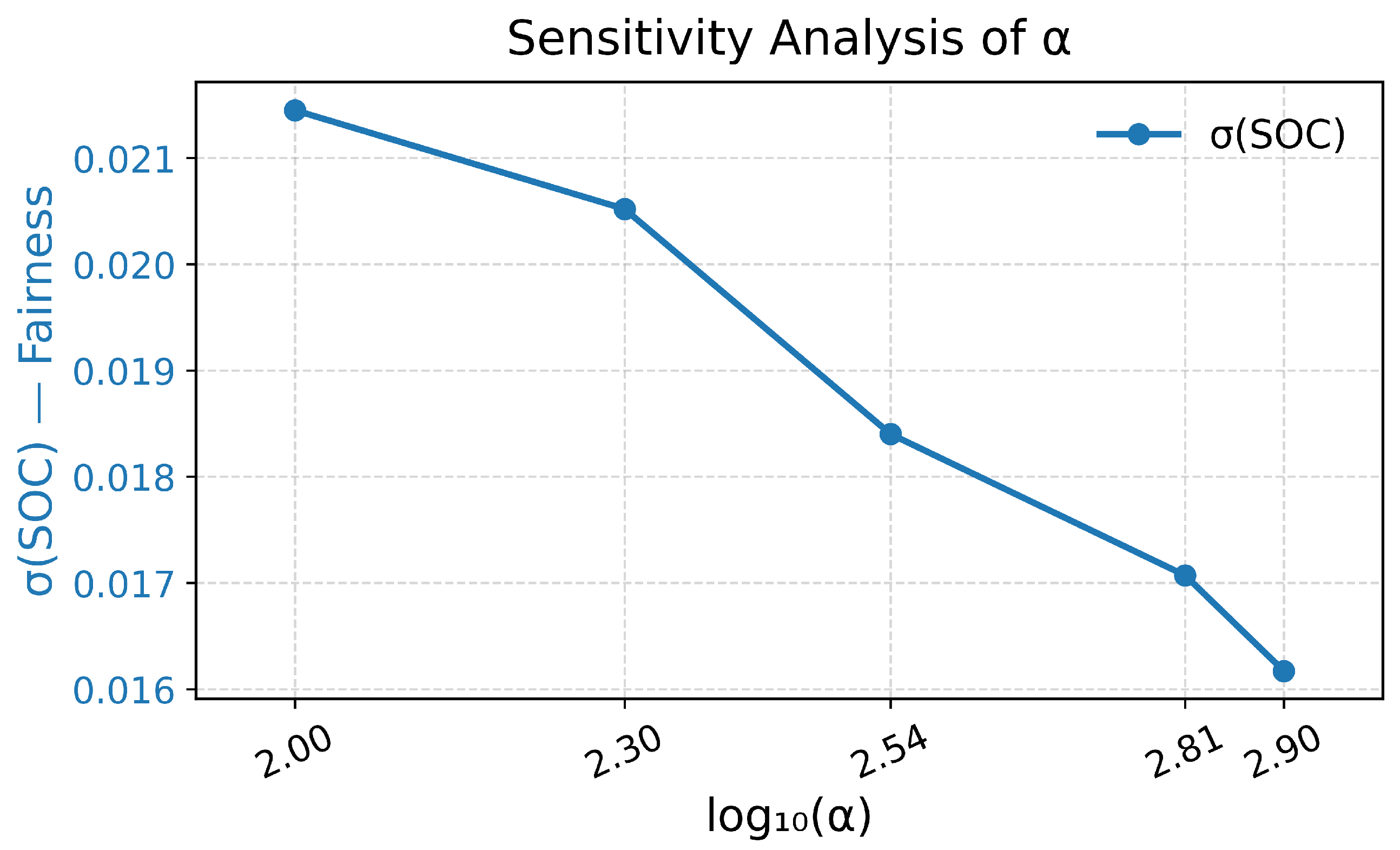

This sensitivity analysis evaluates the effect of the fairness weight on learning performance. As shown in Table 3 and Figure 3, the SOC standard deviation decreases monotonically as the fairness weight increases, indicating that a larger weight improves energy fairness among vehicles. Considering convergence stability and computational cost, a moderate value of

was used in the main experiments to maintain a reasonable balance between fairness and overall performance.

This sensitivity analysis evaluates the effect of the energy assurance weight on overall performance. As shown in Table 4 and Figure 4, the minimum remaining SOC increases gradually as the weight grows, indicating that a stronger emphasis on energy assurance improves the fleet's energy security. Beyond moderate values, the improvement becomes marginal, suggesting that a moderate weight provides sufficient energy protection without noticeably affecting other objectives. The adopted configuration already enables the model to handle the minimum SOC objective effectively, ensuring adequate energy assurance without additional tuning.

This sensitivity analysis investigates how the stability weight influences formation control. As shown in Table 5 and Figure 5, increasing the stability weight consistently reduces the instability metric, indicating that stronger penalization of formation changes improves stability. The reduction is steep when the weight increases from 0 to 25 and becomes more gradual beyond 40, suggesting diminishing returns at higher values. The chosen moderate weight thus provides sufficient stability improvement while keeping formation adjustments responsive and efficient.

4.1.2. Baseline Algorithms

For comparative evaluation, four baselines are selected: three representative DQN variants, namely the standard DQN [28,29], Double DQN [30], and Dueling DQN [31], and a static SOC-based ranking heuristic [18]. All learning-based baselines are trained, and the heuristic is evaluated, under identical simulation conditions to ensure a fair comparison.
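As a point of reference for the non-learning baseline, a SOC-based ranking rule can be sketched in a few lines. This is an assumed reading of the heuristic, not the exact procedure of ref. [18]: since the lead position benefits least from aerodynamic drag reduction, the vehicle with the highest SOC is placed at the front, followed by the others in descending SOC order. The vehicle identifiers and SOC values are made up for illustration.

```python
def soc_ranking_order(soc_by_vehicle: dict) -> list:
    """Return vehicle ids ordered front to back, highest SOC first.

    Assumption: the front position consumes the most energy, so the
    vehicle with the most remaining charge should lead. Ties keep their
    original insertion order because Python's sort is stable.
    """
    return sorted(soc_by_vehicle, key=lambda v: soc_by_vehicle[v], reverse=True)


# Hypothetical SOC readings at a checkpoint.
soc = {"ev1": 0.62, "ev2": 0.71, "ev3": 0.55, "ev4": 0.68, "ev5": 0.60}
formation = soc_ranking_order(soc)
```

Applying such a rule at every checkpoint equalizes SOC aggressively but can reshuffle many positions at once, which is consistent with the poor formation stability reported for the heuristic in the numerical results.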

4.2. Evaluation Metrics

We evaluate the scheduling performance of the proposed algorithm in terms of fairness, policy stability, formation stability, computational efficiency, and energy guarantee. Fairness is quantified by the SOC standard deviation

; policy stability by the return standard deviation

; formation stability by the stability metric

, where a smaller

indicates higher stability; computational efficiency by the average inference time; and energy guarantee by the minimum remaining SOC

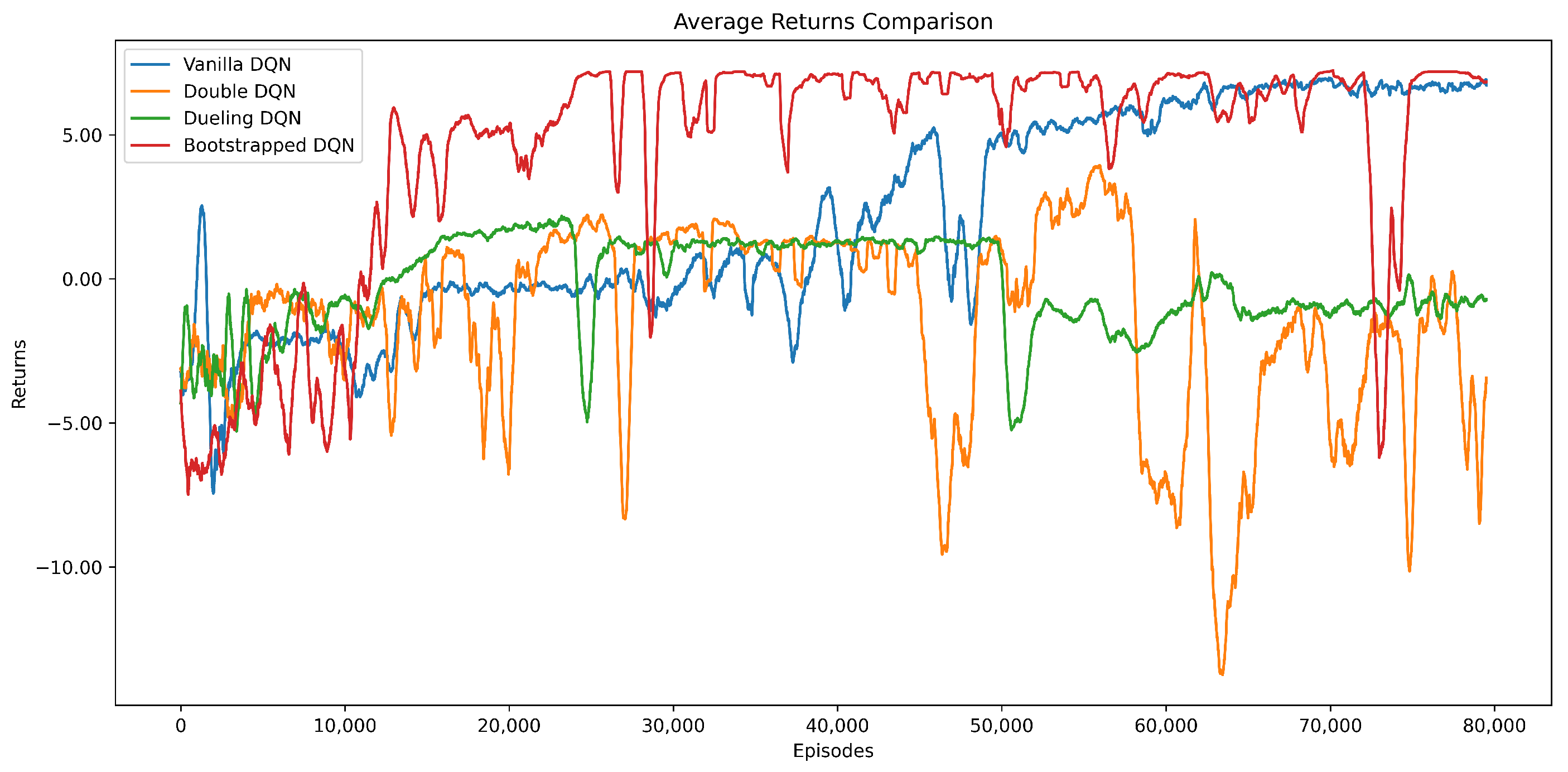

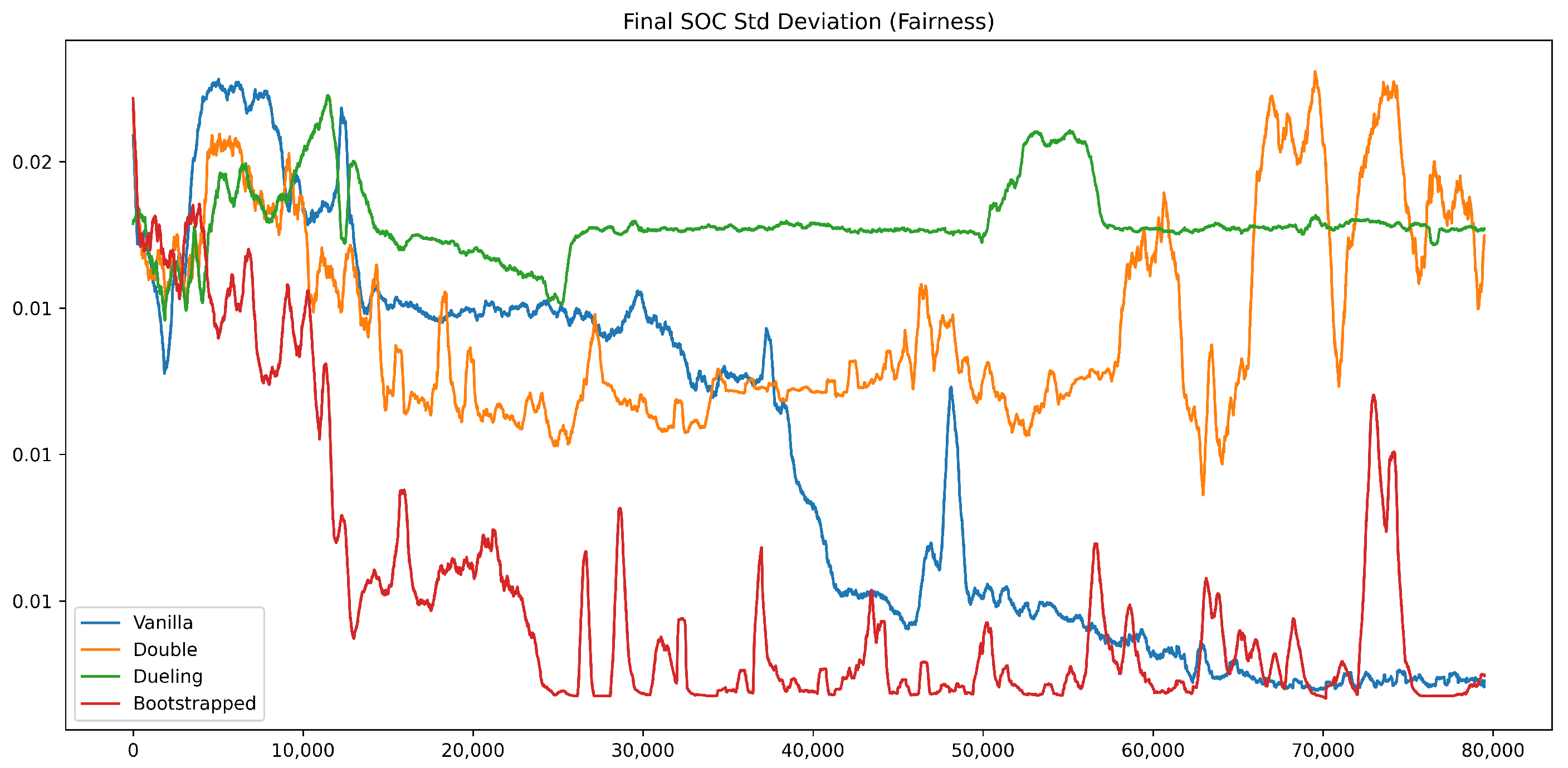

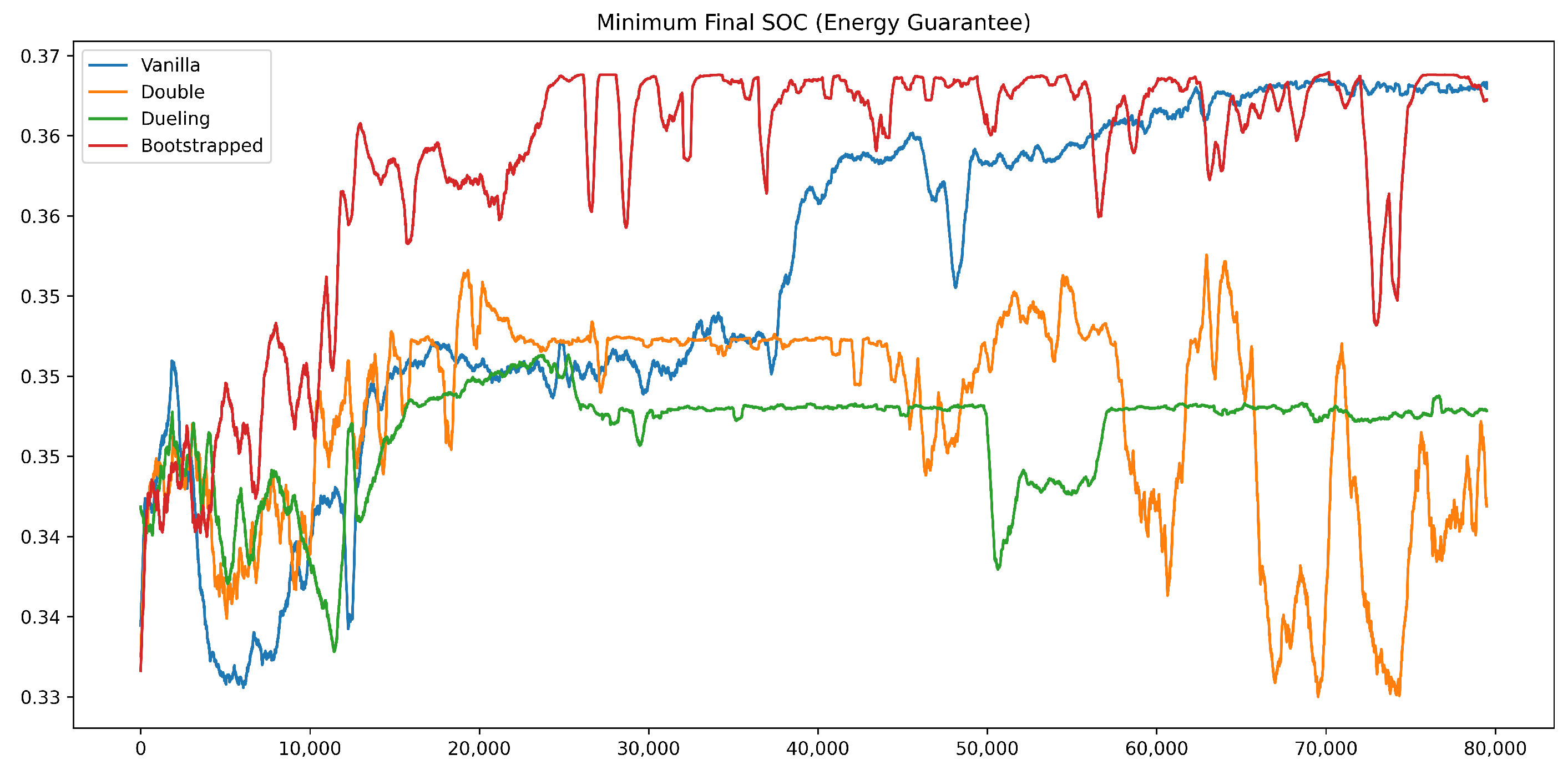

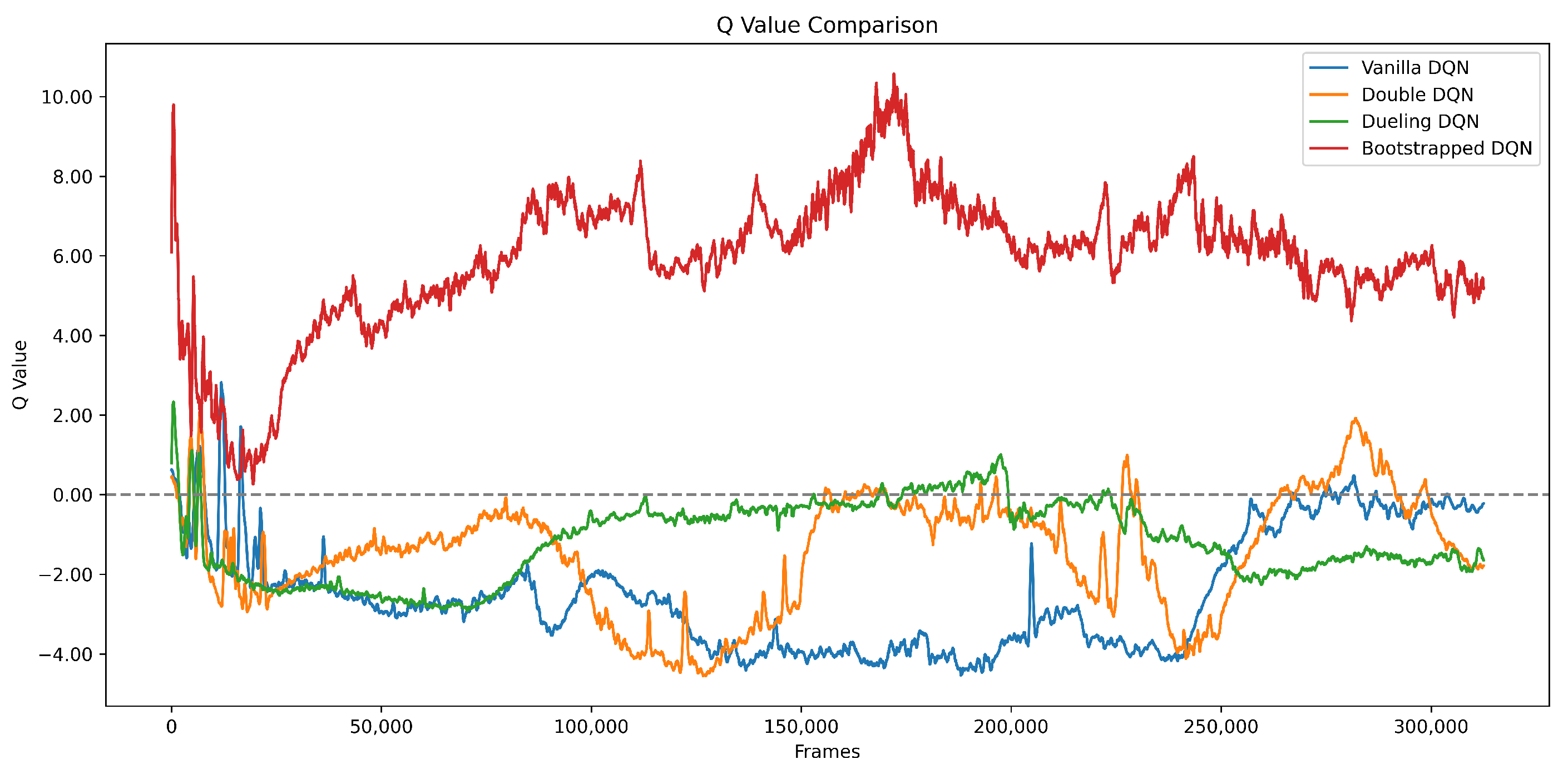

. These metrics were continuously monitored during training to verify the convergence and stability of each algorithm. As shown in

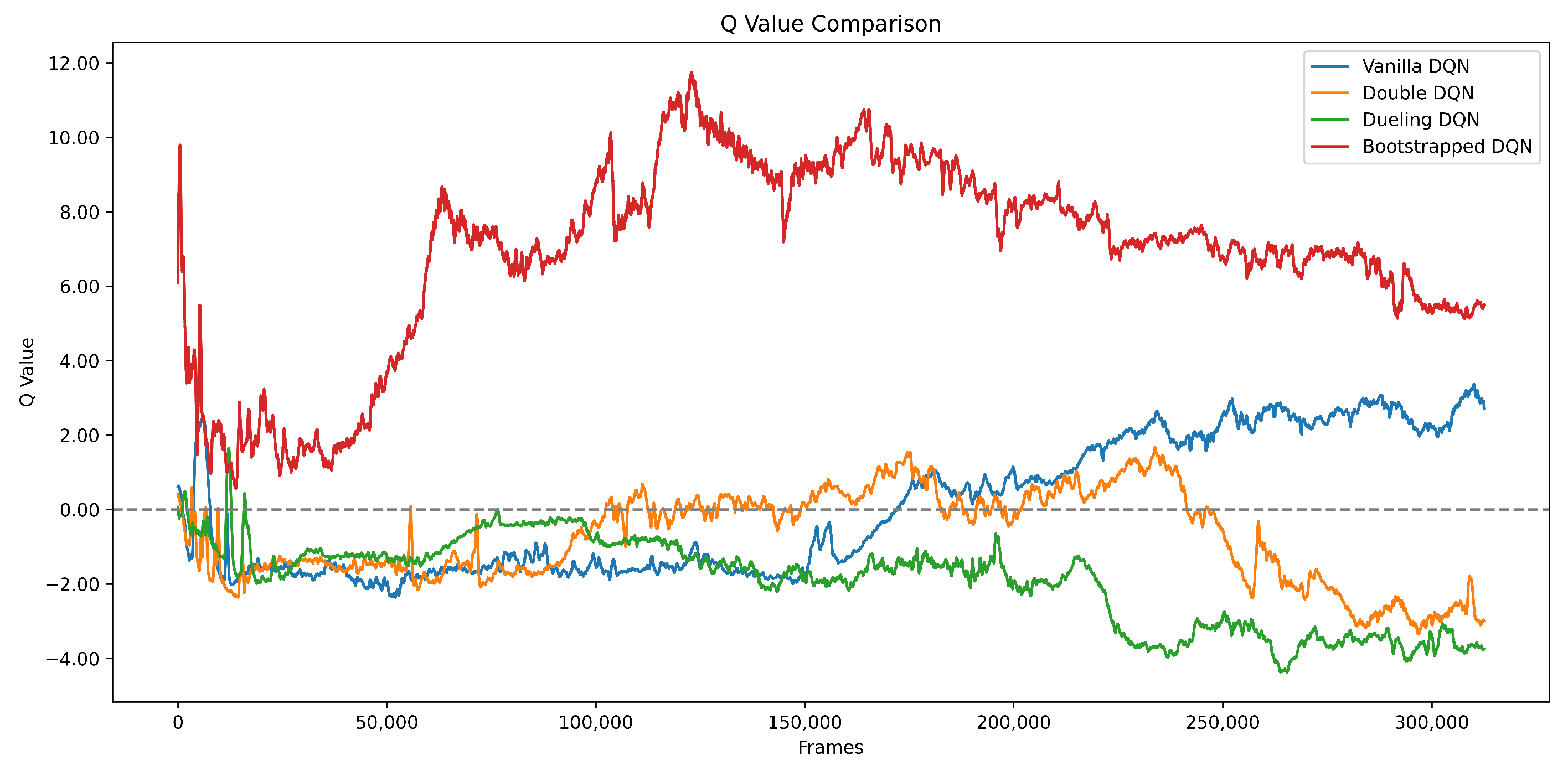

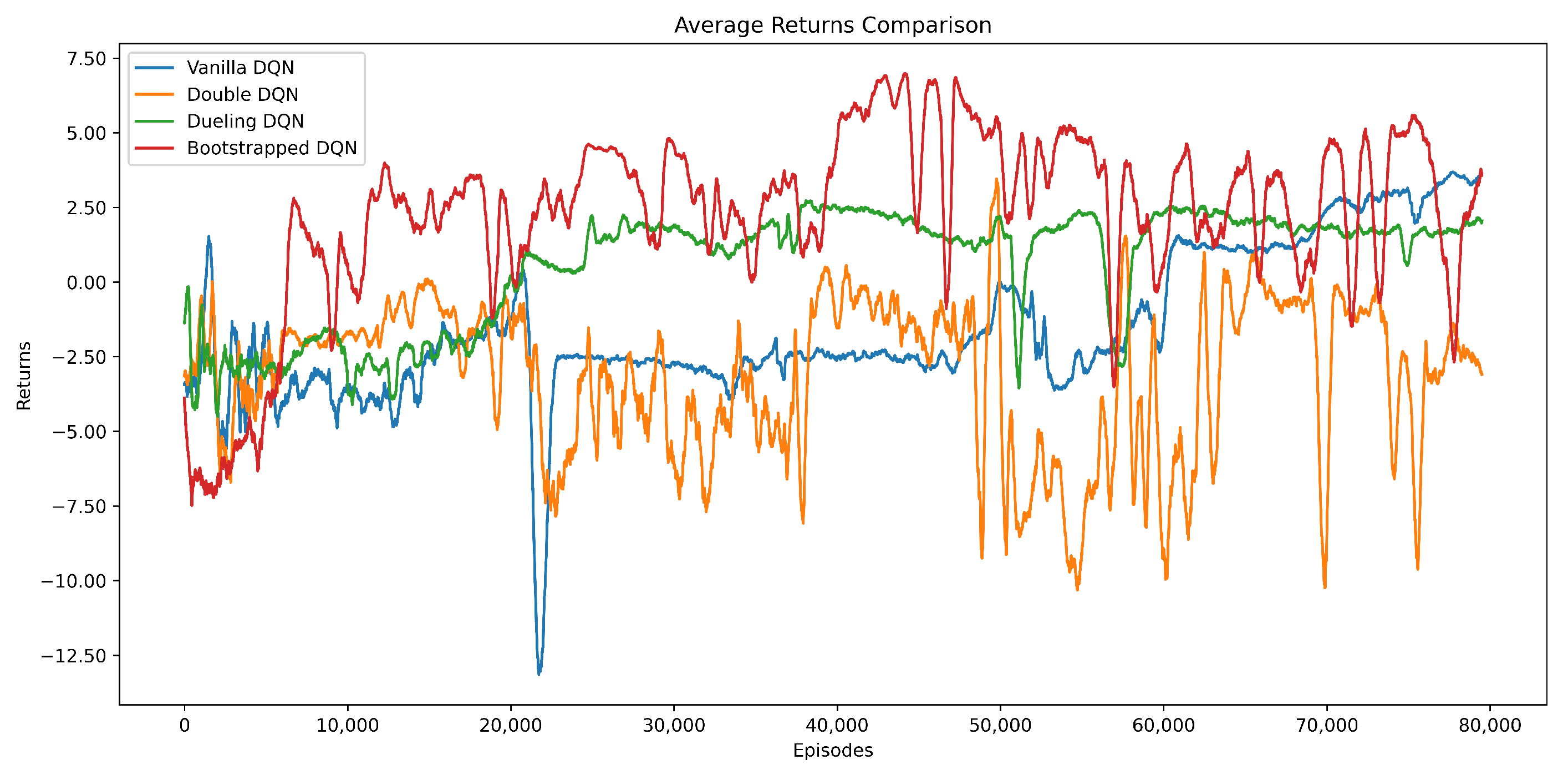

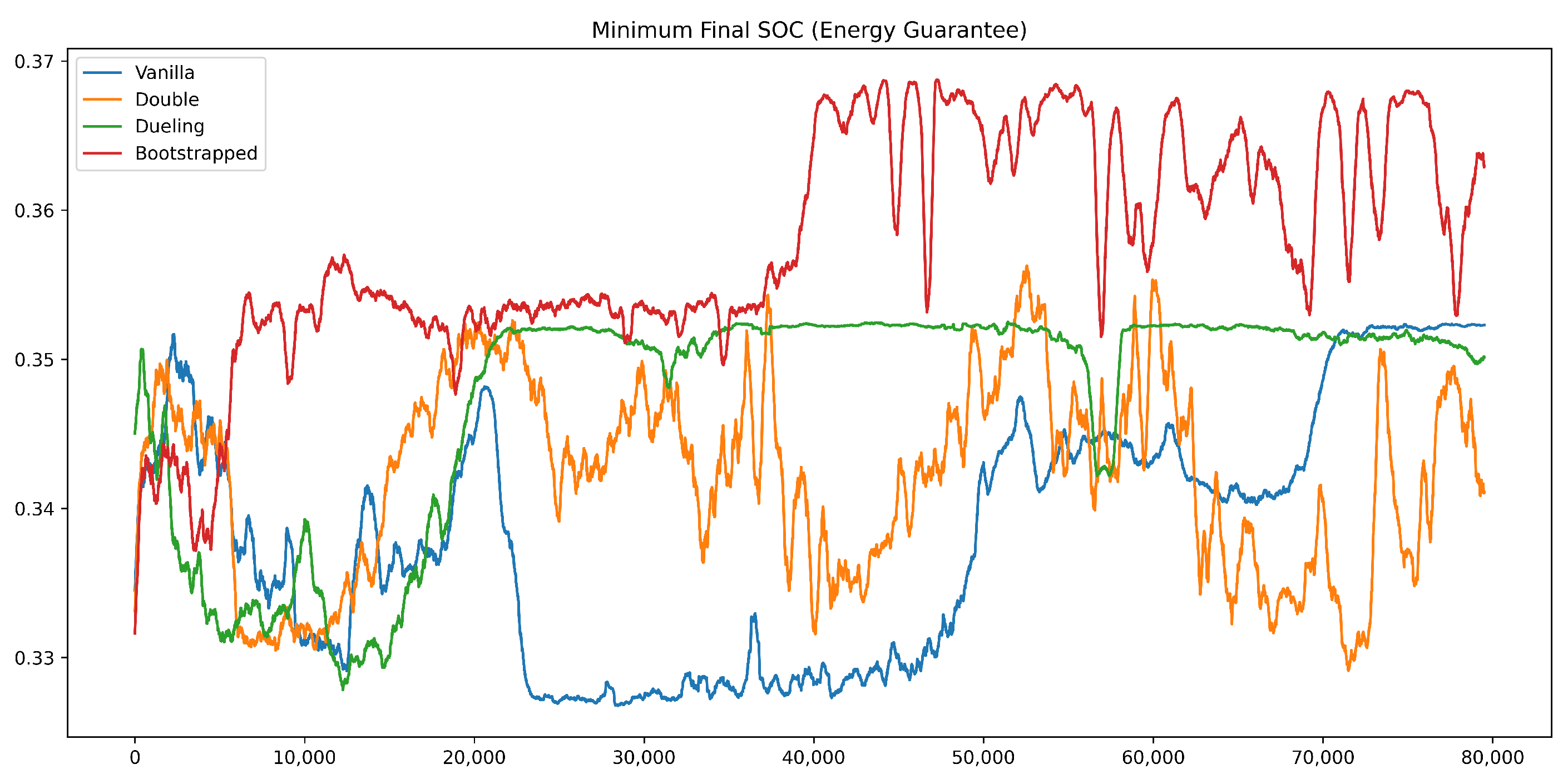

Figure 6,

Figure 7,

Figure 8,

Figure 9 and

Figure 10, the trajectories of Q-values, average returns, SOC deviation, minimum SOC, and formation stability together depict the overall training and validation process. The Bootstrapped DQN converged rapidly—within about 10,000 episodes—while maintaining the highest average return and the lowest SOC deviation, highlighting its superior learning efficiency and fairness performance.
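The three SOC- and formation-related metrics can be computed from an episode log as sketched below. The sketch rests on assumptions: the population standard deviation is used for the SOC spread, and formation instability is taken as the mean fraction of positions whose occupant changes between consecutive stages. The exact definitions used in this paper may differ, and the function names are illustrative.

```python
from statistics import pstdev


def soc_std(final_soc: list) -> float:
    """Fairness: population standard deviation of remaining SOC."""
    return pstdev(final_soc)


def min_remaining_soc(final_soc: list) -> float:
    """Energy guarantee: lowest remaining SOC in the platoon."""
    return min(final_soc)


def formation_instability(formations: list) -> float:
    """Assumed stability metric: mean fraction of positions that change
    occupant between consecutive formations (smaller is more stable)."""
    fractions = []
    for prev, curr in zip(formations, formations[1:]):
        moved = sum(a != b for a, b in zip(prev, curr))
        fractions.append(moved / len(prev))
    return sum(fractions) / len(fractions) if fractions else 0.0
```

For example, `formation_instability([[1, 2, 3], [2, 1, 3]])` counts two changed positions out of three, while an unchanged formation yields 0.0.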

4.3. Training Process

To evaluate the policy stability of our algorithm, we use Vanilla DQN, Double DQN, Dueling DQN, and the SOC-ranking heuristic as baselines. The training results are shown below.

Figure 6 shows the Q-value trajectories during training. The Q-value of Bootstrapped DQN quickly rises to a plateau around 12 and then gradually decreases toward 6, remaining consistently higher than those of the other methods despite slight oscillations. Vanilla DQN exhibits a smooth transition from negative to positive values with moderate fluctuations. Double DQN fluctuates widely and often crosses zero, suggesting unstable learning in the later stages. Dueling DQN stays at a low level without sustained improvement, indicating weak value estimation capability. These observations indicate that Bootstrapped DQN achieves faster and more stable value learning than the baseline algorithms.

Figure 7 shows the evolution of the average return during training. The Bootstrapped DQN rises rapidly after about 10,000 episodes and maintains a high return thereafter, with minor oscillations that reflect active yet stable exploration. Vanilla DQN improves gradually and stabilizes at positive values in the later stage. Double DQN exhibits large fluctuations and frequently drops below zero, suggesting unstable policy learning. Dueling DQN remains close to zero throughout most of the training, showing limited progress. Compared with the other DQN variants, Bootstrapped DQN achieves both faster convergence and smoother learning dynamics.

Figure 8 shows the evolution of the SOC standard deviation during training. The Bootstrapped DQN converges rapidly and consistently maintains the lowest deviation, stabilizing near 0.005 after about 10,000 episodes. This behavior indicates that its policy achieves the most balanced energy consumption across the fleet. Vanilla DQN also reduces SOC variance but converges more slowly and less steadily. In contrast, Double DQN and Dueling DQN stay at much higher levels, around 0.015 and 0.017, respectively, indicating weaker capability in maintaining fairness among vehicles. Overall, Bootstrapped DQN is clearly the strongest of the learned policies at ensuring equitable energy distribution within the platoon.

Figure 9 shows the evolution of the minimum remaining SOC during training. The Bootstrapped DQN rapidly raises the minimum SOC to about 0.36 within 20,000 episodes and keeps it at the highest level thereafter, reflecting a strong capability for maintaining energy reserves. Vanilla DQN improves more gradually and eventually reaches a similar level with mild fluctuations. Double DQN oscillates sharply and often drops below 0.345, whereas Dueling DQN stays nearly flat around 0.348. Taken together, these observations show that Bootstrapped DQN provides the most reliable energy assurance across the training process.

Figure 10 shows the evolution of the formation stability metric during training. Double DQN maintains the highest instability throughout, while Dueling DQN achieves the lowest value after convergence. Bootstrapped DQN and Vanilla DQN stay between the two extremes, keeping moderate stability levels. Among all methods, Bootstrapped DQN reaches a steady and balanced performance, indicating stable coordination without excessive formation changes.

To evaluate the impact of reward design, we construct a simplified environment by removing penalties for illegal actions and bonuses for SOC-based ranking, disabling explicit reward guidance during training.

Figure 11 shows the Q-value trajectories during training without reward shaping. The Bootstrapped DQN increases rapidly between 20,000 and 60,000 frames and then stabilizes near 6, staying above the other algorithms throughout the process. Vanilla DQN remains mostly negative with only minor improvement toward the end. Double DQN fluctuates sharply around zero and does not reach convergence, whereas Dueling DQN achieves brief early gains followed by a gradual decline. Overall, Bootstrapped DQN preserves stable value estimation even in the absence of explicit reward guidance.

Figure 12 shows the evolution of the average return during training without reward shaping. The Bootstrapped DQN rises steadily after about 40,000 episodes and stabilizes around 4–5, showing clear convergence and consistently higher returns than the other methods. Vanilla DQN gradually improves in the later stage and levels off near 2–3. Dueling DQN stays relatively flat around 1.5–2.5 with limited progress, whereas Double DQN oscillates strongly and often drops below zero. Even without reward shaping, Bootstrapped DQN maintains smooth learning dynamics and stable policy improvement throughout training.

Figure 13 shows the convergence of the SOC standard deviation during training without reward shaping. The Bootstrapped DQN decreases rapidly and stabilizes around 0.008, reaching the lowest deviation among all algorithms. Vanilla DQN remains near 0.010 with mild fluctuations. Double DQN stays at the highest level between 0.021 and 0.025, showing strong volatility, whereas Dueling DQN stays relatively steady around 0.016 without further decline. Bootstrapped DQN still achieves the most balanced energy distribution among vehicles under this simplified training condition.

Figure 14 depicts the evolution of the minimum remaining SOC during training without reward shaping. The Bootstrapped DQN rises rapidly between 10,000 and 40,000 episodes and stabilizes around 0.365, maintaining the top trajectory throughout. Dueling DQN remains steady near 0.350, Vanilla DQN declines to about 0.330 before recovering, and Double DQN fluctuates heavily below 0.33. Throughout the training, Bootstrapped DQN maintains the highest and most stable minimum SOC among all approaches.

Figure 15 illustrates the evolution of the formation stability metric during simulation training without reward shaping. Dueling DQN exhibits the most stable yet overly conservative behavior. Double DQN shows the highest instability, reflecting erratic decision making in the absence of explicit penalties. Bootstrapped DQN and Vanilla DQN maintain moderate stability levels, balancing steadiness and adaptability. These results suggest that Bootstrapped DQN preserves robust coordination performance even without reward guidance.

Based on the above analysis, the removal of reward shaping leads to clear degradation of fairness and energy guarantee across all methods, consistent with Theorem 2 in [18]. Nevertheless, Bootstrapped DQN retains comparatively stronger robustness, achieving higher remaining SOC and lower standard deviation than other algorithms, even though its performance is weaker than in the shaped setting. This robustness highlights its adaptability to sparse or poorly designed rewards and its potential for practical use in platoon control.

4.4. Convergence and Stability Analysis

The convergence behavior of the proposed Bootstrapped DQN framework is illustrated in Figures 6–15. As shown in Figure 6 and Figure 7, Bootstrapped DQN rapidly reaches a Q-value plateau of about 12 before settling toward 6, and it consistently maintains higher returns than the baseline algorithms, indicating fast and stable learning. Figure 8 and Figure 9 further show that the SOC standard deviation and the minimum remaining SOC converge to steady values of about 0.005 and 0.36 after roughly 10,000 and 20,000 episodes, respectively, revealing balanced energy consumption and reliable energy assurance across the fleet. Regarding formation stability, Figure 10 indicates that Bootstrapped DQN maintains a moderate instability level (

), achieving a desirable trade-off between robustness and flexibility.

To evaluate the influence of reward design, Figures 11–15 present the results obtained without explicit reward shaping. Although convergence becomes slower in this ablation setting, Bootstrapped DQN still achieves the highest Q-values and returns, the lowest SOC deviation (about 0.008), and a moderate stability index (

), demonstrating that the proposed framework remains robust even under weaker reward guidance.

4.5. Numerical Results

We compare four DRL algorithms with a conventional SOC-ranking heuristic [18]. The results are reported in Table 6 for the main experiment and in Table 7 for the ablation study where penalty and reward terms are removed.

As shown in Table 6, Vanilla DQN and Double DQN show a limited improvement in fairness, while their stability metric values remain relatively high (that is, their formations are less stable), indicating weaker scheduling robustness. Dueling DQN exhibits a relatively stable return trajectory, but this stability is achieved at the expense of fairness and minimum SOC, suggesting that energy guarantees are compromised. Bootstrapped DQN, in contrast, provides the most balanced trade-off. It achieves the lowest SOC deviation among the DRL algorithms, thereby ensuring fairness across the fleet, while simultaneously maintaining the highest minimum SOC and moderate formation stability. Although its inference time is slightly longer than that of the other DRL algorithms, the difference remains negligible for practical applications. The SOC-ranking heuristic, serving as a theoretical benchmark, unsurprisingly achieves the lowest standard deviation overall by design. However, its extremely poor performance in terms of minimum SOC and stability highlights its impracticality for real-world platoon control. Overall, Bootstrapped DQN provides near-optimal fairness together with robustness and strong energy guarantees, making it the most applicable of the compared methods to real-world EV platoon management.

As shown in Table 7, removing penalties and SOC-based ranking rewards leads to a noticeable degradation across all methods. Fairness deteriorates notably compared with the shaped setting, while the minimum SOC values also decrease, reflecting weaker energy guarantees. However, stability remains relatively unaffected. Among the four DRL algorithms, the Bootstrapped DQN demonstrates the highest resilience, achieving the lowest SOC deviation, the highest minimum SOC, and moderate stability despite the absence of reward shaping. These results indicate that the reward shaping terms effectively guide training. They also show that the Bootstrapped DQN remains robust and adaptable under sparse or poorly designed reward signals, making it a reliable option for real-world platoon scheduling where reward functions may be incomplete or difficult to design precisely.