1. Introduction

With the continuous advancement of power electronics and miniaturized embedded systems, the prognostics and health management (PHM) of passive components, especially tantalum capacitors, has become increasingly critical [1,2,3,4,5,6]. Tantalum capacitors are widely used in high-reliability applications such as the oil and gas industry, aerospace, communication base stations, and industrial control because of their high capacitance density and stable performance [7,8,9,10,11,12]. However, their degradation under thermal and electrical stress directly threatens system reliability [13,14,15,16,17,18]. To address this, condition monitoring (CM) and remaining useful life (RUL) prediction techniques have been increasingly adopted. Among the various RUL prediction paradigms, data-driven approaches based on sensor measurements have shown strong potential because they can model complex degradation patterns without relying on precise physical failure models [19,20,21,22]. In this context, deep learning methods, especially those capable of capturing the temporal and nonlinear characteristics of degradation signals, offer powerful tools for modeling the aging process of tantalum capacitors [23,24,25,26].

Unlike mechanical systems, which often exhibit evident vibration-based degradation patterns, tantalum capacitors deteriorate internally in a latent way that is difficult to observe directly during their operational lifespan. Among the various indicators, temperature, particularly the internal core temperature, has proven to be one of the most sensitive signals for tracking capacitor degradation [27], as it reflects the combined effects of increased leakage current, elevated equivalent series resistance (ESR), and accumulated thermal stress. However, because of structural limitations, direct access to the core temperature is impractical in real-world applications. In contrast, the surface temperature of the capacitor casing can be conveniently monitored with noncontact infrared temperature sensors. When corrected for environmental effects, these surface measurements serve as reliable proxies for internal degradation trends. Such temperature data also carry rich temporal dynamics and correlate strongly with the degradation state of the component. Exploiting them therefore calls for modeling approaches that capture both the sequential dependencies and the complex feature interactions within the measurements, which provides a solid foundation for accurate online RUL prediction.

In recent years, deep learning has emerged as a promising method for the RUL prediction of capacitors. Delanyo D.K.B. et al. [28] used a bidirectional long short-term memory (BiLSTM) network to predict the RUL of aluminum electrolytic capacitors (AECs); compared with a conventional LSTM, the network better captured the AEC degradation trend and improved prediction accuracy. Z. Wang et al. [29] used a hybrid ARIMA-BiLSTM model, which combines the ability of ARIMA to capture linear features with the ability of BiLSTM to capture nonlinear features, to predict AEC degradation from an early stage. F. Wang et al. [30] proposed an ensemble learning method combining Chained-SVR and a 1D-CNN for AEC RUL prognostics; their experiments showed that the method improves the robustness of the individual models and achieves the best performance among all the compared methods. Q. Sun et al. [9] proposed a residual lifetime prediction method for electrolytic capacitors based on a GRU and PSO-SVR, achieving better prediction accuracy than traditional methods on both NASA and experimental datasets. G. Lou et al. [31] proposed a two-stage online RUL prediction framework based on a BiLSTM network and an H∞ observer; their results indicate that the BiLSTM network explains more than 99% of the variation in capacitance and achieves prediction accuracy competitive with offline methods. Z. Yi et al. [32] introduced a method for state-of-health (SOH) estimation and RUL prediction based on a hybrid neural network optimized by an improved honey badger algorithm (HBA). The method combines a convolutional neural network (CNN) with a BiLSTM network, and the results show that the hybrid model effectively extracts features, enriches local details, and enhances global perception. A.F. Shahraki et al. [33] proposed an LSTM-based RUL prediction method that reduces computational time and complexity while maintaining high prediction performance. X. Zhao et al. [34] applied fuzzy theory to the fault diagnosis of shunt capacitors and proposed a map-based fault diagnosis system, which improves the accuracy of power capacitor fault diagnosis and identification and offers a new approach for power capacitor fault research.

Despite the growing application of deep learning techniques in RUL prediction, several critical challenges remain unresolved in the context of tantalum capacitors. First, the degradation behavior of capacitors is often modulated by complex and dynamic environmental conditions, such as fluctuating ambient temperature and electrical loading, which introduce significant noise and obscure the true degradation signals. Second, most existing approaches are trained on offline datasets and lack the capacity for real-time inference, limiting their applicability in scenarios requiring continuous health monitoring and timely maintenance decisions. Third, conventional neural network architectures often fall short in modeling the multiscale temporal dependencies and intricate feature correlations embedded within long-term degradation data. These challenges collectively underscore the need for a unified and adaptive prediction framework that can (i) effectively suppress environmental interference; (ii) support online, real-time prognostics; and (iii) robustly extract and learn subtle yet informative degradation patterns. Overcoming these limitations is essential for achieving accurate and dependable RUL estimation of passive electronic components in high-reliability and safety-critical applications.

To address the aforementioned challenges, this paper presents a novel CNN-LSTM-Attention-based framework for online RUL prediction of tantalum capacitors operating under complex and variable conditions. The key contributions of this work are summarized as follows:

(1) A temperature-based data preprocessing method is proposed, in which a dual-sensor configuration (comprising an infrared temperature sensor and an ambient temperature sensor) is utilized to compensate for environmental interference. This strategy enables the extraction of degradation-relevant thermal signals, thereby improving the quality and reliability of the input features.

(2) A unified hybrid deep learning architecture is developed by integrating convolutional neural networks (CNNs), long short-term memory (LSTM) units, and attention mechanisms. This architecture effectively captures local degradation features, models long-term temporal dependencies, and dynamically emphasizes the most informative patterns within the data. Additionally, a channel attention mechanism is incorporated to adaptively reweight feature channels, enhancing the model’s capacity to extract subtle yet critical degradation cues across spatial dimensions.

(3) Extensive experimental evaluations based on the accelerated aging data of real-world tantalum capacitors demonstrate the proposed method’s superior performance over conventional LSTM and CNN-LSTM baselines. The model achieves significant improvements in prediction accuracy, robustness, and generalization capability. Notably, the proposed approach is particularly well suited for online prognostic scenarios where only non-intrusive surface temperature measurements are available and holds strong potential for extension to other types of capacitors and passive electronic components in broader PHM applications.

The rest of this paper is organized as follows. Section 2 presents the problem formulation and related preliminaries, including the temperature degradation modeling of tantalum capacitors. Section 3 details the proposed online RUL prediction method, including the data preprocessing strategy and the hybrid CNN-LSTM-Attention architecture. Section 4 validates the proposed framework using accelerated degradation experiments on real-world tantalum capacitors and discusses the prediction results. Finally, Section 5 concludes the paper and outlines potential directions for future research.

2. Preliminaries

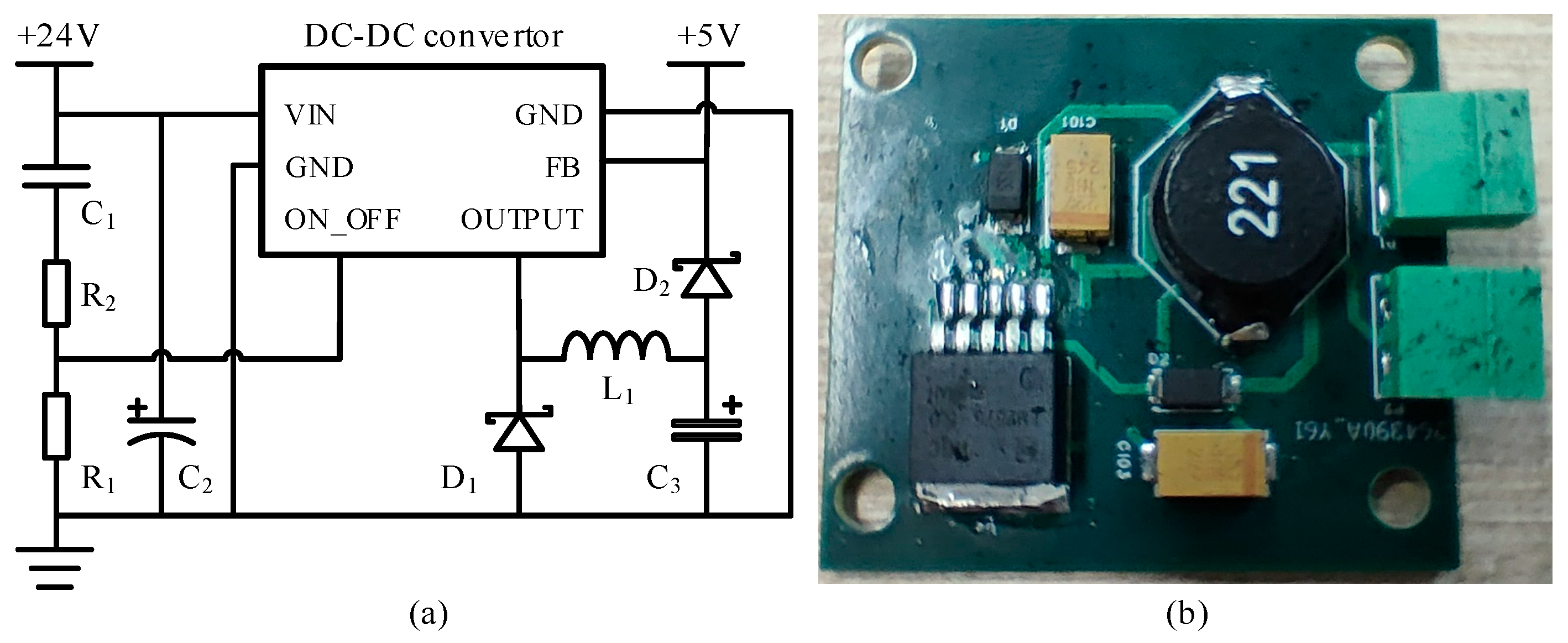

During the degradation process of tantalum capacitors, the core temperature plays a critical role in determining their performance and operational life. However, under complex working conditions, the core temperature of tantalum capacitors is difficult to obtain. In contrast, the operating temperature of the capacitor, which corresponds to the surface casing temperature, can be conveniently measured with noncontact detection methods. The operating temperature of a capacitor is influenced by the ambient temperature, the heat dissipation conditions, and the electrical operating parameters. Heat dissipation depends on variables such as the packaging structure, the spatial configuration, and dust accumulation. In high-reliability applications, these factors typically remain stable during long-term operation, so this work neglects changes in heat dissipation conditions. The relationship between the core temperature and the operating temperature is described as follows:

where $T_h(t)$ is the core temperature of the tantalum capacitor at time $t$; $\alpha$ is a constant that depends only on the ambient thermal resistance; $T(t)$ is the operating temperature of the tantalum capacitor at time $t$; $T_e(t)$ is the ambient temperature; $T_{dc}(t)$ is the temperature induced by losses due to leakage current; and $T_{ac}(t)$ is the temperature induced by losses due to ripple current. From Equation (1), the operating temperature can be derived:

Equation (2) elucidates the relationship between the operating temperature and the core temperature of tantalum capacitors. Specifically, the operating temperature is determined by the heat generated by two loss components, leakage current and ripple current, together with the ambient temperature, which is an indispensable influencing factor. The rise in operating temperature caused by leakage current follows the relationship below:

$$T_{dc}(t) = \frac{U_{dc}\, i_c}{H S} = \beta\, U_{dc}\, i_c \tag{3}$$

where $U_{dc}$ is the DC voltage, which remains unchanged during capacitor degradation; $i_c$ is the leakage current; $H$ is the heat transfer coefficient of the capacitor surface; $S$ is the surface area of the capacitor; and the factor $1/(HS)$ is simplified as a constant $\beta$. The rise in operating temperature caused by ripple current can be described as follows:

Combining these expressions with Equation (2), the total variation in the operating temperature of tantalum capacitors caused by performance degradation can be expressed as follows:

The core temperature representing the degradation state of the tantalum capacitor can be expressed as follows:

During the aging process of tantalum capacitors, performance degradation significantly accelerates the rise in operating temperature. Specifically, the degradation of performance parameters, including the increase in equivalent series resistance (ESR), leads to a rapid increase in the core temperature of the tantalum capacitor. Therefore, long-term continuous monitoring of the core temperature, as inferred from the measurable operating temperature, provides a convenient basis for predicting the RUL of the tantalum capacitor.
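To make the thermal reasoning concrete, the Python sketch below evaluates the leakage-current heating term ($T_{dc} = \beta U_{dc} i_c$ with $\beta = 1/(HS)$) for a hypothetical capacitor and contrasts a fresh state with an aged one. The ripple-current term uses the common Joule-heating form $I_{rms}^2 \cdot \mathrm{ESR}$ through the same $\beta$; this form is an assumption of the sketch, not the paper's ripple equation. All numeric values and function names are illustrative, not measurements from this study.

```python
def beta(h: float, s: float) -> float:
    """beta = 1 / (H * S): temperature rise per watt of dissipated power (K/W)."""
    return 1.0 / (h * s)


def leakage_rise(u_dc: float, i_c: float, h: float, s: float) -> float:
    """Leakage-current term: T_dc = beta * U_dc * i_c."""
    return beta(h, s) * u_dc * i_c


def ripple_rise(i_rms: float, esr: float, h: float, s: float) -> float:
    """ASSUMED ripple-current term: T_ac = beta * I_rms**2 * ESR (Joule heating)."""
    return beta(h, s) * i_rms ** 2 * esr


if __name__ == "__main__":
    # Hypothetical operating point: H in W/(m^2*K), S in m^2, so beta = 1000 K/W.
    H, S, U_DC, I_RMS = 10.0, 1e-4, 16.0, 0.1
    # Aging modeled (hypothetically) as higher leakage current and higher ESR.
    fresh = leakage_rise(U_DC, 1e-6, H, S) + ripple_rise(I_RMS, 0.2, H, S)
    aged = leakage_rise(U_DC, 1e-4, H, S) + ripple_rise(I_RMS, 0.6, H, S)
    print(f"loss-induced rise, fresh: {fresh:.3f} K, aged: {aged:.3f} K")
```

Because both terms scale with $\beta$, any growth in leakage current or ESR appears directly as a higher surface temperature, which is why the operating temperature can serve as a degradation indicator.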

3. The Proposed Method

3.1. Overview of Proposed Method

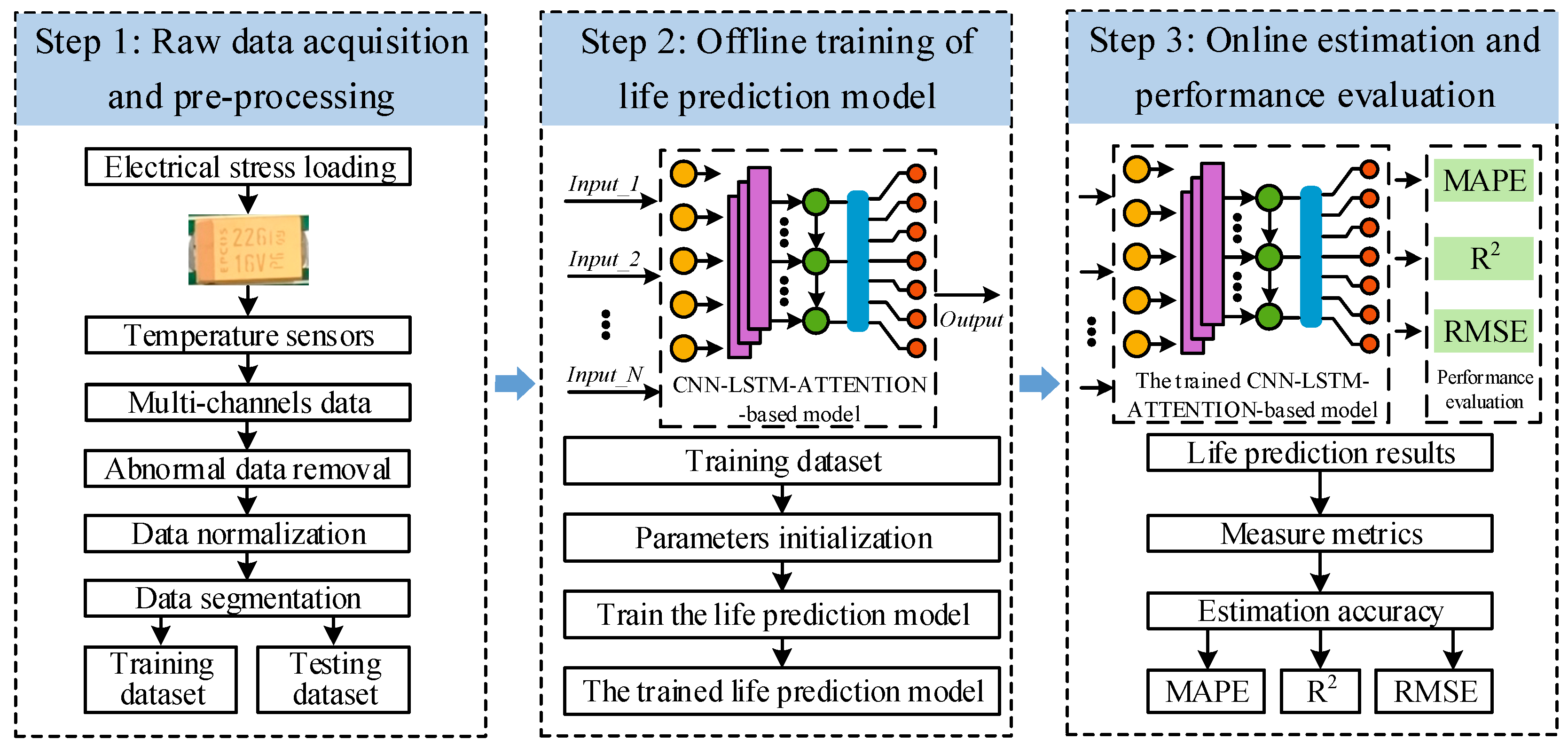

Figure 1 illustrates the framework of the proposed CNN-LSTM-Attention-based method for predicting the RUL of tantalum capacitors, which consists of three main steps.

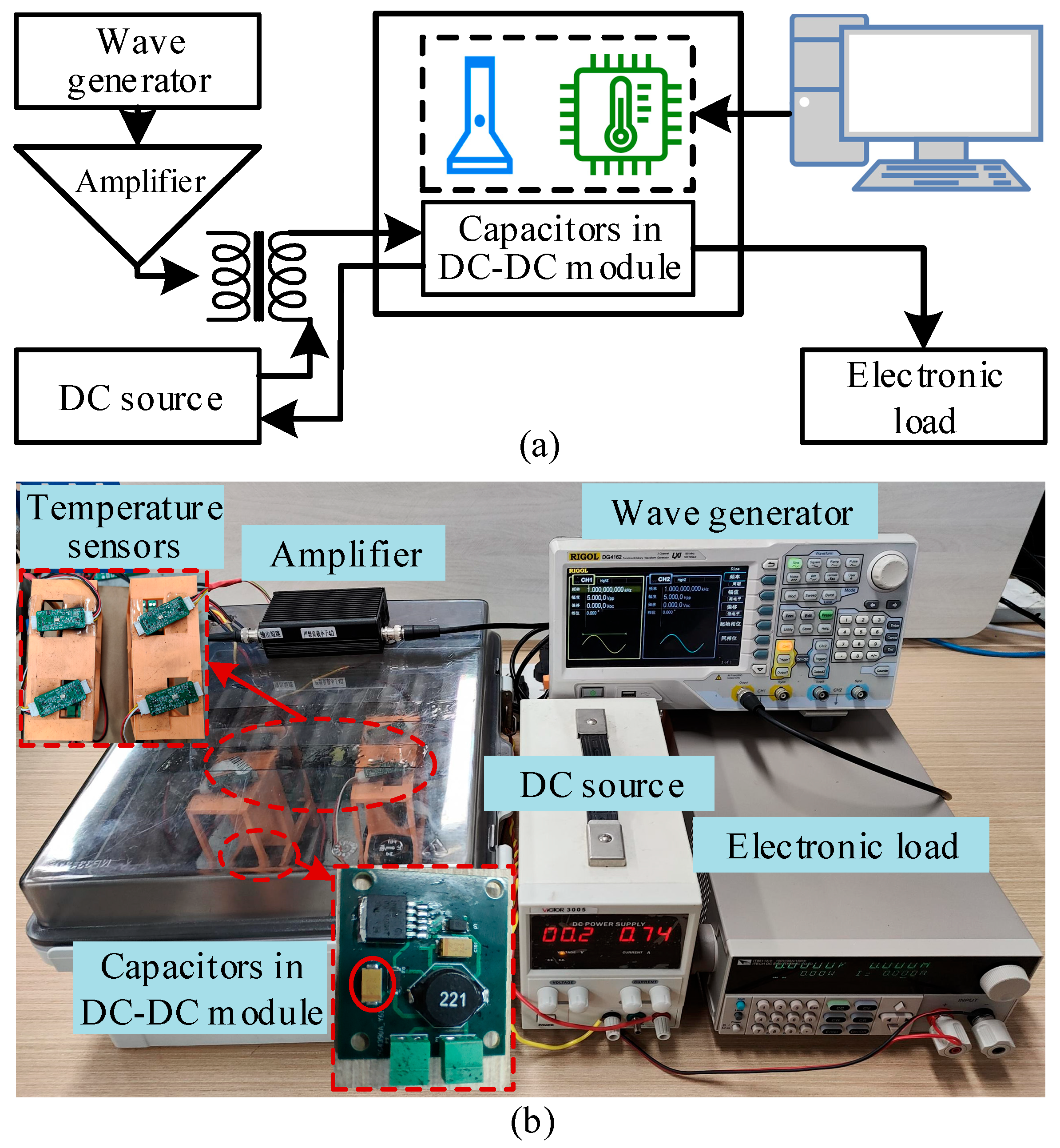

(1) The first step involves the acquisition and preprocessing of temperature data for the tantalum capacitors. The degradation data used in this study are obtained from accelerated degradation tests. During preprocessing, the degradation data are processed to remove interference caused by the ambient temperature. Because ambient temperature changes may also affect the degradation process, multiple factors, including the operating temperature and the temperature rise slope, are integrated to construct the input dataset used to train the neural network model. In addition, the rapid temperature rise at the beginning of an accelerated test does not represent the gradual aging process of the capacitor, so the lifetime is calculated only after the temperature stabilizes. The resulting lifetime data are then preprocessed to generate the dataset for the tantalum capacitor lifetime prediction algorithm.

(2) The second step involves the offline training of the lifetime prediction model, in which the deep neural network model is systematically trained on the training dataset. Specifically, the input samples are propagated forward through the multilayer structure of the network to yield the predicted lifetime of the capacitor. The error between the predicted values and the corresponding lifetime labels is then calculated, and the backpropagation algorithm is employed to optimize the internal parameters of the model. This process is repeated until a predefined number of training iterations is reached.

(3) The third step involves online prediction and performance evaluation. The goal of online prediction is to estimate the remaining lifetime of the tantalum capacitor in real time from current and historical data. Samples from the test set are input into the trained neural network model, which outputs the predicted remaining lifetime of the capacitor. Evaluation metrics such as the mean absolute percentage error (MAPE) and the root mean square error (RMSE) are then used to quantitatively assess prediction accuracy and thus the overall performance of the model. In addition, the predicted lifetime values are compared with the actual lifetime values recorded in the accelerated tests to validate the effectiveness and accuracy of the model.
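Steps (2) and (3) can be illustrated with a deliberately small Python sketch. A linear model trained by full-batch gradient descent stands in for the CNN-LSTM-Attention network, so only the loop structure (forward pass, error against the lifetime labels, gradient update, stop after a fixed number of iterations) mirrors the text. The learning rate, epoch count, toy data, and function names are hypothetical; `mape` and `rmse` follow the standard definitions of these metrics.

```python
import math
import random
from typing import List, Sequence, Tuple


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    return sum(wk * xk for wk, xk in zip(w, x)) + b


def train_linear(xs: Sequence[Sequence[float]], ys: Sequence[float],
                 epochs: int = 2000, lr: float = 0.05, seed: int = 0) -> Tuple[List[float], float]:
    """Offline training: minimize the mean squared error of y_hat = w.x + b."""
    rng = random.Random(seed)
    n, n_feat = len(xs), len(xs[0])
    w = [rng.uniform(-0.1, 0.1) for _ in range(n_feat)]
    b = 0.0
    for _ in range(epochs):  # stop after a predefined number of iterations
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in zip(xs, ys):
            err = predict(w, b, x) - y  # forward pass, then error vs. label
            for k in range(n_feat):
                grad_w[k] += 2.0 * err * x[k] / n  # d(MSE)/d(w_k)
            grad_b += 2.0 * err / n
        w = [wk - lr * gk for wk, gk in zip(w, grad_w)]  # gradient step
        b -= lr * grad_b
    return w, b


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent."""
    if any(a == 0 for a in actual):
        # An RUL label of 0 (the failure point) makes MAPE undefined.
        raise ValueError("MAPE is undefined when an actual value is 0")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error, in the units of the labels."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


if __name__ == "__main__":
    xs = [[0.0], [1.0], [2.0], [3.0]]
    ys = [1.0, 3.0, 5.0, 7.0]  # toy labels following y = 2x + 1
    w, b = train_linear(xs, ys)
    test_x, test_y = [[4.0], [5.0]], [9.0, 11.0]
    preds = [predict(w, b, x) for x in test_x]
    print(f"MAPE = {mape(test_y, preds):.4f}%, RMSE = {rmse(test_y, preds):.4f}")
```

MAPE is scale-free but undefined at zero labels, whereas RMSE keeps the units of the lifetime and weights large errors more heavily, so reporting both gives a more complete picture.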

3.2. Data Preprocessing Method

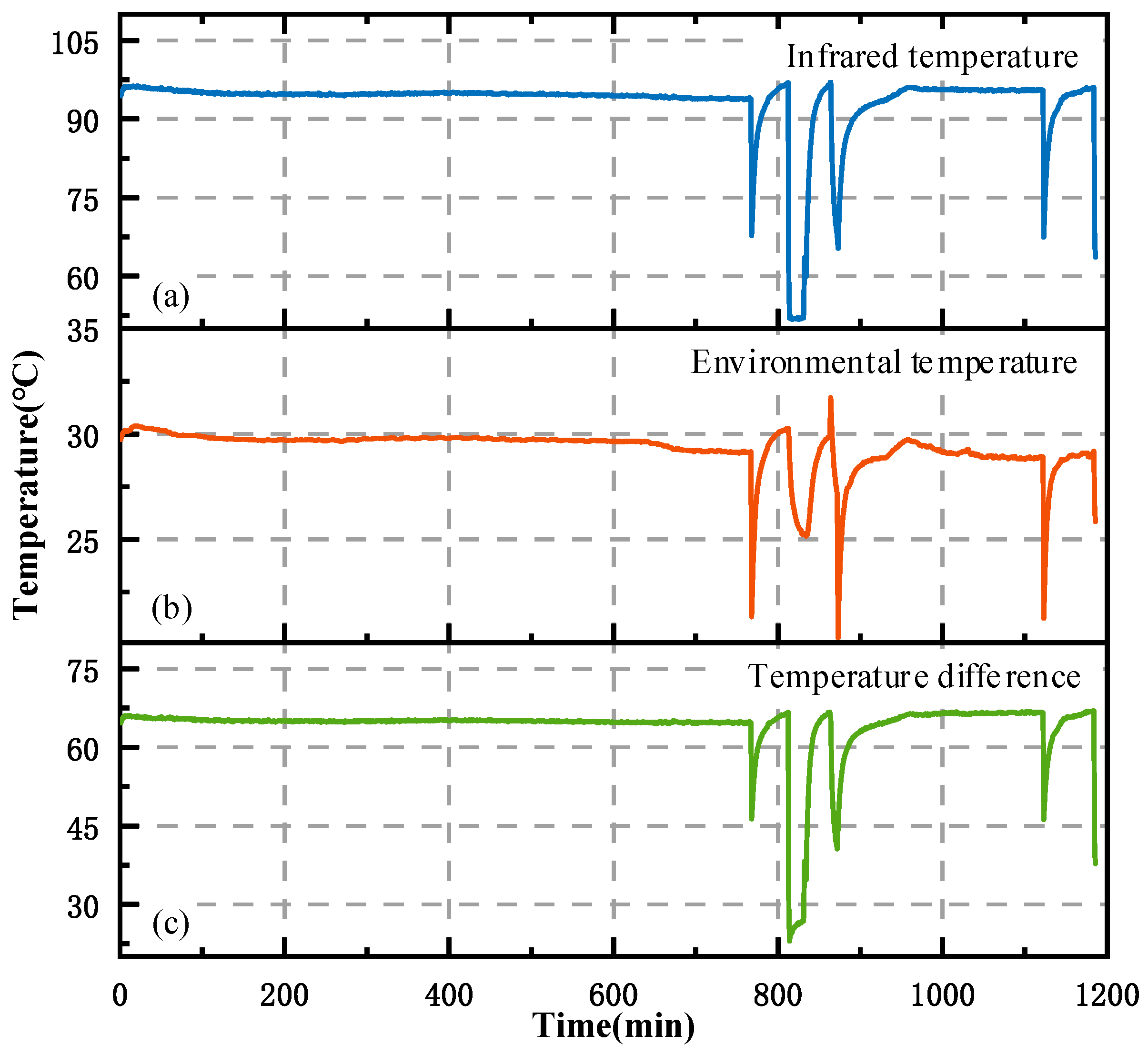

During accelerated testing, the ambient temperature can affect the measured degradation temperature of the tantalum capacitor. Data preprocessing is therefore applied to minimize ambient-temperature interference and ensure the accuracy of the acquired degradation temperature data. Specifically, two temperature sensors are adopted in the experimental setup: $y_1(t)$ denotes the tantalum capacitor degradation temperature measured by an infrared temperature sensor, and $y_2(t)$ denotes the real-time ambient temperature surrounding the tantalum capacitor, monitored by an ambient temperature sensor. The true temperature data of the tantalum capacitor, $y_3(t)$, are calculated using the following formula:

$$y_3(t) = y_1(t) - y_2(t) \tag{7}$$

By subtracting the ambient temperature from the measured capacitor temperature, the true temperature data of the tantalum capacitor, that is, its temperature rise above ambient, are obtained. This minimizes the interference of the ambient temperature with the test data and improves the reliability and accuracy of the dataset used to train the CNN-LSTM-Attention model.
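The ambient compensation $y_3(t) = y_1(t) - y_2(t)$, together with the temperature-rise slope feature and the warm-up exclusion described in the method overview, can be sketched in Python as follows. The stabilization rule (slope magnitude below a threshold for `hold` consecutive samples), the window length, the RUL-in-samples labeling, and all function names are hypothetical choices for illustration; the text does not specify them.

```python
from typing import List, Sequence, Tuple


def compensate(y1: Sequence[float], y2: Sequence[float]) -> List[float]:
    """y3(t) = y1(t) - y2(t) for sample-aligned infrared and ambient readings."""
    if len(y1) != len(y2):
        raise ValueError("infrared and ambient series must be sample-aligned")
    return [a - b for a, b in zip(y1, y2)]


def rise_slope(y: Sequence[float], dt: float = 1.0) -> List[float]:
    """Temperature-rise slope as a first difference (index 0 is a 0.0 placeholder)."""
    return [0.0] + [(b - a) / dt for a, b in zip(y, y[1:])]


def stable_start(slopes: Sequence[float], threshold: float, hold: int) -> int:
    """Hypothetical warm-up rule: start of the first run of `hold` samples with |slope| < threshold."""
    run = 0
    for k in range(1, len(slopes)):  # skip the placeholder slope at index 0
        run = run + 1 if abs(slopes[k]) < threshold else 0
        if run == hold:
            return k - hold + 1
    raise ValueError("temperature never stabilized")


def make_windows(temp: Sequence[float], slopes: Sequence[float], window: int,
                 failure: int) -> Tuple[List[List[Tuple[float, float]]], List[int]]:
    """Sliding windows of (temperature, slope) pairs labeled with RUL in samples.

    The label is the number of samples from a window's last sample to `failure`.
    """
    if not window <= failure + 1 <= len(temp):
        raise ValueError("need window <= failure + 1 <= len(temp)")
    samples, labels = [], []
    for last in range(window - 1, failure + 1):
        start = last - window + 1
        samples.append(list(zip(temp[start:last + 1], slopes[start:last + 1])))
        labels.append(failure - last)
    return samples, labels


def build_dataset(y1, y2, failure: int, window: int = 3, threshold: float = 0.5, hold: int = 2):
    y3 = compensate(y1, y2)
    g = rise_slope(y3)
    t0 = stable_start(g, threshold, hold)  # lifetime is counted from t0 onward
    return make_windows(y3[t0:], g[t0:], window, failure - t0)


if __name__ == "__main__":
    y1 = [25.0, 30.0, 34.0, 35.0, 35.2, 35.3, 35.5, 35.8, 36.3, 37.0]  # hypothetical IR readings
    y2 = [25.0] * len(y1)                                               # hypothetical ambient
    samples, labels = build_dataset(y1, y2, failure=len(y1) - 1)
    print(len(samples), labels)
```

In this toy series the first four samples form the warm-up ramp and are excluded, so the windows and their decreasing RUL labels start only once the compensated temperature has settled.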

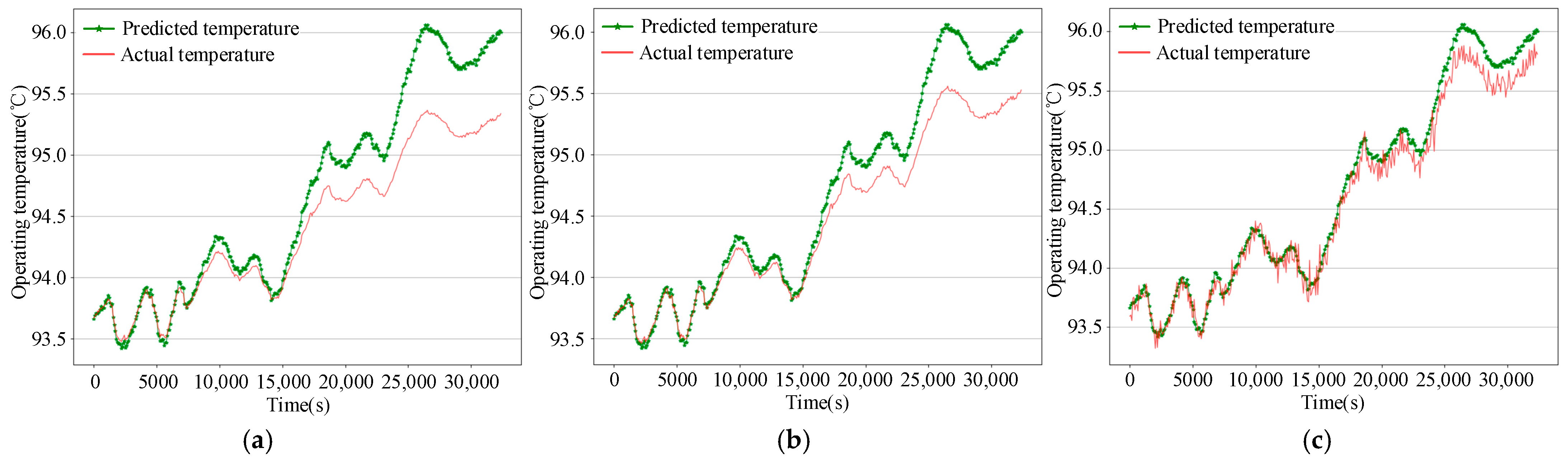

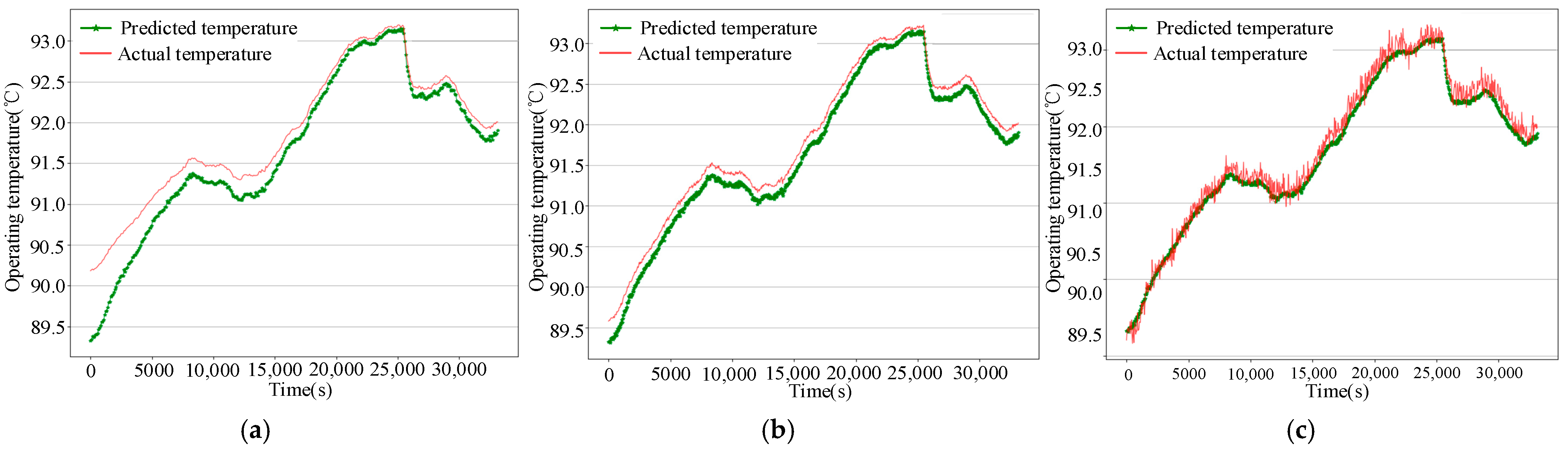

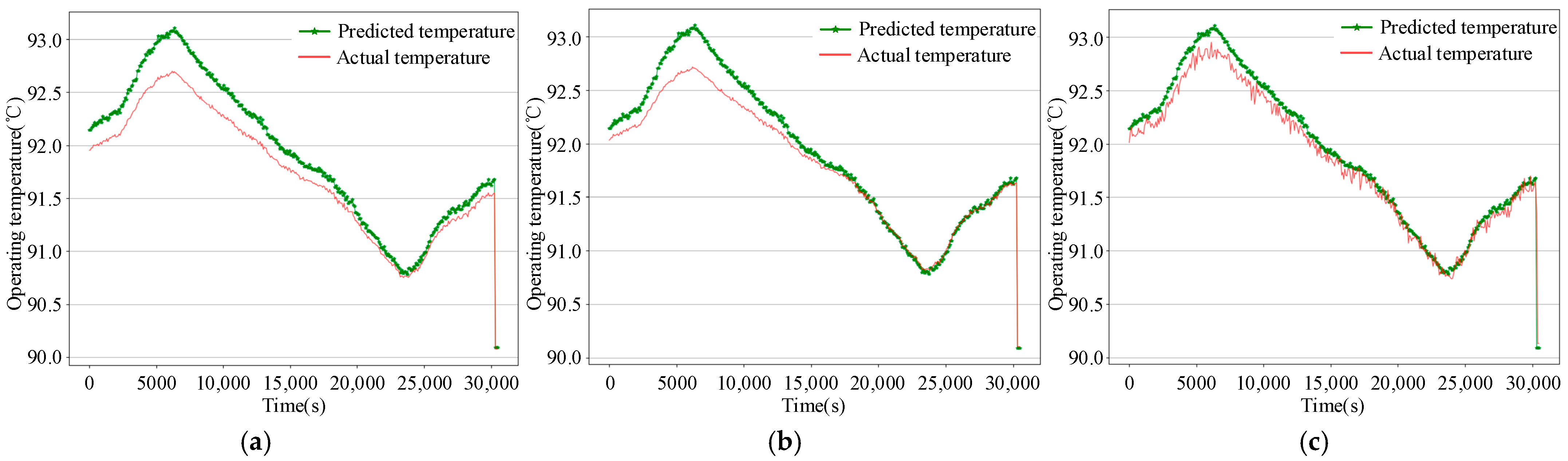

3.3. The Proposed CNN-LSTM-Attention-Based Remaining Useful Life Prediction Method

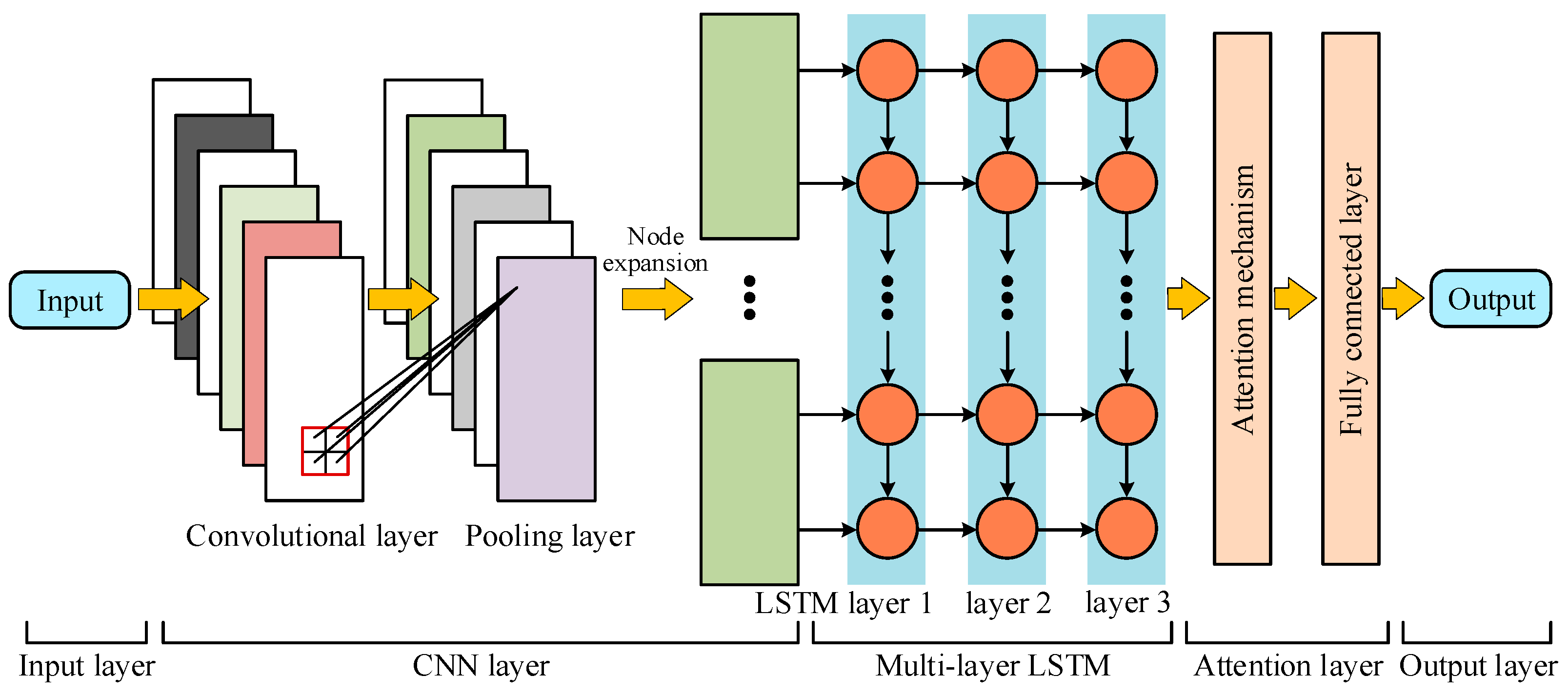

The workflow for predicting the RUL of tantalum capacitors integrates deep learning techniques with the physical degradation mechanisms of the capacitors. It estimates the remaining lifetime by analyzing multidimensional degradation data, including the operating temperature, electrical parameters, and environmental conditions. Given the strong feature extraction capability of convolutional neural networks (CNNs) and the long time series of degradation data for tantalum capacitors, a CNN-LSTM-Attention-based model is developed for RUL prediction. The structure of the prediction model is illustrated in Figure 2.

Specifically, the CNN is composed of convolutional layers, pooling layers, and fully connected layers, and it can automatically learn meaningful features from the input data without manual feature engineering. The convolutional layers are the foundation and core of the CNN and carry out the critical task of feature extraction. The convolution operation is expressed as follows:

$$C_{i,j} = \sum_{u=1}^{p} \sum_{v=1}^{q} I_{i+u-1,\, j+v-1}\, K_{u,v} + b \tag{8}$$

where $C_{i,j}$ is the value of the output feature map at position $(i, j)$, $I$ is the input data, $K$ is a convolution kernel of size $p \times q$, and $b$ is the bias (offset) term. As the convolution kernel slides over the input layer, the equation can be expressed as

By comparing Equations (8) and (9), it can be observed that $i' = i + m$ and $j' = j + m$; that is, the output values undergo the same spatial displacement as the input. This property gives the CNN translation equivariance, enabling it to preserve the spatial information of the input data. To keep the spatial dimensions of the output feature maps consistent with those of the input data, zero padding is applied when the convolution kernel reaches the edges of the input. The number of convolution kernels directly determines the depth (number of channels) of the output feature maps, and the kernel size is a key factor in defining the receptive field, since kernels of different sizes extract spatial features at different scales.
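A direct Python sketch of the zero-padded convolution follows. It implements the cross-correlation form used by CNN libraries and offsets the input index by half the kernel size, so that an odd-sized kernel yields an output with the same size as the input. A single-row input mimics a temperature time series: shifting the impulse in the input shifts the response by the same amount, which is the displacement property discussed above. The function name is illustrative.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_same(inp: Matrix, kernel: Matrix, bias: float = 0.0) -> Matrix:
    """Zero-padded 'same' convolution (cross-correlation) for odd p x q kernels."""
    h, w = len(inp), len(inp[0])
    p, q = len(kernel), len(kernel[0])
    pu, pv = p // 2, q // 2  # center the kernel on each output position
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for u in range(p):
                for v in range(q):
                    r, c = i + u - pu, j + v - pv
                    if 0 <= r < h and 0 <= c < w:  # positions outside the input count as 0
                        acc += inp[r][c] * kernel[u][v]
            out[i][j] = acc
    return out


if __name__ == "__main__":
    k = [[1.0, 2.0, 3.0]]
    print(conv2d_same([[0, 0, 1, 0, 0, 0, 0]], k))
    print(conv2d_same([[0, 0, 0, 0, 1, 0, 0]], k))  # same response, shifted by 2
```

Zero padding keeps the output length equal to the input length, which lets several convolutional layers be stacked without shrinking the time axis.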

The pooling layer is a crucial structure in a CNN for reducing the spatial dimensions of the data and is typically placed after the convolutional layers. By shrinking the feature maps while maintaining the number of channels, the pooling layer decreases the number of parameters in subsequent layers, which improves computational efficiency and helps mitigate overfitting. The most common pooling methods are average pooling and max pooling. Because max pooling is better at capturing and extracting prominent features, this study adopts the max pooling strategy. The mathematical expression is as follows:

After being processed by the convolutional and pooling layers, the data must be flattened before being input into the fully connected (FC) layer. The FC layer further integrates the features extracted and fused by the preceding layers, reduces their dimensionality, and ultimately produces the prediction results through forward propagation. Mathematically, the FC layer computes a linear combination of its inputs using a weight matrix and a bias vector:

$$z = w\,a + b$$

where $z$ is the output of the current layer, $w$ is the weight matrix of the current layer, $a$ is the output of the previous layer, and $b$ is the bias (offset) vector.
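The pooling, flattening, and fully connected stages can be sketched in Python as below. The max-pooling function is one-dimensional because the degradation data are time series (a 2-D version takes the maximum over a window in both dimensions), and `dense` computes $z = w a + b$ with one output per row of the weight matrix. Window size, stride, weights, and function names are illustrative assumptions.

```python
from typing import List, Sequence


def max_pool1d(x: Sequence[float], size: int, stride: int) -> List[float]:
    """Keep the largest value in each window of `size` samples, stepping by `stride`."""
    return [max(x[s:s + size]) for s in range(0, len(x) - size + 1, stride)]


def flatten(channels: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate per-channel feature vectors into one vector for the FC layer."""
    return [v for ch in channels for v in ch]


def dense(a: Sequence[float], w: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Fully connected layer z = w a + b."""
    if any(len(row) != len(a) for row in w) or len(w) != len(b):
        raise ValueError("shape mismatch between w, a and b")
    return [sum(wij * aj for wij, aj in zip(row, a)) + bi for row, bi in zip(w, b)]


if __name__ == "__main__":
    # Two hypothetical channels of convolution output, each 4 samples long.
    conv_out = [[1.0, 3.0, 2.0, 5.0], [4.0, 0.0, 1.0, 2.0]]
    pooled = [max_pool1d(ch, size=2, stride=2) for ch in conv_out]  # [[3, 5], [4, 2]]
    a = flatten(pooled)                                            # [3, 5, 4, 2]
    z = dense(a, w=[[0.1, 0.1, 0.1, 0.1]], b=[0.0])                # single RUL-like output
    print(pooled, a, z)
```

With a pooling window of 2 and a stride of 2, each channel is halved in length, which in turn halves the number of FC weights needed per channel.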

Long short-term memory (LSTM) networks are designed to address the vanishing and exploding gradient problems commonly encountered when processing long sequence data. The core architecture consists of a forget gate, an input gate, an output gate, and a memory cell. The forget gate regulates how much of the previous memory cell content is retained, the input gate determines how much new information is stored in the memory cell, and the output gate controls how much of the memory cell content is used to compute the current hidden state and output. Each gate is computed from the current input and the previous hidden state using a sigmoid activation function, whose output between 0 and 1 represents the proportion of information allowed to pass. The update mechanisms for the three gates and the memory cell are as follows:

In these equations, the candidate (intermediate) memory state at the current time step is obtained by applying the tanh activation function to the current input and the previous hidden state. The outputs of the forget gate and the input gate regulate how strongly the memory cell is updated, enabling the storage and propagation of temporal information within the LSTM network. Finally, the output of the output gate, multiplied by the tanh-activated value of the current memory cell, determines the output at the current time step, which also serves as the hidden state for the next time step. This design allows the LSTM to carry historical information from the entire input sequence into the state update at each time step, improving its performance on long sequence data.
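The gate updates described above correspond to the standard LSTM cell, sketched below in plain Python. The parameter layout (one weight matrix and bias per gate, acting on the concatenation of the previous hidden state and the current input) is a common convention assumed here, not necessarily the exact parameterization used in this study; `zero_params` is a hypothetical helper for checking the arithmetic.

```python
import math
from typing import Dict, List, Sequence, Tuple

Params = Dict[str, Tuple[List[List[float]], List[float]]]


def _sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def _affine(w: List[List[float]], b: List[float], v: Sequence[float]) -> List[float]:
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(w, b)]


def lstm_step(x: Sequence[float], h_prev: Sequence[float], c_prev: Sequence[float],
              params: Params) -> Tuple[List[float], List[float]]:
    """One time step; params['f'|'i'|'g'|'o'] = (W, b) applied to [h_prev, x]."""
    hx = list(h_prev) + list(x)
    f = [_sigmoid(v) for v in _affine(*params["f"], hx)]    # forget gate
    i = [_sigmoid(v) for v in _affine(*params["i"], hx)]    # input gate
    g = [math.tanh(v) for v in _affine(*params["g"], hx)]   # candidate memory state
    o = [_sigmoid(v) for v in _affine(*params["o"], hx)]    # output gate
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]  # memory cell update
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]        # new hidden state / output
    return h, c


def zero_params(hidden: int, n_in: int) -> Params:
    """All-zero weights and biases, useful for checking the gate arithmetic."""
    return {k: ([[0.0] * (hidden + n_in) for _ in range(hidden)], [0.0] * hidden)
            for k in "figo"}


if __name__ == "__main__":
    params = zero_params(hidden=2, n_in=1)
    h, c = [0.0, 0.0], [1.0, -2.0]
    for x in ([0.3], [0.5], [0.9]):  # hypothetical normalized temperature samples
        h, c = lstm_step(x, h, c, params)
    print(h, c)
```

Feeding each returned `(h, c)` into the next call runs the cell over a window; the resulting sequence of hidden states is what an attention layer can subsequently weight.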

The use of attention mechanisms in deep learning is inspired by the human visual attention system, which concentrates and shifts attention during information processing. Attention mechanisms are generally classified into two types: hard attention and soft attention. Given the advantage of soft attention in allocating weights dynamically, this study adopts the soft attention mechanism. The mathematical expression of the soft attention mechanism can be represented as follows:

$$c_t = \sum_{i=1}^{n} \alpha_i\, x_i$$

where $c_t$ is the weighted output of the model at time step $t$, $\alpha_i$ is the attention weight for the $i$-th of the $n$ elements of the input data, and $x_i$ is the corresponding input feature.

Through this weighting process, the model dynamically adjusts its focus at each time step, prioritizing the processing of input features assigned higher weights. This mechanism not only enhances the flexibility of the model in processing information but also significantly improves its ability to capture and utilize critical data features.
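A minimal soft-attention sketch in Python follows: each time step's feature vector receives a score, the scores are normalized with a softmax so that the weights are positive and sum to 1, and the output is the weighted sum of the features. Scoring by a dot product with a `query` vector is an assumption made for illustration; in a trained model the scoring parameters are learned.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(features: Sequence[Sequence[float]],
                   query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Return (weighted output, attention weights) over the time steps in `features`."""
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in features]
    weights = softmax(scores)
    dim = len(features[0])
    output = [sum(wt * feat[k] for wt, feat in zip(weights, features)) for k in range(dim)]
    return output, weights


if __name__ == "__main__":
    steps = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical per-step LSTM features
    out, weights = soft_attention(steps, query=[2.0, 0.0])
    print(out, weights)
```

Because the softmax weights are continuous, the whole weighting can be trained by backpropagation, which is the practical advantage of soft attention over hard selection.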

The channel attention mechanism is designed to learn the relative importance of the channels in the convolutional layers of a model and is a key implementation of soft attention. It is implemented by introducing a squeeze module and an excitation module. The squeeze module reduces the feature map of each channel to a single scalar value that captures the global information of that channel. The mathematical expression is as follows:

$$z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j)$$

where $z_c$ is the squeezed descriptor of the $c$-th channel, $H$ and $W$ are the height and width of the convolutional feature map, and $u_c$ is the feature map of the $c$-th channel.

The excitation module evaluates and determines the relative importance of each channel by applying an activation function. The specific implementation process is as follows:

where $\delta$ denotes a nonlinear activation function such as ReLU, and $\sigma$ denotes the activation function, such as a sigmoid, that maps the global information of each channel to a weight. Through this mechanism, the excitation module assigns each channel a weight that reflects its contribution and importance within the entire network. This dynamically learned channel reweighting gives deep learning models an effective means of processing complex information in a more refined and flexible manner, thereby improving the accuracy and efficiency of the model.
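The squeeze and excitation steps can be sketched in Python in the style of squeeze-and-excitation networks: global average pooling produces one descriptor per channel, two small fully connected layers with ReLU ($\delta$) and sigmoid ($\sigma$) activations turn the descriptors into channel weights, and each feature map is rescaled by its weight. The weight matrices `w1` (reducing the channel count) and `w2` (restoring it), and the omission of bias terms, are assumptions of this sketch.

```python
import math
from typing import List, Sequence, Tuple

FeatureMaps = List[List[List[float]]]  # indexed as [channel][row][column]


def squeeze(u: FeatureMaps) -> List[float]:
    """Global average pooling: z_c = (1 / (H * W)) * sum of u_c over all positions."""
    return [sum(sum(row) for row in uc) / (len(uc) * len(uc[0])) for uc in u]


def excitation(z: Sequence[float], w1: Sequence[Sequence[float]],
               w2: Sequence[Sequence[float]]) -> List[float]:
    """Channel weights s = sigmoid(w2 . relu(w1 . z)), each in (0, 1)."""
    hidden = [max(0.0, sum(a * b for a, b in zip(row, z))) for row in w1]  # delta = ReLU
    return [1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(row, hidden)))) for row in w2]


def se_block(u: FeatureMaps, w1: Sequence[Sequence[float]],
             w2: Sequence[Sequence[float]]) -> Tuple[FeatureMaps, List[float]]:
    """Rescale every feature map by its channel weight."""
    s = excitation(squeeze(u), w1, w2)
    return [[[sc * v for v in row] for row in uc] for sc, uc in zip(s, u)], s


if __name__ == "__main__":
    u = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]  # two hypothetical 2x2 channels
    # C = 2 channels reduced to 1 hidden unit, then restored to 2 weights.
    rescaled, s = se_block(u, w1=[[1.0, 0.0]], w2=[[2.0], [-2.0]])
    print(s, rescaled)
```

The bottleneck in `w1` keeps the excitation cheap, and the sigmoid lets several channels be emphasized at once rather than forcing the weights to compete as a softmax would.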