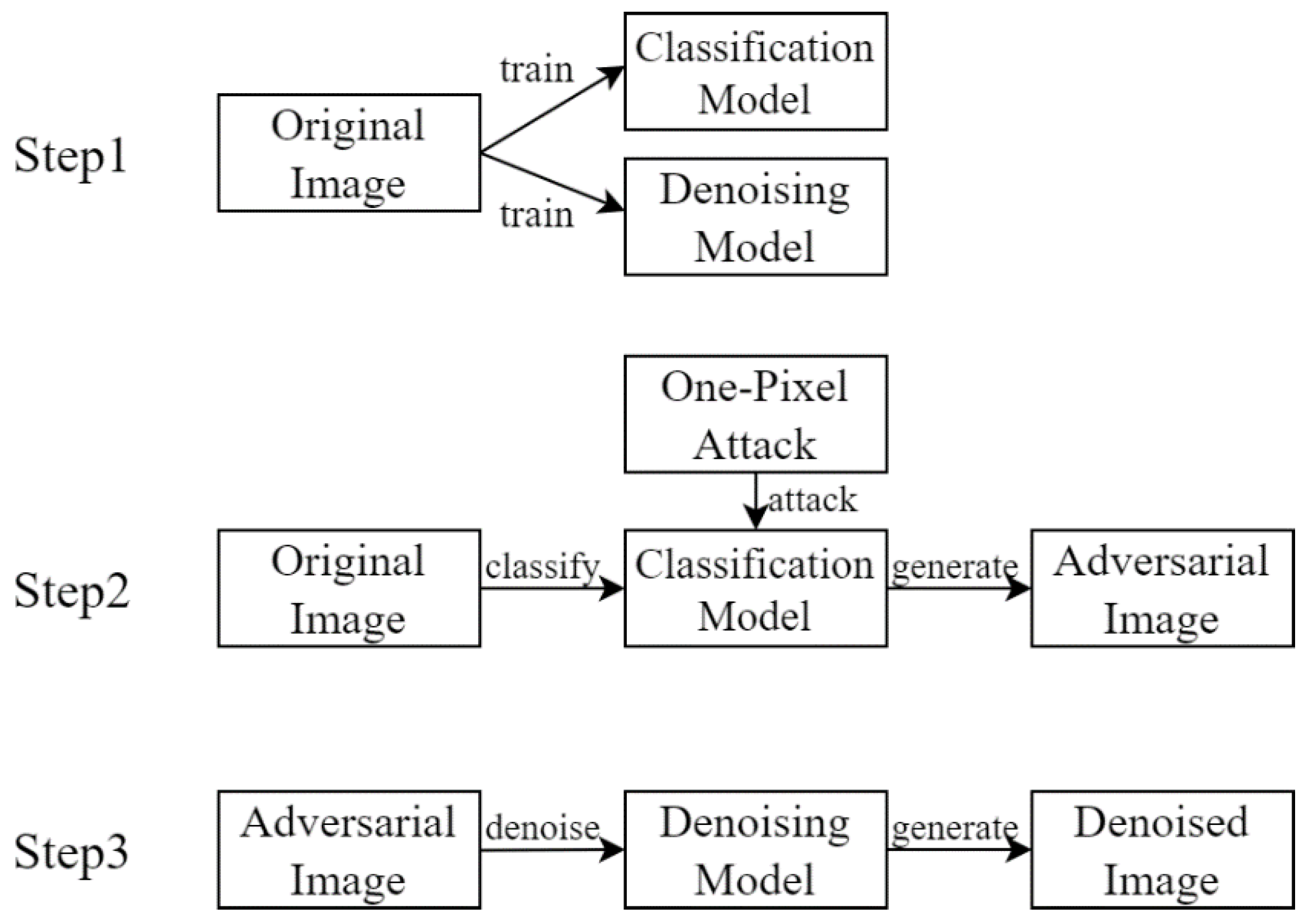

This study is based on applying pixel attacks to images and describing the methods used to restore the attacked images to their original state. The different techniques and theories used in the experiments are described below.

3.1.1. Attack Method

- (1) One-Pixel Attack

The original image is assumed to be represented as an n-dimensional array x, and the machine learning model under attack is denoted as f. The confidence level f(x) can be obtained by inputting the original normal image x into the model f, and adversarial images can then be generated by perturbing pixels in the image x. The perturbation applied to these pixels is bounded by a limit denoted as L. Given that the set of categories in the dataset is C, the goal is to transform the image from its original category into an adversarial category, where both categories belong to C. This problem can be described by the following formula:

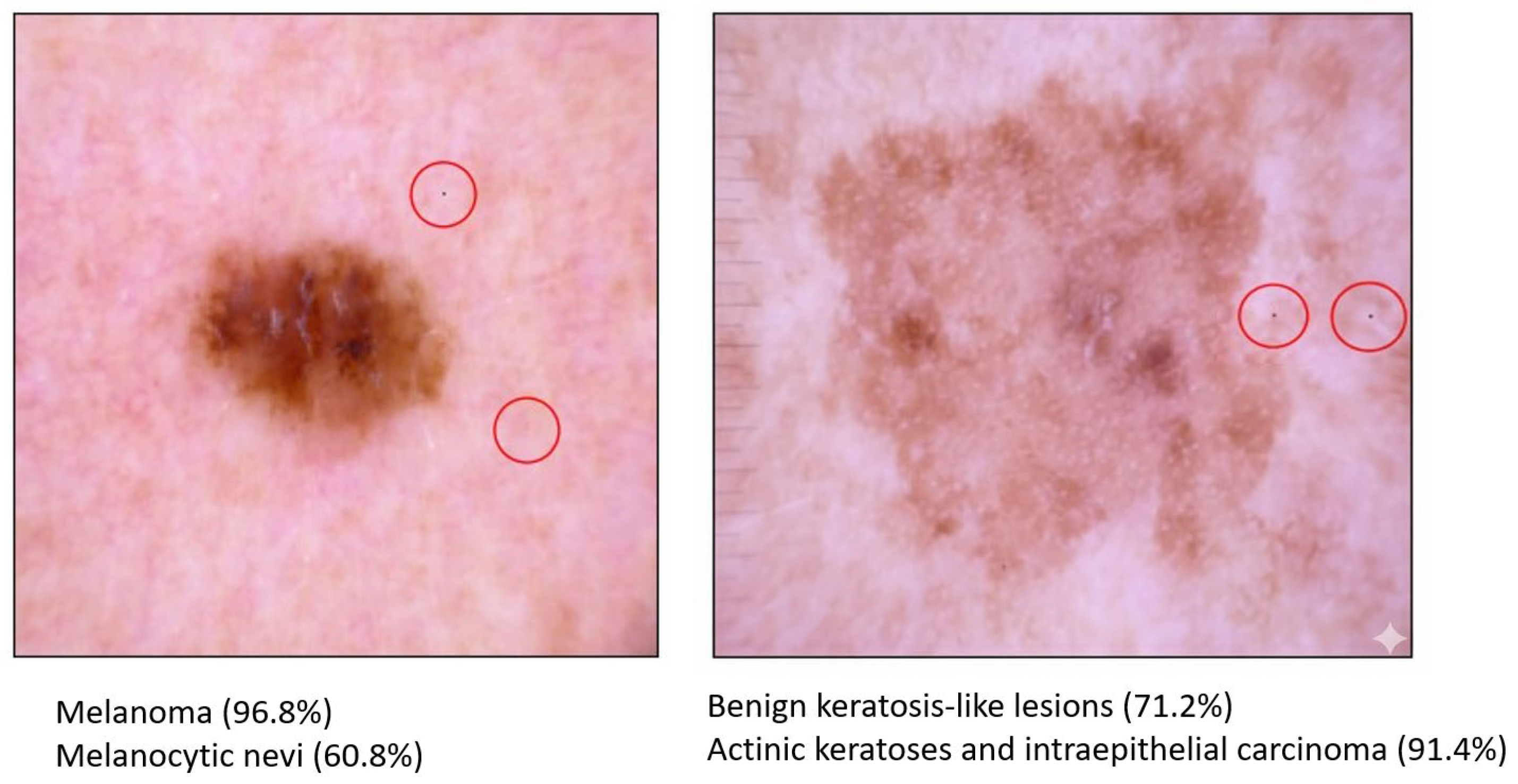

In the case of a one-pixel attack, since the intention is to alter only a single pixel in the image, the value of L is set to 1, which turns the above objective into an optimization problem. The most straightforward solution is an exhaustive search, which requires trying every combination of the image’s x-coordinate, y-coordinate, and RGB color channel values. However, this method requires an enormous amount of time, potentially years, when dealing with large images or images with multiple color channels. For instance, for a 3 × 224 × 224 image, the algorithm must decide the x and y coordinates and the values of the red, green, and blue channels. As each channel has 256 possible values and there are 224 × 224 possible coordinate combinations, 224 × 224 × 256 × 256 × 256 = 841,813,590,016 combinations would need to be evaluated to generate a single adversarial image. Since an exhaustive search over this many candidates is impractical, a differential evolution algorithm is used to generate candidate combinations instead.
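The size of this search space can be checked with a few lines of Python. The sketch below only reproduces the arithmetic from the text; the throughput of 1,000 model queries per second is a hypothetical figure chosen for illustration and is not taken from the study.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def one_pixel_search_space(height, width, channels=3, levels=256):
    """Count every (x, y, channel values) candidate for a single-pixel change."""
    return height * width * levels ** channels


total = one_pixel_search_space(224, 224)
print(f"{total:,} candidates")  # 841,813,590,016 candidates

# Hypothetical throughput, assumed only to give a sense of scale.
queries_per_second = 1_000
years = total / queries_per_second / SECONDS_PER_YEAR
print(f"about {years:.1f} years at {queries_per_second} queries per second")
```

Even at this assumed rate, a single image would take decades, which is why a guided search is preferred.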

- (2) Differential Evolution

Differential Evolution (DE) [12] is a branch of the Evolution Strategy (ES) [32], which is designed based on the concept of a natural breeding process. The DE process used in this study consists of the following steps: initial population, mutation, crossover, selection, termination, and fitness score.

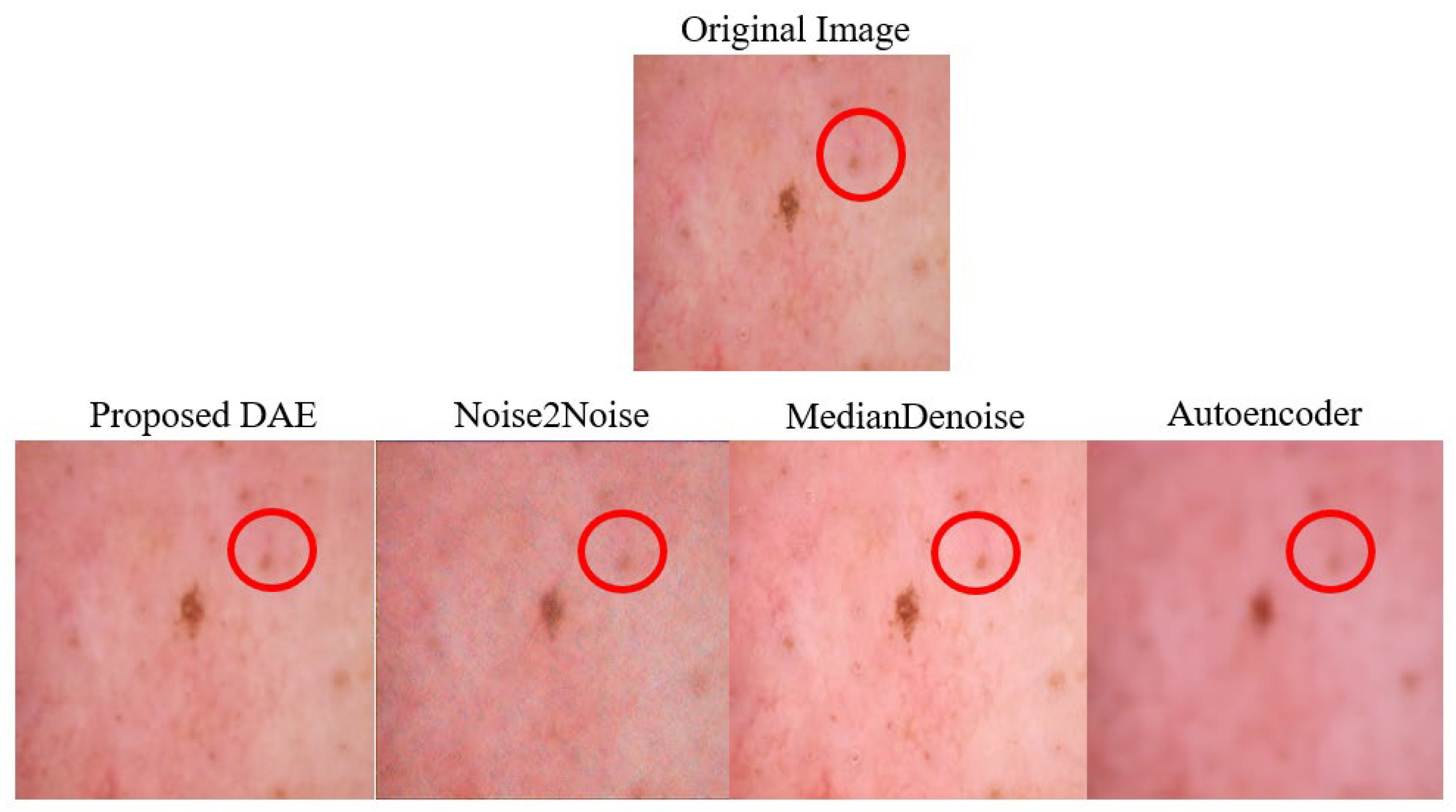

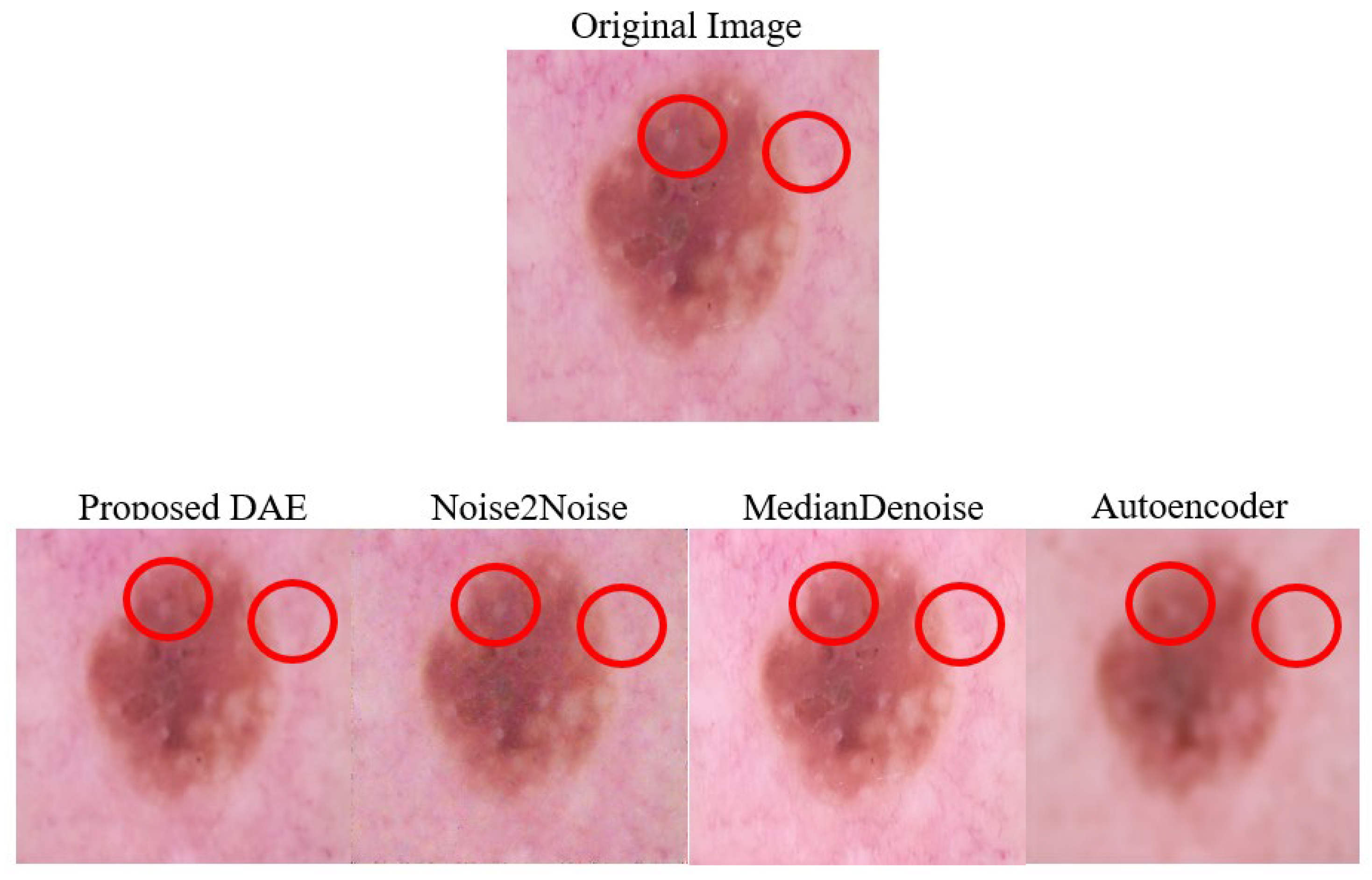

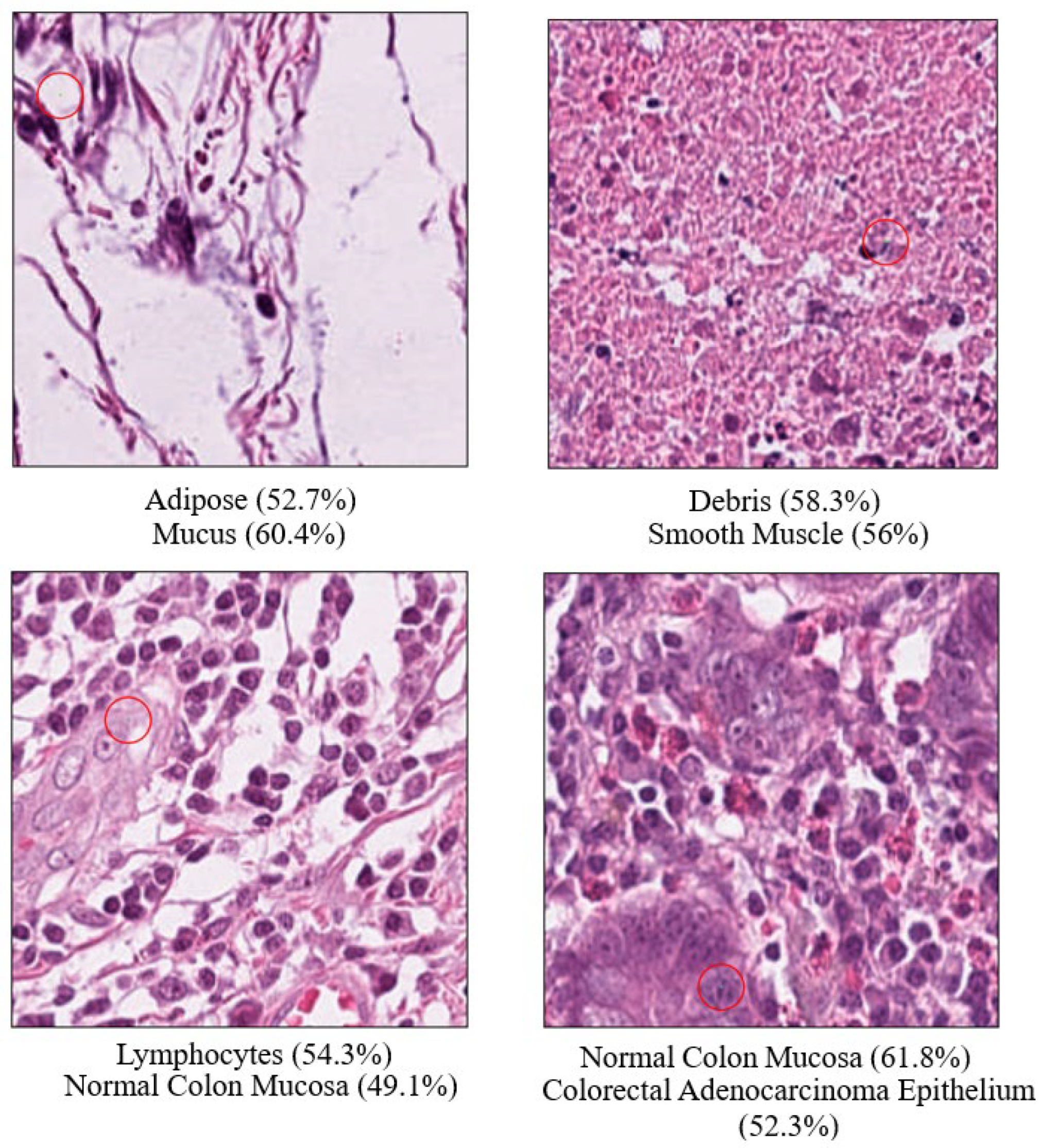

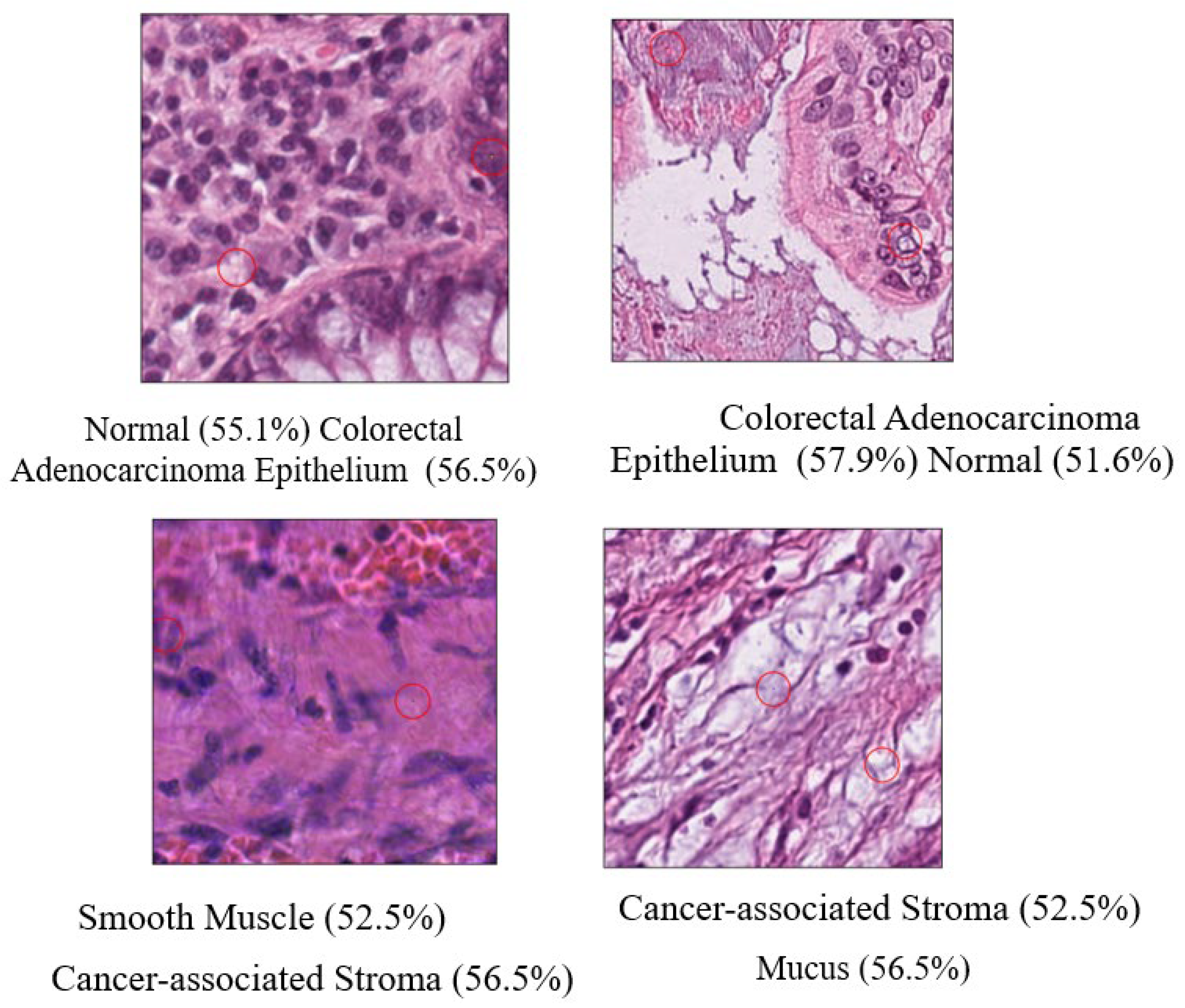

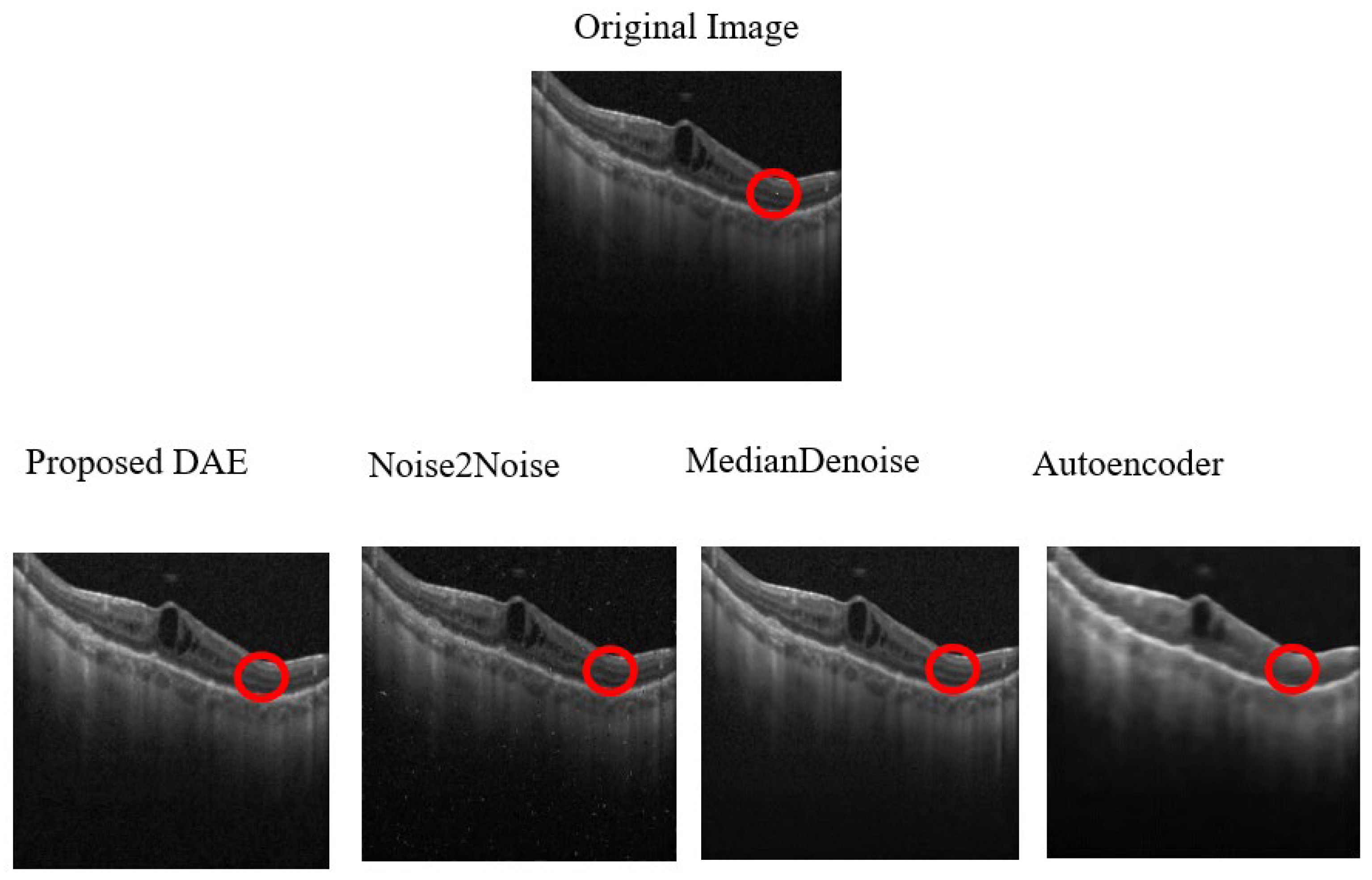

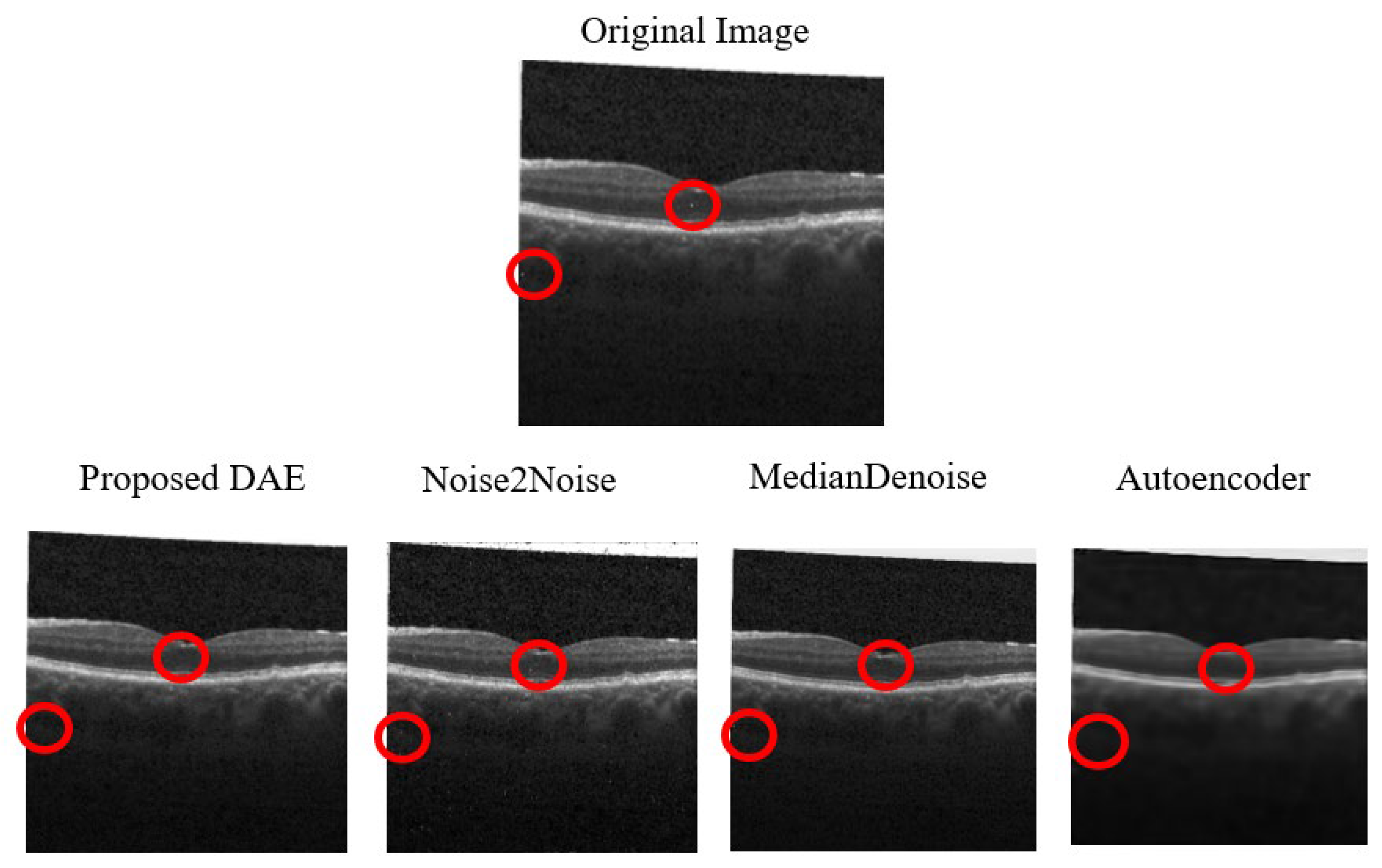

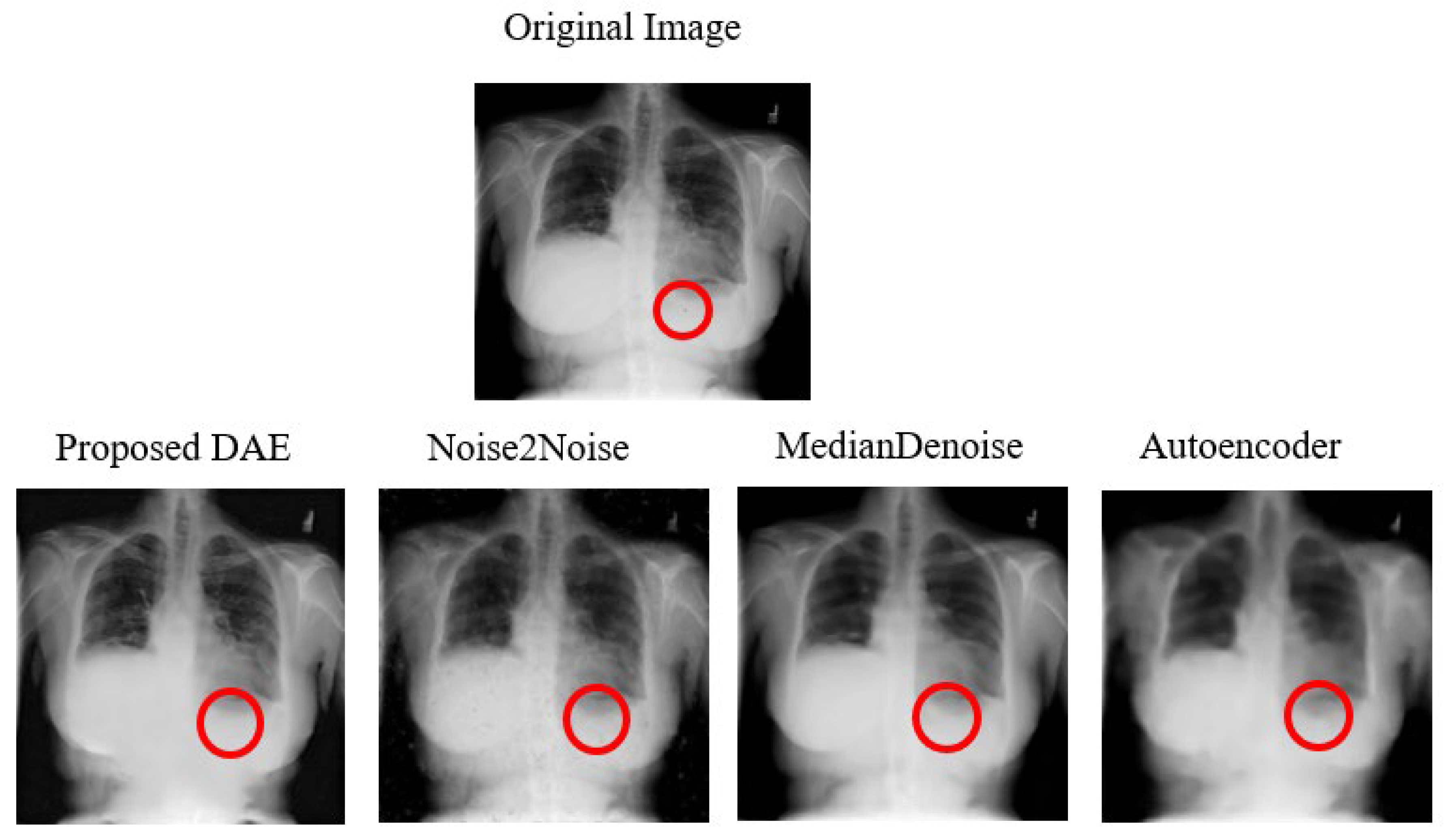

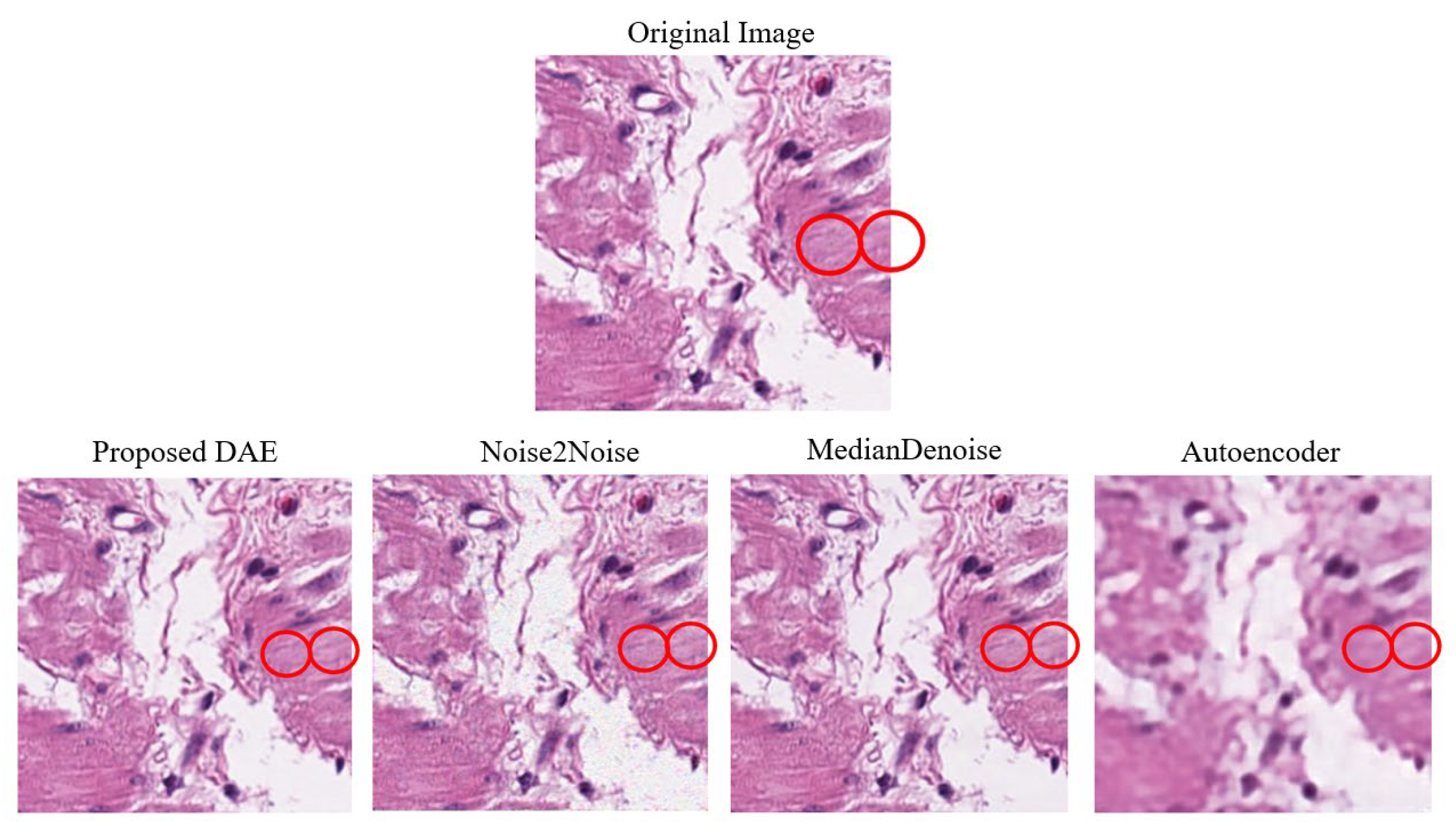

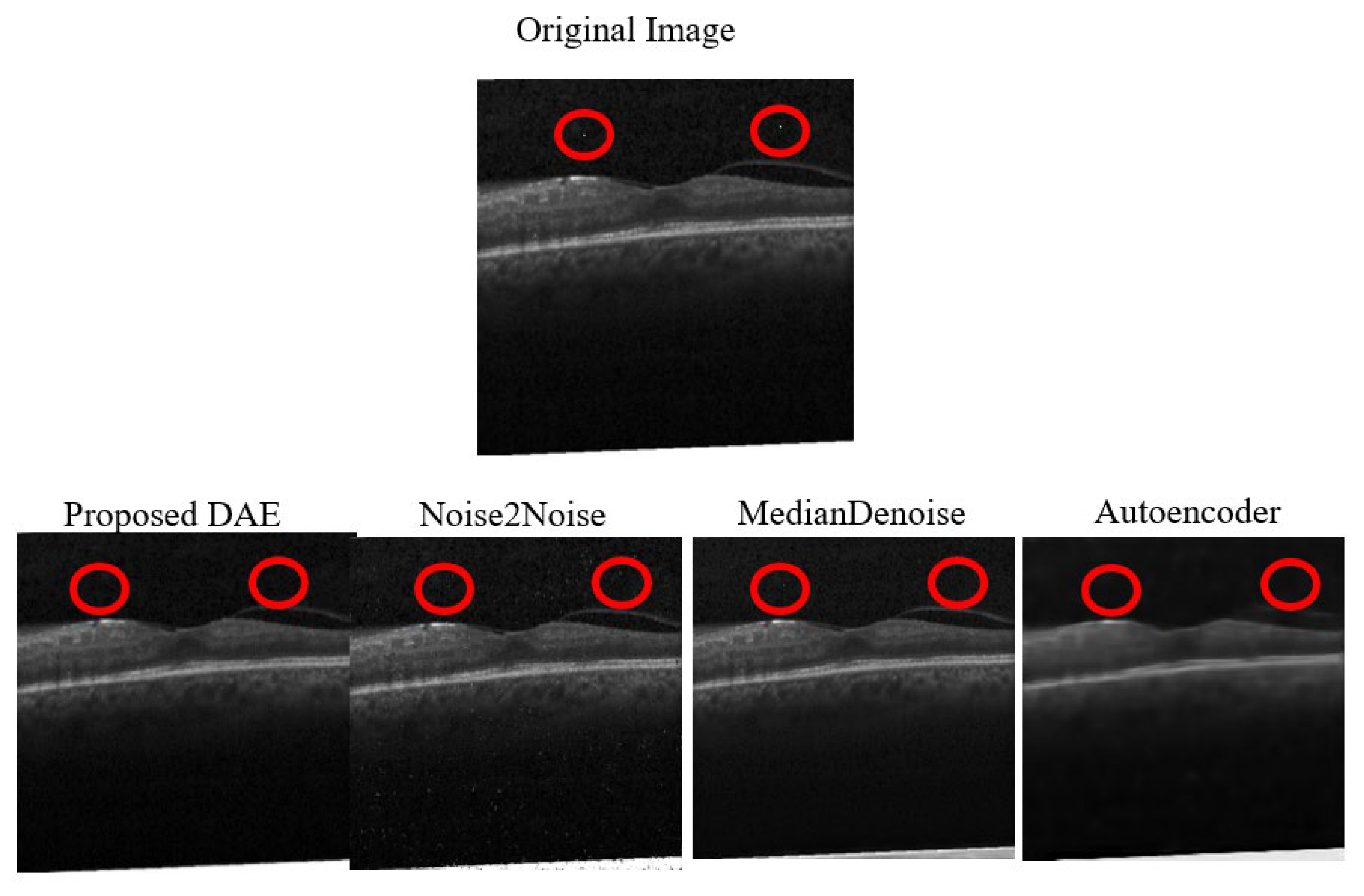
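The DE steps listed above (initial population, mutation, crossover, selection, termination, fitness score) can be sketched in plain Python. This is a generic DE/rand/1/bin variant under stated assumptions, not necessarily the exact configuration used in this study: the population size, scale factor `f_scale`, crossover rate `cr`, and generation budget are illustrative values, and `toy_fitness` is a hypothetical stand-in for the model confidence that a real attack would query. Each candidate encodes one pixel as (x, y, R, G, B).

```python
import random

# Search bounds for one pixel of a 224 x 224 RGB image: (x, y, R, G, B).
BOUNDS = [(0, 223), (0, 223), (0, 255), (0, 255), (0, 255)]
HIDDEN_TARGET = [120.0, 45.0, 200.0, 30.0, 90.0]  # hypothetical optimum


def toy_fitness(candidate):
    """Stand-in fitness (lower is better); a real attack would query the model."""
    return sum((c - t) ** 2 for c, t in zip(candidate, HIDDEN_TARGET))


def differential_evolution(fitness, bounds, pop_size=20, f_scale=0.5,
                           cr=0.7, generations=200, seed=0):
    rng = random.Random(seed)
    dim = len(bounds)
    # Initial population: uniform samples inside the bounds.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [fitness(ind) for ind in pop]
    for _ in range(generations):  # termination: fixed generation budget
        for i in range(pop_size):
            # Mutation: combine three distinct individuals other than i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][k] + f_scale * (pop[b][k] - pop[c][k])
                      for k in range(dim)]
            # Crossover: binomial, with one coordinate always taken from the mutant.
            forced = rng.randrange(dim)
            trial = [mutant[k] if (rng.random() < cr or k == forced) else pop[i][k]
                     for k in range(dim)]
            # Keep the trial inside the valid coordinate and color ranges.
            trial = [min(max(v, lo), hi) for v, (lo, hi) in zip(trial, bounds)]
            # Selection: the trial replaces its parent only if it is no worse.
            trial_score = fitness(trial)
            if trial_score <= scores[i]:
                pop[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]


best, score = differential_evolution(toy_fitness, BOUNDS)
print([round(v) for v in best], score)
```

Because selection is greedy, the best fitness in the population never gets worse from one generation to the next, so the search evaluates only a few thousand candidates instead of the full space.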

3.1.2. Denoising Model

Different denoising models and combinations will be used in this study to denoise medical images that have been subjected to single-pixel attacks, and to compare and analyze the restoration results. Pixel restoration primarily follows the method outlined by Senapati et al. [9], with adjustments made to the model architecture and experimental settings. Three other existing denoising models will also be used for comparison. Image optimization will refer to the approach used by Zhang et al. [22] to more effectively remove noise points from images.

There are several methods of adding noise to images, such as salt-and-pepper noise, Gaussian noise, and Poisson noise. Among them, random-valued impulsive noise preserves the colors of some pixels and replaces others with random values drawn from the range of normalized pixel values across the RGB channels, rather than replacing them with pure white or black. Each pixel has a probability p of being replaced and a probability 1 – p of retaining its original color. Compared to Gaussian noise, random-valued impulsive noise is a closer approximation of the alterations made by single-pixel attacks. The denoising models trained in this study therefore use this method of noise addition. The denoising model architectures used in the study are introduced separately below, along with their principles.
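A minimal sketch of random-valued impulsive noise is given below. It assumes that an image is stored as nested lists of RGB tuples normalized to [0, 1] and that replacement values are drawn uniformly; the function name and data layout are illustrative choices, not the study's implementation.

```python
import random


def add_random_impulse_noise(image, p, rng):
    """Replace each pixel with a uniform random color with probability p.

    `image` is a list of rows, each row a list of (r, g, b) tuples in [0, 1].
    Pixels that are not selected keep their original color.
    """
    noisy = []
    for row in image:
        new_row = []
        for pixel in row:
            if rng.random() < p:
                # Random value per channel, not pure black or white.
                new_row.append(tuple(rng.random() for _ in pixel))
            else:
                new_row.append(pixel)
        noisy.append(new_row)
    return noisy


rng = random.Random(0)
clean = [[(0.5, 0.5, 0.5)] * 100 for _ in range(100)]
noisy = add_random_impulse_noise(clean, p=0.10, rng=rng)
changed = sum(a != b for ra, rb in zip(clean, noisy) for a, b in zip(ra, rb))
print(f"fraction of replaced pixels: {changed / 10_000:.3f}")
```

With p = 0.10, roughly one pixel in ten is replaced, which matches the 10% noise level used for training.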

In the first approach, based on the Noise2Noise framework, the single-pixel attack data were addressed. Since the noise from these attacks is minimal (a single manipulated pixel), images with a consistent 10% noise level were used as both the input and the target for model training. For the second approach, a CNN model incorporating a median layer, we adopted the strategy of Liang et al. [23].

Training images were generated by adding random impulse noise with a noise level incremented by 10% across the range of 10% to 90%, thereby creating a diverse set of noisy inputs. The objective of this training was to optimize the weights for mapping noisy inputs to their clean counterparts. To accommodate the mix of color and grayscale images in the dataset, the number of input layer channels was tailored to the color type of the input image. Both models utilized the Adam optimizer, with training conducted over 100 epochs and a fixed batch size of 16.
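The two ways of building training data described above can be contrasted in a short sketch. It assumes flattened grayscale images with values in [0, 1]; the helper names are hypothetical, and the noise routine is a simplified version of random-valued impulse noise.

```python
import random


def impulse_noise(pixels, p, rng):
    """Replace each grayscale value with a uniform random value with probability p."""
    return [rng.random() if rng.random() < p else v for v in pixels]


def noise2noise_pair(clean, rng, p=0.10):
    """Two independently corrupted copies: one is the input, the other the target."""
    return impulse_noise(clean, p, rng), impulse_noise(clean, p, rng)


def supervised_pairs(clean, rng):
    """One (level, noisy input, clean target) triple per level from 10% to 90%."""
    levels = [k / 10 for k in range(1, 10)]
    return [(p, impulse_noise(clean, p, rng), clean) for p in levels]


rng = random.Random(0)
clean = [0.5] * 64  # a flattened 8 x 8 grayscale image
noisy_input, noisy_target = noise2noise_pair(clean, rng)
pairs = supervised_pairs(clean, rng)
print([level for level, _, _ in pairs])
```

In the first case neither image in a pair is clean; in the second case the target is always the clean image and only the input noise level varies.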

- (1) Autoencoder

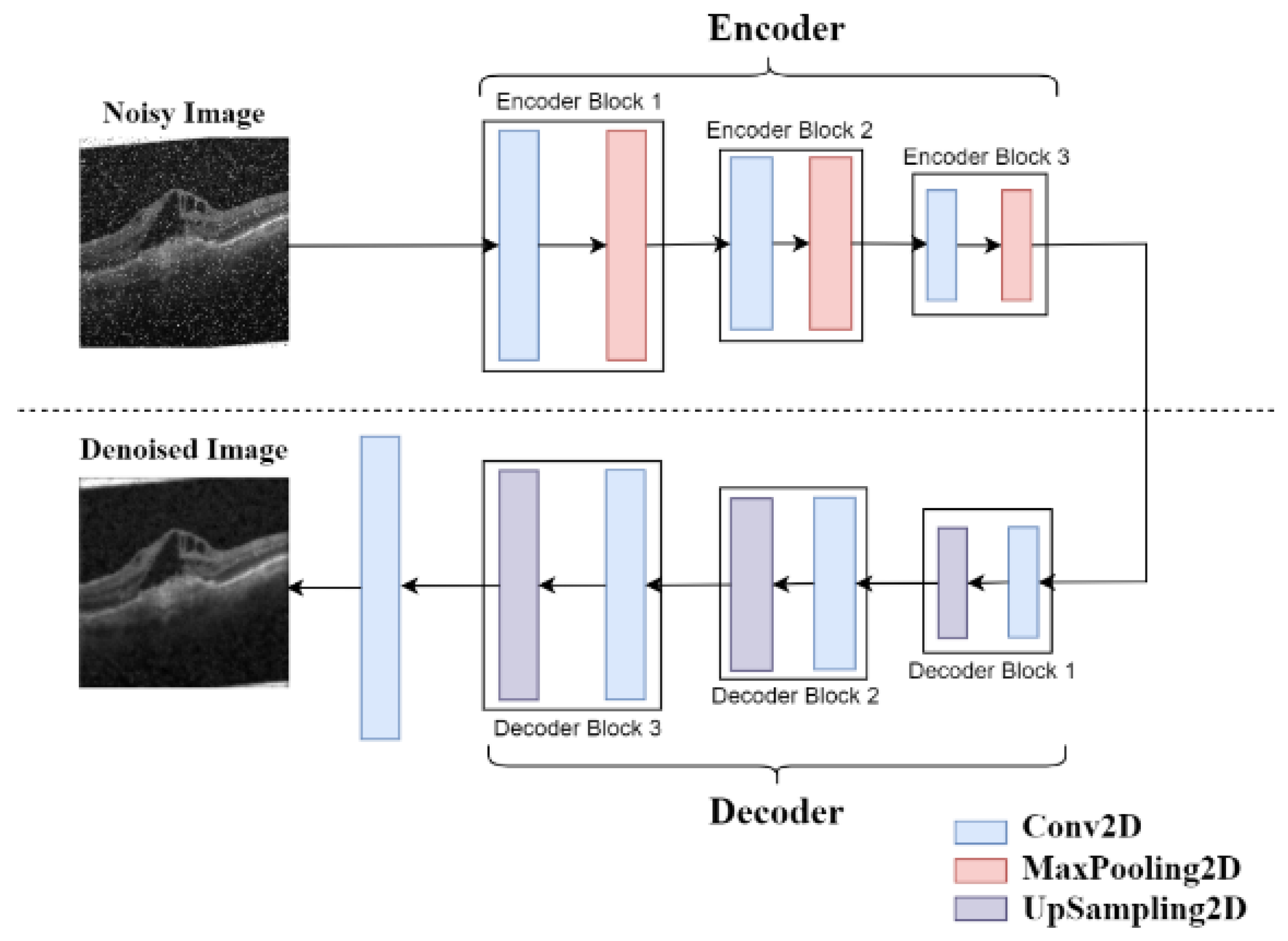

The relatively shallow and straightforward denoising model architecture used by Senapati et al. [9] is shown in Figure 1. Similarly, the decoder part consisted of only three convolutional layers, followed by up-sampling, and finally, an additional convolutional layer was added to reconstruct the image. The details of the single-channel model are presented in Table 1, while those of the RGB three-channel model are shown in Table 2.

- (1.1) Method Validation and Optimization

Experiments were conducted in this study using the AbdomenCT dataset from the Kaggle Medical MNIST, following the experimental set-up of Senapati et al. [9] to validate the original model’s accuracy. The model architecture was then modified iteratively based on the results of each training session, which led to improved Peak Signal-to-Noise Ratio (PSNR) values. The original dataset image, the results of the original study, the experimental results that validated the original model, and the results after optimizing the model are shown from left to right in Figure 2. Meanwhile, the denoising results from the original study, the validation experiment, and the optimized model are listed in sequence in Table 3.

- (2) Denoising Autoencoder (DAE)

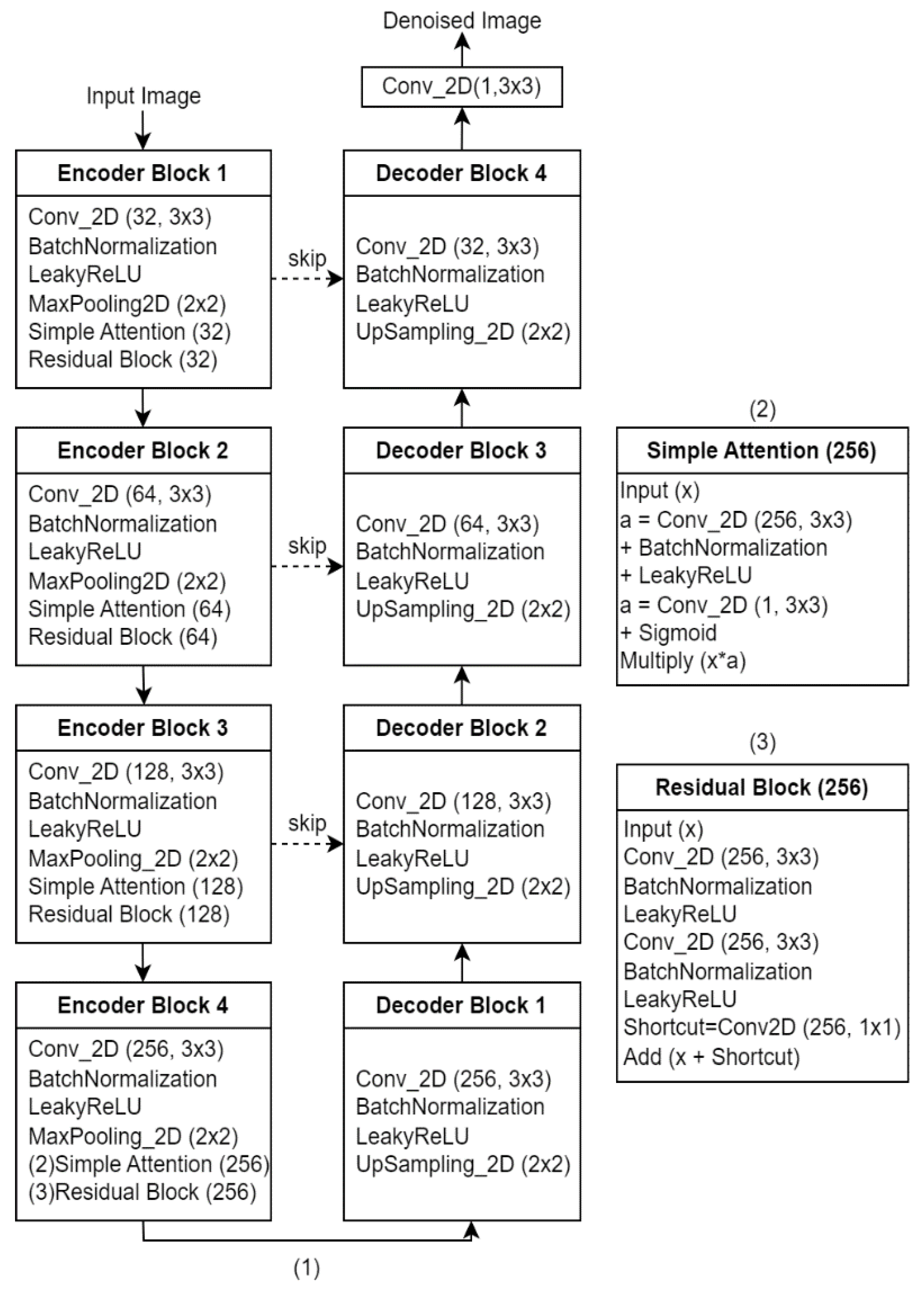

This model is based on the one proposed by Senapati et al. [9], with adjustments to the original architecture. An autoencoder is a neural network model primarily used for unsupervised learning and feature learning. Its core concept entails encoding and decoding input data to learn a compressed representation of it while preserving important information. The denoising autoencoder (DAE) used in this study is a variant of the autoencoder that is specifically designed to handle noisy data. Unlike traditional autoencoders, the primary goal of a DAE is to restore the original noise-free image by learning from noisy input images. DAEs are widely applied to real-world image processing tasks due to their ability to adapt and automatically learn the features of diverse data.

Because there was considerable room for improvement in the architecture of the original model, sequential adjustments were made to enhance the quality of the reconstructed image and the denoising effect. These adjustments were tested using the same Kaggle Abdomen CT public dataset that was used in the original paper, with 10,000 images for training and 2000 images for testing, to obtain the average experimental results. The experimental results of the sequential improvements made to the original model architecture are presented in Table 4, in which √ indicates increased depth and number of convolutional kernels, ★ denotes the use of Batch Normalization and LeakyReLU activation, ▲ represents the addition of attention layers, ● signifies residual blocks, and ♦ indicates skip connections. As shown in the table, each module addition resulted in improved experimental outcomes, thereby demonstrating the model’s feasibility.

Additionally, the depth of a model can have a significant impact on its ability to capture the features of an image. However, a model that is too deep may suffer from overfitting. To determine whether depth was an issue, the model’s performance was evaluated after both reducing and increasing the number of layers in the encoder and decoder. As in the original paper, the Kaggle Abdomen CT public dataset was used for the test, with 10,000 images for training and 2000 images for testing. The average denoising results for each depth are shown in Table 5. The experimental results indicate that the model achieves a better denoising performance at the current depth, effectively capturing the features of the image without overfitting, which validates the appropriateness of the model design at this depth.

The simple architecture of the original model is shown in Figure 3, while the improved model’s simplified architecture is depicted in Figure 4. A detailed schematic of the model can be seen in Figure 4 and Figure 5.

In contrast, non-trainable parameters are primarily found in Batch Normalization layers. As these mean and variance statistics are used solely to normalize the data and are not updated by backpropagation, they are classified as non-trainable. The performance of the model is primarily improved by learning and adjusting the trainable parameters, while the non-trainable parameters are used to normalize the data, which helps to maintain the model’s stability.

- (2.1) Encoder

In the encoding phase, the model transforms the input into a latent representation that captures the primary features of the input image. The noise filter function in the encoder enables the model to perform effectively when dealing with noisy images. When trained on noisy images, the Denoising Autoencoder (DAE) learns to filter out noise while preserving important image information, which enhances the model’s robustness and improves its ability to remove unnecessary noise during image reconstruction. Random impulse noise at a level of 10% is added to the images before training so that the model can be trained to denoise. Since the size of the attacked image is 224 × 224, the encoder input size is also set to 224 × 224. The optimizations made to the original model [9], and how these improvements are reflected in the experimental results, are explained in detail below. These optimizations include increasing the depth of the model and the number of convolutional kernels, using Batch Normalization and LeakyReLU activation functions, introducing a Simple Attention Layer, embedding residual blocks, and adding Skip Connections.

In Figure 4, √ denotes an increase in the model’s depth and the number of convolutional kernels. The ★ symbol indicates the addition of Batch Normalization and LeakyReLU activation functions in the model, which improves its training stability and expressiveness. The ▲ symbol represents the introduction of the Simple Attention Layer into the model, which aims to enable the model to focus adaptively on important parts of the input. The ● symbol indicates the embedding of residual blocks, which can also enhance the performance of deep models. Residual blocks introduce skip connections that directly add the input to the output, thereby addressing common issues in deep models, such as vanishing and exploding gradients. Finally, the ♦ symbol represents the addition of Skip Connections, further enhancing the flow of information within the model.
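The two building blocks with the simplest arithmetic, LeakyReLU and the residual addition, can be illustrated on plain lists of numbers. This is a conceptual sketch only: the negative slope `alpha = 0.01` is an assumed value, and the `transform` argument stands in for the convolutional branch of a real residual block.

```python
def leaky_relu(values, alpha=0.01):
    """Pass positive values through and scale negative values by alpha."""
    return [v if v > 0 else alpha * v for v in values]


def residual_block(x, transform):
    """Return x + transform(x): the skip path adds the input to the branch output."""
    branch = transform(x)
    return [xi + bi for xi, bi in zip(x, branch)]


x = [-2.0, 0.5, 3.0]

# If the branch outputs zeros, the block reduces to the identity mapping,
# so the input signal is never lost on the way through.
identity_out = residual_block(x, lambda v: [0.0] * len(v))

# With LeakyReLU as the branch, negative inputs still contribute a small signal.
activated = residual_block(x, leaky_relu)
print(identity_out, activated)
```

Because the input always reaches the output through the skip path, gradients have a direct route back to earlier layers, which is the property the text attributes to residual blocks.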

These encoder optimizations enable the model to identify and extract useful information more effectively during the initial feature extraction phase.

- (2.2) Decoder

In the decoding phase, the model maps the latent representation back to the original input to reconstruct the original data. The decoder’s primary goal is feature restoration. By learning to effectively reverse the latent representation generated by the encoder and reconstruct it into the original image, the decoder can rebuild important features from the input image.

This study used a mirrored convolutional layer configuration for the decoder, which was opposite to that of the encoder. The Denoising Autoencoder (DAE) used this mirrored design to take advantage of data symmetry, which improved its learning efficiency and enabled it to more effectively capture the image’s features.

Optimizations to the decoder in the new denoising model compared to the original model described in the paper are explained in detail in the next sections, along with the contribution they made to the improved experimental results.

Due to the mirrored design of the encoder and decoder, the decoder also has a depth of four layers, as indicated by the √ symbol. A deeper decoder can reconstruct images better because the deeper layers can progressively recover detailed information. The network can learn more comprehensive reconstruction features as the depth of the model increases, leading to an improved performance in restoring image details. Additionally, increasing the number of convolutional kernels enhances the model’s capability to capture a richer set of features.

Furthermore, both the encoder and decoder use the Batch Normalization and LeakyReLU activation functions. Batch Normalization helps the model to achieve better reconstruction results, while LeakyReLU facilitates learning in the negative value region, which enhances the model’s expressiveness and stability during decoding, thereby improving its ability to reconstruct features effectively.

In summary, these optimizations ensure that the decoder can more effectively restore the original image features, improving both the denoising effect and the restoration quality.

- (3)

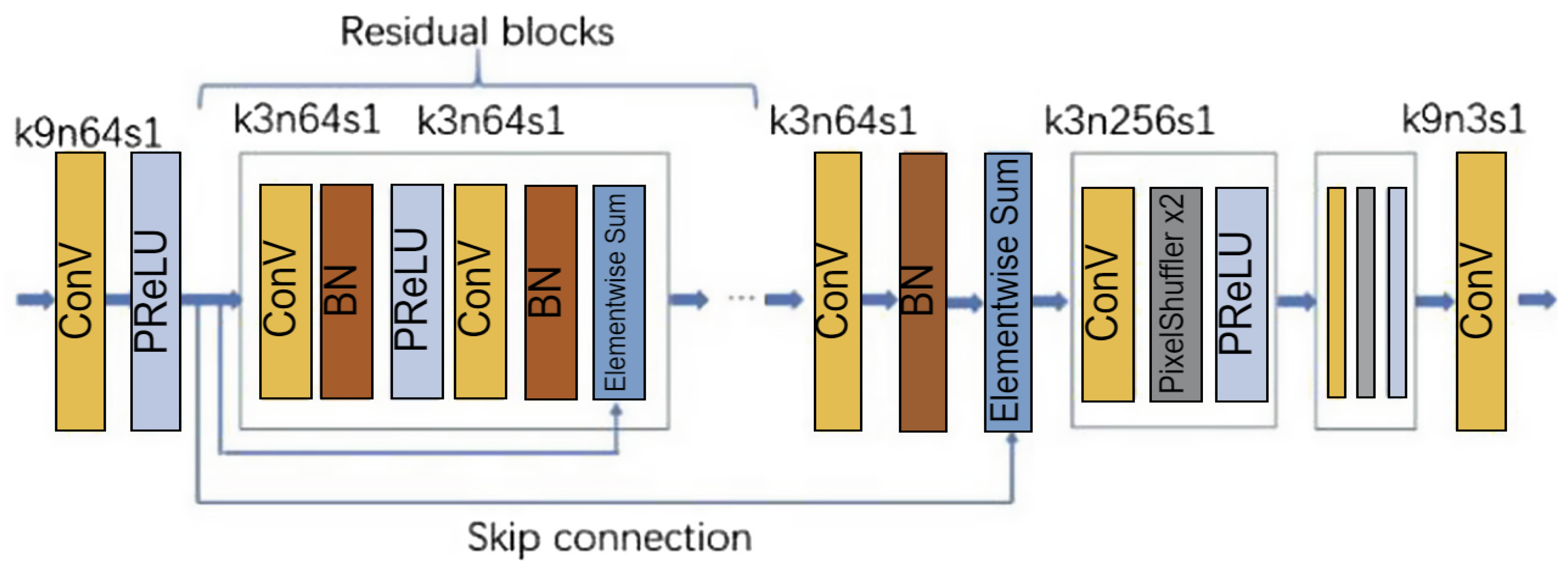

SRResNet Based on Noise2Noise Framework

Chen et al. [5] used the Noise2Noise framework for denoising, which removes the need to obtain many clean images and makes it unnecessary to train with pairs of noisy images and their corresponding clean images. Only a set of original images is required; noise is added to them to generate multiple noisy images that serve as both the input images and the target images for training the deep learning model. When using the Noise2Noise framework to defend an image against a one-pixel attack, it is necessary to choose an appropriate deep neural network structure, noise type, and loss function. SRResNet (as shown in Figure 6) is used as the deep learning structure. This is the generator network in SRGAN and is mainly used for image super-resolution (SR) tasks, in which it improves the image quality by learning the mapping between low-resolution and high-resolution images. The model is mainly constructed of 16 residual blocks. The network structure does not restrict the size of the input image, and the input and output images are the same size. This network model can remove Gaussian noise, impulsive noise, Poisson noise, and text, which makes it suitable as a defense model for many different types of source data when building secure applications. The loss function used here is the annealed version of the $L_0$ loss function, which is based on the following formula:

$$L_0 = \left(\left| f(\hat{x}) - \hat{y} \right| + \epsilon\right)^{\gamma}$$

where f represents the neural network model, $\hat{x}$ and $\hat{y}$ are the noisy input and the noisy target, $\epsilon = 10^{-8}$, and γ is annealed linearly from 2 to 0 during training. In this case, the added random noise has zero expectation, so the loss function does not learn the characteristics of the noise. Therefore, training with noisy targets achieves the same effect as training with clean targets. As a result, the model parameters obtained will be very close to those obtained by training with clean images, which enables the model to be trained to denoise images efficiently without requiring pairs of noisy and corresponding clean images.
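The annealed loss can be sketched as follows, assuming the form (|f(x) − y| + ε)^γ from the original Noise2Noise work, averaged over pixels. The averaging and the step-based schedule are assumptions made for illustration, and the function names are hypothetical.

```python
def annealed_gamma(step, total_steps, start=2.0, end=0.0):
    """Linearly interpolate gamma from `start` at step 0 to `end` at the last step."""
    fraction = step / max(total_steps - 1, 1)
    return start + (end - start) * fraction


def annealed_l0_loss(prediction, target, gamma, eps=1e-8):
    """Mean of (|prediction - target| + eps) ** gamma over all pixels."""
    terms = [(abs(p - t) + eps) ** gamma for p, t in zip(prediction, target)]
    return sum(terms) / len(terms)


prediction = [0.2, 0.5, 0.9]
target = [0.0, 0.5, 1.0]
total_steps = 5
for step in range(total_steps):
    gamma = annealed_gamma(step, total_steps)
    loss = annealed_l0_loss(prediction, target, gamma)
    print(f"step {step}: gamma = {gamma:.2f}, loss = {loss:.4f}")
```

At γ = 2 the loss behaves like a squared error, and as γ approaches 0 every term tends toward 1 regardless of the error magnitude, so large outliers such as impulse noise in the target have progressively less influence.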

- (4)

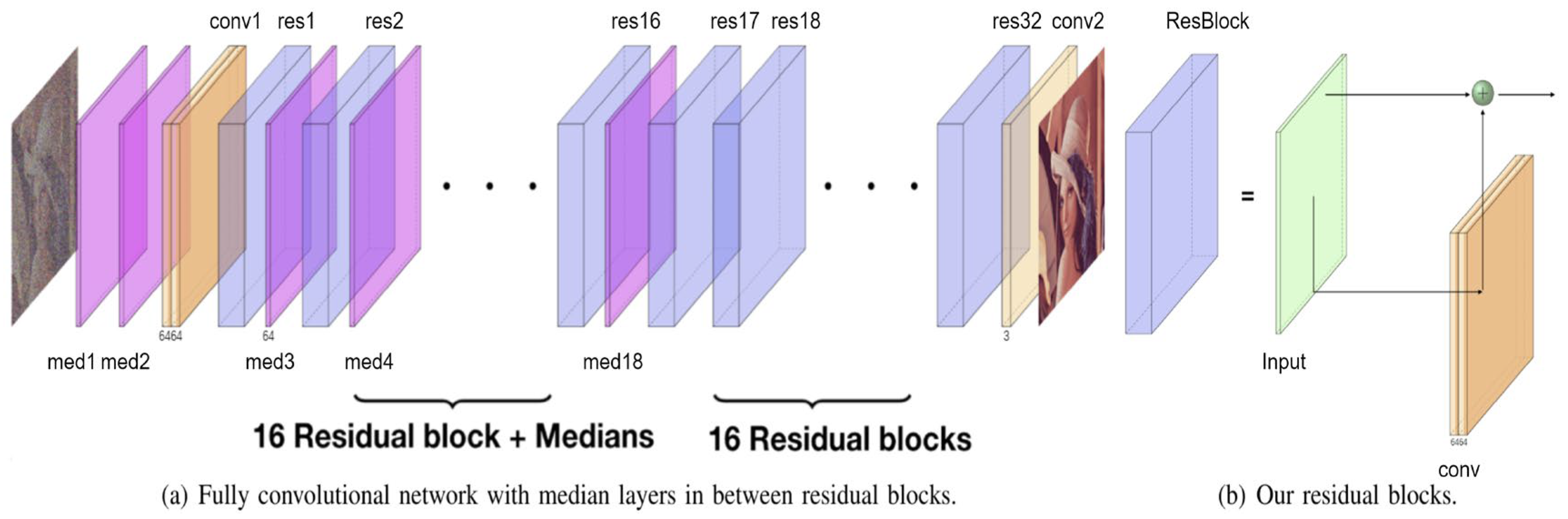

CNN with a Median Layer

Liang et al. [23] proposed this denoising model, which was primarily designed to remove salt-and-pepper noise, a type of impulsive noise. The denoising effect is achieved by combining the deep neural network model with a median filter, a conventional nonlinear filter that is particularly effective in removing impulsive noise by replacing the center pixel with the median value of a given window. The so-called median layer is defined as the application of the median filter with a moving window on each feature channel. For example, an RGB color input image corresponds to three feature channels, and there may be multiple sets of features after convolution. To apply the filter, patches of a specified size (e.g., 3 × 3 or 5 × 5) are extracted around each pixel of each channel, and the median of the elements in each patch forms a new sequence. The median layer is applied to each feature channel, and the results are then combined to create a new set of features. If the convolution generates 64 feature channels, the median layer will be applied 64 times.
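A median layer of this kind can be sketched with the standard library alone. The sketch assumes that each feature channel is a 2D list and that borders are handled by edge replication; the border rule is an assumption, since the text does not specify it.

```python
from statistics import median


def median_filter_2d(channel, size=3):
    """Replace each value with the median of its size x size neighborhood."""
    height, width = len(channel), len(channel[0])
    radius = size // 2
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Clamp indices so border pixels reuse the nearest valid value.
            window = [
                channel[min(max(y + dy, 0), height - 1)][min(max(x + dx, 0), width - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ]
            out[y][x] = median(window)
    return out


def median_layer(feature_maps, size=3):
    """Apply the median filter independently to every feature channel."""
    return [median_filter_2d(channel, size) for channel in feature_maps]


# Three channels of a flat 5 x 5 image with a single corrupted center pixel.
flat = [[10] * 5 for _ in range(5)]
attacked = [row[:] for row in flat]
attacked[2][2] = 255
restored = median_layer([attacked, flat, flat])
print(restored[0][2])
```

The isolated outlier is replaced by the value of its neighbors, which is the behavior that makes the median filter a natural fit for impulsive noise and single-pixel perturbations.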

As shown in Figure 7, this is a fully convolutional neural network (FCNN) in which the input data size is not restricted. Its architecture starts with two consecutive median layers, followed by a series of residual blocks. Median layers are interleaved between the residual blocks in the first half of the sequence, while the last part consists only of residual blocks. Each convolutional layer generates 64 features, and the residual blocks are designed as residual connections spanning two layers of 64-feature convolutions, followed by batch normalization layers and rectified linear unit (ReLU) activation functions, as shown in Figure 7b. This model uses the simple $L_2$ loss as the objective function, so the loss can be defined as the mean squared error between the estimated image and the ground truth image. This choice is made because minimizing the mean squared error is directly related to increasing the denoising performance metric, the peak signal-to-noise ratio (PSNR).
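The link between the mean squared error and PSNR follows from the standard definition PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value. The sketch below assumes pixel values normalized to [0, 1], so MAX = 1; the sample values are made up for illustration.

```python
import math


def mse(estimate, truth):
    """Mean squared error between two equally sized pixel sequences."""
    return sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth)


def psnr(estimate, truth, max_value=1.0):
    """Peak signal-to-noise ratio in decibels; infinite for a perfect match."""
    error = mse(estimate, truth)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


truth = [0.0, 0.5, 1.0, 0.25]
close_estimate = [0.1, 0.5, 0.9, 0.25]
far_estimate = [0.4, 0.5, 0.6, 0.25]

print(f"close: MSE = {mse(close_estimate, truth):.4f}, PSNR = {psnr(close_estimate, truth):.2f} dB")
print(f"far:   MSE = {mse(far_estimate, truth):.4f}, PSNR = {psnr(far_estimate, truth):.2f} dB")
```

Since PSNR is a decreasing function of the MSE, any reduction in the training loss translates directly into a higher PSNR.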