A Feasibility Study on Enhanced Mobility and Comfort: Wheelchairs Empowered by SSVEP BCI for Instant Noise Cancellation and Signal Processing in Assistive Technology

Abstract

1. Introduction

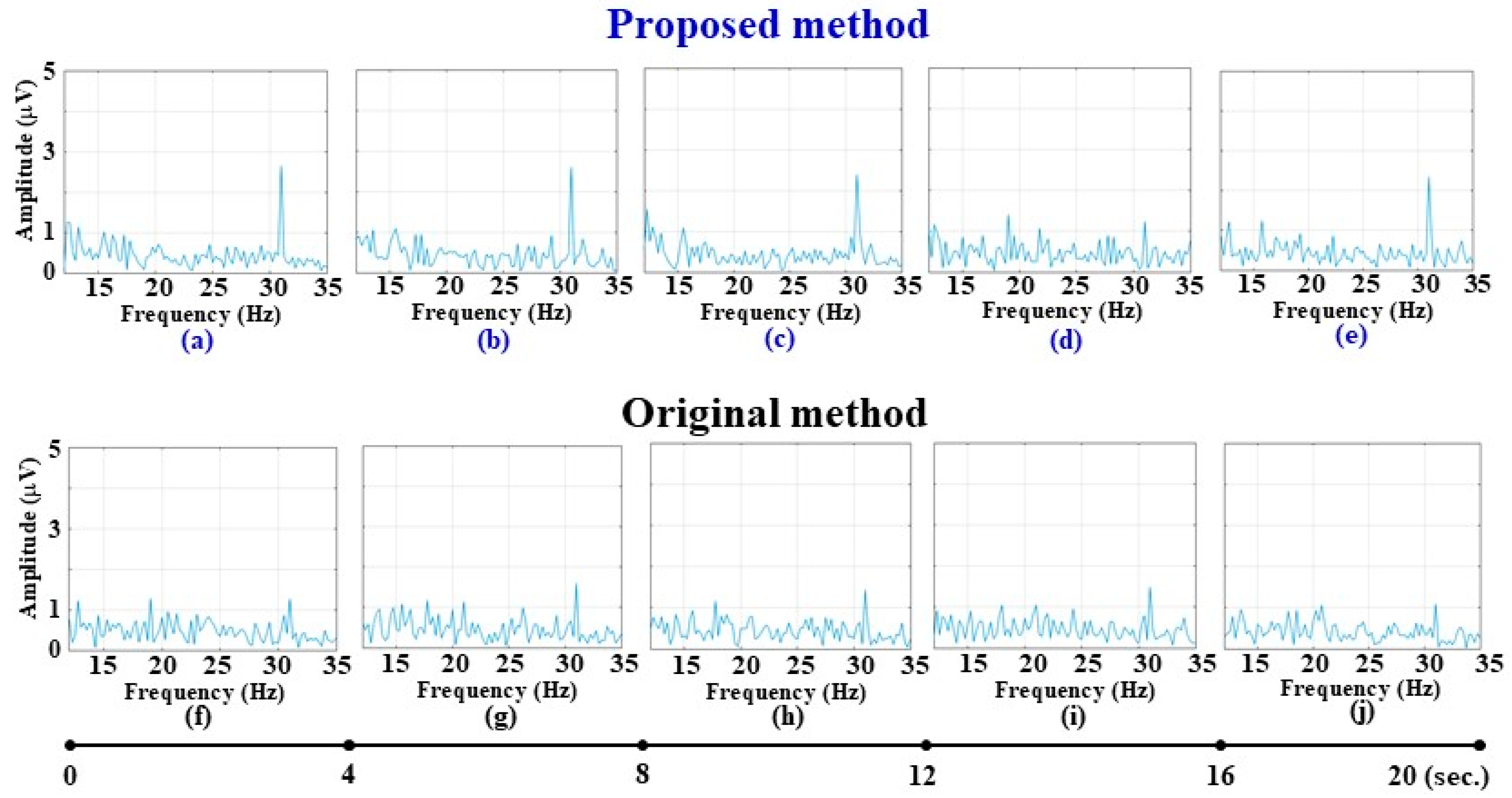

- Different control platforms were employed: an unmanned aerial vehicle was used in [36], whereas a wheelchair control system was implemented in this study.

- Unlike [36], which did not present the specific signal processing or classification algorithm, this study introduces an improved algorithm for SSVEP-based control.

2. Materials and Methods

2.1. Wheelchair Control System Based on SSVEP BCI

- Wi-Fi 6E offers features that make it well suited to real-time applications such as wireless wheelchair control. Its lower latency and higher bandwidth support responsive, precise navigation, while reduced interference and more stable signal transmission give the user continuous, reliable communication.

- Wi-Fi 6E also provides extended coverage, so users stay connected over larger distances in indoor and outdoor environments, which improves overall mobility. Where wireless usage is dense, improved interference management keeps control signals robust against surrounding electromagnetic noise. Enhanced security protocols protect the system from unauthorized access, safeguarding user privacy and operational safety.

- In this study, Wi-Fi 6E is also used to stream EEG/EOG data, GPS information, and user metrics to a cloud-based management platform for remote monitoring and data logging. Integration with the Internet of Things (IoT) lets electric wheelchairs interface with smart sensors, healthcare monitoring devices, and environmental control systems, supporting more personalized care. By providing a high-performance wireless infrastructure, Wi-Fi 6E also makes assistive mobility solutions more practical for individuals with physical impairments.
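To illustrate the data streaming described above, the following minimal Python sketch packages one record of EEG/EOG samples, GPS position, and the decoded command for upload. The source does not specify a message format; the `TelemetryRecord` type, its field names, and the JSON encoding are assumptions made for this example only.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class TelemetryRecord:
    """One upload unit (hypothetical layout, not taken from the study)."""
    timestamp_s: float    # time of the first sample, in seconds
    eeg_uv: List[float]   # EEG samples, microvolts
    eog_uv: List[float]   # EOG samples, microvolts
    latitude: float       # GPS latitude, degrees
    longitude: float      # GPS longitude, degrees
    command: str          # decoded command, e.g. "left" or "forward"


def encode_record(record: TelemetryRecord) -> bytes:
    """Serialize a record to compact UTF-8 JSON for transmission."""
    return json.dumps(asdict(record), separators=(",", ":")).encode("utf-8")


def decode_record(payload: bytes) -> TelemetryRecord:
    """Rebuild a record on the receiving (cloud) side."""
    return TelemetryRecord(**json.loads(payload.decode("utf-8")))
```

The transport itself (socket, MQTT, HTTP) is left out on purpose, since the source only states that Wi-Fi 6E carries the stream.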

2.2. Method

3. Experimental Results

3.1. Left Signal

3.2. Right Signal

3.3. Forward Signal

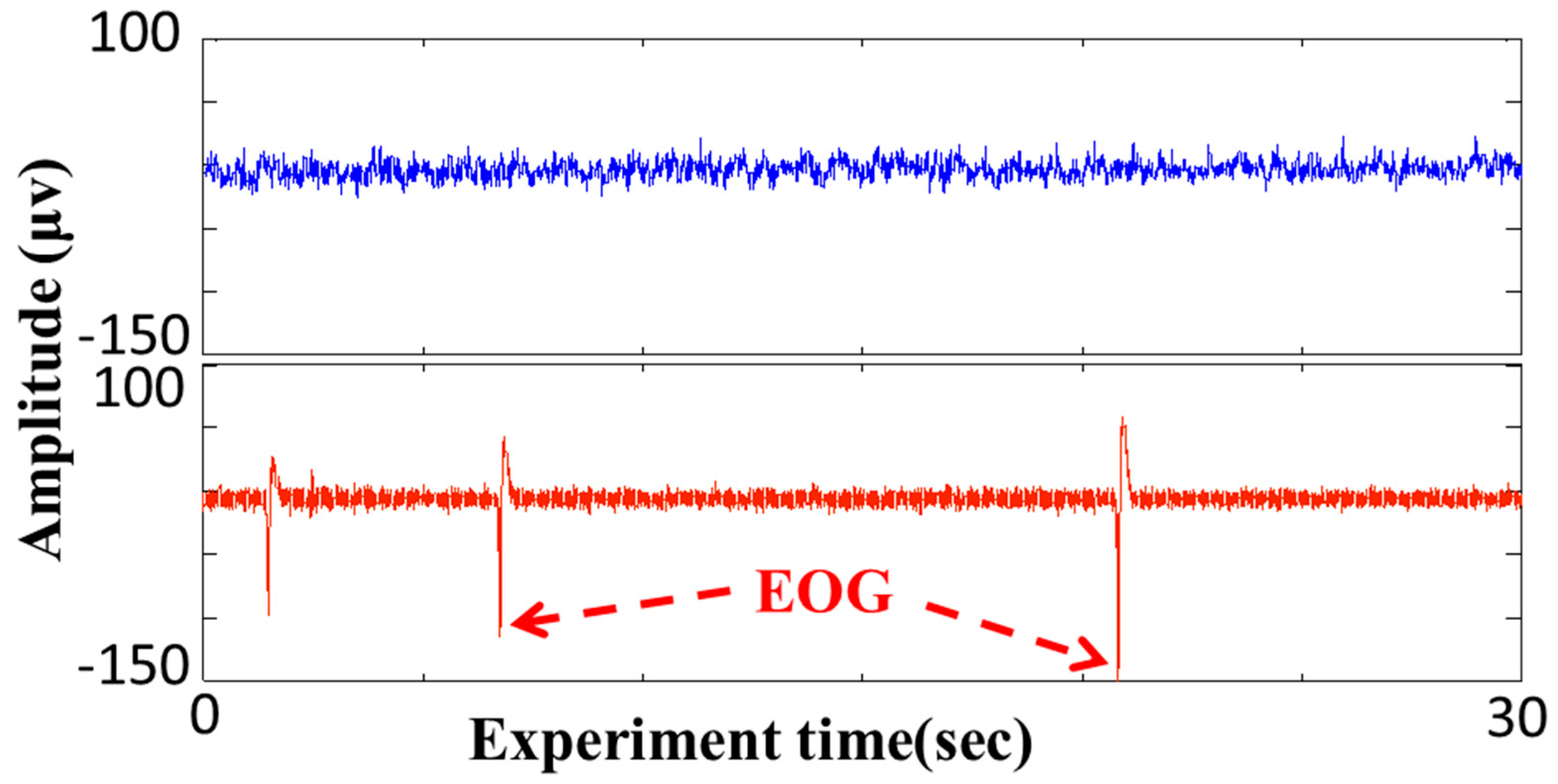

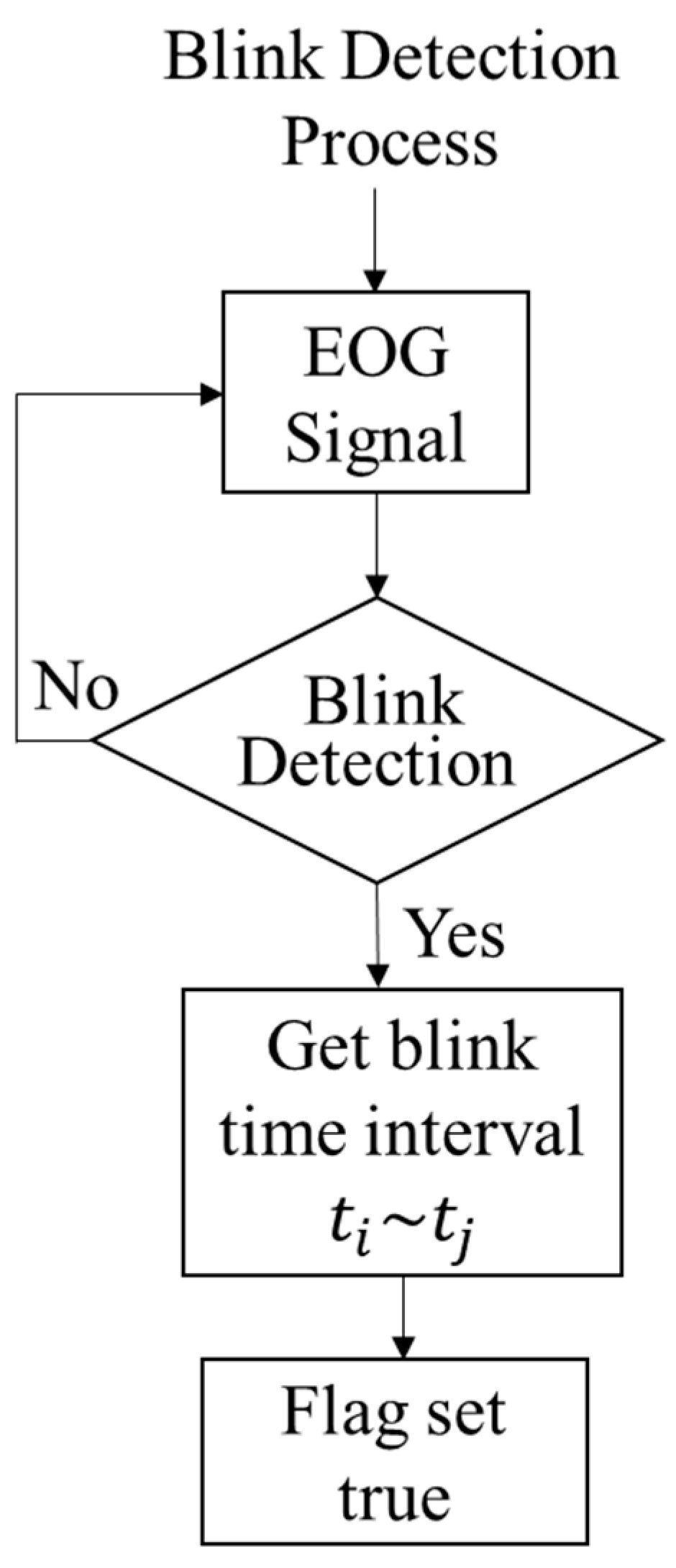

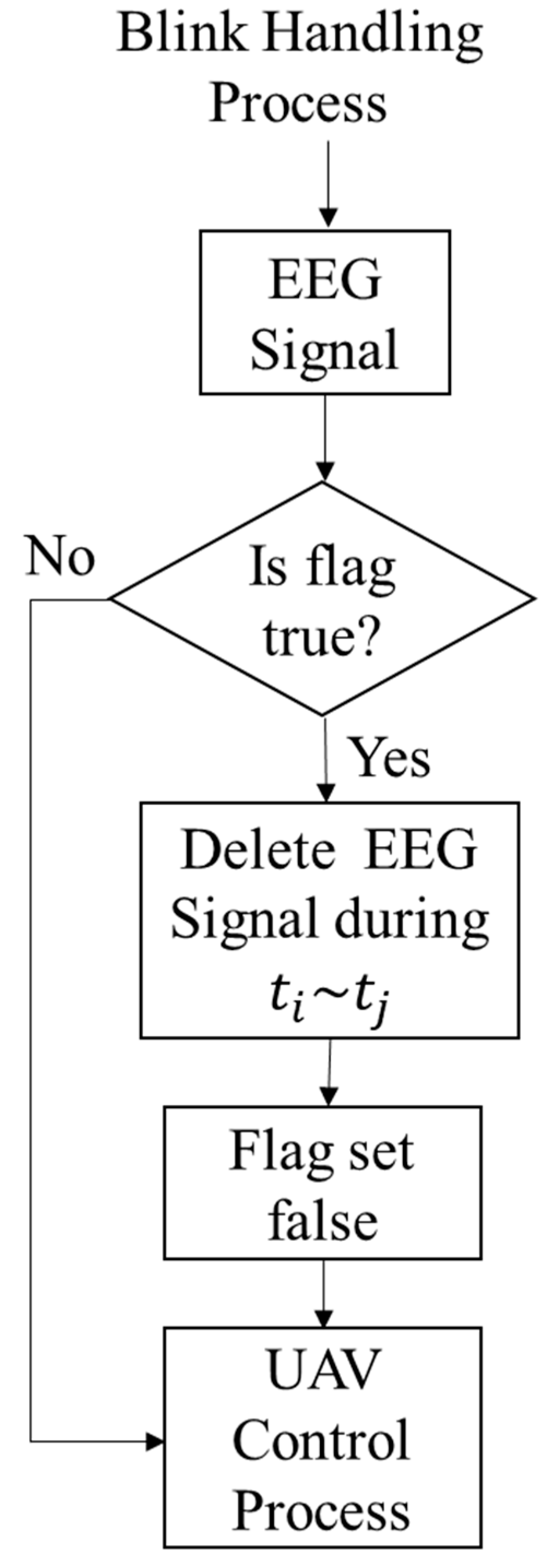

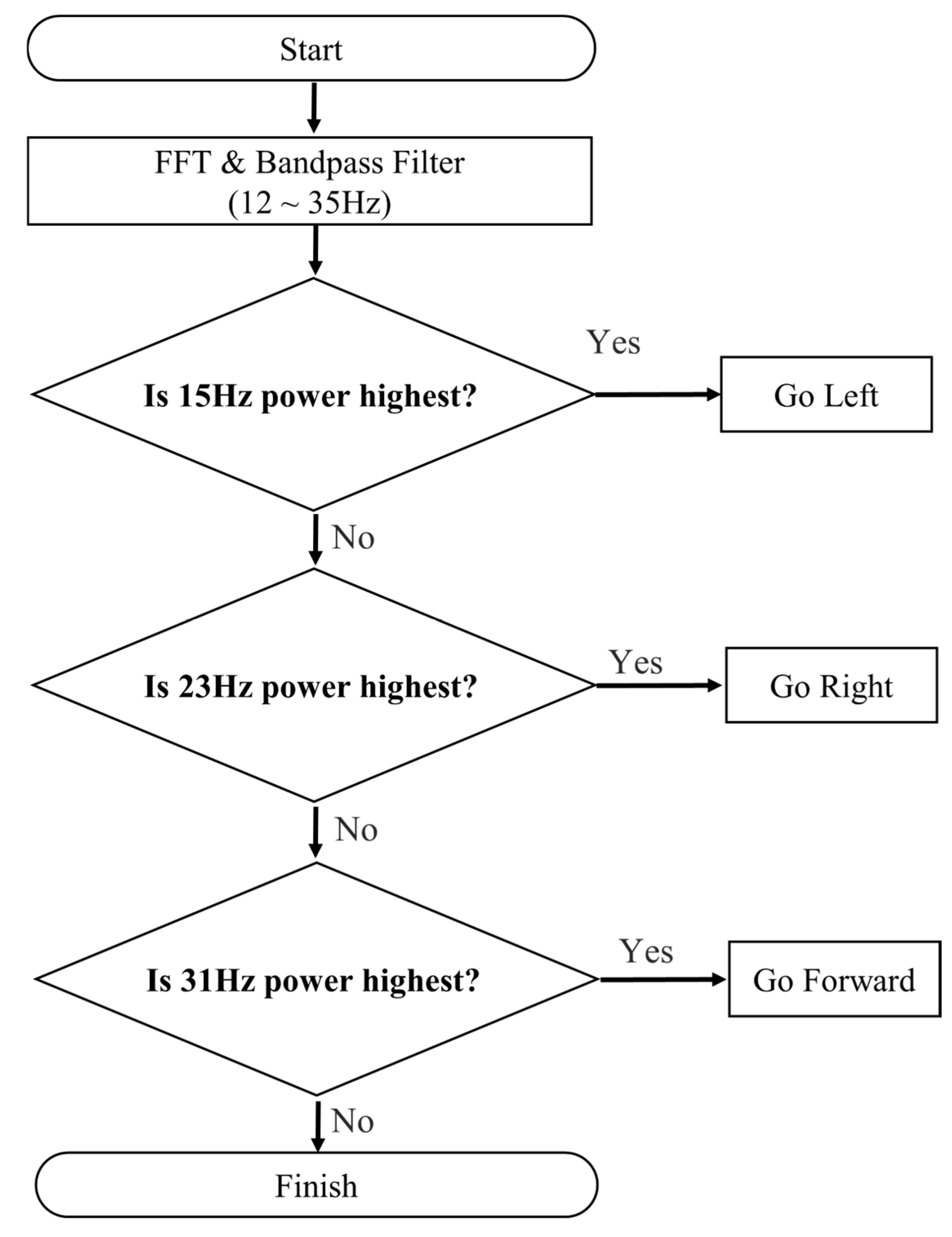

Algorithm 1 Blink Detection Process

    // procedure
    set variables: blink start time, blink stop time, flag, time series t;
    while (obtain time series t of EOG signal recording)
    {
        if (EOG has high amplitude) then  // blink occurs
        {
            save blink start time;
            save blink stop time;
            flag set true;
        }
        if (flag == true) then
        {
            delete EEG signal samples between blink start time and blink stop time;
            flag set false;
            execute FFT on the EEG signal;
            if (15 Hz power is the highest) then
            {
                Go Left;
            }
            else if (23 Hz power is the highest) then
            {
                Go Right;
            }
            else if (31 Hz power is the highest) then
            {
                Go Forward;
            }
            else
            {
                Do Nothing;
            }
        }
    }
    // end procedure
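The steps of Algorithm 1 can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation: the blink criterion is a simple amplitude threshold on the EOG channel, the spectrum is evaluated only at the three stimulus frequencies with a single-bin DFT rather than a full FFT, retained EEG samples keep their original time indices so that removing a blink does not break phase continuity, and "Do Nothing" is triggered by a hypothetical `min_power` floor. Unlike the pseudocode, the sketch classifies every window, whether or not a blink occurred. The sampling rate, thresholds, and function names are all assumptions.

```python
import cmath
import math

# Stimulus frequency (Hz) -> wheelchair command, as listed in Algorithm 1.
COMMANDS = {15.0: "left", 23.0: "right", 31.0: "forward"}


def bin_power(indexed_samples, freq_hz, fs_hz):
    """Normalized power of a single DFT bin evaluated at freq_hz.

    indexed_samples is a list of (sample_index, value) pairs, so gaps left
    by removed blink samples do not shift the time axis.
    """
    total = sum(
        value * cmath.exp(-2j * math.pi * freq_hz * index / fs_hz)
        for index, value in indexed_samples
    )
    return abs(total) ** 2 / len(indexed_samples) ** 2


def classify_window(eeg, eog, fs_hz, blink_threshold, min_power):
    """Return "left", "right", "forward", or "none" for one analysis window."""
    # Drop EEG samples recorded while the EOG amplitude indicates a blink.
    kept = [
        (i, x) for i, (x, e) in enumerate(zip(eeg, eog))
        if abs(e) <= blink_threshold
    ]
    if not kept:
        return "none"
    powers = {f: bin_power(kept, f, fs_hz) for f in COMMANDS}
    best = max(powers, key=powers.get)
    # Assumed rule for "Do Nothing": the strongest target is still too weak.
    return COMMANDS[best] if powers[best] >= min_power else "none"
```

For example, a 4 s window sampled at an assumed 250 Hz that contains a 23 Hz oscillation and a short high-amplitude EOG burst would be passed as `classify_window(eeg, eog, 250, blink_threshold, min_power)`, with both thresholds tuned per user.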

3.4. Comparison and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Carlson, T.; Millan, J. Brain-controlled wheelchair: A robotic architecture. IEEE Robot. Autom. Mag. 2013, 20, 65–73.

- Shaheen, S.; Umamakeswari, A. Intelligent wheelchair for people with disabilities. Int. J. Eng. Technol. 2013, 5, 391–397.

- Lin, J.; Yang, W. Wireless brain-computer interface for electric wheelchairs with EEG and eye-blinking signal. Int. J. Innov. Comput. Inf. Control 2012, 8, 6011–6024.

- Palumbo, A.; Gramigna, V.; Calabrese, B.; Ielpo, N. Motor-imagery EEG-based BCIs in wheelchairs movement and control: A systematic literature review. Sensors 2021, 21, 6285.

- Tiwari, P.; Choudhary, A.; Gupta, S.; Dhar, J.; Chanak, P. Sensitive brain-computer interface to help maneuver a miniature wheelchair using electroencephalography. In Proceedings of the 2020 IEEE International Students’ Conference on Electrical, Electronics and Computer Science (SCEECS), Bhopal, India, 22–23 February 2020.

- Barriuso, A.; Pérez-Marcos, J.; Paz, J. Agent-based intelligent interface for wheelchair movement control. Sensors 2018, 18, 1511.

- Nakanishi, M.; Tanji, Y.; Tanaka, T. Waveform-coded steady-state visual evoked potentials for brain-computer interfaces. IEEE Access 2021, 9, 144768–144775.

- Chen, W. Intelligent manufacturing production line data monitoring system for industrial Internet of things. Comput. Commun. 2020, 151, 31–41.

- TaghiBeyglou, B.; Bagheri, F. EOG artifact removal from single- and multi-channel EEG recordings through the combination of long short-term memory networks and independent component analysis. arXiv 2023, arXiv:2308.13371.

- Cruz, A.; Pires, G.; Lopes, A.; Carona, C.; Nunes, U. A self-paced BCI with a collaborative controller for highly reliable wheelchair driving: Experimental tests with physically disabled individuals. IEEE Trans. Hum.-Mach. Syst. 2021, 51, 109–119.

- Sherlock, I. How Little-Known Capabilities of Wi-Fi® 6 Help Connect IoT Devices with Confidence; Technical Article SSZTCX4; Texas Instruments: Dallas, TX, USA, 2023; Available online: https://www.ti.com/document-viewer/lit/html/SSZTCX4 (accessed on 22 October 2025).

- Mridha, M.; Chandra Das, S.; Kabir, M.; Lima, A.; Islam, M.; Watanobe, Y. Brain-computer interface: Advancement and challenges. Sensors 2021, 21, 5746.

- Bousseta, R.; El Ouakouak, I.; Gharbi, M.; Regragui, F. EEG based brain computer interface for controlling a robot arm movement through thought. IRBM 2018, 39, 129–135.

- Sun, Z.; Huang, Z.; Duan, F.; Liu, Y. A novel multimodal approach for hybrid brain–computer interface. IEEE Access 2020, 8, 89909–89918.

- Podmore, J.; Breckon, T.; Aznan, N.; Connolly, J. On the relative contribution of deep convolutional neural networks for SSVEP-based bio-signal decoding in BCI speller applications. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 611–618.

- Vaughan, T. Brain-computer interfaces for people with amyotrophic lateral sclerosis. Handb. Clin. Neurol. 2020, 168, 33–38.

- Borgheai, S.; McLinden, J.; Zisk, A.; Hosni, S.; Deligani, R.; Abtahi, M.; Mankodiya, K.; Shahriari, Y. Enhancing communication for people in late-stage ALS using an fNIRS-based BCI system. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1198–1207.

- Hosni, S.; Borgheai, S.; McLinden, J.; Shahriari, Y. An fNIRS-based motor imagery BCI for ALS: A subject-specific data-driven approach. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 3063–3073.

- Cajigas, I.; Vedantam, A. Brain-computer interface, neuromodulation, and neurorehabilitation strategies for spinal cord injury. Neurosurg. Clin. 2021, 32, 407–417.

- Chaudhary, U.; Birbaumer, N. Communication in locked-in state after brainstem stroke: A brain-computer-interface approach. Ann. Transl. Med. 2015, 3, S29.

- Norcia, A.M.; Appelbaum, L.G.; Ales, J.M.; Cottereau, B.R.; Rossion, B. The steady-state visual evoked potential in vision research: A review. J. Vis. 2015, 15, 4.

- Kołodziej, M.; Tarnowski, P.; Sawicki, D.; Majkowski, A.; Rak, R.; Bala, A.; Pluta, A. Fatigue detection caused by office work with the use of EOG signal. IEEE Sens. J. 2020, 20, 15213–15223.

- Lee, M.; Williamson, J.; Won, D.; Fazli, S.; Lee, S. A high performance spelling system based on EEG-EOG signals with visual feedback. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1443–1459.

- Zhang, W.; Li, Y.; Wei, K.; Zhang, Z. A two-port microstrip antenna with high isolation for Wi-Fi 6 and Wi-Fi 6E applications. IEEE Trans. Antennas Propag. 2022, 70, 5227–5234.

- Altan, G.; Kutlu, Y.; Allahverdi, N. Deep belief networks based brain activity classification using EEG from slow cortical potentials in stroke. Int. J. Appl. Math. Electron. Comput. 2016, 205–210.

- Meinel, A.; Castaño-Candamil, S.; Blankertz, B.; Lotte, F.; Tangermann, M. Characterizing regularization techniques for spatial filter optimization in oscillatory EEG regression problems. Neuroinformatics 2019, 17, 235–251.

- Shukla, P.; Chaurasiya, R.; Verma, S.; Sinha, G. A thresholding-free state detection approach for home appliance control using P300-based BCI. IEEE Sens. J. 2021, 21, 16927–16936.

- Oralhan, Z. A new paradigm for region-based P300 speller in brain computer interface. IEEE Access 2019, 7, 106618–106627.

- Li, M.; Yang, G.; Xu, G. The effect of the graphic structures of humanoid robot on N200 and P300 potentials. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1944–1954.

- Shuffrey, L.; Rodriguez, C.; Rodriguez, D.; Mahallati, H.; Jayaswal, M.; Barbosa, J.; Syme, S.; Gimenez, L.; Pini, N.; Lucchini, M.; et al. Delayed maturation of P2 flash visual evoked potential (VEP) latency in newborns of gestational diabetic mothers. Early Hum. Dev. 2021, 163, 105503.

- Yu, F.; Zhang, Y.; Luan, X.; Wu, Y. Clinical application of intracranial pressure monitoring based on flash visual evoked potential in treatment of patients with hypertensive intracerebral hemorrhage. Clin. Schizophr. Relat. Psychoses 2023, 17, 1–6.

- Peng, Y.; Wong, C.; Wang, Z.; Wan, F.; Vai, M.; Mak, P.; Hu, Y.; Rosa, A. Fatigue evaluation using multi-scale entropy of EEG in SSVEP-based BCI. IEEE Access 2019, 7, 108200–108210.

- Zhao, X.; Liu, C.; Xu, Z.; Zhang, L.; Zhang, R. SSVEP stimulus layout effect on accuracy of brain-computer interfaces in augmented reality glasses. IEEE Access 2020, 8, 5990–5998.

- Demir, A.; Arslan, H.; Uysal, I. Bio-inspired filter banks for frequency recognition of SSVEP-based brain–computer interfaces. IEEE Access 2019, 7, 160295–160303.

- Chang, C.T.; Huang, C.H. Novel method of multi-frequency flicker to stimulate SSVEP and frequency recognition. Biomed. Signal Process. Control 2022, 71, 103243.

- Chung, M.A.; Lin, C.W.; Chang, C.T. The human—Unmanned aerial vehicle system based on SSVEP—Brain computer interface. Electronics 2021, 10, 3025.

- Chang, C.T.; Huang, C. A novel method for the detection of VEP signals from frontal region. Int. J. Neurosci. 2017, 128, 520–529.

- Li, Y.; Xiang, J.; Kesavadas, T. Convolutional correlation analysis for enhancing the performance of SSVEP-based brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2681–2690.

- Zhang, Y.; Xie, S.; Wang, H.; Zhang, Z. Data analytics in steady-state visual evoked potential-based brain–computer interface: A review. IEEE Sens. J. 2021, 21, 1124–1138.

- Lin, B.; Wang, H.; Huang, Y.; Wang, Y.; Lin, B. Design of SSVEP enhancement-based brain computer interface. IEEE Sens. J. 2021, 21, 14330–14338.

- Shi, T.; Ren, L.; Cui, W. Feature extraction of brain–computer interface electroencephalogram based on motor imagery. IEEE Sens. J. 2020, 20, 11787–11794.

- Ferrero, L.; Ortiz, M.; Quiles, V.; Iáñez, E.; Azorín, J. Improving motor imagery of gait on a brain–computer interface by means of virtual reality: A case of study. IEEE Access 2021, 9, 49121–49130.

- Cheng, S.; Wang, J.; Zhang, L.; Wei, Q. Motion imagery-BCI based on EEG and eye movement data fusion. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2783–2793.

- Lin, Y.; Wang, Y.; Wei, C.; Jung, T. Assessing the quality of steady-state visual-evoked potentials for moving humans using a mobile electroencephalogram headset. Front. Hum. Neurosci. 2014, 8, 182.

- Barria, P.; Pino, A.; Tovar, N.; Gomez-Vargas, D.; Baleta, K.; Díaz, C.; Múnera, M.; Cifuentes, C. BCI-based control for ankle exoskeleton T-FLEX: Comparison of visual and haptic stimuli with stroke survivors. Sensors 2021, 21, 6431.

- Na, R.; Hu, C.; Sun, Y.; Wang, S.; Zhang, S.; Han, M.; Yin, W.; Zhang, Z.; Chen, X.; Zheng, D. An embedded lightweight SSVEP-BCI electric wheelchair with hybrid stimulator. Digit. Signal Process. 2021, 116, 103101.

- Chen, W.; Chen, S.; Liu, Y.; Chen, Y.; Chen, C. An electric wheelchair manipulating system using SSVEP-based BCI system. Biosensors 2022, 12, 772.

- Rivera-Flor, H.; Gurve, D.; Floriano, A.; Delisle-Rodriguez, D.; Mello, R.; Bastos-Filho, T. CCA-based compressive sensing for SSVEP-based brain-computer interfaces to command a robotic wheelchair. IEEE Trans. Instrum. Meas. 2022, 71, 4010510.

- Chen, J.; Zhang, Y.; Pan, Y.; Xu, P.; Guan, C. A transformer-based deep neural network model for SSVEP classification. Neural Netw. 2023, 164, 521–534.

- Wei, Q.; Li, C.; Wang, Y.; Gao, X. Enhancing the performance of SSVEP-based BCIs by combining task-related component analysis and deep neural network. Sci. Rep. 2025, 15, 365.

| Time Interval (s) | Subject A | Subject B | Subject C | Subject D | Subject E |
|---|---|---|---|---|---|
| 0–4 | on | on | on | on | on |
| 4–8 | on | on | on | on | on |
| 8–12 | on | on | on | on | on |
| 12–16 | on | on | on | on | on |
| 16–20 | on | on | on | on | on |

| Time Interval (s) | Subject A | Subject B | Subject C | Subject D | Subject E |
|---|---|---|---|---|---|
| 0–4 | on | on | on | on | on |
| 4–8 | on | off | on | on | on |
| 8–12 | on | on | on | on | on |
| 12–16 | on | on | on | on | on |
| 16–20 | off | on | on | off | on |

| Time Interval (s) | Subject A | Subject B | Subject C | Subject D | Subject E |
|---|---|---|---|---|---|
| 0–4 | on | on | on | on | on |
| 4–8 | on | on | on | on | on |
| 8–12 | on | on | on | on | on |
| 12–16 | on | on | on | on | on |
| 16–20 | on | on | on | on | on |

| Time Interval (s) | Subject A | Subject B | Subject C | Subject D | Subject E |
|---|---|---|---|---|---|
| 0–4 | on | on | on | on | on |
| 4–8 | on | on | off | on | on |
| 8–12 | on | on | on | off | off |
| 12–16 | on | on | on | on | on |
| 16–20 | off | off | on | on | on |

| Time Interval (s) | Subject A | Subject B | Subject C | Subject D | Subject E |
|---|---|---|---|---|---|
| 0–4 | on | on | on | on | on |
| 4–8 | on | on | on | off | on |
| 8–12 | off | off | on | off | on |
| 12–16 | on | on | on | on | on |
| 16–20 | on | on | on | on | off |

| Time Interval (s) | Subject A | Subject B | Subject C | Subject D | Subject E |
|---|---|---|---|---|---|
| 0–4 | on | on | on | on | on |
| 4–8 | on | on | off | on | off |
| 8–12 | off | off | on | on | off |
| 12–16 | on | on | on | off | on |
| 16–20 | on | on | off | on | on |

| Frequency (Hz) | Blink Handling | Subject A | Subject B | Subject C | Subject D | Subject E |
|---|---|---|---|---|---|---|
| 15 | Yes | 100% | 100% | 100% | 100% | 100% |
| 15 | No | 80% | 80% | 100% | 80% | 100% |
| 23 | Yes | 100% | 100% | 100% | 100% | 100% |
| 23 | No | 80% | 80% | 80% | 80% | 80% |
| 31 | Yes | 80% | 80% | 100% | 80% | 80% |
| 31 | No | 80% | 80% | 60% | 80% | 60% |
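The percentages above are multiples of 20%, which is consistent with each value being the share of five 4 s intervals in which the command was detected ("on"). The source does not state this rule explicitly, so the short Python sketch below treats it as an assumption; the function name `interval_accuracy` is hypothetical.

```python
def interval_accuracy(outcomes):
    """Percentage of intervals marked "on" (assumed scoring rule)."""
    if not outcomes:
        raise ValueError("at least one interval is required")
    hits = sum(1 for outcome in outcomes if outcome == "on")
    return 100.0 * hits / len(outcomes)


# One subject with a single missed interval out of five.
print(interval_accuracy(["on", "off", "on", "on", "on"]))  # 80.0
```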

| Frequency (Hz) | Noise Handling | Average |
|---|---|---|
| 15 | Yes | 100% |
| 15 | No | 88% |
| 23 | Yes | 100% |
| 23 | No | 80% |
| 31 | Yes | 84% |
| 31 | No | 72% |
| Total | Yes | 94.68% |
| Total | No | 80% |
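The averages can be checked from the per-subject accuracies reported for subjects A to E. The Python sketch below assumes unweighted means across subjects and then across the three frequencies; the dictionary layout and function names are illustrative. Under that assumption the overall mean with noise handling comes out at about 94.67%, close to the reported total, and the gain over the 80% baseline is about 14.67 percentage points.

```python
from statistics import mean

# Per-subject accuracies (%) for subjects A-E, keyed by (frequency in Hz, handling).
ACCURACY = {
    (15, "yes"): [100, 100, 100, 100, 100],
    (15, "no"): [80, 80, 100, 80, 100],
    (23, "yes"): [100, 100, 100, 100, 100],
    (23, "no"): [80, 80, 80, 80, 80],
    (31, "yes"): [80, 80, 100, 80, 80],
    (31, "no"): [80, 80, 60, 80, 60],
}


def condition_averages(table):
    """Unweighted mean across subjects for each (frequency, handling) pair."""
    return {key: mean(values) for key, values in table.items()}


def overall_average(table, handling):
    """Unweighted mean of the per-frequency averages for one handling mode."""
    averages = condition_averages(table)
    return mean(v for (_, mode), v in averages.items() if mode == handling)


with_handling = overall_average(ACCURACY, "yes")
without_handling = overall_average(ACCURACY, "no")
print(round(with_handling, 2), without_handling)
print(round(with_handling - without_handling, 2))
```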

| Literature | [37] | [45] | [46] | [47] | [48] | This study |
|---|---|---|---|---|---|---|
| Accuracy (%) | ~85 | ~80 | >90 | 95+ | Not reported | 94.69 |
| ITR (bits/min) | 18.3 | N/A | 25.7 | 31.2 | N/A | 26.8 |
| Decision Window (s) | 4.0 | 5.0 | 3.0 | 2.0 | Not reported | 4.0 |
| EEG Channels | 8 | 8 | 32 | 64 | Not reported | 1 |
| Stimulus Frequency/Modulation | 13–31 Hz, sinusoidal flicker | 8–12 Hz, phase-coded | 10–15 Hz, code-modulated | 8–15 Hz, transformer-based decoding | Not reported | 15, 23, 31 Hz, sinusoidal modulation |
| Noise processing (EOG/Blink) | Limited EOG processing, primarily SSVEP-focused | Basic blink detection; lacks in-depth EOG handling | Does not address blink/EOG noise | No blink or EOG handling mentioned | No blink or EOG handling mentioned | Real-time blink detection and EOG-based noise elimination with 14.68% accuracy improvement |
| Classification accuracy and low-energy performance | Decent accuracy (about 85%), no explicit mention of low-energy signal optimization | Moderate accuracy (~80%), with limited energy optimization focus | High accuracy via CCA-based compressive sensing, no energy discussion | Very high accuracy with transformer-based deep learning model | No blink or EOG handling mentioned | 94.69% classification accuracy with low-energy SSVEP signals (15/23/31 Hz) |
| Communication integration | Uses basic Bluetooth transmission | Local communication; lacks advanced network integration | Focus on compressive signal coding; no real-time wireless communication described | Not the main focus; local analysis assumed | No blink or EOG handling mentioned | Wi-Fi 6E integration for GPS/cloud monitoring and real-time command streaming |
| BCI edge processing | Some signal processing at embedded MCU level | Minimal on-device processing, mostly centralized | Signal encoding optimized, unclear if fully edge-executed | Cloud/PC-based model inference, not embedded | No blink or EOG handling mentioned | Integrated FFT processing and signal correction on wheelchair’s control unit |
| Clinical applicability | Tested with disabled users in lab trials | Basic prototype validation | No clinical validation reported | No clinical or hardware integration discussed | No blink or EOG handling mentioned | Validated on healthy volunteers with realistic settings, includes video streaming for bedridden support |
| Innovation in technology integration | Integrates BCI with wheelchair control, but lacks real-time blink adaptation | Embedded stimulator design but lacks hybrid multi-modal input innovation | Novel compressive sensing technique applied to SSVEP decoding | State-of-the-art transformer classification; high model complexity | No blink or EOG handling mentioned | Combines SSVEP, EOG blink filtering, FFT, and cloud/GPS for novel assistive architecture |
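The comparison reports an information transfer rate (ITR) in bits/min alongside accuracy and decision window, but the source does not state how these values were computed. For orientation only, the Python sketch below implements the widely used Wolpaw definition, which combines the number of selectable targets, the classification accuracy, and the time per selection. The function name and the choice of target count are assumptions, and the result is not claimed to reproduce the figures in the table.

```python
import math


def wolpaw_itr(n_targets, accuracy, window_s):
    """Wolpaw ITR in bits/min for n_targets, accuracy in [0, 1], window in s."""
    if n_targets < 2:
        raise ValueError("n_targets must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    bits = math.log2(n_targets)
    # The p*log2(p) terms tend to zero at p = 0 and p = 1, so skip them there.
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits * 60.0 / window_s
```

A call such as `wolpaw_itr(3, 0.95, 4.0)` would treat the three stimulus frequencies as the target set; counting "Do Nothing" as a fourth class changes the result, which is one reason reported ITR values are hard to compare across studies.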

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, C.-T.; Pai, K.-J.; Chung, M.-A.; Lin, C.-W. A Feasibility Study on Enhanced Mobility and Comfort: Wheelchairs Empowered by SSVEP BCI for Instant Noise Cancellation and Signal Processing in Assistive Technology. Electronics 2025, 14, 4338. https://doi.org/10.3390/electronics14214338
