A-WHO: Stagnation-Based Adaptive Metaheuristic for Cloud Task Scheduling Resilient to DDoS Attacks

Abstract

1. Introduction

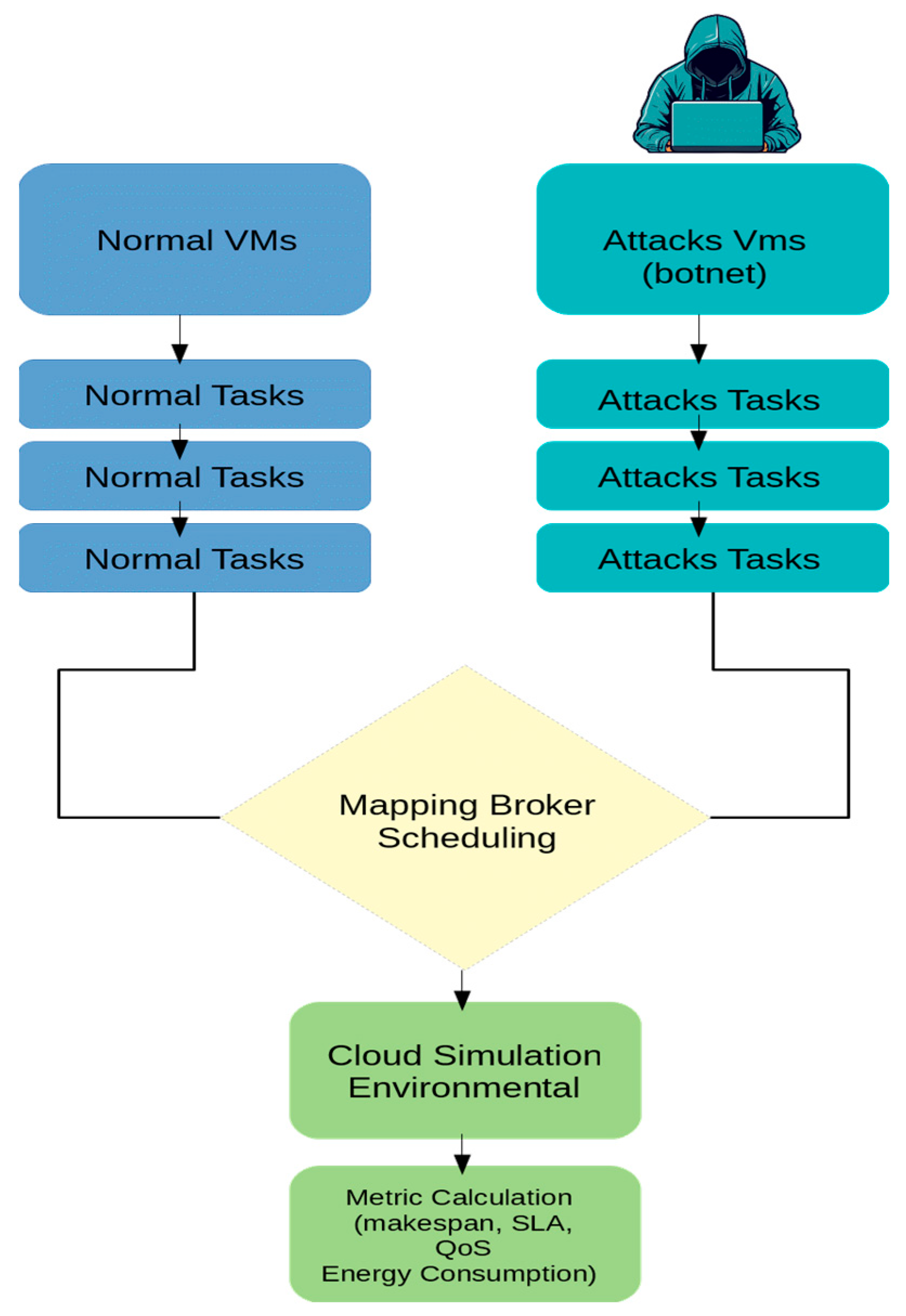

- Comparative Performance Analysis: We conduct systematic evaluations in the CloudSim environment, analyzing the performance of the Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Artificial Bee Colony (ABC), Wild Horse Optimizer (WHO), Crow Search Optimization Algorithm (CSOA), and Sine–Cosine Algorithm (SCA) under both normal and DDoS conditions, using a multidimensional set of performance indicators.

- Enhanced WHO Algorithm: We introduce Adaptive-WHO (A-WHO), an improved version of the standard WHO algorithm that integrates a stagnation-aware adaptive diversity mechanism. This mechanism reduces the risk of premature convergence and thereby improves scheduling effectiveness under both normal and adversarial conditions.

- Realistic DDoS Attack Modeling: We construct a DDoS attack scenario that integrates malicious VMs and adversarial cloudlets, enabling a thorough assessment of the algorithms’ resilience against service degradation and resource exhaustion.

2. Related Work

3. Materials and Method

3.1. Task Scheduling Formulation and System Modeling

3.1.1. Makespan

3.1.2. Service Level Agreement (SLA) Compliance

3.1.3. Energy Consumption

3.1.4. Quality of Service (QoS) Metrics

- Execution Time (ET): The actual processing time during which a task is actively executed on the CPU.

- Response Time (RT): The total time elapsed from the moment the user submits a task until its execution completes.
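As a minimal illustration of the two definitions above, the sketch below derives ET and RT from per-task timestamps. The record type and its field names (`TaskRecord`, `submit_time`, `start_time`, `finish_time`) and the helper `average_qos` are hypothetical, not taken from the authors' CloudSim code; ET is taken as finish minus start, and RT as finish minus submission.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    """Hypothetical per-task timestamps (seconds of simulated time)."""
    submit_time: float  # moment the user submits the task
    start_time: float   # moment the task starts executing on a VM's CPU
    finish_time: float  # moment the task completes

    @property
    def execution_time(self) -> float:
        # ET: time during which the task is actively executed on the CPU
        return self.finish_time - self.start_time

    @property
    def response_time(self) -> float:
        # RT: time from submission until execution completes (includes queueing)
        return self.finish_time - self.submit_time


def average_qos(tasks: list[TaskRecord]) -> tuple[float, float]:
    """Return (mean ET, mean RT) over a batch of tasks."""
    return (mean(t.execution_time for t in tasks),
            mean(t.response_time for t in tasks))


tasks = [TaskRecord(0.0, 120.0, 122.5), TaskRecord(0.0, 10.0, 13.5)]
print(average_qos(tasks))  # (3.0, 68.0)
```

Because RT also counts the time a task waits before it starts, RT is at least as large as ET for every task, which is why the response-time columns in the results are much larger than the execution-time columns.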

3.1.5. Resource Utilization

3.2. Applied Metaheuristic Algorithms

3.2.1. Wild Horse Optimizer Algorithm

3.2.2. Genetic Algorithm

3.2.3. Particle Swarm Optimization

3.2.4. Artificial Bee Colony Algorithm

- Employed bees explore the solution space based on existing information,

- Onlooker bees evaluate promising regions by utilizing shared information,

- Scout bees search for new solutions in unexplored areas.
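The three bee roles above can be illustrated with a minimal ABC sketch for continuous minimization. It follows one common formulation: the standard neighbor search v_ij = x_ij + φ(x_ij − x_kj) with φ ∈ [−1, 1], greedy selection, and abandonment after `limit` failed trials. The onlooker probability 0.9 · fit + 0.1, the limit D × food sources, and the [−1, 1] perturbation factor echo the parameter table; normalizing fitness by its maximum, the function name `abc_minimize`, and the toy objective are assumptions, and the mapping to task scheduling is not shown.

```python
import random


def abc_minimize(f, dim, lb, ub, food_sources=37, cycles=100, seed=None):
    """Minimal Artificial Bee Colony sketch minimizing f on [lb, ub]^dim."""
    rng = random.Random(seed)
    limit = dim * food_sources  # abandonment limit: D x food sources
    foods = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(food_sources)]
    costs = [f(x) for x in foods]
    trials = [0] * food_sources

    def fitness(c):
        # usual ABC fitness transform of a minimization cost
        return 1.0 / (1.0 + c) if c >= 0 else 1.0 + abs(c)

    def try_neighbor(i):
        # perturb one dimension relative to a random partner, phi in [-1, 1]
        k = rng.choice([m for m in range(food_sources) if m != i])
        j = rng.randrange(dim)
        cand = list(foods[i])
        phi = rng.uniform(-1, 1)
        cand[j] = min(max(cand[j] + phi * (cand[j] - foods[k][j]), lb), ub)
        c = f(cand)
        if c < costs[i]:  # greedy selection: keep the better source
            foods[i], costs[i], trials[i] = cand, c, 0
        else:
            trials[i] += 1

    best = min(range(food_sources), key=costs.__getitem__)
    best_x, best_c = list(foods[best]), costs[best]
    history = []
    for _ in range(cycles):
        # employed bees: one local search around every food source
        for i in range(food_sources):
            try_neighbor(i)
        # onlooker bees: revisit sources with probability 0.9 * fit / max_fit + 0.1
        fits = [fitness(c) for c in costs]
        max_fit = max(fits)
        for i in range(food_sources):
            if rng.random() < 0.9 * fits[i] / max_fit + 0.1:
                try_neighbor(i)
        # record the best source before scouts may abandon it
        i_best = min(range(food_sources), key=costs.__getitem__)
        if costs[i_best] < best_c:
            best_x, best_c = list(foods[i_best]), costs[i_best]
        # scout bees: replace exhausted sources with random new ones
        for i in range(food_sources):
            if trials[i] > limit:
                foods[i] = [rng.uniform(lb, ub) for _ in range(dim)]
                costs[i] = f(foods[i])
                trials[i] = 0
        history.append(best_c)
    return best_x, best_c, history


def sphere(x):
    """Toy objective with its minimum 0 at the origin."""
    return sum(v * v for v in x)


best_x, best_c, history = abc_minimize(sphere, dim=5, lb=-5.0, ub=5.0,
                                       food_sources=20, cycles=50, seed=1)
print(f"best cost after {len(history)} cycles: {best_c:.4f}")
```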

3.2.5. Crow Search Optimization Algorithm

3.2.6. Sine–Cosine Algorithm

3.2.7. Proposed Adaptive Wild Horse Optimizer

- Stagnation Detection and Intervention: A stagnation counter tracks consecutive iterations in which the best fitness value does not improve by more than a tolerance. When the counter reaches a threshold set according to the evaluation budget (the maximum number of function evaluations), random perturbations are introduced to increase population diversity and escape local optima.

- Diversity-based Movements: When population diversity decreases, the Water Hole and Escape Move components of WHO are adaptively expanded, encouraging the exploration of new regions in the search space.

- Parameter Stabilization: Experimental tuning set the Stallion Percentage to PS = 0.15 and the Escape Probability to 0.18 (compared with 0.2 and 0.1 in standard WHO), reducing parameter sensitivity and yielding a more stable convergence process.

- Convergence Monitoring and Analysis: The best fitness value at each iteration is recorded to identify stagnation phases and analyze the adaptive behavior of the algorithm objectively.

| Algorithm 1. A-WHO with Stagnation-Aware Augmentation |

|---|

| Input: population X, maxIter, ε (improvement tolerance), τ (stagnation threshold), γ (perturbation gain), λ (escape gain) |

| Initialize population X |

| Evaluate f(X); determine leader L and f*_last |

| s ← 0 // stagnation counter |

| for t = 1 … maxIter do |

| Evaluate population; update leader L and f*_t |

| if \|f*_t − f*_last\| < ε then |

| s ← s + 1 |

| else |

| s ← 0 |

| f*_last ← f*_t |

| end if |

| δ ← s / τ |

| if s ≥ τ then // adaptive perturbation (Equation (2)) |

| R_base ← Uniform(−2, 2) |

| noise ← Gaussian(0, 1) |

| R ← R_base + γ · δ · noise |

| else // standard WHO behavior |

| R ← Uniform(−2, 2) |

| end if |

| for each horse i do |

| if i = leader then // Water Hole update with perturbed R |

| X_i ← X_i + R · rand() · (Lb − Ub) |

| else // escape dynamics (Equation (3)) |

| X_i ← X_i + rand() · (1 + λ · δ) · (X_i − L) |

| end if |

| end for |

| end for |

| return best solution found |
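To make Algorithm 1 concrete, the following Python sketch is a literal reading of its stagnation-aware steps on a toy scheduling instance. Several details are assumptions rather than the authors' implementation: positions are clipped to [Lb, Ub] (Algorithm 1 does not say how bounds are handled), rand() is drawn per dimension, the grazing and mating operators of standard WHO are omitted, and the hypothetical `makespan` helper decodes a position by running task j on VM ⌊x_j⌋. The values of ε, γ and λ are placeholders; τ = 10 mirrors the stagnation threshold listed for the 10-iteration setting.

```python
import random


def makespan(position, lengths, mips):
    """Hypothetical decoding: task j is assigned to VM int(position[j])."""
    load = [0.0] * len(mips)
    for x, length in zip(position, lengths):
        vm = min(int(x), len(mips) - 1)
        load[vm] += length / mips[vm]  # execution time of the task on that VM
    return max(load)


def a_who_augmentation(fitness, dim, lb, ub, pop_size=75, max_iter=50,
                       eps=1e-6, tau=30, gamma=0.5, lam=0.5, seed=None):
    """Literal sketch of the stagnation-aware steps of Algorithm 1.

    Assumptions: positions are clipped to [lb, ub], rand() is drawn per
    dimension, and WHO's grazing/mating steps are omitted.
    """
    rng = random.Random(seed)

    def clip(v):
        return min(max(v, lb), ub)

    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop_size)]
    scores = [fitness(x) for x in X]
    leader = min(range(pop_size), key=scores.__getitem__)
    f_last = scores[leader]
    best_x, best_f = list(X[leader]), scores[leader]
    s = 0  # stagnation counter
    history = []
    for _ in range(max_iter):
        scores = [fitness(x) for x in X]
        leader = min(range(pop_size), key=scores.__getitem__)
        f_t = scores[leader]
        if f_t < best_f:
            best_x, best_f = list(X[leader]), f_t
        if abs(f_t - f_last) < eps:
            s += 1            # no meaningful change: stagnating
        else:
            s = 0             # progress: reset counter and reference value
            f_last = f_t
        delta = s / tau
        R = rng.uniform(-2, 2)
        if s >= tau:          # adaptive perturbation (Equation (2))
            R += gamma * delta * rng.gauss(0, 1)
        L = list(X[leader])   # freeze the leader before anyone moves
        for i in range(pop_size):
            if i == leader:   # Water Hole update with (possibly perturbed) R
                X[i] = [clip(x + R * rng.random() * (lb - ub)) for x in X[i]]
            else:             # escape dynamics (Equation (3)), scaled by 1 + lam*delta
                X[i] = [clip(x + rng.random() * (1 + lam * delta) * (x - l))
                        for x, l in zip(X[i], L)]
        history.append(best_f)
    return best_x, best_f, history


rng = random.Random(1)
lengths = [rng.uniform(1000, 2000) for _ in range(20)]  # cloudlet lengths in MI
mips = [rng.uniform(100, 1000) for _ in range(5)]        # VM MIPS range 100-1000
best_x, best_f, history = a_who_augmentation(
    lambda p: makespan(p, lengths, mips), dim=20, lb=0.0, ub=5.0,
    max_iter=30, tau=10, seed=42)
print(round(best_f, 2))
```

Because the escape step pushes non-leader horses away from L, the sketch keeps the best-so-far solution separately; the returned `history` is therefore non-increasing and corresponds to the per-iteration best-fitness record described under Convergence Monitoring and Analysis.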

3.2.8. DDoS Attack Simulation Methodology

- CPU Consumption: High-complexity workloads exceeding 1,000,000 MI to overload processing units.

- I/O Load: Input/output operations with 10,000 MB data blocks to create intensive storage and network traffic.

- Continuous Traffic: A full utilization model to guarantee persistent and uninterrupted attack traffic.
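The attack configuration above, together with the DDoS parameters in the simulation table (attack VMs = 20% of the VM count at 1000 MIPS, attack cloudlets = 50% of the cloudlet count with lengths above 1,000,000 MI), can be sketched as a simple workload generator. This is not the authors' CloudSim code: the classes, the assumption that malicious VMs and cloudlets are added on top of the legitimate ones, and the 1,500,000 MI upper bound on attack lengths are all hypothetical. Continuous traffic is not modeled here; in CloudSim it would correspond to giving the attack cloudlets a full utilization model such as `UtilizationModelFull`.

```python
import random
from dataclasses import dataclass


@dataclass
class Cloudlet:
    length_mi: float     # workload length in million instructions (MI)
    file_size_mb: float  # input data size in MB
    malicious: bool


@dataclass
class Vm:
    mips: float
    malicious: bool


def build_workload(n_vms=10, n_cloudlets=1000, attack=True, seed=None):
    """Hypothetical normal/DDoS workload generator."""
    rng = random.Random(seed)
    # legitimate VMs (100-1000 MIPS) and cloudlets (1000-2000 MI, 300 MB)
    vms = [Vm(rng.uniform(100, 1000), False) for _ in range(n_vms)]
    cloudlets = [Cloudlet(rng.uniform(1000, 2000), 300, False)
                 for _ in range(n_cloudlets)]
    if attack:
        # attack VMs: 20% of the VM count, each at 1000 MIPS
        vms += [Vm(1000, True) for _ in range(round(0.2 * n_vms))]
        # attack cloudlets: 50% of the cloudlet count, >= 1,000,000 MI with
        # 10,000 MB I/O blocks; the 1.5M MI upper bound is an assumption
        cloudlets += [Cloudlet(rng.uniform(1_000_000, 1_500_000), 10_000, True)
                      for _ in range(round(0.5 * n_cloudlets))]
    return vms, cloudlets


vms, cloudlets = build_workload(seed=7)
print(sum(v.malicious for v in vms), sum(c.malicious for c in cloudlets))  # 2 500
```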

3.3. Simulation Environment

4. Experimental Results

4.1. Performance Evaluation and Metric-Based Analysis

- At the 10-iteration level, CSOA (47.58 s) and ABC (47.53 s) achieved the shortest runtimes, whereas GA (9 min 33 s) required the longest. Under DDoS attacks, runtimes increased for all algorithms, with GA (15 min 41 s) showing the largest increase.

- At the 20-iteration level, ABC (1 min 29 s) and CSOA (1 min 51 s) again delivered the lowest runtimes, while GA (39 min 56 s) had the highest. DDoS attacks increased runtimes for all algorithms except SCA at this level, with GA (51 min 19 s) again the most affected.

- At the 50-iteration level, runtimes grew substantially, with ABC (5 min 40 s) achieving the shortest runtime and GA (3 h 57 min) the longest. Under DDoS conditions, GA (3 h 45 min) again had the longest runtime; however, unlike at the lower iteration levels, the DDoS runtimes of GA, SCA, and ABC were shorter than their normal-environment runtimes, so this level does not show an attack-induced slowdown for these algorithms.
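The percentage changes behind these comparisons can be checked directly from the runtime tables. A minimal sketch, assuming every entry is a well-formed hh:mm:ss.ss string; the helper names are hypothetical:

```python
def to_seconds(hms: str) -> float:
    """Convert an 'hh:mm:ss.ss' runtime string into seconds."""
    hours, minutes, seconds = hms.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def ddos_increase_pct(normal: str, ddos: str) -> float:
    """Relative runtime change under DDoS, in percent (negative = faster)."""
    t_normal, t_ddos = to_seconds(normal), to_seconds(ddos)
    return 100.0 * (t_ddos - t_normal) / t_normal


# 10-iteration runtimes taken from the runtime table
print(round(ddos_increase_pct("00:09:33.89", "00:15:41.95"), 1))  # GA: 64.1
print(round(ddos_increase_pct("00:00:53.03", "00:01:02.77"), 1))  # A-WHO: 18.4
```

With these values GA's 10-iteration runtime grows by about 64% under DDoS versus about 18% for A-WHO, while the same helper returns a negative change for ABC at 50 iterations (00:05:40.86 versus 00:05:36.26).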

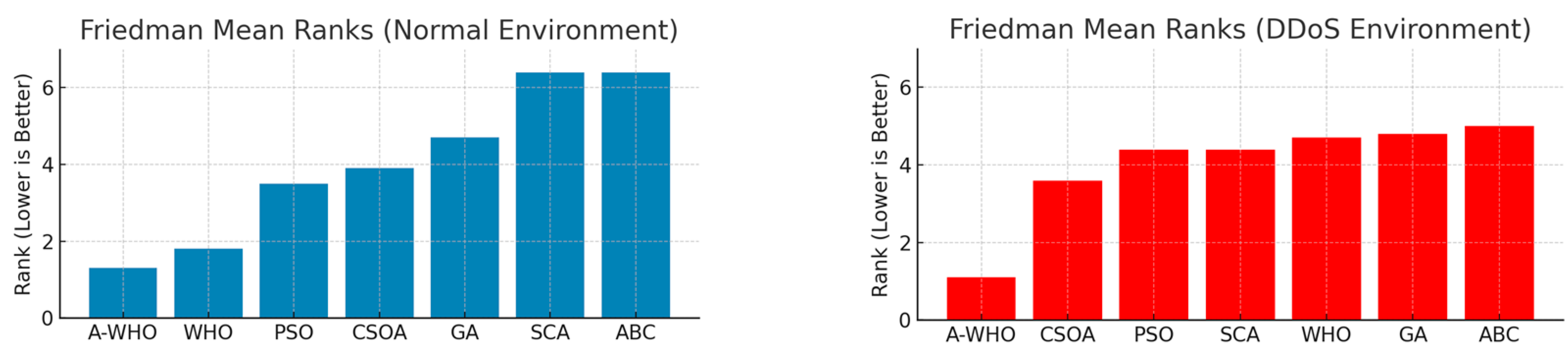

4.2. Statistical Evaluation Using the Friedman Test

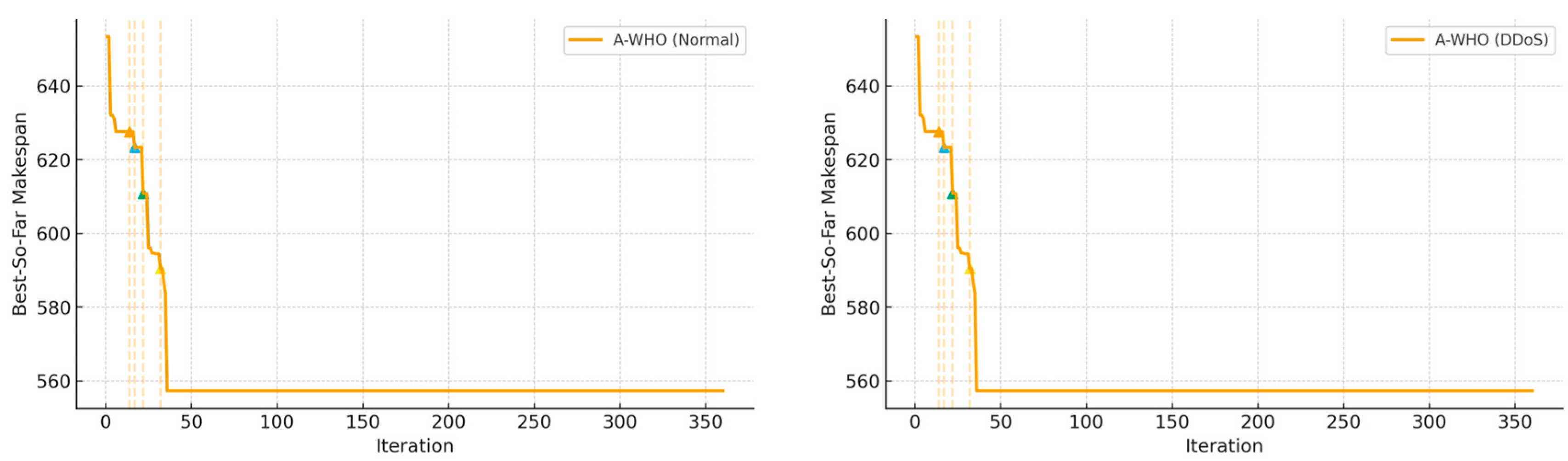

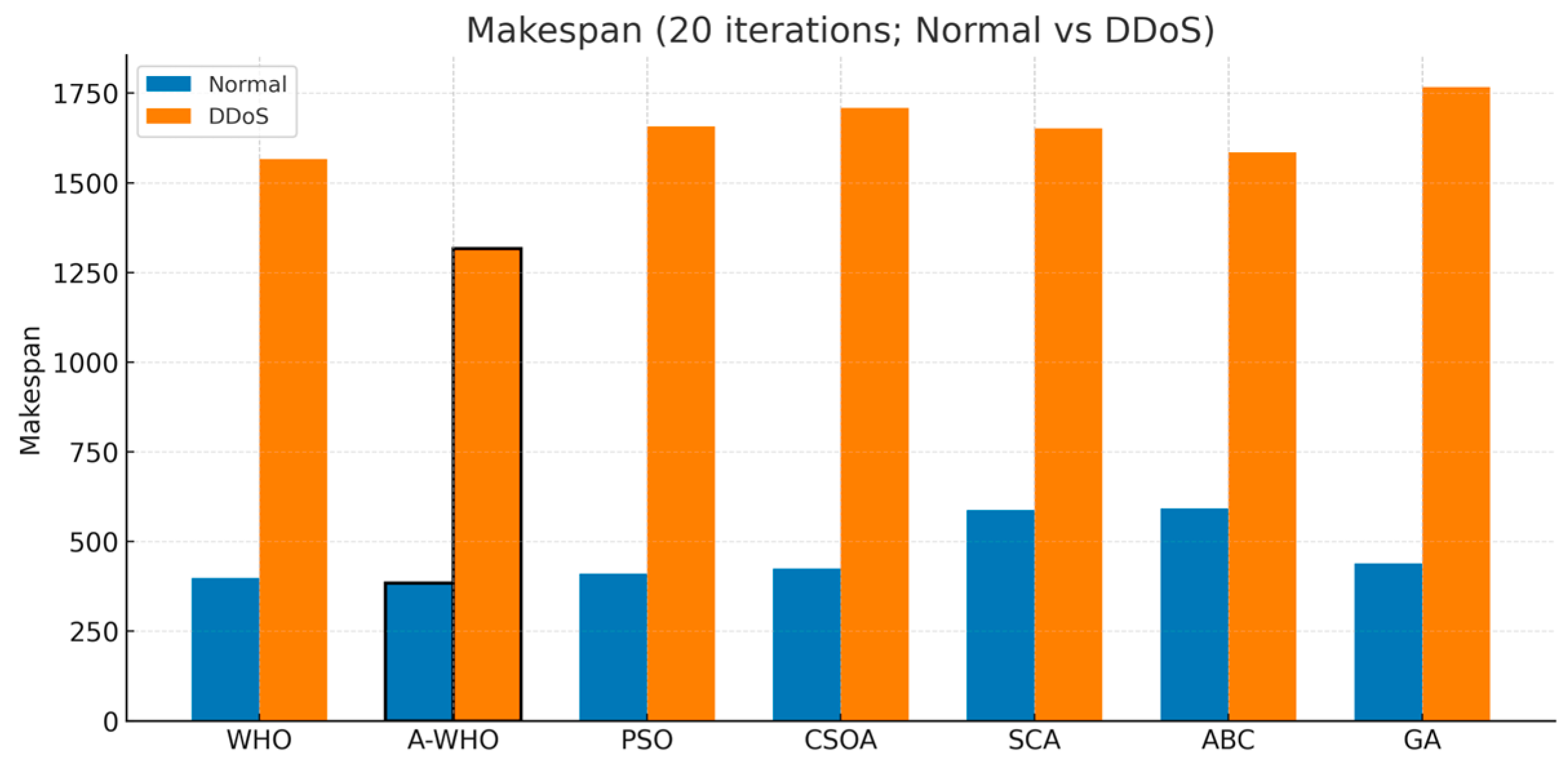

4.3. Effect of DDoS on Algorithmic Behavior

4.4. Robustness and Performance Evaluation of the Proposed A-WHO Algorithm

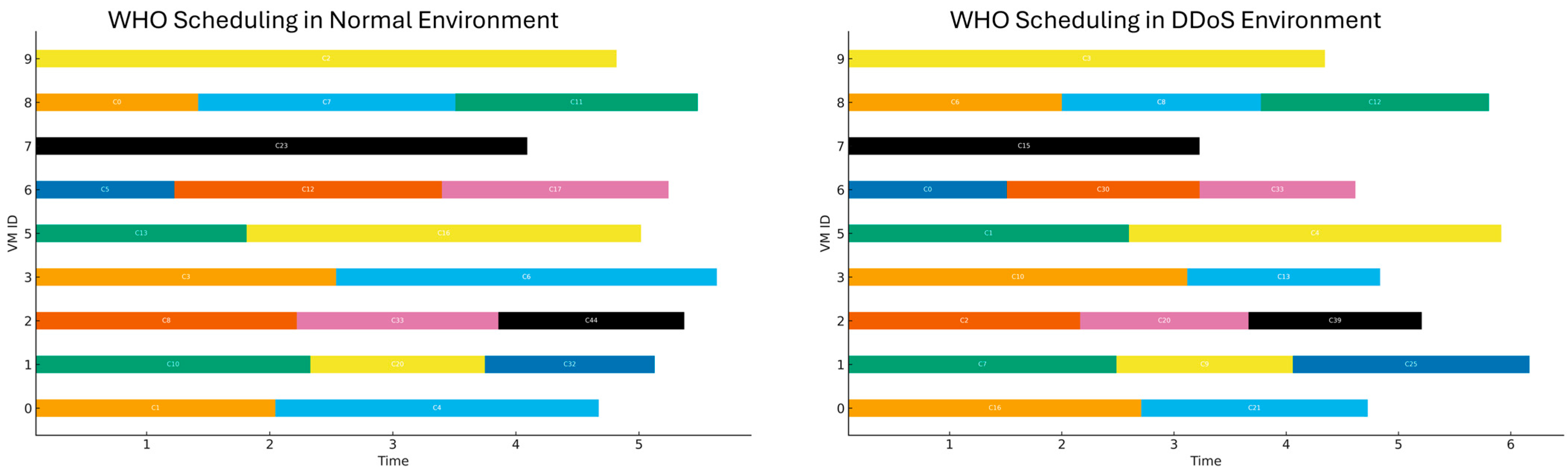

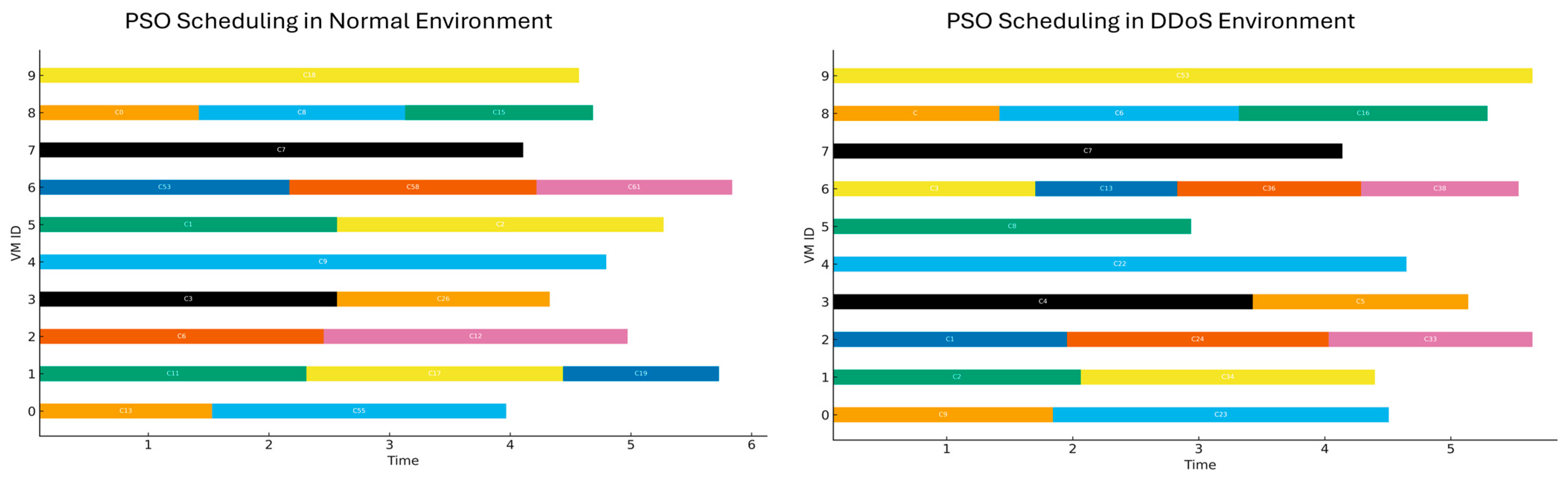

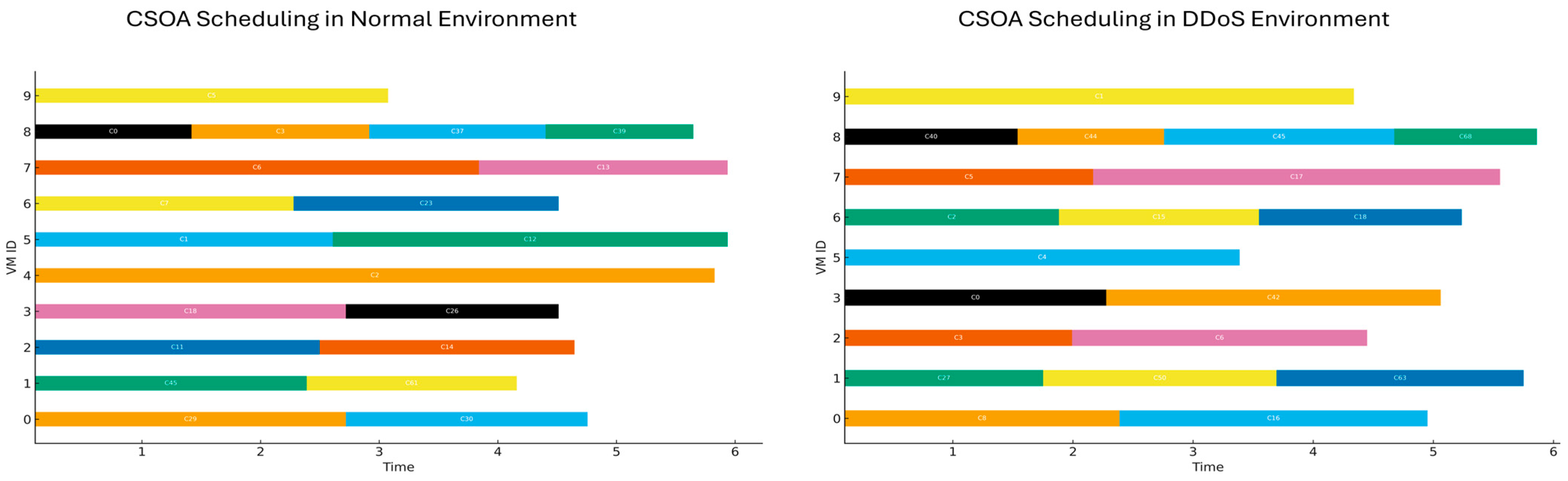

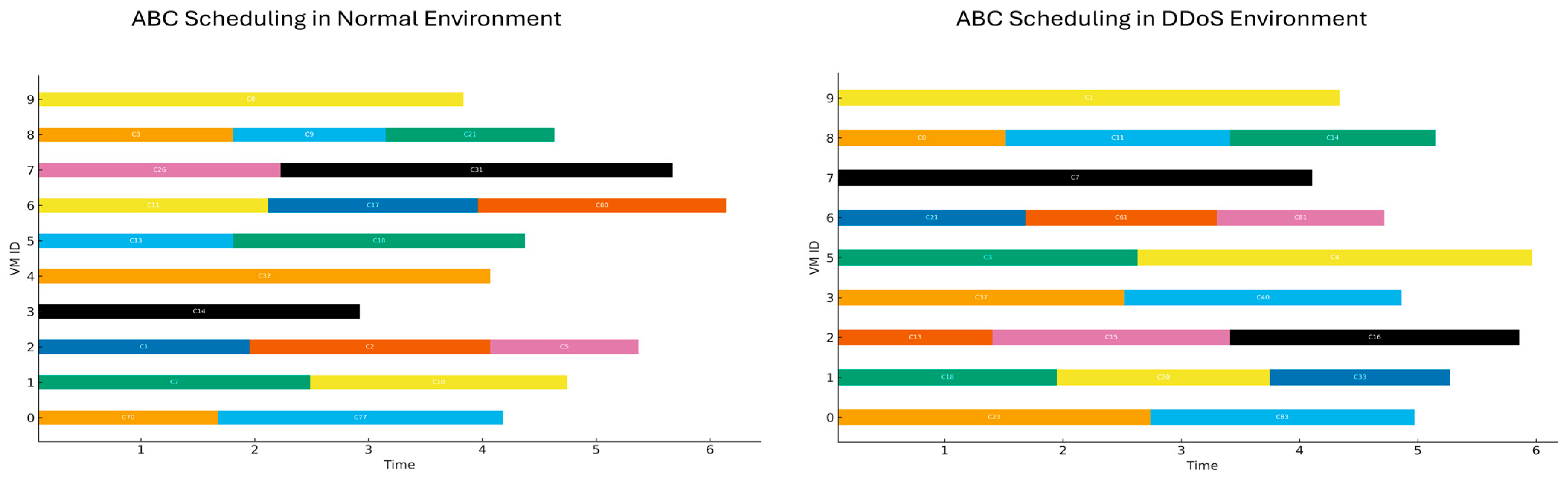

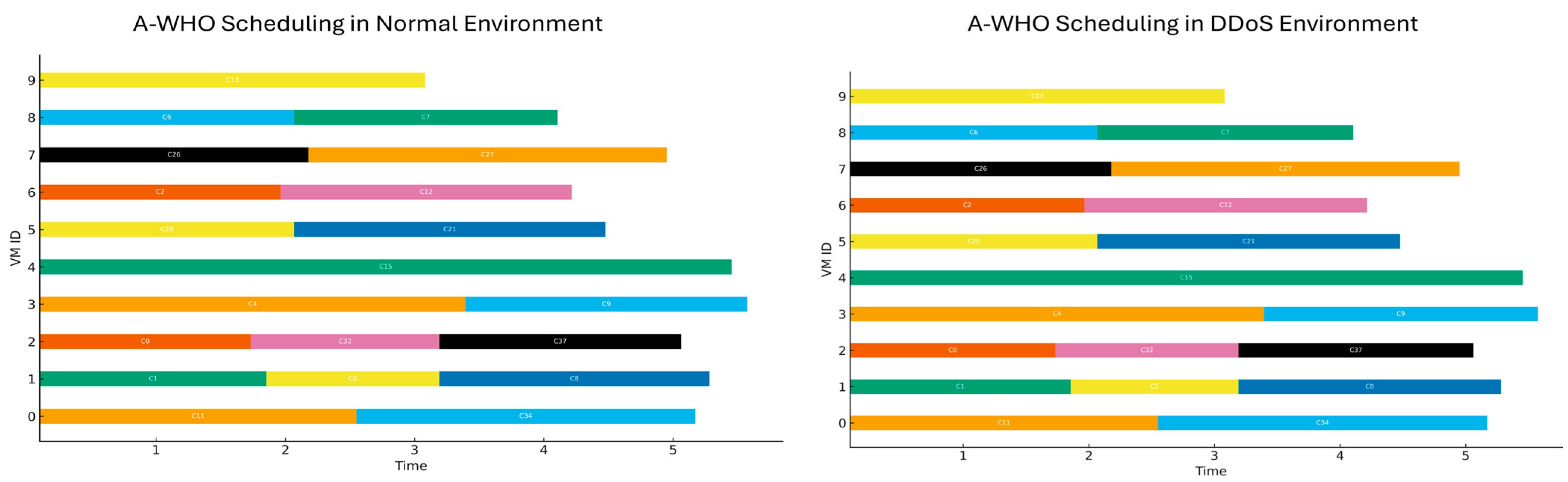

4.5. Visualization of Comparative Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dimension | Standard WHO | Proposed A-WHO |

|---|---|---|

| Optimization workflow | Grazing → Mating → Escape → Water Hole | Same workflow preserved |

| Stagnation awareness | Not supported | Supported. Stagnation is detected and monitored. |

| Leader update rule (normal phase) | Updated using standard WHO operator (Equation (1)) | Water Hole retained; R modulated by Equation (2); Escape noise scaled by (1 + λ · δ(t)) |

| Leader update rule (stagnation phase) | Not applicable | Updated using diversification rule (Equation (2)) |

| Diversification mechanism | None | Adaptive perturbation applied to the stallion (leader) only |

| Trigger principle | – | Activated when the algorithm shows no improvement for a predefined period |

| Exploration–exploitation balance | Fixed behavior throughout the run | Dynamically adjusted only during stagnation to escape local minima |

| Impact on baseline behavior | Single-phase optimization | Dual-phase behavior: normal → stagnation-handling → back to normal |

| Code reference | RunNativeWHO() (no stagnation block) | WHO_Scheduler: includes stagnation counter and perturbation block |

| Simulation Parameter | Value |

|---|---|

| VM Count | 10 |

| Cloudlet Count | 10 |

| Data Center Storage | 1 TB |

| Data Center RAM | 100 GB |

| Data Center Bandwidth | 100 GB/s |

| Data Center OS | Linux/Xen |

| Data Center MIPS | 1000 |

| VM Storage | 10 GB |

| VM RAM | 1 GB |

| VM Bandwidth | 1 GB/s |

| VM MIPS Range | 100–1000 |

| VM CPU (PEs) | 1 |

| VM Scheduling Policy | Time Shared |

| Cloudlet File Size | 300 MB |

| Cloudlet Length | 1000–2000 MI (randomly generated) |

| DDoS: attack VMs | 20% of the VM count |

| DDoS: attack VM MIPS | 1000 |

| DDoS: attack cloudlets | 50% of the cloudlet count |

| DDoS: attack cloudlet length | 1,000,000+ MI |

| Algorithm | Parameter | Value |

|---|---|---|

| PSO | Particles | 75 |

| | Inertia weight | 0.9–0.4 |

| | C1, C2 | 1.5, 1.5 |

| | Velocity clamp | 0.2 × (max − min) |

| GA | Population size | 75 |

| | Crossover rate | 0.5 |

| | Mutation rate | 0.015 |

| | Elitism | Yes |

| | Tournament size | 5 |

| ABC | Colony size | 75 |

| | Fitness probability scaling | 0.9 × fitness + 0.1 |

| | Food sources | 37 |

| | Limit | D × food sources |

| | Perturbation factor (θ) | [−1, 1] |

| CSOA | Population size | 75 |

| | Awareness probability (AP) | 0.1 |

| | Flight length (FL) | 1.0 |

| | Memory update | Best-so-far |

| SCA | Population size | 75 |

| | Amplitude coefficient (a) | 2.0–0.0 |

| | Exploration factor (r1) | Dynamic |

| | Oscillation angle (r2) | [0, 2π] |

| | Distance scaling (r3) | [0, 2] |

| | Switch probability (r4) | 0.5 (sine/cosine switch) |

| WHO | Population size | 75 |

| | Stallion percentage (PS) | 0.2 |

| | Escape probability | 0.1 |

| | Grazing behavior | Cosine-based |

| | Mating behavior | Average combination |

| | Escape behavior | Random perturbation |

| | Water Hole behavior | Directional movement toward the global best |

| A-WHO | Population size | 75 |

| | Stallion percentage (PS) | 0.15 |

| | Escape probability | 0.18 |

| | Grazing behavior | Cosine-based |

| | Mating behavior | Average combination |

| | Escape behavior | Stagnation-aware random perturbation |

| | Water Hole behavior | Directional movement toward the global best |

| | Stagnation threshold (10 / 20 / 50 iterations) | 10 / 13 / 30 |

| Experiment | Environment | Iterations | Cloudlets | VMs | Population | Max FEs | Number of Trials |

|---|---|---|---|---|---|---|---|

| 1st | Normal | 10 | 1000 | 10 | 75 | 750 | 10 |

| 2nd | DDoS | 10 | 1000 | 10 | 75 | 750 | 10 |

| 3rd | Normal | 20 | 1000 | 10 | 75 | 1500 | 10 |

| 4th | DDoS | 20 | 1000 | 10 | 75 | 1500 | 10 |

| 5th | Normal | 50 | 1000 | 10 | 75 | 3750 | 10 |

| 6th | DDoS | 50 | 1000 | 10 | 75 | 3750 | 10 |

| Algorithm | Normal Min | Normal Max | Normal Avg | Normal Std | Δ Makespan (%) | DDoS Min | DDoS Max | DDoS Avg | DDoS Std |

|---|---|---|---|---|---|---|---|---|---|

| WHO | 398.80 | 437.78 | 414.92 | 12.36 | 313.18% | 579.52 | 2361.28 | 1714.35 | 532.74 |

| A-WHO | 366.24 | 424.51 | 396.05 | 19.03 | 306.76% | 429.69 | 2831.27 | 1610.98 | 755.16 |

| PSO | 375.44 | 450.93 | 420.27 | 21.63 | 416.07% | 1502.86 | 2770.80 | 2168.90 | 430.98 |

| CSOA | 405.65 | 476.92 | 445.19 | 26.70 | 280.28% | 487.66 | 3231.51 | 1692.99 | 830.50 |

| SCA | 518.49 | 633.95 | 598.93 | 33.86 | 214.25% | 1197.22 | 2383.07 | 1882.16 | 340.26 |

| ABC | 580.54 | 623.99 | 607.10 | 15.31 | 214.48% | 1252.86 | 3674.65 | 1909.20 | 714.95 |

| GA | 336.82 | 481.71 | 438.60 | 41.23 | 291.81% | 1218.84 | 1997.18 | 1718.47 | 279.41 |
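The Δ Makespan (%) column is consistent with the relative increase of the average makespan under DDoS, Δ = (Avg_DDoS − Avg_Normal) / Avg_Normal × 100. The short sketch below recomputes three entries of the table above from its Avg columns; the function name is illustrative.

```python
def delta_makespan_pct(avg_normal: float, avg_ddos: float) -> float:
    """Relative increase of the average makespan under DDoS, in percent."""
    return 100.0 * (avg_ddos - avg_normal) / avg_normal


# Average makespans (Normal, DDoS) taken from the table above
rows = {"WHO": (414.92, 1714.35), "A-WHO": (396.05, 1610.98), "PSO": (420.27, 2168.90)}
for name, (normal, ddos) in rows.items():
    print(f"{name}: {delta_makespan_pct(normal, ddos):.2f}%")
# WHO: 313.18%, A-WHO: 306.76%, PSO: 416.07%
```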

| Algorithm | Normal Min | Normal Max | Normal Avg | Normal Std | Δ Makespan (%) | DDoS Min | DDoS Max | DDoS Avg | DDoS Std |

|---|---|---|---|---|---|---|---|---|---|

| WHO | 361.87 | 420.51 | 398.75 | 19.94 | 292.82% | 483.96 | 2287.68 | 1566.35 | 486.14 |

| A-WHO | 362.44 | 404.31 | 383.99 | 13.36 | 242.86% | 439.36 | 1969.26 | 1316.53 | 610.42 |

| PSO | 400.57 | 432.05 | 410.15 | 8.97 | 304.10% | 1459.00 | 1912.28 | 1657.43 | 216.58 |

| CSOA | 395.35 | 458.38 | 424.49 | 19.54 | 302.42% | 1442.41 | 1996.24 | 1708.24 | 255.34 |

| SCA | 534.78 | 616.88 | 587.45 | 25.67 | 181.26% | 675.18 | 2344.39 | 1652.26 | 510.74 |

| ABC | 545.16 | 617.43 | 592.25 | 22.70 | 167.59% | 771.06 | 2291.97 | 1584.78 | 492.46 |

| GA | 322.98 | 475.98 | 439.14 | 44.81 | 302.35% | 1162.42 | 2425.82 | 1766.87 | 452.94 |

| Algorithm | Normal Min | Normal Max | Normal Avg | Normal Std | Δ Makespan (%) | DDoS Min | DDoS Max | DDoS Avg | DDoS Std |

|---|---|---|---|---|---|---|---|---|---|

| WHO | 333.31 | 380.58 | 364.94 | 15.25 | 180.83% | 378.68 | 1497.61 | 1024.85 | 507.81 |

| A-WHO | 310.01 | 387.20 | 360.93 | 20.74 | 171.11% | 438.82 | 1506.70 | 978.53 | 434.57 |

| PSO | 347.33 | 382.41 | 365.14 | 11.88 | 278.48% | 402.15 | 1986.09 | 1381.97 | 426.54 |

| CSOA | 374.24 | 426.15 | 399.36 | 19.12 | 274.90% | 1162.41 | 1932.24 | 1497.21 | 249.20 |

| SCA | 557.47 | 589.40 | 571.21 | 10.40 | 123.46% | 664.73 | 1857.76 | 1276.45 | 374.13 |

| ABC | 532.75 | 597.97 | 563.17 | 21.51 | 141.02% | 1212.03 | 1533.52 | 1357.38 | 133.00 |

| GA | 407.39 | 456.64 | 434.53 | 14.67 | 153.22% | 504.47 | 1505.76 | 1100.32 | 407.53 |

| Algorithm | SLA % (Normal) | SLA % (DDoS) |

|---|---|---|

| WHO | None | 0.51% |

| A-WHO | None | 0.54% |

| PSO | None | 0.55% |

| CSOA | None | 0.52% |

| SCA | None | 0.50% |

| ABC | None | 0.53% |

| GA | None | 0.49% |

| Algorithm | SLA % (Normal) | SLA % (DDoS) |

|---|---|---|

| WHO | None | 0.52% |

| A-WHO | None | 0.50% |

| PSO | None | 0.54% |

| CSOA | None | 0.49% |

| SCA | None | 0.55% |

| ABC | None | 0.50% |

| GA | None | 0.49% |

| Algorithm | SLA % (Normal) | SLA % (DDoS) |

|---|---|---|

| WHO | None | 0.54% |

| A-WHO | None | 0.50% |

| PSO | None | 0.53% |

| CSOA | None | 0.54% |

| SCA | None | 0.52% |

| ABC | None | 0.53% |

| GA | None | 0.49% |

| Algorithm | Exec Time (Normal) | Response Time (Normal) | Exec Time (DDoS) | Response Time (DDoS) |

|---|---|---|---|---|

| WHO | 2.62 | 126.54 | 11.95 | 128.86 |

| A-WHO | 2.60 | 125.33 | 12.33 | 128.69 |

| PSO | 2.59 | 124.89 | 12.45 | 128.23 |

| CSOA | 2.62 | 128.98 | 11.95 | 131.65 |

| SCA | 2.66 | 152.41 | 11.84 | 153.79 |

| ABC | 2.65 | 153.30 | 12.19 | 156.40 |

| GA | 2.64 | 133.87 | 11.65 | 136.57 |

| Algorithm | Exec Time (Normal) | Response Time (Normal) | Exec Time (DDoS) | Response Time (DDoS) |

|---|---|---|---|---|

| WHO | 2.60 | 124.75 | 11.90 | 127.36 |

| A-WHO | 2.59 | 124.01 | 11.44 | 126.86 |

| PSO | 2.55 | 123.12 | 12.33 | 126.01 |

| CSOA | 2.59 | 128.45 | 11.54 | 131.33 |

| SCA | 2.65 | 152.73 | 12.49 | 156.26 |

| ABC | 2.65 | 152.47 | 11.88 | 156.33 |

| GA | 2.64 | 133.78 | 11.46 | 136.23 |

| Algorithm | Exec Time (Normal) | Response Time (Normal) | Exec Time (DDoS) | Response Time (DDoS) |

|---|---|---|---|---|

| WHO | 2.57 | 121.88 | 12.28 | 124.85 |

| A-WHO | 2.57 | 121.95 | 11.74 | 124.86 |

| PSO | 2.46 | 120.14 | 11.97 | 123.09 |

| CSOA | 2.58 | 128.17 | 12.35 | 131.19 |

| SCA | 2.66 | 152.33 | 12.10 | 155.42 |

| ABC | 2.65 | 151.67 | 12.08 | 154.69 |

| GA | 2.64 | 133.78 | 11.21 | 136.41 |

| Algorithm | Energy (J) Normal | Energy (J) DDoS |

|---|---|---|

| WHO | 219,015.58 | 1,547,922.22 |

| A-WHO | 216,138.60 | 1,639,987.23 |

| PSO | 223,731.65 | 1,638,480.04 |

| CSOA | 230,058.61 | 1,557,542.33 |

| SCA | 283,045.82 | 1,608,563.61 |

| ABC | 284,315.29 | 1,660,905.68 |

| GA | 239,070.85 | 1,670,423.50 |

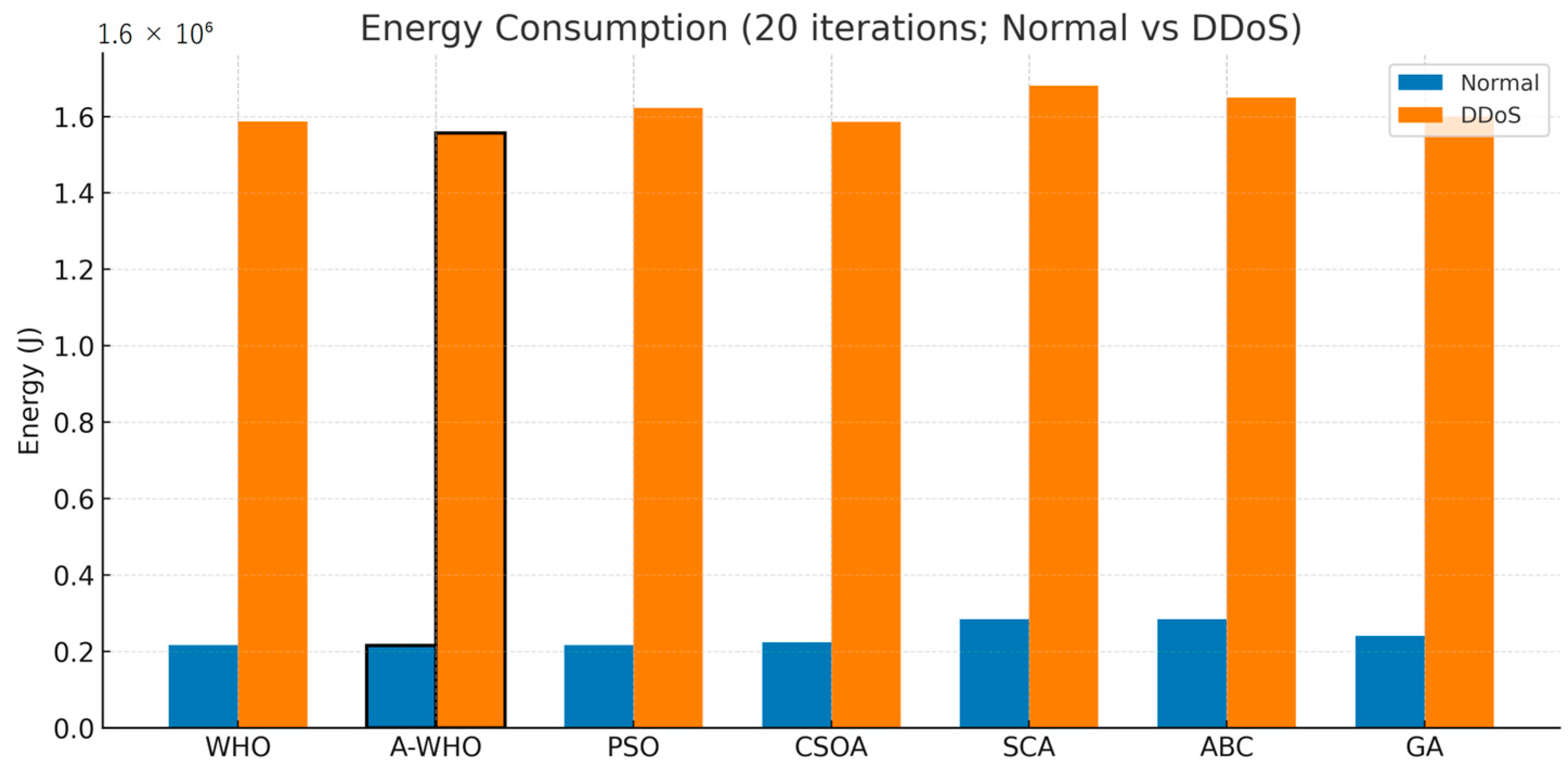

| Algorithm | Energy (J) Normal | Energy (J) DDoS |

|---|---|---|

| WHO | 215,905.99 | 1,586,825.90 |

| A-WHO | 214,969.92 | 1,556,767.35 |

| PSO | 216,093.56 | 1,622,310.38 |

| CSOA | 223,682.70 | 1,585,813.91 |

| SCA | 284,407.70 | 1,680,857.85 |

| ABC | 284,087.64 | 1,649,140.59 |

| GA | 240,677.96 | 1,600,374.29 |

| Algorithm | Energy (J) Normal | Energy (J) DDoS |

|---|---|---|

| WHO | 212,261.98 | 1,601,824.99 |

| A-WHO | 213,440.53 | 1,580,640.70 |

| PSO | 196,594.09 | 1,584,865.89 |

| CSOA | 217,936.18 | 1,649,153.31 |

| SCA | 282,430.96 | 1,617,707.43 |

| ABC | 281,066.42 | 1,640,609.68 |

| GA | 241,147.62 | 1,564,848.91 |

| Algorithm | Utilization Rate % (Normal) | Utilization Rate % (DDoS) |

|---|---|---|

| WHO | 49.66% | 29.44% |

| A-WHO | 49.25% | 29.09% |

| PSO | 47.89% | 30.02% |

| CSOA | 48.15% | 29.77% |

| SCA | 39.25% | 30.72% |

| ABC | 38.87% | 31.00% |

| GA | 50.22% | 29.91% |

| Algorithm | Utilization Rate % (Normal) | Utilization Rate % (DDoS) |

|---|---|---|

| WHO | 50.05% | 29.84% |

| A-WHO | 48.28% | 29.20% |

| PSO | 50.30% | 28.67% |

| CSOA | 50.41% | 29.43% |

| SCA | 39.06% | 29.24% |

| ABC | 39.30% | 30.09% |

| GA | 51.16% | 31.05% |

| Algorithm | Utilization Rate % (Normal) | Utilization Rate % (DDoS) |

|---|---|---|

| WHO | 47.02% | 29.12% |

| A-WHO | 45.52% | 29.49% |

| PSO | 54.84% | 29.59% |

| CSOA | 52.29% | 29.01% |

| SCA | 39.18% | 29.67% |

| ABC | 39.96% | 29.63% |

| GA | 50.59% | 30.04% |

| Algorithm | Runtime, Normal (hh:mm:ss) | Runtime, DDoS (hh:mm:ss) |

|---|---|---|

| WHO | 00:00:54.79 | 00:00:59.68 |

| A-WHO | 00:00:53.03 | 00:01:02.77 |

| PSO | 00:00:52.28 | 00:00:53.97 |

| CSOA | 00:00:47.58 | 00:00:52.59 |

| SCA | 00:00:50.68 | 00:00:57.89 |

| ABC | 00:00:47.53 | 00:00:51.75 |

| GA | 00:09:33.89 | 00:15:41.95 |

| Algorithm | Runtime, Normal (hh:mm:ss) | Runtime, DDoS (hh:mm:ss) |

|---|---|---|

| WHO | 00:01:59.18 | 00:02:19.07 |

| A-WHO | 00:02:17.90 | 00:02:32.84 |

| PSO | 00:01:52.59 | 00:02:01.94 |

| CSOA | 00:01:51.06 | 00:01:51.45 |

| SCA | 00:02:17.54 | 00:02:11.57 |

| ABC | 00:01:29.72 | 00:01:49.89 |

| GA | 00:39:56.48 | 00:51:19.13 |

| Algorithm | Runtime, Normal (hh:mm:ss) | Runtime, DDoS (hh:mm:ss) |

|---|---|---|

| WHO | 00:08:55.92 | 00:16:29.34 |

| A-WHO | 00:09:47.47 | 00:10:03.77 |

| PSO | 00:06:49.32 | 00:06:57.86 |

| CSOA | 00:06:13.72 | 00:06:53.15 |

| SCA | 00:15:09.23 | 00:09:03.47 |

| ABC | 00:05:40.86 | 00:05:36.26 |

| GA | 03:57:40.41 | 03.45:74.58 |

| Algorithm | Mean Rank |

|---|---|

| A-WHO | 1.30 |

| WHO | 1.80 |

| PSO | 3.50 |

| CSOA | 3.90 |

| GA | 4.70 |

| SCA | 6.40 |

| Algorithm | Mean Rank |

|---|---|

| A-WHO | 1.10 |

| CSOA | 3.60 |

| PSO | 4.40 |

| SCA | 4.40 |

| WHO | 4.70 |

| GA | 4.80 |

| ABC | 5.00 |
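Mean ranks of this kind are usually obtained by ranking the algorithms within each trial (rank 1 = best, ties share the average rank) and averaging over trials; the Friedman statistic χ²_F = 12N/(k(k+1)) · ΣR_j² − 3N(k+1) then follows from the mean ranks R_j. The sketch below assumes lower scores are better and uses toy data, since per-trial scores are not listed here. Because the ranks in each trial sum to k(k+1)/2, the mean ranks must sum to the same value; for seven algorithms this is 28, which the second table satisfies (1.10 + 3.60 + 4.40 + 4.40 + 4.70 + 4.80 + 5.00 = 28.00).

```python
def average_ranks(row):
    """Rank scores within one trial (1 = lowest/best); ties share the mean rank."""
    order = sorted(range(len(row)), key=lambda j: row[j])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # average of 1-based positions i..j
        i = j + 1
    return ranks


def friedman(scores):
    """scores[t][a]: score of algorithm a in trial t. Returns (mean ranks, chi2_F)."""
    n, k = len(scores), len(scores[0])
    rank_rows = [average_ranks(r) for r in scores]
    mean_ranks = [sum(r[a] for r in rank_rows) / n for a in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * sum(R * R for R in mean_ranks) - 3 * n * (k + 1)
    return mean_ranks, chi2


toy = [[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [1.0, 2.0, 3.0]]  # 3 trials x 3 algorithms
mean_ranks, chi2 = friedman(toy)
print(mean_ranks, round(chi2, 4))
```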

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kaplan, F.; Babalık, A. A-WHO: Stagnation-Based Adaptive Metaheuristic for Cloud Task Scheduling Resilient to DDoS Attacks. Electronics 2025, 14, 4337. https://doi.org/10.3390/electronics14214337
