Real-Time Detection and Segmentation of Oceanic Whitecaps via EMA-SE-ResUNet

Abstract

1. Introduction

- An enhanced EMA-SE-ResUNet model that integrates EMA and SENet modules to improve feature representation, model stability, and the extraction of subtle whitecap edges under varying sea conditions.

- A dynamic data augmentation and joint loss strategy that enhances model generalization and balances edge accuracy with region completeness.

- On shipborne video datasets, the proposed model demonstrates superior robustness and generalization compared with traditional algorithms. It also achieves higher segmentation accuracy than other deep learning models, including the baseline U-Net, providing reliable technical support for whitecap-related meteorological and oceanographic studies.

2. Related Work

2.1. Whitecaps Detection and Coverage Estimation

2.2. Deep Learning for Marine Image Segmentation

3. Data and Methods

3.1. Data

3.2. Dataset Generation

- Data Construction: Video sequences with good illumination and high signal-to-noise ratios were selected first. Keyframes were extracted at equal intervals, and images with high whitecap coverage were chosen based on expert annotation. Threshold-based segmentation provided the initial masks; whitecap regions were then checked and manually corrected, and sky regions misclassified by the threshold method were removed, so that the training masks annotate whitecap coverage at pixel level. The resulting standardized dataset contains 1100 samples, partitioned into training, validation, and test sets of 900, 100, and 100 samples, respectively; the test set is used to evaluate the model's performance.

- Data Preprocessing: Data augmentation combined random spatial affine transformations (rotation: ±10°, scaling: ±20%, horizontal/vertical flipping) with HSV channel perturbations (hue: ±0.1, saturation: ±0.7, value: ±0.3). Input dimensions were unified to a fixed resolution via gray padding, semantic labels were one-hot encoded, and pixel values were normalized to [0, 1] using min-max scaling. The output is a set of standardized image tensors with their corresponding binarized annotation matrices, which serve as the input for model training.
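
The sampling, partitioning, augmentation ranges, and normalization described in this list can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names, the shuffling step, the random seed, the 0.5 flip probability, and the reading of "scaling: ±20%" as a scale factor in [0.8, 1.2] are all hypothetical. Only the 900/100/100 partition, the parameter ranges, and the [0, 1] min-max scaling come from the text.

```python
import random


def equidistant_indices(n_frames, n_keyframes):
    """Pick n_keyframes frame indices at a constant stride (assumed sampling rule)."""
    if n_keyframes <= 0 or n_keyframes > n_frames:
        raise ValueError("n_keyframes must be in 1..n_frames")
    step = n_frames / n_keyframes
    return [int(i * step) for i in range(n_keyframes)]


def split_dataset(samples, sizes=(900, 100, 100), seed=0):
    """Shuffle (an assumption) and partition samples into train/val/test."""
    if sum(sizes) != len(samples):
        raise ValueError("split sizes must sum to the number of samples")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train, n_val, _ = sizes
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


def sample_augmentation(rng):
    """Draw one set of augmentation parameters within the ranges from the text."""
    return {
        "rotation_deg": rng.uniform(-10.0, 10.0),
        "scale": rng.uniform(0.8, 1.2),      # assumed meaning of +/-20%
        "hflip": rng.random() < 0.5,         # flip probability is an assumption
        "vflip": rng.random() < 0.5,
        "hue": rng.uniform(-0.1, 0.1),
        "saturation": rng.uniform(-0.7, 0.7),
        "value": rng.uniform(-0.3, 0.3),
    }


def min_max_normalize(pixels):
    """Scale a flat list of pixel values to [0, 1]; constant images map to 0."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return [0.0 for _ in pixels]
    return [(p - lo) / (hi - lo) for p in pixels]


train, val, test = split_dataset(range(1100))
print(len(train), len(val), len(test))
print(sample_augmentation(random.Random(0)))
print(min_max_normalize([0, 128, 255]))
```

How the HSV perturbations are applied to the image (as additive offsets or as multiplicative gains) is not stated in the text, so the sketch only samples the magnitudes.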

3.3. Evaluation Metrics

4. Model and Training Parameters

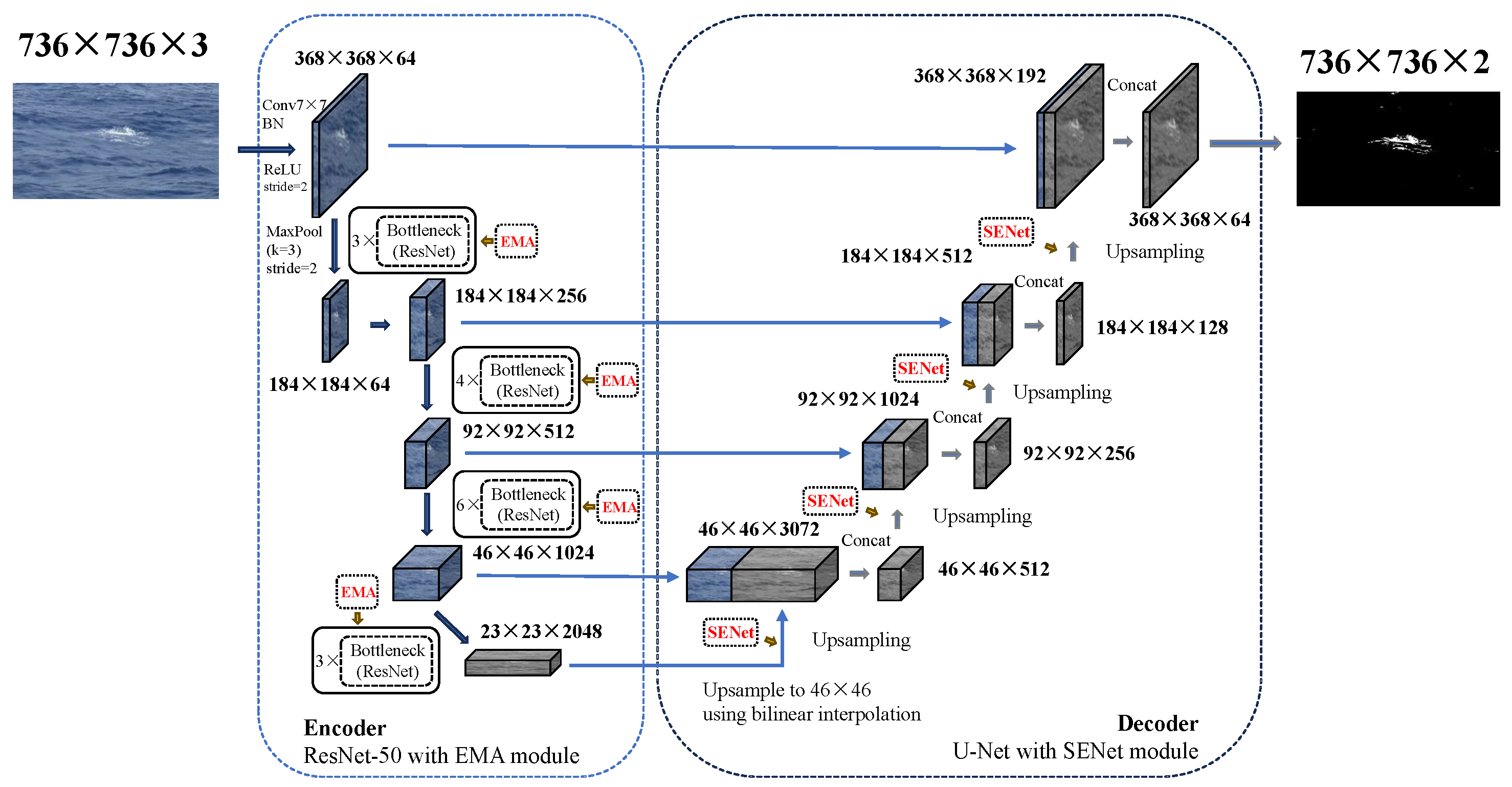

4.1. Main Structure of EMA-SE-ResUNet

4.2. Detailed Improvement Strategy

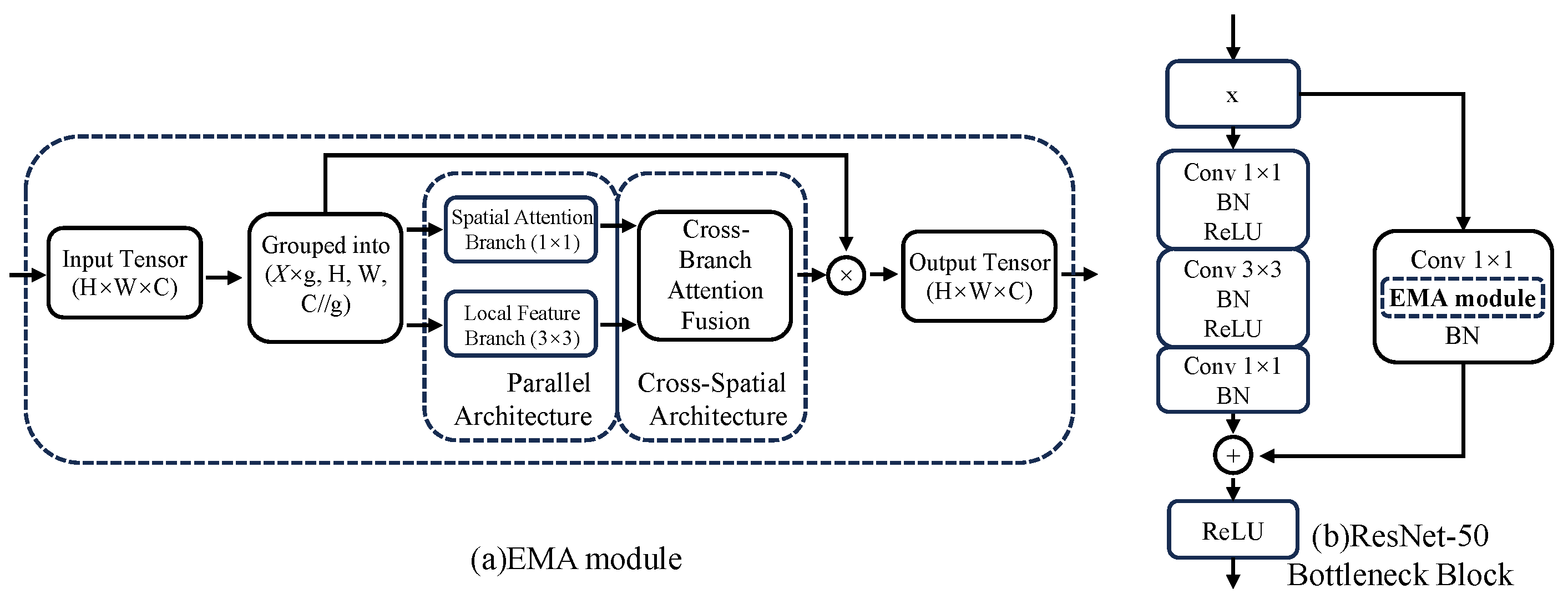

4.2.1. EMA-Enhanced ResNet

- Channel grouping mechanism: The input feature layer X is partitioned into g groups of sub-features along the channel dimension (in this work, g = 32). Each group of sub-features is denoted as $X_i \in \mathbb{R}^{(C/g) \times H \times W}$, $i = 0, 1, \ldots, g-1$, where C is the number of channels, and H and W represent the height and width of the feature map, respectively.

- Grouped parallel paths: Feature extraction is performed through parallel paths. The 1 × 1 branch preserves large-scale semantic information of input features (e.g., the overall region of patches), while the 3 × 3 branch focuses on local details (e.g., edge sharpness and morphological structures of whitecaps), establishing multi-granularity perception across spatial dimensions.

- Cross-spatial dynamic weight allocation: Spatial attention weight maps are generated via Softmax to highlight the saliency of whitecap regions. The weighted features are then fused with the original skip connection features, enabling cross-regional semantic information propagation (e.g., dispersed whitecap patches and edge context).
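
The grouping and Softmax weighting steps in this list can be illustrated with a small pure-Python sketch. It is deliberately simplified and is not the EMA module itself: it omits the 1 × 1 and 3 × 3 convolution branches and all learned parameters, and it applies Softmax directly over spatial positions as a stand-in for the cross-spatial weighting. The helper names `group_channels`, `spatial_softmax`, and `reweight` are hypothetical.

```python
import math


def group_channels(feature, g):
    """Split a C x H x W nested list into g groups of C // g channels each."""
    c = len(feature)
    if c % g != 0:
        raise ValueError("channel count must be divisible by g")
    size = c // g
    return [feature[i * size:(i + 1) * size] for i in range(g)]


def spatial_softmax(score_map):
    """Softmax over all H x W positions of a single 2-D score map."""
    flat = [v for row in score_map for v in row]
    peak = max(flat)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in flat]
    total = sum(exps)
    w = len(score_map[0])
    return [[exps[r * w + c] / total for c in range(w)]
            for r in range(len(score_map))]


def reweight(channel, weights):
    """Scale each spatial position of one channel by its attention weight."""
    return [[x * a for x, a in zip(x_row, a_row)]
            for x_row, a_row in zip(channel, weights)]


# Toy input: 64 channels of a 2 x 2 map, split into g = 32 groups as in the text.
feature = [[[float(c)] * 2 for _ in range(2)] for c in range(64)]
groups = group_channels(feature, 32)
print(len(groups), len(groups[0]))  # 32 groups of 2 channels each

# A score map where one position stands out receives the largest weight there.
weights = spatial_softmax([[0.0, 0.0], [0.0, math.log(3.0)]])
attended = reweight(groups[0][1], weights)
print(weights)
```

In the toy score map, the bottom-right position receives half of the total weight and the other three positions share the rest equally, which mirrors how a salient whitecap region would be emphasized.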

4.2.2. SENet-Enhanced U-Net

4.2.3. Synergy Between EMA and SENet

4.3. Loss Function and Training Configuration

5. Results and Analysis

5.1. Training Environment

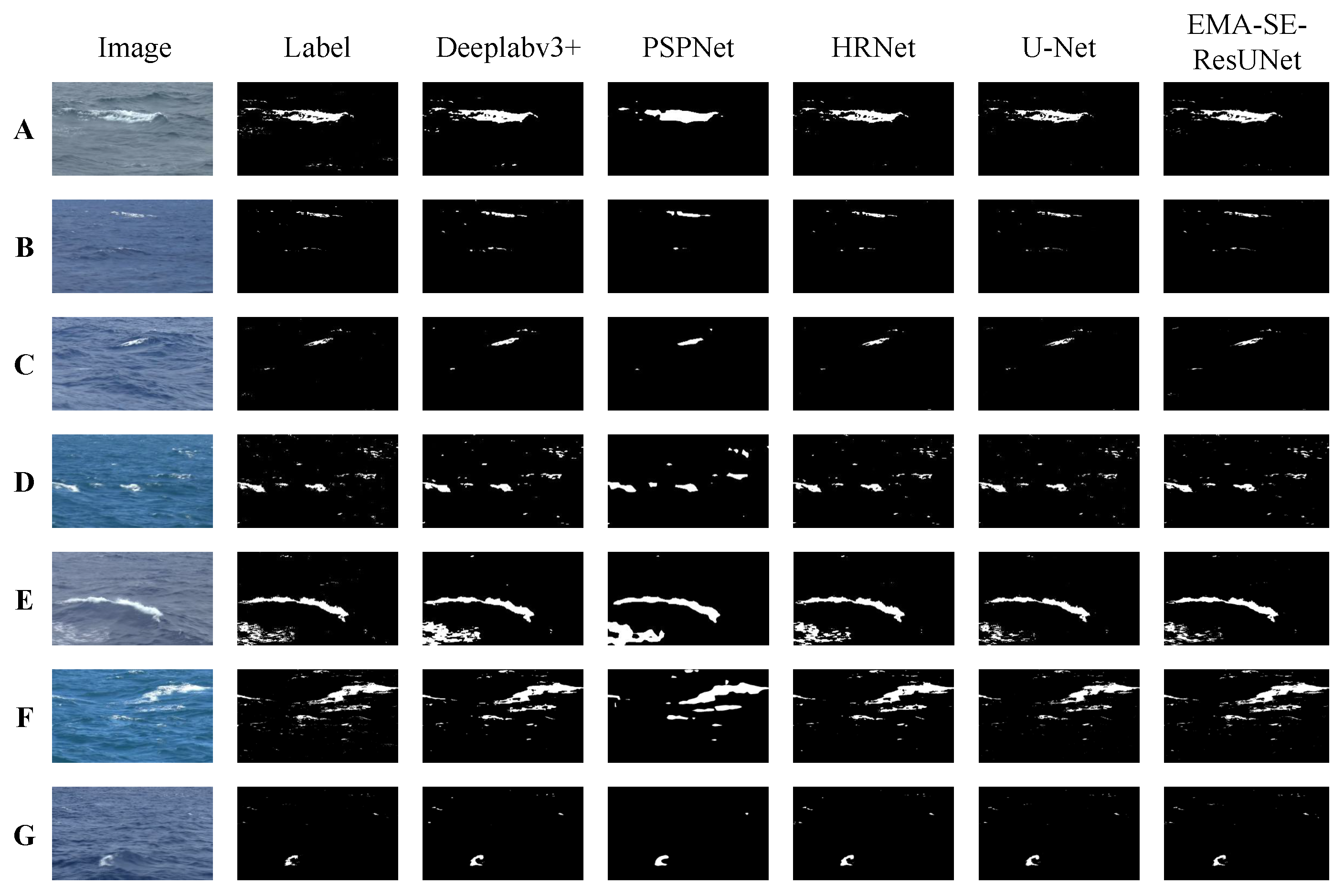

5.2. Model Performance Evaluation

5.3. Evaluation of Whitecap Coverage Extraction

5.4. Summary of Model Analysis

5.5. Discussion

6. Conclusions

- While ResUNet reduces computational load with only a minor loss in accuracy, the introduction of the EMA and SENet modules significantly enhances model robustness, providing an effective refinement strategy for whitecap segmentation.

- The proposed model exhibits strong robustness across diverse lighting conditions and wave morphology variations, enabling accurate detection and segmentation of small whitecap features while efficiently focusing on critical target regions.

- The model outperforms popular algorithms on key metrics such as IoUW and PAE. Its ResUNet backbone requires 57.87% fewer FLOPs than the traditional U-Net (57.29% fewer for the full EMA-SE-ResUNet, based on the reported 386.505 G versus 904.953 G). It also delivers high-fidelity whitecap coverage extraction, balancing accuracy with real-time efficiency. In the current runtime environment, it processes 10.17 frames per second (with potential for further acceleration on higher-configuration systems), meeting the energy-efficiency demands of long-term monitoring onboard research vessels.
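
The efficiency figures can be checked against the values reported in the model comparison tables. The short calculation below is only an arithmetic check; the helper name `relative_reduction` is hypothetical, and the FLOPs and FPS values are copied from the tables. It gives a FLOPs reduction relative to U-Net of 57.87% for the plain ResUNet backbone and 57.29% for the full EMA-SE-ResUNet.

```python
def relative_reduction(baseline, value):
    """Percentage reduction of `value` relative to `baseline`."""
    return (baseline - value) / baseline * 100.0


# FLOPs (G) and FPS as reported in the comparison tables.
FLOPS_G = {"U-Net": 904.953, "ResUNet": 381.239, "EMA-SE-ResUNet": 386.505}
FPS = {"U-Net": 7.9, "EMA-SE-ResUNet": 10.17}

for name in ("ResUNet", "EMA-SE-ResUNet"):
    reduction = relative_reduction(FLOPS_G["U-Net"], FLOPS_G[name])
    print(f"{name}: {reduction:.2f}% fewer FLOPs than U-Net")

# Ratio of frame rates, EMA-SE-ResUNet over U-Net.
speedup = FPS["EMA-SE-ResUNet"] / FPS["U-Net"]
print(f"frame-rate ratio: {speedup:.2f}x")
```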

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Module | Improvement Location (ResNet Bottleneck Junction / Skip in ResNet Bottleneck) | IoUW (%) ↑ | Gain (%) ↑ | PAE (%) ↓ | Gain (%) ↓ | FLOPs (G) ↓ | Gain (G) ↓ |
|---|---|---|---|---|---|---|---|
| ResUNet | // | 70.75 | // | 0.108 | // | 381.239 | // |
| +LSK | ✓ | 70.95 | 0.2 | 0.113 | 0.005 | 496.618 | 115.379 |
| +LSK | ✓ | 70.39 | −0.36 | 0.119 | 0.011 | 410.289 | 29.05 |
| +SENet | ✓ | 71.55 | 0.8 | 0.102 | −0.006 | 381.363 | 0.124 |
| +SENet | ✓ | 71.8 | 1.05 | 0.104 | −0.004 | 381.273 | 0.034 |
| +BAM | ✓ | 70.7 | −0.05 | 0.107 | −0.001 | 393.416 | 12.177 |
| +BAM | ✓ | 71.17 | 0.42 | 0.109 | 0.001 | 384.289 | 3.05 |
| +SIMAM | ✓ | 69.90 | −0.85 | 0.112 | 0.004 | 381.239 | <0.001 |
| +SIMAM | ✓ | 71.46 | 0.71 | 0.102 | −0.006 | 381.239 | <0.001 |
| +EMA | ✓ | 72.07 | 1.32 | 0.096 | −0.012 | 401.777 | 20.538 |
| +EMA | ✓ | 72.97 | 2.22 | 0.081 | −0.027 | 386.385 | 5.146 |

| Module | Improvement Location (U-Net Pre-Upsampling / U-Net Post-Upsampling) | IoUW (%) ↑ | Gain (%) ↑ | PAE (%) ↓ | Gain (%) ↓ | FLOPs (G) ↓ | Gain (G) ↓ |
|---|---|---|---|---|---|---|---|
| ResUNet | // | 70.75 | // | 0.108 | // | 381.239 | // |
| +EMA | ✓ | 71.80 | 1.05 | 0.101 | −0.007 | 405.808 | 24.569 |
| +EMA | ✓ | 71.45 | 0.7 | 0.106 | −0.002 | 382.62 | 1.381 |
| +CBAM | ✓ | 71.48 | 0.73 | 0.103 | −0.005 | 381.397 | 0.158 |
| +CBAM | ✓ | 71.28 | 0.53 | 0.104 | −0.004 | 381.307 | 0.068 |
| +BAM | ✓ | 71.59 | 0.84 | 0.103 | −0.005 | 395.832 | 14.593 |
| +BAM | ✓ | 70.03 | −0.72 | 0.128 | 0.02 | 382.052 | 0.813 |
| +CAA | ✓ | 67.75 | −3.0 | 0.139 | 0.031 | 555.713 | 174.474 |
| +CAA | ✓ | 70.72 | −0.03 | 0.114 | 0.006 | 391.122 | 9.883 |
| +SENet | ✓ | 71.84 | 1.09 | 0.098 | −0.01 | 381.359 | 0.12 |
| +SENet | ✓ | 71.6 | 0.85 | 0.099 | −0.009 | 381.272 | 0.033 |

| Model | IoUW (%) ↑ | F1W (%) ↑ | PAE (%) ↓ | FLOPs (G) ↓ | Params (M) ↓ | FPS ↑ |
|---|---|---|---|---|---|---|
| U-Net | 71.22 ± 19.76 | 83.19 ± 19.91 | 0.115 ± 0.225 | 904.953 | 31.044 | 7.9 |
| ResUNet | 70.75 ± 20.26 | 82.87 ± 20.61 | 0.108 ± 0.221 | 381.239 | 43.937 | 11.04 |
| EMA-ResUNet | 72.97 ± 18.60 | 84.37 ± 18.46 | 0.086 ± 0.163 | 386.385 | 43.992 | 10.12 |
| SE-ResUNet | 71.84 ± 19.07 | 83.61 ± 19.05 | 0.099 ± 0.247 | 381.359 | 45.285 | 10.83 |
| EMA-SE-ResUNet | 73.32 ± 18.23 | 84.60 ± 18.04 | 0.081 ± 0.156 | 386.505 | 45.340 | 10.17 |

| Model | IoUW (%) ↑ | F1W (%) ↑ | PAE (%) ↓ | FLOPs (G) ↓ | Params (M) ↓ | FPS ↑ |
|---|---|---|---|---|---|---|
| Deeplabv3+ | 64.93 ± 17.87 | 78.34 ± 18.86 | 0.138 ± 0.285 | 344.760 | 54.709 | 9.54 |
| PSPNet | 48.94 ± 20.14 | 65.71 ± 25.39 | 0.197 ± 0.486 | 244.605 | 46.707 | 13.55 |
| HRNet | 65.23 ± 19.45 | 78.96 ± 20.77 | 0.117 ± 0.274 | 387.797 | 65.847 | 8.94 |
| U-Net | 71.22 ± 19.76 | 83.19 ± 19.91 | 0.115 ± 0.225 | 904.953 | 31.044 | 7.9 |
| EMA-SE-ResUNet | 73.32 ± 18.23 | 84.60 ± 18.04 | 0.081 ± 0.156 | 386.505 | 45.340 | 10.17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, W.; Wei, Y.; Chen, X. Real-Time Detection and Segmentation of Oceanic Whitecaps via EMA-SE-ResUNet. Electronics 2025, 14, 4286. https://doi.org/10.3390/electronics14214286
