LBP-LSB Co-Optimisation for Dynamic Unseen Backdoor Attacks

Abstract

1. Introduction

- We propose a complementary LBP–LSB invisible-trigger generation method, where LBP encodes local texture structure and LSB maintains visual imperceptibility, and their synergy enhances trigger robustness.

- We design a PRNG-based dynamic randomized embedding mechanism, in which pseudo-random coordinate selection breaks fixed-position patterns, reducing detectability by defenses that assume fixed embedding locations.

- We conduct systematic experiments and comparative analyses on CIFAR-10 and Tiny-ImageNet. With ResNet-18, the proposed method reaches an attack success rate of 99.38% on CIFAR-10 and 98.45% on Tiny-ImageNet, and it achieves the highest PSNR and SSIM among the compared attacks.

2. Relevant Theoretical Foundations

2.1. Local Binary Pattern

2.2. Least Significant Bit

2.3. Pseudorandom Number Generator (PRNG)

2.4. Backdoor Attack

2.5. Backdoor Defence

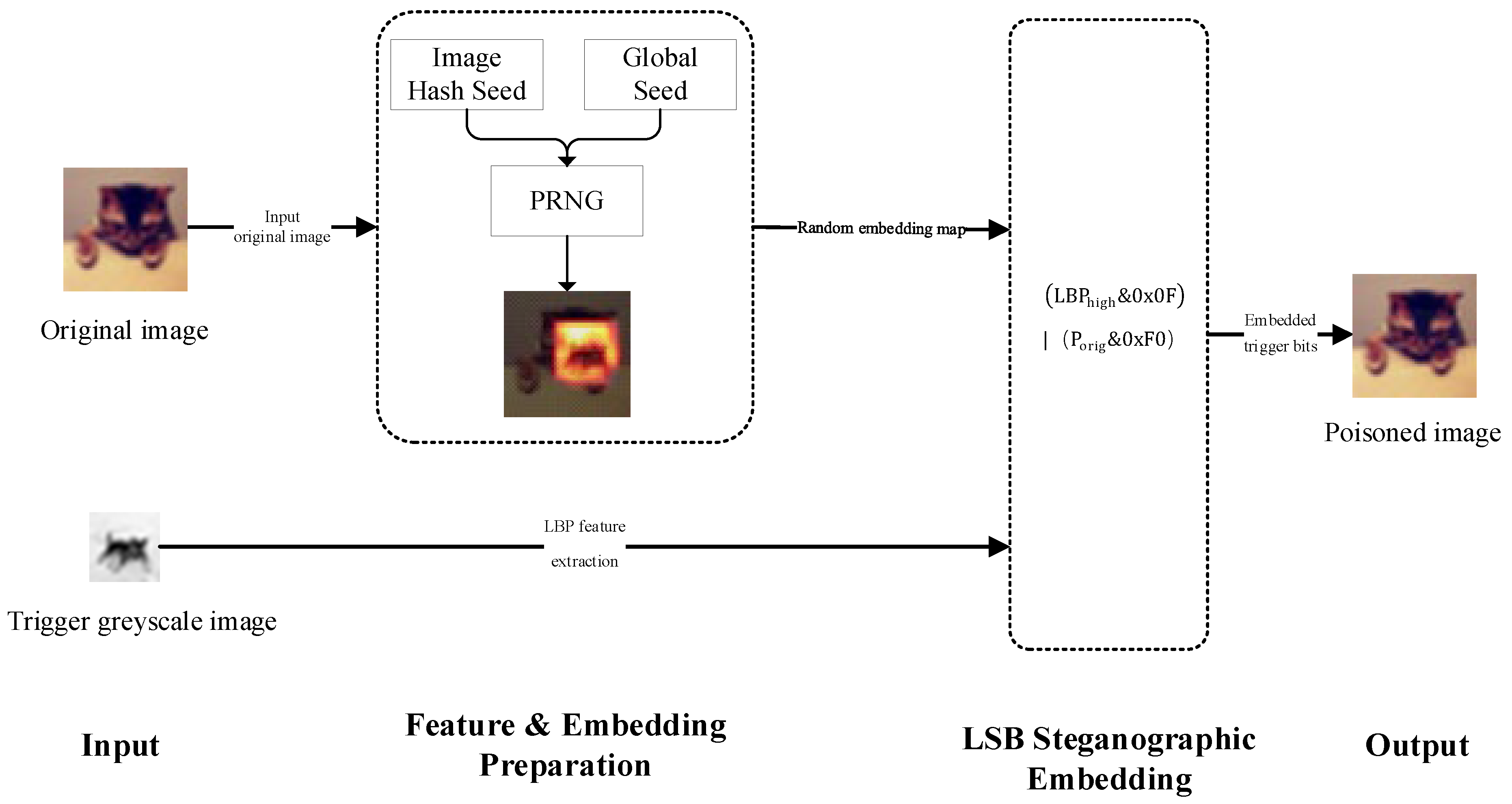

3. Dynamic Invisible Backdoor Attack Method with LBP-LSB

3.1. Threat Model

3.1.1. Problem Definition

3.1.2. Attack Targets

3.2. Attack Design

- (1) Step One: Trigger Feature Extraction
- (2) Step Two: Dynamic Embedding Position Selection
- (3) Step Three: LSB Steganography Embedding

Algorithm 1: LBP-LSB Co-optimization for dynamic unseen backdoor attack

| Step | Operation |
|---|---|
| 1 | Require: Target image; optional trigger image; LBP radius (default 1); number of neighbors (default 8); LSB bits b (default 4); master seed (default 1234) |
| 2 | Ensure: Poisoned image |
| 3 | Initialize the PRNG with the master seed. |
| 4 | If a trigger image is provided then: convert the trigger to grayscale using the BT.601 formula; resize the trigger to dataset-specific dimensions (16 × 16 for CIFAR-10 triggers; 28 × 28 for Tiny-ImageNet triggers); compute the LBP pattern. End If |
| 5 | Compute the image-specific seed (Section 3.4). |
| 6 | Initialize the PRNG with the combined seed. |
| 7 | Randomly select the embedding start position such that the trigger fits inside the image. |
| 8 | Extract the high b bits of the LBP pattern. |
| 9 | For each pixel in the embedding region do: if the image is grayscale, write the extracted bits into the pixel's low b bits; else, for each channel, write them into that channel's low b bits. End For |
| 10 | Return Image′ |
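Steps 4, 8 and 9 of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the trigger is assumed to be already resized, the LBP uses the basic 8-neighbour, radius-1 form with a `>=` comparison and an arbitrary clockwise bit order, and for colour carriers the same payload is written into every channel. The start position is passed in explicitly here.

```python
import numpy as np

def to_gray_bt601(rgb: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 uint8 image to grayscale with BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    luma = rgb.astype(np.float64) @ weights
    return np.clip(np.rint(luma), 0, 255).astype(np.uint8)

def lbp_8_1(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour, radius-1 LBP code per pixel (borders edge-padded)."""
    h, w = gray.shape
    padded = np.pad(gray.astype(np.int16), 1, mode="edge")
    center = padded[1:-1, 1:-1]
    # Clockwise from the top-left neighbour; the bit order is an assumption.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    code = np.zeros((h, w), dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = padded[1 + dy : 1 + dy + h, 1 + dx : 1 + dx + w]
        code |= (neighbour >= center).astype(np.uint8) << np.uint8(bit)
    return code

def embed_lsb(image: np.ndarray, lbp: np.ndarray, top: int, left: int,
              bits: int = 4) -> np.ndarray:
    """Write the high `bits` bits of each LBP code into the low `bits` bits of the carrier."""
    payload = lbp >> (8 - bits)                    # values in [0, 2**bits)
    keep_mask = np.uint8((0xFF << bits) & 0xFF)    # clears the low `bits` bits
    out = image.copy()
    h, w = lbp.shape
    region = out[top : top + h, left : left + w]   # view into `out`
    if region.ndim == 2:                           # grayscale carrier
        region[...] = (region & keep_mask) | payload
    else:                                          # colour carrier (assumed: same payload per channel)
        region[...] = (region & keep_mask) | payload[..., None]
    return out

# Usage on random data standing in for a CIFAR-10 image and a 16 x 16 trigger.
rng = np.random.default_rng(0)
carrier = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
trigger = rng.integers(0, 256, size=(16, 16, 3), dtype=np.uint8)
pattern = lbp_8_1(to_gray_bt601(trigger))
poisoned = embed_lsb(carrier, pattern, top=5, left=7, bits=4)
```

With 4 embedded bits, each modified value changes by at most 15 intensity levels, and pixels outside the trigger region are untouched.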

3.3. Trigger LBP Value Extraction

3.4. Trigger Embedding Position

- (1) Master Seed: Injected via the seed parameter to guarantee experimental reproducibility. If unspecified, it is generated dynamically from a system entropy source (timestamp ⊕ process ID) to ensure controllable global randomness.
- (2) Image Seed: Derived from the first 8 bits of the SHA-256 hash of the target image's content, guaranteeing identical embedding locations for the same image across different runs that use the same master seed. Altering the master seed will change the image seed.
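The two-level seeding described above could look roughly like the sketch below. Several details are assumptions rather than the paper's specification: the helper names are hypothetical, "first 8 bits" is read literally as the leading byte of the digest, and the master and image seeds are combined by passing both to NumPy's seed sequence, since the exact combination rule is not given here.

```python
import hashlib
import os
import time

import numpy as np

def master_seed_or_entropy(seed=None) -> int:
    """Return the given master seed, or fall back to timestamp XOR process ID."""
    if seed is not None:
        return int(seed)
    return (time.time_ns() ^ os.getpid()) & 0xFFFFFFFF

def image_seed(image: np.ndarray) -> int:
    """Leading byte (8 bits) of the SHA-256 digest of the raw pixel data."""
    digest = hashlib.sha256(np.ascontiguousarray(image).tobytes()).digest()
    return digest[0]

def start_position(image: np.ndarray, trigger_hw, master_seed: int = 1234):
    """Pick a start position so that the trigger fits inside the image."""
    h, w = image.shape[:2]
    th, tw = trigger_hw
    if th > h or tw > w:
        raise ValueError("trigger does not fit inside the image")
    # Assumed combination rule: seed the generator with both values.
    rng = np.random.default_rng([master_seed, image_seed(image)])
    top = int(rng.integers(0, h - th + 1))   # upper bound is exclusive
    left = int(rng.integers(0, w - tw + 1))
    return top, left

# Usage: the same image and master seed always give the same position.
demo = np.arange(32 * 32 * 3, dtype=np.uint8).reshape(32, 32, 3)
pos_a = start_position(demo, (16, 16), master_seed=1234)
pos_b = start_position(demo, (16, 16), master_seed=1234)
```

Because the position depends on the image content, different carrier images generally receive the trigger at different locations, while any single image stays reproducible for a fixed master seed.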

3.5. LSB Steganography

4. Experimental Evaluation

4.1. Datasets and Models

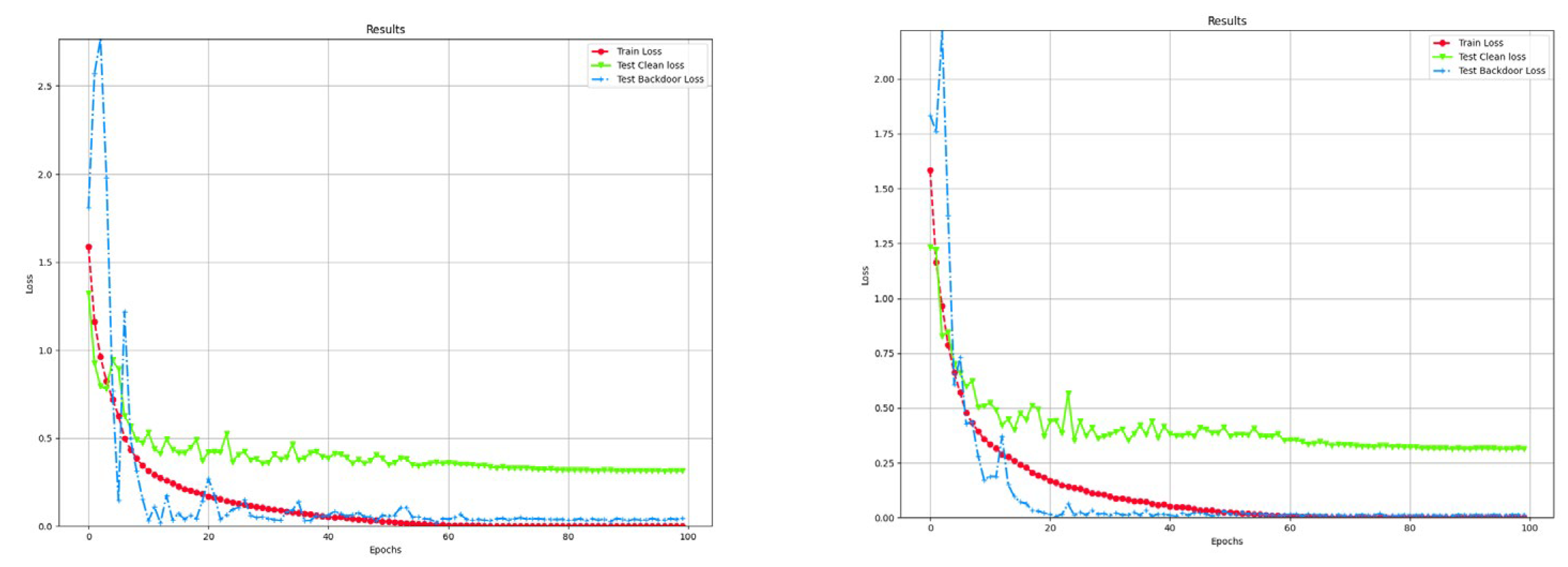

4.2. Attack Success Rate and Clean Data Accuracy

Experimental Results

4.3. Sensitivity Analysis

4.3.1. Model Sensitivity Analysis

4.3.2. Target Label Sensitivity Analysis

4.3.3. Poisoning Rate Sensitivity

4.4. Analysis of Concealment and Dynamism

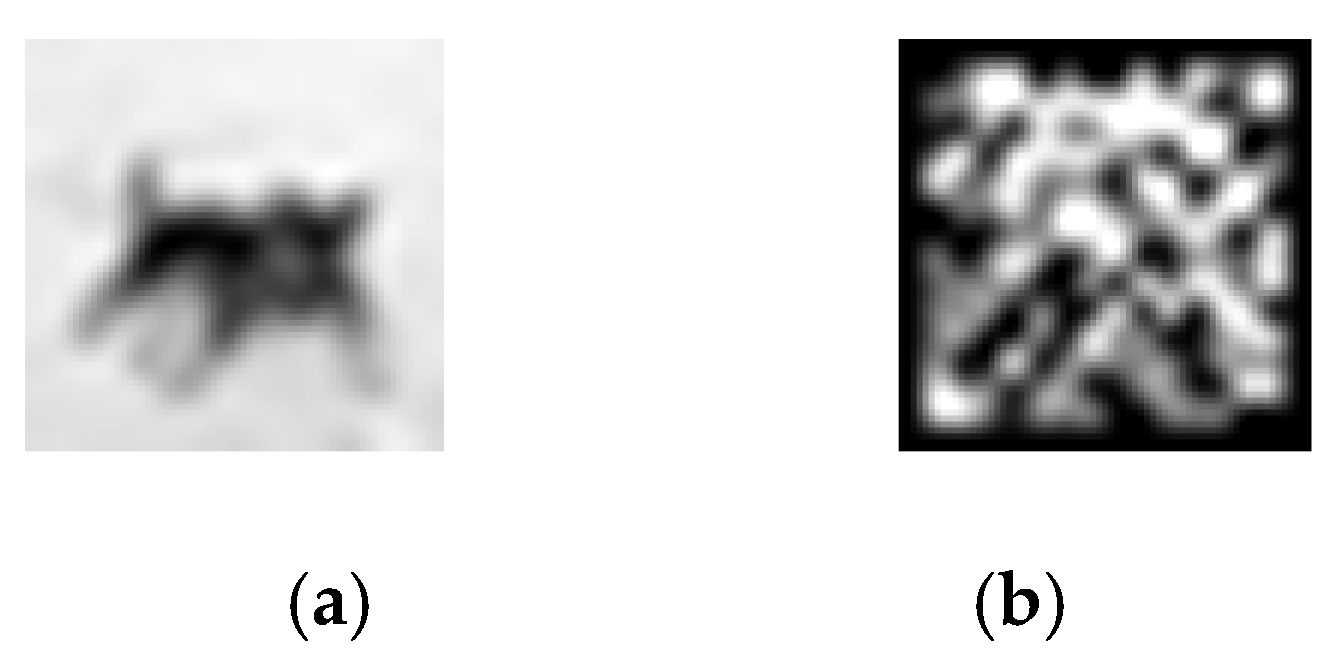

4.4.1. Concealment Analysis

4.4.2. Dynamism Analysis

4.5. Ablation Experiments

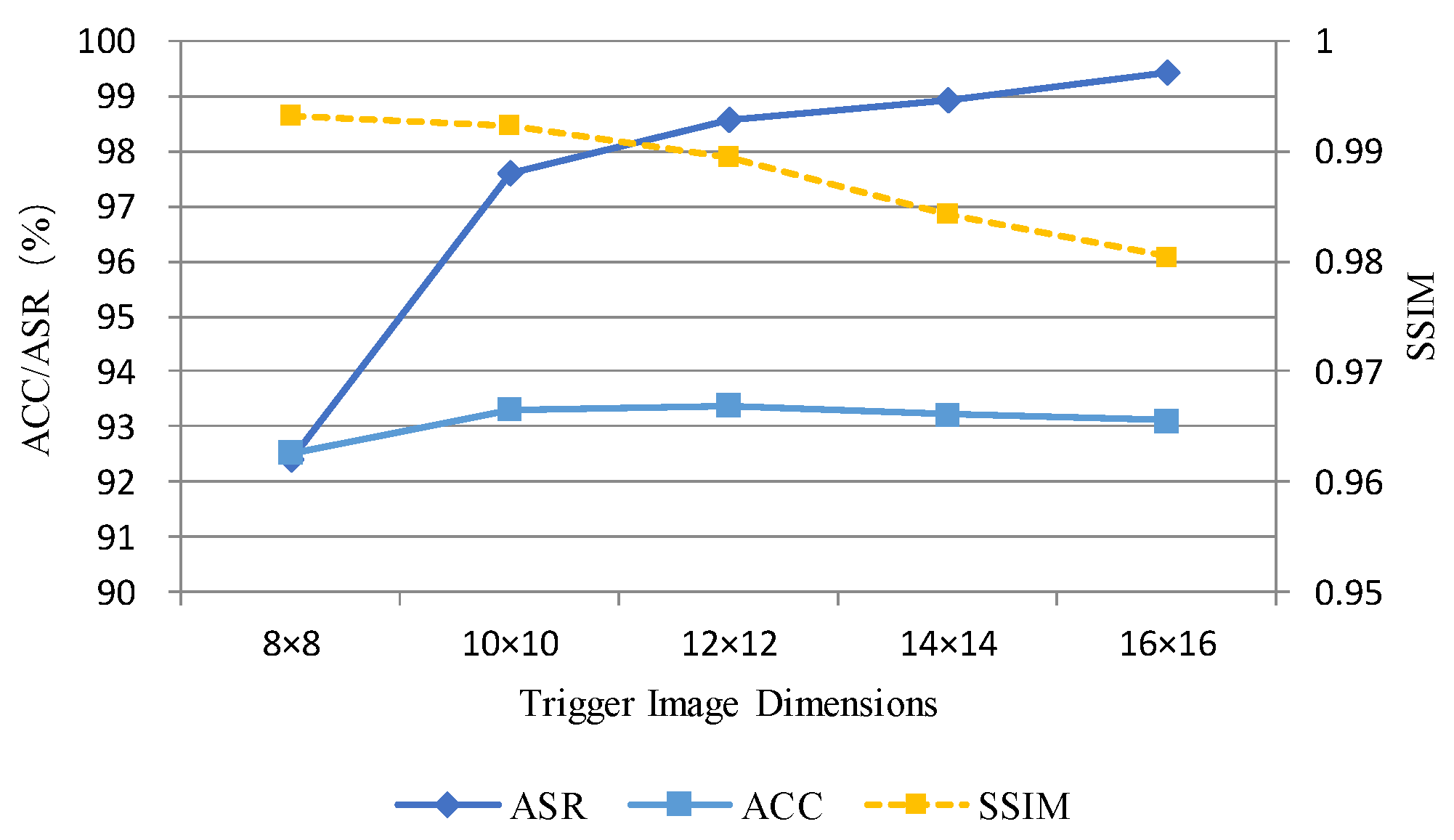

4.5.1. Trigger Image Dimension

- 1. CIFAR-10 dataset
- 2. Tiny-ImageNet dataset

4.5.2. Embedding Bit Numbers

4.5.3. Complementarity of LBP and PRNG

- (1) V1 (Plain LSB + PRNG): High bits of trigger pixels are embedded into carrier pixels with PRNG-based random start positions, without LBP encoding.
- (2) V2 (LBP LSB static): Trigger pixels are replaced by their LBP-coded values and embedded at a fixed position (no PRNG).
- (3) V3 (LBP LSB + PRNG): Full method, combining the LBP-coded trigger with PRNG randomization (the originally proposed method).

- 1. CIFAR-10 Ablation Analysis:
- 2. Tiny-ImageNet Analysis:

4.6. Defence Experiments

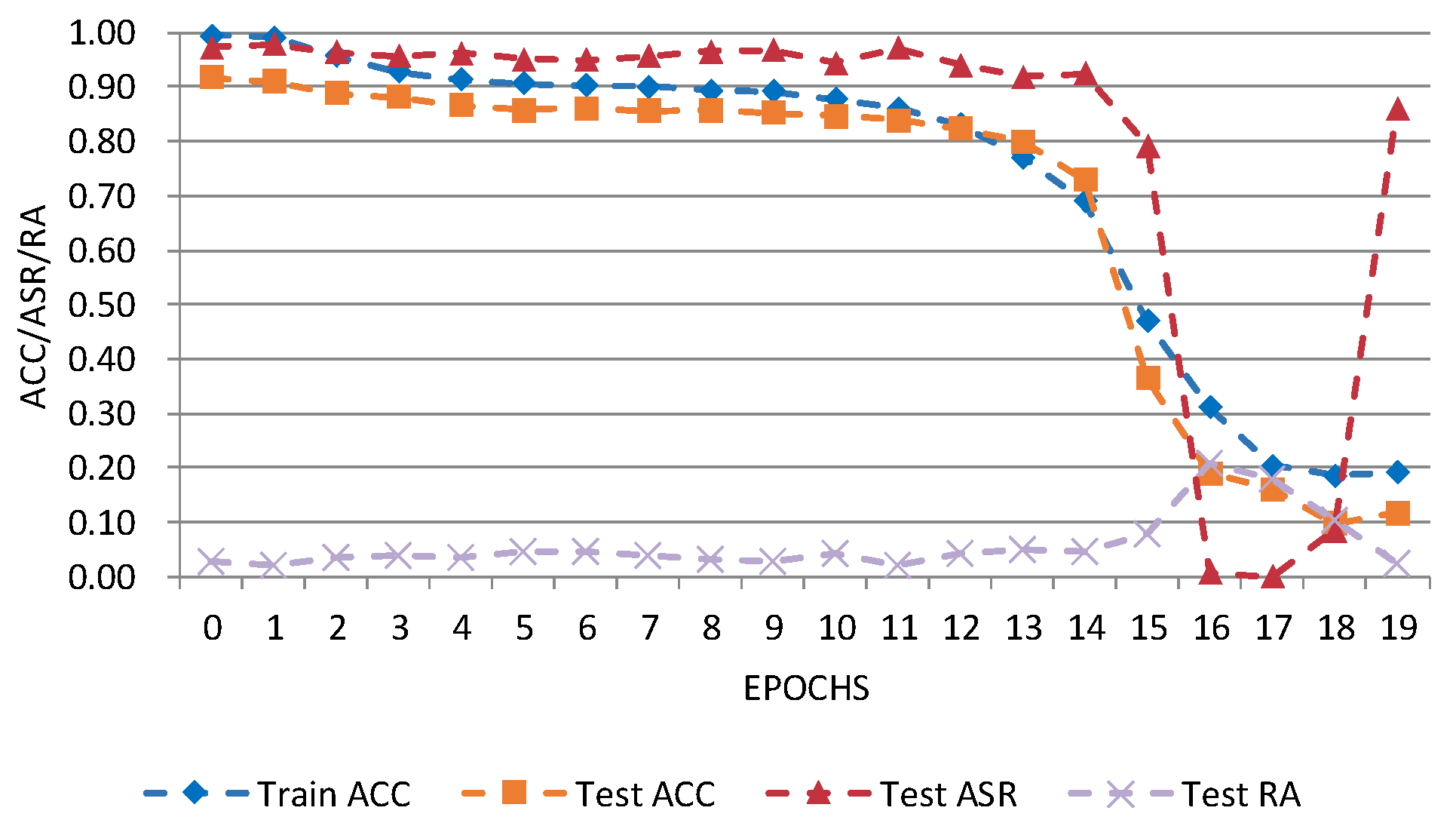

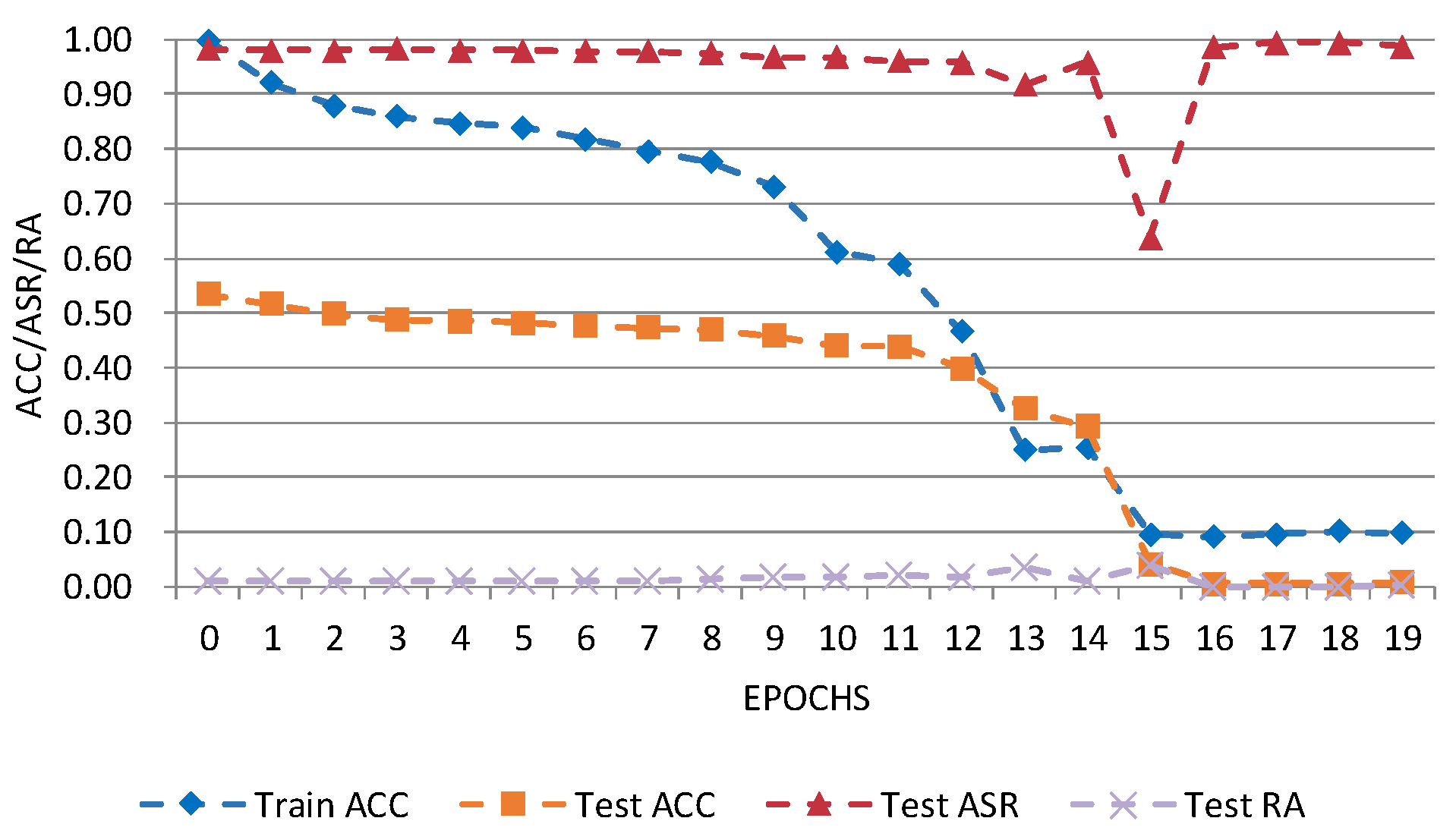

4.6.1. D-BR Defence Experimental Methodology

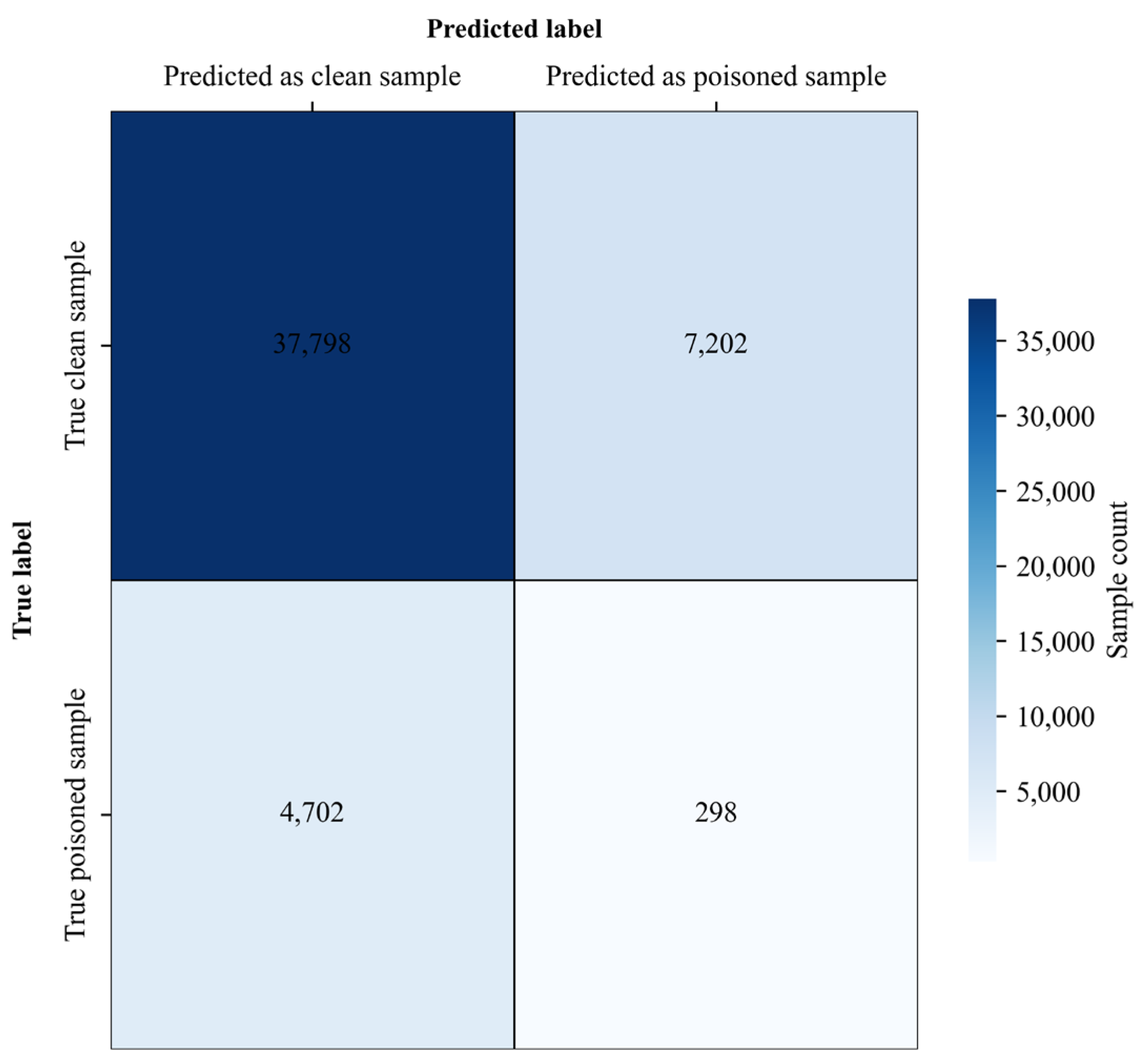

4.6.2. SPECTRE Defence Experimental Methodology

5. Conclusions

6. Limitations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Li, L.; Mu, X.; Li, S.; Peng, H. A review of face recognition technology. IEEE Access 2020, 8, 139110–139120.
- [2] Zablocki, É.; Ben-Younes, H.; Pérez, P.; Cord, M. Explainability of deep vision-based autonomous driving systems: Review and challenges. Int. J. Comput. Vis. 2022, 130, 2425–2452.
- [3] Chowdhary, K.R. Natural language processing. In Fundamentals of Artificial Intelligence; Springer: New Delhi, India, 2020; pp. 603–649.
- [4] Amit, Y.; Felzenszwalb, P.; Girshick, R. Object detection. In Computer Vision: A Reference Guide; Springer International Publishing: Cham, Switzerland, 2021; pp. 875–883.
- [5] Gu, T.; Liu, K.; Dolan-Gavitt, B.; Garg, S. BadNets: Evaluating backdooring attacks on deep neural networks. IEEE Access 2019, 7, 47230–47244.
- [6] Cheddad, A.; Condell, J.; Curran, K.; Mc Kevitt, P. Digital image steganography: Survey and analysis of current methods. Signal Process. 2010, 90, 727–752.
- [7] Li, S.; Xue, M.; Zhao, B.; Zhu, H.; Zhang, X. Invisible backdoor attacks on deep neural networks via steganography and regularization. IEEE Trans. Dependable Secur. Comput. 2020, 18, 2088–2105.
- [8] Yang, B.; Chen, S. A comparative study on local binary pattern (LBP) based face recognition: LBP histogram versus LBP image. Neurocomputing 2013, 120, 365–379.
- [9] Kietzmann, P.; Schmidt, T.C.; Wählisch, M. A guideline on pseudorandom number generation (PRNG) in the IoT. ACM Comput. Surv. (CSUR) 2021, 54, 1–38.
- [10] Zhu, S.; Zhu, C.; Wang, W. A new image encryption algorithm based on chaos and secure hash SHA-256. Entropy 2018, 20, 716.
- [11] Chen, X.; Liu, C.; Li, B.; Lu, K.; Song, D. Targeted backdoor attacks on deep learning systems using data poisoning. arXiv 2017, arXiv:1712.05526.
- [12] Nguyen, A.; Tran, A. WaNet – imperceptible warping-based backdoor attack. arXiv 2021, arXiv:2102.10369.
- [13] Liu, Y.; Ma, X.; Bailey, J.; Lu, F. Reflection backdoor: A natural backdoor attack on deep neural networks. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 182–199.
- [14] Zeng, Y.; Park, W.; Mao, Z.M.; Jia, R. Rethinking the backdoor attacks' triggers: A frequency perspective. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16473–16481.
- [15] Li, Y.; Lyu, X.; Koren, N.; Lyu, L.; Li, B.; Ma, X. Anti-backdoor learning: Training clean models on poisoned data. Adv. Neural Inf. Process. Syst. 2021, 34, 14900–14912.
- [16] Chen, W.; Wu, B.; Wang, H. Effective backdoor defense by exploiting sensitivity of poisoned samples. Adv. Neural Inf. Process. Syst. 2022, 35, 9727–9737.
- [17] Tran, B.; Li, J.; Madry, A. Spectral signatures in backdoor attacks. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018.
- [18] Gao, Y.; Xu, C.; Wang, D.; Chen, S.; Ranasinghe, D.C.; Nepal, S. STRIP: A defence against trojan attacks on deep neural networks. In Proceedings of the 35th Annual Computer Security Applications Conference, San Juan, PR, USA, 9–13 December 2019; pp. 113–125.
- [19] Tang, D.; Wang, X.F.; Tang, H.; Zhang, K. Demon in the variant: Statistical analysis of DNNs for robust backdoor contamination detection. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Virtual Event, 11–13 August 2021; pp. 1541–1558.
- [20] Chou, E.; Tramer, F.; Pellegrino, G. SentiNet: Detecting localized universal attacks against deep learning systems. In Proceedings of the 2020 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 21 May 2020; pp. 48–54.
- [21] Liu, X.; Li, M.; Wang, H.; Hu, S.; Ye, D.; Jin, H.; Wu, L.; Xiao, C. Detecting backdoors during the inference stage based on corruption robustness consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16363–16372.
- [22] Wu, B.; Chen, H.; Zhang, M.; Zhu, Z.; Wei, S.; Yuan, D.; Zhu, M.; Wang, R.; Liu, L.; Shen, C. BackdoorBench: A comprehensive benchmark of backdoor learning. Adv. Neural Inf. Process. Syst. 2022, 35, 10546–10559.
- [23] Tanchenko, A. Visual-PSNR measure of image quality. J. Vis. Commun. Image Represent. 2014, 25, 874–878.
- [24] Bakurov, I.; Buzzelli, M.; Schettini, R.; Castelli, M.; Vanneschi, L. Structural similarity index (SSIM) revisited: A data-driven approach. Expert Syst. Appl. 2022, 189, 116087.
- [25] Jayasumana, S.; Ramalingam, S.; Veit, A.; Glasner, D.; Chakrabarti, A.; Kumar, S. Rethinking FID: Towards a better evaluation metric for image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 9307–9315.
- [26] Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.
- [27] Zhou, Y.; Ni, T.; Lee, W.B.; Zhao, Q. A Survey on Backdoor Threats in Large Language Models (LLMs): Attacks, Defenses, and Evaluation Methods. Trans. Artif. Intell. 2025, 1, 3.
- [28] Yuan, S.; Xu, G.; Li, H.; Zhang, R.; Qian, X.; Jiang, W.; Cao, H.; Zhao, Q. FIGhost: Fluorescent Ink-based Stealthy and Flexible Backdoor Attacks on Physical Traffic Sign Recognition. arXiv 2025, arXiv:2505.12045.

| Categories of Defence Methods | Principle | Defensive Measures |
|---|---|---|
| Model-optimisation-based defence methods | Suppressing backdoor behaviour by adjusting the model training process or parameters | ABL [15], D-BR [16] |
| Data-cleansing-based defence methods | Defence achieved through detecting or purging poisoned samples within training data | SPECTRE [17] |
| Pre-training detection-based defence methods | Identifying backdoors prior to model deployment via feature analysis or perturbation testing | STRIP [18], SCAN [19] |
| Inference-time detection-based defence methods | Real-time monitoring of input or output anomalies during the model inference phase | SentiNet [20], TeCo [21] |

| Method | CIFAR-10 ASR (%) | CIFAR-10 ACC (%) | Tiny-ImageNet ASR (%) | Tiny-ImageNet ACC (%) |
|---|---|---|---|---|
| Clean [22] | 0 | 93.90 | 0 | 57.28 |
| WaNet [12] | 92.41 | 90.11 | 98.35 | 48.03 |
| BadNets [5] | 94.24 | 91.68 | 99.06 | 50.56 |
| ReFool [13] | 94.56 | 92.21 | 98.19 | 54.15 |
| Low Frequency [14] | 99.05 | 93.10 | 98.32 | 55.13 |
| Our Method | 99.38 ± 0.06 | 93.17 ± 0.03 | 98.45 ± 0.05 | 55.17 ± 0.06 |

| Dataset | Model | ASR (%) | ACC (%) |
|---|---|---|---|
| CIFAR-10 | ResNet-18 | 99.38 | 93.17 |
| CIFAR-10 | MobileNetV3-Large | 93.50 | 82.98 |
| Tiny-ImageNet | ResNet-18 | 98.45 | 55.17 |
| Tiny-ImageNet | ConvNeXt-Tiny | 95.83 | 63.52 |

| Dataset | Target (idx) | ASR (%) | ACC (%) |
|---|---|---|---|
| CIFAR-10 | Airplane (0) | 99.38 | 93.17 |
| CIFAR-10 | Automobile (1) | 99.38 | 93.67 |
| CIFAR-10 | Frog (6) | 99.37 | 93.19 |
| Tiny-ImageNet | Egyptian cat (0) | 98.45 | 55.17 |
| Tiny-ImageNet | Tabby (66) | 98.44 | 55.31 |
| Tiny-ImageNet | Teapot (71) | 98.46 | 55.32 |

| Dataset | Pratio | ASR (%) |
|---|---|---|
| CIFAR-10 | 0.01 | 5.44 |
| CIFAR-10 | 0.02 | 7.12 |
| CIFAR-10 | 0.05 | 98.44 |
| CIFAR-10 | 0.1 | 99.38 |
| Tiny-ImageNet | 0.01 | 1.58 |
| Tiny-ImageNet | 0.02 | 4.24 |
| Tiny-ImageNet | 0.05 | 93.85 |
| Tiny-ImageNet | 0.1 | 98.45 |
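As a rough illustration of what the poisoning ratio (Pratio) controls, the sketch below selects the subset of training indices to poison. The function name and the uniform-sampling strategy are assumptions for illustration; the training-set sizes used in the example (50,000 for CIFAR-10, 100,000 for Tiny-ImageNet) are the standard sizes of those datasets.

```python
import numpy as np

def choose_poison_indices(num_samples: int, pratio: float, seed: int = 1234) -> np.ndarray:
    """Uniformly sample round(pratio * num_samples) distinct indices to poison."""
    rng = np.random.default_rng(seed)
    count = int(round(num_samples * pratio))
    return np.sort(rng.choice(num_samples, size=count, replace=False))

# Number of poisoned samples at the ratios in the table above.
cifar_counts = {p: len(choose_poison_indices(50_000, p)) for p in (0.01, 0.02, 0.05, 0.1)}
tiny_counts = {p: len(choose_poison_indices(100_000, p)) for p in (0.01, 0.02, 0.05, 0.1)}
```

At a ratio of 0.1 this gives 5000 poisoned CIFAR-10 images and 10,000 poisoned Tiny-ImageNet images.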

| Method | CIFAR-10 PSNR | CIFAR-10 SSIM | CIFAR-10 FID | CIFAR-10 LPIPS | Tiny-ImageNet PSNR | Tiny-ImageNet SSIM | Tiny-ImageNet FID | Tiny-ImageNet LPIPS |
|---|---|---|---|---|---|---|---|---|
| WaNet | 31.70 | 0.9741 | 0.0241 | 0.0043 | 26.56 | 0.9149 | 1.2376 | 0.0554 |
| ReFool | 15.35 | 0.7792 | 1.4412 | 0.0514 | 15.82 | 0.7724 | 2.7029 | 0.1466 |
| Low Frequency | 22.47 | 0.9660 | 2.8655 | 0.0526 | 22.18 | 0.9625 | 3.1362 | 0.1252 |
| Our Method | 36.23 | 0.9804 | 0.0135 | 0.0010 | 37.89 | 0.9871 | 0.0615 | 0.0037 |
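For reference, PSNR can be computed as in the short sketch below, which uses the standard definition for 8-bit images (the function name is illustrative). The example also works out a worst-case bound: if a 16 × 16 region of a 32 × 32 image has every value shifted by the maximum 4-bit LSB change of 15, the mean squared error is 225 × 256 / 1024 = 56.25, so the PSNR cannot drop below roughly 30.6 dB. The 36.23 dB reported for CIFAR-10 is consistent with that bound.

```python
import numpy as np

def psnr(clean: np.ndarray, poisoned: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = clean.astype(np.float64) - poisoned.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

# Worst case for 4-bit embedding in a 16 x 16 region of a 32 x 32 image.
clean = np.zeros((32, 32), dtype=np.uint8)
worst = clean.copy()
worst[:16, :16] = 15
worst_case_db = psnr(clean, worst)  # about 30.63 dB
```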

| Embedding Type | ASR (%) | ACC (%) | Convergence Epoch |
|---|---|---|---|
| Dynamic | 99.38 | 93.17 | 25 |
| Static | 99.73 | 93.11 | 18 |

| Trigger Image Dimensions (CIFAR-10) | ASR (%) | ACC (%) | SSIM |
|---|---|---|---|
| 8 × 8 | 92.40 | 92.52 | 0.9932 |
| 10 × 10 | 97.61 | 93.31 | 0.9923 |
| 12 × 12 | 98.56 | 93.39 | 0.9894 |
| 14 × 14 | 98.93 | 93.23 | 0.9843 |
| 16 × 16 | 99.38 | 93.17 | 0.9804 |

| Trigger Image Dimensions (Tiny-ImageNet) | ASR (%) | ACC (%) | SSIM |
|---|---|---|---|
| 16 × 16 | 98.05 | 55.12 | 0.9963 |
| 20 × 20 | 98.30 | 55.58 | 0.9944 |
| 24 × 24 | 98.41 | 55.11 | 0.9918 |
| 28 × 28 | 98.45 | 55.17 | 0.9871 |
| 32 × 32 | 98.45 | 55.21 | 0.9834 |

| Dataset | Bit | ASR (%) | ACC (%) | SSIM |
|---|---|---|---|---|
| CIFAR-10 | 1 | 7.82 | 80.11 | 0.9997 |
| CIFAR-10 | 2 | 9.12 | 78.87 | 0.9987 |
| CIFAR-10 | 3 | 98.16 | 91.72 | 0.9945 |
| CIFAR-10 | 4 | 99.38 | 93.17 | 0.9804 |
| Tiny-ImageNet | 1 | 12.24 | 50.79 | 0.9995 |
| Tiny-ImageNet | 2 | 15.97 | 49.56 | 0.9983 |
| Tiny-ImageNet | 3 | 97.94 | 54.77 | 0.9962 |
| Tiny-ImageNet | 4 | 98.45 | 55.17 | 0.9871 |

| Dataset | Variant | LBP | PRNG | ASR (%) | ACC (%) | ASR (%) Under D-BR | FNR (SPECTRE) |
|---|---|---|---|---|---|---|---|
| CIFAR-10 | V1 | ✕ | ✓ | 98.43 | 93.45 | 6.96 | 0.9584 |
| CIFAR-10 | V2 | ✓ | ✕ | 99.73 | 93.14 | 7.18 | 0.8359 |
| CIFAR-10 | V3 | ✓ | ✓ | 99.38 | 93.17 | 85.94 | 0.9404 |
| Tiny-ImageNet | V1 | ✕ | ✓ | 98.41 | 55.26 | 96.45 | 0.8454 |
| Tiny-ImageNet | V2 | ✓ | ✕ | 99.14 | 55.21 | 10.43 | 0.8072 |
| Tiny-ImageNet | V3 | ✓ | ✓ | 98.45 | 55.17 | 98.74 | 0.8944 |

| Dataset | Method | ACC (%) | ASR (%) | RA |
|---|---|---|---|---|
| CIFAR-10 | WaNet | 66.42 | 89.53 | 0.0521 |
| CIFAR-10 | BadNets | 36.84 | 9.42 | 0.3197 |
| CIFAR-10 | ReFool | 9.98 | 0 | 0.1110 |
| CIFAR-10 | Low Frequency | 12.33 | 76.28 | 0.0202 |
| CIFAR-10 | Our Method | 11.75 | 85.94 | 0.0236 |
| Tiny-ImageNet | WaNet | 18.22 | 84.12 | 0.0381 |
| Tiny-ImageNet | BadNets | 34.27 | 8.16 | 0.3051 |
| Tiny-ImageNet | ReFool | 0.50 | 96.03 | 0.0002 |
| Tiny-ImageNet | Low Frequency | 0.52 | 98.10 | 0.0004 |
| Tiny-ImageNet | Our Method | 0.70 | 98.74 | 0.0021 |

| Dataset | Method | TN | FP | FN | TP | TPR | FNR | FPR |
|---|---|---|---|---|---|---|---|---|
| CIFAR-10 | WaNet | 30,429 | 5507 | 12,071 | 1993 | 0.1417 | 0.8583 | 0.1532 |
| CIFAR-10 | ReFool | 37,938 | 7062 | 4562 | 438 | 0.0876 | 0.9124 | 0.1569 |
| CIFAR-10 | BadNets | 37,983 | 7017 | 4517 | 483 | 0.0966 | 0.9034 | 0.1559 |
| CIFAR-10 | Low Frequency | 37,867 | 7133 | 4633 | 367 | 0.0734 | 0.9266 | 0.1585 |
| CIFAR-10 | Our Method | 37,798 | 7202 | 4702 | 298 | 0.0596 | 0.9404 | 0.1600 |
| Tiny-ImageNet | WaNet | 60,519 | 11,356 | 24,418 | 3707 | 0.1318 | 0.8682 | 0.1579 |
| Tiny-ImageNet | ReFool | 76,075 | 13,925 | 8945 | 1055 | 0.1055 | 0.8945 | 0.1547 |
| Tiny-ImageNet | BadNets | 76,426 | 13,574 | 8594 | 1406 | 0.1406 | 0.8594 | 0.1508 |
| Tiny-ImageNet | Low Frequency | 76,076 | 13,924 | 8944 | 1056 | 0.1056 | 0.8944 | 0.1547 |
| Tiny-ImageNet | Our Method | 76,076 | 13,924 | 8944 | 1056 | 0.1056 | 0.8944 | 0.1547 |
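The rate columns in the table above follow from the confusion counts by the usual definitions, with a poisoned sample counted as a positive. The sketch below (the function name is illustrative) recomputes them for the CIFAR-10 row of the proposed method.

```python
def detection_rates(tn: int, fp: int, fn: int, tp: int) -> dict:
    """Detection rates from confusion counts (positive = poisoned sample)."""
    return {
        "TPR": tp / (tp + fn),  # poisoned samples that were flagged
        "FNR": fn / (tp + fn),  # poisoned samples that were missed
        "FPR": fp / (fp + tn),  # clean samples wrongly flagged
    }

# CIFAR-10, "Our Method" row: TN = 37,798, FP = 7202, FN = 4702, TP = 298.
ours_cifar = detection_rates(tn=37_798, fp=7_202, fn=4_702, tp=298)
# TPR = 298 / 5000 = 0.0596, FNR = 4702 / 5000 = 0.9404, FPR = 7202 / 45000 ≈ 0.1600
```

A higher FNR means the defence misses more poisoned samples, so from the attacker's perspective a larger value in that column indicates better evasion.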

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, Z.; Li, F.; Peng, J. LBP-LSB Co-Optimisation for Dynamic Unseen Backdoor Attacks. Electronics 2025, 14, 4216. https://doi.org/10.3390/electronics14214216
