Abstract

Contractual agreements often contain unfair clauses that impose unjust burdens on one party. ContractNerd leverages advanced Large Language Models (LLMs) to analyze contractual agreements and identify issues across four categories: missing clauses, unenforceable clauses, legally sound clauses, and legal but risky clauses. Using a structured methodology that integrates LLM-based clause comparison, enforceability checks against jurisdiction-specific regulations, and assessments of risk-inducing traits, ContractNerd provides a comprehensive analysis of contractual terms. To evaluate the tool’s effectiveness, we compare its analyses with those of existing platforms on rental clauses that have led to court litigation. ContractNerd’s interface helps users (both drafters and signing parties) navigate complex contracts, offering actionable insights that flag legal risks and potential disputes.

1. Introduction

Power imbalances in contractual agreements often result in second parties, notably prospective tenants and employees, facing unfair terms. Large corporations, employers, and landlords may use their legal resources to include unreasonable clauses, leaving second parties vulnerable to legal penalties for legitimate actions (e.g., through overly restrictive tenant covenants). To address this issue, ContractNerd uses Large Language Models (LLMs), LLaMA in the current prototype, to analyze contractual agreements and alert users to potentially problematic clauses.

The goals are to (i) alert contractual agreement authors to clauses that might be unenforceable or ambiguous and (ii) alert contract recipients to missing clauses or biased clauses. We expect that its use may help avoid unnecessary disputes.

1.1. ContractNerd in Action

ContractNerd is accessible at https://contractnerd-production.up.railway.app (accessed on 15 October 2025 and ongoing).

A reader of a rental agreement interacts with ContractNerd as follows:

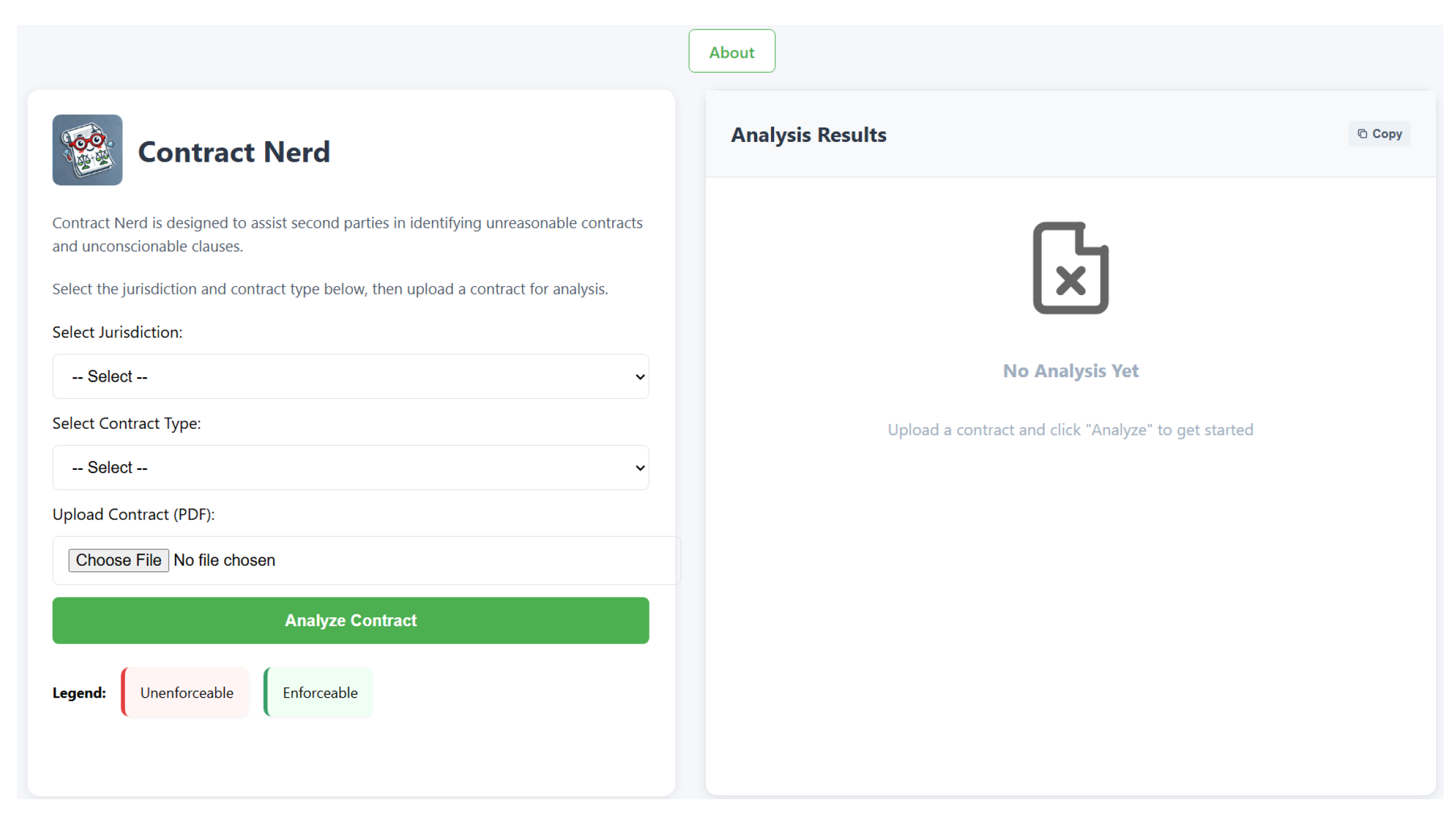

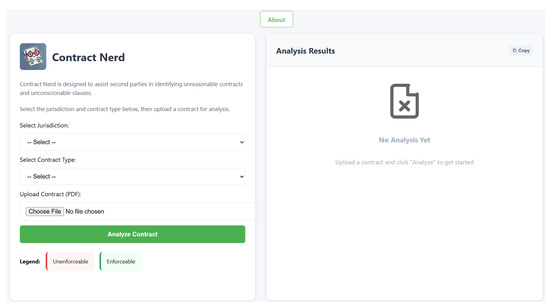

- The user starts at the landing page shown in Figure 1.

- The user selects a contract type (e.g., rental agreement or employment agreement) and a jurisdiction (e.g., New York), then uploads a contractual agreement in PDF format, as in Figure 1.

Figure 1. Landing page for ContractNerd user interface.

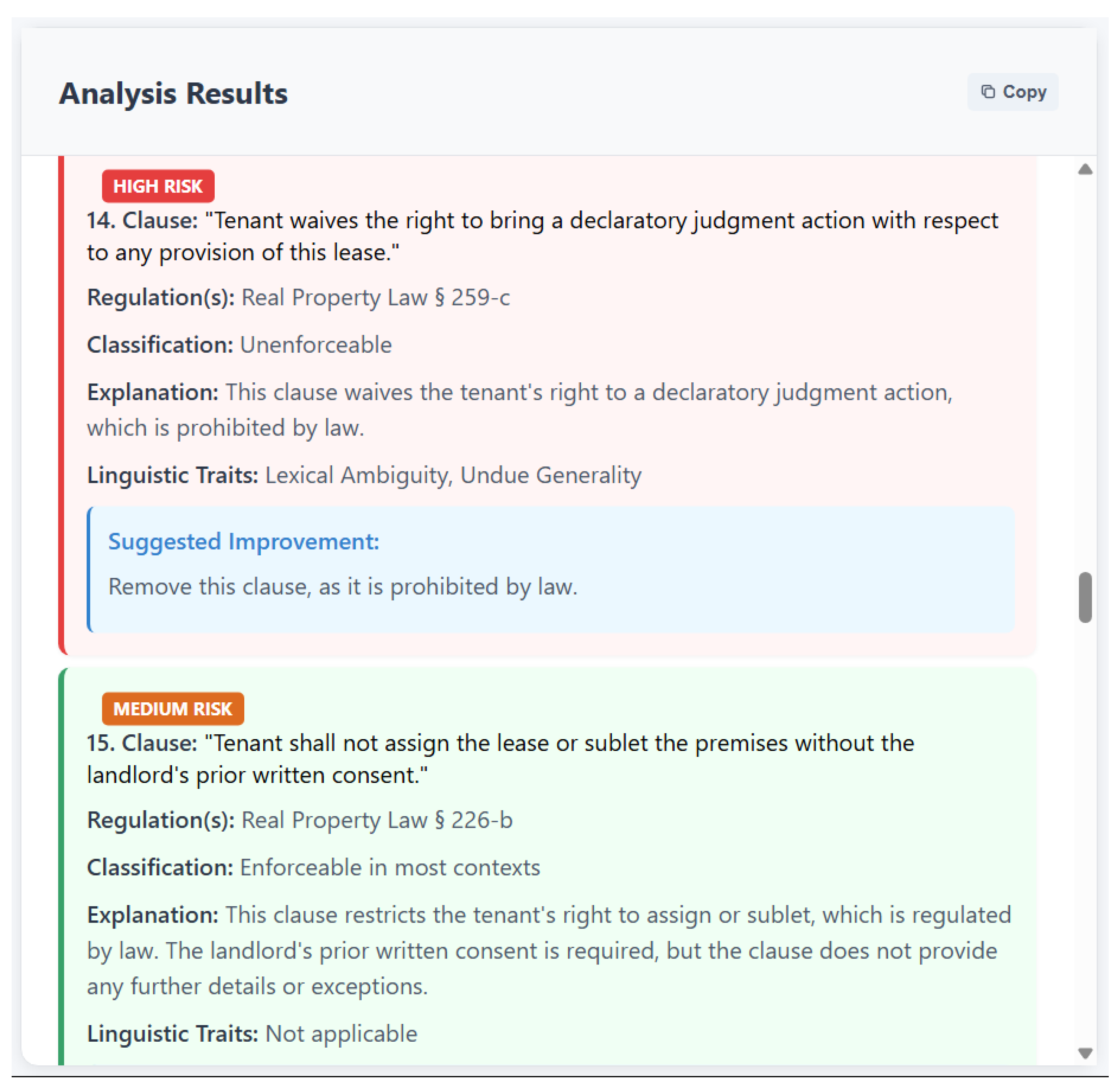

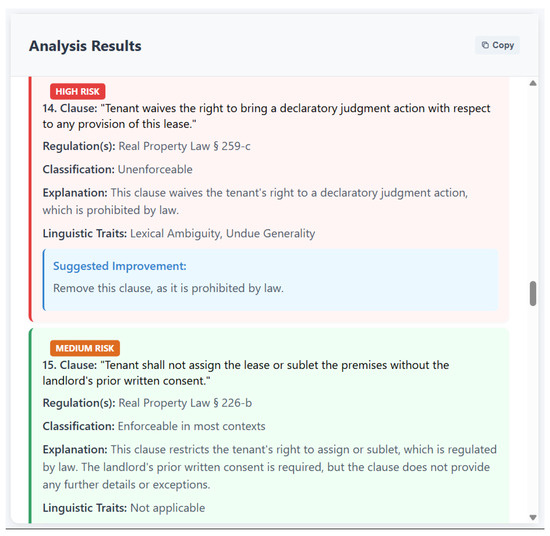

- The user receives an analysis of the agreement (here, a rental agreement), as shown in Figure 2.

Figure 2. Results displayed after clause analysis.

Based on the prompts in Section 4.2, the report flags clauses as missing, unenforceable, legally risky, or legally sound. The goal is to give rental agreement recipients the ability to interpret the results and object to questionable clauses.

Ambiguous Clauses

An example of an ambiguous clause that the system would typically flag can be seen below:

“Tenant must provide written notice of intent to vacate at a reasonable time.”

This is ambiguous because “reasonable” is undefined.

Unenforceable Clauses

Examples of unenforceable clauses include:

- “Tenant shall not withhold rent for any reason without the landlord’s prior written consent.” This purports to strip the tenant of remedies the law provides, such as withholding rent when the landlord breaches the warranty of habitability.

- “Employee agrees not to work for any business in the United States for two years following termination.” In many jurisdictions, such as California, this is unenforceable under statutes prohibiting broad non-compete agreements. Even in states that allow non-competes, the scope here is excessively broad in geography and duration, making it likely invalid.

Missing Clauses

A missing clause refers to an essential legal or procedural component that is expected in a standard agreement but is absent. For example:

- No language is provided detailing the procedure for returning the tenant’s security deposit upon termination of the lease.

- The agreement contains no procedure for the employee to file grievances or address workplace disputes.

One-sided Clauses

An example of a one-sided clause:

“Tenant shall be responsible for all repairs, regardless of cause.”

This shifts unreasonable responsibility onto the tenant, including damage due to structural issues or natural wear and tear, which are typically the landlord’s responsibility.

ContractNerd for Rental Agreement Writers

ContractNerd is designed to support both the recipients (readers) of contractual agreements and the writers who draft them. Consider again the first unenforceable clause above:

“Tenant shall not withhold rent for any reason without the landlord’s prior written consent.”

ContractNerd suggests the following rewrite to make the clause enforceable:

“Tenant shall not withhold rent except in cases where the Landlord has breached the warranty of habitability, as provided by law.”

1.2. Summary of Contributions

The contributions of this paper and our system ContractNerd are as follows:

- The presentation of a layman-friendly system to analyze contracts in order to detect biased and/or unenforceable clauses along with explanations.

- A detailed explanation of the workflow and prompts used to achieve those goals.

- A set of experiments that evaluate various rental agreement analysis tools, including ContractNerd, based on user feedback and on court rulings in cases retrieved from Thomson Reuters WestLaw.

- Examples of the application of ContractNerd for employment contracts.

- A framework for builders of similar document-analysis systems.

2. Related Work

ContractNerd builds on two major research threads: document-level evaluation using Large Language Models (LLMs) and contract-specific legal analysis tools. Our system combines aspects of both while integrating retrieval-augmented generation (RAG) for context-aware clause assessment.

Document-level reasoning using LLMs: Recent work has explored how LLMs can be used to evaluate and reason over long documents. For instance, RAGSys [1] enables real-time self-improvement by storing prior LLM interactions to iteratively enhance retrieval and generation quality. Similarly, DeepRAG [2] separates reasoning and retrieval into distinct phases, supporting multi-hop inference over complex documents.

A wide array of systems now incorporate RAG for specialized applications. QA-RAG [3] targets pharmaceutical compliance, improving accuracy by incorporating structured regulatory content. CaseGPT [4] enhances clinical decision-making by generating medical case reasoning informed by similar past cases. Microsoft’s CoRAG [5] takes this further by dynamically reformulating queries in response to intermediate reasoning steps. VideoRAG [6] demonstrates the potential of RAG beyond text by retrieving and integrating multimodal (video) context into LLM outputs.

Contract-Review Systems: There is a growing interest in using LLMs for contract review. Systems like goHeather [7], YesChatContractChecker [8], and Legly [9] automate contract clause identification and enforceability assessment, though often without jurisdiction-aware context. Gao et al. [10] and Rengaraju [11] also analyze rental agreements using LLMs; these works either require model retraining or do not incorporate retrieval-based reasoning.

3. Materials and Methods

3.1. Process Workflow

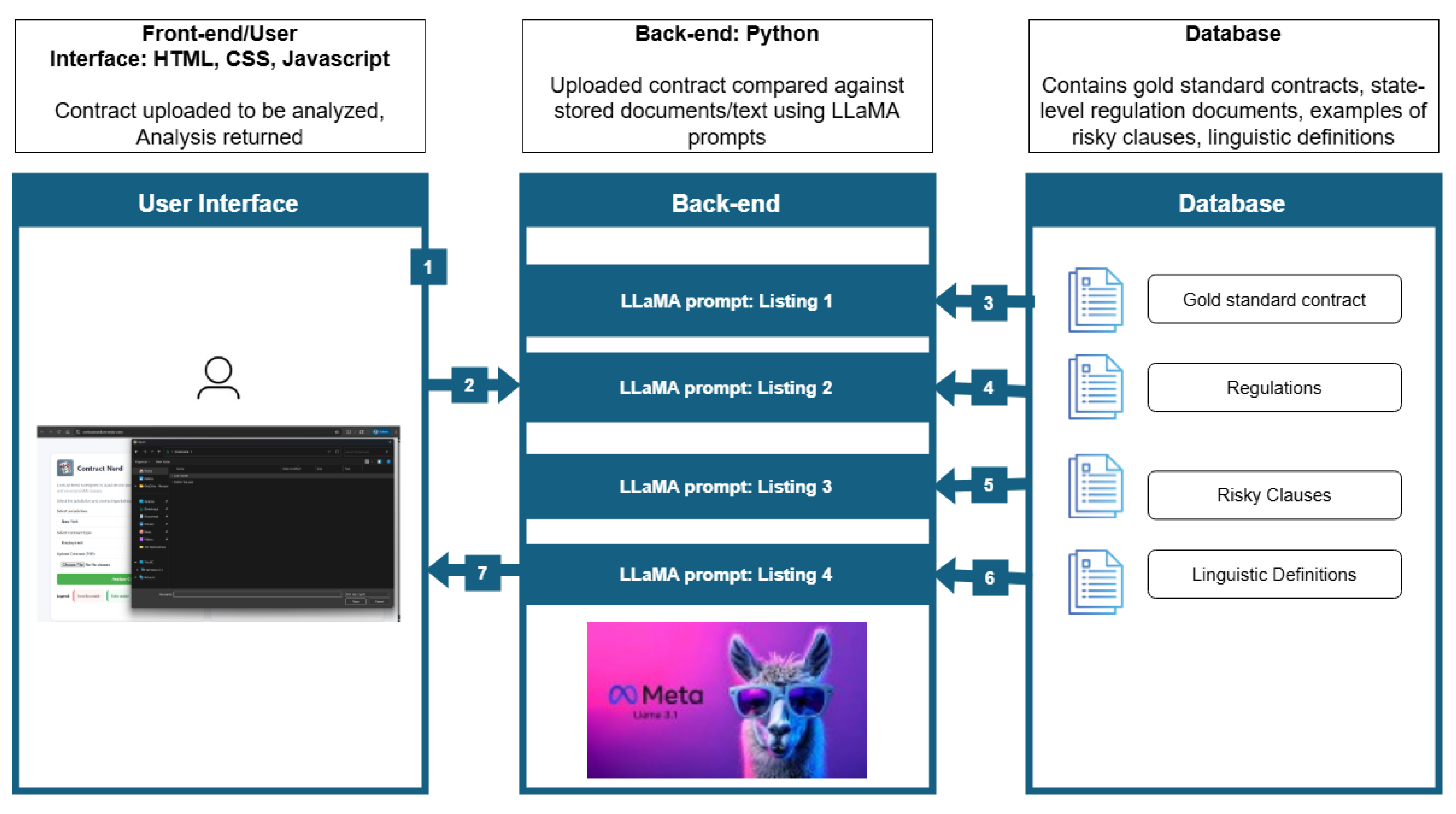

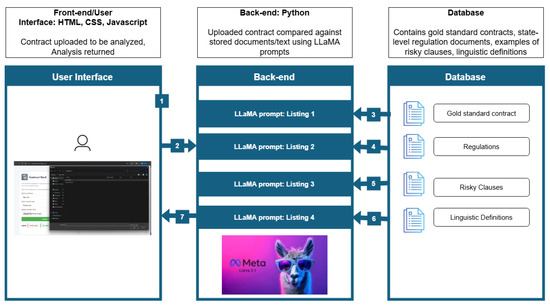

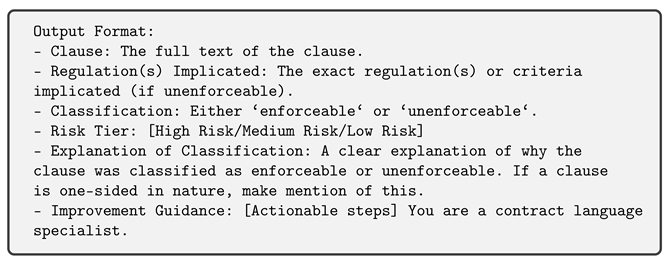

The processing components, shown in Figure 3, support a three-step interface: ingest, process with prompts, and output.

Figure 3. Project workflow consists of three components: (i) ingest document, (ii) apply prompts based on the document and the rules, (iii) deliver output.
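The three-step workflow can be sketched as a small Python pipeline. This is a minimal sketch under stated assumptions, not ContractNerd's code: the clause splitter (one clause per non-empty line), the prompt template placeholders `{clauses}` and `{jurisdiction}`, and the `llm` callable are all hypothetical, and PDF text extraction is assumed to have happened upstream.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in for the model call: prompt text in, completion text out.
LLM = Callable[[str], str]


@dataclass
class Report:
    contract_type: str
    jurisdiction: str
    findings: Dict[str, str] = field(default_factory=dict)


def ingest(raw_text: str) -> List[str]:
    """Step (i): split already-extracted contract text into clauses (one per non-empty line)."""
    return [line.strip() for line in raw_text.splitlines() if line.strip()]


def apply_prompts(clauses: List[str], jurisdiction: str,
                  prompts: Dict[str, str], llm: LLM) -> Dict[str, str]:
    """Step (ii): fill each prompt template with the clauses and jurisdiction, then query the model."""
    body = "\n".join(f'Clause: "{c}"' for c in clauses)
    return {name: llm(template.format(clauses=body, jurisdiction=jurisdiction))
            for name, template in prompts.items()}


def run_pipeline(raw_text: str, contract_type: str, jurisdiction: str,
                 prompts: Dict[str, str], llm: LLM) -> Report:
    clauses = ingest(raw_text)
    findings = apply_prompts(clauses, jurisdiction, prompts, llm)
    # Step (iii): deliver output as one report keyed by prompt name.
    return Report(contract_type, jurisdiction, findings)
```

With a stub model such as `lambda p: p.upper()`, `run_pipeline` can be exercised end to end before a real LLM client is wired in.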

3.2. Supporting Data

This project used the following legal document libraries to help in analyzing rental agreements. The following sources provided examples of clauses, regulations, and details of lawsuits:

- Thomson Reuters WestLaw (https://signon.thomsonreuters.com/?productid=CBT&lr=0&culture=en-US&returnto=https%3a%2f%2f1.next.westlaw.com%2fCosi%2fSignOn&tracetoken=0514251349100j4DoQMa9n77sc_TtFHKFtoxdL2lf5mC6WbEDEbRTvUDeMiIF4-T4MoN9gsShPYAfygKn98JnpxOzCEndUOqRDFEM69ODsV7NIaRjeKgogmPjncySCp8PC27VSGx6iTi2aOsdA_OY5HsdxugLvQxGZ52DPBHs6r_SDLiJpUi_MHAyKUOqzn5vs8awNMVVxeD4DFWHDa-RTsBpE_2KPVvr_0FFHTmSapSD37XyNhTNgvK-ULJ7KAI_rCnn30pLg5EB2_yfoxAPKKYaJSNdsLdo28rE04T78jN1nySl6kLlKkD9Sll0JBfKqWtNEoxPZ00zi6oamOWzatGgR7MYg01RXgEKizCQ6dVtfZHz6ni6MV-TeuGU71YYS2S4KHNiVSqk&bhcp=1): A repository offering ongoing access to detailed records of court proceedings, consulted during the experimental phase of the study. Last accessed on 14 May 2025.

- Justia (https://contracts.justia.com/contract-clauses/): Serves as a reference for standard rental agreement language, showcasing examples of frequent clauses such as “Waiver.” Last accessed on 1 November 2024.

- Afterpattern (https://afterpattern.com/clauses): Serves as a reference for standard rental agreement language, showcasing examples of frequent clauses such as “Acceleration of Rent” and “Landlord Indemnification.” Last accessed on 1 November 2024.

- Agile Legal (https://www.agilelegal.com/contract-clause-library): Includes a comprehensive library of legal clauses, offering clear explanations of commonly used terms such as “Amendment or Waiver” and “Limitation of Liability.” Last accessed on 1 November 2024.

- Sample rental agreements from official State government websites (https://nystatemls.com/documents/forms/NYStateMLS_Residential_Lease_Agreement.pdf), used to identify key provisions in lease agreements. Last accessed on 1 November 2024.

- PDF documents (https://ag.ny.gov/sites/default/files/tenants_rights.pdf) detailing state-level regulations. Last accessed on 1 November 2024.

4. Implementation

This section first describes the Large Language Model used and its parameter settings. Next, we describe the prompts and their purposes across two main contract types: lease agreements and employment agreements.

4.1. LLM Platform and Hyperparameter Settings

The model’s details are specified below:

- Model: LLaMA 3.3 70B

- Prompt Strategy: Zero-shot and few-shot learning

- Temperature = 0: Makes decoding effectively deterministic, which is vital for structured and accurate clause evaluation.

- Top_p = 1 and Top_k = 1: Restricts selection to the single most probable token at each step (greedy decoding), removing sampling randomness.

- Max_tokens = 4096: Caps the length of the generated output, leaving room for detailed analyses of large and complex contracts, particularly rental agreements.
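To make the effect of these settings concrete, the sketch below implements generic temperature, top-k, and top-p (nucleus) selection over a toy logit table. It illustrates the standard technique and is not the serving stack ContractNerd runs on; the function name and the toy labels are hypothetical. With `temperature = 0` or `top_k = 1`, the choice collapses to the argmax, which is why the settings above remove sampling randomness (production inference can still show small numerical nondeterminism).

```python
import math
import random
from typing import Dict, Optional


def next_token(logits: Dict[str, float], temperature: float = 0.0,
               top_k: Optional[int] = None, top_p: float = 1.0,
               rng: Optional[random.Random] = None) -> str:
    """Choose the next token from raw logits with temperature, top-k and top-p filtering."""
    ranked = sorted(logits, key=logits.get, reverse=True)
    if temperature == 0.0 or top_k == 1:
        return ranked[0]  # greedy decoding: always the single most probable token
    if top_k is not None:
        ranked = ranked[:top_k]
    # Softmax over the surviving tokens; subtracting the max keeps exp() stable.
    best = logits[ranked[0]]
    weights = [math.exp((logits[t] - best) / temperature) for t in ranked]
    total = sum(weights)
    # Nucleus filtering: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, w in zip(ranked, weights):
        kept.append((tok, w))
        cumulative += w / total
        if cumulative >= top_p:
            break
    rng = rng or random.Random()
    tokens, kept_weights = zip(*kept)
    return rng.choices(tokens, weights=kept_weights, k=1)[0]
```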

4.2. Prompt Strategy

ContractNerd focuses on four principal sources of clause problems:

- Clauses that are missing but are critical for contract completeness.

- Clauses that violate local laws, which may render them unenforceable.

- Biased clauses that pose risks to the second party and therefore may lead to litigation.

- Clauses that contain linguistic flaws.

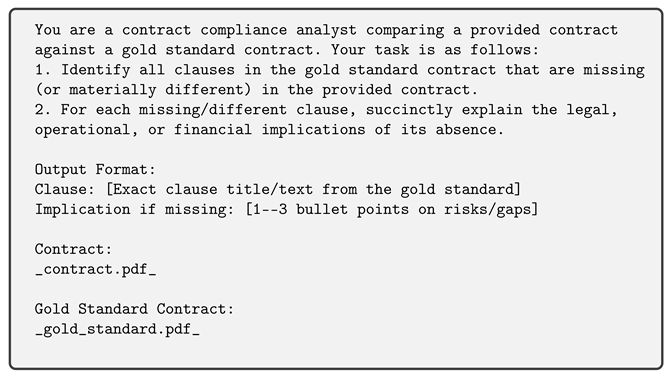

4.3. Missing Clauses

ContractNerd’s identification of missing clauses relies on a collection of “complete rental agreements,” such as the NY State contract database [12]. Using the prompt in Listing 1, the LLaMA model compares the uploaded agreement against this standard contract to flag omissions.

Listing 1. Prompt used to identify missing clauses whose absence renders a contract incomplete.
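ContractNerd performs this comparison with the LLM via Listing 1. As a rough illustration of the underlying idea (comparing an upload against a checklist distilled from a reference agreement), the sketch below uses plain keyword matching instead of a model. The checklist entries and keywords are hypothetical; such a deterministic check could at most serve as a cheap sanity check alongside the LLM, since it cannot recognize paraphrased clauses.

```python
from typing import Dict, List, Tuple

# Hypothetical checklist distilled from a reference ("complete") rental agreement:
# clause name -> keywords whose presence suggests the clause exists.
STANDARD_CLAUSES: Dict[str, Tuple[str, ...]] = {
    "Rent amount and due date": ("rent",),
    "Security deposit": ("security deposit",),
    "Lease term": ("lease term", "term of this lease"),
    "Repairs and maintenance": ("repair", "maintenance"),
    "Late fees": ("late fee", "late charge"),
}


def find_missing_clauses(contract_text: str,
                         standard: Dict[str, Tuple[str, ...]] = STANDARD_CLAUSES) -> List[str]:
    """Return checklist entries none of whose keywords occur in the contract (case-insensitive)."""
    text = contract_text.lower()
    return [name for name, keywords in standard.items()
            if not any(keyword in text for keyword in keywords)]
```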

4.4. Enforceability

ContractNerd determines the enforceability of each clause by comparing it against stored regulations.

- The model evaluates each clause’s compliance with legal standards and assigns a confidence score (low, medium, high).

- Clauses with high confidence of non-compliance are flagged and reported to the user.

- Clauses with low/medium confidence undergo further scrutiny in subsequent stages.
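The three routing rules above can be written as a small function. This is a sketch: the `ComplianceResult` record and its fields are hypothetical names for whatever the enforceability prompt returns.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Confidence(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class ComplianceResult:
    clause: str
    non_compliant: bool      # the model judges that the clause violates a stored regulation
    confidence: Confidence   # the model's confidence in that judgment


def route(results: List[ComplianceResult]) -> Tuple[List[str], List[str]]:
    """Return (clauses reported to the user now, clauses sent to later stages)."""
    flagged, needs_review = [], []
    for r in results:
        if r.confidence is Confidence.HIGH:
            if r.non_compliant:
                flagged.append(r.clause)  # high-confidence violation: report immediately
        else:
            needs_review.append(r.clause)  # low/medium confidence: scrutinize further
    return flagged, needs_review
```

High-confidence compliant clauses pass through without a flag, matching the rules as stated.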

The LLaMA prompts follow a template for determining compliance, with the goal of achieving context-aware, jurisdiction-specific evaluations. Listing 2 shows the prompt used to compare agreements against the regulations of the relevant jurisdiction.

We designed certain rules in the prompt template to support accurate and legally meaningful assessments:

- Rule 1: Always include the exact clause text in quotes after “Clause:”. This ensures that the model’s analysis is anchored to the precise language of the contract, preventing misinterpretation or loss of context that could arise from paraphrasing.

- Rule 4: Never combine clauses; analyze each separately. Separating clauses allows for clause-level risk and enforceability assessment, avoiding false positives or negatives that could result from aggregated or conflated language.

- Rule 6: Preserve all numbers and names exactly as written. Numerical and named details often carry legal significance (e.g., deadlines, amounts, party identifiers). Preserving them ensures the model evaluates compliance accurately with respect to the intended contract specifications.

The other rules support consistency and formatting, but the above three are particularly important for maintaining the legal integrity and interpretability of the model’s outputs.
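Because Rules 1, 4, and 6 are stated in terms of the output text, they lend themselves to an automatic post-check. The sketch below is hypothetical (the paper does not say such a check exists); it assumes the model emits lines of the form `Clause: "..."` per Rule 1 and that the contract has already been split into clauses that contain no straight double quotes.

```python
import re
from typing import List

# Matches the quoted clause text that Rule 1 requires after "Clause:".
CLAUSE_LINE = re.compile(r'Clause:\s*"([^"]*)"')


def check_rules(contract_clauses: List[str], model_output: str) -> List[str]:
    """Return a list of rule violations found in the model output (empty means none found)."""
    problems = []
    quoted = CLAUSE_LINE.findall(model_output)
    if not quoted:
        problems.append('Rule 1: no Clause: "..." entries found')
    for q in quoted:
        if not q.strip():
            problems.append("Rule 1: empty clause text")
        elif sum(1 for c in contract_clauses if c in q) > 1:
            # One quoted entry contains two or more whole clauses from the contract.
            problems.append(f"Rule 4: combines several clauses: {q!r}")
        elif not any(q in c for c in contract_clauses):
            # Paraphrased text or altered numbers/names fail the verbatim check.
            problems.append(f"Rules 1/6: not verbatim: {q!r}")
    return problems
```

A changed amount such as “$75” for “$50” fails the verbatim test, which is how the sketch covers Rule 6.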

Listing 2. Prompt to evaluate a contract with respect to regulations to determine compliance.

4.5. Riskiness

We define “riskiness” at the clause level using a structured framework grounded in enforceability and legal vulnerability. Each clause is assessed along two dimensions as in Listing 3:

1. Risk Tier Assignment:

   - High Risk: The clause clearly violates applicable law in commonly occurring scenarios.

   - Medium Risk: The clause may raise enforceability concerns depending on the context, jurisdiction, or specific terms of enforcement.

   - Low Risk: The clause is generally compliant and enforceable but may still require monitoring due to evolving interpretations or narrow exceptions.

2. Contextual Enforceability: Each clause is further classified as one of the following:

   - Enforceable in [specific contexts]

   - Unenforceable under [specific conditions]

This tiered classification aims to provide both a legal and practical assessment of a clause’s vulnerability. High-risk clauses tend to be one-sided, overbroad, or contrary to statutory protections; medium-risk clauses are more defensible but may be challenged under certain factual or procedural circumstances; and low-risk clauses typically align with standard legal practice.
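The two dimensions map naturally onto a small record type. The sketch below assumes a hypothetical one-line output format, `Risk: <tier> | Enforceable in [...]` or `Risk: <tier> | Unenforceable under [...]`; the actual output wording of Listing 3 is not reproduced here.

```python
import re
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    HIGH = "High"
    MEDIUM = "Medium"
    LOW = "Low"


@dataclass(frozen=True)
class RiskAssessment:
    tier: RiskTier
    enforceable: bool  # True for "Enforceable in [...]", False for "Unenforceable under [...]"
    context: str       # the bracketed contexts or conditions


# Hypothetical output format, e.g. "Risk: High | Unenforceable under [tenant is denied repairs]".
ASSESSMENT_LINE = re.compile(
    r"Risk:\s*(High|Medium|Low)\s*\|\s*(Enforceable in|Unenforceable under)\s*\[(.*)\]"
)


def parse_assessment(line: str) -> RiskAssessment:
    """Parse one assessment line into a RiskAssessment, rejecting unrecognized formats."""
    match = ASSESSMENT_LINE.search(line)
    if match is None:
        raise ValueError(f"unrecognized assessment: {line!r}")
    tier, label, context = match.groups()
    return RiskAssessment(RiskTier(tier), label == "Enforceable in", context.strip())
```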

Once these assessments are complete, ContractNerd performs a final check for clauses with linguistic flaws that may increase legal uncertainty, using the prompt in Listing 4. The four major sources of such flaws are as follows:

- Lexical Ambiguity: Words allow multiple interpretations without sufficient context (e.g., “reasonable time”), leading to disputes over meaning [13].

- Syntactic Ambiguity: Unclear grammatical structure obscures obligations (e.g., “The contractor will repair the walls with cracks”) [14].

- Undue Generality: Provisions are overly broad or vague in scope (e.g., “must meet all necessary standards”), reducing precision and predictability [15].

- Redundancy: Restating obligations unnecessarily, which reduces drafting efficiency and risks internal inconsistency [13].
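ContractNerd detects these flaws with the LLM (Listing 4). For intuition only, the sketch below implements crude keyword heuristics for three of the four categories. The word lists are hypothetical and far from exhaustive, and syntactic ambiguity (as in “repair the walls with cracks”) is left out because it needs genuine parsing.

```python
import re
from typing import Dict, List

# Hypothetical, illustrative word lists; a real list would be far longer.
VAGUE_TERMS = ("reasonable", "promptly", "as soon as possible", "material", "substantial")
GENERAL_PHRASES = ("all necessary", "any and all", "all applicable", "regardless of cause")


def linguistic_flags(clauses: List[str]) -> Dict[int, List[str]]:
    """Map clause index -> heuristic flags for lexical ambiguity, undue generality, redundancy."""
    flags: Dict[int, List[str]] = {}
    seen: Dict[str, int] = {}
    for i, clause in enumerate(clauses):
        text = clause.lower()
        found = []
        for term in VAGUE_TERMS:
            # Word boundaries stop "material" from matching "materials".
            if re.search(rf"\b{re.escape(term)}\b", text):
                found.append(f"lexical ambiguity: '{term}'")
        for phrase in GENERAL_PHRASES:
            if phrase in text:
                found.append(f"undue generality: '{phrase}'")
        # Redundancy: same words as an earlier clause once punctuation is ignored.
        key = re.sub(r"\W+", " ", text).strip()
        if key in seen:
            found.append(f"redundancy: repeats clause {seen[key]}")
        else:
            seen[key] = i
        if found:
            flags[i] = found
    return flags
```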

Addressing these flaws improves enforceability and reduces the likelihood of disputes. In our formulations, we made use of recent research suggesting ways that Large Language Models can assist in detecting such flaws systematically [10].

ContractNerd recommends improvements for medium- and high-risk clauses. These suggestions include alternative phrasing or structural adjustments to improve fairness and enforceability. Low-risk clauses are considered acceptable as written and require no revision.
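The revision rule (suggest rewrites for medium- and high-risk clauses, leave low-risk ones as written) amounts to a filter placed in front of a rewrite prompt. In this sketch, the `RatedClause` record and the `REVISION_TEMPLATE` wording are hypothetical and are not ContractNerd's actual prompt.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RatedClause:
    text: str
    tier: str  # "High", "Medium", or "Low"


# Hypothetical rewrite prompt; only medium- and high-risk clauses are sent to it.
REVISION_TEMPLATE = (
    "Jurisdiction: {jurisdiction}\n"
    'Clause: "{text}"\n'
    "Risk: {tier}\n"
    "Suggest alternative phrasing or structural changes that improve fairness and enforceability."
)


def revision_requests(clauses: List[RatedClause], jurisdiction: str) -> List[str]:
    """Build one rewrite prompt per medium- or high-risk clause; low-risk clauses are skipped."""
    return [REVISION_TEMPLATE.format(jurisdiction=jurisdiction, text=c.text, tier=c.tier)
            for c in clauses if c.tier in ("High", "Medium")]
```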

Listing 3. Prompt to determine regulatory conformance of contract clauses.

Listing 4. Prompt to analyze contracts from a linguistic point of view.

5. Experiments on Rental Contracts

This section and the next detail the experiments run on two specific types of agreements: rental agreements and employment agreements. Section 5.1 presents in-depth analyses assessing how effectively ContractNerd analyzes lease agreements. Section 6 gives a high-level view of ContractNerd’s performance in analyzing employment contracts.

5.1. Rental Agreements

These experiments compare ContractNerd with state-of-the-art systems in terms of (i) functionality, (ii) performance in flagging unenforceable clauses and assigning risk, (iii) a human evaluation, and (iv) a qualitative expert evaluation. We start with functionality.

5.1.1. Functionality Comparison

To compare state-of-the-art systems, this experiment applied goHeather, Legly, YesChat Contract Checker, and ContractNerd to several rental agreements.

Both ContractNerd and goHeather flagged risky clauses, while YesChat and Legly did not. Table 1 summarizes how the four tools analyze a fictitious test clause (Clause 1).

Table 1. How Clause 1 is analyzed by the various tools.

Clause 1 (fictitious): “By signing this agreement, the employee agrees to work for the company for the rest of their life, thereby forfeiting their right to resign.”

Clause 2 (non-fictitious): “Tenant waives the right to bring a declaratory judgment action with respect to any provision of this lease.”

As Table 2 shows, the systems support different functionalities; Table 3 summarizes these differences.

Table 2. Clause 2 analysis comparison by the various tools.

Table 3. System functionality comparisons: ContractNerd, goHeather, Legly, and YesChat Contract Checker. A checkmark indicates functionality is provided.

5.1.2. Automated Accuracy Evaluation

This experiment compared these tools using a structured framework comprising the following steps:

- Data Used: Using the keywords listed below, we extracted 102 clauses from documented rental lawsuit cases in New York State available on Thomson Reuters WestLaw (https://signon.thomsonreuters.com/?productid=CBT&lr=0&culture=en-US&returnto=https%3a%2f%2f1.next.westlaw.com%2fCosi%2fSignOn&tracetoken=0514251349100j4DoQMa9n77sc_TtFHKFtoxdL2lf5mC6WbEDEbRTvUDeMiIF4-T4MoN9gsShPYAfygKn98JnpxOzCEndUOqRDFEM69ODsV7NIaRjeKgogmPjncySCp8PC27VSGx6iTi2aOsdA_OY5HsdxugLvQxGZ52DPBHs6r_SDLiJpUi_MHAyKUOqzn5vs8awNMVVxeD4DFWHDa-RTsBpE_2KPVvr_0FFHTmSapSD37XyNhTNgvK-ULJ7KAI_rCnn30pLg5EB2_yfoxAPKKYaJSNdsLdo28rE04T78jN1nySl6kLlKkD9Sll0JBfKqWtNEoxPZ00zi6oamOWzatGgR7MYg01RXgEKizCQ6dVtfZHz6ni6MV-TeuGU71YYS2S4KHNiVSqk&bhcp=1 (accessed on 1 November 2024)) in which the legal dispute focused on specific contractual terms. We applied no further filtering. Each clause was labeled enforceable or unenforceable based on the court’s finding of enforceability. The keywords used as the basis for the advanced WestLaw search were: landlord, tenant, indemnification clause, rental agreement, lease agreement.

- Tool Inference: Each extracted clause was input into the respective tools under evaluation: Zero-Shot ContractNerd, goHeather, Legly, and YesChat.

- Performance Measurement: The outputs of the tools were compared against the ground truth (i.e., the court’s ruling) to compute standard classification metrics: accuracy, precision, recall, and F1 score.
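The metrics above follow their standard definitions with “Unenforceable” as the positive class. A minimal, dependency-free sketch (the label strings are assumed to match the court-derived ground truth exactly):

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[str], y_pred: Sequence[str],
                           positive: str = "Unenforceable") -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with `positive` as the positive class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label sequences of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}
```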

As shown in Table 4, ContractNerd achieved the highest scores on all metrics among the tools compared, although its margin over goHeather is not statistically significant.

Table 4. Tool performance. The positive case is “Unenforceable.” Each tool’s performance includes 95% confidence intervals and p-values for statistical significance testing against a null classifier (majority class = “Enforceable”). The null model (random classifier) predicts “Unenforceable” with a probability matching the observed class prevalence. All tools significantly outperform the null model, as indicated in green. A paired permutation test comparing the predictions of ContractNerd with those of goHeather yielded a p-value of 0.1796, indicating that the difference in their performance is not statistically distinguishable from random variation at a sample size of 102 clauses.
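The caption does not state which statistic the paired permutation test used. One common choice, assumed here, is the difference in the number of clauses each tool classified correctly; under the null hypothesis the two tools are exchangeable on every clause, so the sign of each paired difference can be flipped at random. A Monte Carlo sketch:

```python
import random
from typing import Sequence


def paired_permutation_test(correct_a: Sequence[int], correct_b: Sequence[int],
                            n_resamples: int = 10_000, seed: int = 0) -> float:
    """Two-sided sign-flip permutation test on per-clause correctness (1 = correct, 0 = wrong)."""
    if len(correct_a) != len(correct_b):
        raise ValueError("both tools must be scored on the same clauses")
    diffs = [a - b for a, b in zip(correct_a, correct_b)]
    observed = abs(sum(diffs))
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_resamples):
        # Under H0, swapping the two tools' outputs on a clause just flips the sign of its difference.
        flipped = sum(d if rng.random() < 0.5 else -d for d in diffs)
        if abs(flipped) >= observed:
            extreme += 1
    # Add-one correction keeps the Monte Carlo p-value strictly positive.
    return (extreme + 1) / (n_resamples + 1)
```

A large p-value, as reported for ContractNerd versus goHeather, means that sign-flipped resamples frequently produce a gap at least as large as the observed one.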

5.1.3. Human Feedback Experiment

For a semantically rich comparison between ContractNerd and goHeather, the best-performing state-of-the-art system, we conducted a human evaluation experiment. To set up this experiment, both ContractNerd and goHeather analyzed a set of 26 lease clauses under New York State law. Human evaluators (both expert and lay) then reviewed the system outputs side by side to determine which system provided the higher-quality legal analysis.

5.1.4. System Output Collection

ContractNerd and goHeather independently generated structured analyses for each of the 26 clauses. Outputs included clause classification, implicated legal statutes, textual explanation, and any identified linguistic traits.

5.1.5. Evaluation Framework

To assess the quality of each system’s output, we developed a comparative evaluation table and provided it to six human reviewers (one expert in contracts and five lay users). The lay reviewers comprised a chartered accountant (CIMA), a vice-chair of the institutional review board of a major academic institution, a medical doctor who is also a senior human factors consultant, a lecturer in mechanical engineering, and a college student majoring in finance and economics. Reviewers were instructed to evaluate the two systems’ outputs for each clause based on the following three criteria:

- Relevance: How directly the analysis addressed the content and intent of the clause.

- Accuracy: Whether the legal references and interpretations were factually and legally correct.

- Completeness: Whether the analysis covered all significant legal and contextual aspects.

For each clause, reviewers indicated which system produced the better output (or selected “tie” when applicable) and were encouraged to provide optional comments explaining their choice. The systems were labeled “System A” and “System B” so as not to bias the reviewers’ judgments through prior knowledge or expectations. The order of presentation (i.e., which system appeared as A or B) was randomized across examples to further reduce potential bias. These precautions ensured that the evaluation focused solely on the quality of the outputs rather than on any preconceived notions about the systems themselves.
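The blinding protocol above amounts to a per-clause random assignment of systems to the labels “System A” and “System B”, which must be undone before preferences are counted. A minimal sketch, with hypothetical function names and a fixed seed for reproducibility:

```python
import random
from typing import Dict, List, Sequence, Tuple

SYSTEMS = ("ContractNerd", "goHeather")


def assign_labels(n_items: int, seed: int = 0) -> List[Tuple[str, str]]:
    """For each clause, randomly decide which system is shown as 'System A' (first) and 'System B'."""
    rng = random.Random(seed)
    return [SYSTEMS if rng.random() < 0.5 else SYSTEMS[::-1] for _ in range(n_items)]


def tally(choices: Sequence[str], assignments: Sequence[Tuple[str, str]]) -> Dict[str, int]:
    """Map each reviewer choice ('A', 'B' or 'tie') back to the underlying system and count."""
    counts = {SYSTEMS[0]: 0, SYSTEMS[1]: 0, "tie": 0}
    for choice, (system_a, system_b) in zip(choices, assignments):
        if choice == "A":
            counts[system_a] += 1
        elif choice == "B":
            counts[system_b] += 1
        elif choice == "tie":
            counts["tie"] += 1
        else:
            raise ValueError(f"unexpected choice: {choice!r}")
    return counts
```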

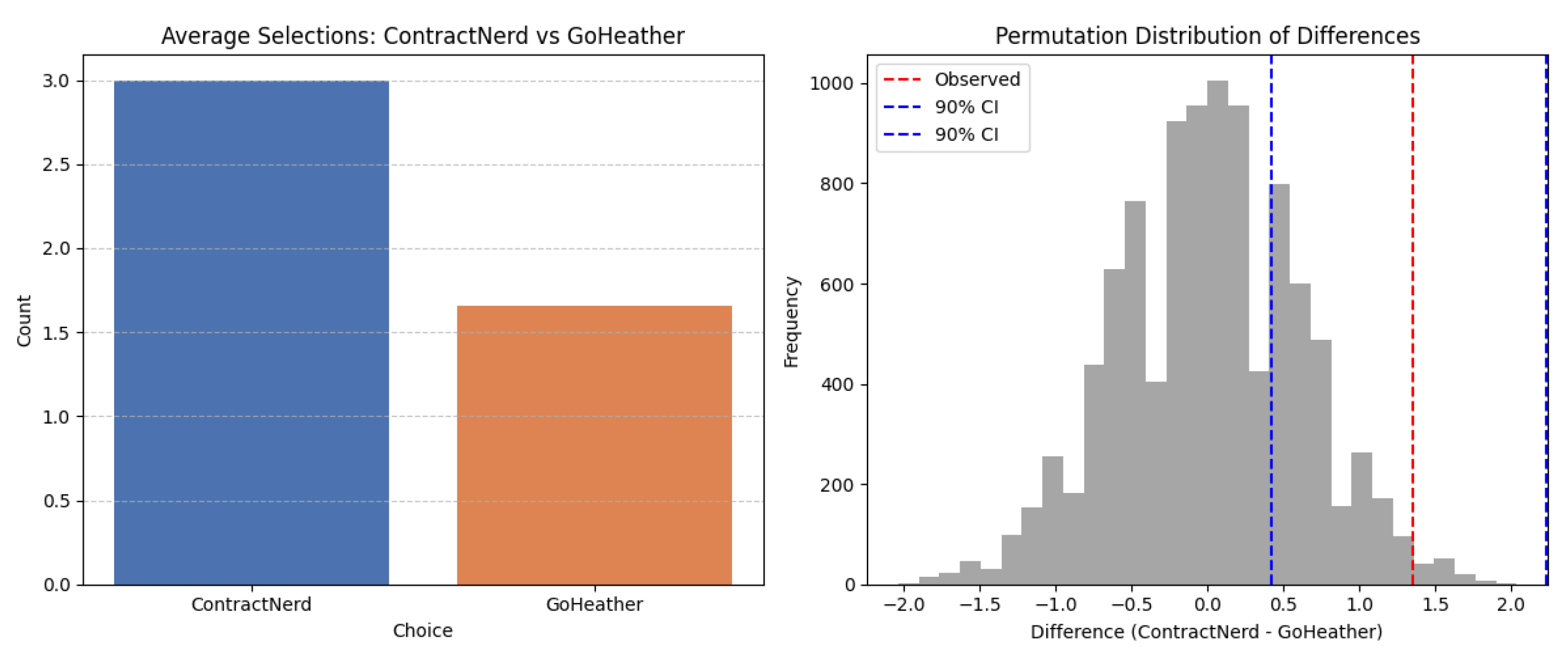

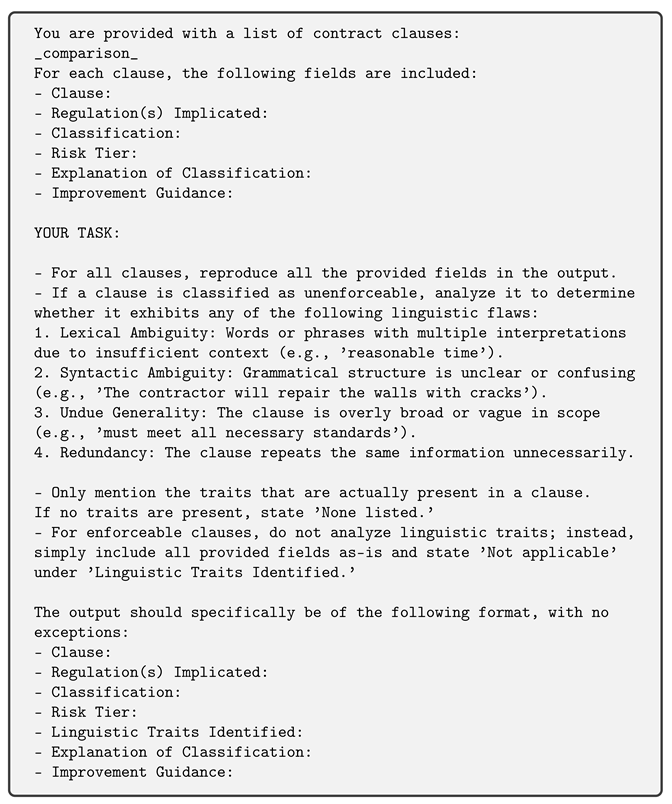

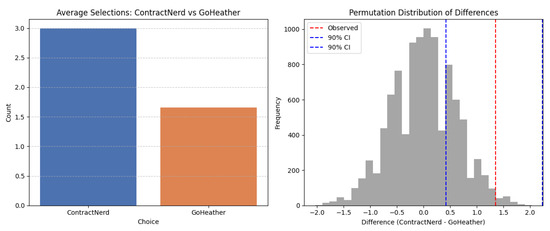

Figure 4 shows the aggregated results, quantifying overall comparative performance across the 26 clauses.

Figure 4. Mean reviewer preferences per question. The paired permutation test (Katari et al., 2021) [16] yielded a p-value of 0.009, suggesting that the observed preference for ContractNerd is unlikely to have arisen by chance (p < 0.05). The 90% confidence interval (CI; dashed blue lines) for the mean difference (ContractNerd score − goHeather score) ranges from 0.42 to 2.23. The histogram shows the permutation distribution (gray) of differences under the null hypothesis, with the observed difference (red dashed line) lying far in its right tail. Results are based on 10,000 resamples of paired responses.
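The caption does not say how the 90% interval was constructed. A percentile bootstrap over paired per-question differences is one common option and is assumed in this sketch; the input values in any usage are hypothetical, not the study's data.

```python
import random
import statistics
from typing import Sequence, Tuple


def bootstrap_ci(diffs: Sequence[float], level: float = 0.90,
                 n_resamples: int = 10_000, seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI for the mean of paired differences (e.g. ContractNerd minus goHeather)."""
    rng = random.Random(seed)
    n = len(diffs)
    # Resample the paired differences with replacement and record each resample's mean.
    means = sorted(statistics.fmean(rng.choices(diffs, k=n)) for _ in range(n_resamples))
    tail = (1 - level) / 2
    lower = means[int(tail * n_resamples)]
    upper = means[min(n_resamples - 1, int((1 - tail) * n_resamples))]
    return lower, upper
```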

As the chart shows, ContractNerd received more favorable ratings than goHeather overall.

That said, reviewer comments addressed not only what ContractNerd does well but also potential areas for improvement:

- “System A seems to give a bit more information but assumes knowledge of legal terms. B explains implications of the various clauses and the output of A could be enhanced by incorporating this language.”

- “System A provides more detail and leaves me feeling more informed (especially under the explanation section). It also mentions the regulation that the clause is under and the classification, providing me with the opportunity to look it up for myself if I want to read about it in more depth. I find this informative.”

- “System B details how this clause may affect the tenant which provides more of a picture of what they would agree to should this clause be part of the tenancy agreement. Though this is not information that causes any legal risk, it is a ’nice-to-have’ and is not detailed by System A.”

- “The classification in System A makes the difference - it gives quick judgment without too much reading required. I do however appreciate the additional context given in B.”

- “If the user is a tenant, B offers a better explanation. Perhaps include a toggle to specify landlord/tenant user role”

5.1.6. Expert Qualitative Analysis

As a further form of comparative analysis, Professor Clayton Gillette, a professor of contract law at New York University, conducted a rigorous assessment of both systems’ outputs. He evaluated each clause analysis along the three dimensions described above; his assessments appear in Table 5 and continue in Table A3 in Appendix B. In general, he found ContractNerd more complete (see, for example, his comments on points (1) and (3)) but too, well, nerdy (see his comments on points (2) and (4)).

Table 5. Lease Agreement Clause Analysis by law professor Clayton Gillette.

5.1.7. Other Jurisdictions

The examples above focus on ContractNerd’s analysis of rental agreement clauses with specific reference to New York State rental laws. However, the approach can be applied to other jurisdictions, as we show in detail in Appendix A, where we apply the same methodology to New Jersey and California. The main takeaways are as follows:

- Table A2 in Appendix A shows the different ways in which New Jersey and California balance landlords’ rights to recover costs against tenant protections in lease cancellation scenarios. In both states, ContractNerd classifies clauses governing the application of re-renting income toward landlord expenses and tenant debts as medium risk and enforceable, provided that expenses are reasonable and well documented. However, California law explicitly requires landlords to mitigate damages by making reasonable efforts to re-rent and imposes stricter documentation requirements and expense limits, reflecting a stronger tenant protection framework than New Jersey’s. This suggests that, while both states protect landlord interests, California limits recoverable costs more rigorously.

- Another notable contrast concerns the warranty of habitability. For New Jersey, ContractNerd assigned a high-risk classification to clauses that ambiguously limit the landlord’s obligation to provide essential services, deeming such provisions unenforceable if basic habitability standards are not met (Table A2, Appendix A). For California, by contrast, similar clauses were rated low risk, reflecting the view that the warranty covers only fundamental residential fitness and does not guarantee additional amenities. This difference highlights California’s more permissive stance toward landlords’ limitations on service levels, whereas New Jersey seemingly prioritizes safeguarding tenant access to essential services under its Residential Landlord-Tenant Act.

These examples show that ContractNerd performs appropriately different analyses of the same contract clauses in different jurisdictions.

6. Employment Agreements

Table 6 below highlights ContractNerd’s ability to flag specific regulations in a jurisdiction-specific way in employment law. As we will see, different jurisdictions embody divergent regulatory philosophies regarding employee protection, contractual enforceability, and the permissible scope of employer control.

Table 6. Analysis of Employment Clauses by ContractNerd.

Various themes emerge as particularly noteworthy:

- Divergence in Recovery of Employer Costs: The first clause, authorizing the employer to recover the cost of company-paid benefits upon employee resignation, illustrates a sharp jurisdictional contrast. In New Jersey, while the clause is broadly enforceable under the New Jersey Wage and Hour Law, its breadth creates a high risk of overreach, particularly when applied without proportionality or itemization. In California, the same clause risks violating strict statutory prohibitions on wage recoupment under Labor Code §221. This demonstrates how California adopts a more employee-centric approach, prioritizing wage integrity over contractual flexibility, whereas New Jersey permits broader enforcement provided statutory procedures are followed.

- Non-Compete and Restraint-of-Trade Clauses: The second clause, which functions as an in-term non-compete, can be enforceable in New Jersey under the New Jersey Trade Secrets Act if narrowly drafted. However, the language here is excessively broad (extending even to passive stock ownership) and is therefore vulnerable to legal challenge. In contrast, California law, through Business and Professions Code §16600, makes such restrictions almost entirely unenforceable unless a narrow statutory exception applies. This difference illustrates California’s near-total rejection of restraints on employee freedom, compared with New Jersey’s conditional acceptance based on reasonableness and legitimate business interests.

7. Limitations

Scalability:

A potential limitation of the current prototype is efficiency when processing very large contractual documents. As contracts increase in length and clause count, a single forward pass through the language model may become impractical due to context window constraints and latency.

To address this, the system can be extended with a partition-and-union approach. The algorithm proceeds as follows:

- Partitioning: The document is segmented into smaller, overlapping chunks (e.g., 3–5 pages, or 10–15 clauses per chunk) to ensure that clause boundaries are preserved.

- Clause Extraction: Each chunk is independently analyzed by the language model to identify and classify clauses.

- Union of Results: The results from all chunks are combined into a unified set of clauses. Duplicate or overlapping clauses are merged.

- Completeness Tracking: Metadata (clause IDs, page/line numbers) is maintained during chunking so that all original clauses are accounted for in the final union.

- Post-Processing: The unified clause set is reconciled against the original document to ensure coverage, flagging any missing or unprocessed clauses for re-analysis if necessary.
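The partition-and-union steps can be sketched as follows, chunking by clause count (here 12 clauses per chunk with an overlap of 2, hypothetical values within the 10–15 range above). `analyze_chunk` stands in for the per-chunk LLM call and is assumed to return a mapping from clause ID to finding; merging duplicates from overlapping chunks by keeping the first finding is a simplifying assumption, since the merge policy is not specified.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Clause:
    clause_id: int  # completeness-tracking metadata carried through chunking
    text: str


# Hypothetical per-chunk analysis: clauses in, {clause_id: finding} out.
Analyzer = Callable[[List[Clause]], Dict[int, str]]


def partition(clauses: List[Clause], size: int = 12, overlap: int = 2) -> List[List[Clause]]:
    """Split clauses into overlapping chunks; the overlap gives each chunk some surrounding context."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(clauses), step):
        chunks.append(clauses[start:start + size])
        if start + size >= len(clauses):
            break  # the last chunk already reaches the end of the document
    return chunks


def analyze_document(clauses: List[Clause], analyze_chunk: Analyzer,
                     size: int = 12, overlap: int = 2) -> Dict[int, str]:
    results: Dict[int, str] = {}
    for chunk in partition(clauses, size, overlap):
        for clause_id, finding in analyze_chunk(chunk).items():
            results.setdefault(clause_id, finding)  # union; overlapping duplicates keep the first finding
    # Post-processing: re-analyze, one at a time, any clause that no chunk reported on.
    for clause in clauses:
        if clause.clause_id not in results:
            results.update(analyze_chunk([clause]))
    return results
```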

This strategy would allow the system to scale to longer documents while maintaining clause completeness and minimizing the risk of omission. Similar approaches have been applied successfully in related applications (e.g., large-text summarization), and this one represents a natural extension of the current work.

Considerations of Bias:

As the review paper [17] discusses, machine learning systems are built on data and are therefore vulnerable to the biases inherent in that data. This concern applies to our work for the following reasons:

- Employment contracts, especially as enforceable documents, are strongly anchored in the formal economy. Underground economy work is often carried out without any documentation and certainly no notion of legal enforcement. Therefore, the bias here is that this work is relevant only to those fortunate enough to be in the formal economy.

- Another source of bias is that our work is specific to the United States, whose common-law system is based on precedent. Because we infer possibly questionable clauses from an analysis of laws, we believe, but have not proved, that similar methods could apply to countries that follow a rule-based (civil-law) legal system.

Considerations of Accuracy:

While ContractNerd is designed to be advisory rather than authoritative, the high quality of its output might convince users that it is authoritative. It is not. Partly because of the inexactitude of AI technology and partly because of the irrationality of human actors in the judicial system, we can imagine a scenario in which ContractNerd states that a clause should be permissible, yet a contract drafter loses a lawsuit based on that clause. We urge contract drafters to use ContractNerd as one of many tools for improving the quality and fairness of contracts.

8. Conclusions

ContractNerd integrates jurisdiction-aware legal retrieval with LLaMA 3.3’s reasoning capabilities to analyze different styles of contracts (here, rental and employment) using the same prompt. ContractNerd compares favorably with state-of-the-art tools (GoHeather, Legly, and YesChat) in citing enforceable regulations (85.3% accuracy) and flagging risky terms (76.9% F1-score). Three key innovations drive this effectiveness:

- Context-Aware Evaluation: ContractNerd grounds its analysis in specific regulations (e.g., NYS rental laws), reducing false positives in enforceability judgments.

- Structured Risk Framework: The tiered risk classification (High/Medium/Low) and contextual enforceability labels provide both an evaluation and suggestions for rewording, while linguistic trait analysis (lexical ambiguity, undue generality) further helps contract writers refine clause wording.

- User-Centric Design: Human evaluations showed a dramatic and statistically significant preference for ContractNerd’s outputs over those of state-of-the-art tools, particularly for ContractNerd’s regulatory citations and classification clarity.
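The structured risk framework amounts to a fixed record per clause. The following minimal Python sketch models that record using the field labels that appear in the appendix tables (Risk Tier, Regulation(s), Classification, Linguistic Traits, Explanation, Improvement). It is an illustration under that assumption only; the names `RiskTier`, `ClauseAssessment`, `render`, and `triage` are hypothetical and are not taken from ContractNerd's code.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class RiskTier(Enum):
    HIGH = "High Risk"
    MEDIUM = "Medium Risk"
    LOW = "Low Risk"


# Hypothetical review order: lower number = reviewed earlier.
TIER_ORDER = {RiskTier.HIGH: 0, RiskTier.MEDIUM: 1, RiskTier.LOW: 2}


@dataclass
class ClauseAssessment:
    clause: str
    risk_tier: RiskTier
    regulations: List[str]
    classification: str  # contextual enforceability label, e.g. "Enforceable in specific contexts"
    linguistic_traits: List[str] = field(default_factory=list)  # e.g. ["Undue Generality"]
    explanation: str = ""
    improvement: str = ""

    def render(self) -> str:
        """Format the assessment with one labeled field per line."""
        traits = ", ".join(self.linguistic_traits) or "Not applicable"
        return "\n".join([
            f"Risk Tier: {self.risk_tier.value}",
            f"Regulation(s): {', '.join(self.regulations)}",
            f"Classification: {self.classification}",
            f"Linguistic Traits: {traits}",
            f"Explanation: {self.explanation}",
            f"Improvement: {self.improvement}",
        ])


def triage(assessments: List[ClauseAssessment]) -> List[ClauseAssessment]:
    """Sort so that High-risk clauses come first (order is stable within a tier)."""
    return sorted(assessments, key=lambda a: TIER_ORDER[a.risk_tier])


if __name__ == "__main__":
    indemnity = ClauseAssessment(
        clause="...the tenant is to indemnify the landlord 'for any damage flowing from such use'.",
        risk_tier=RiskTier.MEDIUM,
        regulations=["California Civil Code §§ 2782, 2782.5"],
        classification="Enforceable in specific contexts",
        linguistic_traits=["Undue Generality"],
    )
    print(indemnity.render())
```

Using an enumeration for the tier keeps the three levels closed and comparable, while the enforceability classification stays free text because the appendix labels are contextual (e.g., "Enforceable if expenses are reasonable/documented") rather than drawn from a fixed list.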

For the grading and suggestions that ContractNerd produced for other jurisdictions, and for further details of the expert qualitative evaluation, please see the appendices.

Future work will focus on overcoming the scalability limitations, expanding our analysis to other kinds of contracts, and conducting extensive user testing.

Author Contributions

Conceptualization, D.S., M.S.; Data curation, M.S., Y.D., H.Y.; Formal analysis, M.S., Y.D., H.Y., D.S.; Investigation, M.S., Y.D., H.Y., D.S.; Methodology, M.S., Y.D., H.Y., D.S.; Resources, M.S., Y.D., H.Y.; Software, M.S., Y.D., H.Y.; Validation, M.S., Y.D., H.Y., D.S.; Writing—original draft, M.S., Y.D., H.Y., D.S.; Writing—review and editing, M.S., Y.D., H.Y., D.S.; Supervision, D.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly funded by NYU Wireless.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data sources and prompts are given in the body and appendix of this paper.

Acknowledgments

We extend our gratitude to Clayton Gillette, Professor of Contract Law, for his help with data sources, his reviews of ContractNerd’s outputs, and his overall advice. We would also like to thank Harsh Kashyap and Shela Wu for their advice and help regarding the ContractNerd architecture.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Applying ContractNerd to Rental Agreements in Other Jurisdictions

While the examples given above focus on New York, ContractNerd supports multiple jurisdictions in the USA. Below are examples of clause analyses for leases based in other states.

Table A1. Lease clause analysis summary.


| Clause | New Jersey | California |
|---|---|---|
| “Pursuant to this provision, the tenant is to indemnify the landlord ‘for any damage flowing from such use’.” | Risk Tier: Medium Risk. Regulation(s): N.J.S.A. 2A:18-61.1. Classification: Enforceable in specific contexts (e.g., tenant’s use directly causes damage). Linguistic Traits: Not applicable. Explanation: May relate to landlord’s right to recover damages under Anti-Eviction Act, but language requires clarification. Improvement: Rephrase to clarify scope: “The tenant shall indemnify the landlord for damages caused by the tenant’s negligence or willful misconduct.” | Risk Tier: Medium Risk. Regulation(s): California Civil Code §§ 2782, 2782.5. Classification: Enforceable in specific contexts. Linguistic Traits: Undue Generality. Explanation: California law limits indemnity clauses in leases, especially if they require the tenant to indemnify the landlord for landlord’s own negligence. The phrase “any damage flowing from such use” is broad and ambiguous, potentially exposing the tenant to excessive liability. Improvement: Clarify and limit indemnity obligations to damages caused by tenant’s negligence or misconduct. Include clear carve-outs for landlord’s own negligence or willful misconduct. Use precise language defining “damage” and scope of indemnity. |
| “If Landlord breaches said agreement the parties hereby agree to use the American Arbitration Association to resolve their disputes and the prevailing party will be allowed to recover costs, expenses, and damages of said arbitration including but not limited to attorneys fees.” | Risk Tier: Medium Risk. Regulation(s): N.J.S.A. 2A:18-61.1, N.J.S.A. 46:8-48. Classification: Enforceable where arbitration agreement is fair/reasonable. Linguistic Traits: Not applicable. Explanation: Subject to Anti-Eviction Act and Truth in Renting Act; fee recovery provisions may be regulated. Improvement: Clarify fairness: “Parties agree to AAA arbitration, each bearing own costs unless ruled otherwise.” | Risk Tier: Low Risk. Regulation(s): California Arbitration Act (Cal. Code Civ. Proc. §§ 1280–1294.2). Classification: Enforceable. Linguistic Traits: Not applicable. Explanation: California generally enforces arbitration clauses and mutual attorney’s fees provisions unless unconscionable or violating public policy. Specifying the AAA and fee-shifting to the prevailing party aligns with California law and promotes efficient dispute resolution. Improvement: Specify procedures for initiation of arbitration, timelines, and confidentiality terms for completeness. Consider defining “prevailing party” to avoid disputes over fee recovery. |

Table A2. Lease clause analysis summary (continued).


| Clause | New Jersey | California |
|---|---|---|
| “If this Lease is canceled, or Landlord takes back the Apartment, the following takes place: … (3) Any rent received by Landlord for the re-renting shall be used first to pay Landlord’s expenses and second to pay any amounts Tenant owes under this Lease. Landlord’s expenses include the costs of getting possession and re-renting the Apartment, including, but not only reasonable legal fees, brokers fees, cleaning and repairing costs, decorating costs and advertising costs.” | Risk Tier: Medium Risk. Regulation(s): N.J.S.A. 2A:18-61.1, N.J.S.A. 46:8-48. Classification: Enforceable if expenses are reasonable/documented. Linguistic Traits: Not applicable. Explanation: Procedures must comply with Anti-Eviction Act and Truth in Renting Act. Improvement: Specify, “Landlord shall apply re-renting income first to reasonable documented expenses, then tenant debts.” | Risk Tier: Medium Risk. Regulation(s): California Civil Code §§ 1951.2, 1951.4. Classification: Enforceable under certain conditions. Linguistic Traits: Not applicable. Explanation: California requires landlords to mitigate damages by making reasonable efforts to re-rent. Prioritizing expenses before tenant obligations is common but must be reasonable and supported by documentation. Overly broad expense categories may invite disputes over what costs are recoverable. Improvement: Define “reasonable” more precisely or provide caps where possible. Require itemization of expenses to be charged against rent proceeds. |
| “The prong of the warranty of habitability implied in every residential lease by which the landlord covenants that the premises are fit for the uses reasonably intended by the parties does not encompass the level of services and amenities that tenants reasonably expect to be provided under the financial and other terms of their individual leases.” | Risk Tier: High Risk. Regulation(s): N.J.S.A. 2A:42-84. Classification: Unenforceable if essential services are withheld. Linguistic Traits: Undue Generality. Explanation: Warranty of habitability under NJ Residential Landlord-Tenant Act requires essential services. Improvement: State explicitly, “Landlord shall provide [list essentials] per warranty of habitability requirements.” | Risk Tier: Low Risk. Regulation(s): California Civil Code §§ 1941, 1941.1. Classification: Enforceable. Linguistic Traits: Undue Generality. Explanation: The warranty of habitability requires landlords to provide premises fit for basic residential use but does not guarantee amenities or service levels beyond those implied by law or explicitly stated in lease agreements. This clause correctly limits landlord obligations consistent with California law. Improvement: Consider specifying which services or amenities are excluded or included to avoid ambiguity. |

Appendix B. Expert Qualitative Analysis Continued

Table A3. Lease agreement clause analysis by law professor Clayton Gillette (continued).


| Clause | System | Analysis | Comment |
|---|---|---|---|
| Late fee: Fixed Amount. $50 for each occurrence | ContractNerd (System A) | | ContractNerd is easier to understand. The reference to New York law raises more issues than it resolves for the average reader. Both clauses are relevant and accurate. |
| | GoHeather (System B) | | |
| Tenant waives the right to bring a declaratory judgment action with respect to any provision of this lease. | ContractNerd (System A) | | ContractNerd is better, though I am not sure it is accurate to say that it is prohibited. Tenants cannot be forced to waive, but if they agree, the clause may be enforceable. |
| | GoHeather (System B) | | |
| Tenant shall not assign the lease or sublet the premises without the landlord’s prior written consent | ContractNerd (System A) | | Specifying the allowance for service animals avoids any issues about the subject. |
| | GoHeather (System B) | | |
| Tenant must provide written notice of intent to vacate at least 60 days prior to lease termination. | ContractNerd (System A) | | Leaving the time period blank signals to the tenant that this is a time period that can and should be bargained for to address the circumstances of the individual landlord and tenant. |
| | GoHeather (System B) | | |

References

1. RAGSys: Real-Time Self-Improvement for LLMs Without Retraining. Available online: https://www.reddit.com/r/MachineLearning/comments/1iyszck (accessed on 14 May 2025).

2. DeepRAG: A Markov Decision Process Framework for Step-by-Step Retrieval-Augmented Reasoning. Available online: https://www.reddit.com/r/MachineLearning/comments/1iiyobh (accessed on 14 May 2025).

3. Kim, J.; Min, M. From RAG to QA-RAG: Integrating Generative AI for Pharmaceutical Regulatory Compliance Process. arXiv 2024, arXiv:2402.01717. Available online: https://arxiv.org/abs/2402.01717 (accessed on 14 May 2025).

4. Yang, R. CaseGPT: A Case Reasoning Framework Based on Language Models and Retrieval-Augmented Generation. arXiv 2024, arXiv:2407.07913. Available online: https://arxiv.org/abs/2407.07913 (accessed on 14 May 2025).

5. CoRAG: Chain-of-Retrieval Augmented Generation. Available online: https://www.reddit.com/r/machinelearningnews/comments/1ic9jst (accessed on 14 May 2025).

6. VideoRAG: Enhancing Large Language Models with Dynamic Video Retrieval and Multimodal Knowledge Integration. Available online: https://www.reddit.com/r/ArtificialInteligence/comments/1i1xhhe (accessed on 14 May 2025).

7. GoHeather. AI Contract Review App. GoHeather. 2025. Available online: https://www.goheather.io/products/ai-contract-review-app (accessed on 15 November 2024).

8. YesChat. Contract Analysis. YesChat. 2025. Available online: https://www.yeschat.ai/gpts-9t557DQlTCC-Contract (accessed on 15 November 2024).

9. Legly. AI-Powered Contract Analysis. Legly. 2025. Available online: https://www.legly.io (accessed on 15 November 2024).

10. Gao, Y.; Gan, Y.; Chen, Y.; Chen, Y. Application of Large Language Models to Intelligently Analyze Long Construction Contract Texts. Constr. Manag. Econ. 2025, 43, 226–242.

11. Rengaraju, U. Automated Contract Analysis Using Snowflake-Arctic and Llama. Medium. Available online: https://usharengaraju.medium.com/automated-contract-analysis-using-snowflake-arctic-and-llama-17c0c7bd9a36 (accessed on 15 October 2024).

12. New York State Lease Agreement. Available online: https://documents.dps.ny.gov/public/Common/ViewDoc.aspx?DocRefId=%7B1F7CE8C5-7408-425F-B513-58E94B6E6FDB%7D (accessed on 14 May 2025).

13. Butt, P.; Castle, R. Modern Legal Drafting: A Guide to Using Clearer Language; Cambridge University Press: Cambridge, UK, 2006.

14. Adams, K.A. A Manual of Style for Contract Drafting; American Bar Association: Chicago, IL, USA, 2004.

15. Goetz, C.J.; Scott, R.E. Principles of Relational Contracts. Va. Law Rev. 1981, 67, 1089–1150.

16. Katari, M.S.; Tyagi, S.; Shasha, D. Statistics is Easy: Case Studies on Real Scientific Datasets; Synthesis Lectures on Mathematics and Statistics; Morgan & Claypool Publishers: San Rafael, CA, USA, 2021.

17. Barbierato, E.; Gatti, A.; Incremona, A.; Pozzi, A.; Toti, D. Breaking away from AI: The Ontological and Ethical Evolution of Machine Learning. IEEE Access 2025, 13, 55627–55647.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).