1. Introduction

In recent years, artificial intelligence, particularly the rapid advancement of large language models (LLMs), has been transforming the technological landscape across various industries. Since Google introduced BERT (Bidirectional Encoder Representations from Transformers) in 2018 [1], LLMs have entered the public spotlight. BERT’s adoption of a bidirectional Transformer architecture significantly improved contextual understanding in natural language processing tasks. Subsequently, OpenAI’s GPT-2 [2] and GPT-3 [3] demonstrated the remarkable capabilities of large models in text generation and complex problem-solving. In 2022, Google released PaLM [4], and OpenAI launched ChatGPT, which further accelerated development in the field. ChatGPT, in particular, gained global popularity due to its human-like interactive experience. The release of GPT-4 in 2023 marked a shift from unimodal to multimodal models, enhancing the ability to process mixed inputs such as text and images and thereby broadening the scope of applications. These models, primarily based on the Transformer architecture, are generally trained through self-supervised learning. They excel in tasks such as information extraction, content summarization, reading comprehension, and even code generation, underscoring their significance in modern technology and their potential to drive industrial transformation.

Alongside these developments, the rapid growth of automotive electronics and information technology has resulted in increasingly complex vehicle systems, posing greater challenges for fault diagnosis and repair. Traditional diagnostic approaches rely heavily on the experience of technicians and manual tools, which often limits both efficiency and accuracy. Misdiagnoses or insufficient expertise can lead to delays in repair and increased inconvenience for vehicle owners. Accurately and promptly identifying the root cause of vehicle issues has thus become a critical challenge in modern automotive maintenance. Furthermore, lacking professional knowledge, average car owners often depend entirely on service providers’ conclusions when diagnosing vehicle faults, which can create information asymmetry and undermine trust. For example, a common issue such as the illumination of the “check engine” light may stem from various underlying causes, ranging from minor fuel quality problems to complex combustion system failures, each entailing very different repair costs. With the rapid development of AI technologies, particularly LLMs, the automotive repair industry now faces a transformative opportunity: integrating LLMs with domain-specific knowledge in automotive fault diagnosis holds great promise for improving both diagnostic accuracy and efficiency.

Recent studies have shown that integrating domain knowledge with large language models can effectively enhance the models’ expertise and the reliability of their information, making it a valuable area for in-depth research. For example, in knowledge-intensive fields such as medicine, chemistry, and communications, numerous studies have explored how to combine domain knowledge to strengthen the capabilities of large models. These works improve models’ understanding of specialized knowledge and increase their applicability to domain-specific tasks by constructing structured knowledge systems and integrating domain expertise into pretrained models. Information reliability is also a concern across many fields. For instance, Im and Pearmai [5] enhanced the stability of video transmission through unequal error protection strategies, while Chan et al. [6] proposed a construction method for discrete orthogonal matrices to improve the consistency of numerical transformations.

In the medical domain, Mashatian et al. [7] constructed a knowledge-enhanced question-answering model using a retrieval-augmented generation (RAG) framework based on diabetes self-management education standards; their model achieved 98% accuracy under appropriate prompting. Yu et al. [8] proposed a zero-shot RAG-based diagnostic method for detecting cardiovascular disease and sleep apnea from ECG signals, demonstrating superior performance compared to few-shot learning approaches. Park et al. [9] developed a retrieval-augmented language model to improve accessibility and accuracy in dental consultations. Zelin et al. [10] introduced RareDxGPT, which leverages retrieval-augmented techniques to provide ChatGPT with information on 717 rare diseases, achieving a diagnostic accuracy of 43% and significantly outperforming ChatGPT-3.5’s 23%.

In the field of chemistry, Liu et al. [11] employed prompt engineering techniques to incorporate domain knowledge into LLMs, enhancing their performance in scientific tasks. Zhao et al. [12] developed the ChemDFM model, which is pretrained on chemistry textbooks and the scientific literature to improve comprehension of chemical knowledge. Zhang et al. [13] introduced ChemLLM, which integrates structured chemical knowledge to support multitask processing and facilitate smooth dialogue. Shi et al. [14] proposed a few-shot learning approach based on LLMs to improve the automatic extraction of synthesis information for metal–organic frameworks (MOFs).

In the communications domain, Saado et al. [15] explored the use of LLMs combined with word-embedding techniques to improve the understanding and retrieval of domain-specific terminology. They constructed a domain knowledge base and utilized a RAG framework to fine-tune general-purpose LLMs, achieving higher accuracy and relevance in answering domain-specific questions than ChatGPT-3.5.

However, integrating large language models with domain-specific knowledge in automotive fault diagnosis presents several key challenges:

- (1) Lack of Standardized Data: Due to the highly specialized nature of the task, the absence of standardized dialogue formats, concerns over personnel privacy, and proprietary restrictions, there is a scarcity of suitable diagnostic dialogue datasets in the automotive domain.

- (2) Complex Multi-Module Interdependencies: Automotive systems are highly interconnected, with fault information often propagating across multiple modules. Data from a single module is frequently insufficient for a comprehensive and accurate diagnosis.

- (3) Limitations in Domain Knowledge: General-purpose language models are typically pretrained on broad datasets that lack specialized terminology and knowledge related to automotive diagnostics, which limits their ability to correctly interpret user inputs in this field.

- (4) Risk of Hallucination: Without proper domain constraints, LLMs are prone to generating inaccurate or even fabricated content. In automotive repair, such misinformation can lead to significant financial losses or serious safety risks.

To address these challenges, this study proposes an intelligent fault diagnosis framework that integrates knowledge graphs with large language models. In collaboration with a well-known German automobile manufacturer, we conducted in-depth processing of raw diagnostic dialogue data for the first time, thereby mitigating issues related to data scarcity and lack of specialization. The proposed framework combines the strengths of knowledge graphs and LLMs, enhancing the system’s capability to handle multi-module faults and enabling proactive information querying. It also offers a novel reference architecture for broader industrial applications.

The main contributions of this paper are as follows:

- (i) Based on raw automotive fault diagnostic dialogues, we performed data cleaning and selection to construct a high-quality dataset, which serves as the foundation for building an automotive fault diagnosis knowledge graph.

- (ii) To enhance the semantic matching capability in automotive fault question–answer retrieval, this study proposes a novel embedding model, QASE (Query-Aware Semantic Embedding). The model is built on a dual-tower bi-encoder architecture and incorporates multiple innovations to improve the discriminative power of semantic representations and the robustness of the model.

- (iii) We introduce an initial information extraction module and a fault detection and state-tracking module to monitor known fault information and track changes, thereby supporting the model in actively querying for unknown data.

- (iv) A dual information judgment module and a mode-switching and invocation module are designed to enhance the model’s understanding of user-provided inputs and to dynamically invoke the appropriate diagnostic mode for each scenario.

- (v) A three-tiered data structure is constructed, linking entities, dialogues, and technical maintenance documents and thereby improving diagnostic accuracy.

- (vi) Ablation experiments demonstrate the effectiveness of the proposed modules. Our framework outperforms a pretrained LLM (GPT-3.5) by 46.1% in diagnostic accuracy and achieves over 14% improvement compared to other benchmark frameworks.

2. Related Work

2.1. Prompt Engineering

Prompt engineering involves designing and optimizing input prompts to guide large language models (LLMs) in generating outputs that align with users’ expectations. By crafting clear and effective prompts, users can help models better understand task requirements and adapt to various scenarios. Prompts are generally categorized into hard prompts and soft prompts [16]: hard prompts are more suitable for static tasks, while soft prompts are better suited for dynamic tasks. Additionally, prompts can be classified by their interaction mode as online prompts [17] or offline prompts [18].

To improve prompt quality, several strategies are commonly used, including clarifying the task objective, providing contextual information, using examples for guidance, employing step-by-step prompting, and narrowing the output’s scope. The two primary types of prompt engineering are zero-shot prompting and few-shot prompting. Zero-shot prompting involves asking the model to perform a task without providing any examples. The model relies solely on the task’s description and context to generate responses. Techniques such as role prompting, emotion prompting, and style prompting are commonly used to instruct LLMs to generate outputs in specific formats or tones. Few-shot prompting, on the other hand, provides a small number of task-specific examples to help the model better understand the task and generate relevant outputs. Studies have shown that factors such as the number of examples [3], order of presentation [19], label distribution [20], label quality [21], format [22], and similarity between examples and inputs [20] can all significantly affect model performance.
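The difference between zero-shot and few-shot prompting can be made concrete with a minimal sketch. The function name `build_prompt`, the `Input:`/`Output:` layout, and the example symptoms are illustrative assumptions rather than a format prescribed by the cited works.

```python
from typing import List, Sequence, Tuple


def build_prompt(task: str, query: str,
                 examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Build a zero-shot prompt (no examples) or a few-shot prompt."""
    parts: List[str] = [task]
    # Each demonstration pairs an input with its expected output.
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block leaves the output open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


task = "Classify the vehicle symptom into a fault domain."
zero_shot = build_prompt(task, "Engine shakes at idle")
few_shot = build_prompt(
    task,
    "Engine shakes at idle",
    examples=[
        ("Brake pedal feels soft", "braking system"),
        ("Battery warning light is on", "electrical system"),
    ],
)
print(few_shot)
```

Because the demonstrations are passed as an ordered sequence, the same helper can be used to vary the number and order of examples, two of the factors noted above as affecting performance.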

In addition to zero-shot and few-shot methods, several advanced prompting techniques have emerged, including Chain-of-Thought (CoT) prompting [23], Auto-CoT prompting [24], Self-Consistency prompting [25], Tree-of-Thoughts prompting [26], Skeleton-of-Thought prompting [27], and Graph-of-Thought prompting [28].

2.2. Retrieval-Augmented Generation

Retrieval-augmented generation (RAG) [29] is a natural language processing technique that combines retrieval and generation mechanisms. Proposed by Facebook AI Research (now Meta AI) in 2020, RAG enhances the output quality of large language models by supplementing them with external knowledge. The RAG pipeline involves parsing and vectorizing user queries, retrieving relevant information from a knowledge base using a retrieval model, filtering and ranking the retrieved content, and finally inputting the most relevant context into an LLM to generate high-quality responses.

The RAG framework consists of two core components: a retrieval module and a generation module. In the retrieval stage, textual content is transformed into vectors using text-embedding techniques, and approximate nearest neighbor (ANN) algorithms are employed to rapidly locate the most relevant information. In the generation stage, the retrieved context is used to guide the language model in producing coherent and accurate responses. The development of text embeddings can be traced back to mid-20th-century linguistic theory, notably Harris’s distributional semantics model [30], and later to Bengio’s neural probabilistic language model [31]. Key advancements in embedding methods include Word2Vec, GloVe, ELMo, and BERT, as well as more recent models like M3E, Jina, XLNet, ERNIE 3.0, and E5.

To ensure retrieval of semantically relevant vectors, semantic search technologies are essential. These methods can identify synonyms, contextual relationships, and nuanced semantic dependencies, ensuring that search results align closely with the user’s actual intent [32]. ANN techniques, which balance accuracy and computational efficiency, are currently the most widely adopted for this purpose. ANN methods [33,34] can be broadly categorized into four types: partition-based, hashing-based, graph-based, and quantization-based approaches.
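The retrieval and generation stages described in this subsection can be sketched as follows. This is a toy illustration under stated assumptions: `embed` uses bag-of-words counts in place of a neural text-embedding model, `retrieve` performs an exact top-k search that an ANN index would approximate at scale, and the final LLM call is omitted. All function names and documents are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: term counts (stand-in for a neural encoder)."""
    return dict(Counter(text.lower().split()))


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(u[t] * v.get(t, 0.0) for t in u)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Exact top-k search; an ANN index would approximate this ranking."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, context: List[str]) -> str:
    """Place the retrieved passages in front of the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {query}\nAnswer:"


docs = [
    "engine misfire can be caused by worn spark plugs",
    "soft brake pedal often indicates air in the brake lines",
    "battery warning light may indicate a failing alternator",
]
query = "why does the engine misfire"
top = retrieve(query, docs, k=1)
prompt = build_rag_prompt(query, top)
print(prompt)
```

The resulting prompt would then be passed to the language model, which generates its answer conditioned on the retrieved context.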

2.3. Knowledge Graph-Augmented Large Language Models

A knowledge graph (KG) is a structured representation of knowledge in the form of a graph, where entities (e.g., people, locations, objects) are represented as nodes, and the relationships between them (e.g., belongs to, located in, similar to) are represented as edges. Knowledge graphs typically organize information using triples, each consisting of a head entity, a relation, and a tail entity.
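As a minimal sketch of the triple representation, the following code stores a few (head, relation, tail) triples and looks up the tails reachable from a given head. The entities, relation names, and the helper `tails` are hypothetical examples and are not taken from the knowledge graph built in this work.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)

triples: List[Triple] = [
    ("engine vibration", "has_possible_cause", "worn spark plug"),
    ("engine vibration", "has_possible_cause", "faulty engine mount"),
    ("worn spark plug", "repaired_by", "replace spark plug"),
]

# Index outgoing edges by head entity for fast lookup.
outgoing: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
for head, relation, tail in triples:
    outgoing[head].append((relation, tail))


def tails(head: str, relation: str) -> List[str]:
    """Return all tail entities linked to `head` by `relation`."""
    return [t for r, t in outgoing.get(head, []) if r == relation]


causes = tails("engine vibration", "has_possible_cause")
print(causes)
```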

The construction of a knowledge graph involves four major stages: data acquisition, information extraction, knowledge fusion, and knowledge refinement. Knowledge graphs not only store factual information but also support inference, enabling the discovery of latent relationships. They have become foundational for a variety of applications such as intelligent question answering, recommendation systems, and semantic search.

In recent years, integrating knowledge graphs with large language models (LLMs) has emerged as a promising approach for improving generation quality and mitigating hallucination in model outputs. For example, Niu et al. [35] proposed the Re-KGR method, which uses self-refinement to enhance knowledge graph retrieval, thereby reducing factual errors in the medical domain. Wang et al. [36] introduced the LPKG framework, which extracts structured knowledge from KGs to improve the planning capabilities of LLMs. Zhou et al. [37] proposed CogMG, which addresses the issues of incomplete knowledge coverage and asynchronous updates in KGs. Their framework enables LLMs to identify and complete missing triples, thus reducing hallucinations while supporting dynamic updates to meet real-world needs. Similarly, Gilbert et al. [38] demonstrated that integrating KGs can improve LLMs’ reasoning abilities and alleviate communication challenges in medical information processing. These studies improve the domain-specific generative and reasoning abilities of large language models through the construction of relevant knowledge graphs.

Recently, several studies have explored the integration of knowledge graphs and large language models from new structural and mathematical perspectives. Liu et al. [39] proposed a structure-aware alignment-tuning method for knowledge graph completion, significantly improving structural consistency and relational reasoning. Zhou et al. [40] developed a region-embedding framework based on Lie group theory, providing a geometric interpretation for complex query answering. These studies are complementary to the present work, providing valuable insights for further enhancing the interpretability and generalization capability of knowledge graph-augmented large language models.

2.4. Integration of Domain Knowledge with Large Language Models

Recent research has made considerable progress in leveraging artificial intelligence to empower traditional domains, aiming to reduce costs, improve efficiency, and drive industrial transformation. Although mature studies in the field of automotive fault diagnosis remain limited, substantial efforts have been made in areas such as aircraft maintenance, education, and healthcare. For instance, Wang et al. [41] constructed an aircraft ontology knowledge base, integrating domain knowledge (e.g., component hierarchies) into large language models to enhance the reliability of maintenance recommendations. Bui et al. [42] developed a cross-data-source knowledge graph in the higher education context, employing intent detection and embedding-based relational mining techniques to enable LLM-driven precise question-answering services. Dao et al. [43] designed a multimodal dialogue system to build a personalized health management platform for diabetes prevention.

3. Methods

3.1. Framework

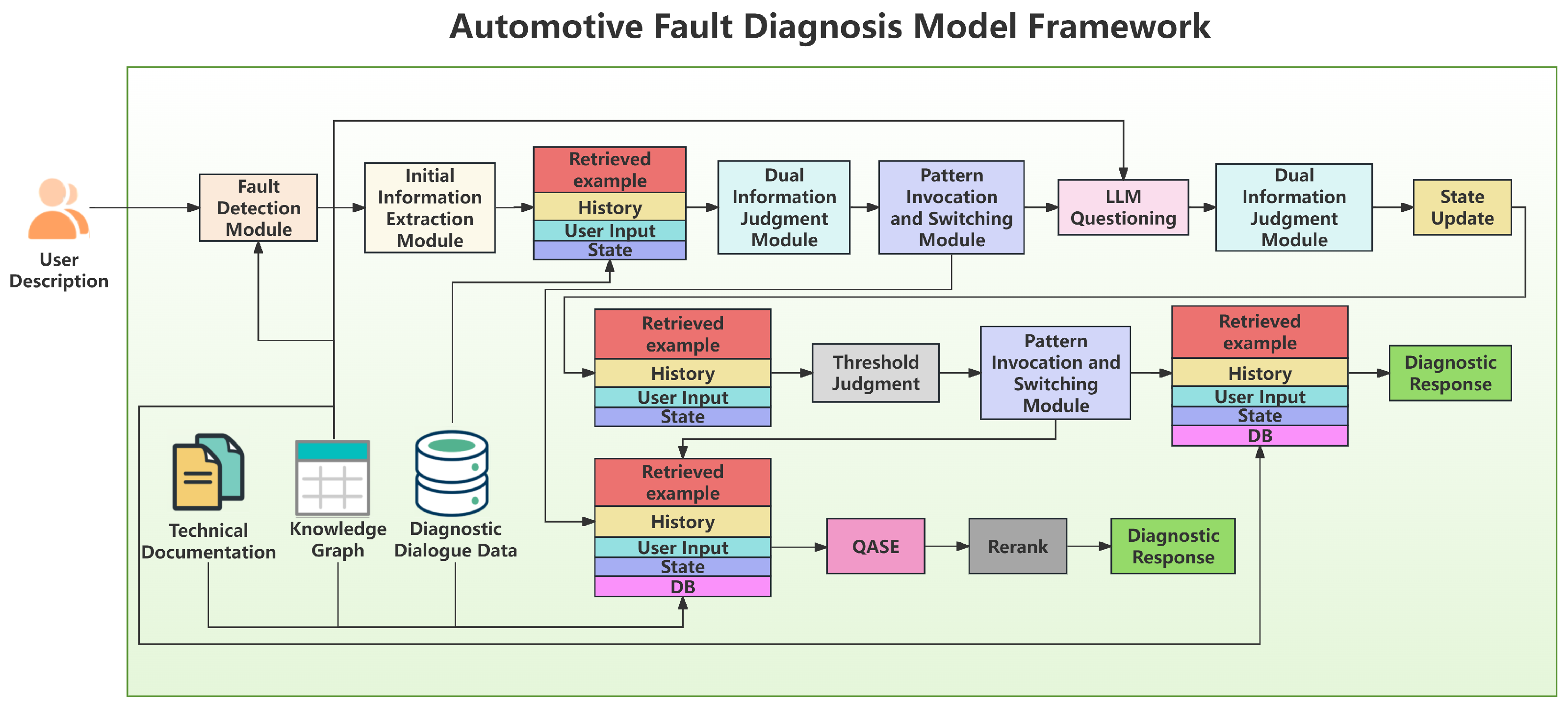

In this section, we provide a detailed explanation of the proposed framework, which is illustrated in Figure 1. The framework consists of multiple modules working in collaboration, with key components including the QASE model, fault detection and state-tracking module, initial information extraction module, dual information judgment module, mode-switching and invocation module, and a pre-trained large language model (LLM).

The workflow of the framework begins with the user providing a description of the automotive fault. The system matches fault patterns and extracts initial information. Leveraging a knowledge graph, the framework actively inquires about missing critical diagnostic information and dynamically switches modes based on user feedback. In certain cases, the system switches to the AI model retrieval mode (see Section 3.5); if it instead switches to the knowledge base retrieval mode, the LLM enters an inquiry state, continuously updating its belief state and annotating user responses.

Once all necessary information is gathered, the system performs threshold judgments based on the annotations and switches modes accordingly. If specific conditions are met during the diagnostic process, the framework switches to the AI model retrieval mode, generating diagnostic feedback based on the QASE model and historical inquiries. In the knowledge base retrieval mode, precise diagnostics are provided through the knowledge graph and multi-turn questioning. The pre-trained language model used in this framework is the Wenxin 3.5 model, which is tailored for Chinese data.

Additionally, we illustrate the system’s end-to-end data flow in Figure 2, highlighting the process from user input to the final diagnostic output.

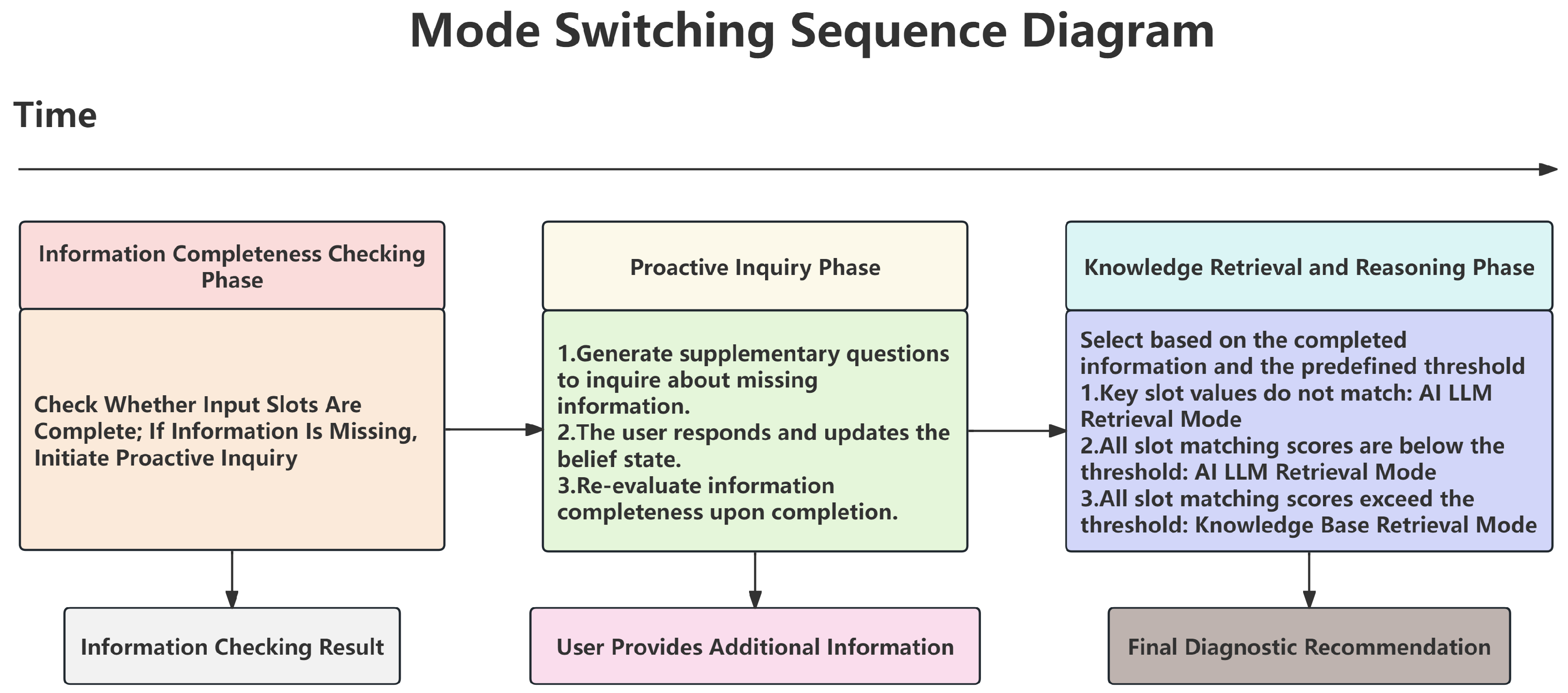

Figure 3 presents the mode-switch timing diagram, demonstrating how the system alternates between knowledge-graph retrieval and model-based retrieval during the diagnostic process.

3.2. QASE Model

In semantic matching tasks, the core objective of an embedding model is to map natural language text into a semantic space such that semantically similar sentence vectors are close to each other, while semantically irrelevant vectors remain distant. Existing sentence-embedding models typically employ pre-trained language models (e.g., BERT or RoBERTa) as encoders and derive sentence-level representations from the output layer using either the [CLS] token or mean pooling. However, such fixed pooling strategies present two major limitations in domain-specific question–answering scenarios: (1) they lack task awareness, making them susceptible to interference from stopwords or function words, which leads to the under-representation of key information; (2) they exhibit limited discriminative power when dealing with hard negatives that are lexically similar but semantically mismatched.

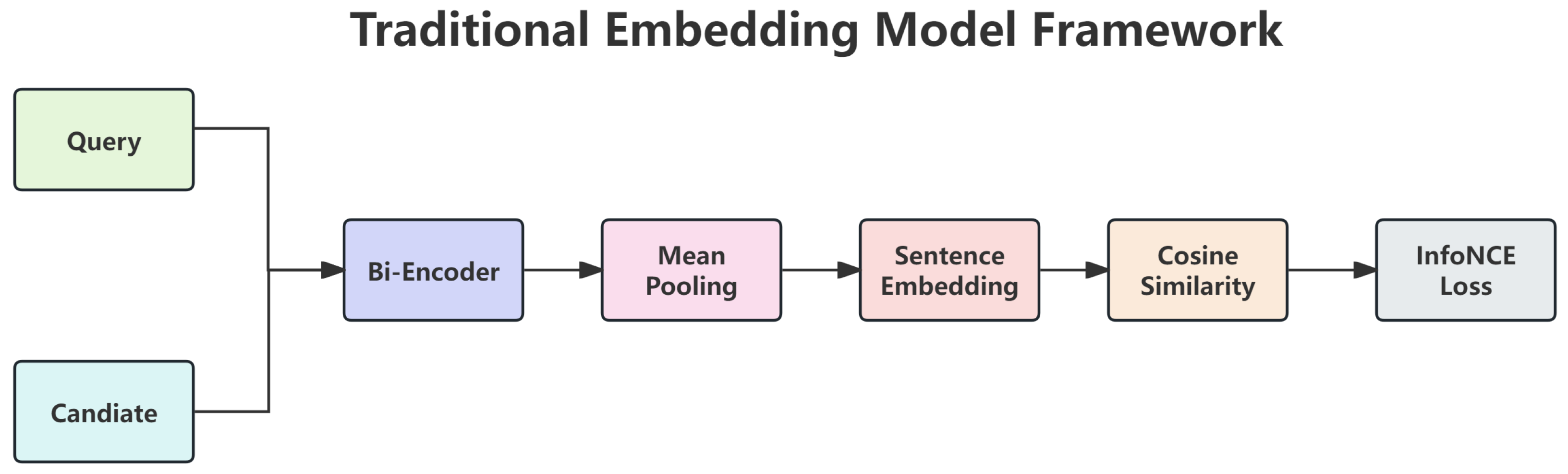

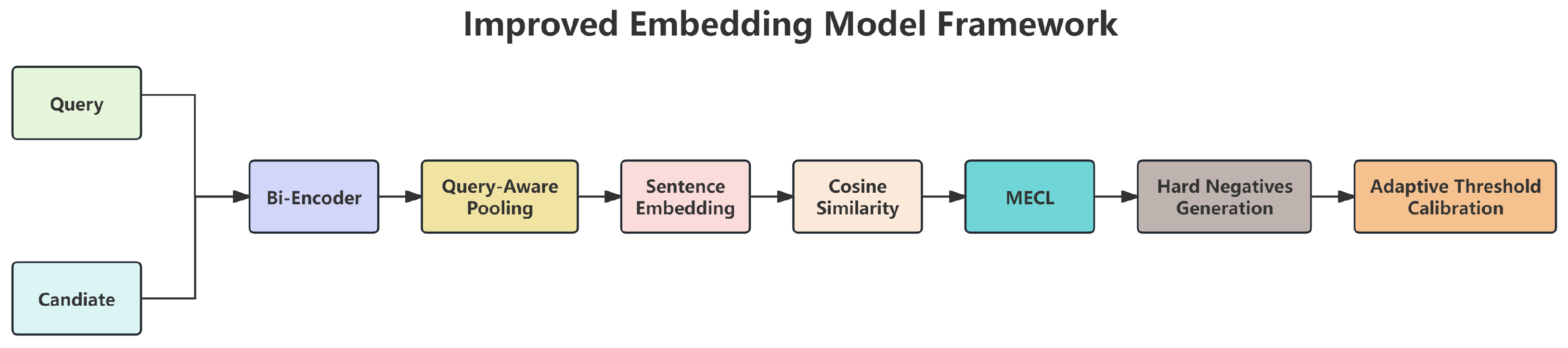

To address these issues, we propose the QASE model by introducing a series of enhancements on top of the traditional bi-encoder framework. Compared with the standard bi-encoder structure (Figure 4), QASE integrates query-aware pooling, enhanced contrastive loss, hard negative mining, and adaptive threshold calibration. These innovations not only preserve the retrieval efficiency of the dual-tower architecture but also significantly improve the discriminative power of semantic representations and the accuracy of question–answer matching. The overall architecture of QASE is illustrated in Figure 5.

The QASE model primarily consists of a bi-encoder, query-aware pooling, cosine similarity computation, margin–exponential contrastive loss (MECL), hard negative mining, and adaptive threshold calibration. Specifically, the bi-encoder encodes the query and the answer separately; query-aware pooling leverages an attention mechanism to extract key semantic features; MECL enhances semantic discrimination by applying positive constraints and negative penalties; hard negative mining generates challenging negative samples—constructing contrastive pairs that are superficially similar but semantically incorrect—to improve the model’s robustness in real-world scenarios; adaptive threshold calibration ensures stability during inference deployment.

Compared with conventional embedding models, our approach introduces three main improvements: the query-aware pooling mechanism (QAP), which enables the model to actively focus on the core semantics of user queries during the embedding stage; the margin–exponential contrastive loss (MECL), which provides stronger discriminative power when handling lexically similar but semantically different negative samples; and adaptive threshold search and calibration, which enhance the model’s stability.

3.2.1. Query-Aware Pooling

We designed a learnable attention pooling mechanism to replace fixed [CLS] or mean pooling strategies. Given the token representations produced by the encoder, key tokens are adaptively selected through trainable attention weights, and the final sentence representation is the attention-weighted sum of the token representations.

The attention scores are parameterized by the trainable parameters W and w. This mechanism enables the model to focus on key entities and action words in the text, such as “engine vibration,” thereby enhancing the discriminative power for question–answer matching.
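The pooling described above can be illustrated with a small sketch. It assumes an additive scoring function, in which token i receives the score w · tanh(W h_i), the scores are normalized with a softmax, and the sentence vector is the weighted sum of the token vectors. This scoring form, the function name `attention_pool`, and the toy values are assumptions for illustration and may differ from the exact formulation used in QASE.

```python
import math
from typing import List

Vector = List[float]


def attention_pool(tokens: List[Vector], W: List[Vector], w: Vector) -> Vector:
    """Pool token vectors with weights softmax_i(w . tanh(W h_i))."""
    scores = []
    for h in tokens:
        hidden = [math.tanh(sum(a * b for a, b in zip(row, h))) for row in W]
        scores.append(sum(a * b for a, b in zip(w, hidden)))
    # Numerically stable softmax over the token scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # Weighted sum of token vectors, dimension by dimension.
    dim = len(tokens[0])
    return [sum(a * h[d] for a, h in zip(alphas, tokens)) for d in range(dim)]


# Hypothetical 2-dimensional token states and parameters.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]  # identity projection

# A zero scoring vector gives uniform weights, i.e. plain mean pooling.
uniform = attention_pool(tokens, W, [0.0, 0.0])

# A scoring vector that favors the first dimension shifts weight toward
# tokens that are active in that dimension.
focused = attention_pool(tokens, W, [5.0, 0.0])
print(uniform, focused)
```

With a zero scoring vector the mechanism reduces to mean pooling, which shows that fixed mean pooling is a special case that the learnable weights can move away from during training.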

3.2.2. Margin-Exponential Contrastive Loss

To enhance the model’s discriminative ability on hard negatives, we propose the margin–exponential contrastive loss (MECL). Given a query vector q and an answer vector a, their similarity is computed as the cosine similarity

$$\operatorname{sim}(q, a) = \frac{q^{\top} a}{\lVert q \rVert \, \lVert a \rVert}.$$

The loss function consists of two components: a positive-example constraint and a negative-example penalty.

The loss is governed by a margin m and a temperature τ. When the similarity of a positive pair is insufficient, the positive-example constraint drives it closer to 1; when the similarity of a negative pair exceeds the margin m, the negative-example penalty grows exponentially. This mechanism encourages positive samples to cluster more tightly while negative samples are pushed farther apart. During training, it strengthens inter-class discriminability and preserves intra-class compactness, thereby improving the discriminative power and robustness of semantic embedding vectors.
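To illustrate how a two-part loss of this kind behaves, the following sketch assumes a linear positive term, 1 − s, and a hinged exponential negative term, exp(max(0, s − m)/τ) − 1, where s is the cosine similarity of the pair. Both functional forms, the function name `mecl_pair_loss`, and the default values of the margin and temperature are assumptions made for illustration and are not the exact formulation used in QASE.

```python
import math


def mecl_pair_loss(similarity: float, is_positive: bool,
                   margin: float = 0.3, temperature: float = 0.1) -> float:
    """Illustrative two-part contrastive loss for one (query, answer) pair."""
    if is_positive:
        # Positive-example constraint: zero only when the similarity reaches 1.
        return 1.0 - similarity
    # Negative-example penalty: zero up to the margin, exponential beyond it.
    excess = max(0.0, similarity - margin)
    return math.exp(excess / temperature) - 1.0


print(mecl_pair_loss(0.6, is_positive=True))
print(mecl_pair_loss(0.2, is_positive=False))
print(mecl_pair_loss(0.5, is_positive=False))
```

Under these assumptions, a negative pair below the margin contributes nothing, while a lexically similar negative that scores above the margin is penalized increasingly steeply as the temperature decreases.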

3.2.3. Lexical–Semantic Coupled Hard Negative Mining

During the training data construction phase, to further enhance the model’s robustness in real-world scenarios, we propose a lexical–semantic coupled hard negative generation strategy. This method leverages TF-IDF to select answers that are lexically highly similar but semantically mismatched as negative samples, thereby constructing contrastive pairs that are “surface-similar but semantically incorrect.” Such a strategy simulates confusion scenarios commonly encountered in actual question–answer retrieval, enabling the model to better learn to distinguish fine-grained semantic differences.
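A minimal sketch of this idea is given below. It computes simple TF-IDF vectors with a smoothed inverse document frequency and, for a given correct answer, selects the most lexically similar other answer as the hard negative. The sketch assumes that every answer other than the labeled one is a semantic mismatch. The function names and example answers are hypothetical, and the TF-IDF weighting is a generic variant rather than the one used in this work.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf_vectors(texts: List[str]) -> List[Dict[str, float]]:
    """Compute simple TF-IDF vectors with a smoothed IDF."""
    tokenized = [t.lower().split() for t in texts]
    n = len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({
            term: (count / len(tokens))
            * (math.log((1 + n) / (1 + df[term])) + 1)
            for term, count in tf.items()
        })
    return vectors


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(u[t] * v.get(t, 0.0) for t in u)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def mine_hard_negative(answers: List[str], positive_idx: int) -> int:
    """Return the index of the wrong answer most similar to the correct one."""
    vecs = tfidf_vectors(answers)
    candidates = [i for i in range(len(answers)) if i != positive_idx]
    return max(candidates, key=lambda i: cosine(vecs[positive_idx], vecs[i]))


answers = [
    "replace the front brake pads",
    "replace the front wiper blades",
    "recharge the air conditioning refrigerant",
]
hard_idx = mine_hard_negative(answers, positive_idx=0)
print(answers[hard_idx])
```

Here the wiper-blade answer shares most of its wording with the brake-pad answer but describes a different repair, which is the kind of surface-similar pair the strategy aims to construct.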

3.2.4. Adaptive Threshold Calibration

During the model evaluation and deployment phase, instead of using a fixed threshold, we perform a grid search on the validation set to determine the optimal threshold and integrate it with the model. This approach ensures stability and reproducibility during inference and deployment, preventing performance fluctuations that may occur when using a fixed threshold across different test sets.
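The calibration step can be sketched as a one-dimensional grid search over candidate thresholds on validation similarity scores. The selection metric (F1), the grid range of [0, 1], the function names, and the toy scores are assumptions for illustration, since the selection criterion is not specified here.

```python
from typing import Sequence, Tuple


def f1_at(threshold: float, scores: Sequence[float],
          labels: Sequence[int]) -> float:
    """F1 score when pairs with score >= threshold are predicted as matches."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def calibrate_threshold(scores: Sequence[float], labels: Sequence[int],
                        steps: int = 101) -> Tuple[float, float]:
    """Grid-search thresholds in [0, 1]; return (best_threshold, best_f1)."""
    grid = [i / (steps - 1) for i in range(steps)]
    best = max(grid, key=lambda t: f1_at(t, scores, labels))
    return best, f1_at(best, scores, labels)


# Hypothetical validation similarities and match labels.
val_scores = [0.91, 0.78, 0.62, 0.55, 0.40, 0.20]
val_labels = [1, 1, 1, 0, 0, 0]
threshold, f1 = calibrate_threshold(val_scores, val_labels)
print(threshold, f1)
```

The selected threshold would then be stored alongside the model so that the same decision boundary is applied at inference time.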

3.3. Fault Detection and State-Tracking Module

The fault detection and state-tracking module plays a crucial role in the overall framework, especially in two key scenarios: one when processing the user’s initial input, and the other when the system is engaging in iterative questioning with the user. Different scenarios require the module to perform different tasks.

When the module is active, two distinct prompts are given to the model based on the task at hand: one is to detect the fault domain, and the other is to output the changes or newly added slot values in the current round and update the global belief state. According to the constructed knowledge graph, automotive faults can be categorized into 91 major phenomena. Therefore, through the fault domain detection prompt, the large model is guided to correctly classify the faults and subdivide them into 91 types. Then, in handling the global belief-state prompt, parameters that need to be focused on are introduced, referred to as slots, which are derived from the knowledge graph.

Due to the diversity of fault manifestations, the language used by users to describe faults often varies, and even descriptions of the same fault may differ. Moreover, faults that appear similar may arise from different mechanisms. This module needs to understand and distinguish these varied expressions to ensure correct classification and matching and to avoid misdiagnosis. By utilizing the knowledge graph, the module locks in the relevant key parameters after matching the fault, forming an initial state table. During the iterative questioning process, the belief state is updated in real time as the user provides new information, ensuring that the diagnosis is always based on the most current information.

Once the fault described by the user is identified, the system leverages the dependencies in the knowledge graph to determine the slots requiring attention. For slots that remain unfilled in the belief state, the system automatically generates supplementary questions. This mechanism does not rely on reinforcement learning; instead, it employs dynamic templates and contextual prompts to formulate queries, enabling low-cost and highly interpretable proactive information completion.
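The slot-filling behavior described above can be sketched as follows. The slot names, the question template, and the function names are hypothetical; in the framework itself the slots are derived from the knowledge graph and the queries are formulated with dynamic templates and contextual prompts.

```python
from typing import Dict, List, Optional

# Hypothetical slot schema for one fault domain.
FAULT_SLOTS: Dict[str, List[str]] = {
    "engine vibration": ["engine speed", "warning light", "mileage"],
}

QUESTION_TEMPLATE = "To narrow down '{fault}', could you describe the {slot}?"

BeliefState = Dict[str, Optional[str]]


def init_belief_state(fault: str) -> BeliefState:
    """Create a state table with every slot of the fault domain unfilled."""
    return {slot: None for slot in FAULT_SLOTS[fault]}


def update_belief_state(state: BeliefState,
                        new_values: Dict[str, str]) -> BeliefState:
    """Write new or changed slot values; ignore slots outside the domain."""
    for slot, value in new_values.items():
        if slot in state:
            state[slot] = value
    return state


def next_question(fault: str, state: BeliefState) -> Optional[str]:
    """Generate a question for the first unfilled slot, if any remains."""
    for slot, value in state.items():
        if value is None:
            return QUESTION_TEMPLATE.format(fault=fault, slot=slot)
    return None


state = init_belief_state("engine vibration")
update_belief_state(state, {"engine speed": "idle"})
question = next_question("engine vibration", state)
print(question)
```

Because each question is produced from the table of unfilled slots, the reason for asking it can be traced directly to the belief state, which is what makes the mechanism interpretable.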

3.4. Initial Information Extraction Module

During the initial input phase, the user’s fault description may not only involve external manifestations but also contain information essential for an accurate diagnosis. This information must be proactively extracted by the system; otherwise, the subsequent knowledge graph-based questioning may repeat inquiries the user has already answered, reducing the system’s intelligence and response efficiency.

Once the system identifies the fault domain associated with the user’s description, the initial information extraction module, in conjunction with the relevant slots for that fault domain, recognizes and extracts the key information already provided in the user’s input and populates the belief-state table with it. This process ensures that the framework avoids redundant inquiries in subsequent steps, thereby improving diagnostic efficiency. Ultimately, this module generates a state table, as shown in Table 1, in which the key information initially mentioned is recorded.

3.5. Dual Information Judgment Module

The dual information judgment module is one of the key strategies in our framework, playing a crucial role after initial information extraction and during the iterative questioning process, wherein the system evaluates and updates the relevance and semantic consistency of user inputs throughout the multi-turn dialogue.

Specifically, this mechanism first takes the user’s responses to follow-up questions and matches them against the slots specified for the fault domain, determining whether each response corresponds to one of the preset slot values. If a match is found, the corresponding slot in the belief-state table is updated. However, considering the richness and diversity of natural language, relying solely on preset slot values cannot cover all possible expressions. Therefore, we introduce secondary semantic discrimination using the large model to further ensure the accuracy of information extraction and updating. This process is accomplished using the prompts shown in Table 2.
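The two-stage judgment can be sketched as a literal match against preset slot values followed by a semantic fallback. In this sketch the second stage is a stub, `fake_llm_judge`, that stands in for the large-model call; the stub, the substring matching rule, and the example values are assumptions for illustration only.

```python
from typing import Callable, List, Optional

Judge = Callable[[str, List[str]], Optional[str]]


def judge_response(response: str, preset_values: List[str],
                   llm_judge: Judge) -> Optional[str]:
    """Stage 1: literal match on preset values. Stage 2: semantic fallback."""
    text = response.lower()
    for value in preset_values:
        if value.lower() in text:
            return value
    # No preset value appears verbatim, so defer to the semantic judge.
    return llm_judge(response, preset_values)


def fake_llm_judge(response: str, preset_values: List[str]) -> Optional[str]:
    """Stand-in for the LLM: maps a known paraphrase to a preset value."""
    paraphrases = {"stopped at a red light": "idle"}
    for phrase, value in paraphrases.items():
        if phrase in response.lower() and value in preset_values:
            return value
    return None  # unrelated answer: the caller should ask a clarifying question


values = ["idle", "acceleration"]
direct = judge_response("It happens at idle", values, fake_llm_judge)
semantic = judge_response("It shakes when stopped at a red light",
                          values, fake_llm_judge)
unrelated = judge_response("I bought the car last year", values, fake_llm_judge)
print(direct, semantic, unrelated)
```

A `None` result corresponds to a response that matches no slot value in either stage, in which case the belief state is left unchanged.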

The dual information judgment module is applied twice during the fault diagnosis process. The first application occurs after initial information extraction, working in tandem with the mode-switching and invocation module to determine the initial mode selection of the framework. The second application happens during the follow-up questioning process, ensuring continuous updates and the accuracy of the global belief state.

When the user provides lengthy inputs, the system invokes a large language model to extract keywords and parameters relevant to the queried slots. If the response is detected to be unrelated to the question, the system initiates a clarifying follow-up inquiry.

3.6. Mode-Switching and Invocation Module

In the proposed framework, we have designed both the knowledge base retrieval mode and the AI large model retrieval mode to adapt to different fault diagnosis needs, thereby improving diagnosis accuracy. The knowledge base retrieval mode is suitable for common faults that have sufficient case support in the knowledge graph, providing precise diagnostic results through follow-up questions and matching. On the other hand, the AI large model retrieval mode is used for rare faults, generating diagnostic suggestions by combining historical inquiry data.

The mode-switching and invocation module has two applications in the framework: the first is in conjunction with the dual information judgment module, and the second is for routing after the global belief state has been fully updated.

In the knowledge graph, different slots have different levels of importance. We have designed the system so that higher-priority slots are asked first, determining the mode-switching strategy.

During the dual information judgment phase, the model questions the user in order of slot priority and matches the responses with preset slot values. If the highest-priority slot remains unmatched, the fault is deemed rare, and the system switches to the AI large model retrieval mode; otherwise, it enters the knowledge base retrieval mode for iterative questioning.

After updating the global belief state, if the initial mode was the knowledge base retrieval mode, a final mode-switching evaluation occurs before the final diagnosis. If low-priority slots still exhibit high mismatch (beyond the threshold), the system switches to the AI large model retrieval mode; otherwise, the knowledge base retrieval mode is maintained to generate the diagnosis.
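The two routing decisions can be sketched as follows. The sketch assumes that the first entry of the priority list is the highest-priority slot, that all remaining slots count as low priority, and that mismatch is measured as the fraction of low-priority slots left unmatched. The threshold value of 0.5, the mode labels, and the function names are likewise illustrative assumptions.

```python
from typing import Dict, List, Optional

KB_MODE = "knowledge_base_retrieval"
AI_MODE = "ai_model_retrieval"

BeliefState = Dict[str, Optional[str]]


def initial_mode(state: BeliefState, priority_order: List[str]) -> str:
    """Route to the AI mode when the highest-priority slot is unmatched."""
    top_slot = priority_order[0]
    return KB_MODE if state.get(top_slot) is not None else AI_MODE


def final_mode(state: BeliefState, priority_order: List[str],
               mismatch_threshold: float = 0.5) -> str:
    """Re-check before diagnosis using the share of unmatched low-priority slots."""
    low_priority = priority_order[1:]
    if not low_priority:
        return KB_MODE
    unmatched = sum(1 for slot in low_priority if state.get(slot) is None)
    ratio = unmatched / len(low_priority)
    return AI_MODE if ratio > mismatch_threshold else KB_MODE


priority = ["symptom timing", "warning light", "mileage"]
rare = {"symptom timing": None, "warning light": "on", "mileage": None}
partial = {"symptom timing": "cold start", "warning light": None, "mileage": None}
complete = {"symptom timing": "cold start", "warning light": "on",
            "mileage": "80,000 km"}

print(initial_mode(rare, priority))
print(initial_mode(partial, priority), final_mode(partial, priority))
print(final_mode(complete, priority))
```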

3.7. Three-Level Knowledge Base

In the field of automotive fault diagnosis, we use a three-level RAG (retrieval-augmented generation) data combination structure to match the data types required by different components of the framework, thus enhancing the diagnostic effectiveness of the system.

The first level consists of diagnostic cases related to common faults. After extracting entities from these cases, we merge them into a more fundamental and easily interpretable information layer. The knowledge graph formed by these entities assists in the fault domain determination, the follow-up questioning of key information, and the generation of final diagnostic opinions in the knowledge base retrieval mode.

The second level consists of cleaned diagnostic conversation data. Entities from the first level are matched with relevant dialogue data in the second level, based on relevance as judged by the large model, to handle less common faults. Dialogues from this level also serve as few-shot examples embedded in the prompt for the large model.

The third level consists of automotive maintenance technical documents, which include clearly defined professional terminology and related knowledge in the automotive field. These foundational pieces of knowledge come from technical documents provided by automotive manufacturers and are highly reliable.

This structure is crucial in the AI large model retrieval mode, where it is combined with the global knowledge graph to improve generation quality, helping the model provide high-quality diagnostic feedback across various fault scenarios.

4. Experiment

4.1. Datasets

The dataset originates from the China headquarters of a well-known German automotive manufacturer and is authorized for use under a commercial research agreement. It comprises approximately 130,000 dialogue instances, covering multi-turn fault inquiry and diagnostic interactions conducted by experienced maintenance technicians. The dialogues span a wide range of vehicle models and fault types, including fault queries, feedback, and diagnostic suggestions provided by technicians, ensuring sufficient diversity. Prior to use, all data were anonymized to remove personally identifiable information (PII), such as vehicle owners’ names, license plate numbers, and contact details. The collection and use of the data fully comply with relevant laws, regulations, and contractual agreements and are strictly limited to research purposes. Given that the dataset contains commercially sensitive information, it is proprietary and not publicly accessible; researchers seeking access may contact the corresponding author to request permission.

As the data originate from real-world dialogues, issues such as missing turns or incomplete follow-up in some cases exist, and therefore, the dataset requires cleaning and organization prior to use.

After the cleaning process, the dataset consists of over 60,000 dialogue entries. We categorize the data based on the seven major automotive assemblies (such as engine, chassis, and electrical) and cluster the fault diagnosis dialogues for each assembly. For faults with similar manifestations, we further analyze how internal parameter differences affect repair plans and summarize diagnostic and repair strategies under different conditions. In total, we identified 91 common major fault types along with 335 corresponding standard diagnostic or repair solutions. In addition, 65 extremely rare faults were also included in the retrieval database, with the fault distribution for each assembly shown in Table 3.

Based on the cleaned and organized data, this study constructs an automotive fault diagnosis knowledge graph. The core of the knowledge graph includes five categories of entities: assembly classification, fault classification, slots, slot values, and repair solutions. Several key relationship types are defined within the graph. Slot combination conditions are used to represent compound conditions formed by multiple slots and their values, enabling the precise identification of complex fault patterns and the efficient triggering of repair solutions. For example, when the combination of specific slot values meets certain conditions, the system can automatically deduce the corresponding repair strategy.
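To make the idea of slot combination conditions concrete, the following minimal sketch deduces a repair solution from a belief state. The rule table, slot names, and solutions are invented examples, and preferring the rule with the most conditions is an assumption made for illustration, not a documented property of the knowledge graph.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical rule: a set of required slot values and the solution it triggers.
Rule = Tuple[Dict[str, str], str]

RULES: List[Rule] = [
    ({"warning_light": "on", "idle": "unstable"}, "inspect ignition coil"),
    ({"warning_light": "on"}, "read fault codes"),
]


def deduce_repair(belief_state: Dict[str, str], rules: List[Rule]) -> Optional[str]:
    """Return the solution of the most specific rule whose conditions all hold."""
    matching = [
        (conditions, solution)
        for conditions, solution in rules
        if all(belief_state.get(slot) == value for slot, value in conditions.items())
    ]
    if not matching:
        return None
    # Assumption: the rule with the most conditions is the most specific one.
    return max(matching, key=lambda rule: len(rule[0]))[1]
```

A belief state that satisfies no rule returns `None`, which a caller could treat as a signal to keep questioning or to fall back to retrieval.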

Since each type of fault may correspond to multiple potential causes, our framework integrates the “fault–symptom–solution” relationships from the knowledge graph to enable reasoning and diagnosis. The knowledge graph encodes the multiple possible causes associated with each fault phenomenon, as well as the key slots that need to be considered when diagnosing these causes. Moreover, the final solution is defined at the component level, meaning that the system explicitly identifies the parts that may require repair or replacement.

During the construction of the knowledge graph, domain experts were repeatedly consulted to review and refine its structure and content, ensuring both accuracy and reliability. They also assisted in designing a multi-fault association mechanism based on slot combinations, enabling the knowledge graph to integrate information across different slots and provide diagnostic support for typical compound or multi-fault scenarios.

During model training, to ensure the integrity of the dataset and the fairness of evaluation, we employed a case-level partitioning strategy, ensuring that the same fault case does not appear in both the training and testing sets, thereby preventing data leakage. In addition, the dataset was partitioned according to vehicle models, guaranteeing that the test set includes samples from each model while minimizing the overlap of similar cases.
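A case-level partition of this kind can be sketched as below. The `case_id` field, the 20% test ratio, and the fixed seed are assumptions for illustration; the additional balancing by vehicle model described above is not reproduced here.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def case_level_split(
    dialogues: List[Dict], test_ratio: float = 0.2, seed: int = 0
) -> Tuple[List[Dict], List[Dict]]:
    """Split dialogues so that all dialogues of one fault case land on the same side."""
    by_case = defaultdict(list)
    for dialogue in dialogues:
        by_case[dialogue["case_id"]].append(dialogue)

    # Shuffle case identifiers, not individual dialogues, to prevent leakage.
    case_ids = sorted(by_case)
    random.Random(seed).shuffle(case_ids)
    n_test = max(1, round(len(case_ids) * test_ratio))
    test_ids = set(case_ids[:n_test])

    train = [d for cid in case_ids if cid not in test_ids for d in by_case[cid]]
    test = [d for cid in case_ids if cid in test_ids for d in by_case[cid]]
    return train, test
```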

4.2. Evaluation Metrics

We evaluated the performance of the framework from multiple dimensions, monitoring the operational process and analyzing the fault domain detection, belief-state tracking, response generation, and overall dialogue performance.

In fault domain detection, we assess the framework’s ability to identify fault types using domain detection accuracy (DDA). Belief-state tracking is measured by the slot-filling F1 score and joint goal accuracy (JGA), which reflect the accuracy and completeness of slot filling. The F1 score combines precision and recall, while JGA represents the proportion of dialogue turns in which all slots are filled correctly.

Specifically, in this study, the knowledge graph predefines key slots that require attention along with their candidate slot value sets. The model semantically matches the user’s natural language responses against these candidate slot values to determine equivalence. If the system determines that a user input is semantically consistent with a candidate slot value, the corresponding slot is updated with this predefined standard value. A correct slot filling is counted when the system accurately identifies and fills a standard slot value that aligns with the user’s intended semantics. Accordingly, the slot-filling accuracy in this study is measured based on complete slot–value pair semantic matching, constituting a span-level evaluation rather than a token-level computation. This approach better reflects the overall accuracy of the system in terms of semantic understanding and belief-state updating.
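The span-level counting described above can be illustrated with a short sketch in which a prediction is correct only if the whole slot–value pair matches the reference. Micro-averaging over turns and the turn-level form of joint goal accuracy are assumptions here, as is the representation of a belief state as a plain dictionary.

```python
from typing import Dict, List, Tuple

BeliefState = Dict[str, str]  # slot name -> standard slot value


def slot_f1(
    predicted: List[BeliefState], gold: List[BeliefState]
) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 over whole slot-value pairs."""
    tp = fp = fn = 0
    for pred, ref in zip(predicted, gold):
        pred_pairs, ref_pairs = set(pred.items()), set(ref.items())
        tp += len(pred_pairs & ref_pairs)
        fp += len(pred_pairs - ref_pairs)
        fn += len(ref_pairs - pred_pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def joint_goal_accuracy(predicted: List[BeliefState], gold: List[BeliefState]) -> float:
    """Share of turns whose full belief state equals the reference exactly."""
    if not gold:
        return 0.0
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)
```

Because a pair counts only when both the slot and its standard value agree, a partially correct value earns no credit, which is what distinguishes this from a token-level computation.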

In addition, belief-state update accuracy is introduced to assess the precision of state updates, and a fuzzy matching strategy is employed to mitigate the impact of spelling errors. During belief-state updates, users may modify previously filled slot values through natural language instructions. To ensure that the system can correctly identify the target slots even in the presence of vague expressions or typographical errors, when a user input contains slot-related descriptions, the system compares these descriptions with the set of slot names in the current belief state.

If expressions similar to or misspelled versions of slot names appear in the input text, the system determines the most likely referenced slot by combining character similarity and semantic similarity. Character similarity is computed using the normalized Levenshtein edit distance, as defined in Equation (6), while semantic similarity is obtained via a text encoder.

The final combined matching score is defined in Equation (7), in which a weighting coefficient balances the two similarities. If the combined matching score of a slot exceeds a predefined threshold, the system determines that the user intends to modify the value of that slot.
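The fuzzy matching step can be sketched as follows. This is an illustration under assumptions, not the exact form of Equations (6) and (7): character similarity is taken as one minus the edit distance divided by the longer string length, the combined score is assumed to be a convex combination controlled by `weight`, and the `weight` and `threshold` defaults are hypothetical. The semantic similarity function is passed in by the caller, since the text encoder is not specified here.

```python
from typing import Callable, List, Optional


def levenshtein(a: str, b: str) -> int:
    """Edit distance computed with a rolling dynamic-programming row."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost))
        previous = current
    return previous[-1]


def char_similarity(a: str, b: str) -> float:
    """Normalized similarity in [0, 1]; 1 means identical strings."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def match_slot(
    mention: str,
    slot_names: List[str],
    semantic_similarity: Callable[[str, str], float],
    weight: float = 0.5,      # hypothetical weighting coefficient
    threshold: float = 0.7,   # hypothetical decision threshold
) -> Optional[str]:
    """Return the slot the mention most likely refers to, or None if no score passes."""
    if not slot_names:
        return None
    scores = {
        name: weight * char_similarity(mention, name)
        + (1 - weight) * semantic_similarity(mention, name)
        for name in slot_names
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > threshold else None
```

With a misspelled mention such as "milage", the character term keeps the score for the slot "mileage" high even when the encoder similarity is only moderate.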

For response generation, we evaluate the quality of generated responses using BLEU and ROUGE metrics, which focus on word-level overlap and content coverage, respectively. Specifically, we employ BLEU-2 and ROUGE-L in our experiments. For overall performance, the framework is primarily evaluated based on fault diagnosis accuracy, which is determined by calculating the semantic similarity between the generated diagnostic results and the actual outcomes. These multi-dimensional evaluation metrics comprehensively reflect the applicability and effectiveness of the framework in different task scenarios. Some relevant metrics are calculated as follows:
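For reference, the standard forms of the classification and tracking metrics named above are given below. These are the conventional definitions, which we assume correspond to the ones used in this study; $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives over slot–value pairs, and the $N$ terms are counts of dialogues or turns.

```latex
\[
\begin{aligned}
\mathrm{DDA} &= \frac{N_{\text{dialogues with correct domain}}}{N_{\text{dialogues}}}, \\
P &= \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \\
F_1 &= \frac{2 \, P \, R}{P + R}, \\
\mathrm{JGA} &= \frac{N_{\text{turns with all slots correct}}}{N_{\text{turns}}}.
\end{aligned}
\]
```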

4.3. Automatic Metrics Results

For baseline comparisons, we conducted experiments using GPT-3.5 (base model), GPT-4, GPT-3.5 with retrieval-augmented generation (RAG), GPT-4 with RAG, and HuDi_LLM. The specific model versions used were GPT-4-0125-preview and GPT-3.5-turbo-0125.

The GPT baseline models were evaluated under both zero-shot and few-shot settings. Specifically, the GPT-3.5 baseline experiments were conducted in the zero-shot setting without any retrieval augmentation or fine-tuning to ensure fair comparison across frameworks. In the few-shot setting, five examples were provided per task. All models were evaluated with a context length of 4096 tokens, the same retrieval size, and retrieval indices constructed from knowledge graph nodes (entities and triples), technical document summaries, and question–answer pairs. The zero-shot and few-shot prompts are presented in Table A1 and Table A2 of Appendix A.

Since our dataset consists of Chinese automotive diagnostic dialogues provided by the China headquarters of a German automotive company, we additionally employed the Wenxin 3.5 model, a Chinese-optimized LLM, to evaluate its performance in mixed-language scenarios.

4.3.1. Domain Detection

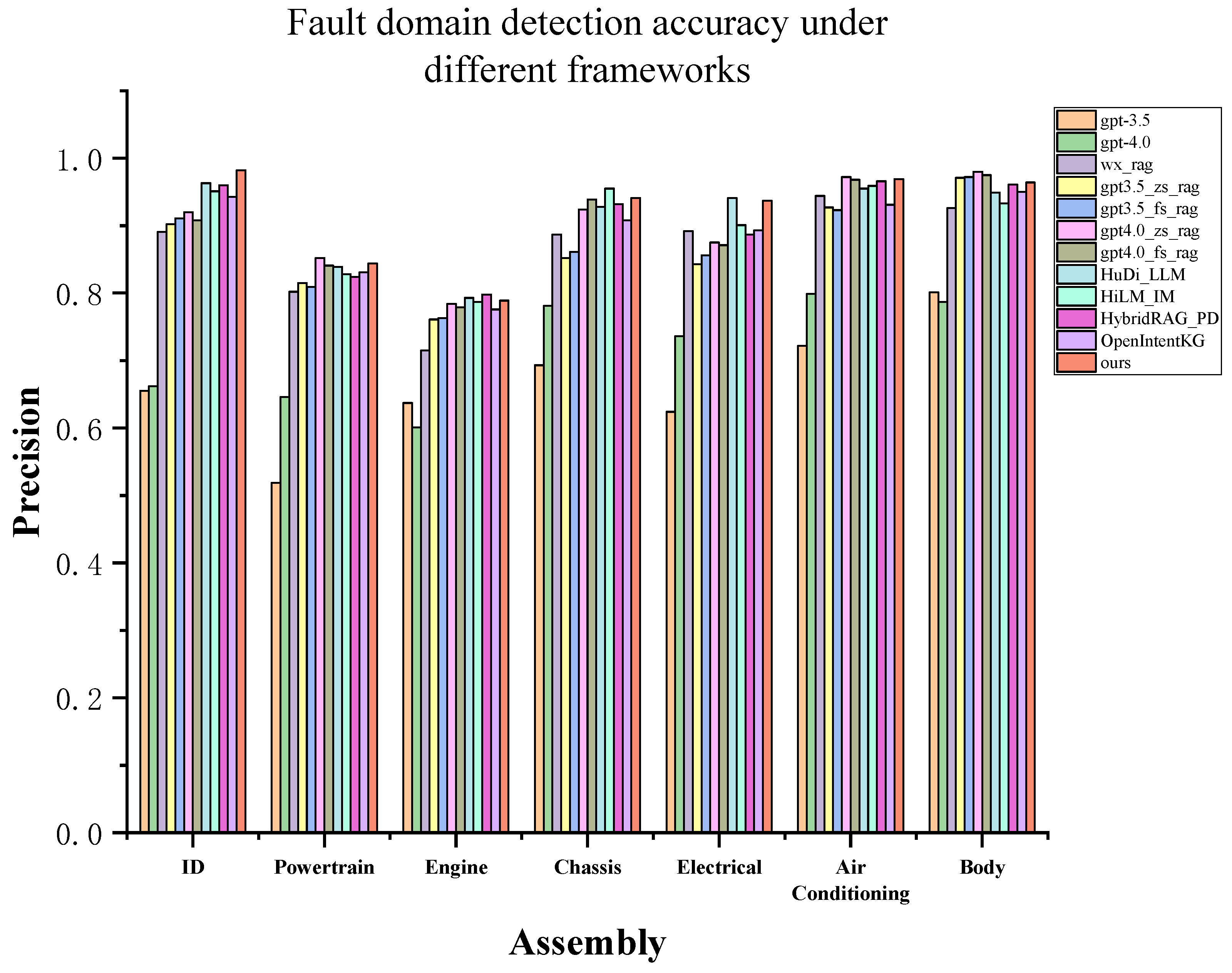

In Figure 6, we compare the fault domain detection accuracy of different frameworks for various components in automotive fault diagnosis. The experimental results show that there are certain differences in fault detection accuracy across frameworks, which may influence the subsequent information inquiry paths and diagnostic decisions. In some cases, relying solely on pre-trained models may fail to accurately identify fault patterns based on natural language descriptions, leading to faults that should belong to different components being incorrectly classified under the same component. However, under the frameworks based on RAG or knowledge graphs, the fault domain detection accuracy was improved.

Additionally, we observed that, regardless of the detection framework used, the fault domain detection accuracy for the powertrain component and the engine component consistently remained at a lower level compared to the other major components. We hypothesize that this may be due to the more complex structure of these two components and the fact that their fault manifestations share more similarities with faults from other components, making it more challenging for the model to learn, which ultimately results in lower detection accuracy compared to the other components.

The multi-metric results, including precision, recall, the F1 score for each component, and the dialogue success rate for each component, are presented in Table 4. In this study, a dialogue is considered successful if the system provides the correct final diagnostic recommendation. Moreover, Figure 7 illustrates the confusion relationships among different components. It can be observed that the model still exhibits some confusion between semantically similar categories; however, it is able to correctly distinguish fault types of different systems in most scenarios.

4.3.2. Belief-State Tracking

The results of belief-state tracking (Slot-F1, JGA, and belief-state update accuracy) are shown in Table 5. The results demonstrate that our approach outperforms the other frameworks in both accuracy and responsiveness to state changes. This is primarily due to the dual information judgment module, which safeguards belief-state updates and improves the capture of the user’s true intent. By transforming the task into semantic matching, we avoid the misunderstanding or information loss that may arise when the large model handles lengthy or complex responses.

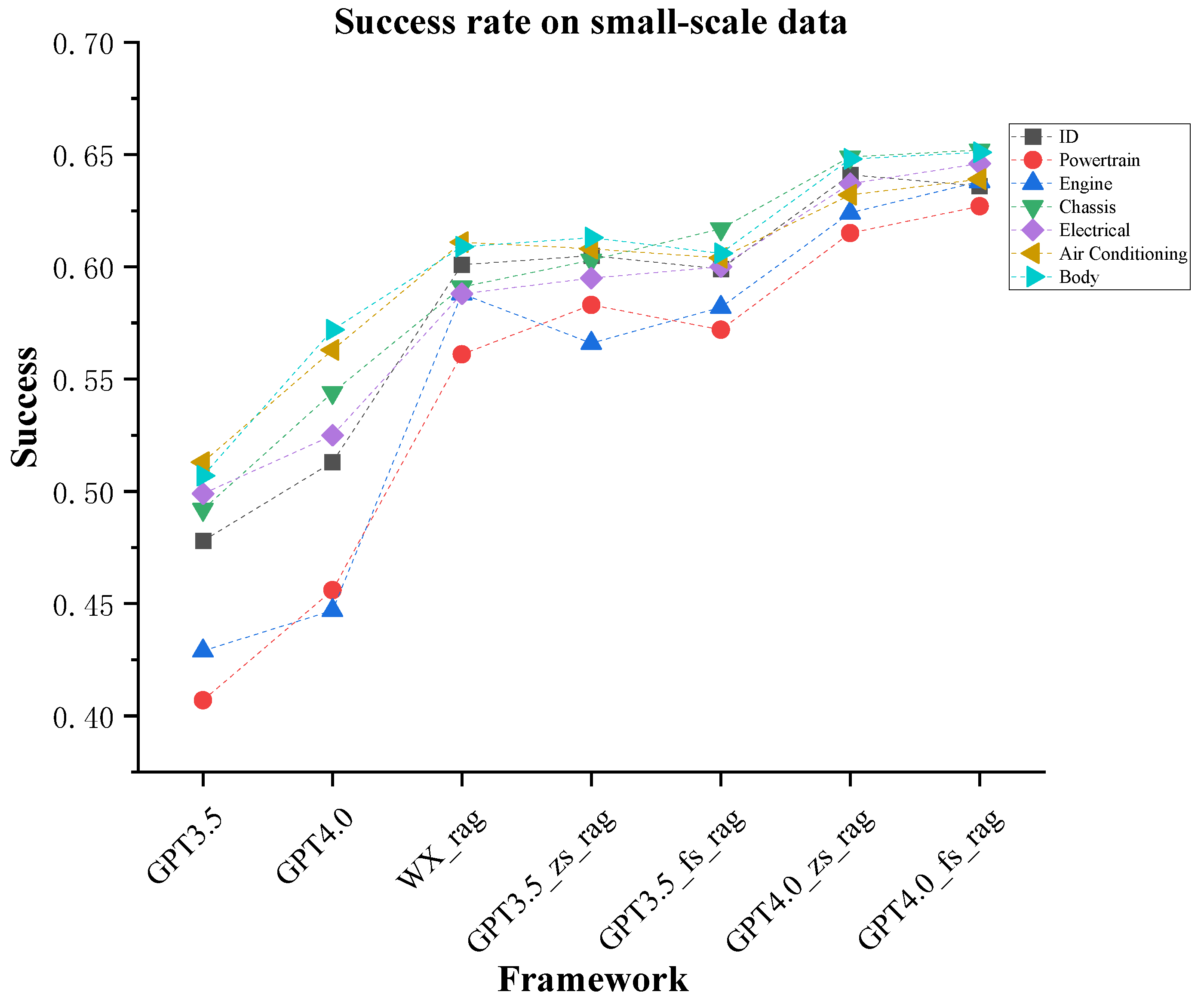

As shown in Figure 8, on smaller-scale datasets (such as individual components), simpler frameworks can achieve good results through customized prompts. While our framework uses fewer examples in the prompts, we can adapt strategies from these simpler frameworks by increasing the number of examples in the prompts for more specific tasks to improve performance. However, our work focuses more on enhancing the generalization across overall automotive repair scenarios rather than optimizing for a single component. On large-scale datasets, excessively long prompts may be counterproductive and hinder the improvement of cross-component generalization abilities.

4.3.3. Response Generation

The evaluation results of response generation quality (BLEU and ROUGE-L) are shown in Table 5. It can be observed that the overall scores for these two metrics are relatively low. For pre-trained models, the lower diagnostic accuracy directly affects the generation quality, resulting in lower BLEU and ROUGE-L scores. In traditional frameworks combining large models with RAG, the responses from the large model are usually more verbose. Although their diagnostic accuracy is only average, the longer outputs reduce the BLEU brevity penalty, which improves the scores slightly. In our framework, by contrast, the higher diagnostic accuracy means that the generated responses are more semantically aligned with the reference answers, leading to relatively higher BLEU and ROUGE-L scores. That said, because a dialogue admits multiple valid responses, the use of a single reference text limits the upper bounds of these metrics.
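The effect of response length on BLEU mentioned above comes from the brevity penalty in the standard BLEU definition, which can be written in a few lines. The lengths used in the usage note are invented for illustration.

```python
import math


def brevity_penalty(candidate_len: int, reference_len: int) -> float:
    """Standard BLEU brevity penalty: 1 if the candidate is longer than the
    reference, exp(1 - r / c) otherwise."""
    if candidate_len == 0:
        return 0.0
    if candidate_len > reference_len:
        return 1.0
    return math.exp(1 - reference_len / candidate_len)
```

For a 20-token reference, a 30-token response is not penalized, while a 10-token response has its n-gram precision scaled by exp(-1), roughly 0.37. Verbosity therefore avoids the penalty but does not by itself raise n-gram precision.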

4.3.4. Dialogue-Level Performance

Table 5 presents the evaluation results for overall dialogue success rates. In this study, a dialogue is considered successful if a correct diagnostic conclusion is provided at the end. The experimental results indicate that our framework outperforms other frameworks in terms of dialogue success rate. This advantage can be attributed to several key factors, such as the high accuracy and timeliness of belief-state tracking, as well as the role of the mode-switching module. This module is capable of selecting the appropriate diagnostic mode based on different contexts, thereby optimizing the diagnostic process. These factors work in synergy, making the diagnostic process more standardized, ensuring that it is well-supported by sufficient evidence, and effectively reducing diagnostic errors.

4.3.5. The Impact of Different Numbers of Cases in Prompts

We also investigated the impact of the number of examples in prompts on dialogue success rates. In our framework, prompts with examples are mainly applied to the information extraction and update process. The results, shown in Figure 9, indicate that a small number of examples, compared to zero-shot, leads to better performance. However, increasing the number of examples further does not result in additional performance improvements.

In addition, Table 6 provides the 95% confidence intervals for the key metrics and presents t-test results for critical comparisons. All experiments were conducted based on three random splits, with confidence intervals computed using 1000 bootstrap resamples. The results indicate that while the proposed framework does not show statistically significant differences on DDA compared to some strong baselines (e.g., GPT4.0_zs_rag, HuDi_LLM), it achieves statistically significant improvements on major metrics such as Slot-F1, JGA, and Success. These findings further support the robustness and reliability of the proposed framework.
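A percentile bootstrap of the kind referred to above can be sketched as follows. The choice of the percentile method, resampling per-dialogue outcomes, and the fixed seed are assumptions for illustration; the exact procedure behind Table 6 is not specified here.

```python
import random
from typing import List, Tuple


def bootstrap_ci(
    values: List[float], n_resamples: int = 1000, level: float = 0.95, seed: int = 0
) -> Tuple[float, float]:
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the mean of each resample.
    means = sorted(sum(rng.choices(values, k=n)) / n for _ in range(n_resamples))
    lower_idx = int((1 - level) / 2 * n_resamples)
    upper_idx = int((1 + level) / 2 * n_resamples) - 1
    return means[lower_idx], means[upper_idx]
```

Passing a list of 0/1 dialogue outcomes, for example, yields an interval for the success rate.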

4.4. Human Evaluation

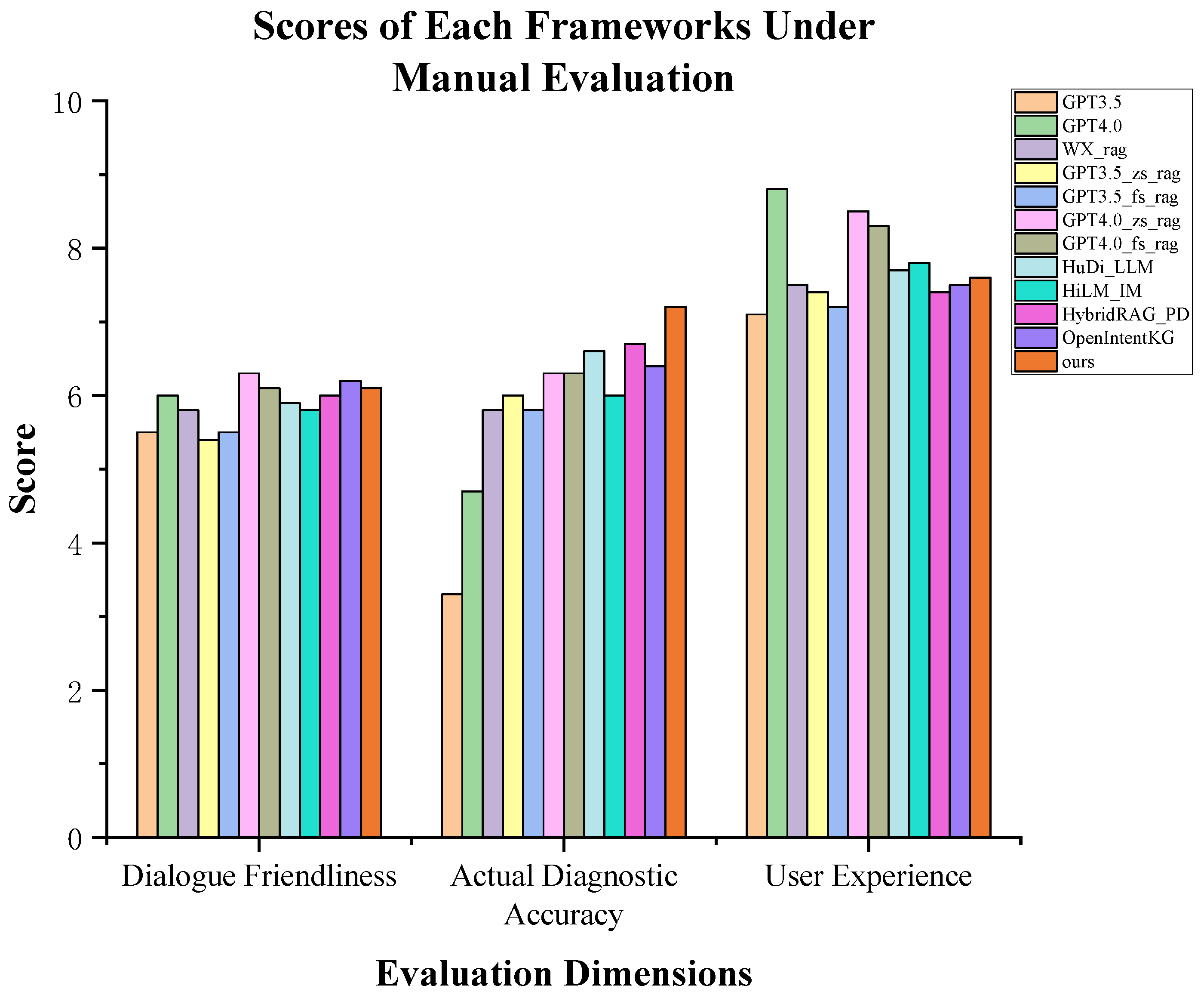

We invited 10 drivers with a certain level of knowledge about vehicle repairs to test our proposed diagnostic framework and other existing frameworks based on common faults and diagnostic results they encounter. Participants rated the frameworks on three dimensions (conversational friendliness, diagnostic correctness, and user experience) with scores ranging from 1 to 10, as shown in Figure 10. The results indicate that all frameworks scored similarly in conversational friendliness, as they all incorporated large language models and were able to communicate normally with users. In terms of user experience, GPT-4.0 had the fastest response time and scored the highest, while our framework outperformed the others in diagnostic correctness, which suggests stronger accuracy in fault identification and higher user satisfaction.

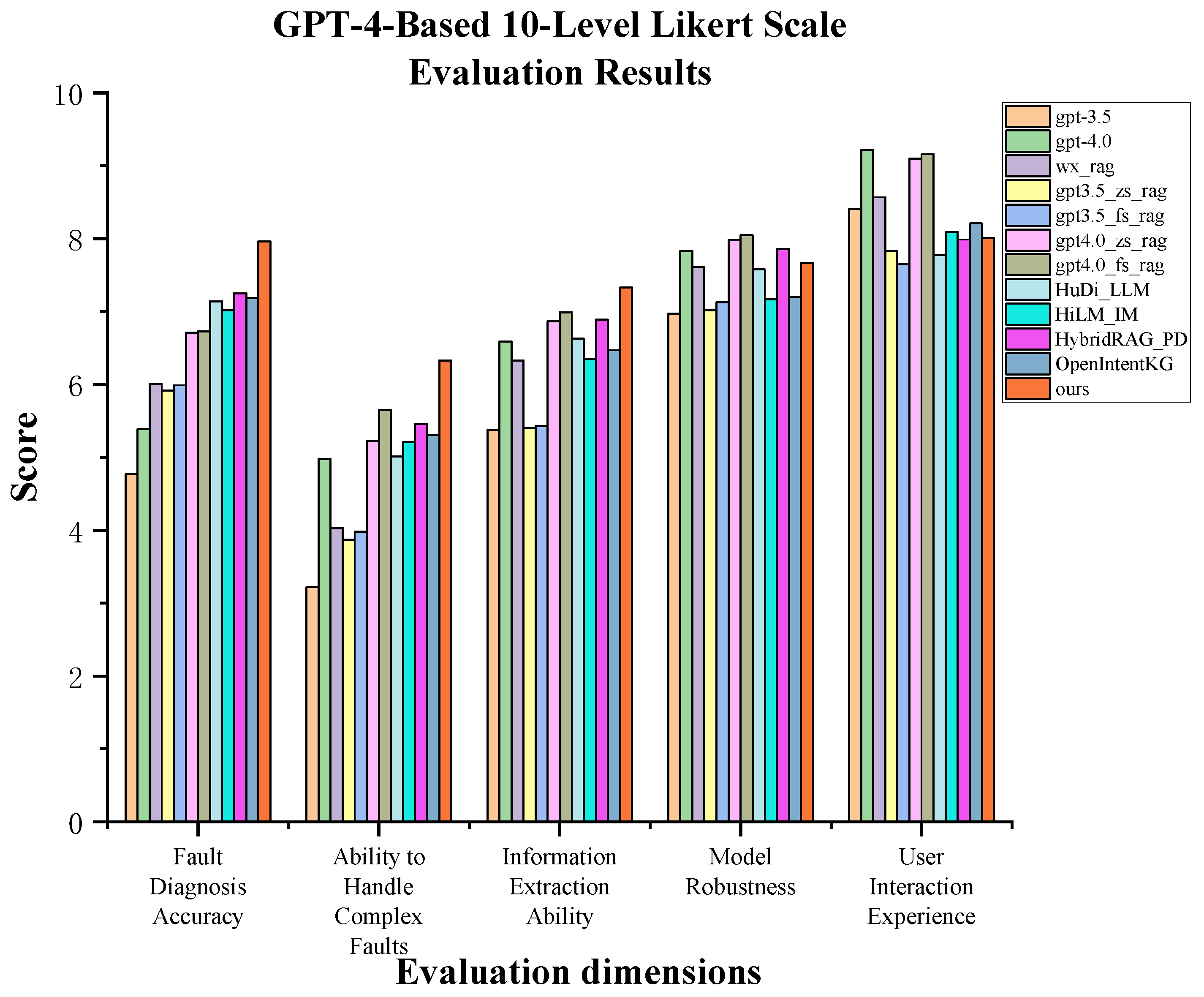

The relevant literature [46] suggests that the evaluation results of large language models are highly consistent with human evaluations and exhibit strong stability. Therefore, we introduced GPT-4.0 for framework comparison evaluation. We designed a 10-point Likert scale and used the GPT-4-0125-Preview model to evaluate each framework across several dimensions, including fault diagnosis accuracy, complex fault handling ability, information extraction capability, model robustness, and user interaction experience, with scores ranging from 1 to 10 for each dimension. The evaluation results are shown in Table 7, and the bar chart of GPT-4’s average scores across different frameworks is presented in Figure 11. Through comparison, our framework demonstrated improvements in fault diagnosis accuracy, complex fault handling, and information extraction ability compared to other frameworks. However, the user interaction experience score was relatively lower, mainly because our framework tends to provide direct explanatory solutions, which may seem somewhat rigid and not as smooth and natural as pre-trained models.

4.5. Ablation Experiment

To verify the effectiveness of each module, we conducted ablation experiments on the framework architecture. In these experiments, we first evaluated the QASE model together with the remaining modules and their combinations. The results are reported in Table 8. As shown in Table 8, each module contributes positively to the overall performance of the framework, confirming the rationality of the proposed design.

Subsequently, we further tested the retrieval performance of different component combinations of the QASE model on rare fault diagnosis QA data. The results are presented in Table 9. As can be seen in Table 9, compared with the baseline bi-encoder model, the inclusion of our proposed components consistently leads to better performance.

4.6. Fault Diagnosis System

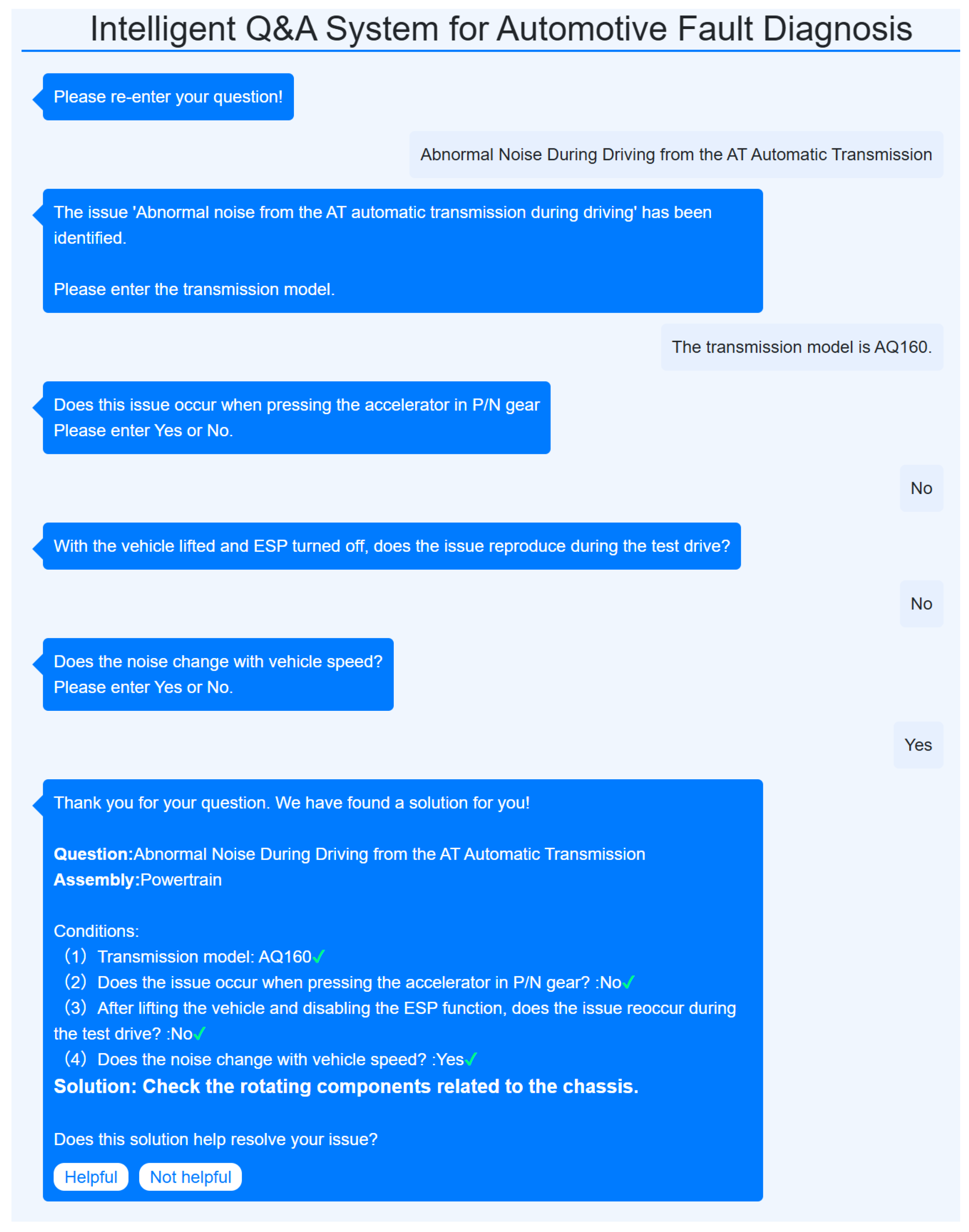

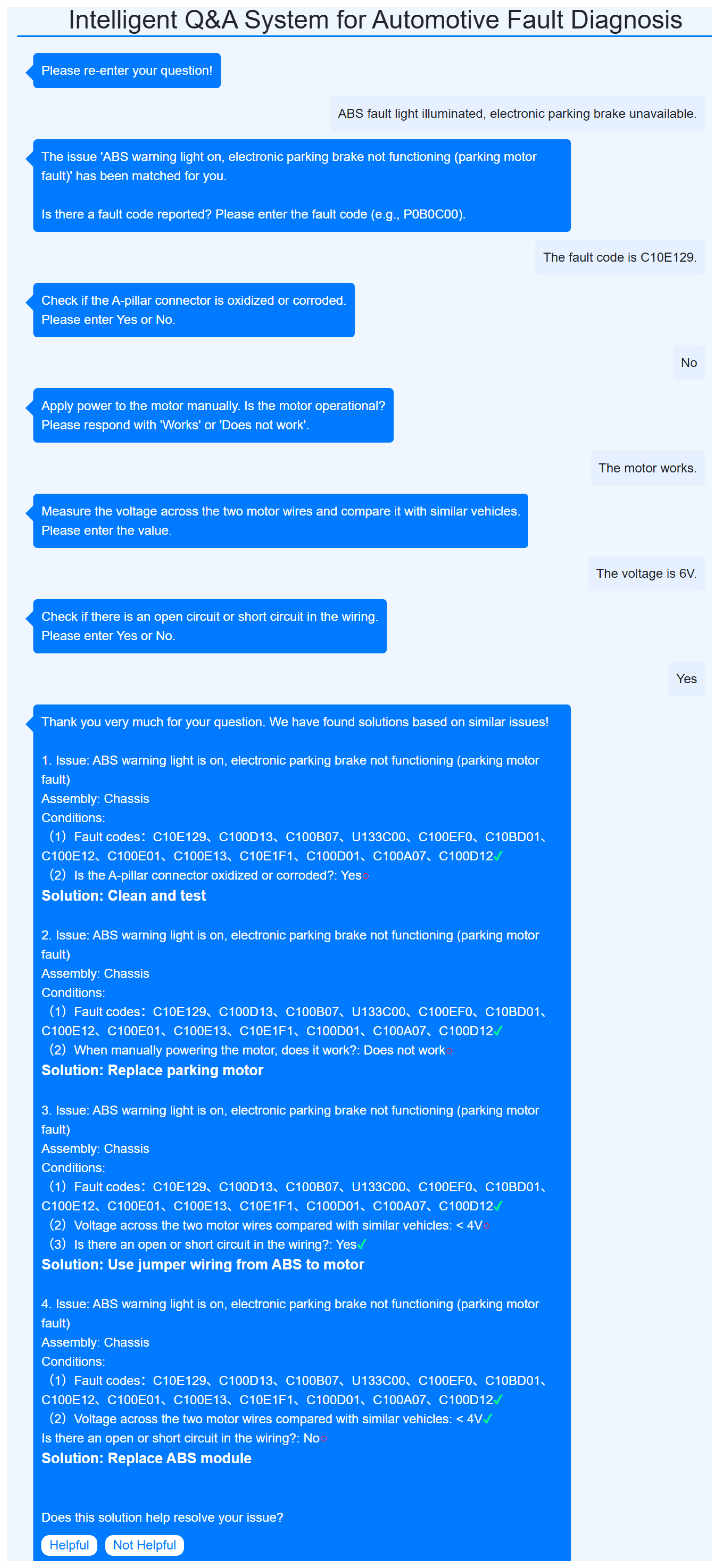

Based on the fault diagnosis framework proposed in this paper, we conducted diagnostic demonstrations for both common and rare fault types. Common faults were identified and processed using the knowledge base retrieval mode, while rare faults were diagnosed through the AI large model retrieval mode, which also provided repair information from similar cases for user reference. The complete fault diagnosis dialogue processes are illustrated in Figure A1 and Figure A2 in Appendix A.

5. Discussion

This paper proposes a novel automotive fault diagnosis framework to address the challenges of low efficiency, high costs, and trust issues inherent in traditional methods. By integrating artificial intelligence technologies and exploring case studies where knowledge from other domains is combined with large models, we ultimately developed a new framework for automotive fault diagnosis.

During the research process, we collaborated with a well-known German automotive company, utilizing their raw automotive fault diagnosis dialogue data to successfully construct a standardized dataset and related knowledge graph. To enhance the framework’s diagnostic capabilities, we designed and incorporated several key modules: the QASE model, fault detection and state-tracking module, initial information extraction module, dual information judgment module, mode-switching module, and hierarchical knowledge base structure. These modules enable the framework to not only correctly understand and categorize faults but also proactively query missing information and provide precise diagnoses.

Specifically, the QASE model integrates query-aware pooling, enhanced contrastive loss, hard negative mining, and adaptive threshold calibration. These innovations not only preserve the retrieval efficiency of the dual-tower architecture but also significantly improve the discriminative power of semantic representations and the accuracy of question–answer matching. The fault detection and state-tracking module ensures accurate follow-up queries by understanding the semantic information from the user. The initial information extraction module prevents redundant questioning and enhances system intelligence. The dual information judgment module handles different expressions of the same meaning. The mode-switching module selects the appropriate processing mode based on diagnostic information. Finally, the hierarchical knowledge base supplies the data each component requires, further improving diagnostic accuracy.

When applying this framework for fault diagnosis, we achieved an accuracy rate of 77.3%, which represents an improvement of 46.1% over the pre-trained large model (GPT-3.5) and a more than 14% improvement over other common frameworks combining domain knowledge with large models. In Table 8, we also validated various combinations of modules, confirming that each component contributes to the overall performance.

For human evaluation, we invited ten drivers with basic maintenance knowledge to rate the dialogue framework in terms of user-friendliness, diagnostic accuracy, and overall user experience. In addition, GPT-4 was employed to perform a 10-point Likert-scale assessment. In future work, we plan to conduct large-scale surveys and field studies involving professional technicians and end users to systematically evaluate the practical applicability of the proposed framework in real-world maintenance scenarios.

Our framework possesses a certain capability for uncertainty reasoning. When user descriptions are incomplete or contain missing information, the system retrieves semantically similar historical cases and generates multiple candidate diagnostic results. Based on the currently available information, it outputs the most probable repair suggestions, thereby enhancing the robustness and interpretability of the diagnostic process.

Although the current system does not explicitly output confidence scores, the reasoning paths are traceable to entity–relation chains in the knowledge graph, providing transparent sources for each diagnosis and repair recommendation and enabling explainability and result verification. Furthermore, when generating diagnostic suggestions, the system simultaneously presents a slot-value matching table, allowing users to understand the basis of each diagnosis and assisting technicians in verification.

In future work, we plan to incorporate confidence estimation to further improve the reliability and trustworthiness of the diagnostic framework.

Although the system demonstrates excellent performance in fault diagnosis, several types of errors were identified during the evaluation process. The main categories are as follows:

- (1) Slot Misfilling: When the user’s expression is relatively indirect, the model may misinterpret the slot value, leading to incorrect diagnostic conclusions. For example, in the case of an engine camshaft exhibiting delayed ignition or failing to reach the specified adjustment target, the model may ask whether the camshaft signal wheel phase is normal. If the user does not respond directly but instead provides a comparison result based on another vehicle’s top dead center position, inevitable measurement deviations may cause the model to incorrectly determine that the phase is abnormal, resulting in an incorrect recommendation to replace the camshaft, whereas the actual fault lies in the pulley.

- (2) Knowledge Graph Coverage Gap: Some fault scenarios are not sufficiently represented in the knowledge graph due to the limited number of related cases, leading to incomplete or inaccurate diagnostic results. For instance, the issue where the instrument cluster occasionally displays a message indicating that the lane-keeping assistance system is temporarily unavailable occurs very rarely. As a result, the corresponding solution in the knowledge graph is incomplete. When encountering such issues, the system typically suggests restarting the vehicle’s system; however, a simple restart may not necessarily resolve the problem, as a software version update might also be required.

- (3) Language Style Bias: The model exhibits inconsistent performance when faced with different linguistic styles. For example, when users describe a fault using expressions that include regional dialects or non-standard phrasing, the model may occasionally misinterpret the meaning and classify the fault incorrectly, leading to diagnostic errors.

To address the issue of slot misfilling, we plan to augment the training data with a greater variety of synonyms and paraphrased expressions to enhance the model’s semantic understanding capability. For the knowledge graph coverage gap, we will continue collaborating with automotive manufacturers to collect additional diagnostic data and regularly update the graph with missing fault types and repair solutions. To mitigate the impact of language style bias, future work will focus on corpus diversification and the normalization of dialectal or colloquial expressions, thereby reducing the model’s sensitivity to linguistic variations.

This study still has several limitations, which can be addressed in the following directions:

- (1) Homophones and spelling errors in Chinese user input may affect diagnostic accuracy. In future work, we plan to enhance the system’s fault tolerance by strengthening the spelling correction and synonym mapping mechanisms, thereby reducing misdiagnoses caused by user input errors.

- (2) Although the proposed framework achieves satisfactory performance in fault diagnosis accuracy, there is still room for improvement in response speed and efficiency. Future work should further optimize the framework architecture to enhance responsiveness and fluency, thereby improving the overall user experience.

- (3) The scalability of the framework is also a key direction for future research. Due to variations in system architectures across different brands and vehicle models, the entities and relations in the knowledge graph may need to be adjusted for specific models. Future work could leverage transfer learning, cross-brand data mapping, and automated knowledge extraction techniques to enable dynamic updates and cross-model expansion of the knowledge graph, thereby enhancing the system’s generalizability and maintainability.

- (4) Wenxin 3.5 was selected as the underlying language model in this study primarily due to its strong capabilities in Chinese semantic understanding and domain-specific dialogue processing, which allow it to better accommodate Chinese data in the automotive diagnostic domain. Nevertheless, we acknowledge that Wenxin 3.5 still exhibits performance gaps compared to some other models, such as Tongyi 3.5, DeepSeek v3, Claude 3, and Gemini 1.5. In future work, we plan to incorporate model replacement and multi-LLM collaboration mechanisms within the framework, aiming to achieve higher performance and multilingual support without altering the system’s architecture.

6. Conclusions

In this paper, we proposed a new framework that combines domain knowledge with large models to address the challenges of inefficiency and trust issues in automotive fault diagnosis. Compared to traditional retrieval-augmented generation frameworks, our framework introduces new modules such as the QASE model, fault detection and state tracking, initial information extraction, dual information judgment, mode switching, and hierarchical knowledge bases, all of which have been validated for their effectiveness through multiple evaluations. Our framework achieved an accuracy of 77.3% in automotive fault diagnosis, an improvement of 46.1% over the pre-trained large language model (GPT-3.5), and it surpassed other frameworks by more than 14%. Moreover, the framework demonstrates good cross-domain applicability and can be extended to fault diagnosis in other industries. Future research will focus on exploring its potential in other fields.