Abstract

In large-scale distributed storage simulations, disk simulation plays a critical role in evaluating system reliability, scalability, and performance. However, existing virtual disk technologies struggle to support ultra-large capacities and high-concurrency workloads under constrained physical resources. To address this limitation, we propose an overcapacity mapping (OCM) virtual disk technology that substantially reduces simulation costs while preserving functionality similar to that of real physical disks. OCM integrates thin provisioning and data deduplication at the Linux Device Mapper layer to construct virtual disks whose logical capacities greatly exceed their physical capacities. We further introduce an SSD-based tiered asynchronous I/O strategy to mitigate performance bottlenecks under high-concurrency random read/write workloads. Experimental results show that OCM achieves substantial space savings in scenarios with duplicate data. In high-concurrency workloads involving small-block random I/O, cache acceleration yields up to 7.8× write speedup and 248.2× read speedup. Moreover, we deploy OCM in a Kubernetes environment to build a Ceph system with 3 PB of logical capacity using only 8.8 TB of physical resources, achieving 98.36% disk cost savings.

1. Introduction

With the rapid advancement of the information society, the volume of data generated worldwide has been increasing exponentially. At present, approximately 400 million TB of data are generated globally each day, and the global data volume is projected to reach 181 ZB by 2025 [1]. This trend not only underscores the role of data as a critical factor of production but also accentuates the urgent demand for high-performance, large-capacity storage. Large-scale storage systems have consequently become a core element of modern digital infrastructure and are widely deployed across diverse domains, including the Internet of Things, finance, and converged media. As related technologies continue to advance, application scenarios are expected to become increasingly diverse, posing new challenges to the reliability, scalability, and performance of large-scale distributed storage systems. In-depth research on and evaluation of such systems is therefore of considerable importance.

However, when conducting systematic research on large-scale storage systems, directly establishing an experimental environment with real physical equipment often presents two major challenges: First, fully replicating a large-scale storage system requires substantial hardware investment, along with complex deployment, operational, and resource scheduling overheads. Second, reducing the scale of the experimental environment, although cost-effective, leads to limited node counts, simplified network structures, and insufficient load capacity, thereby preventing experimental results from accurately capturing critical issues observed in real-world scenarios, such as node failures, network congestion, and degraded high-concurrency I/O performance. To address these challenges, storage simulation technology has emerged as an important alternative to real-world deployments.

Storage simulation technology reproduces the operational characteristics of large-scale storage systems, including their architecture, operational mechanisms, and typical scenarios such as node failures, network congestion, and high concurrency, within a low-cost and controllable virtual environment. This enables researchers to analyze, optimize, and evaluate system reliability, scalability, and performance without relying on large-scale physical equipment. Storage simulation research is typically organized into abstraction levels, including the architecture, device, I/O, and application layers. Among these, disk simulation is a critical device-layer technology that models and reproduces the behaviors of the underlying storage medium, such as capacity management, read/write latency, concurrent access, and error semantics. Current disk simulation research generally falls into two categories: (1) disk simulation frameworks targeting the device and micro-architecture layers, which describe medium and firmware behavior by modeling control paths, I/O queues, and parallel scheduling; and (2) virtual disks aimed at system integration, which expose standard block device interfaces that the operating system and upper-layer applications can directly recognize and use. Frameworks in the first category, such as SimpleSSD [2], MQSim [3], and FlashSim [4], are valuable for evaluating disk I/O strategies and micro-architecture designs (e.g., parallelism, scheduling, and garbage collection). However, their results generally remain at the model level or are reported as statistics, and they do not provide a mountable block device interface, which limits their applicability to large-scale storage simulation. In contrast, virtual disk technology offers stronger integration capabilities and greater simulation fidelity, making it better suited to large-scale storage simulation and research. 
Building on this, the present study focuses on virtual disk technology and explores a method for constructing virtual disks that can be recognized and utilized by upper-layer systems, thereby supporting the infrastructure required for large-scale storage simulation.

Various technologies have been proposed to construct high-capacity virtual disks. Hajkazemi et al. [5] identified significant write amplification issues in networked virtual disks during small-block random writes and proposed the Log-Structured Virtual Disk (LSVD). By incorporating a log-structured architecture at both the cache and backend storage layers, LSVD enhances write performance and consistency guarantees in cloud storage environments. This architecture leverages a sequential write strategy, where incoming data is first logged in a local cache before being sequentially uploaded to object storage, thereby significantly mitigating performance degradation caused by random writes. Xing et al. [6] addressed the issue of load imbalance across routing servers arising from skewed access patterns in cloud block storage systems by introducing VDMig, a virtual disk management mechanism driven by adaptive migration based on access characteristics. This mechanism analyzes the access trajectories of large-scale cloud virtual disks (CVDs) and employs semantic-aware techniques for load modeling and migration scheduling. Through this approach, it achieves fine-grained load balancing at the routing layer, thereby significantly enhancing overall service quality and improving system resource utilization. Zhao et al. [7] introduced RR-Row (Redirect-on-Write), a virtual machine disk format designed for record/replay scenarios in cloud environments. By adopting a redirect-on-write mechanism that retains all the written data within the virtual disk, RR-Row enables logging systems to record only block IDs instead of full data content, reducing log volume by up to 68% and enhancing system I/O performance. Tian et al. [8] proposed a secure virtual disk, termed AtomicDisk, for trusted execution environments. Its core mechanism introduces synchronous atomicity to mitigate data inconsistency caused by eviction attacks. 
In this design, the system preserves data freshness and integrity against malicious or abnormal evictions by distinguishing between user-initiated submissions and cache evictions during synchronous writes. Raaf et al. [9] systematically investigated and enhanced Red Hat's Virtual Disk Optimizer (VDO). To address the substantial performance degradation caused by enabling inline deduplication and compression on HDDs, they proposed optimization strategies including on-demand allocation (ODA), optimization of non-verified (NV) deduplication based on strong hashing, and migration of metadata to SSDs. They further introduced a read-path prefetching mechanism to improve the performance and cost efficiency of virtual disks under sequential workloads. These studies have significantly advanced virtual disk architecture, performance optimization, and security assurance. However, they primarily address single-device or scenario-specific issues and do not tackle the capacity challenges inherent in large-scale storage simulation.

A range of virtual disk technologies capable of supporting over-provisioning have already been developed in both industry and the open-source community. For example, the scsi_debug module in the Linux kernel enables the creation of virtual disks with logical capacities that significantly exceed their physical memory buffers. Internally, scsi_debug manages this memory using a circular buffer: when the volume of written data exceeds the buffer size, the oldest data is overwritten without notifying the upper layers of disk-full conditions or physical capacity exhaustion. Although this design imposes no restrictions on write operations, it fails to realistically emulate error feedback and system behavior under full-disk conditions. Furthermore, when a request is issued to read overwritten data, scsi_debug returns the most recently written content instead of an error, leading to inaccurate emulation and reduced reliability in large-scale storage simulation scenarios. Virtual machine technologies based on hypervisors [10,11,12] (such as Xen and QEMU-KVM) are also capable of constructing high-capacity virtual disks by employing thin provisioning strategies. Mainstream virtual disk formats—such as QCOW2, VMDK, and VDI—support over-provisioning through sparse file techniques [13]. In these formats, the declared logical capacity of a virtual disk can significantly exceed the actual allocated physical space. Initially, only metadata is stored, and physical blocks are dynamically allocated on demand. Unwritten virtual blocks consume no physical storage and return logical zero values when read. This mechanism significantly reduces host storage consumption for idle virtual disks through deferred allocation of physical resources, thereby enhancing overall resource utilization. However, these virtual machine disk formats inherently lack data deduplication capabilities, leading to redundant data being repeatedly stored in the underlying physical space. 
As a result, although virtual machine disks present large logical capacities, the space actually available to them remains bounded by the host's physical storage, which makes it difficult to sustain large-scale data reads and writes in storage simulation environments.

Nevertheless, these virtual machine disk technologies meet most application requirements under typical workloads and have therefore been widely adopted in cloud computing and virtualization environments. However, their performance bottlenecks and scalability limitations become increasingly apparent in complex scenarios that demand high performance, high reliability, or hybrid storage support. To address these shortcomings, researchers have proposed various optimization techniques to broaden the applicability of virtual disk systems. For instance, Li et al. [14] introduced the Triple-L method to improve virtual machine disk I/O performance in virtualized NAS environments; it combines Shadow-Base, Log-Split, and Local-Swap techniques to localize image and log operations, thereby reducing network latency and improving I/O performance. Eunji Lee et al. [15] proposed Pollution Defensive Caching (PDC) to mitigate the performance degradation caused by frequent VM log updates in fully virtualized systems. PDC identifies and isolates VM log data to prevent cache pollution while allowing other I/O requests to be served by the host cache without interference; through this dynamic cache management, PDC effectively improves virtual disk I/O performance. Minho Lee et al.1 proposed a transaction-oriented hypervisor optimization that integrates transactional support, group synchronization (gsync), and selective copy-on-write (CoW) to eliminate unnecessary file synchronization and write operations, thereby improving the performance of QCOW2-format virtual disks. With the ongoing development of SSD technologies, numerous studies have also optimized virtual machine disks on physical SSDs. For example, Kim et al. [16] proposed an address reshaping technique at the virtualization layer that sequentializes random write requests, reducing SSD garbage collection overhead and improving random write performance in flash storage environments. Yang et al. [17] proposed AutoTiering, which predicts virtual machine performance trends to dynamically optimize disk allocation and migration, improving storage resource utilization in all-flash data centers while avoiding excessive migration overhead. Chen et al. [18] proposed StriPre, a virtual machine disk migration prefetching algorithm for cross-cloud environments that addresses the degraded startup performance caused by high latency and low hit rates during virtual disk migration. The method identifies a “multi-strip alternating pattern” in disk access traces and combines step-size prediction with symmetry analysis to prefetch future I/O requests, significantly improving hit rates during migration. Overall, these studies have substantially advanced the I/O performance, cache management, and storage optimization of virtual machine disks. However, existing virtual machine disk technologies and related optimizations share two main limitations. First, they generally depend on a virtual machine runtime environment and lack versatility across broader system scenarios. Second, most improvements target specific cases, such as log writing, network storage, or all-flash architectures, and offer limited support for hybrid storage environments and high-concurrency random write workloads. These limitations prevent such technologies from providing the capacity expansion and stable I/O performance that large-scale storage simulation requires under constrained physical resources.

Physical capacity denotes the amount of space actually available on the underlying storage medium for storing data. Logical capacity denotes the amount of storage space that the operating system presents to users through a logical interface (e.g., the Linux block device interface) that abstracts the physical capacity. Ordinarily, the two are equal: for instance, the operating system may expose a 500 GB physical disk as a 500 GB block device, on which users can create partitions or file systems. When upper-layer applications write data to the block device, the data is ultimately stored on the physical medium, and if the written data exceeds the physical capacity, the system reports an error indicating insufficient space.

To support large-scale storage simulation, the primary objective of this paper is to construct large-scale (i.e., both numerous and high-capacity) virtual disks on a limited number of physical disks while ensuring efficient I/O processing under high concurrency. This design ensures that the virtual disks operate stably over the long term in the simulation environment, thereby supporting large-scale storage research. Based on this objective, the main contributions of this paper are as follows:

- (1) We propose an overcapacity mapping (OCM) method that integrates deduplication-aware thin provisioning. This method leverages thin provisioning and data deduplication techniques at the Device Mapper layer to construct an overcapacity virtual disk (OverCap) whose logical size exceeds the actual physical storage capacity. Thin provisioning is an on-demand allocation mechanism that allows the declared logical capacity of a volume to exceed the available physical storage, with physical blocks being allocated only when data is actually written. This approach effectively mitigates the constraints imposed by limited physical disks in large-scale storage simulations, enabling full utilization of the logical capacity of virtual disks in realistic redundant data scenarios, thereby supporting large-scale data writes.

- (2) We design a tiered asynchronous I/O strategy leveraging SSD caching. By decoupling write and read paths and employing threshold-driven control together with asynchronous scheduling, this strategy significantly improves OCM throughput in complex concurrent environments and alleviates I/O performance bottlenecks of overcapacity virtual disks under highly concurrent workloads.

- (3) We conduct a series of multidimensional experiments to systematically validate OCM, covering deduplication-aware write capability and the performance of the caching mechanisms in both the write and read paths. In the space-savings evaluation, we run write tests on real-world mixed data with varying duplication ratios to verify that OCM recognizes redundant data and reduces physical storage consumption. In the performance assessment, we vary the duplication ratio, concurrency level, and block size, and evaluate OCM across workloads using metrics such as write throughput and read acceleration ratio. The experimental results demonstrate that virtual disks constructed with OCM are reliably usable in practical write scenarios and deliver stable I/O performance under highly concurrent read and write workloads.

The remainder of this paper is organized as follows. Section 2 presents the technical background of the proposed approach. Section 3 details the implementation of OCM. Section 4 presents the experimental evaluation of its effectiveness. Section 5 provides a discussion and outlines future research directions, focusing on potential applications of OCM in future storage system development. Finally, Section 6 concludes the paper.

2. Technical Background

2.1. Thin Provisioning

Thin provisioning (TP) enables the creation of logical volumes (LVs) with large logical capacities on physical storage resources (e.g., disks, partitions, or physical volumes) by allocating the underlying storage dynamically. These logical volumes, hereafter referred to as Thin_LVs, are exposed as block device files in the operating system. When a Thin_LV is activated, the LVM driver registers it with the kernel and records metadata describing the logical device (e.g., logical size, block size, and associated physical device). Upper-layer applications can query this metadata via LVM APIs to retrieve information about a Thin_LV, while the physical volume (Thin_PV) backing it remains transparent to them. Notably, creating a Thin_LV does not immediately allocate storage equal to its logical capacity; storage is allocated only when data is actually written. The system allocates no storage for unwritten logical blocks, and reading such a block returns a preset fill value (typically zero), indicating unallocated space. When a write references a logical block address (LBA) for the first time, the thin provisioning manager allocates a physical block from the storage pool and maps the LBA to that block's physical block address (PBA). This on-demand allocation significantly improves storage utilization and allows the system to adapt flexibly to evolving workload demands.
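To make on-demand allocation concrete, here is a minimal Python sketch of a toy in-memory model, not DM-thin's implementation. The class `ThinVolume`, its parameters, and the choice to raise `ENOSPC` when the pool is exhausted are illustrative assumptions. The volume declares far more logical blocks than its pool holds, unwritten blocks read back as zeros, and only the first write to an LBA consumes a physical block.

```python
import errno


class ThinVolume:
    """Toy model of thin provisioning: an LBA gets a PBA only on first write."""

    def __init__(self, logical_blocks: int, pool_blocks: int, block_size: int = 4096):
        self.logical_blocks = logical_blocks  # declared (logical) capacity
        self.pool_blocks = pool_blocks        # physical blocks in the pool
        self.block_size = block_size
        self.l2p = {}                         # LBA -> PBA, filled lazily
        self.storage = {}                     # PBA -> block contents
        self.next_free = 0                    # next unallocated PBA

    def write(self, lba: int, data: bytes) -> None:
        if not 0 <= lba < self.logical_blocks:
            raise IndexError("LBA beyond declared logical capacity")
        if len(data) != self.block_size:
            raise ValueError("this sketch only accepts whole-block writes")
        pba = self.l2p.get(lba)
        if pba is None:                       # first write to this LBA
            if self.next_free >= self.pool_blocks:
                raise OSError(errno.ENOSPC, "No space left on device")
            pba = self.next_free
            self.next_free += 1
            self.l2p[lba] = pba
        self.storage[pba] = data              # overwrites reuse the same PBA

    def read(self, lba: int) -> bytes:
        pba = self.l2p.get(lba)
        if pba is None:                       # unallocated: zero fill
            return bytes(self.block_size)
        return self.storage[pba]
```

For example, `ThinVolume(logical_blocks=10**6, pool_blocks=2)` accepts writes to any two distinct LBAs and fails on a third, even though its declared capacity is a million blocks.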

In Linux, the Device Mapper (DM) framework provides a dedicated module for this purpose, known as Device Mapper thin provisioning (DM-thin). DM is a block device management framework within the Linux kernel that supports customized storage mapping, data transformation, and policy control to enable highly flexible storage management. By performing storage management operations at the kernel level, DM reduces overhead and improves data access efficiency. To create a new logical device type, a DM target must be constructed and registered with the operating system. Subsequently, users can instantiate corresponding targets, which are presented as standard block devices to the file system and applications. Block device information is maintained within kernel data structures and exposed to the operating system through kernel and file system interfaces.
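To illustrate how a target is instantiated, the following Python helper formats Device Mapper table lines for the `thin-pool` and `thin` targets, following the table syntax in the Linux kernel's thin-provisioning documentation. The device paths and sizes are placeholders. The resulting strings would be passed to `dmsetup create <name> --table "..."`, after the thin device has been created inside the pool with `dmsetup message <pool> 0 "create_thin <dev_id>"`. The helper only builds strings and does not touch any device.

```python
SECTOR_BYTES = 512  # Device Mapper table lengths are given in 512-byte sectors


def thin_pool_table(data_bytes: int, metadata_dev: str, data_dev: str,
                    block_sectors: int = 128, low_water_mark: int = 1024) -> str:
    """Table line for a thin-pool target.

    block_sectors: pool allocation unit; dm-thin requires a multiple of
    128 sectors (64 KiB) between 64 KiB and 1 GiB.
    low_water_mark: free-space threshold (in pool blocks) below which the
    kernel raises an event so that userspace can extend the pool.
    """
    if not (128 <= block_sectors <= 2097152 and block_sectors % 128 == 0):
        raise ValueError("unsupported dm-thin data block size")
    return (f"0 {data_bytes // SECTOR_BYTES} thin-pool "
            f"{metadata_dev} {data_dev} {block_sectors} {low_water_mark}")


def thin_table(logical_bytes: int, pool_dev: str, dev_id: int) -> str:
    """Table line for a thin volume; its length is the *declared* size,
    which may exceed the physical size of the pool."""
    return f"0 {logical_bytes // SECTOR_BYTES} thin {pool_dev} {dev_id}"


TiB = 2 ** 40
print(thin_pool_table(TiB, "/dev/sdb1", "/dev/sdb2"))   # 1 TiB physical pool
print(thin_table(100 * TiB, "/dev/mapper/pool", 0))     # 100 TiB logical volume
```

In this example a 100 TiB thin volume sits on a 1 TiB pool. Writes fail only once the pool's physical blocks run out.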

2.2. Data Deduplication Technology

Data deduplication [19,20] is a data compression technique designed to eliminate redundant data in storage systems by identifying and removing duplicate data blocks, thereby conserving storage space. During the deduplication process, the system typically computes a fingerprint for each data block and compares it against existing fingerprints stored in an index to determine data uniqueness. If the fingerprint of a new block matches one already in the index, the block is identified as a duplicate, and only a reference to the original block is stored, thereby avoiding redundant physical writes4. This method is particularly effective in environments characterized by high data redundancy [21,22,23].
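The following Python sketch illustrates fingerprint-based block deduplication in general; it is not OCM's code. SHA-256 serves here only as an example fingerprint function, and the in-memory `store`/`recipe` layout and helper names are assumptions made for illustration. Each unique chunk is stored once, and every occurrence is kept only as a reference (its fingerprint).

```python
import hashlib


def dedup_store(data: bytes, chunk_size: int = 4096):
    """Fixed-size, fingerprint-based deduplication of a byte stream.

    Returns (store, recipe): `store` maps fingerprint -> the single stored
    copy of a chunk; `recipe` lists the fingerprints in order (references).
    """
    store = {}
    recipe = []
    for off in range(0, len(data), chunk_size):
        chunk = data[off:off + chunk_size]
        fp = hashlib.sha256(chunk).digest()  # content fingerprint
        if fp not in store:                  # first occurrence: store the data
            store[fp] = chunk
        recipe.append(fp)                    # every occurrence: a reference
    return store, recipe


def restore(store: dict, recipe: list) -> bytes:
    """Rebuild the original stream from the references."""
    return b"".join(store[fp] for fp in recipe)


def space_savings(data: bytes, chunk_size: int = 4096) -> float:
    """Fraction of bytes that deduplication avoids storing."""
    store, _ = dedup_store(data, chunk_size)
    stored = sum(len(chunk) for chunk in store.values())
    return 1 - stored / len(data) if data else 0.0
```

A stream of three identical 4 KB blocks followed by one different block stores two blocks, which corresponds to a space saving of one half.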

Deduplication strategies are generally categorized into file-level and block-level approaches [24]. Block-level deduplication further partitions data into either fixed-size or variable-size chunks. Fixed-size chunking divides data into uniform blocks, offering simplicity and low overhead, but it is sensitive to data shifts: inserting or deleting even a single byte moves the boundaries, and therefore changes the fingerprints, of all subsequent chunks. In contrast, variable-size chunking defines chunk boundaries based on content, which detects duplicates more effectively at the cost of greater algorithmic complexity. OCM adopts fixed-size chunking so that the logical data blocks produced by each write have a consistent size and alignment; identical block-aligned content therefore always yields identical fingerprints, which keeps duplicate detection simple and efficient.
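The shift sensitivity of fixed-size chunking can be demonstrated in a few lines of Python. This is an illustrative sketch in which the data are random bytes generated for the demo. Prepending a single byte to a buffer moves every fixed 4 KB boundary, so none of the shifted chunks matches an original chunk. A content-defined chunker would typically resynchronize after the insertion point.

```python
import random


def fixed_chunks(data: bytes, size: int) -> list:
    """Split data at fixed offsets 0, size, 2*size, ..."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def shared_chunks(old: bytes, new: bytes, size: int = 4096) -> int:
    """Count the chunks of `new` that exactly duplicate a chunk of `old`."""
    seen = set(fixed_chunks(old, size))
    return sum(1 for chunk in fixed_chunks(new, size) if chunk in seen)


original = random.Random(0).randbytes(4 * 4096)  # four non-repeating chunks
shifted = b"\x00" + original                      # same data after a 1-byte insert

print(shared_chunks(original, original))  # identical copy: all 4 chunks match
print(shared_chunks(original, shifted))   # every boundary moved: no matches
```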

Furthermore, the timing of deduplication is a critical factor influencing its overall effectiveness. Generally, deduplication can be performed in either an inline or post-processing manner9. Inline deduplication performs fingerprint computation and duplicate detection at the time of data ingestion, effectively preventing redundant data from being physically written, although it may introduce additional I/O latency. In contrast, post-processing deduplication allows data to be written in full before conducting periodic scans for duplicate identification, which avoids immediate performance overhead but temporarily increases storage consumption.
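The storage trade-off between the two timings can be illustrated with a small Python model. It relies on two assumptions: blocks are represented by hashable values standing in for their fingerprints, and the post-processing scan runs after a fixed number of writes. The function name is hypothetical. It reports the peak number of physical blocks occupied during ingestion.

```python
def peak_physical_blocks(blocks, inline: bool, scan_every: int = 0) -> int:
    """Peak number of blocks on disk while ingesting `blocks` in order.

    inline=True: the fingerprint check happens before the write, so a
    duplicate never reaches the disk.
    inline=False: every block is written first; if scan_every > 0, a
    post-processing pass removes duplicates after every `scan_every` writes.
    """
    stored, seen, peak = [], set(), 0
    for i, block in enumerate(blocks, start=1):
        if inline:
            if block not in seen:            # unique content: write it
                seen.add(block)
                stored.append(block)
        else:
            stored.append(block)             # write now, deduplicate later
        peak = max(peak, len(stored))
        if not inline and scan_every and i % scan_every == 0:
            stored = list(dict.fromkeys(stored))  # keep one copy of each block
    return peak


workload = [b"x"] * 8 + [b"y"] * 2
print(peak_physical_blocks(workload, inline=True))
print(peak_physical_blocks(workload, inline=False, scan_every=5))
print(peak_physical_blocks(workload, inline=False))
```

For this stream of eight copies of one block followed by two copies of another, inline deduplication peaks at 2 blocks. Post-processing with a scan every 5 writes peaks at 6, and post-processing with no scan during ingestion peaks at 10.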

2.3. SSD Cache and Tiered Storage

SSD Cache: SSD caching is a widely adopted strategy for improving I/O performance in storage systems by introducing a high-speed caching layer (typically an SSD) placed in front of slower backend devices such as HDDs [25]. Functioning at the block device layer, the SSD cache intercepts I/O requests from upper-layer file systems or logical volumes and dynamically decides whether to fulfill the request from the cache or forward it to the backend storage based on predefined caching policies. A typical SSD caching system maintains a dedicated metadata region responsible for managing essential control structures, consisting of two primary components: (1) a cache mapping table that tracks the mapping between logical block addresses and cached data blocks, enabling fast lookup and accurate cache hit/miss determination; and (2) block state metadata, which records block attributes such as validity, dirtiness, access frequency (hotness), and timestamps, thereby supporting cache replacement policies and ensuring data consistency. Based on how write operations are handled, SSD cache systems can be classified into write-through and write-back modes [26]. In the write-through mode, all write requests are synchronously written to both the backend device and the cache layer, with the cache used solely to accelerate read operations, ensuring data consistency at the cost of reduced write performance. In contrast, the write-back mode temporarily stores write requests in the SSD cache and defers writing to the backend device asynchronously, with flushing or eviction triggering physical persistence. The write-back strategy significantly reduces HDD seek overhead and write amplification through buffered write aggregation and sequential batch persistence, making it the dominant design in performance-sensitive scenarios.
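The difference between the two write policies can be sketched in Python. This simplified model assumes no capacity limit and no eviction, and it uses a dictionary in place of the HDD. The cache mapping table and the dirty-block set play the roles of the two metadata components described above. The counter `backend_writes` (a hypothetical name) shows how write-back absorbs repeated writes to the same block.

```python
class BlockCache:
    """SSD cache in front of a slower backend (sketch; unbounded, no eviction)."""

    def __init__(self, backend: dict, write_back: bool):
        self.backend = backend      # stands in for the HDD: LBA -> data
        self.write_back = write_back
        self.cache = {}             # cache mapping table: LBA -> cached data
        self.dirty = set()          # block state: cached but not yet persisted
        self.backend_writes = 0

    def write(self, lba: int, data: bytes) -> None:
        self.cache[lba] = data
        if self.write_back:
            self.dirty.add(lba)     # defer persistence until flush()
        else:                       # write-through: persist synchronously
            self.backend[lba] = data
            self.backend_writes += 1

    def read(self, lba: int) -> bytes:
        if lba not in self.cache:   # miss: fetch from the backend, then cache
            self.cache[lba] = self.backend[lba]
        return self.cache[lba]

    def flush(self) -> None:
        for lba in sorted(self.dirty):  # persist dirty blocks in LBA order
            self.backend[lba] = self.cache[lba]
            self.backend_writes += 1
        self.dirty.clear()
```

Writing the same LBA ten times costs ten backend writes under write-through. Under write-back, the same sequence costs a single backend write at flush time.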

Tiered Storage: Tiered storage refers to the deployment of multiple storage devices with different performance and capacity characteristics at the physical layer, where data is distributed across these tiers based on access frequency and lifecycle. An SSD cache can be considered a specific case of tiered storage, typically forming a two-level hierarchy where the upper tier serves as a high-speed cache and the lower tier as the primary storage [27]. At the block device management level (e.g., Device Mapper), tiering strategies usually rely on access heat statistics and replacement algorithms such as LRU [28], LFU [29], and FIFO [30] to periodically evaluate access frequency and temporal patterns of blocks, thereby determining which cache blocks to retain or evict and enabling automatic migration between hot and cold data.
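As an example of one of the replacement policies named above, the following sketch implements a minimal LRU cache with Python's `collections.OrderedDict`. Its `move_to_end` and `popitem(last=False)` methods maintain recency order. This is a generic sketch, not the policy implementation of any particular Device Mapper target.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()          # least recently used first

    def get(self, key):
        if key not in self.entries:
            return None                       # cache miss
        self.entries.move_to_end(key)         # mark as most recently used
        return self.entries[key]

    def put(self, key, value) -> None:
        if key in self.entries:
            self.entries.move_to_end(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
```

An LFU variant would instead track access counts per block, and FIFO would simply never call `move_to_end` on a hit.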

3. Methods

3.1. Problem Analysis and Overview of the Proposed Approach

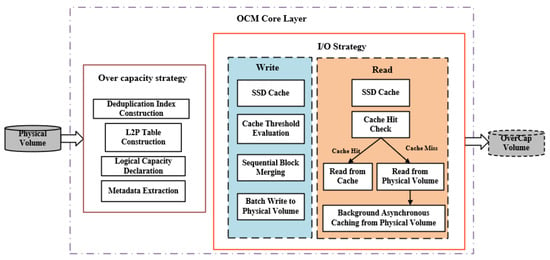

This research addresses the challenge of efficiently constructing large-scale overcapacity virtual disks with constrained physical storage resources while maintaining high disk performance under highly concurrent random write workloads. To address this challenge, we propose the OCM method, the overall architecture of which is illustrated in Figure 1. The method consists of two core components:

Figure 1. Architecture of the OCM simulation method.

- (1) A deduplication-aware overcapacity logical volume construction strategy. OCM directly initializes overcapacity logical volumes (OverCap volumes) on physical disk partitions and builds metadata structures comprising a deduplication index and a logical-to-physical (L2P) mapping table. Based on these structures, OCM declares a mapping in which the logical capacity exceeds the physical capacity, thereby enabling over-provisioning, and registers the logical device after extracting the volume metadata.

- (2) A hierarchical asynchronous I/O optimization strategy based on SSD caching. After the initial construction of the OverCap volume, OCM applies a hierarchical I/O optimization strategy based on SSD caching. In the write path, an SSD-based cache layer temporarily absorbs write requests from upper-layer components. When the cache reaches a predefined threshold, the strategy merges the buffered data into sequential blocks and writes them to the physical volume in asynchronous batches. In the read path, the strategy first checks for a hit in the SSD cache layer. On a hit, the requested data is returned immediately; otherwise, the strategy reads from the underlying HDD-based physical storage and asynchronously caches the retrieved data in the background.
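The following minimal, synchronous Python model illustrates this write and read path. Several elements are illustrative assumptions rather than OCM's actual implementation: the class and method names, the use of a dirty-block count as the flush threshold, and the explicit `run_background()` call standing in for the asynchronous worker. Dictionaries stand in for the SSD and HDD tiers.

```python
from collections import deque

BLOCK = 4096


def merge_runs(lbas):
    """Group LBAs into sorted, contiguous (start, length) extents."""
    runs = []
    for lba in sorted(lbas):
        if runs and runs[-1][0] + runs[-1][1] == lba:
            runs[-1][1] += 1                 # extends the current extent
        else:
            runs.append([lba, 1])            # starts a new extent
    return [tuple(run) for run in runs]


class TieredIO:
    def __init__(self, hdd: dict, flush_threshold: int):
        self.hdd = hdd                       # slow tier: LBA -> data
        self.ssd = {}                        # SSD cache tier: LBA -> data
        self.dirty = set()                   # buffered writes not yet on HDD
        self.flush_threshold = flush_threshold
        self.promote_queue = deque()         # LBAs to cache in the background
        self.flushed_extents = []            # log of sequential batch writes

    def write(self, lba: int, data: bytes) -> None:
        self.ssd[lba] = data                 # absorb the write in the SSD tier
        self.dirty.add(lba)
        if len(self.dirty) >= self.flush_threshold:
            self._flush()                    # asynchronous in OCM; inline here

    def _flush(self) -> None:
        for start, length in merge_runs(self.dirty):
            for lba in range(start, start + length):  # one sequential pass
                self.hdd[lba] = self.ssd[lba]
            self.flushed_extents.append((start, length))
        self.dirty.clear()

    def read(self, lba: int) -> bytes:
        if lba in self.ssd:                  # cache hit: return immediately
            return self.ssd[lba]
        data = self.hdd.get(lba, bytes(BLOCK))  # miss: read from the HDD tier
        if lba in self.hdd:
            self.promote_queue.append(lba)   # cache it later, off the read path
        return data

    def run_background(self) -> None:
        """Stand-in for the asynchronous caching worker."""
        while self.promote_queue:
            lba = self.promote_queue.popleft()
            self.ssd.setdefault(lba, self.hdd[lba])  # never clobber newer data
```

With a threshold of four blocks, writes to LBAs 7, 3, 5, and 4 trigger a flush that persists them as two sequential extents, (3, 3) and (7, 1).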

3.2. Deduplication-Aware OverCap Volume Construction Mechanism

The deduplication-aware overcapacity logical volume construction mechanism addresses two primary challenges: (1) how to construct logical volumes whose declared capacities significantly exceed available physical storage; and (2) how to ensure that the declared logical capacity remains fully writable and practically usable, approximating the effective space of a real large-capacity disk.

To meet these objectives, we develop a construction mechanism for overcapacity logical volumes (OverCap volumes) that integrates the Linux kernel's dm-thin module with a content-aware deduplication strategy. During volume initialization, OCM follows a deduplication-aware initialization path that builds a content fingerprint index table, laying the foundation for content-driven block address allocation and reuse during subsequent writes. The OverCap block size is fixed at 4 KB, matching the typical block size of mainstream file systems (e.g., ext4); this simplifies logical address alignment and mapping and provides fine-grained duplicate detection, thereby improving overall deduplication efficiency. Through structured metadata initialization, dynamic mapping, and consistency maintenance, the mechanism supports logical capacity declarations that far exceed physical capacity while keeping OverCap volumes stable and available under complex read/write workloads and unexpected interruptions.
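The effect of a fixed 4 KB block size on address mapping can be shown with simple integer arithmetic. In this illustrative Python sketch, with hypothetical helper names, a request aligned to 4 KB covers whole blocks, so each block's content, and hence its fingerprint, does not depend on neighboring data. A misaligned 4 KB request straddles two blocks and only partially overwrites each of them.

```python
BLOCK_SIZE = 4096  # OverCap block size stated in the text


def blocks_touched(offset: int, length: int, block: int = BLOCK_SIZE) -> range:
    """Logical block addresses covered by a byte-range request."""
    if length <= 0:
        return range(0)
    first = offset // block
    last = (offset + length - 1) // block
    return range(first, last + 1)


def is_aligned(offset: int, length: int, block: int = BLOCK_SIZE) -> bool:
    """True if the request starts and ends on block boundaries."""
    return offset % block == 0 and length % block == 0


print(list(blocks_touched(8192, 4096)), is_aligned(8192, 4096))  # [2] True
print(list(blocks_touched(6144, 4096)), is_aligned(6144, 4096))  # [1, 2] False
```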

The OverCap volume uses physical disk partitions as its basic construction unit. A unique OverCap instance is created on each partition, with its own independently initialized metadata structures, including a deduplication index table and an L2P mapping table. These structures support dynamic mappings between LBAs and PBAs, explicitly separating the logical and physical address spaces. To support large-scale simulation of duplicate data, OCM employs a customized metadata design that incorporates content-level indexing, reference count fields, and auxiliary consistency metadata, thereby ensuring the availability and recoverability of deduplicated volumes.

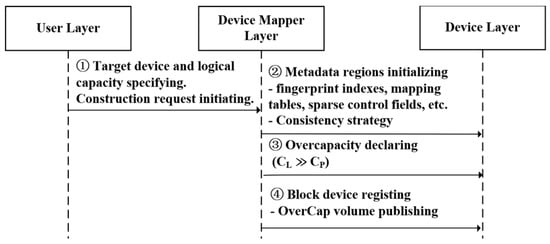

The detailed construction workflow is illustrated in Figure 2.

Figure 2. Workflow for constructing OverCap volumes. denotes the logical volume size, and represents the allocated physical storage capacity.

- (1) OCM initiates the construction process based on user-specified parameters, including the target physical partition, logical volume identifier, and expected logical capacity, and enters the metadata initialization phase.

- (2) The Device Mapper target module initializes the metadata structures required for OverCap volume management on the specified physical storage device. These comprise the fingerprint index, the logical-to-physical mapping table, and sparse control segments. The module also registers mechanisms for metadata crash recovery and consistency-aware write strategies.

- (3) Upon completing metadata initialization, OCM explicitly declares the overcapacity mapping relationship within the metadata region.

- (4) The constructed OverCap volume is then exposed as a standard block device via the Device Mapper framework, enabling transparent access by upper-layer file systems or distributed storage applications without altering upper-layer I/O semantics.

Through the above mechanism, OCM achieves an overcapacity configuration in which the logical capacity significantly exceeds the physical capacity and ensures each data block is subject to fingerprint-based uniqueness verification during the write process to eliminate redundant storage. This mechanism provides a low-cost, large-capacity, and high-fidelity storage substrate for virtualized distributed storage systems, thus making it particularly suitable for testing and simulation scenarios characterized by a high proportion of duplicate data.

3.2.1. Deduplication-Aware Metadata Structure Initialization

In constructing OverCap volumes, the deduplication-aware metadata structure follows a core principle: establishing a content-driven dynamic address translation path in which content fingerprints serve as the lookup keys for duplicate detection, LBAs serve as the primary index of the mapping table, and PBAs are the mapping targets. Specifically, the deduplication index table uses the cryptographic hash of each data block as its key to identify duplicate content and retrieve the corresponding PBA. In parallel, the mapping table uses the LBA as its primary key to store the associated PBA, together with reference count metadata that manages the lifecycle and garbage collection of shared blocks.
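The following in-memory Python sketch shows how these structures can interact on the write path. Several elements are assumptions for illustration only: the class name, the stack of free PBAs, the reverse map `fp_of` used to find a block's fingerprint during reclamation, and the 256-bit BLAKE2b digest obtained via `hashlib.blake2b(..., digest_size=32)`. OCM's on-disk layout and crash-consistency machinery are not modeled.

```python
import errno
import hashlib

BLOCK_SIZE = 4096


class DedupMetadata:
    """Fingerprint index + L2P table + reference counts (illustrative)."""

    def __init__(self, physical_blocks: int):
        self.free = list(range(physical_blocks - 1, -1, -1))  # free PBAs
        self.index = {}     # fingerprint -> PBA (deduplication index)
        self.fp_of = {}     # PBA -> fingerprint (reverse map for reclamation)
        self.l2p = {}       # LBA -> PBA (mapping table)
        self.refcount = {}  # PBA -> number of LBAs sharing the block
        self.data = {}      # PBA -> contents (stands in for the disk)

    def write(self, lba: int, block: bytes) -> int:
        fp = hashlib.blake2b(block, digest_size=32).digest()
        pba = self.index.get(fp)
        if pba is None:                      # new content: allocate a block
            if not self.free:
                raise OSError(errno.ENOSPC, "No space left on device")
            pba = self.free.pop()
            self.index[fp] = pba
            self.fp_of[pba] = fp
            self.data[pba] = block
            self.refcount[pba] = 0
        old = self.l2p.get(lba)
        if old == pba:                       # same content rewritten in place
            return pba
        self.refcount[pba] += 1              # this LBA now shares the block
        self.l2p[lba] = pba
        if old is not None:
            self._release(old)               # drop the LBA's previous block
        return pba

    def _release(self, pba: int) -> None:
        self.refcount[pba] -= 1
        if self.refcount[pba] == 0:          # no LBA refers to it: reclaim
            del self.index[self.fp_of.pop(pba)]
            del self.refcount[pba]
            del self.data[pba]
            self.free.append(pba)

    def read(self, lba: int) -> bytes:
        pba = self.l2p.get(lba)
        return bytes(BLOCK_SIZE) if pba is None else self.data[pba]
```

Writing identical content to two distant LBAs consumes one physical block with a reference count of 2. Overwriting both LBAs with other content returns that block to the free list.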

During the initialization phase, OCM completes the initialization and registration of metadata structures, which lays the foundation for a content-aware mapping mechanism. By tightly coupling the fingerprint index with the logical-to-physical address mapping table, OCM facilitates duplicate data detection, logical-to-physical address binding, and shared block tracking during subsequent write operations. These capabilities constitute the essential metadata foundation for supporting data deduplication and enabling physical space reuse. The metadata initialization process comprises the following three key components:

- (1)

- Fingerprint Index Initialization: For each data block $B$ to be written, a content fingerprint $f_B = \mathrm{BLAKE2b}(B)$ is first computed using the cryptographic hash function BLAKE2b. This fingerprint serves as the unique identifier for deduplication operations. The deduplication lookup process is defined as:

$$\mathrm{Lookup}(f_B) = \begin{cases} p_B \in \mathcal{P}, & \text{if } f_B \in \mathcal{F} \\ \text{a newly allocated PBA, with } f_B \text{ inserted into the index}, & \text{otherwise} \end{cases}$$

Here, $\mathcal{F}$ denotes the set of all existing fingerprints and $\mathcal{P}$ represents the set of physical block addresses. The lookup function searches the deduplication index table for the fingerprint $f_B$. If a match is found ($f_B \in \mathcal{F}$), $B$ is a duplicate, and the corresponding physical block address $p_B$ is returned directly. Otherwise, a new physical block is allocated, and the fingerprint index is updated accordingly.

BLAKE2b is a cryptographic hash function with strong collision resistance and robust security. Its 256-bit hash space theoretically yields $2^{256}$ distinct hash values, making the probability of a hash collision extremely low. This property ensures a high level of accuracy and reliability in data deduplication operations. Furthermore, OCM strictly enforces byte-level consistency during duplicate detection: a data block is regarded as identical to a stored block only if their BLAKE2b fingerprints match exactly. Blocks whose encodings differ, even when their contents are semantically similar, are treated as distinct data and allocated new physical block addresses.

- (2)

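- L2P Mapping Table Initialization: OCM adopts a sparse mapping strategy in which physical blocks are not preallocated for the logical address space. Instead, a mapping is established only upon the first write to a logical block $l$, as represented by

$$M(l) = \begin{cases} \varnothing, & \text{before the first write to } l \\ p, & \text{after the first write to } l \end{cases}$$

where $M$ is the LBA-to-PBA mapping function and $p$ is the PBA returned by the deduplication lookup for the written block. If the incoming data is determined to be a duplicate, the mapping table records an entry $l \rightarrow p$ that references an existing PBA $p$; otherwise, a new physical block is allocated. This mapping behavior is tightly integrated with the deduplication result, forming a content-aware address resolution path.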

- (3)

- Reference Counting Mechanism Initialization: Since multiple logical addresses may reference the same physical address, OCM incorporates a reference counting mechanism to monitor the usage of each physical block and ensure correctness during space reclamation. The reference count is computed as

$$RC(p) = \sum_{i=1}^{N} \mathbb{1}\left[M(l_i) = p\right]$$

Here, $RC(p)$ indicates the number of logical addresses currently mapped to physical block $p$, where $M$ is the LBA-to-PBA mapping function, $\mathbb{1}[\cdot]$ is the indicator function returning 1 when $l_i$ maps to $p$ (and 0 otherwise), and $N$ is the total number of logical blocks. When $RC(p)$ drops to zero, no logical address points to that physical block, which can then be safely reclaimed and returned to the free block pool for future allocations.
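To make the interplay among the three metadata structures concrete, the following Python sketch models them with in-memory dictionaries. It is an illustration under stated assumptions, not OCM's kernel implementation: the class and method names (`DedupMetadata`, `write`, `lookup`) are hypothetical, block data and persistence are omitted, and a 256-bit BLAKE2b digest from Python's `hashlib` stands in for the fingerprint function.

```python
import hashlib
from typing import Dict, List, Optional

BLOCK_SIZE = 4096  # assumed 4 KB block granularity, as in Section 4.3.1


class DedupMetadata:
    """Hypothetical in-memory model of OverCap metadata (not the kernel target)."""

    def __init__(self) -> None:
        self.fp_index: Dict[bytes, int] = {}  # fingerprint -> PBA
        self.pba_fp: Dict[int, bytes] = {}    # PBA -> fingerprint, used on reclaim
        self.l2p: Dict[int, int] = {}         # sparse LBA -> PBA table
        self.refcount: Dict[int, int] = {}    # PBA -> number of referencing LBAs
        self.free_pbas: List[int] = []        # reclaimed physical blocks
        self.next_pba = 0

    @staticmethod
    def fingerprint(block: bytes) -> bytes:
        # 256-bit BLAKE2b digest via hashlib's digest_size parameter
        return hashlib.blake2b(block, digest_size=32).digest()

    def _alloc(self) -> int:
        if self.free_pbas:
            return self.free_pbas.pop()
        pba = self.next_pba
        self.next_pba += 1
        return pba

    def _release(self, pba: int) -> None:
        # Drop one reference; reclaim the block once no LBA points to it
        self.refcount[pba] -= 1
        if self.refcount[pba] == 0:
            del self.refcount[pba]
            del self.fp_index[self.pba_fp.pop(pba)]
            self.free_pbas.append(pba)

    def write(self, lba: int, block: bytes) -> int:
        fp = self.fingerprint(block)
        pba = self.fp_index.get(fp)           # deduplication lookup
        if pba is None:                       # unique content: new physical block
            pba = self._alloc()
            self.fp_index[fp] = pba
            self.pba_fp[pba] = fp
            self.refcount[pba] = 0
        old = self.l2p.get(lba)
        if old == pba:                        # identical content rewritten in place
            return pba
        self.refcount[pba] += 1               # bind LBA -> PBA on write
        self.l2p[lba] = pba
        if old is not None:                   # overwrite: release the previous block
            self._release(old)
        return pba

    def lookup(self, lba: int) -> Optional[int]:
        # None means the LBA was never written (no preallocated block)
        return self.l2p.get(lba)
```

Overwriting an LBA with different content decrements the previous block's reference count, which is the point at which a block with no remaining references returns to the free pool.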

3.2.2. Metadata Consistency Strategy Design

As shown in Figure 2, during the construction phase, OCM not only initializes metadata structures but also incorporates a metadata consistency maintenance strategy to ensure metadata integrity under high-concurrency write workloads and unexpected failures. This strategy is structured around three operational paths: write buffering, periodic synchronization, and failure recovery. The overarching goal is to maintain metadata consistency and to enable rapid recovery following unexpected shutdowns.

During the write phase, OCM first performs deduplication checks: if a data block already exists, the corresponding physical block is reused and its reference count is incremented; if the block is new, a physical block is allocated and the logical-to-physical mapping table is updated accordingly. To ensure the atomicity of metadata operations, OCM employs a write-once buffering strategy. Updates to the fingerprint index table and the LBA → PBA mapping table are first staged in an in-memory buffer (Mbuf), where modified entries are marked as dirty pages, and persistence is deferred to reduce the overhead of frequent disk writes. Furthermore, all changes involving reference counts and mapping relationships are synchronously recorded in a recovery log and batch-flushed to the persistent metadata area through a periodic checkpoint mechanism. As a result, even under high write concurrency or sudden failures, OCM can recover to a consistent state through log replay, thereby preventing deduplication errors and mapping inconsistencies caused by partial writes or incorrect updates and ensuring overall data integrity.

To balance consistency and throughput, a periodic metadata flushing mechanism is employed. This mechanism flushes all dirty pages in batches to the persistent metadata area Mdisk while concurrently recording corresponding checkpoint entries into the metadata journal. By sequentially appending journal entries and checkpoint markers, OCM can define clear consistency boundaries, enabling efficient crash recovery without requiring a full journal scan.

When an abnormal interruption occurs and the system is restarted, the OCM method triggers the log replay mechanism during the initialization stage. This mechanism relies on the most recently committed checkpoint to replay the necessary metadata update operations from the metadata log, thereby restoring the mapping tables and core metadata structures (e.g., the fingerprint index) to a consistent state and preventing fingerprint conflicts or logical-to-physical mapping inconsistencies resulting from partially applied updates. The overall process is shown in Algorithm 1.

Algorithm 1: Metadata Consistency Preservation and Recovery Mechanism

Input: I/O request queue Q, metadata buffer Mbuf, persistent metadata storage Mdisk
Output: Metadata consistency during runtime and support for recovery after a crash
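As a rough illustration of the mechanism that Algorithm 1 formalizes, the following Python sketch models the write-buffer, journal, checkpoint, and replay paths described above. It assumes that the journal itself is durable and that each metadata update records an absolute value; the class `MetadataStore` and its key layout are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Key = Tuple[str, int]  # e.g. ("l2p", lba) or ("ref", pba); hypothetical layout


@dataclass
class MetadataStore:
    m_disk: Dict[Key, int] = field(default_factory=dict)  # persistent area (Mdisk)
    m_buf: Dict[Key, int] = field(default_factory=dict)   # dirty entries (Mbuf)
    journal: List[tuple] = field(default_factory=list)    # assumed durable log

    def update(self, key: Key, value: int) -> None:
        # Log the change first, then stage it as a dirty entry in memory
        self.journal.append(("upd", key, value))
        self.m_buf[key] = value

    def checkpoint(self) -> None:
        # Periodic flush: persist every dirty entry, then append a marker
        self.m_disk.update(self.m_buf)
        self.m_buf.clear()
        self.journal.append(("ckpt",))

    def crash(self) -> None:
        # Simulated power loss: the volatile buffer is lost
        self.m_buf.clear()

    def recover(self) -> None:
        # Replay only entries after the last checkpoint marker; updates store
        # absolute values, so re-applying an already-flushed entry is harmless
        last = max((i for i, e in enumerate(self.journal) if e[0] == "ckpt"),
                   default=-1)
        for _, key, value in self.journal[last + 1:]:
            self.m_disk[key] = value
        self.journal.append(("ckpt",))
```

Because replay starts at the last checkpoint marker, recovery cost is bounded by the updates logged since that checkpoint rather than by the full journal length.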

3.3. Tiered Asynchronous I/O Optimization Strategy Based on SSD Caching

OCM enables the construction of ultra-large logical volumes atop limited physical storage resources through the deployment of OverCap volumes. However, because OverCap volumes rely on HDDs as backend storage, performance bottlenecks may arise on both the write and read paths: (1) latency fluctuations under high-concurrency workloads, where multi-threaded write requests contend during mapping updates, deduplication lookups, and physical writes, leading to write amplification and disk queue congestion; and (2) high read latency and low cache hit rates, because in the absence of a proper caching mechanism, frequently accessed hot data in the oversized logical volume repeatedly incur inefficient disk reads.

To enhance the usability and responsiveness of OCM, a tiered asynchronous I/O optimization strategy leveraging SSD caching is incorporated into the storage stack. This strategy introduces an intermediate caching layer between the logical abstraction and backend physical disks, effectively decoupling frontend and backend I/O paths. In the write path, a mechanism combining sequential block merging with asynchronous batch flushing is employed to alleviate backend pressure and optimize write scheduling. In the read path, a hotspot-aware cache hit detection mechanism is implemented to enhance read speed under high data redundancy workloads.

3.3.1. Asynchronous Flush Mechanism Based on Write Aggregation

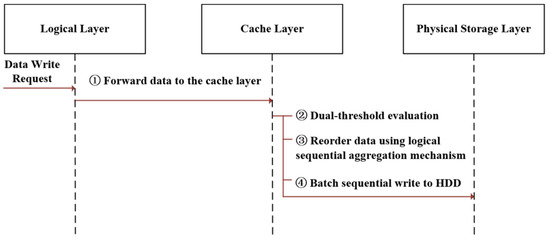

To enhance the write responsiveness and sustained flushing performance of OverCap volumes under high-concurrency workloads, we propose an asynchronous flush strategy based on write aggregation. By introducing SSD-based write caching and deferred flushing mechanisms, this strategy effectively decouples frontend and backend I/O paths and improves sequential write bandwidth. As illustrated in Figure 3, the flush strategy consists of four stages:

Figure 3.

Hierarchical asynchronous write workflow with SSD cache. The arrows represent the direction of data flow, where steps 1–4 indicate the sequential process from the logical layer to the physical storage layer.

- (1)

- Write Request Buffering: Upon receiving write requests from the upper layer, the logical layer buffers the data in a high-performance SSD cache instead of writing immediately to the backend disk. This cache functions as the write entry point, temporarily storing incoming write data to support low-latency response and enable batch scheduling.

- (2)

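- Dual-Threshold Flush Decision: We employ a dual-threshold flushing policy based on cache utilization and flush interval, formalized as

$$\mathrm{Flush} = \begin{cases} 1, & \text{if } \dfrac{D_{buf}}{C_{max}} \ge \theta \ \lor \ t_{now} - t_{last} \ge \Delta T \\ 0, & \text{otherwise} \end{cases}$$

Here, $D_{buf}$ denotes the current volume of buffered write data, $C_{max}$ represents the maximum cache capacity, $\theta$ is the predefined utilization threshold, $t_{now}$ is the current time, $t_{last}$ is the timestamp of the last flush, and $\Delta T$ defines the minimum interval between flushes. A flush is triggered when either the cache utilization reaches $\theta$ or the time since the last flush reaches $\Delta T$.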

- (3)

- Logical Sequential Aggregation: To enhance write throughput, OCM implements a logical sequential aggregation mechanism. Write requests are sorted by LBA and grouped into logically contiguous write units based on address proximity. This aggregation can span original request boundaries, thereby maximizing sequential disk throughput and minimizing write amplification and disk seek overhead.

- (4)

- Batch Asynchronous Write: The aggregated data is dispatched for persistence in batches via background threads. A dedicated flush queue maintains logical address order, so that foreground writes are not blocked by backend persistence. The scheduler dynamically adjusts batch sizes according to the I/O load.
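Stages (2) through (4) can be sketched as follows. This is a simplified single-threaded Python model, not OCM's implementation: the `WriteCache` class, its parameters, and the use of a block count to measure cache utilization are assumptions, and the background flush threads of stage (4) are reduced to a synchronous `flush` call that returns the aggregated runs.

```python
import time
from typing import Dict, List, Tuple

Run = Tuple[int, List[bytes]]  # (starting LBA, contiguous blocks)


class WriteCache:
    """Hypothetical model of the SSD write cache (stages 1-4)."""

    def __init__(self, capacity_blocks: int, theta: float,
                 flush_interval_s: float) -> None:
        self.capacity = capacity_blocks      # C_max, counted in blocks (assumed)
        self.theta = theta                   # utilization threshold
        self.interval = flush_interval_s     # flush interval
        self.last_flush = time.monotonic()   # t_last
        self.pending: Dict[int, bytes] = {}  # LBA -> newest buffered data

    def put(self, lba: int, data: bytes) -> None:
        # Stage 1: absorb the write; a later write to the same LBA replaces it
        self.pending[lba] = data

    def should_flush(self, now: float) -> bool:
        # Stage 2: dual-threshold rule (utilization OR elapsed time)
        utilization = len(self.pending) / self.capacity
        return utilization >= self.theta or (now - self.last_flush) >= self.interval

    def aggregate(self) -> List[Run]:
        # Stage 3: sort by LBA and merge logically contiguous blocks into runs
        runs: List[Run] = []
        for lba in sorted(self.pending):
            if runs and runs[-1][0] + len(runs[-1][1]) == lba:
                runs[-1][1].append(self.pending[lba])
            else:
                runs.append((lba, [self.pending[lba]]))
        return runs

    def flush(self, now: float) -> List[Run]:
        # Stage 4 (synchronous here): hand the runs to a backend writer
        runs = self.aggregate()
        self.pending.clear()
        self.last_flush = now
        return runs
```

For example, buffered writes to LBAs 10, 3, 11, and 4 are emitted as two runs, (3, 2 blocks) and (10, 2 blocks), so the backend sees two sequential writes instead of four scattered ones.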

Furthermore, this flush mechanism is closely integrated with OCM’s metadata consistency module. Metadata changes originating from write operations are marked as dirty pages and atomically persisted during the flush phase. Details of the consistency protocol are described in Section 3.2.2.

3.3.2. Read Cache Hit Mechanism Based on Access Hotness

This subsection introduces a cache hit mechanism based on hotness detection, which dynamically determines cache hits by tracking the access frequency of logical blocks within a sliding time window. In conjunction with hit detection for unwritten data in the write cache, the mechanism effectively reduces backend disk access overhead and improves responsiveness and cache utilization.

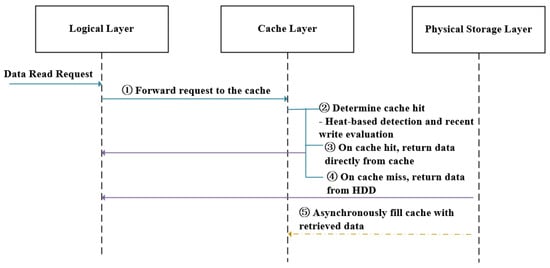

The processing flow of this strategy includes the following five stages:

- (1)

- Request Forwarding: Upon receiving a read request from the upper layer, the logical layer immediately forwards the request to the cache layer for hit detection.

- (2)

- Hotness Evaluation and Cache Query: The cache layer determines whether the target logical block is a cache hit based on its access frequency and write status. To quantify access frequency, the strategy defines a hotness evaluation function:

$$H(l) = \sum_{t=T-W+1}^{T} a_l(t)$$

where $a_l(t)$ indicates whether logical block $l$ was accessed during the $t$-th time slice (1 if accessed, 0 otherwise). $W$ denotes the width of the sliding window, so $H(l)$ counts the time slices with at least one access among the most recent $W$ slices. $T$ refers to the current time slice, and $T-W+1$ marks the beginning of the evaluation window. Higher values of $H(l)$ indicate more frequent recent access to $l$.

The read cache mechanism defines a hotness threshold $H_{th}$ to determine whether a logical block qualifies to reside in the cache. When $H(l) \ge H_{th}$, the mechanism considers the block hot and prioritizes its preloading or retention in the SSD cache to support fast cache hits. Additionally, blocks still residing in the write cache (i.e., not yet flushed and without assigned PBAs) are treated as cache hits regardless of their hotness, so that reads return the most recently written data.

- (3)

- Cache Hit Return: If the hit detection determines that the block is hot or resides in the write cache, OCM directly returns the corresponding data from the cache, bypassing the backend disk access path. This significantly reduces I/O latency and alleviates the overhead of frequent metadata lookups.

- (4)

- Cache Miss Fallback: If the target logical block is neither hot nor found in the write cache, OCM loads the corresponding data from the backend disk and returns it. This fallback path ensures consistency in cases where the hotness-based strategy does not yield a cache hit.

- (5)

- Background Cache Fill: To improve the likelihood of future cache hits, the cache manager asynchronously triggers a background fill operation after returning the data. The loaded logical block then enters the hotness tracking pipeline, and its hotness value is updated in subsequent evaluations.
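The hotness function and the five-stage read path can be approximated by the following Python sketch. It assumes discrete time slices advanced by an external timer, models the SSD read cache and the write cache as dictionaries, and performs the cache fill synchronously rather than in the background; `HotnessReadCache` and its members are hypothetical names.

```python
from collections import deque
from typing import Callable, Deque, Dict, Set


class HotnessReadCache:
    """Hypothetical read path: a block's hotness is the number of the last W
    time slices in which it was accessed; hot cached blocks are served from cache."""

    def __init__(self, window: int, h_th: int) -> None:
        self.h_th = h_th                                 # hotness threshold
        # One access set per time slice; maxlen drops slices older than W
        self.slices: Deque[Set[int]] = deque([set()], maxlen=window)
        self.cache: Dict[int, bytes] = {}                # SSD read cache
        self.write_cache: Dict[int, bytes] = {}          # unflushed writes

    def advance_slice(self) -> None:
        # Start a new time slice; the oldest slice leaves the window
        self.slices.append(set())

    def hotness(self, lba: int) -> int:
        return sum(1 for s in self.slices if lba in s)

    def read(self, lba: int, backend: Callable[[int], bytes]) -> bytes:
        self.slices[-1].add(lba)                         # record access in slice T
        if lba in self.write_cache:                      # unflushed data always hits
            return self.write_cache[lba]
        if lba in self.cache and self.hotness(lba) >= self.h_th:
            return self.cache[lba]                       # hot-block hit
        data = backend(lba)                              # miss: read backend disk
        self.cache[lba] = data                           # cache fill (async in OCM)
        return data
```

A block read in two consecutive slices with `window=3` and `h_th=2` is served from the cache on its second read; once enough slices pass without access, its hotness falls below the threshold and reads fall back to the backend again.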

These stages, as illustrated in Figure 4, demonstrate the dynamic coordination capabilities of the strategy in identifying hot blocks, integrating write-cache awareness, and enabling asynchronous cache fill operations, thereby effectively optimizing read response performance in scenarios characterized by repeated access and data hotness.

Figure 4.

Workflow of read cache hit based on hot data detection. Arrows indicate the direction of data flow and control sequence: left-to-right arrows represent read request propagation, right-to-left arrows denote data return paths, and dashed arrows indicate asynchronous cache fill operations triggered after cache misses.

4. Performance Evaluation

4.1. Experiment Setup

The experiments were conducted on four physical servers with identical hardware configurations, all of which were H3C R49003 models (New H3C Technologies Co., Ltd., Hangzhou, China). Each server was equipped with an Intel Xeon Silver 4114 processor (10 cores, 2.20 GHz), 256 GB of DDR4 memory, a 2 TB SATA HDD, and a 980 GB SATA SSD, thereby providing sufficient computing and storage resources to support the creation of OverCap volumes and the subsequent performance tests. All servers ran CentOS Linux version 8.5.2111 with kernel version 4.18.

The experiments varied key parameters, including data block size, number of concurrent threads, and data duplication rate, to comprehensively assess OCM’s capacity utilization and performance under diverse load conditions. Each performance test was executed five times independently using the FIO tool under identical environmental configurations and load parameters, and the median of the five runs was adopted as the performance evaluation metric. This strategy mitigates the impact of occasional system jitter and background interference on the experimental results.

4.2. Capacity Availability Verification of OverCap Volumes

To evaluate the capacity availability of the OverCap volume in a real mixed-data environment, this section presents a duplication-aware data writing experiment. Five 30 GB physical partitions were created on the HDD, with each partition used to construct a separate OverCap volume of 1 TB logical capacity. The test dataset was constructed by random sampling from a real mixed-data corpus (hereafter the mixed dataset) comprising video, audio, image, and document files. The duplication rate of written data was controlled at five levels (20%, 40%, 60%, 80%, and 100%), thereby forming five experimental scenarios. To ensure consistency, the dataset size in each scenario was fixed at 25 GB, with unique and redundant data mixed at predefined ratios. For example, in the 20% duplication scenario, the ratio of unique to redundant data was 4:1, resulting in a dataset of 20 GB unique and 5 GB redundant data. In the 100% duplication scenario, the dataset consisted entirely of 25 GB of redundant data. Each dataset was written to the OverCap volume twice for validation. For each scenario, the physical storage consumption of the OverCap volume was recorded, and the space saving ratio was quantified as follows:

$$S = \left(1 - \frac{P_{used}}{L_{written}}\right) \times 100\%$$

where $S$ denotes the space saving ratio achieved by OCM, reflecting the proportion of storage space conserved through deduplication and thin provisioning, and $P_{used}$ and $L_{written}$ represent the actual physical space usage and the total logical data written, respectively.
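As a minimal sketch, assuming the ratio is computed as one minus the fraction of physical space used relative to the logical data written, the metric can be evaluated as follows. The function name and the example figures are hypothetical and are not taken from Table 1.

```python
def space_saving_ratio(physical_used_gb: float, logical_written_gb: float) -> float:
    """Space saving ratio in percent: (1 - physical / logical) * 100."""
    if logical_written_gb <= 0:
        raise ValueError("logical_written_gb must be positive")
    return (1.0 - physical_used_gb / logical_written_gb) * 100.0


# Hypothetical example: 50 GB written logically, 20 GB consumed physically
print(round(space_saving_ratio(20.0, 50.0), 1))  # 60.0
```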

As shown in Table 1, as the data duplication ratio increased, the physical space consumption of the OverCap volume decreased markedly, while the space saving ratio steadily improved. Specifically, as the duplication ratio increased from 20% to 40%, 60%, 80%, and finally 100%, the OverCap volume’s space saving ratio rose from approximately 23% to about 99%. In the fully duplicated case, nearly no additional physical space was consumed. These results indicate that OverCap volumes efficiently detect and eliminate redundant data as duplication increases, allowing the logical write volume to far exceed actual storage consumption and demonstrating high space efficiency and logical scalability.

Table 1.

Space savings of OverCap.

It is important to note that the space saving ratio does not exhibit a strictly linear relationship with the data duplication ratio. The primary cause of these discrepancies lies in the additional physical space required by OverCap volumes to maintain internal metadata structures that support thin provisioning and deduplication capabilities. Specifically, OverCap maintains metadata such as fingerprint indices, reference counters, and logical-to-physical address mappings to facilitate the elimination of redundant data.

As a result, even under workloads with extremely high duplication ratios, a small amount of physical space is still consumed by these metadata components, leading to a slightly lower space saving ratio than the theoretical maximum (i.e., not fully matching the duplication percentage). For instance, under the 100% duplication scenario, where all additional writes should theoretically be fully deduplicated and incur no extra physical cost, approximately 1% of space was not conserved. This overhead is attributed to the metadata necessary for recording new logical mappings and updating reference counters. Similarly, under the 80% duplication scenario, the actual space savings were marginally below the theoretical value for the same reason.

Nevertheless, these deviations were relatively minor, and the majority of redundant data was successfully eliminated, thereby validating the effectiveness of the OverCap deduplication mechanism. In the 100% duplication case, the OverCap volume logically received a total of 50 GB of write requests but physically stored only approximately 25 GB of unique data (plus a small amount of metadata), so doubling the logical write volume did not double physical space consumption. This N-to-1 storage mapping significantly expands the user-visible logical capacity while keeping physical resource consumption nearly proportional to the volume of unique data.

4.3. Evaluation of SSD-Backed Hierarchical Asynchronous I/O Performance

This section systematically evaluates the OverCap volume’s I/O performance under three key workload characteristics: data block size, number of concurrent requests, and data duplication rate. The experiments employed FIO as the workload generator, executed in libaio mode with direct=1 to bypass the operating system’s page cache so that I/O requests were issued directly to the underlying device. Each test processed a fixed data volume of 2 GB with a maximum runtime of 60 s. Data block sizes were configured across a multi-level range from 4 KB to 4 MB, covering typical application scenarios from fine-grained to coarse-grained random I/O. The number of concurrent requests varied from 1 to 192 to simulate workloads of different intensities. The data duplication rates were set to 0%, 20%, 40%, 60%, 80%, and 100%. All tests were conducted under identical hardware and system conditions so that the results reflect the processing capabilities of OverCap’s underlying read and write paths.
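The following Python sketch shows one possible way to express these settings as fio invocations and to apply the median-of-five rule from Section 4.1. It is an assumption-laden illustration, not the authors' scripts: the target path `/dev/mapper/overcap0` is hypothetical, concurrency is mapped to fio's `--numjobs` (the text does not state whether `numjobs` or `iodepth` was varied), and fio applies `--size` per job, so the 2 GB value would need adjusting if it denotes a total across jobs.

```python
import statistics
from typing import List


def fio_command(target: str, rw: str, bs: str, jobs: int,
                dedupe_pct: int) -> List[str]:
    # Hypothetical mapping of the Section 4.3 settings onto fio options
    return [
        "fio", "--name=overcap", f"--filename={target}",
        "--ioengine=libaio", "--direct=1",      # bypass the page cache
        f"--rw={rw}", f"--bs={bs}",
        f"--numjobs={jobs}",                    # assumed concurrency knob
        "--size=2g",                            # note: fio applies this per job
        "--runtime=60",
        f"--dedupe_percentage={dedupe_pct}",    # share of identical write buffers
        "--group_reporting",
    ]


def median_of_runs(bandwidths_mib_s: List[float]) -> float:
    # Each configuration is run five times and the median is reported
    if len(bandwidths_mib_s) != 5:
        raise ValueError("expected five runs")
    return statistics.median(bandwidths_mib_s)
```

The returned list can be passed to `subprocess.run` once per repetition, with the reported bandwidths collected into `median_of_runs`.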

In the write path, non-redundant data with a duplication rate of 0 was used to eliminate the positive impact of the deduplication mechanism on performance, thereby enabling a focused evaluation of the underlying write path’s processing capabilities. In the read path, fully redundant data with a duplication rate of 100% was used to simulate typical hot data access scenarios, maximize the hit rate of the read path, and evaluate OverCap’s access efficiency under ideal read workloads.

4.3.1. Benchmark

This subsection evaluates the baseline performance of OverCap volumes under three representative workload characteristics in the absence of SSD cache acceleration. The objective is to reveal the fundamental I/O processing capabilities of OverCap and to establish a baseline for subsequent analyses of the effectiveness of the SSD-based acceleration mechanism.

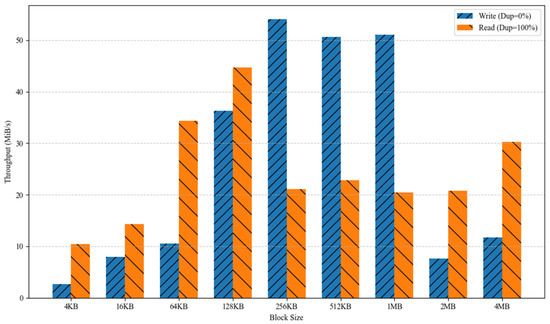

To investigate the influence of block size on OverCap volume behavior, read/write bandwidth measurements were performed under a fixed concurrency level of 32 threads. The results, shown in Figure 5, exhibit a “rise-then-fall” pattern in read and write bandwidth as the block size varies, reflecting the interaction between upper-layer I/O request sizes and OverCap’s fixed 4 KB block segmentation granularity.

Figure 5.

Bandwidth of the OCM benchmark across varying block sizes.

In the write path, the bandwidth exhibited a consistent upward trend as the block size increased from 4 KB to 256 KB, rising from 3.2 MiB/s to a peak of 53.8 MiB/s. It then plateaued within the 512 KB to 1 MB range, maintaining approximately 51–52 MiB/s before declining markedly to 14.5 MiB/s and 12.2 MiB/s under 2 MB and 4 MB block sizes, respectively. This behavior is primarily due to OverCap’s use of a fixed 4 KB block granularity. All incoming write requests must be decomposed into multiple 4 KB sub-blocks at the Device Mapper layer, each undergoing fingerprint computation, deduplication lookup, and logical-to-physical mapping updates. For mid-sized requests (e.g., 128 KB–512 KB), the number of sub-blocks remains moderate, allowing the kernel to handle fingerprinting and metadata operations efficiently, thereby forming a performance-optimal region. However, as request granularity increases further (e.g., 2 MB–4 MB), the number of sub-blocks per I/O operation grows substantially, introducing significant computational overhead from intensive deduplication and metadata processing, which ultimately results in diminished write bandwidth.

On the read path, bandwidth demonstrated a more intricate fluctuation pattern with respect to block size. From 4 KB to 128 KB, bandwidth increased steadily from 10.5 MiB/s to 44.7 MiB/s, indicating that, under a 100% duplication hotspot workload, OverCap efficiently resolved logical-to-physical mappings and achieved rapid data localization. However, in the 256 KB to 1 MB range, bandwidth decreased significantly to 20–23 MiB/s. This degradation arises from OverCap’s 4 KB block granularity, which necessitates decomposing large read requests into many sub-blocks. These sub-blocks, often mapped to physically non-contiguous locations under deduplication, result in fragmented access patterns. As OverCap operates atop the Device Mapper layer, it lacks the capability to merge these scattered blocks into a single I/O, requiring each 4 KB sub-block to be issued independently. This results in frequent address transitions and scheduling overhead, which increases latency and reduces overall read bandwidth.
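The cost of the fixed 4 KB granularity can be illustrated with a small Python sketch that splits a request into 4 KB sub-blocks and counts how many physically contiguous runs the resulting PBAs form. The helper names and the example mappings are hypothetical. As described above, OverCap issues each sub-block independently regardless of contiguity, so the run count here only indicates how fragmented the access pattern is.

```python
from typing import Dict, List

SUB_BLOCK = 4096  # OverCap's fixed 4 KB block granularity


def split_request(offset: int, length: int) -> List[int]:
    """Return the 4 KB logical block numbers touched by a byte-range request."""
    first = offset // SUB_BLOCK
    last = (offset + length - 1) // SUB_BLOCK
    return list(range(first, last + 1))


def physical_runs(lbas: List[int], l2p: Dict[int, int]) -> int:
    """Count maximal runs of consecutive PBAs along the request's LBAs."""
    runs = 0
    prev = None
    for lba in lbas:
        pba = l2p[lba]
        if prev is None or pba != prev + 1:
            runs += 1  # a gap in physical addresses starts a new run
        prev = pba
    return runs
```

A 1 MB read decomposes into 256 sub-blocks; with a sequential mapping they form a single run, whereas a deduplicated mapping that scatters them across the device can yield up to 256 separate runs.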

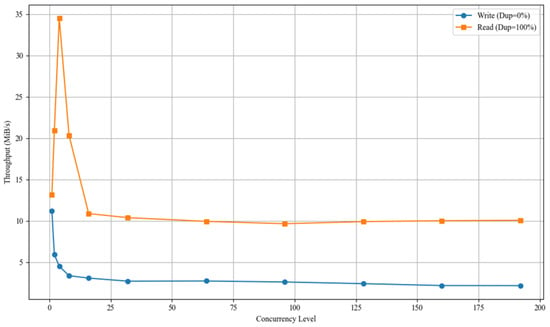

Additional experiments were conducted to evaluate the impact of concurrency levels on read and write performance, with the goal of assessing the I/O handling capabilities of OverCap volumes under high-concurrency access patterns. In this experiment, the block size was fixed at 4 KB, and concurrency levels were progressively scaled to observe trends in read and write bandwidth under the baseline scenario. The experimental results are shown in Figure 6.

Figure 6.

Bandwidth of the OCM benchmark under different concurrency levels.

On the write path, bandwidth decreased steadily with increasing concurrency, dropping from 11.2 MiB/s under a single thread to 2.5 MiB/s at 192 threads, a 77.7% reduction in throughput. This trend suggests that OverCap’s write performance is sensitive to concurrent I/O pressure, primarily limited by synchronization conflicts during metadata operations such as fingerprint generation, duplication checking, logical mapping construction, and reference counter updates. In contrast, the read bandwidth peaked at 34.5 MiB/s with two concurrent threads, then gradually declined and stabilized at approximately 10 MiB/s. This result implies that, even with fully duplicated data, OverCap’s address translation paths experienced structural contention and scheduling delays under high concurrency, ultimately constraining the horizontal scalability of the read path.

An experiment was designed to evaluate the impact of data duplication ratio on the read and write performance of the baseline scenario. Under fixed conditions of a 4 KB block size and 32 concurrent threads, the duplication ratio was incrementally varied, and the corresponding changes in bandwidth were measured. The experimental results are presented in Figure 7.

Figure 7.

Bandwidth of the OCM benchmark across varying data duplication rates.

The write bandwidth remained generally low, rising from 2.7 MiB/s to 3.3 MiB/s as the duplication ratio increased from 0% to 100%, an improvement of only 22%. In the baseline configuration, even duplicate data had to traverse the entire deduplication pipeline. Furthermore, upon deduplication hits, metadata updates were still necessary: most duplicate blocks had to be recorded in the metadata journal and have their reference counters updated. As a result, the increase in duplication ratio did not significantly reduce write overhead, yielding minimal performance gains. In contrast, read bandwidth consistently ranged between 10.3 and 10.7 MiB/s, and the duplication ratio had no noticeable effect on read performance. This outcome indicates that, under the baseline scenario, read requests for hot data were served entirely by backend physical storage, with read performance primarily constrained by metadata lookup efficiency and the latency of backend I/O operations.

4.3.2. Performance Comparison of Cache Optimization Mechanisms

This section presents a series of comparative experiments. OverCap volume performance was evaluated under two configurations: with caching optimizations enabled and without them (baseline). Specifically, OverCap incorporates a write aggregation-based asynchronous flush strategy (hereafter W-Cache) into the write path and an access hotness-based read cache hit mechanism (hereafter R-Cache) into the read path, with the goal of improving throughput under repetitive write and read workloads, respectively. The experiments were performed along three key dimensions, namely block size, number of concurrent threads, and data duplication ratio, to examine performance differences under varying I/O granularities, workload intensities, and data characteristics. Throughput served as the primary performance indicator. Additionally, a speedup metric was introduced to quantify the I/O performance improvement achieved through the tiered asynchronous I/O cache optimization strategy, defined as follows:

$$\mathrm{Speedup} = \frac{T_{cache}}{T_{base}}$$

where $T_{cache}$ denotes the I/O throughput with the cache mechanism enabled and $T_{base}$ represents the throughput without caching (baseline). A higher speedup value indicates a greater acceleration effect provided by the caching mechanism.
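The speedup metric is a throughput ratio, cached over baseline; the following Python helpers compute it per configuration. The function names and the sample values in the usage line are hypothetical and are not measurements from the figures.

```python
from typing import Dict


def speedup(t_cache: float, t_base: float) -> float:
    """Cached throughput divided by baseline throughput (same unit, e.g. MiB/s)."""
    if t_base <= 0:
        raise ValueError("baseline throughput must be positive")
    return t_cache / t_base


def speedup_table(cache: Dict[str, float], base: Dict[str, float]) -> Dict[str, float]:
    # Per-configuration speedups (e.g. keyed by block size), rounded to 0.1x
    return {k: round(speedup(cache[k], base[k]), 1) for k in base}


# Hypothetical values: 20.0 MiB/s cached vs 2.5 MiB/s baseline
print(speedup_table({"4k": 20.0}, {"4k": 2.5}))  # {'4k': 8.0}
```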

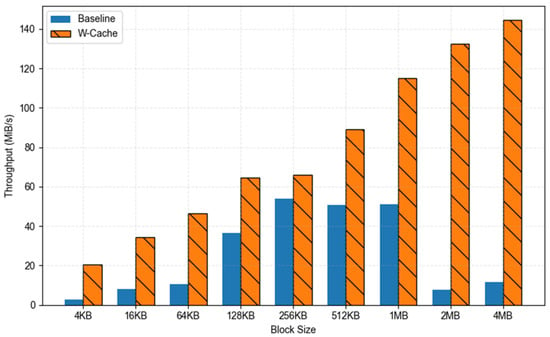

- (1)

- Write Performance Comparison: In this experiment, the data deduplication ratio was set to 0%, and the operating system page cache was bypassed to ensure that the measurements accurately represented the inherent performance of the underlying write path.

We first compared the write throughput of W-Cache and baseline using 32 concurrent threads across a range of data block sizes. The corresponding results are illustrated in Figure 8. W-Cache outperformed baseline at every block size tested. In small-block write scenarios (4 KB–64 KB), the baseline exhibited limited throughput because each individual write unit required full deduplication processing; the resulting frequent fingerprint calculations, index lookups, and metadata updates significantly constrained overall OverCap volume throughput. In contrast, W-Cache leveraged an aggregation mechanism to merge scattered writes into sequential batches, which substantially reduced the frequency of metadata processing and I/O scheduling overhead. This approach yielded a bandwidth improvement of up to 6.4×, highlighting its effectiveness for small-block I/O workloads. In medium-block write scenarios (128 KB–1 MB), baseline performance plateaued, while W-Cache continued to improve, with speedup factors ranging from 1.7× to 2.3×. This finding indicates that the cache aggregation mechanism continued to reduce redundant overhead and enhance throughput for medium-granularity writes. In large-block write scenarios (2 MB–4 MB), the baseline throughput declined significantly, while W-Cache exhibited greater adaptability, sustaining a speedup of more than 3.5×. This improvement was attributed not only to the request scheduling optimization enabled by the caching strategy but also to the inherent advantage of SSDs in handling large sequential writes. The aggregated write requests generated by W-Cache were more likely to trigger page alignment and internal buffering mechanisms in the underlying SSDs, thereby reducing write amplification and alleviating concurrency-induced jitter.

Figure 8.

Comparative write performance between baseline and W-Cache across block sizes (deduplication ratio = 0; threads = 32).

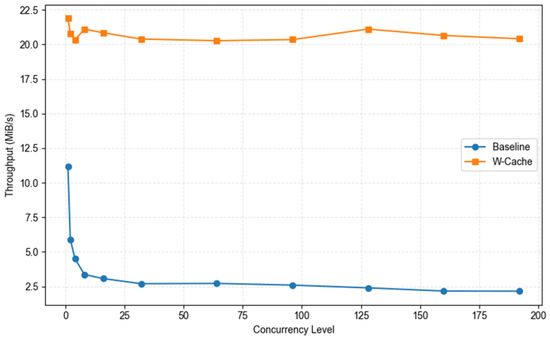

We conducted a performance evaluation of baseline and W-Cache under varying levels of thread concurrency, with the block size fixed at 4 KB. As shown in Figure 9, baseline exhibited a slight performance improvement under low concurrency (1–8 threads) but rapidly reached saturation beyond 16 threads, with bandwidth stabilizing between 2.5 MiB/s and 2.9 MiB/s. This bottleneck was attributed to contention for shared internal resources: concurrent threads competing for access to the fingerprint index and deduplication metadata led to increased lock contention and I/O queuing. In contrast, W-Cache maintained stable write bandwidth across all concurrency levels with negligible variance, sustaining approximately 20 MiB/s regardless of thread count and showing no discernible degradation under high concurrency. This stability is attributed to its frontend write aggregation buffer and background batch flushing strategy, which buffer and consolidate high-throughput write streams in memory, thereby alleviating pressure on the slower backend path. Notably, in high-concurrency scenarios, W-Cache significantly outperformed baseline, achieving up to a 7× speedup, confirming its robustness under multi-threaded stress and its strong parallel processing capabilities.

Figure 9.

Write throughput comparison between baseline and W-Cache under varying concurrency levels (deduplication ratio = 0; block size = 4 KB).

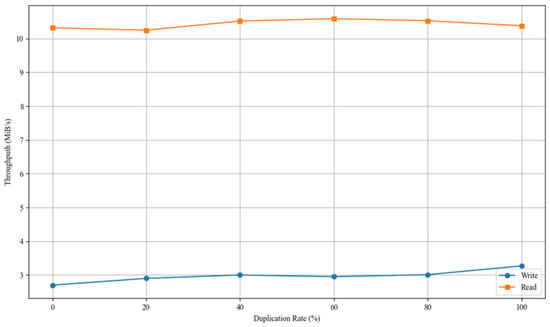

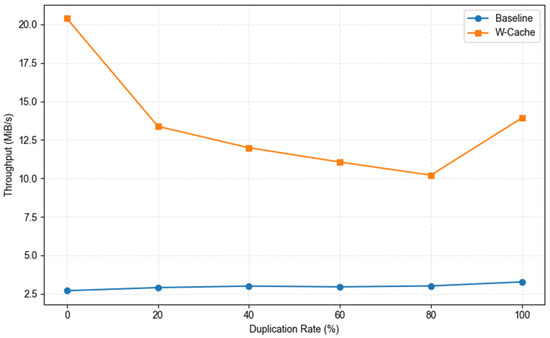

Furthermore, although the write performance test was conducted with a default duplication rate of 0%, we also evaluated performance under scenarios with varying levels of duplicate data. The experimental results, presented in Figure 10, indicate that, with 32 concurrent threads and a fixed block size of 4 KB, the write bandwidth of the W-Cache solution decreases from 20.6 MiB/s to 10.3 MiB/s as the duplication rate increases from 0% to 80%, exhibiting a pronounced downward trend. This phenomenon suggests that, as the proportion of duplicate data increases, the benefits of cache write merging are progressively offset by the additional overhead of fingerprint calculation, duplication detection, and metadata maintenance. However, when the duplication rate reaches 100%, nearly all writes bypass the disk due to deduplication hits, which simplifies the system write path and causes the bandwidth to recover to 13.8 MiB/s, forming a typical “V-shaped” fluctuation pattern. In contrast, the baseline system, due to its inherently lower write performance and absence of a cache absorption mechanism, consistently maintains a bandwidth of 2.6–2.9 MiB/s across all duplication rates, rendering it virtually insensitive to changes in duplicate data.

Figure 10.

Impact of data duplication rates on write performance: baseline versus W-Cache (threads = 32; block size = 4 KB).

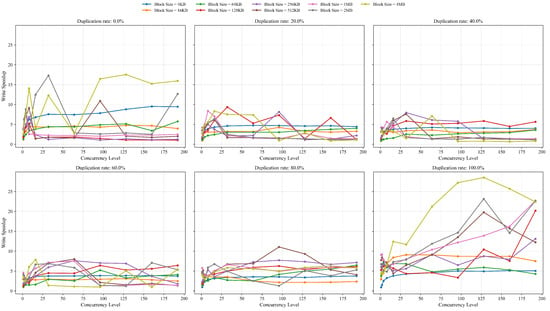

To comprehensively evaluate the performance adaptability of W-Cache under multidimensional workloads, we analyzed the write speedup trends of W-Cache relative to baseline across various combinations of data block sizes, concurrency levels, and data duplication rates. As illustrated in Figure 11, W-Cache consistently outperformed baseline in all test scenarios while exhibiting notable differences in acceleration effects under specific workload conditions.

Figure 11.

Evaluated write speedup achieved by W-Cache across test scenarios.

Specifically, for small block sizes (4 KB, 16 KB, and 64 KB), the speedup curves fluctuated little regardless of the duplication rate. For example, in the subfigures for duplication rates of 0% and 20%, the speedups for small-block writes were mostly clustered within the 2×–5× range, with no significant fluctuations across concurrency levels. This demonstrates that W-Cache’s aggregation mechanism is stable under fine-grained I/O workloads and that its performance advantage remains consistent. These results indicate that W-Cache substantially reduces the metadata update frequency and scheduling overhead associated with high-frequency small-block writes; however, the achievable acceleration remains bounded by the granularity of write aggregation and the upper limit of caching benefits.

In contrast, as the block size increased to 128 KB and beyond, particularly in the 100% duplication scenario, the speedup curves showed larger fluctuations and an overall rising trend. For instance, with 4 MB blocks at a 100% duplication rate, the speedup of W-Cache ranged from approximately 2× to nearly 28× across concurrency levels, a spread far larger than in the small-block cases. This result demonstrates that W-Cache achieves substantial throughput amplification under large-block, high-redundancy workloads.

Within the intermediate duplication range of 20–80%, the speedup also increased somewhat, but its variation was notably smaller than in the 100% scenario. This indicates that partial deduplication hits do yield performance benefits but are insufficient to fully exploit the synergy between cache aggregation and backend processing. These findings further show that, under compound workloads, the effectiveness of W-Cache scales nonlinearly as redundancy and I/O granularity increase, and that it performs particularly well in high-contention, bottleneck-prone environments.

(2) Read Performance Comparison: We conducted comparative experiments to assess the performance gains of R-Cache under hotspot data access scenarios. To maximize cache hit rates and highlight the advantages of the caching mechanism, all read tests used a data duplication rate of 100%, simulating high-reuse hotspot access patterns that make R-Cache’s optimization of random read performance clearly visible.

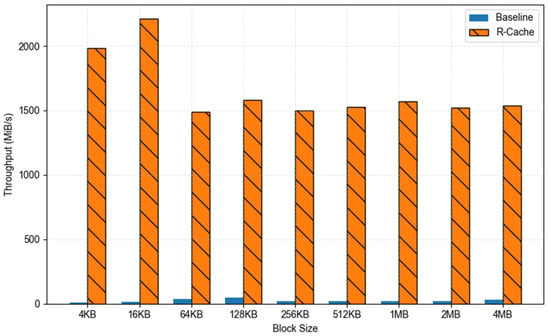

First, we compared the read throughput of R-Cache and baseline at a fixed concurrency of 32 threads across different block sizes. As shown in Figure 12, R-Cache consistently outperformed baseline across the entire block size range, with especially large gains for small blocks. In the 4 KB–64 KB range, baseline suffered from frequent block accesses and L2P mapping lookups: each request traversed the I/O stack and accessed the backend devices, keeping throughput persistently low. In contrast, R-Cache cached hotspot data on the SSD after the initial access, so subsequent hits bypassed the backend read path and throughput rose significantly, demonstrating its efficacy for random hotspot read workloads. As the block size increased (128 KB–1 MB), both configurations improved. R-Cache continued to benefit from sustained cache hits, maintaining throughput above 1500 MiB/s and demonstrating robust adaptability to medium-sized requests. For large blocks (2 MB–4 MB), baseline performance improved because access became effectively sequential, which narrowed the gap slightly; nonetheless, R-Cache retained a substantial performance advantage.
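The hit/miss behaviour described above resembles a least-recently-used (LRU) read cache placed in front of the backend read path. The Python sketch below assumes LRU eviction and uses an in-memory `OrderedDict` in place of the SSD tier; `HotReadCache`, `backend_read`, and `capacity_blocks` are hypothetical names, and the eviction policy is an assumption rather than something the text specifies for R-Cache.

```python
from collections import OrderedDict
from typing import Callable


class HotReadCache:
    """Hypothetical LRU read cache; an OrderedDict stands in for the SSD tier."""

    def __init__(self, backend_read: Callable[[int], bytes], capacity_blocks: int) -> None:
        self._backend_read = backend_read    # slow path: L2P lookup + backend read
        self._capacity = capacity_blocks
        self._cache: "OrderedDict[int, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, lba: int) -> bytes:
        if lba in self._cache:
            self._cache.move_to_end(lba)     # refresh recency on a hit
            self.hits += 1
            return self._cache[lba]
        self.misses += 1
        data = self._backend_read(lba)       # first access pays the full backend cost
        self._cache[lba] = data              # populate the cache for later hits
        if len(self._cache) > self._capacity:
            self._cache.popitem(last=False)  # evict the least recently used block
        return data


backend_calls = []


def slow_backend(lba: int) -> bytes:
    backend_calls.append(lba)
    return bytes([lba % 256]) * 4096


cache = HotReadCache(slow_backend, capacity_blocks=8)
for _ in range(100):
    for lba in range(4):                     # a four-block hot set
        cache.read(lba)
print(cache.hits, cache.misses, len(backend_calls))  # 396 4 4
```

When a four-block hot set is read 100 times through an eight-block cache, only the first four reads reach the backend; the remaining 396 are served from the cache, which is the effect that lets hotspot reads bypass the backend path.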

Figure 12.

Comparative read performance between baseline and R-Cache across block sizes (deduplication ratio = 100%; threads = 32).

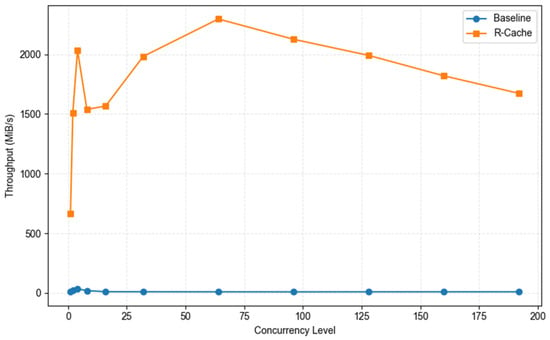

To evaluate the scalability of the caching mechanism under concurrent read scenarios, we performed a set of performance comparison experiments using a fixed block size of 4 KB. Figure 13 presents the experimental results. As the number of concurrent threads increased from 1 to 4, R-Cache’s read bandwidth significantly increased, indicating that hot data could be quickly cached and efficiently accessed through cache hits. In the 4–8 concurrency range, the curve briefly dipped, which may be attributed to incomplete cache residency stabilization, transient contention during index lookups, and scheduling overhead associated with switching a subset of requests between the cache and backend devices. As concurrency increased to 16, the cache hit rate and device parallelism jointly contributed to another rise in bandwidth, with bandwidth peaking at 64 concurrent threads. This suggests that, at this concurrency level, cache hit rate, index overhead, and backend queue depth were optimally aligned. Subsequently, as concurrency continued to increase, contention for index and mapping lookups, kernel queue management overhead, and SSD queuing latency became more pronounced, leading to a gradual decline in R-Cache throughput, although overall performance remained significantly superior to the baseline configuration. Overall, R-Cache effectively maintains read performance across varying concurrency levels and consistently outperforms the baseline under high-concurrency conditions, thereby demonstrating the strong scalability and adaptability of its caching mechanism.

Figure 13.

Read throughput evaluation of baseline and R-Cache under varying concurrency levels (deduplication ratio = 100%; block size = 4 KB).

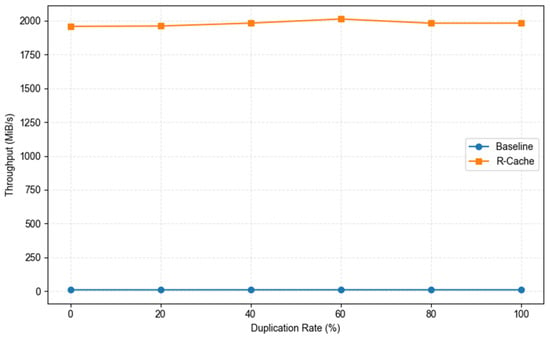

We then compared read performance under varying duplication rates, with a fixed block size of 4 KB and a concurrency level of 32, to examine the acceleration capability of R-Cache across different data duplication scenarios. As shown in Figure 14, the read bandwidth of both baseline and R-Cache remained relatively stable across the full duplication rate range, with R-Cache consistently sustaining approximately 2000 MiB/s and significantly outperforming baseline. This indicates that the acceleration of R-Cache does not depend on the degree of data deduplication but stems primarily from its structural optimization of the hot read path and its cache hit mechanism. The reason is that the mapping between logical and physical addresses is established at write time and persisted in the metadata. Processing a read request therefore consists of two steps: retrieving the physical address that corresponds to the target logical address from the mapping table, and then reading the data from that physical location. The duplication rate changes only how many logical addresses share each physical block, not the number of steps per read, so the read process is the same at every duplication rate. Read performance thus depends primarily on the efficiency of table lookup and data access and does not fluctuate significantly with the level of data redundancy.
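The two-step read path can be made concrete with a small Python sketch. `build_volume`, `read_block`, and `read_all` are hypothetical helpers: the volume is modeled as an L2P dictionary plus a list of physical blocks, and every duplicated logical block is assumed to point at physical block 0. Whatever the duplication rate, each read costs exactly one lookup and one physical fetch; only the number of distinct physical blocks changes.

```python
from typing import Dict, List, Tuple


def build_volume(num_blocks: int, dup_rate: float) -> Tuple[Dict[int, int], List[bytes]]:
    """Hypothetical volume: the first (1 - dup_rate) share of logical blocks holds
    unique data; every remaining logical block maps to physical block 0."""
    unique = max(1, round(num_blocks * (1 - dup_rate)))
    physical = [i.to_bytes(4, "little") * 1024 for i in range(unique)]  # 4 KB blocks
    l2p = {lba: (lba if lba < unique else 0) for lba in range(num_blocks)}
    return l2p, physical


def read_block(l2p: Dict[int, int], physical: List[bytes], lba: int,
               stats: Dict[str, int]) -> bytes:
    pba = l2p[lba]                 # step 1: logical-to-physical lookup
    stats["lookups"] += 1
    data = physical[pba]           # step 2: fetch the block from physical storage
    stats["device_reads"] += 1
    return data


def read_all(l2p: Dict[int, int], physical: List[bytes]) -> Dict[str, int]:
    stats = {"lookups": 0, "device_reads": 0}
    for lba in sorted(l2p):
        read_block(l2p, physical, lba, stats)
    return stats


for rate in (0.0, 0.2, 0.8, 1.0):
    l2p, physical = build_volume(100, rate)
    print(f"dup={rate:.0%}: unique blocks={len(physical)}, {read_all(l2p, physical)}")
```

For 100 logical blocks, `read_all` reports 100 lookups and 100 device reads at every duplication rate; only the count of unique physical blocks differs.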

Figure 14.

Read performance under varying duplication rates: a comparison of baseline and R-Cache (block size = 4 KB; threads = 32).

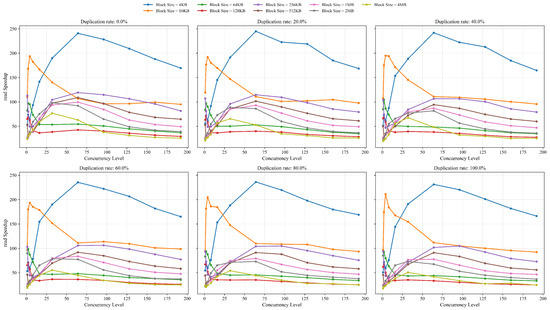

To comprehensively evaluate the read performance of OverCap under multidimensional workloads, we quantified the overall speedup of R-Cache relative to baseline across various combinations of block size, concurrency level, and data duplication rate, as illustrated in Figure 15. The overall results show that R-Cache consistently delivered substantial performance improvements across most test configurations, highlighting robust adaptability to diverse workload characteristics.

Figure 15.

Measured read speedup of R-Cache under varying conditions.

Specifically, R-Cache achieved the largest throughput gains with small block sizes and moderate concurrency. For example, under the 4 KB workload, R-Cache reached a peak speedup of over 220× and maintained this level across all duplication ratios, indicating that it efficiently identifies and caches hotspot data in fine-grained, high-frequency random access scenarios. By serving requests from frontend cache hits, R-Cache significantly reduces access latency and relieves backend I/O pressure. Under large-block random read workloads (e.g., 1 MB and 2 MB), baseline partially benefited from reduced address translation overhead owing to the larger block sizes, yet R-Cache still maintained a stable speedup of over 2×. Its mechanism is therefore suited not only to fragmented small I/O but also to medium-to-large block workloads, demonstrating strong workload generalizability.

Moreover, as Figures 14 and 15 show, the speedup distributions remained highly consistent across duplication rate settings and did not fluctuate as redundancy increased. This further confirms that the performance benefits of R-Cache stem primarily from identifying hot data and optimizing the hit path rather than from data redundancy.

The speedup first increased and then plateaued as the number of concurrent threads grew, peaking in the medium concurrency range. This indicates that R-Cache capitalizes on the access aggregation effects of moderate parallelism, improving both the cache hit rate and read path concurrency. Under extremely high concurrency, the gains began to saturate, mainly because of metadata consistency maintenance, cache contention, and writeback latency within the caching layer. Overall, R-Cache scaled well with concurrency and delivered substantial throughput improvements across a wide range of complex workload combinations, with particularly large advantages in small-I/O, high-concurrency, and hot-read scenarios.

4.4. Experimental Validation in Large-Scale Distributed Storage Systems

Finally, to verify the practical applicability of the proposed OCM method in large-scale distributed storage environments, we constructed a large-scale Ceph storage system on the Kubernetes platform (version 1.28.12). On each of four physical servers, OCM was used to create 32 OverCap volumes, each with a logical capacity of 24 TB (the largest single-disk capacity available on the market at the time of writing). These virtual disks were attached to 32 Ceph OSD pods per server, yielding 128 OSD nodes in total and a Ceph storage cluster with a logical capacity of approximately 3 PB (128 × 24 TB = 3072 TB). Deploying a system of this scale with traditional physical disks would require 128 hard drives of 24 TB each, whereas this experiment consumed only 8.8 TB of actual physical capacity (4 × 2.2 TB). Building an equivalent Ceph system with conventional disks would incur an estimated disk cost of approximately USD 61,000; with OCM, this cost was reduced to about USD 2000, a saving of roughly 96.72%.
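The capacity and cost figures above can be checked with a few lines of Python. All inputs are taken from the text; the two disk costs are the estimates stated above, not independently derived prices, and the constant names are illustrative.

```python
# Inputs from the deployment described above; costs are the stated estimates (USD).
OSDS_PER_SERVER = 32
SERVERS = 4
LOGICAL_TB_PER_VOLUME = 24
PHYSICAL_TB_PER_SERVER = 2.2
COST_PHYSICAL_DISKS_USD = 61_000
COST_OCM_USD = 2_000

osds = OSDS_PER_SERVER * SERVERS                     # 128 OSDs
logical_tb = osds * LOGICAL_TB_PER_VOLUME            # 3072 TB, i.e. about 3 PB
physical_tb = SERVERS * PHYSICAL_TB_PER_SERVER       # 8.8 TB
overcommit = logical_tb / physical_tb                # logical-to-physical capacity ratio
saving = 1 - COST_OCM_USD / COST_PHYSICAL_DISKS_USD  # fraction of disk cost saved

print(f"{osds} OSDs, {logical_tb} TB logical, {physical_tb:.1f} TB physical")
print(f"over-commit ratio ~{overcommit:.0f}x, disk-cost saving {saving:.2%}")
# 128 OSDs, 3072 TB logical, 8.8 TB physical
# over-commit ratio ~349x, disk-cost saving 96.72%
```

The logical capacity exceeds the physical capacity by a factor of roughly 349, and the cost ratio reproduces the 96.72% saving.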

5. Discussion

The OCM proposed in this paper, through its integrated design of thin provisioning, deduplication awareness, and tiered asynchronous I/O, enables reproducible construction of overcapacity virtual disks from constrained physical resources. It also maintains acceptable resource utilization and stable I/O performance under representative workloads, validating its feasibility and practical value as a supporting platform for large-scale distributed storage simulation. Building on these results, the following discussion addresses the research objectives, resource consumption and performance optimization, and simulation scale, to further clarify the positioning and limitations of OCM.

Research Objectives. The objective of OCM is to provide a low-cost, controllable, and reproducible virtual disk tailored to large-scale storage research, emphasizing the usability of over-provisioned capacity and a balance between resource consumption and performance rather than extreme performance optimization. In contrast, existing studies primarily focus on enhancing the performance of a single virtual disk, often without systematically accounting for the consumption of general-purpose resources such as CPU and memory. Although Ref. [8] also integrated thin provisioning and deduplication, its objective was to improve the production availability of HDD-based primary storage, which differs fundamentally from OCM’s positioning as a simulation-oriented approach for large-scale expansion. Therefore, this paper does not include such comparisons and instead focuses on validating OCM’s capacity availability and resource–performance balance in large-scale storage simulations.