Abstract

As cyberattacks grow increasingly sophisticated, the timely exchange of Cyber Threat Intelligence (CTI) has become essential for enhancing situational awareness and enabling proactive defense. CTI sharing faces several challenges, including the timely dissemination of threat information, the need for privacy and confidentiality, and the availability of data even under unstable network conditions. In addition to security and privacy, latency and throughput are critical performance metrics when selecting a platform for CTI sharing. Substantial effort has been devoted to developing effective CTI sharing solutions, and existing systems generally adopt either centralized or blockchain-based architectures. However, centralized models suffer from scalability bottlenecks and single points of failure, while the slow, throughput-limited transactions of blockchains make them unsuitable for real-time, reliable CTI sharing. To address these challenges, we propose a DDS-based framework that automates data sanitization, STIX-compliant structuring, and real-time dissemination of CTI. Our prototype evaluation demonstrates low latency, throughput that scales linearly with the configured send rate up to 125 messages per second, and 100% delivery success across all scenarios, while sustaining low CPU and memory overhead. These findings highlight the unique ability of DDS to overcome the timeliness, security, automation, and reliability challenges of CTI sharing.

1. Introduction

In recent years, cyberattacks have risen sharply, with serious consequences for organizations worldwide. The “WannaCry” ransomware attack in 2017 severely disrupted more than 60 trusts within the UK’s National Health Service (NHS), preventing access to critical patient data and causing surgery delays and appointment cancellations [1]. The attack rapidly spread to over 200,000 systems in more than 150 countries [1]. Additionally, a recent report indicates a 435% rise in ransomware attacks from 2019 to 2020 [2]. According to another report, 68% of industry practitioners believe that cybersecurity risks threaten their businesses [3], and 56% of utilities have been targeted by cyberattacks [4]. Both the frequency of cyberattacks and the costs borne by victims are increasing: the projected financial impact of the SolarWinds attack alone is approximately $100 billion [5]. Cyber Threat Intelligence (CTI) has become a crucial element of standard security practice, helping organizations prioritize risks and detect, mitigate, and contain attacks in a timely manner [6]. A SANS report reveals that approximately 60% of organizations currently use CTI in their security procedures, while 25% intend to incorporate it into their security operations; approximately 47% have dedicated CTI teams [7]. Research indicates that organizations capable of leveraging CTI data improve their cybersecurity posture by 2.5 times relative to their counterparts [8].

Cyber threat intelligence refers to evidence-based knowledge about attacks that is collected, processed, and analyzed to understand existing or emerging threats in cyberspace, aiding in the prevention and detection of cyberattacks [9]. It plays a crucial role in enhancing situational awareness, operational efficiency, and response capabilities in cybersecurity [10]. Cybersecurity is a broad and increasingly complex field: as our reliance on sophisticated technology grows, the threat landscape expands and evolves in dynamic and challenging ways. While traditional cybersecurity methods that focus on identifying and addressing vulnerabilities, weaknesses, and misconfigurations remain essential, they are no longer sufficient on their own [11]. One challenge is that individual organizations lack access to the full range of information necessary to accurately identify, detect, and prevent cyberattacks; no organization can protect itself effectively in isolation. The exchange of CTI is therefore crucial for the timely and cost-effective identification of, and response to, future attacks [12], and there is significant demand for extensive CTI sharing. Effective CTI exchange can enhance the CTI of individual organizations [13]: by sharing information, each organization gains a more comprehensive understanding of the threat landscape, both in general and in terms of specific indicators for identifying attackers. The threat faced by organization X today may be encountered by organization Y tomorrow, especially if both are targeted by the same adversary. If organization X shares its specific insights and experiences about a threat as a cyber-threat indicator, organization Y can use this information to address the threat proactively, and vice versa.
The existing literature on cybersecurity information sharing highlights numerous potential benefits for organizations in both the public and private sectors, such as improved incident response, deterrence, and cost savings. Despite these advantages, however, successful cybersecurity information sharing remains difficult to achieve [14].

Traditional methods such as e-mail and online portals are inefficient and insecure [15], while centralized models suffer from performance bottlenecks and a single point of failure. Numerous standards and frameworks have been developed for CTI sharing, including Structured Threat Information Expression (STIX) and Trusted Automated Exchange of Intelligence Information (TAXII). However, automatic dissemination of CTI requires not only standards for producing threat information in a structured format but also a scalable real-time system for delivering it [16]. CTI sharing faces several challenges, including the timely dissemination of threat information, the need for privacy and confidentiality, and the availability of data even when the network is unstable. In addition to security and privacy, latency and throughput are critical performance metrics when choosing a suitable platform for CTI sharing [17]. The publish-subscribe model has been used to address scalability and automation in previously developed systems [18,19]. Kang et al. [20] validated the effectiveness of the Real-Time Data Distribution Service (RDDS) in cyber-physical systems (CPS), demonstrating that it reduces computation and communication overhead while ensuring reliable and timely data delivery to subscribers, even under challenging network conditions. However, to the best of our knowledge, no prior work has applied the Data Distribution Service (DDS) to CTI sharing.

Existing CTI sharing systems generally adopt either centralized or blockchain-based architectures. In centralized models, participants connect to a central hub, which creates a single point of failure: if the hub experiences downtime or degraded performance, the entire CTI exchange process is disrupted, and attackers can compromise the system through this single entry point. Furthermore, centralized architectures struggle to scale in large operational environments [21]. Although some centralized systems offer efficient performance, such as the TAXII-based implementation proposed by Wang et al. [22], which achieves a relatively low end-to-end latency of 48–72 ms, they remain vulnerable to these inherent structural weaknesses. Similarly, commercial centralized CTI sharing tools such as IBM X-Force Exchange, AT&T Cybersecurity USM Anywhere, CrowdStrike Falcon X, OpenCTI, ThreatConnect, ThreatQuotient, and AlienVault Open Threat Exchange (OTX) face the same single-point-of-failure issue and often produce overwhelming volumes of data. The Malware Information Sharing Platform (MISP), an open-source initiative for exchanging malware and threat intelligence among trusted parties, offers a broader set of functionalities than our proposed solution. However, MISP lacks a Quality of Service (QoS) policy to ensure the reliable and durable delivery of CTI.
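To make the durability side of such a QoS policy concrete, the following in-process toy shows a reader that joins late still receiving a bounded history of earlier samples, whereas a volatile reader sees only new ones. It is a conceptual sketch under stated assumptions: `ToyTopicBus`, its `history_depth` parameter, and the topic name are hypothetical, it does not model network loss or retransmission, and it is not the RTI Connext DDS API.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List

Callback = Callable[[dict], None]


class ToyTopicBus:
    """Hypothetical in-process bus illustrating DDS-style QoS ideas; not a DDS API."""

    def __init__(self, history_depth: int = 10) -> None:
        # Bounded per-topic history, loosely analogous to TRANSIENT_LOCAL
        # durability combined with a KEEP_LAST(history_depth) history policy.
        self._history: Dict[str, Deque[dict]] = defaultdict(
            lambda: deque(maxlen=history_depth))
        self._readers: Dict[str, List[Callback]] = defaultdict(list)

    def publish(self, topic: str, sample: dict) -> None:
        self._history[topic].append(sample)
        # Every reader matched at publish time receives the sample.
        for deliver in self._readers[topic]:
            deliver(sample)

    def subscribe(self, topic: str, deliver: Callback, durable: bool = True) -> None:
        if durable:
            # A late-joining durable reader first receives the retained history.
            for sample in self._history[topic]:
                deliver(sample)
        self._readers[topic].append(deliver)


bus = ToyTopicBus(history_depth=2)
for i in (1, 2, 3):
    bus.publish("cti/indicators", {"id": i})

late_durable: List[dict] = []
late_volatile: List[dict] = []
bus.subscribe("cti/indicators", late_durable.append)                  # replays ids 2, 3
bus.subscribe("cti/indicators", late_volatile.append, durable=False)  # live data only
bus.publish("cti/indicators", {"id": 4})

print([s["id"] for s in late_durable])   # [2, 3, 4]
print([s["id"] for s in late_volatile])  # [4]
```

Bounding the history keeps memory fixed at the cost of dropping the oldest samples for very late joiners, which is the same trade-off a KEEP_LAST history setting makes.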

Timeliness is a critical characteristic of actionable CTI. Rapid distribution enables defenders to mitigate vulnerabilities before they are exploited and to reduce infection rates. Consequently, CTI sharing platforms must be able to disseminate actionable intelligence in real time, so that recipients have immediate access to relevant data [21]. While blockchain-based architectures offer decentralization, they often fail to meet CTI sharing requirements for timeliness, scalability, throughput, and reliability. The study in [21] evaluated 11 blockchain-based and 3 machine learning-based CTI sharing solutions against key CTI sharing challenges and found that none addressed network bandwidth limitations and only 27% of the blockchain-based solutions attempted to improve timeliness.

For example, the blockchain-based framework in [18] recorded a latency of 3.09 s, a throughput of 0.32 msg/s, and a 96% success rate at a send rate of 100 msg/s. Another blockchain-based solution, proposed by Hajizadeh et al. [17], achieved a throughput of 49.7 msg/s at a send rate of 50 msg/s but only 59.7 msg/s at 100 msg/s, with an average latency of 10 s at 100 msg/s and a maximum latency that rose from 14 s at 1000 transactions to nearly 36 s as the load increased.

To overcome these limitations, we propose and evaluate a real-time, secure CTI sharing framework based on the Data Distribution Service (DDS) [23] and leveraging Connext DDS Secure. Connext DDS Secure is particularly promising because of its ability to ensure privacy and scalability while delivering strong performance in terms of latency and throughput. In our evaluation, under the same setup used for the blockchain-based solutions (a 100 msg/s send rate and a 1000-message workload), our DDS-based framework sustained a throughput of 100 msg/s, an average latency between 0.910 ms (32-byte messages) and 0.995 ms (8192-byte messages), and a 100% success rate. It recorded a maximum latency of 3.7 ms with full security enabled while requiring fewer CPU and memory resources than the blockchain-based solutions. These results demonstrate that DDS can meet stringent timeliness and reliability requirements that centralized and blockchain-based solutions struggle to satisfy. The objectives of our framework are to improve situational awareness, reduce manual data processing, and foster better organizational collaboration by automating threat analysis and ensuring the timely and secure dissemination of threat information.
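The gap between these figures can be made explicit with simple arithmetic. The sketch below recomputes delivery efficiency (throughput divided by send rate) and latency relative to our framework, using only the values quoted in this section at a 100 msg/s send rate. It takes the 0.995 ms average (8192-byte messages) as the DDS reference, the less favorable of our two averages; because [18] reports a single latency figure while [17] and our framework report averages, the ratios are indicative rather than exact.

```python
# Figures quoted in this section; all were measured at a 100 msg/s send rate.
SEND_RATE = 100.0  # msg/s

# name -> (throughput in msg/s, latency in seconds)
RESULTS = {
    "blockchain [18]": (0.32, 3.09),
    "blockchain [17]": (59.7, 10.0),
    "DDS (this work)": (100.0, 0.995e-3),  # 8192-byte messages, higher average
}


def delivery_efficiency(throughput: float, send_rate: float = SEND_RATE) -> float:
    """Fraction of the offered load delivered per second."""
    return throughput / send_rate


dds_latency = RESULTS["DDS (this work)"][1]
for name, (throughput, latency) in RESULTS.items():
    print(f"{name}: efficiency {delivery_efficiency(throughput):.1%}, "
          f"latency {latency / dds_latency:,.0f}x that of DDS")
```

With these inputs, the blockchain latencies come out roughly 3,100 and 10,000 times higher than the DDS average, and delivery efficiency is 0.32%, 59.7%, and 100%, respectively.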

The main contributions of this paper are summarized as follows:

- We propose a DDS-based CTI sharing framework that addresses key CTI sharing challenges such as reliable and durable delivery, timeliness, high throughput, scalability, automation, and secure dissemination.

- We design and implement an automated data sanitization tool that systematically removes sensitive information, thereby preserving organizational privacy during CTI sharing.

- We develop an automated STIX generation system that converts raw, sanitized data into structured CTI, enabling efficient, machine-consumable, and human-readable intelligence exchange.

- We implement a prototype of the proposed framework and conduct a detailed performance evaluation. The results demonstrate sub-millisecond average latency, linear throughput scaling with configured send rates, and 100% delivery success across test scenarios, suggesting the practical feasibility of DDS for real-time CTI sharing.
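As an illustration of what automated sanitization can involve, the sketch below redacts internal (RFC 1918) IPv4 addresses and e-mail addresses from a raw log line while leaving public addresses, which are the shareable indicators, intact. It is a minimal sketch under an assumed policy, not the sanitization tool evaluated in this paper; the redaction rules and placeholder tokens are hypothetical.

```python
import ipaddress
import re

# Hypothetical policy: mask internal RFC 1918 addresses and e-mail addresses,
# keep public addresses because they are the indicators worth sharing.
INTERNAL_NETS = [ipaddress.ip_network(n)
                 for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")


def _mask_internal_ip(match: re.Match) -> str:
    text = match.group(0)
    try:
        addr = ipaddress.ip_address(text)
    except ValueError:
        return text  # not a valid address (e.g. "999.1.1.1"); leave unchanged
    if any(addr in net for net in INTERNAL_NETS):
        return "[REDACTED-INTERNAL-IP]"
    return text


def sanitize(line: str) -> str:
    """Remove organization-identifying details from one raw log line."""
    line = EMAIL_RE.sub("[REDACTED-EMAIL]", line)
    return IPV4_RE.sub(_mask_internal_ip, line)


print(sanitize("alice@corp.example logged in from 10.1.2.3; beacon to 198.51.100.7"))
```

The explicit RFC 1918 list is used instead of `ipaddress`'s `is_private` flag, because that flag also covers documentation and other reserved ranges that an organization might legitimately want to share.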

In summary, we show that integrating DDS with STIX and our proposed automated sanitization pipeline results in a novel CTI sharing solution that guarantees data privacy through sanitization, ensures interoperability and automation via standards-compliant STIX generation, and achieves real-time, scalable, reliable, and secure dissemination of CTI. To the best of our knowledge, this is the first work that applies DDS to CTI sharing, demonstrating its unique ability to overcome the timeliness, scalability, security, automation, throughput, reliability, and interoperability challenges of CTI sharing.
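To show what standards-compliant structuring produces, the sketch below wraps a single sanitized IPv4 value in a STIX 2.1 Indicator object using only the Python standard library. The property names (`type`, `spec_version`, `id`, `created`, `modified`, `pattern`, `pattern_type`, `valid_from`) follow the STIX 2.1 specification. The helper name, the default `name` text, and the restriction to IPv4 indicators are hypothetical simplifications; a production pipeline might instead use a dedicated library such as the OASIS `stix2` package.

```python
import ipaddress
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp(dt: datetime) -> str:
    # STIX 2.1 timestamps are UTC with a trailing "Z"; millisecond precision here.
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


def ipv4_indicator(ip: str, name: str = "Malicious IPv4 address") -> dict:
    """Hypothetical helper: wrap one sanitized IPv4 value as a STIX 2.1 Indicator."""
    ipaddress.IPv4Address(ip)  # raises ValueError for malformed input
    now = stix_timestamp(datetime.now(timezone.utc))
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "pattern": f"[ipv4-addr:value = '{ip}']",
        "pattern_type": "stix",
        "valid_from": now,
    }


print(json.dumps(ipv4_indicator("198.51.100.7"), indent=2))
```

Validating the address before interpolating it into the pattern string keeps malformed or quote-containing input out of the STIX pattern.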

This paper is organized as follows. Section 2 reviews the literature, highlighting existing research and its contributions. Section 3 provides essential background on DDS, which serves as the foundation for our proposed solution. Section 4 details the architecture of the proposed framework. Section 5 introduces our threat model and demonstrates the framework’s robustness against potential attacks. Section 6 describes the experimental setup used to evaluate the framework’s performance. Finally, Section 7 concludes the paper and offers recommendations for future research.

2. Literature Review

2.1. Motivation for CTI Sharing

A recent cybercrime study by Accenture indicates a significant increase in the financial losses organizations suffer from cyberattacks, projecting that within the next five years cyber threats will jeopardize an estimated $5.2 trillion in assets of companies vulnerable to these attacks [24]. Organizations do not rely exclusively on the CTI they collect themselves; instead, they incorporate threat intelligence from various sources to build a comprehensive view of the threat landscape. An organization that uses only its own data finds it much more difficult to respond to threats that may already have affected other organizations. A typical method of sharing CTI is through lists of indicators of compromise (IOCs), which usually consist of unordered collections of known indicators identified by monitoring systems or other organizations [25]. Not all threat actors possess the same level of knowledge and resources for carrying out attacks, so understanding the behavior and objectives of these actors is a critical component of CTI. Deeper knowledge of threat actors and their tactics is essential for conducting threat assessments and prioritizing security controls [26].
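Because an IOC list is an unordered collection, a natural in-memory representation is a set, which gives constant average-time membership tests when checking observed events against shared indicators. The sketch below is illustrative only; the indicator values and the event format are assumptions, and lower-casing suits domains and hexadecimal hashes but not every indicator type (URL paths, for example, can be case-sensitive).

```python
from typing import Iterable, List, Set


def load_iocs(values: Iterable[str]) -> Set[str]:
    # Normalize case and whitespace so equivalent indicators collapse to one entry.
    return {v.strip().lower() for v in values if v.strip()}


def match_events(observed: Iterable[str], iocs: Set[str]) -> List[str]:
    """Return observed values that appear in the IOC set, preserving input order."""
    return [value for value in observed if value.strip().lower() in iocs]


iocs = load_iocs(["evil.example", " 198.51.100.7 ", ""])
print(match_events(["EVIL.example", "good.example", "198.51.100.7"], iocs))
# ['EVIL.example', '198.51.100.7']
```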

The vast majority of organizations believe that sharing threat information is one of the most effective strategies to counter today’s adversaries [27]. A SANS study supports this claim, indicating that approximately 81% of security professionals use shared CTI to enhance their cybersecurity capability. The same study indicated that the volume of threat information produced and consumed has risen consistently over the past few years, reflecting substantial growth in the number of organizations actively engaged in information sharing [28]. However, the availability of CTI does not necessarily stop attackers from continuing to exploit a specific attack vector [29]. An exploit developed by the NSA, which was leaked in April 2017, was used by the WannaCry ransomware just a month later; this occurred because many systems had not been updated, even though patches had been available for a month prior to the attack [30,31]. Understanding adversaries should therefore be a collective effort: when an attacker targets an organization, it is beneficial to share indicators and Tactics, Techniques, and Procedures (TTPs) with other organizations that may face the same threat [32].

Cyber threat information is beneficial if it provides complete and relevant information in a timely manner [33]. In the past, organizations depended on informal methods such as e-mails, phone calls, or face-to-face meetings to share cybersecurity-related information. A Ponemon Institute survey revealed that 39% of participants identified slow and manual sharing processes as barriers to full participation in CTI exchange, while 24% said these processes prevented them from sharing altogether [34]. Slow processes, such as copying and pasting spreadsheets or meeting with peers to exchange information, hinder effective collaboration. Data processing is primarily performed manually, requiring analysts to assess problems, implement solutions, and share the findings. This manual preparation is labor-intensive and time-consuming, causing information to become outdated quickly. Analysts must prepare outgoing information for trusted stakeholders and analyze incoming intelligence for factors such as content relevance, source reliability, and impact, and the triage of CTI depends on the risk priority assigned at the end of this analysis. Automation can help reduce human errors such as miscommunication [35]. Certain cyberattacks happen within seconds across many organizations using the same or related attack patterns, so the rapid dissemination of information is a crucial characteristic of CTI exchange given the limited response window [36]. The importance of timely sharing is evident from how quickly disseminated data can lose its value, within an hour or over the course of a few days: research indicates that 60% of malicious domains have a lifespan of one hour or less [37]. Using CTI is thus a race against the clock, and the timely distribution of information about malicious activities is crucial for proactive defense strategies.
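Given such short lifetimes, a consumer may want to discard indicators older than some freshness window before acting on them. The sketch below filters STIX-like indicator dictionaries by age. The one-hour window is an assumption that simply mirrors the domain-lifespan statistic above, not a recommended setting, and each indicator is assumed to carry an ISO 8601 UTC timestamp in a `valid_from` field.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Assumed freshness window, chosen only to mirror the one-hour statistic above.
FRESHNESS_WINDOW = timedelta(hours=1)


def _parse_stix_time(ts: str) -> datetime:
    # datetime.fromisoformat() before Python 3.11 rejects a trailing "Z".
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def fresh_indicators(indicators: List[Dict[str, str]],
                     now: Optional[datetime] = None) -> List[Dict[str, str]]:
    """Keep only indicators whose valid_from lies within the freshness window."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [ind for ind in indicators
            if now - _parse_stix_time(ind["valid_from"]) <= FRESHNESS_WINDOW]


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
feed = [
    {"id": "a", "valid_from": "2024-01-01T11:30:00Z"},  # 30 minutes old
    {"id": "b", "valid_from": "2024-01-01T09:00:00Z"},  # 3 hours old
]
print([ind["id"] for ind in fresh_indicators(feed, now)])  # ['a']
```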

2.2. Architectures for CTI Sharing

Private organizations may use one of two principal architectures to distribute CTI. The first is a centralized approach, in which a single entity is responsible for managing and distributing CTI to all participants. The alternative is the peer-to-peer model, in which private entities exchange CTI directly without intermediaries, significantly improving the system’s responsiveness. This method allows CTI consumers to obtain data directly from the providers, eliminating the single point of failure that can occur in a centralized architecture [38]. The exchange of CTI among similar entities can be particularly beneficial, as these entities often face adversaries that use similar TTPs and target the same types of infrastructure. Cooperation among organizations improves the efficacy and efficiency of countering advanced cyber threats, enabling organizations to mitigate risks and improve their overall security posture [38]. However, such cooperation is hindered by the lack of a reliable and secure CTI sharing platform [39].

2.3. Blockchain- and Cloud-Based Solutions

Kamhoua et al. [40] introduced a game-theoretic model to address the complexities of CTI sharing in cloud environments. The model weighs the benefits and drawbacks of sharing CTI, capturing the strategic interactions between cloud users and the conditions under which they are motivated to participate in collective defense by sharing valuable threat information. Building on this line of work, Schaberreiter et al. [41] proposed a quantitative methodology for evaluating the trustworthiness of CTI sources based on a set of defined quality parameters. Recognizing that CTI sources vary in reliability, the authors developed a framework that continuously assesses the quality of each source by evaluating factors such as timeliness, extensiveness, verifiability, and false positives. These works differ from ours, as we aim to facilitate secure, timely, and automated CTI sharing.
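A quantitative source assessment of this kind can be illustrated as a weighted combination of normalized factors. The sketch below is not Schaberreiter et al.’s formulation; the [0, 1] normalization, the weights, and the decision to enter the false-positive rate inverted are hypothetical choices made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SourceMetrics:
    """Per-source observations, each normalized to [0, 1] (assumed scale)."""
    timeliness: float           # 1.0 = indicators arrive with no delay
    extensiveness: float        # 1.0 = full context accompanies each indicator
    verifiability: float        # 1.0 = every indicator can be independently confirmed
    false_positive_rate: float  # fraction of indicators later found to be benign


# Hypothetical weights; they sum to 1 so the score also lies in [0, 1].
WEIGHTS = {"timeliness": 0.3, "extensiveness": 0.2,
           "verifiability": 0.3, "false_positives": 0.2}


def quality_score(m: SourceMetrics) -> float:
    values = (m.timeliness, m.extensiveness, m.verifiability, m.false_positive_rate)
    if not all(0.0 <= v <= 1.0 for v in values):
        raise ValueError("all metrics must be normalized to [0, 1]")
    return (WEIGHTS["timeliness"] * m.timeliness
            + WEIGHTS["extensiveness"] * m.extensiveness
            + WEIGHTS["verifiability"] * m.verifiability
            # False positives reduce quality, so the rate enters inverted.
            + WEIGHTS["false_positives"] * (1.0 - m.false_positive_rate))


print(round(quality_score(SourceMetrics(0.9, 0.5, 0.8, 0.1)), 2))  # 0.79
```

Recomputing the score whenever new observations about a source arrive gives a simple form of the continuous reassessment described above.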

A recent study [42] examines prevalent issues in CTI sharing and proposes a model that uses the security features of blockchain. The authors built a CTI sharing platform on the STIX 2.0 standard and Hyperledger Fabric to address major information sharing challenges, specifically interoperability, trust, and privacy. The solution uses Fabric channels and smart contracts to improve the efficiency and effectiveness of CTI exchange among parties. The authors claim that their model offers an effective and efficient framework for a CTI sharing network capable of overcoming the trust barriers and data privacy concerns inherent in this domain. However, the performance of the proposed solution was not evaluated.

A system for sharing CTI using Hyperledger Fabric and Software-Defined Networking (SDN) was developed to combine the security features of a private blockchain with the management capabilities of SDN, enhancing the protection of threat data and reducing the detection-to-response time. Performance metrics such as throughput and latency in data transactions from the Fabric channels were used to evaluate the system. However, maintaining a complete CTI ledger on the blockchain limits scalability due to the growing number of CTI participants and the inherently slow transaction speeds of blockchain technology [17].

A cloud-edge-based data security architecture has been proposed to facilitate the secure sharing and analysis of CTI across organizations [43]. This framework addresses confidentiality concerns by introducing a Five-Level Trust Model, allowing data owners to select appropriate sanitization techniques, such as anonymization or homomorphic encryption, based on their trust in the cloud infrastructure or collaborating organizations. The architecture supports dynamic and scalable deployment, with data sanitization occurring either at the edge or in the cloud. While the hybrid cloud edge model enhances secure CTI sharing and preserves data privacy, it also inherits typical limitations of cloud environments, including latency, throughput, and security issues.

A blockchain system has been introduced for the secure and efficient exchange of CTI, using the TAXII framework and private blockchain technology [18]. The authors address key challenges in threat sharing, such as trust, privacy, interoperability, and automation, by using a private permissioned blockchain to store hashes of CTI, preserving data integrity while complying with the GDPR’s right to be forgotten. The system uses smart contracts to manage secure transactions between participants, enabling efficient communication without compromising privacy. A comprehensive evaluation demonstrates the system’s capability to ensure secure and trusted CTI sharing while maintaining high levels of data privacy and integrity. Despite its promising approach, however, the implementation inherits significant limitations from its underlying technologies. The TAXII framework, combined with the Flask web server, introduces a notable delay in relaying messages to consumers. Moreover, the blockchain integration further degrades performance by increasing latency and reducing throughput, which undermines the system’s scalability and the timely delivery of CTI.
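The hash-on-ledger pattern can be sketched without a blockchain: only a digest of each CTI record is written to an append-only log, while the record itself lives in erasable off-chain storage. Deleting the off-chain copy honors an erasure request, yet the remaining digest still lets any holder of a copy verify that it was not altered. In the sketch below, a Python list stands in for the ledger, and canonical JSON (sorted keys, compact separators) before SHA-256 is an assumed encoding; neither is a detail taken from [18].

```python
import hashlib
import json
from typing import Dict, List


def digest(record: dict) -> str:
    # Canonical JSON (sorted keys, no whitespace) so equal records hash identically.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


ledger: List[str] = []           # stand-in for an append-only ledger
off_chain: Dict[str, dict] = {}  # erasable store holding the full CTI records


def share(record: dict) -> str:
    h = digest(record)
    ledger.append(h)       # only the digest is made immutable
    off_chain[h] = record  # the record itself stays deletable
    return h


def verify(record: dict) -> bool:
    """A received copy is untampered if its digest was anchored on the ledger."""
    return digest(record) in ledger


def erase(h: str) -> None:
    # Honor an erasure request: delete the data, keep the opaque digest.
    off_chain.pop(h, None)


record = {"type": "indicator", "pattern": "[ipv4-addr:value = '198.51.100.7']"}
h = share(record)
print(verify(record), verify({**record, "pattern": "[ipv4-addr:value = '0.0.0.0']"}))
erase(h)
print(h in ledger, h in off_chain)
```

A plain hash of a low-entropy record can sometimes be reversed by guessing candidate inputs, so a salted or keyed hash may be preferable when the digest itself must not reveal the content.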

To address the cybersecurity challenges faced by small- and medium-sized enterprises (SMEs), ref. [44] propose a novel solution leveraging shared CTI. The authors develop a prototype based on MISP that automates the processing of CTI, prioritizes cybersecurity threats, and delivers actionable recommendations tailored specifically to SMEs. Ref. [45] propose a comprehensive architecture that enables SMEs to share CTI data securely with third-party analysis services while preserving both privacy and confidentiality. The architecture leverages a Managed Security Service (MSS) that gathers and processes CTI data from SMEs and uses the C3ISP framework for policy-based data sharing. The framework includes features such as customizable Data Sharing Agreements (DSAs) and supports advanced privacy-preserving techniques such as anonymization and homomorphic encryption. Both works focus on equipping SMEs to participate in CTI sharing, enabling them to benefit from the aggregated analysis of cyber threat information.

Haque and Krishnan [13] propose a framework for automating cyber defense through the secure sharing of structured CTI. Their approach integrates automated threat detection, STIX-based CTI generation, and secure sharing via the TAXII protocol, leveraging Relationship-Based Access Control (ReBAC) to manage varying levels of access between organizations. The authors implement their system in a cloud environment, using machine learning (K-means clustering) for threat detection and OpenStack services for scalability. However, the TAXII server they employ follows a centralized request-response model, which limits the system’s scalability and inherently creates a single point of failure, potentially affecting the robustness and availability of the CTI sharing system.
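Relationship-based access control grants access when a permitted chain of relationships connects the requester to the resource owner. The sketch below checks for such a chain with a breadth-first search over a small relationship graph. The organization names, relation labels, and two-hop limit are hypothetical and do not reproduce the policy model of [13].

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# Hypothetical relationship graph: (organization, relation) -> related organizations.
Graph = Dict[Tuple[str, str], List[str]]

GRAPH: Graph = {
    ("OrgA", "partner"): ["OrgB"],
    ("OrgB", "partner"): ["OrgC"],
    ("OrgA", "customer"): ["OrgD"],
}


def can_access(graph: Graph, owner: str, requester: str,
               allowed: Set[str], max_hops: int = 2) -> bool:
    """True if requester is reachable from owner via allowed relations in <= max_hops."""
    frontier = deque([(owner, 0)])
    seen = {owner}
    while frontier:
        org, hops = frontier.popleft()
        if org == requester:
            return True
        if hops == max_hops:
            continue  # do not expand beyond the permitted relationship depth
        for relation in allowed:
            for nxt in graph.get((org, relation), []):
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, hops + 1))
    return False


print(can_access(GRAPH, "OrgA", "OrgC", {"partner"}))              # True (partner of a partner)
print(can_access(GRAPH, "OrgA", "OrgC", {"partner"}, max_hops=1))  # False
print(can_access(GRAPH, "OrgA", "OrgD", {"partner"}))              # False
```

Breadth-first order reaches each organization first by its shortest chain, so marking nodes as seen on first discovery never hides a path that would fit within the hop limit.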

Jesus et al. [46] review the challenges and opportunities in open, crowd-sourced CTI sharing, emphasizing how confidentiality concerns hinder widespread adoption. The study analyzes over one million CTI feeds from MISP (2014–2022) and performs a threat analysis using the LINDDUN framework, aiming to demonstrate that confidentiality risks are overestimated. The authors argue that CTI sharing can be made safer by redesigning sharing architectures or sanitizing data, and they propose a meta-architecture for secure, open CTI sharing. Their architecture relies on public infrastructure, such as open cloud servers, to store CTI. Like other cloud-based solutions, it is therefore susceptible to data breaches, limited throughput, and high latency.

Jin et al. [47] propose CTI-Lense, a framework designed to evaluate the effectiveness of CTI sharing through the STIX format. Their work emphasizes the need for more structured, timely, and comprehensive CTI sharing to strengthen collective cybersecurity defenses. By analyzing approximately 6 million STIX objects from 10 publicly available sources over nine years, the study uncovers key challenges, including data volumes insufficient to keep pace with the daily influx of threats and delays in sharing critical information such as malware signatures. The study also identifies the slower dissemination of other threat types as a limitation on real-time response capabilities. Based on these findings, the authors recommend practical measures to enhance the scalability and effectiveness of STIX data sharing among organizations.

A blockchain-based framework has been proposed for incentivizing CTI sharing in the context of Industrial Control Systems (ICS) [48]. The framework comprises three components: off-chain storage using IPFS, a permissioned blockchain based on Hyperledger Fabric, and a decentralized application that interfaces with participants. It facilitates secure and private CTI sharing by employing the Traffic Light Protocol (TLP) for controlled access and cryptographic measures for confidentiality, and it incentivizes participation through discount-based rewards for high-quality CTI contributions. The authors employ permissioned networks and private channels for communication with stakeholders and use the TLP to enable legal incident reporting and collaboration with governmental authorities. The framework also addresses the financial barriers that small and medium-sized organizations face in participating in CTI sharing through a cost-effective model that requires only registration and subscription fees and eliminates transaction fees. To reduce the uncertainty caused by the volatile value of cryptocurrency tokens, the authors offer subscription-fee discounts based on current market conditions, providing more stable and reliable economic incentives for CTI contributions. However, the framework does not support machine processing of data, as CTI is shared in an unstructured format that analysts must manually analyze and reformat, which is tedious and time-consuming. Furthermore, the framework is tailored specifically to ICS, limiting its applicability to other domains, and it inherits blockchain limitations such as latency and throughput constraints caused by slow, limited transaction processing.
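The Traffic Light Protocol can be modeled as a maximum disclosure scope per label. The sketch below encodes the five TLP 2.0 labels on an ordered scale and checks whether a proposed audience falls within a label’s limit. Flattening TLP onto a single ordinal scale is a simplification, the audience category names are hypothetical rather than part of [48], and treating unknown labels as the most restrictive is an assumed fail-safe choice.

```python
from enum import IntEnum


class Audience(IntEnum):
    """Hypothetical audience scopes, ordered from narrowest to widest."""
    NAMED_RECIPIENTS = 0
    OWN_ORGANIZATION = 1
    ORGANIZATION_AND_CLIENTS = 2
    COMMUNITY = 3
    PUBLIC = 4


# Widest audience each TLP 2.0 label allows, flattened onto one ordinal scale.
TLP_MAX_AUDIENCE = {
    "TLP:RED": Audience.NAMED_RECIPIENTS,
    "TLP:AMBER+STRICT": Audience.OWN_ORGANIZATION,
    "TLP:AMBER": Audience.ORGANIZATION_AND_CLIENTS,
    "TLP:GREEN": Audience.COMMUNITY,
    "TLP:CLEAR": Audience.PUBLIC,
}


def may_share(label: str, audience: Audience) -> bool:
    # Unknown or missing labels fall back to the most restrictive scope.
    limit = TLP_MAX_AUDIENCE.get(label.strip().upper(), Audience.NAMED_RECIPIENTS)
    return audience <= limit


print(may_share("TLP:GREEN", Audience.COMMUNITY))  # True
print(may_share("TLP:AMBER", Audience.COMMUNITY))  # False
```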

Gong and Lee [49] propose BLOCIS, a blockchain-based open CTI system designed to resist Sybil attacks while enhancing the reliability of shared threat data. The framework uses a three-layered architecture: a user layer for contributors and consumers, a blockchain network layer for secure storage and data exchange, and a feed layer for CTI evaluation and dissemination. Data contributions are validated through smart contracts, which ensure integrity and penalize contributors of malicious or invalid data. The system uses STIX as the standard format for threat data and enforces privacy through encryption during sharing. Simulations demonstrate the framework’s effectiveness in reducing the assessed reliability of malicious contributors, increasing the cost of Sybil attacks, and maintaining the integrity of shared CTI. However, as with other blockchain-based solutions, its reliance on blockchain technology introduces performance challenges, notably in latency and throughput.

A blockchain-based architecture has been introduced to incentivize CTI sharing through a decentralized application on the Ethereum platform [50]. The system employs smart contracts to manage transactions and introduces a CTI token as a digital asset to create market value and encourage all participants, such as producers, consumers, investors, donors, and owners, to share and utilize CTI data dynamically. Semantic web standards, including STIX, OWL, and SWRL, are integrated to enhance interoperability and support the exchange of actionable intelligence. Simulations and experiments validate the model’s benefits, but its scalability is challenged by inherent blockchain limitations, including storage costs and transaction overhead, as acknowledged by the authors.

Ming Liu et al. [16] emphasize the need for scalable real-time systems within trusted communities to promote the automatic sharing of CTI. The authors propose a cloud-based architecture comprising five key components: a trust evaluation unit to maintain a watchlist of collaborators’ trust scores, a threat data collection unit for raw and structured threat data aggregation, a centralized analytics unit for processing data and generating structured threat feeds, a storage unit with indexed NoSQL databases for efficient data retrieval, and an integration unit equipped with APIs for automatic feed integration into defense systems. The proposed architecture aims to facilitate real-time threat sharing while addressing scalability through cloud-based infrastructures. The authors recommend the use of standardized formats like STIX to facilitate the exchange of structured threat information and ensure interoperability. However, the proposed architecture remains largely conceptual, lacking an implemented proof of concept or experimental validation to demonstrate its practicality and effectiveness.

Wen et al. [51] propose RTB-RM, a blockchain framework for reliable and transparent CTI sharing, addressing challenges such as trust, inflexible sharing policies, and a lack of dispute resolution mechanisms. The framework incorporates a Multidimensional Reputation Model (MRM) to evaluate users’ trustworthiness based on historical behaviors and current actions to enhance the credibility of threat information. The framework facilitates selective data sharing tailored to trust levels and data sensitivity to balance the demands of CTI exchange and security considerations. The key components of the framework include blockchain for data sharing with a smart contract to execute the core logic of data sharing, IPFS for storing encrypted CTI data, and a third-party arbitration institution to resolve disputes. The system implements smart contracts for automated transactions, ensuring transparency and security in CTI exchanges. The authors, however, acknowledge performance limitations and functional challenges within the system, including issues with adaptability, flexibility, and the collaborative capabilities of its core components.

Provatas et al. [52] propose a decentralized architecture for CTI sharing, leveraging the STIX and TAXII standards on a private permissioned blockchain, implemented with Hyperledger Fabric. The study addresses challenges of centralized CTI-sharing models, such as vulnerabilities to denial-of-service attacks, data tampering, and single points of failure. However, the system’s reliance on blockchain introduces inherent limitations that can impact performance, such as increased latency and resource overhead associated with blockchain transactions. Additionally, the use of the basic TAXII authentication scheme raises security concerns, as its simplicity poses potential risks.

2.4. Machine Learning-Based Approaches

Within the field of CTI, organizations can employ Artificial Intelligence (AI) and Machine Learning (ML) techniques to automate data collection and processing, integrate with existing security systems, ingest unstructured data from heterogeneous sources, and correlate information across those sources [53]. Machine learning techniques have also been applied in related areas of information security. For example, Liu et al. employed semantic segmentation to selectively encrypt only the most sensitive regions of images, thereby improving processing efficiency while maintaining confidentiality in image sharing [54]. To improve computational efficiency in image encryption, Song et al. introduced a batch image encryption scheme that processes multiple images simultaneously through cross-image permutation and bidirectional diffusion [55].

ML algorithms can automate substantial parts of the CTI life cycle, including collection, processing, and analysis, thereby enhancing CTI sharing [56]. Ghazi et al. [6] proposed a natural language processing solution to extract threat feeds from unstructured CTI sources such as security blogs. TTPdrill [12] automatically extracts threats from unstructured text and produces knowledge graphs for analysis. However, both approaches focus primarily on extracting CTI feeds from textual sources rather than on real-time distribution. Likewise, the study in [57] employed a Naive Bayes classifier and natural language processing to forecast threat incidents from diverse IoT data; however, the approach was confined to IoT and relied on pre-existing datasets, making it unsuitable for real-time CTI sharing.

Other intelligent approaches focus on privacy-preserving sharing. The framework in [58] proposes sharing machine learning models instead of raw IOCs, enabling direct integration into IDS/IPS. Sarhan et al. [59] applied Federated Learning (FL) for CTI sharing, where models are locally trained and only updated weight parameters are exchanged between participants. SeCTIS [15] combines FL and blockchain to further enhance confidentiality by sharing model weights instead of raw data. While these approaches reduce data exposure and improve privacy, the SANS CTI networking report [60] highlights that raw threat data often delivers the most operational value to CTI participants, raising concerns about the practical utility of solutions that limit information to model weights.

In summary, AI/ML and FL approaches offer strong capabilities for extracting intelligence from unstructured data and addressing privacy issues in CTI sharing. However, they often rely on offline datasets, require significant computational resources, or limit the practical utility of shared intelligence, and thus fall short of ensuring real-time, actionable CTI dissemination. Our DDS-based framework addresses these dissemination challenges by ensuring that, once CTI is produced, it is delivered reliably and securely, in real time, to all participants.

Table 1 offers a comparative assessment of prior research contributions against our proposed framework. We evaluated the existing research using the fundamental evaluation criteria for CTI sharing systems outlined by [18], namely: integrity, low latency and high throughput, participant trust through controlled access, confidentiality, and automation. Drawing on additional insights from the literature, we extended these criteria to better reflect existing challenges in CTI sharing. As noted by [39], the lack of a secure and reliable CTI sharing platform remains a key barrier to organizational participation in CTI sharing. In response, we added support for decentralization, standardized threat intelligence formats, reliable and durable message delivery, and protection of organizations’ privacy to our evaluation criteria. As illustrated in Table 1, and to the best of our knowledge, no existing solution in the literature provides real-time, secure, automated, scalable, reliable, and actionable CTI sharing with low latency and high throughput.

Table 1.

Comparison of existing research with proposed solution.

3. Data Distribution Service

DDS is a publish-subscribe middleware standard introduced by the Object Management Group (OMG) in 2003 and refined and extended in recent years. This connectivity standard facilitates secure real-time information exchange, modular application development, and efficient integration in distributed systems. DDS defines a programming model over the Real-Time Publish-Subscribe (RTPS) protocol for distributed systems and has been implemented by multiple vendors for a wide range of applications. DDS offers a global data space to which publishers write data and from which subscribers read it, and it provides rapid and reliable dissemination of time-sensitive data across various transport networks. It also offers a customizable data distribution framework that can incorporate various types of data sources. As a result, it provides high data throughput with microsecond-level latency and efficient QoS management through a distributed cache known as the dataspace.

DDS Entities

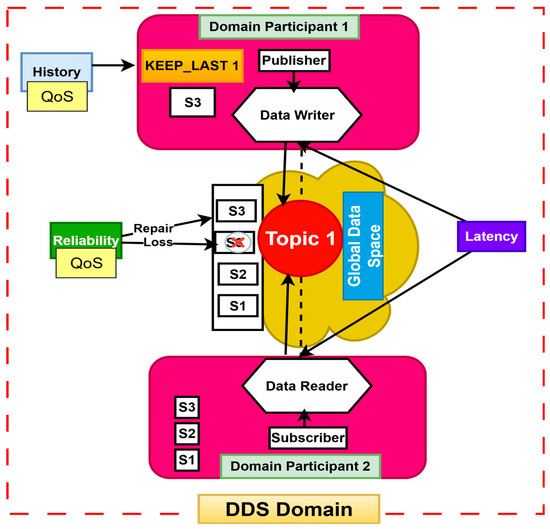

Figure 1 presents an overview of a DDS system comprising one domain and one topic. It shows most of the key DDS entities, including the data writer, data reader, publisher, and subscriber, and their relationships. These entities are described in [61] as follows:

Figure 1.

An overview of the data distribution service.

Domain: A DDS domain serves as a global data store comprising the information supplied by the applications registered within that domain. The Global Data Space (GDS) is the conceptual space in which all data are stored. Applications that publish or modify data act as publishers, while those that receive and use the data act as subscribers. The GDS comprises various domains, each of which can be further subdivided into distinct logical partitions. Domains are mutually exclusive; thus, an entity such as a topic can reside in only one domain but may appear in multiple partitions. The GDS must be fully decentralized to eliminate single points of failure within the system.

Domain Participant: A domain participant indicates the application’s membership within a domain, which is a concept that unifies all applications to facilitate communication among them. The domain participant serves as the container for all other entities and separates applications from one another when multiple applications operate on the same node.

Publisher: A publisher is an entity responsible for data dissemination that transmits data into the GDS. However, the publisher itself cannot perform that function; it can only disseminate data through a data writer. A data writer is linked to a publisher. A publisher may include various data writers to disseminate distinct data types.

Subscriber: A subscriber is an entity designated to receive data from the GDS. It cannot access the data directly; instead, it obtains data through a data reader. Each data reader is linked to a subscriber, and a subscriber may own numerous distinct data readers.

Topic: A topic is the entity that connects publishers and subscribers; it typically comprises a unique name, a data type, and a set of Quality of Service (QoS) settings. Data written by a data writer can be read by a data reader only if both are associated with the same topic. In addition, the QoS settings of the writing and reading sides must be compatible.

Quality of Service: Each entity within DDS has a specific set of QoS settings, and communication between endpoints is established only when their QoS configurations are compatible. DDS offers an extensive array of QoS policies covering data availability, delivery reliability, data timeliness, and resource management and configuration.
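To make the matching rule concrete, the following Python sketch checks whether a data writer and a data reader match. It models only two QoS policies, RELIABILITY and DURABILITY, using the DDS requested-versus-offered rule (the writer must offer at least the level the reader requests); real implementations check many more policies, such as DEADLINE, LIVELINESS, and OWNERSHIP. The `Endpoint` class and the type name `CtiMessage` are hypothetical and are not part of the RTI Connext API.

```python
from dataclasses import dataclass
from enum import IntEnum


class Reliability(IntEnum):
    # Ordered so that a larger value means a stronger guarantee.
    BEST_EFFORT = 0
    RELIABLE = 1


class Durability(IntEnum):
    VOLATILE = 0
    TRANSIENT_LOCAL = 1
    TRANSIENT = 2
    PERSISTENT = 3


@dataclass(frozen=True)
class Endpoint:
    topic: str
    type_name: str
    reliability: Reliability
    durability: Durability


def endpoints_match(writer: Endpoint, reader: Endpoint) -> bool:
    """Return True if samples from `writer` may be delivered to `reader`."""
    # Same topic name and same data type are prerequisites for matching.
    if writer.topic != reader.topic or writer.type_name != reader.type_name:
        return False
    # Requested-vs-offered: the writer must offer at least what the reader requests.
    return (writer.reliability >= reader.reliability
            and writer.durability >= reader.durability)
```

For example, a RELIABLE, TRANSIENT_LOCAL writer on `CTI_Information` matches a BEST_EFFORT, VOLATILE reader on the same topic, but a BEST_EFFORT writer never matches a reader that requests RELIABLE delivery.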

The OMG DDS standard provides numerous benefits to users, including seamless integration, performance efficiency, scalability, enhanced security, openness as a standard, configurable QoS, scalable discovery, and broad applicability.

Bode et al. [62] conducted comprehensive evaluations of four popular DDS middleware implementations, OpenDDS, RTI Connext, FastDDS, and CycloneDDS, across different experimental contexts. Their findings indicate that RTI Connext, which we use to develop this framework, delivered the best overall performance among the evaluated implementations.

Real-Time Innovations: Real-Time Innovations (RTI) is a leading provider of software infrastructure for intelligent systems. Its primary product, RTI Connext, is a suite designed to enable secure real-time information sharing across multiple applications. RTI Connext is widely recognized as the leading software infrastructure for intelligent distributed systems on a global scale. Developed in accordance with the DDS standard, Connext provides key features essential for real-time systems, including continuous availability, high reliability, low latency, scalability, and robust security. By integrating these capabilities, Connext enables efficient and secure data exchange, making it a critical component in the development of intelligent, distributed applications [63].

4. Proposed Framework

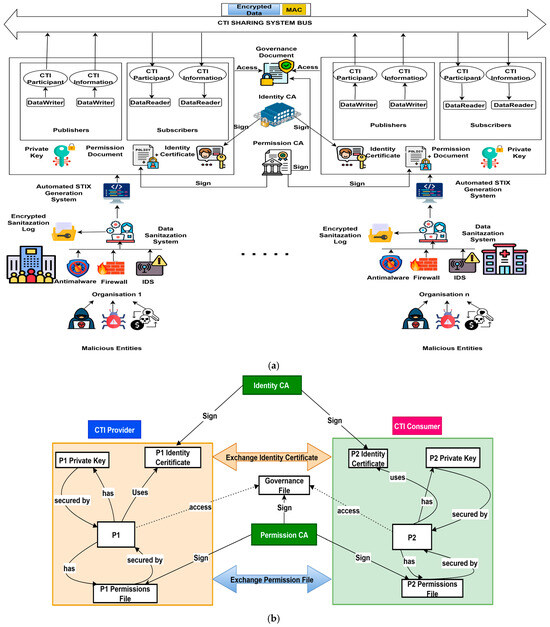

In this section, we describe our proposed framework for sharing CTI that is secure, scalable, and automated. Our framework is designed to support peer-to-peer.

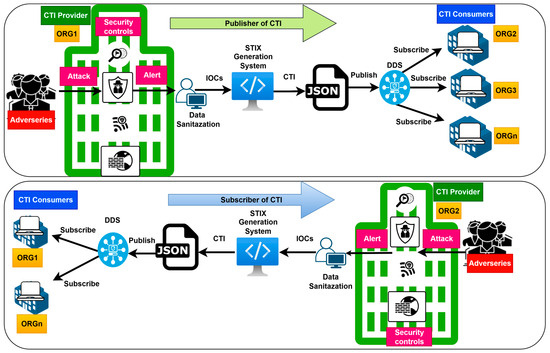

CTI sharing among organizations while preserving organizations’ privacy during the sharing process. As shown in Figure 2, the framework comprises several key components described below.

Figure 2.

Proposed framework architecture.

At the top left of the architecture are the adversaries: malicious actors who continuously target organizations’ valuable information systems and assets. The CTI provider shown in Figure 2 represents an organization that deploys various security controls, such as firewalls, Intrusion Detection Systems (IDS), and anti-malware systems, to protect itself from cyberattacks executed by these actors. These security controls detect cyberattacks by monitoring for indicators of compromise (IOCs), such as malicious IP addresses, domain names, and malware hash values. The raw threat data are collected from the security logs and alerts generated by these controls.

After collection, and before the raw threat data is shared with interested CTI consumers, it passes through our data sanitization tool, described below.

4.1. Description of Our Data Sanitization Tool

In this section, we describe the data sanitization tool that we propose to integrate into our CTI sharing framework. Given the growing concern about privacy protection in CTI sharing, we designed this tool to automatically identify and remove sensitive organizational and personal data from the raw threat data received from the security controls. It ensures that only indicators of compromise are transmitted to CTI consumers, in line with the GDPR data minimization principle [64]. The sanitization process comprises the following main components: an extraction component, a classification component, a contextual filtering module, an admin review interface, a STIX object generator, and a logging module. A recent study demonstrated the use of multithreading in an intra-bitplane scrambling scheme to accelerate image encryption, showing that dividing encryption tasks across multiple threads can significantly reduce execution time [65]. Following this idea, we use Python’s ThreadPoolExecutor to parallelize data sanitization: each extracted data element is processed concurrently, so that classification and conversion into STIX format proceed in parallel, reducing overall processing time.
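A minimal sketch of this per-element parallelism is shown below. `process_element` is a hypothetical placeholder for the classification and STIX-conversion steps, and its one-line labeling rule is illustrative only; `ThreadPoolExecutor.map` runs it concurrently while returning results in input order.

```python
from concurrent.futures import ThreadPoolExecutor


def process_element(element: str) -> dict:
    """Hypothetical per-element step standing in for classification + STIX conversion."""
    # Placeholder rule for illustration only: dotted-quad strings are labeled "ioc".
    label = "ioc" if element.count(".") == 3 else "ambiguous"
    return {"value": element, "label": label}


def process_all(elements: list, max_workers: int = 4) -> list:
    # pool.map runs process_element on a thread pool but yields results
    # in the same order as the input list, so output lines up with input.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(process_element, elements))
```

Under CPython’s global interpreter lock, threads give the largest gains when per-element work includes waiting (for example, on I/O or lookups); for purely CPU-bound steps, `ProcessPoolExecutor` offers the same interface.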

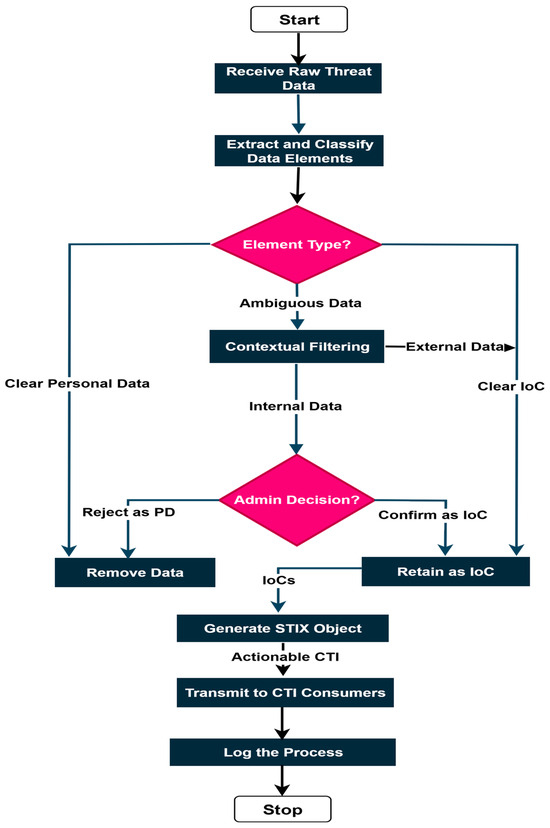

The workflow of the sanitization process is illustrated in Figure 3, and its components are described below.

Figure 3.

Data sanitization workflow.

Core Orchestrator: The first component is the Core Orchestrator, which is responsible for receiving raw threat data from the security controls and coordinating the sanitization workflow. It initiates the extraction, classification, contextual filtering, and automated conversion of raw data into STIX format. It ensures that all the detected threat data flows through the defined sanitization process. As raw threat data is received, the orchestrator logs the event and dispatches the data to the Extraction Component.

Extraction and Classification Component: The extraction and classification components operate together to transform raw threat data into meaningful, shareable intelligence. First, the extraction component identifies and isolates specific data elements by applying well-defined pattern matching rules. These rules are designed to capture structured formats commonly found in CTI. For example, IPv4 addresses are extracted using a pattern that matches four groups of one to three digits separated by periods, so that every syntactically valid address is captured. Similarly, email addresses are detected through rules that match standard username and domain structures. Additional patterns identify personal data such as phone numbers, credit card numbers, and Social Security Numbers.

Once extracted, each data element is automatically assigned by the classification component to one of the three classes shown in Figure 3. Elements that match patterns for sensitive personal information (e.g., phone numbers, credit card numbers, Social Security Numbers) are classified as personal data and automatically removed. Elements that clearly constitute an indicator of compromise (IOC), such as public IP addresses outside the RFC1918 ranges or malware hashes conforming to standard formats, are classified as IOCs and retained for sharing. The remaining elements, which cannot be unambiguously categorized, such as private IP addresses, internal domains, or certain email addresses, are labeled as ambiguous and forwarded to the Contextual Filtering Module for further analysis using organization-specific information.
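The extraction and classification steps can be sketched as follows. The regular expressions, the `kind` labels, and the three class names are assumptions for illustration, since the exact rules are not published here; the RFC1918 check uses Python’s standard `ipaddress` module. Because the loose IPv4 pattern also matches strings such as `999.1.1.1`, the sketch validates each candidate before treating it as an address.

```python
import ipaddress
import re

# Hypothetical extraction rules; not the tool's published patterns.
PATTERNS = {
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
}
PERSONAL_KINDS = {"ssn", "phone"}
RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def extract(raw: str) -> list:
    """Return (kind, value) pairs found in one raw log line or alert."""
    return [(kind, m.group()) for kind, rx in PATTERNS.items() for m in rx.finditer(raw)]


def classify(kind: str, value: str) -> str:
    """Assign an element to 'personal', 'ioc', or 'ambiguous'."""
    if kind in PERSONAL_KINDS:
        return "personal"            # removed automatically
    if kind == "sha256":
        return "ioc"                 # hash in a standard format
    if kind == "ipv4":
        try:
            ip = ipaddress.ip_address(value)
        except ValueError:
            return "ambiguous"       # matched the loose pattern but is not a valid address
        return "ambiguous" if any(ip in net for net in RFC1918) else "ioc"
    return "ambiguous"               # emails, domains: need organizational context
```

For example, in the line `src=203.0.113.9 dst=192.168.1.20 user=bob@corp.example`, the public address is labeled `ioc`, while the private address and the email address are labeled `ambiguous` and passed on for contextual filtering.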

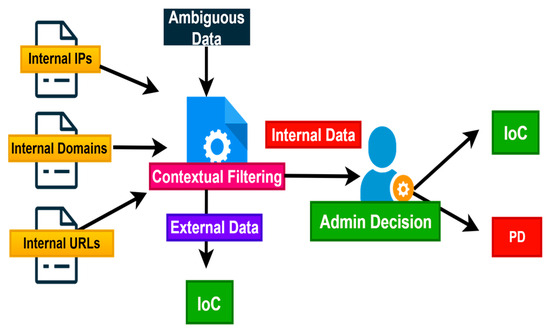

Contextual Filtering Module: The Contextual Filtering Module refines the categorization of data elements initially flagged as ambiguous by the Classification Component. This module incorporates organization-specific metadata to distinguish internal assets from elements that clearly indicate a threat. For example, when processing an IP address within the RFC1918 private range, we compare it against a list of organization-defined internal IP ranges specified in our configuration. If a match is found, the IP address is labeled as internal and forwarded for administrator review, rather than being automatically retained as an IOC. A similar strategy is applied to email addresses: we compare their domain portions against a predefined list of internal domains. A match suggests that the email is part of the internal infrastructure and is therefore flagged for administrator review. The same approach applies to domain names and URLs, which we compare against known internal resource identifiers to determine if they originate from trusted sources. Only data elements that do not match any internal organizational identifiers are automatically classified as clear IOCs, as illustrated in Figure 4. Internal or otherwise ambiguous elements are instead flagged for manual review by an administrator. We intentionally treat internal data elements, such as private IP addresses or internal email domains, as ambiguous rather than discarding them. This decision is rooted in the understanding that attacks may originate from within the organization, whether due to insider threats or compromised internal systems. By flagging these elements for administrative review, we maintain internal visibility into potential threats without sharing sensitive infrastructure details with external CTI consumers.
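A possible implementation of the contextual filter, assuming the organization-specific metadata is a list of internal CIDR ranges and a set of internal domains, is sketched below. The configuration values, the `kind` labels, and the choice to send unknown kinds to review are hypothetical. As described above, an ambiguous element that matches internal metadata is sent for administrator review, and one that matches nothing internal becomes a clear IOC.

```python
import ipaddress
from urllib.parse import urlsplit

# Hypothetical organization-specific metadata (example values only).
INTERNAL_NETS = [ipaddress.ip_network("10.20.0.0/16"), ipaddress.ip_network("192.168.1.0/24")]
INTERNAL_DOMAINS = {"corp.example", "intranet.corp.example"}


def is_internal_domain(host: str) -> bool:
    """True if host equals, or is a subdomain of, an internal domain."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in INTERNAL_DOMAINS)


def contextual_filter(kind: str, value: str) -> str:
    """Refine an 'ambiguous' element: 'review' if it matches internal metadata, else 'ioc'."""
    if kind == "ipv4":
        try:
            internal = any(ipaddress.ip_address(value) in net for net in INTERNAL_NETS)
        except ValueError:
            internal = True                      # unparsable: let a human decide
    elif kind == "email":
        internal = is_internal_domain(value.rsplit("@", 1)[-1])
    elif kind == "domain":
        internal = is_internal_domain(value)
    elif kind == "url":
        host = urlsplit(value).hostname
        internal = host is None or is_internal_domain(host)
    else:
        internal = True                          # unknown kinds are conservatively reviewed
    return "review" if internal else "ioc"
```

Matching on the domain suffix (with a leading dot) ensures that a look-alike host such as `corp.example.evil.test` is not mistaken for an internal one.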

Figure 4.

Contextual filtering module.

Admin Review Interface: For each flagged data element, a prompt asks the administrator to confirm whether the element should be retained as an IOC or removed as sensitive data, as shown in Figure 4. We simulate this manual review interface using Python’s Tkinter library, which allows us to create a pop-up dialog window for administrator decisions. When an ambiguous element is detected, the system displays a pop-up notification containing the element and asks the administrator whether it should be retained as an IOC. The dialog presents two options: “Yes” to keep the element or “No” to reject it as sensitive data. Once the administrator makes a selection, the decision is logged for audit purposes. This human-in-the-loop mechanism helps mitigate false positives and ensures that valuable threat intelligence is not discarded during sanitization.
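The review step might look like the following sketch. `tk_prompt` uses the standard `tkinter.messagebox.askyesno` dialog, which returns `True` for “Yes” and `False` for “No”. Making the prompt function injectable is a design assumption for this sketch, not a documented feature of the tool, so that the decision logic can be exercised without a display; the logger name is hypothetical.

```python
import logging
from typing import Callable

log = logging.getLogger("cti.sanitizer")   # hypothetical logger name


def tk_prompt(element: str) -> bool:
    """Show a Yes/No dialog; tkinter is imported lazily so headless code can inject a stub."""
    import tkinter as tk
    from tkinter import messagebox

    root = tk.Tk()
    root.withdraw()                          # hide the empty main window
    try:
        return messagebox.askyesno("CTI review", f"Retain this element as an IOC?\n\n{element}")
    finally:
        root.destroy()


def review(elements: list, ask: Callable[[str], bool] = tk_prompt) -> list:
    """Return the elements the administrator chose to keep, logging every decision."""
    kept = []
    for element in elements:
        keep = bool(ask(element))
        log.info("admin_review element=%s decision=%s", element, "retain" if keep else "remove")
        if keep:
            kept.append(element)
    return kept
```

Calling `review(["10.20.3.4"])` opens one dialog per flagged element; passing a different `ask` function replaces the GUI without changing the logging behavior.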

STIX Object Generator: In traditional CTI sharing, unstructured threat information is gathered and disseminated through generic, insecure tools such as telephone and email. A structured framework, by contrast, employs a standard language throughout the sharing process [53]. Our framework employs STIX to describe threat data in a structured format. STIX is a formal standard language used to represent cyber threat information in an actionable format, which facilitates the management of cyber threat response operations [56]. However, producing STIX-compliant data from unstructured threat information remains predominantly manual. In this work, we automate the conversion of raw threat data into STIX so that actionable CTI can be shared with relevant consumers. Upon completion of sanitization, the validated threat data is passed to the Automated STIX Generation system, which produces structured CTI for dissemination to CTI consumers. This automation allows us to quickly transform IOCs into structured CTI. We use the stix2 library to generate STIX-compliant objects, so that CTI is represented in a standard format that is both machine-consumable and human-readable. By automating this process, we reduce the time needed for threat analysis. Faster processing allows CTI to be shared in a timely manner, which is crucial for effective cybersecurity defense because delayed sharing can impede responses to emerging threats. Indeed, a survey by the Ponemon Institute found that 39% of participants identified slow and manual processes as barriers to participating in CTI sharing, while 24% reported that such delays prevented them from sharing intelligence altogether [34].
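The framework itself uses the stix2 library. To show the structure that such a library produces without requiring it, the sketch below builds an equivalent STIX 2.1 Indicator and Bundle by hand using only the standard library; stix2’s `Indicator` and `Bundle` classes would additionally validate the objects. The mapping from extractor kinds to observable paths and the indicator `name` template are assumptions.

```python
import json
import uuid
from datetime import datetime, timezone

# Assumed mapping from extractor kinds to STIX 2.1 observable paths used in patterns.
PATTERN_PATHS = {
    "ipv4": "ipv4-addr:value",
    "domain": "domain-name:value",
    "url": "url:value",
    "email": "email-addr:value",
    "sha256": "file:hashes.'SHA-256'",
}


def stix_timestamp(dt: datetime) -> str:
    # STIX timestamps are UTC, RFC 3339 formatted, with millisecond precision and "Z".
    return dt.strftime("%Y-%m-%dT%H:%M:%S.") + f"{dt.microsecond // 1000:03d}Z"


def make_indicator(kind: str, value: str) -> dict:
    # Escape backslashes and single quotes inside the pattern string literal.
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    now = stix_timestamp(datetime.now(timezone.utc))
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": f"Malicious {kind} observed",   # hypothetical naming template
        "pattern": f"[{PATTERN_PATHS[kind]} = '{escaped}']",
        "pattern_type": "stix",
        "valid_from": now,
    }


def make_bundle(iocs: list) -> str:
    """Serialize (kind, value) IOC pairs as a STIX 2.1 bundle in JSON."""
    bundle = {"type": "bundle", "id": f"bundle--{uuid.uuid4()}",
              "objects": [make_indicator(k, v) for k, v in iocs]}
    return json.dumps(bundle, indent=2)
```

For example, `make_bundle([("ipv4", "203.0.113.9")])` yields a bundle containing one indicator whose pattern is `[ipv4-addr:value = '203.0.113.9']`.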

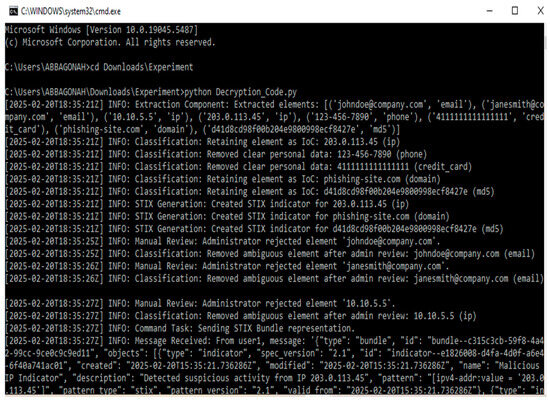

Logging Module: Throughout the CTI sharing process, a logging module records every decision and action taken during sanitization. We implemented this module using Python’s built-in logging library, extended with a custom EncryptedFileHandler. This handler encrypts all log data using Fernet symmetric encryption, ensuring that unauthorized entities cannot read the logs. All log records are retained for 90 days, after which they are automatically deleted, in line with the GDPR storage limitation principle. Key events are logged at every stage, from the extraction of raw threat data elements, through classification and contextual filtering, to the administrator’s manual review and the generation of STIX-formatted CTI. For instance, whenever a data element is extracted, classified, or removed, the event is recorded along with the relevant details. Likewise, every administrator decision made via the manual review interface is logged. The logging module thus provides traceability. The decrypted log is shown in Figure 5.
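A handler of this kind can be written by overriding `format` in a `logging.FileHandler` subclass, so that each record is encrypted before it is written. In the sketch below, `cipher` is any object with an `encrypt(bytes) -> bytes` method that returns ASCII tokens, which matches `cryptography.fernet.Fernet`. `purge_old_logs` is a hypothetical helper that enforces the 90-day retention by file modification time; it assumes logs are split into separate files (for example, one per day).

```python
import logging
import os
import time
from typing import Optional


class EncryptedFileHandler(logging.FileHandler):
    """FileHandler that writes one encrypted token per log record."""

    def __init__(self, filename: str, cipher, **kwargs) -> None:
        super().__init__(filename, **kwargs)
        self.cipher = cipher  # e.g. cryptography.fernet.Fernet(key)

    def format(self, record: logging.LogRecord) -> str:
        # Format as usual, then replace the plaintext with its ciphertext token.
        plain = super().format(record)
        return self.cipher.encrypt(plain.encode("utf-8")).decode("ascii")


def purge_old_logs(directory: str, retention_days: int = 90,
                   now: Optional[float] = None) -> list:
    """Delete *.log files not modified within the retention period; return their names."""
    cutoff = (time.time() if now is None else now) - retention_days * 86400
    with os.scandir(directory) as it:
        candidates = [(e.name, e.path, e.stat().st_mtime)
                      for e in it if e.is_file() and e.name.endswith(".log")]
    removed = []
    for name, path, mtime in candidates:
        if mtime < cutoff:
            os.remove(path)
            removed.append(name)
    return removed
```

With the cryptography package installed, the handler could be created as `EncryptedFileHandler("sanitizer.log", Fernet(key))` with `key = Fernet.generate_key()`, and each line later recovered with `Fernet(key).decrypt(line)`.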

Figure 5.

CTI sanitization log.

4.2. CTI Sharing with DDS

DDS employs a decoupled publish/subscribe model to enable efficient, scalable, and flexible communication in distributed systems. In this model, publishers and subscribers are not directly connected to each other; instead, they interact through named Topics within a shared data space. This decoupling provides several advantages. Publishers only need to know the Topic they write to, and subscribers only need to know the Topic they are interested in. Publishers therefore do not manage individual connections to subscribers, allowing the system to scale easily as participants join or leave. The decoupled architecture also supports dynamic discovery, enabling applications to automatically find and connect to relevant data sources without manual configuration. This model further enhances security and reliability by isolating data flows and allowing fine-grained control through QoS policies. By abstracting the communication details, DDS simplifies application development and ensures that data is delivered where, when, and how it is needed [66]. In addition, DDS achieves low latency and high throughput through several mechanisms implemented in the RTPS protocol. First, RTPS uses a compact binary encoding (Common Data Representation, CDR) to minimize message size and reduce transmission overhead. Second, it supports multicast communication, allowing a single message to reach multiple subscribers simultaneously, which reduces network traffic. Third, it employs batching, combining multiple samples into a single network packet to optimize bandwidth usage and increase throughput [67]. In our framework, we leverage the DDS Security implementation in RTI Connext to enable efficient and secure sharing of CTI among organizations.

Once threat data is sanitized and converted into the structured STIX format, the CTI publisher publishes it to a specific DDS topic, which acts as a shared communication channel between CTI providers and consumers. On the receiving side, each subscriber listens to the same topic. When a CTI message is published, the DDS middleware automatically distributes it to all subscribed CTI consumers, without requiring direct connections between publishers and consumers. Each CTI consumer then processes the incoming CTI, which may include updating its security configurations to block the detected IOCs and thereby proactively protect itself from emerging cyberattacks. Figure 6a shows the experimental implementation of our proposed framework. The prototype integrates our data sanitization and automated STIX generation system within a DDS Security implementation of the framework. The prototype comprises two DDS topics: CTI_Participant, which manages the registration of CTI providers and consumers, and CTI_Information, which carries the automated exchange of actionable threat intelligence. To secure CTI sharing, our framework relies on two trusted Certificate Authorities (CAs): an Identity CA, responsible for signing each participant’s identity certificate, and a Permissions CA, which signs both the Permissions Document and the Governance Document. The Permissions Document defines the specific domains, partitions, and topics each participant is authorized to access, while the Governance Document specifies system-wide security policies, including encryption requirements for designated domains and topics. All CTI participants operate under a single Governance Document to ensure policy consistency.
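The decoupling between CTI providers and consumers can be illustrated with a toy, in-process model. The sketch below is not the RTI Connext API and omits discovery, QoS, RTPS, and security entirely; it only shows that the publisher addresses a topic name (here the paper’s `CTI_Information`) rather than individual consumers. The sample fields and the blocklist reaction are hypothetical.

```python
from collections import defaultdict
from typing import Callable


class ToyDataSpace:
    """In-process stand-in for a DDS domain: writers and readers share only a topic name."""

    def __init__(self) -> None:
        self._readers = defaultdict(list)   # topic name -> list of callbacks

    def subscribe(self, topic: str, on_data: Callable[[dict], None]) -> None:
        self._readers[topic].append(on_data)

    def publish(self, topic: str, sample: dict) -> int:
        # The publisher never addresses consumers directly; the "middleware" fans out.
        readers = self._readers[topic]
        for on_data in readers:
            on_data(sample)
        return len(readers)


class CtiConsumer:
    def __init__(self, name: str, space: ToyDataSpace) -> None:
        self.name = name
        self.blocklist = set()
        space.subscribe("CTI_Information", self.on_cti)

    def on_cti(self, sample: dict) -> None:
        # Hypothetical reaction: add the indicator value to a local blocklist.
        self.blocklist.add(sample["value"])
```

Adding a third consumer requires no change to the publisher: it simply subscribes to `CTI_Information` and starts receiving the same samples.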

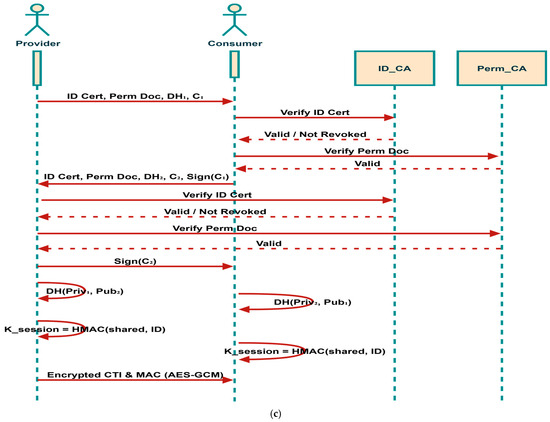

Figure 6.

(a): Prototype implementation of our framework. Some icons in this figure are sourced from Flaticon.com for illustration purposes. (b): Security artifacts of our framework. (c): Sequence diagram illustrating secure CTI sharing in our framework.

As shown in Figure 6b, each participant owns three security artifacts: (i) an identity certificate, containing the participant’s public key and digitally signed by the Identity CA; (ii) a private key, used to create digital signatures; and (iii) a Permissions Document, detailing the participant’s access rights. Upon discovery, if a CTI provider and consumer have not yet established trust, they perform mutual authentication via a challenge-response protocol over a reliable channel. The provider begins by transmitting its identity certificate, its Permissions Document, a freshly generated ephemeral Diffie-Hellman public key (DH1), and a random challenge (C1). Upon receipt, the consumer verifies the provider’s certificate, checking that it is signed by a trusted CA and not listed in the Certificate Revocation List (CRL), and responds with its own identity certificate, its Permissions Document, its own ephemeral Diffie-Hellman public key (DH2), a new challenge (C2), and a digital signature over C1. This signature is produced by computing a SHA-256 hash of C1 and signing the digest with the consumer’s RSA-2048 private key. The provider then performs an analogous verification of the consumer’s credentials and returns a digital signature over C2, computed in the same way with its own private key.

Following successful mutual authentication, both parties derive a shared secret by combining their own Diffie-Hellman private key with the peer’s public key; this secret is never transmitted over the network. From this shared secret, a unique session key is derived using a key derivation function based on HMAC-SHA256 that incorporates a session-specific identifier. The resulting session key is used with AES-GCM (128-bit) to encrypt CTI messages and generate message authentication codes (MACs), ensuring both confidentiality and integrity. Because the Diffie-Hellman keys are ephemeral and each session uses a distinct key that is never reused, compromising a participant’s long-term private key or a single session key does not expose messages exchanged in other sessions, which provides perfect forward secrecy. The overall process of ensuring authentication, confidentiality, and integrity is illustrated in Figure 6c.
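The key-agreement and key-derivation steps can be sketched with the Python standard library. The Diffie-Hellman group below is a deliberately small toy and is insecure; real deployments use standardized finite-field groups or elliptic-curve DH. The text does not name the exact KDF construction, so the sketch assumes HKDF (RFC 5869) with HMAC-SHA256, and using the two challenges as the session-specific salt is likewise an assumption. The default 16-byte output matches the AES-GCM (128-bit) key size mentioned above.

```python
import hashlib
import hmac
import secrets

# Toy parameters for illustration only (insecure): a 127-bit Mersenne prime modulus.
P = 2**127 - 1
G = 3


def dh_keypair() -> tuple:
    """Return (private, public) for the toy group."""
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def dh_shared(own_private: int, peer_public: int) -> bytes:
    # Both sides compute G^(a*b) mod P; the value itself is never sent.
    return pow(peer_public, own_private, P).to_bytes(16, "big")


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 16) -> bytes:
    """HKDF (RFC 5869): extract a PRK with HMAC-SHA256, then expand it to `length` bytes."""
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]
```

For example, after `a, dh1 = dh_keypair()` and `b, dh2 = dh_keypair()`, `dh_shared(a, dh2)` equals `dh_shared(b, dh1)`, and `hkdf_sha256(shared, salt=c1 + c2, info=b"cti-session")` yields a 128-bit session key that differs for every pair of fresh challenges.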

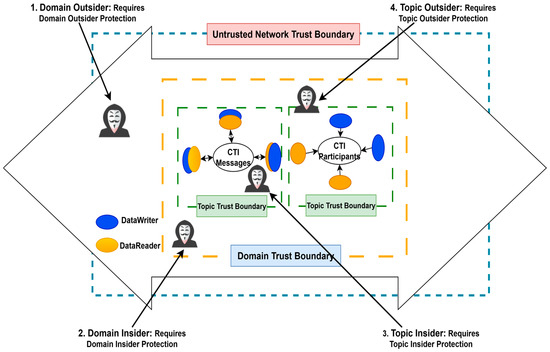

5. Threat Modeling

Threat modeling is a structured approach to systematically identifying and mitigating potential security threats. A recent study [68] demonstrates the effectiveness of data-centric threat modeling in DDS-based systems for modeling security, reducing configuration errors, and streamlining the deployment of secure system architectures. In this work, we apply a data-centric threat modeling approach to identify possible security threats that adversarial entities pose to our proposed framework. Our threat model is shown in Figure 7, highlighting the trust boundaries and protection layers in our CTI sharing framework.

Figure 7.

Threat Model.

To analyze the resilience of our CTI-sharing framework, we first characterize adversaries based on realistic capabilities they might possess within the untrusted network. Specifically, we assume that adversaries can:

- Intercept and observe any CTI message traversing the network.

- Legitimately participate in CTI sharing, initiating or responding to messages.

- Impersonate other authorized participants by crafting and sending forged messages.

- Act as message recipients, either legitimately or via deception.

- Observe all transmitted CTI messages, assuming adversaries have visibility of all network communications.

Within the untrusted network, we define two trust boundaries. The first is the Domain Trust Boundary, a conceptual boundary distinguishing between the internal elements of a Domain, including data and applications operated by authorized Domain Participants, and external entities. The second is the Topic Trust Boundary, a logical boundary distinguishing the internal components of a Topic, including data and applications with authorized DataWriters or DataReaders, from external entities.

From the Domain Trust Boundary, we identify two primary attacker roles that help us reason about the threat landscape: Domain Outsiders and Domain Insiders. A Domain Outsider refers to any entity that has access to the physical network where a Domain operates but lacks the necessary credentials to join it. In our context, an entity qualifies as a Domain Outsider if it cannot present acceptable credentials such as valid certificates or private keys associated with a Permissions Document’s Grant. In contrast, a Domain Insider possesses authorized access to a Domain, but leverages that access with malicious intent. This role captures scenarios where an attacker has compromised legitimate credentials, perhaps by stealing a certificate and corresponding private key of a legitimate participant, and uses them to instantiate a DomainParticipant. Another case captures attackers who infiltrate a device already running a valid DomainParticipant. In doing so, they inherit the trust and privileges granted to that compromised entity, gaining access to the Domain’s internal resources.

Similarly, the Topic Trust Boundary introduces two additional roles: Topic Outsiders and Topic Insiders. A Topic Outsider is an entity that, while legitimately authorized to participate in a Domain, does not have permission to interact with a specific Topic within that Domain. For example, an attacker might exploit access to a device running an authorized DomainParticipant but lack the rights to publish or subscribe to the targeted Topic. Even though the attacker resides within the trusted Domain boundary, they remain outside the scope of trust for that particular Topic. Meanwhile, a Topic Insider has both Domain and Topic-level authorization but misuses that privilege. One typical example involves an attacker who obtains the credentials of a DomainParticipant authorized to publish to a Topic. By recreating a DomainParticipant using those stolen credentials, the attacker gains direct access to the Topic, potentially enabling them to inject or extract sensitive information.

Given these assumptions, we categorize potential threats into three groups based on adversarial objectives: (1) Unauthorized Subscription, (2) Unauthorized Publication, and (3) Tampering and Replay. In the following sections, we discuss each threat category and how our framework mitigates them:

Unauthorized Subscription (Confidentiality Violation): Unauthorized subscription, also known as eavesdropping, refers to the ability of an unauthorized entity to access sensitive data without proper permission. In the context of our system, this threat arises when an unauthorized participant, Eve, creates a DataReader and subscribes to Topic CTI even though she lacks the necessary authorization. In non-secure DDS environments, Eve can be automatically discovered by a legitimate participant, say Alice, who may then unknowingly begin transmitting data to Eve. The security plugins in our framework ensure that only authenticated and authorized participants can access data. Each DomainParticipant must present valid credentials, including a certificate signed by a trusted Certificate Authority (CA), during the authentication phase. This guarantees that only legitimate participants join the Domain. Additionally, access control is enforced through signed permissions files, which specify the Topics to which a participant may publish or subscribe.
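The authorization check that follows authentication can be sketched as below. The `Grant` class is a simplified, hypothetical view of a signed Permissions Document (real documents are XML with allow and deny rules and a default rule); the sketch uses wildcard topic-name matching via Python’s `fnmatch` and denies anything not explicitly granted. Subject names and domain IDs are illustrative.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch


@dataclass
class Grant:
    """Simplified, hypothetical view of one participant's grant in a Permissions Document."""
    subject: str
    domains: set
    publish: list = field(default_factory=list)     # topic-name patterns
    subscribe: list = field(default_factory=list)


def may_subscribe(grant: Grant, domain_id: int, topic: str) -> bool:
    # Default deny: allowed only if the domain and some subscribe pattern both match.
    return domain_id in grant.domains and any(fnmatch(topic, p) for p in grant.subscribe)


def may_publish(grant: Grant, domain_id: int, topic: str) -> bool:
    return domain_id in grant.domains and any(fnmatch(topic, p) for p in grant.publish)
```

In this model, Eve’s grant simply does not list the CTI topic, so her DataReader is refused at matching time regardless of whether discovery has already taken place.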

Unauthorized Publication (Integrity Violation): Unauthorized publication means publishing data to the CTI sharing system without authorization, which undermines the trustworthiness of the shared intelligence. For example, consider Trudy, a malicious user whose DataWriter is not supposed to publish to Topic CTI. In an insecure deployment, Trudy and Bob discover each other, and Trudy then starts sending data samples to Bob. This is a serious problem because Bob may receive legitimate samples from Alice alongside forged samples from Trudy and cannot tell them apart. To prevent this, our framework enforces access control at both the participant and endpoint levels. Permissions files, signed by a trusted authority, define which participants are allowed to publish to specific Topics. These permissions are validated during authentication and discovery, and unauthorized DataWriters are prevented from publishing. In addition, message integrity and authenticity are ensured by appending to each message a Message Authentication Code (MAC) generated using AES-GCM. Only participants holding the correct cryptographic keys, established through a secure key exchange during authentication, can generate valid MACs. If a message arrives from an unauthorized publisher or fails MAC validation, it is discarded, so only data from authorized and authenticated participants is accepted.
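The receiver-side MAC check can be sketched as follows. Python's standard library has no AES implementation, so this sketch swaps in HMAC-SHA256 as the MAC in place of the AES-GCM tag used by the framework; the verification logic (recompute the tag, compare in constant time, discard on mismatch) follows the same idea. The function names and the randomly generated keys are hypothetical stand-ins for the keys established during authentication.

```python
import hashlib
import hmac
import os

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag


def protect(key: bytes, payload: bytes) -> bytes:
    """Append a MAC computed over the payload with the shared key."""
    return payload + hmac.new(key, payload, hashlib.sha256).digest()


def verify(key: bytes, message: bytes):
    """Return the payload if the MAC is valid, otherwise None (message discarded)."""
    if len(message) < TAG_LEN:
        return None
    payload, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    return payload if hmac.compare_digest(tag, expected) else None


alice_bob_key = os.urandom(32)  # stands in for a key from the secure key exchange
trudy_key = os.urandom(32)      # Trudy never obtained the legitimate key

legit = protect(alice_bob_key, b'{"type": "indicator"}')
forged = protect(trudy_key, b'{"type": "indicator", "forged": true}')

print(verify(alice_bob_key, legit))   # b'{"type": "indicator"}'
print(verify(alice_bob_key, forged))  # None
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many tag bytes matched.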

Tampering and Replay (Integrity Violation): Tampering involves intercepting and modifying data in transit, while a replay attack resends previously captured valid messages to disrupt system behavior. Our framework protects against these threats with cryptographic integrity checks and origin authentication. A MAC computed over the message contents with a secret session key is appended to each message; if a message is altered, MAC validation at the receiver fails and the message is rejected. A compromised subscriber may also attempt to impersonate a writer. For instance, Mallory, a legitimate subscriber permitted to subscribe to Topic CTI, may exploit the secret information obtained for subscription to write data on that Topic and convince other subscribers of its authenticity. To counter this threat, our framework supports origin authentication by generating receiver-specific MACs with a unique key for each reader. Because Mallory holds only her own key, only the legitimate writer can generate valid MACs for every intended receiver, which prevents impersonation and preserves data integrity. Replay protection is further enforced by including sequence numbers and session information in the cryptographic process, allowing receivers to detect and discard replayed messages.
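Receiver-specific MACs and sequence-number replay checks can be sketched in the same way. Again, HMAC-SHA256 stands in for the framework's AES-based MACs, and the `Writer` and `Reader` classes, the `tag_for` helper, the reader IDs, and the per-reader keys are hypothetical. The writer computes one tag per reader with a key it shares only with that reader, so Mallory cannot fabricate a tag that Bob will accept. The sketch models only the sequence-number part of replay protection and assumes in-order delivery from the writer.

```python
import hashlib
import hmac
import os


def tag_for(key: bytes, seq: int, payload: bytes) -> bytes:
    """MAC over the sequence number and payload, so both are integrity-protected."""
    return hmac.new(key, seq.to_bytes(8, "big") + payload, hashlib.sha256).digest()


class Writer:
    def __init__(self, reader_keys):
        self.reader_keys = reader_keys  # reader_id -> key shared only with that reader
        self.seq = 0

    def send(self, payload: bytes):
        self.seq += 1
        tags = {rid: tag_for(k, self.seq, payload) for rid, k in self.reader_keys.items()}
        return self.seq, payload, tags


class Reader:
    def __init__(self, reader_id: str, key: bytes):
        self.reader_id = reader_id
        self.key = key
        self.last_seq = 0  # highest sequence number accepted so far

    def accept(self, seq: int, payload: bytes, tags) -> bool:
        tag = tags.get(self.reader_id)
        if tag is None or not hmac.compare_digest(tag, tag_for(self.key, seq, payload)):
            return False  # forged or tampered: not produced with this reader's key
        if seq <= self.last_seq:
            return False  # replayed (or stale) sample
        self.last_seq = seq
        return True


bob_key, mallory_key = os.urandom(32), os.urandom(32)
writer = Writer({"bob": bob_key, "mallory": mallory_key})
bob = Reader("bob", bob_key)

sample = writer.send(b"indicator-1")
print(bob.accept(*sample))  # True: genuine sample from the writer
print(bob.accept(*sample))  # False: same sample replayed

# Mallory knows only her own key, so the tag she fabricates for Bob is invalid.
forged = (2, b"fake", {"bob": tag_for(mallory_key, 2, b"fake")})
print(bob.accept(*forged))  # False
```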

6. Experimental Results

6.1. Functional Evaluation of CTI Processing

This part of our evaluation validates the core functionality of our proposed data sanitization tool. The tests assess the framework’s ability to process, classify, and convert raw threat data into STIX format for sharing.
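As an illustration of the classify-and-convert step, the following Python sketch maps a single IOC string to a minimal STIX 2.1 Indicator object using only the standard library. It is not our tool’s implementation: the function names, the classification order, and the regular expression are assumptions made for this example, and a production pipeline would more likely rely on a dedicated STIX library and validator.

```python
import ipaddress
import json
import re
import uuid
from datetime import datetime, timezone
from urllib.parse import urlparse

HEX_RE = re.compile(r"^[0-9a-fA-F]+$")
# Hash length (hex characters) -> STIX hash key as written in a pattern.
HASH_KEYS = {32: "MD5", 40: "'SHA-1'", 64: "'SHA-256'"}
DOMAIN_RE = re.compile(r"^(?:[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,63}$", re.I)


def _quote(value: str) -> str:
    """Escape a value for use inside a STIX pattern string literal."""
    return value.replace("\\", "\\\\").replace("'", "\\'")


def classify_ioc(value: str):
    """Return a STIX pattern for one IOC string, or None if it is not recognized."""
    try:
        ip = ipaddress.ip_address(value)
        kind = "ipv4-addr" if ip.version == 4 else "ipv6-addr"
        return f"[{kind}:value = '{value}']"
    except ValueError:
        pass
    parsed = urlparse(value)
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return f"[url:value = '{_quote(value)}']"
    if HEX_RE.match(value) and len(value) in HASH_KEYS:
        return f"[file:hashes.{HASH_KEYS[len(value)]} = '{value.lower()}']"
    if DOMAIN_RE.match(value):
        return f"[domain-name:value = '{value.lower()}']"
    return None


def to_stix_indicator(value: str):
    """Wrap a recognized IOC in a minimal STIX 2.1 Indicator dictionary."""
    pattern = classify_ioc(value)
    if pattern is None:
        return None
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": f"IOC {value}",
        "pattern": pattern,
        "pattern_type": "stix",
        "valid_from": now,
    }


print(json.dumps(to_stix_indicator("93.184.216.34"), indent=2))
```

Checking IP addresses first and domains last keeps the simple domain pattern from having to exclude the other IOC types.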

We implemented and evaluated the experiment using the Visual Studio Code IDE (version 1.100.3). Table 2 presents the hardware specifications used in the experiment, and Table 3 outlines the software specifications.

Table 2.

Hardware specifications used in the experiment.

Table 3.

Software specifications used in the experiment.

6.1.1. Data Source

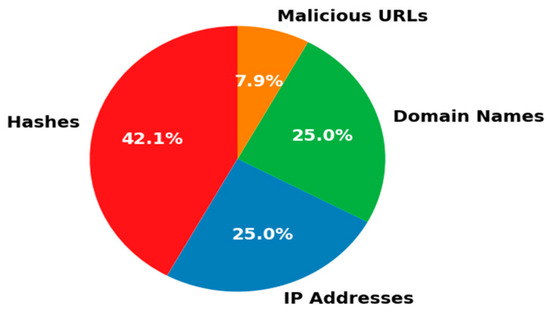

To test and validate our proposed data sanitization approach, we use the publicly available dataset titled “Swift Phishing Campaign” [69] from the Threat Intelligence Repository on GitHub. This dataset aggregates malicious IOCs, contributed by the cybersecurity community, that were observed in a phishing campaign. The repository aims to provide up-to-date IOC data for threat detection, incident response, and security research. Specifically, the dataset comprises 54 IP addresses, 54 domain names, 17 URLs, and 91 malware hashes (216 IOCs in total), each representing a distinct element of the phishing campaign’s infrastructure [69]. Because operational environments often contain personally identifiable information (PII), we generated a synthetic PII dataset to simulate realistic conditions for testing our data sanitization tool. Using the Faker library, we created synthetic data that include usernames, internal IP addresses, internal email addresses, internal URLs, credit card numbers, phone numbers, and Social Security numbers. We merged this synthetic PII with the IOC dataset to obtain a comprehensive and realistic dataset for testing our data sanitization tool. Figure 8 shows the distribution of the dataset.

Figure 8.

Distribution of IOC types in the dataset.

6.1.2. Evaluation Results

IOC Extraction

To evaluate the effectiveness of our proposed sanitization tool in extracting and processing IOCs, we conducted tests under two different load conditions: a lightly loaded environment and a highly loaded environment. In both cases, IOCs were randomly sampled within each category. The results of these evaluations are presented in Table 4, Table 5, Table 6 and Table 7.

Table 4.

Per-category results for the lightly loaded environment.

Table 5.

Overall results for the lightly loaded environment.

Table 6.

Per-category results for the highly loaded environment.

Table 7.

Overall metrics for the highly loaded environment.

- Lightly Loaded Environment

In the lightly loaded environment, our test batch consisted of 13 IOCs, covering four categories: domains, URLs, hashes, and IP addresses.

As shown in Table 4, our system correctly extracted all IOCs with perfect precision, recall, and accuracy across all categories.

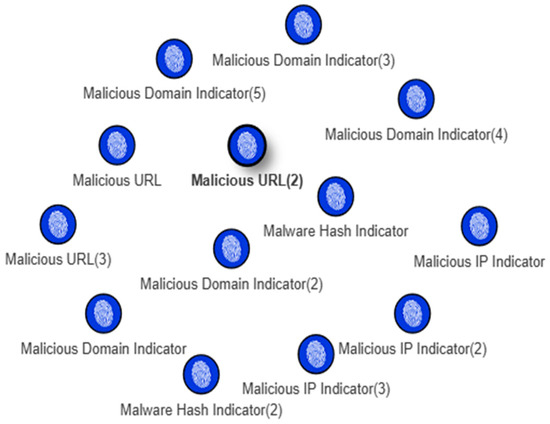

Table 5 presents the overall performance, confirming that no false positives (FP) or false negatives (FN) were observed, leading to an overall accuracy of 100%. Figure 9 shows that all the malicious IOCs are successfully extracted and converted to the STIX format.

Figure 9.

STIX visualization of the 13 extracted IOCs.

- Highly Loaded Environment

For the highly loaded environment, we processed a batch of 65 IOCs to test the performance of our sanitization tool under high load. As Table 7 shows, all malicious IOCs were successfully extracted (perfect recall) and converted to the STIX format.

The per-category results in Table 6 show high performance across all IOC types, with perfect precision and recall for IPs, URLs, and hashes. However, one false positive occurred in the domain category, reducing its precision to 85.71% (6 of the 7 extracted domains were correct). This misclassification stems from our domain extraction logic, which classified a portion of a URL containing an image file name as a domain. To address this limitation, we refined our domain-matching regular expression so that file names with common image extensions are no longer misclassified as domains, while malicious domain names are still extracted.
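The kind of refinement described above can be sketched with a negative lookahead that rejects a final label matching a common image extension. The regular expressions and the extension list below are illustrative assumptions, not the exact expressions used in our tool.

```python
import re

# Hypothetical list of image extensions that must not be read as top-level domains.
IMAGE_EXTS = ("png", "jpg", "jpeg", "gif", "bmp", "svg", "webp", "ico")
LABEL = r"[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?"

# Naive pattern: one or more labels followed by an alphabetic final label.
NAIVE_DOMAIN = re.compile(rf"\b(?:{LABEL}\.)+[a-z]{{2,63}}\b", re.I)

# Refined pattern: the final label may not be an image extension.
REFINED_DOMAIN = re.compile(
    rf"\b(?:{LABEL}\.)+(?!(?:{'|'.join(IMAGE_EXTS)})\b)[a-z]{{2,63}}\b", re.I
)

text = "Beacon to evil-domain.xyz via cdn.example.com/img/logo.png"
print(NAIVE_DOMAIN.findall(text))    # ['evil-domain.xyz', 'cdn.example.com', 'logo.png']
print(REFINED_DOMAIN.findall(text))  # ['evil-domain.xyz', 'cdn.example.com']
```

Only non-capturing groups are used, so `findall` returns whole matches rather than group contents.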

Table 7 presents the overall performance metrics for the highly loaded environment. Despite the single false positive, our system maintained an overall accuracy of 98.48%, with an F1 score of 99.24% and perfect recall.
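The reported figures are consistent with the following computation. Because the extraction task has no true negatives, we assume here that accuracy is computed as TP / (TP + FP + FN); this formula is our inference from the reported values, not a definition stated in the text. With 65 correctly extracted IOCs, one false positive, and no false negatives, it reproduces the 98.48% accuracy, 99.24% F1 score, and perfect recall in Table 7, as well as the 85.71% domain precision (6 true positives, 1 false positive) in Table 6. The function name is hypothetical.

```python
def extraction_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall, F1, and accuracy (as TP / (TP + FP + FN)), in percent."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {name: round(100 * value, 2) for name, value in
            [("precision", precision), ("recall", recall), ("f1", f1), ("accuracy", accuracy)]}


print(extraction_metrics(tp=65, fp=1, fn=0))
# {'precision': 98.48, 'recall': 100.0, 'f1': 99.24, 'accuracy': 98.48}
print(extraction_metrics(tp=6, fp=1, fn=0)["precision"])   # 85.71
print(extraction_metrics(tp=13, fp=0, fn=0)["accuracy"])   # 100.0
```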

Ambiguous Data Handling

In our second test, we aimed to verify that ambiguous data, including internal email addresses, internal IP addresses, and internal URLs, is correctly flagged for manual review rather than automatically shared as threat intelligence. To simulate a realistic operational scenario, we combined ambiguous IOCs generated with the Faker library with malicious indicators from the Threat Intelligence dataset.

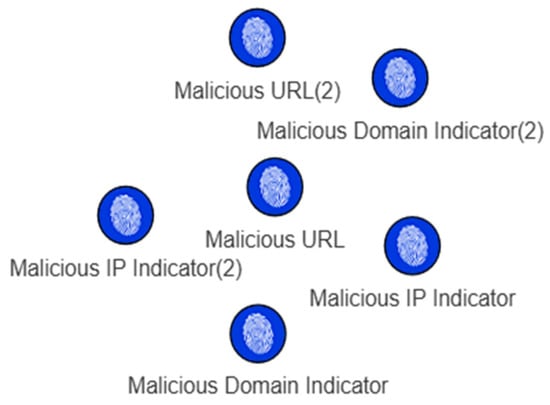

Our test input consisted of 7 ambiguous IOCs (2 domain names, 2 URLs, 1 internal email, and 2 IP addresses) and 6 malicious IOCs (2 URLs, 2 domain names, and 2 IP addresses). Our system successfully flagged all 7 ambiguous IOCs for administrator review, while the 6 malicious IOCs were automatically processed and converted into STIX objects, as shown in Figure 10. Table 8 presents the overall ambiguous data handling performance.

Figure 10.

Processed malicious IOCs.

Table 8.

Ambiguous data handling result.
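A minimal sketch of how ambiguous indicators might be routed to manual review is shown below. It uses Python’s `ipaddress` module to recognize private (internal) addresses and checks email and URL hosts against a list of internal domains; the `INTERNAL_DOMAINS` value, the `route_ioc` function, and the simple type detection are hypothetical placeholders for an organization’s own configuration.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical internal namespace; a real deployment would load this from configuration.
INTERNAL_DOMAINS = ("corp.example", "intranet.example")


def _is_internal_host(host: str) -> bool:
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in INTERNAL_DOMAINS)


def route_ioc(value: str) -> str:
    """Return 'review' for ambiguous (internal) indicators, otherwise 'process'."""
    try:
        return "review" if ipaddress.ip_address(value).is_private else "process"
    except ValueError:
        pass
    if "@" in value:  # treat as an email address
        return "review" if _is_internal_host(value.rsplit("@", 1)[1]) else "process"
    host = urlparse(value).hostname if "://" in value else value
    return "review" if host and _is_internal_host(host) else "process"


for ioc in ["10.20.30.40", "93.184.216.34", "alice@corp.example",
            "https://wiki.intranet.example/page", "evil-domain.xyz"]:
    print(ioc, "->", route_ioc(ioc))
```

Items routed to `review` would be held for an administrator, while `process` items continue to STIX conversion.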

PII Data Filtering

In our third test, we verified that our sanitization tool can detect and filter sensitive information, ensuring that no personally identifiable information (PII) is shared as part of the final CTI. To simulate a realistic operational scenario, we combined synthetic PII generated with the Faker library with malicious indicators taken from the threat intelligence dataset. The input dataset for this test comprised two groups. The first group contained seven PII elements: two Social Security numbers, two phone numbers, two usernames, and one credit card number. The second group consisted of four malicious IOCs: two IP addresses and two malware hashes. The system automatically filtered out all seven PII elements, and only the four malicious IOCs were processed and converted into STIX objects. This result shows that our data sanitization tool effectively removed sensitive data in this test scenario before CTI sharing.
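The filtering step can be illustrated with a small rule-based sketch: regular expressions for Social Security numbers and phone numbers, a Luhn checksum to confirm credit card numbers, and a lookup in a directory of known internal usernames. The patterns, the `KNOWN_USERNAMES` set, and the function names are assumptions for illustration; usernames in particular are hard to recognize by pattern alone, which is why the sketch relies on a hypothetical directory lookup.

```python
import re

SSN_RE = re.compile(r"^\d{3}-\d{2}-\d{4}$")
PHONE_RE = re.compile(r"^(?:\+1[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}$")
CARD_RE = re.compile(r"^(?:\d[ -]?){12,18}\d$")  # 13 to 19 digits, optional separators
KNOWN_USERNAMES = {"jsmith", "mgarcia"}  # hypothetical internal directory


def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed([c for c in number if c.isdigit()])):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def is_pii(value: str) -> bool:
    v = value.strip()
    return (
        bool(SSN_RE.match(v))
        or bool(PHONE_RE.match(v))
        or (bool(CARD_RE.match(v)) and luhn_valid(v))
        or v.lower() in KNOWN_USERNAMES
    )


def sanitize(values):
    """Drop PII; keep everything else for IOC processing."""
    return [v for v in values if not is_pii(v)]


raw = ["123-45-6789", "(555) 010-4477", "4111 1111 1111 1111", "jsmith",
       "93.184.216.34", "d41d8cd98f00b204e9800998ecf8427e"]
print(sanitize(raw))  # ['93.184.216.34', 'd41d8cd98f00b204e9800998ecf8427e']
```

The Luhn check reduces false positives on long digit runs that merely look like card numbers.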

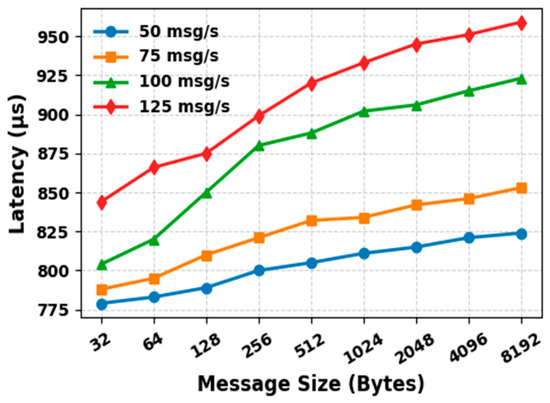

Latency, Throughput and Success Rate Evaluation

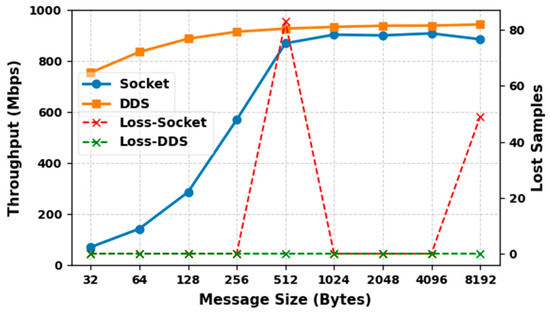

Our second experimental setup analyzes the performance of DDS communication in our proposed CTI sharing framework. We evaluate the framework using three key performance metrics: latency, throughput, and success rate. Latency is the time taken for a data sample to travel from the Publisher to the Subscriber. Throughput is the average number of data samples successfully delivered to the consumers per second. Success rate is the percentage of samples successfully received out of the total number of samples sent during the test run. For this evaluation, we ran the prototype of our framework on two machines connected via a network switch.
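The three metrics can be computed from per-sample timestamps as in the Python sketch below. The function name, the millisecond timestamps, and the sample values are hypothetical. The sketch also assumes that publisher and subscriber clocks are synchronized, since one-way latency measured across two machines is otherwise unreliable (a common alternative is to halve a measured round-trip time).

```python
from statistics import mean


def evaluate_run(sent_ms: dict, received_ms: dict, duration_ms: int) -> dict:
    """Compute latency, throughput, and success rate for one test run.

    sent_ms / received_ms map a sample's sequence number to its send / receive
    timestamp in milliseconds (assumed to come from synchronized clocks).
    """
    delivered = [seq for seq in sent_ms if seq in received_ms]
    latencies = [received_ms[seq] - sent_ms[seq] for seq in delivered]
    return {
        "avg_latency_ms": mean(latencies) if latencies else None,
        "max_latency_ms": max(latencies) if latencies else None,
        "throughput_msg_per_s": len(delivered) * 1000 / duration_ms,
        "success_rate_pct": 100 * len(delivered) / len(sent_ms) if sent_ms else 0.0,
    }


sent = {0: 0, 1: 100, 2: 200, 3: 300}
received = {0: 2, 1: 103, 2: 201}  # sample 3 was never delivered
print(evaluate_run(sent, received, duration_ms=400))
```

Keying both maps by sequence number makes lost samples show up directly in the success rate instead of silently skewing the latency average.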

Table 9 summarizes the technical specifications of the test environment.

Table 9.

Test environment.

We configure the DDS Quality of Service (QoS) policies that are essential to CTI sharing. These policies govern how data is published, stored, and transmitted during the sharing process. Table 10 lists the QoS policies and parameter values selected for this evaluation, and each policy is briefly described below.

Table 10.

Configured QoS policies for evaluation.

- Partition: The Partition policy controls whether the DataWriters and DataReaders of a Topic may exchange data; they communicate only if their Publisher and Subscriber share at least one partition. In CTI sharing, this allows CTI Providers and Consumers to be grouped logically by organization or trust group. The grouping is achieved by assigning string-based identifiers, known as partitions, to Publishers and Subscribers. This policy is useful when multiple organizations participate in CTI sharing, because it provides dynamic control over data visibility within a Topic. For instance, we can assign specific partitions to healthcare organizations, ensuring they share and receive only threat intelligence relevant to their sector. Similarly, industrial organizations can operate within their own partitions, maintaining a clear separation of data streams.

- Durability: Specifies whether data published by a DataWriter is stored and made available to late-joining DataReaders. We can choose among several options: VOLATILE, which does not store data; TRANSIENT_LOCAL, which retains data in the DataWriter’s own memory for as long as it remains active; TRANSIENT, which leverages the Persistence Service to hold data in volatile memory; and PERSISTENT, which uses non-volatile storage via the Persistence Service. In our CTI sharing framework, we configure this policy to PERSISTENT. This configuration ensures that critical threat intelligence remains accessible to late-joining organizations, allowing them to retrieve previously published data. By leveraging the PERSISTENT setting, we enhance the resilience and reliability of our framework, ensuring that all participants have access to the complete threat intelligence data.

- Reliability: Specifies whether data lost during transmission should be repaired by the system. We can choose between BEST_EFFORT, where samples are sent without delivery guarantees, and RELIABLE, which automatically retransmits lost data. In our framework, we set this policy to RELIABLE so that all threat intelligence data is delivered to every participating organization. Together with the History setting, this policy preserves the completeness of shared data even when the network is unstable. By ensuring reliable delivery, we enhance the dependability of our CTI sharing framework and help organizations maintain a comprehensive view of potential threats, thereby improving their security posture.

- History: Controls how much data is stored and how stored data is managed for a DataWriter or DataReader. It determines whether the system keeps all data samples or only the most recent ones, and it works in conjunction with the Resource Limits QoS Policy to define the actual memory allocation for the data samples. We configured the History QoS Policy to control how our system stores and manages threat intelligence data. This policy offers two modes. In KEEP_LAST, we retain only the most recent n samples per data instance, where n is set by the depth parameter. This mode suits applications that need only the latest updates. In KEEP_ALL, we keep every sample until it is explicitly acknowledged or removed, with overall storage capped by our Resource Limits QoS Policy. In our CTI sharing framework, we choose KEEP_ALL to ensure that all threat intelligence data is retained until it is successfully delivered to all subscribers.

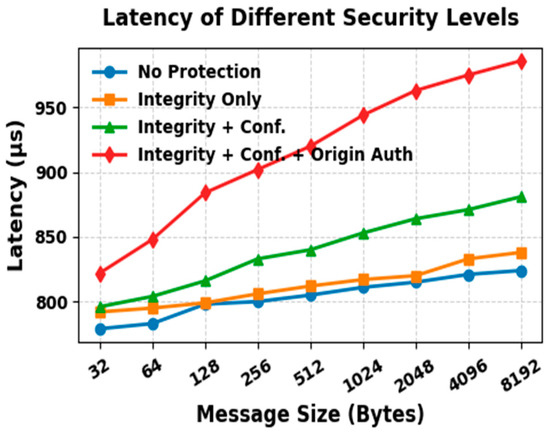

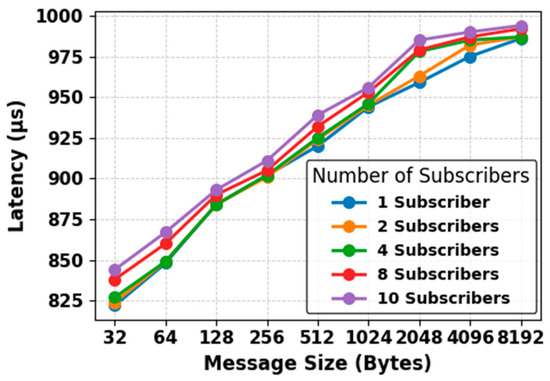

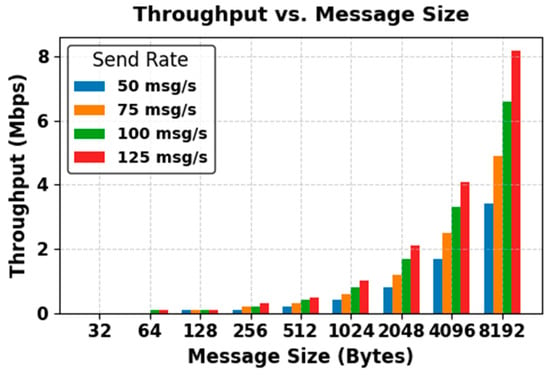

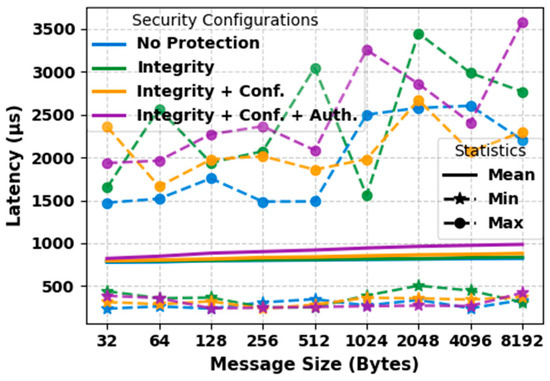

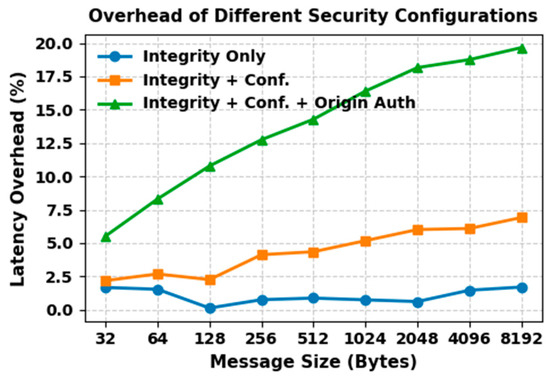

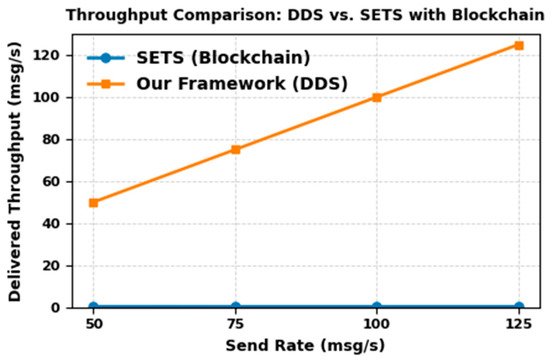

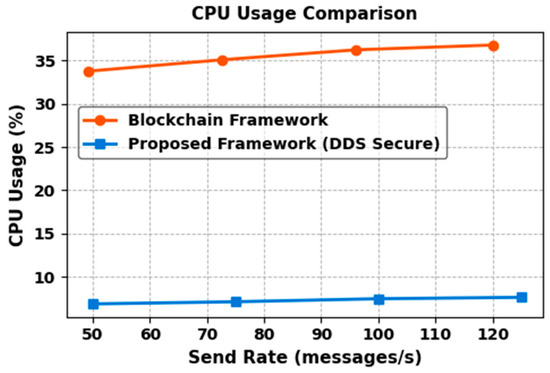

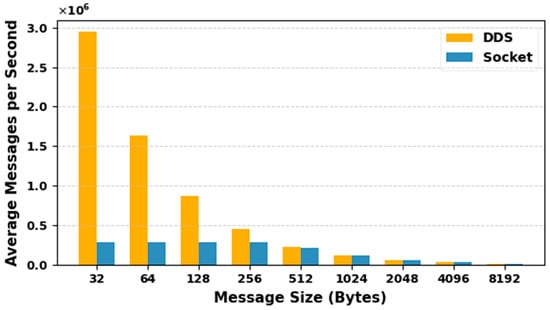

- Resource Limits: Configures the amount of memory that a DataWriter or DataReader may allocate to store data in its local cache, also referred to as the send or receive queue. It is set through integer fields such as max_samples and max_samples_per_instance. The default value, LENGTH_UNLIMITED, imposes no predefined limit, so the middleware can allocate memory dynamically as needed. In our CTI sharing framework, we keep the default LENGTH_UNLIMITED setting, which allows the system to absorb varying workloads and high volumes of threat intelligence without reaching a fixed queue limit.
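To make the Partition rule concrete, the following Python sketch decides whether a Publisher and a Subscriber can communicate. It follows our reading of the DDS specification: an empty partition list means the default partition (the empty string), names may contain POSIX fnmatch-style wildcards, and two names that both contain wildcards are not considered a match. The function names and partition names are hypothetical, and vendor implementations may differ in details.

```python
from fnmatch import fnmatchcase

WILDCARD_CHARS = set("*?[")


def _has_wildcard(name: str) -> bool:
    return any(c in WILDCARD_CHARS for c in name)


def _names_match(a: str, b: str) -> bool:
    if _has_wildcard(a) and _has_wildcard(b):
        return False  # two patterns never match each other
    if _has_wildcard(a):
        return fnmatchcase(b, a)
    if _has_wildcard(b):
        return fnmatchcase(a, b)
    return a == b


def partitions_match(publisher_parts, subscriber_parts) -> bool:
    """True if the Publisher and Subscriber share at least one partition."""
    pubs = publisher_parts or [""]  # empty list: default partition
    subs = subscriber_parts or [""]
    return any(_names_match(p, s) for p in pubs for s in subs)


print(partitions_match(["healthcare"], ["healthcare"]))  # True
print(partitions_match(["healthcare"], ["industrial"]))  # False
print(partitions_match(["healthcare/*"], ["healthcare/hospitals"]))  # True
```

With this scheme, a sector-wide consumer could subscribe with a wildcard partition while individual providers publish under specific names.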