Abstract

The recent rapid developments in the field of artificial intelligence (AI) show that it is an extremely dynamic field, constantly evolving and penetrating all levels of human activity. The cultural sector therefore cannot remain unaffected. In this paper, we explore how AI can collaborate with museum and cultural heritage professionals to create optimal cultural experiences for visitors, as a starting point for training students on AI tools. Students first searched for appropriate large language models, testing several of them to determine the most suitable one for cultural content management. They then connected common elements found across different cultural sites to construct specific and emotional narratives. The students also explored how AI tools can go beyond the creation of narratives and create entire virtual worlds for use in CAVE environments. Thus, the present work studies a wide spectrum of possible uses of AI in the cultural domain, from the creation of engaging narratives to the creation of immersive virtual worlds, with the goal of introducing students to the challenges and potential of generative AI.

1. Introduction

Artificial intelligence (AI) is growing rapidly and finding its place in almost all aspects of human activity. The cultural sector is not unaffected by the prevalence of AI, which could help curators, museum educators, humanities experts, and others build new cultural experiences and exhibitions and support visitors in meaning-making. UNESCO has a strong interest in AI and its potential in the cultural domain and has held dedicated meetings to explore the benefits of its use as well as the ethical implications of its implementation [1]. Similarly, the European Commission is exploring the uses of AI in the cultural and heritage sector and reports on multiple applications, such as artwork reconstruction and assistance in archeological research [2]. Furthermore, Europeana, Europe’s digital platform for cultural heritage, is concerned about the use of AI in cultural heritage, mainly because of copyright issues, but it also sees opportunities, notably significant educational potential, and has released guidelines for the educational use of AI with the Europeana collections for teaching cultural heritage [3]. In addition, Europeana supported the development of the AI4Culture platform, which allows cultural heritage institutions to use a wide range of large language models and resources for purposes including multilingual text recognition, subtitle generation, image and metadata enrichment, data sharing and reuse, and machine translation [4].

These rapid developments in the field of artificial intelligence entail increased training needs for (future) professionals in the cultural heritage domain. Thus, within the framework of an Erasmus+ Blended Intensive Programme (BIP), students and teachers from six European countries met at the Polytechnic of Portalegre in Portugal for a week and worked together on AI as a key enabler of digital transformation [5]. Guided by teachers specializing in cultural heritage and cultural technologies, a group of students focused on the use of AI in the cultural domain and explored its potential uses. In particular, the following main research questions were investigated:

- RQ1: Which AI model is better for handling cultural content?

- RQ2: How can AI help with discovering associations?

- RQ3: Can AI make meaningful cultural narratives?

Following the BIP meeting, a group of researchers extended the initial concept beyond the student activity, further exploring the potential of AI in the cultural domain. The main contribution of this paper is a pipeline for automatically generating narratives. The work is a proof of concept rather than a study with complete evaluation data, since the approach was primarily used for educational purposes within the Erasmus Blended Intensive Programme. Nevertheless, this paper presents the potential of AI for the creation of narratives and immersive experiences.

2. AI in Cultural Heritage

AI is already used in the cultural heritage domain to preserve, curate, present, and assess cultural heritage. It is used to digitize and restore cultural heritage through image recognition, natural language processing, and machine learning, for tasks such as cataloging and restoring missing parts of artifacts [6]. For example, AI was used to analyze unstructured textual data (e.g., policy documents, academic literature, and social media) to uncover the cultural significance of built heritage and support heritage planning [7]. Different tools are also used to archive material and structure cultural content [8]. During the COVID-19 pandemic, AI was also used at important cultural heritage sites to monitor factors that could contribute to the spread of the virus, such as air quality and visitor behavior in indoor spaces [9]. AI tools are now advancing towards the creation of 3D objects that can be used in virtual worlds: generative models such as Generative Adversarial Networks and Variational Autoencoders can learn from existing 3D data and create new 3D content [10,11,12,13,14,15,16]. In addition, AI tools based on Neural Radiance Fields can reconstruct 3D models from 2D images (although not yet perfectly), allowing the creation of virtual environments [17,18,19,20,21]. Conversational agents have also been coupled with Virtual Reality technologies, for example to allow museum visitors to interact with a female skeleton from the Upper Paleolithic era [22]. Thus, AI currently seems to be moving towards the creation of 3D objects and entire environments, and it could significantly reduce production effort. However, the tools are still evolving, and AI does not yet seem able to autonomously produce entire virtual worlds, since its full potential has not yet emerged [23].
The existing tools can nonetheless help produce elements of the virtual world that a human designer can use to synthesize the experience as a whole. From the literature review, it seems that soon there will be a coupling of extended reality (virtual and augmented) with AI to reconstruct monuments [24]. The Internet of Things will also utilize AI technology to allow cultural objects to communicate with each other and create new narratives [25].

Regarding the creation of narratives, AI can also be used to create interesting and engaging stories [26]. AI seems capable of producing stories with plot twists and unexpected progression, and, compared with human-made stories, AI-generated stories to date seem more progressive in terms of gender roles and sexuality [27]. AI-generated narratives are also used in cultural heritage to present specific heritage elements, such as visualizations of collective memories [28]. Advanced conversational agents, including AI-powered chatbots [29] that use technologies such as natural language processing (NLP) [30], machine learning (ML) [31], and large language models (LLMs) [32], are also currently used to enhance the cultural experience, allow the exploration of heritage [33], and create hybrid spaces where AI and humans interact. For example, researchers created an intelligent audio guide system that combined Audio Augmented Reality (AAR) with an AI-powered chatbot to enable interactive storytelling [34].

In addition, AI has been successfully used to create cultural narratives as game elements: the stories created were used in a scavenger hunt game that allowed museum visitors to actively engage with the museum content [35]. AI also supports mobile applications that visitors can use to detect artworks and interact with them through a chatbot that provides textual and auditory information; a previous study found that visitors interacting with such a chatbot showed increased engagement with the artworks [36]. Furthermore, generative AI has been used to create a museum guide. Because of the accuracy problems that arise when creating such a guide, human oversight is still necessary to guarantee scientific integrity; despite these challenges, AI-generated museum guides could also provide personalized material based on individual visitor preferences [37]. There thus seems to be increasing interest in the automatic creation of narratives, or at least in ways of assisting heritage professionals in creating cultural narratives. Because AI appears to be a very promising tool, several studies explore its potential in story development. For example, Ref. [38] focused on the design and implementation of a platform that assisted curators in writing interactive stories. However, stories do not always come from official sources; they may also derive from the unofficial stories of local people. AI can help local communities enhance such unofficial stories with images [39] and make these stories linkable and findable [40]. AI-assisted narrative enrichment through visualization also seems to be an emerging trend, as a few recent studies exploit the potential of AI for narrative visualizations in cultural heritage [41].
Finally, GPT-4 has also been used to create interactive storytelling and allow personalized city explorations by highlighting points of interest and making the user the protagonist in the story created [42].

Despite concerns about the quality of such narratives and the possible biases they might reflect and reproduce [43,44], as well as various ethical concerns stemming from the use of AI in the cultural heritage domain [45], the use of AI in the field of experiencing cultural heritage and art is rapidly advancing and pertinent research is ongoing [46,47,48].

3. Methodology

Although the current work primarily focuses on training students, as future cultural heritage professionals, in the use of current AI tools, the students also tried to answer three main research questions. A group of five students participated and performed all the tests to answer RQ1, RQ2, and RQ3.

RQ1: Which AI model is better for handling cultural content?

To answer RQ1, different text generation AI models were assessed for their ability to handle information related to cultural content: artworks, artists, eras, and styles. We used the open-access collection of the Metropolitan Museum of Art in New York City as a resource, extracting cultural content filtered by object type, specifically paintings. We examined the free versions of DeepSeek V2, ChatGPT 3.5 (OpenAI), Claude 3 Haiku (Anthropic), and Gemini 2.5 Flash (Google DeepMind). These models were chosen because their free versions make them accessible to students. They were also selected for their differences in database generation, training data, operational mode (including live web access), pre-training architecture, and applied cognition tools. We restricted this exercise to four models, as the main goal was to show students that different models can produce different results even when given the same prompts. The strengths and limitations of these models were also considered, such as DeepSeek’s security and privacy risks, ChatGPT’s potential for hallucinations and factual gaps without web access, Claude’s lack of live web access, and Gemini’s insufficiently explained replies (Table 1) [49,50]. Because AI models are improving rapidly, the limitations observed by the students and reported here reflect the state of the models in April 2025. According to the ahrefs.com web traffic checker [51], as of April 2025 the organic traffic (i.e., users arriving from unpaid sources) for each model was as follows: ChatGPT (https://chatgpt.com): 270.8 M, Gemini (https://gemini.google.com): 17.6 M, Claude (https://claude.ai): 1.9 M, and DeepSeek (https://chat.deepseek.com/): 2.6 M.

Table 1.

Strengths and limitations of AI models used.

Two paintings were used as examples: “The Trout Pool” (ca. 1870) by Worthington Whittredge and “Fortified Wall at Tivoli” (ca. 1786–1806) by Simon Denis. These paintings were chosen because they are relatively unknown to the public and less material about them is available online than for very well-known paintings such as the Mona Lisa. This provided a controlled baseline for assessing the accuracy and reliability of each model before applying them to more complex and interpretive heritage sites.

Each model was provided with the same information, namely the painting’s title, date of creation, and artist, and the following questions were put to every model, once per model:

- What kind of materials and techniques were used?

- Where was the painting created?

- What is the cultural background of the painting?

- What is its provenance history?

- Are there any unique anecdotes or stories connected with the object?
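The RQ1 protocol (identical context, the five questions above, each asked once per model) can be sketched as a prompt matrix. This is a minimal illustration under stated assumptions, not the students' actual setup: the exact wording used to present the title, date, and artist is hypothetical, as are the helpers `build_prompt` and `prompt_matrix`, and sending the prompts to each model is not shown.

```python
from itertools import product

# Identifying context given to every model (title, date of creation, artist).
PAINTINGS = [
    {"title": "The Trout Pool", "date": "ca. 1870", "artist": "Worthington Whittredge"},
    {"title": "Fortified Wall at Tivoli", "date": "ca. 1786–1806", "artist": "Simon Denis"},
]

# The five questions asked of every model.
QUESTIONS = [
    "What kind of materials and techniques were used?",
    "Where was the painting created?",
    "What is the cultural background of the painting?",
    "What is its provenance history?",
    "Are there any unique anecdotes or stories connected with the object?",
]

MODELS = ["DeepSeek V2", "ChatGPT 3.5", "Claude 3 Haiku", "Gemini 2.5 Flash"]


def build_prompt(painting: dict, question: str) -> str:
    """Hypothetical prompt format: the same context line for every model, then the question."""
    context = f'Painting: "{painting["title"]}" by {painting["artist"]}, dated {painting["date"]}.'
    return f"{context}\n{question}"


def prompt_matrix() -> list:
    """One (model, painting title, prompt) entry per combination; each is asked once."""
    return [
        (model, painting["title"], build_prompt(painting, question))
        for model, painting, question in product(MODELS, PAINTINGS, QUESTIONS)
    ]


if __name__ == "__main__":
    runs = prompt_matrix()
    print(len(runs))  # 4 models x 2 paintings x 5 questions = 40
    print(runs[0][2])
```

Because every model receives byte-identical prompts, any difference in the replies can be attributed to the model rather than to the phrasing, which is the point the exercise was meant to show students.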

RQ2: How can AI help with discovering associations?

For RQ2, the replies of the text generation AI systems were used to produce metadata (such as object type, creation date, and materials) for the cultural objects or artifact documentation, which could then be compared with the existing metadata of the original resource, the Metropolitan Museum of Art in New York City. In the next phase of the study, we used the text generation AI models to search for information on monumental European cultural sites, most of them included in the UNESCO World Heritage List. The suggested examples were the Portalegre Cathedral (Portugal), the Škocjan Caves (Slovenia), the Curonian Spit (Lithuania), the Ancient City of Nessebar (Bulgaria), the Acropolis of Athens (Greece), the Belfries (Belgium), and the Aachen Cathedral (Germany). The sites were chosen based on the nationalities of the students participating in the BIP and the main landmark of the host town. The information retrieved by the AI models was used to develop Dublin Core (DCMI) metadata, which the AI models then explored further to discover associations between monuments. The same prompts were given once to each model: “find associations between X, Y” (to associate two sites), “find associations between X, Y, Z” (to associate three sites), and so on.
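Generating the “find associations between X, Y” prompts for every pair, triple, and so on of sites is a direct application of combinations. The sketch below assumes the five sites used later in the results and a plain comma-separated wording; `association_prompts` is a hypothetical helper, not a tool used in the study.

```python
from itertools import combinations
from math import comb

# The five sites for which metadata were created in the results section.
SITES = [
    "Portalegre Cathedral",
    "Škocjan Caves",
    "Curonian Spit",
    "Acropolis of Athens",
    "Belfries of Belgium",
]


def association_prompts(sites: list, k: int) -> list:
    """Return one 'find associations between ...' prompt per k-site subset."""
    return ["find associations between " + ", ".join(group)
            for group in combinations(sites, k)]


if __name__ == "__main__":
    for k in range(2, len(SITES) + 1):
        prompts = association_prompts(SITES, k)
        assert len(prompts) == comb(len(SITES), k)  # C(5, k) subsets of size k
        print(k, len(prompts), prompts[0])
```

For five sites this yields 10 pairs, 10 triples, 5 groups of four, and a single prompt covering all five, which shows how quickly the number of queries grows as more sites are added.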

Feeding the AI models with metadata was a conscious choice, made with its benefits and limitations for student training in mind. This grounded, context-specific analysis offers more control than a general research query, which suits student training. Avoiding general internet knowledge and relying primarily on metadata leads to fewer inaccuracies; allows the discovery of previously unknown links (since it does not rely on well-documented information found on the internet); avoids the influence of common keywords and search engine optimization (since it focuses on raw data, supporting unbiased analysis); and can draw on the large datasets often available from heritage institutions [52]. However, potential drawbacks must also be acknowledged, such as the quality of the available metadata (not a problem in the current work, since the metadata were curated by the researchers), the lack of broader content and information beyond the metadata, and the time needed to prepare the metadata.
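In practice, the metadata-grounded approach amounts to placing the curated records in the prompt and instructing the model not to go beyond them. A minimal sketch, assuming records stored as plain dictionaries from Dublin Core element names to values; the instruction wording and the `grounded_prompt` helper are hypothetical.

```python
def grounded_prompt(records: list, task: str) -> str:
    """Build a prompt whose only factual context is the supplied metadata records."""
    blocks = []
    for record in records:
        # Skip empty elements so the model is not shown blank fields.
        lines = [f"{element}: {value}" for element, value in record.items() if value]
        blocks.append("\n".join(lines))
    context = "\n\n".join(blocks)
    return (
        "Answer using only the metadata records below. "
        "If the records do not support a claim, say so instead of using outside knowledge.\n\n"
        f"{context}\n\n{task}"
    )


if __name__ == "__main__":
    records = [
        {"title": "Škocjan Caves", "coverage": "Slovenia", "creator": ""},
        {"title": "Curonian Spit", "coverage": "Lithuania; Russia"},
    ]
    print(grounded_prompt(records, "find associations between Škocjan Caves, Curonian Spit"))
```

An explicit instruction to flag unsupported claims does not guarantee the model will comply, so the outputs still need the cross-checking described in the results.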

RQ3: Can AI make meaningful cultural narratives?

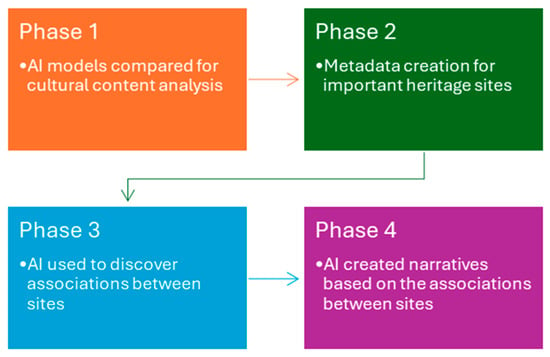

Finally, we asked the AI to create cultural narratives or find familiar stories that connect these heritage landmarks with their environment and their local or international communities. This approach evaluated the text generation AI models’ ability to produce meaningful storytelling for important heritage sites and their connections. All the phases of the methodology are presented in Figure 1.

Figure 1.

Methodology phases.

As part of student training and an activity in an Erasmus BIP, the entire process was planned to last one day. From 9.30 to 11.30, students compared the large language models for readability, validity, and usefulness, recording the strengths and weaknesses of each model (RQ1). From 12.00 to 13.00 and from 14.00 to 15.00, the focus shifted to RQ2, with students producing metadata and discovering associations between sites. Finally, from 15.30 to 17.00, the session concluded with RQ3, during which the AI created stories for the different sites.

4. Results

RQ1: Which AI model is better for handling cultural content?

When different AI models were tested for their ability to handle cultural content, important differences emerged, showing the importance of cross-checking AI outputs. Students were asked to rate the outputs for readability, validity, and usefulness on a scale of 0–5. The students themselves specified the three criteria and defined their levels before evaluating the AI-generated content (Table 2).

Table 2.

Student evaluations for the AI models used.

More specifically, the following scale was used for the readability of the generated content [53]:

- 1 (very difficult): the text has problems with structure, grammar, syntax, or spelling, and/or uses complex language and unknown terminology.

- 2 (difficult): problems with structure but fewer errors; the terminology might still be confusing, but most parts make sense.

- 3 (moderate): clear structure and flow, with minor language issues that do not affect understanding.

- 4 (easy): good structure, easy to follow, with very few, if any, grammatical or syntactical errors.

- 5 (very easy): easy to follow, clear, and engaging; the reader needs no effort to read, and aids such as headings and bullet points are used.

Regarding the validity, accuracy, and reliability of the produced content, the following scale was used [54,55]:

- 1 (unreliable): factual errors, use of non-credible sources, and misinformation.

- 2 (questionable): sources are not cited or are outdated; inaccuracies might still exist but are limited.

- 3 (credible): mostly accurate content that lacks a full range of references; the content seems correct but may need cross-checking.

- 4 (reliable): the content is accurate and supported by strong, verifiable sources.

- 5 (highly reliable): multiple high-quality sources support all claims.

Regarding usefulness, the following scale was used [56,57]:

- 1 (not useful): the content is irrelevant.

- 2 (minimally useful): although the content touches on the topic, it gives very little new or practical knowledge.

- 3 (moderately useful): the content is somewhat useful, but it lacks detail or clear applicability.

- 4 (useful): covers the user's needs and provides applicable knowledge that allows users to achieve their goals.

- 5 (highly useful): the content is the ultimate solution to the problem and is easily applicable.
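The three rubrics can be encoded as lookup tables so that a group's agreed scores are reported together with their level names. This is an illustrative sketch: it encodes only the levels 1–5 that are defined above, and the `label_scores` helper and the example scores are hypothetical, not the values in Table 2.

```python
# Level names for the three criteria, as defined in the rubrics above.
RUBRIC = {
    "readability": {1: "very difficult", 2: "difficult", 3: "moderate",
                    4: "easy", 5: "very easy"},
    "validity": {1: "unreliable", 2: "questionable", 3: "credible",
                 4: "reliable", 5: "highly reliable"},
    "usefulness": {1: "not useful", 2: "minimally useful", 3: "moderately useful",
                   4: "useful", 5: "highly useful"},
}


def label_scores(scores: dict) -> dict:
    """Attach the rubric level name to each agreed score, e.g. 4 -> '4 (easy)'."""
    labelled = {}
    for criterion, score in scores.items():
        # Scores without a written definition are flagged rather than guessed.
        name = RUBRIC[criterion].get(score, "no level defined")
        labelled[criterion] = f"{score} ({name})"
    return labelled


if __name__ == "__main__":
    # Hypothetical scores for one model's output, for illustration only.
    print(label_scores({"readability": 4, "validity": 3, "usefulness": 4}))
```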

The students discussed the AI outputs as a group and agreed on a score for each criterion. The following results were obtained when information was requested on the paintings “The Trout Pool” and “Fortified Wall at Tivoli”.

Overall, ChatGPT (free version) presented correct information for both paintings, followed by Claude. Both ChatGPT and Claude correctly identified the materials and techniques of both paintings and presented their history correctly. For “The Trout Pool”, Gemini presented incorrect information, mistaking it for another painting, “The Death of Socrates”; for the second painting, “Fortified Wall at Tivoli”, however, it returned correct information. Finally, DeepSeek did not provide valid information for the four prompts. Based on these results, it was decided to continue with ChatGPT and Claude.

RQ2: How can AI help with discovering associations?

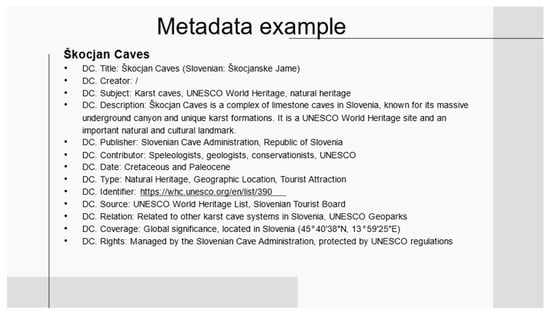

Once the most suitable AI models had been identified, metadata based on the Dublin Core Metadata Initiative (DCMI) standard were created for important monuments from five European countries: the Portalegre Cathedral (Portugal), the Škocjan Caves (Slovenia), the Curonian Spit (Lithuania), the Acropolis of Athens (Greece), and the Belfries (Belgium).

Dublin Core consists of fifteen elements, namely title, creator, subject, description, publisher, contributor, date, type, format, identifier, source, language, relation, coverage, and rights. These sites were chosen because four of them are world-famous UNESCO sites, while the Portalegre Cathedral, although less known, is the main monument of the town hosting the Erasmus programme. It was also interesting to see what associations could be found between well-known and lesser-known monuments. Figure 2 provides an example of the metadata for one of the sites used in the study, the Škocjan Caves in Slovenia.
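A Dublin Core record maps naturally onto a record type with one optional text field per element. The sketch below is illustrative: the class name and the `to_text` serialization (one `dc:element: value` line per non-empty element) are assumptions, and the example values form a minimal record, not the metadata shown in Figure 2.

```python
from dataclasses import dataclass, fields


@dataclass
class DublinCoreRecord:
    """One field per element of the fifteen-element Dublin Core set, in standard order."""
    title: str = ""
    creator: str = ""
    subject: str = ""
    description: str = ""
    publisher: str = ""
    contributor: str = ""
    date: str = ""
    type: str = ""
    format: str = ""
    identifier: str = ""
    source: str = ""
    language: str = ""
    relation: str = ""
    coverage: str = ""
    rights: str = ""

    def to_text(self) -> str:
        """Serialize the non-empty elements, in Dublin Core order, as 'dc:element: value' lines."""
        return "\n".join(
            f"dc:{f.name}: {getattr(self, f.name)}"
            for f in fields(self)
            if getattr(self, f.name)
        )


if __name__ == "__main__":
    record = DublinCoreRecord(
        title="Škocjan Caves",
        description="Limestone cave system carved by the Reka River",
        coverage="Slovenia",
        relation="UNESCO World Heritage List (inscribed 1986)",
    )
    print(len(fields(DublinCoreRecord)))  # 15
    print(record.to_text())
```

Keeping every element optional mirrors Dublin Core itself, where all fifteen elements are optional and repeatable; a fuller model would allow lists of values per element.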

Figure 2.

Example of metadata created for the Škocjan Caves in Slovenia.

Once metadata was created for all five of the sites, the AI was asked to reveal associations between them. Several connections were found based on their history, cultural significance, geography, purpose of use, etc. The following associations were found using the AI when two sites were compared:

- Acropolis and Belfries of Belgium: Both are human-made architectural sites that reflect the political, civic, and cultural history of their respective regions. Both were built with the advanced architectural techniques of their time and represent civic pride and governance. Both are shaped by European history and reflect political evolution (Ancient Greece is associated with democracy, while medieval Belgium is associated with civic autonomy).

- Acropolis and Curonian Spit: Both involve landscape modifications (the Acropolis being built on a rocky outcrop, and the Curonian Spit shaped by both natural forces and human intervention).

- Acropolis and Portalegre Cathedral: Both sites are not only historical monuments but also enduring symbols of the cultural legacy they represent, impacting their civilizations’ subsequent development. Both the Acropolis and the Portalegre Cathedral are sacred spaces, albeit in different religious and cultural contexts, where spirituality intertwines with physical space and broader societal values.

- Curonian Spit and Belfries of Belgium: Both are cross-border heritage sites, involving bilateral cooperation (Lithuania/Russia for Curonian Spit and Belgium/France for the Belfries).

- Škocjan Caves and Belfries: Some belfries were used as watchtowers, like the natural underground chambers of Škocjan Caves that were historically used for shelter or strategic defense.

- Škocjan Caves and Curonian Spit: Both are natural heritage sites with unique geological and ecological significance.

- Curonian Spit and Škocjan Caves: Symbols of nature’s power and fragility, with both sites being vulnerable to environmental changes (erosion for Curonian Spit, water damage for Škocjan Caves). Both were influenced by glaciation and geological processes, forming unique landscapes.

- The Belfries and the Portalegre Cathedral: Both built in Renaissance styles.

- Škocjan Caves and Portalegre Cathedral: The Škocjan Caves are formed in limestone, which is significant in historical architecture. Many cathedrals, including some in Portugal, incorporate limestone in their construction, potentially linking the Portalegre Cathedral and the Škocjan Caves, since the Portalegre Cathedral may have used limestone in structural or decorative elements.

- Škocjan Caves and Curonian Spit: The Reka River shaped Škocjan Caves, carving its massive underground canyon. The Baltic Sea and Curonian Lagoon define the Curonian Spit’s shifting landscapes. Water plays a fundamental role in the formation and evolution of both sites.
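In the list above, the same pair of sites appears more than once and in either order (the Škocjan Caves and the Curonian Spit appear in three separate entries). Keying each association by an unordered pair, here a `frozenset`, merges such entries before review. A minimal sketch with abbreviated, paraphrased association texts; the `merge_by_pair` helper is hypothetical.

```python
from collections import defaultdict

# (site, site, association) triples, abbreviated from the list above.
ASSOCIATIONS = [
    ("Škocjan Caves", "Curonian Spit", "natural heritage sites"),
    ("Curonian Spit", "Škocjan Caves", "vulnerable to environmental change"),
    ("Škocjan Caves", "Curonian Spit", "shaped by water"),
    ("Acropolis", "Belfries of Belgium", "civic and political history"),
]


def merge_by_pair(associations):
    """Group association texts under an order-independent key for each site pair."""
    merged = defaultdict(list)
    for site_a, site_b, text in associations:
        # frozenset({a, b}) == frozenset({b, a}), so the order of the sites is ignored.
        merged[frozenset((site_a, site_b))].append(text)
    return dict(merged)


if __name__ == "__main__":
    for pair, texts in merge_by_pair(ASSOCIATIONS).items():
        print(" / ".join(sorted(pair)), "->", "; ".join(texts))
```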

When the AI was asked to find connections between three sites, it identified religion as the main connection between the Belfries, the Acropolis, and the Portalegre Cathedral. When asked to find associations between the given sites, the AI focused on the four famous sites (the Acropolis, the Škocjan Caves, the Curonian Spit, and the Belgian Belfries) and reported that they are all UNESCO heritage sites, meaning that they share common goals of preservation, tourism management, and international recognition. Finally, when the AI was asked to find associations between all five sites, it reported the following: “They all face preservation challenges due to tourism, environmental threats, and climate change. Each site represents different engineering feats, from city planning to natural adaptation. They represent different aspects of identity—political, cultural, and environmental. All sites illustrate the long-term relationship between humans and their surroundings.”

RQ3: Can AI make meaningful cultural narratives?

After connections were found for all of the sites, the AI was asked to create a story that would connect them all. The story the AI provided was “Škocjan caves and Curonian Spit had been natural fortifications in a time where people hadn’t started building castles to protect themselves. On the other hand, Acropolis was created as a dedication to the goddess Athena because they wanted her protection. Belfries had a warning role in its time and Portalegre Cathedral is a dedication to God for protection. The common thing between all of these cultural objects is the protection against something people were afraid of, either it’s an enemy from another place or the possibility of something bad happening in the future”. It seems that the story has a common theme, the protection of humans, and the narrative is built around this concept. The DeepAI image generator was also used to create a single image with all five sites that could accompany the story created (Figure 3).

Figure 3.

Image with the Škocjan Caves, the Curonian Spit, the Acropolis of Athens, the belfries of Belgium, and the Portalegre Cathedral, created with DeepAI image generator.

In addition, Claude was asked to use the associations found to produce a short narrative about four of the monuments with an emotional tone, using only factual information. It produced the following story:

The Pilgrimage of Shadows

Professor Helena Santos felt a chill as she entered Portalegre Cathedral. The 16th-century Portuguese cathedral stood silent in the evening light, its Mannerist and Baroque features casting long shadows across the stone floor. Helena was documenting UNESCO World Heritage Sites across Europe, but her research had revealed disturbing coincidences. While examining restoration records from 1986—the same year Škocjan Caves received UNESCO designation—she discovered accounts of strange acoustic anomalies in Portalegre Cathedral’s crypt. The cathedral’s renovation team had reported inexplicable sounds echoing through its chambers, similar to the eerie natural acoustics documented in Slovenia’s vast Škocjan Cave system. Both locations reported sounds that seemed to travel impossibly through solid stone. Helena’s investigation next led her to the Curonian Spit, that fragile sand peninsula between Lithuania and Russia. Local preservationists had found Portuguese coins dating to the exact period of Portalegre Cathedral’s construction buried beneath the dunes. No historical record explained their presence. Most disturbing was her discovery at the Acropolis of Athens. Among the ancient Greek site’s lesser-known inscriptions was a passage describing distant western lands and a “house of worship where stone speaks.” The text dated to approximately 430 BCE—exactly 2000 years before Portalegre Cathedral’s cornerstone was laid in 1556. As night fell on Portalegre Cathedral, Helena stood alone within its walls. The evening temperature dropped suddenly as it often did in the ancient stone building. Natural erosion patterns in the limestone columns created shadows that seemed to move in the fading light. Water dripping in the crypt below created rhythmic echoes through the cathedral’s perfect acoustic design. 
Helena’s rational mind knew these were natural phenomena—the same limestone formations connected many ancient European structures, the same architectural principles governed sound in large stone chambers. Yet standing there, surrounded by centuries of history, the connections between these four monuments felt unnervingly deliberate. She switched on her flashlight and continued her documentation, the beam catching dust particles that danced in the air like ancient messengers between worlds long forgotten.

The text above, although poetic, seems to contain inaccurate historical information, such as the passage describing “a house of worship where stone speaks” attributed to the Acropolis of Athens, and it draws only a loose connection between the sites, using acoustics as the link. Nevertheless, the text is captivating and could be a good starting point for creating fictional narratives to enhance cultural experiences. The findings of the student project prompted us to explore the use of AI in immersive cultural experiences and its possible integration with Virtual Reality (VR) environments. For this reason, we decided to use the equipment available at the University of the Peloponnese, which will allow future developments and further exploration of AI technology for advanced cultural experiences.
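Some claims in the story can be checked mechanically. Taking its own dates at face value, the story says the inscription (ca. 430 BCE) dates to “exactly 2000 years” before the cornerstone laid in 1556; because the historical calendar has no year 0, the interval is actually 1,985 years. A small sketch of that check, writing BCE years as negative numbers (a convention chosen for this example):

```python
def years_between(start: int, end: int) -> int:
    """Years elapsed from start to end; BCE years are negative and there is no year 0."""
    if start == 0 or end == 0:
        raise ValueError("the historical calendar has no year 0")

    def astronomical(year: int) -> int:
        # Astronomical numbering: 1 BCE -> 0, 2 BCE -> -1, ..., 1 CE -> 1.
        return year + 1 if year < 0 else year

    return astronomical(end) - astronomical(start)


if __name__ == "__main__":
    print(years_between(-430, 1556))  # 1985, not 2000
    print(years_between(-1, 1))       # 1 (from 1 BCE to 1 CE)
```

Checks like this catch only internal arithmetic slips; whether the inscription or the dates exist at all still has to be verified against sources.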

5. Engaging Virtual Worlds

The present work showed which AI models were (as of April 2025) better prepared to handle cultural content and discover associations between heritage elements (e.g., sites and monuments). AI tools can already create engaging narratives that combine (hi)stories of cultural importance and reveal commonalities between sites, possibly highlighting universal human needs, in this case the need for protection, or the need to preserve these sites [58]. However, the relationship between AI and cultural heritage seems to have only just begun. There are currently significant advances in agentic AI and planner-based agents. Agentic AI models work proactively, understanding the context of users’ prompts and addressing complex tasks. Advanced AI models that can form and follow plans are known as planner-based agents: they can understand users’ overall goals, identify the right tools to achieve them, create detailed plans, and execute and monitor each step. Systems such as Genspark, Gemini Deep Research, and Manus are good examples of AI that moves beyond answering single questions to handle more complex requests. Since such AI advances are rapid in all fields, including cultural heritage [59], the need to train future professionals is pressing.
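The plan, select-tool, execute, and monitor cycle of a planner-based agent can be illustrated with a deliberately simplified sketch. It does not describe how any of the systems named above work internally: the plan here is hand-written rather than generated by a language model, and the three tools (`lookup_metadata`, `find_associations`, `write_narrative`) are hypothetical stand-ins for real services.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str      # name of the tool chosen for this step
    argument: str  # input passed to that tool


# Hypothetical tools; a real agent would call search engines, databases, or LLMs here.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_metadata": lambda site: f"metadata for {site}",
    "find_associations": lambda sites: f"associations among {sites}",
    "write_narrative": lambda theme: f"narrative on the theme of {theme}",
}


def make_plan(goal: str, sites: List[str]) -> List[Step]:
    """Decompose the goal into ordered steps (hand-written here instead of LLM-generated)."""
    plan = [Step("lookup_metadata", site) for site in sites]
    plan.append(Step("find_associations", ", ".join(sites)))
    plan.append(Step("write_narrative", goal))
    return plan


def execute(plan: List[Step]) -> List[str]:
    """Run each step with its tool and monitor it, stopping on a missing tool or empty result."""
    log = []
    for step in plan:
        tool = TOOLS.get(step.tool)
        if tool is None:
            raise KeyError(f"no tool named {step.tool!r}")
        result = tool(step.argument)
        if not result:
            raise RuntimeError(f"step {step} produced no output")
        log.append(result)
    return log


if __name__ == "__main__":
    for line in execute(make_plan("protection", ["Acropolis of Athens", "Škocjan Caves"])):
        print(line)
```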

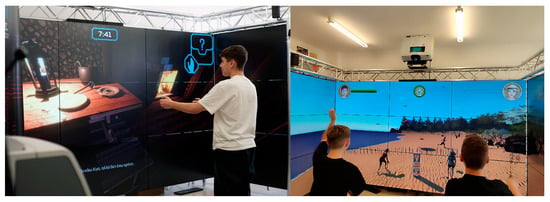

In a future study, coupling AI’s ability to create cultural content (narratives, images, audio, etc.) with Virtual Reality environments could also be explored. In particular, the MobiCAVE, a room-sized Cave Automatic Virtual Environment (Figure 4) [60], could be used to allow embodied interaction with virtual cultural content created by AI models. The system’s room-scale setup and gesture-based controls make it well suited to turning conventional stories into engaging, spatially distributed experiences that feel real. In turn, AI can create cultural stories that are tied to a place, based on themes, or rich in symbolism; above all, it can co-create the story the user wants to experience, responding to emotional triggers and mnemonic audiovisual elements.

Figure 4. The MobiCAVE.

It appears that the near future will bring AI tools able to design entire virtual worlds and recreate historical worlds and events, allowing people to engage with cultural heritage in new ways [61]. Combined with the ability to create narratives (possibly personalized ones) and new AI-generated experiences, this will change the form of our engagement with the past and our heritage. Experiences could become more personal, emotional, immersive, and engaging, possibly requiring new paradigms for creating cultural heritage experiences. Incorporating AI technologies into the cultural heritage sector also raises several challenges. One important aspect is verifying the validity and scientific accuracy of the information produced by AI technologies. In addition, although AI can generate impressive content, entire worlds, and experiences, these experiences also need to be coherent, meaningful, and engaging. Furthermore, relying increasingly on AI to generate heritage experiences (3D worlds, narratives, etc.) might reduce human creativity and the opportunities to express artistic and creative talent. Thus, the use of AI in the field of cultural heritage has a strong ethical dimension [62] concerning the dynamic interaction of AI with humans and the ways in which human activity is influenced, altered, or even replaced.

The present work primarily aimed to engage future professionals with generative AI models and allow them to study their effectiveness in handling cultural data and producing cultural narratives. The focus was not on specific models as such, but on showing students how AI-generated content should be treated. In a world where content can be produced very easily, it is essential to train students to verify content, question its validity and usefulness, and move towards hybrid settings where humans and AI collaborate beyond the limitations of either [39].

The present work also has limitations, primarily because its focus was educational. For this reason, only four AI models were used to check their ability to handle cultural content and create cultural narratives. The approach was therefore not systematic, although it revealed differences in the models’ abilities. In addition, the AI outputs were evaluated solely by students and not by cultural experts. In future work, we intend to compare AI models and their ability to handle cultural content more systematically, including more models and an evaluation of the results based on experts’ input. This work should therefore be understood from the perspective of training the young generation of future GLAM (Galleries, Libraries, Archives, and Museums) professionals, allowing them to see both the limitations and the potential of AI technology. Future work will also involve a chain-of-thought approach (e.g., using thinking models) and multi-step reasoning, in which the AI is first prompted to generate detailed descriptions and then asked to find links between heritage sites. The direct method used in the current work, chosen primarily for training purposes, may not fully exploit the reasoning capabilities of current language models.
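The multi-step approach planned for future work, in which the model first describes each site and then looks for links, can be sketched as a two-stage prompting pipeline. This is a minimal Python sketch under stated assumptions: `ask` is a hypothetical function wrapping whichever language model is used, and the prompt wording is illustrative only, not the prompts used in this study.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model interface: any function that maps a prompt to a text reply.
ModelFn = Callable[[str], str]


def describe_prompt(site: str) -> str:
    # Stage 1 prompt: ask for a detailed description of a single site.
    return f"Describe the history, function and cultural significance of {site} in detail."


def link_prompt(descriptions: Dict[str, str]) -> str:
    # Stage 2 prompt: give the model its own stage-1 descriptions and ask for links.
    body = "\n\n".join(f"{site}:\n{text}" for site, text in descriptions.items())
    return (
        "Using the descriptions below, identify themes shared by these heritage "
        "sites and explain each link step by step.\n\n" + body
    )


def two_step_links(sites: List[str], ask: ModelFn) -> Tuple[Dict[str, str], str]:
    # Step 1: one description per site, in the order given.
    descriptions = {site: ask(describe_prompt(site)) for site in sites}
    # Step 2: a single follow-up prompt that reasons over all descriptions.
    links = ask(link_prompt(descriptions))
    return descriptions, links
```

Because the model is passed in as a plain function, a stub that returns canned replies can stand in for a real model when checking the pipeline, and the intermediate descriptions remain available for students or experts to verify before the links are trusted.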

Finally, the current work only briefly raised the issue of the validity of AI-generated content. Future research should examine validity issues in more depth and investigate how heritage institutions can design practical workflows for handling AI outputs that may be both creative and flawed. The current work only touched on this issue, as it aimed to train future heritage professionals in the use of AI models and help them recognize their potential and limitations.

Author Contributions

Conceptualization, A.A. and A.C.; methodology, A.A. and A.C.; software, A.T., E.R. and G.L.; validation, A.A. and A.C.; formal analysis, A.A. and A.C.; investigation, P.C., G.G., I.L. and C.S.; resources, P.C., G.G., I.L. and C.S.; data curation, P.C., G.G., I.L. and C.S.; writing—original draft preparation, A.A., P.D., A.T. and E.R.; writing—review and editing, A.A.; visualization, A.A.; supervision, A.A. and A.C.; project administration, A.A. and A.C.; funding acquisition, A.A. and A.C. All authors have read and agreed to the published version of the manuscript.

Funding

The mobility of students and staff participating in this study was funded by the Erasmus+ Blended Intensive Programme.

Data Availability Statement

Data is contained within the article.

Acknowledgments

We would like to thank the Portalegre Polytechnic University in Portugal for the excellent organization of the Erasmus+ Blended Intensive Programme (BIP), as well as the Erasmus office at the University of West Attica (Greece) for its efficiency in supporting student and staff mobility. During the preparation of this manuscript/study, the authors used multiple AI tools for the purposes described below. The DeepAI image generator was used to create Figure 3. In addition, given the nature of this work, several AI tools were tested for content creation, including Perplexity, ChatGPT, Claude, Gemini, and Le Chat Mistral Large. Finally, Gemini was also used to proofread parts of the text. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- UNESCO. Ethics of Artificial Intelligence. Available online: https://www.unesco.org/en/artificial-intelligence/recommendation-ethics?hub=32618 (accessed on 31 July 2025).

- European Parliament. Artificial Intelligence in the Context of Cultural Heritage and Museums: Complex Challenges and New Opportunities. Available online: https://www.europarl.europa.eu/RegData/etudes/BRIE/2023/747120/EPRS_BRI(2023)747120_EN.pdf (accessed on 31 July 2025).

- Teaching with Europeana. AI in the Context of Cultural Heritage. Available online: https://teachwitheuropeana.eun.org/updates/ai-in-the-context-of-cultural-heritage/ (accessed on 31 July 2025).

- Europeana Pro. Launching the AI4Culture Platform: Empowering Cultural Heritage with AI Tools and Resources. Available online: https://pro.europeana.eu/post/launching-the-ai4culture-platform-empowering-cultural-heritage-with-ai-tools-and-resources (accessed on 31 July 2025).

- Portalegre Polytechnic University. Blended Intensive Program on Digital Transformation: Artificial Intelligence as a Key Enabler of Digital Transformation. Available online: https://bip.ipportalegre.pt (accessed on 31 July 2025).

- Ba’ai, N.; Aris, A. AI and Cultural Heritage: Preserving and Promoting Global Cultures Through Technology. Nanotechnol. Percept. 2024, 20, 170–176.

- Foroughi, M.; Wang, T.; Roders, P. In Praise of Diversity in Participatory Heritage Planning Empowered by Artificial Intelligence: Windcatchers in Yazd. Urban Plan. 2025, 10, 8724.

- Hardman, L.; Aroyo, L.; Ossenbruggen, J.; Hyvönen, E. Using AI to Access and Experience Cultural Heritage. IEEE Intell. Syst. 2009, 24, 23–25.

- Greco, E.; Gaetano, A.; De Spirt, A.; Semeraro, S.; Piscitelli, P.; Miani, A.; Mecca, S.; Karaj, S.; Trombin, R.; Hodgton, R.; et al. AI-Enhanced Tools and Strategies for Airborne Disease Prevention in Cultural Heritage Sites. Epidemiologia 2024, 5, 267–274.

- Wang, W.; Huang, Q.; You, S.; Yang, C.; Neumann, U. Shape inpainting using 3d generative adversarial network and recurrent convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2298–2306.

- Gadelha, M.; Maji, S.; Wang, R. 3d shape induction from 2d views of multiple objects. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 402–411.

- Nash, C.; Williams, C.K.I. The shape variational autoencoder: A deep generative model of part-segmented 3d objects. Comput. Graph. Forum 2017, 36, 1–12.

- Liu, S.; Giles, L.; Ororbia, A. Learning a hierarchical latent-variable model of 3d shapes. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 542–551.

- Beetz, M.; Banerjee, A.; Grau, V. Generating subpopulation-specific biventricular anatomy models using conditional point cloud variational autoencoders. In International Workshop on Statistical Atlases and Computational Models of the Heart; Springer International Publishing: Cham, Switzerland, 2021; pp. 75–83.

- Biljecki, F.; Ledoux, H.; Stoter, J. Generating 3D city models without elevation data. Comput. Environ. Urban Syst. 2017, 64, 1–18.

- Saha, S.; Rios, T.; Minku, L.L.; Stein, B.V.; Wollstadt, P.; Yao, X.; Back, T.; Sendhoff, B.; Menzel, S. Exploiting generative models for performance predictions of 3D car designs. In Proceedings of the 2021 IEEE Symposium Series on Computational Intelligence (SSCI), Orlando, FL, USA, 5–7 December 2021; pp. 1–9.

- Zhang, J.; Zhan, F.; Wu, R.; Yu, Y.; Zhang, W.; Song, B.; Lu, S. Vmrf: View matching neural radiance fields. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 6579–6587.

- Gu, K.; Maugey, T.; Knorr, S.; Guillemot, C. Omni-nerf: Neural radiance field from 360 image captures. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

- Kniaz, V.V.; Knyaz, V.A.; Bordodymov, A.; Moshkantsev, P.; Novikov, D.; Barylnik, S. Double nerf: Representing dynamic scenes as neural radiance fields. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Moscow, Russia, 24–26 April 2023; Volume 48, pp. 115–120.

- Arshad, M.A.; Jubery, T.; Afful, J.; Jignasu, A.; Balu, A.; Ganapathysubramanian, B.; Krishnamurthy, A. Evaluating Neural Radiance Fields for 3D Plant Geometry Reconstruction in Field Conditions. Plant Phenomics 2024, 6, 0235.

- Lin, C.H.; Ma, W.C.; Torralba, A.; Lucey, S. Barf: Bundle-adjusting neural radiance fields. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 5741–5751.

- Lorenzoni, G.; Iacono, S.; Martini, L.; Zolezzi, D.; Vercelli, G.V. Virtual Reality and Conversational Agents for Cultural Heritage Engagement. In Proceedings of the Conference Proceedings, the Future of Education 2025, Online, 26–27 June 2025.

- Münster, S.; Maiwald, F.; Di Lenardo, I.; Henriksson, J.; Isaac, A.; Graf, M.; Beck, C.; Oomen, J. Artificial Intelligence for Digital Heritage Innovation: Setting up a R&D Agenda for Europe. Heritage 2024, 7, 794–816.

- Harisanty, D.; Obille, K.; Anna, N.; Purwanti, E.; Retrialisca, F. Cultural heritage preservation in the digital age, harnessing artificial intelligence for the future: A bibliometric analysis. Digit. Libr. Perspect. 2024, 40, 609–630.

- Vassilakis, C.; Poulopoulos, V.; Antoniou, A.; Wallace, M.; Lepouras, G.; Nores, M.L. exhiSTORY: Smart exhibits that tell their own stories. Future Gener. Comput. Syst. 2018, 81, 542–556.

- Colucci Cante, L.; Di Martino, B.; Graziano, M.; Branco, D.; Pezzullo, G.J. Automated storytelling technologies for cultural heritage. In International Conference on Emerging Internet, Data & Web Technologies; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 597–606.

- Beguš, N. Experimental Narratives: A Comparison of Human Crowdsourced Storytelling and AI Storytelling. Humanit. Soc. Sci. Commun. 2023, 11, 1392.

- Almeida, P.; Teixeira, A.; Velhinho, A.; Raposo, R.; Silva, T.; Pedro, L. Remixing and repurposing cultural heritage archives through a collaborative and AI-generated storytelling digital platform. In Proceedings of the 2024 ACM International Conference on Interactive Media Experiences Workshops, Stockholm, Sweden, 12–14 June 2024; pp. 100–104.

- Casillo, M.; Colace, F.; Gupta, B.B.; Lorusso, A.; Santaniello, D.; Valentino, C. The role of AI in improving interaction with cultural heritage: An overview. In Handbook of Research on AI and ML for Intelligent Machines and Systems; IGI Global: Hershey, PA, USA, 2024; pp. 107–136.

- Chen, Y.; Zhang, L.; Dong, Q. Using natural language processing to evaluate local conservation text: A study of 624 documents from 303 sites of the World Heritage Cities Programme. J. Cult. Herit. 2024, 70, 259–270.

- Harth, A. The study of pigments in cultural heritage: A review using machine learning. Heritage 2024, 7, 3664–3695.

- Zhang, J.; Xiang, R.; Kuang, Z.; Wang, B.; Li, Y. ArchGPT: Harnessing large language models for supporting renovation and conservation of traditional architectural heritage. Herit. Sci. 2024, 12, 220.

- Machidon, O.M.; Tavčar, A.; Gams, M.; Duguleană, M. CulturalERICA: A conversational agent improving the exploration of European cultural heritage. J. Cult. Herit. 2020, 41, 152–165.

- Tsepapadakis, M.; Gavalas, D. Are you talking to me? An Audio Augmented Reality conversational guide for cultural heritage. Pervasive Mob. Comput. 2023, 92, 101797.

- Hettmann, W.; Wölfel, M.; Butz, M.; Torner, K.; Finken, J. Engaging museum visitors with AI-generated narration and gameplay. In International Conference on ArtsIT, Interactivity and Game Creation; Springer Nature Switzerland: Cham, Switzerland, 2022; pp. 201–214.

- Wang, H.; Matviienko, A. Experiencing Art Museum with a Generative Artificial Intelligence Chatbot. In Proceedings of the 2025 ACM International Conference on Interactive Media Experiences, Niterói, Brazil, 3–6 June 2025; pp. 430–436.

- Trichopoulos, G.; Konstantakis, M.; Caridakis, G.; Katifori, A.; Koukouli, M. Crafting a museum guide using ChatGPT4. Big Data Cogn. Comput. 2023, 7, 148.

- Mensa, E.; Damiano, R. From plot to prompts: A case study on interactive narratives in museums. In Proceedings of the 3rd Workshop on Artificial Intelligence for Cultural Heritage (AI4CH 2024), Bolzano, Italy, 6–28 November 2024; Volume 3865, pp. 102–108.

- He, Z.; Su, J.; Chen, L.; Wang, T.; Lc, R. ‘I Recall the Past’: Exploring How People Collaborate with Generative AI to Create Cultural Heritage Narratives. Proc. ACM Hum. Comput. Interact. 2025, 9, 1–30.

- Hannaford, E.D.; Schlegel, V.; Lewis, R.; Ramsden, S.; Bunn, J.; Moore, J.; Nenadic, G. Our Heritage, Our Stories: Developing AI tools to link and support community-generated digital cultural heritage. J. Doc. 2024, 80, 1133–1147.

- Xu, N.; Liu, Y.; Chen, Y.; Jiang, Z.; Ren, Y.; Zhang, Z.; Yu, L. ArtifactShow: Incorporating Generative AI into Narrative Visualization for Interactive Cultural Experience. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 26 April 2025; pp. 1–8.

- Ferracani, A.; Bertini, M.; Pala, P.; Nannotti, G.; Principi, F.; Becchi, G. Personalized generative storytelling with AI-visual illustrations for the promotion of knowledge in cultural heritage tourism. In Proceedings of the 6th Workshop on the Analysis, Understanding and Promotion of Heritage Contents, Melbourne, Australia, 28 October–1 November 2024; pp. 28–32.

- Gillespie, T. Generative AI and the politics of visibility. Big Data Soc. 2024, 11, 20539517241252131.

- Foka, A.; Griffin, G. AI, Cultural Heritage, and Bias: Some Key Queries That Arise from the Use of GenAI. Heritage 2024, 7, 6125–6136.

- Pansoni, S.; Tiribelli, S.; Paolanti, M.; Frontoni, E.; Giovanola, B. Design of an Ethical Framework for Artificial Intelligence in Cultural Heritage. In Proceedings of the 2023 IEEE International Symposium on Ethics in Engineering, Science, and Technology (ETHICS), West Lafayette, IN, USA, 18–20 May 2023; pp. 1–5.

- Constantinides, N.; Constantinides, A.; Koukopoulos, D.; Fidas, C.; Belk, M. CulturAI: Exploring Mixed Reality Art Exhibitions with Large Language Models for Personalized Immersive Experiences. In Proceedings of the Adjunct Proceedings of the 32nd ACM Conference on User Modeling, Adaptation and Personalization, UMAP’24, Cagliari, Italy, 1–4 July 2024; pp. 102–105.

- Sylaiou, S.; Dafiotis, P.; Koukopoulos, D.; Koukoulis, K.; Vital, R.; Antoniou, A.; Fidas, C. From physical to virtual art exhibitions and beyond: Survey and some issues for consideration for the metaverse. J. Cult. Herit. 2024, 66, 86–98.

- Dafiotis, P.; Sylaiou, S.; Stylianidis, E.; Koukopoulos, D.; Fidas, C. Evaluating Uses of XR in Fostering Art Students’ Learning. Multimodal Technol. Interact. 2025, 9, 36.

- Medium. Comparing Top AI Models: Claude, ChatGPT, DeepSeek, Grok. Available online: https://mohessaid.medium.com/from-chatgpt-to-grok-21d15975ca8c (accessed on 2 August 2025).

- SGU. A Comparison of Leading AI Models: DeepSeek AI, ChatGPT, Gemini, and Perplexity AI. Available online: https://sgu.ac.id/a-comparison-of-leading-ai-models-deepseek-ai-chatgpt-gemini-and-perplexity-ai/ (accessed on 2 August 2025).

- Ahrefs. Website Traffic Checker. Available online: https://ahrefs.com/traffic-checker (accessed on 31 August 2025).

- Bandi, P.K.R. The Role of Metadata in Making Data AI-Ready: Enhancing Data Discoverability and Usability. J. Comput. Sci. Technol. Stud. 2025, 7, 954–963.

- Flesch, R. A new readability yardstick. J. Appl. Psychol. 1948, 32, 221–233.

- Rieh, S.Y.; Danielson, D.R. Credibility: A review of the literature and some future research directions. In Proceedings of the 2007 Ameign of Everyday Things; Basic Books: New York, NY, USA, 2013.

- Metzger, M.J. Making sense of credibility on the Web: Models for evaluating online information and recommendations for future research. J. Am. Soc. Inf. Sci. Technol. 2007, 58, 2078–2091.

- Cooper, A. The Inmates Are Running the Asylum: Why High-Tech Products Drive Us Crazy and How to Restore the Sanity; Pearson Higher Education: London, UK, 2004.

- Norman, D.A. The Design of Everyday Things; Basic Books: New York, NY, USA, 2013.

- Lucas-Moreira, O.D.; Núñez-Díaz, J. Narratives in the Age of AI: Reflections on Literature and Communication. YUYAY Estrateg. Metodol. Didácticas Educ. 2025, 4, 77–93.

- Pavlidis, G. Agentic AI for Cultural Heritage: Embedding Risk Memory in Semantic Digital Twins. Computers 2025, 14, 266.

- Theodoropoulos, A.; Stavropoulou, D.; Papadopoulos, P.; Platis, N.; Lepouras, G. Developing an interactive VR CAVE for immersive shared gaming experiences. Virtual Worlds 2023, 2, 162–181.

- Chodvadiya, C.; Solanki, V.; Singh, K.G. Intelligent virtual worlds: A survey of the role of AI in the metaverse. In Proceedings of the 2024 3rd International Conference for Innovation in Technology (INOCON), Bangalore, India, 1–3 March 2024; pp. 1–6.

- Thomas, S. AI and actors: Ethical challenges, cultural narratives and industry pathways in synthetic media performance. Emerg. Media 2024, 2, 523–546.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).