Abstract

This paper presents the development and evaluation of a method for rendering realistic haptic textures in virtual environments, with the goal of enhancing immersion and surface recognizability. By using Blender for the creation of geometric models, Unity for real-time interaction, and integration with the Touch haptic device from 3D Systems, virtual surfaces were developed with parameterizable characteristics of friction, stiffness, and relief, simulating different physical textures. The methodology was assessed through two experimental phases involving a total of 47 participants, examining both tactile recognition accuracy and the perceived realism of the textures. Results demonstrated improved overall performance and reduced variability between textures, suggesting that the approach can provide convincing haptic experiences. The proposed method has potential applications across a wide range of domains, including education, medical simulation, cartography, e-commerce, entertainment, and artistic creation. The main contribution of this research lies in the introduction of a simple yet effective methodology for haptic texture rendering, which is based on the flexible adjustment of key parameters and iterative optimization through human feedback.

1. Introduction

Human–Computer Interaction (HCI) is an interdisciplinary field that examines how people use and understand computing systems. Its aim is to create technologies that are functional, usable, accessible, and enjoyable. Drawing from computer science, psychology, design, sociology, and other disciplines, HCI develops interfaces that meet human needs [1,2]. It addresses user interface design, usability, accessibility, and user experience, while also engaging with challenges posed by technologies such as artificial intelligence, the Internet of Things, and virtual/augmented reality [1].

Haptic Human–Computer Interaction (HHCI), a subfield of HCI, uses the sense of touch for more natural communication with computing systems [3]. Real-time haptic interactions can be active or passive [4]. Through force, vibration, and pressure feedback, users can “feel” virtual objects, enriching experiences beyond audiovisual channels. HHCI applications span the fields of medical training, entertainment, virtual/augmented reality, and e-commerce, while also improving accessibility for people with disabilities [5,6]. Research emphasizes the realistic reproduction of textures and forces, cost reduction, and personalized experiences.

Recent haptic technologies employ touch as a main interaction channel. For visually impaired users, tools such as dynamic Braille displays, smart wearables with haptic signals, and vibration-based mobile apps enhance daily life and digital access. More advanced feedback systems, such as gloves that simulate texture, pressure, or temperature, and force-feedback devices, enable realistic interaction with virtual objects [7,8,9,10]. Overall, haptic interaction strengthens accessibility, offers immersive experiences, and opens new opportunities across diverse fields.

Touch is a fundamental human sense, distinct from vision and hearing as it requires active engagement to perceive texture, temperature, or shape. Research by Roberta L. Klatzky et al. highlights how the finger processes haptic patterns through friction [11]. The skin, acting as a complex sensory organ, transmits information via specialized receptors: mechanoreceptors, thermoreceptors, and nociceptors. Merkel disks detect fine details, Meissner’s corpuscles light touch, Pacinian corpuscles high-frequency vibrations, and Ruffini endings stretch and motion. This variety enables precise, multidimensional perception. Sensitivity is higher in the fingertips due to greater receptor density, while receptors may be rapidly or slowly adapting, contributing to the perception of transient vibrations or sustained pressure [7,12,13]. Unlike sight and hearing, limited to specific organs, touch spans the whole body, supporting direct, continuous interaction with the environment essential for safety, movement, and daily adaptation.

Over the past decade, virtual reality (VR) has advanced mainly in visual immersion, yet the absence of touch limits realism, as perception is inherently multisensory. Multimodal VR, combining visual and haptic stimuli, is expected to enhance applications in e-commerce, education, and entertainment by offering more authentic experiences [14,15]. Accurate reproduction of texture and vibrations is central to haptic perception, with digital materials contributing to product design and sales. Research has emphasized the haptic rendering of textiles [16]. Physically based approaches offer accuracy but require high computational resources, whereas data-driven methods are more flexible but less scalable. Despite advances in techniques such as vibrotactile feedback, electrostatic methods, and ultrasonic systems, realistic rendering of complex textures remains challenging. The integration of artificial intelligence and deep learning algorithms opens new opportunities, enabling more personalized and adaptive experiences.

This work presents the development and evaluation of a haptic simulation environment created in the Unity3D platform (version 2021.1.18f1), which includes six different virtual textures (brick, metal, wood, sand, ice, and concrete) enhanced with haptic properties for increased realism. Using the Touch haptic device (3D Systems, Rock Hill, SC, USA), participants interacted with the surfaces through adjustable properties (friction, hardness, and relief) to assess perceived realism. Two experimental phases were conducted with a total of 47 participants; in the first phase, the textures were evaluated both visually and haptically, with the ability to adjust parameters, while in the second phase, textures were recognized exclusively through touch in a randomized order. The results showed an overall improvement in recognition accuracy between the two experiments, with significant gains for metal and wood textures. Statistical analysis revealed a reduction in standard deviation, indicating more consistent performance across textures, while confusion matrices highlighted specific textures (concrete, brick) that require further optimization. These findings contribute to the advancement of haptic simulation methods for realistic virtual environments. Despite the encouraging results, the present study has limitations stemming from the use of a limited number of haptic patterns and a relatively small participant sample. Future research is expected to expand the range of haptic stimuli and apply experimental procedures to larger user populations, aiming to enhance the generalizability of the results and provide a more rigorous assessment of the system’s effectiveness.

Despite the growing interest in haptic interaction, many existing approaches face limitations. Several studies focus on the design and implementation of complex geometric patterns for each texture, which increases development time and resource requirements. Others develop sophisticated machine learning models, which demand specialized expertise, significant training time, and computational resources. The present study introduces a methodology based on the direct parameterization of three fundamental properties (friction, stiffness, relief), providing control without the need for training neural networks or generating complex geometric models for each texture. This approach is implemented through affordable hardware (Touch device) and software (Unity, Blender), making it more flexible and less costly than solutions relying on specialized wearable systems. An additional distinction lies in the systematic process of optimizing texture realism through two successive experimental phases. The use of human feedback to refine parameters, combined with the statistical analysis of recognition rates, temporal variations, and confusions, provides both quantitative and qualitative evidence for the effectiveness of the method. Such an approach, which aims to enhance recognizability through touch alone, serves as a complement to machine-learning-based methodologies.

2. Related Works

The study by Hodaya Dahan et al. [17] aimed to develop and evaluate a haptic virtual reality system for distinguishing hardness and texture, in comparison with established clinical tests (Shape/Texture Identification Test and Moberg Pick-Up Test). A total of 44 healthy adults participated in the procedure, interacting via the 3D Systems Touch haptic device with pairs of virtual surfaces differing in friction or hardness, and each time, selecting the smoother or harder surface. The difficulty was dynamically adjusted using a “staircase” method. The results demonstrated very high agreement with traditional measurements, proving that the proposed VR-based test is reliable and can be used as an alternative or complementary tool in clinical settings.

Existing VR applications rarely provide the sense of touch experienced in the real world, particularly the perception of texture when moving across a surface. Current methods typically require a separate model for each texture, which limits scalability. Negin Heravi et al. [18,19] present the development and evaluation of a machine learning model for rendering realistic haptic textures in real-time within virtual reality (VR) environments. The model is a neural network that takes as input an image from the GelSight visuo-haptic sensor along with the user’s motion and pressure data. Its output is a spectrum of accelerations (DFT magnitude), which is converted into vibrotactile feedback via a high-frequency vibrotactile transducer connected to the 3D Systems Touch device. The method was tested in an experimental study with 25 participants across four phases: similarity comparison, forced-choice trials, evaluation (e.g., rough–smooth), and generalization to novel, unseen textures. Results demonstrated that the model is real-time implementable, easily scalable to multiple textures, and capable of producing realistic texture vibrations even for surfaces not included in the training set, thereby enhancing the haptic experience in VR.

Alongside the aforementioned studies, recent advanced methods have explored the use of deep learning. In particular, the work of Joolee and Jeon [20] presented a comprehensive data-driven framework (Deep Spatio-Temporal Networks) for the realistic real-time synthesis of acceleration signals. Although this approach achieves remarkable fidelity for both isotropic and anisotropic textures, its reliance on large datasets and the complex process of model training limits its immediate applicability in rapid prototyping scenarios. From a different perspective, the study by Lee et al. [21] focused on the design of a lightweight and wearable haptic device for rendering stiffness in Augmented Reality (AR) environments. Their device, based on a passive tendon-locking mechanism, highlights stiffness as a critical parameter for realistic interaction, one that our work also incorporates and systematically investigates. However, while their solution is optimized for AR interaction using a wearable device, our method aims at the flexibility of direct parameterization of multiple properties for Virtual Reality (VR) applications using a stylus device. Furthermore, the work of Rouhafzay and Cretu [22] developed a visuo-haptic framework for object recognition, guided by a visual attention model. The integration of multiple information sources (cutaneous and kinesthetic stimuli) aligns with the spirit of our multiparametric approach. Nevertheless, the present study differentiates its methodology by focusing exclusively on haptic-based texture recognition, systematically isolating the tactile channel in order to clearly assess its contribution without visual guidance or the complexity of video integration, thus complementing this broader spectrum of approaches.

The research by Tzimos N. et al. [23] focuses on the development and evaluation of geometric haptic patterns for three-dimensional virtual objects, aiming to enhance the tactile interaction experience. Nine different geometric patterns were created and tested in a virtual environment using the 3D Systems Touch (Phantom Omni) haptic device and H3DAPI software. In the experimental procedure, both blind and sighted users were asked to identify the textures without visual cues. Participants were able to distinguish different textures but faced difficulties with precise recognition.

In a related study, Papadopoulos K. et al. [24] examined similar geometric haptic patterns, focusing on roughness as a tactile variable. The study investigated users’ ability to recognize friction and hardness parameters via a haptic device (Geomagic Touch) in both visually impaired and sighted participants. It was found that accurate haptic discrimination requires noticeably distinct surfaces. Additionally, visually impaired users needed more time and effort to perceive these properties, highlighting the need for specialized design of haptic interface content with an emphasis on accessibility.

The study by Ruiz et al. [25] proposes a method for training models that recognize objects using both vision and touch. They employ synthetic data from 3D simulations, as real haptic samples are scarce. This approach is cost-effective and easily scalable. The results demonstrate that using both virtual and real samples significantly improves recognition accuracy, highlighting the value of multimodal methods for robotic systems. In contrast, the study by Cao et al. [26] presents an approach to haptic rendering that leverages visual information, allowing texture representation without direct physical contact. The work emphasizes the potential of integrating visual and haptic signals to produce realistic tactile sensations.

The study by Tena-Sánchez et al. [27] presents a virtual training environment based on the digital reconstruction of real vegetation, aiming to simulate the haptic experience. This approach combines three-dimensional modeling with haptic rendering methods to realistically reproduce the textures and tactile characteristics of plant elements. The work highlights the potential of haptic simulations in training scenarios, strengthening the connection between physical and virtual environments. The study by Bazelle et al. [28] investigates a cost-effective and lightweight approach to texture simulation in virtual reality through the use of vibrotactile haptic gloves. The system employs simple vibrators on the fingers to reproduce the roughness of different materials, based on heightmap data and hand tracking. The experimental results indicate that users can distinguish between different textures with reasonable accuracy, demonstrating the potential to deliver a convincing haptic experience at low cost.

3. Materials and Methods

3.1. Short Description

The application was developed in Unity3D (version 2021.1.18f1) using the Touch haptic device by 3D Systems to simulate realistic tactile sensations. Six materials (brick, metal, wood, sand, ice, and concrete) were modeled and parameterized with stiffness, friction, and texture values to reproduce their tactile characteristics. Experimental procedures involved user interaction with the virtual textures, first with visual feedback and then in blind recognition tasks. The collected data allowed optimization of haptic parameters and evaluation of users’ ability to identify materials solely through touch.

3.2. Software and Device

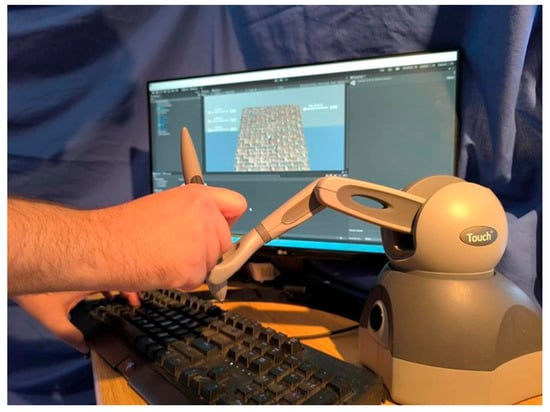

The Touch device (Figure 1) is among the most widely recognized haptic devices and is extensively employed in research and education. It was designed as a cost-effective force-feedback tool, with the objective of realistically reproducing the sense of touch in virtual environments. Its initial design was based on three parameters: the type of haptic sensation to be delivered, the number of actuators required, and the available workspace [29,30]. The device features 3 active degrees of freedom and 3 passive degrees of freedom with orientation sensors, enabling natural and comfortable use through a lightweight stylus [29,30]. It offers high precision, with a spatial resolution of 0.055 mm, a maximum force output of 3.3 N, and a weight of approximately 3 lb. Its operation relies on three-dimensional kinesthetic feedback, with position and velocity measured via optical encoders [31]. The device features a communication interface that enables connection and data exchange with the computer, supporting real-time programming through the ToolKit 3D Touch software in a Visual C++ environment [31]. Experimental studies evaluating its use in virtual reality (VR) environments or in robotic systems with haptic guidance highlight its applicability in domains such as education, teleoperation, and medical interventional procedures [31]. A significant research contribution of the device concerns the development of haptic rendering techniques, which enable the simulation of properties such as friction, stiffness, and texture, thereby enhancing the realism and validity of haptic simulations. The workspace defined by the manufacturer, within which the device operates correctly, providing accurate force feedback, is a rectangular parallelepiped with dimensions 160 mm (width) × 120 mm (height) × 70 mm (depth) [32].

Figure 1.

“Touch Haptic Device”, developed by 3D Systems.
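To make the quoted limits concrete, the following Python sketch shows how an application layer might guard against them: it checks whether a stylus-tip position lies inside the nominal 160 × 120 × 70 mm workspace and scales any commanded force down to the 3.3 N maximum. The function names are hypothetical, and centring the box on the device origin is an assumption made only for illustration; the real device coordinate frame and any built-in force limiting are handled by the vendor driver and are not modeled here.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Nominal figures quoted in the text. Centring the box on the origin is an
# ASSUMPTION for illustration; the real device frame may be offset.
WORKSPACE_MM = (160.0, 120.0, 70.0)  # width (x), height (y), depth (z)
MAX_FORCE_N = 3.3


def in_workspace(p_mm: Vec3) -> bool:
    """True if a stylus-tip position (in mm) lies inside the nominal box."""
    return all(abs(c) <= size / 2.0 for c, size in zip(p_mm, WORKSPACE_MM))


def clamp_force(f: Vec3, limit: float = MAX_FORCE_N) -> Vec3:
    """Scale a force vector down so that its magnitude never exceeds `limit`."""
    mag = math.sqrt(sum(c * c for c in f))
    if mag <= limit:
        return f
    s = limit / mag  # uniform scaling keeps the force direction unchanged
    return (f[0] * s, f[1] * s, f[2] * s)
```

Scaling the whole vector, rather than clipping each axis separately, preserves the direction of the rendered force, which matters for the perceived orientation of a surface.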

For the development of the virtual environment, the Unity platform version 2021.1.18f1 was selected over other available alternatives. Unity 3D is one of the most widely used development engines, employed not only in the video game industry but also in broader applications of interactive environments. Its widespread adoption is attributed to the flexibility and scalability it offers, making it suitable both for large development teams with complex requirements and for smaller teams or individual creators. In addition, the platform’s user-friendliness, combined with the extensive available documentation and active support community, further enhances its popularity. Finally, the fact that the core version of the platform is available free of charge constitutes a significant factor in terms of accessibility, facilitating the entry of new users into the field of virtual environment and interactive application development [33].

Haptics Direct Plugin 1.1 is a plugin that enables the direct integration of the Unity platform with the Touch haptic device, without the need to develop external and complex programming interfaces. Its contribution was crucial in the present implementation, as it facilitated the incorporation of the virtual stylus into the application scene. Furthermore, through the available component provided by the plugin, it was possible to configure textures in a simple and efficient manner, enhancing both the realism and the functionality of the virtual environment [34].

Blender (version 2.80), an open-source software tool employed in a wide range of 3D graphics applications, including modeling, texture development, animation, visual effects, and other forms of digital creation, was used to create the textures. Its widespread adoption is due, on the one hand, to the fact that it is freely available, offering high accessibility, and, on the other hand, to the dynamic user community that has developed around it, providing numerous tutorials and online resources. These factors make Blender particularly attractive for educational, research, and professional purposes in the field of 3D design and creation [35].

The application was developed using the C# (version 8.0) programming language within the Visual Studio development environment. The choice of these specific tools is justified by their full compatibility with the Unity engine and its libraries, which facilitates the integration of functionalities and the efficient implementation of the software [36].

3.3. Experimental Design and Conditions

To create realistic textures in the application’s virtual environment, two fundamental mapping techniques were employed: Diffuse Map and Displacement Map, both central tools in 3D modeling. The Diffuse Map defines the visual color and appearance of the surface without affecting its relief, whereas the Displacement Map generates geometric irregularities on the surface through brightness information, thereby providing a sense of depth and relief. The maps were created in Blender, utilizing real-life photographs to achieve realistic appearance and high accuracy in haptic representation. Each texture was paired with a Diffuse Map and a Displacement Map, which were subsequently integrated into Unity for the implementation of the application. All textures originated from the Polyhaven.com platform [37], which provides high-quality materials free of charge under the Creative Commons Zero (CC0) license, allowing unrestricted and lawful use without copyright limitations. The quality and open availability of these textures significantly contributed to the rendering of realistic surfaces in the application.
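The relationship between a Displacement Map’s brightness and the resulting relief can be sketched as follows. This is a minimal Python illustration, assuming 8-bit grayscale input and a linear mapping around a neutral mid-level value, similar in spirit to the Strength and Midlevel settings of Blender’s Displace modifier; the function name is hypothetical, and this is not the exact pipeline used to build the application’s meshes.

```python
from typing import List


def displacement_heights(gray: List[List[int]], strength: float,
                         midlevel: float = 0.5) -> List[List[float]]:
    """Map 8-bit grayscale pixels to signed height offsets.

    Brightness is normalised to [0, 1]. Pixels brighter than `midlevel`
    raise the surface, darker pixels lower it, and `strength` scales the
    result (an ASSUMED linear mapping, for illustration only).
    """
    return [[strength * (px / 255.0 - midlevel) for px in row] for row in gray]
```

For example, a black pixel and a white pixel with `strength=2.0` map to offsets of -1.0 and +1.0, while mid-gray pixels leave the surface unchanged; the Diffuse Map plays no part in this calculation, which is why it affects appearance but not relief.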

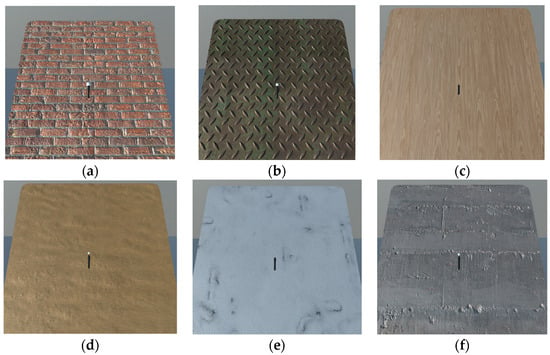

For the purposes of the application, a total of six different textures were selected, each according to specific criteria in order to provide distinct tactile challenges through the Touch device. The chosen textures include brick, metal, wood, sand, ice, and concrete.

- Brick: The brick texture was selected due to its recognizable rectangular pattern and the geometric irregularities on its surface, which create a strong geometric identity. These characteristics make it suitable for applications that require haptic recognition and evaluation through touch (Figure 2a).

- Metal: The metal texture is represented through a metallic floor with a characteristic embossed pattern. The surface combines rigid geometry with a smooth sensation, offering a distinctive haptic experience that merges relief with smoothness (Figure 2b).

- Wood: The wood texture is represented through a desk surface and was selected primarily for its recognizability through touch rather than for pronounced geometric relief, which is limited by the material’s nature. Recognition is mainly based on hardness and friction parameters, complemented by the subtle relief that conveys the sensation of wood (Figure 2c).

- Sand: The sand texture represented a special case within the application, as it was the only one that incorporated the viscosity parameter. This texture is non-solid, allowing the digital stylus to sink into the surface, thereby providing a distinct and unique haptic sensation compared to the other textures (Figure 2d).

- Ice: The ice texture was selected for its completely smooth surface, where relief does not play a significant role. It serves as a counterexample to wood, enabling the evaluation of users’ ability to distinguish textures based on hardness and friction parameters, independently of surface relief (Figure 2e).

- Concrete: The concrete texture is characterized by pronounced geometric relief, though without a specific or repetitive pattern. The surface exhibits random variations, at times recessed and at other times raised, making it difficult to identify the texture based on a consistent geometric pattern, as is the case with brick or metal (Figure 2f).

Figure 2.

Selection of six distinct material textures from the natural environment for haptic evaluation: (a) brick; (b) metal; (c) wood; (d) sand; (e) ice; (f) concrete.

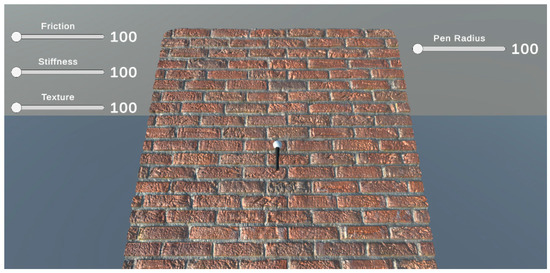

In the development of the application, the design framework for the UI/UX focused on functionality and simplicity, with the aim of facilitating usability and ensuring a seamless flow in the user experience. The user interface incorporates sliders, which are employed for texture parameterization; the sliders on the left correspond to the settings of each texture (e.g., brick), while the slider on the right controls the size of the digital pen. In terms of User Experience, the application was designed so that the user focuses exclusively on interacting with the haptic Touch device and visually observing the screen, without the need to manipulate the UI or other functions. All operations, such as texture changes or application initialization, are managed by the administrator, thereby ensuring a smooth and uninterrupted interaction experience.

The initial user interface, as designed, is presented in Figure 3. At the center, a three-dimensional haptic model of each selected material is displayed; on the left side, three sliders define the values of the haptic properties of the model’s texture, while on the right side, an additional slider controls the size of the haptic stylus. Following experimental trials conducted in the laboratory, the authors determined specific values for the sliders. The initial parameter values were derived from a structured calibration procedure, during which the researchers, having real material samples at hand (such as wood, metal, and brick), iteratively adjusted the friction, stiffness, and texture settings within the Unity environment. The goal of this process was to determine, for each material, the values that most closely approximate the sensation of the corresponding physical material. The final values were selected based on the collective agreement of the researchers, ensuring that the set of six textures achieved the best possible balance between realism and the ability to differentiate one texture from another. These values were adopted as the initial parameters in the experimental procedure (Table 1).

Figure 3.

User interface.

Table 1.

Initial values of tactile properties of textures.

- Friction: Defines the surface friction; low values produce a smoother sensation, while high values increase roughness, without altering the geometry.

- Stiffness: Determines the hardness of the object, influencing the resistance of the texture to applied pressure and the perceived intensity of its relief.

- Texture: Adjusts the prominence of the geometry; low values generate smooth surfaces, whereas high values produce a pronounced relief.

- Viscosity: Applied exclusively to the sand texture, it defines the material’s density and affects the resistance encountered when the stylus penetrates the surface.
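To illustrate how the four parameters above can interact in a force model, the following Python sketch uses a generic penalty-based formulation: stiffness acts as a spring constant on the penetration depth, the texture value scales a height map to set the relief, friction produces a Coulomb-style force opposing tangential motion, and viscosity adds velocity-proportional damping (as for sand). This is an assumption-laden illustration, not the internal implementation of the Haptics Direct plugin or the device toolkit; the class and function names, the units, and the flat-surface normal approximation are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HapticMaterial:
    stiffness: float        # spring constant of the penalty model (assumed N/m)
    friction: float         # dimensionless Coulomb coefficient
    texture: float          # scale applied to the height map (relief prominence)
    viscosity: float = 0.0  # damping coefficient; non-zero only for sand-like materials


def contact_force(mat: HapticMaterial, pos: Vec3, vel: Vec3,
                  height_map: Callable[[float, float], float]) -> Vec3:
    """Force on the stylus tip for a surface lying near the plane z = 0."""
    x, y, z = pos
    surface_z = mat.texture * height_map(x, y)  # relief scaled by the texture value
    depth = surface_z - z
    if depth <= 0.0:
        return (0.0, 0.0, 0.0)  # tip is above the surface: no contact
    fn = mat.stiffness * depth  # normal force, approximated as acting along +z
    vx, vy, vz = vel
    speed_t = math.hypot(vx, vy)
    if speed_t > 1e-9:
        ff = mat.friction * fn  # Coulomb friction magnitude
        fx, fy = -ff * vx / speed_t, -ff * vy / speed_t
    else:
        fx = fy = 0.0
    # Viscous damping opposes all motion while the tip is below the surface.
    fx -= mat.viscosity * vx
    fy -= mat.viscosity * vy
    fz = fn - mat.viscosity * vz
    return (fx, fy, fz)
```

Because the normal is fixed to +z, relief is felt here only through changes in penetration depth as the tip slides across the height map; a fuller model would use the local surface gradient. A sand-like material would pair low stiffness with non-zero viscosity so the stylus sinks in while meeting resistance.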

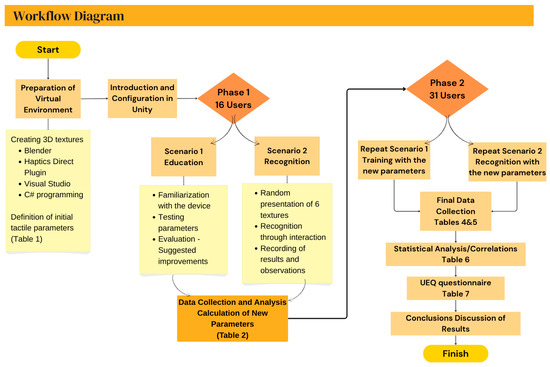

The methodology followed is based on a systematic, iterative process for optimizing haptic parameters, combining quantitative measurements (recognition rates, interaction times) with qualitative human feedback. The experimental part of the study was structured into two distinct phases, each comprising two interaction scenarios. The first phase was conducted with sixteen (16) participants, all students of the Department of Informatics and Electronic Engineering, International Hellenic University, who, as part of the tactile applications workshop, interacted via the haptic Touch device with six selected materials from the physical world (Figure 2). The haptic properties of these materials had been defined according to the initial values established by the authors (Table 1).

In the first scenario of the experimental phase, participants were initially asked to familiarize themselves with the Touch device using the “brick” texture. This process involved testing the pressure and movements of the stylus in both horizontal and vertical directions, combined with the corresponding visual representation on the screen, without any initial adjustments to the user interface settings. Subsequently, tests were conducted with varying slider values to allow participants to understand their effect on the haptic experience. The final stage of the scenario involved participants evaluating the degree of correspondence between the initial settings of each texture and the respective real-world surface, as well as providing suggestions for potential modifications that could enhance the fidelity of the simulation. Proposed modifications were systematically recorded during the scenario, including the deviation (positive or negative) from the initial values.

The second scenario focused on the interaction and recognition of textures. Initially, participants were informed that the interaction would occur exclusively through the Touch device, without any visual representation of the texture on the screen. The six textures were presented in a random order, and users were asked to identify them “blindly,” with unlimited time for each trial. The initial values of the haptic properties for each texture corresponded to the preset values (Table 1) and remained unchanged, regardless of any prior modifications made by the participant, while each texture was presented only once per cycle. Activation and switching of textures were controlled by the administrator through dedicated keys, and the application recorded the interaction time for each texture. Upon completion of the procedure, statistical data were collected to evaluate the realism of the textures and the potential need for adjustments, including the following:

- Which textures were correctly or incorrectly identified;

- Which textures caused confusion;

- The time spent on each texture.
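The bookkeeping described above (random presentation order with each texture shown once per cycle, correct and incorrect identifications, confusions between textures, and time per texture) can be sketched in Python as follows. The record layout `(presented, answered, seconds)` and the function names are hypothetical; the application’s actual logging format is not described in the text.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

TEXTURES = ["brick", "metal", "wood", "sand", "ice", "concrete"]

# Hypothetical trial record: (presented texture, participant's answer, seconds).
Trial = Tuple[str, str, float]


def presentation_order(rng: random.Random) -> List[str]:
    """Each texture exactly once per cycle, in random order."""
    return rng.sample(TEXTURES, k=len(TEXTURES))


def summarize(trials: Iterable[Trial]):
    """Return (confusion counts, recognition rate, mean time) per presented texture."""
    confusion: Dict[str, Counter] = defaultdict(Counter)
    times: Dict[str, List[float]] = defaultdict(list)
    for presented, answered, seconds in trials:
        confusion[presented][answered] += 1
        times[presented].append(seconds)
    # Diagonal count divided by the number of presentations of that texture.
    rate = {t: confusion[t][t] / sum(confusion[t].values()) for t in confusion}
    mean_time = {t: sum(v) / len(v) for t, v in times.items()}
    return confusion, rate, mean_time
```

Off-diagonal entries of `confusion` (for example, how often one texture was reported as another) are what a confusion matrix visualizes, while `rate` and `mean_time` correspond to per-texture recognition rates and interaction times.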

The second phase of the experiment repeated the same interaction scenarios, with the difference that the initial values of the haptic properties for each texture were based on the adjusted values derived from the suggestions of the sixteen participants in the first phase (Table 2). A total of thirty-one (31) individuals participated in this second phase.
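The text does not specify how the sixteen participants’ suggested deviations were combined into the values of Table 2. One plausible aggregation, shown here purely as an assumption, averages the signed deviations for each property and adds the mean to the initial value, clamping the result to a hypothetical slider range of [0, 1]. The function name and the range are illustrative only.

```python
from statistics import mean
from typing import Dict, List


def adjusted_values(initial: Dict[str, float],
                    deviations: Dict[str, List[float]],
                    lo: float = 0.0, hi: float = 1.0) -> Dict[str, float]:
    """Shift each initial value by the mean suggested deviation (ASSUMED rule)."""
    adjusted = {}
    for prop, start in initial.items():
        devs = deviations.get(prop, [])
        shift = mean(devs) if devs else 0.0  # no suggestions: keep the initial value
        adjusted[prop] = min(hi, max(lo, start + shift))  # stay within slider range
    return adjusted
```

A median would be more robust to a single participant suggesting an extreme change; which rule suits the data depends on how dispersed the suggestions were.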

Table 2.

Values derived from the suggestions of the sixteen participants in the first phase.

Finally, participants had the option to voluntarily complete a brief questionnaire evaluating their experience during the process. A total of 47 individuals participated in this study. Of these, 41 provided responses to the evaluation questionnaire. The distribution of gender, age, and prior experience with haptic devices for each participant is presented in Table 3.

Table 3.

Age and experience data of participants.

4. Results

The aim of the present study was to investigate how users perceive haptic technology during their interaction with virtual objects that represent corresponding physical materials in three-dimensional environments. The results of the interaction with the selected haptic surfaces of real-world materials (Figure 2), combined with the data collected through observation and the questionnaire, are analyzed in the following section. The research primarily focused on identifying the optimal haptic parameters that should be assigned to the textures of virtual materials in order to more faithfully approximate their counterparts in the physical environment. In addition, the study examined users’ ability to recognize different texture patterns in three-dimensional scenes through the use of haptic devices. In the first phase of the study, which involved sixteen (16) participants, the initial values of the haptic properties were used for the textures under examination. The experimental data yielded both the average recognition rates of the six patterns and the interaction times required to achieve recognition. An important finding was the determination of new haptic property values by the participants, which were subsequently adopted as the basis for the second phase of the experiment, involving thirty-one (31) new users.

In a recognition experiment with binary responses (“Yes”/“No”), the recognition rate for each object, although intuitive, provides an incomplete picture of reality. It represents an average that may arise from entirely different response patterns. The Standard Deviation (SD) and Standard Error (SE) are introduced precisely to uncover this hidden information, namely, the variability and reliability of our measurements [38]. The SD reveals the degree of agreement within our sample and serves as an indicator of the internal consistency of the data for each object individually. The SE quantifies the uncertainty of the estimated recognition rate [39,40]. In summary, the calculation of SD answers the question, “To what extent do users disagree with each other regarding the recognition of each material?”, whereas the SE addresses, “If the interaction were extended to a new group of users, how different would the result be?” For binary variables (correct/incorrect recognition), the standard deviation (SD) and standard error (SE) were calculated using the following equations:

$$\mathrm{SD} = \sqrt{p\,(1 - p)}$$

$$\mathrm{SE} = \frac{\mathrm{SD}}{\sqrt{n}}$$

- p: success (recognition) rate, expressed as a proportion (0–1)

- n: number of participants.
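As a minimal illustration of the two formulas above, the Python sketch below computes SD and SE for a single material. It assumes that each participant gives one correct/incorrect response per material, so that a 68.75% rate among 16 participants corresponds to 11 correct recognitions; the function names are our own.

```python
import math


def binary_sd(p: float) -> float:
    """SD of a correct/incorrect (1/0) outcome with recognition rate p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    return math.sqrt(p * (1.0 - p))


def standard_error(p: float, n: int) -> float:
    """Standard error of the recognition rate: SD / sqrt(n)."""
    return binary_sd(p) / math.sqrt(n)


# Brick, first phase: 11 of 16 participants recognized it (68.75%).
correct, n = 11, 16
p = correct / n
print(f"p = {p:.4f}, SD = {binary_sd(p):.3f}, SE = {standard_error(p, n):.3f}")
```

The SD here is the population form, √[p(1 − p)], without an n − 1 correction, matching the formula given in the text.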

Between the first and second recognition phases, the standard error (SE) changed notably while the standard deviation (SD) changed little; together, these values indicate how stable and reliable the results are. In the first phase, which included 16 participants, the SD ranged from approximately 0.4 to 0.5, while the SE was relatively high (10–12%). This suggests greater uncertainty in the recognition rates due to the small sample size: the fewer the participants, the less stable the estimates tend to be. In the second phase, with 31 participants, the SD remained nearly the same (0.39–0.50), indicating that the variability in participants’ responses did not change substantially; for a binary outcome this is expected, because the SD depends only on p and varies little over the range of rates observed here (roughly 0.5–0.8). However, the SE decreased (to 7–9%), meaning that the recognition-rate estimates became more precise. In short, although individual differences remained approximately constant, the larger sample made the overall estimates more stable and precise, and therefore more suitable for comparing materials.
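The SD/SE contrast between the phases can be checked directly from the recognition rates listed in Table 6. The sketch below (function and variable names are illustrative) recomputes the SE range per phase. Because the SD of a binary outcome depends only on p, the SE for a given rate shrinks by a factor of about √(16/31) ≈ 0.72 when moving from 16 to 31 participants.

```python
import math

# Recognition rates from Table 6, as proportions.
PHASE1 = {"Brick": 0.6875, "Metal": 0.50, "Wood": 0.50,
          "Sand": 0.75, "Ice": 0.8125, "Concrete": 0.5625}
PHASE2 = {"Brick": 0.6129, "Metal": 0.8062, "Wood": 0.7742,
          "Sand": 0.7742, "Ice": 0.7419, "Concrete": 0.5484}


def se_range(rates: dict[str, float], n: int) -> tuple[float, float]:
    """Smallest and largest SE = sqrt(p(1 - p)) / sqrt(n) across materials."""
    ses = [math.sqrt(p * (1 - p)) / math.sqrt(n) for p in rates.values()]
    return min(ses), max(ses)


for label, rates, n in (("Phase 1", PHASE1, 16), ("Phase 2", PHASE2, 31)):
    low, high = se_range(rates, n)
    print(f"{label}: SE from {low:.1%} to {high:.1%}")
```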

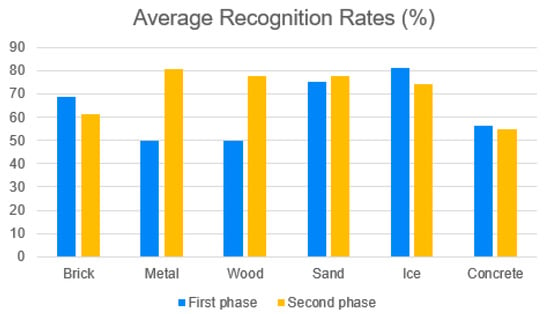

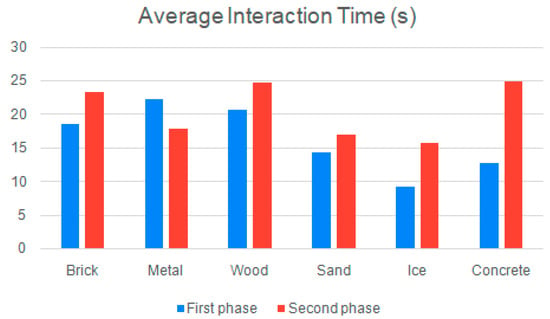

The aggregate results of the two phases are presented in Table 4 and Table 5. The differences in recognition rates and interaction times between the two phases for each material are also shown in Figure 4 and Figure 5.

Table 4.

Results of the first phase of the experiment.

Table 5.

Results of the second phase of the experiment.

Figure 4.

First and second phase of the experiment; average recognition rates.

Figure 5.

First and second phase of the experiment; average interaction time.

To quantify the magnitude of the observed changes, Cohen’s d was calculated for recognition accuracy between the two experimental phases (Table 6). Cohen’s d is an effect-size measure that estimates the magnitude of the difference between two groups, expressed in units of standard deviation. While statistical significance tests (e.g., t-tests, ANOVA) assess whether an observed difference would be unlikely if there were no true difference, effect-size measures such as Cohen’s d address the question “how large is the difference?” In the present experiment, where the two phases (before and after optimization) involved different groups of participants (n1 = 16, n2 = 31) and the SD values had already been computed, Cohen’s d is an appropriate way to estimate the magnitude of the change in recognition accuracy [41].

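$$d = \frac{M_2 - M_1}{\mathrm{SD}_p}$$

$$\mathrm{SD}_p = \sqrt{\frac{\mathrm{SD}_1^2 + \mathrm{SD}_2^2}{2}}$$

- d: Cohen’s d

- M1: mean recognition rate for Phase 1, expressed as a proportion (i.e., in the same units as the SD)

- SD1: recognition SD for Phase 1

- M2: mean recognition rate for Phase 2, expressed as a proportion

- SD2: recognition SD for Phase 2

- SDp: pooled standard deviation of the two groups (unweighted mean of the two variances)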
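A minimal sketch of the calculation, using the SD values from Table 6. Recognition rates are entered as proportions rather than percentages so that the numerator and the SDs share the same units. The first function follows the unweighted pooled SD, SDp = √[(SD1² + SD2²)/2]; the second, an alternative that is not the formula used in the text, weights each variance by n − 1, which is the common textbook form when group sizes differ (here 16 vs. 31).

```python
import math


def cohens_d_unweighted(m1: float, sd1: float, m2: float, sd2: float) -> float:
    """Cohen's d with SDp = sqrt((SD1^2 + SD2^2) / 2), as in the text.

    m1 and m2 must be proportions (0-1), i.e. in the same units as sd1 and sd2.
    """
    sd_pooled = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (m2 - m1) / sd_pooled


def cohens_d_weighted(m1: float, sd1: float, n1: int,
                      m2: float, sd2: float, n2: int) -> float:
    """Alternative (not the formula used in the text): variances weighted by n - 1."""
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m2 - m1) / sd_pooled


# Wood, Table 6: Phase 1 = 50%, SD 0.500 (n = 16); Phase 2 = 77.42%, SD 0.418 (n = 31).
print(f"unweighted d = {cohens_d_unweighted(0.50, 0.500, 0.7742, 0.418):+.2f}")
print(f"weighted d   = {cohens_d_weighted(0.50, 0.500, 16, 0.7742, 0.418, 31):+.2f}")
```

For Wood, both variants give an effect of roughly 0.6, so the choice does not change the interpretation. Applying the first function to Metal gives about 0.68, close to the tabulated 0.67; the small gap is presumably due to rounding of the inputs.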

Table 6.

Cohen’s d index between the two experimental phases.


| Material | Phase 1 (M1, SD1) | Phase 2 (M2, SD2) | Cohen’s d | Interpretation |
|---|---|---|---|---|
| Brick | 68.75%, 0.463 | 61.29%, 0.487 | −0.16 | Slight decrease in recognition |
| Metal | 50%, 0.500 | 80.62%, 0.395 | +0.67 | Medium improvement |
| Wood | 50%, 0.500 | 77.42%, 0.418 | +0.60 | Medium improvement |
| Sand | 75%, 0.433 | 77.42%, 0.418 | +0.06 | Almost no change |
| Ice | 81.25%, 0.390 | 74.19%, 0.438 | −0.17 | Slight decrease in recognition |
| Concrete | 56.25%, 0.496 | 54.84%, 0.498 | −0.03 | Almost no change |

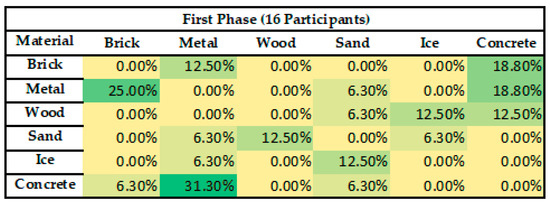

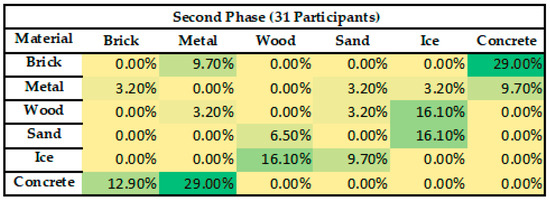

During the interactions, certain confusions were identified among the six materials under examination. The summary table (Table 7) and heatmaps (Figure 6 and Figure 7) quantify the observed instances of confusion, enabling analysis of recognition patterns and of how difficult each material was to distinguish. Color intensity (from light yellow to dark green) corresponds to the magnitude of the percentage: the darker the green, the more frequently the two materials were confused.
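Table 7 and the heatmaps are, in effect, a row-normalized confusion matrix. The sketch below rebuilds the first-phase matrix from the confusion percentages reported in the Discussion, converting each back to a count out of 16 (e.g., 31.3% ≈ 5/16). These counts are a back-calculation for illustration, not the study’s raw data, and the helper names are hypothetical.

```python
# Phase 1 counts: rows = presented material, keys = participant's answer.
# Back-calculated from the reported percentages (n = 16); illustrative only.
MATERIALS = ["Brick", "Metal", "Wood", "Sand", "Ice", "Concrete"]
COUNTS = {
    "Brick":    {"Brick": 11, "Concrete": 3, "Metal": 2},
    "Metal":    {"Metal": 8, "Brick": 4, "Concrete": 3, "Sand": 1},
    "Wood":     {"Wood": 8, "Ice": 5, "Concrete": 2, "Sand": 1},
    "Sand":     {"Sand": 12, "Wood": 2, "Metal": 1, "Ice": 1},
    "Ice":      {"Ice": 13, "Sand": 2, "Metal": 1},
    "Concrete": {"Concrete": 9, "Metal": 5, "Brick": 1, "Sand": 1},
}


def row_percentages(counts):
    """Convert each row of raw counts into percentages of that row's total."""
    table = {}
    for presented, responses in counts.items():
        total = sum(responses.values())
        table[presented] = {m: 100.0 * responses.get(m, 0) / total for m in MATERIALS}
    return table


def top_confusions(table, k=3):
    """Return the k largest off-diagonal cells as (presented, answered, %)."""
    cells = [(p, r, pct) for p, row in table.items()
             for r, pct in row.items() if r != p and pct > 0]
    return sorted(cells, key=lambda cell: cell[2], reverse=True)[:k]


pct = row_percentages(COUNTS)
for presented, answered, value in top_confusions(pct):
    print(f"{presented} -> {answered}: {value:.2f}%")
```

Each row sums to 100%, and the diagonal holds the recognition rates (e.g., 56.25% for Concrete); plotting `pct` as a heatmap would display the same kind of row percentages shown in Figures 6 and 7.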

Table 7.

The summary table quantitatively presents the observed instances of confusion.

Figure 6.

Heatmap of material confusions, first phase (16 participants).

Figure 7.

Heatmap of material confusions, second phase (31 participants).

Upon completion of the experiment, 41 of the 47 participants responded to a set of eight questions based on the UEQ (Appendix A). The User Experience Questionnaire (UEQ) supports a multifaceted analysis of UX, covering both classical dimensions (e.g., attractiveness, efficiency) and more specialized ones (e.g., content quality, overall experience). The eight items used here, each rated on a 1–5 scale, address pragmatic aspects (supportiveness, ease, efficiency, clarity) as well as hedonic aspects (excitement, interest, inventiveness, novelty). The UEQ has been shown to be a suitable tool for measuring user experience in novel HCI applications, as it can guide design improvements by revealing both strengths and weaknesses [42]. The findings of this questionnaire are presented in Table 8.

Table 8.

UEQ—User Experience Questionnaire results.

The phases of the experimental procedure are presented in Figure 8.

Figure 8.

The phases of the experiment.

5. Discussion

Observation of participant interactions revealed a high degree of acceptance and enthusiasm, notably since, for most participants, this was their first encounter with a haptic device. Participants became familiar with the environment and the Touch device almost immediately; the only difficulties, related to determining the appropriate stylus pressure, were quickly overcome. Overall, the process was evaluated positively: users remained focused and engaged throughout the experimental procedure, and no fatigue or negative emotions were reported.

The new haptic property values proposed by participants during the first phase formed the basis of the second phase, with the aim of enhancing the fidelity of the simulation. The most substantial parameter modifications (friction, stiffness, texture, and, in the case of sand, viscosity) concerned primarily the materials with higher recognition error rates. Brick showed increases in friction, stiffness, and texture, making it firmer and rougher. Similarly, Concrete became more frictional, stiffer, and more textured, reflecting a strengthening of its core mechanical characteristics. Metal exhibited reduced friction but increased stiffness and texture, suggesting that, despite being more slippery, it became firmer, with more pronounced surface detail. Wood displayed increases in both friction and stiffness while maintaining its already high texture value, making it more resistant while preserving its characteristic feel. Sand showed a slight increase in friction but reduced stiffness and texture, resulting in a softer and less rough tactile sensation. Finally, Ice remained frictionless but became slightly softer, with an increase in texture, indicating minor changes in surface perception without altering its inherent slipperiness. Overall, the participant-driven adjustments tended to reinforce the characteristics of hard and frictional materials, whereas softer and more slippery materials showed smaller variations.

With regard to texture recognition, overall performance improved in the second phase, suggesting that the modified values contributed to greater clarity and realism. In the first phase, with 16 participants, recognition rates and interaction times varied across materials. Ice achieved the highest recognition rate (81.25%) and the shortest interaction time (9.31 s), suggesting that users could identify it quickly and clearly. In contrast, Brick showed moderate recognition (68.75%) with a relatively long interaction time (18.63 s), indicating that its texture was more complex or demanded more exploration. Metal and Wood both had recognition rates of 50% and the longest interaction times (22.25 and 20.65 s, respectively), implying that users found these textures more difficult to distinguish and required prolonged contact. Sand combined a high recognition rate (75%) with a relatively short interaction time (14.37 s), while Concrete exhibited moderate recognition (56.25%) with a time of 12.76 s. Overall, these results suggest that more distinctive textures, such as Ice and Sand, are recognized more quickly, whereas less pronounced or more homogeneous textures, such as Metal and Wood, require longer exploration to be accurately identified.

Following the modification of the haptic property values, the recognition results and interaction times of the 31 participants in the second phase differed markedly from those of the first phase. Metal achieved the highest recognition rate (80.62%), and its interaction time fell to 17.85 s (from 22.25 s), indicating that the adjustments made its texture easier and faster to identify. Wood and Sand both reached recognition rates of 77.42%, although Wood required more time (24.76 s) than Sand (17.09 s), suggesting that the enhanced intensity of Wood’s texture demanded more exploration. Ice remained relatively easy to recognize (74.19%), but its interaction time increased from 9.31 s to 15.85 s, indicating slower recognition than in the first phase. Brick showed a decrease in recognition (61.29%) and an increase in interaction time (23.33 s), suggesting that the modified properties hindered its quick identification. Finally, Concrete displayed moderate recognition (54.84%) and the longest interaction time (24.95 s), implying that users required more time to identify this texture. Overall, the changes to the haptic properties improved the recognizability of materials such as Metal and Wood, whereas other textures, such as Brick, became more difficult to identify and required longer interaction.
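Phase-to-phase claims about recognition and interaction time can be checked mechanically. The sketch below tabulates the values reported in the text for both phases and computes per-material changes and the mean recognition rate per phase; the dictionary layout and names are our own choice.

```python
# (recognition rate %, mean interaction time s) per material, as reported.
PHASE1 = {"Brick": (68.75, 18.63), "Metal": (50.00, 22.25), "Wood": (50.00, 20.65),
          "Sand": (75.00, 14.37), "Ice": (81.25, 9.31), "Concrete": (56.25, 12.76)}
PHASE2 = {"Brick": (61.29, 23.33), "Metal": (80.62, 17.85), "Wood": (77.42, 24.76),
          "Sand": (77.42, 17.09), "Ice": (74.19, 15.85), "Concrete": (54.84, 24.95)}


def phase_deltas(before, after):
    """Change in recognition rate (points) and interaction time (s) per material."""
    return {m: (after[m][0] - before[m][0], after[m][1] - before[m][1]) for m in before}


def mean_rate(phase):
    """Unweighted mean recognition rate across the six materials."""
    return sum(rate for rate, _ in phase.values()) / len(phase)


deltas = phase_deltas(PHASE1, PHASE2)
for material, (d_rate, d_time) in deltas.items():
    print(f"{material:9s} rate {d_rate:+6.2f} pts  time {d_time:+6.2f} s")

faster = [m for m, (_, d_time) in deltas.items() if d_time < 0]
print("Recognized faster in Phase 2:", faster)
print(f"Mean rate: {mean_rate(PHASE1):.2f}% -> {mean_rate(PHASE2):.2f}%")
```

Only Metal shows a shorter mean interaction time in the second phase; Ice, for example, rises from 9.31 s to 15.85 s, while the mean recognition rate across materials increases.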

In parallel, the confusions recorded during recognition highlighted specific pairs of textures with overlapping characteristics (e.g., roughness or hardness), which made them more difficult to distinguish. The systematic documentation of these confusions provides useful insights for improving future parameter settings and avoiding overlaps between similar haptic surfaces. Notably, frequent confusions were observed between Metal and Concrete, as well as between Wood and Ice, indicating that certain material pairs exhibit overlapping haptic properties.

In the first phase, with 16 participants, using the initial property values, the frequency of material confusions varied considerably. Brick was primarily confused with Concrete (18.8%) and secondarily with Metal (12.5%). Metal was often confused with Brick (25%) and Concrete (18.8%), and to a lesser extent with Sand (6.3%). Wood was frequently mistaken for Ice (31.3%), with lower confusion rates for Concrete (12.5%) and Sand (6.3%). Sand was mainly confused with Wood (12.5%) and less often with Metal and Ice (6.3% each). Ice was confused primarily with Sand (12.5%) and secondarily with Metal (6.3%). Finally, Concrete was strongly confused with Metal (31.3%) and less so with Brick and Sand (6.3% each). Overall, materials with more similar haptic properties, such as Brick–Concrete and Metal–Concrete, showed higher confusion rates, whereas more distinct materials, such as Ice and Sand, exhibited lower confusion.

In the second phase, with 31 participants and the adjusted haptic property values, a shift was observed in the confusion patterns between materials. Brick was still mainly confused with Concrete (29.0%) and to a lesser extent with Metal (9.7%), indicating that distinguishing it from Concrete remained challenging. Metal exhibited markedly reduced confusion with all other materials, being confused primarily with Concrete (9.7%) and only minimally with Brick, Sand, and Ice (3.2% each), suggesting that the adjustments made it more easily recognizable. Wood continued to be confused with Ice (16.1%), with lower confusion rates for Metal and Sand (3.2% each). Sand was confused mainly with Ice (16.1%) and to a lesser extent with Wood (6.5%). Ice was confused mainly with Wood (16.1%) and Sand (9.7%). Finally, Concrete was frequently confused with Metal (29.0%) and less often with Brick (12.9%). Overall, the modifications to the haptic properties markedly reduced confusion for Metal and Wood, whereas Brick was confused with Concrete more often than in the first phase (29.0% vs. 18.8%), and softer or more slippery textures, such as Sand and Ice, retained moderate levels of confusion, notably with each other and with Wood.

In conclusion, the comparison between the two phases demonstrates that the revision of the haptic property values had a clear impact on both the recognition and discrimination of materials. In the first phase, with the initial values, Metal and Wood exhibited high confusion rates and required longer interaction times for recognition, whereas Ice and Sand were identified quickly and clearly. Following the adjustments in the second phase, recognition improved markedly for Metal and Wood, and the mean recognition rate across the six materials rose, so that overall confusion decreased. Interaction times, however, decreased only for Metal and increased for the other five materials. Moreover, certain materials with more homogeneous or softer textures, such as Concrete, Sand, and Ice, continued to show moderate levels of confusion, and the confusion of Concrete with Metal (29.0%) and of Brick with Concrete (29.0%) remained high. Overall, the revision of the haptic properties proved effective for the materials that were initially hardest to recognize (Metal and Wood), raising mean recognition and thereby enhancing the participants’ interaction experience.

The comparison of the two experimental phases using Cohen’s d quantified the variations observed in the recognition rates of the six materials, providing an estimate of the magnitude of the effect of the haptic parameter adjustments. The largest positive effects were recorded for Metal (d = 0.67) and Wood (d = 0.60), corresponding to medium effect sizes according to Cohen’s conventional benchmarks (0.2 small, 0.5 medium, 0.8 large). These increases suggest that the adjustments in friction, stiffness, and relief values substantially enhanced the distinctiveness and realism of these materials, making them easier for participants to recognize. Conversely, for Brick (d = −0.16), Ice (d = −0.17), and Concrete (d = −0.03), the index values indicate very small negative effects, implying that the parameter adjustments may have slightly reduced recognition accuracy or had no meaningful impact. Finally, Sand (d = 0.06) exhibited an almost negligible change, consistent with the overall stability of performance for this material. Overall, the Cohen’s d values support the conclusion that the adjustments of the haptic properties were most effective for textures with initially lower recognizability (e.g., Metal and Wood), pointing to the positive contribution of the optimization process to the realism and haptic discriminability of the virtual surfaces.

The observed confusions in texture recognition do not appear random; they can be interpreted in light of the physiological characteristics of the human haptic system and the technical specifications of the Touch device. The human cutaneous sensory system comprises specialized mechanoreceptors with distinct response profiles (e.g., Merkel cells for sustained pressure, Meissner corpuscles for slip and low-frequency vibration, and Pacinian corpuscles for high-frequency vibration). Although the 3D Systems Touch device offers good spatial resolution, it exhibits technical limitations that may affect the fidelity of haptic rendering. Its maximum output force (3.3 N) is far below the forces people routinely apply and perceive when touching real surfaces, which limits realism when rendering the stiffness of materials such as metal or concrete. Moreover, the faithful representation of fine textures depends on the haptic rendering algorithm, which often struggles to convey subtle differences in vibration frequency. Finally, the inertia and friction of the mechanism reduce sensitivity at higher frequencies, introducing “noise” that constrains the discrimination of fine differences between materials.
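To illustrate why a force ceiling can blur stiffness differences, the sketch below uses a simple penalty (spring) contact model, F = k·x, clamped at the 3.3 N peak force cited above. This is a hypothetical model with assumed stiffness values, not the rendering pipeline used in the study or any device SDK call: once k·x exceeds the cap, two surfaces with different stiffness produce the same force.

```python
MAX_FORCE_N = 3.3  # peak output force cited in the text for the Touch device


def contact_force(stiffness_n_per_m: float, depth_m: float,
                  max_force: float = MAX_FORCE_N) -> float:
    """Penalty (spring) force for a penetration depth, clamped to max_force."""
    if depth_m <= 0.0:
        return 0.0  # stylus not in contact with the surface
    return min(stiffness_n_per_m * depth_m, max_force)


# Two hypothetical surfaces whose stiffness differs by a factor of two.
soft_k, hard_k = 1000.0, 2000.0  # N/m, assumed values for illustration
for depth_mm in (0.5, 1.0, 2.0, 5.0):
    x = depth_mm / 1000.0
    print(f"{depth_mm} mm: {contact_force(soft_k, x):.2f} N "
          f"vs {contact_force(hard_k, x):.2f} N")
```

At 0.5 mm and 1 mm the stiffer surface pushes back twice as hard, but by 5 mm both forces are clamped at 3.3 N, so the stiffness difference is no longer conveyed as a force difference.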

The pronounced confusion between Concrete and Metal may be explained by the device’s limitations in generating high frequencies, which are essential for stimulating the Pacinian corpuscles; without these cues, the two textures become difficult to distinguish. Concrete, characterized by random low- and mid-frequency relief, and Metal, with its smooth yet rigid surface, also tend to be conflated because of the device’s low maximum output force (3.3 N). Similarly, the bidirectional confusion between Wood and Ice highlights the device’s difficulty in simulating differences in friction and stiffness. While Merkel and Meissner receptors contribute to discrimination through static pressure and micro-slips, respectively, the enhancement of Ice’s texture appears to have diminished the perceptual cues related to its low friction, thereby hindering accurate differentiation between Wood and Ice.

The analysis of the UEQ results indicates an exceptionally positive overall user experience, with all items scoring above 87%. The highest values were observed for Leading edge (97.56%), Interesting (97.07%), and Exciting (96.1%), indicating that participants perceived the interaction environment as particularly innovative, attractive, and capable of maintaining their engagement. Similarly high evaluations were reported for Inventive (94.63%) and Clear (93.17%), reflecting perceptions of innovation and clarity in use. The items related to the system’s practical usability, namely Easy (90.24%), Efficient (89.76%), and Supportive (87.8%), scored slightly lower but still remained at very high levels. The internal consistency of the User Experience Questionnaire (UEQ) was assessed using Cronbach’s alpha [43]. The obtained value (α = 0.54) indicates low internal reliability, suggesting that participants’ evaluations varied across the different UEQ items. This result may reflect the multidimensional nature of the questionnaire, which captures diverse aspects of user experience (e.g., efficiency, attractiveness, novelty) rather than a single homogeneous construct. Moreover, the relatively small sample size (N = 41) may have contributed to this lower reliability estimate. Nonetheless, the consistently high mean scores across all items (above 87%) indicate a generally positive perception of the system’s usability and innovation.
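The reported UEQ percentages appear consistent with each item’s total score divided by the maximum possible total (41 respondents × 5 points = 205); for example, 97.56% ≈ 200/205 and 87.8% ≈ 180/205. This scoring rule is our reading of the numbers, not one stated in the text. The sketch below shows that computation together with the standard Cronbach’s alpha formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The toy response matrix is invented for illustration and is not study data.

```python
from statistics import variance

SCALE_MAX = 5  # 1-5 scale used in Appendix A


def percent_of_max(item_total: int, n_respondents: int,
                   scale_max: int = SCALE_MAX) -> float:
    """Item score as a percentage of the maximum possible total (n * scale_max)."""
    return 100.0 * item_total / (n_respondents * scale_max)


def reverse_code(score: int, scale_max: int = SCALE_MAX) -> int:
    """Flip an item whose positive pole is on the left (1 <-> 5, 2 <-> 4)."""
    return scale_max + 1 - score


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha; rows = respondents, columns = items."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# 41 respondents, item total 200 -> about 97.56%.
print(f"{percent_of_max(200, 41):.2f}%")
# Toy data (not study data): three respondents, two items.
print(round(cronbach_alpha([[1, 1], [2, 3], [3, 2]]), 3))
```

As a general rule, an item whose positive pole is printed on the left (as with Clear/Confusing in Table A1) is passed through `reverse_code` before alpha is computed; otherwise its negative correlation with the other items lowers alpha.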

6. Conclusions

In conclusion, the study demonstrated that the development of parameterizable textures through haptic feedback devices can substantially enhance the sense of realism and the recognizability of surfaces in virtual environments. However, limitations related to sample size and the range of examined textures highlight the need for future research involving larger and more diverse user groups, as well as richer sets of materials. While the two-phase experimental design provided valuable iterative insights and allowed for parameter optimization between phases, the generalizability of the study is constrained by the sample size. With 16 participants in the first phase and 31 in the second, the total sample (N = 47) offers a solid basis for preliminary conclusions and proof-of-concept validation, yet remains limited for drawing broad statistical generalizations. Consequently, the statistical power of the study is limited: genuine but small or subtle improvements and differences between textures may have gone undetected. Future developments should focus not only on improving the fidelity of physical texture representation and reducing confusion between similar surfaces, but also on leveraging more specialized techniques. For instance, deep learning models could enable adaptive texture rendering based on individual user characteristics and movements, while integration into haptic gloves would allow interaction with the entire palm, thereby broadening the range of tactile stimuli. Moreover, applications in fields such as medical training or remote education could benefit greatly from high-fidelity haptic simulations, making learning more realistic and safer.
Future work will involve modeling the relationship between texture parameters (friction, stiffness, relief) and recognition rates using regression or perceptual modeling approaches to quantitatively predict misclassification patterns. Finally, the exploration of multisensory experiences, in which haptic stimuli are combined with auditory cues, may further enhance material perception and discrimination, paving the way for more comprehensive and realistic interactions.

Author Contributions

Conception and design of experiments: N.T.; performance of experiments: E.P. and G.K.; analysis of data: N.T. and E.P.; writing of the manuscript: N.T., G.V., S.K., and G.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are provided on request.

Acknowledgments

The authors would like to express their sincere gratitude to all the individuals who participated in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

UEQ—User Experience Questionnaire.


| UEQ Option A | Likert Scale (1 to 5) | UEQ Option B |
|---|---|---|
| Obstructive | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Supportive |
| Complicated | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Easy |
| Inefficient | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Efficient |
| Clear | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Confusing |
| Boring | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Exciting |
| Not interesting | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Interesting |
| Conventional | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Inventive |
| Usual | ◦ 1 ◦ 2 ◦ 3 ◦ 4 ◦ 5 | Leading edge |

References

1. Tzimos, N.; Voutsakelis, G.; Kokkonis, G. Haptic Human Computer Interaction. In Proceedings of the 6th World Symposium on Communication Engineering (WSCE 2023), Thessaloniki, Greece, 27–29 September 2023.

2. Hartson, H.R. Human–computer interaction: Interdisciplinary roots and trends. J. Syst. Softw. 1998, 43, 103–118.

3. Giri, G.S.; Maddahi, Y.; Zareinia, K. An Application-Based Review of Haptics Technology. Robotics 2021, 10, 29.

4. Rodríguez, J.-L.; Velázquez, R.; Del-Valle-Soto, C.; Gutiérrez, S.; Varona, J.; Enríquez-Zarate, J. Active and Passive Haptic Perception of Shape: Passive Haptics Can Support Navigation. Electronics 2019, 8, 355.

5. Azofeifa, J.D.; Noguez, J.; Ruiz, S.; Molina-Espinosa, J.M.; Magana, A.J.; Benes, B. Systematic Review of Multimodal Human–Computer Interaction. Informatics 2022, 9, 13.

6. Gokul, P.J. A Review on Haptic Technology: The Future of Touch in Human-Computer Interaction. Int. J. Adv. Res. Sci. Commun. Technol. (IJARSCT) 2025, 5, 11.

7. Ozioko, O.; Dahiya, R. Smart Tactile Gloves for Haptic Interaction, Communication, and Rehabilitation. Adv. Intell. Syst. (WILEY) 2021, 4, 2100091.

8. See, A.R.; Choco, J.A.G.; Chandramohan, K. Touch, Texture and Haptic Feedback: A Review on How We Feel the World around Us. Appl. Sci. 2022, 12, 4686.

9. Najm, A.; Banakou, D.; Michael-Grigoriou, D. Development of a Modular Adjustable Wearable Haptic Device for XR Applications. Virtual Worlds 2024, 3, 436–458.

10. Wegen, M.; Herder, J.L.; Adelsberger, R.; Pastore-Wapp, M.; van Wegen, E.E.H.; Bohlhalter, S.; Nef, T.; Krack, P.; Vanbellingen, T. An Overview of Wearable Haptic Technologies and Their Performance in Virtual Object Exploration. Sensors 2023, 23, 1563.

11. Klatzky, R.L.; Nayak, A.; Stephen, I.; Dijour, D.; Tan, H.Z. Detection and Identification of Pattern Information on an Electrostatic Friction Display. IEEE Trans. Haptics 2019, 1, 99.

12. Lederman, S.J.; Klatzky, R.L. Haptic Perception: A Tutorial. Atten. Percept. Psychophys. 2009, 71, 1439–1459.

13. Abraira, V.E.; Ginty, D.D. The Sensory Neurons of Touch. Neuron 2013, 79, 618–639.

14. Petit, O.; Velasco, C.; Spence, C. Digital Sensory Marketing: Integrating New Technologies Into Multisensory Online Experience. J. Interact. Mark. 2018, 45, 42–61.

15. Jin, S.-A.A. The impact of 3-D virtual haptics in marketing. Psychol. Mark. 2011, 28, 240–255.

16. Periyaswamy, T.; Islam, M.R. Tactile Rendering of Textile Materials. J. Text. Sci. Fash. Technol. 2022, 9.

17. Dahan, H.; Stern, H.; Bitan, N.; Bouzovkin, A.; Levanovsky, M.; Portnoy, S. Construction of a haptic-based virtual reality evaluation of discrimination of stiffness and texture. Cogent Eng. 2022, 9, 2105556.

18. Heravi, N.; Culbertson, H.; Okamura, A.M.; Bohg, J. Development and Evaluation of a Learning-Based Model for Real-Time Haptic Texture Rendering. IEEE Trans. Haptics 2024, 99, 705–716.

19. Heravi, N.; Yuan, W.; Okamura, A.M.; Bohg, J. Learning an Action-Conditional Model for Haptic Texture Generation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020.

20. Joolee, J.B.; Jeon, S. Data-Driven Haptic Texture Modeling and Rendering Based on Deep Spatio-Temporal Networks. IEEE Trans. Haptics 2021, 1, 99.

21. Lee, Y.; Lee, S.; Lee, D. Wearable Haptic Device for Stiffness Rendering of Virtual Objects in Augmented Reality. Appl. Sci. 2021, 11, 6932.

22. Rouhafzay, G.; Cretu, A.-M. A Visuo-Haptic Framework for Object Recognition Inspired by Human Tactile Perception. Proceedings 2018, 4, 47.

23. Tzimos, N.; Voutsakelis, G.; Kontogiannis, S.; Kokkonis, G. Evaluation of Haptic Textures for Tangible Interfaces for the Tactile Internet. Electronics 2024, 13, 3775.

24. Papadopoulos, K.; Koustriava, E.; Georgoula, E.; Kalpia, V. 3D haptic texture discrimination in individuals with and without visual impairments using a force feedback device. Univers. Access Inf. Soc. 2025, 24, 2433–2446.

25. Ruiz, C., Jr.; de Jesús, Ò.; Serrano, C.; González, A.; Nonell, P.; Metaute, A.; Miralles, D. Bridging realities: Training visuo-haptic object recognition models for robots using 3D virtual simulations. Vis. Comput. 2024, 40, 4661–4673.

26. Cao, G.; Jiang, J.; Mao, N.; Bollegala, D.; Li, M.; Luo, S. Vis2Hap: Vision-based Haptic Rendering by Cross-modal Generation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023.

27. Martinelli, A.; Fabiocchi, D.; Picchio, F.; Giberti, H.; Carnevale, M. Design of an Environment for Virtual Training Based on Digital Reconstruction: From Real Vegetation to Its Tactile Simulation. Designs 2025, 9, 32.

28. Bazelle, A.; Pourrier-Nunez, H.; Rignault, M.; Chang, M. Simulation of different materials texture in virtual reality through haptic gloves. In Proceedings of the SA ’18: SIGGRAPH Asia 2018, Tokyo, Japan, 4–7 December 2018.

29. Massie, T.H.; Salisbury, J.K. The PHANTOM Haptic Interface: A Device for Probing Virtual Objects. In Proceedings of the ASME, Winter Annual Meeting, Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Chicago, IL, USA, 6–11 November 1994; Volume 55.

30. Salisbury, K.; Brock, D.; Massie, T.; Swarup, N.; Zilles, C. Haptic rendering: Programming touch interaction with virtual objects. In Proceedings of the 1995 Symposium on Interactive 3D Graphics (SI3D), Monterey, CA, USA, 9–12 April 1995; pp. 123–130.

31. Jarillo-Silva, A.; Domínguez-Ramírez, O.A.; Parra-Vega, V.; Ordaz-Oliver, J.P. PHANToM OMNI Haptic Device: Kinematic and Manipulability. In Proceedings of the Electronics, Robotics and Automotive Mechanics Conference (CERMA), Cuernavaca, Morelos, Mexico, 22–25 September 2009; pp. 193–198.

32. Martín, J.S.; Triviño, G. A study of the Manipulability of the PHANToM OMNI Haptic Interface. In Proceedings of the 3rd Workshop in Virtual Reality, Interactions, and Physical Simulation (Vriphys), Madrid, Spain, 6–7 November 2006.

33. Unity Real-Time Development Platform 3D, 2D, VR & AR Engine. Available online: https://unity.com/ (accessed on 22 September 2025).

34. The Best Assets for Game Making Unity Asset Store. Available online: https://assetstore.unity.com/ (accessed on 22 September 2025).

35. Blender-The Free and Open Source 3D Creation Software. Available online: https://www.blender.org/ (accessed on 22 September 2025).

36. Visual Studio Code. Available online: https://code.visualstudio.com/ (accessed on 22 September 2025).

37. Poly Haven. Available online: https://polyhaven.com/ (accessed on 22 September 2025).

38. Schumm, W.R.; Crawford, D.W.; Lockett, L. Patterns of Means and Standard Deviations with Binary Variables: A Key to Detecting Fraudulent Research. Biomed. J. Sci. Tech. Res. 2019, 23, 17151–17153.

39. Biau, D.J. In Brief: Standard Deviation and Standard Error. Clin. Orthop. Relat. Res. 2011, 469, 2661–2664.

40. Barde, M.P.; Barde, P.J. What to use to express the variability of data: Standard deviation or standard error of mean? Perspect. Clin. Res. 2012, 3, 113–116.

41. Lakens, D. Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Front. Psychol. 2013, 4, 863.

42. Santoso, H.B.; Schrepp, M.; Hasani, L.M.; Fitriansyah, R.; Setyanto, A. The use of User Experience Questionnaire Plus (UEQ+) for cross-cultural UX research: Evaluating Zoom and Learn Quran Tajwid as online learning tools. Heliyon 2022, 8, 11748.

43. Tavakol, M.; Dennick, R. Making Sense of Cronbach’s Alpha. Int. J. Med. Educ. 2011, 2, 53–55.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).