1. Introduction

With the rapid advancement of fifth-generation mobile communications technology (5G) and the Internet of Things (IoT), mobile network traffic has grown explosively and become highly dynamic. 5G and its evolution, Beyond 5G (B5G) networks, have introduced three typical application scenarios: enhanced Mobile Broadband (eMBB), massive Machine-Type Communications (mMTC), and Ultra-Reliable Low-Latency Communications (URLLC). These scenarios pose new challenges for traffic prediction. The eMBB scenario, characterized by high-speed data transmission, exhibits bursty traffic patterns and high bandwidth demands, with the primary prediction target being throughput. The mMTC scenario, characterized by massive device connectivity, shifts the prediction focus from throughput to the number of connections. The URLLC scenario requires extremely low latency and high reliability, necessitating the prediction of network load to ensure quality of service. Typical applications of these scenarios include smart cities, connected vehicles, and the industrial Internet. Accurate traffic prediction not only enhances user experience but also reduces operational costs and improves the autonomy and intelligence of networks.

Traditional traffic prediction methods are mostly based on statistical models or shallow machine learning approaches. The Seasonal Autoregressive Integrated Moving Average (SARIMA) model has long been used for communication traffic prediction due to its effective characterization of seasonality and trend components [1]. Support Vector Regression (SVR), on the other hand, handles nonlinear relationships through kernel functions and has demonstrated advantages in early traffic prediction [2]. Although these methods perform well in stationary time series prediction, their assumptions of linear or static modeling make it difficult to capture the non-stationarity and multimodal evolution characteristics caused by mixed services, user mobility, and sudden events in 5G environments [3]. In recent years, deep learning models such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) have been widely applied to traffic prediction tasks due to their powerful sequence modeling capabilities [4,5]. However, these methods typically rely on large amounts of labeled data for training and exhibit limited generalization capability when faced with data distribution shifts or unseen traffic patterns, often leading to performance degradation [6]. This makes it challenging for them to adapt to the dynamic and variable environment of 5G networks.

Meta-learning, as a “learning to learn” paradigm, enables models to quickly adapt to new tasks by extracting shared knowledge from multiple related tasks, making it particularly suitable for addressing model initialisation problems in few-shot scenarios [7]. In the field of traffic prediction, meta-learning constructs abstract representations of task distributions to provide personalized model initialisation strategies for different base stations or regions, thereby improving prediction efficiency and accuracy. However, most existing meta-learning traffic prediction models are based on the assumption of stationary traffic patterns. Their meta-learners (such as K-Nearest Neighbors, deep neural networks, or Gaussian mixture models) often employ fixed structures or a fixed number of components, making it difficult to handle concept drift caused by factors like changes in base station functionality or the introduction of new services in real-world networks.

The core challenge of non-stationary traffic patterns lies in their underlying data distributions, which are not static but undergo structural shifts over time, space, and external events (such as holidays, emergencies, or cyberattacks). These manifest as: (1) the emergence of new patterns (e.g., newly built metro stations or schools); (2) the disappearance of old patterns (e.g., decommissioned factories); (3) the merging and splitting of patterns (e.g., evolution of mixed commercial–residential zones); and (4) the ambiguity of pattern boundaries (e.g., functionally mixed areas). These changes cause meta-learning models based on fixed component numbers (e.g., GMM with fixed K values) to suffer from pattern omission, component degradation, and inefficient resource allocation, severely limiting their applicability and scalability in real-world networks.

To address these challenges, this paper proposes a meta-learning traffic forecasting model based on a Dynamic Component Management (DCM) mechanism, termed GMM-SCM-DCM. Building upon the Gaussian Mixture Model (GMM) as its meta-learning backbone, the model introduces dynamic component splitting and merging mechanisms to achieve adaptive tracking and modeling of non-stationary traffic patterns. Specifically, a bimodal similarity metric combining probabilistic and spatial similarity is designed to evaluate pattern novelty in new tasks, thereby triggering component generation, merging, or removal operations. Furthermore, a Single-Component Mechanism (SCM) is employed as the initial weight allocation strategy for the base learner, mitigating pattern confusion and training instability potentially caused by multi-component synthesis.

The major contributions of this paper include:

Proposing a meta-learning framework with a DCM mechanism, enabling the model to perform online identification, adaptation, and evolution of 5G traffic patterns.

Designing a dual-modal similarity metric and a three-layer response strategy to achieve fine-grained initialisation and rapid adaptation for different service modes (such as eMBB and mMTC).

Constructing a non-stationary traffic evaluation benchmark incorporating typical 5G service modes based on a real cellular dataset, and systematically validating the model’s advantages in prediction accuracy, convergence speed, and generalization capability.

The paper is structured as follows. Section 2 systematically reviews 5G/B5G requirements and existing traffic prediction approaches. Section 3 details the GMM-SCM-DCM architecture and algorithms. Section 4 describes the experimental setup and datasets. Section 5 presents and discusses the results. Finally, Section 6 concludes the paper and suggests future research directions.

3. Model Architecture

In this section, we first outline the proposed traffic forecasting framework, GMM-SCM-DCM. We then define the base learner, the meta-learner, and the DCM mechanism for long-term forecasting tasks.

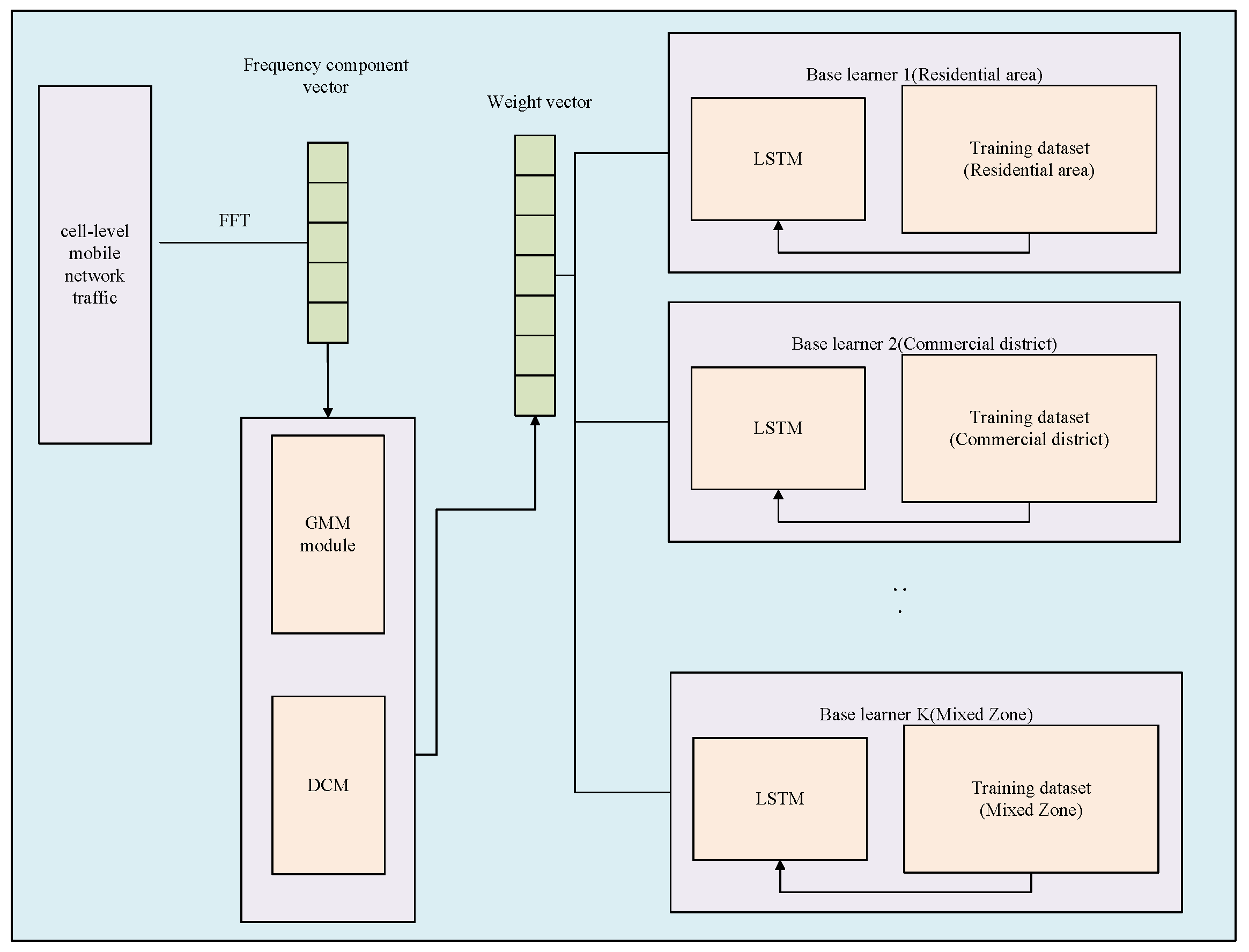

As illustrated in Figure 1, the GMM-SCM-DCM model comprises three steps. The first step processes cellular traffic payload data through operations such as the Fast Fourier Transform (FFT) to derive the traffic feature frequencies for each cell, which are then used to train the GMM. The second step feeds the frequency-domain features of new traffic tasks into the DCM for novelty detection. If the traffic pattern is identified as existing, the SCM mechanism assigns initial weights and executes the prediction task. If the pattern is classified as a similar pattern, the model provides similarity weights and is then fine-tuned on a fine-tuning dataset. If the pattern is classified as a completely new traffic pattern, a new component must be created; this step is termed component splitting. The third step utilizes the soft weights produced by the GMM to select an appropriate base learner for network traffic load prediction.

3.1. Problem Definition

This work addresses the prediction of total throughput at the base station level in 5G cellular networks, a critical input for network resource scheduling and capacity planning. We formulate the cellular traffic load prediction problem as a multivariate time series forecasting task. Consider $N$ cellular base stations, where the traffic load of base station $i$ at time $t$ is denoted as $x_t^{i}$. Given an observed historical sequence $X_t^{i} = (x_{t-T+1}^{i}, \dots, x_t^{i})$ of $T$ time steps, the objective is to predict the traffic load for the next $\tau$ time steps:

$$(\hat{x}_{t+1}^{i}, \dots, \hat{x}_{t+\tau}^{i}) = f(X_t^{i}; \theta),$$

where $f$ is the prediction model and $\theta$ represents the model parameters. In the meta-learning framework, each base station or region is treated as a task $\mathcal{T}_i$, with its training data $\mathcal{D}_i^{\mathrm{train}}$ and test data $\mathcal{D}_i^{\mathrm{test}}$. The objective of the meta-learner is to learn shared knowledge from multiple tasks, enabling a new task $\mathcal{T}_q$ to rapidly adapt with only a few samples:

$$\theta_q = \mathrm{Adapt}(\mathcal{D}_q^{\mathrm{train}}; \phi),$$

where $\phi$ is the parameter of the meta-learner.

3.2. Analysis of Dataset and Spatio-Temporal Characteristics

The experiments in this study are based on a publicly available mobile network traffic dataset collected by Telecom Italia in Milan. This dataset contains traffic records from November 2013 to January 2014, covering approximately 10,000 grid cells.

To construct a set of feature vectors suitable for this study, the time series data within the dataset was converted into frequency–domain information through the following steps:

- (1)

Time series construction: The entire time span of the dataset was divided into consecutive one-hour time intervals.

- (2)

Data normalization: The traffic load was normalized to the range [0, 1] using the Min-Max algorithm. The normalized sequences distinctly reveal the diverse traffic patterns exhibited by cells across different functional zones. As illustrated in

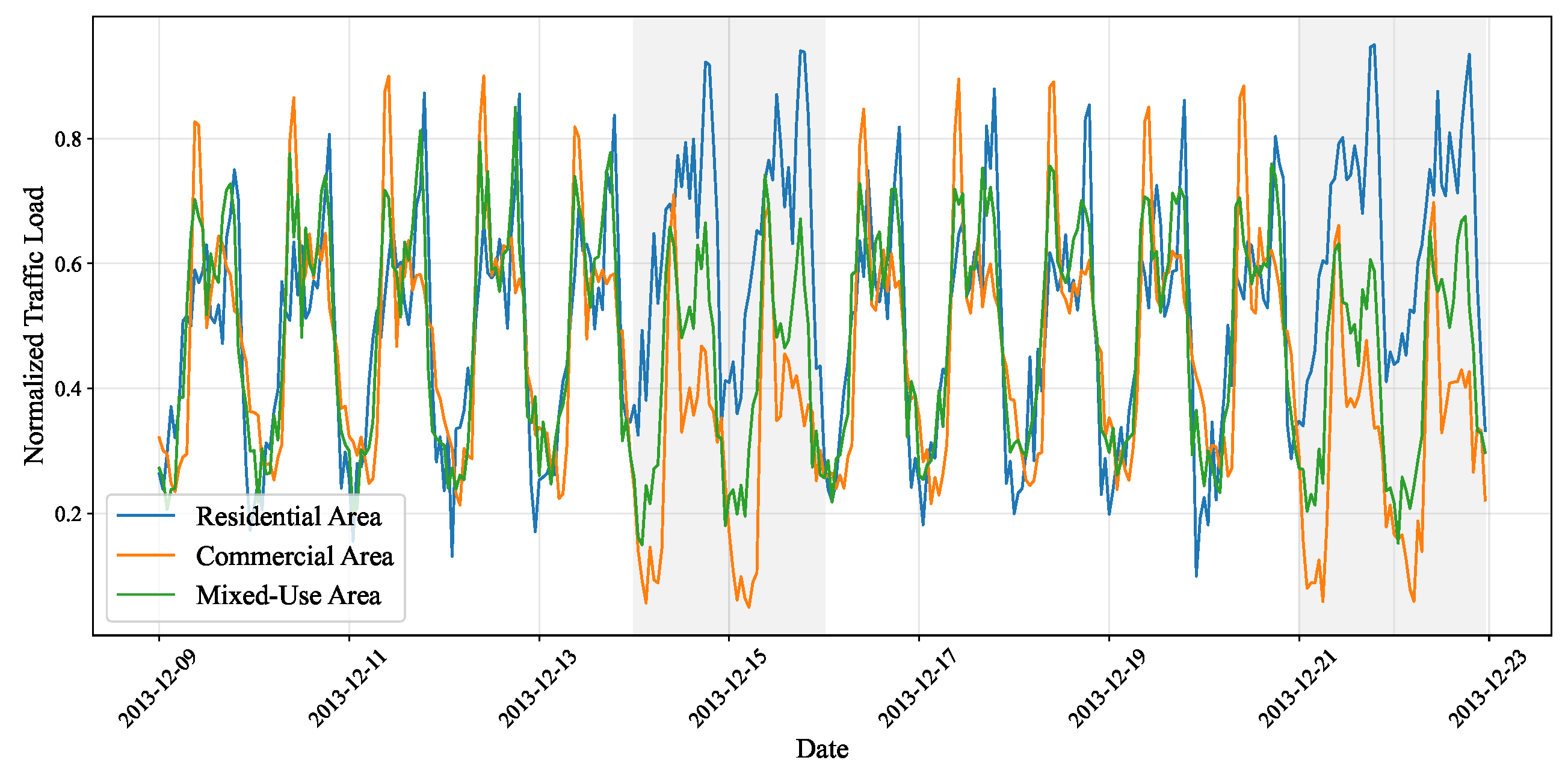

Figure 2, three representative cells display markedly different temporal characteristics during identical time periods, demonstrating the significant impact of functional differentiation within urban spaces on communication behavior. Residential areas exhibit stronger evening peak pulses (18:00–21:00), with a notable increase in baseline traffic throughout weekends. Commercial districts exhibit sharper morning peak traffic (8–11 a.m.) with markedly reduced weekend volumes. Mixed-use areas display distinct dual peaks during morning and evening hours, exhibiting moderate weekend effects.

- (3)

Feature extraction: Leveraging the periodic nature of cellular network traffic, each cell’s traffic load data is treated as a discrete signal with a period

h (one week). Its frequency domain characteristics are analyzed via Fast Fourier Transform (FFT). Five principal frequency components (corresponding to periods of 1 week, 1 day, 12 h, 8 h, and 6 h) are selected from the frequency domain information. Their real and imaginary parts collectively form a 10-dimensional feature vector:

- (4)

Foundational sample construction: Formulating the traffic forecasting task as a supervised learning problem, where each foundational sample comprises data from the preceding three hours as input, with the fourth hour serving as the forecast output.
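The four preprocessing steps above can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function names are hypothetical, the series is assumed to be hourly and to span a whole number of weeks (so that each target period falls exactly on an FFT bin), and the ordering of real and imaginary parts in the feature vector is an assumption.

```python
import numpy as np

# Target periods in hours: 1 week, 1 day, 12 h, 8 h, 6 h.
PERIODS_H = (168, 24, 12, 8, 6)

def min_max(x):
    """Scale a series to [0, 1]; a constant series maps to all zeros."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    return np.zeros_like(x) if span == 0 else (x - x.min()) / span

def fft_features(x):
    """Return the 10-dimensional feature [Re, Im] x 5 selected frequencies."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    if n == 0 or n % 168 != 0:
        raise ValueError("series length must be a whole number of weeks")
    spectrum = np.fft.rfft(x) / n
    # A component with period p hours sits at bin n / p.
    bins = [n // p for p in PERIODS_H]
    comps = spectrum[bins]
    return np.column_stack([comps.real, comps.imag]).ravel()

def sliding_samples(x, n_in=3):
    """Build (input, target) pairs: n_in past hours predict the next hour."""
    x = np.asarray(x, dtype=float)
    inputs = np.stack([x[i:i + n_in] for i in range(len(x) - n_in)])
    targets = x[n_in:]
    return inputs, targets

# Example: two weeks of synthetic traffic with a pure daily cycle.
hours = np.arange(336)
load = min_max(10 + 5 * np.sin(2 * np.pi * hours / 24))
z = fft_features(load)
X, y = sliding_samples(load)
```

For this synthetic series the daily component (the second of the five frequencies) carries almost all of the spectral energy, which is the kind of signature the meta-learner is meant to cluster on.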

Unlike previous studies, which assumed a stationary environment, this paper focuses on the non-stationary evolution of traffic patterns. To this end, we reconstructed and annotated the dataset (see Section 4.4 for details), thereby establishing an evaluation benchmark that more accurately reflects genuine dynamic variations.

3.3. Probabilistic Modeling of the Feature Space

Let the feature vector for a historical base task be $z \in \mathbb{R}^{d}$, which follows a mixture model composed of $K$ Gaussian distributions:

$$p(z \mid \Theta) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(z \mid \mu_k, \Sigma_k).$$

The model parameters are $\Theta = \{\pi_k, \mu_k, \Sigma_k\}_{k=1}^{K}$, where $\pi_k$ represents the mixture coefficient, denoting the probability of selecting the $k$th Gaussian model during data generation, $\mu_k$ denotes the mean of the $k$th Gaussian model, and $\Sigma_k$ denotes its covariance. Each Gaussian distribution corresponds to a category of base station traffic features exhibiting similar traffic patterns (e.g., commercial areas, residential areas, etc.).

For the $k$th Gaussian component ($k = 1, \dots, K$), the corresponding set of optimal weight vectors is

$$W_k = \{\, w_i^{*} : \text{task } i \text{ is assigned to component } k \,\}.$$

The class center of the weight vectors is

$$\bar{w}_k = \frac{1}{|W_k|} \sum_{w \in W_k} w.$$

When inputting the meta-feature $z_q$ for a new task $q$, the posterior probabilities for its membership in each component are as follows:

$$\gamma_k(z_q) = \frac{\pi_k \, \mathcal{N}(z_q \mid \mu_k, \Sigma_k)}{\sum_{j=1}^{K} \pi_j \, \mathcal{N}(z_q \mid \mu_j, \Sigma_j)}.$$

The component with the highest posterior probability is selected, and initial weights are assigned according to the following rules.

The initial weight allocation employs an SCM, selecting the base model weight whose probability distribution most closely approximates that of the meta-feature vector as the initial weight:

$$w_q^{(0)} = \bar{w}_{k^{*}}, \qquad k^{*} = \arg\max_{k} \gamma_k(z_q).$$

The Mahalanobis distance $d_M(z_q, \mu_k) = \sqrt{(z_q - \mu_k)^{\top} \Sigma_k^{-1} (z_q - \mu_k)}$ is employed to enhance the measurement of local similarity by introducing the covariance structure $\Sigma_k$ of the component.

Unlike traditional GMM meta-learners, the number of components $K$ in this study is not a pre-set, fixed hyperparameter. Instead, the proposed DCM mechanism (Section 3.4) dynamically adjusts the GMM structure (splitting, merging, or eliminating components) based on the novelty of patterns exhibited by new tasks. This enables the model to continuously adapt to non-stationary environments.
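To make the posterior computation concrete, the sketch below evaluates component responsibilities and the Mahalanobis distance for a new meta-feature with plain NumPy. The function names and the toy two-component mixture are illustrative assumptions; in practice the parameters would come from a fitted mixture model.

```python
import numpy as np

def log_gaussian(z, mean, cov):
    """Log-density of a multivariate normal at z."""
    diff = z - mean
    _, logdet = np.linalg.slogdet(cov)
    maha_sq = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (len(mean) * np.log(2 * np.pi) + logdet + maha_sq)

def posteriors(z, weights, means, covs):
    """Posterior probability of each component given meta-feature z."""
    log_p = np.array([
        np.log(w) + log_gaussian(z, m, c)
        for w, m, c in zip(weights, means, covs)
    ])
    log_p -= log_p.max()          # stabilise the exponentials
    p = np.exp(log_p)
    return p / p.sum()

def mahalanobis(z, mean, cov):
    """Mahalanobis distance of z from a component centre."""
    diff = z - mean
    return float(np.sqrt(diff @ np.linalg.solve(cov, diff)))

# Toy mixture: two well-separated 2-D components (hypothetical values).
weights = np.array([0.5, 0.5])
means = [np.zeros(2), np.array([5.0, 5.0])]
covs = [np.eye(2), np.eye(2)]
z_q = np.array([0.2, -0.1])

gamma = posteriors(z_q, weights, means, covs)
best = int(np.argmax(gamma))      # component chosen for initialisation
dist = mahalanobis(z_q, means[best], covs[best])
```

Working in log space avoids underflow when a meta-feature lies far from every component, which is exactly the situation that arises for novel traffic patterns.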

3.4. Dynamic Component Management Mechanism

DCM directly acts upon the meta-learner, indirectly optimizing the initialisation of the base learner by managing the evolution of GMM components. To determine whether the existing meta-learner is suitable for a new task $q$, that is, whether the meta-learner requires evolution, this paper designs a dual-modal similarity metric based on probabilistic and spatial similarity. This metric primarily reflects the novelty of the traffic pattern in the traffic data stream for the new task. The novelty metric is defined in terms of the meta-feature vector $z_q$ of the new task $q$, the posterior probability $\gamma_k(z_q)$, the mean vector $\mu_k$ of component $k$, the Mahalanobis distance $d_M$, and the meta-feature dimension $d$.

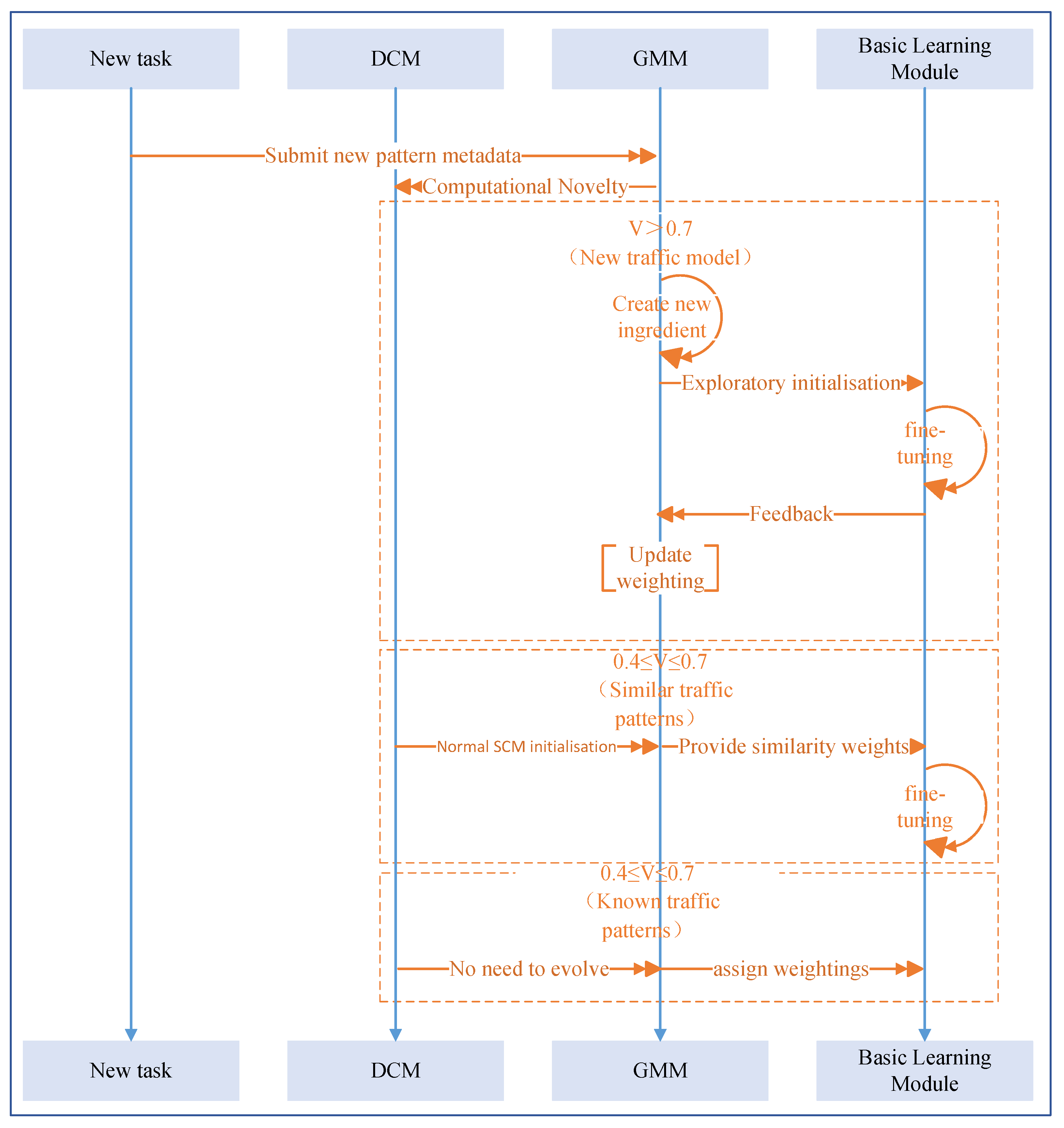

Based on the novelty metric for traffic patterns, this paper designs a three-tier response strategy utilizing DCM, as illustrated in Figure 3. When a new task requires prediction, DCM first assesses pattern novelty. If the pattern is classified as an existing traffic pattern, the SCM mechanism directly assigns initial weights and the model enters operational status. If it is judged to be a similar pattern, the model is given similarity weights and then fine-tuned on the fine-tuning dataset. If the pattern is classified as an entirely novel traffic pattern, a new component must be created, a process termed component splitting. The specific splitting methodology is detailed in the subsequent sections.
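The three-tier strategy amounts to a threshold dispatch on the novelty score. The sketch below shows one way to express it; the novelty score is assumed to be normalised to [0, 1], and both threshold values are placeholders chosen for illustration, not the settings used in the experiments.

```python
from enum import Enum

class Response(Enum):
    REUSE = "existing pattern: assign SCM weights directly"
    FINE_TUNE = "similar pattern: assign similarity weights, then fine-tune"
    SPLIT = "novel pattern: create a new component"

def dcm_response(novelty, similar_threshold=0.3, split_threshold=0.7):
    """Map a novelty score in [0, 1] to one of the three DCM responses.

    Both thresholds are hypothetical defaults used only for this example.
    """
    if not 0.0 <= novelty <= 1.0:
        raise ValueError("novelty must lie in [0, 1]")
    if novelty < similar_threshold:
        return Response.REUSE
    if novelty < split_threshold:
        return Response.FINE_TUNE
    return Response.SPLIT

decisions = [dcm_response(s) for s in (0.1, 0.5, 0.9)]
```

Keeping the dispatch separate from the novelty computation makes it straightforward to tune the thresholds without touching the metric itself.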

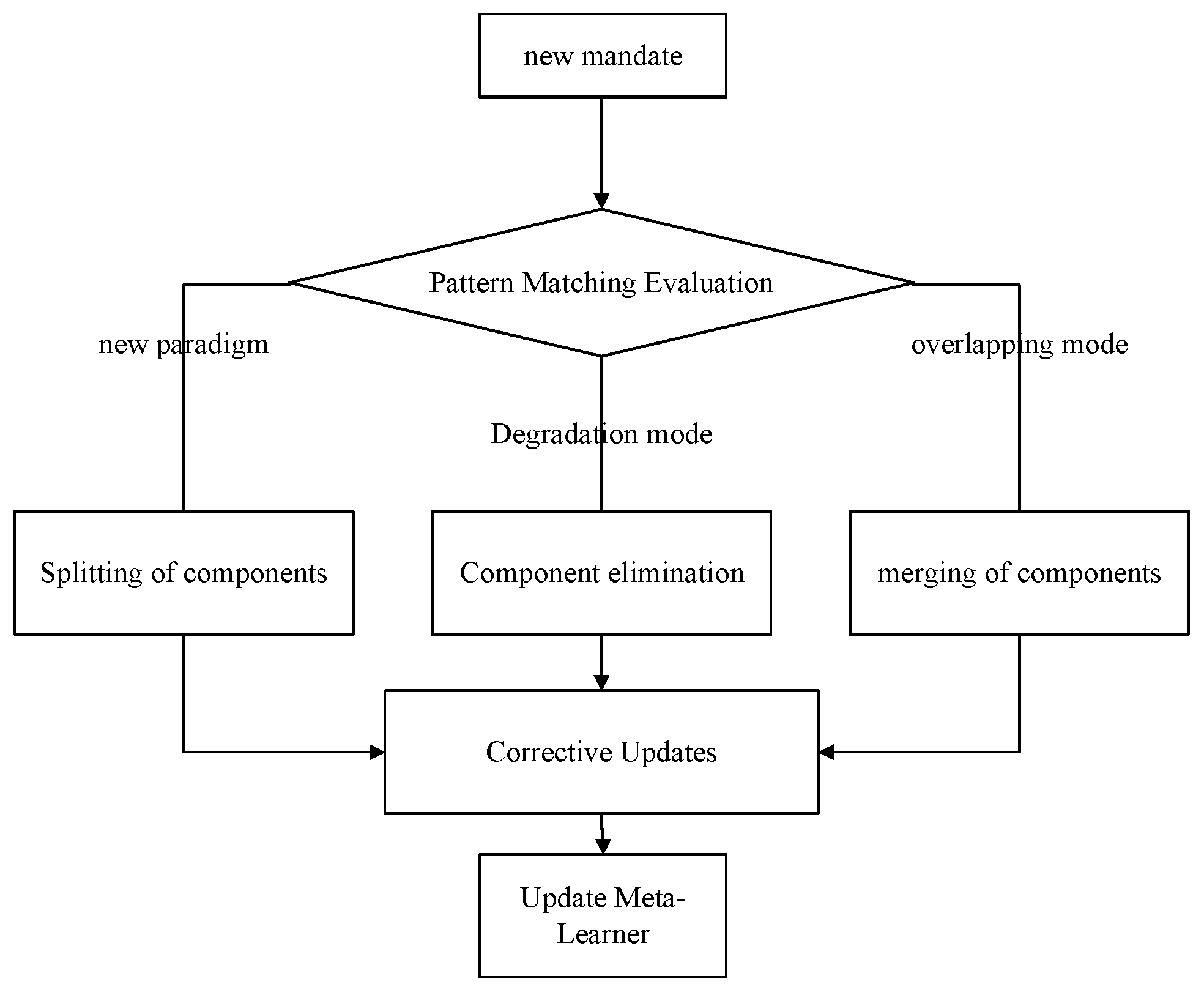

When existing components fail to meet the prediction requirements of new tasks, the DCM mechanism is triggered. Its operational flow is illustrated in Figure 4.

Three hyperparameters govern the DCM decisions: the splitting threshold, the merging threshold, and the minimum survival mixture coefficient.

When a component splitting decision is triggered, the parameters of the new component are updated using the following quantities: the index of the latest component; the mean and covariance interpolation coefficients; the global feature variance; the mixture coefficient transfer rate; the weighting noise standard deviation; and Gaussian noise.

When a component merging decision is triggered, the parameters are updated using the mean difference vector between the two components, the component similarity, the KL divergence, and the cosine similarity.

When the component contribution reaches the exclusion threshold, the exclusion mechanism is triggered. The exclusion criteria are as follows:

The design of the DCM fully accounts for the dynamic characteristics of 5G networks. The operational logic can be formalized as follows:

Component merging may correspond to the consolidation of functionally similar areas during network optimization or the decline of certain service patterns. When two components (e.g., patterns of two commercial areas) become similar due to urban development, merging them simplifies the model and prevents overfitting.

Component elimination may correspond to the decommissioning of a base station or the obsolescence of outdated service patterns (e.g., relocation of an industrial zone). This ensures efficient utilization of the meta-learner’s resources by focusing only on currently active patterns.
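As an illustration of the merging and elimination operations, the sketch below uses standard moment matching to merge two Gaussian components and a simple weight threshold to prune weak ones. This is a swapped-in textbook technique, not the update rule of the paper, and the function names and numeric values are hypothetical.

```python
import numpy as np

def merge_components(w1, mu1, cov1, w2, mu2, cov2):
    """Merge two Gaussian components by moment matching.

    The merged component preserves the total weight, mean, and
    covariance of the two-component sub-mixture.
    """
    w = w1 + w2
    a, b = w1 / w, w2 / w
    mu = a * mu1 + b * mu2
    d1, d2 = mu1 - mu, mu2 - mu
    cov = a * (cov1 + np.outer(d1, d1)) + b * (cov2 + np.outer(d2, d2))
    return w, mu, cov

def prune_components(weights, means, covs, min_weight):
    """Drop components whose mixture coefficient is below min_weight,
    then renormalise the remaining coefficients to sum to one."""
    weights = np.asarray(weights, dtype=float)
    keep = weights >= min_weight
    if not keep.any():
        raise ValueError("pruning would remove every component")
    kept = weights[keep]
    return kept / kept.sum(), np.asarray(means)[keep], np.asarray(covs)[keep]

# Merge two components that differ only along the first axis.
w, mu, cov = merge_components(
    0.3, np.array([0.0, 0.0]), np.eye(2),
    0.1, np.array([4.0, 0.0]), np.eye(2),
)

# Prune a component whose weight has decayed to 0.02.
new_w, new_mu, new_cov = prune_components(
    [0.6, 0.38, 0.02],
    [np.zeros(2), np.ones(2), np.full(2, 9.0)],
    [np.eye(2)] * 3,
    min_weight=0.05,
)
```

Moment matching inflates the merged covariance along the direction separating the two means, so the merged component still covers both original patterns.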

3.5. Initial Weighting Mechanism for Basic Learners

In the preceding section, both the SCM and the Multi-Component Mechanism (MCM) were discussed. For meta-learning traffic forecasting tasks under stationary traffic patterns, MCM may outperform SCM in certain scenarios. However, for non-stationary traffic patterns, this study adopts a “split–merge” path based on Gaussian Mixture Models. Within this path, a bijective relationship exists among component, traffic pattern, and weight set, which ensures that each component corresponds uniquely to a specific traffic pattern and its associated weights. The MCM’s composite weights may disrupt this bijection and may also induce oscillation in the evolutionary path. In contrast, the SCM aligns more closely with the logical requirements of this path. Consequently, this study adopts the SCM as the initial weighting mechanism for the base learner, a choice grounded in both the model framework and engineering practice.

The SCM mechanism operates on the principle that if the meta-features of two tasks exhibit similar probability distributions, their optimal model weights should likewise be similar. It selects the base model weight whose probability distribution most closely approximates that of the meta-feature vector as the initial weight:

$$w_q^{(0)} = \bar{w}_{k^{*}}, \qquad k^{*} = \arg\max_{k} \gamma_k(z_q),$$

where the Mahalanobis distance $d_M(z_q, \mu_k) = \sqrt{(z_q - \mu_k)^{\top} \Sigma_k^{-1} (z_q - \mu_k)}$ incorporates the covariance structure $\Sigma_k$ to enhance the measurement of local similarity.
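The contrast between the two initialisation mechanisms can be sketched in a few lines. Here SCM is simplified to copying the weight centre of the most probable component (omitting any Mahalanobis-based refinement), and MCM is taken to be a posterior-weighted blend of all centres; both readings, and the toy values, are assumptions made for illustration.

```python
import numpy as np

def scm_init(posterior, centers):
    """SCM: copy the weight centre of the single most probable component."""
    return centers[int(np.argmax(posterior))].copy()

def mcm_init(posterior, centers):
    """MCM: blend all component weight centres by posterior probability."""
    return posterior @ centers

# Two components with three-dimensional weight centres (toy values).
posterior = np.array([0.6, 0.4])
centers = np.array([[1.0, 1.0, 1.0],
                    [3.0, 3.0, 3.0]])

w_scm = scm_init(posterior, centers)   # stays on component 0's centre
w_mcm = mcm_init(posterior, centers)   # lands between the two centres
```

The blended MCM weights correspond to neither component, which is the sense in which composite weights break the one-to-one link between a component and its weight set.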

4. Experiments and Analysis

4.1. Experimental Objectives

This section aims to validate the proposed GMM-SCM-DCM meta-learning framework for non-stationary cellular traffic forecasting. This includes:

(1) Validating the effectiveness of the meta-learning initialisation strategy: Demonstrating the framework’s superiority in prediction accuracy and convergence speed compared with random initialisation, fixed initialisation, and other meta-learning approaches.

(2) Validating adaptability to non-stationary environments: By constructing a non-stationary cellular traffic test set, demonstrating that the DCM mechanism handles distribution drift more effectively than traditional methods.

(3) Validating model robustness: Testing model performance across different base learner architectures and training set sizes.

4.2. Baseline Algorithms and Parameter Settings

For a comprehensive evaluation, five categories of baseline methods are selected for comparison in this section.

(1) Traditional Statistical/Shallow Learning Methods

SVR (RBF): Support Vector Regression with Radial Basis Function kernel. Parameters: kernel coefficient $\gamma$, regularization parameter $C$, and epsilon-tube width $\varepsilon$; implemented using LIBSVM.

SARIMA: Seasonal Autoregressive Integrated Moving Average model. Parameter configuration: non-seasonal orders $(p, d, q)$ and seasonal orders $(P, D, Q)_s$, where $p$, $P$ represent autoregressive orders, $d$, $D$ represent differencing orders, $q$, $Q$ represent moving average orders, and the seasonal period $s$ indicates daily periodicity (24 steps for hourly data).

(2) Deep Learning Methods

CLN-FIWV: LSTM with fixed initialisation weights. Parameters: weight initialisation using the Xavier uniform distribution, together with the learning rate, batch size, and hidden layer dimensions; total trainable parameters: 428.

CLN-RSIWV: LSTM with randomly sampled initialisation weights. Parameters: randomly sampled weight initialisation, together with the learning rate, batch size, and hidden layer dimensions; total trainable parameters: 428.

(3) Meta-Learning Methods

ML-TP (KNN): Meta-learning framework using K-Nearest Neighbors as the meta-learner. Parameters: number of neighbors $k = 3$, distance metric: Euclidean distance, weight assignment: uniform weights, feature dimension $d = 10$.

dmTP (DNN): Meta-learning framework using a deep neural network as the meta-learner. Architecture: three fully connected layers [300, 300, 400], activation function: ReLU, output dimension matching the base learner’s weight dimension, optimizer: Adam.

GMM-SCM: Gaussian Mixture Model meta-learner with Single-Component Mechanism. Parameters: number of components $K$ selected automatically via BIC within a preset range, covariance type: full, initialisation: K-Means++, with a fixed maximum number of iterations.

GMM-SCM-DCM: The complete proposed model, extending GMM-SCM with the Dynamic Component Management (DCM) mechanism. Parameters: splitting threshold, merging threshold, minimum survival mixture coefficient, weight noise standard deviation, and mixture coefficient transfer rate.

4.3. Parameter Settings

(1) Base learner configuration

To test the robustness of the entire model, this experiment employs LSTM networks of two distinct architectures as base learners, utilizing mean squared error (MSE) as the loss function. Table 3 details the parameter settings for the two LSTM network architectures.

(2) Meta-learner and correction parameters

KNN model: K-nearest neighbors set to 3.

dmTP’s DNN architecture: a network with three hidden layers of sizes [300, 300, 400].

GMM model: Implemented using scikit-learn’s GaussianMixture. The number of Gaussian components $K$ is selected automatically within a preset range via the Bayesian Information Criterion (BIC).

Feedback strength coefficient: Hyperparameter in the MCM correction mechanism set to 1.0.

(3) Training settings

Meta-training dataset: Primarily tested at two scales—1000 samples and 7000 samples—to validate model effectiveness under small-sample conditions.

Fine-tuning set: Simulates data scarcity scenarios, primarily testing four scales: 24, 168, 500, and 840 (corresponding to 1 day, 1 week, approximately 3 weeks, and 5 weeks of data, respectively).

Training Epochs: 100.

4.4. Dataset Construction

To reflect traffic non-stationarity, this section reconstructs stationary and non-stationary test sets based on the Telecom Italia Milan dataset. As the Telecom Italia Milan dataset does not directly provide labels such as “residential area” or “commercial area”, we must classify cells according to their traffic pattern characteristics. This study employs a data-driven unsupervised learning algorithm for clustering analysis. The core principle is that cellular traffic from different functional zones (e.g., residential, commercial) exhibits distinct, quantifiable pattern characteristics. We define a set of well-specified meta-features to characterize these patterns, apply the K-Means++ algorithm for cell clustering, and finally assign functional zone labels based on the statistical features of each cluster.

4.4.1. Feature Engineering

Let $x_p(t)$ denote the normalized traffic load time series of cell $p$, and assume that traffic from different functional zones exhibits distinct periodic intensities and profile shapes. Based on this, we define five meta-features to characterize each cell:

- (1) $f_1$: Ratio of average weekend traffic to average weekday traffic. Typically, this ratio exceeds 1 in residential areas and falls below 1 in commercial zones.

- (2) $f_2$: Average traffic volume during the weekday morning peak period (e.g., 8:00–10:00).

- (3) $f_3$: Average traffic volume during the weekday evening peak period (e.g., 18:00–20:00).

- (4) $f_4$: Information entropy of the traffic sequence, used to measure traffic uncertainty:

$$f_4 = -\sum_{i=1}^{B} \rho_i \log \rho_i.$$

Here, we partition the range of $x_p(t)$ into $B$ equal-width bins; $\rho_i$ denotes the probability that the traffic value falls within the $i$th bin.

- (5) $f_5$: 24-hour lag autocorrelation coefficient, measuring the strength of diurnal periodicity.

Computing the above five features for all cells yields the feature matrix $F \in \mathbb{R}^{P \times 5}$, where $P$ denotes the total number of cells. Z-score standardization is then performed to eliminate differences in scale:

$$\tilde{F}_{p,d} = \frac{F_{p,d} - \mu_d}{\sigma_d}.$$

Here, $\mu_d$ and $\sigma_d$ represent, respectively, the mean and standard deviation of the $d$th feature across all cells.
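The five meta-features and the standardization step can be sketched as below. Several details are assumptions made for the example: the series is hourly, starts on a Monday at 00:00, and covers whole weeks; the peak windows are 8:00–10:00 and 18:00–20:00; ten histogram bins and the natural logarithm are used for the entropy; and the lag-24 autocorrelation is approximated by the Pearson correlation between the series and its 24-hour shift.

```python
import numpy as np

def meta_features(x, n_bins=10):
    """Five meta-features of one cell's normalised hourly series."""
    x = np.asarray(x, dtype=float)
    if len(x) == 0 or len(x) % 168 != 0:
        raise ValueError("series must cover a whole number of weeks")
    hours = np.arange(len(x))
    hour_of_day = hours % 24
    day_of_week = (hours // 24) % 7          # 0 = Monday (assumed start)
    weekend = day_of_week >= 5
    weekday = ~weekend

    ratio = x[weekend].mean() / x[weekday].mean()
    morning = x[weekday & (hour_of_day >= 8) & (hour_of_day < 10)].mean()
    evening = x[weekday & (hour_of_day >= 18) & (hour_of_day < 20)].mean()

    counts, _ = np.histogram(x, bins=n_bins)  # equal-width bins
    probs = counts[counts > 0] / counts.sum()
    entropy = -np.sum(probs * np.log(probs))

    acf24 = np.corrcoef(x[:-24], x[24:])[0, 1]
    return np.array([ratio, morning, evening, entropy, acf24])

def zscore(features):
    """Standardise each column; constant columns map to zero."""
    features = np.asarray(features, dtype=float)
    std = features.std(axis=0)
    std = np.where(std == 0, 1.0, std)
    return (features - features.mean(axis=0)) / std

# Two weeks of a perfectly repeating daily profile.
hours = np.arange(336)
series = 0.5 + 0.5 * np.sin(2 * np.pi * hours / 24)
f = meta_features(series)
Z = zscore(np.array([[1.0, 10.0], [2.0, 10.0], [3.0, 10.0]]))
```

Because the synthetic profile is identical on every day, the weekend-to-weekday ratio and the 24-hour autocorrelation both come out at essentially 1, which is a useful sanity check before running on real cells.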

4.4.2. K-Means++ Clustering Algorithm

This section applies the K-Means++ clustering algorithm. Given the standardized feature matrix $\tilde{F}$, partitioning the $P$ cells into $K$ clusters $\{C_1, \dots, C_K\}$ so as to minimize the within-cluster sum of squares can be defined as:

$$\min_{C_1, \dots, C_K} \sum_{k=1}^{K} \sum_{p \in C_k} \lVert \tilde{f}_p - c_k \rVert^2,$$

where $\tilde{f}_p$ is the standardized feature vector of cell $p$ and $c_k$ represents the centroid of cluster $C_k$.

Because this problem is NP-hard, this study employs the standard K-Means algorithm combined with K-Means++ initialisation to obtain approximate solutions. K-Means++ selects initial centroids as follows:

- (1) Select the first centroid $c_1$ uniformly at random from the data points.

- (2) For each data point $\tilde{f}_p$ not yet selected as a center, calculate the squared distance to the nearest selected center, $D(\tilde{f}_p)^2 = \min_j \lVert \tilde{f}_p - c_j \rVert^2$.

- (3) Select the next centroid with probability proportional to this squared distance, $\Pr(\tilde{f}_p) = D(\tilde{f}_p)^2 / \sum_{p'} D(\tilde{f}_{p'})^2$.

- (4) Repeat steps (2) to (3) until $K$ centers are selected.

- (5) Run standard K-Means iterations until the centers stabilize or the maximum number of iterations is reached.

Finally, the optimal number of clusters is determined by jointly considering the elbow point of the within-cluster sum of squares and the peak of the silhouette coefficient.
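Steps (1) to (4) of the seeding procedure can be sketched with NumPy as follows. The function name and the synthetic three-cluster data are illustrative; the subsequent K-Means iterations of step (5) are omitted.

```python
import numpy as np

def kmeans_pp_init(X, k, rng=None):
    """Choose k initial centres from the rows of X with K-Means++ seeding."""
    rng = np.random.default_rng(rng)
    X = np.asarray(X, dtype=float)
    n = len(X)
    # Step 1: first centre chosen uniformly at random.
    centers = [X[rng.integers(n)]]
    # Step 2: squared distance of every point to its nearest centre.
    d2 = np.sum((X - centers[0]) ** 2, axis=1)
    for _ in range(1, k):
        total = d2.sum()
        # Step 3: sample proportionally to squared distance
        # (fall back to uniform if every point coincides with a centre).
        probs = d2 / total if total > 0 else np.full(n, 1.0 / n)
        idx = rng.choice(n, p=probs)
        centers.append(X[idx])
        d2 = np.minimum(d2, np.sum((X - X[idx]) ** 2, axis=1))
    return np.array(centers)

# Three tight synthetic clusters in two dimensions.
data_rng = np.random.default_rng(0)
X = np.vstack([data_rng.normal(loc, 0.1, size=(20, 2)) for loc in (0, 5, 10)])
init_centers = kmeans_pp_init(X, k=3, rng=1)
```

Points already chosen as centres have zero squared distance and therefore zero selection probability, so the procedure never picks the same data point twice unless the data contain duplicates.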

4.4.3. Classification of Cellular Networks

Based on the calculations in Section 4.4.2, when K = 4, the following clustering results are obtained, as detailed in Table 4. This clustering outcome provides the foundation for constructing the subsequent stationary and non-stationary test sets.

4.4.4. Construction of the Dataset

(1) Training set

Cells from residential and commercial areas are pooled and randomly shuffled, and 70% of them are randomly sampled to form the training set.

(2) Stationary Test Set

The remaining 30% of residential and commercial cells, which are not part of the training set, constitute the stationary test set. Traffic patterns in these zones exhibit pronounced, reproducible periodicity (such as morning and evening rush hours and weekend patterns) and a relatively stable distribution.

(3) Non-Stationary Test Set

All cells in non-stationary areas are selected.

As shown in Table 5, the meta-training set comprises 70% randomly selected residential and commercial cells (data from 1 November 2013 to 1 December 2013). The test portion consists of both stationary and non-stationary test sets, formed from the remaining 30% of stationary cells and all non-stationary cells, respectively. For these test cells, data from 16 December 2013 to 22 December 2013 are used for fine-tuning, and all comparative models are ultimately evaluated using data from 23 December 2013 to 29 December 2013. This design ensures complete spatial and temporal isolation of test data from training data.
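The spatial and temporal separation described above can be expressed compactly. In this sketch the function name, the random seed, and the use of integer cell identifiers are assumptions; only the 70/30 ratio and the date ranges come from the text.

```python
import numpy as np

# Date ranges for each phase, as stated in the text.
PERIODS = {
    "meta_train": ("2013-11-01", "2013-12-01"),
    "fine_tune": ("2013-12-16", "2013-12-22"),
    "evaluate": ("2013-12-23", "2013-12-29"),
}

def split_cells(cell_ids, train_frac=0.7, seed=0):
    """Shuffle stationary cell ids and split them into train and test sets."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(np.asarray(cell_ids))
    n_train = int(round(train_frac * len(shuffled)))
    return shuffled[:n_train], shuffled[n_train:]

# Example with 100 hypothetical stationary cell ids.
train_cells, test_cells = split_cells(range(100))
```

Because a cell appears in exactly one of the two arrays and the three date ranges do not overlap, no test sample shares either its location or its time window with the meta-training data.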

4.4.5. Simulation and Mapping of 5G Service Patterns

Although the original dataset originates from the 4G era, its diverse traffic patterns demonstrate high comparability and foresight regarding early 5G service characteristics. The four region types identified through clustering can be mapped to typical 5G scenarios:

Commercial Areas: Mapped to eMBB-dominated scenarios, characterized by sharp morning peaks on workdays with high throughput demands.

Residential Areas: Mapped to hybrid eMBB and partial mMTC scenarios, exhibiting evening peaks on workdays and stable all-day traffic on weekends, reflecting applications like home broadband and smart home devices.

Mixed-Use Areas: Represent the spatiotemporal mixture of eMBB services, constituting the most common complex scenario in urban environments.

Non-Stationary Areas: Represent traffic anomalies potentially caused by emergency events or cyberattacks, or unpredictable traffic in new service pilot zones, serving as critical testbeds for model adaptability.

Through this construction method, the non-stationary test set in this paper effectively simulates the concept drift problems caused by service dynamics and urban evolution in 5G networks.

5. Results and Analysis

5.1. Foundational Performance Evaluation

(1) Under stationary conditions, all meta-learning methods (KNN, DNN, GMM) still outperform traditional methods, though by moderate margins. For instance, the best meta-learning method (GMM-SCM) achieves a relative reduction of approximately 13.6% in MAE compared with the best conventional deep learning method (CLN-FIWV).

(2) GMM-SCM performs marginally better than KNN and DNN, demonstrating the efficacy of probabilistic modeling.

(3) GMM-SCM-DCM performed very closely to GMM-SCM (with an MAE difference of merely 0.0004), consistent with expectations. In stationary environments, the advantages of dynamic fusion are negligible and may even yield slightly inferior results to GMM-SCM due to the introduction of minor noise.

(1) In non-stationary environments, all methods exhibit significant performance degradation, with MAE generally increasing and $R^2$ markedly decreasing, which aligns with expectations for non-stationary traffic forecasting tasks.

(2) The advantage of GMM-SCM-DCM becomes more pronounced. MAE is reduced by approximately 9.3% compared with GMM-SCM and improves by approximately 14.4% over the best baseline method (dmTP).

(3) KNN and DNN meta-learners exhibited severe performance degradation, as they struggled to handle novel patterns (non-stationarity) unseen in the training set. Conversely, the GMM-SCM-DCM model demonstrated superior generalization capability and robustness through its probabilistic framework and the dynamic weighting of DCM.
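For reference, the two reported metrics and the relative-reduction figures quoted above can be computed as in the sketch below. The function names and the numeric inputs are illustrative and are not values from the result tables.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def r2(y_true, y_pred):
    """Coefficient of determination."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

def relative_reduction(baseline, proposed):
    """Fractional reduction of an error metric relative to a baseline."""
    return (baseline - proposed) / baseline

# Toy example: one of four predictions is off by one unit.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
err = mae(y_true, y_pred)
score = r2(y_true, y_pred)
gain = relative_reduction(0.10, 0.09)
```

A relative reduction is always measured against the baseline's error, so the same absolute MAE gap yields a larger percentage when the baseline error is small.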

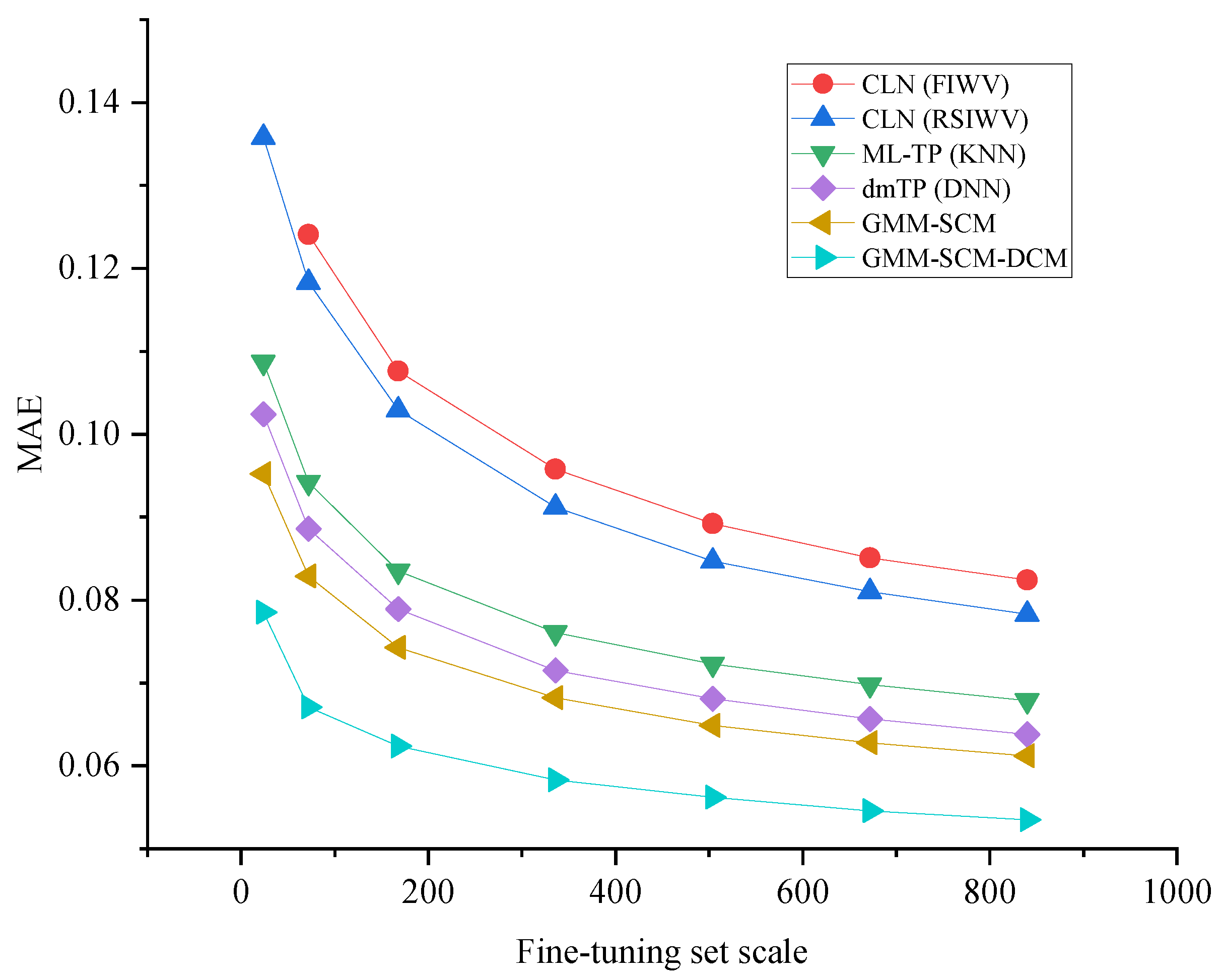

Figure 5 illustrates the impact of fine-tuning dataset scale on performance (non-stationary test set). As shown in Figure 5, the CLN-RSIWV and CLN-FIWV curves exhibit the highest error, declining only gradually with increasing data volume. The KNN, DNN, and GMM-SCM curves occupy intermediate positions. The GMM-SCM-DCM curve consistently remains at the bottom, maintaining low error even with minimal data, demonstrating robust performance under small-sample conditions. As data volume increases, all curves gradually converge, but GMM-SCM-DCM ultimately achieves the best performance.

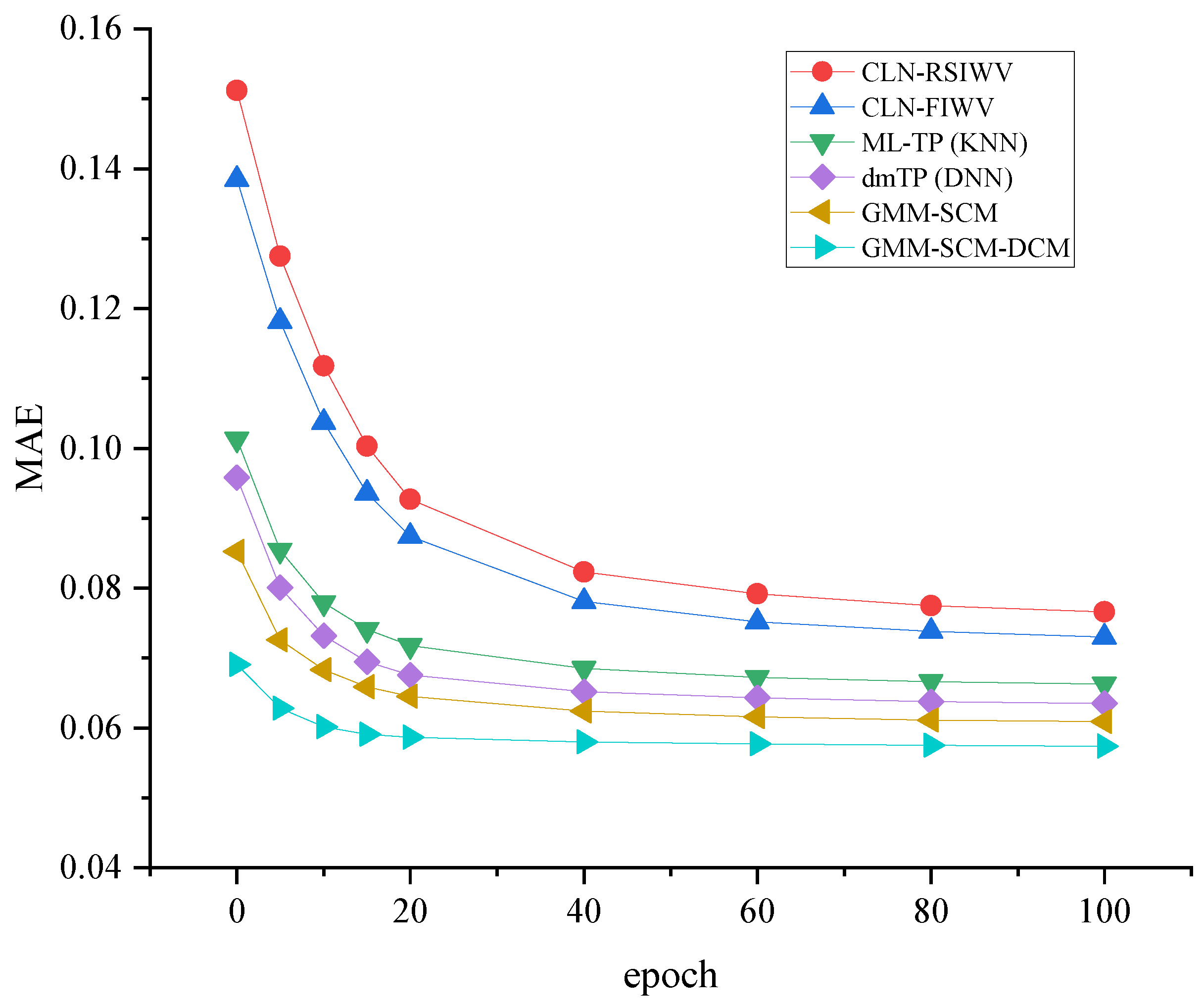

Figure 6 illustrates the convergence behavior of different models. As shown, the error curve for the CLN method starts from an extremely high value and requires approximately 80 iterations to converge. GMM-SCM begins at a lower starting point. GMM-SCM-DCM exhibits the lowest starting point and converges to a stable state within approximately 10–15 iterations, indicating that its initial weights are very close to the optimal solution, which improves training efficiency.

5.2. Model Robustness Testing

To demonstrate that the proposed framework is independent of specific base learner architectures and maintains stable performance across varying data scales, this section conducts robustness testing using a non-stationary test set.

Analysis of the results in Table 8 reveals that regardless of whether the base learner employs LSTM or GRU, the GMM-SCM-DCM framework consistently and significantly outperforms the conventional initialisation method (CLN-FIWV). GRU, owing to its fewer parameters and simpler structure, exhibits a marginal advantage under the limited data conditions of this experiment. This indicates that the proposed meta-learning framework constitutes a generalized architecture whose effectiveness is independent of specific underlying network choices, demonstrating robust performance.

Figure 7 illustrates the sensitivity of different models to meta-training set size. As shown, dmTP (DNN) performance is highly dependent on the number of meta-training samples, even underperforming KNN at 1000 samples before improving with increased data. GMM-SCM and GMM-SCM-DCM demonstrate robust performance even with small meta-training sets, exhibiting stable improvement with increasing data volume. This validates the superior data efficiency and small-sample meta-learning capabilities of the GMM-SCM-DCM model.

Moreover, the performance of all models improves with an increase in the meta-training set size. Notably, even under extremely small sample conditions (1000 samples), GMM-SCM-DCM still provides high-quality initialisation, significantly outperforming traditional methods with larger datasets (7000 samples).

5.3. Ablation Studies

To validate the effectiveness of the proposed DCM and SCM mechanisms individually, as well as their synergistic effect when combined, ablation experiments were conducted on non-stationary traffic datasets. The comparison models are as follows:

GMM-MCM-DCM: Employing the Multi-Component Mechanism in place of SCM as the negative control group.

GMM-SCM (Static): Employs the SCM mechanism but disables DCM functionality (i.e., the number of GMM components remains fixed and is not dynamically adjusted). This group serves to validate the role of DCM.

GMM-SCM-DCM (Ours): This paper’s proposed complete model.

The results of the ablation experiments are shown in Table 9. Analysis reveals:

GMM-MCM-DCM vs. GMM-SCM-DCM: GMM-MCM-DCM exhibited the poorest performance (highest MAE, lowest R²), coupled with slow convergence. This supports the view of this paper: under multi-component fusion mechanisms, synthetic weights disrupt the bijective relationship of “component-pattern-weight set”, causing training oscillations and performance degradation. This demonstrates the efficacy and necessity of the SCM mechanism.

GMM-SCM (Static) vs. GMM-SCM-DCM: Disabling DCM resulted in a noticeable decline in model performance on the non-stationary test set (MAE increased from 0.0835 to 0.0898), and more iterations were required to converge. This indicates that when confronted with novel patterns unseen in the training set, the fixed GMM structure fails to characterize and adapt to them effectively, leading to a performance bottleneck. This directly demonstrates the core contribution of the DCM mechanism in addressing non-stationarity.

Overall, GMM-SCM-DCM achieved optimal performance, demonstrating the synergistic effect of SCM and DCM mechanisms: SCM ensures precise initialisation within components, while DCM ensures the component structure can dynamically evolve to adapt to external changes.
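The difference between single-component selection and multi-component fusion discussed above can be illustrated with a minimal sketch. It is not the implementation used in this paper: the function names `scm_init` and `mcm_init`, the array shapes, and the toy values are assumptions made for illustration. The sketch only assumes that each GMM component is paired with one stored weight set and that posterior probabilities over components are available.

```python
import numpy as np

def scm_init(posteriors, weight_sets):
    """Single-component selection: return the stored weight set of the
    most probable component, together with its index."""
    k = int(np.argmax(posteriors))
    return weight_sets[k].copy(), k

def mcm_init(posteriors, weight_sets):
    """Multi-component fusion: posterior-weighted average of all stored
    weight sets, which yields a synthetic weight vector."""
    return np.tensordot(posteriors, weight_sets, axes=1)

# Toy example (hypothetical values): two components with nearly opposite weight sets.
posteriors = np.array([0.55, 0.45])
weight_sets = np.array([[1.0, -1.0, 0.5],
                        [-1.0, 1.0, 0.5]])

w_scm, k = scm_init(posteriors, weight_sets)   # exactly one stored weight set
w_mcm = mcm_init(posteriors, weight_sets)      # a blend that matches neither set
```

In this toy case the fused vector lies between the two stored weight sets and corresponds to neither pattern, which is one way to picture how fusion can break the one-to-one link between a component and its weight set.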

5.4. Cross-Dataset Generalization Capability Validation

The experiments in this section evaluate the GMM-SCM-DCM framework on another independent public dataset, to demonstrate that its effectiveness is not dataset-specific and that it generalizes well.

The dataset selected for these experiments is the Telecom Shanghai Dataset. Like Telecom Italia, it is a commonly used public benchmark in the field. Data preprocessing procedures are consistent with those applied to the Telecom Italia dataset.

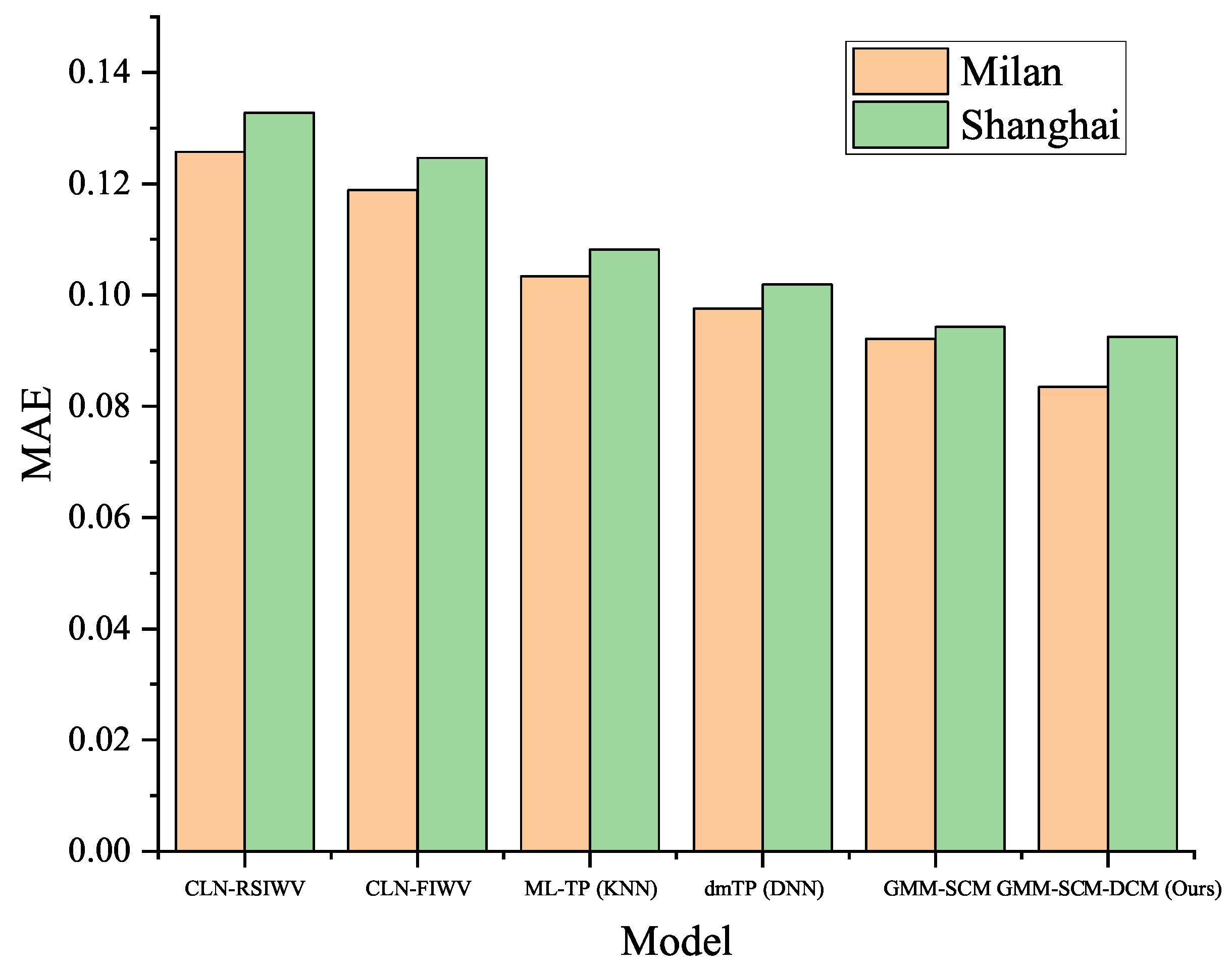

As shown in Table 10 and Figure 8, the GMM-SCM-DCM framework demonstrated exceptional robustness when confronted with the more complex distribution and potentially higher noise levels of the Shanghai dataset. Although all models exhibited varying degrees of performance degradation, GMM-SCM-DCM still delivered superior results, indicating it is a highly reliable, versatile, and robust framework.

5.5. Visualization of GMM Component Dynamic Evolution Process

The objective of this section’s experiments is to visually demonstrate the DCM mechanism’s management of the entire lifecycle of GMM components (generation, splitting, merging, and elimination) within a long-duration simulated task flow, thereby validating its capability to handle non-stationary traffic fluctuations.

A simulated scenario is constructed from non-stationary traffic flows as follows:

Initial stable period (T0–T50): The model has learned two stable patterns from the meta-training set: “commercial zone” (marked by a morning rush hour) and “residential zone” (marked by an evening rush hour).

Non-stationary zone emerges (T50–T100): A large-scale construction project (e.g., new underground station) commences in one area, causing its traffic flow to lose periodicity and become disordered with violent fluctuations. The DCM must recognize this as a new “non-stationary zone” pattern.

Functional evolution resulting in the formation of hybrid zones (T100–T200): An original “commercial zone” gradually evolves in function due to the completion of surrounding residential buildings, beginning to exhibit characteristics of both morning and evening rush hours. The DCM must detect this change and merge the old commercial pattern with the new hybrid pattern.
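The kinds of traffic patterns in this scenario can be mimicked with a small synthetic generator. The sketch below is an illustration under stated assumptions, not the simulation used in the experiments: hourly time slots, Gaussian-shaped peaks at 09:00 and 19:00, the noise levels, and all function names (`daily_profile`, `simulate_zone`, `lag_autocorr`) are hypothetical choices. The lag-24 autocorrelation is used as a simple indicator of daily periodicity.

```python
import numpy as np

def daily_profile(hours, peak_hour, width=2.0):
    """Gaussian-shaped bump centred on peak_hour, wrapped over a 24 h day."""
    gap = np.abs(hours - peak_hour)
    gap = np.minimum(gap, 24.0 - gap)
    return np.exp(-0.5 * (gap / width) ** 2)

def simulate_zone(kind, n_days, rng):
    """Return an hourly traffic series for one zone type (assumed shapes)."""
    hours = np.tile(np.arange(24, dtype=float), n_days)
    if kind == "construction":
        # No daily structure: independent, skewed fluctuations.
        return rng.exponential(scale=0.5, size=hours.size)
    if kind == "commercial":
        base = daily_profile(hours, 9.0)           # morning rush hour
    elif kind == "residential":
        base = daily_profile(hours, 19.0)          # evening rush hour
    elif kind == "hybrid":
        base = 0.5 * (daily_profile(hours, 9.0) + daily_profile(hours, 19.0))
    else:
        raise ValueError(f"unknown zone type: {kind}")
    return base + 0.05 * rng.standard_normal(hours.size)

def lag_autocorr(x, lag=24):
    """Sample autocorrelation at the given lag (24 h = daily periodicity)."""
    x = x - x.mean()
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))

rng = np.random.default_rng(0)
commercial = simulate_zone("commercial", 14, rng)
construction = simulate_zone("construction", 14, rng)
print(lag_autocorr(commercial), lag_autocorr(construction))
```

Under these assumptions the commercial series shows a strong daily autocorrelation while the construction series shows almost none, which is the kind of loss of periodicity that the novelty detection is expected to pick up.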

Figure 9 clearly demonstrates the DCM mechanism’s pivotal role in addressing non-stationary evolution of urban functions, specifically:

Capturing new non-stationary patterns (T = 75): When construction zones induce non-stationary flow patterns (high entropy values, weak periodicity), existing commercial and residential components could not adequately characterize these patterns. The DCM generated a new component (Comp.3) via splitting operations, whose weight steadily increased, demonstrating the model’s successful identification and adaptation to this abrupt change.

Adapting to functional evolution (T = 175): As an area evolves from purely commercial to mixed use, its flow characteristics begin incorporating both morning and evening peak periods. DCM detects feature overlap and convergence between the original commercial component (Comp.1) and the emerging mixed-use pattern, triggering a merging operation. The original commercial component is absorbed, with a new, more accurate mixed-use component (Comp.4) becoming dominant, optimizing the model structure and eliminating redundancy.

Clearing extinct patterns (T = 250): A residential zone component (Comp.2) experiences persistently declining traffic activity, its weight eventually falling below the minimum survival threshold. DCM then triggers an elimination mechanism, removing this component to concentrate model resources on currently active patterns.

Achieving a new steady state: Ultimately, the model is dominated by Comp.3 (non-stationary zone) and Comp.4 (mixed zone), accurately reflecting the city’s entirely new functional state following its evolution.
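The component lifecycle described above can be sketched in a few lines. This is a simplified illustration and not the DCM algorithm of this paper: components are reduced to a mixing weight and a mean vector, splitting is omitted, and the thresholds `novelty_dist`, `merge_dist`, `min_weight`, and `init_weight` as well as the function names are hypothetical.

```python
import numpy as np

def spawn_if_novel(weights, means, x, novelty_dist=3.0, init_weight=0.1):
    """Generation: add a component at x if x is far from every existing mean."""
    if np.linalg.norm(means - x, axis=1).min() <= novelty_dist:
        return weights, means
    new_weights = np.append(weights * (1.0 - init_weight), init_weight)
    return new_weights, np.vstack([means, x])

def eliminate(weights, means, min_weight=0.05):
    """Elimination: drop components below the survival threshold, renormalize."""
    keep = weights >= min_weight
    kept = weights[keep]
    return kept / kept.sum(), means[keep]

def merge_closest(weights, means, merge_dist=0.5):
    """Merging: fuse the two closest components if their means nearly coincide."""
    n = len(weights)
    if n < 2:
        return weights, means
    dists = np.linalg.norm(means[:, None, :] - means[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)
    i, j = np.unravel_index(np.argmin(dists), dists.shape)
    if dists[i, j] >= merge_dist:
        return weights, means
    w_new = weights[i] + weights[j]
    mu_new = (weights[i] * means[i] + weights[j] * means[j]) / w_new
    keep = np.ones(n, dtype=bool)
    keep[[i, j]] = False
    return np.append(weights[keep], w_new), np.vstack([means[keep], mu_new])

# Toy run (hypothetical values): one fading component, two overlapping ones,
# and a new observation far from all existing means.
weights = np.array([0.48, 0.02, 0.50])
means = np.array([[0.0, 0.0], [5.0, 5.0], [0.2, 0.1]])
weights, means = spawn_if_novel(weights, means, np.array([10.0, 10.0]))
weights, means = eliminate(weights, means)
weights, means = merge_closest(weights, means)
```

After the three steps in this toy run, two components remain: the newly generated one and the merged one, with mixing weights that still sum to one.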

5.6. Computational Complexity Analysis

This section provides both theoretical analysis and experimental comparison of the computational complexity for the proposed method and baseline algorithms during both training and inference phases.

Theoretical Analysis:

SARIMA: Time complexity is , where p, q are non-seasonal AR and MA orders, P, Q are seasonal AR and MA orders, and L is the sequence length. Space complexity is .

SVR: Training using the sequential minimal optimization algorithm has complexity between and , where n is the number of samples and d is the feature dimension. Inference complexity is , where is the number of support vectors.

LSTM (CLN-RSIWV/FIWV): Training complexity is , where T is the sequence length, L is the number of LSTM layers, h is the hidden dimension, and is the input dimension. The complexity for a single time step forward pass is .

ML-TP (KNN): Training only requires storing meta-features with complexity . Inference requires computing similarities between the new task and all historical tasks with complexity , where N is the number of tasks and d is the feature dimension.

dmTP (DNN): Training complexity is , where E is the number of epochs, B is the batch size, L is the number of layers, and is the number of neurons in layer l. Inference complexity is .

GMM-SCM: Training using the Expectation Maximization algorithm has per-iteration complexity , where K is the number of components, N is the number of samples, and d is the feature dimension. Inference complexity for computing posterior probabilities is .

GMM-SCM-DCM: The training complexity is the same as GMM-SCM. During online adaptation, the DCM mechanism adds operations for pattern novelty detection and component management: pattern novelty detection complexity , component splitting , component merging , and component elimination .

Experimental Results:

All models were evaluated under identical hardware conditions, recording average training and inference times on the non-stationary test set. The test set contains 100 tasks, each comprising 168 h (1 week) of traffic data with a time slot length of 1 h. Experimental results are shown in Table 11.

The experimental results show that traditional statistical methods (SARIMA, SVR) have advantages in training and inference speed but limited prediction accuracy. Deep learning methods (CLN series) require longer training times but offer fast inference. Among meta-learning methods, the KNN meta-learner has the fastest training but slower inference; the DNN meta-learner has the highest training complexity; and GMM-based methods achieve a good balance between training and inference efficiency. GMM-SCM-DCM significantly improves prediction accuracy and adaptation capability in non-stationary environments with only a modest increase in computational overhead (approximately 19% increase in training time and 7% increase in inference time compared with GMM-SCM), demonstrating good engineering feasibility.

Furthermore, this paper analyzes the relationship between computational complexity and task scale for each model. As the number of tasks N increases, the inference time of the KNN meta-learner grows linearly with N, while the inference time of GMM-based methods remains stable, making them more suitable for large-scale deployment scenarios.
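The scaling argument can be made concrete with a minimal sketch of the two inference steps. It is an illustration under stated assumptions rather than the code used in the experiments: the GMM is assumed to have diagonal covariances, and the function names and toy data are hypothetical. The KNN lookup evaluates one distance per stored task, whereas the GMM posterior evaluates one density per component.

```python
import numpy as np

def knn_lookup(query, task_features, k=3):
    """Compare the query with every stored task: work grows with the number of tasks N."""
    dists = np.linalg.norm(task_features - query, axis=1)  # N distance evaluations
    return np.argsort(dists)[:k]

def gmm_posterior(query, weights, means, variances):
    """Posterior over K diagonal-covariance components: work grows with K, not N."""
    log_p = (np.log(weights)
             - 0.5 * np.sum(np.log(2.0 * np.pi * variances), axis=1)
             - 0.5 * np.sum((query - means) ** 2 / variances, axis=1))
    log_p -= log_p.max()            # stabilize before exponentiating
    p = np.exp(log_p)
    return p / p.sum()

# Toy data (hypothetical): 10 stored tasks with 2 meta-features each, and a 2-component GMM.
task_features = np.arange(20, dtype=float).reshape(10, 2)
query = np.array([4.2, 5.1])
neighbors = knn_lookup(query, task_features)

gmm_weights = np.array([0.5, 0.5])
gmm_means = np.array([[0.0, 0.0], [4.0, 5.0]])
gmm_vars = np.ones((2, 2))
post = gmm_posterior(query, gmm_weights, gmm_means, gmm_vars)
```

Adding more historical tasks enlarges `task_features` and therefore the KNN lookup, but leaves the GMM posterior computation unchanged as long as the number of components stays fixed.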

6. Conclusions

The experiments in this paper demonstrate that the GMM-SCM-DCM model outperforms traditional deep learning methods and other meta-learning baseline algorithms in cellular traffic forecasting tasks, for both stationary and non-stationary traffic, in terms of prediction accuracy, convergence speed, and robustness.

Analysis reveals that within the GMM-SCM-DCM framework, the GMM provides probabilistic modeling of weight distributions, offering greater interpretability and statistical efficiency than KNN and DNN. SCM delivers precise initialisation under stationary conditions. DCM is pivotal for handling non-stationary traffic, with its dynamic fusion mechanism significantly enhancing the model’s generalization capability and robustness. This framework is particularly suited for scenarios involving new base stations or sudden events, where historical data is scarce or diverges substantially from past patterns, rendering traditional methods ineffective. Our approach enables rapid provision of high-quality initialisation, achieving an efficient “cold start”.

The findings of this study not only provide an efficient meta-learning solution for cellular network traffic prediction but also offer valuable modeling insights and implementation pathways for broader non-stationary time series forecasting problems. Although GMM-SCM-DCM demonstrates excellent performance in non-stationary traffic prediction, several promising research directions remain:

Online and continual learning: Integrating the DCM mechanism with online learning frameworks to achieve lifelong learning on continuously arriving data streams while mitigating catastrophic forgetting.

Federated meta-learning: Extending the proposed framework to federated learning environments, enabling collaborative meta-learning across cellular networks under data privacy constraints.

Interpretability and visualization: Incorporating explainable AI techniques (e.g., SHAP, LIME) to provide visual interpretations of the meta-learner’s component assignment and pattern evolution processes.

Cross-modal fusion: Enhancing the perception and prediction capability for non-stationary patterns by incorporating external multimodal data (e.g., meteorological, social events, traffic flow).

Lightweight design and edge deployment: Optimizing the model architecture and component management strategies to adapt to the resource constraints of edge computing devices, enabling real-time prediction on the end side.

Future work will focus on these directions to further enhance the model’s applicability and intelligence in practical network environments.