Abstract

Time-domain signal models have been widely applied to single-channel music source separation tasks due to their ability to overcome the limitations of fixed spectral representations and phase information loss. However, the high acoustic similarity and synchronous temporal evolution between vocals and accompaniment make accurate separation challenging for existing time-domain models. These challenges are mainly reflected in two aspects: (1) the lack of a dynamic mechanism to evaluate the contribution of each source during feature fusion, and (2) difficulty in capturing fine-grained temporal details, often resulting in local artifacts in the output. To address these issues, we propose an attention-driven time-domain convolutional network for vocal and accompaniment source separation. Specifically, we design an embedding attention module to perform adaptive source weighting, enabling the network to emphasize components more relevant to the target mask during training. In addition, an efficient convolutional block attention module is developed to enhance local feature extraction. This module integrates an efficient channel attention mechanism based on one-dimensional convolution while preserving spatial attention, thereby improving the ability to learn discriminative features from the target audio. Comprehensive evaluations on public music datasets demonstrate the effectiveness of the proposed model and its significant improvements over existing approaches.

1. Introduction

With the exponential growth of digital media, the volume of music data has surged dramatically, and music plays an increasingly integral role in many aspects of human life. In the field of music information retrieval (MIR) [1], single-channel music source separation has emerged as a fundamental yet challenging task that supports a wide range of downstream applications, such as music genre classification, melody extraction, singing voice detection, and singer identification. Advances in these applications greatly enhance the functionality and user experience of intelligent audio systems [2,3,4,5]. In the music industry, source separation enables producers to remix classic recordings, extract vocals to create a cappella versions, or isolate instrumental tracks for sampling and mashups. In music education, separated tracks allow students to practice along with isolated instruments, helping them better understand complex musical arrangements. In the entertainment sector, karaoke applications rely on vocal removal to generate backing tracks, while streaming platforms use source separation to offer interactive features such as real-time vocal suppression or instrument emphasis based on user preferences. Source separation also facilitates accessibility applications, such as enhancing vocal clarity for hearing-impaired listeners or creating customized audio mixes for therapeutic purposes. From a technical perspective, separated sources significantly improve the accuracy of music information retrieval tasks, including chord recognition, tempo detection, and music transcription, which are fundamental to automated music analysis systems and AI-powered music composition tools.

However, vocals and accompaniment in monaural recordings share overlapping frequency content and evolve synchronously in time, which makes accurate separation difficult and calls for sophisticated modeling techniques. Developing effective methods for single-channel music source separation therefore remains a critical research issue in intelligent audio analysis. Feature extraction plays a pivotal role in such methods, as it transforms raw audio signals into meaningful representations that capture the distinctive characteristics of different sources. In vocal and accompaniment separation, discriminative features are essential for distinguishing the harmonic structures of singing voices from the complex spectral patterns of musical instruments. Effective feature extraction enables the model to identify source-specific attributes, such as pitch continuity in vocals, rhythmic patterns in drums, and harmonic progressions in accompaniment, which are crucial for accurate separation. Moreover, high-quality feature representations directly benefit downstream applications: precise vocal extraction improves melody transcription and singer identification systems, while clean accompaniment separation facilitates music remixing and automatic music transcription. Without robust feature extraction, separation models struggle to capture the subtle acoustic differences between overlapping sources, leading to artifacts and degraded performance in subsequent music information retrieval tasks.

Recently, deep learning-based methods have become the dominant paradigm in music source separation, driven by the rapid development of neural networks. These models capture complex time-frequency patterns and semantic representations from large-scale data, significantly outperforming traditional methods in separation quality. Early neural network-based methods for music source separation operated primarily in the frequency domain. They first convert the audio waveform into a spectrogram and treat the task as an image-processing problem, employing convolutional architectures to learn spatial patterns for source identification and separation. However, music signals are inherently temporal, and many salient acoustic features, such as transient structures and phase coherence, are not fully captured by the magnitude spectrogram. Moreover, frequency-domain methods often discard or approximate phase information, which can lead to noticeable artifacts and degraded audio quality. To address these limitations, researchers have proposed time-domain approaches that operate directly on the raw waveform. These methods use deep neural networks to learn the fine-grained temporal patterns that differentiate vocals from accompaniment, enabling end-to-end waveform modeling without explicit spectral transformations. By preserving phase and temporal continuity, time-domain models have shown promising results, producing more natural separations with fewer artifacts.

Despite this progress, existing methods still have two limitations. First, during feature fusion, the contributions of the mixture features and of the target music representations to the final mask are not explicitly modeled. Without a dynamic mechanism to evaluate the contribution of each source, the network cannot accurately distinguish the importance of different sources during mask generation, which reduces the precision and robustness of the separation results. Second, existing methods struggle to capture fine-grained temporal details and often produce local artifacts in the output, particularly in transient or rapidly changing segments. These artifacts disrupt the temporal continuity and structural consistency of the separated sources, significantly degrading the naturalness and perceptual quality of the final audio.

To address these issues, we propose an attention-driven time-domain convolutional network (ADTDCN) for vocal and accompaniment separation. ADTDCN contains two modules: the embedding attention module (EAM) and the efficient convolutional block attention module (E-CBAM). (1) The EAM introduces an adaptive weighting mechanism that dynamically learns the importance of the target embeddings and the mixed signal according to their relevance to the separation objective, replacing static fusion strategies. By compressing temporal and channel information into compact scalar representations and learning fusion weights through a lightweight attention network, EAM strengthens source-specific features and improves separation performance and generalization. (2) The E-CBAM enhances fine-grained temporal feature learning by replacing CBAM’s dimensionality-reducing channel attention with a 1D efficient channel attention mechanism and adapting its spatial attention to sequence data. This design captures both cross-channel dependencies and local temporal correlations more effectively, mitigating artifacts caused by disrupted temporal continuity and improving the separation network’s ability to model transient acoustic details.

Our contributions are summarized as follows:

(1) We design an embedding attention module (EAM) to perform adaptive source weighting, enabling the network to emphasize signal features more relevant to the target mask during training.

(2) We develop an efficient convolutional block attention module (E-CBAM) that integrates an efficient channel attention mechanism to strengthen local feature extraction while preserving spatial attention, thereby improving the model’s ability to learn discriminative features from target audio signals.

(3) Extensive experiments conducted on three publicly available music datasets demonstrate that the proposed ADTDCN significantly outperforms state-of-the-art baselines, validating its superior effectiveness for vocal and accompaniment source separation.

The remainder of the paper is organized as follows. After introducing related works in Section 2, we formulate the proposed attention-driven time-domain convolutional network in Section 3. Then, we report the experimental details and conduct a detailed experimental analysis in Section 4. Finally, we summarize our work and provide a direction for future work in Section 5.

2. Related Works

In this section, we organize related work by processing domain (frequency domain versus time domain) rather than by learning paradigm. Under this domain-based view, each domain may include both learning-based methods (shallow machine learning and deep learning) and non-learning signal-processing approaches. To avoid confusion, we treat shallow machine learning and deep learning as learning-based methods within the same domain; deep learning can be viewed as a high-capacity subclass of machine learning. Both cast separation as supervised estimation of masks or waveforms, and their performance scales with data and model capacity. Shallow machine learning depends on feature engineering, whereas deep learning is more sensitive to data quantity and regularization; in both cases, misestimation can introduce artifacts. In this paper, "traditional methods" refers specifically to non-learning techniques such as CASA/heuristics, decomposition (e.g., NMF), and repetition/melody-based methods.

2.1. Traditional Music-Separation Methods

Traditional methods for music source separation can be categorized into three types. First, computational auditory scene analysis (CASA)-based methods simulate human auditory perception, using hand-crafted rules and heuristic algorithms to extract target sources from mixed signals [6,7,8]. For example, Roman et al. [9] proposed a supervised learning-based speech separation algorithm, while Hu et al. [10] introduced the Hu-Wang model and subsequently developed improved versions [11,12,13]. These approaches hand-craft grouping rules and masks from perceptual cues, offering lightweight, interpretable, and biologically inspired processing; however, they generalize poorly to complex music scenes with heavy spectral overlap and dense arrangements, and their performance degrades in noisy environments. Second, non-negative matrix factorization (NMF)-based methods decompose the magnitude spectrogram of a mixed signal into a set of non-negative basis spectra and corresponding activations, from which the individual sources can be reconstructed [14,15]. For instance, Lee et al. [14] made significant contributions to speech enhancement and speaker extraction, and their techniques were later adapted for music separation. Chanrungutai et al. [15] further extended this framework with several improvements. These methods are also lightweight and interpretable but are limited by their linear modeling assumptions, which makes them less effective for nonlinear and complex source mixtures. Third, melody- and periodicity-based methods leverage differences in pitch contours, melodic structure, and temporal periodicity between vocals and accompaniment [16,17,18]. Rafii et al. [17] proposed the repeating pattern extraction technique (REPET), which exploits the periodicity of the accompaniment versus the variability of the vocals to separate the repeating background from the non-repeating foreground; such methods work well when the backing track is highly repetitive. However, they rely heavily on accurate melody extraction and often perform poorly on non-melodic vocal signals or on complex harmonics and non-periodic rhythmic patterns.
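To make the NMF-based decomposition concrete, the following minimal NumPy sketch factorizes a non-negative magnitude spectrogram with the classic Lee–Seung multiplicative updates for the Euclidean cost and then reconstructs one source by Wiener-style soft masking. The function names, rank, and iteration count are illustrative assumptions, not the specific formulations of [14] or [15].

```python
import numpy as np


def nmf(V, rank, n_iter=200, eps=1e-10, seed=0):
    """Factorize a non-negative magnitude spectrogram V (freq x frames)
    as V ~= W @ H with Lee-Seung multiplicative updates (Euclidean cost)."""
    rng = np.random.default_rng(seed)
    n_freq, n_frames = V.shape
    W = rng.random((n_freq, rank)) + eps    # non-negative basis spectra
    H = rng.random((rank, n_frames)) + eps  # non-negative activations
    for _ in range(n_iter):
        # Multiplicative updates keep every entry non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


def reconstruct_source(V, W, H, components, eps=1e-10):
    """Wiener-style soft mask: keep the share of V explained by `components`."""
    V_src = W[:, components] @ H[components, :]
    return V_src / (W @ H + eps) * V


# Toy example: a rank-2 "spectrogram" split into its two components.
rng = np.random.default_rng(1)
V = rng.random((20, 2)) @ rng.random((2, 30))
W, H = nmf(V, rank=2)
source_0 = reconstruct_source(V, W, H, [0])
source_1 = reconstruct_source(V, W, H, [1])
```

Because the soft masks of all components sum to one, the reconstructed sources add back up to the mixture; the linear factorization itself is what limits these methods on strongly nonlinear mixtures.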

2.2. Frequency-Domain Music-Separation Methods

In recent years, frequency-domain separation methods have also attracted considerable research attention. These methods typically transform raw audio waveforms into spectrograms and treat them as images, so that computer vision techniques can be applied to music recognition, classification, and separation. For example, Wang et al. [19] formulated the separation task as a binary classification problem and applied a support vector machine to generate an ideal binary mask (IBM). However, this method suffers from significant information loss when time-frequency units are misclassified, and the DNN structure it uses fails to capture the temporal dependencies of audio signals. To address these issues, Huang et al. [20] introduced recurrent neural networks (RNNs) that improve separation performance by optimizing the predicted vocal and accompaniment signals with ideal ratio masks (IRMs). Jansson et al. [21] applied the U-Net image segmentation model to spectrograms of mixed audio, learning time-frequency masks for the individual sources. Similarly, Park et al. [22] proposed a stacked hourglass network (SH-4stack) that learns multi-scale features from spectrograms to generate source-specific masks for vocal–accompaniment separation. Additionally, Lu et al. [23] proposed a music source separation (MSS) approach based on the band-split RoPE Transformer (BS-RoFormer), which combines band-split processing with a hierarchical Transformer architecture. Tong et al. [24] proposed a frequency-domain network that explicitly splits the spectrogram of the mixture into several subbands and introduces a sparsity-based encoder to model the different frequency bands. These methods share a common approach: they transform music waveforms into spectrograms and treat separation as an image analysis problem, which enables the use of computer vision models. However, music signals are inherently temporal, and their dynamic characteristics cannot be fully captured by static spectrogram representations alone.
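The two mask types mentioned above can be illustrated on toy magnitude spectrograms. This sketch uses the magnitude-ratio form of the IRM, |V| / (|V| + |A|); some works define the IRM with power ratios instead, so the exact formula used in [20] may differ.

```python
import numpy as np


def ideal_binary_mask(S_vocal, S_acc):
    """IBM: 1 where the vocal magnitude exceeds the accompaniment, else 0."""
    return (np.abs(S_vocal) > np.abs(S_acc)).astype(float)


def ideal_ratio_mask(S_vocal, S_acc, eps=1e-10):
    """Magnitude-ratio IRM |V| / (|V| + |A|), a soft mask in [0, 1]."""
    v, a = np.abs(S_vocal), np.abs(S_acc)
    return v / (v + a + eps)


# Toy 2x2 "spectrograms" (frequency x time); complex STFT values also work.
S_vocal = np.array([[3.0, 1.0], [0.0, 2.0]])
S_acc = np.array([[1.0, 1.0], [2.0, 0.0]])
ibm = ideal_binary_mask(S_vocal, S_acc)  # [[1, 0], [0, 1]]
irm = ideal_ratio_mask(S_vocal, S_acc)   # approx. [[0.75, 0.5], [0, 1]]
# Applying the soft mask to the mixture magnitude gives the vocal estimate.
vocal_estimate = irm * np.abs(S_vocal + S_acc)
```

The IBM discards the tied unit (1 vs. 1) entirely, whereas the IRM splits it evenly, which is the information loss on misclassified units that motivates soft masks.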

2.3. Time-Domain Music-Separation Methods

As noted in Section 1, time-domain approaches were proposed to overcome these limitations by operating directly on raw waveforms. They use deep neural networks to learn the fine-grained temporal patterns that distinguish vocals from accompaniment, enabling end-to-end waveform modeling without explicit spectral transformations. Because they preserve phase information and temporal continuity, time-domain models have produced more natural separations with fewer artifacts.

The time-domain music separation process consists of three main stages: signal processing, model training, and waveform reconstruction. In contrast to frequency-domain separation models, time-domain approaches directly apply convolutional encoding to the raw waveform during signal processing, without any time–frequency transformation. Consequently, waveform reconstruction does not suffer from phase estimation issues and requires only a corresponding convolutional decoding operation. This waveform-based strategy achieved breakthrough performance in speech separation, notably with the Conv-TasNet model [27]. Takahashi et al. [25] proposed a nested architecture called D3Net for music source separation. Inspired by the densely connected convolutional network DenseNet [26], the model uses multi-dilated convolutions with exponentially growing receptive fields, enabling the simultaneous modeling of multiple resolutions. D3Net achieved strong performance in separating vocals and accompaniment sources such as drums, bass, and other instruments. Drawing inspiration from Conv-TasNet [27], Rouard et al. [28] introduced Demucs, a time-domain music source separation model. Demucs retained the core structure of Conv-TasNet, incorporated an LSTM layer between the encoder and decoder to capture temporal dependencies, and adopted a U-Net-style architecture for feature fusion. Although Demucs surpassed the state-of-the-art models of its time in terms of source-to-distortion ratio (SDR), its accuracy in separating vocals from complex mixtures still lagged behind some competing models. Qiao et al. [29] further optimized Conv-TasNet by introducing sample-level convolutions in both the encoder and decoder to preserve fine-grained acoustic features. They proposed a new time-domain architecture, VAT-SNet, which incorporates vocal and accompaniment embeddings generated by an auxiliary network as references and significantly improves the accuracy of vocal–accompaniment separation. Building on these studies, time-domain approaches have been extended to various applications, including speech enhancement, speech recognition, and multimodal speech separation [30,31,32,33].

Although the above methods achieved promising results, they lacked dynamic mechanisms to evaluate the relative contribution of different sources during feature fusion and struggled to capture fine-grained temporal details, often resulting in local artifacts in the separated outputs. Therefore, we propose an attention-driven time-domain convolutional network (ADTDCN) to achieve more accurate separation of vocals and accompaniment.

3. Attention-Driven Time-Domain Convolutional Network

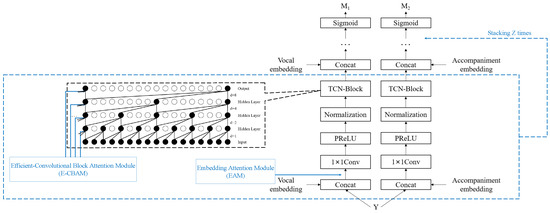

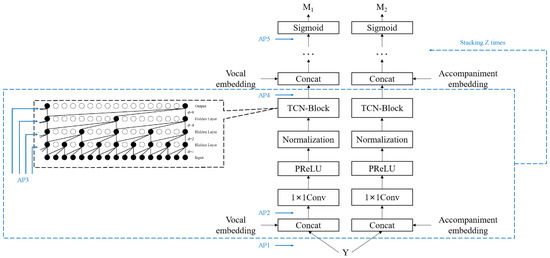

The overall architecture of ADTDCN is shown in Figure 1. Its core innovations are the embedding attention module and the efficient convolutional block attention module. First, at each concatenation-based fusion between the main-path signal and the target music representation in the separation network, an embedding attention module (EAM) is inserted so that the network automatically learns how strongly to weight the target music embedding and the main-path signal. This focuses attention on the feature dimensions most relevant to the target music and thereby refines the solution space of the mask learned during training. Second, an efficient convolutional block attention module (E-CBAM) is incorporated into each layer of the one-dimensional deep temporal convolutional network block (TCN-Block). Through its channel and spatial attention mechanisms, E-CBAM compensates for the limited local feature extraction caused by dilated convolution in the TCN and strengthens the focus on task-relevant information along both the channel and spatial dimensions, further improving the accuracy of the target music mask produced by the separation network. In the following sections, we describe each component of ADTDCN in detail.

Figure 1.

The overall architecture of ADTDCN.

3.1. Separation Network

The proposed ADTDCN model is built upon the VAT-SNet architecture [29], which serves as its backbone network. The separator takes the encoded music signal as input and combines it with the target music representations from the auxiliary network to generate a mask for each target source. First, the time-domain music signal $X$ is passed through the encoder to obtain the encoded music signal $Y$:

$$Y = \mathrm{Conv1D}_{N}(X)$$

where $Y$ denotes the $N$-dimensional latent representation of the music waveform after the first convolutional layer, $X$ denotes the matrix composed of all music clips, and $\mathrm{Conv1D}_{N}(\cdot)$ denotes the first convolutional layer with $N$ channels.
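As a shape check on the encoder, the sketch below implements a strided 1D convolution with 512 filters of length 16 in NumPy. The stride of 8 samples is an assumption inferred from the 7999 × 512 input size reported in Section 3.2, since (64,000 − 16)/8 + 1 = 7999; the ReLU follows the Conv-TasNet convention and is likewise assumed. In PyTorch the same layer would be `torch.nn.Conv1d(1, 512, kernel_size=16, stride=8)`.

```python
import numpy as np

SAMPLE_RATE = 16_000  # MIR-1K sampling rate (from the text)
DURATION_S = 4        # clips are cut to 4 s (from the text)
N_CHANNELS = 512      # encoder channels N (Section 3.2)
KERNEL = 16           # encoder kernel size (Section 3.2)
STRIDE = 8            # ASSUMPTION: inferred from the 7999-frame size


def n_frames(n_samples, kernel=KERNEL, stride=STRIDE):
    """Output length of an unpadded strided 1D convolution."""
    return (n_samples - kernel) // stride + 1


def encode(x, weights, stride=STRIDE):
    """Map a waveform x (samples,) to Y (frames, channels).

    weights: (channels, kernel) filter bank; each output frame is the dot
    product of one windowed segment with every filter, followed by ReLU.
    """
    kernel = weights.shape[1]
    segments = np.lib.stride_tricks.sliding_window_view(x, kernel)[::stride]
    return np.maximum(segments @ weights.T, 0.0)  # ReLU is an assumption


rng = np.random.default_rng(0)
x = rng.standard_normal(SAMPLE_RATE * DURATION_S)  # stand-in for a 4 s clip
W_enc = rng.standard_normal((N_CHANNELS, KERNEL))  # hypothetical learned filters
Y = encode(x, W_enc)
print(Y.shape)  # (7999, 512)
```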

3.2. Embedding Attention Module

Then, ADTDCN concatenates the target music representations (vocal embedding and accompaniment embedding) with the mixed music signal Y along the channel dimension. Both target embeddings are extracted by the auxiliary network introduced in Section 3.4, a module specifically designed to provide source-specific reference features for the main separation network. However, the target sources and the mixture contribute differently to mask estimation, so greater weights should be assigned to the more informative components. To avoid assigning these weights manually, we introduce an embedding attention module (EAM) into the original fusion process. EAM enables the model to learn the relative importance of each input signal automatically during training, thereby estimating the target music masks more accurately.

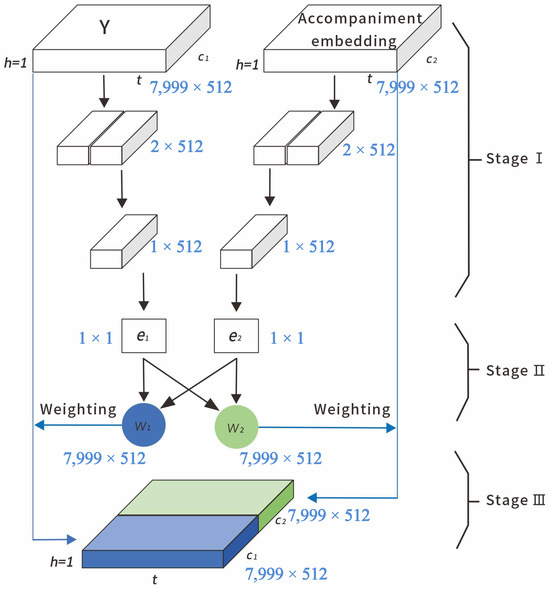

EAM functions as a time-varying mixture-of-experts gate that fuses the mixture-conditioned and embedding-conditioned streams by learning reliability weights rather than performing additional separation. Its internal attention network operates on global temporal summaries, capturing slowly varying envelopes and timbral statistics that remain informative under co-modulated musical dynamics. This summary is passed through a lightweight normalization–projection–nonlinearity stack that produces two logits and a softmax gate, yielding a convex, frame-wise weighting of the two streams. Because only the final weighted combination is broadcast over time, the computational cost is dominated by a single element-wise mixing of two tensors. The structure of EAM is shown in Figure 2; because EAM is designed for temporal sequences, its feature maps have a height of 1.

Figure 2.

The architecture of the embedding attention module.

Our encoder has 512 channels and a kernel size of 16, and the input is 4 s of 16 kHz music (64,000 samples), so the encoded input has size 7999 × 512 (frames × channels); this frame count corresponds to an encoder stride of 8 samples, since (64,000 − 16)/8 + 1 = 7999. The feature sizes at each stage are also marked in the figure. EAM operates in three stages. Taking the fusion of the accompaniment embedding $E$ and the main-path signal $Y$ as an example: in the first stage, the main-path signal and the accompaniment embedding are each compressed into a single real-valued feature, yielding $e_1$ and $e_2$, which represent the respective characteristics of the two signals. In the second stage, a linear network learns the relationship between these two scalar features, and a Sigmoid activation produces the weights $w_1$ and $w_2$, each normalized to the range (0, 1), which represent the importance of the two signals. In the third stage, $w_1$ and $w_2$ are applied to $Y$ and to the accompaniment embedding, respectively, and the weighted signals are concatenated along the channel dimension. The network structure of each stage is described below, again taking the accompaniment embedding as an example.

(1) Stage 1: This stage consists of two steps. First, along the time dimension, each input matrix of size $t \times c$ is compressed into a feature vector of size $1 \times c$, where $t$ and $c$ denote the embedding length and the number of channels, respectively:

$$\text{Feature\_emb}_i = \mathrm{AvgPool}(E_i), \quad i \in \{1, 2\}$$

where $E_i$ denotes the $i$-th input, with $E_1 = Y$ and $E_2 = E$ (the accompaniment embedding), and $\mathrm{AvgPool}(\cdot)$ denotes average pooling over time. Then, along the channel dimension, a fully connected layer compresses the $1 \times c$ vector $\text{Feature\_emb}_i$ into a $1 \times 1$ scalar feature $e_i$:

$$e_i = \mathrm{FC}(\text{Feature\_emb}_i)$$

(2) Stage 2: The network in this stage can be expressed as

$$w = [w_1, w_2] = \sigma\big(g([e_1, e_2]) + b\big)$$

where $e_1$ and $e_2$ are the Stage 1 scalar features of the main-path signal $Y$ and the embedding, $g$ denotes the linear network, $b$ is a bias term that helps fit the complex correlation between the embedding and $Y$, $\sigma$ denotes the Sigmoid function, and $w$ denotes the output weight vector.

(3) Stage 3: The output weights of Stage 2 are treated as the learned importance of the main-path signal and the target music signal in the fusion. The weights $w_1$ and $w_2$ multiply $Y$ and the embedding $E$, respectively, channel by channel, and the weighted signals are concatenated along the channel dimension, yielding a weighted serial fusion of the target music and the mixed music:

$$Z = \mathrm{Concat}\big(w_1 \cdot Y,\; w_2 \cdot E\big)$$

where $E$ denotes the target (here, accompaniment) embedding and the output $Z$ contains adaptively weighted information from both sources, guiding the downstream separation process more effectively. The remaining convolutional layers share the same structure; therefore, the overall convolutional output $Y$ is expressed as

$$Y = \phi(W * X)$$

where $W$ denotes the learnable convolution kernels, $*$ is the convolution operator, and $\phi(\cdot)$ denotes the non-linear activation function.

EAM learns soft fusion weights between mixture-conditioned and embedding-conditioned streams, functioning as a time-varying mixture-of-experts gate. When vocal cues dominate, the gate privileges the stream whose embedding better explains these cues; when accompaniment structure dominates, the weighting shifts accordingly. This soft arbitration mitigates ambiguity under co-modulated envelopes, yielding cleaner masks and reduced leakage.
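The three EAM stages can be sketched in NumPy for a single main-path/embedding pair. This is a minimal illustration rather than the authors' implementation: the parameter names (`fc_w`, `g_w`, and so on), the sharing of one FC layer between both inputs, and the embedding having the same length as $Y$ are assumptions. The sketch follows the Sigmoid gate of Stage 2; replacing the Sigmoid with a softmax over the two logits would give the convex weighting described earlier in this section.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_eam_params(c, seed=0):
    """Hypothetical parameters; the FC layer is shared by both inputs (assumption)."""
    rng = np.random.default_rng(seed)
    return {
        "fc_w": rng.standard_normal(c) / np.sqrt(c),  # Stage 1: c -> 1
        "fc_b": 0.0,
        "g_w": 0.5 * rng.standard_normal((2, 2)),     # Stage 2: linear network g
        "g_b": np.zeros(2),                           # Stage 2: bias b
    }


def eam_fuse(Y, E, p):
    """Fuse the main-path signal Y and an embedding E, both (t, c)."""
    # Stage 1: average-pool over time (t, c) -> (c,), then FC over channels -> scalar.
    e = np.array([Y.mean(axis=0) @ p["fc_w"] + p["fc_b"],
                  E.mean(axis=0) @ p["fc_w"] + p["fc_b"]])
    # Stage 2: linear network plus bias, then Sigmoid -> two weights in (0, 1).
    w = sigmoid(p["g_w"] @ e + p["g_b"])
    # Stage 3: scale each stream by its weight and concatenate along channels.
    Z = np.concatenate([w[0] * Y, w[1] * E], axis=1)
    return Z, w


rng = np.random.default_rng(1)
Y = rng.standard_normal((100, 16))  # (frames, channels); 7999 x 512 in the paper
E = rng.standard_normal((100, 16))  # embedding with the same length (assumed)
Z, w = eam_fuse(Y, E, init_eam_params(16))
print(Z.shape)  # (100, 32)
```

Each stream receives a single scalar weight, so the extra cost over plain concatenation is two pooled projections and one element-wise scaling per stream.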

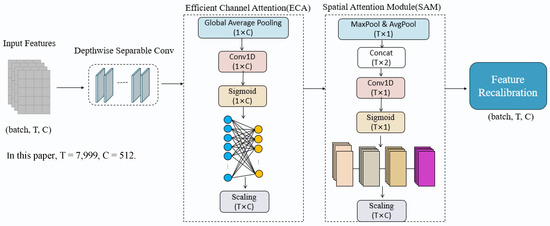

3.3. Efficient Convolutional Block Attention Module

After obtaining the final output of the encoder, we feed it into the TCN-Block module, whose core is a temporal convolutional network (TCN). To reduce the number of parameters and the computational cost, the TCN-Block employs depthwise separable convolutions [34,35] instead of standard convolutions. The exponentially increasing dilation factors within the TCN-Block ensure a receptive field large enough to capture the long-term dependencies of music signals. Its 1D deep temporal convolutional architecture exploits historical information to analyze feature similarity and dissimilarity, mapping features into a high-dimensional space in which similar features are clustered and dissimilar ones are distinguished. The fully convolutional design further accelerates training and supports efficient parallel computation.
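The dilated, depthwise-separable structure of the TCN-Block can be sketched as follows. The kernel size of 3, the 8 dilation levels (1 to 128), and the 3 repeats are assumptions borrowed from common Conv-TasNet settings, since the actual values are listed in Table 1; the block is also simplified (no normalization or PReLU).

```python
import numpy as np


def receptive_field(kernel=3, n_layers=8, n_repeats=3):
    """Receptive field, in encoder frames, of stacked dilated convolutions with
    dilations 1, 2, 4, ..., 2**(n_layers - 1), repeated n_repeats times."""
    dilations = [2 ** i for i in range(n_layers)] * n_repeats
    return 1 + sum((kernel - 1) * d for d in dilations)


def depthwise_dilated_conv(x, w, dilation):
    """Depthwise 1D convolution with 'same' zero padding (odd kernel sizes).

    x: (channels, frames); w: (channels, kernel), one filter per channel.
    """
    t = x.shape[1]
    k = w.shape[1]
    pad = dilation * (k - 1) // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    out = np.zeros(x.shape)
    for j in range(k):  # tap j reads the input shifted by j * dilation
        out += w[:, j:j + 1] * xp[:, j * dilation:j * dilation + t]
    return out


def separable_block(x, w_dw, w_pw, dilation):
    """Depthwise dilated conv -> ReLU -> pointwise (1x1) conv, plus residual."""
    h = np.maximum(depthwise_dilated_conv(x, w_dw, dilation), 0.0)
    return x + w_pw @ h  # w_pw: (channels, channels) mixes channels per frame


frames = receptive_field()
print(frames)                 # 1531 frames
print((frames - 1) * 8 + 16)  # 12256 samples, about 0.77 s at 16 kHz
```

Under these assumptions, and with the stride of 8 implied by the 7999-frame encoder output, the separator sees about 0.77 s of audio. The depthwise-separable factorization is what keeps each block light: for 512 channels and a kernel of 3, a standard convolution needs 512 × 512 × 3 = 786,432 weights, whereas the depthwise (512 × 3 = 1,536) plus pointwise (512 × 512 = 262,144) pair needs 263,680, about a third.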

Although the TCN-Block module effectively captures the long-term dependencies of music signals, it struggles to model fine-grained temporal details, often leading to local artifact noise in the output—particularly evident in transient or rapidly changing segments. These artifacts disrupt the temporal continuity and structural consistency of the separated sources, significantly degrading the naturalness and perceptual quality of the final audio. Therefore, we design an efficient convolutional block attention module based on the convolutional block attention module (CBAM) [36] to address this issue.

E-CBAM replaces the fully connected channel attention module of CBAM with the 1D-convolution-based efficient channel attention (ECA) mechanism and retains CBAM’s spatial attention module (SAM). As shown in Figure 3, to further improve the ability of the separation network to learn information related to the target music, the efficient channel attention and spatial attention modules are assembled into E-CBAM and inserted into the separation network:

$$[M_v, M_a] = F(Y, E_v, E_a)$$

where $M_v$ and $M_a$ denote the vocal mask and the accompaniment mask, $E_v$ and $E_a$ denote the vocal embedding and the accompaniment embedding, and $F$ represents the total fusion convolution operation.

Figure 3.

The architecture of efficient convolutional block attention module.

For sequence data, the sequence length can be regarded as the spatial dimension and the features at each step as the channel dimension. When processing audio sequences, for example, the channel attention mechanism enhances the exchange of information between channels to better capture the relationships and dependencies between features; here, each channel is one learned feature of the signal, observed across all frames of the sequence. The spatial attention module, in turn, captures the relationships between different time positions by applying max pooling and convolution along the spatial (temporal) dimension, and the resulting attention weights are multiplied by the input feature map to adaptively reweight it across positions. In the original CBAM, the spatial attention mechanism uses a 2D convolutional layer to extract spatial features, but sequence data have no such 2D spatial structure. In E-CBAM, the 2D convolutions involved in the attention mechanisms are therefore implemented as 1D convolutions, which extract temporal features from the sequence and compensate for the loss of information continuity caused by the gridding effect of dilated convolution in the TCN. A 1D convolutional layer extracts information around each time step by sliding a fixed-size window along the time axis. In sequence data, each time step corresponds to a feature vector, and these vectors can be arranged into a matrix in chronological order. As with pixels in an image, neighboring time steps have local dependencies, so their relationships can be modeled by combining channel attention and spatial attention.

The encoder’s channel axis can be viewed as a learned subband or atomic representation where nearby channels encode correlated harmonic groups. E-CBAM’s channel attention (ECA) applies a local 1D convolution along channels, which acts like a banded, correlation-aware reweighting over these groups. This favors channel patterns that are jointly consistent with the target while suppressing near-band competitors, thereby tackling acoustic similarity. Its temporal attention (SAM) then supplies a frame-wise saliency that emphasizes time instants with transiently higher discriminability. Because the target–interferer separability is non-stationary in music, such time-varying emphasis addresses synchronous evolution by up-weighting frames where the target is momentarily more separable.
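A minimal NumPy sketch of E-CBAM on a (channels, frames) feature map is given below. ECA pools over time and slides a small 1D kernel along the channel axis without dimensionality reduction; the 1D spatial (temporal) attention pools across channels and convolves over time. The adaptive kernel-size rule is the one proposed in the ECA-Net paper, and the 7-tap temporal kernel mirrors CBAM's 7 × 7 spatial kernel; whether ADTDCN uses these exact sizes is an assumption, and the random kernels stand in for trained weights.

```python
import math

import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def eca_kernel_size(channels, gamma=2, b=1):
    """ECA-Net rule: nearest odd value of (log2(C) + b) / gamma."""
    k = int(abs((math.log2(channels) + b) / gamma))
    return k if k % 2 == 1 else k + 1


def eca(x, w):
    """Efficient channel attention on x (channels, frames).

    Global average pooling over time gives one value per channel; the 1D
    kernel w slides along the channel axis (local cross-channel interaction).
    """
    s = x.mean(axis=1)                            # (C,)
    a = sigmoid(np.correlate(s, w, mode="same"))  # (C,) channel weights
    return x * a[:, None]


def temporal_attention(x, w_avg, w_max, bias=0.0):
    """1D spatial (temporal) attention: pool across channels, convolve over time.
    Assumes frames > len(w_avg) so that mode='same' keeps the frame count."""
    avg, mx = x.mean(axis=0), x.max(axis=0)       # (T,), (T,)
    m = sigmoid(np.correlate(avg, w_avg, mode="same")
                + np.correlate(mx, w_max, mode="same") + bias)
    return x * m[None, :]                         # frame-wise saliency


def e_cbam(x, w_eca, w_avg, w_max):
    """Channel attention first, then temporal attention (the CBAM ordering)."""
    return temporal_attention(eca(x, w_eca), w_avg, w_max)


rng = np.random.default_rng(0)
x = rng.standard_normal((512, 200))  # (channels, frames)
k = eca_kernel_size(512)             # 5 for C = 512
y = e_cbam(x, rng.standard_normal(k), rng.standard_normal(7), rng.standard_normal(7))
```

Both gates lie in (0, 1), so E-CBAM can only attenuate features; the ECA kernel of 5 taps across 512 channels is what makes the channel reweighting "banded".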

3.4. Decoder

Finally, the decoder reconstructs the vocal and accompaniment waveforms by applying the target masks to the encoded mixed music and performing symmetric deconvolution operations:

$$\hat{s} = V(Y \odot M)$$

where $V$ represents the process by which the decoder rebuilds the target music waveform, $\odot$ denotes the element-wise (Hadamard) product between the encoded mixture $Y$ and the mask $M$ of the target source, and $\hat{s}$ is the reconstructed target waveform. Unlike frequency-domain methods that rely on the STFT/ISTFT, ADTDCN employs stacked convolution and transposed convolution to maintain phase information and waveform continuity. Here, the “continuity” of phase and waveform refers to their smooth temporal evolution: the waveform is a time-domain signal that changes continuously without abrupt jumps, and the phase spectrum evolves smoothly across adjacent frames in STFT-based analysis. Preserving such continuity is essential, as discontinuities would introduce audible artifacts (e.g., clicks or unnatural distortions).

To improve mask accuracy despite the high similarity between vocals and accompaniment, an auxiliary network is introduced to extract deep acoustic features from reference signals. This network shares its encoder with the main network and includes a music extractor composed of normalization, 1D convolution, and stacked ResNetBlocks. The ResNetBlocks preserve feature integrity through residual connections, enabling more effective embedding of vocal and accompaniment features to guide mask generation.
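The masking-and-decoding step can be sketched as a Hadamard product followed by overlap-add, which is what a 1D transposed convolution with a 16-sample kernel computes. The stride of 8, the reduced channel count, and the random decoder filters are assumptions for illustration; in PyTorch an equivalent layer would be `torch.nn.ConvTranspose1d(512, 1, kernel_size=16, stride=8)`.

```python
import numpy as np


def decode(Y, M, basis, stride=8):
    """Mask the encoded mixture and rebuild a waveform by overlap-add.

    Y, M: (frames, channels) encoded mixture and mask; basis: (channels, kernel)
    decoder filters (hypothetical). Overlap-adding the per-frame segments is
    what a 1D transposed convolution with this stride computes.
    """
    segments = (Y * M) @ basis  # Hadamard product, then (frames, kernel)
    n_frames, kernel = segments.shape
    out = np.zeros((n_frames - 1) * stride + kernel)
    for i in range(n_frames):
        out[i * stride:i * stride + kernel] += segments[i]
    return out


rng = np.random.default_rng(0)
Y = np.abs(rng.standard_normal((7999, 32)))  # 512 channels in the paper; 32 here
M_vocal = rng.random((7999, 32))             # mask values in [0, 1)
basis = rng.standard_normal((32, 16))
vocal = decode(Y, M_vocal, basis)
print(vocal.shape)  # (64000,)
```

With 7999 frames the output length is (7999 − 1) × 8 + 16 = 64,000 samples, exactly the 4 s input. Because decoding is linear in the masked features, two estimates whose masks sum to one add up to the decoding of the unmasked encoded mixture.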

For the accompaniment embedding (as shown in Figure 2), its acquisition process is as follows:

(1) The auxiliary network takes the pure accompaniment signal (a reference signal from the dataset, e.g., the left-channel pure accompaniment in MIR-1K) as input.

(2) The pure accompaniment signal is first passed through the shared encoder, which is identical to the main network's encoder (an N-channel 1D convolution with a 16 × 1 kernel), mapping the time-domain signal to a latent-space representation.

(3) This latent representation is then fed into the music extractor (the core component of the auxiliary network), which consists of global layer normalization (GLN), a 1D convolution (3 × 1 kernel, 512 channels), and 3 stacked ResNetBlocks (see Table 1 for hyperparameters). The ResNetBlocks use residual connections to avoid feature degradation, while the 1D convolution captures local temporal patterns of the accompaniment.

Table 1.

Model training hyperparameter settings.

(4) The output of the music extractor is the final accompaniment embedding—a compact vector encoding discriminative features (timbre, temporal dynamics) of the pure accompaniment.
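Steps (2) to (4) can be sketched in plain Python, under several assumptions. The internal layout of each ResNetBlock (conv → ReLU → conv with an identity skip) is hypothetical. The weights are supplied by hand rather than learned. Reducing the output to a "compact vector" by averaging over time is our assumption; the surviving text does not specify the pooling. All function names are illustrative.

```python
import math


def global_layer_norm(x, eps=1e-8):
    """gLN: normalize a [C][T] map with a single mean/variance over all channels and frames."""
    vals = [v for ch in x for v in ch]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return [[(v - mean) / math.sqrt(var + eps) for v in ch] for ch in x]


def conv1d(x, weight):
    """Multi-channel 1D conv with 'same' zero padding; weight[o][c] is the kernel c -> o."""
    C, T = len(x), len(x[0])
    K = len(weight[0][0])
    pad = K // 2
    out = []
    for w_o in weight:
        row = []
        for t in range(T):
            acc = 0.0
            for c in range(C):
                for k in range(K):
                    idx = t + k - pad
                    if 0 <= idx < T:
                        acc += w_o[c][k] * x[c][idx]
            row.append(acc)
        out.append(row)
    return out


def relu(x):
    return [[max(0.0, v) for v in ch] for ch in x]


def res_block(x, w1, w2):
    """Hypothetical ResNetBlock: conv -> ReLU -> conv, added back to the input (skip path)."""
    y = conv1d(relu(conv1d(x, w1)), w2)
    return [[a + b for a, b in zip(xa, ya)] for xa, ya in zip(x, y)]


def music_extractor(latent, w_in, blocks):
    """gLN -> 3-tap conv -> stacked ResNetBlocks -> mean over time (assumed pooling)."""
    h = conv1d(global_layer_norm(latent), w_in)
    for w1, w2 in blocks:
        h = res_block(h, w1, w2)
    return [sum(ch) / len(ch) for ch in h]
```

With zero-initialized inner weights, each `res_block` reduces to the identity. This illustrates how residual connections protect the incoming features from degradation.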

3.5. Optimization

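The training objective of ADTDCN is to optimize all parameters by minimizing the overall loss function. The final objective function is based on the scale-invariant signal-to-noise ratio (SI-SNR), formulated as follows:

$$\text{SI-SNR} = 10\log_{10}\frac{\lVert s_{\text{target}}\rVert^2}{\lVert e_{\text{noise}}\rVert^2},\qquad s_{\text{target}} = \frac{\langle \hat{s}, s\rangle\, s}{\lVert s\rVert^2},\qquad e_{\text{noise}} = \hat{s} - s_{\text{target}}$$

Here, $s_{\text{target}}$ is the projection of the estimated signal $\hat{s}$ onto the original pure target signal $s$, $e_{\text{noise}}$ is the residual (i.e., the noise component) of the estimated signal after the projection onto the target signal is removed, and $\hat{s}$ denotes the estimated vocal and accompaniment signals obtained from the decoder output.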
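The SI-SNR computation can be sketched directly from the projection/residual definitions. Two conventions here are assumptions. First, zero-mean normalization of both signals is common SI-SNR practice and is not stated in the text. Second, because the objective is minimized, we use the negative SI-SNR averaged over the two decoded sources. The helper name `separation_loss` is hypothetical.

```python
import math


def si_snr(estimate, target, eps=1e-8):
    """Scale-invariant SNR in dB between an estimated and a reference waveform."""
    n = len(target)
    mean_e, mean_t = sum(estimate) / n, sum(target) / n
    e = [v - mean_e for v in estimate]   # zero-mean estimate (assumed convention)
    s = [v - mean_t for v in target]     # zero-mean reference
    scale = sum(a * b for a, b in zip(e, s)) / (sum(v * v for v in s) + eps)
    s_target = [scale * v for v in s]                  # projection of the estimate onto s
    e_noise = [a - b for a, b in zip(e, s_target)]     # residual after removing the projection
    num = sum(v * v for v in s_target)
    den = sum(v * v for v in e_noise) + eps
    return 10.0 * math.log10(num / den + eps)


def separation_loss(est_vocal, ref_vocal, est_accomp, ref_accomp):
    """Hypothetical loss: negative SI-SNR averaged over the vocal and accompaniment outputs."""
    return -0.5 * (si_snr(est_vocal, ref_vocal) + si_snr(est_accomp, ref_accomp))
```

Rescaling the estimate by any positive factor leaves the score unchanged, up to the small `eps` terms. This is the property that makes the loss insensitive to the output gain of the decoder.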

4. Experimental Results and Analysis

4.1. Experimental Environment and Dataset

The experiments were conducted on a workstation with an Intel Xeon W-2133 CPU (six cores, twelve threads, 3.6 GHz), 32 GB of RAM, and an NVIDIA RTX 3090 GPU, using CUDA 11.1, Python 3.10, and PyTorch 2.2.2. The experiments use the public MIR-1K dataset released in Ref. [37]. It consists of 1000 music clips sampled at 16 kHz and professionally cut from 110 Chinese songs, with durations ranging from 4 s to 13 s. In this paper, all clips are uniformly cut to 4 s so that the training inputs have a consistent length. The pure accompaniment and vocals are stored in the left and right channels, respectively. The mixture used in the experiments is a single-channel mix of the two channels at 0 dB. Of the 1000 clips, 800 (80%) serve as the training set and 200 (20%) as the test set. To tune hyperparameters without setting aside an independent validation set, which would reduce the available training data, we temporarily split the 800-clip training set into a 700-clip sub-training set and a 100-clip validation subset during training. This split did not change the total number of training clips (800). The test set was kept completely separate from the training process throughout the experiment to ensure unbiased performance evaluation.
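The data preparation can be sketched as follows, under explicit assumptions. We interpret "mixed at 0 dB" as rescaling the accompaniment to the same RMS level as the vocals before summing. The 700/100/200 split is drawn with a seeded random shuffle. The text specifies neither the gain convention nor how clips were assigned, and all function names are hypothetical.

```python
import math
import random


def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))


def mix_at_0db(vocal, accomp, eps=1e-12):
    """Rescale the accompaniment to the vocal's RMS (0 dB ratio) and sum into one mono channel."""
    gain = rms(vocal) / (rms(accomp) + eps)
    return [v + gain * a for v, a in zip(vocal, accomp)], gain


def crop(x, seconds=4.0, sr=16000):
    """Keep the first `seconds` of a clip (4 s at 16 kHz = 64,000 samples)."""
    return x[: int(seconds * sr)]


def split_clips(clip_ids, n_test=200, n_val=100, seed=0):
    """Hold out the test set first, then carve the validation subset out of the training clips."""
    ids = list(clip_ids)
    random.Random(seed).shuffle(ids)
    test, train = ids[:n_test], ids[n_test:]
    return train[n_val:], train[:n_val], test   # sub-train (700), validation (100), test (200)
```

The test clips are removed before the validation subset is drawn, so they can never leak into training or hyperparameter selection.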

4.2. Evaluation Indicators

To evaluate the separation results, we use the blind source separation evaluation toolbox (BSS_EVAL) [38,39]. It quantifies how closely each estimated signal matches its source signal in terms of the signal-to-distortion ratio (SDR), source-to-interference ratio (SIR), and source-to-artifact ratio (SAR). These metrics are based on decomposing the estimated source signal $\hat{s}$ into four parts:

$$\hat{s} = s_{\text{target}} + e_{\text{interf}} + e_{\text{artif}} + e_{\text{noise}}$$

The terms are as follows. $s_{\text{target}}$ denotes the portion of the estimated target signal that is correlated with the original pure signal. $e_{\text{interf}}$ denotes the interference signal. $e_{\text{artif}}$ denotes the artifacts introduced by the algorithm, i.e., the residual part of $\hat{s}$ after removing $s_{\text{target}}$ and $e_{\text{interf}}$; these are algorithm-induced distortions (e.g., temporal discontinuities or spectral artifacts) that originate from neither the target nor the interfering signals. Finally, $e_{\text{noise}}$ denotes the noise signal.

To eliminate ambiguity and align with the BSS_EVAL standard, we formally define each component with respect to the original pure target signal and the original interfering signal. The specific definitions of these components are expressed as:

where $\langle \cdot, \cdot \rangle$ denotes the inner product and $\lVert \cdot \rVert$ denotes the L2-norm.

where the inner product and L2-norm follow the same definitions as above.

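In addition, because the mixtures in this dataset are formed from clean vocal and accompaniment tracks without additive noise, $e_{\text{noise}}$ is 0 and can be omitted. The signal-to-distortion ratio, source-to-interference ratio, and source-to-artifact ratio are then defined as follows:

$$\mathrm{SDR} = 10\log_{10}\frac{\lVert s_{\text{target}}\rVert^2}{\lVert e_{\text{interf}} + e_{\text{artif}}\rVert^2},\qquad \mathrm{SIR} = 10\log_{10}\frac{\lVert s_{\text{target}}\rVert^2}{\lVert e_{\text{interf}}\rVert^2},\qquad \mathrm{SAR} = 10\log_{10}\frac{\lVert s_{\text{target}} + e_{\text{interf}}\rVert^2}{\lVert e_{\text{artif}}\rVert^2}$$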
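Once the BSS_EVAL components are available, the three ratios reduce to simple energy ratios. The sketch below assumes the components have already been obtained and that the noise term is zero, as stated above. Computing the components themselves from real signals requires BSS_EVAL's projections; for example, `mir_eval.separation.bss_eval_sources` in Python returns SDR, SIR, and SAR directly. The function names here are illustrative.

```python
import math


def energy(x):
    return sum(v * v for v in x)


def bss_ratios(s_target, e_interf, e_artif):
    """SDR, SIR, SAR in dB from BSS_EVAL components, with the noise term taken as zero."""
    distortion = [a + b for a, b in zip(e_interf, e_artif)]
    sdr = 10 * math.log10(energy(s_target) / energy(distortion))
    sir = 10 * math.log10(energy(s_target) / energy(e_interf))
    sar = 10 * math.log10(energy([a + b for a, b in zip(s_target, e_interf)]) / energy(e_artif))
    return sdr, sir, sar
```

SIR penalizes only leakage from the other source, SAR only algorithm-induced distortions, and SDR both together.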

To comprehensively evaluate separation quality across the entire test set, we introduce global metrics that account for varying clip durations. Here, we take GSDR as an example:

$$\mathrm{GSDR} = \frac{\sum_{k} w_k\,\mathrm{SDR}_k}{\sum_{k} w_k}$$

where $\mathrm{SDR}_k$ is the signal-to-distortion ratio of the k-th clip and $w_k$ is its time weight, i.e., the duration of the k-th clip. The global signal-to-distortion ratio (GSDR) is thus the duration-weighted average of SDR values across all clips.
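The duration weighting is a plain weighted mean. The sketch below applies equally to GSDR, GSIR, and GSAR; durations are assumed to be in seconds, and the function name is illustrative.

```python
def duration_weighted(metric_values, durations):
    """Global metric: sum_k w_k * m_k / sum_k w_k, where w_k is the duration of clip k."""
    if len(metric_values) != len(durations) or not durations:
        raise ValueError("need exactly one duration per clip")
    total = sum(durations)
    return sum(m * w for m, w in zip(metric_values, durations)) / total
```

For example, a 1 s clip at 10 dB and a 3 s clip at 20 dB give (10 × 1 + 20 × 3) / 4 = 17.5 dB, rather than the unweighted 15 dB. When all clips have equal length, the result reduces to the ordinary mean.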

Similarly, GSIR and GSAR are the duration-weighted averages of SIR and SAR over the test set, computed in the same way as GSDR. GSIR and GSAR are particularly suitable for this vocal–accompaniment separation task for three reasons:

(1) They account for the variable duration of test clips. The MIR-1K dataset used in this study includes clips ranging from 4 s to 13 s. Duration weighting prevents short, less informative clips from disproportionately influencing the overall evaluation, so the results better reflect real-world performance, where longer music segments are more common.

(2) They target the two core challenges of the task: As highlighted in the introduction, vocal–accompaniment separation faces two key issues—residual interference and algorithmic artifacts. GSIR directly quantifies interference suppression (a key goal of separating vocals from accompaniment), while GSAR measures artifact reduction (critical for preserving audio naturalness), making the metrics tightly aligned with the study’s objectives.

(3) They enable fair cross-model comparison: By normalizing per-clip performance into a single global score (weighted by practical relevance), GSIR and GSAR provide a consistent benchmark to compare different models (e.g., ADTDCN vs. VAT-SNet vs. Conv-TasNet), avoiding ambiguity from relying solely on local metrics that vary across individual clips.

In summary, SDR, SIR, and SAR quantify separation quality for individual clips (local metrics), while GSDR, GSIR, and GSAR provide an overall assessment of model performance across the entire dataset (global metrics) by integrating per-clip metrics with duration-based weighting. The higher the values of GSDR, GSIR, and GSAR, the better the model separation performance.

It should be noted that commonly used classification metrics such as accuracy, precision, recall, and F1-score are not directly applicable to music source separation. This task is essentially a regression problem at the waveform level, where the objective is to reconstruct continuous audio signals rather than to classify discrete categories. Therefore, accuracy is undefined, and precision/recall/F1 can only be computed if the separation results are converted into binary time–frequency masks, which is not a standard practice in waveform-based methods. For this reason, the mainstream evaluation in source separation research relies on SDR, SIR, SAR, and their global variants (GSDR, GSIR, and GSAR), which are widely recognized as more reliable indicators of separation quality. In this study, we adopt these metrics to provide a comprehensive and fair evaluation of model performance.

4.3. Implementation Details

The experiments are divided into two parts. First, because the lightweight attention modules can be inserted at different locations in the network, with different effects, we conduct ablation experiments to examine several placement options and to verify the effectiveness of incorporating E-CBAM into the TCN-Block. Second, we compare ADTDCN (EAM) and ADTDCN (E-CBAM), each incorporating a single attention mechanism, and the full ADTDCN, which incorporates both, against the baseline model and recent well-performing music separation models. This comparison uses objective evaluation metrics to analyze the impact of the proposed improvements on network performance. The model is trained for 100 epochs with a batch size of 4 on the training set, starting from a fixed initial learning rate. If the validation performance does not improve for three consecutive epochs, the learning rate is halved, and a minimum learning rate is enforced to prevent non-convergence. Global layer normalization (GLN) is used for normalization. The remaining hyperparameters, listed in Table 1, follow the default configurations reported in [27,29] to ensure consistency with prior work and reproducibility.
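The halve-on-plateau schedule can be sketched as a small stateful helper. Several details are assumptions: the monitored quantity is a higher-is-better validation score such as SI-SNR, and the learning-rate values in the usage example are hypothetical, because the original values are not recoverable from the text. `HalveOnPlateau` is an illustrative name. PyTorch's `torch.optim.lr_scheduler.ReduceLROnPlateau` (with `mode="max"`, `factor=0.5`, and a `min_lr` floor) provides similar behaviour, although its patience counting may differ from this sketch by one epoch.

```python
class HalveOnPlateau:
    """Halve the learning rate when a higher-is-better validation metric stalls for `patience` epochs."""

    def __init__(self, lr, patience=3, factor=0.5, min_lr=0.0):
        self.lr = lr
        self.patience = patience
        self.factor = factor
        self.min_lr = min_lr
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, val_metric):
        """Call once per epoch with the validation score; returns the learning rate to use next."""
        if val_metric > self.best:
            self.best = val_metric
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)  # never drop below the floor
                self.bad_epochs = 0
        return self.lr
```

The floor keeps repeated halvings from driving the step size toward zero, which is the non-convergence failure that the minimum learning rate is meant to prevent.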

4.4. Baselines

We evaluate the performance of ADTDCN by comparing it against a series of music source separation models.

Huang [20] proposes jointly optimizing deep learning models with an extra masking layer.

U-Net [21] adapts the U-Net architecture to the task of vocal separation.

SH-4stackc [22] designs a simple yet effective method for music source separation using convolutional neural networks.

Hourglass Network [40] constructs a new loss function based on the characteristics of the voice signal, which helps the network learn and optimize more effectively.

MSSN [41] proposes a lightweight multi-stage network for monaural vocal and accompaniment separation.

Conv-TasNet [27] uses a linear encoder to generate a representation of the speech waveform optimized for separating individual speakers.

VAT-SNet [29] proposes a new time-domain architecture that incorporates vocal and accompaniment embeddings generated by an auxiliary network as references.

4.5. Performance Comparisons and Analyses

To evaluate the impact of the proposed embedding attention module (EAM) and efficient convolutional block attention module (E-CBAM) on music source separation quality, we compare ADTDCN (EAM), ADTDCN (E-CBAM), and ADTDCN (with both modules) against other representative music separation models. The evaluation is conducted on the 200 test clips from the MIR-1K dataset, using GSDR, GSIR, and GSAR as evaluation metrics for both vocal and accompaniment separation. The results are presented in Table 2 and Table 3, respectively.

Table 2.

Vocal separation performance comparison.

Table 3.

Accompaniment separation performance comparison.

As shown in Table 2 and Table 3, incorporating either EAM or E-CBAM into the separation network leads to significant improvements in separation quality. Specifically, ADTDCN with both attention mechanisms achieves the best overall performance. Compared to the baseline model, VAT-SNet, and other models, such as Conv-TasNet and the classical frequency-domain music separation methods, our approach improves the GSDR by 1.1 dB and also achieves notable gains in GSIR and GSAR. Furthermore, ADTDCN also outperforms recent advanced models, including the improved hourglass network [40] and the multi-stage music separation network (MSSN) [41], demonstrating the effectiveness and generalizability of the proposed attention-enhanced architecture.

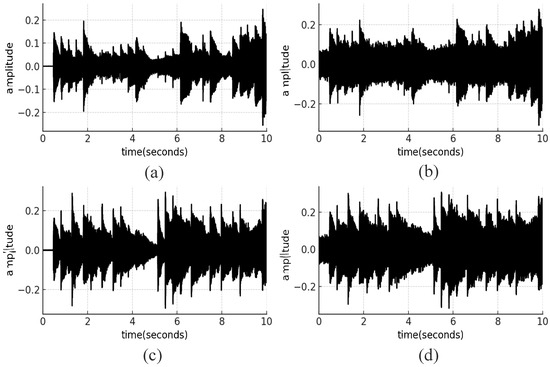

To visualize the separation quality, we present the signals before and after separation with ADTDCN. As shown in Figure 4, the separated vocal exhibits well-formed harmonic stacks in the 1–4 kHz region and reduced accompaniment energy between harmonics, indicating effective vocal extraction. In contrast, the separated accompaniment shows visible artifacts: high-frequency streaks above 6 kHz and faint vocal harmonic residues around 1–4 kHz. These patterns suggest residual vocal leakage and transient distortions on the accompaniment stem. This qualitative observation is consistent with our quantitative results. ADTDCN achieves strong GSIR/GSAR for vocals in Table 2, reflecting effective interference suppression and lower artifact levels on the vocal stem. The accompaniment stem, though improved overall in Table 3, is more susceptible to residual vocal traces and high-frequency artifacts. We attribute this asymmetry to the strong harmonic structure of vocals and their synchronous evolution with the accompaniment, which can bias the separator toward vocal preservation at the expense of a completely artifact-free accompaniment. Our attention design (EAM + E-CBAM) mitigates but does not fully eliminate this effect.

Figure 4.

Comparison of waveforms before and after separation. (a) Original accompaniment waveform. (b) Separated accompaniment waveform. (c) Original vocal waveform. (d) Separated vocal waveform.

4.6. Ablation Study

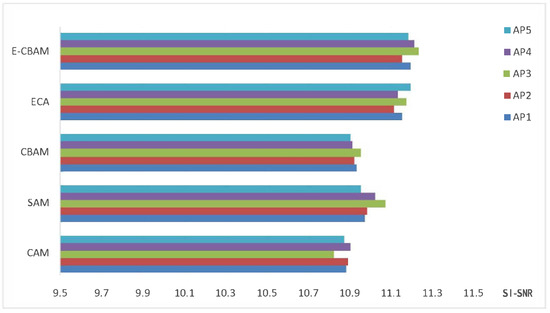

To verify the advantages of the channel attention mechanism ECA in E-CBAM over CAM in CBAM, we compare and analyze the attention mechanisms involved in these modules. Moreover, the channel and spatial attention mechanisms can be inserted at different locations in the network, and the insertion location affects the final results. We therefore conduct ablation experiments to examine how the position of each attention mechanism in the separation network affects separation performance. Figure 5 shows five candidate positions for incorporating the attention mechanism in the separation network, named AttentionPosition1 (AP1), AttentionPosition2 (AP2), AttentionPosition3 (AP3), AttentionPosition4 (AP4), and AttentionPosition5 (AP5).

Figure 5.

Schematic diagram of the integration position of the attention mechanism module.

AP1 places an attention layer before the primary signal Y is fused with the target music embedding generated by the auxiliary network. This is a candidate position because, at this point, Y has just been obtained by convolving the waveform signal X with the encoder. Adding attention at AP1 reweights the signal at the very start of the separation network, so that the network can focus on useful information in Y while suppressing irrelevant information. As described for the baseline VAT-SNet in the previous section, the part enclosed by the blue dashed line in Figure 5 is stacked Z times in the separation network. Each repetition fuses the auxiliary embedding with the main-path signal and, after a dimensionality-reducing mapping, feeds the fused signal into the one-dimensional dilated depthwise convolution block. Any attention module placed inside the blue dashed line is therefore also repeated Z times.

AP2 adds an attention layer after each concatenation fusion to strengthen attention to the target music embedding. AP3 adds an attention module after each convolutional layer in the TCN-Block, similar to the placement in Ref. [36], where CBAM was incorporated into ResNet, so that the stacked network continuously reinforces feature learning of target-related information. Previous experience with other networks suggests that inserting too many attention modules risks overfitting and makes the network harder to train. AP4 therefore adds a single attention layer after each fusion and the complete dilated convolution block, rather than after every layer of that block, which allows a direct comparison with AP3. AP5 is not part of the stacked section. It adds one attention layer after all Z stacks of the separation network to reinforce the relevant information in the signal. The exact positions are marked in Figure 5.
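The five placements can be made concrete by listing the modules in execution order. This sketch reflects our reading of Figure 5, not the authors' code. Z = 4 stacks of 8 TCN convolution layers is taken from the computational-complexity analysis (4 fusion stages, 8 layers each). The module names ("fusion", "tcn_conv", "mask") are hypothetical labels, and the bottleneck mapping inside each stack is omitted for brevity.

```python
def build_separator(position, num_stacks=4, layers_per_stack=8):
    """Ordered module names for one attention placement (AP1-AP5); an illustrative reading of Figure 5."""
    if position not in {"AP1", "AP2", "AP3", "AP4", "AP5"}:
        raise ValueError(f"unknown position {position!r}")
    mods = ["encoder"]
    if position == "AP1":
        mods.append("attention")             # once, on the encoded mixture Y before any fusion
    for _ in range(num_stacks):              # the blue dashed block, repeated Z times
        mods.append("fusion")                # concatenate main-path features with the embedding
        if position == "AP2":
            mods.append("attention")         # after every fusion
        for _ in range(layers_per_stack):
            mods.append("tcn_conv")
            if position == "AP3":
                mods.append("attention")     # after every TCN convolutional layer
        if position == "AP4":
            mods.append("attention")         # once per stack, after the whole dilated block
    if position == "AP5":
        mods.append("attention")             # once, after all Z stacks
    mods += ["mask", "decoder"]
    return mods
```

Under these assumptions, AP3 inserts 32 attention modules, while AP2 and AP4 insert 4 each and AP1 and AP5 insert 1 each. This count is what makes the AP3 versus AP4 comparison a test of per-layer versus per-stack attention.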

Figure 6 shows how inserting different attention mechanisms at positions AP1–AP5 affects the separation performance of the network. Table 4 compares the SI-SNR values (the training objective) of ADTDCN (E-CBAM) with those of VAT-SNet and Conv-TasNet. Compared with the SI-SNR obtained by Conv-TasNet (9.73) and by the baseline VAT-SNet (10.87), incorporating either a channel attention mechanism (CAM or ECA) or the spatial attention mechanism (SAM) can positively affect the network.

Figure 6.

Impact of different insertion positions on performance.

Table 4.

SI-SNR value performance comparison.

As illustrated in Figure 6, the channel attention module (CAM) exhibits limited optimization capability for the network. In particular, it produces adverse effects when integrated at the AP3 position. Furthermore, CBAM, which applies CAM followed by the spatial attention module (SAM), performs less effectively than using SAM alone in most positions. This suggests that CAM lacks compatibility with the structure of VAT-SNet. In contrast, the efficient channel attention (ECA) mechanism shows a consistent positive impact when applied independently at different network positions. The AP3 position corresponds to the insertion point after each convolutional layer within the TCN-Block. Since this block is repeatedly stacked in the network, the local cross-channel interaction strategy adopted by ECA, which does not introduce additional dimensional transformations, demonstrates superior performance and better integration at this position. The effectiveness of ECA is further confirmed through the performance of ECA-based CBAM (referred to as E-CBAM), which applies ECA followed by SAM. This combination enhances the convolutional capabilities of the TCN structure and achieves the best performance at AP3.
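The difference between CAM and ECA can be quantified by their parameter counts. CAM routes the pooled channel descriptor through a shared bottleneck MLP (C → C/r → C), which involves a dimensionality reduction. ECA instead uses a single 1D convolution across channels whose kernel size grows only logarithmically with C. The sketch assumes the defaults from the original ECA-Net and CBAM papers (γ = 2, b = 1, r = 16) and ignores biases. The channel count C = 512 in the example is hypothetical, since the ADTDCN-specific settings are given in Table 1.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """ECA-Net adaptive kernel size: |(log2(C) + b) / gamma|, rounded up to an odd integer."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca_params(channels):
    """A single shared 1D kernel across channels (no bias)."""
    return eca_kernel_size(channels)


def cam_params(channels, reduction=16):
    """CBAM channel attention: shared MLP C -> C/r -> C (biases ignored)."""
    hidden = channels // reduction
    return channels * hidden + hidden * channels
```

For C = 512, ECA uses a 5-tap kernel (5 weights), whereas CAM needs 32,768 weights per insertion. At AP3 the module is repeated after every TCN convolutional layer, so this gap is multiplied by the number of insertions.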

As shown in Table 5, AP3 achieves the best separation performance. However, when the attention modules are placed at AP4 or AP5, the SI-SNR value drops noticeably compared with AP3.

Table 5.

Effect of attention module positions (AP1–AP5) on SI-SNR performance.

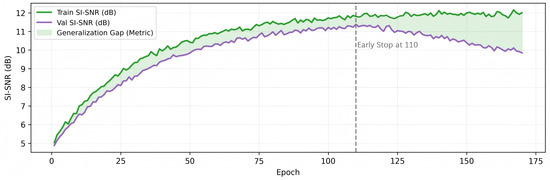

This performance drop suggests that placing attention modules too deep within the network increases the risk of overfitting. While a deeper module position might allow the model to learn more complex patterns from the training data, it can also lead to memorizing training-specific artifacts rather than learning generalizable features. To further investigate this phenomenon, we conducted a detailed analysis of the training process for the model with the module at position AP4, as shown in Figure 7.

Figure 7.

Training and validation SI-SNR curves for the model with the attention module at position AP4. The shaded area represents the generalization gap. The dashed line indicates the early stopping point at epoch 110.

Figure 7 illustrates the training and validation SI-SNR curves over 175 epochs. The green line (Train SI-SNR) consistently increases, eventually reaching approximately 12.1 dB, which indicates the model is effectively fitting the training data. In contrast, the purple line (Val SI-SNR) peaks at around epoch 110 with a value of 11.35 dB and then begins to decline. This divergence between the training and validation curves, highlighted by the widening “Generalization Gap” (the shaded area), is a classic sign of overfitting. The model’s performance on unseen validation data starts to worsen while its performance on the training data continues to improve.

This empirical evidence supports our hypothesis. Although the early-stopped AP4 model at epoch 110 achieves a reasonable validation score, it still falls short of the peak performance of AP3 (11.39 dB), and its instability beyond this point highlights its tendency to overfit. Therefore, simply stacking attention modules at deeper positions is not a reliable strategy for performance enhancement. A carefully selected, shallower position such as AP3 provides a better trade-off between model complexity and generalization ability, leading to a more robust and effective model.

Based on the above analysis, E-CBAM is finally integrated at the AP3 position, that is, after each convolutional layer within the TCN-Block. The resulting model, named ADTDCN (E-CBAM), is adopted for comparative experiments.

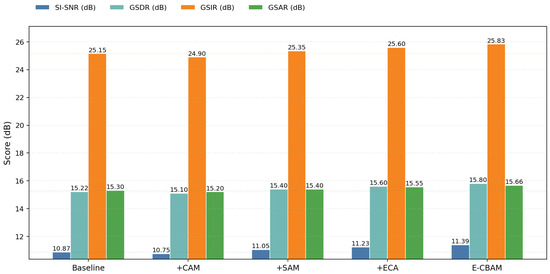

To further clarify the contribution of the efficient convolutional block attention module (E-CBAM) within the separation network, we conducted detailed ablation studies by selectively enabling or disabling its subcomponents, as shown in Figure 8. Specifically, five configurations were compared: (1) the baseline VAT-SNet without any attention mechanism, (2) VAT-SNet with the original channel attention module (CAM) from CBAM, (3) VAT-SNet with only the spatial attention module (SAM), (4) VAT-SNet with the efficient channel attention (ECA) module, and (5) VAT-SNet with the full E-CBAM (ECA + SAM).

Figure 8.

Ablation study results for different attention configurations in the separation network. The bar chart illustrates the comparative performance of the baseline model (VAT-SNet) and its variants with additional attention modules, including CAM, SAM, ECA, and the combined E-CBAM. The results show that the addition of CAM alone provides limited or even adverse improvement, while SAM and ECA yield more consistent gains. The integration of both modules in the E-CBAM achieves the best overall performance across all metrics, highlighting the superior contribution of the efficient channel attention mechanism and the spatial attention mechanism when jointly applied.

Table 4 and Figure 8 together indicate that directly introducing CAM leads to limited or even negative effects, especially when inserted into the TCN-Block (AP3 position). SAM alone provides moderate gains, indicating that temporal saliency plays a positive role in capturing transient acoustic features. By contrast, ECA consistently yields performance improvements across all insertion positions, with the best results achieved at AP3. When ECA and SAM are combined as E-CBAM, the model achieves the highest overall performance in terms of SI-SNR, GSDR, GSIR, and GSAR. Compared to VAT-SNet, E-CBAM improves SI-SNR by approximately 4.8% and surpasses Conv-TasNet by about 17.1%, demonstrating its strong adaptability and effectiveness.
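The percentages above follow from the reported SI-SNR values. The sketch assumes that the E-CBAM figure is the AP3 peak of 11.39 dB reported in the overfitting analysis; the helper name is illustrative.

```python
def relative_gain(new, old):
    """Percentage improvement of `new` over `old`."""
    return (new - old) / old * 100.0


e_cbam_si_snr = 11.39        # E-CBAM at AP3 (dB), as reported in the text
vat_snet_si_snr = 10.87      # baseline VAT-SNet (dB)
conv_tasnet_si_snr = 9.73    # Conv-TasNet (dB)

gain_vs_vat = relative_gain(e_cbam_si_snr, vat_snet_si_snr)      # about 4.8 %
gain_vs_conv = relative_gain(e_cbam_si_snr, conv_tasnet_si_snr)  # about 17.1 %
```

In absolute terms, these correspond to SI-SNR gains of 0.52 dB over VAT-SNet and 1.66 dB over Conv-TasNet.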

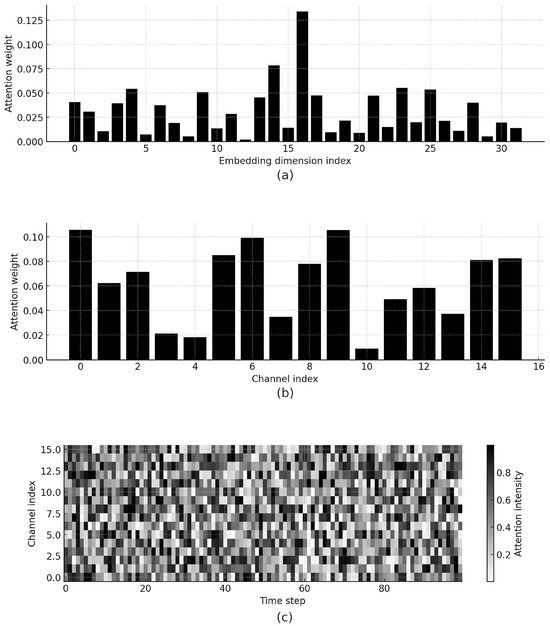

These results confirm that E-CBAM significantly enhances local temporal feature extraction and channel correlation modeling within the TCN-Block. The cooperative effect of efficient channel and spatial attention mitigates the limitations of dilated convolution in capturing fine-grained temporal patterns, while simultaneously emphasizing frames with higher discriminability. Consequently, the integration of E-CBAM is both necessary and beneficial for improving the overall separation performance. To further demonstrate the “attention-driven” behavior of our proposed model, we visualize the attention maps produced by the embedding attention module (EAM) and the efficient convolutional block attention module (E-CBAM). Representative results are shown in Figure 9.

Figure 9.

Visualization of attention mechanisms. (a) EAM highlighting discriminative embedding dimensions. (b) Channel attention weights from E-CBAM (ECA). (c) Temporal attention distribution from E-CBAM (SAM).

As illustrated in Figure 9a, EAM distributes its attention weights unevenly across embedding dimensions. This indicates that the module selectively emphasizes target-relevant embeddings while suppressing less useful components, thereby enhancing the effectiveness of feature fusion.

Figure 9b presents the channel attention weights from E-CBAM’s ECA component. Certain channels receive higher weights than others, suggesting that the model identifies and amplifies discriminative feature channels related to the target source (e.g., vocal harmonics), while down-weighting channels dominated by irrelevant background or accompaniment features.

Finally, Figure 9c shows the temporal attention distribution from the SAM component of E-CBAM. The heatmap highlights that the module allocates stronger attention to specific time steps (e.g., vocal onsets and transient events), while suppressing interference in accompaniment-dominated segments. This behavior demonstrates the module’s ability to capture fine-grained temporal details that are critical for music source separation.

Overall, these visualizations provide direct evidence of how the proposed attention mechanisms emphasize relevant features and suppress irrelevant ones. They not only confirm the quantitative improvements reported in previous sections but also enhance the interpretability of our approach by showing how attention guides the separation process in practice.

4.7. Computational Complexity

We evaluate the computational complexity of ADTDCN using two measures: parameter count (Params) and floating-point operations (FLOPs). The dominant contributor is the 1 × 1 pointwise projection in the TCN backbone, whereas E-CBAM and EAM are lightweight attention modules that add only marginal parameters and computational overhead. Dilation in the TCN enlarges the receptive field without changing the per-layer computational cost. The encoder/decoder cost is implementation-dependent; except for the first layer, the network employs lightweight mappings (e.g., 1 × 1 or grouped convolutions). All figures are computed under the hyperparameters in Table 1. The experimental setting is 4 s of audio at 16 kHz (64,000 samples), an encoder stride that yields T = 7999 frames, and eight TCN layers after each fusion. Under this setting, the model comprises approximately 12.67 M parameters and requires about 202 GFLOPs. The calculation is as follows:

We use 1 MAC = 2 FLOPs. Let $T$ be the temporal length, $C_{\text{in}}$ and $C_{\text{out}}$ the input/output channels, and $K$ the 1D convolution kernel size.

(1) Core Formulas

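- Standard 1D convolution: $\text{Params} = K C_{\text{in}} C_{\text{out}}$, $\text{FLOPs} = 2 K C_{\text{in}} C_{\text{out}} T$

- Pointwise 1 × 1 convolution: $\text{Params} = C_{\text{in}} C_{\text{out}}$, $\text{FLOPs} = 2 C_{\text{in}} C_{\text{out}} T$

- Depthwise-separable 1D convolution: $\text{Params} = K C_{\text{in}} + C_{\text{in}} C_{\text{out}}$, $\text{FLOPs} = 2T\,(K C_{\text{in}} + C_{\text{in}} C_{\text{out}})$

EAM and E-CBAM add negligible parameters/FLOPs relative to the backbone; element-wise nonlinearities and normalization FLOPs are ignored.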

(2) Model Instantiation

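Let $C$ denote the backbone channels, $K$ the kernel size, and $B$ the bottleneck channels. Each TCN layer contains:

- Depthwise-separable conv: depthwise ($K \times C$) + pointwise 1 × 1 ($C \times C$)

- Two bottleneck 1 × 1 projections: $C \to B$ and $B \to C$

Per TCN layer (including all the above): $\text{Params}_{\text{layer}} = KC + C^2 + 2BC$ and $\text{FLOPs}_{\text{layer}} = 2T\,(KC + C^2 + 2BC)$.

Substituting the values of $C$, $K$, and $B$ gives $\text{Params}_{\text{layer}} = 394{,}752$ and $\text{FLOPs}_{\text{layer}} = 789{,}504\,T$. There are 32 TCN layers in total (4 fusion stages, 8 layers each), so $\text{Params}_{\text{TCN}} = 32 \times 394{,}752 \approx 12.63\text{ M}$ and $\text{FLOPs}_{\text{TCN}} = 32 \times 789{,}504\,T = 25{,}264{,}128\,T$. For example, with $T = 7999$: $\text{FLOPs}_{\text{TCN}} = 25{,}264{,}128 \times 7999 \approx 202 \times 10^{9}$ FLOPs $\approx 202$ GFLOPs.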
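The arithmetic can be checked with a short script. The specific values C = 512, K = 3, and B = 128 are an assumption on our part: they are not legible in the surviving text, but they reproduce the reported 394,752 parameters per layer exactly under the per-layer formula above. Likewise, an unpadded encoder with a 16-sample kernel and a stride of 8 is assumed because it yields the reported T = 7999 from 64,000 samples.

```python
def tcn_layer_params(C, K, B):
    """Per-layer parameters: depthwise K*C + pointwise C*C + bottleneck projections C->B and B->C."""
    return K * C + C * C + 2 * B * C


def conv_frames(num_samples, kernel, stride):
    """Output frames of an unpadded strided 1D convolution."""
    return (num_samples - kernel) // stride + 1


C, K, B = 512, 3, 128        # assumed values (see lead-in)
NUM_LAYERS = 32              # 4 fusion stages x 8 TCN layers
T = conv_frames(64000, kernel=16, stride=8)   # assumed stride of 8

params_layer = tcn_layer_params(C, K, B)
params_tcn = NUM_LAYERS * params_layer
flops_tcn = 2 * params_tcn * T               # 1 MAC = 2 FLOPs
```

The pointwise C × C term contributes 262,144 of the 394,752 per-layer parameters, about two thirds. This is consistent with the statement that the 1 × 1 projections dominate the cost.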

(3) Encoder/Decoder

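- Example (first encoder layer, mono input $C_{\text{in}} = 1$, kernel $K = 16$): $\text{Params} = 16\,C_{\text{out}}$, $\text{FLOPs} = 2 \times 16\,C_{\text{out}}\,T$.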

- Other encoder/decoder layers follow the core formulas above. Their total cost is small compared to the TCN backbone. EAM/E-CBAM overhead is negligible.

In summary, the total parameter count (dominated by the TCN backbone) is approximately 12.63 M, or about 12.67 M once the encoder, decoder, and attention modules are included. The total computation is approximately 202 GFLOPs at T = 7999.

5. Conclusions

In this paper, we present an attention-enhanced time-domain convolutional network designed to improve feature fusion and local pattern extraction for music source separation. The proposed model integrates the embedding attention module and the efficient convolutional block attention module into the separation framework, enabling dynamic assignment of relevance weights to input signals and more effective capture of critical temporal dependencies. Experimental results show that the proposed model consistently outperforms baseline approaches in vocal–accompaniment separation, particularly in complex and overlapping musical environments. Beyond accuracy improvements, the incorporation of attention mechanisms also enhances the interpretability and adaptability of the model.

5.1. Practical Applications

High-quality vocal and accompaniment separation directly enables a wide range of real-world applications across music production, education, consumer entertainment, accessibility, and music information retrieval (MIR).

- Professional music production: Clean stems support remixing, remastering, and restoration of legacy recordings. Isolated vocals enable a cappella creation and singer re-synthesis, while accompaniment stems facilitate sampling and mashups with minimal bleed and artifacts.

- Live and interactive audio: Real-time or near-real-time separation allows on-stage vocal enhancement, instrument emphasis/suppression in live mixing, and audience-personalized sound fields in immersive events.

- Music education and practice: Track-level isolation lets learners solo or mute parts to study arrangement, articulation, and intonation. Educators can create stem-based exercises and feedback tools that target pitch, rhythm, and blend.

- Consumer applications: Karaoke and singing apps rely on robust vocal removal/extraction; streaming platforms can provide interactive controls (e.g., "vocals up," "drums down") to personalize listening. Clean stems also improve AI cover generation and user-driven remixes.

- Accessibility and health: Speech/vocal foregrounding improves intelligibility for hearing-impaired users. Customized mixes can reduce listening fatigue and support therapeutic or rehabilitation scenarios.

- MIR and downstream AI tasks: Separated sources improve chord recognition, beat/tempo estimation, melody transcription, singer identification, and timbre analysis. Cleaner inputs reduce error propagation in tagging, recommendation, and generative modeling.

Our method's time-domain convolutional design and enhanced discriminative feature extraction (e.g., attention to local temporal patterns and harmonic cues) are well aligned with these use cases. It reduces the cross-talk and artifacts that typically hinder remixing and transcription, and its convolutional structure lends itself to the low-latency inference desired in interactive applications.

5.2. Future Work

Notably, the current work focuses on binary vocal–accompaniment separation, and extending ADTDCN to more complex separation tasks remains a key direction for future research—this extension faces some core challenges:

(1) Higher inter-source spectral overlap: Unlike the relatively distinct spectral boundaries between vocals and accompaniment, multiple instruments (e.g., violin vs. viola, piano vs. harpsichord) often share overlapping harmonics and transient patterns, increasing feature discrimination difficulty;

(2) Diverse dynamic feature patterns: Instruments with different acoustic properties (e.g., sustained strings vs. impulsive percussion, narrow-band flutes vs. broad-band drums) require the model to adapt to varying temporal-spatial feature scales;

(3) Significant energy dynamic range differences: Weak-signal instruments (e.g., clarinet) are easily overwhelmed by loud instruments (e.g., trumpet), leading to biased mask estimation for low-energy sources;

(4) Cross-genre acoustic heterogeneity: Unlike stable vocal–accompaniment feature distribution in a single genre, accompaniment layers across genres (e.g., classical orchestration vs. electronic synth, jazz brass vs. rock guitar) differ sharply in spectral density, rhythmic base frequency, and vocal-timbre matching—hindering transfer of single-genre learned discrimination patterns, causing separation accuracy fluctuations in non-training genres;

(5) Comprehensive model efficacy constraints: The computational cost of multiple attention mechanisms (which limits real-time feasibility), the risk of overfitting when the dataset lacks size or diversity, the limited interpretability of attention mechanisms, and a single-channel input design that cannot exploit multi-channel spatial information together restrict the model’s practical deployment and generalization.

To address these challenges, we plan to adopt the following optimization strategies built upon ADTDCN’s core architecture:

(1) Upgrade EAM to a multi-source embedding attention module (M-EAM): Replace the binary weighting mechanism with multi-head attention that dynamically learns weight distributions over multiple instrument embeddings, so that underrepresented instruments (e.g., weak woodwinds) receive sufficient attention;

(2) Extend E-CBAM to a multi-scale E-CBAM (MS-E-CBAM): Introduce variable 1D convolution kernel sizes (e.g., 3 × 1 for fine-grained wind instrument features, 7 × 1 for broad temporal percussion features) to capture instrument-specific local patterns;

(3) Adopt a multi-task joint training framework: Combine multi-instrument separation with instrument classification, using category priors (e.g., timbre templates of violins, cellos, and trumpets) to guide the network toward source-specific features and reduce cross-source interference;

(4) Introduce a Genre-Adaptive Guidance Branch (GAG-B) into E-CBAM: Use 1D convolution to extract key genre features (e.g., spectral entropy, rhythmic complexity) and use them to adjust the attention weight allocation dynamically, so that the model adapts to the accompaniment characteristics of different genres (e.g., the broad spectrum of classical orchestration, the transient signals of electronic music) and cross-genre fluctuations in separation accuracy are reduced;

(5) Construct an interpretable multi-channel framework: Adopt dynamic sparse attention to reduce redundant computation, introduce contrastive learning to improve adaptation to diverse data, add attention heatmap visualization modules to improve interpretability, and extend the input layer to support multi-channel feature fusion.
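To make strategy (1) more concrete, the following framework-free Python sketch shows how multi-head attention could assign per-head weights to N instrument embeddings given a mixture query. It is a hypothetical illustration, not the M-EAM design: the function names are invented, and the learned query, key, and value projections of a real multi-head attention layer are replaced by identity maps.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_source_weights(
    query: Vector, source_embs: List[Vector], num_heads: int
) -> Tuple[List[Vector], Vector]:
    """Return per-head softmax weights over the sources and the fused embedding.

    Hypothetical sketch: learned Q/K/V projections are omitted (identity), so each
    head scores its slice of every source embedding against the same query slice.
    """
    dim = len(query)
    if dim % num_heads != 0:
        raise ValueError("embedding size must be divisible by num_heads")
    head_dim = dim // num_heads
    head_weights: List[Vector] = []
    fused = [0.0] * dim
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        # Scaled dot-product score of each source against the query, per head.
        scores = [
            sum(q * k for q, k in zip(query[lo:hi], emb[lo:hi])) / math.sqrt(head_dim)
            for emb in source_embs
        ]
        weights = softmax(scores)  # normalized over sources, independently per head
        head_weights.append(weights)
        # The weighted sum of source slices fills this head's part of the output.
        for w, emb in zip(weights, source_embs):
            for d in range(lo, hi):
                fused[d] += w * emb[d]
    return head_weights, fused
```

Because the softmax is taken over sources separately in each head, different heads can favour different instruments. With `query = [1.0, 0.0, 0.0, 1.0]`, three one-hot source embeddings `[1, 0, 0, 0]`, `[0, 1, 0, 0]`, `[0, 0, 0, 1]`, and two heads, head 0 gives the largest weight to the first source and head 1 to the third.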
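Strategy (2) builds on E-CBAM, which combines a channel attention based on 1D convolution with preserved spatial attention. A minimal, framework-free sketch of a multi-scale variant for a [channels × time] feature map follows. Assumptions: kernel weights are fixed uniform placeholders rather than learned, the (3, 7) kernel set mirrors the 3 × 1 / 7 × 1 example above, the "spatial" branch operates along time only, and the adaptive channel-kernel rule is the one proposed for ECA-Net (γ = 2, b = 1), which may not match ADTDCN's configuration. All function names are hypothetical.

```python
import math
from typing import List, Sequence

FeatureMap = List[List[float]]  # indexed as [channel][time]


def sigmoid(x: float) -> float:
    """Overflow-safe logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def conv1d_same(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Zero-padded 1D cross-correlation with output length equal to input (odd kernel)."""
    pad = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - pad
            if 0 <= idx < n:
                acc += w * signal[idx]
        out.append(acc)
    return out


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """ECA-Net rule: (log2(C) + b) / gamma, truncated, then bumped up to an odd integer."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def channel_attention(x: FeatureMap) -> List[float]:
    """ECA-style weights: average-pool over time, 1D conv across channels, sigmoid."""
    pooled = [sum(row) / len(row) for row in x]
    k = eca_kernel_size(len(x))
    kernel = [1.0 / k] * k  # placeholder; learned in a real module
    return [sigmoid(v) for v in conv1d_same(pooled, kernel)]


def temporal_attention(x: FeatureMap, kernel_sizes: Sequence[int] = (3, 7)) -> List[float]:
    """CBAM-style attention along time, computed at several kernel sizes and averaged."""
    n_ch, n_t = len(x), len(x[0])
    avg = [sum(x[c][t] for c in range(n_ch)) / n_ch for t in range(n_t)]
    mx = [max(x[c][t] for c in range(n_ch)) for t in range(n_t)]
    branches = []
    for k in kernel_sizes:
        kernel = [1.0 / (2 * k)] * k  # placeholder weights shared by avg and max maps
        a, m = conv1d_same(avg, kernel), conv1d_same(mx, kernel)
        branches.append([ai + mi for ai, mi in zip(a, m)])
    return [sigmoid(sum(vals) / len(branches)) for vals in zip(*branches)]


def ms_e_cbam(x: FeatureMap) -> FeatureMap:
    """Channel attention followed by multi-scale temporal attention (CBAM ordering)."""
    ca = channel_attention(x)
    x = [[v * ca[c] for v in row] for c, row in enumerate(x)]
    ta = temporal_attention(x)
    return [[v * ta[t] for t, v in enumerate(row)] for row in x]
```

Since both attention maps lie in (0, 1), the block only reweights features and never amplifies them; in a trained network, the learned kernels would decide which channels and time steps are emphasized. For 64 channels, the ECA rule gives a channel kernel of size 3; for 256 channels, size 5.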
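Strategy (3) implies a combined training objective. One plausible form, sketched below under stated assumptions, is the negative SI-SNR of the separated waveforms plus a weighted instrument-classification cross-entropy. SI-SNR appears in this manuscript's abbreviation list, but using it as the separation term here is an assumption, as are the weight `lam = 0.1` and the hypothetical classification head that would produce `class_logits`.

```python
import math
from typing import List, Sequence


def si_snr(est: Sequence[float], ref: Sequence[float], eps: float = 1e-8) -> float:
    """Scale-invariant SNR (dB) between an estimated and a reference waveform."""
    n = len(ref)
    est_mean, ref_mean = sum(est) / n, sum(ref) / n
    est_c = [e - est_mean for e in est]  # zero-mean both signals first
    ref_c = [r - ref_mean for r in ref]
    dot = sum(e * r for e, r in zip(est_c, ref_c))
    ref_energy = sum(r * r for r in ref_c) + eps
    target = [dot / ref_energy * r for r in ref_c]  # projection of est onto ref
    noise = [e - t for e, t in zip(est_c, target)]
    return 10.0 * math.log10(
        (sum(t * t for t in target) + eps) / (sum(v * v for v in noise) + eps)
    )


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one example, using log-sum-exp for stability."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[label]


def joint_loss(
    est_sources: List[Sequence[float]],
    ref_sources: List[Sequence[float]],
    class_logits: List[Sequence[float]],
    class_labels: List[int],
    lam: float = 0.1,
) -> float:
    """Negative mean SI-SNR over sources plus lam times mean classification loss."""
    sep = -sum(si_snr(e, r) for e, r in zip(est_sources, ref_sources)) / len(ref_sources)
    cls = sum(cross_entropy(z, y) for z, y in zip(class_logits, class_labels)) / len(class_labels)
    return sep + lam * cls
```

SI-SNR ignores the overall gain of the estimate (a rescaled copy of the reference scores very high), so the classification term, rather than loudness, is what would inject instrument identity into training in this formulation.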
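Spectral entropy, one of the genre descriptors named in strategy (4), has a simple closed form: normalize the power spectrum of a frame into a distribution p_k and compute H = -Σ p_k log2 p_k, optionally divided by log2 of the number of bins. The sketch below computes it explicitly with a direct DFT. It is a hand-crafted reference descriptor, not the learned 1D-convolution branch proposed for GAG-B; the function names, frame length, and hop size are illustrative assumptions.

```python
import cmath
import math
from typing import List, Sequence


def power_spectrum(frame: Sequence[float]) -> List[float]:
    """One-sided power spectrum (bins 0..N/2) via a direct O(N^2) DFT, for illustration."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        spectrum.append(abs(coeff) ** 2)
    return spectrum


def spectral_entropy(frame: Sequence[float], normalize: bool = True, eps: float = 1e-12) -> float:
    """Shannon entropy of the normalized power spectrum (in [0, 1] when normalize=True)."""
    power = power_spectrum(frame)
    total = sum(power)
    if total < eps:  # silent frame: define entropy as 0
        return 0.0
    probs = [p / total for p in power]
    h = -sum(p * math.log2(p) for p in probs if p > 0)
    return h / math.log2(len(probs)) if normalize else h


def frame_entropies(signal: Sequence[float], frame_len: int = 256, hop: int = 128) -> List[float]:
    """Per-frame spectral entropy sequence, e.g. one input channel for a guidance branch."""
    return [
        spectral_entropy(signal[s : s + frame_len])
        for s in range(0, len(signal) - frame_len + 1, hop)
    ]
```

A perfectly flat spectrum, such as that of a single impulse, gives a normalized entropy of 1, while a cosine centred exactly on a DFT bin gives a value close to 0.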

Therefore, future work will explore the following:

(1) Extending ADTDCN to more complex multi-instrument scenarios, such as the various instrument combinations discussed above, to broaden its applicability;

(2) Integrating multi-head attention and cross-modal attention (e.g., fusing audio waveform features with music score semantic features) to further enrich the model’s representational capacity;

(3) Designing a lightweight version of the network (e.g., by pruning redundant E-CBAM branches and quantizing convolution kernels) for real-time music separation scenarios (e.g., live streaming, real-time mixing);

(4) Constructing a large-scale annotated dataset covering 15+ genres (integrating GTZAN, MedleyDB, etc.), optimizing pre-training strategies with cross-genre transfer learning, and verifying the model’s separation performance in niche genres (e.g., folk music, extreme electronic music) to further improve cross-scenario adaptability;

(5) Investigating cross-channel feature interactions (e.g., dual-channel attention flows) to exploit spatial information, establishing quantitative correlations between attention patterns and separation performance (e.g., through gradient attribution analysis), and using self-supervised pre-training to reduce reliance on large annotated datasets, thereby addressing the comprehensive efficacy constraints from multiple directions.
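Item (3) mentions pruning and kernel quantization. As a hedged illustration of those two generic compression steps, the sketch below applies unstructured magnitude pruning and symmetric per-tensor int8 quantization to a flattened convolution kernel. It does not show structured pruning of whole E-CBAM branches, which would remove entire sub-modules, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of smallest-|w| weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map int8 codes back to floating-point weights."""
    return [v * scale for v in q]
```

With rounding to the nearest integer code, the reconstruction error of each weight is at most half the quantization step (`scale / 2`), which is why a single outlier weight, by inflating `max_abs`, coarsens the step for the whole tensor; per-channel scales are a common refinement.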

Author Contributions

Conceptualization and project administration, M.L.; methodology, writing (original draft preparation), formal analysis, visualization, and software, Z.Z.; data curation and validation, X.Q.; investigation and resources, C.S.; writing (review and editing) and supervision, R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ADTDCN | Attention-driven time-domain convolutional network |
| BSS_EVAL | Blind source separation evaluation toolbox |
| CASA | Computational auditory scene analysis |
| CBAM | Convolutional block attention module |
| EAM | Embedding attention module |
| E-CBAM | Efficient convolutional block attention module |
| GSAR | Global source-to-artifacts ratio |
| GSDR | Global source-to-distortion ratio |
| GSIR | Global source-to-interference ratio |
| IBM | Ideal binary mask |
| MIR | Music information retrieval |
| NMF | Non-negative matrix factorization |
| RNNs | Recurrent neural networks |
| SDR | Source-to-distortion ratio |
| SI-SNR | Scale-invariant signal-to-noise ratio |
| TCN-Block | Temporal convolutional network block |
| TCN | Time-domain convolutional network |
| VAT-SNet | Vocal and accompaniment time-domain separation network |

References

- Li, W.; Li, Z.; Gao, Y. Understanding Digital Music: A Review of Music Information Retrieval Technologies. Fudan Univ. J. (Nat. Sci.) 2018, 57, 271–313.

- Kang, J.; Wang, H.; Su, G.; Liu, L. A Survey on Music Emotion Recognition. Comput. Eng. Appl. 2022, 58, 64–72.

- Kum, S.; Nam, J. Joint detection and classification of singing voice melody using convolutional recurrent neural networks. Appl. Sci. 2019, 9, 1324.

- You, S.D.; Liu, C.H.; Chen, W.K. Comparative study of singing voice detection based on deep neural networks and ensemble learning. Hum.-Centric Comput. Inf. Sci. 2018, 8, 34.

- Sharma, B.; Das, R.K.; Li, H. On the Importance of Audio-Source Separation for Singer Identification in Polyphonic Music. In Proceedings of the INTERSPEECH, Graz, Austria, 15–19 September 2019; pp. 2020–2024.

- Weintraub, M. A computational model for separating two simultaneous talkers. In Proceedings of the ICASSP’86—IEEE International Conference on Acoustics, Speech, and Signal Processing, Tokyo, Japan, 7–11 April 1986; Volume 11, pp. 81–84.

- Bregman, A.S. Progress in the study of auditory scene analysis. In Proceedings of the 2007 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, New Paltz, NY, USA, 21–24 October 2007; pp. 122–126.

- Rosenthal, D.F.; Okuno, H.G. Computational Auditory Scene Analysis: Proceedings of the IJCAI-95 Workshop; CRC Press: Boca Raton, FL, USA, 2021.

- Roman, N.; Wang, D.; Brown, G.J. Speech segregation based on sound localization. J. Acoust. Soc. Am. 2003, 114, 2236–2252.

- Hu, G.; Wang, D. Monaural speech segregation based on pitch tracking and amplitude modulation. IEEE Trans. Neural Netw. 2004, 15, 1135–1150.

- Hu, G.; Wang, D. Auditory segmentation based on onset and offset analysis. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 396–405.

- Jin, Z.; Wang, D. A supervised learning approach to monaural segregation of reverberant speech. IEEE Trans. Audio Speech Lang. Process. 2009, 17, 625–638.

- Hu, G.; Wang, D. A tandem algorithm for pitch estimation and voiced speech segregation. IEEE Trans. Audio Speech Lang. Process. 2010, 18, 2067–2079.

- Lee, D.D.; Seung, H.S. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791.

- Chanrungutai, A.; Ratanamahatana, C.A. Singing voice separation for mono-channel music using non-negative matrix factorization. In Proceedings of the 2008 International Conference on Advanced Technologies for Communications, Hanoi, Vietnam, 6–9 October 2008; pp. 243–246.

- Rafii, Z.; Pardo, B. A simple music/voice separation method based on the extraction of the repeating musical structure. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 221–224.

- Rafii, Z.; Pardo, B. Repeating pattern extraction technique (REPET): A simple method for music/voice separation. IEEE Trans. Audio Speech Lang. Process. 2012, 21, 73–84.

- Liutkus, A.; Rafii, Z.; Badeau, R.; Pardo, B.; Richard, G. Adaptive filtering for music/voice separation exploiting the repeating musical structure. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 53–56.

- Wang, Y.; Wang, D. Towards scaling up classification-based speech separation. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 1381–1390.

- Huang, P.S.; Kim, M.; Hasegawa-Johnson, M.; Smaragdis, P. Deep learning for monaural speech separation. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 1562–1566.

- Jansson, A.; Humphrey, E.; Montecchio, N.; Bittner, R.; Kumar, A.; Weyde, T. Singing voice separation with deep U-Net convolutional networks. In Proceedings of the 18th International Society for Music Information Retrieval Conference, Suzhou, China, 23–27 October 2017.

- Park, S.; Kim, T.; Lee, K.; Kwak, N. Music source separation using stacked hourglass networks. arXiv 2018, arXiv:1805.08559.

- Lu, W.T.; Wang, J.C.; Kong, Q.; Hung, Y.N. Music source separation with band-split RoPE transformer. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 481–485.

- Tong, W.; Zhu, J.; Chen, J.; Kang, S.; Jiang, T.; Li, Y.; Wu, Z.; Meng, H. SCNet: Sparse compression network for music source separation. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 1276–1280.

- Takahashi, N.; Mitsufuji, Y. D3Net: Densely connected multidilated DenseNet for music source separation. arXiv 2020, arXiv:2010.01733.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Luo, Y.; Mesgarani, N. Conv-TasNet: Surpassing ideal time–frequency magnitude masking for speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1256–1266.

- Rouard, S.; Massa, F.; Défossez, A. Hybrid transformers for music source separation. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Qiao, X.; Luo, M.; Shao, F.; Sui, Y.; Yin, X.; Sun, R. VAT-SNet: A convolutional music-separation network based on vocal and accompaniment time-domain features. Electronics 2022, 11, 4078.

- Xu, L.; Wang, J.; Yang, W.; Luo, Y. Multi Feature Fusion Audio-visual Joint Speech Separation Algorithm Based on Conv-TasNet. J. Signal Process. 2021, 37, 1799–1805.

- Hasumi, T.; Kobayashi, T.; Ogawa, T. Investigation of network architecture for single-channel end-to-end denoising. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 24–28 August 2020; pp. 441–445.

- Zhang, Y.; Jia, M.; Gao, S.; Wang, S. Multiple Sound Sources Separation Using Two-stage Network Model. In Proceedings of the 2021 4th International Conference on Information Communication and Signal Processing (ICICSP), Shanghai, China, 24–26 September 2021; pp. 264–269.

- Jin, R.; Ablimit, M.; Hamdulla, A. Speech separation and emotion recognition for multi-speaker scenarios. In Proceedings of the 2022 3rd International Conference on Pattern Recognition and Machine Learning (PRML), Chengdu, China, 22–24 July 2022; pp. 280–284.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Haase, D.; Amthor, M. Rethinking depthwise separable convolutions: How intra-kernel correlations lead to improved MobileNets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14600–14609.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hsu, C.L.; Jang, J.S.R. On the improvement of singing voice separation for monaural recordings using the MIR-1K dataset. IEEE Trans. Audio Speech Lang. Process. 2009, 18, 310–319.

- Vincent, E.; Gribonval, R.; Févotte, C. Performance measurement in blind audio source separation. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1462–1469.