Ensemble Learning Model for Industrial Policy Classification Using Automated Hyperparameter Optimization

Abstract

1. Introduction

2. Definition of Industrial Policy

- (1) Promotion of economic growth: Providing strategic direction to support the expansion of gross domestic product and overall economic growth. This includes fostering the development of emerging industrial sectors and encouraging innovation and advancement in existing industries.

- (2) Export promotion: Strengthening the nation’s export capabilities to compete in the global market. This involves enhancing the competitiveness of domestic products and services abroad while promoting international trade opportunities.

- (3) Technological innovation and R&D: Developing strategic initiatives to enhance national technological capabilities and drive innovation. This includes supporting R&D efforts, stimulating collaboration between industry and academia, and integrating novel technologies to boost the competitiveness of key industrial sectors.

- (4) Balanced regional and industrial development: Implementing strategies to ensure equitable growth across domestic regions and industrial sectors. This includes reducing regional disparities, supporting sustainable industrial expansion, and promoting infrastructure development to support long-term economic balance.

- (5) Job creation: Stimulating employment growth by promoting the expansion of emerging and developing industrial sectors. This requires supporting workforce development programs, advancing investment in high-potential industries, and implementing policies that reduce unemployment and enhance job stability.

3. Research Methodology

- (1) Data collection and labeling

- (2) Data preprocessing

- (3) Data loading

  - (i) A single random split may, by chance, result in a ‘favorable’ or ‘unfavorable’ division. Using multiple random seeds allows this chance variation to be averaged out.

  - (ii) This allows us to verify whether the model consistently performs well across different data splits and to ensure that it generalizes rather than overfits to a specific split.

  - (iii) By measuring performance on five different test datasets, more reliable estimates of model performance can be obtained, and the corresponding mean and standard deviation of the performance metrics can be assessed.

  - (iv) This allows us to examine the extent to which the randomness in data splitting affects model performance.

- (4) Machine learning model development

- (5) Hyperparameter tuning

- (6) Model evaluation
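The repeated random splits described under data loading can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's pipeline: the synthetic data stands in for the vectorized policy documents, and the seed values, split ratio, and helper name `evaluate_over_seeds` are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the vectorized policy documents (assumption).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)

SEEDS = [0, 1, 2, 3, 4]  # hypothetical seeds; the text only states five splits
TEST_SIZE = 0.2          # hypothetical split ratio


def evaluate_over_seeds(X, y, seeds=SEEDS, test_size=TEST_SIZE):
    """Fit one model per random split and return the per-split test accuracies."""
    scores = []
    for seed in seeds:
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=test_size, stratify=y, random_state=seed
        )
        model = LogisticRegression(max_iter=1000)
        model.fit(X_tr, y_tr)
        scores.append(accuracy_score(y_te, model.predict(X_te)))
    return np.array(scores)


scores = evaluate_over_seeds(X, y)
# The mean summarizes typical performance; the standard deviation shows
# how sensitive the result is to the particular split.
print(f"mean={scores.mean():.3f}, std={scores.std(ddof=1):.3f}")
```

Reporting the standard deviation alongside the mean makes it visible when an apparent difference between two models is smaller than the variation caused by the split itself.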

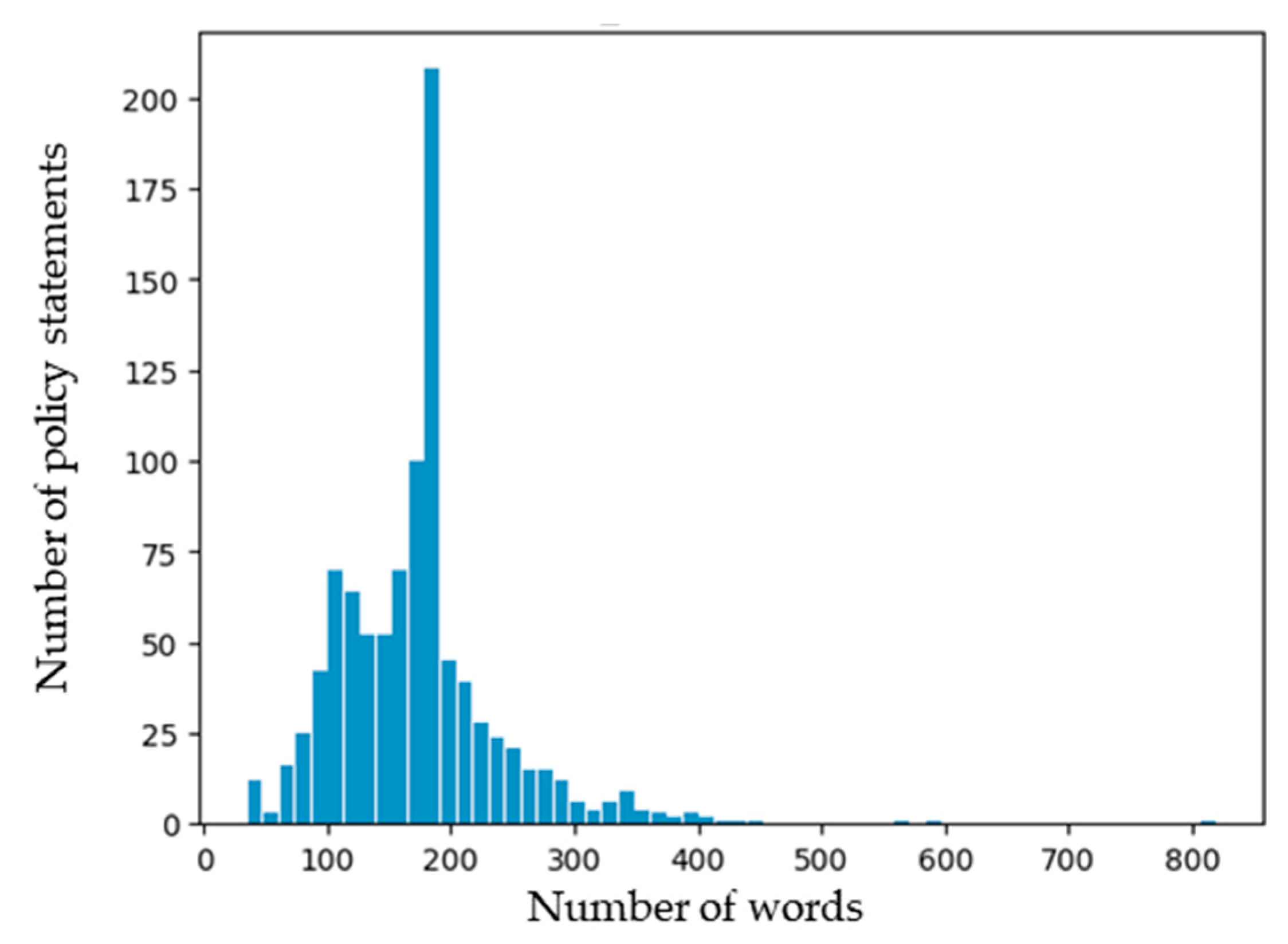

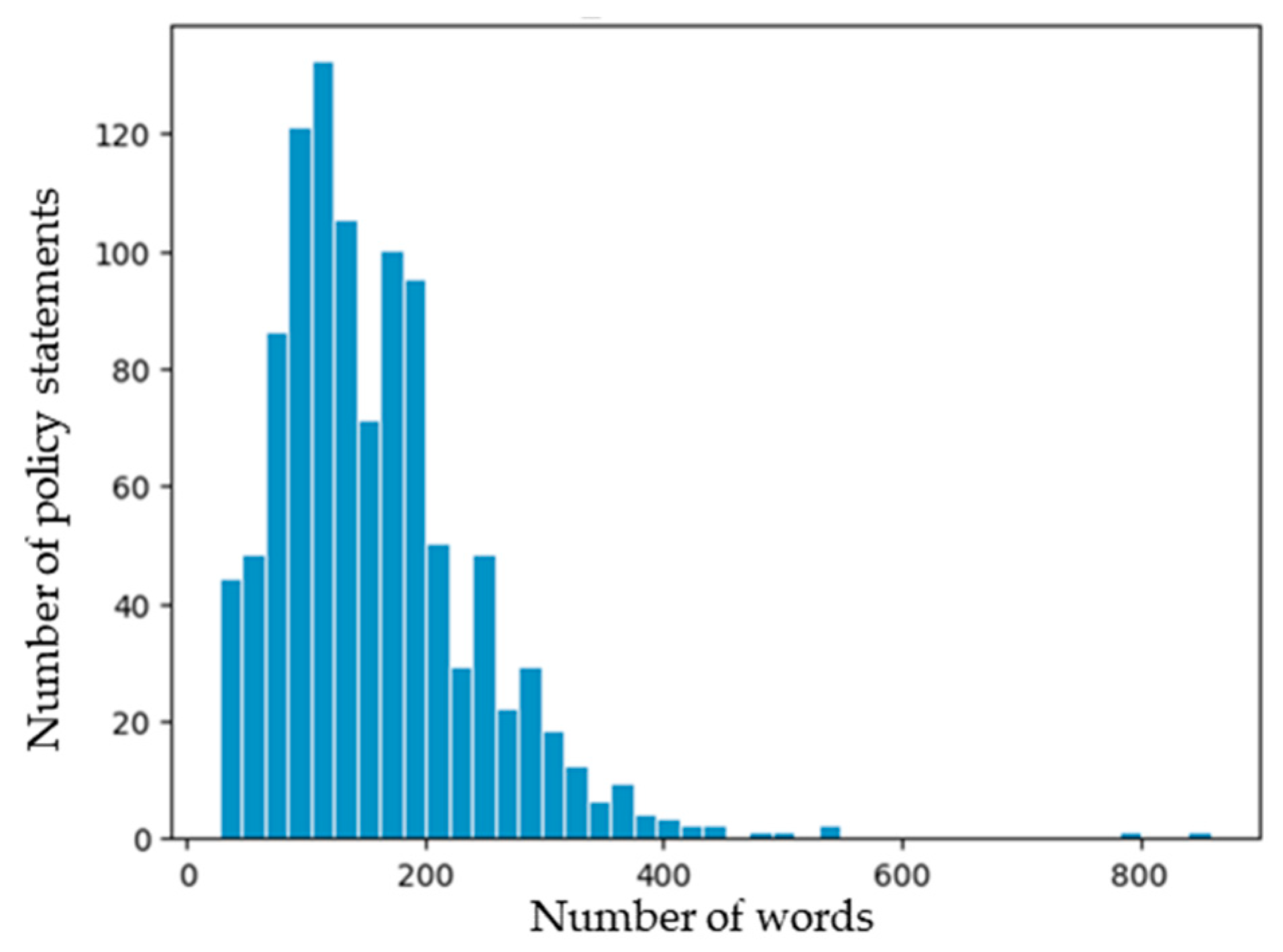

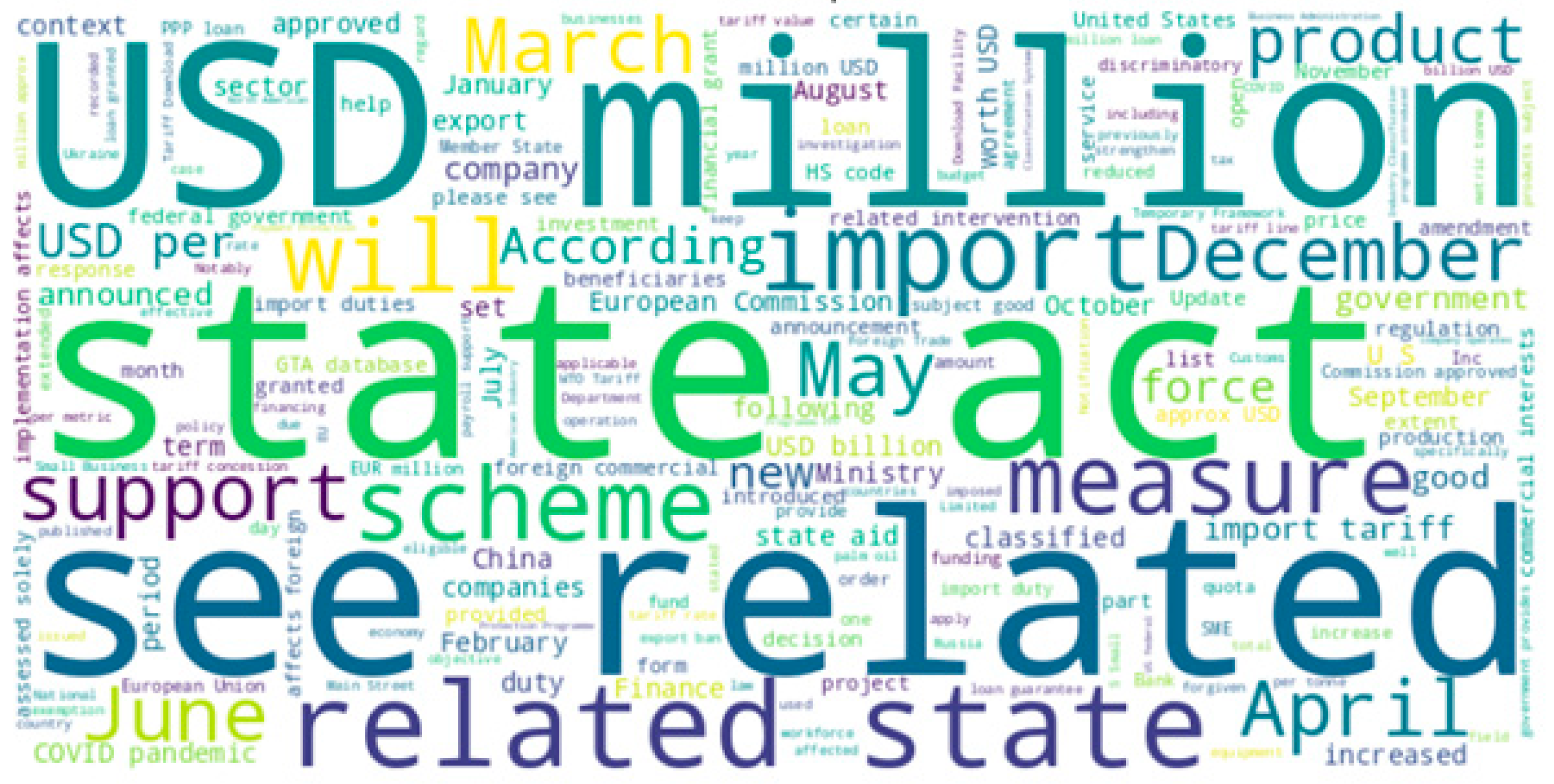

3.1. Data for Analysis

3.2. Main Contributions of Research

- (1) A new approach is introduced to classify the large volume of policy documents released daily by the Global Trade Alert (GTA) as industrial policy (IP) or non-IP. The findings of this study can thus be used to classify these policy documents automatically, facilitating more timely and strategic responses to IPs that may affect domestic markets.

- (2) This study recommends employing ensemble-based analytical frameworks that outperform the conventional logistic regression approach. First, the adoption of random forest, a widely recognized bagging technique, is proposed, followed by an assessment of its predictive performance. Next, the outcomes of boosting algorithms commonly applied in classification tasks (such as gradient boosting, LightGBM, and XGBoost) are examined and discussed.

- (3) Finally, an approach that integrates cross-validation with automated hyperparameter optimization is proposed to tune the parameters of the logistic regression and ensemble-based models. Optuna, a framework designed for automated hyperparameter optimization, is applied to further enhance model accuracy.

4. Machine Learning Model

4.1. Logistic Regression

- (i) The regularization parameter (C) is the inverse of the regularization strength applied during model training. Lower C values impose stronger regularization, whereas higher values impose weaker constraints. By default, C is set to 1.0.

- (ii) The regularization type (penalty) specifies the form of constraint imposed on the model during training. Commonly used options are l1 (Lasso), l2 (Ridge, the default setting), elasticnet (a blend of l1 and l2), or none (no regularization applied).

- (iii) The optimization algorithm (solver) specifies the method used to adjust the model parameters during training. Available options include liblinear, lbfgs, newton-cg, sag, and saga, each characterized by distinct computational efficiency profiles [16,17,18]. By default, lbfgs is selected as the solver.

- (iv) The class weight parameter (class_weight) modifies the importance assigned to each class in order to compensate for imbalanced class distributions. Options include ‘none’ (default: uniform weighting) and ‘balanced’ (assigns weights inversely proportional to class occurrence rates).

- (v) The max_iter parameter sets the maximum number of iterations the solver may execute to reach convergence. A higher value for this parameter may enhance stability when handling complex or high-dimensional datasets. The default is 100.
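As a rough illustration of how these settings map onto scikit-learn, the sketch below builds a logistic regression with the defaults listed above and a second one with a smaller C. The synthetic data and the value C=0.01 are assumptions chosen only to make the effect of regularization visible.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in data (assumption).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Baseline: the defaults described above. The penalty is left at its
# default (l2) rather than passed explicitly.
baseline = LogisticRegression(C=1.0, solver="lbfgs", class_weight=None, max_iter=100)

# Same model with a smaller C, i.e. stronger regularization (hypothetical value).
stronger = LogisticRegression(C=0.01, solver="lbfgs", class_weight=None, max_iter=100)

baseline.fit(X, y)
stronger.fit(X, y)

# Stronger l2 regularization shrinks the coefficient vector.
norm_base = np.linalg.norm(baseline.coef_)
norm_strong = np.linalg.norm(stronger.coef_)
print(f"||w|| at C=1.0: {norm_base:.3f}, at C=0.01: {norm_strong:.3f}")
```

Setting class_weight="balanced" instead of None would reweight the loss by inverse class frequency, which is an alternative to resampling when classes are imbalanced.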

4.2. Ensemble Model

4.2.1. Random Forest

- (i) The number of trees, defined by n_estimators, determines how many decision trees are built in the model. Using a larger value generally improves model stability and performance, but increases computational expense. By default, n_estimators is set to 100.

- (ii) The max_features parameter (maximum number of features) specifies how many predictor variables are considered when splitting a node during tree construction. Smaller values increase model randomness and can help reduce overfitting. In classification tasks, max_features is often defined as the square root of the total feature count (sqrt).

- (iii) The max_depth parameter (maximum depth) controls how deep each tree can grow. Allowing greater depth helps the model learn more complex relationships but can also lead to overfitting. The default setting is None, which places no explicit limit on depth.

- (iv) The minimum samples required to split (min_samples_split) defines the smallest number of observations that must be present in a node for it to be divided. Higher values result in simpler trees, which can help mitigate overfitting by limiting unnecessary splits. By default, min_samples_split is set to 2.

- (v) The min_samples_leaf parameter (minimum number of samples per leaf) sets the minimum number of data points that must be contained in a leaf node. Increasing this value can help smooth the model’s structure by reducing the occurrence of minor or irregular branches. The default setting is 1.
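A minimal scikit-learn sketch of these random forest settings follows. The data is synthetic, and the constrained configuration (max_depth=3, min_samples_split=10, min_samples_leaf=5) uses hypothetical values chosen only to contrast with the defaults.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data with 16 features, so sqrt(16) = 4 (assumption).
X, y = make_classification(n_samples=300, n_features=16, random_state=0)

# Baseline: the default values described above.
rf = RandomForestClassifier(
    n_estimators=100,
    max_features="sqrt",
    max_depth=None,
    min_samples_split=2,
    min_samples_leaf=1,
    random_state=0,
).fit(X, y)

# A more constrained forest (hypothetical values) for comparison.
constrained = RandomForestClassifier(
    n_estimators=100,
    max_features="sqrt",
    max_depth=3,
    min_samples_split=10,
    min_samples_leaf=5,
    random_state=0,
).fit(X, y)

# Depth of the deepest tree in each forest.
deepest_default = max(tree.get_depth() for tree in rf.estimators_)
deepest_constrained = max(tree.get_depth() for tree in constrained.estimators_)
print(deepest_default, deepest_constrained)
```

With no depth limit the default trees grow until their leaves are pure, which is why the baseline can fit the training data very closely.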

4.2.2. Gradient Boosting

- (i) The n_estimators parameter (number of trees) indicates how many boosting stages are sequentially created. Using a larger value may improve predictive accuracy but may also raise the likelihood of overfitting and computational cost. The default setting is 100.

- (ii) The learning_rate controls how much each individual tree contributes to the final model. A lower value necessitates more iterations to reach a comparable level of accuracy but typically improves generalization. The default setting is 0.1.

- (iii) Maximum tree depth (max_depth) restricts the maximum depth each tree is allowed to reach. Allowing deeper trees helps the model learn more complex patterns in the data, but it also raises the chance of overfitting. The default setting is 3, resulting in relatively shallow trees.

- (iv) The minimum samples for splitting (min_samples_split) specifies the minimum number of samples required to split an internal node. Higher values yield simpler trees, which helps prevent overfitting. The default value is 2.

- (v) The minimum samples per leaf (min_samples_leaf) parameter sets the smallest number of observations needed to create a leaf node. Setting this parameter higher discourages the formation of extremely small leaves, helping to reduce model complexity and overfitting. The default setting is 1.
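The sketch below shows one way to inspect how accuracy evolves as boosting stages are added, using scikit-learn's GradientBoostingClassifier with the defaults listed above. The synthetic data and the 75/25 split are assumptions for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data and a hypothetical 75/25 split.
X, y = make_classification(n_samples=400, n_features=20, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0)

gb = GradientBoostingClassifier(
    n_estimators=100,
    learning_rate=0.1,
    max_depth=3,
    min_samples_split=2,
    min_samples_leaf=1,
    random_state=0,
).fit(X_tr, y_tr)

# staged_predict yields the ensemble's predictions after each boosting stage,
# so the test accuracy can be traced as trees are added one by one.
staged_acc = [accuracy_score(y_te, pred) for pred in gb.staged_predict(X_te)]
best_stage = max(range(len(staged_acc)), key=staged_acc.__getitem__) + 1
print(f"final accuracy={staged_acc[-1]:.3f}, best stage={best_stage}")
```

If the best stage is far below n_estimators, the later trees are adding little on held-out data, which is one practical sign that fewer stages or a lower learning rate may be worth trying.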

4.2.3. XGBoost

- (i) The n_estimators parameter determines how many boosting iterations are performed (total number of trees). Setting this parameter higher can strengthen predictive results, though it could also increase overfitting tendencies. The default value is 100.

- (ii) The learning_rate parameter (step-size shrinkage) scales the contribution of each new tree as the loss function is minimized. A lower value requires more trees to achieve comparable predictive accuracy but generally improves generalization. The default setting is 0.3.

- (iii) Maximum tree depth (max_depth) defines the limit on how deep each tree can grow. Greater depth facilitates the discovery of complex patterns but can heighten the chance of overfitting. By default, max_depth is set to 6.

- (iv) The colsample_bytree parameter specifies the proportion of features (column subsampling) randomly picked to create each tree. This process introduces variability and aids in reducing overfitting. The default setting is 1.0, meaning all features are used.

- (v) Subsample ratio (subsample) sets how much of the training dataset is randomly selected to construct each tree. Using a smaller value can help mitigate overfitting and improve computational efficiency. The default value is 1.0, indicating that the entire dataset is used.
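The interplay between n_estimators and learning_rate can be written as the standard additive update used in boosting. The notation below is an assumption introduced for illustration: $F_m$ is the ensemble after $m$ trees, $f_m$ is the tree fitted at iteration $m$, $\eta$ is learning_rate, and $M$ is n_estimators.

```latex
F_m(x) = F_{m-1}(x) + \eta \, f_m(x), \qquad m = 1, \dots, M, \qquad 0 < \eta \le 1
```

Because each tree's contribution is scaled by $\eta$, a smaller learning rate generally needs a larger $M$ to reach a comparable fit, which is the trade-off noted in items (i) and (ii).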

4.2.4. LightGBM

- (i) The num_leaves parameter (number of leaves) determines how many leaf nodes are allowed at most in a single tree. Given LightGBM’s leaf-wise growth strategy, this parameter plays a critical role in managing model complexity. Increasing this value enables the model to capture more complex patterns, though it simultaneously raises the chance of overfitting. The default setting is 31.

- (ii) Learning rate (learning_rate) influences the step size at each iteration during loss function optimization. Lower values generally require more trees to sustain predictive accuracy but often result in better generalization. The default setting is 0.1.

- (iii) Maximum tree depth (max_depth) sets an upper bound on how deep each tree can grow. Although LightGBM primarily uses a leaf-wise growth strategy, this parameter allows explicit depth limitation to help mitigate overfitting. By default, it is assigned a value of −1, indicating no restriction.

- (iv) The feature_fraction parameter specifies the proportion of features (columns) randomly selected to generate each individual tree. This technique introduces variability and aids in minimizing overfitting. The default value of 1.0 indicates that the model includes all available features.

- (v) The min_data_in_leaf parameter (minimum data in leaf) sets the smallest number of data points that must be present in a leaf node. Setting this value higher discourages the formation of leaves with insufficient data, aiding in mitigating overfitting and promoting better generalization. In the baseline model, the default value is 20.
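The baseline settings for the two libraries can be collected in plain dictionaries, as in the sketch below. The dictionary names and helper functions are hypothetical; the values are the defaults listed in Sections 4.2.3 and 4.2.4, with LightGBM's minimum-data-in-leaf setting written under its library name `min_data_in_leaf`. The libraries are imported lazily, so the dictionaries can be inspected even when XGBoost and LightGBM are not installed.

```python
# Baseline values as listed in Sections 4.2.3 and 4.2.4.
XGB_BASELINE = {
    "n_estimators": 100,
    "learning_rate": 0.3,
    "max_depth": 6,
    "colsample_bytree": 1.0,
    "subsample": 1.0,
}

LGBM_BASELINE = {
    "num_leaves": 31,
    "learning_rate": 0.1,
    "max_depth": -1,          # -1 means no depth limit
    "feature_fraction": 1.0,
    "min_data_in_leaf": 20,
}


def max_leaves_for_depth(depth):
    """Upper bound on the number of leaves of a binary tree with the given depth."""
    return 2 ** depth


def build_models():
    """Instantiate both classifiers; requires xgboost and lightgbm to be installed."""
    from xgboost import XGBClassifier
    from lightgbm import LGBMClassifier

    return XGBClassifier(**XGB_BASELINE), LGBMClassifier(**LGBM_BASELINE)


# A depth-6 tree can hold up to 64 leaves, while the LightGBM default
# of 31 leaves fits within a depth-5 tree (at most 32 leaves).
print(max_leaves_for_depth(XGB_BASELINE["max_depth"]), LGBM_BASELINE["num_leaves"])
```

Because LightGBM grows trees leaf by leaf, num_leaves rather than max_depth is its primary complexity control; when max_depth is set explicitly, keeping num_leaves below 2^max_depth avoids requesting more leaves than the depth limit can hold.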

5. Analytical Results

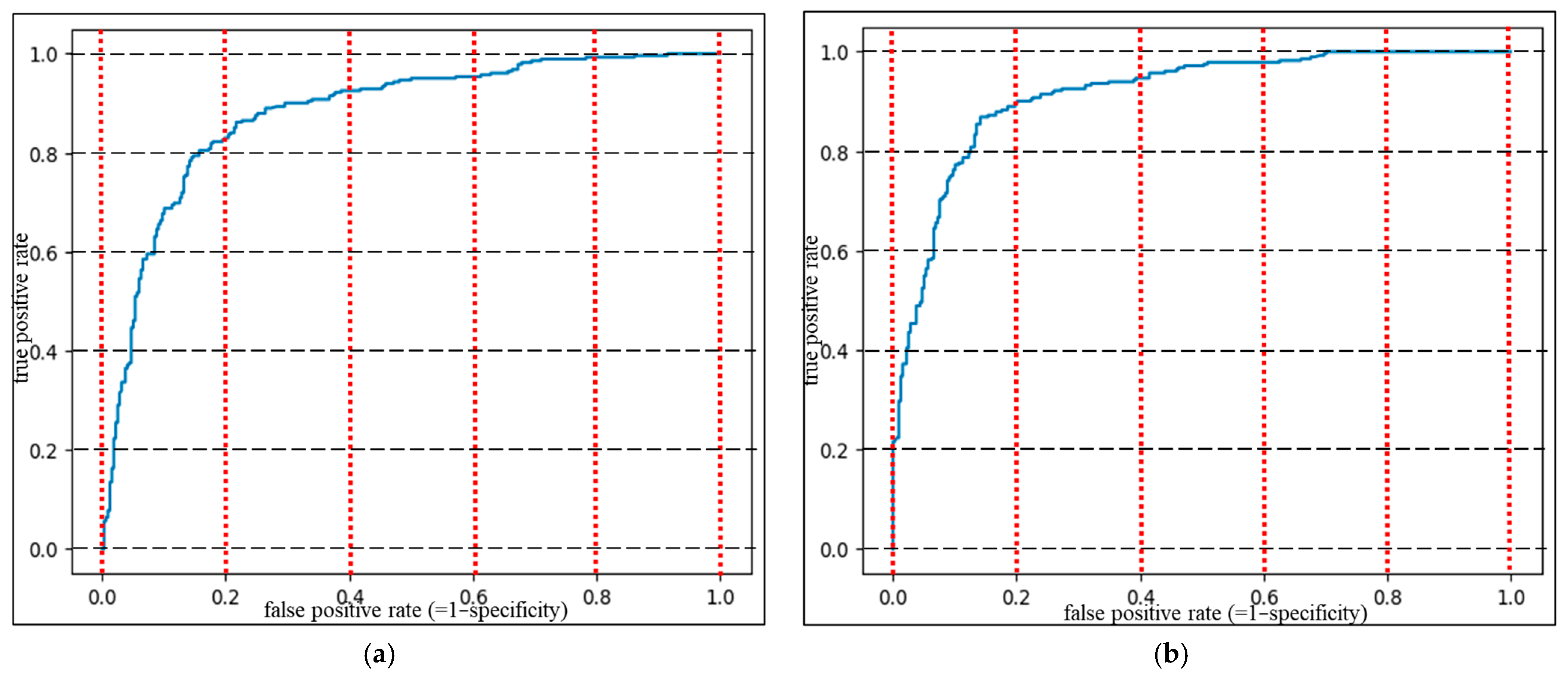

5.1. Results of Baseline Model

- (1) The preset hyperparameter values for each model are applied as outlined in Section 4. To enhance robustness, experiments are conducted on five distinct datasets (including training and test sets) using varied random seed values. The average performance across all iterations is then computed for comparison.

- (2) To construct the training dataset, RandomOverSampler [2,5,65,66,67], a class in the Python package imbalanced-learn, is used to apply an oversampling strategy so that the minority class is increased to match the majority class. This technique improves training accuracy by addressing class imbalance. RandomOverSampler alleviates dataset disparity by randomly replicating samples from the minority class. This straightforward method balances class distributions to achieve either equal or predefined class ratios.
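To make the mechanism concrete, the sketch below re-implements random oversampling with NumPy alone. It is an illustrative stand-in, not the imbalanced-learn code: the function name `random_oversample` and the toy arrays are hypothetical. In imbalanced-learn itself, the equivalent step is a call to `RandomOverSampler(...).fit_resample(X, y)`.

```python
import numpy as np


def random_oversample(X, y, random_state=0):
    """Replicate rows of smaller classes at random until every class
    has as many samples as the largest class."""
    rng = np.random.default_rng(random_state)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    index_parts = []
    for cls, count in zip(classes, counts):
        cls_idx = np.flatnonzero(y == cls)
        # Draw the missing samples with replacement from this class only.
        extra = rng.choice(cls_idx, size=target - count, replace=True)
        index_parts.append(np.concatenate([cls_idx, extra]))
    idx = np.concatenate(index_parts)
    return X[idx], y[idx]


# Toy data: 7 majority-class rows (label 0) and 3 minority-class rows (label 1).
X = np.arange(20).reshape(10, 2)
y = np.array([0] * 7 + [1] * 3)

X_res, y_res = random_oversample(X, y)
print(np.bincount(y_res))  # [7 7]
```

Oversampling is applied to the training split only; replicating rows before the train/test split would let copies of the same document appear on both sides and inflate the test scores.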

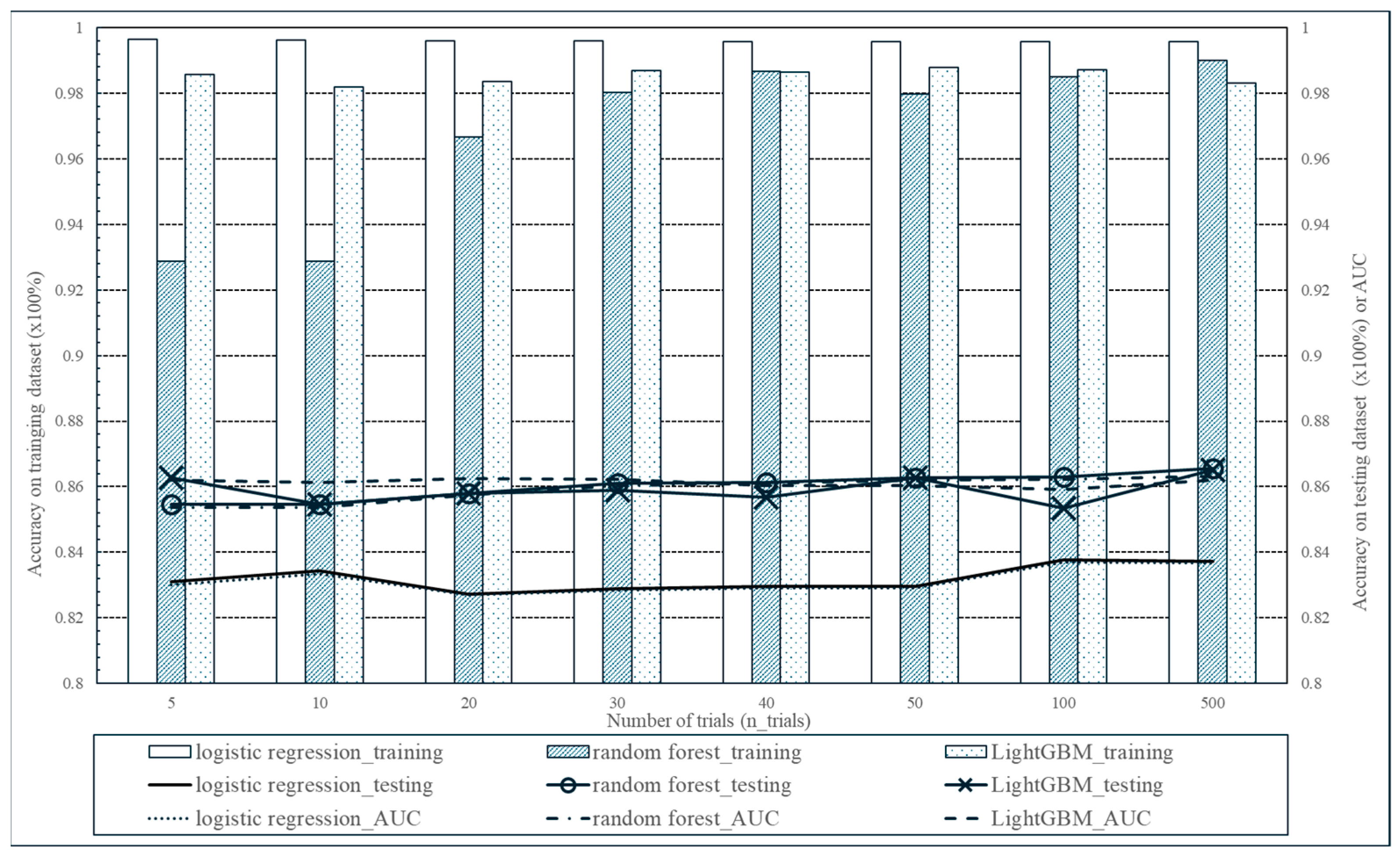

5.2. Results of Optimization Model

- (1) For model tuning, the Optuna library [64,65,66,67] was used. Optuna is an open-source tool created to automate the process of finding the best configurations for machine learning models. It implements techniques such as Bayesian optimization with the tree-structured Parzen estimator, as well as pruning mechanisms that terminate less promising trials at an early stage [64,65,66]. In Optuna, the n_trials parameter sets the number of trials to run, with each trial evaluating one combination of parameters. A higher n_trials value tends to improve the model’s accuracy but requires more processing time. Variations in n_trials were explored for high-performing models to assess their impact on the results.

- (2) For each model, five hyperparameters influencing analytical performance were selected, as shown in Table 6. These hyperparameters were chosen based on insights from the original studies that proposed each model, together with findings from recent research [28,29,37,38,52,58,67]. Table 6 further provides the tuning ranges for each hyperparameter, as well as the optimal values determined for a sample case when n_trials = 10.

- (3) Additionally, 5-fold cross-validation was applied to each training dataset to identify the hyperparameter settings that maximize accuracy. Using these selected parameters, the final performance of the model was assessed.
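The tuning loop described above can be sketched as follows for a random forest. This is a minimal illustration under stated assumptions: the data is synthetic, the search ranges are hypothetical placeholders rather than the ranges in Table 6, and the helper names `cv_accuracy`, `objective`, and `tune` are introduced here only for the example. Optuna is imported inside `tune` so the cross-validation helper can be used on its own.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Synthetic stand-in for one training dataset (assumption).
X_train, y_train = make_classification(n_samples=300, n_features=20, random_state=0)


def cv_accuracy(params, X, y):
    """Mean 5-fold cross-validated accuracy for one hyperparameter setting."""
    model = RandomForestClassifier(random_state=0, **params)
    return cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()


def objective(trial):
    """Optuna objective: sample one configuration and score it by 5-fold CV."""
    # Hypothetical search ranges, not the values reported in Table 6.
    params = {
        "n_estimators": trial.suggest_int("n_estimators", 50, 300),
        "max_depth": trial.suggest_int("max_depth", 3, 20),
        "min_samples_split": trial.suggest_int("min_samples_split", 2, 10),
        "min_samples_leaf": trial.suggest_int("min_samples_leaf", 1, 5),
        "max_features": trial.suggest_categorical("max_features", ["sqrt", "log2"]),
    }
    return cv_accuracy(params, X_train, y_train)


def tune(n_trials=10, seed=0):
    """Run the search and return the best parameters and their CV accuracy."""
    import optuna  # lazy import: only needed when the search is actually run

    sampler = optuna.samplers.TPESampler(seed=seed)
    study = optuna.create_study(direction="maximize", sampler=sampler)
    study.optimize(objective, n_trials=n_trials)
    return study.best_params, study.best_value
```

Calling `tune(n_trials=10)` returns the best configuration found; that configuration would then be refitted on the full training split and evaluated once on the held-out test split, so the test data plays no role in the search.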

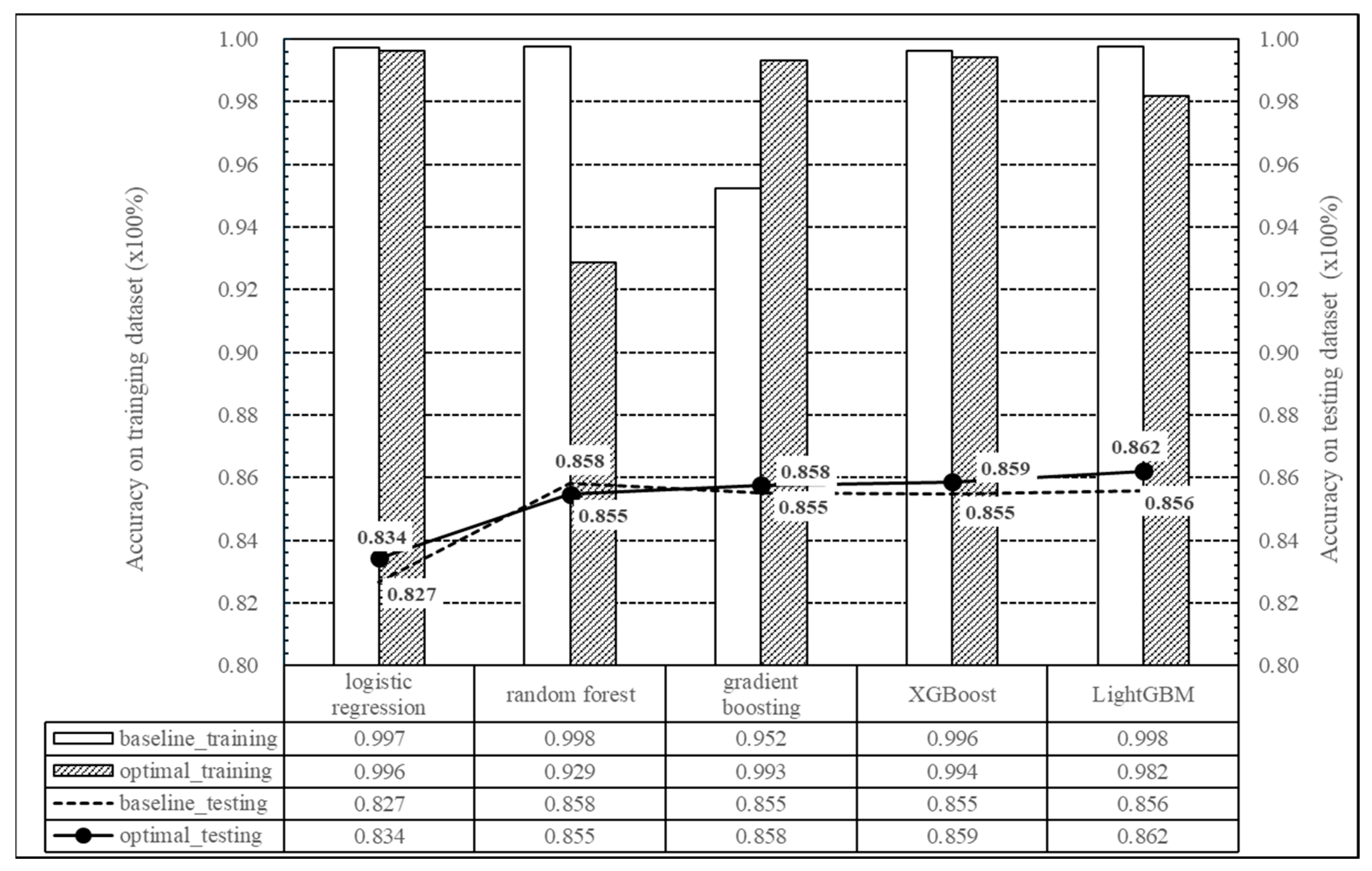

- (1) For the training dataset, the baseline model generally achieves higher accuracy than the optimized model. However, for the gradient boosting techniques, the optimized model outperforms the baseline in predictive accuracy on the training data. For the random forest, the optimized configuration yields approximately 6.9% lower training accuracy than the baseline. This occurs because the baseline uses default hyperparameters without explicit regularization, which may overfit the training data and thus result in higher training accuracy. In contrast, the optimized model applies regularization and constrained hyperparameters, which may reduce training accuracy but typically improve generalization performance on the testing dataset.

- (2) When evaluating performance on the testing dataset, ensemble-based models consistently achieve higher accuracy than logistic regression. For the testing dataset, the optimal configuration yields prediction accuracy gains of 2.4% (random forest), 2.8% (gradient boosting), 2.9% (XGBoost), and 3.3% (LightGBM) compared with logistic regression.

- (3) Analysis of testing dataset accuracy indicates that, except for random forest, all models perform better under the optimized configuration than under the baseline. Random forest exhibits a negligible performance difference between the baseline and optimized versions (about 0.4% difference in accuracy).

- (4) The comparison between the baseline models and the tuned models on the testing dataset can be summarized as follows. For logistic regression, accuracy after hyperparameter tuning improved by 0.9% relative to the baseline. For the four ensemble models, accuracy improved by about 0.3%. In particular, the highest improvement in accuracy (0.7%) was observed in LightGBM after hyperparameter tuning.

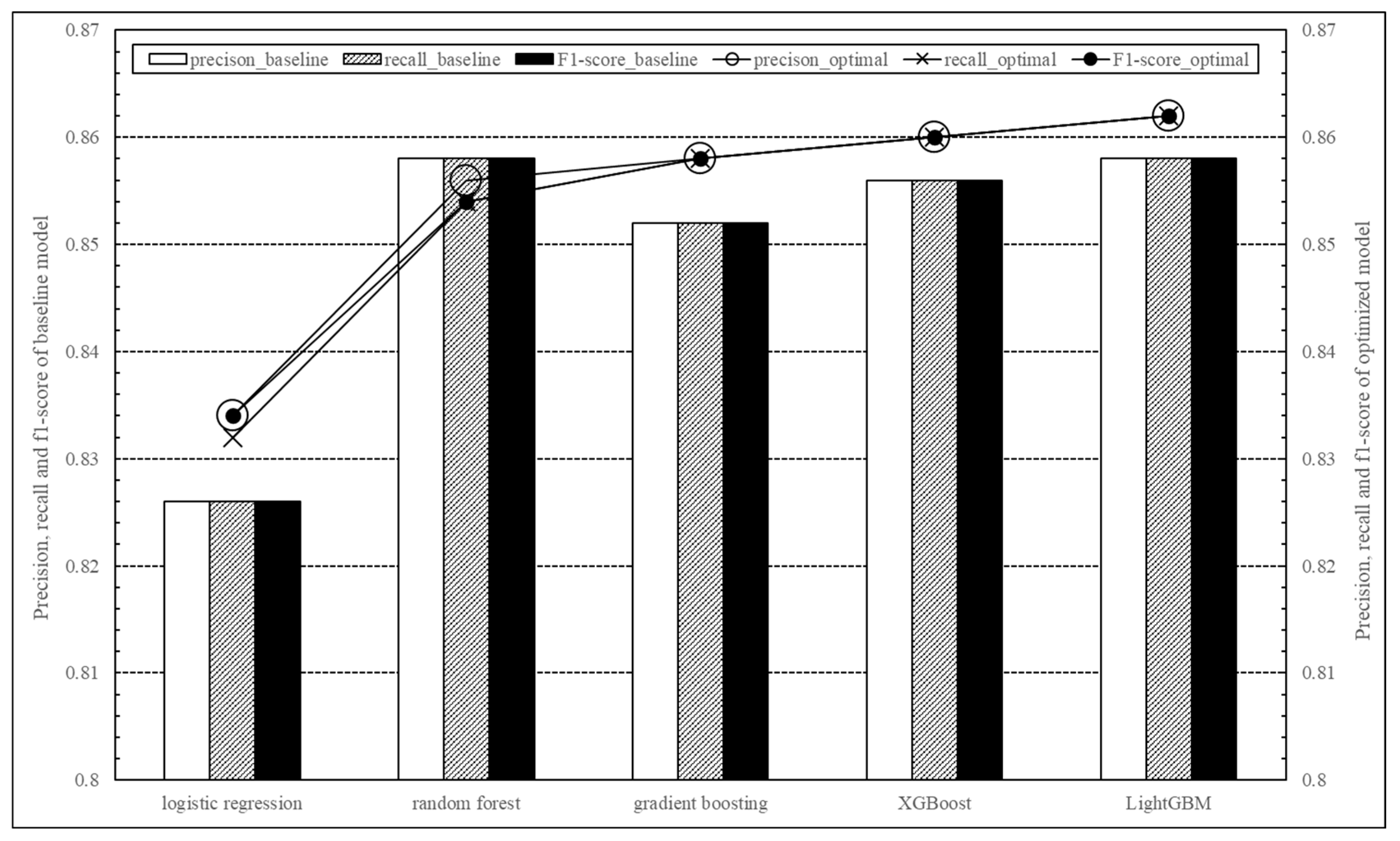

- (1) For each baseline model, precision, recall, and F1-score differ little. This indicates that, under the default hyperparameter values of each model, prediction performance does not differ significantly between classes. That is, when precision, recall, and F1-score have similar values, the corresponding model can be considered to predict both classes (IP and non-IP) in a balanced manner [64,65,66,67]. In other words, the model’s predictions are consistent and show no marked bias between classes. Conversely, for the optimal models of logistic regression and random forest, the prediction performance appears to exhibit a slight bias toward one of the classes. The evaluation results of precision, recall, and F1-score for each model are provided in Appendix A.

- (2) Analysis of the test dataset indicates that the performance of the ensemble models consistently exceeds that of the logistic regression model.

- (3) Random forest and LightGBM achieve the best precision, recall, and F1-score among the baseline models, demonstrating their superior performance. The baseline configurations across various models yield similar results for these criteria.

- (4) Except for random forest, all models exhibit improved performance in their optimized configurations relative to their baseline versions. The three boosting algorithms (gradient boosting, XGBoost, and LightGBM) deliver comparable outcomes across evaluation metrics.

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Precision, Recall and F1-Score Results for Each Model

| Model | Metrics | Samples | Baseline | Optimal | Average of Samples | |||||||||

| 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 | Baseline | Optimal | |||

| logistic regression | precision | IP | 0.80 | 0.83 | 0.82 | 0.80 | 0.80 | 0.82 | 0.83 | 0.82 | 0.82 | 0.82 | 0.810 | 0.822 |

| non-IP | 0.82 | 0.87 | 0.82 | 0.83 | 0.86 | 0.83 | 0.86 | 0.84 | 0.83 | 0.86 | 0.840 | 0.844 | ||

| macro avg | 0.81 | 0.85 | 0.82 | 0.82 | 0.83 | 0.82 | 0.84 | 0.83 | 0.82 | 0.84 | 0.826 | 0.830 | ||

| weighted avg | 0.81 | 0.85 | 0.82 | 0.82 | 0.83 | 0.82 | 0.85 | 0.83 | 0.82 | 0.85 | 0.826 | 0.834 | ||

| recall | IP | 0.80 | 0.86 | 0.80 | 0.80 | 0.83 | 0.80 | 0.85 | 0.83 | 0.79 | 0.83 | 0.818 | 0.820 | |

| non-IP | 0.82 | 0.84 | 0.83 | 0.83 | 0.84 | 0.84 | 0.84 | 0.84 | 0.85 | 0.86 | 0.832 | 0.846 | ||

| macro avg | 0.81 | 0.85 | 0.82 | 0.82 | 0.83 | 0.82 | 0.85 | 0.83 | 0.82 | 0.85 | 0.826 | 0.834 | ||

| weighted avg | 0.81 | 0.85 | 0.82 | 0.82 | 0.83 | 0.82 | 0.84 | 0.83 | 0.82 | 0.85 | 0.826 | 0.832 | ||

| F1-score | IP | 0.80 | 0.85 | 0.81 | 0.80 | 0.81 | 0.81 | 0.84 | 0.83 | 0.81 | 0.83 | 0.814 | 0.824 | |

| non-IP | 0.82 | 0.86 | 0.83 | 0.83 | 0.85 | 0.83 | 0.85 | 0.84 | 0.84 | 0.86 | 0.838 | 0.844 | ||

| macro avg | 0.81 | 0.85 | 0.82 | 0.82 | 0.83 | 0.82 | 0.84 | 0.83 | 0.82 | 0.84 | 0.826 | 0.830 | ||

| weighted avg | 0.81 | 0.85 | 0.82 | 0.82 | 0.83 | 0.82 | 0.85 | 0.83 | 0.82 | 0.85 | 0.826 | 0.834 | ||

| random forest | precision | IP | 0.85 | 0.85 | 0.85 | 0.85 | 0.86 | 0.85 | 0.84 | 0.85 | 0.85 | 0.86 | 0.852 | 0.850 |

| non-IP | 0.85 | 0.91 | 0.85 | 0.86 | 0.86 | 0.84 | 0.89 | 0.84 | 0.83 | 0.90 | 0.866 | 0.860 | ||

| macro avg | 0.85 | 0.88 | 0.85 | 0.85 | 0.86 | 0.84 | 0.87 | 0.85 | 0.84 | 0.88 | 0.858 | 0.856 | ||

| weighted avg | 0.85 | 0.88 | 0.85 | 0.85 | 0.86 | 0.84 | 0.87 | 0.85 | 0.84 | 0.88 | 0.858 | 0.856 | ||

| recall | IP | 0.83 | 0.90 | 0.84 | 0.83 | 0.82 | 0.81 | 0.89 | 0.82 | 0.79 | 0.88 | 0.844 | 0.838 | |

| non-IP | 0.87 | 0.86 | 0.86 | 0.87 | 0.89 | 0.87 | 0.85 | 0.87 | 0.88 | 0.88 | 0.870 | 0.870 | ||

| macro avg | 0.85 | 0.88 | 0.85 | 0.85 | 0.86 | 0.84 | 0.87 | 0.84 | 0.84 | 0.88 | 0.858 | 0.854 | ||

| weighted avg | 0.85 | 0.88 | 0.85 | 0.85 | 0.86 | 0.84 | 0.87 | 0.84 | 0.84 | 0.88 | 0.858 | 0.854 | ||

| F1-score | IP | 0.84 | 0.87 | 0.84 | 0.84 | 0.84 | 0.83 | 0.86 | 0.84 | 0.82 | 0.87 | 0.846 | 0.844 | |

| non-IP | 0.86 | 0.88 | 0.86 | 0.86 | 0.88 | 0.86 | 0.87 | 0.85 | 0.85 | 0.89 | 0.868 | 0.864 | ||

| macro avg | 0.85 | 0.88 | 0.85 | 0.85 | 0.86 | 0.84 | 0.87 | 0.84 | 0.84 | 0.88 | 0.858 | 0.854 | ||

| weighted avg | 0.85 | 0.88 | 0.85 | 0.85 | 0.86 | 0.84 | 0.87 | 0.84 | 0.84 | 0.88 | 0.858 | 0.854 | ||

| gradient boosting | precision | IP | 0.84 | 0.83 | 0.83 | 0.85 | 0.87 | 0.84 | 0.85 | 0.85 | 0.82 | 0.87 | 0.844 | 0.846 |

| non-IP | 0.85 | 0.90 | 0.83 | 0.85 | 0.89 | 0.84 | 0.89 | 0.85 | 0.87 | 0.89 | 0.864 | 0.868 | ||

| macro avg | 0.84 | 0.86 | 0.83 | 0.85 | 0.88 | 0.84 | 0.87 | 0.85 | 0.85 | 0.88 | 0.852 | 0.858 | ||

| weighted avg | 0.84 | 0.86 | 0.83 | 0.85 | 0.88 | 0.84 | 0.87 | 0.85 | 0.85 | 0.88 | 0.852 | 0.858 | ||

| recall | IP | 0.83 | 0.89 | 0.82 | 0.82 | 0.86 | 0.82 | 0.88 | 0.83 | 0.85 | 0.87 | 0.844 | 0.850 | |

| non-IP | 0.86 | 0.84 | 0.85 | 0.88 | 0.90 | 0.86 | 0.86 | 0.87 | 0.85 | 0.89 | 0.866 | 0.866 | ||

| macro avg | 0.84 | 0.86 | 0.83 | 0.85 | 0.88 | 0.84 | 0.87 | 0.85 | 0.85 | 0.88 | 0.852 | 0.858 | ||

| weighted avg | 0.84 | 0.86 | 0.83 | 0.85 | 0.88 | 0.84 | 0.87 | 0.85 | 0.85 | 0.88 | 0.852 | 0.858 | ||

| F1-score | IP | 0.83 | 0.86 | 0.82 | 0.84 | 0.87 | 0.83 | 0.86 | 0.84 | 0.84 | 0.87 | 0.844 | 0.848 | |

| non-IP | 0.85 | 0.87 | 0.84 | 0.87 | 0.90 | 0.85 | 0.88 | 0.86 | 0.86 | 0.89 | 0.866 | 0.868 | ||

| macro avg | 0.84 | 0.86 | 0.83 | 0.85 | 0.88 | 0.84 | 0.87 | 0.85 | 0.85 | 0.88 | 0.852 | 0.858 | ||

| weighted avg | 0.84 | 0.86 | 0.83 | 0.85 | 0.88 | 0.84 | 0.87 | 0.85 | 0.85 | 0.88 | 0.852 | 0.858 | ||

| XGBoost | precision | IP | 0.84 | 0.86 | 0.85 | 0.85 | 0.85 | 0.84 | 0.84 | 0.85 | 0.84 | 0.87 | 0.850 | 0.848 |

| non-IP | 0.83 | 0.89 | 0.84 | 0.85 | 0.89 | 0.84 | 0.89 | 0.84 | 0.87 | 0.89 | 0.860 | 0.866 | ||

| macro avg | 0.84 | 0.88 | 0.84 | 0.85 | 0.87 | 0.84 | 0.87 | 0.85 | 0.86 | 0.88 | 0.856 | 0.860 | ||

| weighted avg | 0.84 | 0.88 | 0.84 | 0.85 | 0.87 | 0.84 | 0.87 | 0.85 | 0.86 | 0.88 | 0.856 | 0.860 | ||

| recall | IP | 0.80 | 0.88 | 0.81 | 0.82 | 0.86 | 0.81 | 0.89 | 0.82 | 0.85 | 0.86 | 0.834 | 0.846 | |

| non-IP | 0.87 | 0.87 | 0.87 | 0.87 | 0.88 | 0.86 | 0.85 | 0.87 | 0.86 | 0.90 | 0.872 | 0.868 | ||

| macro avg | 0.84 | 0.88 | 0.84 | 0.84 | 0.87 | 0.84 | 0.87 | 0.85 | 0.86 | 0.88 | 0.854 | 0.860 | ||

| weighted avg | 0.84 | 0.88 | 0.84 | 0.85 | 0.87 | 0.84 | 0.87 | 0.85 | 0.86 | 0.88 | 0.856 | 0.860 | ||

| F1-score | IP | 0.82 | 0.87 | 0.83 | 0.83 | 0.86 | 0.82 | 0.86 | 0.84 | 0.85 | 0.90 | 0.842 | 0.854 | |

| non-IP | 0.85 | 0.88 | 0.85 | 0.86 | 0.88 | 0.85 | 0.87 | 0.86 | 0.87 | 0.87 | 0.864 | 0.864 | ||

| macro avg | 0.84 | 0.88 | 0.84 | 0.85 | 0.87 | 0.84 | 0.87 | 0.85 | 0.86 | 0.88 | 0.856 | 0.860 | ||

| weighted avg | 0.84 | 0.88 | 0.84 | 0.85 | 0.87 | 0.84 | 0.87 | 0.85 | 0.86 | 0.88 | 0.856 | 0.860 | ||

| LightGBM | precision | IP | 0.84 | 0.86 | 0.84 | 0.84 | 0.86 | 0.85 | 0.86 | 0.85 | 0.84 | 0.86 | 0.848 | 0.852 |

| non-IP | 0.84 | 0.91 | 0.82 | 0.87 | 0.89 | 0.85 | 0.91 | 0.84 | 0.87 | 0.89 | 0.866 | 0.872 | ||

| macro avg | 0.84 | 0.88 | 0.83 | 0.85 | 0.87 | 0.85 | 0.89 | 0.84 | 0.85 | 0.88 | 0.854 | 0.862 | ||

| weighted avg | 0.84 | 0.89 | 0.83 | 0.85 | 0.88 | 0.85 | 0.89 | 0.84 | 0.85 | 0.88 | 0.858 | 0.862 | ||

| recall | IP | 0.81 | 0.90 | 0.79 | 0.84 | 0.86 | 0.83 | 0.90 | 0.82 | 0.84 | 0.86 | 0.840 | 0.850 | |

| non-IP | 0.87 | 0.87 | 0.86 | 0.86 | 0.89 | 0.87 | 0.87 | 0.86 | 0.86 | 0.89 | 0.870 | 0.870 | ||

| macro avg | 0.84 | 0.89 | 0.83 | 0.85 | 0.87 | 0.85 | 0.89 | 0.84 | 0.85 | 0.88 | 0.856 | 0.862 | ||

| weighted avg | 0.84 | 0.89 | 0.83 | 0.85 | 0.88 | 0.85 | 0.89 | 0.84 | 0.85 | 0.88 | 0.858 | 0.862 | ||

| F1-score | IP | 0.83 | 0.88 | 0.81 | 0.84 | 0.86 | 0.84 | 0.88 | 0.83 | 0.84 | 0.86 | 0.844 | 0.850 | |

| non-IP | 0.85 | 0.89 | 0.84 | 0.86 | 0.89 | 0.86 | 0.89 | 0.85 | 0.86 | 0.89 | 0.866 | 0.870 | ||

| macro avg | 0.84 | 0.88 | 0.83 | 0.85 | 0.87 | 0.85 | 0.89 | 0.84 | 0.85 | 0.88 | 0.854 | 0.862 | ||

| weighted avg | 0.84 | 0.89 | 0.83 | 0.85 | 0.88 | 0.85 | 0.89 | 0.84 | 0.85 | 0.88 | 0.858 | 0.862 | ||

| (Note) The ‘macro avg’ represents the unweighted mean of the metrics across all classes, whereas the ‘weighted avg’ is calculated by multiplying each class’s metric by its sample size and then dividing by the total number of samples. In this study, the ‘weighted avg’ was used to compare the performance among the models. | ||||||||||||||
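The difference between the two averages in the note can be checked on a small toy example. The labels below are invented for illustration (0 = non-IP, 1 = IP) and are unrelated to the study's data; the calculation uses scikit-learn's `precision_score`.

```python
import numpy as np
from sklearn.metrics import precision_score

# Toy labels: 6 non-IP (0) and 4 IP (1) documents (hypothetical).
y_true = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1])
y_pred = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1, 0])

# Per-class precision: class 0 -> 4/5 = 0.8, class 1 -> 3/5 = 0.6.
per_class = precision_score(y_true, y_pred, average=None)

# Macro average: unweighted mean over classes.
macro = precision_score(y_true, y_pred, average="macro")

# Weighted average: each class weighted by its number of true samples.
weighted = precision_score(y_true, y_pred, average="weighted")

# The same weighted value computed by hand from the class supports.
support = np.bincount(y_true)
manual_weighted = (per_class * support).sum() / support.sum()

print(per_class, macro, weighted)
```

Here the macro average is 0.70 and the weighted average is 0.72; the two coincide only when the classes are equally sized or the per-class scores are equal, which is why they are nearly identical in the table above.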

References

- Global Trade Alert (GTA). Available online: https://globaltradealert.org/data-center (accessed on 15 July 2025).

- Yeo, J.; Park, J.; Yoon, D.; Kim, S.; Park, Y.; Jang, H.S. Basic Research 2023. In A Study on the Transition Direction of the Mobile Communication Network Infrastructure Industry Ecosystem; Korea Information Society Development Institute (KISDI): Jincheon, Republic of Korea, 2023.

- Juhasz, R.; Lane, N.; Oehlsen, E.; Perez, V.C. Global Industrial Policy: Measurement and Results; Policy Brief Series: Insights on Industrial Development; United Nations Industrial Development Organization (UNIDO): Vienna, Austria, 2023; pp. 1–6.

- Juhasz, R.; Lane, N. The political economy of industrial policy. J. Econ. Perspect. 2024, 38, 27–54.

- Juhasz, R.; Lane, N.; Oehlsen, E.; Perez, V.C. Measuring Industrial Policy: A Text-Based Approach. SocArXiv Papers. Available online: https://osf.io/preprints/socarxiv/uyxh9_v6 (accessed on 15 March 2025).

- Juhasz, R.; Steinwender, C. Industrial policy and the great divergence. Annu. Rev. Econ. 2024, 16, 27–54.

- Xu, A.; Dai, Y.; Hu, Z.; Qiu, K. Can green finance policy promote inclusive green growth?-based on the quasi-natural experiment of China’s green finance reform and innovation pilot zone. Int. Rev. Econ. Financ. 2025, 100, 104090.

- Dong, X.; Mingzhe, Y. Time-varying effects of macro shocks on cross-border capital flows in China’s bond market. Int. Rev. Econ. Financ. 2024, 96, 103720.

- Dong, X.; Yu, M. Green bond issuance and green innovation: Evidence from China’s energy industry. Int. Rev. Financ. Anal. 2024, 94, 103281.

- Bird, S.; Klein, E.; Loper, E. Natural Language Processing with Python, 1st ed.; O’Reilly Media: Santa Rosa, CA, USA, 2009.

- Park, Y.; Lim, S.; Gu, C.; Syafiandini, A.F.; Song, M. Forecasting topic trends of blockchain utilizing topic modeling and deep learning-based time-series prediction on different document types. J. Informetr. 2025, 19, 101639.

- Cheng, P.; Wu, Z.; Du, W.; Zhao, H.; Lu, W.; Liu, G. Backdoor attacks and countermeasures in natural language processing models: A comprehensive security review. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 13628–13648.

- Jones, K.S. A statistical interpretation of term specificity and its application in retrieval. J. Doc. 1972, 28, 11–21.

- Abdelhakim, B.A.; Mohamed, B.A.; Soufyane, A. Using machine learning and TF-IDF for sentiment analysis in moroccan dialect: An analytical methodology and comparative study. Innov. Smart Cities Appl. 2024, 7, 342–349.

- Kwon, N.; Yoo, Y.; Lee, B. Novel curriculum learning strategy using class-based TF-IDF for enhancing personality detection in text. IEEE Access 2024, 12, 87873–87882.

- Rizal, R.; Faturahman, A.; Impron, A.; Darmawan, I.; Haerani, E.; Rahmatulloh, A. Unveiling the truth: Detecting fake news using SVM and TF-IDF. In Proceedings of the International Conference on Advancement in Data Science, E-learning and Information System (ICADEIS), Bandung, Indonesia, 3–4 February 2025; pp. 1–6.

- Wu, X.; Zhu, X.; Wu, G.-Q.; Ding, W. Data mining with big data. IEEE Trans. Knowl. Data Eng. 2014, 26, 97–107.

- Zhong, N.; Li, Y.; Wu, S.-T. Effective pattern discovery for text mining. IEEE Trans. Knowl. Data Eng. 2012, 24, 30–44.

- Cao, K.; Chen, S.; Chen, Y.; Nie, B.; Li, Z. Decision analysis of safety risks pre-control measures for falling accidents in mega hydropower engineering driven by accident case texts. Reliab. Eng. Syst. Saf. 2025, 261, 111120.

- Jing, L.; Fan, X.; Feng, D.; Lu, C.; Jiang, S. A patent text-based product conceptual design decision-making approach considering the fusion of incomplete evaluation semantic and scheme beliefs. Appl. Soft Comput. 2024, 157, 111492.

- Zhang, L. Features extraction based on naïve bayes algorithm and TF-IDF for news classification. PLoS ONE 2025, 20, e0327347.

- Agresti, A. An Introduction to Categorical Data Analysis, 2nd ed.; Wiley: Hoboken, NJ, USA, 2007.

- Gosho, M.; Ohigashi, T.; Nagashima, K.; Ito, Y.; Maruo, K. Bias in odds ratios from logistic regression methods with sparse data sets. J. Epidemiol. 2023, 33, 265–275.

- Loh, W.-Y. Logistic Regression Tree Analysis. In Springer Handbook of Engineering Statistics; Pham, H., Ed.; Springer: London, UK, 2006.

- Deng, Z.; Han, Z.; Ma, C.; Ding, M.; Yuan, L.; Ge, C.; Liu, Z. Vertical federated unlearning on the logistic regression model. Electronics 2023, 12, 3182.

- Aizawa, Y.; Emura, T.; Michimae, H. Bayesian ridge estimators based on copula-based joint prior distributions for logistic regression parameters. Commun. Stat.-Simul. Comput. 2023, 54, 252–266.

- Kayabol, K. Approximate sparse multinomial logistic regression for classification. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 490–493.

- Gosho, M.; Ishii, R.; Nagashima, K.; Noma, H.; Maruo, K. Determining the prior mean in Bayesian logistic regression with sparse data: A nonarbitrary approach. J. R. Stat. Soc. Ser. C Appl. Stat. 2025, 74, 126–141.

- Lin, C.; Xu, J.; Jiang, D.; Hou, J.; Liang, Y.; Zou, Z.; Mei, X. Multi-model ensemble learning for battery state-of-health estimation: Recent advances and perspectives. J. Energy Chem. 2025, 100, 739–759.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Chen, W.; Yan, B.; Xu, A.; Mu, X.; Zhou, X.; Jiang, M.; Wang, C.; Li, R.; Huang, J.; Dong, J. An intelligent matching method for the equivalent circuit of electrochemical impedance spectroscopy based on random forest. J. Mater. Sci. Technol. 2025, 209, 300–310.

- Zhao, S.; Zhou, D.; Wang, H.; Chen, D.; Yu, L. Enhancing student academic success prediction through ensemble learning and image-based behavioral data transformation. Appl. Sci. 2025, 15, 1231.

- Zong, Y.; Nian, Y.; Zhang, C.; Tang, X.; Wang, L.; Zhang, L. Hybrid grid search and bayesian optimization-based random forest regression for predicting material compression pressure in manufacturing processes. Eng. Appl. Artif. Intell. 2025, 141, 109580.

- Yadav, P.; Kathuria, M. Sentiment analysis using various machine learning techniques: A review. IEIE Trans. Smart Process. Comput. 2022, 11, 79–84.

- Sunarya, U.; Park, C. Optimal number of cardiac cycles for continuous blood pressure estimation. IEIE Trans. Smart Process. Comput. 2022, 11, 421–425.

- Slimani, C.; Wu, C.-F.; Rubini, S.; Chang, Y.-H.; Boukhobza, J. Accelerating random forest on memory-constrained devices through data storage optimization. IEEE Trans. Comput. 2023, 72, 1595–1609.

- Xu, H.; Li, P.; Wang, J.; Liang, W. A study on black screen fault detection of single-phase smart energy meter based on random forest binary classifier. Measurement 2025, 242, 116245.

- Wali, S.; Farrikh, Y.A.; Khan, I. Explainable AI and random forest based reliable intrusion detection system. Comput. Secur. 2025, 157, 104542.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Uslu, N.S.; Buyuklu, A.H. The dynamics of the profit margin in a component maintenance, repair, and overhaul (MRO) within the aviation industry: An analytical approach using gradient boosting, variable clustering, and the Gini index. Sustainability 2024, 16, 6470.

- Theerthagiri, P. Liver disease classification using histogram-based gradient boosting classification tree with feature selection algorithm. Biomed. Signal Process. Control 2025, 100, 107102. [Google Scholar] [CrossRef]

- Jafarian, T.; Ghaffari, A.; Seyfollahi, A.; Arasteh, B. Detecting and mitigating security anomalies in software-defined networking (SDN) using gradient-boosted trees and floodlight controller characteristics. Comput. Stand. Interfaces 2025, 91, 103871. [Google Scholar] [CrossRef]

- Madan, T.; Sagar, S.; Tran, T.A.; Virmani, D.; Rastogi, D. Air quality prediction using ensemble classifiers and single decision tree. J. Adv. Res. Appl. Sci. Eng. Technol. 2025, 52, 56–67. [Google Scholar] [CrossRef]

- Dao, N.A.; Nguyen, H.M.; Nguyen, K.T. Mining for building energy-consumption patterns by using intelligent clustering. IEIE Trans. Smart Process. Comput. 2021, 10, 469–476. [Google Scholar] [CrossRef]

- Valdes, G.; Friedman, J.H.; Jiang, F.; Gennatas, E.D. Representational gradient boosting: Backpropagation in the space of functions. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 10186–10195. [Google Scholar] [CrossRef]

- Rizkallah, L.W. Enhancing the performance of gradient boosting trees on regression problems. J. Big Data 2025, 12, 35. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar]

- Lu, X.; Chen, C.; Gao, R.; Xing, Z. Prediction of high-speed traffic flow around city based on BO-XGBoost Model. Symmetry 2023, 15, 1453. [Google Scholar] [CrossRef]

- Bhardwaj, K.; Goyal, N.; Mittal, B.; Sharma, V.; Shivhare, S.N. A novel active learning technique for fetal health classification based on XGBoost classifier. IEEE Access 2025, 13, 9485–9497. [Google Scholar] [CrossRef]

- Abdulganiyu, O.H.; Tchakoucht, T.A.; Saheed, Y.K.; Ahmed, H.A. XIDINTFL-VAE: XGBoost-based intrusion detection of imbalance network traffic via class-wise focal loss variational autoencoder. J. Supercomput. 2024, 81, 16. [Google Scholar] [CrossRef]

- Tian, J.; Tsai, P.-W.; Zhang, K.; Cai, X.; Xiao, H.; Yu, K.; Zhao, W.; Chen, J. Synergetic focal loss for imbalanced classification in federated XGBoost. IEEE Trans. Artif. Intell. 2024, 5, 647–660. [Google Scholar] [CrossRef]

- Wang, X.; Zhang, B.; Xu, Z.; Li, M.; Skare, M. A multi-dimensional decision framework based on the XGBoost algorithm and the constrained parametric approach. Sci. Rep. 2025, 15, 4315. [Google Scholar] [CrossRef]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.-Y. LightGBM: A highly efficient gradient boosting decision tree. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Nagabotu, V.; Namburu, A. Fetal health classification using LightGBM with grid search based hyper parameter tuning. Recent Pat. Eng. 2025, 19, e030723218386. [Google Scholar] [CrossRef]

- Yang, Z.; Han, Y.; Zhang, C.; Xu, Z.; Tang, S. Research on transformer transverse fault diagnosis based on optimized LightGBM model. Measurement 2025, 244, 116499. [Google Scholar] [CrossRef]

- Duan, Y.; Li, C.; Wang, X.; Guo, Y.; Wang, H. Forecasting influenza trends using decomposition technique and LightGBM optimized by grey wolf optimizer algorithm. Mathematics 2025, 13, 24. [Google Scholar] [CrossRef]

- Hao, S.; He, J.; Li, W.; Li, T.; Yang, G.; Fang, W.; Chen, W. CSCAD: An adaptive LightGBM algorithm to detect cache side-channel attacks. IEEE Trans. Dependable Secur. Comput. 2025, 22, 695–709. [Google Scholar] [CrossRef]

- Lian, H.; Ji, Y.; Niu, M.; Gu, J.; Xie, J.; Liu, J. A hybrid load prediction method of office buildings based on physical simulation database and LightGBM algorithm. Appl. Energy 2025, 377, 124620. [Google Scholar] [CrossRef]

- Xia, J.-Y.; Li, S.; Huang, J.-J.; Yang, Z.; Jaimoukha, I.M.; Gündüz, D. Metalearning-based alternating minimization algorithm for nonconvex optimization. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 5366–5380. [Google Scholar] [CrossRef]

- Li, H.; Xia, C.; Wang, T.; Wang, Z.; Cui, P.; Li, X. GRASS: Learning spatial-temporal properties from chainlike cascade data for microscopic diffusion prediction. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 16313–16327. [Google Scholar] [CrossRef]

- Zhang, Z.-W.; Liu, Z.-G.; Martin, A.; Zhou, K. BSC: Belief shift clustering. IEEE Trans. Syst. Man Cybern. Syst. 2022, 53, 1748–1760. [Google Scholar] [CrossRef]

- Li, L.; Cherouat, A.; Snoussi, H.; Wang, T. Grasping with occlusion-aware ally method in complex scenes. IEEE Trans. Autom. Sci. Eng. 2024, 22, 5944–5954. [Google Scholar] [CrossRef]

- Wang, T.; Chen, J.; Lu, J.; Liu, K.; Zhu, A.; Snoussi, H. Synchronous spatiotemporal graph transformer: A new framework for traffic data prediction. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 10589–10599. [Google Scholar] [CrossRef] [PubMed]

- Mockus, J. Bayesian Approach to Global Optimization: Theory and Applications, 1st ed.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1989. [Google Scholar]

- Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A next-generation hyperparameter optimization framework. arXiv 2019, arXiv:1907.10902. [Google Scholar] [CrossRef]

- Liang, Z.; Ismail, M.T. Advanced CEEMD hybrid model for VIX forecasting: Optimized decision trees and ARIMA integration. Evol. Intell. 2025, 18, 12. [Google Scholar] [CrossRef]

- Cihan, P. Bayesian hyperparameter optimization of machine learning models for predicting biomass gasification gases. Appl. Sci. 2025, 15, 1018. [Google Scholar] [CrossRef]

- Jang, H.-S.; Yeo, J. Current status analysis of 5G mobile communication services industry using business model canvas in South Korea. Asia Pac. Manag. Rev. 2024, 29, 462–476. [Google Scholar] [CrossRef]

- Jang, H.-S.; Baek, J.-H. Performance analysis of two-zone-based registration system with timer in wireless communication networks. Electronics 2024, 13, 160. [Google Scholar] [CrossRef]

- Jang, H.-S.; Baek, J.-H. Modeling and performance of three zone-based registration scheme in wireless communication networks. Appl. Sci. 2023, 13, 10064. [Google Scholar] [CrossRef]

| (Part A) Stated goal |
|---|
| Industrial policy encompasses deliberate governmental interventions aimed at restructuring economic activity. It strives to influence sectoral resource allocation and relative pricing mechanisms, thereby guiding long-term transformations of the economic structure, particularly through focused initiatives such as export promotion and investment in research and development (R&D). |
| (Part B) National state implementation |
| The objective of industrial policy is to achieve predefined targets related to the national economy. These policies are implemented by governmental or supranational organizations. Authorization and funding for these initiatives come from national governments, supranational institutions, or collaborative arrangements between these entities. |

| Example of IP (China) |
|---|
| On 1 August 2023, China’s General Administration of Customs under the Ministry of Commerce released Announcement No. 27 (2023), introducing export control measures for products associated with unmanned aerial vehicles and unmanned airships. The new requirement comes into force on 1 September 2023. Thirty items, specified at the 10-digit HS code level, were identified as requiring export licenses. Another announcement was issued for different products. |
| Example of non-IP (Thailand) |
| On 31 March 2022, the Ministry of Finance of Thailand issued the Notification on the Exemption of Customs Duty for Goods Imported for the Production of Face Masks, granting a tax concession to producers of face masks under subheading 630790 in the form of an import duty exemption on raw materials. This action applies only to a subset of the potential buyers of this good. According to the regulation, the objective was to meet the demand for face masks in the country following the COVID-19 outbreak. The raw materials include nonwovens and plaited bands. Because the import duty exemption was provided to certain companies, it was considered selective. The regulation was in force temporarily from 3 April to 30 September 2022. |

| Word | TF | Word | TF |
|---|---|---|---|
| million | 108.77 | foreign | 56.26 |
| state | 95.53 | financial | 49.32 |
| support | 75.38 | export | 48.92 |
| firm | 63.48 | products | 48.49 |
| government | 59.95 | subsidy | 48.38 |

| Word | TF | Word | TF |
|---|---|---|---|
| million | 89.59 | duty | 52.56 |
| state | 75.47 | government | 52.47 |
| tariff | 69.97 | support | 49.83 |
| import | 54.09 | see | 48.14 |
| related | 53.40 | loan | 47.41 |
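
As a rough illustration of how word rankings like those in the two TF tables above can be produced, the sketch below counts raw term frequencies over a tiny corpus. It is a minimal example under stated assumptions: the function name `term_frequencies`, the tokenization rule, and the toy sentences are all hypothetical, and the fractional values in the tables suggest some weighting or normalization that is not specified here and is not reproduced.

```python
from collections import Counter
import re


def term_frequencies(documents, top_k=5):
    """Return the top_k (word, count) pairs summed over all documents."""
    counts = Counter()
    for text in documents:
        # Lowercase and keep alphabetic tokens only (a simplifying assumption).
        counts.update(re.findall(r"[a-z]+", text.lower()))
    return counts.most_common(top_k)


# Hypothetical toy corpus, not the paper's data.
docs = [
    "The government announced export support for state firms.",
    "State support includes an export subsidy.",
]
top_words = term_frequencies(docs, top_k=3)
print(top_words)
```

Raw counts are the simplest choice; a real pipeline would typically also remove stop words and may reweight the counts before ranking.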

| Model | Operating Methods | Advantages and Disadvantages |
|---|---|---|
| random forest | | |
| gradient boosting | | |
| XGBoost | | |
| LightGBM | | |

| Model | Hyperparameters | | | | | |
|---|---|---|---|---|---|---|
| logistic regression | name | C (regularization strength) | penalty (regularization type) | solver (optimization algorithm) | class_weight (class weight) | max_iter (maximum iterations) |
| | baseline | 1.0 | l2 (Ridge) | lbfgs | none | 100 |
| | tuned values | [$10^{-5}$, 100] | [l1, l2] | [l1: liblinear; l2: newton-cg, lbfgs, sag, saga] | [none, balanced] | [100, 1000] |
| | optimal | 3.274 | l2 | sag | balanced | 168 |
| random forest | name | n_estimators (number of trees) | max_features (maximum number of features) | max_depth (maximum depth of trees) | min_samples_split (minimum number of samples to split) | min_samples_leaf (minimum number of samples in a leaf) |
| | baseline | 100 | sqrt | none | 2 | 1 |
| | tuned values | [50, 1000] | [none, sqrt, log2] | [5, 50] | [2, 20] | [1, 20] |
| | optimal | 105 | none | 23 | 3 | 7 |
| gradient boosting | name | n_estimators (number of trees) | learning_rate (learning rate) | max_depth (maximum depth of trees) | min_samples_split (minimum number of samples to split) | min_samples_leaf (minimum number of samples in a leaf) |
| | baseline | 100 | 0.1 | 3 | 2 | 1 |
| | tuned values | [50, 1000] | [0.01, 0.3] | [3, 32] | [2, 100] | [1, 50] |
| | optimal | 553 | 0.027 | 11 | 69 | 12 |
| XGBoost | name | n_estimators (number of trees) | learning_rate (learning rate) | max_depth (maximum depth of trees) | colsample_bytree (column subsampling) | subsample (subsample ratio) |
| | baseline | 100 | 0.3 | 6 | 1 | 1 |
| | tuned values | [50, 1000] | [0.01, 0.3] | [3, 32] | [0.5, 1] | [0.5, 1] |
| | optimal | 272 | 0.027 | 18 | 0.839 | 0.638 |
| LightGBM | name | num_leaves (number of leaves) | learning_rate (learning rate) | max_depth (maximum depth of trees) | feature_fraction (feature fraction) | min_data_leaf (minimum data in leaf) |
| | baseline | 31 | 0.1 | −1 | 1 | 20 |
| | tuned values | [2, 256] | [0.01, 0.3] | [−1, 32] | [0.5, 1] | [1, 50] |
| | optimal | 174 | 0.037 | 13 | 0.668 | 26 |
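
To make the "tuned values" rows more concrete, the following sketch encodes the XGBoost search ranges from the hyperparameter table above and explores them with plain uniform random search. This is a swapped-in, simplified technique, not the optimizer that produced the "optimal" row. The names `XGBOOST_SPACE`, `sample_params`, `random_search`, and `toy_objective` are hypothetical, the uniform sampling scale is an assumption, and the objective is a toy stand-in so the example runs without data or a model.

```python
import random

# Search ranges copied from the XGBoost "tuned values" row of the table.
XGBOOST_SPACE = {
    "n_estimators": ("int", 50, 1000),
    "learning_rate": ("float", 0.01, 0.3),
    "max_depth": ("int", 3, 32),
    "colsample_bytree": ("float", 0.5, 1.0),
    "subsample": ("float", 0.5, 1.0),
}


def sample_params(space, rng):
    """Draw one configuration uniformly from each range (scale is an assumption)."""
    params = {}
    for name, (kind, low, high) in space.items():
        if kind == "int":
            params[name] = rng.randint(low, high)  # both bounds inclusive
        else:
            params[name] = rng.uniform(low, high)
    return params


def random_search(objective, space, n_trials=50, seed=0):
    """Return (best_score, best_params) over n_trials random draws."""
    rng = random.Random(seed)
    best_score, best_params = float("-inf"), None
    for _ in range(n_trials):
        params = sample_params(space, rng)
        score = objective(params)
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


def toy_objective(params):
    # Hypothetical stand-in: a real study would train the classifier with
    # these parameters and return a validation score instead.
    return -abs(params["learning_rate"] - 0.05)


best_score, best_params = random_search(
    toy_objective, XGBOOST_SPACE, n_trials=100, seed=42
)
print(best_score, best_params)
```

In practice, `toy_objective` would be replaced by a function that fits the model on the training split and returns accuracy or AUC on held-out data. A Bayesian optimizer would replace `sample_params` with a sampler that uses the scores of earlier trials to choose the next configuration.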

| Model | | Training Dataset | Testing Dataset | AUC |
|---|---|---|---|---|
| Baseline | Model’s accuracy | 0 * | 0.0001 * | 0.0002 * |
| | Random seed | 0.2033 | 0 * | 0 * |
| Optimal | Model’s accuracy | 0.0001 * | 0 * | 0.0001 * |
| | Random seed | 0.6913 | 0 * | 0 * |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jang, H.-S. Ensemble Learning Model for Industrial Policy Classification Using Automated Hyperparameter Optimization. Electronics 2025, 14, 3974. https://doi.org/10.3390/electronics14203974
