Towards Robust Scene Text Recognition: A Dual Correction Mechanism with Deformable Alignment

Abstract

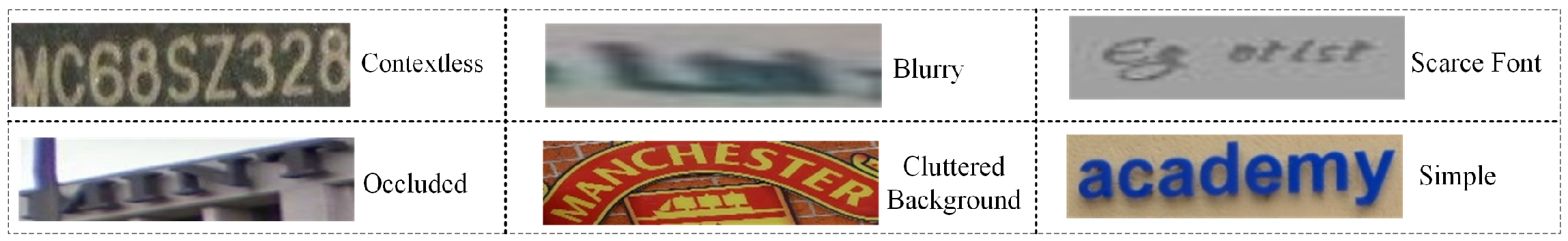

1. Introduction

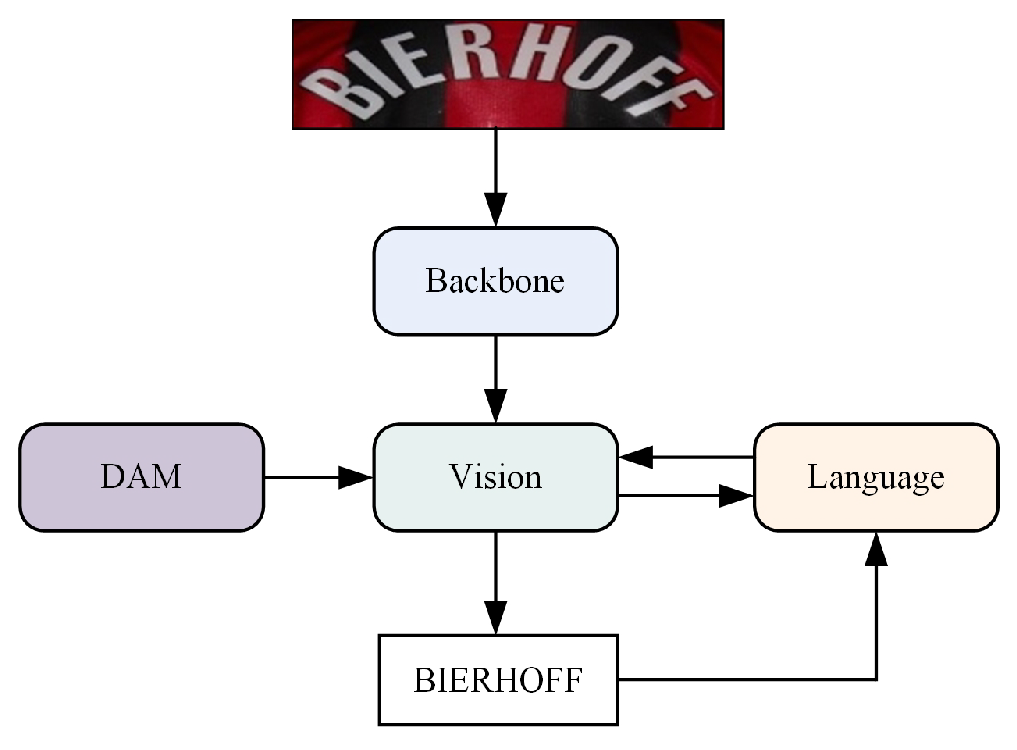

2. Methods

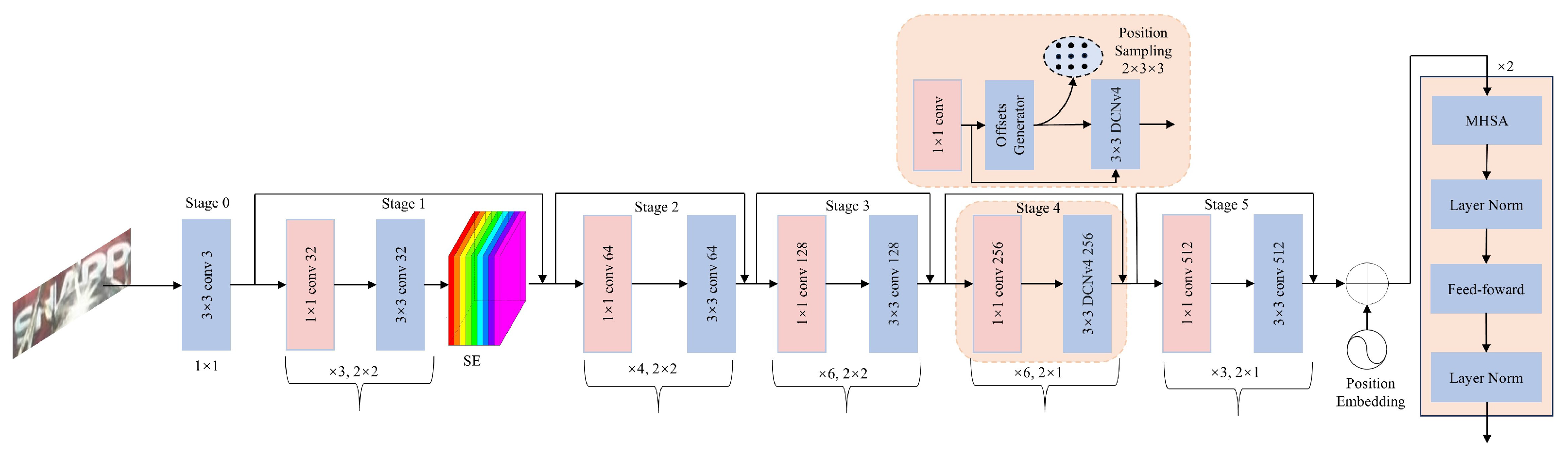

2.1. Visual Model

2.2. Recognition and Inference Module

2.3. Language Module

2.4. Vision-Language Fusion

2.5. Training Objective

3. Experiment

3.1. Datasets

3.2. Implementation Details

3.3. Ablation Study on Visual Model

3.3.1. Effectiveness of SCRTNet

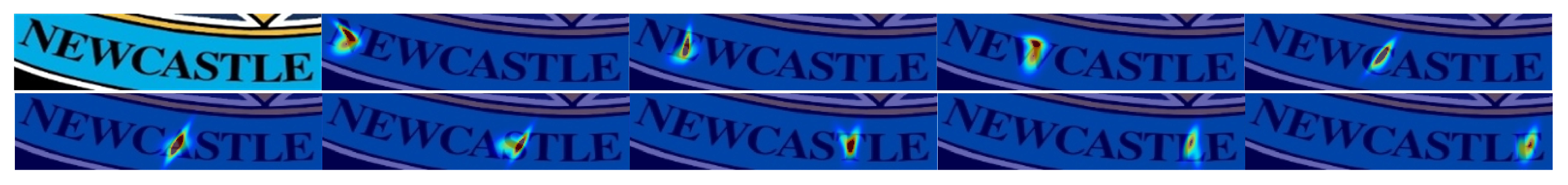

3.3.2. Effectiveness of DAM

3.4. Vision-Language Fusion

3.4.1. Ablation Study on Vision–Language Fusion

3.4.2. Sensitivity to Confidence Threshold

3.5. Comparison with SOTA

3.6. Analysis of Inference Speed

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Wan, Z.; Zhang, J.; Zhang, L.; Luo, J.; Yao, C. On Vocabulary Reliance in Scene Text Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11425–11434.
2. Long, S.; He, X.; Yao, C. Scene Text Detection and Recognition: The Deep Learning Era. Int. J. Comput. Vis. 2021, 129, 1–24.
3. Wang, Y.; Xie, H.; Zha, Z.; Tian, Y.; Fu, Z.; Zhang, Y. R-net: A Relationship Network for Efficient and Accurate Scene Text Detection. IEEE Trans. Multimed. 2021, 23, 1316–1329.
4. Zhang, C.; Tao, Y.; Du, K.; Ding, W.; Wang, B.; Liu, J.; Wang, W. Character-Level Street View Text Spotting Based on Deep Multisegmentation Network for Smarter Autonomous Driving. IEEE Trans. Artif. Intell. 2021, 3, 297–308.
5. Ouali, I.; Ben Halima, M.; Ali, W. Augmented Reality for Scene Text Recognition, Visualization and Reading to Assist Visually Impaired People. Procedia Comput. Sci. 2022, 207, 158–167.
6. Mei, Q.; Hu, Q.; Yang, C.; Zheng, H.; Hu, Z. Port Recommendation System for Alternative Container Port Destinations Using a Novel Neural Language-Based Algorithm. IEEE Access 2020, 8, 199970–199979.
7. Liu, W.; Chen, C.; Wong, K.-Y.K.; Su, Z.; Han, J. STAR-Net: A Spatial Attention Residue Network for Scene Text Recognition. In Proceedings of the British Machine Vision Conference, York, UK, 19–22 September 2016.
8. Shi, B.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. Robust Scene Text Recognition with Automatic Rectification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4168–4176.
9. Zhan, F.; Lu, S. ESIR: End-to-End Scene Text Recognition via Iterative Image Rectification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2059–2068.
10. Luo, C.; Jin, L.; Sun, Z. MORAN: A Multi-Object Rectified Attention Network for Scene Text Recognition. Pattern Recognit. 2019, 90, 109–118.
11. Li, H.; Wang, P.; Shen, C.; Zhang, G. Show, Attend and Read: A Simple and Strong Baseline for Irregular Text Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8610–8617.
12. Yang, X.; He, D.; Zhou, Z.; Kifer, D.; Giles, C.L. Learning to Read Irregular Text with Attention Mechanisms. In Proceedings of the International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 3280–3286.
13. Shi, B.; Yang, M.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. ASTER: An Attentional Scene Text Recognizer with Flexible Rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2035–2048.
14. Yu, D.; Li, X.; Zhang, C.; Liu, T.; Han, J.; Liu, J.; Ding, E. Towards Accurate Scene Text Recognition with Semantic Reasoning Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 12113–12122.
15. Zhao, Z.; Tang, J.; Lin, C.; Wu, B.; Huang, C.; Liu, H.; Tan, X.; Zhang, Z.; Xie, Y. Multi-Modal In-Context Learning Makes an Ego-Evolving Scene Text Recognizer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 15567–15576.
16. Yue, X.; Kuang, Z.; Lin, C.; Sun, H.; Zhang, W. RobustScanner: Dynamically Enhancing Positional Clues for Robust Text Recognition. In Proceedings of the European Conference on Computer Vision, Virtual, 23–28 August 2020; pp. 135–151.
17. Baek, J.; Kim, G.; Lee, J.; Park, S.; Han, D.; Yun, S.; Seong, J.O.; Lee, H. What Is Wrong with Scene Text Recognition Model Comparisons? Dataset and Model Analysis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4715–4723.
18. Bautista, D.; Atienza, R. Scene Text Recognition with Permuted Autoregressive Sequence Models. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 178–196.
19. Jiang, Q.; Wang, J.; Peng, D.; Liu, C.; Jin, L. Revisiting Scene Text Recognition: A Data Perspective. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 20543–20554.
20. Qiao, Z.; Zhou, Y.; Yang, D.; Zhou, Y.; Wang, W. SEED: Semantics Enhanced Encoder-Decoder Framework for Scene Text Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 13528–13537.
21. Shi, B.; Bai, X.; Yao, C. An End-to-End Trainable Neural Network for Image-Based Sequence Recognition and Its Application to Scene Text Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2298–2304.
22. Fang, S.; Xie, H.; Wang, Y.; Mao, Z.; Zhang, Y. Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 7098–7107.
23. Fang, S.; Mao, Z.; Xie, H.; Wang, Y.; Yan, C.; Zhang, Y. ABINet++: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Spotting. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 7123–7141.
24. Wang, Y.; Xie, H.; Fang, S.; Wang, J.; Zhu, S.; Zhang, Y. From Two to One: A New Scene Text Recognizer with Visual Language Modeling Network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 14194–14203.
25. Xiong, Y.; Li, Z.; Chen, Y.; Wang, F.; Zhu, X.; Luo, J.; Wang, W.; Lu, T.; Li, H.; Qiao, Y.; et al. Efficient Deformable ConvNets: Rethinking Dynamic and Sparse Operator for Vision Applications. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5652–5661.
26. Wang, T.; Zhu, Y.; Jin, L.; Luo, C.; Chen, X.; Wu, Y.; Wang, Q.; Cai, M. Decoupled Attention Network for Text Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12216–12224.
27. Na, B.; Kim, Y.; Park, S. Multi-Modal Text Recognition Networks: Interactive Enhancements between Visual and Semantic Features. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 446–463.
28. Zheng, T.; Chen, Z.; Fang, S.; Xie, H.; Jiang, Y. CDistNet: Perceiving Multi-Domain Character Distance for Robust Text Recognition. Int. J. Comput. Vis. 2024, 132, 300–318.
29. Hu, J.; Li, S.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.
30. Jang, E.; Gu, S.; Poole, B. Categorical Reparameterization with Gumbel-Softmax. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.
31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.
32. Gupta, A.; Vedaldi, A.; Zisserman, A. Synthetic Data for Text Localisation in Natural Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2315–2324.
33. Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Synthetic Data and Artificial Neural Networks for Natural Scene Text Recognition. In Proceedings of the Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014.
34. Karatzas, D.; Shafait, F.; Uchida, S. ICDAR 2013 Robust Reading Competition. In Proceedings of the International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1484–1493.
35. Wang, K.; Babenko, B.; Belongie, S. End-to-End Scene Text Recognition. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1457–1464.
36. Mishra, A.; Karteek, A.; Jawahar, C.V. Scene Text Recognition Using Higher Order Language Priors. In Proceedings of the British Machine Vision Conference, Swansea, UK, 7–10 September 2015; pp. 1–11.
37. Karatzas, D.; Gomez-Bigorda, L.; Nicolaou, A.; Ghosh, S.; Bagdanov, A.; Iwamura, M.; Matas, J.; Neumann, L.; Chandrasekhar, V.; Lu, S.; et al. ICDAR 2015 Competition on Robust Reading. In Proceedings of the International Conference on Document Analysis and Recognition, Tunis, Tunisia, 23–26 August 2015; pp. 1156–1160.
38. Phan, T.-Q.; Shivakumara, P.; Tian, S.; Tan, C.-L. Recognizing Text with Perspective Distortion in Natural Scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–27 June 2013; pp. 569–576.
39. Anhar, R.; Palaiahnakote, S.; Chan, C.-S.; Tan, C.-L. A Robust Arbitrary Text Detection System for Natural Scene Images. Expert Syst. Appl. 2014, 41, 8027–8048.
40. Loshchilov, I.; Hutter, F. Fixing Weight Decay Regularization in Adam. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.
41. Yang, M.; Yang, B.; Liao, M.; Zhu, Y.; Bai, X. Class-Aware Mask-Guided Feature Refinement for Scene Text Recognition. Pattern Recognit. 2024, 149, 110244.
42. Atienza, R. Vision Transformer for Fast and Efficient Scene Text Recognition. In Proceedings of the International Conference on Document Analysis and Recognition, Lausanne, Switzerland, 5–10 September 2021; pp. 319–334.
43. Lee, J.; Park, S.; Baek, J.; Seong, J.-O.; Kim, S.; Lee, H. On Recognizing Texts of Arbitrary Shapes with 2D Self-Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 546–547.
44. Wang, P.; Da, C.; Yao, C. Multi-Granularity Prediction for Scene Text Recognition. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 1484–1493.
45. Cheng, C.; Wang, P.; Da, C.; Zheng, Q.; Yao, C. LISTER: Neighbor Decoding for Length-Insensitive Scene Text Recognition. In Proceedings of the International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 19484–19494.
46. Wei, J.; Zhan, H.; Lu, Y.; Tu, X.; Yin, B.; Liu, C.; Pal, U. Image as a Language: Revisiting Scene Text Recognition via Balanced, Unified and Synchronized Vision-Language Reasoning Network. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 5885–5893.

| Encoder | Train Data | IC15 | Curve | Multi-Oriented | Artistic | Contextless | Salient | Multi-Words | General | Avg |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet | S | 70.2 | 67.1 | 59.6 | 50.8 | 70.2 | 59.8 | 61.2 | 60.5 | 62.4 |
| ResNet+TFs | S | 71.0 | 68.0 | 60.5 | 51.9 | 71.0 | 60.9 | 62.5 | 62.1 | 63.5 |
| ConvNeXtV2 | S | 70.8 | 67.8 | 60.6 | 50.9 | 71.3 | 59.9 | 62.3 | 61.7 | 63.2 |
| ViT-S | S | 69.5 | 67.7 | 60.1 | 50.7 | 70.1 | 58.8 | 61.0 | 60.1 | 62.3 |
| SCRTNet (Ours) | S | 71.6 | 68.9 | 61.2 | 52.6 | 72.6 | 61.1 | 62.8 | 63.2 | 64.3 |
| ResNet | R | 82.3 | 80.7 | 68.7 | 60.1 | 81.3 | 70.1 | 76.0 | 71.2 | 73.8 |
| ResNet+TFs | R | 83.2 | 81.5 | 72.6 | 61.2 | 82.5 | 70.9 | 77.3 | 72.0 | 75.1 |
| ConvNeXtV2 | R | 83.0 | 81.2 | 72.2 | 61.1 | 82.2 | 70.7 | 76.9 | 71.7 | 74.9 |
| ViT-S | R | 83.2 | 81.0 | 71.9 | 60.2 | 81.4 | 70.4 | 76.2 | 71.5 | 74.5 |
| SCRTNet (Ours) | R | 85.9 | 84.1 | 76.8 | 64.9 | 85.4 | 73.7 | 81.9 | 74.6 | 78.4 |
| ResNet | S+R | 83.6 | 82.2 | 73.1 | 61.7 | 83.3 | 73.2 | 77.8 | 73.5 | 76.1 |
| ResNet+TFs | S+R | 84.9 | 83.1 | 74.8 | 62.3 | 84.7 | 74.6 | 78.5 | 75.1 | 77.3 |
| ConvNeXtV2 | S+R | 84.3 | 82.9 | 74.1 | 62.9 | 83.6 | 73.3 | 77.4 | 74.9 | 76.7 |
| ViT-S | S+R | 82.7 | 81.9 | 73.1 | 61.6 | 82.9 | 72.9 | 76.6 | 72.9 | 75.8 |
| SCRTNet (Ours) | S+R | 86.1 | 85.7 | 77.2 | 65.1 | 86.9 | 75.1 | 83.2 | 76.3 | 79.5 |

| VM | LM | VLM | DAM | IC15 | Curve | Multi-Oriented | Artistic | Contextless | Salient | Multi-Words | General | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | | | | 89.2 | 87.4 | 79.9 | 76.6 | 86.5 | 77.7 | 85.7 | 77.0 | 82.5 |
| ✓ | ✓ | | | 90.9 | 89.7 | 83.4 | 79.3 | 85.3 | 79.8 | 86.1 | 79.8 | 84.3 |
| ✓ | ✓ | ✓ | | 92.1 | 89.9 | 85.5 | 79.8 | 88.5 | 81.3 | 86.5 | 83.1 | 85.8 |
| ✓ | ✓ | ✓ | ✓ | 90.9 | 89.7 | 83.4 | 79.3 | 85.3 | 79.8 | 86.1 | 79.8 | 84.3 |

| Confidence Threshold | Curve | Multi-Oriented | Artistic | Contextless | Salient | Multi-Words | General | Avg |
|---|---|---|---|---|---|---|---|---|
| 0.1 | 89.5 | 85.2 | 79.7 | 85.1 | 79.7 | 86.0 | 79.7 | 84.0 |
| 0.2 | 89.6 | 85.3 | 79.8 | 85.2 | 79.8 | 86.1 | 79.8 | 84.2 |
| 0.3 | 89.7 | 85.4 | 79.9 | 85.2 | 79.9 | 86.2 | 79.9 | 84.3 |
| 0.4 | 89.7 | 85.5 | 79.9 | 85.3 | 79.9 | 86.2 | 79.9 | 84.4 |
| 0.5 | 89.8 | 85.5 | 80.0 | 85.3 | 80.0 | 86.3 | 80.0 | 84.5 |
| 0.6 | 89.8 | 85.5 | 80.0 | 85.3 | 80.0 | 86.3 | 80.0 | 84.5 |
| 0.7 | 89.7 | 85.4 | 79.9 | 85.2 | 79.9 | 86.2 | 79.9 | 84.4 |
| 0.8 | 89.6 | 85.3 | 79.8 | 85.2 | 79.8 | 86.1 | 79.8 | 84.3 |
| 0.9 | 89.5 | 85.2 | 79.7 | 85.1 | 79.7 | 86.0 | 79.7 | 84.2 |

| Type | Method | IIIT | SVT | IC13 (857) | IC15 (1811) | SVTP | CUTE | Avg (Common) | Curved | Multi-Oriented | Artistic | Contextless | Salient | Multi-Word | General | Avg (Union14M) | Avg (All) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CTC | CRNN [21] | 90.8 | 83.8 | 92.8 | 71.8 | 70.5 | 81.2 | 81.8 | 29.3 | 12.6 | 34.3 | 44.2 | 16.8 | 35.6 | 60.3 | 33.3 | 55.7 | 8.3 |
| Attention | SATRN [43] | 97.1 | 95.2 | 98.8 | 87.3 | 91.0 | 93.9 | 93.9 | 74.9 | 64.8 | 67.2 | 76.3 | 72.2 | 74.2 | 75.8 | 72.2 | 82.2 | 67.0 |
| Attention | MGP-STR [44] | 97.2 | 97.9 | 98.0 | 91.4 | 93.0 | 98.1 | 95.9 | 85.9 | 80.8 | 73.6 | 76.1 | 78.4 | 72.8 | 84.4 | 78.9 | 86.7 | 148.0 |
| Attention | LISTER [45] | 98.1 | 97.5 | 98.6 | 89.6 | 94.0 | 97.2 | 95.8 | 78.4 | 65.6 | 74.7 | 82.9 | 73.4 | 84.1 | 84.8 | 77.7 | 86.1 | 51.1 |
| Attention | MAERec [19] | 98.5 | 97.8 | 98.3 | 89.5 | 94.4 | 98.6 | 96.2 | 88.8 | 83.9 | 80.0 | 85.5 | 84.9 | 87.6 | 85.9 | 85.2 | 90.3 | 35.7 |
| LM | SRN [14] | 95.5 | 89.3 | 95.6 | 79.2 | 83.9 | 91.5 | 89.2 | 49.7 | 20.1 | 50.7 | 61.1 | 43.9 | 51.6 | 62.7 | 48.5 | 67.3 | 51.7 |
| LM | VisionLAN [24] | 96.3 | 91.5 | 96.1 | 83.6 | 85.4 | 92.4 | 90.9 | 70.9 | 57.2 | 56.7 | 63.8 | 67.6 | 47.5 | 74.2 | 62.6 | 75.6 | 32.8 |
| LM | ABINet++ [23] | 97.2 | 95.7 | 97.9 | 87.6 | 92.2 | 94.5 | 94.2 | 75.1 | 61.5 | 65.3 | 71.2 | 72.9 | 59.2 | 79.4 | 69.2 | 80.7 | 36.7 |
| LM | BUSNet [46] | 97.6 | 97.5 | 97.9 | 89.3 | 95.4 | 97.8 | 95.9 | 82.7 | 79.1 | 71.8 | 79.2 | 77.4 | 72.9 | 83.9 | 78.1 | 86.3 | 32.1 |
| LM | CDistNet [28] | 98.0 | 97.1 | 97.9 | 88.7 | 93.6 | 97.2 | 95.4 | 81.4 | 73.9 | 73.6 | 79.1 | 78.5 | 81.4 | 82.4 | 78.6 | 86.4 | 65.5 |
| LM | DADCM | 98.3 | 97.9 | 98.7 | 92.1 | 95.3 | 98.6 | 96.8 | 89.9 | 85.5 | 79.8 | 88.5 | 81.3 | 86.5 | 87.2 | 85.5 | 90.7 | 31.8 |
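
The three Avg columns in the comparison above (common benchmarks, Union14M, and overall) appear to be unweighted means of the per-benchmark scores, rounded to one decimal. The following is a minimal sketch that checks this for the CRNN and DADCM rows; the unweighted-mean reading is an assumption inferred from the numbers rather than something stated in the table, and the names `ROWS` and `summarize` are hypothetical.

```python
from statistics import mean

# Per-benchmark scores (%) copied from the CRNN and DADCM rows of the table.
# "common" = IIIT, SVT, IC13, IC15, SVTP, CUTE; "union14m" = the seven Union14M subsets.
ROWS = {
    "CRNN": {
        "common": [90.8, 83.8, 92.8, 71.8, 70.5, 81.2],
        "union14m": [29.3, 12.6, 34.3, 44.2, 16.8, 35.6, 60.3],
    },
    "DADCM": {
        "common": [98.3, 97.9, 98.7, 92.1, 95.3, 98.6],
        "union14m": [89.9, 85.5, 79.8, 88.5, 81.3, 86.5, 87.2],
    },
}


def summarize(common, union14m):
    """Return (common avg, Union14M avg, overall avg), each rounded to one decimal.

    Assumption: every benchmark carries equal weight, so the overall average
    is the mean over all 13 scores, not the mean of the two group averages.
    """
    return (
        round(mean(common), 1),
        round(mean(union14m), 1),
        round(mean(common + union14m), 1),
    )


if __name__ == "__main__":
    for method, scores in ROWS.items():
        print(method, summarize(scores["common"], scores["union14m"]))
```

Under this reading the computed triples match the tabulated averages for both rows (81.8, 33.3, 55.7 for CRNN and 96.8, 85.5, 90.7 for DADCM).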

| Method | CRNN | MAERec | SRN | VisionLAN | ABINet++ | BUSNet | DADCM |
|---|---|---|---|---|---|---|---|
| Time (ms) | 6.5 | 91.0 | 19.1 | 17.8 | 29.6 | 19.8 | 19.2 |
| FLOPs (G) | 0.69 | 2.97 | 4.30 | 2.73 | 3.05 | 2.67 | 2.65 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feng, Y.; Li, C. Towards Robust Scene Text Recognition: A Dual Correction Mechanism with Deformable Alignment. Electronics 2025, 14, 3968. https://doi.org/10.3390/electronics14193968
