1. Introduction

Named entity recognition (NER) in the educational domain is a core natural language processing (NLP) application. It supports educational informatization by automatically identifying key entities, such as subject concepts, institutions, and teaching resources, in educational texts [1,2]. By extracting structured information from unstructured data, NER supports downstream applications such as relation extraction [3], question answering [4], and knowledge graph construction [5]. Chinese educational NER faces specific challenges that arise from the characteristics of the language. Because Chinese text has no explicit word boundaries, a model must resolve word segmentation and entity detection at the same time. Chinese named entities also vary widely in granularity, from single characters to multi-character compounds that span several words. Consequently, accurate boundary identification and enriched lexical semantics are critical for improving model performance [6,7].

The prevalence of specialized terminology, abbreviations, and compound words in the Chinese educational domain gives rise to two significant challenges: entity boundary ambiguity and nested entity structures. The phrase “University Physics,” for example, denotes an indivisible course entity, yet it is easily misread as a generic descriptive phrase. Compound terms such as “Artificial Intelligence Python Practice” exhibit nesting: the course entity contains subordinate entities that denote a field and a pedagogical method, respectively. Resolving boundary ambiguity requires precise contextual comprehension, whereas identifying nested entities requires multi-granular semantic recognition. BERT addresses both needs through its bidirectional Transformer architecture. Deep pre-training gives it dynamic, context-sensitive character representations that support fine-grained boundary detection and hierarchical analysis. Its multi-layer attention mechanism also captures features at different granularities, which benefits nested entity recognition. Together, these properties make BERT a strong semantic modeling foundation for handling ambiguity and structural complexity in specialized domains.

To address ambiguous boundaries and nested entities in educational-domain NER, we propose an enhanced semantic BERT model (ES-BERT). ES-BERT enriches semantic representations by fusing domain-specific lexical knowledge from an educational dictionary with character-level features. Experiments show that this fusion strategy mitigates semantic gaps and boundary ambiguity in Chinese character-level NER. It raises the F1 score to 90.04%, compared with 86.71% for the BERT+BiLSTM+CRF baseline. The main contributions of this study are threefold:

Model Architecture: Building upon BERT, we propose ES-BERT (enhanced semantic BERT), a hybrid-fusion model that combines domain-specific lexical features with character-level representations. This dual-path design strengthens the encoding of multi-granular semantics, which underpins the performance gains in educational NER.

Training Objective: To address class imbalance (non-entity token dominance) and boundary detection challenges, we integrate a novel focal loss-based joint training objective. This approach optimizes label constraints and boundary representations, significantly improving accuracy for boundary-sensitive entities.

Resource Construction: We manually curate a computer education corpus with 1000 expert-annotated research abstracts, processed through tokenization and rigorous annotation protocols. Additionally, we compile a domain-specific dictionary to mitigate the current lack of linguistic resources in this field.

2. Related Work

As NER research has advanced, the community has increasingly favored hybrid architectures that combine neural networks with statistical learning. A prime example is the BERT+BiLSTM+CRF framework, which performs sequence labeling in three complementary stages:

- BERT generates contextually rich character-level embeddings.
- BiLSTM captures long-range bidirectional dependencies to enhance feature coherence.
- CRF optimizes the label sequence globally by modeling transition constraints, which prevents invalid predictions.

By integrating deep representation learning with structured prediction, this framework adapts robustly and stably across diverse NER domains. Dong et al. [8] proposed BERT-WWM-EXT, which adds whole word masking during pre-training to enhance hierarchical representation learning. Combined with BiLSTM-CRF, this approach showed superior boundary detection in judicial texts. Hu et al. [9] proposed a BERT-BiLSTM-CRF-based NER method for core educational technology courses to enhance learning efficiency. They reported improved performance over conventional CRF and BiLSTM-CRF models. Xie et al. [10] extended the BERT-BiLSTM-CRF paradigm with contextual-semantic enhancement layers and set new benchmarks on the MSRA and People’s Daily corpora, particularly for polysemous entities. As BERT has continued to evolve, several efficient variants have emerged, notably RoBERTa [11] and ALBERT [12], and these have been widely applied to NER tasks.

Semantic enhancement techniques have also proven effective for NER. Numerous studies have integrated character-level, word-level, and semantic information to improve Chinese NER.

- Chen et al. [13] aggregated multiple word-level features associated with each character and incorporated them into BERT for text feature enhancement, addressing Chinese nested NER.
- Liu et al. [14] proposed LEBERT, a lexicon-enhanced BERT model for Chinese sequence labeling. It integrates external lexical knowledge directly into the BERT layers through a lexicon adapter and achieves state-of-the-art performance on NER and related tasks.
- Wu et al. [15] developed an entity recognition model that combines external dictionaries for feature enhancement with adversarial training. It constructs character–word pairs from dictionaries, fuses features through a dictionary adapter module, and uses FGM (Fast Gradient Method) to improve model robustness.
- Sheng et al. [16] designed a lexicon-enhanced BiLSTM-CRF model that combines character and word embeddings at the embedding layer to enrich the initial text representations. These enhanced embeddings feed a BiLSTM network, and a CRF layer performs the final label decoding.
- Wang et al. [17] introduced an interactive fusion method for Chinese NER that merges character and lexical features through an interactive graph structure, yielding more comprehensive feature representations.

Table 1 summarizes the technical approaches of these semantic enhancement techniques.

Prior research shows that semantic enhancement techniques help identify entity boundaries and extract rich semantic information from text, yielding measurable gains in NER performance. To fill the research gap in educational-domain NER, we propose ES-BERT, an architecture that integrates domain-adapted lexical features with character-level embeddings. Specifically, ES-BERT uses an educational domain lexicon to generate hybrid character–word representations augmented with domain-specific semantics. The framework simultaneously addresses two key challenges in educational NER, (1) ambiguous entity boundaries and (2) nested entity recognition, and yields substantial performance gains.

3. ES-BERT with Enhanced Semantic Representation

The BERT model has demonstrated remarkable advantages in NER tasks. Its contextual understanding and fine-tuning flexibility have made it a state-of-the-art approach [18,19,20]. As a character-level model for Chinese, BERT treats each character as an independent token and thus avoids word segmentation errors. However, this design does not exploit lexical information, which is critical for capturing inter-character relationships and facilitating boundary detection [21].

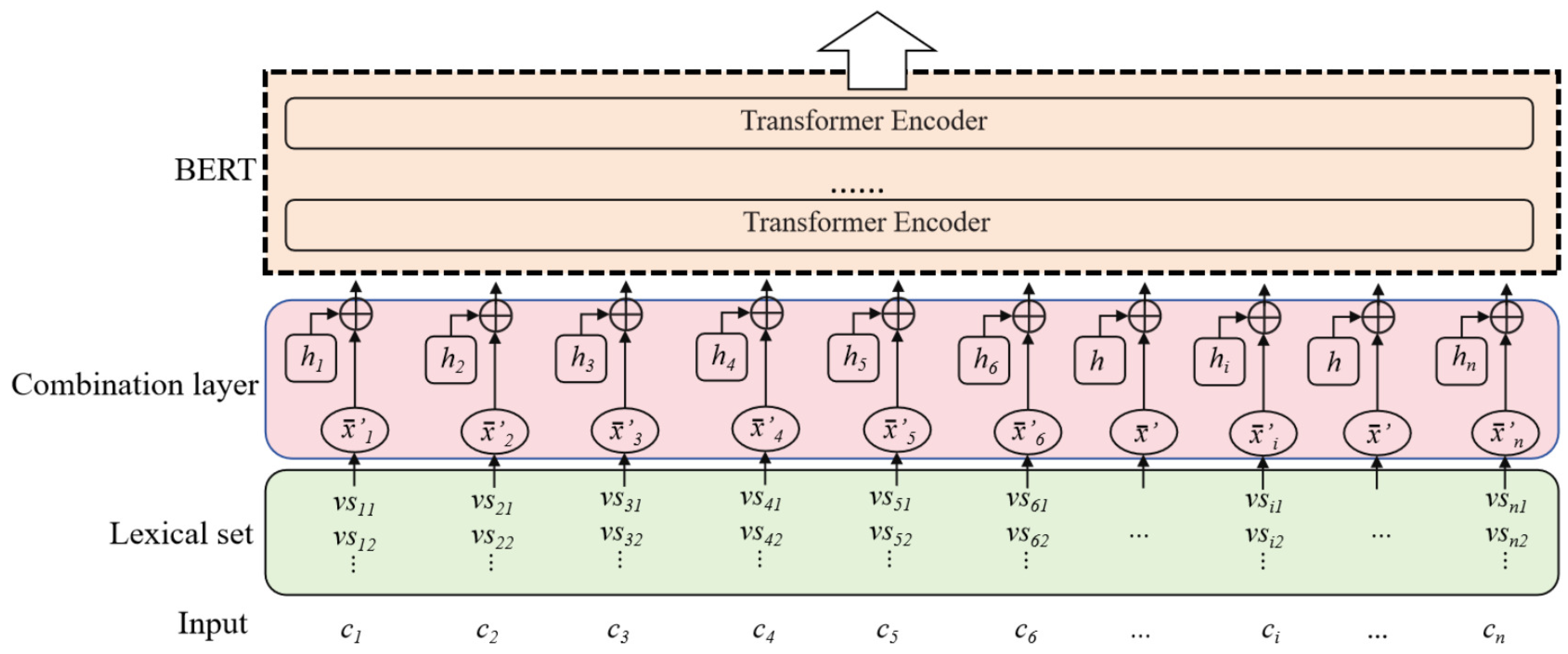

To address this limitation, we propose ES-BERT (enhanced semantic BERT; Figure 1), which integrates word-level features with character representations to strengthen inter-character associations. This hierarchical semantic enhancement provides two key benefits: (1) more precise entity boundary identification and (2) richer contextual semantic extraction. Together, these improve Chinese NER performance. Algorithm 1 outlines the construction of the ES-BERT model.

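Algorithm 1 Enhanced-semantic-BERT Chinese NER algorithm
Input: Chinese sentence $S = \{c_1, c_2, \ldots, c_n\}$, domain lexicon $D$
Output: Encoded semantic vector sequence $P = \{p_1, p_2, \ldots, p_n\}$
Phase 1: Character–Lexicon Matching
1: for $i = 1$ to $n$ do
2:   $W_i \leftarrow \emptyset$ # Initialize lexicon set for character $c_i$
3:   Generate word combinations containing $c_i$ in the sentence context
4:   for each word combination $w$ do
5:     if $w \in D$ then # Verify match in domain lexicon
6:       $W_i \leftarrow W_i \cup \{w\}$
7:     end if
8:   end for
9: end for
Phase 2: Character–Word Vector Fusion
10: for $i = 1$ to $n$ do
11:   $x_i^c \leftarrow e^{tok}_i + e^{seg}_i + e^{pos}_i$ # Character vector: token + segment + position embeddings
12:   $E_i \leftarrow \emptyset$ # Initialize word vector set
13:   for each word $w_{ij} \in W_i$ do
14:     $x_{ij}^w \leftarrow e^w(w_{ij})$ # Get word vector from domain lexicon
15:     $E_i \leftarrow E_i \cup \{x_{ij}^w\}$
16:   end for
17:   TF-IDF weight calculation:
18:   for each word vector $x_{ij}^w \in E_i$ do
19:     $\alpha_{ij} \leftarrow \mathrm{tf}_{ij} \times \mathrm{idf}_{ij}$ # Calculate TF-IDF weights
20:   end for
21:   Weighted word vector fusion:
22:   $v_i \leftarrow \mathbf{0}$ # Initialize fused word vector
23:   for $j = 1$ to $|W_i|$ do
24:     $v_i \leftarrow v_i + \alpha_{ij} x_{ij}^w$
25:   end for
26:   Dimension alignment transformation: $\tilde{v}_i \leftarrow W_2\, \sigma(W_1 v_i + b_1) + b_2$
27:   Final fusion: $x_i \leftarrow x_i^c + \tilde{v}_i$ # Obtain character–word fused vector
28: end for
Phase 3: BERT Encoding
29: $X \leftarrow [x_1, x_2, \ldots, x_n]$ # Construct input sequence
30: for each Transformer layer do
31:   Self-attention: $Q \leftarrow XW^Q$, $K \leftarrow XW^K$, $V \leftarrow XW^V$
32:   $\mathrm{Attention}(Q, K, V) \leftarrow \mathrm{softmax}(QK^\top / \sqrt{d_k})\, V$
33:   Multi-head attention: $\mathrm{MultiHead}(Q, K, V) \leftarrow \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O$
34:   where $\mathrm{head}_m = \mathrm{Attention}(QW_m^Q, KW_m^K, VW_m^V)$
35:   $X \leftarrow \mathrm{FFN}(\mathrm{MultiHead}(Q, K, V))$ # Feed-forward network (residual connections and layer normalization omitted)
36: end for
37: $P \leftarrow X$ # Output encoded semantic vector sequence
38: return $P$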

3.1. Domain Lexicon Construction

In the Chinese linguistic context, multi-character lexical units (versus single characters) inherently embody richer semantic priors and provide more definitive boundary indicators for named entity recognition. This phenomenon is particularly pronounced in specialized domains. Domain-specific terminologies exhibit strong predictive power that can effectively guide and refine model predictions.

Our domain lexicon comprises two components: (1) core computer science terminology and (2) domain-specific vocabulary from tertiary and vocational education contexts. We compiled it by selectively extracting relevant terms from Tencent’s open-source Chinese word embeddings, focusing particularly on computer science terminology.

Leveraging this lexicon, we identify character-position-aware lexical candidates within input texts. This design intentionally circumvents error-prone word segmentation, since segmentation precision constitutes a fundamental bottleneck in Chinese NER systems. Crucially, any segmentation errors would propagate through the recognition cascade, severely compromising entity identification reliability.

To address this fundamental challenge, our framework dynamically constructs domain-specific lexical candidate sets through comprehensive sentence-to-lexicon matching. The core algorithm operates on a character-by-character basis, as follows: For each Chinese character in the input sequence, it evaluates all possible contextual combinations. When a combination matches a domain lexicon entry, the corresponding word is incorporated into the character’s lexical candidate set.

Formally, let $S = \{c_1, c_2, \ldots, c_n\}$ denote an $n$-character Chinese sentence. Our method generates a character–word sequence $S_{cw} = \{(c_1, W_1), (c_2, W_2), \ldots, (c_n, W_n)\}$. Each $W_i = \{w_{i1}, w_{i2}, \ldots\}$ is the potential lexical set containing all domain words that (1) include character $c_i$, (2) occur in sentence $S$, and (3) exist in the domain lexicon $D$ (see Figure 1). This is implemented using an optimized maximum matching algorithm with … time complexity.
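The matching step (Phase 1 of Algorithm 1) can be sketched in Python as follows. This is a minimal brute-force version that enumerates every substring up to the longest lexicon entry. It is not the optimized maximum matching implementation described above. The function name `match_lexicon` and the toy lexicon are illustrative assumptions.

```python
from typing import List, Set


def match_lexicon(sentence: str, lexicon: Set[str]) -> List[Set[str]]:
    """Return W_i for each character c_i: every lexicon word occurring in the
    sentence whose span covers position i (brute-force sketch)."""
    max_len = max((len(w) for w in lexicon), default=0)
    n = len(sentence)
    candidates: List[Set[str]] = [set() for _ in range(n)]
    for start in range(n):
        # Enumerate every substring starting here, up to the longest lexicon entry.
        for end in range(start + 1, min(n, start + max_len) + 1):
            word = sentence[start:end]
            if word in lexicon:
                # A matched word is a candidate for every character it spans.
                for i in range(start, end):
                    candidates[i].add(word)
    return candidates


# Hypothetical toy lexicon; the sentence means "University Physics course".
lexicon = {"大学", "物理", "大学物理", "课程"}
for ch, words in zip("大学物理课程", match_lexicon("大学物理课程", lexicon)):
    print(ch, sorted(words))
```

The first character 大 receives both 大学 ("university") and 大学物理 ("University Physics"). Overlapping candidates like these are what the later TF-IDF weighting has to arbitrate between. The sketch performs O(n·L) lexicon lookups for a sentence of n characters and a longest entry of length L.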

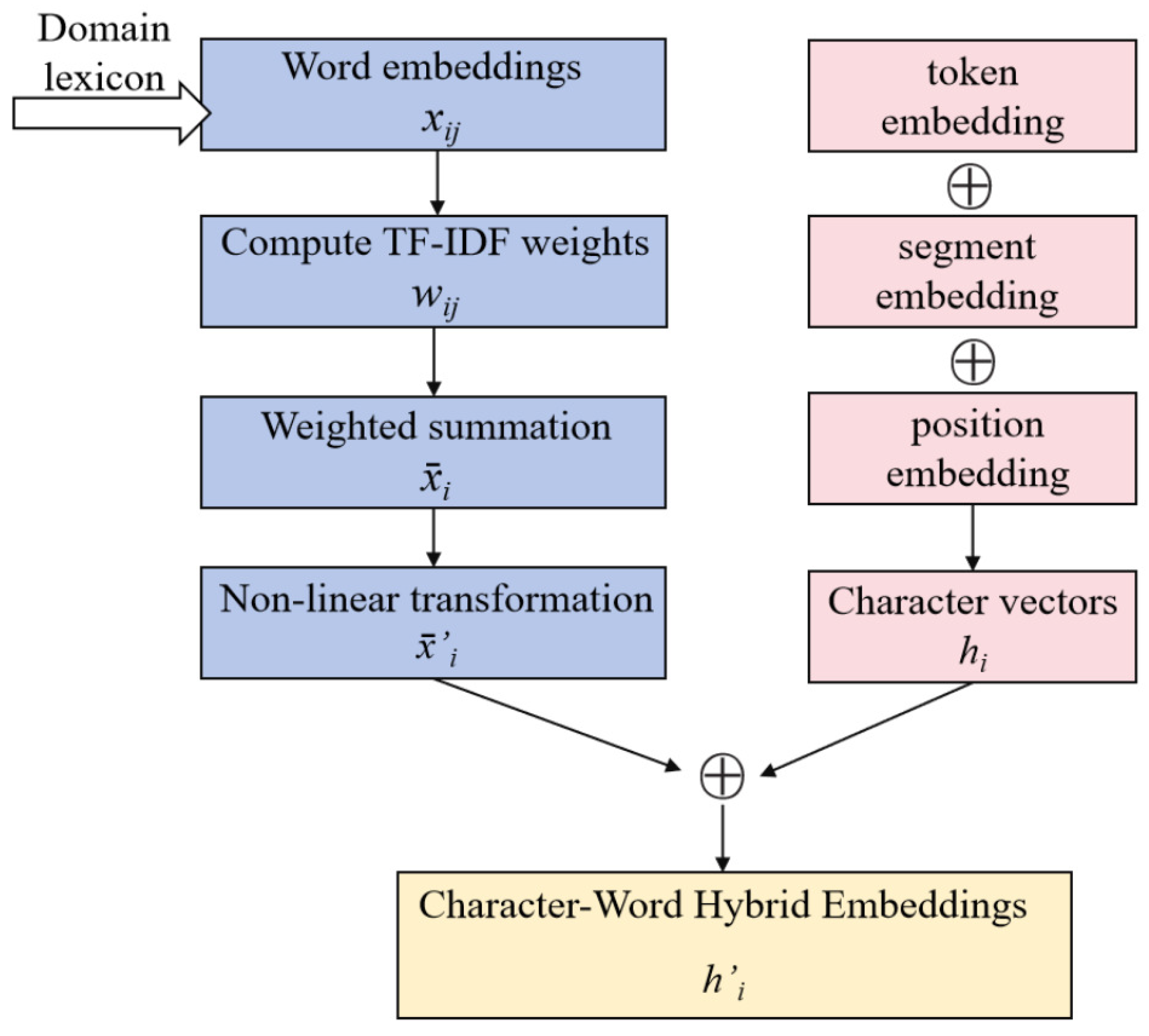

3.2. Character–Word Vector Fusion

After obtaining the character–word sequence $S_{cw}$, we transform both the characters and their associated words into vector representations. The character vector $x_i^c$ at position $i$ is taken from BERT’s embedding layer, which sums three components: the token embedding, the segment embedding, and the position embedding. The word vector set $E_i = \{x_{i1}^w, x_{i2}^w, \ldots\}$ contains the pre-trained lexical embeddings of all words in $W_i$, and each $x_{ij}^w$ is retrieved from our domain-specific lexicon.

To limit the influence of incorrect segmentations among the candidate words, we compute a TF-IDF weight for each candidate. This assigns lower weights to (1) infrequent terms and (2) out-of-vocabulary words. The weight $\alpha_{ij}$ of the $j$-th word associated with character $c_i$ is calculated as follows:

$$\alpha_{ij} = \mathrm{tf}_{ij} \times \mathrm{idf}_{ij}$$

where $\mathrm{tf}_{ij}$ is the term frequency (TF) of word $w_{ij}$ (the $j$-th word corresponding to character $c_i$) in the document, and $\mathrm{idf}_{ij}$ is the inverse document frequency (IDF) of $w_{ij}$.
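A minimal sketch of this weighting is shown below. It assumes that a "document" is one abstract given as a string. It also assumes a smoothed IDF of the form $\log\frac{1+N}{1+\mathrm{df}} + 1$ and length-normalized TF. The paper does not specify its TF normalization or IDF smoothing, so both choices and the helper names are illustrative.

```python
import math
from typing import Dict, List, Sequence, Set


def term_frequency(word: str, document: str) -> float:
    # Non-overlapping occurrences, normalized by document length (assumption).
    return document.count(word) / max(len(document), 1)


def inverse_document_frequency(word: str, corpus: Sequence[str]) -> float:
    # Smoothed IDF (assumption): words found in fewer documents get larger weights.
    df = sum(1 for doc in corpus if word in doc)
    return math.log((1 + len(corpus)) / (1 + df)) + 1.0


def candidate_weights(candidates: List[Set[str]], document: str,
                      corpus: Sequence[str]) -> List[Dict[str, float]]:
    """alpha_ij = tf_ij * idf_ij for every candidate word w_ij of every character c_i."""
    return [
        {w: term_frequency(w, document) * inverse_document_frequency(w, corpus) for w in words}
        for words in candidates
    ]


corpus = ["大学物理课程", "大学英语", "物理实验"]  # hypothetical three-document corpus
weights = candidate_weights([{"大学", "大学物理"}], corpus[0], corpus)
print(weights[0])
```

Here 大学 appears in two of the three documents, so its IDF is lower than that of the more specific 大学物理, which appears in only one. The specific candidate therefore receives the larger weight.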

For each character $c_i$, we compute the weighted sum of all its corresponding word vectors to obtain the fused lexical vector $v_i$:

$$v_i = \sum_{j=1}^{|W_i|} \alpha_{ij}\, x_{ij}^w$$

The fused lexical vectors and the BERT-generated character embeddings have different dimensions. To resolve this mismatch, we first apply a nonlinear projection layer that aligns the vectors before the second fusion step. This transformation makes the two representation types compatible in feature space:

$$\tilde{v}_i = W_2\, \sigma(W_1 v_i + b_1) + b_2$$

where:

- $\sigma$ is the activation function;
- $\tilde{v}_i$ is the aligned fused lexical vector;
- $W_1 \in \mathbb{R}^{d_c \times d_w}$ is the transformation matrix;
- $W_2 \in \mathbb{R}^{d_c \times d_c}$ is the projection matrix;
- $b_1, b_2 \in \mathbb{R}^{d_c}$ are bias terms;
- $d_c$ is the character embedding dimension and $d_w$ is the word vector dimension.

Finally, we add the aligned fused lexical vectors element-wise to the character vectors from BERT’s embedding layer, yielding the combined character–word fusion vectors $x_i = x_i^c + \tilde{v}_i$. These vectors encode both character-level and word-level information, as illustrated in Figure 2.
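The fusion steps for a single character (weighted sum, dimension alignment, and element-wise addition) can be sketched in plain Python. Two choices here are assumptions for illustration only: $\sigma = \tanh$, and the toy dimensions $d_c = 2$ and $d_w = 3$. In the real model $d_c = 768$ for BERT-Base and all matrices are learned.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def fuse_character(char_vec: Sequence[float], word_vecs: Sequence[Sequence[float]],
                   alphas: Sequence[float], W1: Matrix, b1: Vector,
                   W2: Matrix, b2: Vector) -> Vector:
    """x_i = x_i^c + W2 * tanh(W1 * v_i + b1) + b2, where v_i = sum_j alpha_ij * x_ij^w."""
    d_w = len(W1[0])
    v = [0.0] * d_w  # stays zero when the character matched no lexicon word
    for alpha, x_w in zip(alphas, word_vecs):
        v = [vk + alpha * xk for vk, xk in zip(v, x_w)]
    hidden = [math.tanh(z + b) for z, b in zip(matvec(W1, v), b1)]  # d_w -> d_c
    aligned = [z + b for z, b in zip(matvec(W2, hidden), b2)]       # d_c -> d_c
    return [c + a for c, a in zip(char_vec, aligned)]               # element-wise sum


W1 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # d_c x d_w (toy values)
W2 = [[1.0, 0.0], [0.0, 1.0]]            # d_c x d_c (toy values)
print(fuse_character([0.5, -0.5], [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], [0.2, 0.4],
                     W1, [0.0, 0.0], W2, [0.0, 0.0]))
```

When a character has no matched words, $v_i$ is the zero vector. With zero biases, the character vector then passes through unchanged.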

3.3. BERT-Enhanced Hybrid Representation

The hybrid character–word embeddings $X = [x_1, x_2, \ldots, x_n]$ are then passed through BERT’s Transformer encoder stack. The encoder uses 12-head self-attention to model contextual dependencies dynamically, so each position can attend to and aggregate the most informative features across the sequence. For each token position, three vectors are computed: a query ($Q$), a key ($K$), and a value ($V$). These interact through scaled dot-product attention:

$$Q = \mathrm{Linear}(X) = XW^Q, \quad K = \mathrm{Linear}(X) = XW^K, \quad V = \mathrm{Linear}(X) = XW^V$$

Here, $\mathrm{Linear}(\cdot)$ denotes a linear transformation, and $W^Q$, $W^K$, and $W^V$ are trainable weight matrices.

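The attention computation is formulated as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right) V$$

Here, $\mathrm{Attention}(\cdot)$ denotes the scaled dot-product attention operation, and $d_k$ is the key dimension. Dividing by $\sqrt{d_k}$ keeps the variance of the dot products from growing with the dimension, which prevents the softmax from saturating. $\mathrm{softmax}(\cdot)$ is the normalized exponential function; it makes the attention weights in each row sum to 1.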

The model employs multi-head self-attention through parallel computation across multiple attention heads, followed by concatenation of all head outputs and a final linear projection. This architecture facilitates multi-perspective feature extraction from distinct representation subspaces, enables the acquisition of diverse semantic patterns, and ensures comprehensive contextual understanding.

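$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O, \qquad \mathrm{head}_m = \mathrm{Attention}(QW_m^Q, KW_m^K, VW_m^V)$$

Here, $W_m^Q$, $W_m^K$, and $W_m^V$ are the query, key, and value weight matrices of the $m$-th attention head ($m = 1, \ldots, h$, with $h = 12$). $W^O$ is the output projection matrix, and $\mathrm{Concat}(\cdot)$ is the concatenation operator.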
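The Q/K/V projections, scaled dot-product attention, and multi-head concatenation can be checked with a small standard-library sketch. It follows the standard Transformer formulation, in which each head's projections are applied directly to the layer input X for self-attention. It omits residual connections, layer normalization, and the feed-forward sublayer. It also uses toy 2-dimensional inputs and two heads, rather than BERT-Base's 12 heads over a 768-dimensional width.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: Sequence[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
              for q_row in Q]
    return matmul([softmax(r) for r in scores], V)


def multi_head(X: Matrix, heads: List[Tuple[Matrix, Matrix, Matrix]], W_O: Matrix) -> Matrix:
    """Each head projects X with its own (W_Q, W_K, W_V); head outputs are
    concatenated per position and then projected by W_O."""
    outs = [attention(matmul(X, wq), matmul(X, wk), matmul(X, wv)) for wq, wk, wv in heads]
    concat = [[v for out in outs for v in out[i]] for i in range(len(X))]
    return matmul(concat, W_O)


X = [[1.0, 0.0], [0.0, 1.0]]  # two tokens, model width 2
I2 = [[1.0, 0.0], [0.0, 1.0]]
W_O = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.0], [0.0, 0.5]]  # averages two identical heads
print(multi_head(X, [(I2, I2, I2), (I2, I2, I2)], W_O))
```

With identity projections and $V = X$, each output row equals that token's attention distribution. Each token attends most strongly to itself, because its own key gives the largest dot product.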

The stacked Transformer encoder layers produce the sequence $P = \{p_1, p_2, \ldots, p_n\}$. These contextualized fusion vectors encode the semantics of the whole input sequence and serve directly as input to the NER layers.

4. ES-BERT Enhanced Named Entity Recognition Model

Our framework for educational-domain NER combines the enhanced semantic BERT (ES-BERT) model with a BiLSTM-CRF architecture [22,23,24]. This combination draws on BERT’s deep contextual representations, BiLSTM’s capacity for capturing sequential dependencies, and CRF’s ability to enforce global label consistency. The pipeline runs in three steps:

1. ES-BERT generates semantically enhanced embeddings.
2. The BiLSTM layer extracts contextual features from these embeddings.
3. The CRF layer decodes the highest-scoring label sequence with the Viterbi algorithm.

The whole system is trained jointly. The objective combines the CRF negative log-likelihood with a focal loss term, which improves robustness to class imbalance, hard boundary cases, and annotation noise, as illustrated in Figure 3.

4.1. BiLSTM Layer

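Standard LSTM networks process sequences in one direction (left to right). The hidden state at each position therefore summarizes only the preceding characters, and the final state is dominated by later tokens. This is a problematic bias for segmentation and boundary detection, where the following characters are just as informative. Our method therefore builds bidirectional representations by concatenating the outputs of a forward LSTM and a backward LSTM at each position:

$$\overrightarrow{h}_i = \overrightarrow{\mathrm{LSTM}}(p_i, \overrightarrow{h}_{i-1}), \qquad \overleftarrow{h}_i = \overleftarrow{\mathrm{LSTM}}(p_i, \overleftarrow{h}_{i+1}), \qquad h_i = \overrightarrow{h}_i \oplus \overleftarrow{h}_i$$

Each element of the output sequence $H = \{h_1, h_2, \ldots, h_n\}$ thus combines both directional contexts for label prediction.

Here, $\overrightarrow{\mathrm{LSTM}}$ and $\overleftarrow{\mathrm{LSTM}}$ are the forward and backward LSTM networks. They capture the preceding and following context, respectively, of the current input feature $p_i$. The symbol $\oplus$ denotes vector concatenation. Because the BiLSTM draws on context from both directions, it improves character-level label prediction accuracy in NER.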
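The sketch below shows the bidirectional construction in pure Python with a minimal LSTM cell. The random weights, the toy sizes, and the class and function names are illustrative assumptions; in the model these weights are learned. The key point is that the backward pass runs over the reversed sequence and is then re-aligned, so that $h_i$ concatenates both directions at position $i$.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """Minimal LSTM cell; weights are random here purely for illustration."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        # One row per gate unit (input, forget, output, candidate) over [x; h_prev; 1].
        self.weights = [[rng.uniform(-0.5, 0.5) for _ in range(input_dim + hidden_dim + 1)]
                        for _ in range(4 * hidden_dim)]

    def step(self, x: Sequence[float], h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = list(x) + h + [1.0]
        a = [sum(w * v for w, v in zip(row, z)) for row in self.weights]
        H = self.hidden_dim
        i_g = [sigmoid(v) for v in a[0:H]]
        f_g = [sigmoid(v) for v in a[H:2 * H]]
        o_g = [sigmoid(v) for v in a[2 * H:3 * H]]
        g = [math.tanh(v) for v in a[3 * H:]]
        c_new = [f * cp + i * gv for f, cp, i, gv in zip(f_g, c, i_g, g)]
        h_new = [o * math.tanh(cv) for o, cv in zip(o_g, c_new)]
        return h_new, c_new


def run(cell: LSTMCell, xs: Sequence[Sequence[float]]) -> List[Vector]:
    h, c = [0.0] * cell.hidden_dim, [0.0] * cell.hidden_dim
    outputs = []
    for x in xs:
        h, c = cell.step(x, h, c)
        outputs.append(h)
    return outputs


def bilstm(xs: Sequence[Sequence[float]], forward: LSTMCell, backward: LSTMCell) -> List[Vector]:
    fwd = run(forward, xs)                     # left to right: position i sees p_1..p_i
    bwd = run(backward, list(xs)[::-1])[::-1]  # right to left, re-aligned to position i
    return [f + b for f, b in zip(fwd, bwd)]   # list concatenation implements the ⊕ operator


states = bilstm([[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]], LSTMCell(2, 3, seed=1), LSTMCell(2, 3, seed=2))
print(len(states), len(states[0]))  # 3 positions, each with 3 forward + 3 backward units
```

In production code, a library layer such as PyTorch's `nn.LSTM(..., bidirectional=True)` performs the same concatenation.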

4.2. CRF Layer

The CRF layer combines the emission scores produced by the BiLSTM with learned inter-label transition scores. Because it scores entire label sequences rather than individual tokens, it can correct BiLSTM predictions that are locally plausible but globally invalid, such as an I-X tag immediately following an O tag [25]. Formally, given a label sequence $y = (y_1, \ldots, y_n)$ and the feature sequence $H = \{h_1, \ldots, h_n\}$, the sequence scoring function is defined as follows:

$$s(H, y) = \sum_{i=1}^{n} E_{i, y_i} + \sum_{i=1}^{n-1} T_{y_i, y_{i+1}}$$

The score at each position combines two terms:

1. The emission matrix $E$, computed from the BiLSTM outputs. Its entry $E_{i, y_i}$ is the score of label $y_i$ for the $i$-th character.
2. The CRF transition matrix $T$. Its entry $T_{a, b}$ is the score for moving from label $a$ to label $b$.

Decoding uses the Viterbi algorithm, which finds the maximum-scoring label path by dynamic programming. This suits NER for two reasons. First, it optimizes over the whole sequence instead of committing to independent per-token decisions. Second, it enforces the structural constraints encoded in the transition scores, which is essential for precise entity boundary detection. Formally, given the feature sequence $H$, the optimal tag sequence $y^*$ is computed as follows:

$$y^* = \arg\max_{\tilde{y} \in Y_H} s(H, \tilde{y})$$

where $Y_H$ denotes the set of all candidate label sequences for $H$.
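The scoring function $s(H, y) = \sum_i E_{i,y_i} + \sum_i T_{y_i, y_{i+1}}$ and Viterbi decoding can be sketched as follows. A brute-force decoder is included for checking. The sketch assumes no special start/end transitions, and the emission and transition numbers are made up for illustration. A large negative transition score stands in for a hard constraint forbidding O → I-X.

```python
from itertools import product
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def sequence_score(E: Matrix, T: Matrix, y: Sequence[int]) -> float:
    """s(H, y) = sum_i E[i][y_i] + sum_i T[y_i][y_{i+1}]."""
    emit = sum(E[i][label] for i, label in enumerate(y))
    trans = sum(T[y[i]][y[i + 1]] for i in range(len(y) - 1))
    return emit + trans


def viterbi(E: Matrix, T: Matrix) -> List[int]:
    """Return argmax_y s(H, y) by dynamic programming in O(n * k^2)."""
    n, k = len(E), len(E[0])
    best = list(E[0])  # best[j]: top score of any prefix ending in label j
    backptrs: List[List[int]] = []
    for i in range(1, n):
        ptr, new_best = [], []
        for j in range(k):
            prev = max(range(k), key=lambda p: best[p] + T[p][j])
            ptr.append(prev)
            new_best.append(best[prev] + T[prev][j] + E[i][j])
        backptrs.append(ptr)
        best = new_best
    path = [max(range(k), key=lambda j: best[j])]
    for ptr in reversed(backptrs):  # follow back-pointers from the last position
        path.append(ptr[path[-1]])
    return path[::-1]


def brute_force_decode(E: Matrix, T: Matrix) -> List[int]:
    # Enumerates all k^n label sequences; feasible only for toy inputs.
    return list(max(product(range(len(E[0])), repeat=len(E)),
                    key=lambda y: sequence_score(E, T, y)))


# Labels: 0 = O, 1 = B-X, 2 = I-X.
T = [[0.0, 0.0, -1e4], [0.0, 0.0, 0.5], [0.0, 0.0, 0.5]]
E = [[0.1, 2.0, 0.5], [0.2, 0.1, 1.5], [1.0, 0.3, 0.9]]
print(viterbi(E, T), brute_force_decode(E, T))
```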

4.3. Novel Focal Loss-Based Composite Loss

The negative log-likelihood loss (NLL), as the standard CRF loss function, demonstrates strong performance under ideal conditions (clean data, balanced class distribution, and complete annotations).
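For reference, the standard linear-chain CRF negative log-likelihood of a gold sequence $y$ is $L_{CRF} = -s(H, y) + \log \sum_{\tilde{y}} \exp s(H, \tilde{y})$. Here $s(H, y) = \sum_i E_{i,y_i} + \sum_i T_{y_i, y_{i+1}}$ sums the emission and transition scores. The sketch below computes the partition term with the forward algorithm, a log-space dynamic program, and checks it against brute-force enumeration. It assumes no start/end transition scores, and the numbers are illustrative only.

```python
import math
from itertools import product
from typing import Sequence

Matrix = Sequence[Sequence[float]]


def logsumexp(xs: Sequence[float]) -> float:
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def sequence_score(E: Matrix, T: Matrix, y: Sequence[int]) -> float:
    return (sum(E[i][label] for i, label in enumerate(y))
            + sum(T[y[i]][y[i + 1]] for i in range(len(y) - 1)))


def crf_nll(E: Matrix, T: Matrix, y: Sequence[int]) -> float:
    """-log p(y | H) = log Z - s(H, y), with log Z from the forward algorithm."""
    k = len(E[0])
    alpha = list(E[0])  # alpha[j]: log of summed exp-scores of all prefixes ending in label j
    for i in range(1, len(E)):
        alpha = [logsumexp([alpha[p] + T[p][j] for p in range(k)]) + E[i][j] for j in range(k)]
    return logsumexp(alpha) - sequence_score(E, T, y)


def brute_force_log_z(E: Matrix, T: Matrix) -> float:
    # Enumerates all k^n label sequences; feasible only for toy inputs.
    return logsumexp([sequence_score(E, T, y) for y in product(range(len(E[0])), repeat=len(E))])


E = [[0.1, 2.0, 0.5], [0.2, 0.1, 1.5], [1.0, 0.3, 0.9]]
T = [[0.0, 0.0, -1e4], [0.0, 0.0, 0.5], [0.0, 0.0, 0.5]]  # -1e4 forbids O -> I-X
print(crf_nll(E, T, [1, 2, 2]), brute_force_log_z(E, T) - sequence_score(E, T, [1, 2, 2]))
```

This loss is averaged over all tokens in the sequence. When most gold labels are O, the gradient is therefore dominated by easy majority-class tokens, which is the imbalance discussed next.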

However, in named entity recognition (NER) tasks, non-entity (“O”) labels account for an overwhelmingly high proportion of tokens, while numerous challenging samples (e.g., boundary-ambiguous or nested entities) coexist. When certain entity categories dominate the dataset with significantly larger sample sizes, the negative log-likelihood (NLL) loss introduces substantial gradient bias. This causes the loss function to be predominantly influenced by high-frequency categories, consequently leading to model predictions that are markedly skewed toward majority classes.

Focal loss [26] was designed to handle class imbalance and varying classification difficulty. In our framework we extend it by integrating Viterbi decoding. The method first compares the Viterbi-decoded path $\hat{y}$ with the ground-truth annotation $y$ position by position. It then generates sequence-aware weighting coefficients $w_t$. Only tokens misclassified under the sequential constraints receive elevated weights: $w_t = 1 + \beta$ when $\hat{y}_t \neq y_t$, and $w_t = 1$ otherwise. We therefore reformulate focal loss as follows:

$$L_{VFL} = -\frac{1}{T} \sum_{t=1}^{T} w_t\, (1 - p_t)^{\gamma} \log p_t$$

In this formulation, $T$ is the sequence length, $p_t$ is the probability of the true label at position $t$, and $\gamma$ is the focusing hyperparameter. Majority-class tokens are usually easy to classify. A moderate value of $\gamma$ (e.g., 2.0) therefore suppresses their gradient contributions and focuses optimization on discriminative features, which improves minority-class performance. The Viterbi weighting factor $w_t$ increases when predictions violate sequential constraints, and the hyperparameter $\beta > 0$ controls how strongly such misclassified tokens are amplified. When $\beta = 0$, the sequence-aware mechanism is inactive. Setting $\beta > 0$ (e.g., 0.75) gives a stronger signal to prioritize correcting errors that disrupt sequential coherence.
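A worked example makes the roles of $\gamma$ and $\beta$ concrete. Consider two illustrative token probabilities, chosen for this example and not taken from the experiments: an easy token with $p_t = 0.9$ and a hard token with $p_t = 0.5$. With $\gamma = 2$, $\beta = 0.75$, and the weighting $w_t = 1 + \beta$ for Viterbi errors, the per-token terms $w_t (1 - p_t)^{\gamma}(-\log p_t)$ are:

$$
\begin{aligned}
\text{easy, correct } (w_t = 1):&\quad (1 - 0.9)^2 \times 0.10536 \approx 0.0010536\\
\text{hard, correct } (w_t = 1):&\quad (1 - 0.5)^2 \times 0.69315 \approx 0.17329\\
\text{hard, Viterbi error } (w_t = 1.75):&\quad 1.75 \times 0.17329 \approx 0.3033
\end{aligned}
$$

Under plain cross-entropy ($\gamma = 0$, $\beta = 0$), the hard token contributes about $0.69315 / 0.10536 \approx 6.6$ times as much as the easy one. With $\gamma = 2$ the ratio rises to about $0.17329 / 0.0010536 \approx 164$, and the Viterbi weight raises it further for sequence-level errors.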

During training, we use a joint optimization framework. It keeps the standard CRF negative log-likelihood objective ($L_{CRF}$) and adds the Viterbi-augmented focal loss ($L_{VFL}$) as an adaptive regularization component (Algorithm 2):

$$L = L_{CRF} + \lambda L_{VFL}$$

Here, $\lambda$ is a weighting hyperparameter, and training minimizes $L$.

Algorithm 2 Viterbi-enhanced focal loss (V-FL)
Input: Sequence length $T$; true-label probability $p_t$ at position $t$; true label sequence $y = (y_1, \ldots, y_T)$; Viterbi decoding path $\hat{y} = (\hat{y}_1, \ldots, \hat{y}_T)$
Output: Total loss $L$
1: Compute the traditional CRF negative log-likelihood loss $L_{CRF}$ from Equation (14)
2: Calculate sequence-aware weighting coefficients:
3: for $t = 1$ to $T$ do
4:   if $\hat{y}_t \neq y_t$ then
5:     $w_t \leftarrow 1 + \beta$ # Increase weight for Viterbi prediction errors
6:   else
7:     $w_t \leftarrow 1$ # Base weight for correct predictions
8:   end if
9: end for
10: Compute Viterbi-based focal loss (V-FL):
11: $L_{VFL} \leftarrow 0$
12: for $t = 1$ to $T$ do
13:   $L_{VFL} \leftarrow L_{VFL} - w_t (1 - p_t)^{\gamma} \log p_t$
14: end for
15: $L_{VFL} \leftarrow L_{VFL} / T$ # Normalization
16: Compute joint optimization total loss: $L \leftarrow L_{CRF} + \lambda L_{VFL}$
17: return $L$
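Algorithm 2 can be sketched in Python as follows, under several assumptions:

- The token probabilities $p_t$, the gold labels, the Viterbi path, and the CRF loss value are already available. How $p_t$ is obtained (for example, from CRF marginals or a per-token softmax) is not specified here.
- The weight is $w_t = 1 + \beta$ for Viterbi errors.
- The defaults are the example values $\gamma = 2.0$ and $\beta = 0.75$ from the text.
- The function names, and the values of `crf_nll` and `lam` in the usage line, are hypothetical.

```python
import math
from typing import Sequence


def viterbi_focal_loss(p_true: Sequence[float], gold: Sequence[int],
                       viterbi_path: Sequence[int], gamma: float = 2.0,
                       beta: float = 0.75) -> float:
    """L_VFL = -(1/T) * sum_t w_t * (1 - p_t)^gamma * log(p_t)."""
    T = len(gold)
    total = 0.0
    for t in range(T):
        # Sequence-aware weight: boost positions where the Viterbi path is wrong.
        w_t = 1.0 + beta if viterbi_path[t] != gold[t] else 1.0
        total -= w_t * (1.0 - p_true[t]) ** gamma * math.log(p_true[t])
    return total / T  # normalization by sequence length


def joint_loss(crf_nll: float, vfl: float, lam: float) -> float:
    """Total objective L = L_CRF + lambda * L_VFL."""
    return crf_nll + lam * vfl


# An easy, correct token and a hard token that the Viterbi path gets wrong.
vfl = viterbi_focal_loss([0.9, 0.5], gold=[0, 1], viterbi_path=[0, 0])
print(vfl, joint_loss(crf_nll=1.2, vfl=vfl, lam=0.5))  # crf_nll and lam are hypothetical
```

Setting `gamma=0.0` and `beta=0.0` reduces $L_{VFL}$ to the mean token-level negative log-likelihood, which is a quick sanity check for an implementation.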

This combined approach integrates the sequence-level constraints of the CRF loss with the token-level hard example weighting of the V-Focal loss. The CRF loss inherently models label transition patterns to ensure global coherence, whereas the V-Focal loss emphasizes difficult-to-classify tokens. Their joint optimization achieves two objectives, as follows: (1) globally coherent label sequences and (2) enhanced attention to challenging boundary cases. As a result, the final predictions exhibit both global sequence consistency and high local classification confidence.

5. Experiments

5.1. Experimental Environment

The experiments were run on an Intel Core i7-8565U processor with 16 GB RAM and 8 GB GPU VRAM, under Windows 11 with a Python 3.8 runtime. The implementation was developed in PyCharm Professional 2023.2.5 within an Anaconda environment, using TensorFlow 2.10.0 and PyTorch 1.13.1. It builds on Google’s BERT-Base-Chinese pre-trained model, whose key hyperparameters are listed in Table 2.

5.2. Experimental Data

The experimental dataset is a custom-built corpus of educational literature in computer-related disciplines (Edu_literature). We collected 1000 pedagogical research papers from CNKI using web crawlers driven by topic keywords such as “industry-education integration”, “OBE concept”, and “project-driven approach”. The papers include author affiliations, titles, abstracts, and keywords. Entities were annotated through a hybrid approach that combines machine processing with manual review. An initial annotation was produced with a pre-trained model-based tool, and human reviewers then verified it to identify and resolve ambiguities and errors. In total, 5312 entities across four categories were annotated; their distribution is shown in Table 3.

The dataset uses the standard BIO (begin–inside–outside) annotation scheme. ‘B-X’ marks the beginning of entity X, ‘I-X’ marks the continuation of entity X, and ‘O’ marks non-entity tokens. Annotation examples are shown in Table 4.
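Converting character-offset entity spans into BIO tags can be sketched as below. The tag names ORG and COU (institution and course) are hypothetical stand-ins, since the dataset's actual label strings are listed only in Table 4. The sketch rejects overlapping spans because a single BIO tag sequence stores exactly one label per character.

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start, end_exclusive, entity_type)


def spans_to_bio(n_chars: int, spans: List[Span]) -> List[str]:
    """Convert character-offset entity spans to BIO tags (flat entities only)."""
    tags = ["O"] * n_chars
    for start, end, etype in sorted(spans):
        if any(tag != "O" for tag in tags[start:end]):
            # One tag per character: overlapping spans cannot share a single BIO sequence.
            raise ValueError(f"span ({start}, {end}, {etype}) overlaps another entity")
        tags[start] = f"B-{etype}"
        for i in range(start + 1, end):
            tags[i] = f"I-{etype}"
    return tags


# "清华大学开设大学物理": "Tsinghua University offers University Physics".
sentence = "清华大学开设大学物理"
print(list(zip(sentence, spans_to_bio(len(sentence), [(0, 4, "ORG"), (6, 10, "COU")]))))
```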

5.3. Evaluation Metrics

To assess model performance, we use three standard NER evaluation metrics: precision, recall, and F1 score.

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times P \times R}{P + R}$$

Here, TP is the number of correctly identified entities, FP is the number of predicted entities that do not match a gold entity, and FN is the number of gold entities that were not recognized.
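Entity-level scoring can be sketched as follows. The sketch makes two assumptions. First, matching is strict: a predicted entity counts as a TP only if its type, start, and end all match a gold entity. Second, a stray I-X tag is handled leniently as the start of a new entity. The paper does not state its exact matching rules, and the tag names are hypothetical.

```python
from typing import List, Sequence, Set, Tuple

Span = Tuple[str, int, int]  # (entity type, start, end_exclusive)


def bio_to_spans(tags: Sequence[str]) -> Set[Span]:
    """Decode BIO tags into entity spans; a stray I-X starts a new entity (lenient)."""
    spans: Set[Span] = set()
    etype, start = None, 0
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel "O" closes a trailing entity
        continues = tag.startswith("I-") and tag[2:] == etype
        if not continues:
            if etype is not None:
                spans.add((etype, start, i))
            etype = None if tag == "O" else tag[2:]
            start = i
    return spans


def precision_recall_f1(gold: List[List[str]], pred: List[List[str]]) -> Tuple[float, float, float]:
    """Strict entity-level P, R, F1: type and both boundaries must match exactly."""
    tp = fp = fn = 0
    for g_tags, p_tags in zip(gold, pred):
        g, p = bio_to_spans(g_tags), bio_to_spans(p_tags)
        tp += len(g & p)  # correctly identified entities
        fp += len(p - g)  # predicted entities with no exact gold match
        fn += len(g - p)  # gold entities that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [["B-ORG", "I-ORG", "O", "B-COU", "I-COU"]]
pred = [["B-ORG", "I-ORG", "O", "B-COU", "O"]]  # course boundary cut short
print(precision_recall_f1(gold, pred))  # (0.5, 0.5, 0.5)
```

The truncated course span counts as both a false positive and a false negative under strict matching. This is why boundary errors weigh heavily on the entity-level F1.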

5.4. Entity Recognition and Analysis in Education

Because the Edu_literature dataset is small, a single train–test split risks unstable evaluation due to partitioning variability. To mitigate this, we used 5-fold cross-validation for all six compared models. In each fold, a model is trained on four-fifths of the data and tested on the remaining fifth. Every sample is thus used for testing exactly once, which maximizes data utility. We averaged the performance metrics over all folds to obtain a more robust estimate of model performance and stability.
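A minimal sketch of the 5-fold protocol follows. It assumes that the 1000 papers are split at the document level after a seeded shuffle. The seed and function name are illustrative, not taken from the paper.

```python
import random
from typing import List, Tuple


def k_fold_indices(n: int, k: int = 5, seed: int = 42) -> List[Tuple[List[int], List[int]]]:
    """Return k (train, test) index splits; every index is tested exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::k] for f in range(k)]  # k near-equal, disjoint folds
    splits = []
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g, fold in enumerate(folds) if g != f for i in fold)
        splits.append((train, test))
    return splits


splits = k_fold_indices(1000, k=5)
print([len(test) for _, test in splits])  # five test folds of 200 documents each
# Per-fold metrics (e.g., F1) are then averaged across the five folds.
```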

Table 5 reports the average performance across the five folds for the following models:

- BiLSTM+CRF;
- BERT+BiLSTM+CRF [9];
- ALBERT+BiLSTM+CRF [12];
- Lattice-LSTM [27];
- Soft-Lexicon (LSTM) [28];
- the proposed model.

As shown in Table 5, the proposed model achieves the best precision (90.38%), recall (89.71%), and F1 score (90.04%), outperforming every comparative model on all three metrics. Compared with the BiLSTM+CRF baseline (F1 = 84.11%), it improves the F1 score by 5.93 percentage points, which indicates that its architectural enhancements are effective.

Among the pre-trained language models, ALBERT+BiLSTM+CRF (F1 = 87.30%) slightly surpasses BERT+BiLSTM+CRF (F1 = 86.71%). This suggests that ALBERT’s parameter-sharing mechanism may lead to more stable representation learning. Both still fall short of the proposed model, so simply adopting a pre-trained model leaves room for further optimization.

Among the lexicon-enhanced models, Soft-Lexicon (LSTM) (F1 = 88.44%) outperforms Lattice-LSTM (F1 = 85.84%), which supports the effectiveness of soft lexical integration. The proposed model exceeds both. This is likely owing to more refined semantic enhancement, such as weighted integration of domain-specific dictionaries, which enables more accurate boundary detection and type recognition.

Overall, the proposed model maintains high precision while keeping recall balanced. Its advantage likely stems from targeted modeling of domain-specific characteristics and deep fusion of character- and word-level information.

To test whether the improvement of ES-BERT over the classic BERT+BiLSTM+CRF model is statistically significant, we compared the two models with a paired t-test on their F1 scores from 5-fold cross-validation. The results are presented in Table 6.

The paired-sample t-test on the 5-fold cross-validation results (Table 6) shows that the proposed model (M = 0.900, SD = 0.010) significantly outperformed the BERT+BiLSTM+CRF baseline (M = 0.867, SD = 0.008), t(4) = −6.91, p = 0.002. The performance gain is therefore robust and unlikely to be attributable to random variation. The moderate positive correlation (r = 0.336) between the two models’ fold-level scores suggests that both were influenced by similar data characteristics. Even so, the proposed model consistently achieved higher scores. These results provide strong evidence that the proposed model offers a meaningful improvement in predictive accuracy over the baseline.
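As a consistency check on Table 6, the paired t statistic can be recovered from the reported summary statistics alone. This works because the variance of the fold-wise differences is determined by the two SDs and their correlation. The sketch below uses only the reported values.

Because M and SD are rounded to three decimals, it gives t ≈ 7.0 rather than exactly 6.91. The sign is positive because it subtracts the baseline from the proposed model, whereas the reported value uses the reverse order. With the raw per-fold scores, `scipy.stats.ttest_rel` would give the exact statistic and p-value.

```python
import math


def paired_t_from_summary(mean_a: float, sd_a: float, mean_b: float, sd_b: float,
                          r: float, n: int) -> float:
    """Paired t statistic from per-model summary statistics.

    The SD of the per-fold differences follows from
    Var(a - b) = sd_a^2 + sd_b^2 - 2 * r * sd_a * sd_b.
    """
    sd_diff = math.sqrt(sd_a ** 2 + sd_b ** 2 - 2 * r * sd_a * sd_b)
    return (mean_a - mean_b) / (sd_diff / math.sqrt(n))


# Reported: proposed M = 0.900, SD = 0.010; baseline M = 0.867, SD = 0.008; r = 0.336; 5 folds.
t = paired_t_from_summary(0.900, 0.010, 0.867, 0.008, 0.336, 5)
print(round(t, 2))
```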

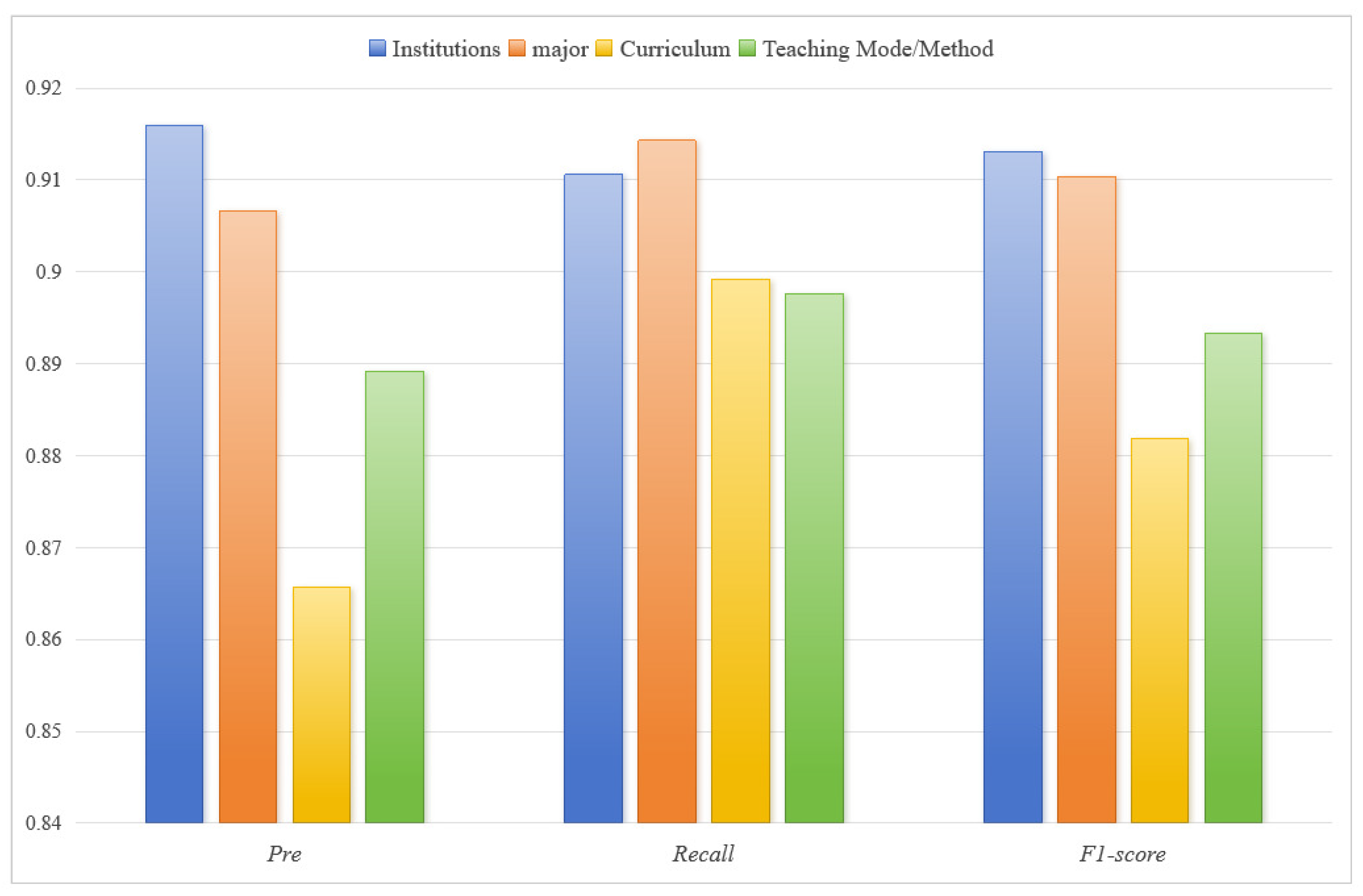

Building on the comparative results, we further evaluate our model on the four entity categories (institutions, majors, courses, and teaching methods/modes) using multiple evaluation metrics, as shown in Figure 4.

Figure 4 shows that our model achieves higher F1 scores for institution and major entities than for course and teaching-method/mode entities. Several factors explain the difference:

- Institution entities occur more frequently and with greater diversity in our self-constructed dataset, which allows more comprehensive pattern learning during training.
- Institution names carry distinctive lexical markers (e.g., “University”/“College”) that further boost recognition accuracy.
- Major names follow standardized naming conventions documented in publications of China’s Ministry of Education, particularly the “Undergraduate Program Catalog” and the “Vocational Education Major Catalog”. These establish clear naming patterns (e.g., “Computer Science”, “Computer Science and Technology”), which explains the high accuracy for majors.
- Course names are harder because of lexical polysemy. Terms such as “Java Programming”, “Web Front-end Development”, and “Computer Operating Systems” serve both as course titles and as technical terminology, which introduces classification noise and impedes accurate disambiguation.

To address these limitations, future work will focus on (1) expanding the dataset and enriching the education-domain lexicon and (2) improving word segmentation precision and entity boundary detection. The aim is to obtain more precise entity features and boundary information, and thereby better recognition performance.

5.5. Ablation Study

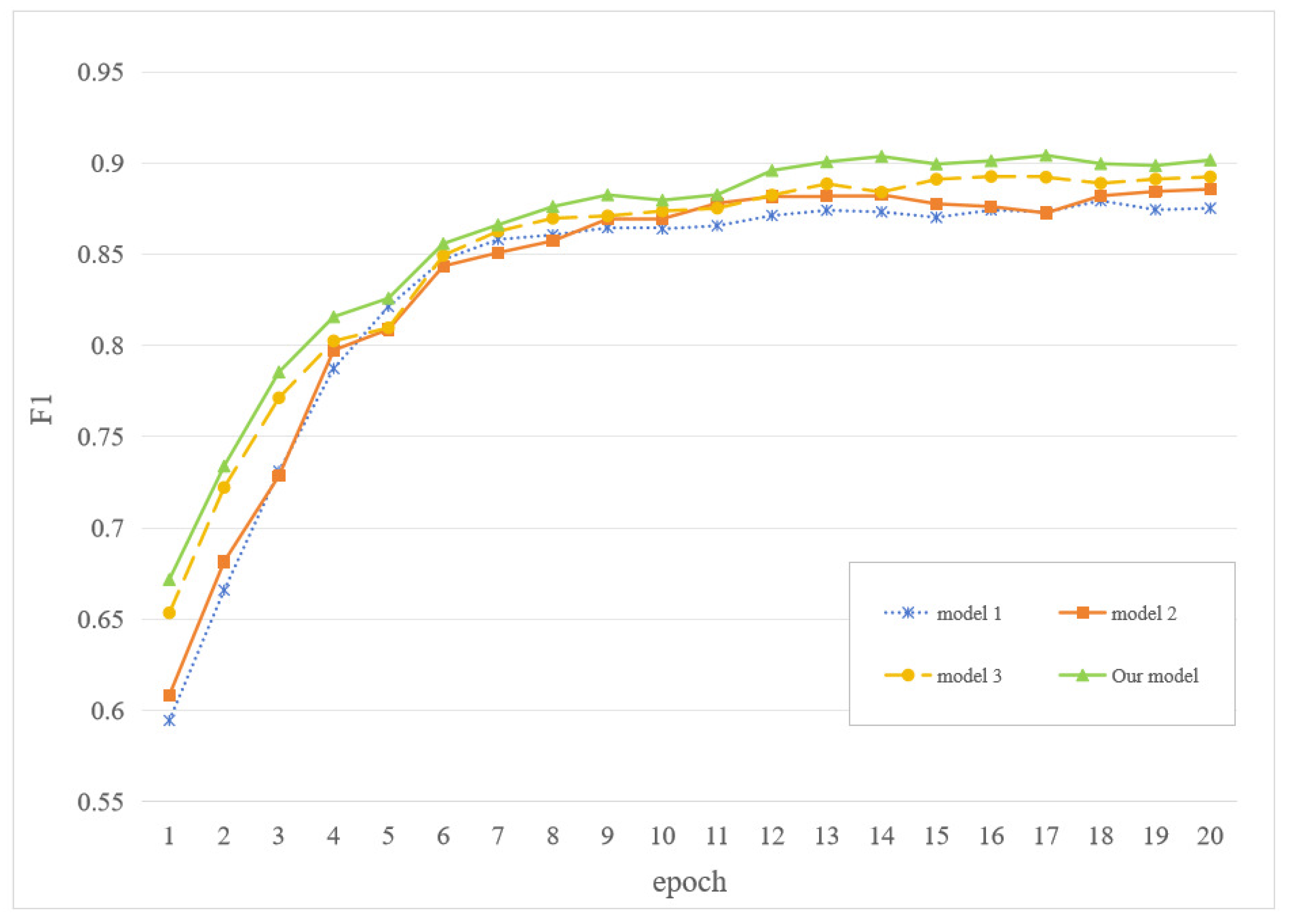

To evaluate the contribution of each module of the ES-BERT model with enhanced semantic representation, we designed four incrementally extended model variants for comparative analysis:

Model 1 (Baseline): Uses exclusively BERT-generated character embeddings as input to the BiLSTM-CRF framework, with negative log-likelihood (NLL) loss as the objective function.

Model 2: Building upon Model 1, semantic enhancement was incorporated without the use of a domain-specific lexicon. A word segmentation tool was applied to generate tokens, which were then fused with character-level representations to form the input to the model.

Model 3: Based on Model 2, a domain lexicon was integrated along with the proposed ES-BERT model. Domain-relevant vocabulary was combined with character-level information to construct the input representation.

Model 4 (Full Model): An enhanced version of Model 3, in which a modified focal loss-based joint loss function was introduced to replace the original loss function.

Figure 5 displays the F1 scores obtained from 20 iterative training runs of each model configuration.

Figure 5 shows that, after convergence, Model 2 achieves higher overall F1 scores than Model 1, which confirms that fusing lexical information enhances recognition. However, our analysis reveals that word segmentation errors propagate to downstream entity recognition and thereby limit further performance improvements.

To address this limitation, Model 3 introduces a domain-dictionary matching mechanism. For each character, it generates a set of candidate words matched from the domain dictionary and weights them by TF-IDF. Low-weight candidates produced by erroneous segmentation are then filtered out. This reduces the impact of incorrect segmentation and raises the F1 score above that of Model 2.
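The candidate-set construction can be sketched in the spirit of SoftLexicon-style B/M/E/S word sets. The sketch assumes a sentence-level term frequency, a smoothed IDF computed over a reference corpus, and a fixed weight threshold. These formulas, the threshold, and all function names are hypothetical, because this section does not give the paper's exact weighting.

```python
import math
from collections import defaultdict

def idf_table(lexicon, corpus):
    """Smoothed IDF per lexicon word: ln((1 + N) / (1 + df)) + 1, df = documents containing the word."""
    n_docs = len(corpus)
    return {
        word: math.log((1 + n_docs) / (1 + sum(word in doc for doc in corpus))) + 1
        for word in lexicon
    }

def candidate_sets(sentence, lexicon, idf, threshold):
    """Per character, map lexicon words covering it to TF-IDF weights, grouped by position B/M/E/S."""
    max_len = max(len(word) for word in lexicon)
    matches = [
        (s, e, sentence[s:e])
        for s in range(len(sentence))
        for e in range(s + 1, min(s + max_len, len(sentence)) + 1)
        if sentence[s:e] in lexicon
    ]
    tf = defaultdict(int)  # occurrences of each matched word in this sentence
    for _, _, word in matches:
        tf[word] += 1
    sets = [{"B": {}, "M": {}, "E": {}, "S": {}} for _ in sentence]
    for s, e, word in matches:
        weight = tf[word] / len(sentence) * idf[word]
        if weight < threshold:
            continue  # low-weight candidates are dropped
        if e - s == 1:
            sets[s]["S"][word] = weight
            continue
        sets[s]["B"][word] = weight
        sets[e - 1]["E"][word] = weight
        for i in range(s + 1, e - 1):
            sets[i]["M"][word] = weight
    return sets
```

The matcher keeps every dictionary word that overlaps a character, so a single wrong segmentation cannot remove the correct candidate. The threshold then prunes words that occur in many documents and therefore carry little information.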

Model 4, the full model, builds on Model 3 and obtains the highest F1 score. It tackles two principal difficulties of domain-specific NER, vague entity boundaries and specialized terminology, through strengthened domain semantic encoding. This encoding yields more informative and precise features and improves boundary recognition. In addition, a modified focal loss addresses class imbalance by assigning larger weights to hard examples that remain misclassified under contextual constraints, which focuses learning on challenging instances. Together, these modules markedly improve recall and, consequently, the F1 score.
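For reference, the standard focal loss of Lin et al. (2017) applies to a token whose gold tag has predicted probability p_t and is defined as FL(p_t) = -α(1 - p_t)^γ log p_t. With γ = 0 and α = 1 it reduces to cross-entropy, and γ > 0 shrinks the loss of well-classified tokens so that training concentrates on hard ones. This section does not specify the authors' modification or how the joint loss combines its terms. The sketch below therefore shows only the standard focal term and a hypothetical joint objective, CRF NLL + λ·focal. The weight λ (`lam`) and the token-level softmax over emission scores are both assumptions.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of per-tag scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def focal_loss(token_scores, gold_tags, gamma=2.0, alpha=1.0):
    """Mean standard focal loss over tokens: -alpha * (1 - p_t) ** gamma * log(p_t)."""
    total = 0.0
    for scores, gold in zip(token_scores, gold_tags):
        p_t = softmax(scores)[gold]  # probability assigned to the gold tag
        total += -alpha * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(gold_tags)

def joint_loss(crf_nll_value, token_scores, gold_tags, lam=0.5, gamma=2.0):
    """Hypothetical joint objective: CRF NLL plus lam times the focal term."""
    return crf_nll_value + lam * focal_loss(token_scores, gold_tags, gamma=gamma)
```

Setting `gamma` to 0 recovers plain token-level cross-entropy. This gives a convenient sanity check when tuning the focal loss hyperparameters.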

The ablation study shows that each component contributes to the performance gain and that the components are most effective in combination. These findings provide methodological insights for multi-feature fusion in NER tasks.

5.6. Model Transferability

To validate the cross-domain transferability of the proposed model, comparative experiments were conducted on the open-source KBQA and Resume datasets. Because dedicated domain-specific lexicons are not available for these datasets, the vocabulary of Tencent’s open-source Chinese word vectors served as a common lexicon for word segmentation in all trials.

- (1) The knowledge base question answering (KBQA) dataset, sourced from the NLPCC-ICCPOL 2016 shared task, comprises a knowledge base with 24,030 entity-attribute triples. We split the corresponding QA pairs into training (14,609 pairs), validation (3945 pairs), and test (3945 pairs) sets.

- (2) The Resume dataset contains 16,565 annotated entities across 8 categories (including educational background, locations, and personal names), providing additional scenarios for evaluating transfer learning capability.
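Only the vocabulary of the Tencent word vectors is needed to serve as a lexicon. The sketch below assumes the vectors are distributed in the usual word2vec text format: a header line with the vocabulary size and dimension, then one word and its values per line. Under that assumption, extracting the lexicon needs only the first field of each line, and the file can be streamed rather than loaded into memory. The function name and the word-length bounds (`min_len`, `max_len`) are hypothetical choices, not values from the paper.

```python
import io

def load_lexicon(stream, min_len=2, max_len=6):
    """Collect words from a word2vec-style text embedding file as a lexicon.

    Assumed format: a header line "<vocab_size> <dim>", then one entry per line,
    "word v1 v2 ... v_dim", separated by single spaces. Only the word is kept.
    """
    stream.readline()  # skip the header line
    lexicon = set()
    for line in stream:
        word = line.split(" ", 1)[0].strip()
        if min_len <= len(word) <= max_len:
            lexicon.add(word)
    return lexicon

if __name__ == "__main__":
    # In practice, pass open(path, encoding="utf-8") to stream the large file line by line.
    demo = io.StringIO("2 3\n大学物理 0.1 0.2 0.3\n的 0.0 0.1 0.2\n")
    print(load_lexicon(demo))  # {'大学物理'}
```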

Table 7 presents the model’s performance on the KBQA task, whose knowledge base consists of triples. Our model achieves state-of-the-art results of 98.41% precision, 98.35% recall, and 98.36% F1 score. These results indicate that the model accurately recognizes diverse entity categories (including persons, organizations, and temporal expressions) in knowledge-intensive QA scenarios. Further analysis shows that the model maintains high precision while improving the recognition rate of low-frequency entities. We attribute this to the combination of the ES-BERT architecture with focal loss-based joint training. These outcomes support the effectiveness of the architecture and suggest a viable technical solution for entity recognition in knowledge base question answering systems.

Experimental results on the Chinese Resume dataset (Table 8) demonstrate the efficacy of the ES-BERT model. By fusing character-level and lexicon-based features, the model captures domain knowledge from resumes and precisely identifies entity boundaries (e.g., person names, education, work experience). It achieves state-of-the-art performance (96.75% precision, 96.31% recall, and 96.53% F1 score), which shows that our approach handles the distinctive linguistic patterns of Chinese resumes. Its boundary detection accuracy is highly competitive.

Taken together, the cross-domain transfer experiments show strong domain adaptation and generalization on two distinct datasets. This holds even when the general-purpose Tencent open-source Chinese word vectors replace a dedicated domain lexicon. These results support the application of the model in cross-domain scenarios.

6. Conclusions

Advances in AI technology are increasing the importance of named entity recognition (NER) for educational applications such as adaptive learning, intelligent tutoring, and academic research, establishing it as a critical enabling technology for intelligent education systems. A key limitation of educational NER is insufficient lexical semantic awareness, which leads to weak domain-specific representations and imprecise entity boundaries. To address this, we propose ES-BERT, a model with enhanced semantic representation. By integrating a domain-specific semantic enhancement mechanism into BERT’s architecture, ES-BERT provides an effective solution for domain-specific NER.

Nevertheless, the study is subject to certain limitations, particularly the restricted scale of the annotated dataset and the limited coverage of the domain lexicon, underscoring the need for more extensive resources. Several architectural directions warrant further investigation in future work, such as extending lexical-semantic enhancement beyond BERT’s lower layers to optimize feature integration strategies, developing dynamic weighting mechanisms for lexical representations across different network depths, and systematically examining the effect of focal loss hyperparameters. Advances in these areas are expected to strengthen the model’s ability to comprehend complex semantic structures in the educational domain.

Author Contributions

Conceptualization, methodology, software, writing—original draft, writing—review, editing: P.H. and H.Z.; methodology, data curation, writing—original draft, visualization: Y.W. and L.D.; validation, investigation: L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the following projects: the Research Project on Higher Education Teaching Reform in Jiangsu Province (grant number 2025JGYB405), the Jiangsu Province Industry Education Integration First Class Curriculum Project “Virtual Reality Technology and Application” (grant number 03145031), and the School Level Educational Reform Project of Nanjing University of Science and Technology ZiJin College (grant number 20240103005).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zheng, S.; Shen, Y. Educational Named Entity Recognition Integrating Word Information and Self-Attention Mechanism. Softw. Guide 2024, 23, 105–109.

- Ren, Y.; Su, B.; Yuan, S. Multidimensional Feature Named Entity Recognition Method in Education Domain. Comput. Eng. 2024, 50, 110–118.

- Qiao, B.; Zou, Z.; Huang, Y.; Fang, K.; Zhu, X.; Chen, Y. A joint model for entity and relation extraction based on BERT. Neural Comput. Appl. 2022, 34, 3471–3481.

- Han, Q. Construction of Intelligent Question-Answering System to Improve Knowledge Management Service from the Perspective of Education Informatization. J. Inf. Knowl. Manag. 2025, 24, 1–22.

- Li, Y.; Liang, Y.; Yang, R.; Qiu, J.; Zhang, C.; Zhang, X. CourseKG: An Educational Knowledge Graph Based on Course Information for Precision Teaching. Appl. Sci. 2024, 14, 2710.

- Hu, Y.; Chen, Y.; Huang, R.; Qin, Y. CRF-combined boundary assembly method for biomedical named entity recognition. Appl. Res. Comput. 2021, 38, 2025–2031.

- Zhang, R.; Dai, L.; Guo, P.; Wang, B. Chinese Nested Named Entity Recognition Algorithm Based on Segmentation Attention and Boundary-aware. Comput. Sci. 2023, 50, 213–220.

- Dong, H.; Kong, Y.; Gao, W.; Liu, J. Named entity recognition for public interest litigation based on a deep contextualized pretraining approach. Sci. Program. 2022, 1, 7682373.1–7682373.14.

- Hu, H.; Li, J.; Dong, Z.; Bai, X. Named Entity Recognition Method in Educational Technology Field Based on BERT. Comput. Technol. Dev. 2022, 32, 164–168.

- Xie, T.; Yang, J.-A.; Liu, H. Chinese entity recognition based on BERT-BiLSTM-CRF model. Comput. Syst. Appl. 2020, 29, 48–55.

- Lu, Z.; Zhao, W.; Yin, G. Named Entity Recognition for Textual Intelligence Based on RoBERTa_BiLSTM_CRF. J. China Acad. Electron. Inf. Technol. 2024, 19, 442–447.

- Jin, L.; Zhang, Y.; Yuan, Z.; Gao, S.; Gu, M.; Liu, X. Chinese named entity recognition of transformer bushing faults based on ALBERT-BiLSTM-CRF. IEEE Trans. Ind. Appl. 2025, 61, 2115–2123.

- Chen, S.; Dou, Q.; Tang, H.; Jiang, P. Chinese nested named entity recognition based on vocabulary fusion and span detection. Appl. Res. Comput. 2023, 40, 2382–2386+2392.

- Liu, W.; Fu, X.; Zhang, Y.; Xiao, W. Lexicon enhanced Chinese sequence labeling using BERT adapter. Int. Jt. Conf. Nat. Lang. Process. Meet. Assoc. Comput. Linguist. 2021, 2021, 5847–5858.

- Wu, G.; Fan, C.; Tao, G.; He, Y. Entity recognition of electronic medical records based on LEBERT-BCF. Comput. Era 2023, 2, 92–97.

- Sheng, L.; Zhang, Y.; Wu, D. Chinese named entity recognition method based on lexical enhancement. Mod. Electron. Tech. 2022, 45, 157–162.

- Wang, Y.; Wang, Z.; Yu, H.; Wang, G.; Lei, D. The interactive fusion of characters and lexical information for Chinese named entity recognition. Artif. Intell. Rev. 2024, 57, 258.1–258.21.

- Zhao, J.; Qian, Y.; Wang, K.; Hou, S.; Chen, J. Survey of Chinese named entity recognition research. Comput. Eng. Appl. 2024, 60, 15–27.

- Yang, P.; Dong, W. Chinese named entity recognition method based on BERT embedding. Comput. Eng. 2020, 46, 40–45+52.

- Huang, S.; Sha, Y.; Li, R. A Chinese named entity recognition method for small-scale dataset based on lexicon and unlabeled data. Multimed. Tools Appl. 2023, 82, 2185–2206.

- Chen, S.; Luo, C.; Ouyang, X.; Li, W. A Semantic-enhanced Chinese Named Entity Recognition Algorithm Based on Dynamic Dictionary Matching. Radio Eng. 2021, 51, 519–525.

- Zheng, X.; Li, B.; Feng, Z.; Liu, X. Entity recognition of network sensitive words and variants based on BERT-BiLSTM-CRF. Comput. Digit. Eng. 2023, 51, 1585–1589.

- Che, X.; Xu, H.; Pan, M.; Liu, Q.-L. Two-stage learning algorithm for biomedical named entity recognition. J. Jilin Univ. Eng. Technol. Ed. 2023, 8, 2380–2387.

- Liu, X.-H.; Xu, R.-Z.; Yang, C.-Y. A Chinese named entity recognition model based on multi-feature fusion embedding. Comput. Eng. Sci. 2024, 46, 1473–1481.

- Liao, T.; Gou, Y.; Zhang, S. BERT-BiLSTM-CRF Chinese named entity recognition combined with attention mechanism. J. Fuyang Norm. Univ. Nat. Sci. 2021, 38, 86–91.

- Dang, X.; Liu, J.; Dong, X.; Zhu, Z.; Li, F. Named Entity Recognition of Mechanical Equipment Failure for Imbalanced Data. Comput. Eng. 2024, 50, 104–112.

- Zhang, Y.; Yang, J. Chinese NER using lattice LSTM. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 1554–1564.

- Ma, R.; Peng, M.; Zhang, Q.; Huang, X. Simplify the usage of lexicon in Chinese NER. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 5951–5960.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).