1. Introduction

Job-related crimes are criminal offenses committed by public officials within state institutions, state-owned enterprises, or people’s organizations. These crimes involve the abuse of state-delegated powers through acts of corruption, fraud, dereliction of duty, infringement of citizens’ personal rights, or subversion of state regulations, all of which are punishable under China’s Criminal Law. If job-related crimes cannot be dealt with in a timely and fair manner, the credibility and efficiency of the government suffer, which is why the anti-corruption campaign aims to crack down on them. Although China’s rigorous anti-corruption campaigns have yielded significant results in recent years, the adjudication of job-related crimes faces systemic challenges, including protracted trial cycles and severe case backlogs, particularly in grassroots courts. Public judicial data reveal a consistent upward trend in such cases processed by courts and procuratorates. Within traditional judicial workflows, judges manually analyze voluminous historical precedents and legal provisions, a process that is labor-intensive, time-consuming, and prone to sentencing inconsistencies due to subjective interpretation. These deviations not only undermine judicial fairness but also risk eroding public trust in judicial transparency. Consequently, research that enhances sentencing efficiency, minimizes human interference, and standardizes judicial outcomes has become an urgent imperative.

Research on artificial intelligence has advanced rapidly in recent years and has had a significant impact on practical work in the legal field. With the promulgation of the EU Artificial Intelligence Act, Legal Artificial Intelligence (Legal AI) has attracted widespread interest from judicial practitioners and artificial intelligence researchers, drawing on rich research paradigms and diverse traditions from other disciplines, such as natural language processing, machine learning, mathematical statistics, and big data science. At the same time, Legal AI has improved efficiency and supported fairer judgments in judicial practice.

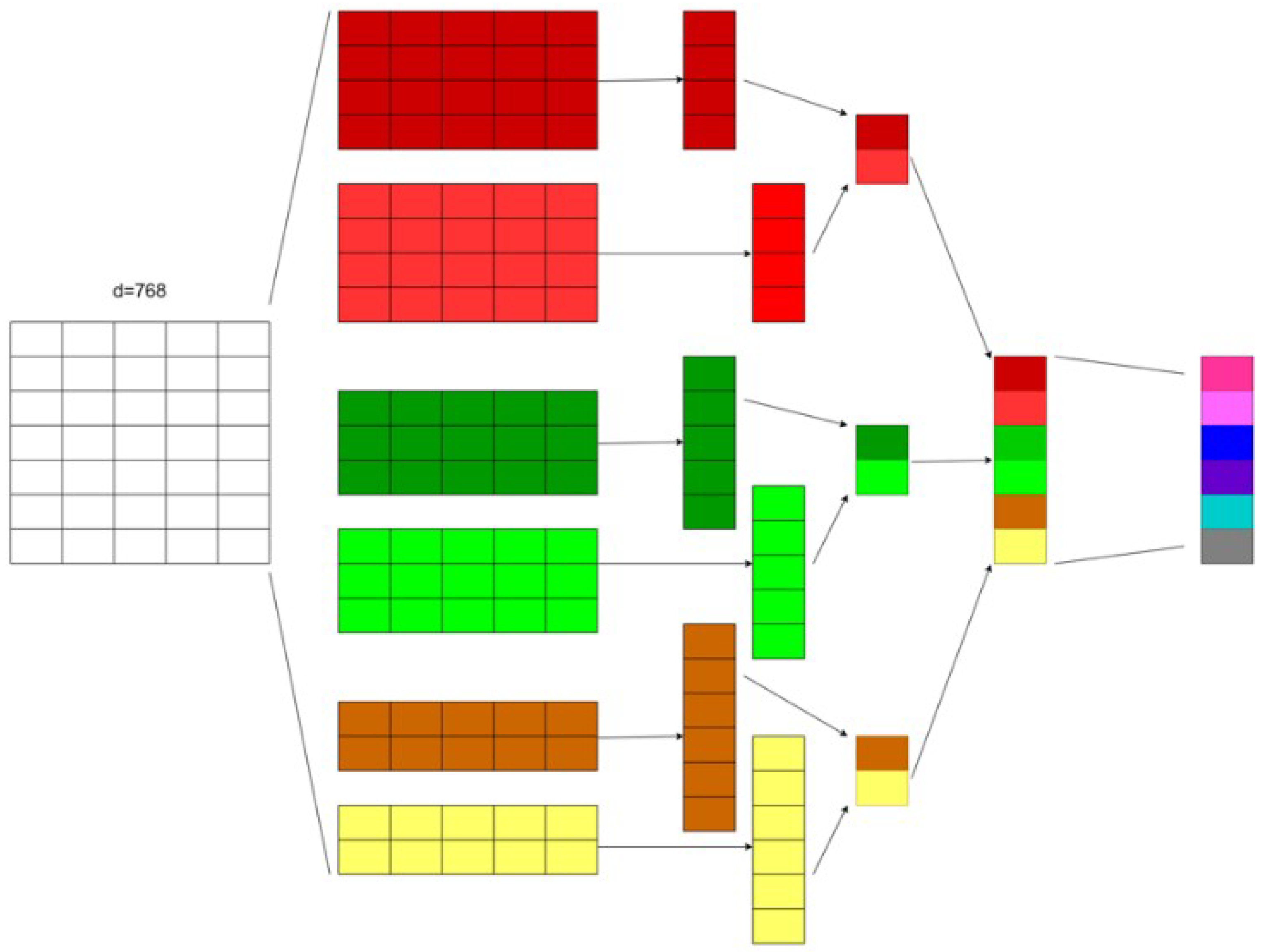

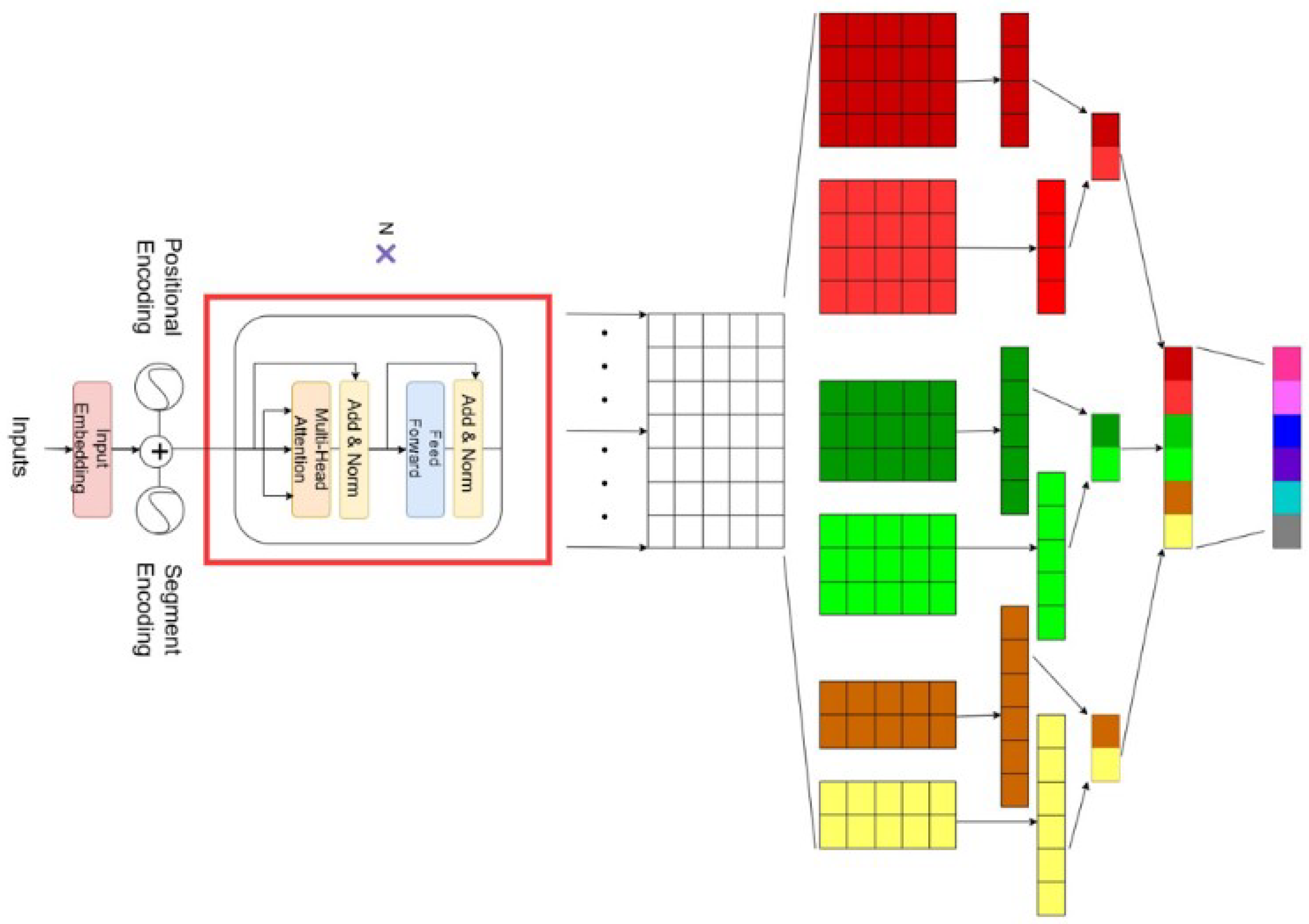

Legal AI should play a role in the governance of job-related crimes, especially by fully leveraging the legal semantic capture capabilities of BERT and TextCNN. To address the urgent judicial challenges posed by job-related crimes in China’s anti-corruption campaign, this study pursues the following research objectives: designing a hierarchical feature fusion network for legal semantics, optimizing domain adaptation for job-related crimes in legal classification, and advancing AI’s role in China’s anti-corruption campaign through feature fusion and a hierarchical workflow.

2. Related Work

The rapid development of artificial intelligence technology has brought new possibilities to judicial AI [1]. Early systems mostly focused on expert rule libraries or traditional machine learning methods (such as support vector machines), matching historical precedents with structured case features to provide trial references for judges. Goncalves et al. annotated legal texts, including 42 rulings of the European Court of Human Rights [2]. Nallapati et al. proposed that traditional machine learning classifiers have shortcomings in the connection and extraction of relationships based on the argument from unstructured text to structured text [3]. However, such methods rely on manually defined feature engineering, which makes it difficult to deal with the complex semantics and unstructured features of legal texts. Especially when dealing with cases of job-related crimes, suggestions by judicial AI are often rigid due to the variability of the case and the cross-application of legal provisions, resulting in limited practical application effects.

Natural language processing technology, with its advantages in text understanding and semantic analysis, has shown potential in scenarios such as legal Q&A and contract review [4]. Mikolov et al. proposed the word2vec word embedding method to address the inherent shortcomings of traditional word representation methods in word order and idiomatic expression [5]. The CBOW (continuous bag of words) and Skip-Gram models use neural networks to predict words from their context, capture a large number of accurate syntactic and lexical semantic relationships, and learn high-quality distributed representations of words, namely, word embeddings. The introduction of negative sampling and hierarchical softmax improves the learning speed of dense word embedding vectors [6]. Aletras et al. combined legal provisions, word embeddings, and part-of-speech tagging to predict judgment outcomes from the facts described in the input legal case [7]. To capture more multi-level contextual information, Vaswani et al. proposed the Transformer, a model built on the attention mechanism that makes gradient descent more effective and has broad applicability [8]. BERT (Bidirectional Encoder Representations from Transformers) is composed of multiple stacked Transformer encoder layers, each containing a multi-head self-attention mechanism and a feedforward neural network [9]. The self-attention mechanism dynamically calculates the correlation weights between words, enabling the model to focus on global context and local key information simultaneously [10]. Because BERT uses bidirectional Transformer encoders, each token can directly attend to all other tokens in the input sequence. Every token representation output by BERT in later layers is therefore inherently contextualized, incorporating global and coarse-grained information from the entire sequence to predict crime and capturing long-range dependencies and semantic relationships beyond immediate neighbors. Kim proposed the TextCNN model [11], which uses a convolutional neural network (CNN) to classify text. The text is first transformed into a dense vector representation through word embedding, which maps the discrete vocabulary to a continuous semantic space and allows local feature information to be captured effectively for the text to be classified [12]. In addition, TextCNN applies multiple convolutional filters of varying widths that slide over the word embedding sequence, so each filter specializes in detecting specific local patterns (n-grams) within its window size. Each filter produces a feature map that highlights where its local, fine-grained pattern occurs, enabling quantitative analysis of the case.
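The sliding-filter behaviour described above can be illustrated with a minimal pure-Python sketch. It is not the TextCNN implementation used in this paper: the two-dimensional embeddings, the filter values, and the helper names `conv1d_feature_map` and `max_over_time` are all made up for illustration, and a real model would learn many filters per width.

```python
from typing import List

Vector = List[float]


def conv1d_feature_map(embeddings: List[Vector], kernel: List[Vector]) -> List[float]:
    """Slide one filter of width len(kernel) over the token embeddings.

    Each output value is the ReLU of the dot product between the filter
    and one window of consecutive token vectors (one n-gram).
    """
    width = len(kernel)
    feature_map = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        score = sum(
            w * x
            for k_vec, tok_vec in zip(kernel, window)
            for w, x in zip(k_vec, tok_vec)
        )
        feature_map.append(max(0.0, score))  # ReLU
    return feature_map


def max_over_time(feature_map: List[float]) -> float:
    """Keep only the strongest response of the filter across all positions."""
    return max(feature_map)


# Toy 2-dimensional embeddings for a 5-token sentence (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [1.0, 0.0]]
# One hypothetical bigram filter (width 2) and one trigram filter (width 3).
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]
trigram_filter = [[0.0, 1.0], [1.0, 1.0], [0.0, -1.0]]

bigram_map = conv1d_feature_map(tokens, bigram_filter)
trigram_map = conv1d_feature_map(tokens, trigram_filter)
# One pooled value per filter forms the local feature vector.
local_features = [max_over_time(bigram_map), max_over_time(trigram_map)]
```

The position of the largest value in each feature map indicates which n-gram the filter responds to most strongly, which is the sense in which a feature map locates fine-grained information.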

In recent years, large language models (LLMs) have developed rapidly. They are deep learning models trained on large amounts of text data that can generate natural language text or understand its meaning [13]. LLMs can fully leverage the advantages of pre-training on large datasets by using computing power and deep learning patterns [14]. Yue proposed the DISC-LawLLM model based on an LLM, which can solve specific tasks in the legal domain [15]. Moreover, Cui proposed using LLaMA and a knowledge graph to construct the ChatLaw model, which has been iterated to the second-generation ChatLaw2 MoE [16]. Represented by these models, multiple Chinese legal models have achieved excellent performance in Chinese legal Q&A and case matching [17]. The Lexis+ AI platform launched by LexisNexis integrates large language models such as GPT-4, which can generate sentencing recommendations by analyzing variables such as case nature, involved amount, and defendant background [18]. The Law-AI tool developed by the Casetext group uses natural language processing technology to assist lawyers in legal retrieval, working from human instructions [19]. LLMs have a wide range of application scenarios. They are pre-trained on massive data through self-supervised or semi-supervised learning, and their performance and ability are then further optimized through fine-tuning and human alignment [20]. Existing legal intelligence applications mainly focus on general legal service scenarios [21], such as case retrieval or legal consultation, and support for sentencing decisions mostly stays at the level of macro suggestions.

Expert systems and traditional machine learning methods have limitations in predicting job-related crimes. Manual rules fail to handle edge cases, e.g., novel corruption tactics, causing higher error rates in complex job-related crimes [22]. Typically, lawyers dedicate months to encoding rules and keeping systems scalable in response to evolving law [23]. Without contextual knowledge, ambiguous language, such as the terms “gift” and “bribe,” cannot be interpreted, leading to rigid outcomes [24]. Documented failures of rule-based systems in common law show inaccuracy in statutory interpretation [25]. Traditional machine learning has proved inadequate for job-related crimes, where case variability and contextual semantics, such as abuse of power, demand adaptive reasoning [26]. Compared with expert systems and traditional machine learning methods, word embeddings and convolutional neural networks have attracted more attention from researchers. Word embeddings encode words as dense vectors, enabling similarity metrics and distributional semantics [27]. For large corpora, negative sampling makes training more efficient and reduces compute costs [28]. Analogies learned by word embeddings, such as man is to king as woman is to queen, could help parse legal relationships in job-related crimes [29]. However, word embeddings are static [30], i.e., a word is assigned the same vector regardless of its sense; they overfit to global feature associations and fail to encode the window of words around each occurrence. Therefore, polysemous words, such as “gift” in legal and everyday contexts, may be misunderstood. Feature fusion is a method that addresses both the overfitting in global feature associations and the encoding of word windows. In deep learning, feature fusion integrates multiple cues or features representing different aspects of the input to create a more comprehensive feature representation for tasks like classification. The linear combination of feature statistics and its extension to a general nonlinear fusion method involve minimizing a mean-square error and a regularization term [31]. When features from different layers or branches are combined, fusion is often implemented via simple operations, such as summation or concatenation. Attentional feature fusion is described by short and long skip connections as well as feature fusion within the frontend layer [32].

However, the current research on legal classification prediction lacks an in-depth examination of auxiliary sentencing for the subdivision scenario of job-related crimes, which is characterized by a lack of logical analysis of criminal circumstances, factual determination, and legal relationships. In particular, insufficient attention is paid to research on the feature fusion of criminal circumstances. Job-related crime is a type of criminal offence that arises from the facts and legal consequences of a duty act. Its legal text includes both coarse-grained information for predicting crime and noncrime and fine-grained information for quantitatively analyzing the length of imprisonment of the defendant. This paper proposes a multi-level feature fusion method based on attention and convolutional kernels to address the problem of global and local feature segmentation in legal texts related to job-related crimes. The attention mechanism captures global features by embedding different semantic positions, while the convolutional kernel obtains local features through sliding windows. Multiple granularity features are then fused to enhance the model’s ability to perceive semantic information in job-related crime scenarios and improve its predictive performance for job-related crimes.
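The idea of fusing a global, attention-derived feature with a local, window-derived feature can be sketched in a few lines of pure Python. This is only an illustration under strong simplifying assumptions, not the model proposed in this paper: the attention uses the raw token vectors as queries, keys, and values (no learned projections or multiple heads), the local branch uses a fixed averaging window instead of learned convolution kernels, and the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with Q = K = V = tokens (assumption)."""
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)  # how much this token attends to every token
        outputs.append(
            [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
        )
    return outputs


def global_feature(tokens: List[Vector]) -> Vector:
    """Mean-pool the contextualized token vectors into one global vector."""
    contextual = self_attention(tokens)
    return [sum(col) / len(contextual) for col in zip(*contextual)]


def local_feature(tokens: List[Vector], width: int) -> Vector:
    """Average each sliding window, then max-pool per dimension over windows."""
    windows = [tokens[i:i + width] for i in range(len(tokens) - width + 1)]
    window_means = [[sum(col) / width for col in zip(*w)] for w in windows]
    return [max(col) for col in zip(*window_means)]


def fuse(tokens: List[Vector], width: int = 2) -> Vector:
    """Fuse by concatenation: global features first, then local features."""
    return global_feature(tokens) + local_feature(tokens, width)


# Toy embeddings (made-up values) for a three-token text.
toy_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fused = fuse(toy_tokens, width=2)
```

Concatenation is used here because it keeps both granularities intact for a downstream classifier; summation would be an alternative when the two branches share a dimension.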

3. Features of Job-Related Crimes

3.1. Basis of Sentencing for Job-Related Crimes

The theory of sentencing for job-related crimes is based on the Criminal Law of the People’s Republic of China combined with judicial interpretations, criminal policies, and judicial practice experience to construct a clear hierarchy of punishment systems that balance fairness and efficiency. The sentencing levels for job-related crimes are divided into six categories, i.e., exemption from punishment, 0–1 year, 1–3 years, 3–5 years, 5–10 years, and more than 10 years. Its theoretical basis is rooted in the principle of proportionality between crime and punishment and the criminal trial policy of balancing leniency and severity, aiming to balance the corresponding relationship between social harm, subjective malice, and punishment through scientific and standardized standards.

In specific sentencing practices, the identification of punishment levels is mainly based on the objective harm and subjective malice of criminal behavior. Taking the crime of embezzlement as an example, under Article 383 of the Criminal Law, an embezzled amount between CNY 30,000 and CNY 200,000 is considered “relatively large” and is punishable by fixed-term imprisonment of up to 3 years or detention; an amount between CNY 200,000 and CNY 3 million is regarded as “huge” and is punishable by imprisonment for not less than 3 years but not more than 10 years; and an amount of over CNY 3 million is considered “exceptionally large” and is punishable by a sentence ranging from more than 10 years to life imprisonment. This is shown in Table 1. Although this amount standard is rigid, judicial interpretations further introduce flexible standards such as “serious circumstances” and “dire circumstances”. For example, where disaster relief or poverty alleviation funds are embezzled, or where the offense causes adverse social impact, the punishment can still be upgraded even if the amount does not reach the threshold, as shown in Table 2.
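The amount thresholds quoted above can be written as a small lookup, shown here as a hedged sketch rather than a statement of law. The function name `embezzlement_tier` is hypothetical, the handling of amounts that fall exactly on a boundary is an assumption (the text does not specify it), and the aggravating circumstances that can upgrade a punishment are deliberately not modeled.

```python
from typing import Tuple


def embezzlement_tier(amount_cny: float) -> Tuple[str, str]:
    """Map an embezzled amount to the tier and sentencing range quoted in the text.

    Boundary handling is an assumption: exactly CNY 200,000 is placed in the
    higher tier, and exactly CNY 3 million stays in the "huge" tier because
    the text says "over CNY 3 million" for the top tier.
    """
    if amount_cny > 3_000_000:
        return ("exceptionally large", "more than 10 years to life imprisonment")
    if amount_cny >= 200_000:
        return ("huge", "not less than 3 years but not more than 10 years")
    if amount_cny >= 30_000:
        return ("relatively large", "up to 3 years or detention")
    # Below the amount threshold; circumstances, not amounts, decide the outcome.
    return ("below threshold", "not determined by amount alone")


examples = {amount: embezzlement_tier(amount) for amount in (10_000, 50_000, 500_000, 5_000_000)}
```

A lookup of this kind captures only the rigid part of the standard; the flexible “serious circumstances” criteria are exactly what a text classifier has to infer from the case facts.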

3.2. Global and Local Features of Sentencing

The global features of job-related crimes are the overall information about the legal relationship of job-related crimes obtained after analyzing the entire legal text. This information focuses on the legal relationship regulated by job-related crimes in the text, ignores local information, and has good stability, maintaining appropriate penalties and accountability. The criminal compositions, causal connections, and criminal accountability involved in job-related crimes have legality, specificity, and universality. The subjective purpose is adapted to the consequences of the crime, and the criminal behavior is consistent with the job-related responsibility. The global features of criminal embezzlement are shown in Table 3. The local features of job-related crimes refer to the features obtained in specific areas of the legal text. These can reflect the local structure and detailed information on the conviction and sentencing of job-related crimes. The determination of the amount of embezzlement belongs to local features, and aggravating factors also belong to local features. The combination of these two kinds of features can elevate the level of judgment in job-related crimes. The local features of criminal embezzlement are shown in Table 4.

In judicial practice, the scientific soundness and adaptability of sentencing standards should be considered together. On the one hand, the fixed-amount standard may lead to sentencing differences between areas because of unbalanced economic development. The actual tolerance of “large amounts” in economically developed areas is higher than that in less developed regions, which damages legal unity and weakens the power of legal punishment and deterrence. For example, the embezzlement of CNY 30,000 in an economically underdeveloped area can affect the poverty relief of hundreds of people, so its criminal consequences are far greater than those of the same amount in a developed region. On the other hand, the boundary of judges’ discretion needs to be further refined through judicial interpretation. Under the judicial opinions on several issues concerning the strict application of probation and exemption from criminal punishment in handling cases of job-related crimes, probation or exemption shall not be applied to job-related crimes involving serious circumstances and destructive social impact, which narrows the space for discretion. A dynamic adjustment mechanism that links sentencing amounts to regional per capita income could be introduced to keep the standard adapted to the times.

3.3. Definition of Classification Task and Sentencing

According to the sentencing provisions for job-related crimes, they can be clearly classified into six categories, i.e., exemption from punishment, 0–1 year, 1–3 years, 3–5 years, 5–10 years, and more than 10 years. These six categories not only reflect the quantitative grading of the social harm of job-related crimes but also provide clear classification functions for the construction of machine learning models. In judicial practices, judges need to accurately match the text of the job-related crime to corresponding sentence ranges based on the facts of the case. Essentially, this involves mapping unstructured text (such as case descriptions in judgment documents) to preset category labels, which is highly consistent with the framework of multi-classification tasks from input features to output labels.

The choice of multi-classification over regression or binary classification is mainly based on the legal requirement of discreteness and the normative needs of judicial decision-making. The law sets strict boundaries for sentencing. For example, there is an unbreakable legal difference between “3–5 years” and “5–10 years”. If a regression model is used to predict the specific length of a sentence, it may ignore such structural boundaries due to numerical continuity, so that its predictions deviate from legal standards. The regression model may output “4.8 years”, but according to criminal law regulations, this result needs to be classified as “3–5 years” instead of being used directly as an independent value. In addition, multi-classification tasks can generate a probability distribution over the sentencing intervals. For example, the probability of a case being sentenced to “5–10 years” may be 75% and that of “3–5 years” 20%. This probabilistic output not only provides a reference for judges but also helps them weigh the rationality of different sentencing options, enhancing the transparency and scientific rigor of decision-making.
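The two points made above, that a continuous prediction must be mapped back to a legal interval and that a classifier yields a distribution over intervals, can be sketched as follows. The helper names, the example logits, and the rule that a value exactly on a boundary falls into the lower interval are assumptions made for illustration only.

```python
import math
from typing import Dict, List

# The six sentencing categories used in this paper, in ascending order.
SENTENCE_CLASSES = [
    "exemption from punishment",
    "0-1 year",
    "1-3 years",
    "3-5 years",
    "5-10 years",
    "more than 10 years",
]
# Upper bound (in years) of every class except the last, open-ended one.
UPPER_BOUNDS_YEARS = [0.0, 1.0, 3.0, 5.0, 10.0]


def years_to_class(years: float) -> int:
    """Map a continuous sentence length to the index of its legal interval."""
    for index, upper in enumerate(UPPER_BOUNDS_YEARS):
        if years <= upper:  # assumption: boundary values go to the lower class
            return index
    return len(UPPER_BOUNDS_YEARS)


def class_probabilities(logits: List[float]) -> Dict[str, float]:
    """Turn six classifier scores into a probability per sentencing interval."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return {name: e / total for name, e in zip(SENTENCE_CLASSES, exps)}


# A regression output of 4.8 years belongs to the "3-5 years" interval.
regressed_class = SENTENCE_CLASSES[years_to_class(4.8)]

# Hypothetical logits from a six-way classifier.
probabilities = class_probabilities([-1.0, 0.0, 0.5, 2.0, 3.3, 0.2])
most_likely = max(probabilities, key=probabilities.get)
```

The classifier output keeps the alternatives visible: a judge sees not only the most likely interval but also how much probability mass sits on its neighbours.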

5. Experiments and Results

5.1. Data Description

The current datasets of legal cases mostly come from China Judgments Online [33], the question bank of the National Judicial Examination Center [34], and the Challenge of AI in Law (CAIL) [35]. China Judgments Online covers various types of cases, including civil, criminal, and administrative cases, and records the factual determination, the evidence and basis for the determination, and the verdict results. The National Judicial Examination Center provides teaching cases and standardized question banks selected by legal scholars. The case data from China Judgments Online and the National Judicial Examination Center had to be obtained through web crawler tools. Unlike these two sources, CAIL offers an authoritative judicial dataset that can be publicly downloaded. Therefore, the datasets of job-related crimes come from cases from China Judgments Online, the question bank from the National Judicial Examination Center, and the CAIL2018 dataset.

Data cleaning and data preprocessing are important and interconnected steps in preparing data for experiments. Personal privacy in cases involving sensitive data should not be exposed to the public; therefore, the content of cases related to personal privacy has been desensitized through technological means. The facts of the cases and sentencing standards, after being cleaned and preprocessed, comply with the sentencing circumstances stipulated in the Criminal Law. The structured data ensures a one-to-one correspondence between criminal facts and sentencing recommendations, providing natural attributes and labels for the corresponding relationship between input and output during modeling. Each case only contains two fields, namely, “statement of fact” and “range of verdict and sentence”. The ranges of verdicts and sentences are strictly divided into six categories according to the criminal law, namely, exemption from punishment, 0–1 year, 1–3 years, 3–5 years, 5–10 years, and more than 10 years.

The dataset of job-related crimes used in this paper is a specialized resource containing 270,921 Chinese legal documents related to job-related crimes. The cases used for the experiments are divided into a training set, a validation set, and a test set at a ratio of 6:2:2, giving 162,552 cases in the training set, 54,185 cases in the validation set, and 54,184 cases in the test set.
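The reported split sizes can be reproduced with simple integer arithmetic. The sketch below is an assumption about the rounding, not a description of the actual splitting procedure: the training share is rounded down, and the odd remaining case is given to the second split. The function name `split_counts` is hypothetical.

```python
from typing import Tuple


def split_counts(total: int, ratios: Tuple[int, int, int] = (6, 2, 2)) -> Tuple[int, int, int]:
    """Split `total` cases by a three-way ratio using integer arithmetic.

    Assumed rounding: the first share is floored, the second share takes the
    ceiling of its part of the remainder, and the third share gets the rest.
    """
    first, second, third = ratios
    train = total * first // (first + second + third)
    remaining = total - train
    rest = second + third
    held_out_a = (remaining * second + rest - 1) // rest  # ceiling division
    held_out_b = remaining - held_out_a
    return train, held_out_a, held_out_b


train_size, second_size, test_size = split_counts(270_921)
```

Under these assumptions, the three counts sum back to the full corpus and match the figures reported above.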

5.2. Evaluation Metrics

Legal judgments require both precision and reliability, and legal predictions rely on extensive historical case data; artificial intelligence is changing the legal profession by enabling attorneys to predict case outcomes with greater accuracy. Although accuracy reflects the overall correctness of the prediction, it is sensitive to data with imbalanced categories, as shown in Formula (10). TP represents a true positive, where the model predicts a positive sample that is actually positive. TN represents a true negative, where the model predicts a negative sample that is actually negative. FP represents a false positive, where the model predicts a positive sample that is actually negative. FN represents a false negative, where the model predicts a negative sample that is actually positive. To objectively describe the model’s performance, precision, recall, and F1 score are used as evaluation metrics, as shown in Formulas (11)–(13). Precision emphasizes the reliability of positive-class predictions to avoid wrongful judgments and suits scenarios that require reducing false positives. Recall ensures that sufficient positive-class samples are identified to avoid missed judgments. The F1 value is the harmonic mean of precision and recall, meeting the rigid demand for fairness in judicial scenarios.
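For reference, the standard definitions of these four metrics in terms of TP, TN, FP, and FN are given below. They are the textbook forms and are assumed, not confirmed, to correspond to Formulas (10)–(13) in that order.

$$
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Precision} &= \frac{TP}{TP + FP}, \\
\text{Recall} &= \frac{TP}{TP + FN}, &
F1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}.
\end{aligned}
$$

As a worked example with made-up counts, $TP = 8$, $FP = 2$, and $FN = 4$ give a precision of $8/10 = 0.8$, a recall of $8/12 \approx 0.667$, and an F1 value of $2 \cdot 0.8 \cdot 0.667 / (0.8 + 0.667) \approx 0.727$.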

The experiments concern the multi-classification task of predicting sentences for job-related crimes, and the dataset is class-imbalanced. For example, the number of cases with judgments of more than 10 years reached 36,653 in the test set, accounting for 70.2%. Therefore, weighted average precision (WAP), weighted average recall (WAR), and weighted average F1 value (WAF1) are used for performance evaluation, as shown in Formulas (14)–(16). Additionally, $P_i$ and $R_i$ indicate the precision and recall of label $i$, respectively, and $n_i$ and $N$ represent the count of label $i$ and the count of the dataset in Formulas (14)–(16), respectively.
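A support-weighted average of per-label metrics can be computed as in the sketch below. It assumes that each label's precision, recall, and F1 value are weighted by the share of true samples carrying that label, which is the common "weighted" convention; whether this matches Formulas (14)–(16) term by term is an assumption. The function name and the toy labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def weighted_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Return WAP, WAR, and WAF1 weighted by the true count of each label."""
    support = Counter(y_true)  # count of each label among the true labels
    total = len(y_true)        # count of the whole dataset
    wap = war = waf1 = 0.0
    for label, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = count - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        weight = count / total
        wap += weight * precision
        war += weight * recall
        waf1 += weight * f1
    return {"WAP": wap, "WAR": war, "WAF1": waf1}


# Toy example with three labels (made-up predictions).
toy_true = [0, 0, 0, 1, 1, 2]
toy_pred = [0, 0, 1, 1, 1, 2]
toy_scores = weighted_metrics(toy_true, toy_pred)
```

With this weighting, the weighted average recall equals plain accuracy, since each label's recall is multiplied by its own support before the sum is divided by the dataset size.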

5.3. Experimental Setup

Bert-base-Chinese, TextCNN, ERNIE, and MLFFN (our model) were selected to conduct experiments evaluating the performance of different models on the dataset of job-related crimes. The experimental environment is provided by AutoDL’s cloud service, using the PyTorch 2.3.0 framework (https://pytorch.org/get-started/previous-versions/, accessed on 23 August 2025) and an NVIDIA V100 GPU. The hyperparameter setup is shown in Table 7, and the selection of hyperparameters takes into account practical convention, individual experience, and computational power. The GloVe pre-trained word embeddings used as input to TextCNN have 300 dimensions, and the convolution kernels cover window sizes of two, three, and four, with 100 filters configured for each size. The segment embedding dimension of the MLFFN frontend is 5, the positional embedding dimension is 512, the word embedding adopts a 100-dimensional GloVe, and the MLFFN backend convolution kernel adopts a dual-channel setting.
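The settings stated above can be collected into a single configuration object, as in the following sketch. The dictionary layout and key names are hypothetical, only values named in the text are included, and reading "dual-channel" as two input channels is an interpretation rather than a confirmed detail.

```python
# Hypothetical configuration layout; values are those stated in the text.
HYPERPARAMS = {
    "textcnn": {
        "embedding": "GloVe",
        "embedding_dim": 300,
        "kernel_sizes": (2, 3, 4),
        "filters_per_size": 100,
    },
    "mlffn": {
        "segment_embedding_dim": 5,
        "positional_embedding_dim": 512,
        "word_embedding": "GloVe",
        "word_embedding_dim": 100,
        "backend_channels": 2,  # assumption: "dual-channel" means two channels
    },
}

# With one max-pooled value per filter, the TextCNN feature vector has
# len(kernel_sizes) * filters_per_size entries.
textcnn_cfg = HYPERPARAMS["textcnn"]
textcnn_pooled_dim = len(textcnn_cfg["kernel_sizes"]) * textcnn_cfg["filters_per_size"]
```

Keeping the values in one place makes it easier to check them against Table 7 and to rerun a baseline with a single changed setting.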

5.4. Results and Discussion

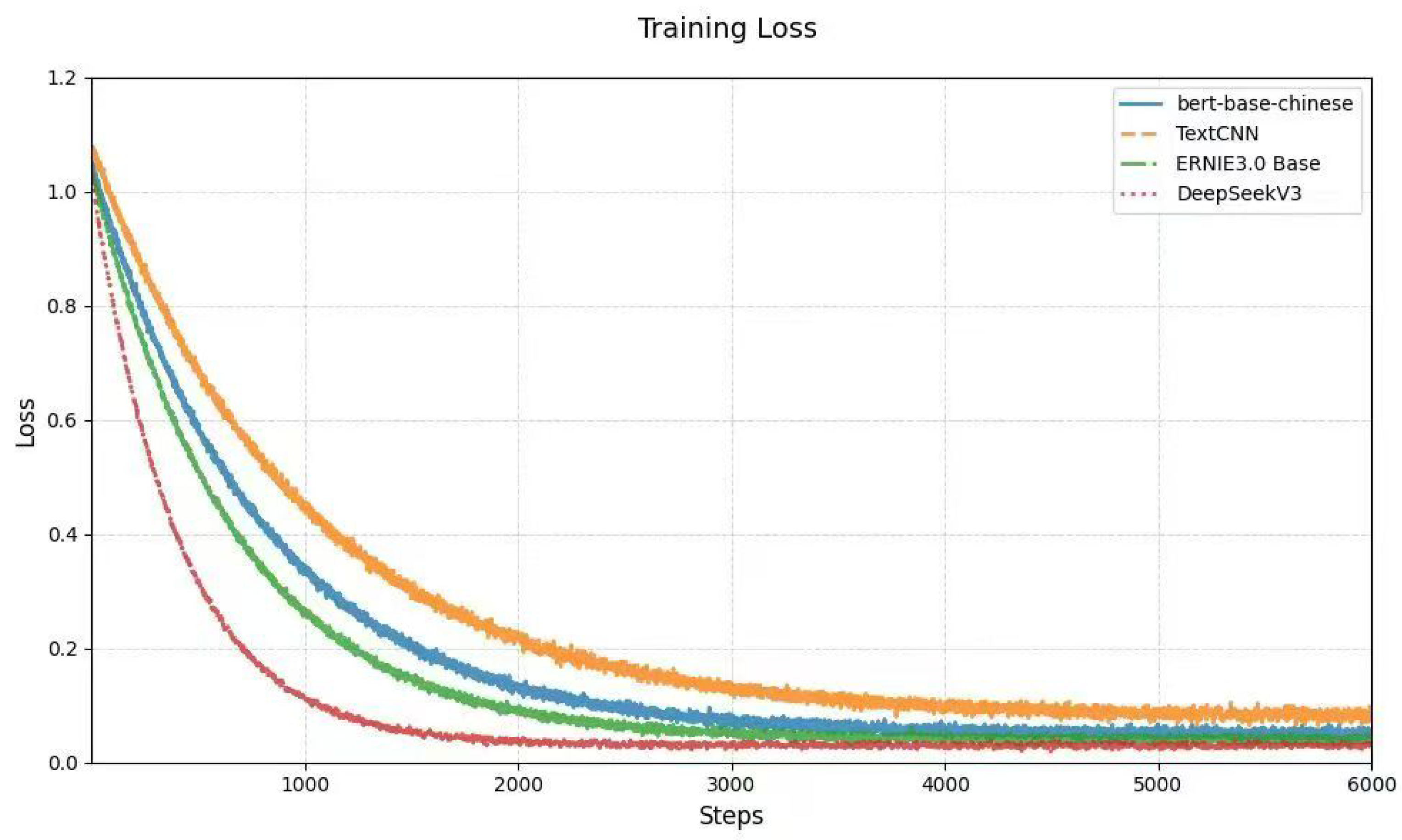

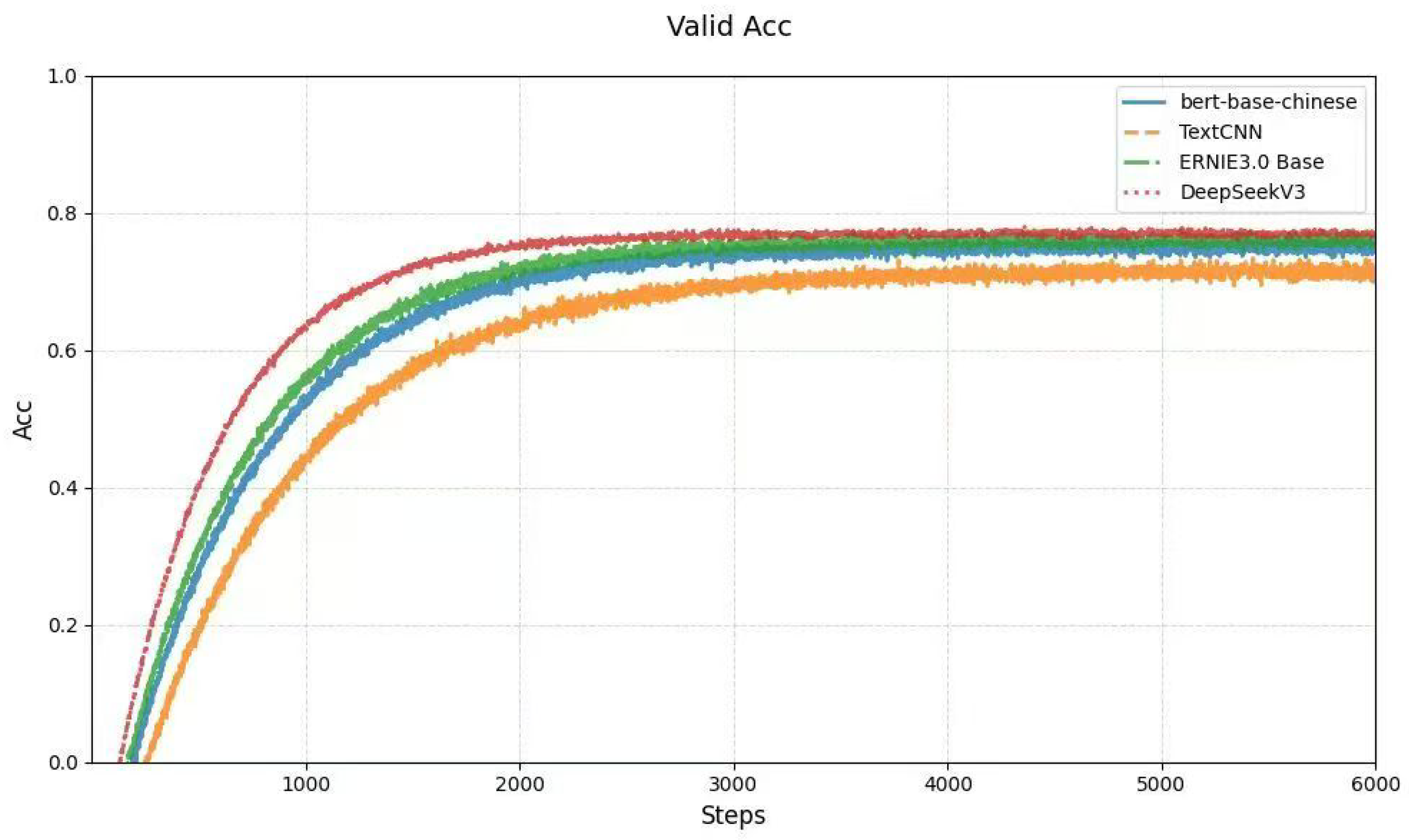

Large models used for comparative analysis have a huge number of parameters and are prone to overfitting the training data, memorizing noise rather than learning patterns. It is therefore necessary to evaluate performance on the validation set (such as loss and accuracy) after each training epoch. If the training set metrics continue to improve while the validation set metrics deteriorate, the model is beginning to overfit, which requires a timely adjustment of the training strategy.

Training ends automatically when performance on the validation set has not improved for several consecutive epochs. At the start of model training, the cross-entropy loss value decreases rapidly. The accuracy curves on the validation set show that the models reached a stable state after approximately three epochs and 6000 iterations of training, as shown in Figure 5 and Figure 6. The evaluation metrics, including ACC (accuracy), WAP, WAR, and WAF1, of each model on the test set are shown in Table 8 and Table 9.
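The stopping rule described at the start of this passage is commonly implemented with a patience counter, as sketched below. The class name, the patience value, and the validation accuracies are hypothetical; the number of consecutive epochs actually used in the experiments is not stated here.

```python
from typing import List, Optional


class EarlyStopping:
    """Stop training once validation accuracy has not improved for `patience` epochs."""

    def __init__(self, patience: int) -> None:
        self.patience = patience
        self.best: Optional[float] = None
        self.bad_epochs = 0

    def step(self, val_accuracy: float) -> bool:
        """Record one epoch's validation accuracy; return True if training should stop."""
        if self.best is None or val_accuracy > self.best:
            self.best = val_accuracy
            self.bad_epochs = 0  # improvement resets the counter
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def epochs_run(val_accuracies: List[float], patience: int) -> int:
    """Return the epoch at which training would end for a given accuracy curve."""
    stopper = EarlyStopping(patience)
    for epoch, accuracy in enumerate(val_accuracies, start=1):
        if stopper.step(accuracy):
            return epoch
    return len(val_accuracies)


# Made-up validation accuracies that plateau after the third epoch.
curve = [0.70, 0.78, 0.81, 0.81, 0.80, 0.79]
stopped_at = epochs_run(curve, patience=2)
```

In practice the weights from the best epoch, not the last one, would be kept for evaluation on the test set.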

From Table 8, it can be seen that compared to Bert-base-Chinese, MLFFN has a 10%, 6%, and 9% improvement in WAP, WAR, and WAF1, respectively; compared to TextCNN, MLFFN shows improvements of 10%, 14%, and 23% on WAP, WAR, and WAF1, respectively; and compared to ERNIE, MLFFN has improvements of 6%, 7%, and 10% on WAP, WAR, and WAF1, respectively.

Experimental data show that the MLFFN model achieves a performance improvement of at least 6% in weighted average precision, weighted average recall, and weighted average F1 value, further indicating that the fusion of global and local features is effective in predicting job-related crimes. The model adopts a framework with a BERT frontend and a TextCNN backend, enhanced with segment embeddings for legal classification in job-related crimes. The key findings are the following three points.

First, segment embeddings could improve performance by encoding structural boundaries in legal relationships, e.g., sections and clauses. Second, the BERT frontend captures long-range contextual semantics in the legal relationship clauses of job-related crimes, especially modifiers, while the TextCNN backend extracts discriminative local patterns of job-related crimes, such as legal phrases, particularly those related to duty positions. Third, freezing the parameters of the BERT frontend and fine-tuning the parameters of the TextCNN backend could help the training loss and validation accuracy converge.