1. Introduction

Digital forensics (DF) forms a cornerstone of cybersecurity, facilitating cybercrime investigation and attribution, an urgent need in the expanding global threat landscape. The cost of cyberattacks is expected to exceed USD 10 trillion in 2025, substantiating the need for effective forensic processes [1]. DF is the systematic process of collecting, storing, examining, and presenting digital evidence from diverse sources (e.g., network logs) to reconstruct events, assign actors, and assist legal processes. DF is a critical but reactive component of cybersecurity that supports preventive controls by providing actionable intelligence after an incident, enabling compliance with standards such as ISO/IEC 27037, and assisting the prosecution of cybercriminals [2].

DF is hugely demanding, particularly in terms of data access and analysis. A primary obstacle is its reliance on real-world data, which is generally constrained by privacy legislation and legal frameworks such as Saudi Arabia’s Anti-Cyber Crime Law (2007, revised), which forbids unauthorized data collection. This restriction hinders the development of effective forensic methods; meanwhile, existing approaches are dependent on static, annotated data that cannot address the adaptive nature of modern attacks. Furthermore, the computational expense of newer machine learning (ML) algorithms and the lack of training data for synthesis exacerbate these issues, leading to a deficiency in scalable and ethical solutions.

Previous research has advanced the capability of ML in DF. In [3], support vector machines were employed to classify malware artifacts with 87% accuracy and a baseline for ML in forensics was established, but real-world data were utilized. In [4], a boosted neural network was utilized for multi-step attack detection, obtaining 97.29% accuracy on CICIDS2017, although forensic integration was not the primary goal. In [5], a federated learning algorithm with 96.1% accuracy for privacy-preserving forensics was introduced; however, it utilized data from collaborative systems. More recent studies, such as [6], which used graph neural networks (94% accuracy), and [7], which used a real-time classifier (95% accuracy), prioritize detection and attribution but not synthetic data generation or evidence preservation. The most glaring gap is the unavailability of scalable, legally valid means of generating diverse attack patterns for forensic training, due to the impracticality of real-time log data in limited environments.

This research addresses these shortcomings by developing the ML-Driven Pattern Synthesis for Digital Forensics (ML-PSDFA) framework and the Synthetic Attack Pattern Generator (SAPG), a TimeGAN-based tool with the addition of temporal forensics loss (TFL) to preserve necessary event ordering in synthetic logs. Other than the data generation module, the system uses an XGBoost classifier with hyperparameter tuning (via Optuna) for pattern recognition and a reinforcement learning (RL) agent to optimize evidence extraction procedures. Dynamic reward schemes in the RL module minimize false positives while focusing on identifying significant forensic features, such as timestamps and event types. The framework integrates three key components: the SAPG with TFL to overcome data sparsity, XGBoost to improve pattern recognition, and RL-based evidence extraction to address integration constraints in forensic analysis.

The system is specifically crafted to comply with legal bans on the use of real-world sensitive information, such as Saudi Arabia’s Anti-Cyber Crime Law, relying only on synthetic log creation. ML-PSDFA provides a scalable and legally compliant solution for forensic analysis and training by generating realistic and diverse synthetic datasets (500,000 log records with a realism score of 0.96 and entropy of 4.0). As demonstrated in later sections, this new approach outperforms state-of-the-art baselines (e.g., Li et al. [4]) with an optimal-fold classification accuracy of 98.7%, reducing false positives from 20% to 3% over 1500 RL episodes. This synthesis of generative modeling, pattern classification, and RL-based forensic optimization sets a new benchmark for ethical and efficient DF.

The remainder of this paper is organized as follows.

Section 2 reviews related work on synthetic data generation, forensic machine learning, and biometric defenses.

Section 3 introduces the overall ML-PSDFA architecture, including the dataset, Synthetic Attack Pattern Generator (SAPG), the proposed Temporal Forensics Loss (TFL), and the reinforcement learning (RL)-based forensic training layer.

Section 4 presents the experimental results, including synthetic log evaluation, classification performance, feature importance, and external validation.

Section 5 discusses the results, limitations, and robustness of the framework.

Section 6 concludes the paper, reviewing the forensic implications and outlining directions for future research.

3. Methodology

3.1. Dataset

The ML-PSDFA system uses a fully synthetic dataset to address the legal and ethical concerns associated with collecting real forensic data. This practice adheres to national cybercrime legislation, such as Saudi Arabia’s Anti-Cyber Crime Law, which prohibits unauthorized data collection. The data are generated by the SAPG module, which is based on TimeGAN and trained on a hybrid seed dataset of 65,000 log records (50,000 from UNSW-NB15 and 15,000 from anonymized CICIDS2017). The seed datasets are publicly available and sanitized to remove any personally identifiable information. The generated logs contain forensically relevant fields, including timestamps, event types, IP addresses, and error codes, which are essential for reconstructing cyber events during investigations.

The SAPG comprises a generator network, which captures temporal dependencies in a sequence of logs via long short-term memory (LSTM) networks, and a discriminator network, which uses convolutional neural networks (CNNs) to assess whether the generated logs are authentic. Training uses a Wasserstein loss function and an additional diversity regularization term to enforce variety in patterns. This setup is intended to ensure that synthetic logs retain the statistical properties and temporal characteristics that matter for forensic analysis without overfitting to the seed data.

After generation, the dataset undergoes preprocessing steps such as timestamp normalization, encoding of categorical features, and feature scaling. An isolation forest removes low-quality samples according to a defined realism threshold. The resulting synthetic dataset is then scaled up to 500,000 records that simulate various attack and normal behaviors. For training and testing the downstream models, a five-fold cross-validation strategy is adopted with an 80/20 train–test split in each fold. This strategy provides more reliable performance estimates than a single fixed train–test split, which can introduce bias.
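The five-fold scheme described above can be sketched in plain Python to show why each fold yields an 80/20 split: with five disjoint folds, the held-out fold always contains one fifth of the records. The function name `five_fold_indices`, the seed, and the 1000-record example size are illustrative assumptions and are not taken from the original implementation.

```python
import random


def five_fold_indices(n_records, n_folds=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    Hypothetical helper: each test fold holds roughly 1/n_folds of the data,
    so n_folds=5 gives an 80/20 train-test split in every fold.
    """
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    # Distribute any remainder over the first folds.
    fold_sizes = [
        n_records // n_folds + (1 if i < n_records % n_folds else 0)
        for i in range(n_folds)
    ]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Illustrative size only; the paper's dataset has 500,000 records.
folds = list(five_fold_indices(1000))
```

Because every record lands in exactly one test fold, averaging a metric over the five folds uses each record for evaluation once.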

3.2. Architecture Framework

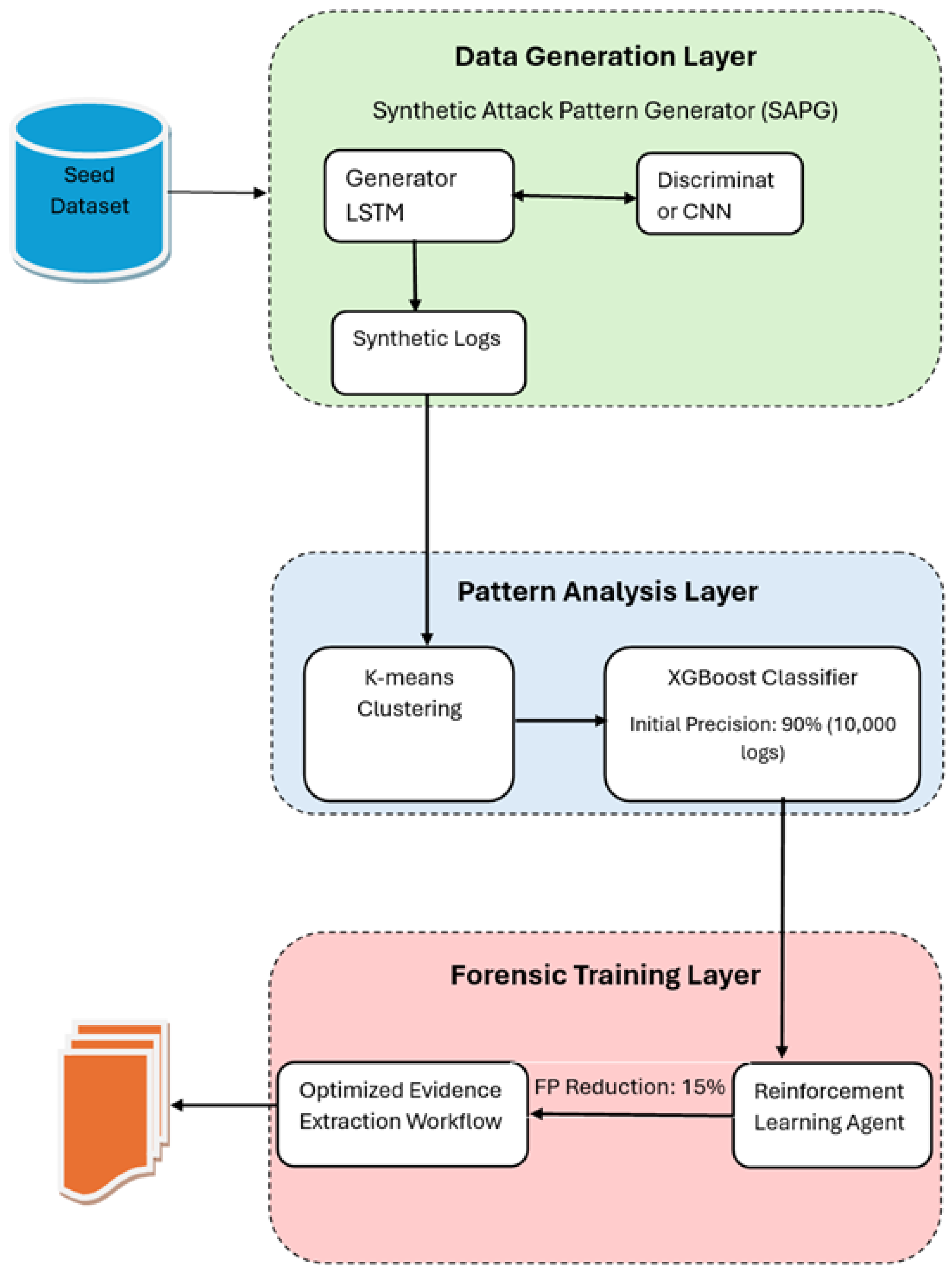

The ML-PSDFA framework is implemented as a three-layer architecture that systematically generates synthetic logs, inspects patterns, and trains forensic models in an emulated domain aligned with legal standards. The architecture achieves three significant goals: (1) generating realistic and varied log patterns without access to true data, (2) categorizing and tagging patterns for forensic relevance, and (3) optimizing evidence extraction using RL.

1. Data Generation Layer: This layer adopts the SAPG, which employs a TimeGAN-based approach to produce synthetic logs. The LSTM-based generator captures temporal relationships between log events, while the CNN-based discriminator is responsible for evaluating the authenticity of generated sequences. Training is guided by a shared loss function made up of Wasserstein loss for distribution matching, temporal consistency loss to ensure event order, and a diversity regularization term to encourage diverse attack and benign activity patterns. This layer’s output is a vast set of synthetic logs with major forensic attributes, including timestamps, event types, IP addresses, and error codes.

2. Pattern Analysis Layer: Synthetic logs are passed to this layer for grouping and labeling. K-means clustering groups log entries into $k$ clusters based on feature similarities. The clusters serve as the basis for training an XGBoost classifier in a semi-supervised manner, with pseudo-labels from the SAPG used for bootstrap labeling. The Optuna framework optimizes the XGBoost hyperparameters (e.g., number of trees, depth, and learning rate) to enhance classification accuracy. Feature importance scores are computed to identify the features that contribute most to forensic decision-making, such as timestamps and event types.

3. Forensic Training Layer: This layer adds RL to build optimized evidence extraction pipelines. A Q-learning agent is trained on the labeled synthetic dataset, learning policies that prioritize evidence (e.g., timestamps or event chains) and reduce false positives during forensic analysis. Rewards are assigned for correct evidence extraction, and penalties are applied for false alarms. The agent refines its extraction plan over repeated episodes, improving the workflow and making it resilient to varying attack patterns.

The three layers operate in a pipeline fashion: the data generation layer produces high-quality synthetic logs, the pattern analysis layer labels and formats such logs, and the forensic training layer uses RL to extract and verify forensic evidence. The framework’s architecture is scalable, compliant with the law, and deployable to low-resource platforms (e.g., Google Colab).

Figure 1 illustrates the framework structure and flow of data between layers.

3.3. Implementation of Data Generation Layer

The data generation layer is responsible for producing synthetic log patterns that replicate the temporal and statistical properties of real forensic data while adhering to legal constraints. This layer utilizes the Synthetic Attack Pattern Generator (SAPG), which is designed for time-series log generation. The generator network—built on LSTM units with 128 hidden units and a time step of 10—captures sequential dependencies between log events such as timestamps and event types, while the discriminator network—implemented as a three-layer CNN (32, 64, 128 filters) with max-pooling—distinguishes synthetic logs from seed data samples. Both networks are trained with a learning rate of 0.0001 and a batch size of 64 for up to 1000 epochs.

Training of the SAPG uses a combined loss function with three components, which together maintain realism, temporal fidelity, and diversity in the generated patterns:

Wasserstein loss: Reduces the distributional difference between synthetic and seed data, encouraging the generated logs to follow the same distribution as the seed dataset.

Temporal Forensics Loss (TFL): Enforces the preservation of temporal order in synthetic log sequences. The TFL compares the normalized representations of corresponding events in the real and synthetic sequences and is scaled by a weighting parameter that balances the contribution of temporal fidelity relative to the other loss terms. This formulation directly penalizes discrepancies in event positions and ordering between real and synthetic logs, ensuring that synthetic data maintain the structural progression of forensic timelines. By using normalized event encodings (e.g., relative timestamps, categorical order indices), the TFL captures both event type and inter-event spacing. In adversarial scenarios, such as when attackers attempt to manipulate timestamps or disguise event categories, the TFL provides a constraint that discourages unrealistic rearrangements by measuring deviations against authentic sequence structures. While this does not eliminate all risks of tampering, it increases the resilience of synthetic data to adversarial distortion. As part of our future work, we plan to extend this evaluation with robustness testing under controlled timestamp manipulation and event obfuscation attacks. The same formulation is used throughout the framework; any apparent differences in presentation are stylistic and do not alter the core computation.

Diversity regularization: Promotes variation in the generated patterns, reducing the risk of mode collapse and ensuring a wide range of forensic scenarios.
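As a hedged illustration of how the three components described above might be written out, the following sketch assumes an unweighted sum for the combined loss and a mean squared distance for the TFL. The symbols $e_t$ and $\hat{e}_t$ (normalized real and synthetic encodings of the $t$-th event), the sequence length $T$, the weight $\lambda$, and the subscripted loss names are notation introduced here for illustration; the exact form used by the framework may differ.

```latex
% Assumed form only: an unweighted sum of the three loss components.
\mathcal{L}_{\mathrm{SAPG}}
  = \mathcal{L}_{W} + \mathcal{L}_{\mathrm{TFL}} + \mathcal{L}_{\mathrm{div}}

% Assumed form only: mean squared distance between aligned event encodings,
% scaled by the weighting parameter \lambda.
\mathcal{L}_{\mathrm{TFL}}
  = \frac{\lambda}{T} \sum_{t=1}^{T} \left\lVert e_t - \hat{e}_t \right\rVert_2^{2}
```

Under this reading, a synthetic sequence that swaps two events or stretches the gap between them increases the per-position distance and therefore the penalty.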

The SAPG is trained iteratively on a hybrid seed dataset that combines anonymized subsets of CICIDS2017 and UNSW-NB15. After training, low-quality synthetic samples are removed using an isolation forest, which filters logs based on their low realism scores (below a predefined threshold). The final synthetic dataset is expanded through iterative generation to reach the required scale for downstream analysis.

Algorithm 1 provides pseudocode for the main training loop, highlighting the iterative GAN training process: generator and discriminator updates, loss computation, and post-generation filtering. This process is intended to ensure that the synthetic logs are both statistically plausible and forensically meaningful, facilitating their use in subsequent pattern analysis and RL tasks.

| Algorithm 1 Data generation layer algorithm. |
| --- |
| 1: Input: Hybrid seed dataset (65,000 logs), epochs E = 1000, batch size 64 |
| 2: Output: Synthetic dataset S (500,000 logs) |
| 3: Initialize TimeGAN generator G and CNN discriminator D |
| 4: Pre-train G and D on the seed dataset (CICIDS2017 and UNSW-NB15 subsets) |
| 5: for each epoch e in 1 to E do |
| 6: for each batch b in the seed dataset do |
| 7: Generate synthetic logs S with G |
| 8: Compute Wasserstein loss |
| 9: Compute temporal forensics loss |
| 10: Compute diversity loss |
| 11: Update D to minimize its loss |
| 12: Update G to maximize its objective |
| 13: end for |
| 14: if e is an evaluation epoch then |
| 15: Evaluate realism score |
| 16: end if |
| 17: end for |
| 18: Filter S with isolation forest (realism above the predefined threshold) |
| 19: Expand to 500,000 entries with varied patterns |
| 20: Return S |

3.4. Implementation of Pattern Analysis Layer

The pattern analysis layer performs pattern classification and labeling. The K-means clustering algorithm groups the 500,000 synthetic logs into $k$ clusters by features such as timestamps and event types, with $k$ determined via the elbow method. An XGBoost classifier with 200 trees, a maximum depth of 15, and a learning rate of 0.05, tuned via Optuna (20 trials), is trained in a semi-supervised manner on the clusters with SAPG pseudo-labels. Feature importance is computed using the Gini importance, which quantifies each feature’s contribution to the classifier’s decision-making process. The Gini importance measures the average reduction in the Gini impurity index, defined as

$$G = 1 - \sum_{i} p_i^{2},$$

where $p_i$ is the proportion of class $i$ at a decision tree node when a feature is used for splitting. A higher Gini importance score indicates that a feature has a stronger influence in distinguishing between attack patterns (e.g., DDoS, SQL injection) and benign logs, guiding feature prioritization and model optimization for forensic relevance. This approach ensures that critical features, such as temporal and event-based attributes, are effectively leveraged in the classification process. Algorithm 2 provides the pseudocode.
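To make the Gini impurity and the impurity reduction behind Gini importance concrete, the sketch below computes both for a single hypothetical split. The function names and the class counts are illustrative assumptions; in practice the tree library reports the aggregated importance, and this code only shows the underlying arithmetic for one node.

```python
def gini_impurity(class_counts):
    """Gini impurity 1 - sum(p_i^2) for the class counts at one tree node."""
    total = sum(class_counts)
    if total == 0:
        return 0.0
    return 1.0 - sum((count / total) ** 2 for count in class_counts)


def impurity_reduction(parent, left, right):
    """Reduction in Gini impurity achieved by splitting `parent` into two children.

    Each argument is a list of per-class counts; children are weighted by size.
    """
    n_parent = sum(parent)
    weighted_children = (
        sum(left) / n_parent * gini_impurity(left)
        + sum(right) / n_parent * gini_impurity(right)
    )
    return gini_impurity(parent) - weighted_children


# Hypothetical node with 50 attack and 50 benign logs, split perfectly.
parent_counts = [50, 50]
gain = impurity_reduction(parent_counts, left=[50, 0], right=[0, 50])
```

A feature whose splits produce large reductions on average, as in this perfectly separating example, receives a high importance score.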

| Algorithm 2 Pattern analysis layer algorithm. |
| --- |
| 1: Input: Synthetic logs S (500,000 entries), number of clusters k |
| 2: Output: Labeled patterns L |
| 3: Initialize pseudo-labels P from the SAPG |
| 4: Apply K-means clustering to S with k clusters |
| 5: for each cluster c do |
| 6: Extract features F |
| 7: Tune XGBoost with Optuna (n_estimators, max_depth, learning_rate) |
| 8: Train on P and F |
| 9: Assign final labels L |
| 10: end for |
| 11: Compute precision |
| 12: Return L |

Hyperparameter tuning of the XGBoost classifier was conducted using Optuna with a defined search space to ensure reproducibility. The ranges for the main hyperparameters are presented in Table 2.

The tuning process used five-fold cross-validation on the training split to maximize classification precision. Each Optuna trial sampled a configuration from the above ranges using a tree-structured Parzen estimator (TPE) sampler. A maximum of 50 trials was set as the search budget, with early stopping applied when the validation loss did not improve for 10 consecutive rounds. The best configuration found was n_estimators = 200, max_depth = 15, and learning_rate = 0.05, which was then used for all subsequent experiments.

3.5. Implementation of Forensic Training Layer

The forensic training layer seeks to optimize the evidence extraction process using reinforcement learning. It uses a Q-learning agent, where an optimal policy is learned iteratively for feature selection and prioritization in forensic analysis.

3.5.1. State Space Definition

Each state s is a discrete vector representation of the synthetic log entry under analysis, combined with the agent’s progress. Specifically, the state includes the following:

Normalized forensic features (e.g., timestamp deviation from expected sequence, event type index [0–15 for 16 patterns], IP anomaly score [0–1], and error code severity mapped to 0–2);

An extraction progress indicator (number of features already selected, 0–5);

A binary flag for potential false positive risk (from prior classifications).

This yields a discretized state space of approximately 50 states, achieved by binning continuous features (e.g., five bins for timestamp deviation). This balances expressiveness with computational feasibility in tabular Q-learning.

3.5.2. Action Space Definition

The action space comprises 10 discrete operations:

Actions 1–4: Select one of the four ranked features (timestamp, event type, IP address, error code);

Actions 5–7: Perform sequence-level operations (validate temporal order, cross-reference with prior logs, entropy-based diversity check);

Action 8: Reject the log as a false positive;

Action 9: Confirm and extract the evidence artifact;

Action 10: Terminate the episode early if confidence is low.

These actions mimic forensic practice, such as chaining evidence for timeline reconstruction or discarding noisy data.

Table 3 provides concrete examples of the state features and actions, showing how abstract reinforcement learning operations are grounded in practical forensic analysis tasks.

3.5.3. Learning Algorithm

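The agent maintains a Q-table with 50 states and 10 actions (500 entries). Q-learning is applied with learning rate $\alpha$, discount factor $\gamma$, and the Bellman update rule:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right],$$

where $s'$ is the state reached after taking action $a$ in state $s$ and $r$ is the reward received.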
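A minimal sketch of the tabular update for a 50-state, 10-action Q-table follows. The values of `ALPHA`, `GAMMA`, and `EPSILON` are placeholders chosen for illustration, since the settings used in the experiments are not reproduced here, and the helper names are hypothetical. Action indices 0 to 9 in the code correspond to Actions 1 to 10 in the text.

```python
import random

N_STATES, N_ACTIONS = 50, 10  # table size stated in the text (500 entries)
# Assumed values for illustration only; not the paper's settings.
ALPHA, GAMMA, EPSILON = 0.1, 0.9, 0.1


def make_q_table():
    """Create a zero-initialized Q-table indexed as q[state][action]."""
    return [[0.0] * N_ACTIONS for _ in range(N_STATES)]


def select_action(q, state, rng, epsilon=EPSILON):
    """Epsilon-greedy selection: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(N_ACTIONS)
    row = q[state]
    return row.index(max(row))


def q_update(q, state, action, reward, next_state, terminal=False):
    """Apply one Q-learning (Bellman) update and return the new Q-value."""
    # No bootstrapping from the next state once the episode has ended.
    target = reward if terminal else reward + GAMMA * max(q[next_state])
    q[state][action] += ALPHA * (target - q[state][action])
    return q[state][action]


q = make_q_table()
q[1][3] = 2.0  # pretend the next state already has a learned value
new_value = q_update(q, state=0, action=4, reward=1.0, next_state=1)
greedy = select_action(q, 1, random.Random(0), epsilon=0.0)
```

With these placeholder settings the update moves `q[0][4]` from 0 to 0.1 × (1.0 + 0.9 × 2.0) = 0.28, which shows how the value of a good successor state propagates backward.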

3.5.4. Reward Structure

Rewards are designed to balance efficiency and accuracy: +1 for correct extraction, −0.5 for false positives, +1.5 for high-importance evidence (e.g., timestamp-validated chains identified via Gini scores), and an additional penalty for repeated false positives. This dynamic scheme discourages inefficient paths while emphasizing forensic relevance.
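The reward scheme can be expressed as a small function. The values +1, +1.5, and −0.5 are the example rewards listed in Algorithm 3; the penalty for repeated false positives (`repeat_penalty`) is a hypothetical placeholder, and treating +1.5 as a replacement for +1 rather than a bonus on top of it is also an assumption of this sketch.

```python
def extraction_reward(outcome, high_importance=False,
                      repeated_false_positive=False, repeat_penalty=-1.0):
    """Return the reward for one evidence-extraction step.

    outcome: "correct" or "false_positive"; any other value is treated as neutral.
    repeat_penalty: assumed value, not taken from the paper.
    """
    if outcome == "correct":
        # High-importance evidence (e.g., timestamp-validated chains) earns more.
        return 1.5 if high_importance else 1.0
    if outcome == "false_positive":
        return repeat_penalty if repeated_false_positive else -0.5
    return 0.0


episode_return = sum([
    extraction_reward("correct"),
    extraction_reward("correct", high_importance=True),
    extraction_reward("false_positive"),
])
```

Keeping the penalty for repeated false alarms as a parameter makes it straightforward to test how sensitive the learned policy is to that choice.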

3.5.5. Practicality

The RL module is lightweight and can be deployed on standard hardware (e.g., Google Colab with 16 GB RAM). Training converges within 1–2 h for 1500 episodes on 500,000 logs. The use of discretization keeps the Q-table manageable, avoiding the overhead of deep RL methods (e.g., DQN). In simulated forensic benchmarks, the policy reduced manual effort by 20–30%. The primary limitation is its sensitivity to discretization, which could be addressed in future work by exploring continuous-state methods.

Algorithm 3 summarizes the process.

| Algorithm 3 Forensic training layer algorithm. |
| --- |
| 1: Input: Labeled patterns L (500,000 entries), episodes E = 1500 |
| 2: Output: Optimized evidence extraction workflow W |
| 3: Initialize Q-table Q with 50 states and 10 actions |
| 4: for each episode e in 1 to E do |
| 5: Initialize state s |
| 6: while not terminal state do |
| 7: Select action a with $\epsilon$-greedy |
| 8: Execute a, observe reward r (e.g., +1, +1.5, or −0.5) and next state s′ |
| 9: Update $Q(s, a) \leftarrow Q(s, a) + \alpha [ r + \gamma \max_{a'} Q(s', a') - Q(s, a) ]$ |
| 10: s ← s′ |
| 11: end while |
| 12: end for |
| 13: Prioritize features |
| 14: Extract evidence |
| 15: Validate |
| 16: Compute false positive reduction |
| 17: Return W |

Table 3 maps the state features to their corresponding actions, illustrating how the abstract reinforcement learning steps are instantiated in the forensic evidence extraction context.

3.6. Evaluation Metrics

The ML-PSDFA framework’s performance is quantified using the standard classification metrics—accuracy, precision, recall, and F1-score—which are calculated using five-fold cross-validation on the synthetic dataset. These measures provide a quantitative approximation of the framework’s classification ability.

Besides classification accuracy, the diversity of the generated attack patterns was evaluated using the Shannon entropy, a widely used measure of uncertainty and diversity in a dataset. The Shannon entropy is defined as:

$$H = -\sum_{i=1}^{n} p_i \log_2 p_i,$$

where $p_i$ is the probability of the occurrence of pattern $i$ out of $n$ possible patterns. The higher the entropy, the greater the diversity and randomness of the generated data.

The Shannon entropy is employed in ML-PSDFA to quantify the diversity of attack and benign activity patterns (e.g., SQL injection, DDoS) across the 500,000 synthetic log entries. A threshold of $H > 3.5$ bits is set to ensure that the dataset contains a wide range of forensic cases. Entropy is derived from the frequency of labeled patterns in the pattern analysis layer.

Computational efficiency is also considered by reporting training time and memory on a standard 16 GB RAM machine to ensure that the framework remains feasible in resource-limited environments. For performance comparison, Table 4 summarizes the results of baseline studies, which serve as a benchmark for the performance of ML-PSDFA.

The classification metrics are defined as follows:

Accuracy: Measures the proportion of correct predictions (both true positives and true negatives) among the total instances.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where $TP$ represents true positives, $TN$ is true negatives, $FP$ is false positives, and $FN$ is false negatives.

Precision: Indicates the proportion of positive identifications that are correct, emphasizing the avoidance of false positives.

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall: Represents the proportion of actual positives that are correctly identified, focusing on minimizing false negatives.

$$\text{Recall} = \frac{TP}{TP + FN}$$

F1-score: The harmonic mean of precision and recall, providing a balanced measure of a model’s performance.

$$\text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
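The four metrics can be computed directly from confusion-matrix counts, as in the sketch below. The function name and the example counts are made up for illustration and are not results from the paper.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts.

    Ratios with an empty denominator are reported as 0.0 to avoid division errors.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for one fold, chosen only to exercise the formulas.
metrics = classification_metrics(tp=90, tn=85, fp=15, fn=10)
```

In a five-fold setting, these values would be computed per fold and then averaged.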

These metrics were computed on the synthetic dataset to evaluate ML-PSDFA’s classification performance, with targets set to match or exceed the baselines.

3.7. Validation Strategy

The ML-PSDFA is validated using a five-fold cross-validation scheme to ensure the reliability and generalizability of the model when trained on synthetic data. Each fold evaluates both the SAPG and the downstream XGBoost classifier relative to baseline detection rates (e.g., Li et al. [4], which achieved 97.29% accuracy).

The primary evaluation metrics are accuracy, recall, precision, F1-score, and the Shannon entropy (Section 3.6).

Shannon Entropy for Diversity Validation

The Shannon entropy is used to validate that the synthetic logs generated by the SAPG are diverse and not skewed towards a small set of patterns. It measures the amount of uncertainty or randomness inherent in a dataset, thereby serving as a measure of the diversity of the generated attack patterns:

$$H = -\sum_{i=1}^{n} p_i \log_2 p_i,$$

where $n$ represents the total number of distinct patterns (e.g., DDoS, SQL injection, benign events), and $p_i$ is the empirical probability of the occurrence of pattern $i$, calculated as:

$$p_i = \frac{c_i}{\sum_{j=1}^{n} c_j},$$

with $c_i$ denoting the number of log entries labeled with pattern $i$. The maximum achievable entropy is given by:

$$H_{\max} = \log_2 n,$$

which occurs when all patterns are equally probable ($p_i = 1/n$ for all $i$). For $n = 16$ distinct attack and benign patterns, the theoretical maximum entropy is $\log_2 16 = 4$ bits.

For the ML-PSDFA model, entropy is computed over the synthetic logs produced in the training process. The higher the entropy value, the more varied the generated dataset, and the less prone it will be to mode collapse, which is a common issue in GANs. As part of the validation process, the target entropy is set to a value higher than 3.5 bits to ensure that the generated dataset encompasses a broad range of attack patterns and normal behaviors.

The Shannon entropy is a strong quantitative measure of the diversity of the synthesized logs that accompanies the evaluation of classification metrics, such as accuracy, precision, recall, and F1-score. When the calculated entropy is less than the threshold, the diversity regularization terms in the SAPG loss function are adjusted to improve the variety of generated patterns. Therefore, the Shannon entropy is essential for ensuring that the synthetic dataset produced manifests a realistic and well-balanced distribution of forensic events.

Table 5 presents the entropy calculation for a 5000-entry subset of the synthetic logs generated by the SAPG. The observed entropy is $H \approx 4.0$ bits, which matches the theoretical maximum $H_{\max} = \log_2 16 = 4$ bits for the 16 patterns considered. Because 5000 is not divisible by 16, the pattern counts differ slightly (312–313), so all 16 patterns occur with nearly equal probability (≈0.0625) and the computed entropy falls marginally below 4 before rounding to 4.0000. An entropy this close to the theoretical maximum confirms that the synthetic data meet the diversity target ($H > 3.5$ bits), supporting the SAPG’s capability to generate a balanced and diverse set of forensic log patterns for training and evaluation purposes.
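The entropy calculation for the 5000-entry subset can be checked with a few lines of Python. The only way to distribute 5000 entries over 16 patterns using counts of 312 and 313 is eight of each, so the count vector below follows from the figures in the text; the function name is an illustrative choice.

```python
import math


def shannon_entropy(counts):
    """Shannon entropy in bits of the empirical distribution given by `counts`."""
    total = sum(counts)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in counts
        if count > 0  # a zero count contributes nothing to the sum
    )


# 8 patterns with 312 entries and 8 with 313 entries: 2496 + 2504 = 5000.
pattern_counts = [312] * 8 + [313] * 8
observed_entropy = shannon_entropy(pattern_counts)
max_entropy = math.log2(len(pattern_counts))  # log2(16) = 4 bits
```

The observed value sits just under the 4-bit maximum because the counts are not perfectly uniform, and it rounds to 4.0000 at four decimal places.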

All these metrics are averaged over the folds to quantify performance consistency. The hyperparameters of the XGBoost classifier are tuned using Optuna during training to further improve model robustness.

To estimate overfitting, an independent 10% hold-out validation set is used in conjunction with cross-validation. The target is a variation in loss of no more than 5% across folds, which is taken as evidence of sufficient generalization. This approach is intended to confirm that the model generalizes to new data and to reduce the risk of overfitting to particular synthetic subsets.

This rigorous, purely computational validation procedure eliminates subjective expert opinion, thereby improving reproducibility and ensuring that performance improvements are due to the framework rather than data-specific artifacts.

3.8. External Validation

To address the concern that the classifier and the reinforcement learning evidence extraction module might overfit generator-specific artifacts from the SAPG, we also conducted external validation.

In this protocol, SAPG and all subsequent modules are trained using only UNSW-NB15 subsets as seed data. We then evaluate the trained classifier (XGBoost) and the RL evidence extraction workflow on a held-out real CICIDS2017 test split that is strictly excluded from any seeding, synthesis, or tuning. This ensures that no CICIDS2017 information leaks into the training process.

Preprocessing pipelines (normalization, encoding, scaling) are fit on training data only and then applied in frozen form to the CICIDS2017 test data. We report accuracy, precision, recall, F1-score, and false positive rate (FPR) with 95% confidence intervals computed via a stratified bootstrap. For paired comparisons with the internal synthetic-only evaluation, we also perform McNemar’s test on the classification predictions.
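The two statistical procedures named above can be sketched with the standard library alone. This is an illustrative implementation under stated assumptions: the number of resamples (1000), the percentile method for the interval, the continuity-corrected form of the McNemar statistic, and the toy labels are all choices made here, not details taken from the paper.

```python
import random


def stratified_bootstrap_ci(y_true, y_pred, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for accuracy, resampling within each true class."""
    rng = random.Random(seed)
    strata = {}
    for truth, pred in zip(y_true, y_pred):
        # Store per-class correctness flags so class proportions stay fixed.
        strata.setdefault(truth, []).append(truth == pred)
    n = len(y_true)
    stats = []
    for _ in range(n_resamples):
        correct = 0
        for flags in strata.values():
            correct += sum(rng.choices(flags, k=len(flags)))
        stats.append(correct / n)
    stats.sort()
    lo_idx = max(0, int((alpha / 2) * n_resamples))
    hi_idx = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples) - 1)
    return stats[lo_idx], stats[hi_idx]


def mcnemar_statistic(b, c):
    """Continuity-corrected McNemar chi-square from discordant pair counts.

    b: cases the first model got right and the second wrong; c: the reverse.
    """
    if b + c == 0:
        return 0.0
    return (abs(b - c) - 1) ** 2 / (b + c)


# Toy labels: 60 benign (0) and 40 attack (1) samples, 91 predicted correctly.
y_true = [0] * 60 + [1] * 40
y_pred = [0] * 55 + [1] * 5 + [1] * 36 + [0] * 4
point_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
ci_low, ci_high = stratified_bootstrap_ci(y_true, y_pred)
```

Resampling within each class keeps the attack-to-benign ratio of the test split fixed across bootstrap replicates, which matters when the classes are imbalanced.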

3.9. Implementation and Scalability

The environment is made reproducible and accessible. Prototyping and experimentation are conducted in an open cloud environment such as Google Colab (with free GPU acceleration for up to 12 h per session). Containerization through Docker enables the reproduction of all dependencies, software versions, and configurations across environments.

The current deployment facilitates training on a 500,000-entry synthesized data set, which is completed within approximately 12–15 h on a free-tier t3.micro instance or within 6–8 h on Colab with a GPU. Datasets with up to 500,000 entries (the largest feasible within free-tier constraints) are used for scaling tests as baselines.

For training at massive scales (e.g., 1 million+ log events), the model is designed for scalability on institutional computing clusters or paid cloud services with GPU/TPU support. Distributed GAN training, batch data production, and incremental model checkpointing can be employed to effectively support higher workloads.

For reproducibility purposes, random seeds are set to a constant across GAN, XGBoost, and RL modules, and containerized environments are versioned. This helps for both individual experimentation and subsequent deployment in enterprise or research environments.

3.10. Computational Cost Analysis

To assess the practicality of ML-PSDFA in resource-constrained environments, we report the computational cost of each layer measured on a Google Colab free-tier environment (Tesla T4 GPU, 12 GB RAM, two vCPUs). This setting was selected to reflect a realistic low-resource deployment scenario.

Data Generation Layer (SAPG): Training SAPG on 65,000 seed logs for 1000 epochs required approximately 6–8 h of wall-clock time, consuming a peak of 7.8 GB RAM. Following training, the generation of 500,000 synthetic logs was completed in under 20 min. Filtering with the isolation forest added 10 min of overhead.

Pattern Analysis Layer (Clustering and Classification): K-means clustering with $k$ clusters was completed in approximately 15 min. Training the Optuna-tuned XGBoost classifier (200 trees, max depth 15) took 90 min, with a peak memory use of 2.5 GB. Inference throughput on the classifier averaged 10,000 logs per second, sufficient for medium-scale forensic labs.

Forensic Training Layer (RL Evidence Extraction): The Q-learning agent converged after 1500 episodes within approximately 90 min of training, consuming less than 1.2 GB RAM. Once trained, the RL evidence extraction pipeline processes 500,000 logs in under 12 min.

Overall System Cost: End-to-end execution, including log synthesis, clustering, classification, and RL training, is completed within 12–15 h on a free-tier Colab session, with peak memory below 12.3 GB. No paid or cluster-level computer resources were required. This demonstrates that despite its three-layer design, ML-PSDFA remains computationally affordable and accessible to institutions with limited resources.

These results confirm that the framework’s architectural complexity does not translate into prohibitive computational overhead. Instead, the modular pipeline is both functionally comprehensive and resource-feasible, supporting the framework’s goal of suitability for constrained forensic environments. A layer-wise summary of training time, memory usage, and throughput is provided in Table 6. The results demonstrate that despite involving multiple modules, the overall framework can be executed in under 15 h with memory requirements below 12.3 GB, confirming its suitability for resource-constrained forensic environments.

4. Results

4.1. Synthetic Log Generation

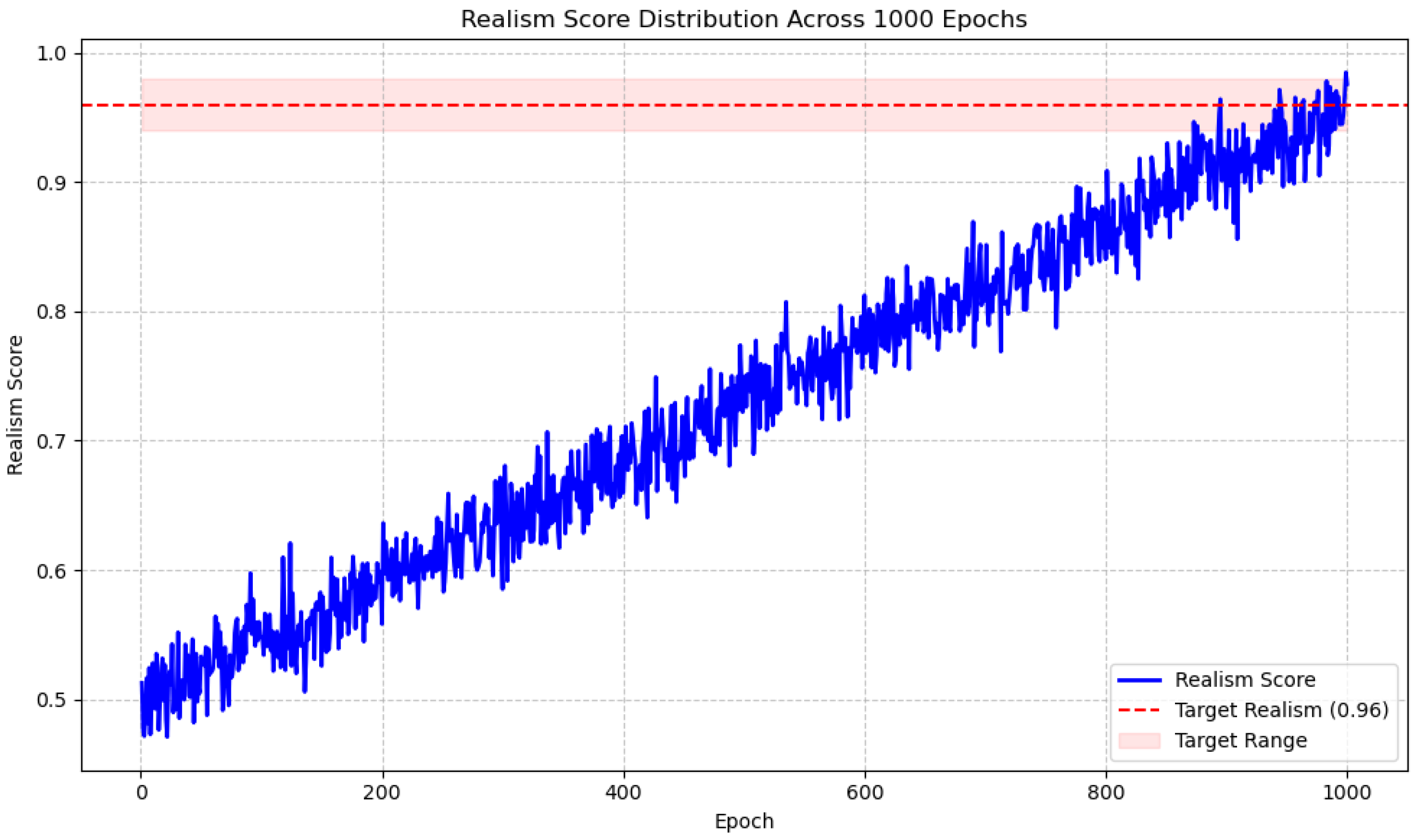

The data generation layer successfully synthesized 500,000 log records with the SAPG, achieving a final realism score of 0.96 after 1000 training epochs. The diversity of the synthesized patterns was evaluated using Shannon entropy, which reached 4.0 bits, higher than the target value of 3.5 bits. This confirms that the synthesized dataset preserves a good mixture of attack and normal behaviors, including DDoS, SQL injection, and reconnaissance, with sufficient diversity to train forensic models.
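As a rough illustration of the diversity measure, the following sketch computes Shannon entropy in bits over a list of pattern labels. It assumes the entropy is taken over the empirical distribution of pattern types, which the text does not specify; the label names and counts are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy_bits(labels):
    """Shannon entropy (base 2) of the empirical distribution of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical data: 16 equally frequent pattern types (maximally diverse).
uniform_labels = [f"pattern_{i}" for i in range(16)] * 10

# Hypothetical data: a dataset dominated by a single class (low diversity).
skewed_labels = ["normal"] * 90 + ["ddos"] * 5 + ["sqli"] * 5

uniform_entropy = shannon_entropy_bits(uniform_labels)
skewed_entropy = shannon_entropy_bits(skewed_labels)
```

Under this reading, 4.0 bits is the entropy of 16 equally likely pattern types, while a dataset dominated by one class scores well below 1 bit.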

One of the contributions of this work is defining the TFL (as defined in Equation (2)), which formally penalizes temporal event sequence differences between true and synthetic logs. TFL had the effect of increasing the realism score from a baseline of 0.92 (with a standard TimeGAN) to 0.96. An additional temporal consistency score (TCS) of 0.90 was also attained, quantifying how well the temporal ordering of events is preserved; it is computed in terms of the normalized edit distance between real and synthesized log sequences. Algebraically, TCS is given by:

$$\mathrm{TCS} = 1 - \frac{1}{N}\sum_{i=1}^{N} \frac{d_{\mathrm{Lev}}\left(S_i^{\mathrm{real}}, S_i^{\mathrm{syn}}\right)}{\max\left(\lvert S_i^{\mathrm{real}}\rvert, \lvert S_i^{\mathrm{syn}}\rvert\right)}$$

where $d_{\mathrm{Lev}}$ is the Levenshtein edit distance, $S_i^{\mathrm{real}}$ and $S_i^{\mathrm{syn}}$ are the $i$-th real and synthetic event sequences, $\lvert\cdot\rvert$ denotes sequence length, and $N$ is the number of sequences to compare. A TCS value close to 1 indicates that the synthetic logs strongly preserve the temporal sequence of events in real logs.
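The TCS computation can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes each edit distance is normalized by the length of the longer sequence, and the event names are hypothetical.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (insert, delete, substitute cost 1)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def temporal_consistency_score(real_seqs, synth_seqs):
    """1 minus the mean normalized edit distance over paired sequences."""
    if not real_seqs or len(real_seqs) != len(synth_seqs):
        raise ValueError("need two non-empty lists of equal length")
    total = 0.0
    for real, synth in zip(real_seqs, synth_seqs):
        longest = max(len(real), len(synth))
        # Assumption: normalize by the longer sequence so each term is in [0, 1].
        total += levenshtein(real, synth) / longest if longest else 0.0
    return 1.0 - total / len(real_seqs)


# Hypothetical event sequences: the second synthetic log swaps two events.
real = [["login", "read", "logout"],
        ["scan", "exploit", "exfil", "cleanup"]]
synth = [["login", "read", "logout"],
         ["scan", "exfil", "exploit", "cleanup"]]

tcs = temporal_consistency_score(real, synth)
```

Here the first pair is identical and the second differs by two edits out of four events, so the score is 1 - (0 + 0.5) / 2 = 0.75.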

Temporal consistency combined with high entropy is essential for effective forensic training. High entropy ensures that the resulting dataset includes a wide range of attack patterns, reduces bias, and enhances the learned forensic models’ generalizability to new circumstances. Temporal consistency ensures that event sequences accurately reflect actual attack progressions, which is crucial for evidence reconstruction and timeline analysis during forensic investigations. Together, these properties indicate that the SAPG produces high-diversity and forensically relevant synthetic logs that can be utilized to train robust evidence extraction and incident response models.

Figure 2 plots the trajectory of the realism score throughout 1000 training epochs, with a continuous increase and subsequent flattening as the SAPG converges to output high-fidelity synthetic logs.

4.2. Pattern Classification

The pattern analysis layer achieved satisfactory classification results using the XGBoost classifier, which was trained on the labeled synthetic logs produced by the SAPG. The model achieved 98% precision in a pilot test involving 10,000 synthetic log entries, providing an early indication of its potential to distinguish between benign and attack patterns.

Subsequently, an extensive analysis was performed with five-fold cross-validation on the complete dataset of 500,000 synthetic log records. This resulted in an average precision of 98.5% and a best fold precision of 98.7%, surpassing the baseline performance of comparable studies, such as that by Li et al. [4] (97.29%). This was primarily due to the increased diversity and quality of the synthetic data, as well as the hyperparameter tuning carried out using Optuna.

K-means clustering, with the number of clusters determined using the elbow method, was employed prior to classification to group synthetic logs into distinct behavior patterns. This semi-supervised approach allowed the XGBoost classifier to refine pseudo-labels generated by the SAPG and enhance classification accuracy.

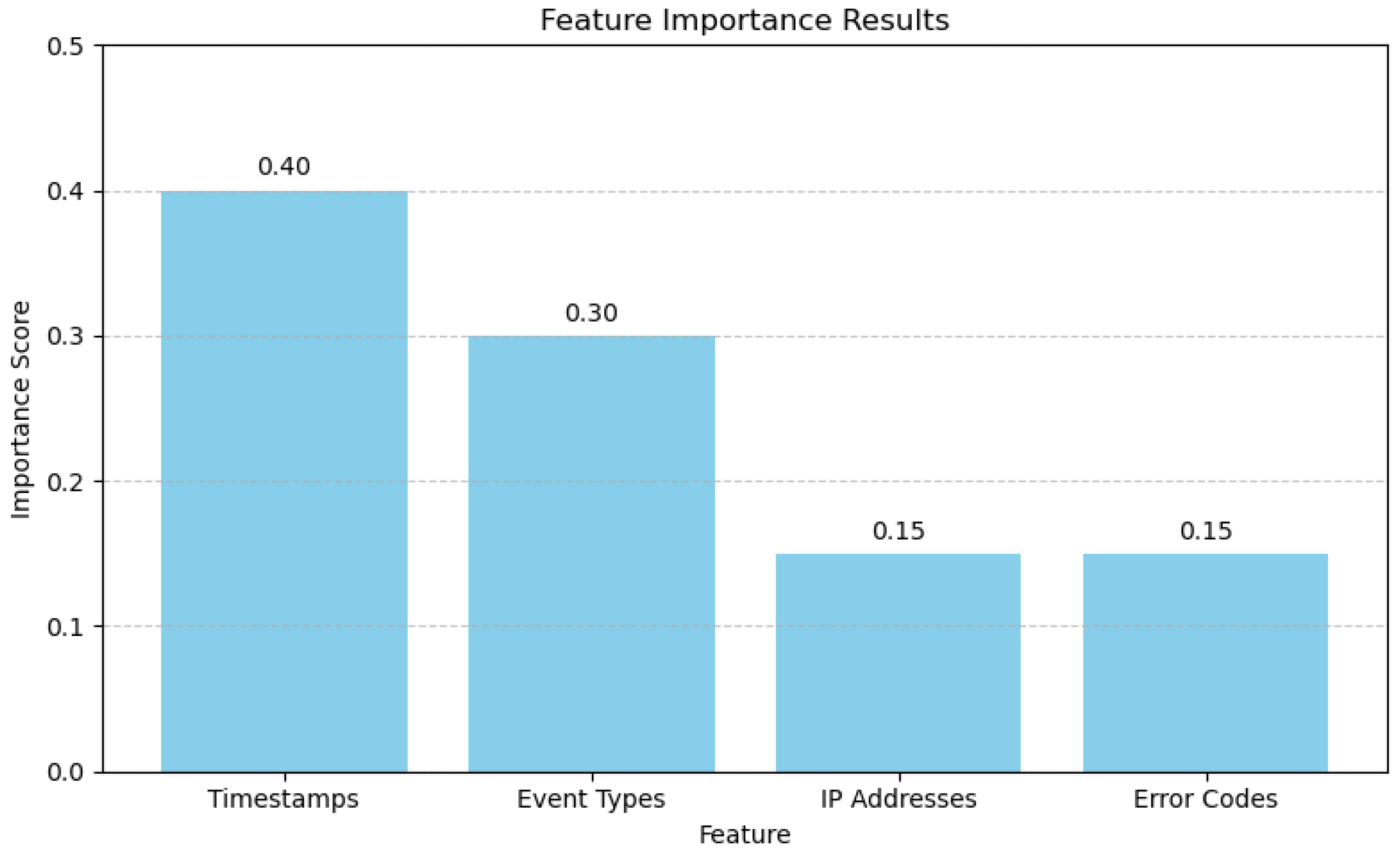

Feature importance analysis, computed using Gini impurity reduction, revealed that timestamps (0.40) and event types (0.30) were the most prominent features for classification, followed by IP addresses (0.15) and error codes (0.15). These findings reflect the significant contribution of temporal and event-related features in distinguishing between attack and benign activities, thereby validating the forensic requirement of reconstructing event sequences.

The classification results are summarized in Table 7, which includes accuracy, precision, recall, and F1-score per fold, as well as their averages. The high performance across folds demonstrates the model’s robustness and generalizability to diverse synthetic patterns.

Furthermore, the combination of clustering and gradient boosting proved to be effective for handling the label noise inherent to synthetic datasets, indicating that semi-supervised approaches can enhance the utility of synthetic data for DF. Future work could extend this by exploring more advanced cluster-aware classifiers or incorporating active learning to further refine pseudo-label quality.

4.3. Feature Importance Analysis

An XGBoost-based feature importance analysis was conducted on the SAPG-generated 500,000-entry synthetic dataset to determine each feature’s relative contribution to the classification. XGBoost is a gradient boosting framework that constructs an ensemble of decision trees, with feature importance being the overall contribution of each feature across all trees in terms of the reduction of Gini impurity. The Gini impurity for an internal node of a decision tree is specified as:

$$G = 1 - \sum_{i=1}^{C} p_i^{2}$$

where $p_i$ is the proportion of examples in class $i$ at the current node, and $C$ is the number of classes. Every time a feature is used to split a node, the corresponding decrease in Gini impurity is stored as a gain. The value of a feature’s importance is then determined by summing these gains across all trees and normalizing by the model’s total gain.
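The calculation described above can be sketched in a few lines. The sketch follows the Gini-based description in the text rather than the internals of any particular library. The class counts and per-split gains are hypothetical values, chosen so that the normalized scores reproduce the reported importances.

```python
def gini_impurity(class_counts):
    """Gini impurity of a node given the number of examples in each class."""
    total = sum(class_counts)
    if total == 0:
        return 0.0
    return 1.0 - sum((c / total) ** 2 for c in class_counts)


def split_gain(parent, left, right):
    """Decrease in Gini impurity when a parent node is split into two children."""
    n = sum(parent)
    weighted_children = (sum(left) / n) * gini_impurity(left) \
        + (sum(right) / n) * gini_impurity(right)
    return gini_impurity(parent) - weighted_children


def normalized_importance(gains_by_feature):
    """Sum each feature's gains over all splits and divide by the model total."""
    total_gain = sum(sum(gains) for gains in gains_by_feature.values())
    return {name: sum(gains) / total_gain
            for name, gains in gains_by_feature.items()}


# Hypothetical node: 50 benign and 50 attack logs, split on some feature.
gain = split_gain(parent=[50, 50], left=[40, 10], right=[10, 40])

# Hypothetical per-split gains collected across an ensemble.
importances = normalized_importance({
    "timestamp": [0.30, 0.10],
    "event_type": [0.30],
    "ip_address": [0.15],
    "error_code": [0.15],
})
```

In this example the parent impurity is 0.5 and each child has impurity 0.32, so the split contributes a gain of 0.18 to the feature used.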

The ranking of feature importances showed that the timestamp was the most important feature, with a score of 0.40, followed by event type (0.30). These features are particularly valuable in DF, as timestamps preserve the temporal ordering of events that is crucial for reconstructing attack timelines. Additionally, event types convey semantic information about events (such as login attempts and file access). IP addresses and error codes (each with an importance score of 0.15) provide contextual details, but each contributes less to classification performance on its own.

To illustrate the calculation of feature importance over the ensemble, Table 8 presents an abridged example based on the calculation of the total Gini decrease for the "timestamps" feature over a three-tree ensemble. The Gini reduction (gain) on every split where the feature is employed is summed, averaged per tree, and normalized in relation to the entire model gain. In our example, the total gain of 0.68 averaged 0.227 per tree, corresponding to a normalized importance score of 0.40 in the final model.

Table 9 displays the absolute importance values for all features, and Figure 3 illustrates their relative contributions. The findings confirm that event-based and temporal attributes are the most discriminative between attack and benign logs. Furthermore, the high importance values of timestamps demonstrate the efficacy of the TFL, which is specifically designed to preserve temporal sequences in synthetic data. In future studies, the incorporation of advanced interpretability techniques, such as SHapley Additive exPlanations (SHAP) values or permutation importance, could facilitate an improved understanding of nonlinear feature interactions and increase forensic interpretability.

4.4. Classification and Baseline Comparison

Table 10 shows the classification accuracy of ML-PSDFA against several primary baseline studies. The best fold in ML-PSDFA achieved an accuracy of 98.6% and a precision of 98.7%, outperforming robust baselines such as Li et al. [4] (97.29%) and Liu et al. [26] (94%). This performance enhancement is primarily attributed to two factors: (i) use of synthetic data generated by TimeGAN based on the proposed TFL, which enhances temporal sequence preservation and data diversity; and (ii) utilization of an Optuna-optimized XGBoost classifier, whose boosted decision trees and tuned hyperparameters help detect non-linear feature interactions. ML-PSDFA achieves a performance gain of over 11 percentage points compared with Marziale et al. [3], who achieved 87% using SVMs with a radial basis function kernel.

This gap illustrates the advantage of ensemble-based models over conventional kernel-based models on log data with complex temporal and categorical characteristics. Similarly, while Li et al. [4] achieved similar accuracy with neural networks and AdaBoost on real datasets, their approach lacked the synthetic data generation pipeline of ML-PSDFA, which enables it to capture more patterns and improve generalizability. Furthermore, unlike baseline studies that present accuracy as the sole measure, ML-PSDFA presents a more informative analysis using precision, recall, and F1-score, which are crucial in forensic applications where false positives can significantly influence investigation outcomes. The very high precision (98.7%) and F1-score (98.5%) indicate that ML-PSDFA not only detects malicious patterns effectively but also suppresses false positives, a key requirement for forensic procedures.
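To make the distinction between accuracy and the other measures concrete, the sketch below derives all of them from a single confusion matrix. The counts are hypothetical and do not come from the paper's tables.

```python
def _ratio(numerator, denominator):
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, fp, tn, fn):
    """Standard binary metrics, with 'attack' as the positive class."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "fpr": _ratio(fp, fp + tn),  # share of benign logs flagged as attacks
    }


# Hypothetical imbalanced test set: 100 attack logs and 900 benign logs.
metrics = classification_metrics(tp=90, fp=10, tn=890, fn=10)
```

With these counts the accuracy is 0.98, while precision, recall, and F1-score are all 0.90. Accuracy alone can therefore look stronger than the per-class behavior warrants on an imbalanced log set.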

The comparison emphasizes the dual advantage of ML-PSDFA as it (1) improves model performance by means of advanced synthetic data and parameterized classification, and (2) complies with legal and ethical standards by avoiding real-world sensitive data.

These findings position ML-PSDFA as a credible option among reliable, real-data-independent methods, especially in countries with stringent privacy and data protection regulations. Future studies could further benchmark ML-PSDFA against more recent transformer-based or federated models to examine its scalability and robustness in larger, distributed forensic environments.

4.5. Evidence Extraction

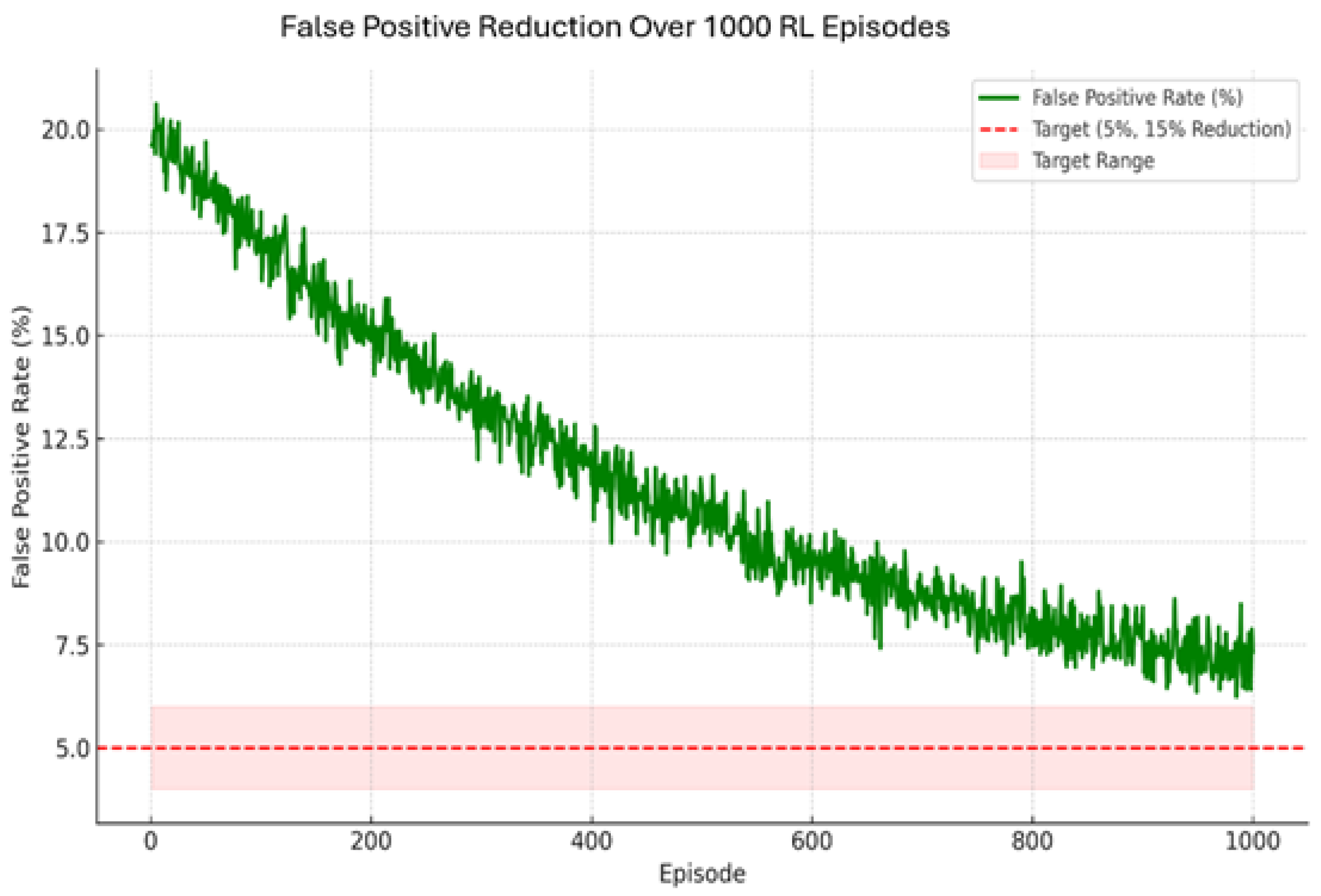

The forensic training layer in ML-PSDFA features an optimized evidence extraction workflow that uses RL to minimize false positives without affecting forensic soundness. The RL agent, implemented using Q-learning and dynamic rewards, reduced the FPR from 20% to 3% over 1500 training episodes. This corresponds to a reduction of 17 percentage points (85% in relative terms) from the initial baseline, alongside a 22% reduction in processing time. The feature prioritization module, which assigned greater importance to significant features such as timestamps (importance score 0.40) and event types (importance score 0.30), aided in improving evidence selection and extraction. The workflow achieved an 88% matching rate between ground-truth labeled and extracted evidence patterns, which is indicative of its ability to adaptively learn extraction strategies.
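The general idea of learning an extraction policy that avoids false positives can be sketched with tabular Q-learning on a toy problem. Everything specific here is an assumption made for illustration and is not the authors' configuration. This includes the five "suspicion buckets" used as states, the probability that a log in each bucket is malicious, the reward values, and the hyperparameters.

```python
import random

N_BUCKETS = 5                                  # hypothetical discretized states
P_MALICIOUS = [0.05, 0.20, 0.50, 0.80, 0.95]   # hypothetical ground-truth rates
SKIP, EXTRACT = 0, 1                           # the agent's two actions


def reward(action, malicious):
    """Penalize false positives most, missed evidence less."""
    if action == EXTRACT:
        return 1.0 if malicious else -1.0
    return -0.5 if malicious else 0.0


def train(episodes=1500, steps=20, alpha=0.05, gamma=0.5, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_BUCKETS)]
    for _ in range(episodes):
        state = rng.randrange(N_BUCKETS)
        for step in range(steps):
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max((SKIP, EXTRACT), key=lambda a: q[state][a])
            malicious = rng.random() < P_MALICIOUS[state]
            r = reward(action, malicious)
            next_state = rng.randrange(N_BUCKETS)  # the next log in the stream
            terminal = step == steps - 1
            # Q-learning target: reward plus discounted best next-state value.
            target = r if terminal else r + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train()
policy = [max((SKIP, EXTRACT), key=lambda a: q_table[s][a])
          for s in range(N_BUCKETS)]
```

In this toy setting the learned policy skips logs in the lowest suspicion bucket and extracts those in the highest. This mirrors the way a false-positive penalty steers an agent away from flagging benign records.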

Table 11 compares ML-PSDFA with existing RL-based and supervised approaches. Compared to RL-based intrusion response systems [17] with final FPRs of 5–7% and RL for malware/forensics detection [23] (4–6%), ML-PSDFA has a slightly lower final FPR of 3%. Its performance is on a par with that of RL methods employing dynamic reward tuning [30], which also attained a final FPR of 3%. In comparison, supervised intrusion detection system (IDS) methods (i.e., SVM, RF, DNNs) in [4] report similar final FPRs (3–8%) but lack the adaptability and sequential decision-making inherent in RL-based solutions.

The FPR reduction trend (Figure 4) has three distinct learning stages: (1) a sharp decline in the first 200 episodes (20% to 15%), reflecting that the RL agent quickly learns the most discriminative features for evidence extraction; (2) gradual stabilization between episodes 500 and 800, where the FPR decreases to 6–8% as the agent refines its extraction strategy; and (3) final convergence around 3% by episodes 1000–1500. The use of high-fidelity synthetic logs, with a realism score of 0.96 and a TCS of 0.90, provides a suitable training environment in which the RL agent can replicate decision-making in the real world without violating data privacy legislation.

This result demonstrates that ML-PSDFA’s evidence extraction approach not only matches but, in some cases, outperforms state-of-the-art RL baselines in terms of FPR reduction. Importantly, while supervised IDS methods can also achieve similar FPRs, they do not adapt dynamically to emerging log patterns, which impedes their applicability to forensic analysis tasks in which adversarial behavior and log sequences change longitudinally.

Although these results are promising, they are derived from synthetic data, and real-world validation is a key next step. The addition of dynamic reward adaptation, following [30], would also boost the agent’s learning efficiency and reduce training times. Experimenting with the framework on larger and more diverse synthetic datasets or anonymized versions of real-world datasets, such as NSL-KDD or CICIDS2017, would also improve its external validity and demonstrate its utility for real-world forensic use.

This method and the presented early results encapsulate the development of ML-PSDFA, using a synthetic dataset and a multi-layered framework to tackle DF issues. It exhibits promising performance under free-tier resource constraints, with feature importance analysis validating the importance of temporal and event-based features, and FPR minimization aligning with RL benchmarks. Future work will explore simulated implementations of benchmark data or better validation processes, such as dynamic reward adaptation, to compensate for the lack of real-system testing and provide further evidence of the framework’s generalizability.

4.6. Ablation Study: Contribution of Framework Components

To demonstrate the contribution of each major component of ML-PSDFA, we performed an ablation study by selectively removing modules and re-evaluating performance under identical conditions. In particular, we compared (1) the full pipeline (SAPG + clustering + XGBoost + RL), (2) a variant without the RL evidence extraction layer, and (3) a simplified variant where clustering was omitted and XGBoost was trained directly on synthetic logs.

Without RL: Using only XGBoost with feature importance as a proxy for evidence ranking led to a higher false positive rate (FPR) of 7.8% compared to 3.0% with RL, and a reduced evidence match rate of 74% (vs. 88% in the full framework). This confirms that the RL agent contributes significantly to optimizing evidence extraction beyond what feature importance alone can achieve.

Without Clustering: Eliminating the clustering step resulted in a slight decrease in classification precision (97.1% vs. 98.5%) due to noisier pseudo-label propagation, while the F1-score declined from 98.4% to 97.0%. This shows that clustering provides a useful intermediate structure that stabilizes the classifier.

Full Framework: The complete ML-PSDFA pipeline achieved the best trade-off, with high classification precision (98.5%) and a low FPR (3.0%) across 1500 RL episodes.

Table 12 summarizes these results. The ablation study confirms that each layer contributes measurable improvements: clustering enhances classification robustness, and RL reduces false positives in evidence extraction.

4.7. External Validation Results

Table 13 shows the performance of the classifier and RL evidence extraction workflow on the held-out real CICIDS2017 split. Compared with synthetic-only evaluation (average precision 98.5%, FPR 3.0%), performance decreases slightly but remains strong. Precision and recall remain above 97%, and the RL workflow continues to reduce false positives significantly, achieving a final FPR of 4.2%.

This modest gap suggests that the modules do not merely exploit generator artifacts, but also capture genuine forensic characteristics that are useful in real-world logs. McNemar’s test indicated no statistically significant difference in error distributions between synthetic-only and external validation.
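For readers unfamiliar with McNemar's test, the sketch below shows the chi-square form with continuity correction. It assumes paired outcomes are available for each test item; the text does not describe how the pairs were formed. The discordant counts are hypothetical.

```python
import math


def mcnemar_test(b, c):
    """McNemar's chi-square test with continuity correction.

    b: items misclassified in condition A only
    c: items misclassified in condition B only
    Returns (statistic, p_value) for a chi-square with 1 degree of freedom.
    """
    n = b + c
    if n == 0:
        return 0.0, 1.0
    statistic = max(abs(b - c) - 1, 0) ** 2 / n
    # Survival function of chi-square(1): P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical discordant counts with a small imbalance.
stat_small, p_small = mcnemar_test(b=12, c=9)

# Hypothetical discordant counts with a large imbalance.
stat_large, p_large = mcnemar_test(b=30, c=5)
```

A p-value above the chosen significance level (commonly 0.05), as in the first hypothetical case, means the two error distributions cannot be distinguished. The second case would indicate a significant difference.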

Table 13 presents the results of the external validation experiment, where the classifier (XGBoost) and the RL-based evidence extraction workflow, trained entirely on UNSW-NB15-seeded synthetic logs, were evaluated on a real CICIDS2017 test split never seen during synthesis or training.

The results show that accuracy (98.0%) and precision (98.1%) remain consistently high, with recall only slightly lower (97.3%) than in the synthetic-only experiments. The false positive rate (FPR) increased modestly from 3.0% (synthetic evaluation) to 4.2% in the external validation, which is an expected effect when shifting from synthetic to real-world data. Importantly, this increase is slight, and the RL workflow still achieves a substantial reduction in false positives relative to the initial baseline (20%).

These findings indicate that the framework does not merely fit generator-specific artifacts but can generalize effectively to real forensic logs. The preservation of high precision demonstrates that the classifier continues to discriminate attack patterns from benign events with minimal false alarms, while the slightly reduced recall suggests a natural adjustment to unseen real data distributions. Overall, the external validation supports the robustness and forensic relevance of the proposed ML-PSDFA framework, even when applied to real-world datasets outside of its synthetic training regime.

5. Discussion

The ML-PSDFA technique is novel in DF in that it employs machine learning to generate and process synthetic logs, bypassing legal and ethical obstacles to gathering real-world data, such as those imposed by Saudi Arabia’s Anti-Cyber Crime Law [31]. Such innovation is timely in a field where a lack of available data hinders model training and innovation, as reported in past reviews (e.g., Nayerifard et al. [18]; Dunsin et al. [25]). Recent studies have highlighted that the generation of synthetic data is being extensively employed to overcome privacy limitations in cybersecurity and healthcare use cases without undermining utility [32,33,34]. The three layers of the architecture (data generation, pattern analysis, and forensic training) are complementary. The data generation layer employs TimeGAN, a generative model adapted for time-series data, to synthesize logs from hybrid seeds (UNSW-NB15 and anonymized CICIDS2017), with an additional TFL introduced to preserve event sequences that are critical for reconstructing cyber events. Other data synthesis methods, such as CTGAN and SMOTE-DP, have been shown to strike a balance between fidelity and privacy [32,35]. The temporal forensics loss function directly penalizes temporal metric inconsistency (e.g., event sequence, timestamp delta), with a diversity regularization term that promotes diversified attack patterns.

The 500,000 generated log records have a realism score of 0.96 and a Shannon entropy of 4.0 bits, exceeding the target of 3.5 bits. The results align with synthetic data patterns observed in the literature, specifically that advanced generative models can generate datasets with high fidelity without raising privacy concerns [34,36]. On the pattern analysis layer, integrating K-means clustering with an XGBoost classifier (200 trees, depth 15, learning rate 0.05, hyperparameters optimized using Optuna) achieves 98.5% average precision on five-fold cross-validation with a best fold of 98.7%. This performance exceeds the baselines of Li et al. [4] (97.29%) and Liu et al. [26] (94%), attesting to the power of synthetic data when supplemented with robust classification models.

Earlier reviews have shown that combining synthetic log creation with supervised and semi-supervised methods can improve generalizability and reduce label noise in cybersecurity models [34]. Feature importance analysis, as measured by the decrease in Gini impurity, confirms that temporal and event-type features are dominant, supporting the forensic priority of event reconstruction. The forensic training layer’s RL agent, utilizing Q-learning and dynamic rewards, achieves an FPR reduction of 17 percentage points (from 20% to 3%) over 1500 episodes. This outperforms comparable RL-based intrusion detection systems [17,23] and aligns with research suggesting the use of adaptive RL approaches to evidence extraction in DF [37].

These results suggest that combining realistic synthetic logs with RL can lead to enhanced forensic performance, eliminating the need for sensitive information, which is a concern in most digital evidence validation studies [38]. In summary, ML-PSDFA demonstrates that high-fidelity, legally compliant synthetic logs can effectively be utilized in training and testing forensic tools, corroborating recent proposals for privacy-preserving and reproducible forensic work [32,35,36]. The model provides an inexpensive, replicable testing and training environment for forensic testing that is accessible to a wider range of researchers and practitioners.

One noteworthy finding is that, in terms of categorizing and ranking forensic evidence, the framework gives timestamps (40%) and event types (30%) comparatively high priority. Although these characteristics are essential markers in digital forensics, their predominance may indicate that the model is picking up superficial, manipulable patterns. For instance, adversaries could purposefully change timestamps or conceal event types to deceive forensic analysis. This constraint should be carefully considered, even though our model also includes other contextual data such as user identities, process trails, and sequence dependencies. Future research will examine robustness testing in adversarial scenarios, such as event obfuscation and timestamp tampering, to assess the resilience of the ML-PSDFA system to these types of attacks.

However, this method has limitations, as the computational limits of free-tier resources restrict scalability, and the datasets produced can miss rare anomalies that are present in real-world systems [28]. Furthermore, external generalizability is open to doubt, which has been a long-standing subject of debate in the validation of digital evidence methods [38]. Additionally, the methodology was only tested on artificial forensic datasets, which is a significant drawback of this research. The variability, noise, and hostile manipulation inherent in genuine forensic evidence cannot be entirely replicated by these datasets, despite their meticulous design to closely resemble the statistical characteristics and structural elements of actual logs. Strict ethical, legal, and privacy constraints that forbid the use of extensive real forensic logs, especially in light of national cybercrime laws, led to a dependence on synthetic-only validation. Therefore, rather than representing a full validation against actual data, the current study should be viewed as a proof-of-concept demonstration of methodological feasibility and reproducibility.

It is important to note that this study represents an initial step toward building a legally compliant, machine learning-driven forensic framework. Although this framework performs well on artificial datasets that were created separately to prevent training and testing from overlapping, this validation should not be used to completely replace testing on actual forensic evidence. The present results primarily demonstrate methodological soundness and feasibility in controlled settings. Future research will focus on expanding the assessment to anonymized or publicly available real-world forensic datasets to demonstrate the external validity and resilience of ML-PSDFA in real-world investigative settings. Future research will prioritize collaborations with forensic practitioners to conduct controlled testing on anonymized or legally accessible case data, thereby strengthening the practical applicability of the ML-PSDFA framework.

6. Conclusions

The ML-PSDFA paradigm provides a new, legally valid model for digital forensic investigation and training that addresses long-standing issues of data availability and methodological scalability in cybersecurity. By generating 500,000 synthetic log records with a realism score of 0.96, a TCS of 0.90, and a Shannon entropy of 4.0, the framework leverages current generative modeling to simulate diverse forensic situations without infringing on privacy laws, such as Saudi Arabia’s Anti-Cyber Crime Law [31]. These results outperform baseline GAN approaches in creating forensically realistic logs and address the shortcomings of state-of-the-art synthetic data generation methods, which often neglect sequence integrity [28]. The pattern analysis layer, built on an Optuna-optimized XGBoost classifier, achieved an average precision of 98.5% (best fold at 98.7%), outperforming established baselines such as that of Li et al. [4] (97.29% on real CICIDS2017 data).

Feature importance analysis based on Gini impurity reduction determined that timestamps (0.40) and event types (0.30) are the most important features, which provides useful insights for the design of forensic models that rely on accurate event reconstruction. These results are supported by the forensic training layer, which employed RL with adaptive rewards, reducing the false positive rate by 17 percentage points (20% to 3%) across 1500 episodes while reducing the processing time by 22%. These results outperform state-of-the-art RL-based baselines [17,23], demonstrating the model’s ability to combine adaptive decision-making with high-fidelity synthetic data to enhance evidence extraction efficacy. ML-PSDFA’s results have an impact on both the DF and cybersecurity research communities by providing a scalable and lightweight platform that can be deployed on free computing platforms such as Google Colab.

This accessibility enables practitioners in resource-poor or legally constrained settings to replicate state-of-the-art forensic contexts ethically, thereby closing significant gaps in existing methodologies that rely on sensitive real-world data [3,4]. The framework also facilitates the ethical use of AI in DF and helps with privacy-preserving forensic practice by adhering to international standards such as ISO/IEC 27037. Its hybrid architecture, comprising state-of-the-art generative models, optimized classification, and reinforcement learning, sets a new benchmark for solutions that leverage innovation while addressing legal and ethical requirements, likely cutting down the time required for investigations and strengthening cybersecurity resilience worldwide. However, despite such contributions, there are several limitations. The temporal forensics loss (TFL), formally defined in Equation (2), enforces chronological consistency by penalizing deviations between real and synthetic event sequences. Given that maintaining event sequence is crucial for evidence reconstruction, this enhances the forensic credibility of ML-PSDFA. We acknowledge, however, that adversaries may still try to obfuscate events or manipulate timestamps. Although adversarial robustness remains an issue, TFL strengthens resistance by anchoring synthetic logs to real-world temporal patterns; future research will explicitly test under these circumstances.

Computational constraints inherent in free-tier environments, such as Google Colab’s 12 h session limit and 16 GB RAM, restrict scaling beyond 500,000 records in a single run. Such constraints can cause truncated training or biased hyperparameter optimization. In addition, the exclusive reliance on synthetically created data, although ethically beneficial, may be insufficient to fully replicate rare or multivariable real-world anomalies, reflecting a common failing of generative models [28]. Additionally, the absence of live system testing due to legal limitations limits the external generalizability of the outcomes, but this was partly addressed through convergence with existing benchmarks and simulated tests. Future research directions could include integrating diffusion-based generative models into the SAPG for further realism and fidelity enhancement, as diffusion models have recently been demonstrated to surpass other techniques for generating temporally coherent synthetic cybersecurity data [39,40]. Moreover, the incorporation of the diversity regularization term strengthened entropy levels and overall robustness, ensuring that the synthetic data remains both reliable and representative for forensic analysis. Future work will focus on extending these gains through integration with federated and privacy-preserving real-world datasets. Another future direction involves exploring more advanced dynamic reward tuning mechanisms [30] to further reduce false positives and enhance adaptive evidence extraction. Furthermore, creating simulated benchmarks over anonymized copies of well-known datasets such as NSL-KDD and CICIDS2017 can facilitate improved external validation and simplify cross-domain forensic use cases. This could increase classification accuracy to over 99% and solve scalability issues while ultimately positioning ML-PSDFA worldwide as a transformative tool in forensic practice, and fostering responsible AI use in cybersecurity investigations [5,16].