Abstract

Reliable gesture interfaces are essential for coordinating distributed robot teams in the field. However, models trained in a single domain often perform poorly when confronted with new users, different sensors, or unfamiliar environments. To address this challenge, we propose a memory-efficient replay-based domain incremental learning (DIL) framework, ReDIaL, that adapts to sequential domain shifts while minimizing catastrophic forgetting. Our approach employs a frozen encoder to create a stable latent space and a clustering-based exemplar replay strategy to retain compact, representative samples from prior domains under strict memory constraints. We evaluate the framework on a multi-domain air-marshalling gesture recognition task, where an in-house dataset serves as the initial training domain and the NATOPS dataset provides 20 cross-user domains for sequential adaptation. During each adaptation step, training data from the current NATOPS subject is interleaved with stored exemplars to retain prior knowledge while accommodating new variability. Across 21 sequential domains, our approach attains higher accuracy in the domain incremental setting than pooled fine-tuning, incremental fine-tuning, and Experience Replay. Performance also approaches the joint-training upper bound, which represents the ideal case where data from all domains are available simultaneously. These results demonstrate that memory-efficient latent exemplar replay provides both strong adaptation and robust retention, enabling practical and trustworthy gesture-based human–robot interaction in dynamic real-world deployments.

1. Introduction

Human–robot interaction (HRI) is an interdisciplinary domain concerned with the analysis, design, and assessment of robotic systems that operate alongside or in collaboration with humans [1]. Communication forms the foundation of this interaction, and the manner in which it occurs depends strongly on whether the human and the robot share the same physical environment [1]. In high-noise settings such as construction zones, manufacturing floors, and airport ramps, standard voice-based or teleoperation-based communication frequently fails due to excessive background noise, limited maneuverability, and safety considerations. Consequently, dependence on these conventional communication methods may undermine both efficiency and user well-being, highlighting the necessity for alternative, context-sensitive communication strategies. In such situations, hand gesture-based interaction provides a subtle and natural means for human–robot collaboration [2]. This nonverbal communication generally corresponds with human social behavior and can substantially reduce cognitive burden, making it more effective than verbal communication in challenging environments [2]. A classic example in practice is the system of air-marshalling signals, standardized hand gestures that have long enabled pilots and ground crews to coordinate aircraft movement reliably and unambiguously. Recent studies on vision-driven systems in robotics have demonstrated effective recognition of ramp hand signals through deep learning and computer vision methods, thereby enhancing the autonomy and operational safety of unmanned vehicles [3,4,5]. These developments collectively establish gesture-based human–robot interaction as an essential framework for promoting natural, accessible, and reliable communication between humans and robots, especially in contexts where conventional verbal communication is unfeasible [2,4,5,6,7].

Gesture-based communication is a promising approach in HRI to improve ergonomics, decrease downtime, raise safety, and boost resilience, where even children, older people, hearing- and speech-impaired individuals, or non-technical individuals with limited knowledge of robot operation can interact with robots [2,4,5,8,9,10,11]. However, one of the primary challenges in deploying robotic systems in real-world settings lies in the phenomenon of domain shift. Domain shift occurs when a model trained on data from one domain (the source) is deployed in another domain (the target) where it must perform the same task, yet the data distributions differ [12,13,14,15]. The difference in the data distribution in gesture-based HRI system deployment can be due to illumination, background clutter, camera viewpoints, or variations in human gesture execution styles across facilities, operators, and over time. These distributional differences severely impair model performance and render straightforward deployment impractical [16,17].

Domain incremental learning (DIL), which trains models to generalize across new domains encountered sequentially while keeping the task unchanged, is one method for addressing domain shift [18,19]. This approach helps HRI systems adapt to new domain knowledge, mirroring how a robot must generalize in real-world scenarios. One of the fundamental challenges in DIL is catastrophic forgetting: when a neural network is trained on new tasks, its performance on previously learned tasks decreases drastically because the parameters critical for earlier knowledge are overwritten by the new learning [20,21,22,23,24,25,26]. This problem is particularly relevant to domain incremental learning, where a system must continuously learn from new users who might perform gestures in different environments and at different speeds without losing proficiency in previously learned gesture patterns. Through effective retraining protocols and limited sample retention, research on continual gesture learning for HRI has shown great promise for incrementally learning new gesture classes while reducing forgetting [27,28,29,30,31]. However, a notable gap in the current literature is that most efforts have focused on class-incremental learning, whereas the challenge of adapting to new domains, such as different users and environments, within a DIL framework remains underexplored.

To address these challenges, our work focuses on designing gesture-based HRI systems that can work reliably in a wide range of real-world situations. Specifically, we target the open problem of adapting to new users and environments under a domain incremental learning framework, while minimizing catastrophic forgetting. Subject-as-domain domain incremental learning (DIL) frames each subject or human operator as a distinct domain within a shared task and label space. Under this formulation, gesture patterns vary across users due to individual morphology, motion style, and environmental context, producing sequential domain shifts that challenge model generalization [32]. This formulation falls under the domain incremental learning paradigm, in which the input distribution changes across domains while the label space remains fixed [33]. Our study pursues two complementary objectives: (1) adaptation to continuously learn new subject domains with high accuracy and robustness under shifting distributions; and (2) retention to preserve prior-domain performance by minimizing catastrophic forgetting. Together, these objectives enable human-centered incremental learning, where the system remains dependable and inclusive across diverse users. We address the challenge of memory efficiency by investigating techniques that selectively keep and replay the most informative samples from previous domains. In addition, we emphasize the importance of robust evaluation protocols that not only assess recognition accuracy but also capture retention, forgetting, and resource consumption across long sequences of domain shifts. Together, these directions aim to bridge the gap between current class-incremental approaches and the practical requirements of domain-incremental gesture recognition in human–robot interaction. The main contributions of this work can be summarized as follows:

- We present a multi-domain air-marshalling hand signal recognition framework under a DIL paradigm, facilitating robust adaptation to new users and environments without sacrificing prior knowledge.

- We introduce a memory-efficient latent exemplar replay strategy where the latent embedding of gesture video generated by a frozen encoder is used for DIL training to secure the privacy of the user.

- We develop a clustering-based exemplar selection mechanism to identify and store the most representative samples from previously learned domains, thereby enhancing generalization in subsequent learning phases.

- We investigate extensive evaluation metrics that go beyond accuracy, explicitly quantifying knowledge retention, forgetting, and resource utilization across extended sequences of domain shifts.

The remainder of this paper is organized as follows. Section 2 presents the related work on gesture-based domain incremental learning for HRI. Section 3 defines the problem statement for the subject-as-domain DIL setting and the twin goals of adaptation and retention. Section 4 introduces the proposed method, ReDIaL, and its components: latent embeddings (Section 4.1), the cross-modality model (Section 4.2), exemplar memory (Section 4.3.2), and balanced replay (Section 4.3.3). Section 5 covers the experimental setup for ReDIaL. Section 6 reports results across 21 domains with evaluation metrics and analysis. Section 7 discusses implications for human–robot collaboration, and Section 8 concludes with future directions.

2. Related Work

2.1. Gesture Recognition for HRI and Multi-Robot Control

Gesture-based interfaces that are easy to use and do not require technical knowledge can improve human–robot interaction [34]. This multidisciplinary field, which has its roots in early voice-gesture research from the 1990s, currently includes applications in industrial robots, assistive robots, exoskeletons, and prostheses, and extends to cognitive science, ergonomics, IoT, big data, and virtual reality [35]. Using Microsoft Kinect v2 for multi-robot interaction, Canal et al. (2015) [34] created a real-time gesture recognition system that achieved high identification rates in user tests. The system implemented both static and dynamic gesture recognition using weighted dynamic time warping and skeletal features [34]. In their taxonomy of gesture-based human–swarm interaction, Alonso-Mora et al. (2015) divided techniques into two categories: shape-constrained interaction, which controls robot formations, and free-form interaction, which allows robot selection directly [36]. To guarantee single-operator control, Cicirelli et al. (2015) developed an HRI interface that combined quaternion joint angle information from neural network classifiers and multiple Kinect cameras with person re-identification [37]. In a more recent study, Nguyen et al. (2023) created a wireless system that recognizes four distinct gestures using deep neural networks and XGBoost in conjunction with Vicon motion capture technology, achieving an accuracy of roughly 60% for robot control applications [38]. However, most prior HRI gesture systems assume fixed users and sensing setups and do not handle user/environment-induced domain shifts or incremental adaptation; we address this gap with a multi-domain, domain incremental air-marshalling hand-signal recognizer that adapts to new users and contexts without sacrificing previously learned competence.

2.2. Continual, Lifelong, and Domain Incremental Learning

Continual learning addresses the challenge of gradually learning new information without forgetting previously learned material. Deep neural networks are fundamentally limited by catastrophic forgetting [39]. Van de Ven et al. (2022) identified three basic scenarios for continual learning: task incremental, domain incremental, and class incremental learning. Each of these scenarios has unique difficulties and calls for a different approach [33]. Beyond these basic scenarios, Xie et al. (2022) investigated a more intricate setting in which the distributions of classes and domains vary at the same time. They proposed a domain-aware approach that uses bi-level balanced memory and von Mises–Fisher mixture models to address intra-class domain imbalance [40]. To preserve model compactness while preventing forgetting, Hung et al. (2019) offered a complementary strategy that combines progressive network expansion, critical weight selection, and model compression. Together, these studies show that effective continual learning from non-stationary data streams requires architectures and training techniques that balance stability and plasticity [41]. While continual-learning methods study catastrophic forgetting in general, few works instantiate a true domain-incremental HRI setting with a fixed label space and user-driven distribution shift or report retention/forgetting over long domain sequences; our approach formalizes this setting and provides evaluation that jointly measures accuracy, retention, forgetting, and resource usage.

2.3. Memory-Efficient Rehearsal Strategies

For continuous learning in resource-constrained situations, such as robotic systems, memory-efficient rehearsal techniques are essential. ExStream was first presented by Hayes et al. (2018), who showed that while complete rehearsal can prevent catastrophic forgetting, their technique achieves similar results with far lower memory and computation needs [42]. By storing intermediate layer activations rather than raw input data, Pellegrini et al. (2019) introduced Latent Replay, which significantly lowers storage and processing requirements while preserving representation stability through regulated learning rates. This method made it possible to apply continuous learning on smartphones in almost real-time [43]. The gradient-matching coresets created by Balles et al. (2022) for exemplar selection do not require pre-training because they choose rehearsal samples by comparing the gradients created by the coreset to those of the original dataset [44]. Similar to this, Yoon et al. (2021) developed Online Coreset Selection (OCS), which works well on standard, unbalanced, and noisy datasets by iteratively choosing representative and instructive samples that optimize current task adaptation while retaining a high affinity for previous tasks [45]. Existing rehearsal strategies often store raw inputs (raising storage/privacy concerns) or task-specific coresets; in contrast, we propose a privacy-preserving latent exemplar replay that stores compact video embeddings from a frozen encoder and uses clustering-based selection to maintain representative memories under tight budgets while supporting robust adaptation.

3. Problem Statement: Subject-As-Domain Incremental Gesture Learning

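Our domain-incremental gesture recognition framework is based on clearly defined input spaces, a shared label space, and domain-indexed datasets. Two complementary modalities, (1) RGB frames ($x^{\mathrm{rgb}}$) and (2) posture data ($x^{\mathrm{pos}}$), are used to represent each observation $x$. Both $x^{\mathrm{rgb}}$ and $x^{\mathrm{pos}}$ have the dimension $T \times C \times H \times W$, where T is the number of frames, C is the number of channels, H is the height of the frame, and W is the width of the frame. The multimodal observation can therefore be expressed as:

$$x = \left(x^{\mathrm{rgb}}, x^{\mathrm{pos}}\right), \qquad x^{\mathrm{rgb}}, x^{\mathrm{pos}} \in \mathbb{R}^{T \times C \times H \times W}.$$

Each modality is described in detail in Section 4.1. The set of gesture classes to be recognized is represented by the shared label space $\mathcal{Y}$, which is common across all domains. Each observation $x$ is associated with a single label $y \in \mathcal{Y}$. Formally, the dataset corresponding to domain $\mathcal{D}_t$ is given by the following:

$$D_t = \left\{ (x_i, y_i) \right\}_{i=1}^{N_t}, \qquad y_i \in \mathcal{Y},$$

where $N_t$ is the number of labeled observations in domain $\mathcal{D}_t$.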

As the robot is incrementally exposed to new environments, domains are indexed over time:

- At time step $t = 0$, an initial dataset $D_0$ from the source domain $\mathcal{D}_0$, which may correspond to an internally collected dataset in a controlled environment, is used to train the robot.

- For subsequent time steps $t \geq 1$, the robot encounters datasets $D_t$ corresponding to new domains $\mathcal{D}_t$. In our experimental setup, these domains represent distinct participants, each introducing variability in body morphology, environmental conditions, and gesture execution styles, while preserving the same label space $\mathcal{Y}$.

3.1. Domain Shift Formulation

Let $x$ be the multimodal observation and $y$ its gesture label (also referred to as class). Throughout this paper, the terms label and class are used interchangeably. The observation distribution in domain $\mathcal{D}_t$ can be written as follows:

$$P_t(x \mid y) = \int P(x \mid y, s, a, b)\, P_t(s, a, b)\, \mathrm{d}s\, \mathrm{d}a\, \mathrm{d}b,$$

where

- $s$ controls execution speed, affecting the temporal length T of $x$;

- $a$ controls subject appearance and morphology, affecting pixel statistics;

- $b$ controls background/scene context, affecting visual content.

A domain shift occurs when any of these latent factors has a domain-dependent distribution. Let us assume that, for a new user in a new domain $\mathcal{D}_{t+1}$, the distribution is governed by execution speed $s$, subject appearance and morphology $a$, and background/scene context $b$ drawn from $P_{t+1}(s, a, b)$:

$$P_{t+1}(x \mid y) \neq P_t(x \mid y).$$

That is, even though the gesture labels remain identical across domains ($y \in \mathcal{Y}$) within the shared label space $\mathcal{Y}$, the underlying data distributions differ. Hence, domain shifts in our problem are primarily driven by the following:

$$P_{t+1}(s) \neq P_t(s), \qquad P_{t+1}(a) \neq P_t(a), \qquad P_{t+1}(b) \neq P_t(b),$$

each of which modifies a different generative component of $P_t(x \mid y)$.

This formulation highlights that although the gesture labels remain identical across domains, subject-specific and environmental factors introduce significant distributional shifts in the input space. Addressing these discrepancies requires effective domain adaptation techniques that enable the model to generalize properly while retaining previously acquired knowledge.

3.2. Domain Incremental Learning Objective

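Let $\mathcal{D}_0, \mathcal{D}_1, \dots, \mathcal{D}_K$ be a sequence of domains that share the same gesture label space $\mathcal{Y}$. At time step t, the model $f_{\theta_t}$ is trained on data from domain $\mathcal{D}_t$ and achieves an accuracy:

$$\mathrm{Acc}(f_{\theta_t}, \mathcal{D}_t) = \Pr_{(x, y) \sim \mathcal{D}_t}\left[ f_{\theta_t}(x) = y \right],$$

which measures how well $f_{\theta_t}$ recognizes gestures within the distribution of $\mathcal{D}_t$, where $\Pr$ denotes probability.

When the robot is deployed in a new environment at time $t+1$, the data distribution changes as discussed in Section 3.1. A domain incremental learning process adapts to the domain $\mathcal{D}_{t+1}$ by fine-tuning the previous model $f_{\theta_t}$ to produce an updated model $f_{\theta_{t+1}}$. The primary objective of this adaptation is twofold:

$$\max_{\theta_{t+1}} \; \mathrm{Acc}(f_{\theta_{t+1}}, \mathcal{D}_{t+1}) \qquad \text{and} \qquad \mathrm{Acc}(f_{\theta_{t+1}}, \mathcal{D}_j) \geq \mathrm{Acc}(f_{\theta_t}, \mathcal{D}_j) \quad \forall\, j \leq t.$$

Here, ‘≥’ denotes a no-degradation criterion: after adapting to the new domain $\mathcal{D}_{t+1}$, the updated model $f_{\theta_{t+1}}$ should achieve accuracy on each previously learned domain $\mathcal{D}_j$ that is at least the accuracy of the prior model $f_{\theta_t}$ on $\mathcal{D}_j$; i.e., past-domain performance is maintained or improved. If $\mathrm{Acc}(f_{\theta_{t+1}}, \mathcal{D}_j) < \mathrm{Acc}(f_{\theta_t}, \mathcal{D}_j)$, the model has suffered from catastrophic forgetting, a well-known problem in continual learning where adaptation to new data leads to a severe drop in accuracy on past domains. The forgetting in a DIL setting can therefore be defined as

$$F_j^{t+1} = \mathrm{Acc}(f_{\theta_t}, \mathcal{D}_j) - \mathrm{Acc}(f_{\theta_{t+1}}, \mathcal{D}_j), \qquad j \leq t.$$

If $F_j^{t+1} > 0$, forgetting has occurred on domain $\mathcal{D}_j$ during adaptation to domain $\mathcal{D}_{t+1}$. Thus, two fundamental and complementary goals are at the center of the creation of the updated model $f_{\theta_{t+1}}$:

Goal 1. Adaptation: Achieve high $\mathrm{Acc}(f_{\theta_{t+1}}, \mathcal{D}_{t+1})$ by effectively learning the new domain distribution. This ensures the model remains reliable and accurate when deployed in novel environments.

Goal 2. Retention: Maintain $\mathrm{Acc}(f_{\theta_{t+1}}, \mathcal{D}_j) \geq \mathrm{Acc}(f_{\theta_t}, \mathcal{D}_j)$, or equivalently $F_j^{t+1} \leq 0$, for all previously seen domains. This prevents catastrophic forgetting and guarantees that knowledge from earlier domains remains useful over time.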

The simultaneous achievement of these goals is essential to maintaining strong, lifelong learning of domains in HRI. Without retention, adaptation runs the risk of causing catastrophic forgetting, in which previous environment knowledge is erased, decreasing the robot’s adaptability and dependability. On the other hand, focusing on retention without enough adaptation could result in poor generalization in unfamiliar settings, which would compromise usability in the real world. The basic problem of lifelong learning is thus embodied by gesture recognition across successive domains: allowing for ongoing adaptation to changing environments while preserving previously learned competencies.
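The retention criterion and the forgetting measure described in this section can be illustrated with a short sketch. It assumes that accuracies are kept in a nested list `acc[s][j]` (accuracy of the model obtained at step `s` on domain `j`, defined for `j <= s`); the function names and the example numbers are hypothetical and are not results from this paper.

```python
from typing import List


def forgetting(acc: List[List[float]], t: int) -> List[float]:
    """Per-domain forgetting when moving from step t to step t + 1.

    acc[s][j] is the accuracy of the step-s model on domain j (j <= s).
    A positive entry means accuracy on that earlier domain dropped.
    """
    return [acc[t][j] - acc[t + 1][j] for j in range(t + 1)]


def retention_ok(acc: List[List[float]], t: int, tol: float = 0.0) -> bool:
    """True if no previously seen domain degraded by more than `tol`."""
    return all(f <= tol for f in forgetting(acc, t))


# Hypothetical accuracies for three sequential domains (illustrative only).
acc = [
    [0.90],
    [0.88, 0.85],
    [0.89, 0.80, 0.92],
]

# Compare the step-1 and step-2 models on domains 0 and 1.
drops = forgetting(acc, 1)
```

In this toy example the step-2 model slightly improves on domain 0 but loses accuracy on domain 1, so the strict no-degradation criterion is violated for the second adaptation step.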

4. Methodology

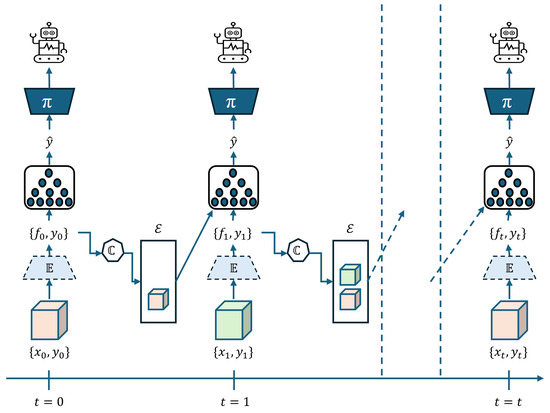

This section details our Replay-based Domain Incremental Learning (ReDIaL) pipeline for gesture recognition in HRI. As illustrated in Figure 1, the pipeline has four stages: (i) latent embedding generation with a frozen encoder that maps RGB and posture streams into a stable, privacy-preserving feature space; (ii) a cross-modality recognition model that aggregates modality-specific representations into a unified embedding; (iii) clustering-based exemplar selection that stores a compact, diverse subset of past domains within a fixed memory budget; and (iv) balanced replay training that interleaves current-domain samples with stored exemplars to control the stability–plasticity trade-off. Freezing stabilizes the feature manifold across subjects and reduces storage, while clustering improves mode coverage so that limited memory remains informative. The following subsections expand each component and present the training algorithm used for sequential adaptation.

Figure 1.

Overview of the proposed ReDIaL framework. At each step, inputs are encoded into a stable latent space by a frozen encoder, representative exemplars are selected via clustering, and balanced replay combines current and stored samples to adapt to new domains while preserving prior knowledge. The frozen action decoder shown in the figure is used for robot action generation and is outside the scope of the proposed ReDIaL method.

4.1. Latent Embedding Generation

As mentioned in Section 3, each observation $x$ contains two modalities, $x^{\mathrm{rgb}}$ and $x^{\mathrm{pos}}$, which are described as follows:

- RGB frames ($x^{\mathrm{rgb}}$): The RGB video input stream that contains visual information such as background, clothing, texture, color, appearance, gesture, and environmental context. This modality represents rich pixel-level information and preserves contextual information that is necessary for differentiating gestures in diverse settings. Let $x^{\mathrm{rgb}}$ denote the RGB video modality defined in the RGB video space $\mathcal{X}^{\mathrm{rgb}}$, such that $x^{\mathrm{rgb}} \in \mathcal{X}^{\mathrm{rgb}}$ and $\mathcal{X}^{\mathrm{rgb}} \subseteq \mathbb{R}^{T \times C \times H \times W}$, where T represents the number of frames, C denotes the number of channels, H indicates the height, and W specifies the width of the video $x^{\mathrm{rgb}}$.

- Posture data ($x^{\mathrm{pos}}$): This modality provides a structured representation of human motion derived from tracked body joints over time, which can be obtained either from the RGB stream using a keypoint detection algorithm [46,47] or from dedicated hardware sensors. Unlike raw RGB frames, it emphasizes geometric and kinematic information such as posture, dynamics, and gesture trajectories, which are less sensitive to environmental variations [48]. For implementation, each frame of $x^{\mathrm{pos}}$ is represented as a skeleton-based image generated from the detected body joints, yielding $x^{\mathrm{pos}} \in \mathbb{R}^{T \times C \times H \times W}$.

Since both modalities in an observation $x$ have the same shape, we can write

$$x \in \mathbb{R}^{2 \times T \times C \times H \times W}.$$

Here, the first dimension indexes the RGB and posture modalities. Directly storing $x$ for all observations is memory-expensive in a DIL setting, as each modality represents a full video sequence. To address this, we map $x$ into a compact latent representation using a shared encoder $E$. The latent embeddings are obtained as follows:

$$z^{\mathrm{rgb}} = E\left(x^{\mathrm{rgb}}\right), \qquad z^{\mathrm{pos}} = E\left(x^{\mathrm{pos}}\right),$$

where n is the embedding dimension per modality, and $z^{\mathrm{rgb}}$ and $z^{\mathrm{pos}}$ denote the embeddings for $x^{\mathrm{rgb}}$ and $x^{\mathrm{pos}}$, respectively. The final embedding can be expressed by concatenating the latent embeddings for each modality as follows:

$$z = \left[ z^{\mathrm{rgb}} ;\, z^{\mathrm{pos}} \right].$$

This is the compact latent representation of observation $x$ that is used for storage and domain incremental learning. This approach significantly reduces memory requirements while retaining modality-specific information in a joint feature space. Thus, $(z, y)$ represents the latent embedding–label pair corresponding to an observation–label pair $(x, y)$.
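To give a feel for the storage saving, the following sketch compares the number of stored values for a raw two-modality observation and for its latent counterpart. The clip size and embedding dimension are hypothetical, and the sketch makes the simplifying assumption that each modality is reduced to a single vector of length n; the actual encoder output layout may differ.

```python
from math import prod


def raw_elements(T: int, C: int, H: int, W: int, modalities: int = 2) -> int:
    """Values needed to store one raw observation (all modalities)."""
    return modalities * prod((T, C, H, W))


def latent_elements(n: int, modalities: int = 2) -> int:
    """Values needed to store one latent observation.

    Assumption (not from the paper): one n-dimensional vector per modality.
    """
    return modalities * n


# Hypothetical clip: 16 frames, 3 channels, 224 x 224 pixels, n = 512.
raw = raw_elements(16, 3, 224, 224)
latent = latent_elements(512)
ratio = raw / latent  # how many times smaller the latent record is
```

Under these assumed sizes the latent record is several thousand times smaller than the raw clip, which is what makes a per-domain exemplar memory affordable.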

4.2. Model Overview

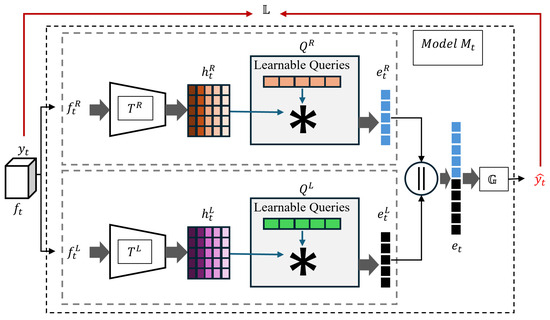

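A cross-modality recognition model $f_\theta$ is used as the core classifier within our gesture-based HRI framework. An overview of $f_\theta$ is given in Figure 2. Given the latent embeddings $z^{\mathrm{rgb}}$ and $z^{\mathrm{pos}}$ from the RGB and posture modalities, respectively, two parallel transformer encoders $\Phi^{\mathrm{rgb}}$ and $\Phi^{\mathrm{pos}}$ are applied to obtain modality-specific contextual representations:

$$h^{\mathrm{rgb}} = \Phi^{\mathrm{rgb}}\left(z^{\mathrm{rgb}}\right), \qquad h^{\mathrm{pos}} = \Phi^{\mathrm{pos}}\left(z^{\mathrm{pos}}\right).$$

These outputs are passed through a Query-based Global Attention Pooling (QGAP) mechanism, parameterized by learnable queries $q^{\mathrm{rgb}}$ and $q^{\mathrm{pos}}$, to produce compact embeddings:

$$g^{\mathrm{rgb}} = \mathrm{QGAP}\left(h^{\mathrm{rgb}};\, q^{\mathrm{rgb}}\right), \qquad g^{\mathrm{pos}} = \mathrm{QGAP}\left(h^{\mathrm{pos}};\, q^{\mathrm{pos}}\right).$$

Finally, a unified representation corresponding to the gesture observation $x$ is obtained by concatenating the two modality embeddings:

$$g = \left[ g^{\mathrm{rgb}} ;\, g^{\mathrm{pos}} \right],$$

which is then fed to the classification head to predict the gesture label $\hat{y}$. This design enables $f_\theta$ to jointly reason over complementary information from both modalities while keeping a compact representation suitable for domain incremental learning.

Figure 2.

Cross-modality model $f_\theta$: RGB and posture embeddings are encoded by modality-specific transformers and pooled via learnable queries, then concatenated and classified to predict the gesture label $\hat{y}$. Here, ‘*’ represents query-based self-attention.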
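The pooling-and-fusion step can be sketched in a few lines. This is a minimal, single-head illustration that assumes scaled dot-product attention between one query vector and the token sequence, without the learned key/value projections a full implementation would likely include; `query_attention_pool` and `fuse` are hypothetical names, and the queries here are fixed numbers rather than trained parameters.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def query_attention_pool(tokens: List[Vector], query: Vector) -> Vector:
    """Pool a token sequence into one vector using a single query.

    Each token is scored by its scaled dot product with the query; the
    output is the attention-weighted average of the tokens.
    """
    d = len(query)
    scores = [sum(q * x for q, x in zip(query, tok)) / math.sqrt(d) for tok in tokens]
    weights = softmax(scores)
    return [sum(w * tok[i] for w, tok in zip(weights, tokens)) for i in range(d)]


def fuse(rgb_tokens: List[Vector], pos_tokens: List[Vector],
         q_rgb: Vector, q_pos: Vector) -> Vector:
    """Concatenate the pooled RGB and posture embeddings."""
    return query_attention_pool(rgb_tokens, q_rgb) + query_attention_pool(pos_tokens, q_pos)


# A zero query scores every token equally, so pooling reduces to the mean.
pooled = query_attention_pool([[1.0, 0.0], [3.0, 4.0]], [0.0, 0.0])
fused = fuse([[1.0, 0.0], [3.0, 4.0]], [[2.0, 2.0]], [0.0, 0.0], [1.0, 1.0])
```

A trained query would shift the weights toward the tokens most informative for classification, while the output size stays fixed regardless of sequence length.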

4.3. Domain Incremental Learning Strategy

A well-trained model $f_{\theta_t}$ that has converged on domain $\mathcal{D}_t$ must learn from the incoming data of the new domain $\mathcal{D}_{t+1}$ while preserving all previously acquired knowledge. To maintain this balance, we incorporate three key components: (i) a target-domain set $\mathcal{T}_{t+1}$, which stores samples from $\mathcal{D}_{t+1}$; (ii) an exemplar memory $\mathcal{M}$, which retains representative instances from $\mathcal{D}_0, \dots, \mathcal{D}_t$; and (iii) a replay mechanism that jointly presents data from $\mathcal{T}_{t+1}$ and $\mathcal{M}$ during each optimization step. These components are discussed in the following subsections.

4.3.1. Target Domain Set Collection

In the target domain $\mathcal{D}_{t+1}$, the data distribution may change due to lighting, viewpoint, background, or user motion style and speed. To support adaptation, we build a target domain set

$$\mathcal{T}_{t+1} = \left\{ (z_i, y_i) \;:\; z_i = \left[ E\left(x_i^{\mathrm{rgb}}\right) ;\, E\left(x_i^{\mathrm{pos}}\right) \right],\; (x_i, y_i) \in D_{t+1} \right\},$$

where $E$ is the same frozen encoder used for previous domains. Using a fixed encoder ensures that the embeddings from $\mathcal{D}_{t+1}$ lie in the same latent space as the earlier domains.

This consistency serves two purposes: (i) Adaptation: $\mathcal{T}_{t+1}$ supplies in-domain examples for fine-tuning; (ii) Shift assessment: comparing the feature statistics or empirical distributions of $\mathcal{T}_{t+1}$ with those of all previously seen domains quantifies the domain shift and guides the adaptation procedure.

4.3.2. Clustering-Based Exemplar Memory Selection

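Because retaining all past data is impractical, we maintain a fixed-size exemplar memory $\mathcal{M}$ governed by a budget $\beta$. For domain $\mathcal{D}_t$ with dataset $D_t$, the number of exemplars to keep is

$$k_t = \left\lfloor \beta \, |D_t| \right\rfloor,$$

where $\lfloor \cdot \rfloor$ denotes the floor operation. We apply a clustering method $\mathcal{C}$ to the set of latent embeddings $\{z_i\}_{i=1}^{|D_t|}$ of $D_t$, producing clusters $\{C_j\}_{j=1}^{k_t}$ and centroids $\{\mu_j\}_{j=1}^{k_t}$. For each cluster, we select the medoid (the embedded sample closest to its centroid):

$$m_j = \arg\min_{z \in C_j} \left\lVert z - \mu_j \right\rVert.$$

The exemplar buffer is then

$$\mathcal{M}_t = \left\{ \left(m_j, y_{m_j}\right) \right\}_{j=1}^{k_t},$$

where $y_{m_j}$ is the label of $m_j$.

This clustering-based selection yields broad mode coverage of the domain’s embedding distribution, producing a more informative and balanced memory than random or majority-class sampling, and thereby mitigating catastrophic forgetting during subsequent adaptation.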
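The selection procedure can be sketched with a small, dependency-free implementation. The sketch assumes k-means (Lloyd's algorithm) as the clustering method, squared Euclidean distance, and a budget expressed as a fraction of the domain's samples; none of these choices is confirmed by the text above, and `kmeans` and `select_exemplars` are hypothetical helper names.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iters: int = 50,
           seed: int = 0) -> Tuple[List[int], List[List[float]]]:
    """Plain Lloyd's algorithm; returns (assignments, centroids)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    assign = None
    for _ in range(iters):
        new_assign = [min(range(k), key=lambda j: sq_dist(p, centroids[j]))
                      for p in points]
        if new_assign == assign:
            break  # assignments stopped changing
        assign = new_assign
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the old centroid if a cluster is empty
                dim = len(members[0])
                centroids[j] = [sum(m[i] for m in members) / len(members)
                                for i in range(dim)]
    return assign, centroids


def select_exemplars(points: List[Vector], budget: float, seed: int = 0) -> List[int]:
    """Indices of cluster medoids, with k = floor(budget * number of points)."""
    k = max(1, math.floor(budget * len(points)))  # at least one exemplar
    assign, centroids = kmeans(points, k, seed=seed)
    chosen = []
    for j in range(k):
        idx = [i for i, a in enumerate(assign) if a == j]
        if idx:
            chosen.append(min(idx, key=lambda i: sq_dist(points[i], centroids[j])))
    return sorted(chosen)


# Two well-separated toy groups of 2-D "embeddings" (illustrative only).
toy = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
exemplar_idx = select_exemplars(toy, budget=0.34)
```

With the toy data, one medoid is kept per group, which mirrors the intent of covering each mode of the embedding distribution with a small number of stored samples.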

4.3.3. Balanced Multi-Domain Replay Training

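Training proceeds with mini-batches that contain an equal number of samples from the new domain and the exemplar memory. Let $b$ denote the per-domain batch size, $B = 2b$ the total batch size, $\mathcal{B}_{\mathrm{new}}$ the batch selected from $\mathcal{T}_{t+1}$, and $\mathcal{B}_{\mathrm{mem}}$ the batch selected from $\mathcal{M}$. Here, Uniform denotes a function that randomly selects $b$ samples from a given set ($\mathcal{T}_{t+1}$ or $\mathcal{M}$). At each step, we draw

$$\mathcal{B}_{\mathrm{new}} = \mathrm{Uniform}\left(\mathcal{T}_{t+1}, b\right), \qquad \mathcal{B}_{\mathrm{mem}} = \mathrm{Uniform}\left(\mathcal{M}, b\right),$$

and form a balanced mini-batch by concatenation

$$\mathcal{B} = \mathcal{B}_{\mathrm{new}} \cup \mathcal{B}_{\mathrm{mem}}, \qquad |\mathcal{B}| = B.$$

Model parameters $\theta$ (of $f_\theta$) are updated by minimizing the cross-entropy over $\mathcal{B}$, with learning rate $\eta$:

$$\theta \leftarrow \theta - \eta \, \nabla_\theta \frac{1}{|\mathcal{B}|} \sum_{(z, y) \in \mathcal{B}} \mathcal{L}_{\mathrm{CE}}\big(f_\theta(z), y\big).$$

Training continues for a fixed budget of steps or until a validation criterion is met. This replay schedule preserves exposure to past domains while adapting to the target domain, balancing stability and plasticity. We summarize this method as Algorithm 1.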

Algorithm 1.

Balanced Replay for Domain Incremental Adaptation
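The batching part of the replay procedure can be sketched as follows. The sketch only covers how balanced mini-batches are drawn; the gradient update is left to whatever training framework is in use. It assumes sampling without replacement within a batch, and the names `balanced_batch` and `replay_batches` are hypothetical.

```python
import random
from typing import Iterator, List, Sequence, Tuple

# A stored sample: (latent embedding, gesture label).
Sample = Tuple[Sequence[float], int]


def balanced_batch(target_set: List[Sample], memory: List[Sample],
                   per_domain: int, rng: random.Random) -> List[Sample]:
    """Draw `per_domain` samples from each source and concatenate them."""
    new = rng.sample(target_set, min(per_domain, len(target_set)))
    old = rng.sample(memory, min(per_domain, len(memory)))
    return new + old


def replay_batches(target_set: List[Sample], memory: List[Sample],
                   per_domain: int, steps: int, seed: int = 0) -> Iterator[List[Sample]]:
    """Yield one balanced mini-batch per optimization step."""
    rng = random.Random(seed)
    for _ in range(steps):
        yield balanced_batch(target_set, memory, per_domain, rng)


# Toy data: label 0 marks new-domain samples, label 1 marks stored exemplars.
target = [([float(i)], 0) for i in range(10)]
memory = [([float(100 + i)], 1) for i in range(5)]
batches = list(replay_batches(target, memory, per_domain=4, steps=3))
```

Each yielded batch would then be passed to a cross-entropy training step; keeping the two halves the same size is what gives past domains equal weight in every update, regardless of how small the memory is.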

5. Experimental Setup

5.1. Datasets

We evaluate on two datasets comprising the same six air-marshalling hand-signal classes. Dataset 1 is an in-house collection from 8 participants. We treat it as the source domain $\mathcal{D}_0$ and use COSMOS [49] as the frozen encoder $E$ to obtain latent embeddings $z$. For training only, we apply four augmentations to the videos: identity (None), perspective, rotation, and padding. Validation and test data remain unaugmented. The split for $\mathcal{D}_0$ is 2076 training samples, 121 validation samples, and 210 test samples.

Dataset 2 is the NATOPS dataset [50], from which we use the same six classes for 20 participants. We model each participant as a separate domain $\mathcal{D}_t$ for $t = 1, \dots, 20$. Each subject provides 20 samples per class (120 per subject). Here, each class corresponds to a gesture label, and in the remainder of this section and subsequent analyses, the terms class and label are used interchangeably. Per class, we allocate 14 for training, 2 for validation, and 4 for testing; with the four augmentations (including identity) applied to the training set, this yields $14 \times 4 = 56$ training items per class and $56 \times 6 = 336$ training items per domain. Validation and test remain unaugmented with $2 \times 6 = 12$ and $4 \times 6 = 24$ samples per domain, respectively.
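The per-domain split sizes for the NATOPS subjects follow directly from the counts stated above, as the short check below shows. All constants are taken from the text; only the function and key names are illustrative.

```python
CLASSES = 6                # air-marshalling gesture classes used
SAMPLES_PER_CLASS = 20     # per NATOPS subject
TRAIN, VAL, TEST = 14, 2, 4
AUGMENTATIONS = 4          # identity, perspective, rotation, padding


def natops_split_sizes() -> dict:
    """Per-domain sample counts implied by the stated split."""
    assert TRAIN + VAL + TEST == SAMPLES_PER_CLASS
    train_per_class = TRAIN * AUGMENTATIONS  # augmentation applies to training only
    return {
        "train_per_class": train_per_class,
        "train_per_domain": train_per_class * CLASSES,
        "val_per_domain": VAL * CLASSES,
        "test_per_domain": TEST * CLASSES,
    }


sizes = natops_split_sizes()
```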

For the exemplar memory , we employ a per-class clustering budget . From this results in exemplars. For each NATOPS domain, applying the budget per class selects embeddings per class, i.e., exemplars per domain. Thus, after processing all 20 NATOPS domains, the memory would contain exemplars in total.

5.2. Comparison Techniques

To contextualize the performance of our DIL method (ReDIaL), we compare against several standard baselines that probe domain shift, adaptation, and forgetting. Let denote Dataset 1 (source) and denote the 20 participant-specific domains from Dataset 2 (target). We write for the test set of domain , and use the pooled target test set . The following baseline models are considered for comparison:

- Source-only : trained on and evaluated on and . Purpose: quantifies cross-dataset/domain shift when no target data are used.

- Target-only (pooled) : trained on all target domains pooled in , , and evaluated on and . Purpose: serves as an oracle upper bound for target performance and gauges reverse shift toward the source domain.

- Joint-training Model : trained on the combined dataset of and , and tested on and . Purpose: serves as a baseline model if source and target domain data is available at once. This model provides an upper bound measure assuming that all domain data is available from the start, which is unrealistic in practice because new domain data can be continuously introduced to the robot when it is deployed in various environments over time.

- Fine-tune (pooled) : initialized from (trained on ), then fine-tuned on the pooled target data without any access to during fine-tuning. Purpose: measures adaptation to the target with potential catastrophic forgetting of the source.

- Incremental fine-tune (no rehearsal) : starting from , sequentially fine-tuned on with no samples from previously seen domains. Purpose: provides a strong lower bound for retention, highlighting forgetting in a purely sequential setting.

- Experience Replay [20] : the ER method [20], used as a state-of-the-art replay baseline against which ReDIaL is compared. Purpose: evaluates ReDIaL against ER to assess the effectiveness of ReDIaL in an HRI system.

These baselines are included to (i) quantify domain shift (source-only vs. target-only), (ii) establish upper/lower bounds on target accuracy and retention, and (iii) isolate the benefits of ReDIaL’s rehearsal-based, latent-exemplar DIL training relative to naïve fine-tuning strategies.
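The training protocols of the baselines listed above differ mainly in which domains are visible at each training stage. The sketch below encodes this as a list of stages per method. The string keys, the function name `training_stages`, and the convention that index 0 is the source and indices 1 to 20 are the NATOPS subjects are all assumptions made for illustration.

```python
def training_stages(method, num_targets=20):
    """Return the ordered training stages for a method.

    Each stage is a list of domain indices whose training data are used
    together; index 0 is the source, 1..num_targets are the target subjects.
    """
    source = [0]
    targets = list(range(1, num_targets + 1))
    if method == "source_only":
        return [source]
    if method == "target_only_pooled":
        return [targets]
    if method == "joint":
        return [source + targets]           # everything available at once
    if method == "finetune_pooled":
        return [source, targets]            # source first, then all targets pooled
    if method in ("incremental_finetune", "experience_replay", "redial"):
        return [source] + [[t] for t in targets]  # one new domain per step
    raise ValueError(f"unknown method: {method}")


# Sequential methods that additionally rehearse stored samples of earlier domains.
USES_REPLAY = {"experience_replay", "redial"}


if __name__ == "__main__":
    for name in ("source_only", "finetune_pooled", "incremental_finetune"):
        print(name, len(training_stages(name)), "stage(s)")
```

Under this view, incremental fine-tuning, ER, and ReDIaL share the same 21-stage stream and differ only in whether, and how, earlier domains are replayed.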

5.3. Implementation Details

For the posture data, we used MediaPipe [46] for keypoint detection. For each modality-specific encoder and in , adaptive average pooling was employed to obtain a 512-dimensional spatial embedding. Each encoder was implemented as a two-layer Transformer with four attention heads per layer and a dropout rate of 0.20.

For all time steps , we use the same training configuration. The identical hyperparameters are applied to ReDIaL and to all baselines (, , ). A reduce-on-plateau scheduler lowers the learning rate when the validation loss does not improve, and early stopping selects the best checkpoint. The complete set of hyperparameters employed across all models and domains is presented in Table 1.
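The interaction between the reduce-on-plateau scheduler and early stopping can be sketched in plain Python. The initial learning rate, reduction factor, and patience values used below are placeholders, not the Table 1 settings, and the class and function names are hypothetical.

```python
class ReduceOnPlateau:
    """Multiply the learning rate by `factor` after more than `patience`
    consecutive epochs without a new best validation loss."""

    def __init__(self, lr, factor, patience, min_lr=0.0):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0
        return self.lr


def run_schedule(val_losses, lr=1e-3, factor=0.5, lr_patience=2, stop_patience=5):
    """Replay a sequence of validation losses.

    Returns (best_epoch, final_lr); best_epoch is the checkpoint that early
    stopping would select. All default values are illustrative placeholders.
    """
    scheduler = ReduceOnPlateau(lr, factor, lr_patience)
    best_loss, best_epoch, since_best = float("inf"), -1, 0
    current_lr = lr
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, since_best = loss, epoch, 0
        else:
            since_best += 1
        current_lr = scheduler.step(loss)
        if since_best >= stop_patience:
            break  # no improvement for `stop_patience` epochs
    return best_epoch, current_lr
```

Because both mechanisms monitor the same validation loss, the learning rate is typically reduced one or more times before early stopping triggers, provided the scheduler patience is shorter than the stopping patience.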

Table 1.

Training hyperparameters used for all models and domains. The learning-rate (LR) scheduler reduces the LR by the stated factor if the validation loss does not improve for the specified patience; early stopping monitors the same criterion.

5.4. Evaluation Metrics

To evaluate the performance of the recognition model, we adopt accuracy as the primary evaluation metric, following prior works [4,5]. For simplicity, will be denoted as where . To further characterize the stability–plasticity behavior of the model, we compute two aggregate metrics:

Here, represents the average accuracy, while denotes the harmonic mean accuracy, providing a balanced measure of performance across domains. In addition, we quantify catastrophic forgetting, as discussed in Section 3, using Equation (17).
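A minimal sketch of the three metrics follows, assuming their usual definitions: the arithmetic mean of the accuracies, the harmonic mean of the same values, and per-step forgetting as the accuracy on the previous domain before learning the next domain minus the accuracy on it afterwards. The function names and input layout are illustrative and may not match the paper's exact notation.

```python
def average_accuracy(accs):
    """Arithmetic mean of a non-empty list of accuracies in [0, 1]."""
    return sum(accs) / len(accs)


def harmonic_mean_accuracy(accs):
    """Harmonic mean of accuracies; it is pulled down by any weak domain.

    Returns 0.0 if any accuracy is zero, the limit of the harmonic mean.
    """
    if any(a <= 0 for a in accs):
        return 0.0
    return len(accs) / sum(1.0 / a for a in accs)


def forgetting_per_step(acc_before, acc_after):
    """Forgetting at each transition.

    acc_before[i]: accuracy on domain i right after training on domain i.
    acc_after[i]:  accuracy on domain i right after training on domain i + 1.
    Positive values indicate forgetting; negative values, backward transfer.
    """
    return [before - after for before, after in zip(acc_before, acc_after)]


if __name__ == "__main__":
    accs = [0.5, 1.0]
    print(average_accuracy(accs), harmonic_mean_accuracy(accs))
    print(forgetting_per_step([0.9, 0.8], [0.85, 0.9]))
```

The harmonic mean equals the arithmetic mean only when all accuracies are identical, so a small gap between the two signals uniform performance across domains.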

6. Results and Analysis

6.1. Result Analysis: Evidence of Domain Shift and the Advantage of ReDIaL

Table 2 summarizes performance on the source test set , the pooled target test set , and their union . We focus here on two aspects: (i) demonstrating the severity and asymmetry of the domain shift and (ii) showing how ReDIaL achieves strong adaptation to new domains while maintaining high performance on the source, approaching the non-incremental upper bound.

Table 2.

Accuracy (%) of the models evaluated on different test domains.

The source-only model attains on the source yet only on the pooled target, a drop of in accuracy. Conversely, the target-only (pooled) model achieves on the pooled target but just on the source, a drop of in performance. These large, cross-directional gaps ( and ) quantify a severe and asymmetric domain shift between the datasets: models trained in one domain generalize poorly to the other, even though the label space, , is identical. The union-set accuracies reinforce this domain shift: yields only overall, while reaches but remains weak on the source domain—again highlighting the underlying distribution difference.

The baseline joint-training model , which assumes simultaneous access to source and all target data, achieves on the source domain, on the target domain, and on the overall domain test set. This setting is unrealistic for real deployments, since in practice, domains are not accessible prior to deployment. However, it is useful as an upper bound on performance achievable without incremental constraints. Fine-tuning without rehearsal on pooled target data () yields perfect target performance () but reduces source accuracy to , indicating substantial drift away from the source distribution. Sequential fine-tuning with no rehearsal () improves target accuracy to but further drops the performance on the source to , leading to only on the union. These results show that naive adaptation strategies can succeed on the new domain while underperforming on the original one, which is a clear indication of a strong domain shift. Standard Experience Replay (ER) substantially narrows the cross-domain gap: (source), (target), and overall.

ReDIaL improves upon ER across the time steps t, achieving on the source (+ over ER), on the target (+), and 97.34% overall (+3.14 percentage points). Notably, ReDIaL approaches the non-incremental joint-training model in every scenario (source: vs. , target: vs. 100, union: vs. ), but under incremental constraints. This suggests that ReDIaL’s design choices, namely a frozen encoder for a stable latent space, clustering-based exemplar selection for broad mode coverage, and balanced rehearsal, effectively counter the domain shift while retaining prior knowledge.

The large cross-domain performance gaps of and provide direct, quantitative evidence of a significant and asymmetric domain shift. While naive fine-tuning strategies can overfit to the target distribution, they struggle to preserve performance on the source. By contrast, ReDIaL delivers high accuracy on both domains and on their union, nearly matching joint-training performance without violating the incremental learning protocol.

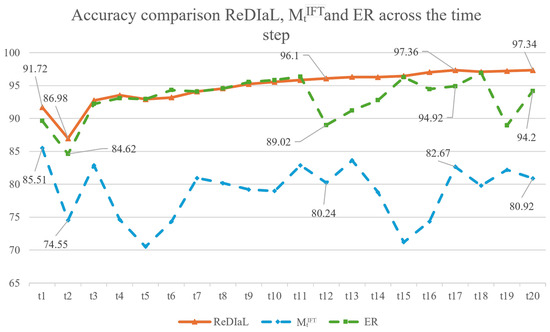

6.2. Overall Incremental Performance

Figure 3 shows accuracy over time for ReDIaL, , and ER, the three models that are trained sequentially on subject-as-domain streams; at each step t, we report accuracy on the cumulative test set of all domains observed up to t.

Figure 3.

Accuracy comparison of ReDIaL, ER, and .
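The cumulative evaluation used for this curve can be sketched as follows. The sketch assumes that the cumulative test set is the plain union of the per-domain test sets, so that accuracy is weighted by sample count; the function names and the example counts are hypothetical.

```python
def cumulative_accuracy(per_domain_results):
    """Accuracy on the union of the test sets of all domains seen so far.

    per_domain_results: list of (num_correct, num_test_samples), one entry
    per observed domain, measured with the model available at this step.
    """
    correct = sum(c for c, _ in per_domain_results)
    total = sum(n for _, n in per_domain_results)
    return correct / total if total else 0.0


def accuracy_curve(results_by_step):
    """One cumulative accuracy per time step; step t covers domains 0..t."""
    return [cumulative_accuracy(step) for step in results_by_step]


if __name__ == "__main__":
    # Hypothetical counts: 24 test samples per domain, two steps.
    steps = [
        [(24, 24)],             # step 0: only the first domain
        [(22, 24), (24, 24)],   # step 1: first domain re-tested plus the new one
    ]
    print(accuracy_curve(steps))
```

Because earlier domains are re-tested at every step, a drop in the curve can come either from forgetting old domains or from a difficult new one; the forgetting metric separates the two.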

The incremental fine-tuning model without rehearsal exhibits the lowest and most variable performance across the sequence. Accuracy drops early from about at to roughly at , recovers partially around mid-sequence (e.g., near ), and achieves near at . This pattern is consistent with strong sensitivity to subject-specific shifts and limited retention of earlier domains when no replay mechanism is available.

ER attains higher accuracy than throughout and begins close to ReDIaL (approximately at versus for ReDIaL). The accuracy generally improves over time but shows notable drops around some intermediate domains (e.g., near ) and achieves at about by . These variations suggest that a uniform replay buffer helps maintain performance but can be sensitive to domain idiosyncrasies when the stored exemplars are not sufficiently representative of earlier distributions.

ReDIaL starts strong at (), experiences a significant decrease at (), and thereafter increases, reaching around , by , and at . Relative to ER, ReDIaL maintains a higher accuracy level with smaller fluctuations across the sequence. This behavior aligns with the method’s design: a frozen encoder that stabilizes the latent space across subjects, clustering-based exemplar selection that improves coverage of prior domains, and balanced replay that jointly exposes the model to past and current data.

Across sequential domains, exhibits substantial variability and the lowest final accuracy, ER mitigates this effect but remains sensitive to domain shifts, and ReDIaL achieves the highest accuracy throughout most of the sequence and at the final step. The temporal trends provide qualitative evidence that rehearsal is necessary for cross-domain stability and that the proposed latent-exemplar strategy is particularly effective in this domain-incremental setting.

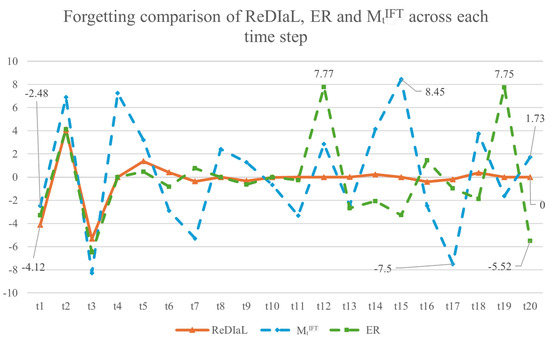

6.3. Catastrophic Forgetting Across Domains

Figure 4 shows forgetting at each time step, defined as the change in accuracy on the previous domain immediately after learning the next domain, as given in Equation (17). We compare the proposed replay strategy (ReDIaL) against incremental fine-tuning without rehearsal () and a standard experience replay baseline (ER), and we highlight time steps that are particularly susceptible to forgetting.

Figure 4.

Forgetting comparison across time steps for ReDIaL, ER, and . Positive values denote forgetting; negative values denote backward transfer.

exhibits the most inconsistent trend of forgetting across the sequence. In early steps, forgetting rises sharply (e.g., around ), then flips to a strong negative value at , and again shows a positive rise near . In the subsequent time steps t, another large positive spike appears around (annotated at approximately in the figure), followed by a deep negative drop close to (around ). This alternating pattern indicates that, in the absence of rehearsal, adaptation to a new subject can both overwrite previous knowledge and occasionally induce accidental improvements when two consecutive domains happen to be similar. Overall, the variability underscores the vulnerability of naive fine-tuning to subject-driven domain shifts.

ER substantially reduces forgetting relative to , but the curve continues to fluctuate across incremental time steps t. In particular, large positive spikes are visible around and (both near ), while a marked negative value appears at (approximately ). These events suggest that although replay stabilizes learning, the exemplar set may not always store sufficient diversity from earlier domains, leaving the model sensitive to subjects that differ strongly in appearance, background, or execution speed.

ReDIaL keeps forgetting close to zero throughout most of the sequence. The curve shows small early negative values (e.g., about at ) that reflect mild backward transfer, followed by fluctuations of very low magnitude and convergence to zero by . The near-flat profile indicates that the combination of a frozen encoder, clustering-based exemplar selection, and balanced replay effectively constrains parameter drift when adapting to a new subject.

Across methods, several time steps stand out as particularly prone to forgetting: early transitions (e.g., –) and mid-to-late transitions (e.g., , , ). These coincide with subject changes that likely introduce stronger shifts in appearance, background, or execution speed. Among the three models, is the most affected, ER mitigates but does not eliminate these spikes, and ReDIaL exhibits the smallest amplitude with a stable end-of-sequence value. Overall, the results indicate that rehearsal is essential in this domain-incremental setting and that ReDIaL’s latent-exemplar replay provides the most reliable retention of previously learned domains.

6.4. Comparison with the Other Methods

Table 3 reports the time-averaged cumulative accuracy , the harmonic mean across domains, and the average forgetting . ReDIaL achieves the highest () and (), slightly outperforming ER ( and ) and showing a substantial margin over both fine-tuning baselines. The very small gap between and for ReDIaL and ER (both ) indicates consistently strong performance across domains, whereas exhibits a noticeably lower harmonic mean, suggesting degraded generalization to some domains.

Table 3.

Comparison of different methods based on , , and . A checkmark (✓) indicates the component is included in the model, while a cross (✕) indicates it is not.

ReDIaL and ER also show near-zero or slightly negative average forgetting, reflecting not only effective retention but also occasional backward transfer. In contrast, suffers from severe forgetting (), and while averages close to zero, it displays high per-step volatility, as seen in the forgetting curves. Together, these results demonstrate that ReDIaL provides the most favorable balance of accuracy and retention, outperforming both naive fine-tuning and standard experience replay in this domain-incremental setting.

Table 3 also isolates the contribution of each key module in the proposed ReDIaL framework by comparing models with progressively added components: latent embedding, clustering, and balanced replay. The results show that models without replay (e.g., and ) suffer from severe forgetting (), confirming the necessity of a replay mechanism. Incorporating replay in the ER baseline markedly reduces forgetting and improves average performance, isolating the benefit of memory-based rehearsal. Finally, combining clustering for representative exemplar selection with balanced replay for stable adaptation in ReDIaL gives the highest and , indicating that these modules work together to improve cross-domain generalization and knowledge retention. Overall, this comparison shows how each component contributes to the full framework and thereby serves as an ablation study.

7. Discussion

Our findings provide clear evidence of asymmetric domain shift in HRI gesture recognition. As shown in Table 2, a source-only model transfers poorly to the pooled target set, while a target-only model fails on the source—despite an identical label space. This pattern is consistent with the subject- and environment-driven factors identified in Section 3.1 (appearance, background, execution speed) and motivates a domain incremental solution that adapts to new users without erasing prior knowledge.

ReDIaL addresses this need through three complementary components: a frozen encoder that stabilizes the latent space across subjects, clustering-based exemplar selection that improves coverage of earlier domains, and balanced replay that mixes past and current data at every update (Algorithm 1). Combined with our cross-modality design (Section 4.1), these choices yield high and steady accuracy over time (Figure 3), approaching the joint-training upper bound while respecting incremental constraints, and outperforming ER on source, target, and their union (Table 2). The forgetting analysis (Figure 4) reflects this trend: naive incremental fine-tuning () exhibits large variations, ER reduces but does not eliminate them, and ReDIaL stays close to zero throughout, an observation reinforced by the time-averaged and harmonic metrics with near-zero average forgetting (Table 3).
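The idea of mixing past and current data at every update can be illustrated with a simple batch builder. This sketch assumes that "balanced" means an equal share of replayed and current samples in each batch, which may differ from the weighting in Algorithm 1; the function name and arguments are hypothetical.

```python
import random


def balanced_batches(current, memory, batch_size, seed=0):
    """Yield training batches that mix current-domain samples with exemplars.

    Every current sample is used exactly once per pass. When the memory is
    non-empty, half of each full batch is drawn from it (with replacement).
    """
    rng = random.Random(seed)
    n_replay = batch_size // 2 if memory else 0
    n_current = batch_size - n_replay
    order = list(current)
    rng.shuffle(order)
    for start in range(0, len(order), n_current):
        batch = order[start:start + n_current]
        if n_replay:
            batch = batch + rng.choices(memory, k=n_replay)
        rng.shuffle(batch)  # avoid a fixed new/old ordering inside the batch
        yield batch


if __name__ == "__main__":
    new_data = [("new", i) for i in range(8)]
    exemplars = [("old", i) for i in range(3)]
    for batch in balanced_batches(new_data, exemplars, batch_size=4):
        print(batch)
```

Sampling the memory with replacement keeps the replay share constant even when the buffer is much smaller than the current domain's training set.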

Operating in the latent space is also practical: it preserves task-relevant features while sharply reducing storage compared with raw videos, enabling a replay buffer that scales to long domain sequences, and it improves privacy by avoiding the retention of identifiable frames (Section 4.1). Limitations include reliance on a fixed pre-trained encoder (COSMOS) and on keypoint quality for the posture stream; both can constrain downstream adaptation. While latent exemplar replay offers storage efficiency and privacy benefits by avoiding raw visual data, potential vulnerabilities such as latent inversion or membership inference attacks remain open concerns, warranting future exploration of privacy-preserving latent encoding or differentially private replay mechanisms.

Across all runs, performance remained highly stable, with near-saturated accuracy and minimal variance across domains, suggesting that formal statistical testing (e.g., confidence intervals or paired t-tests) would provide limited additional insight. The evaluation already includes standard continual learning (CL) metrics (average accuracy, harmonic mean, and forgetting), which together capture the intuition of backward transfer (BWT) and ensure metric completeness. We acknowledge that the sequential order of domains may influence adaptation dynamics and plan to examine randomized domain orderings in future work. Finally, the observed performance plateau in later stages likely reflects a small-sample ceiling effect, where high per-domain accuracy limits measurable incremental gains.

While ReDIaL mitigates forgetting in a subject-as-domain setting, several focused steps remain for future work. (i) We plan to extend evaluation to larger gesture vocabularies and datasets, as well as to heterogeneous sensors (e.g., depth, IMU) and more diverse environments. This will help stress-test cross-domain generalization. (ii) For memory selection, we aim to move beyond clustering by comparing uncertainty-based and gradient-based strategies for building the replay buffer. In parallel, we will evaluate latent-space privacy and explore privacy-preserving replay techniques. (iii) On-robot studies are also critical. These will measure latency, energy use, memory footprint, and task success in noisy, real-time deployments, including trials with non-expert operators. (iv) Finally, we plan to extend to longer-horizon continual regimes that mix domain and class increments, include recurring users and different domain orderings, and require robust calibration and out-of-distribution handling.

8. Conclusions

We introduced ReDIaL, a memory-efficient domain incremental learning framework for gesture-based HRI. By combining a frozen encoder for latent stability, clustering-based exemplar selection for representative memory, and balanced rehearsal for sequential adaptation, ReDIaL effectively mitigates catastrophic forgetting while enabling robust cross-user adaptation. Across 21 domains (one source and twenty subject-specific targets), ReDIaL achieves state-of-the-art incremental performance: it attains 97.34% accuracy on the combined old and new domains, improving over pooled fine-tuning by 5.47 percentage points, over incremental fine-tuning by 16.42 points, and over ER by 3.14 points (Table 2). Incremental performance analyses show consistently high accuracy over time (Figure 3) and near-zero forgetting across domain transitions (Figure 4); aggregate metrics confirm strong cross-domain robustness (Table 3).

These results demonstrate that latent exemplar replay not only improves accuracy and retention but also supports privacy and scalability, making it well suited for robotic deployments operating under tight compute and memory constraints. ReDIaL’s combination of accuracy, retention, privacy, and scalability aligns well with the requirements of human–robot collaboration, where systems must remain reliable across continually changing users, sensors, and environments. ReDIaL can serve as the front end for contact-rich, human-guided tasks by providing robust cross-user gesture commands under domain shift, while a downstream impedance-learning controller ensures contact stability in unknown environments. This perception–control pairing is consistent with evidence that impedance learning reduces tracking error and operator effort in carving and grinding tasks in real environments [51]. Looking ahead, future work will explore adaptive encoder updates, more informative exemplar selection strategies, and on-robot evaluations to further enhance robustness, privacy, and deployment readiness.

Author Contributions

Conceptualization, K.K.P. and J.Z.; methodology, K.K.P. and P.D.; validation, K.K.P. and P.D.; formal analysis, K.K.P.; investigation, K.K.P. and P.D.; data curation, K.K.P.; writing—original draft preparation, K.K.P.; writing—review and editing, K.K.P. and P.D.; visualization, K.K.P. and P.D.; supervision, J.Z.; funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the NSF under Grant CCSS-2245607.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are restricted because the system and dataset are still under active development and refinement.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Goodrich, M.A.; Schultz, A.C. Human–robot interaction: A survey. Found. Trends Hum.-Comput. Interact. 2008, 1, 203–275.

2. Wang, X.; Shen, H.; Yu, H.; Guo, J.; Wei, X. Hand and arm gesture-based human-robot interaction: A review. In Proceedings of the 6th International Conference on Algorithms, Computing and Systems, Larissa, Greece, 16–18 September 2022; pp. 1–7.

3. de Frutos Carro, M.Á.; López Hernández, F.C.; Granados, J.J.R. Real-time visual recognition of ramp hand signals for UAS ground operations. J. Intell. Robot. Syst. 2023, 107, 44.

4. Podder, K.K.; Zhang, J.; Wu, Y. IHSR: A Framework Enables Robots to Learn Novel Hand Signals from a Few Samples. In Proceedings of the 2024 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Boston, MA, USA, 15–19 July 2024; pp. 959–965.

5. Podder, K.K.; Zhang, J.; Mao, S. Trustworthy Hand Signal Communication Between Smart IoT Agents and Humans. In Proceedings of the 2024 IEEE 10th World Forum on Internet of Things (WF-IoT), Ottawa, ON, Canada, 10–13 November 2024; pp. 595–600.

6. Beeri, E.B.; Nissinman, E.; Sintov, A. Robust Dynamic Gesture Recognition at Ultra-Long Distances. arXiv 2024, arXiv:2411.18413.

7. Xie, J.; Xu, Z.; Zeng, J.; Gao, Y.; Hashimoto, K. Human–Robot Interaction Using Dynamic Hand Gesture for Teleoperation of Quadruped Robots with a Robotic Arm. Electronics 2025, 14, 860.

8. Podder, K.K.; Chowdhury, M.; Mahbub, Z.B.; Kadir, M. Bangla sign language alphabet recognition using transfer learning based convolutional neural network. Bangladesh J. Sci. Res. 2020, 31, 20–26.

9. Podder, K.K.; Chowdhury, M.E.; Tahir, A.M.; Mahbub, Z.B.; Khandakar, A.; Hossain, M.S.; Kadir, M.A. Bangla sign language (bdsl) alphabets and numerals classification using a deep learning model. Sensors 2022, 22, 574.

10. Podder, K.K.; Ezeddin, M.; Chowdhury, M.E.; Sumon, M.S.I.; Tahir, A.M.; Ayari, M.A.; Dutta, P.; Khandakar, A.; Mahbub, Z.B.; Kadir, M.A. Signer-independent arabic sign language recognition system using deep learning model. Sensors 2023, 23, 7156.

11. Podder, K.K.; Zhang, J.; Wang, L. Universal Sign Language Recognition System Using Gesture Description Generation and Large Language Model. In Proceedings of the International Conference on Wireless Artificial Intelligent Computing Systems and Applications, Qingdao, China, 21–23 June 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 279–289.

12. Xu, J.; Xiao, L.; López, A.M. Self-supervised domain adaptation for computer vision tasks. IEEE Access 2019, 7, 156694–156706.

13. Luo, Y.; Zheng, L.; Guan, T.; Yu, J.; Yang, Y. Taking a closer look at domain shift: Category-level adversaries for semantics consistent domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2507–2516.

14. Venkateswara, H.; Chakraborty, S.; Panchanathan, S. Deep-learning systems for domain adaptation in computer vision: Learning transferable feature representations. IEEE Signal Process. Mag. 2017, 34, 117–129.

15. Tanveer, M.H.; Fatima, Z.; Zardari, S.; Guerra-Zubiaga, D. An in-depth analysis of domain adaptation in computer and robotic vision. Appl. Sci. 2023, 13, 12823.

16. Kouw, W.M.; Loog, M. An introduction to domain adaptation and transfer learning. arXiv 2018, arXiv:1812.11806.

17. Baptista, J.; Santos, V.; Silva, F.; Pinho, D. Domain adaptation with contrastive simultaneous multi-loss training for hand gesture recognition. Sensors 2023, 23, 3332.

18. Shi, H.; Wang, H. A unified approach to domain incremental learning with memory: Theory and algorithm. Adv. Neural Inf. Process. Syst. 2023, 36, 15027–15059.

19. Lamers, C.; Vidal, R.; Belbachir, N.; van Stein, N.; Bäck, T.; Giampouras, P. Clustering-based domain-incremental learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 3384–3392.

20. Rolnick, D.; Ahuja, A.; Schwarz, J.; Lillicrap, T.; Wayne, G. Experience replay for continual learning. Adv. Neural Inf. Process. Syst. 2019, 32, 350–360.

21. Houyon, J.; Cioppa, A.; Ghunaim, Y.; Alfarra, M.; Halin, A.; Henry, M.; Ghanem, B.; Van Droogenbroeck, M. Online distillation with continual learning for cyclic domain shifts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2437–2446.

22. Li, S.; Su, T.; Zhang, X.; Wang, Z. Continual Learning With Knowledge Distillation: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 9798–9818.

23. Kutalev, A.; Lapina, A. Stabilizing Elastic Weight Consolidation method in practical ML tasks and using weight importances for neural network pruning. arXiv 2021, arXiv:2109.10021.

24. Aich, A. Elastic weight consolidation (EWC): Nuts and bolts. arXiv 2021, arXiv:2105.04093.

25. Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A.; et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

26. Lee, S.W.; Kim, J.H.; Jun, J.; Ha, J.W.; Zhang, B.T. Overcoming catastrophic forgetting by incremental moment matching. Adv. Neural Inf. Process. Syst. 2017, 30, 4655–4665.

27. Cucurull, X.; Garrell, A. Continual learning of hand gestures for human-robot interaction. arXiv 2023, arXiv:2304.06319.

28. Ding, Q.; Liu, D.; Ai, J.; Yin, P.; Wang, F.; Han, S. Comparisons on incremental gesture recognition with different one-class sEMG envelopes. Biomed. Signal Process. Control 2025, 103, 107421.

29. Calinon, S.; Billard, A. Incremental learning of gestures by imitation in a humanoid robot. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Arlington, VA, USA, 9–11 March 2007; pp. 255–262.

30. Aich, S.; Ruiz-Santaquiteria, J.; Lu, Z.; Garg, P.; Joseph, K.; Garcia, A.F.; Balasubramanian, V.N.; Kin, K.; Wan, C.; Camgoz, N.C.; et al. Data-free class-incremental hand gesture recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 20958–20967.

31. Wang, Z.; She, Q.; Chalasani, T.; Smolic, A. Catnet: Class incremental 3d convnets for lifelong egocentric gesture recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 230–231.

32. Xu, X.; Zou, Q.; Lin, X. Alleviating human-level shift: A robust domain adaptation method for multi-person pose estimation. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2326–2335.

33. Van de Ven, G.M.; Tuytelaars, T.; Tolias, A.S. Three types of incremental learning. Nat. Mach. Intell. 2022, 4, 1185–1197.

34. Canal, G.; Angulo, C.; Escalera, S. Gesture based human multi-robot interaction. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8.

35. Su, H.; Qi, W.; Chen, J.; Yang, C.; Sandoval, J.; Laribi, M.A. Recent advancements in multimodal human–robot interaction. Front. Neurorobot. 2023, 17, 1084000.

36. Alonso-Mora, J.; Lohaus, S.H.; Leemann, P.; Siegwart, R.Y.; Beardsley, P.A. Gesture based human-Multi-robot swarm interaction and its application to an interactive display. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5948–5953.

37. Cicirelli, G.; Attolico, C.; Guaragnella, C.; D’Orazio, T. A kinect-based gesture recognition approach for a natural human robot interface. Int. J. Adv. Robot. Syst. 2015, 12, 22.

38. Nguyen, K.H.; Pham, A.D.; Minh, T.B.; Phan, T.T.T.; Do, X.P. Gesture Recognition Model with Multi-Tracking Capture System for Human-Robot Interaction. In Proceedings of the 2023 International Conference on System Science and Engineering (ICSSE), Ho Chi Minh, Vietnam, 27–28 July 2023; pp. 6–11.

39. Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual Lifelong Learning with Neural Networks: A Review. Neural Netw. Off. J. Int. Neural Netw. Soc. 2018, 113, 54–71.

40. Xie, J.; Yan, S.; He, X. General Incremental Learning with Domain-aware Categorical Representations. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 21–24 June 2022; pp. 14331–14340.

41. Hung, S.C.Y.; Tu, C.H.; Wu, C.E.; Chen, C.H.; Chan, Y.M.; Chen, C.S. Compacting, Picking and Growing for Unforgetting Continual Learning. arXiv 2019, arXiv:1910.06562.

42. Hayes, T.L.; Cahill, N.D.; Kanan, C. Memory Efficient Experience Replay for Streaming Learning. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9769–9776.

43. Pellegrini, L.; Graffieti, G.; Lomonaco, V.; Maltoni, D. Latent Replay for Real-Time Continual Learning. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 10203–10209.

44. Balles, L.; Zappella, G.; Archambeau, C. Gradient-Matching Coresets for Rehearsal-Based Continual Learning. arXiv 2022, arXiv:2203.14544.

45. Yoon, J.; Madaan, D.; Yang, E.; Hwang, S.J. Online Coreset Selection for Rehearsal-based Continual Learning. arXiv 2021, arXiv:2106.01085.

46. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. Mediapipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172.

47. Martínez, G.H. Openpose: Whole-Body Pose Estimation. Ph.D. Dissertation, Robotics Institute Carnegie Mellon University, Pittsburgh, PA, USA, 2019.

48. Wang, Q.; Zhang, K.; Asghar, M.A. Skeleton-based ST-GCN for human action recognition with extended skeleton graph and partitioning strategy. IEEE Access 2022, 10, 41403–41410.

49. Kim, S.; Xiao, R.; Georgescu, M.I.; Alaniz, S.; Akata, Z. COSMOS: Cross-Modality Self-Distillation for Vision Language Pre-training. arXiv 2024, arXiv:2412.01814.

50. Song, Y.; Demirdjian, D.; Davis, R. Tracking body and hands for gesture recognition: Natops aircraft handling signals database. In Proceedings of the 2011 IEEE International Conference on Automatic Face & Gesture Recognition (FG), Santa Barbara, CA, USA, 21–25 March 2011; pp. 500–506.

51. Xing, X.; Burdet, E.; Si, W.; Yang, C.; Li, Y. Impedance learning for human-guided robots in contact with unknown environments. IEEE Trans. Robot. 2023, 39, 3705–3721.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).