A Dual-Structured Convolutional Neural Network with an Attention Mechanism for Image Classification

Abstract

1. Introduction

1.1. Background

1.2. Related Work

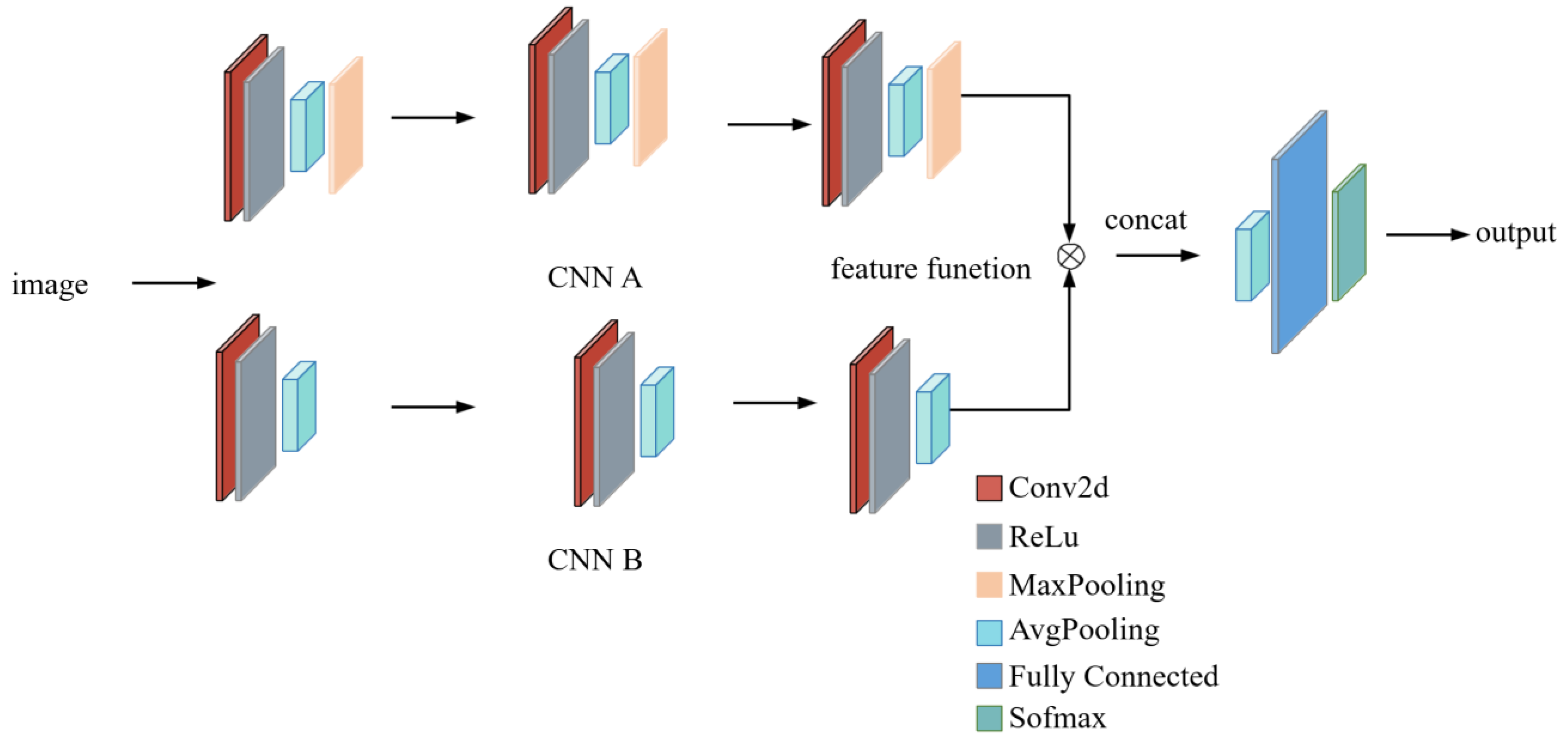

2. Materials and Methods

2.1. CNN-A Branch with Spatial Attention

2.2. CNN-B Branch with Channel Attention

2.3. Feature Fusion

2.4. The Classifier

2.5. Algorithm Implementation

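| Algorithm 1. Dual CNN with attention mechanism |
|---|
| Input: input image |
| Output: predicted class label |
| 1: CNN-A: extract features; apply spatial attention |
| 2: CNN-B: extract features; apply channel attention |
| 3: Fusion: concatenate the two attended feature maps |
| 4: Classification: pooling, then prediction |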
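Algorithm 1 can be read as a single forward pass. The NumPy sketch below illustrates that pass under stated assumptions; it is not the authors' implementation. Layer widths follow the branch architecture table (3 × 3 kernels, stride 1, padding 1, 32/64/128 channels), CNN-A uses spatial attention and CNN-B channel attention as in the headings of Sections 2.1 and 2.2, and the fused map is globally average-pooled before a fully connected softmax layer. The ReLU activations, the squeeze-and-excitation form of channel attention, the RGB 16 × 16 input, the 10-class output and all function names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)  # seed 42, as in the hyperparameter table

def he_normal(shape, fan_in):
    # He-normal initialisation: zero mean, variance 2 / fan_in
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=shape)

def conv2d(x, w, b):
    """Stride-1 'same' convolution for an odd kernel. x: (C_in, H, W); w: (C_out, C_in, k, k)."""
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    _, h, wd = x.shape
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            # contract over (C_in, k, k) for all output channels at once
            out[:, i, j] = np.tensordot(w, xp[:, i:i + k, j:j + k], axes=3) + b
    return out

def make_branch(c_in=3, widths=(32, 64, 128), k=3):
    layers = []
    for c_out in widths:
        layers.append((he_normal((c_out, c_in, k, k), c_in * k * k), np.zeros(c_out)))
        c_in = c_out
    return layers

def run_branch(x, layers):
    for w, b in layers:
        x = np.maximum(conv2d(x, w, b), 0.0)  # convolution followed by ReLU (assumed)
    return x

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def spatial_attention(f, w7):
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W)
    return f * sigmoid(conv2d(pooled, w7, np.zeros(1)))  # (1, H, W) gate broadcast over C

def channel_attention(f, w1, w2):
    s = f.mean(axis=(1, 2))                              # squeeze: (C,)
    gate = sigmoid(w2 @ np.maximum(w1 @ s, 0.0))         # excite through a C/r bottleneck
    return f * gate[:, None, None]

def dual_cnn_forward(x, params):
    fa = spatial_attention(run_branch(x, params["A"]), params["w7"])                # CNN-A
    fb = channel_attention(run_branch(x, params["B"]), params["w1"], params["w2"])  # CNN-B
    fused = np.concatenate([fa, fb], axis=0)             # fusion: (256, H, W)
    v = fused.mean(axis=(1, 2))                          # global average pooling
    logits = params["fc_w"] @ v + params["fc_b"]
    e = np.exp(logits - logits.max())                    # numerically stable softmax
    return e / e.sum()

C, r, num_classes = 128, 16, 10
params = {
    "A": make_branch(),
    "B": make_branch(),
    "w7": he_normal((1, 2, 7, 7), 2 * 7 * 7),
    "w1": he_normal((C // r, C), C),
    "w2": he_normal((C, C // r), C // r),
    "fc_w": he_normal((num_classes, 2 * C), 2 * C),
    "fc_b": np.zeros(num_classes),
}
x = rng.normal(size=(3, 16, 16))
probs = dual_cnn_forward(x, params)
print(probs.shape, int(probs.argmax()))
```

The naive convolution loop favours readability over speed; a real implementation would use a deep-learning framework's convolution layers.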

2.6. Experimental Datasets

3. Results

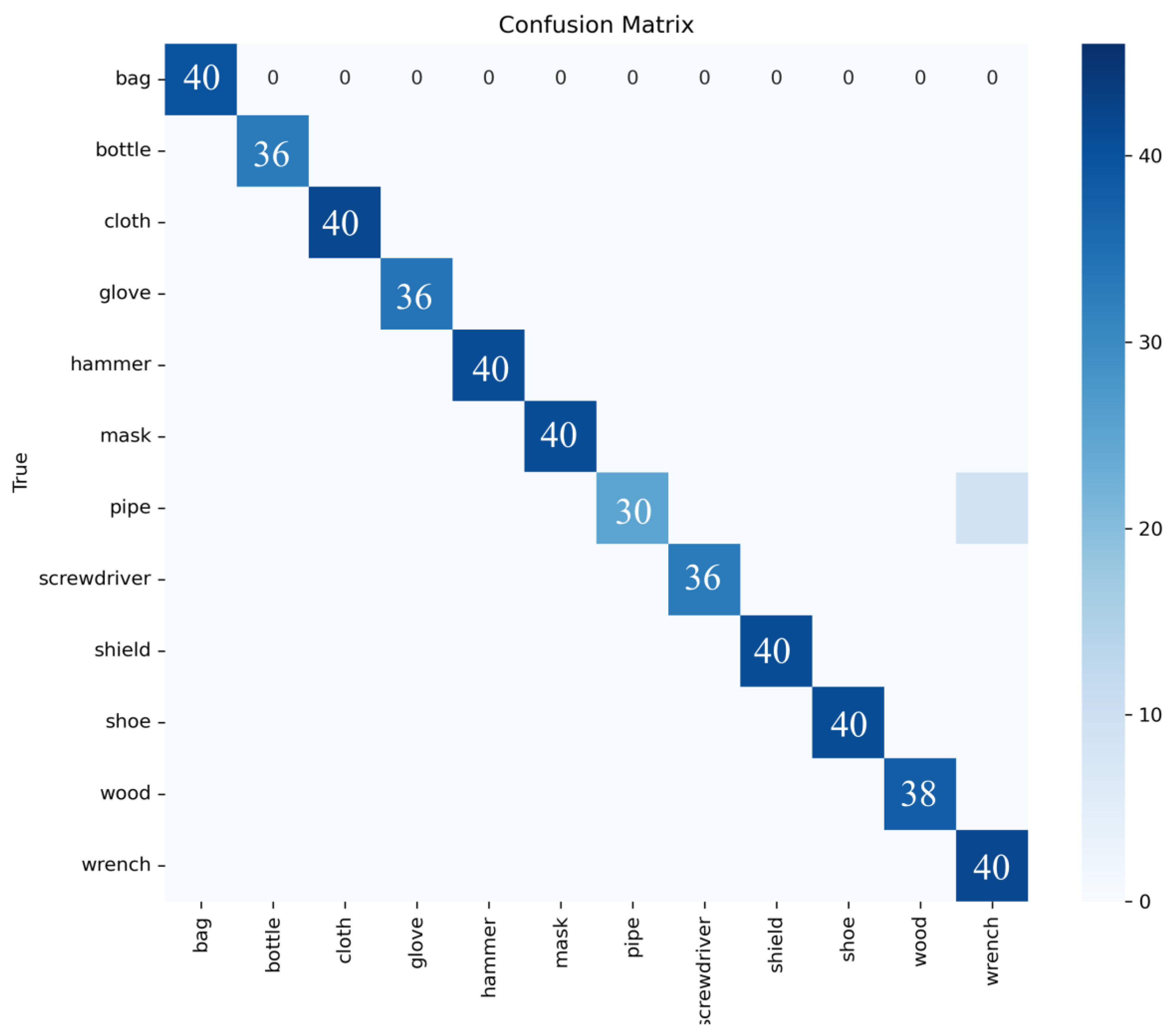

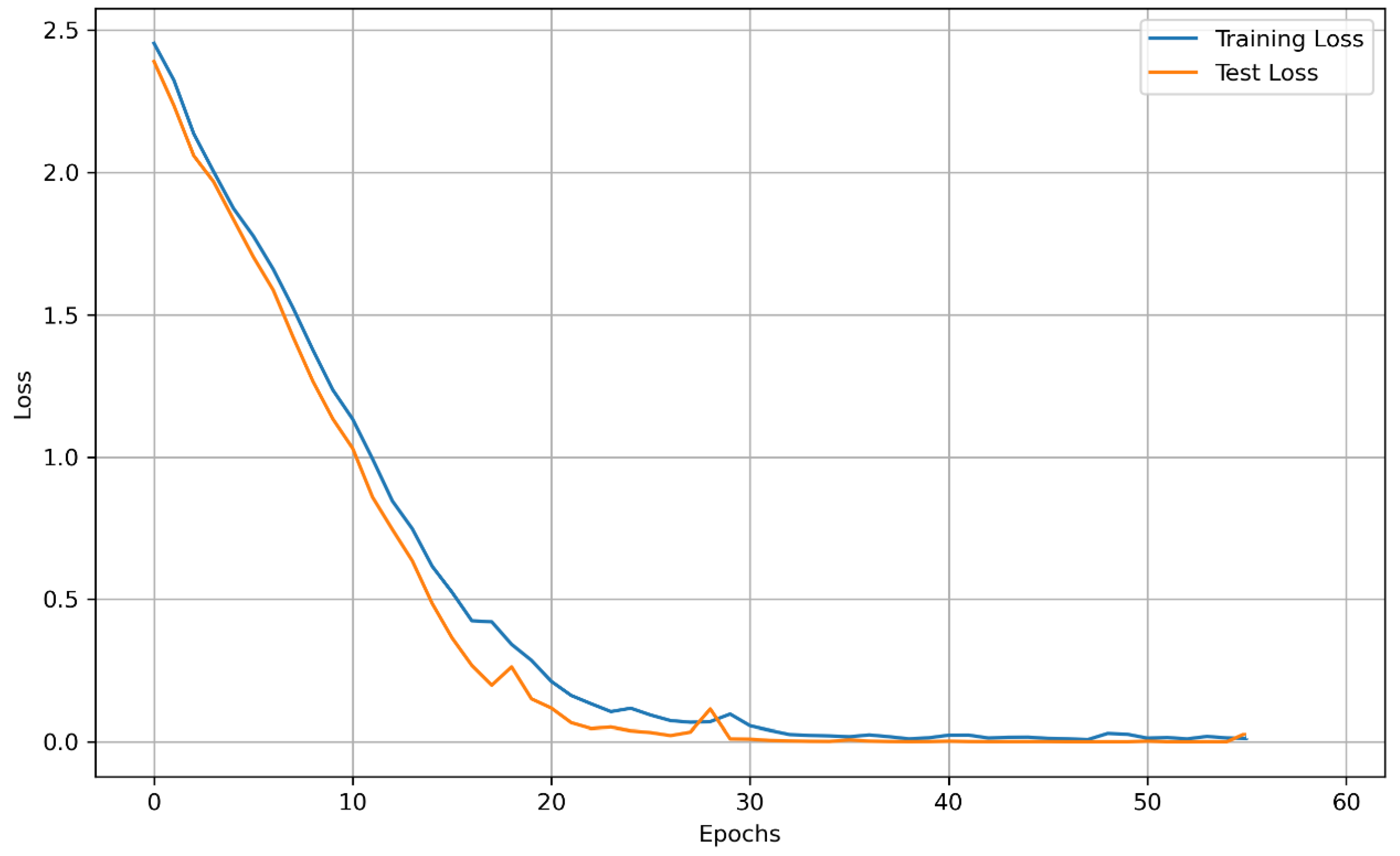

3.1. Classification Performance

3.2. Classification Efficiency

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Yang, S.; Chen, L. Computer-Aided Pathology Image Classification and Segmentation Joint Analysis Model. In Proceedings of the 2025 International Conference on Multi-Agent Systems for Collaborative Intelligence (ICMSCI), Erode, India, 20–22 January 2025; pp. 1317–1322.

2. Yang, Y.; Fu, H.; Aviles-Rivero, A.I.; Xing, Z.; Zhu, L. DiffMIC-v2: Medical Image Classification via Improved Diffusion Network. IEEE Trans. Med. Imaging 2025, 44, 2244–2255.

3. Shovon, I.I.; Ahmad, I.; Shin, S. Segmentation Aided Multiclass Tumor Classification in Ultrasound Images using Graph Neural Network. In Proceedings of the 2025 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 18–21 February 2025; pp. 1012–1015.

4. Liu, Y.; Zhang, H.; Su, D. Research on the Application of Artificial Intelligence in the Classification of Artistic Attributes of Photographic Images. In Proceedings of the 2025 Asia-Europe Conference on Cybersecurity, Internet of Things and Soft Computing (CITSC), Rimini, Italy, 10–12 January 2025; pp. 370–374.

5. Rollo, S.D.; Yusiong, J.P.T. A Two-Color Space Input Parallel CNN Model for Food Image Classification. In Proceedings of the 2024 International Conference on Information Technology Research and Innovation (ICITRI), Jakarta, Indonesia, 5–6 September 2024; pp. 141–145.

6. El Amoury, S.; Smili, Y.; Fakhri, Y. CNN Hyper-parameter Optimization Using Simulated Annealing for MRI Brain Tumor Image Classification. In Proceedings of the 2025 5th International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET), Fez, Morocco, 15–16 May 2025; pp. 1–5.

7. Li, J.; Liu, H.; Li, K.; Shan, K. Heart Sound Classification Based on Two-channel Feature Fusion and Dual Attention Mechanism. In Proceedings of the 2024 5th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 12–14 April 2024; pp. 1294–1297.

8. Tong, X.; Chen, J.; Shi, J.; Jiang, Y. Improved ResNet50 Galaxy Classification with Multi-Attention Mechanism. In Proceedings of the 2025 5th International Conference on Artificial Intelligence and Industrial Technology Applications (AIITA), Xi’an, China, 28–30 March 2025; pp. 725–729.

9. Zheng, Z.; Slam, N. A VGG Fire Image Classification Model with Attention Mechanism. In Proceedings of the 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL), Zhuhai, China, 19–21 April 2024; pp. 873–877.

10. Wu, W. Face Recognition Algorithm Based on ResNet50 and Improved Attention Mechanism. In Proceedings of the 2025 5th International Conference on Neural Networks, Information and Communication Engineering (NNICE), Guangzhou, China, 10–12 January 2025; pp. 98–101.

11. Lei, W.; Liu, X.; Ye, L.; Hu, T.; Gong, L.; Luo, J. Research on Graph Feature Data Aggregation Algorithm Based on Graph Convolution and Attention Mechanism. In Proceedings of the 2024 4th International Conference on Electronic Materials and Information Engineering (EMIE), Guangzhou, China, 24–26 May 2024; pp. 146–150.

12. Zhang, R.; Xie, M. A Multi-task learning model with low-level feature sharing and inter-feature guidance for segmentation and classification of medical images. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisboa, Portugal, 3–6 December 2024; pp. 2894–2899.

13. Shi, J.; Liu, Y.; Yi, W.; Lu, X. Semantic-Guided Cross-Modal Feature Alignment for Cross-Domain Few-Shot Hyperspectral Image Classification. In Proceedings of the 2025 6th International Conference on Computer Vision, Image and Deep Learning (CVIDL), Ningbo, China, 23–25 May 2025; pp. 622–625.

14. Wang, Y.; Liu, G.; Yang, L.; Liu, J.; Wei, L. An Attention-Based Feature Processing Method for Cross-Domain Hyperspectral Image Classification. IEEE Signal Process. Lett. 2025, 32, 196–200.

15. Sun, K.; Dong, F.; Liu, W.; Wu, Q.; Sun, X.; Wang, W. Hyperspectral Image Classification with Spatial–Spectral–Channel 3-D Attention and Channel Attention. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4412314.

16. Akilan, T.; Wu, Q.J.; Safaei, A.; Huo, J.; Yang, Y. A 3D CNN-LSTM-Based Image-to-Image Foreground Segmentation. IEEE Trans. Intell. Transp. Syst. 2020, 21, 959–971.

17. Xu, R.; Wang, C.; Zhang, J.; Xu, S.; Meng, W.; Zhang, X. RSSFormer: Foreground Saliency Enhancement for Remote Sensing Land-Cover Segmentation. IEEE Trans. Image Process. 2023, 32, 1052–1064.

18. Su, T.; Wang, H.; Qi, Q.; Wang, L.; He, B. Transductive Learning With Prior Knowledge for Generalized Zero-Shot Action Recognition. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 260–273.

19. Zhang, X.; Xiao, Z.; Ma, J.; Wu, X.; Zhao, J.; Zhang, S.; Li, R.; Pan, Y.; Liu, J. Adaptive Dual-Axis Style-Based Recalibration Network with Class-Wise Statistics Loss for Imbalanced Medical Image Classification. IEEE Trans. Image Process. 2025, 34, 2081–2096.

20. Zheng, Y.; Liu, S.; Bruzzone, L. An Attention-Enhanced Feature Fusion Network (AeF2N) for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2023, 20, 5511005.

21. Priya, V.V.; Chattu, P.; Sivasankari, K.; Pisal, D.T.; Sai, B.R.; Suganthi, D. Exploring Convolution Neural Networks for Image Classification in Medical Imaging. In Proceedings of the 2024 International Conference on Intelligent and Innovative Technologies in Computing, Electrical and Electronics (IITCEE), Bangalore, India, 24–25 January 2024; pp. 1–4.

22. Hua, R.; Zhang, J.; Xue, J.; Wang, Y.; Liu, Z. FFGAN: Feature Fusion GAN for Few-shot Image Classification. In Proceedings of the 2024 Asia-Pacific Conference on Image Processing, Electronics and Computers (IPEC), Dalian, China, 12–14 April 2024; pp. 96–102.

23. Brikci, Y.B.; Benazzouz, M.; Benomar, M.L. A Comparative Study of ViT-B16, DeiT, and ResNet50 for Peripheral Blood Cell Image Classification. In Proceedings of the 2024 International Conference of the African Federation of Operational Research Societies (AFROS), Tlemcen, Algeria, 3–5 November 2024; pp. 1–5.

24. Dosovitskiy, A.; Beyer, L. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 2021 International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021; pp. 1–22.

25. Touvron, H.; Cord, M.; Douze, M.; Massa, F. Training data-efficient image transformers & distillation through attention. In Proceedings of the 2021 International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; pp. 1–22.

26. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 1–14.

27. Liu, Y.; Tian, Y.; Liang, Y. VMamba: Visual State Space Model. Comput. Vis. Pattern Recognit. 2024, 4, 1–33.

| Branch | Layer | Output Channels | Kernel | Stride | Padding |
|---|---|---|---|---|---|
| CNN-A | ConvA1 | 32 | 3 × 3 | 1 | 1 |
| | ConvA2 | 64 | 3 × 3 | 1 | 1 |
| | ConvA3 | 128 | 3 × 3 | 1 | 1 |
| CNN-B | ConvB1 | 32 | 3 × 3 | 1 | 1 |
| | ConvB2 | 64 | 3 × 3 | 1 | 1 |
| | ConvB3 | 128 | 3 × 3 | 1 | 1 |
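With 3 × 3 kernels, stride 1 and padding 1, every convolution in the branch architecture table preserves the spatial size of its input, and each layer's parameter count follows from the kernel size and channel widths. The sketch below works through that arithmetic. It assumes an RGB (3-channel) input, one bias per output channel, and no batch normalisation or pooling between layers; the table states none of these, and the helper names are hypothetical.

```python
def conv_out_size(n, k=3, s=1, p=1):
    # Output size of a convolution: floor((n + 2p - k) / s) + 1
    return (n + 2 * p - k) // s + 1

def conv_params(c_in, c_out, k=3, bias=True):
    # c_in * c_out * k * k weights, plus one bias per output channel
    return c_in * c_out * k * k + (c_out if bias else 0)

def branch_params(c_in=3, widths=(32, 64, 128), k=3):
    # Sum over ConvX1..ConvX3, feeding each layer's output width into the next
    total = 0
    for c_out in widths:
        total += conv_params(c_in, c_out, k)
        c_in = c_out
    return total

print(conv_out_size(224))   # 224: spatial size is preserved
print(conv_params(3, 32))   # 896 for ConvA1 / ConvB1
print(branch_params())      # 93248 per branch
print(2 * branch_params())  # 186496 for CNN-A plus CNN-B
```

Under these assumptions the third layer (73,856 parameters) accounts for most of each branch.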

| Module | Parameter | Value | Role |
|---|---|---|---|
| Spatial attention | Convolution kernel | 7 × 7 | Spatial weight |
| Channel attention | Reduction ratio | 16 | Channel compression |
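A 7 × 7 spatial kernel and a reduction ratio of 16 are also the settings used by the CBAM attention module, so the sketch below assumes the CBAM formulation; the source does not confirm that the authors use exactly this design. Channel attention passes average- and max-pooled channel descriptors through a shared C → C/r → C bottleneck and adds them before a sigmoid; spatial attention stacks the channel-wise mean and max maps and applies a 7 × 7 convolution and a sigmoid. Function names and the random weights are illustrative; `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(f, w1, w2):
    """f: (C, H, W); w1: (C // r, C); w2: (C, C // r). Returns (reweighted f, gate)."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # shared bottleneck C -> C/r -> C
    gate = sigmoid(mlp(f.mean(axis=(1, 2))) + mlp(f.max(axis=(1, 2))))
    return f * gate[:, None, None], gate

def spatial_attention(f, w):
    """f: (C, H, W); w: (2, k, k) with odd k. Returns (reweighted f, gate)."""
    k = w.shape[-1]
    p = k // 2
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])       # (2, H, W)
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))        # 'same' padding
    win = sliding_window_view(padded, (k, k), axis=(1, 2))   # (2, H, W, k, k)
    gate = sigmoid(np.einsum("chwij,cij->hw", win, w))       # 7x7 conv -> (H, W)
    return f * gate[None], gate

rng = np.random.default_rng(0)
C, r, H, W = 128, 16, 8, 8
f = rng.normal(size=(C, H, W))
w1 = rng.normal(0.0, 0.1, size=(C // r, C))
w2 = rng.normal(0.0, 0.1, size=(C, C // r))
w_sp = rng.normal(0.0, 0.1, size=(2, 7, 7))

f_ch, ch_gate = channel_attention(f, w1, w2)
f_sp, sp_gate = spatial_attention(f, w_sp)
print(ch_gate.shape, sp_gate.shape)  # (128,) (8, 8)
print(w1.size + w2.size, w_sp.size)  # 2048 98
```

With 128 channels and r = 16, the bottleneck has 8 units, so the channel module adds 2,048 weights and the spatial module 98 (biases omitted), which is small next to the convolutional layers.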

| Component | Output Dimension | Parameters |
|---|---|---|
| Concatenation | — | |
| Global Pooling | — | |
| Fully Connected | C | |
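The fusion and classifier stages can be sketched as follows, assuming both branches end with the 128-channel ConvA3/ConvB3 outputs at the same spatial size. Channel-wise concatenation then yields 256 channels, global average pooling reduces them to a 256-dimensional vector, and the fully connected layer maps it to C class scores. The softmax output is an assumption consistent with the categorical cross-entropy loss in the hyperparameter table; the function names are hypothetical.

```python
import numpy as np

def fuse_and_classify(fa, fb, w, b):
    """fa: (C_A, H, W); fb: (C_B, H, W); w: (num_classes, C_A + C_B); b: (num_classes,)."""
    fused = np.concatenate([fa, fb], axis=0)  # channel-wise concatenation
    pooled = fused.mean(axis=(1, 2))          # global average pooling -> (C_A + C_B,)
    logits = w @ pooled + b                   # fully connected layer -> (num_classes,)
    z = np.exp(logits - logits.max())         # numerically stable softmax
    return z / z.sum()

def fc_params(in_features, num_classes):
    # weight matrix plus one bias per class
    return in_features * num_classes + num_classes

rng = np.random.default_rng(1)
fa = rng.normal(size=(128, 8, 8))
fb = rng.normal(size=(128, 8, 8))
probs = fuse_and_classify(fa, fb, rng.normal(0.0, 0.05, size=(10, 256)), np.zeros(10))
print(probs.shape)                               # (10,)
print(fc_params(256, 10), fc_params(256, 100))   # 2570 25700
```

Concatenation and pooling add no learnable parameters, so under these assumptions the classifier head costs 2,570 parameters for CIFAR-10 and 25,700 for CIFAR-100.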

| Hyperparameter | Value/Description | Comment |
|---|---|---|
| Optimizer | Adam | |
| Learning Rate | 0.001 | |
| Learning Rate Scheduler | ReduceLROnPlateau | Factor: 0.5, Patience: 5 |
| Batch Size | 32 | |
| Number of Epochs (Max) | 60 | Training halted by early stopping. |
| Early Stopping Patience | 10 | Monitored validation loss. |
| Loss Function | Categorical Cross-Entropy | |
| Weight Initialization | He Normal | For convolutional layers. |
| Data Augmentation | Horizontal Flip, Random Rotation (±10°) | Applied only to training set. |
| Train/Test Split | 1550/465 | ~77%/~23% split. |
| Reduction Ratio (r) | 16 | For channel attention module. |
| Spatial Attention Kernel | 7 × 7 | Convolution kernel size. |
| Random Seed | 42 | Fixed for reproducibility. |
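The scheduler and early-stopping rows interact: the learning rate is halved after 5 epochs without improvement in validation loss, and training ends after 10 such epochs or at epoch 60, whichever comes first. The pure-Python sketch below is a simplified model of that logic. It is not the Keras or PyTorch `ReduceLROnPlateau` implementation, which adds options such as an improvement threshold, a cooldown and a minimum learning rate; the class name and the loss curve are hypothetical.

```python
class PlateauController:
    """Hypothetical helper: halve the LR on a plateau and stop early, both on validation loss."""

    def __init__(self, lr=1e-3, factor=0.5, lr_patience=5, stop_patience=10):
        self.lr = lr
        self.factor = factor
        self.lr_patience = lr_patience
        self.stop_patience = stop_patience
        self.best = float("inf")
        self.lr_wait = 0    # epochs since the last improvement or LR reduction
        self.stop_wait = 0  # epochs since the last improvement

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.lr_wait = self.stop_wait = 0
            return False
        self.lr_wait += 1
        self.stop_wait += 1
        if self.lr_wait >= self.lr_patience:
            self.lr *= self.factor
            self.lr_wait = 0
        return self.stop_wait >= self.stop_patience


MAX_EPOCHS = 60
val_losses = [1.0, 0.8, 0.7] + [0.75] * (MAX_EPOCHS - 3)  # improves, then plateaus
ctl = PlateauController()
for epoch, loss in enumerate(val_losses, start=1):
    if ctl.step(loss):
        break
print(epoch, ctl.lr)  # 13 0.00025
```

In this toy run the loss stops improving after epoch 3, the learning rate is halved at epochs 8 and 13, and early stopping fires at epoch 13, well before the 60-epoch cap.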

| Methods | Accuracy | Precision | F1-Score | G-Mean | Top-1 Acc. | Top-3 Acc. | Top-5 Acc. | CIFAR-10 Top-1 Acc. | CIFAR-100 Top-1 Acc. |
|---|---|---|---|---|---|---|---|---|---|
| DCNNAM | 98.06% | 96.00% | 98.01% | 97.47% | 98.06% | 99.78% | 100% | 95.72% | 78.89% |
| DiffMIC-v2 | 92.11% | 91.88% | 95.63% | 95.56% | 92.11% | 98.28% | 99.57% | 93.15% | 75.11% |
| GNN | 92.07% | 90.44% | 93.03% | 94.14% | 92.07% | 97.42% | 99.35% | 90.88% | 72.43% |
| CNNs | 90.00% | 90.18% | 91.11% | 92.37% | 90.00% | 96.99% | 98.92% | 89.50% | 70.05% |
| FFGAN | 88.59% | 88.59% | 90.49% | 90.19% | 88.59% | 95.91% | 98.28% | 88.21% | 68.92% |
| ResNet50 | 88.11% | 88.11% | 90.08% | 89.44% | 88.11% | 95.27% | 97.85% | 94.25% | 76.83% |
| ViT-Base [24] | 95.91% | 93.85% | 95.82% | 95.23% | | | | | |
| DeiT-Tiny [25] | 94.84% | 92.77% | 94.65% | 93.98% | | | | | |
| Swin-T-Tiny [26] | 96.77% | 95.12% | 96.59% | 96.18% | | | | | |
| VMamba-Tiny [27] | 96.34% | 94.58% | 96.22% | 95.76% | | | | | |
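The results table does not define its metrics, so the sketch below assumes common definitions: macro-averaged precision and F1 over classes, G-Mean as the geometric mean of per-class recalls, and Top-k accuracy as the fraction of samples whose true label is among the k highest-scoring classes. Under these definitions Top-1 accuracy equals plain accuracy, which matches the identical Accuracy and Top-1 columns in the table. The labels and scores are toy data, not results from the paper.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n):
    cm = np.zeros((n, n), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)  # rows: true, cols: predicted
    return cm

def safe_div(a, b):
    # element-wise a / b, with 0 wherever b == 0
    return np.divide(a, b, out=np.zeros(len(a)), where=b > 0)

def classification_metrics(y_true, y_pred, n):
    cm = confusion_matrix(y_true, y_pred, n)
    tp = np.diag(cm).astype(float)
    precision = safe_div(tp, cm.sum(axis=0))
    recall = safe_div(tp, cm.sum(axis=1))
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),  # macro average
        "f1": f1.mean(),                # macro average
        "g_mean": float(np.prod(recall) ** (1.0 / n)),
    }

def top_k_accuracy(scores, y_true, k):
    top_k = np.argsort(scores, axis=1)[:, -k:]  # indices of the k largest scores per row
    return float(np.mean([y in row for y, row in zip(y_true, top_k)]))

y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
m = classification_metrics(y_true, y_pred, 3)
print({k: round(float(v), 4) for k, v in m.items()})
# {'accuracy': 0.8333, 'precision': 0.8889, 'f1': 0.8222, 'g_mean': 0.7937}

scores = np.array([[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]])
print(top_k_accuracy(scores, [2, 1], 1), top_k_accuracy(scores, [2, 1], 2))  # 0.0 1.0
```

Because G-Mean multiplies per-class recalls, a single poorly recognised class pulls it down sharply, which is why it is often reported alongside accuracy on imbalanced data.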

| Model | DCNNAM | DiffMIC-v2 | FFGAN | ResNet50 | CNNs | GNN |
|---|---|---|---|---|---|---|
| Training time (s) | 2980.57 | 3163.06 | 3055.39 | 2822.39 | 2819.60 | 2781.96 |
| Inference time (s) | 4.75 | 12.31 | 18.54 | 7.22 | 3.10 | 5.82 |
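The efficiency table is easier to read as ratios relative to DCNNAM. The sketch below computes those ratios from the table values. The per-image latency additionally assumes that the reported inference time covers the whole 465-image test split, which the table does not state; the constant and function names are illustrative.

```python
training_s = {
    "DCNNAM": 2980.57, "DiffMIC-v2": 3163.06, "FFGAN": 3055.39,
    "ResNet50": 2822.39, "CNNs": 2819.60, "GNN": 2781.96,
}
inference_s = {
    "DCNNAM": 4.75, "DiffMIC-v2": 12.31, "FFGAN": 18.54,
    "ResNet50": 7.22, "CNNs": 3.10, "GNN": 5.82,
}
TEST_IMAGES = 465  # assumption: inference time is measured over the full test split

def per_image_ms(model):
    return 1000.0 * inference_s[model] / TEST_IMAGES

for model in inference_s:
    infer_ratio = inference_s[model] / inference_s["DCNNAM"]
    train_ratio = training_s[model] / training_s["DCNNAM"]
    print(f"{model:10s} inference x{infer_ratio:.2f}  training x{train_ratio:.2f}  "
          f"{per_image_ms(model):.1f} ms/img")
```

By these ratios DCNNAM infers about 2.6 times faster than DiffMIC-v2 and 3.9 times faster than FFGAN, is slower than the plain CNNs baseline (3.10 s), and trains roughly 5.6% longer than ResNet50.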

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Zhang, J.; Liu, H.; Zhang, Y. A Dual-Structured Convolutional Neural Network with an Attention Mechanism for Image Classification. Electronics 2025, 14, 3943. https://doi.org/10.3390/electronics14193943
