1. Introduction

Visual–language tracking (VLT) [1,2,3,4,5] is an important task in multimodal perception that focuses on localizing specific target objects in video sequences by combining visual content with natural language descriptions. By incorporating linguistic cues, VLT enhances the discriminative capability of trackers compared with traditional visual tracking methods [6,7,8]. This advantage has led to its growing use in diverse applications such as intelligent surveillance, virtual reality, autonomous driving, unmanned aerial vehicles, and human-computer interaction.

Despite recent advancements, VLT continues to face key challenges in practical scenarios, including target deformation, occlusion, and interference from semantically similar distractors. These issues often disrupt the alignment between modalities and impair tracking accuracy. Existing methods primarily focus on developing more effective multimodal interaction strategies [9,10], which typically fall into two categories: dual-stream and single-stream frameworks. Dual-stream approaches [11,12,13,14,15] process visual and linguistic features separately using distinct encoders, followed by dedicated fusion modules for cross-modal interaction. In contrast, single-stream models [16,17,18,19] integrate both modalities directly through a shared backbone, enabling joint feature extraction and fusion in an end-to-end manner.

While these approaches improve modality integration to some extent, they often fail to emphasize the importance of semantic sensitivity in aligning cross-modal representations and modeling target dynamics. This shortcoming becomes particularly evident in challenging scenes with ambiguous distractors or drastic appearance changes, where fine-grained semantic understanding and adaptive temporal modeling are essential. To tackle these challenges, we introduce SATrack, a Semantically Aware Tracking framework designed for visual–language tracking. Its core objective is to improve cross-modal semantic alignment, filter out irrelevant distractors, and adaptively model target appearance changes over time, ultimately enhancing both robustness and tracking precision.

Specifically, SATrack comprises three key components: (i) Semantically Aware Contrastive Alignment (SACA) module: This component actively identifies hard negative samples, instances that are semantically similar yet belong to different categories, to improve the model’s cross-modal discriminative capacity. By focusing on subtle semantic distinctions, the SACA module enhances the precision of visual–language alignment. (ii) Cross-Modal Token Filtering (CMTF) strategy: This component combines cues from the template image and language prompt to steer attention mechanisms, enabling semantic filtering of tokens within the search region. It selectively preserves regions that are highly relevant to the query while discarding low-attention background or distracting areas. This enhances both the robustness of target representation and computational efficiency. (iii) Confidence-Guided Template Memory (CGTM) mechanism: This component maintains a dynamic memory of template frames, guided by the confidence scores of tracking predictions. This enables the model to adapt to appearance variations over time, enhancing its ability to model temporal dynamics. Extensive experiments show that SATrack significantly boosts visual–language tracking performance by improving semantic alignment, filtering out irrelevant information, and capturing appearance changes over time.

The main contributions of this work are summarized as follows:

We propose SATrack, a novel semantically aware visual–language tracking framework that explicitly addresses the challenges of cross-modal semantic shift and misalignment in complex scenes.

We design the Semantically Aware Contrastive Alignment module, which leverages hard negative mining guided by semantic similarity to enhance cross-modal discriminability and fine-grained feature alignment.

We introduce two complementary modules: Cross-Modal Token Filtering for spatial filtering of irrelevant regions, and Confidence-Guided Template Memory for adaptive temporal modeling of appearance variations. Extensive experiments on TNL2K, LaSOT, and OTB99-L demonstrate the effectiveness of SATrack, which achieves new state-of-the-art performance on TNL2K.

The remainder of the paper is organized as follows. Section 2 reviews related work in visual and visual–language tracking. Section 3 details the proposed SATrack framework, including its key components: Semantically Aware Contrastive Alignment, Cross-Modal Token Filtering, and Confidence-Guided Template Memory. Section 4 presents experimental results on several benchmarks, and Section 5 concludes the paper with future directions.

2. Related Work

2.1. Visual Tracking

Visual Object Tracking (VOT) aims to continuously predict the position of a target in subsequent video frames based on an initial bounding box provided in the first frame. As a fundamental problem in computer vision, traditional VOT can be broadly categorized into two groups: discriminative correlation filter-based methods [20,21] and Siamese network-based matching methods [22,23,24]. These approaches typically locate the target by computing the similarity between a template and a search region, which is particularly effective for short sequences with relatively stable appearance. With the success of Transformer architectures in visual tasks, recent years have witnessed the emergence of attention-based trackers such as TransT [25], STARK [26], and SwinTrack [27], which introduce global modeling capabilities and significantly improve adaptability and robustness in complex environments. However, these methods [25,26,27,28,29] largely rely on static templates, typically the initial frame, as the sole reference for tracking, and therefore lack the ability to model temporal variations in target appearance. This limitation often results in template drift when facing severe deformations, occlusions, or semantically similar distractors, ultimately degrading tracking performance. To mitigate these issues, recent works have proposed dynamic template mechanisms to enhance the modeling of target appearance over time. For example, ARTrack [7] formulates tracking as an autoregressive sequence generation task, using historical positions to progressively guide predictions. ARTrackV2 [30] further introduces learnable spatiotemporal prompts to improve long-term temporal modeling. ODTrack [31] constructs inter-frame instance-level feature relations and extracts target-specific tokens, effectively reducing redundancy while improving temporal consistency. In this paper, we aim to develop a visual–language tracker that dynamically maintains a high-quality sample pool and temporal template set, enabling adaptive modeling of target appearance evolution across time.

2.2. Visual–Language Tracking

Unlike conventional visual tracking tasks, VLT utilizes not only RGB-based reference information but also the language modality as an auxiliary input, enhancing the model’s discriminative power under complex scenarios. Existing VLT frameworks can be broadly categorized into dual-stream and single-stream architectures. Dual-stream architectures adopt separate encoders for language and visual modalities to extract features independently, followed by fusion modules to enable cross-modal interaction. For instance, UVLTrack [32] extracts visual and linguistic features in the shallow layers and fuses them in deeper stages, while VLT [33] introduces a modality mixer during separate encoding to enhance multimodal interaction. In contrast, single-stream architectures jointly feed language prompts and visual inputs into a shared Transformer backbone, enabling unified feature modeling and fusion in an end-to-end manner. For example, ATTracker [16] designs an asymmetric multi-encoder structure to directly integrate linguistic and visual cues in the Transformer, while OVLM [19] employs a unified backbone with vision–language fusion layers to improve multimodal alignment and tracking robustness. These approaches often exhibit superior performance in modeling modality consistency and facilitating efficient end-to-end training.

Despite the progress made in multimodal fusion and feature modeling, most existing VLT methods lack fine-grained modeling of cross-modal semantic consistency and semantic drift, as illustrated in Figure 1a. Under scenarios involving ambiguous semantics, severe occlusions, or drastic target appearance changes, such methods are prone to mismatches or tracking failures. To address these issues, we propose SATrack, a Semantically Aware Tracking framework, as shown in Figure 1b. SATrack introduces a semantics-guided contrastive alignment mechanism to enhance the modeling of semantic relations and improve tracking robustness under challenging conditions.

3. Proposed Method

This section presents a comprehensive overview of the proposed SATrack framework. We begin by outlining its overall tracking pipeline, then focus on the design of the Semantically Aware Contrastive Alignment module. Finally, we delve into the implementation of the Cross-Modal Token Filtering module and the Confidence-Guided Template Memory module.

3.1. Semantically Aware Contrastive Alignment Module

Conventional contrastive learning approaches (e.g., NT-Xent [34]) typically rely on global representation vectors from visual and language modalities, using cosine similarity to impose contrastive supervision. However, this strategy suffers from two key limitations: (i) it fails to capture fine-grained semantic mismatches; for example, descriptions like “person in red” and “person in blue” may be globally similar but semantically conflicting at the local level; (ii) randomly sampled negative examples are often too easy, making the contrastive loss ineffective at focusing on truly challenging negative samples.

To address these issues and enhance fine-grained alignment between visual and language modalities, we introduce the Semantically Aware Contrastive Alignment (SACA) module. The SACA module builds a token-level semantic similarity function across modalities using attention mechanisms and employs a semantic-similarity-based hard negative mining strategy to generate high-quality contrastive supervision signals. This guides the model to learn more precise and discriminative cross-modal semantic alignments.

Specifically, let the input visual modality tokens (either from the template or the search image) be denoted as $\mathbf{X}_v \in \mathbb{R}^{N_v \times d}$, and the language tokens as $\mathbf{X}_l \in \mathbb{R}^{N_l \times d}$, where $N_v$ and $N_l$ are the number of visual and language tokens, respectively, and $d$ is the embedding dimension. We apply a cross-modal attention mechanism, where $\mathbf{X}_v$ serves as the query and $\mathbf{X}_l$ as key and value, to compute the attention matrix $\mathbf{A} \in \mathbb{R}^{N_v \times N_l}$.

Here, $A_{ij}$ represents the attention weight between the $i$-th visual token and the $j$-th language token, reflecting their semantic correlation. To compute the global semantic similarity $S(\mathbf{X}_v, \mathbf{X}_l)$ between $\mathbf{X}_v$ and $\mathbf{X}_l$, we first average over each row of $\mathbf{A}$ and then aggregate across all visual tokens.

Given a batch of $B$ training pairs $\{(\mathbf{X}_v^{(n)}, \mathbf{X}_l^{(n)})\}_{n=1}^{B}$, we construct a semantic similarity matrix $\mathbf{S} \in \mathbb{R}^{B \times B}$. The entry at the $n$-th row and $m$-th column is

$$S_{nm} = S\left(\mathbf{X}_v^{(n)}, \mathbf{X}_l^{(m)}\right).$$

We treat the diagonal element $S_{nn}$, which corresponds to the similarity of the same target across the two modalities, as the positive pair similarity score. To mine hard negatives, we select the top-$K$ highest off-diagonal elements in the $n$-th row to form a hard negative set $\mathcal{H}_n$. The visual-to-language contrastive loss $\mathcal{L}_{v2l}$ contrasts each positive score $S_{nn}$ against the scores in $\mathcal{H}_n$.

To ensure symmetric alignment, we define a reverse-direction semantic similarity function $S'(\mathbf{X}_l, \mathbf{X}_v)$ by switching the roles of query and key/value. This gives a similarity matrix $\mathbf{S}' \in \mathbb{R}^{B \times B}$ and the language-to-visual contrastive loss $\mathcal{L}_{l2v}$.

The final Semantically Aware Contrastive Alignment loss is the average of both directions:

$$\mathcal{L}_{SACA} = \frac{1}{2}\left(\mathcal{L}_{v2l} + \mathcal{L}_{l2v}\right).$$
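As a rough illustration of the hard-negative objective described in this subsection, the following minimal sketch computes a two-directional contrastive loss from precomputed batch similarity matrices. The InfoNCE-style form of the per-row loss, the function names, and the toy values of `k` and `tau` are assumptions made for illustration; they are not taken from the paper's equations.

```python
import math


def hard_negative_contrastive_loss(sim, k, tau):
    """One-directional loss over a B x B similarity matrix `sim`.

    sim[n][n] is the positive score of pair n. The k largest off-diagonal
    entries of row n act as its hard negatives (InfoNCE-style form, assumed).
    """
    batch = len(sim)
    total = 0.0
    for n in range(batch):
        positive = sim[n][n] / tau
        off_diagonal = [sim[n][m] / tau for m in range(batch) if m != n]
        hard_negatives = sorted(off_diagonal, reverse=True)[:k]
        logits = [positive] + hard_negatives
        peak = max(logits)  # subtract the maximum for numerical stability
        log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
        total += log_sum - positive  # equals -log softmax(positive)
    return total / batch


def saca_loss(sim_v2l, sim_l2v, k, tau):
    """Average of the visual-to-language and language-to-visual losses."""
    forward = hard_negative_contrastive_loss(sim_v2l, k, tau)
    backward = hard_negative_contrastive_loss(sim_l2v, k, tau)
    return 0.5 * (forward + backward)


# Toy batch of three pairs; pair 0 has one confusable negative (0.8).
sim_v2l = [[0.9, 0.8, 0.1],
           [0.2, 0.9, 0.3],
           [0.1, 0.2, 0.9]]
# Hypothetical stand-in for the reverse-direction matrix: the transpose.
sim_l2v = [list(column) for column in zip(*sim_v2l)]
loss = saca_loss(sim_v2l, sim_l2v, k=1, tau=0.1)
```

Restricting the denominator to the top-K off-diagonal scores means that easy negatives contribute nothing to the gradient, which is the motivation given above for mining hard negatives.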

3.2. Cross-Modal Token Filtering Module

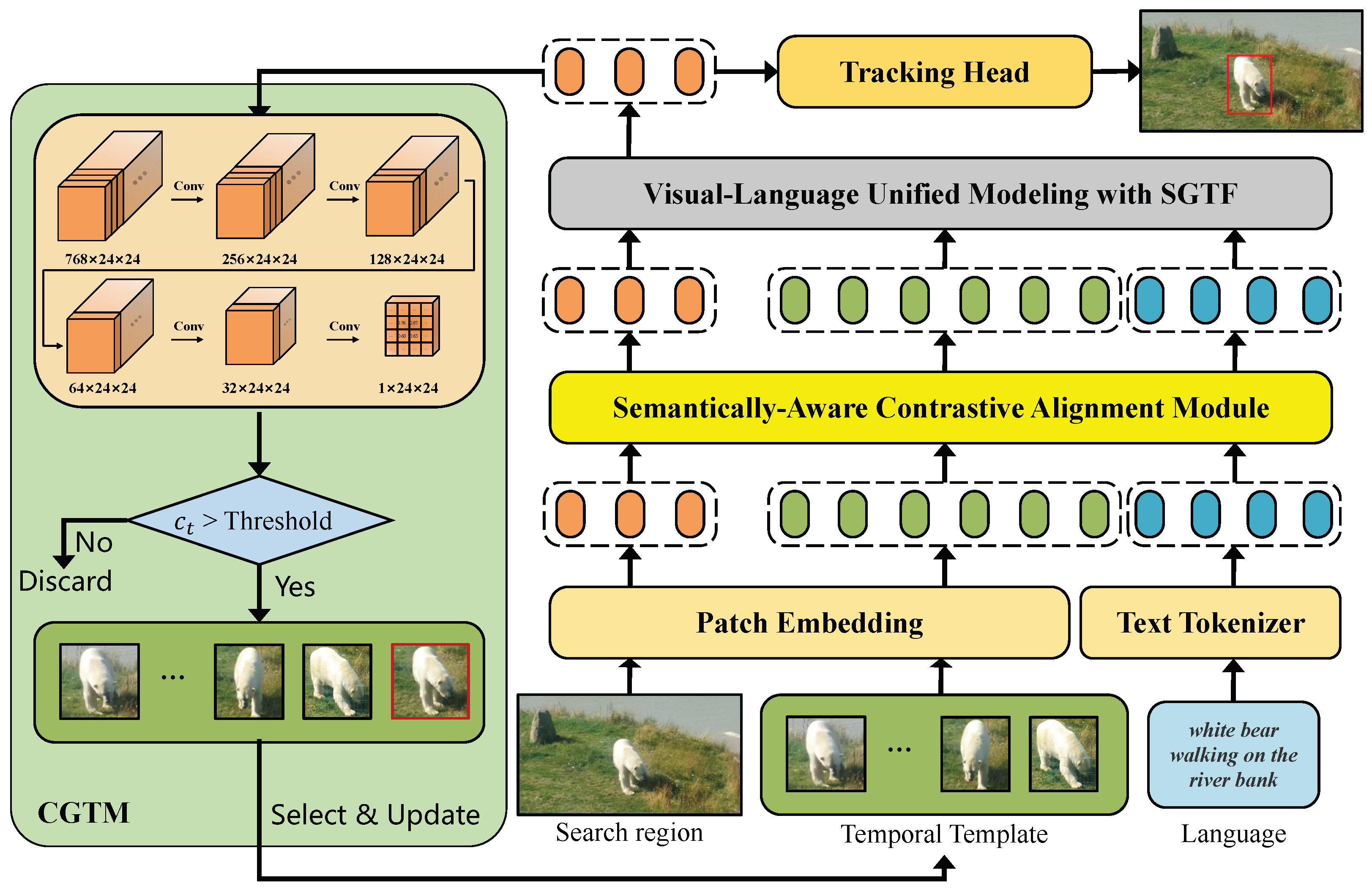

In Transformer-based unified multimodal tracking frameworks, the search region is typically divided into a large number of tokens, which include not only the target region but also extensive irrelevant background. Treating all tokens equally during feature modeling and attention computation incurs substantial computational overhead and introduces background noise, potentially degrading tracking performance. To address this issue, we propose the Cross-Modal Token Filtering (CMTF) strategy, as illustrated in Figure 2a. Leveraging multimodal priors provided by the language description and the template image, the CMTF strategy guides the Transformer to proactively discard irrelevant search tokens in the early stages. This selective filtering improves both computational efficiency and feature quality, contributing to more accurate and robust tracking.

Specifically, we concatenate the visual tokens $\mathbf{X}_z$ encoded from the template image with the language tokens $\mathbf{X}_l$ generated by the text encoder to form a set of multimodal reference tokens $[\mathbf{X}_z; \mathbf{X}_l]$. These are used as queries to perform cross-attention with the search tokens $\mathbf{X}_s$, where $[\cdot\,;\cdot]$ denotes token concatenation and the resulting attention map $\mathbf{A}_s$ represents the attention response of each search token to the multimodal queries.

We then average and normalize the attention scores from the reference tokens to compute a semantic relevance score $r_i$ for the $i$-th search token.

To retain only the most informative candidate tokens, we define a retention ratio $\rho$ and keep the top-ranked tokens, a fraction $\rho$ of the search tokens, based on their relevance scores. The selected tokens are passed to subsequent Transformer layers for further modeling, while the discarded ones are either removed or replaced with zero vectors to reduce computational overhead.
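The filtering step can be sketched in a few lines. This is a minimal illustration under stated assumptions: the attention weights are given as a nested list, "normalize" is taken to mean rescaling the averaged scores to sum to one, and the number of kept tokens is the retention ratio times the token count, rounded up. The function names are hypothetical.

```python
import math


def relevance_from_attention(attention):
    """attention[q][s] is the weight from reference token q to search token s.

    Averages over the reference tokens and rescales the result to sum to one
    (the sum-to-one normalisation is an assumption).
    """
    num_refs = len(attention)
    num_search = len(attention[0])
    mean = [sum(row[s] for row in attention) / num_refs for s in range(num_search)]
    total = sum(mean)
    return [m / total for m in mean]


def filter_search_tokens(relevance, retention_ratio):
    """Return the indices of the search tokens to keep, in original order."""
    if not 0.0 < retention_ratio <= 1.0:
        raise ValueError("retention_ratio must lie in (0, 1]")
    # The small epsilon guards against float noise such as 7.000000000000001.
    keep = max(1, math.ceil(retention_ratio * len(relevance) - 1e-9))
    ranked = sorted(range(len(relevance)), key=lambda i: relevance[i], reverse=True)
    return sorted(ranked[:keep])


# Two reference tokens attending to three search tokens (toy values).
attention = [[0.1, 0.6, 0.3],
             [0.2, 0.5, 0.3]]
relevance = relevance_from_attention(attention)
kept = filter_search_tokens(relevance, retention_ratio=0.5)
```

Returning the kept indices in their original order preserves the spatial layout of the surviving tokens, which matters if positional embeddings are reused by later layers.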

The CMTF strategy leverages cross-modal attention between language and vision to inject semantic awareness into search tokens. This mechanism not only accelerates inference but also significantly enhances the model’s robustness against background distractions. To further illustrate the behavior of early-stage candidate filtering, we visualize its step-wise process in Figure 2b.

3.3. Confidence-Guided Template Memory Module

In visual–language tracking tasks, the appearance of the target often undergoes continuous variations over time due to occlusion, scale changes, pose transformations, and other dynamic factors. These variations can lead to semantic drift between the initial template and the current target, thereby causing feature mismatches and negatively affecting tracking accuracy. To address this issue, we propose the Confidence-Guided Template Memory (CGTM) mechanism, which adaptively updates the template set by leveraging confidence prediction and high-quality template selection mechanisms. This allows the model to continuously capture the temporal evolution of target appearance, thereby enhancing robustness in long-term tracking scenarios.

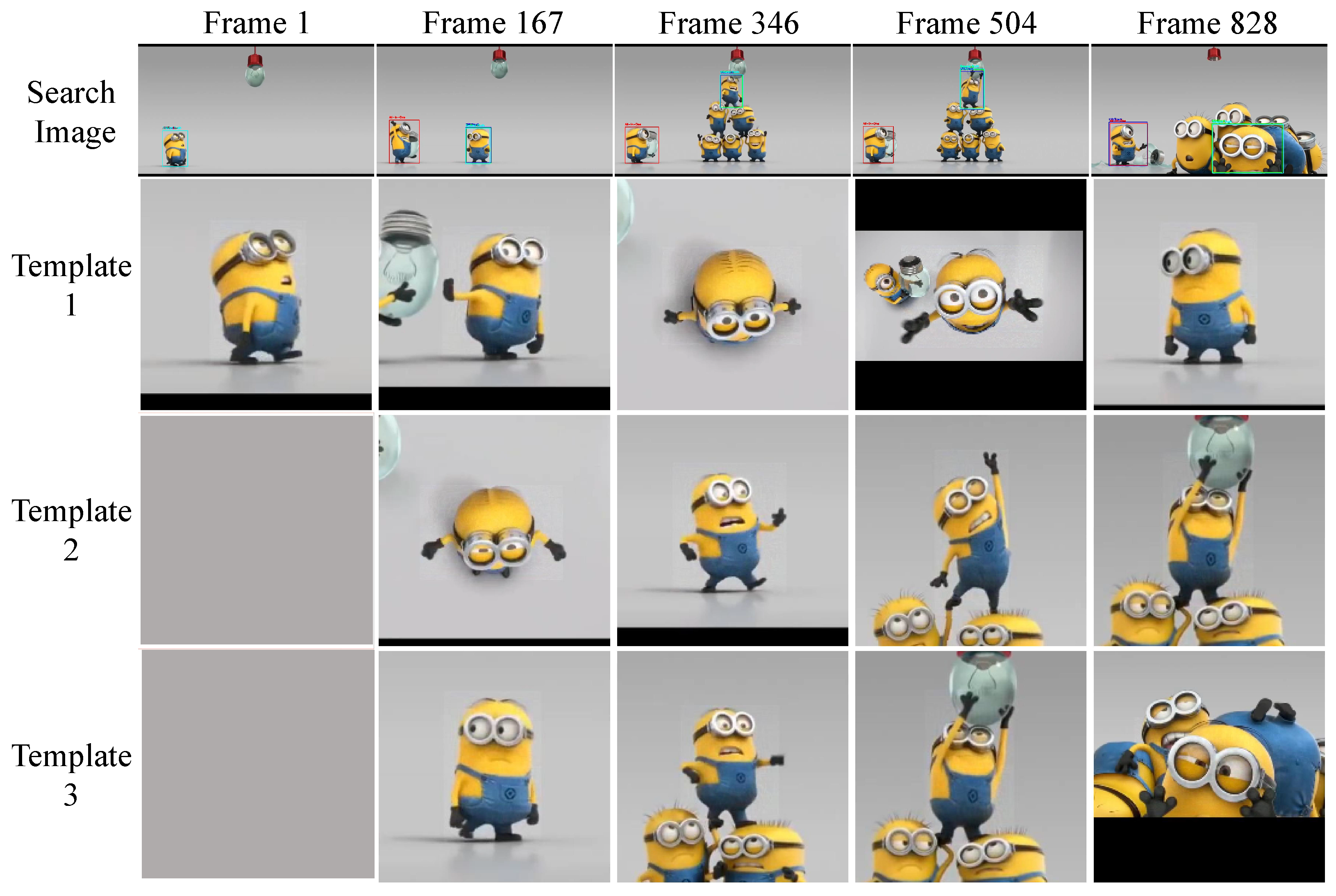

Specifically, we design a lightweight confidence prediction branch for the backbone feature network, as illustrated in Figure 3. This branch consists of five convolutional layers with gradually reduced channel dimensions. It processes the search tokens $\mathbf{X}_s$, which have been jointly modeled through the unified visual–language encoder, and outputs a confidence response map. The maximum value of this response map is taken as the confidence score $c_t$ of the target in the current frame. Within the branch, each intermediate layer is a convolutional layer with BatchNorm and ReLU activation, and the final layer is a convolution producing a single-channel response map. If $c_t$ exceeds a predefined threshold, the corresponding frame is regarded as reliable and its template is added to the high-quality template memory pool $\mathcal{M}$.

To ensure temporal diversity in the memory, we design a segmented balanced sampling strategy. The stored high-confidence templates are evenly partitioned into multiple temporal segments based on their frame indices. From each segment, we select the template corresponding to the middle frame as the representative temporal template. Formally, given the desired number of templates $N$ and the current frame index $t$, we partition the $|\mathcal{M}_t|$ templates held in the memory at time $t$ into $N$ segments and take the middle template of each. The resulting set $\mathcal{T}_t$ serves as the temporal template input for subsequent frames, enabling adaptive modeling of target appearance over time, as illustrated in Figure 4. The CGTM mechanism combines confidence-driven high-quality template selection with structured temporal sampling, significantly enhancing the appearance modeling capability and improving robustness against semantic drift and occlusion.
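The memory update and the segmented sampling can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the segments are formed over the stored entries in frame order (one possible reading of "evenly partitioned"), and the default threshold of 0.7 is the value reported as the default in the ablation study.

```python
def update_memory(memory, frame_index, template, confidence, threshold=0.7):
    """Append (frame_index, template) when the confidence exceeds the threshold."""
    if confidence > threshold:
        memory.append((frame_index, template))
    return memory


def sample_templates(memory, num_templates):
    """Split the memory into equal segments and take each segment's middle entry."""
    size = len(memory)
    if size == 0:
        return []
    if size <= num_templates:
        return list(memory)  # too few entries to segment, so use them all
    picked = []
    for k in range(num_templates):
        start = k * size // num_templates
        end = (k + 1) * size // num_templates  # exclusive upper bound
        picked.append(memory[(start + end - 1) // 2])
    return picked


# Toy run: frame 5 scores exactly 0.7 and is therefore not stored.
memory = []
for frame, score in enumerate([0.9, 0.5, 0.8, 0.95, 0.71, 0.7, 0.99]):
    update_memory(memory, frame, f"template_{frame}", score)
selected = sample_templates(memory, num_templates=3)
```

Sampling one entry per segment spreads the selected templates across the whole tracking history instead of clustering them around the most recent confident frames.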

3.4. Tracking Head

After processing through the unified visual–language modeling module, the feature representations of the search region are reshaped into a 2D spatial feature map and fed into a Fully Convolutional Network (FCN) composed of multiple convolutional layers. This FCN is responsible for both target classification and bounding box regression tasks. Specifically, the regression head predicts the target center probability, local offset, and normalized bounding box dimensions. To enhance the model’s discriminative power and localization accuracy, we adopt a multi-loss joint optimization strategy during training. For classification, we adopt Weighted Focal Loss [35] due to its ability to focus on hard-to-classify examples and mitigate class imbalance, which is crucial in visual–language tracking. Unlike traditional weighted cross-entropy, Focal Loss dynamically down-weights well-classified examples, ensuring that the model concentrates more on challenging instances. The regression branch combines the $L_1$ loss and the Generalized Intersection over Union (GIoU) loss [36] to optimize both overlap quality and regression stability. The overall regression loss is defined as

$$\mathcal{L}_{reg} = \lambda_{L_1} \mathcal{L}_{1} + \lambda_{GIoU} \mathcal{L}_{GIoU},$$

where $\lambda_{L_1}$ and $\lambda_{GIoU}$ are hyperparameters controlling the trade-off between the two loss components. Ultimately, the tracking task is formulated as a multi-task optimization problem [37], combining classification, regression, and semantic alignment objectives. The total loss function for training the model is given by

$$\mathcal{L}_{total} = \mathcal{L}_{cls} + \mathcal{L}_{reg} + \lambda \mathcal{L}_{SACA},$$

where $\mathcal{L}_{cls}$ denotes the classification loss, $\mathcal{L}_{SACA}$ represents the Semantically Aware Contrastive Alignment loss, and $\lambda$ is a balancing coefficient.
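To make the regression objective concrete, the sketch below evaluates a weighted combination of an L1 term and a GIoU term for a single pair of boxes. It assumes corner-format boxes `(x1, y1, x2, y2)` with positive area and an L1 term averaged over the four coordinates; the two weights are passed in explicitly because their values are not given here.

```python
def giou(box_a, box_b):
    """Generalized IoU for two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    # Area of the smallest axis-aligned box enclosing both inputs.
    hull = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    return inter / union - (hull - union) / hull


def regression_loss(pred, target, weight_l1, weight_giou):
    """Weighted sum of the mean absolute coordinate error and (1 - GIoU)."""
    l1 = sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)
    return weight_l1 * l1 + weight_giou * (1.0 - giou(pred, target))


# Toy example: the prediction is shifted one unit to the left of the target.
example = regression_loss((0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 3.0, 2.0), 1.0, 1.0)
```

Unlike plain IoU, the GIoU term stays informative for non-overlapping boxes, since the enclosing-box penalty still varies with their distance.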

4. Experiment

4.1. Implementation Details

4.1.1. Model

The proposed SATrack framework is built upon the ViT-Base backbone [38], which consists of 12 stacked Transformer encoder layers. Each encoder layer includes two sub-modules: a Multi-Head Self-Attention mechanism and a Feed-Forward Network, both equipped with residual connections and Layer Normalization (LN) to enhance training stability. To accelerate convergence and improve tracking performance, we initialize the backbone with pretrained weights from ODTrack [31]. Regarding input configurations, the template and search region are cropped based on the ground truth bounding box with scale factors of

and

, respectively. These crops are then resized to spatial resolutions of

for the template and

for the search image. For the language modality, the input textual descriptions are tokenized using the BERT-base-uncased tokenizer [39] and encoded into fixed-dimensional embeddings. These embeddings are integrated with visual tokens for multimodal representation learning throughout the tracking process.
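The cropping step can be illustrated with a short sketch. It assumes the convention common to many Transformer trackers, in which the crop is a square centered on the box with side length equal to the square root of the box area times the scale factor; this convention, the function names, and the numbers in the example are assumptions rather than settings from this paper.

```python
import math


def crop_window(box, scale_factor):
    """Square crop centred on `box` = (x, y, w, h); returns (left, top, side)."""
    x, y, w, h = box
    side = math.ceil(math.sqrt(w * h) * scale_factor)
    center_x = x + 0.5 * w
    center_y = y + 0.5 * h
    return center_x - 0.5 * side, center_y - 0.5 * side, side


def resize_ratio(side, output_size):
    """Factor by which the crop is scaled to reach the network input size."""
    return output_size / side


# Hypothetical values: a 16 x 4 box, scale factor 2, and a 128-pixel input.
left, top, side = crop_window((10.0, 20.0, 16.0, 4.0), scale_factor=2.0)
ratio = resize_ratio(side, output_size=128)
```

Using the square root of the area makes the crop size insensitive to the aspect ratio of the target, so elongated objects do not produce disproportionately large windows.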

4.1.2. Train

All experiments are conducted on a server running Ubuntu OS with three NVIDIA TITAN RTX GPUs. Our model is implemented using the PyTorch 1.9.0 framework and trained on three large-scale visual–language tracking datasets: LaSOT [40], TNL2K [41], and OTB99-L [42], aiming to improve the generalization ability across diverse scenarios. We adopt the AdamW optimizer [43] with an initial learning rate of

. The model is trained for a total of 150 epochs. A weight decay of

is applied starting from epoch 125 to enhance generalization. The hyperparameters in the loss function follow the settings in [44], where the weights for the regression loss are set as

and

, and the temperature coefficient for contrastive learning is

. The training batch size is fixed at 4. For evaluation, we follow the one-pass evaluation protocol and report three commonly used metrics: Precision (P), Normalized Precision ($P_{Norm}$), and Success (AUC).
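For readers unfamiliar with these metrics, the sketch below shows how Success (AUC) and Precision are commonly computed from per-frame results under one-pass evaluation. The 21 evenly spaced overlap thresholds and the 20-pixel center-error threshold follow widespread benchmark conventions and are assumptions here, as are the function names.

```python
def success_auc(ious, num_thresholds=21):
    """Mean success rate over overlap thresholds evenly spaced in [0, 1]."""
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    rates = [sum(iou > t for iou in ious) / len(ious) for t in thresholds]
    return sum(rates) / len(rates)


def precision(center_errors, pixel_threshold=20.0):
    """Fraction of frames whose center location error is within the threshold."""
    return sum(e <= pixel_threshold for e in center_errors) / len(center_errors)


# Toy sequence of four frames: per-frame IoU and center error in pixels.
auc = success_auc([0.9, 0.7, 0.4, 0.0])
prec = precision([3.0, 8.0, 19.0, 45.0])
```

Normalized Precision follows the same idea as `precision`, with the center error divided by the ground-truth box size before thresholding.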

4.2. State-of-the-Art Comparison

4.2.1. LaSOT

LaSOT [40] is one of the most widely used benchmarks in the visual tracking community. It is specifically designed for long-term object tracking and includes a variety of challenging scenarios such as occlusion, scale variation, and illumination changes. The dataset consists of 1400 video sequences, with 1120 used for training and 280 for testing.

Table 1 presents a systematic comparison between our proposed SATrack and several state-of-the-art visual tracking methods. Since LaSOT is a purely visual benchmark, where visual cues are often sufficient to distinguish the target, the additional language modality may introduce redundancy or even noise when the textual description is not highly discriminative. As a result, SATrack does not yet surpass some vision-only trackers, which are heavily optimized for purely visual tasks. However, it is noteworthy that SATrack achieves performance highly competitive with the best existing visual–language tracker, All-in-One [17].

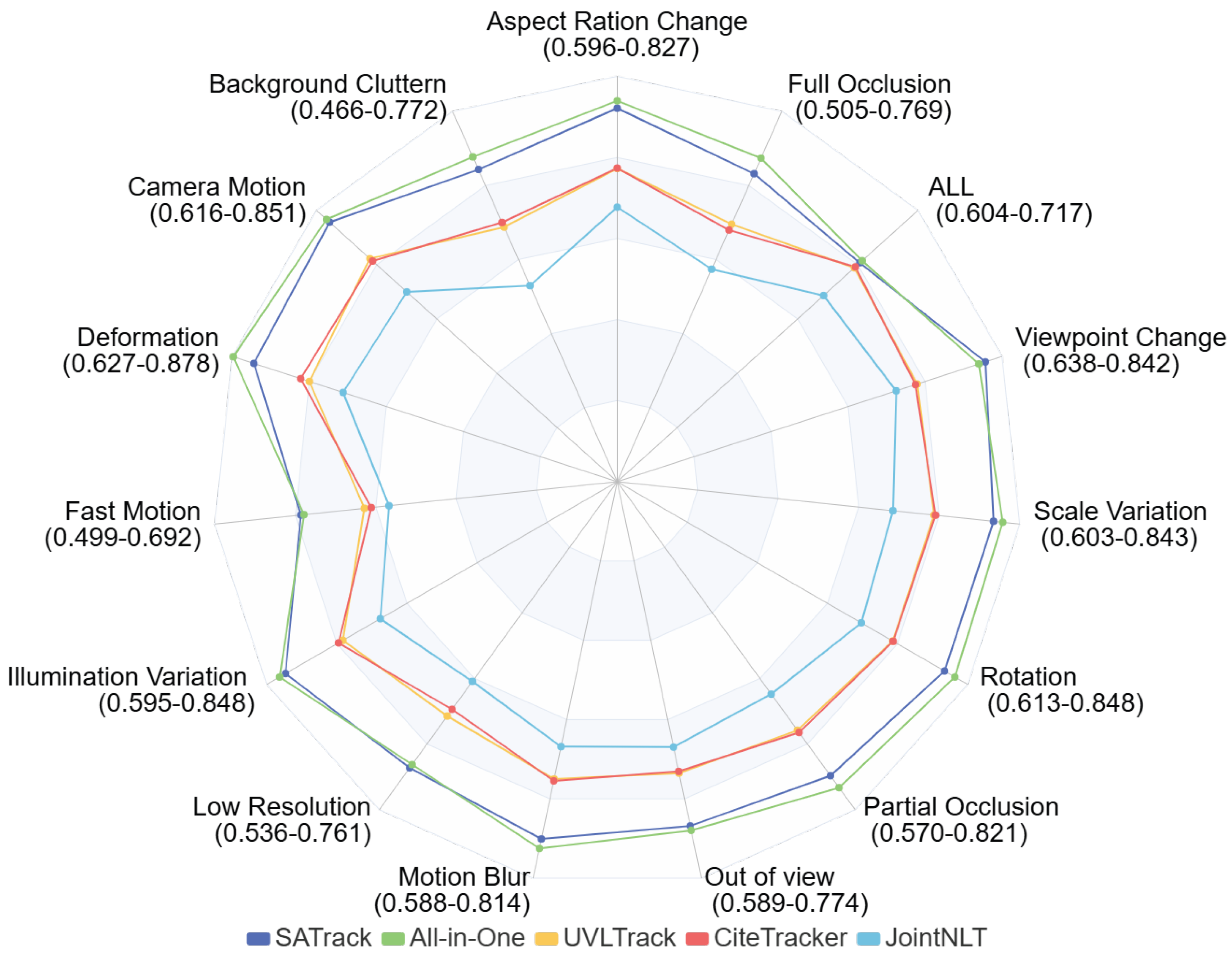

Further analysis, as illustrated in Figure 5, demonstrates that SATrack achieves leading results on specific challenging attributes, such as Fast Motion, Low Resolution, and Viewpoint Change, highlighting its robustness under dynamic and complex scenarios. It is also worth noting that the All-in-One model is trained using a comprehensive multi-dataset strategy involving eight datasets, including LaSOT, TNL2K, and OTB99-L. In contrast, SATrack is trained on a more limited set of data, which further underscores the strong potential of our method under constrained supervision.

4.2.2. TNL2K

TNL2K [41] is a large-scale benchmark specifically designed for visual–language tracking tasks, with a key focus on tightly coupling natural language descriptions with video sequences. The dataset contains 2000 videos (1300 for training and 700 for testing) spanning a wide range of real-world and synthetic scenarios, including cartoon clips and virtual game scenes. This high diversity makes TNL2K particularly challenging. The sequences encompass common tracking difficulties such as heavy occlusion, fast motion, and complex backgrounds. Each video is accompanied by detailed natural language annotations that describe the target’s appearance, semantic attributes, and contextual relationships, providing a solid foundation for developing and evaluating multimodal fusion approaches. As shown in Table 1, we compare our proposed SATrack with several state-of-the-art visual–language tracking methods on the TNL2K test set. Compared with the current best-performing method UVLTrack [32], SATrack improves the Success (AUC) metric by 3.0 percentage points, reaching 65.7% and setting a new state of the art on this benchmark.

Attribute-based performance analysis, illustrated in Figure 6, further reveals that SATrack consistently outperforms existing approaches across most challenge attributes. This performance gain can be attributed to SATrack’s effective integration of semantic understanding and appearance modeling. In particular, the method demonstrates enhanced robustness in handling complex scenarios involving occlusion, deformation, and illumination variation.

4.2.3. OTB99-L

OTB99-L [42] is an extension of the classic OTB99 benchmark [54], tailored for visual–language tracking tasks. This version augments the original 99 video sequences (51 for training and 48 for evaluation) with natural language annotations that provide detailed descriptions of target objects and their semantic context, thereby enabling multimodal evaluation. OTB99-L retains the original dataset’s challenges, such as scale variation, illumination changes, and fast motion, while introducing additional difficulties related to cross-modal alignment due to the inclusion of language-based descriptions. As such, it serves as a critical benchmark for advancing research in visual–language tracking. On this dataset, we conduct a comprehensive comparison between our proposed SATrack and other state-of-the-art methods listed in Table 1. The experimental results demonstrate that SATrack achieves the best performance on both the Success (AUC) metric (71.4%) and the Precision (P) metric (93.7%). These results highlight the robustness and strong generalization ability of SATrack under multimodal tracking scenarios.

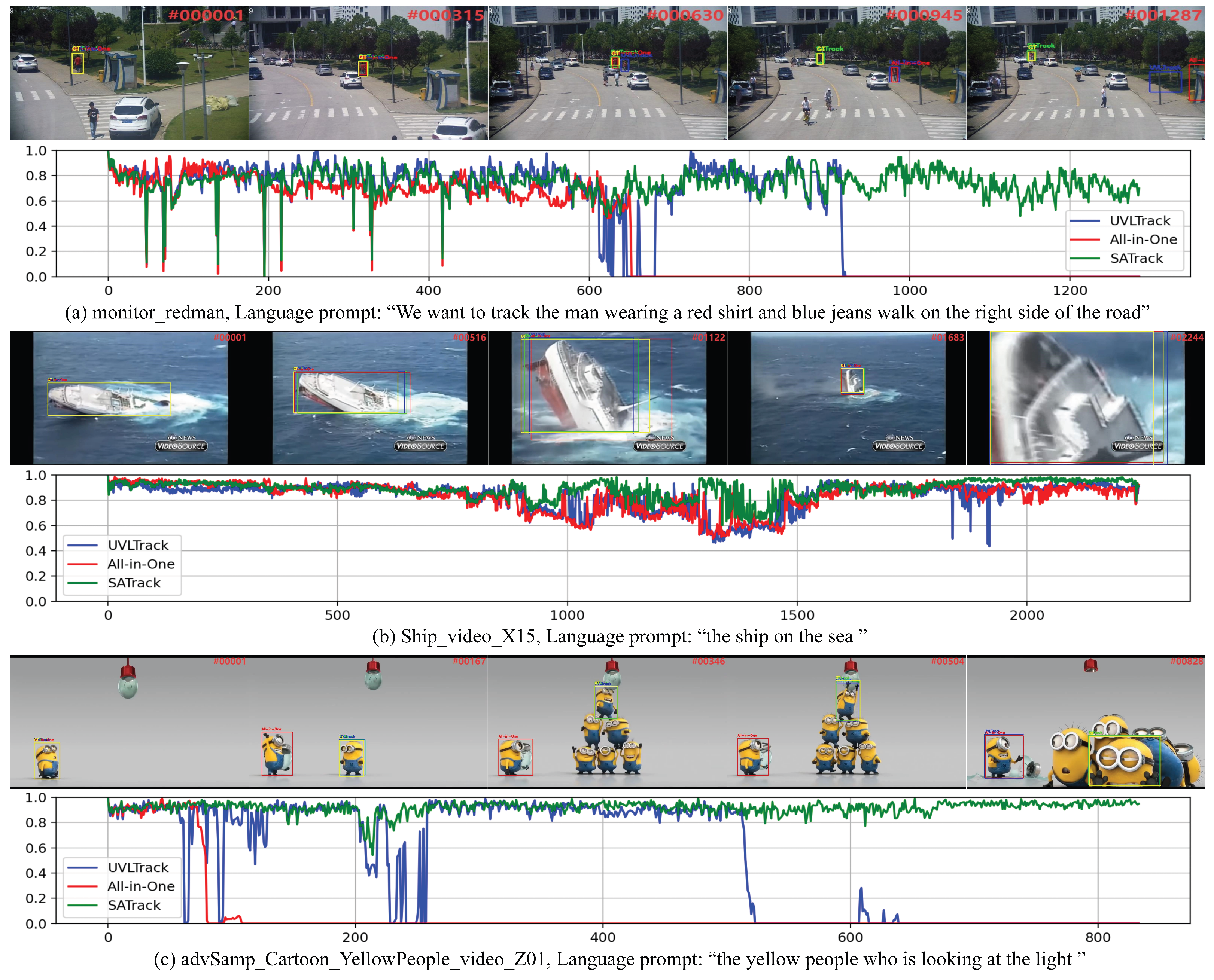

4.2.4. Qualitative Performance

As illustrated in Figure 7, we present a qualitative comparison of our proposed SATrack against two state-of-the-art visual–language tracking methods, UVLTrack [32] and All-in-One [17], on three representative video sequences from the TNL2K test set. These sequences encompass various challenging scenarios, including the presence of distractor objects, background clutter, appearance variations, and occlusions. The visualization results demonstrate that SATrack exhibits superior robustness and tracking accuracy under complex conditions. For example, in the monitor redman sequence (first row of Figure 7), UVLTrack fails to maintain accurate localization once occlusion and distractors appear. In contrast, SATrack continues to track the target precisely and stably, highlighting its strong adaptability and robustness in real-world environments.

4.3. Ablation Experiment

4.3.1. Effectiveness of SACA Module

To evaluate the practical contribution of the proposed SACA module, we conduct a series of ablation experiments on TNL2K, with results summarized in Table 2. The results demonstrate that incorporating the SACA module consistently improves performance across all key metrics, including Success (AUC), Precision (P), and Normalized Precision ($P_{Norm}$), highlighting its effectiveness in enhancing cross-modal semantic consistency and feature discriminability.

From the perspective of model complexity, traditional contrastive alignment methods typically rely on additional cross-modal projection layers to map visual and linguistic features into a shared embedding space. For example, adopting the alignment approach used in All-in-One [17] significantly increases the parameter count to 259.2 M. In contrast, the SACA module leverages the semantic relationships already encoded in the attention distributions of the Transformer, and constructs a contrastive loss guided by attention-derived semantic distances, without introducing any additional parameters. This design enables implicit alignment that is both lightweight and effective.

Beyond complexity considerations, we also examine the robustness of the SACA module through a sensitivity study on its loss weight

. Specifically, we vary

in

while keeping other training settings unchanged, and evaluate the results on TNL2K. As summarized in Table 3, a moderate weight (around

) achieves the best balance between leveraging the contrastive alignment signal and maintaining stable optimization of the primary classification/regression objectives. Smaller values under-utilize the semantic alignment supervision, while larger values (e.g., ≥0.5) tend to degrade performance due to over-emphasis on the auxiliary contrastive loss.

In summary, the SACA module is a lightweight yet effective component of SATrack, substantially improving cross-modal alignment while introducing negligible computational overhead.

4.3.2. Impact of Token Retention Ratio in CMTF Strategy

We further investigate the influence of the token retention ratio in the CMTF strategy on overall tracking performance. As introduced earlier, the CMTF strategy aims to filter out less relevant visual tokens based on attention responses prior to semantic alignment, thereby reducing redundancy and focusing on critical regions. To this end, we conduct ablation experiments on TNL2K by varying the retention ratio $\rho$, which determines the proportion of visual tokens retained after filtering. The results are summarized in Table 4.

As shown in the results, a moderate degree of token filtering brings consistent improvements across all metrics, suggesting that removing low-relevance tokens guided by semantic attention helps mitigate redundancy and enhances alignment quality. However, overly aggressive filtering slightly degrades performance, likely due to the removal of informative tokens critical to target representation. Overall, the CMTF strategy demonstrates robustness and stability, and retaining 90% of tokens appears to offer the best balance between efficiency and performance.

4.3.3. Effect of Confidence Threshold in CGTM Mechanism

To assess the influence of the confidence threshold in the CGTM mechanism, we conducted a systematic ablation study on LaSOT. As described earlier, the CGTM mechanism updates the long-term memory pool of templates by evaluating the matching confidence of each frame. A confidence score above a predefined threshold determines whether the corresponding template is retained for future use, thus enabling key-frame preservation and suppressing noisy samples. We evaluate the tracking performance under different confidence thresholds, and the results are summarized in Table 5.

From the results, we observe that setting the threshold too high or too low adversely affects performance. A high threshold (e.g., 0.8) enforces strict filtering, ensuring the quality of retained templates but limiting memory diversity by excluding potentially useful semantic instances. Conversely, a low threshold (e.g., 0.6) allows noisy templates to enter the memory pool, degrading the robustness of the target representation. The best overall performance is achieved with a threshold of 0.7, which strikes a favorable balance between accuracy and robustness. Therefore, we adopt 0.7 as the default threshold for the CGTM mechanism in our main experiments.

4.4. Effect of the Template Memory Size

To evaluate the effect of the template memory size in the CGTM module, we conducted ablation experiments on the OTB99-L benchmark. In this study, we varied the memory size N (set to 2, 3, and 4), while keeping all other training and inference settings unchanged. The results are reported in terms of AUC, Precision, and Normalized Precision, as shown in Table 6.

As shown in Table 6, increasing the memory size from 2 to 3 consistently improves all key metrics (e.g., AUC rises from 71.32% to 72.08%, Precision increases by 0.61 points, and Normalized Precision improves by 1.31 points). This indicates that a moderate increase in memory size allows the tracker to maintain more high-quality templates, thus better adapting to appearance variations. However, when the memory size is further increased to 4, the overall performance slightly decreases (AUC drops from 72.08% to 71.59%). We hypothesize that a larger memory pool may introduce redundant or outdated templates, thereby reducing representativeness and introducing noise. In summary, a memory size of N = 3 achieves the best trade-off between accuracy and robustness, and is therefore adopted as our default configuration.

4.5. Speed Analysis

Real-time capability is a critical performance metric for visual tracking in practical applications. As shown in Table 1, our proposed model achieves an average inference speed of approximately 53 FPS, without requiring any dedicated acceleration strategies. This significantly outperforms most existing real-time visual–language tracking methods [11,46], and exceeds the standard video frame rate requirement [55]. These results demonstrate the strong practical applicability and deployment potential of our framework.

5. Conclusions

In this work, we presented SATrack, a novel Semantic-Aware Alignment framework for visual–language tracking. SATrack is designed to tackle three key challenges in multimodal tracking: semantic ambiguity, background distractions, and temporal appearance variations. To this end, we integrate three complementary modules in a unified architecture: (i) Semantically Aware Contrastive Alignment (SACA), which improves cross-modal discriminability by mining semantically hard negatives; (ii) Semantics-Guided Token Filtering (CMTF), which enhances robustness and efficiency by filtering out irrelevant tokens; and (iii) Confidence-Guided Template Memory (CGTM), which adaptively updates reliable temporal templates to mitigate template drift.

Extensive experiments on three benchmarks (TNL2K, LaSOT, and OTB99-L) demonstrate the effectiveness of SATrack, achieving state-of-the-art performance on TNL2K and competitive results on other datasets. Ablation studies further validate the contribution of each module, while statistical analyses confirm the reliability of the observed improvements.

Looking ahead, we will extend SATrack to broader scenarios, including UAV and edge-device applications, by exploring lightweight deployment strategies and further strengthening cross-modal semantic consistency. We believe SATrack not only advances the methodological foundations of visual–language tracking but also provides practical insights for deploying multimodal trackers in real-world, resource-constrained environments.

Author Contributions

Y.T. and L.X. wrote the main manuscript text and supervised the study; Z.L. contributed to methodology development and manuscript editing; L.J. and C.C. prepared the figures and performed data visualization; H.Z. collected and curated the data. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grants 62273243, 62473341, and 62203480.

Institutional Review Board Statement

The research presented in this paper does not involve any human or animal subjects. All data used in this study are publicly available. Therefore, no ethical approval or informed consent was required for this research.

Data Availability Statement

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

Author Liang Jiang was employed by the State Grid Henan Electric Power Company. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Zhu, H.; Lu, Q.; Xue, L.; Zhang, P.; Yuan, G. Vision-Language Tracking With CLIP and Interactive Prompt Learning. IEEE Trans. Intell. Transp. Syst. 2024, 26, 3659–3670.

2. Ge, J.; Chen, X.; Cao, J.; Zhu, X.; Liu, B. Beyond visual cues: Synchronously exploring target-centric semantics for vision-language tracking. arXiv 2023, arXiv:2311.17085.

3. Zhao, H.; Wang, X.; Wang, D.; Lu, H.; Ruan, X. Transformer vision-language tracking via proxy token guided cross-modal fusion. Pattern Recognit. Lett. 2023, 168, 10–16.

4. Zhang, C.; Huang, G.; Liu, L.; Huang, S.; Yang, Y.; Wan, X.; Ge, S.; Tao, D. WebUAV-3M: A benchmark for unveiling the power of million-scale deep UAV tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 9186–9205.

5. Zhai, J.T.; Zhang, Q.; Wu, T.; Chen, X.Y.; Liu, J.J.; Cheng, M.M. SLAN: Self-Locator Aided Network for Vision-Language Understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 21949–21958.

6. Zhang, H.; Yang, X.; Wang, X.; Fu, W.; Zhong, B.; Zhang, J. SAM-Assisted Temporal-Location Enhanced Transformer Segmentation for Object Tracking with Online Motion Inference. Neurocomputing 2025, 617, 128914.

7. Wei, X.; Bai, Y.; Zheng, Y.; Shi, D.; Gong, Y. Autoregressive Visual Tracking. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 9697–9706.

8. Liang, Y.; Huang, F.; Qiu, Z.; Shu, X.; Liu, Q.; Yuan, D. Uncertainty and diversity-based active learning for UAV tracking. Neurocomputing 2025, 639, 130265.

9. Lu, Q.; Yuan, G.; Li, C.; Zhu, H.; Qin, X. Natural Language Guided Attention Mixer for Object Tracking. In Proceedings of the 2023 4th International Conference on Information Science, Parallel and Distributed Systems (ISPDS), Guangzhou, China, 14–16 July 2023; IEEE: New York, NY, USA, 2023; pp. 160–164.

10. Wu, D.; Han, W.; Wang, T.; Dong, X.; Zhang, X.; Shen, J. Referring Multi-Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14633–14642.

11. Feng, Q.; Ablavsky, V.; Bai, Q.; Sclaroff, S. Siamese Natural Language Tracker: Tracking by Natural Language Descriptions With Siamese Trackers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 5851–5860.

12. Wang, X.; Shu, X.; Zhang, Z.; Jiang, B.; Wang, Y.; Tian, Y.; Wu, F. Towards More Flexible and Accurate Object Tracking With Natural Language: Algorithms and Benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13763–13773.

13. Li, X.; Huang, Y.; He, Z.; Wang, Y.; Lu, H.; Yang, M.H. CiteTracker: Correlating Image and Text for Visual Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

14. Wang, R.; Tang, Z.; Zhou, Q.; Liu, X.; Hui, T.; Tan, Q.; Liu, S. Unified Transformer with Isomorphic Branches for Natural Language Tracking. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4529–4541.

15. Ma, D.; Wu, X. Capsule-based object tracking with natural language specification. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 20–24 October 2021; pp. 1948–1956.

16. Ge, J.; Cao, J.; Zhu, X.; Zhang, X.; Liu, C.; Wang, K.; Liu, B. Consistencies are All You Need for Semi-supervised Vision-Language Tracking. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024.

17. Zhang, C.; Sun, X.; Yang, Y.; Liu, L.; Liu, Q.; Zhou, X.; Wang, Y. All in one: Exploring unified vision-language tracking with multi-modal alignment. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 5552–5561.

18. Zhang, G.; Zhong, B.; Liang, Q.; Mo, Z.; Li, N.; Song, S. One-Stream Stepwise Decreasing for Vision-Language Tracking. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 9053–9063.

19. Zhang, H.; Wang, J.; Zhang, J.; Zhang, T.; Zhong, B. One-Stream Vision-Language Memory Network for Object Tracking; IEEE: New York, NY, USA, 2023; Volume 26, pp. 1720–1730.

20. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

21. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6931–6939.

22. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the Computer Vision – ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 October 2016; Hua, G., Jégou, H., Eds.; Springer: Cham, Switzerland, 2016; pp. 850–865.

23. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

24. Hu, W.; Wang, Q.; Zhang, L.; Bertinetto, L.; Torr, P.H. SiamMask: A Framework for Fast Online Object Tracking and Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3072–3089.

25. Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135.

26. Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatio-temporal transformer for visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10448–10457.

27. Lin, L.; Fan, H.; Xu, Y.; Ling, H. SwinTrack: A Simple and Strong Baseline for Transformer Tracking. Adv. Neural Inf. Process. Syst. 2022, 35, 16743–16754.

28. Hu, X.; Zhong, B.; Liang, Q.; Zhang, S.; Li, N.; Li, X.; Ji, R. Transformer Tracking via Frequency Fusion. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 1020–1031.

29. Hu, X.; Zhong, B.; Liang, Q.; Zhang, S.; Li, N.; Li, X. Toward Modalities Correlation for RGB-T Tracking. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 9102–9111.

30. Bai, Y.; Zhao, Z.; Gong, Y.; Wei, X. ARTrackV2: Prompting Autoregressive Tracker Where to Look and How to Describe. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19048–19057.

31. Zheng, Y.; Zhong, B.; Liang, Q.; Mo, Z.; Zhang, S.; Li, X. ODTrack: Online dense temporal token learning for visual tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 22–25 February 2024; Volume 38, pp. 7588–7596.

32. Ma, Y.; Tang, Y.; Yang, W.; Zhang, T.; Zhang, J.; Kang, M. Unifying Visual and Vision-Language Tracking via Contrastive Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 22–25 February 2024; Volume 38, pp. 4107–4116.

33. Guo, M.; Zhang, Z.; Fan, H.; Jing, L. Divert more attention to vision-language tracking. Adv. Neural Inf. Process. Syst. 2022, 35, 4446–4460.

34. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. arXiv 2020, arXiv:2002.05709.

35. Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

36. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

37. Zhang, Y.; Yang, Q. A Survey on Multi-Task Learning. IEEE Trans. Knowl. Data Eng. 2022, 34, 5586–5609.

38. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

39. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, p. 2.

40. Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5374–5383.

41. Chen, B.; Li, P.; Bai, L.; Qiao, L.; Shen, Q.; Li, B.; Gan, W.; Wu, W.; Ouyang, W. Backbone is all your need: A simplified architecture for visual object tracking. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 375–392.

42. Li, Z.; Tao, R.; Gavves, E.; Snoek, C.G.M.; Smeulders, A.W. Tracking by Natural Language Specification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

43. Li, G.; Duan, N.; Fang, Y.; Gong, M.; Jiang, D. Unicoder-VL: A universal encoder for vision and language by cross-modal pre-training. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11336–11344.

44. Wang, X.; Huang, Q.; Celikyilmaz, A.; Gao, J.; Shen, D.; Wang, Y.F.; Wang, W.Y.; Zhang, L. Reinforced cross-modal matching and self-supervised imitation learning for vision-language navigation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6629–6638.

45. Shao, Y.; He, S.; Ye, Q.; Feng, Y.; Luo, W.; Chen, J. Context-Aware Integration of Language and Visual References for Natural Language Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19208–19217.

46. Zhou, L.; Zhou, Z.; Mao, K.; He, Z. Joint visual grounding and tracking with natural language specification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23151–23160.

47. Feng, Q.; Ablavsky, V.; Bai, Q.; Li, G.; Sclaroff, S. Real-time visual object tracking with natural language description. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 700–709.

48. Chenlong, X.; Bineng, Z.; Qihua, L.; Yaozong, Z.; Guorong, L.; Shuxiang, S. Less is more: Token context-aware learning for object tracking. arXiv 2025, arXiv:2501.00758.

49. Chen, X.; Peng, H.; Wang, D.; Lu, H.; Hu, H. SeqTrack: Sequence to sequence learning for visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 14572–14581.

50. Xie, F.; Chu, L.; Li, J.; Lu, Y.; Ma, C. VideoTrack: Learning to track objects via video transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22826–22835.

51. Gao, S.; Zhou, C.; Ma, C.; Wang, X.; Yuan, J. AiATrack: Attention in attention for transformer visual tracking. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany; pp. 146–164.

52. Ye, B.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Joint feature learning and relation modeling for tracking: A one-stream framework. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany; pp. 341–357.

53. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese Box Adaptive Network for Visual Tracking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6667–6676.

54. Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

55. Tekalp, A.M. Digital Video Processing; Prentice Hall Press: Hoboken, NJ, USA, 2015.

Figure 1.

Comparison of visual–language tracking paradigms: (a) Existing methods focus on optimizing multimodal interaction but often neglect fine-grained semantic alignment, which may lead to tracking failure under semantic ambiguity or appearance variation. (b) Our SATrack framework explicitly incorporates semantically aware alignment, addressing cross-modal semantic drift and improving robustness in challenging scenarios.

Figure 2.

(a) Structure of the Semantics-Guided Token Filtering module. (b) Visualization of search token selection. The white regions denote the discarded tokens. After token selection in the 4th, 7th, and 11th layers, tokens containing background information are gradually discarded, while the target tokens are retained.

Figure 3.

The overall architecture of SATrack consists of a patch embedding layer, a text tokenizer, a Semantically Aware Contrastive Alignment module, a Visual–Language Unified Modeling module with Semantics-Guided Token Filtering module, a Confidence-Guided Template Memory module, and a tracking head.

Figure 4.

Schematic of the memory template set while tracking the TNL2K video sequence “advSamp_Cartoon_YellowPeople_video_Z01”. The gray image blocks are placeholders, indicating that the number of stored memory templates has not yet reached its maximum value.

Figure 5.

Attribute-based evaluation on the LaSOT test set. AUC score is used to rank different trackers.

Figure 6.

Attribute-based evaluation on the TNL2K test set. AUC score is used to rank different trackers. Best viewed by zooming in.

Figure 7.

Qualitative comparison on three challenging video sequences from the TNL2K test set. The top row shows the tracked target and bounding box, the middle row shows the IoU score over time, and the bottom row presents the language prompt. SATrack maintains accurate localization even under challenging conditions such as occlusions and distractors. “#” indicates the frame number of the video. Best viewed by zooming in.

Table 1.

Comparison of SATrack with state-of-the-art trackers on TNL2K, LaSOT, and OTB99-L. Metrics include Area Under Curve (AUC), Precision (P), and Normalized Precision (Norm. P). The best and second-best results are highlighted in red and blue, respectively.

| Type | Method | TNL2K AUC | TNL2K P | TNL2K Norm. P | LaSOT AUC | LaSOT P | LaSOT Norm. P | OTB99-L AUC | OTB99-L P | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| Vision–Language | SATrack | 65.8 | 70.6 | 83.6 | 71.0 | 78.2 | 81.0 | 72.1 | 94.5 | 53 |
| | UVLTrack [32] | 62.7 | 65.4 | - | 69.4 | 74.9 | 63.5 | 71.1 | 92.0 | 57 |
| | OSDT [18] | 59.3 | 59.3 | 59.3 | 64.3 | 64.3 | 64.3 | 66.2 | 86.7 | 67 |
| | QueryNLT [45] | 57.8 | 58.7 | 75.6 | 59.9 | 63.5 | 69.6 | 66.7 | 88.2 | - |
| | All-in-One [17] | 55.3 | 57.2 | - | 71.7 | 78.5 | 82.4 | 71.0 | 93.0 | 60 |
| | JointNLT [46] | 56.9 | 58.1 | - | 60.4 | 63.6 | - | 65.3 | 85.6 | 39 |
| | CiteTracker [13] | 57.7 | 59.6 | - | 64.3 | 73.4 | 68.6 | 66.2 | 86.7 | - |
| | SNLT [11] | 27.6 | 41.9 | - | 54.0 | 57.6 | - | 66.6 | 80.4 | 50 |
| | TNL2K-II [12] | 42.0 | 42.0 | 50.0 | 51.3 | 55.4 | - | 68.0 | 88.0 | - |
| | RTTNLD [47] | 25.0 | 27.0 | - | 35.0 | 35.0 | 43.0 | 61.0 | 79.0 | 30 |
| Vision-Only | LMTrack [48] | - | - | - | 69.8 | 76.3 | 79.2 | - | - | 47 |
| | ODTrack [31] | - | - | - | 73.2 | 80.6 | 83.2 | - | - | 73 |
| | SeqTrack [49] | 56.4 | - | - | 71.5 | 77.8 | 81.1 | - | - | 15 |
| | VideoTrack [50] | - | - | - | 70.2 | 76.4 | - | - | - | 69 |
| | AiaTrack [51] | - | - | - | 69.0 | 73.8 | 79.4 | - | - | 38 |
| | OSTrack [52] | 54.3 | - | - | 69.1 | 75.2 | 78.7 | - | - | 105 |
| | TransT [25] | 50.7 | 51.7 | 57.1 | 64.9 | 69.0 | 73.8 | - | - | 50 |
| | SiamBAN [53] | 41.0 | 41.7 | 48.5 | 48.5 | 54.1 | 59.8 | - | - | 40 |
| | SiamRPN++ [23] | 41.3 | 41.2 | 48.2 | 49.4 | 49.1 | 56.9 | - | - | 35 |
| | SiamFC [22] | 29.5 | 28.6 | 33.6 | 35.0 | 39.3 | 42.0 | - | - | 86 |

Table 2.

Ablation study of the SACA module on TNL2K. Three settings are compared: (1) no alignment mechanism; (2) conventional alignment based on cosine similarity; and (3) the proposed SACA module. The best result in each column is highlighted in red.

| Method | AUC | Precision | Norm. Precision | Params (M) |
|---|---|---|---|---|
| (1) No alignment | 65.4 | 70.1 | 83.2 | 116.9 |
| (2) Cosine similarity | 65.5 | 70.2 | 83.3 | 259.2 |
| (3) SACA | 65.8 | 70.6 | 83.6 | 116.9 |

Table 3.

Sensitivity study of the SACA loss weight on TNL2K. The best result in each column is highlighted in red.

| SACA Loss Weight | AUC | Precision | Norm. Precision |
|---|---|---|---|
| 0 | 65.4 | 70.1 | 83.2 |
| 0.1 | 65.6 | 70.4 | 83.3 |
| 0.2 | 65.8 | 70.6 | 83.6 |
| 0.3 | 65.6 | 70.5 | 83.4 |
| 0.5 | 65.3 | 70.2 | 83.2 |

Table 4.

Effect of the token retention ratio in the CMTF strategy on the TNL2K test set. The best result in each column is highlighted in red.

| Retention Ratio | AUC | Precision | Norm. Precision |
|---|---|---|---|
| 1.0 (no filtering) | 65.63 | 70.42 | 83.33 |
| 0.90 | 65.80 | 70.62 | 83.57 |
| 0.80 | 65.56 | 70.53 | 83.37 |

Table 5.

Ablation study on different confidence thresholds in the CGTM mechanism, evaluated on LaSOT. The best result in each column is highlighted in red.

| Threshold | AUC | Precision | Norm. Precision |
|---|---|---|---|
| 0.8 | 70.76 | 77.97 | 80.81 |
| 0.7 | 71.03 | 78.21 | 81.04 |
| 0.6 | 70.23 | 77.71 | 80.36 |

Table 6.

Impact of template memory size N on tracking performance, evaluated on OTB99-L. The best result in each column is highlighted in red.

| Memory Size N | AUC | Precision | Norm. Precision |
|---|---|---|---|
| 2 | 71.32 | 93.86 | 86.86 |
| 3 | 72.08 | 94.47 | 88.17 |
| 4 | 71.59 | 93.81 | 87.88 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).