DAPO: Mobility-Aware Joint Optimization of Model Partitioning and Task Offloading for Edge LLM Inference

Abstract

1. Introduction

- (1)

- We formulate the joint optimization problem of model partitioning and multi-server offloading in mobility-aware LLM inference as a Mixed-Integer Nonlinear Programming (MINLP) problem, explicitly modeling user mobility, multi-user competition, and system constraints.

- (2)

- We design the DAPO framework based on the DDPG algorithm, which maps continuous policy outputs into valid discrete actions, thereby effectively handling the high-dimensional hybrid action space of partitioning and offloading decisions.

- (3)

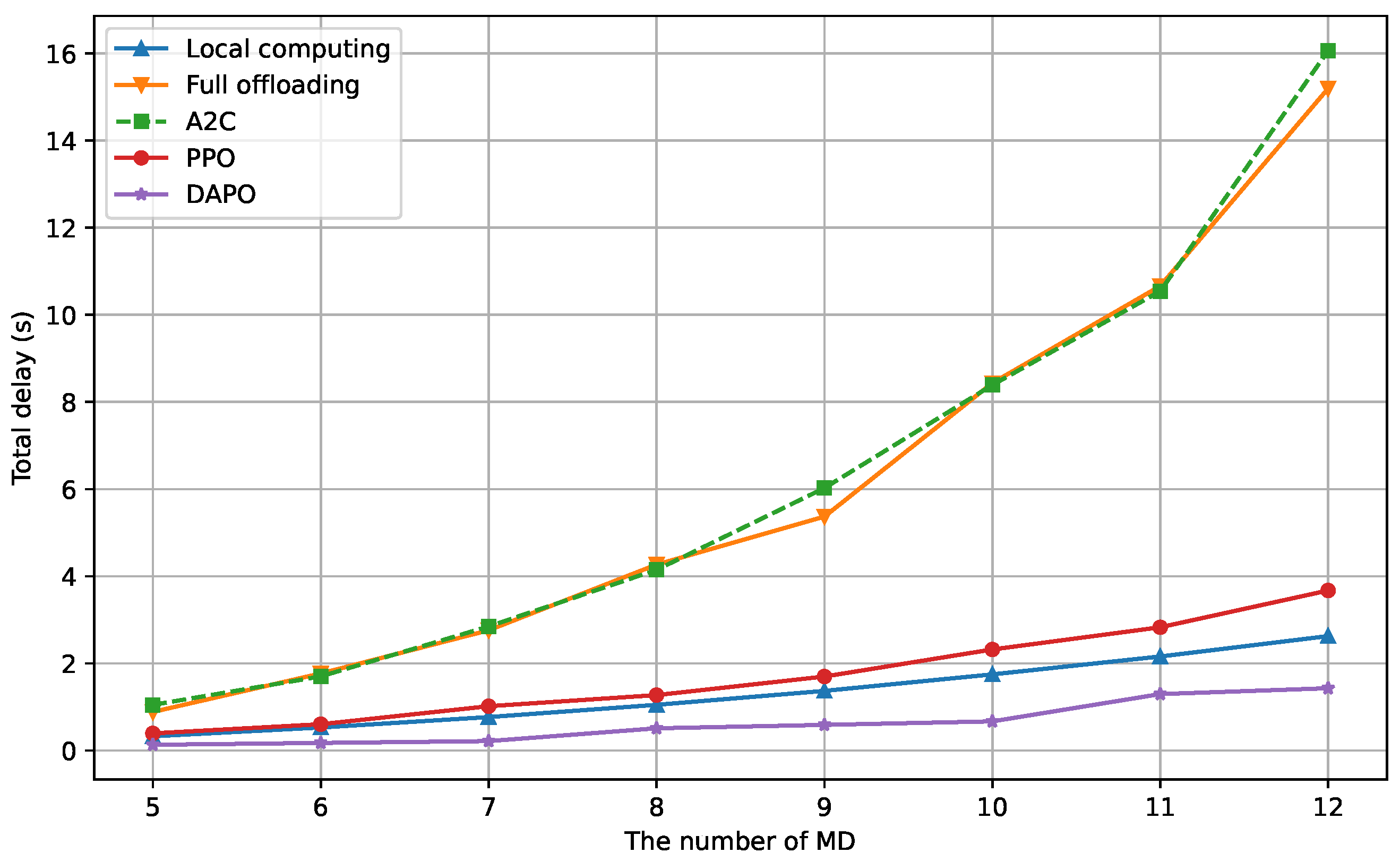

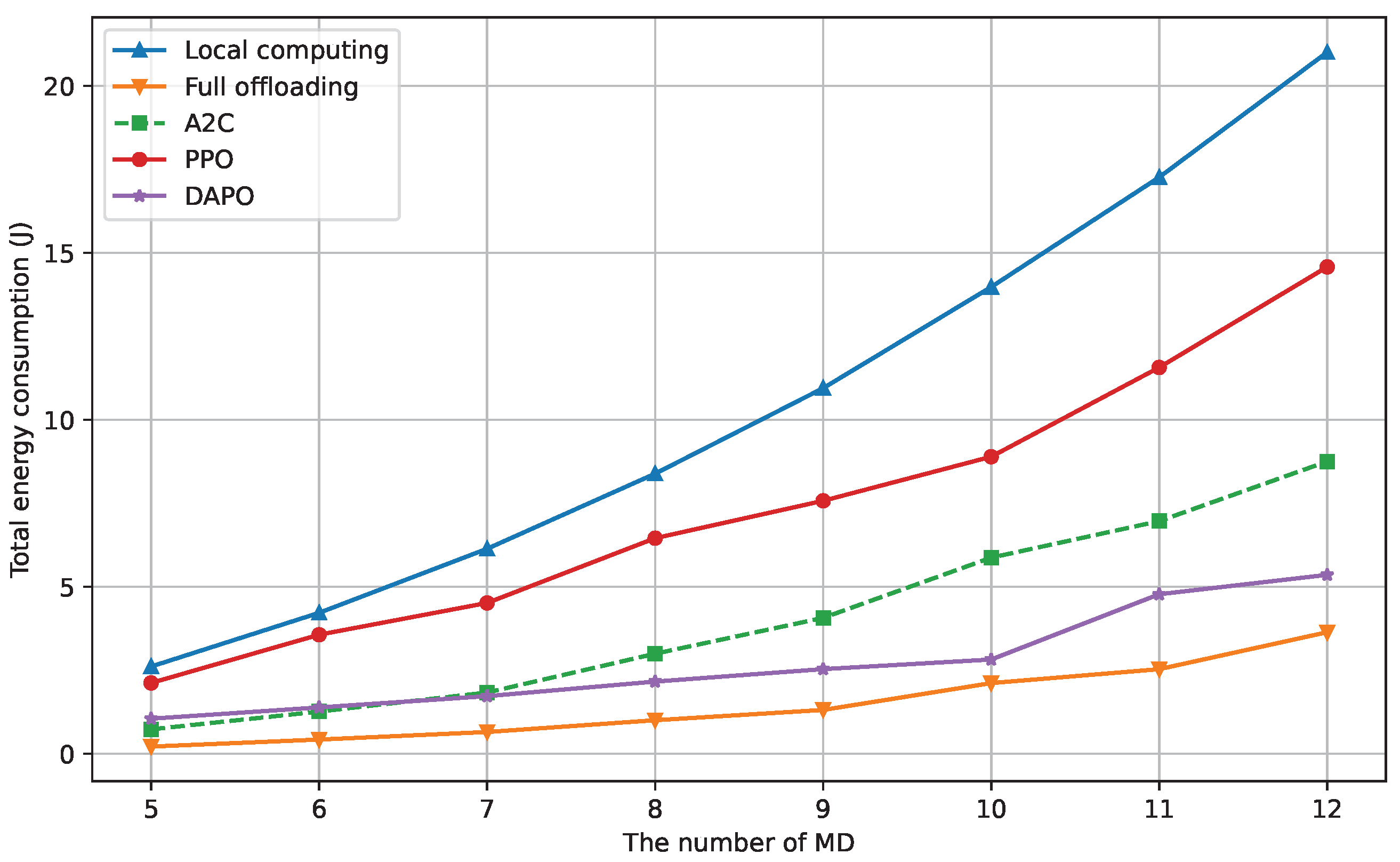

- We implement DAPO in a simulation platform and evaluate it against multiple baselines, providing detailed analyses of convergence, scalability, and robustness under varying user densities and bandwidth conditions. And the experimental results demonstrate that DAPO significantly outperforms static strategies and mainstream RL baselines in terms of reducing latency and energy consumption.
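
Contribution (2) relies on turning continuous actor outputs into discrete partitioning and offloading decisions. The exact mapping used by DAPO is not given in this section, so the following is only a minimal sketch of one common approach (uniform binning of a clipped output). The function name `map_action`, the assumption that the actor emits two values in [-1, 1] per device, and the convention that a split point of 0 means full offloading and L means full local execution are all illustrative assumptions, not the authors' implementation.

```python
from typing import List, Tuple


def map_action(raw: List[float], num_layers: int, num_servers: int) -> Tuple[int, int]:
    """Map two continuous outputs in [-1, 1] to (split_point, server_index).

    Hypothetical helper: uniform binning is an assumed mapping, not
    necessarily the one used by DAPO.
    """
    if len(raw) != 2:
        raise ValueError("expected two continuous components per device")

    def to_index(x: float, num_choices: int) -> int:
        # Clip first, because exploration noise can push the output out of range.
        x = max(-1.0, min(1.0, x))
        # Rescale [-1, 1] to [0, 1], then assign to one of num_choices equal bins.
        unit = (x + 1.0) / 2.0
        return min(int(unit * num_choices), num_choices - 1)

    # Assumed convention: split_point counts the layers executed on the device,
    # so it ranges over 0..L (L + 1 choices).
    split_point = to_index(raw[0], num_layers + 1)
    # Server indices range over 0..M-1.
    server = to_index(raw[1], num_servers)
    return split_point, server
```

For example, with a 32-layer model and 4 edge servers, `map_action([0.0, 0.0], 32, 4)` returns `(16, 2)`. Clipping before binning guarantees that every actor output, including noisy ones, yields a valid discrete action.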

2. Related Work

3. System Model and Problem Formulation

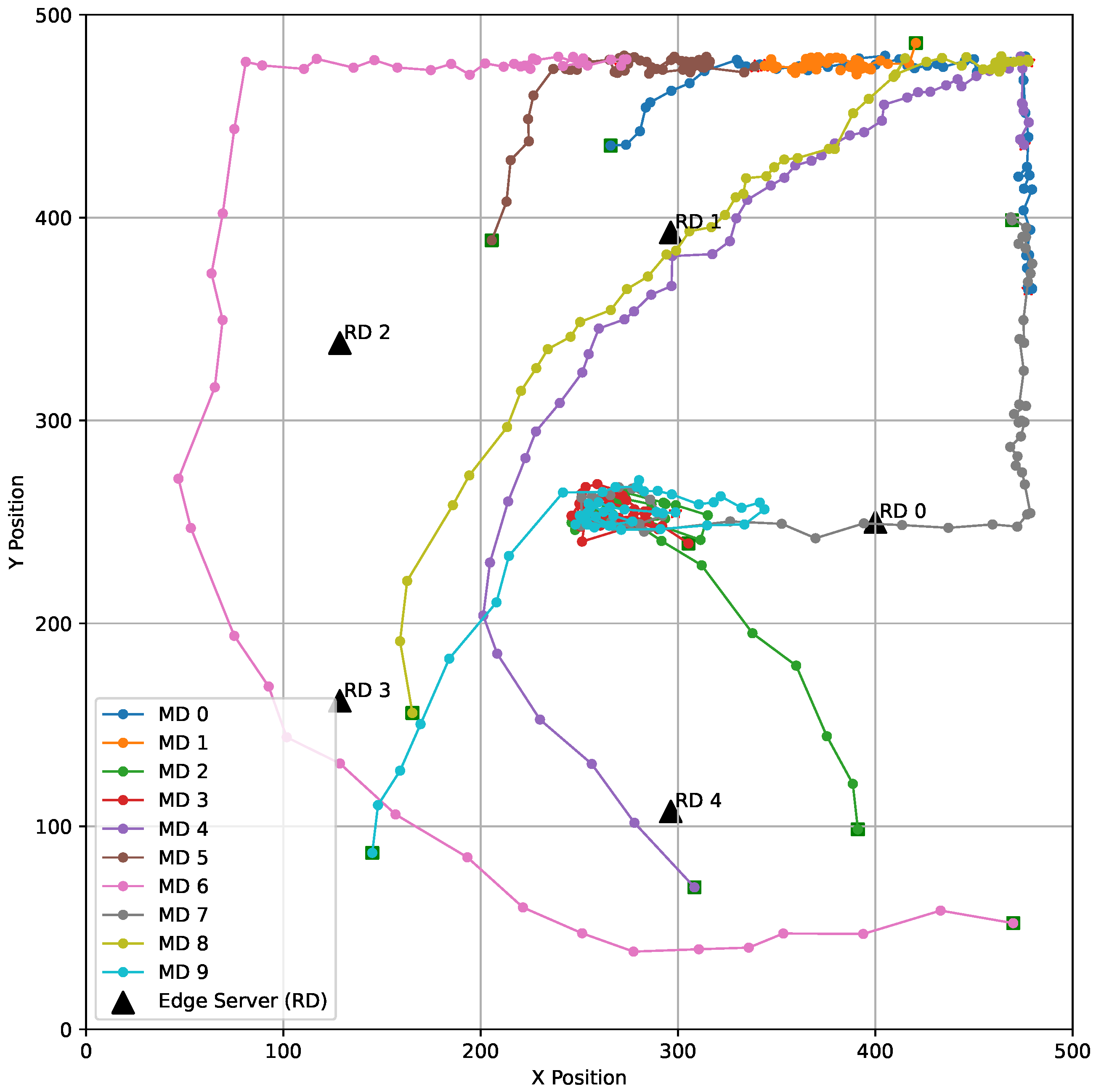

3.1. User Mobility Model

3.2. Communication Model

3.3. Computation Offloading Model

3.4. Problem Formulation

4. Problem Solution

4.1. State Space

4.2. Action Space

4.3. Reward Function

4.4. Algorithm Design

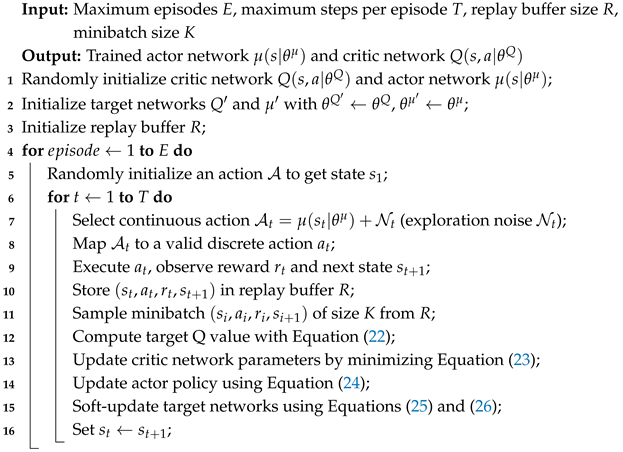

Algorithm 1: DAPO based on the DDPG algorithm

5. Performance Evaluation

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Notation | Description |
|---|---|
| | The set of mobile devices (MDs) |
| | The set of edge servers (ESs) |
| B | Bandwidth of the wireless channel |
| | Power spectral density of Gaussian noise |
| | Total computing capability of MD n |
| | Total computing capability of ES m |
| L | Total number of layers in the LLM |
| | Computational workload of layer j |
| | Model split point for MD n |
| | Offloading decision (target server) for MD n |
| | Size of the intermediate data generated at the split point |
| | Transmission power of MD n |
| | Operating power consumption of MD n |
| | Operating power consumption of ES m |
| | Computing resources allocated by ES m to MD n |
| | Uplink transmission rate between MD n and ES m |
| | Delay of full local execution on MD n |
| | Energy of full local execution on MD n |
| | Local computation delay for the task of MD n |
| | Remote computation delay for the task of MD n |
| | Transmission delay for offloading from MD n to ES m |
| | Total end-to-end inference delay for MD n |
| | Total energy consumption for MD n |
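
The quantities in the notation table can be tied together with a small numerical sketch. The model's equations are not reproduced in this section, so the code below assumes a conventional split-inference cost model: a Shannon-capacity uplink rate, local delay for the layers before the split point, transmission delay for the intermediate data, and remote delay for the remaining layers. The function names, the `channel_gain` parameter, and the choice to count edge-server energy in the total are assumptions made for illustration only.

```python
import math
from typing import Sequence, Tuple


def uplink_rate(bandwidth_hz: float, tx_power_w: float,
                channel_gain: float, noise_psd_w_per_hz: float) -> float:
    """Shannon-capacity uplink rate in bit/s (assumed rate model)."""
    snr = tx_power_w * channel_gain / (noise_psd_w_per_hz * bandwidth_hz)
    return bandwidth_hz * math.log2(1.0 + snr)


def inference_cost(workloads: Sequence[float], split: int,
                   f_local: float, f_alloc: float,
                   data_bits: float, rate_bps: float,
                   p_md: float, p_tx: float, p_es: float) -> Tuple[float, float]:
    """Return (delay, energy) for running layers [0, split) on the device
    and layers [split, L) on the edge server.

    workloads: per-layer workload (e.g. CPU cycles); f_local / f_alloc are
    device and allocated server capabilities in the same unit per second.
    """
    if not 0 <= split <= len(workloads):
        raise ValueError("split point must lie in 0..L")

    local_delay = sum(workloads[:split]) / f_local
    if split == len(workloads):
        # Full local execution: nothing is transmitted or run remotely.
        return local_delay, p_md * local_delay

    tx_delay = data_bits / rate_bps
    remote_delay = sum(workloads[split:]) / f_alloc
    delay = local_delay + tx_delay + remote_delay
    # Assumption: total energy includes device compute, uplink transmission,
    # and edge-server compute.
    energy = p_md * local_delay + p_tx * tx_delay + p_es * remote_delay
    return delay, energy
```

Under these assumptions, moving the split point earlier shifts cost from the local-computation term to the transmission and remote-computation terms, which is the trade-off that the joint partitioning and offloading decision has to balance.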

| Parameter | Value | Description |
|---|---|---|
| Episode | 200 | Number of training episodes (main loop) |
| T | 1000 | Number of time steps per episode (secondary loop) |
| Buffer size | 100,000 | Capacity of the replay buffer |
| Batch size | 64 | Number of transitions sampled from the replay buffer per update |
| | 0.99 | Reward discount factor |
| Actor learning rate | 0.001 | Learning rate of the Adam optimizer for the Actor network |
| Critic learning rate | 0.001 | Learning rate of the Adam optimizer for the Critic network |
| | 0.005 | Soft update rate of the target networks |
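
To make the role of these hyperparameters concrete, the sketch below collects the values from the table in a configuration object and shows the standard DDPG soft (Polyak) update of a target network. The class name `DDPGConfig`, its field names, and the use of plain Python lists as stand-ins for network weights are assumptions for illustration; the actual DAPO training code is not shown in this section.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DDPGConfig:
    """Hyperparameter values taken from the table; field names are hypothetical."""
    episodes: int = 200
    steps_per_episode: int = 1000
    buffer_size: int = 100_000
    batch_size: int = 64
    discount: float = 0.99
    actor_lr: float = 1e-3
    critic_lr: float = 1e-3
    soft_update_rate: float = 0.005


def soft_update(target: List[float], online: List[float], rate: float) -> List[float]:
    """Standard DDPG soft update: target <- rate * online + (1 - rate) * target.

    Plain lists stand in for network parameters in this sketch.
    """
    if len(target) != len(online):
        raise ValueError("parameter vectors must have the same length")
    return [(1.0 - rate) * t + rate * o for t, o in zip(target, online)]


if __name__ == "__main__":
    cfg = DDPGConfig()
    # Upper bound on environment steps over a full training run.
    print(cfg.episodes * cfg.steps_per_episode)
    print(soft_update([0.0, 1.0], [1.0, 1.0], cfg.soft_update_rate))
```

With a rate of 0.005, each update moves the target parameters only 0.5% of the way toward the online network, which keeps the critic's bootstrapped targets slowly varying during training.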

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Feng, H.; Huang, G.; Zhou, N.; Zhang, F.; Liu, Y.; Zhou, X.; Liu, J. DAPO: Mobility-Aware Joint Optimization of Model Partitioning and Task Offloading for Edge LLM Inference. Electronics 2025, 14, 3929. https://doi.org/10.3390/electronics14193929
