Abstract

Cognitive biases continue to pose significant challenges in executive decision-making, often leading to strategic inefficiencies, misallocation of resources, and flawed risk assessments. While traditional decision-making relies on intuition and experience, these methods are increasingly proving inadequate in addressing the complexity of modern business environments. Despite the growing integration of big data analytics into executive workflows, existing research lacks a comprehensive examination of how AI-driven methodologies can systematically mitigate biases while maintaining transparency and trust. This paper addresses these gaps by analyzing how big data analytics, artificial intelligence (AI), machine learning (ML), and explainable AI (XAI) contribute to reducing heuristic-driven errors in executive reasoning. Specifically, it explores the role of predictive modeling, real-time analytics, and decision intelligence systems in enhancing objectivity and decision accuracy. Furthermore, this study identifies key organizational and technical barriers—such as biases embedded in training data, model opacity, and resistance to AI adoption—that hinder the effectiveness of data-driven decision-making. By reviewing empirical findings from A/B testing, simulation experiments, and behavioral assessments, this research examines the applicability of AI-powered decision support systems in strategic management. The contributions of this paper include a detailed analysis of bias mitigation mechanisms, an evaluation of current limitations in AI-driven decision intelligence, and practical recommendations for fostering a more data-driven decision culture. By addressing these research gaps, this study advances the discourse on responsible AI adoption and provides actionable insights for organizations seeking to enhance executive decision-making through big data analytics.

1. Introduction

Cognitive biases represent a significant challenge in executive decision-making within today’s complex and high-stakes business environment. Corporate leaders face a multifaceted decision landscape influenced by organizational culture, historical precedent, external pressures, and deeply embedded psychological tendencies that often operate below conscious awareness [1]. Despite the traditional reverence for intuition and experience in executive circles, research has increasingly demonstrated how these cognitive shortcuts can lead to systematic errors in judgment, resulting in suboptimal strategic outcomes, resource misallocation, and missed market opportunities [2].

The impact of these cognitive distortions is particularly pronounced in executive contexts, where confirmation bias reinforces existing assumptions, anchoring bias tethers decisions to initial reference points, and overconfidence bias inflates the perceived accuracy of strategic forecasts. These psychological tendencies collectively undermine rational decision-making processes and can propagate throughout organizations, affecting everything from capital allocation to talent management and competitive strategy [2].

The emergence of big data analytics and artificial intelligence (AI) represents a paradigm shift in how organizations approach strategic decision-making. Executives now have access to tools that can help counteract cognitive limitations systematically through data-driven methodologies. Machine learning algorithms, predictive analytics, and real-time data processing enable businesses to identify patterns beyond human perception, challenge entrenched assumptions, and augment decision-making with objective insights derived from comprehensive datasets [3]. Advanced analytical approaches such as explainable AI (XAI), causal inference modeling, and anomaly detection further support more rational, evidence-based strategic decisions.

However, integrating data-driven insights into executive workflows presents significant challenges. Questions regarding data reliability, model interpretability, organizational readiness, and ethical implications must be addressed for analytics to mitigate cognitive biases effectively. The path from traditional intuition-based leadership to data-augmented decision-making requires careful navigation of technical and cultural barriers.

1.1. Literature Review Methodology and Current Research Landscape

This review employed a systematic approach to identify relevant literature at the intersection of cognitive bias mitigation and big data analytics in decision-making contexts. We searched the Web of Science, Scopus, and IEEE Xplore databases using the query (“cognitive bias*” OR “decision bias*”) AND (“big data” OR “artificial intelligence” OR “machine learning”) AND (“decision making” OR “executive*” OR “management”) for publications from 2018–2024. After screening the 847 initial results, we retained 156 studies that met the inclusion criteria, which required an empirical application of AI or analytics to bias reduction in organizational settings.

Table 1 synthesizes ten pivotal studies, selected for citation impact, methodological rigor, and relevance to bias mitigation, that exemplify the current understanding of how analytical technologies influence executive decision processes.

Table 1.

Summary of key studies on big data analytics and cognitive bias mitigation.

Research Gaps Identified: Current literature reveals three critical gaps: (1) limited empirical validation of bias reduction effectiveness in real-world executive contexts, (2) insufficient integration of explainable AI techniques for maintaining decision transparency, and (3) lack of comprehensive frameworks addressing both technical and organizational implementation challenges.

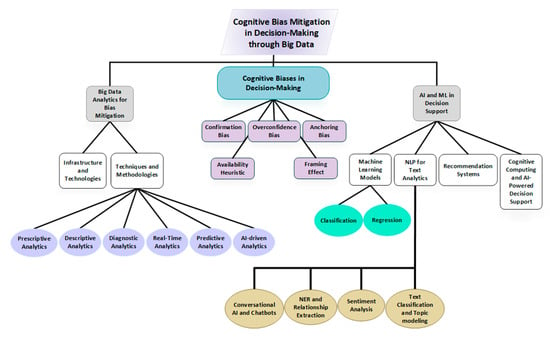

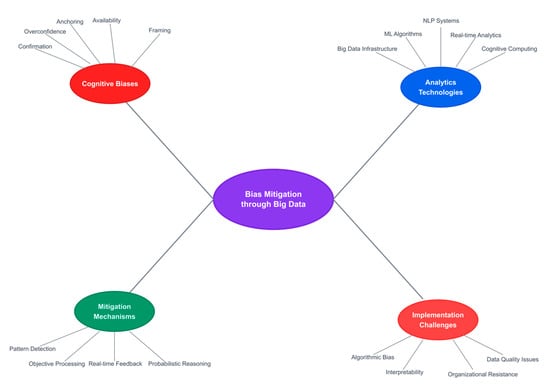

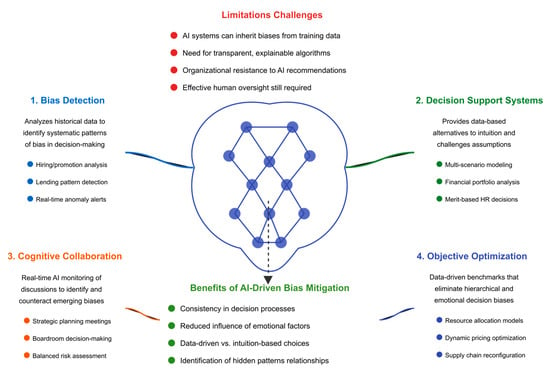

Building on this foundation, our research examines multiple dimensions of the bias mitigation challenge. Figure 1 presents the conceptual framework that guides our investigation, illustrating the interconnected nature of cognitive biases, analytical methodologies, and organizational factors that collectively shape executive decision quality.

Figure 1.

Overview of surveyed key topics.

1.2. Research Objectives and Contributions

This study addresses identified gaps by examining mechanisms through which big data analytics, AI, and XAI can systematically mitigate cognitive biases in executive decision-making while maintaining accountability and organizational acceptance.

Primary Research Questions:

- How do specific AI/ML techniques detect and counteract different types of cognitive biases in executive decision contexts?

- What are the key technical and organizational barriers to implementing bias mitigation systems?

- How can explainable AI enhance trust and adoption of automated debiasing tools?

Novel Contributions:

- Comprehensive taxonomy of bias mitigation mechanisms across descriptive, predictive, and prescriptive analytics

- Empirical evaluation framework comparing A/B testing, simulation experiments, and behavioral assessments for measuring bias reduction effectiveness

- Implementation roadmap addressing both technical requirements and organizational change management for bias mitigation systems

- XAI integration model balancing algorithmic transparency with decision-making efficiency

Scope and Applications: Although the study focuses primarily on corporate executive decision-making, its findings may extend to healthcare administration, public policy formulation, and emergency management, where bias mitigation is critical for optimal outcomes.

1.3. Paper Structure

Section 2 establishes theoretical foundations, examining cognitive biases in executive contexts and big data analytics capabilities. Section 3 analyzes real-world manifestations of bias in strategic decision-making. Section 4 investigates bias mitigation mechanisms through analytics, including AI-driven detection and correction systems. Section 5 addresses implementation challenges and limitations. Section 6 explores emerging trends, XAI applications, and research gaps. Section 7 presents conclusions and practical recommendations for organizations seeking to enhance decision quality through data-driven bias mitigation.

2. Theoretical Foundations

2.1. Cognitive Biases in Executive Decision-Making

Cognitive biases represent systematic deviations from rational judgment that lead individuals to make suboptimal decisions based on mental shortcuts, preconceptions, or flawed heuristics [14]. Within executive decision-making contexts, these biases pose significant challenges as leaders operate under conditions of uncertainty, time pressure, and high stakes [15]. This section examines five key cognitive biases that substantially impact executive decision-making: confirmation bias, overconfidence bias, anchoring bias, availability heuristic, and framing effect [16] (Table 2).

Table 2.

Overview of Key Cognitive Biases in Executive Decision-Making.

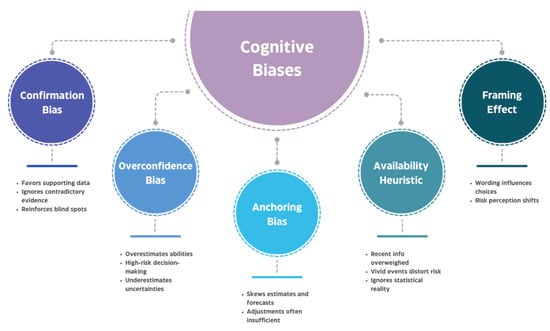

The conceptual framework in Figure 2 illustrates the five primary cognitive biases that systematically distort executive decision-making processes.

Figure 2.

The five cognitive biases in executive decision-making.

At the center, “Cognitive Biases” represents the overarching psychological phenomena that influence how leaders process information and make strategic choices. Each surrounding node depicts a specific bias with its key characteristics:

Confirmation Bias (left) manifests when executives favor information supporting their existing beliefs while ignoring contradictory evidence, leading to strategic blind spots and resistance to market changes.

Overconfidence Bias (lower left) reflects executives’ tendency to overestimate their abilities and underestimate risks, resulting in unrealistic project timelines and excessive risk-taking in areas such as mergers and acquisitions.

Anchoring Bias (bottom) occurs when initial information disproportionately influences subsequent decisions, affecting financial forecasts and negotiations even when the anchor proves arbitrary or outdated.

Availability Heuristic (lower right) leads executives to overweight easily recalled events, causing distorted risk assessments based on memorable incidents rather than statistical reality.

Framing Effect (right) demonstrates how identical information presented differently can reverse risk preferences, influencing strategic communications and decision outcomes.

The dotted connections between biases indicate their interconnected nature—these cognitive distortions often reinforce each other, creating compound effects that further compromise decision quality. Understanding these relationships is essential for developing comprehensive mitigation strategies through big data analytics, as discussed in subsequent sections.

2.1.1. Confirmation Bias

Confirmation bias represents the tendency to search for, interpret, and recall information that confirms preexisting beliefs while giving disproportionately less consideration to alternative possibilities [17]. In executive contexts, this bias manifests through selective information processing, where leaders unconsciously filter data to support their strategic vision while dismissing contradictory evidence [18]. The hierarchical structure of organizations often amplifies this bias through “filtering bias,” where subordinates selectively present information they believe executives want to hear [19].

This cognitive distortion particularly affects strategic planning and risk assessment processes. Executive teams with entrenched assumptions about market stability often dismiss early disruption signals as temporary anomalies rather than fundamental shifts in competitive dynamics [20]. The escalation of commitment to failing projects exemplifies confirmation bias in action, as executives seek evidence supporting initial decisions rather than objectively reassessing situations [21]. Historical analyses have documented how established retailers failed to recognize e-commerce threats despite clear market data indicating shifting consumer preferences, demonstrating the profound impact of this bias on strategic adaptation [22,23,24].

Effective mitigation strategies encompass institutionalized devil’s advocacy procedures that systematically challenge existing assumptions [25,26,27], structured decision-making processes requiring explicit documentation of reasoning and evidence [28], and AI-driven analytics that provide objective, data-based insights independent of human preconceptions [29].
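Objectively reassessing a belief, rather than searching for confirming evidence, can be made concrete with Bayes' rule in odds form: each piece of evidence multiplies the prior odds by its likelihood ratio, so disconfirming signals must lower confidence however strong the prior. The sketch below is an illustration, not a method from the cited studies; the function name and all numbers are hypothetical, and it assumes the pieces of evidence are conditionally independent.

```python
from math import prod


def posterior_probability(prior: float, likelihood_ratios: list[float]) -> float:
    """Update a prior belief with several pieces of evidence (Bayes' rule, odds form).

    Each likelihood ratio is P(evidence | belief true) / P(evidence | belief false):
    values > 1 support the belief, values < 1 contradict it.
    Assumes the pieces of evidence are conditionally independent.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * prod(likelihood_ratios)  # prod([]) == 1
    return posterior_odds / (1.0 + posterior_odds)


# Hypothetical example: a strong prior that the market is stable (0.8),
# one supportive signal (LR = 2) and two disconfirming signals (LR = 0.25 each).
confirming_only = posterior_probability(0.8, [2.0])
all_evidence = posterior_probability(0.8, [2.0, 0.25, 0.25])
print(f"confirming evidence only: {confirming_only:.3f}")
print(f"all evidence:             {all_evidence:.3f}")
```

With these inputs, weighing only the confirming signal raises confidence from 0.80 to about 0.89, while including the two disconfirming signals lowers it to about 0.33. A decision support tool that feeds every available signal into the update removes the option of attending selectively to the first.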

2.1.2. Overconfidence Bias

Overconfidence bias involves excessive confidence in one’s abilities, knowledge, or chances of success, leading to systematic underestimation of risks and overestimation of positive outcomes [73]. This bias appears particularly pronounced among executives, where past successes and positional authority reinforce beliefs in personal judgment capabilities [74].

In corporate settings, overconfidence manifests through multiple channels. Executives frequently establish unrealistic project timelines, reflecting the “planning fallacy” wherein best-case scenarios dominate planning processes while potential obstacles receive insufficient consideration [75]. The merger and acquisition domain provides particularly compelling evidence, with overconfident executives pursuing excessive acquisition activity based on inflated synergy estimates and underestimation of integration complexities [76]. Strategic initiatives often suffer from inadequate risk assessment as leaders overestimate their ability to control market dynamics and competitive responses [77]. The psychological origins of this bias stem from self-serving attribution patterns, where executives attribute success to personal ability while externalizing blame for failures [78].

Evidence-based mitigation approaches use predictive analytics and Monte Carlo simulations systematically to ground decisions in probabilistic reasoning rather than intuitive certainty [79]. Organizations that build analytical validation into their strategic planning cycles show improved calibration between confidence levels and actual outcomes [80]. Furthermore, cultivating organizational cultures that promote constructive skepticism and encourage staff to challenge executive assumptions has proven effective in moderating overconfidence effects [80].
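A minimal Monte Carlo sketch of the planning-fallacy check described above: each task duration is drawn from a triangular distribution (optimistic, most likely, pessimistic), and the simulated totals are compared with a single-point plan built from the most-likely values. The task estimates are hypothetical, and the model assumes sequential, independent tasks.

```python
import random
import statistics

# Hypothetical task estimates in weeks: (optimistic, most likely, pessimistic).
TASKS = [(2, 3, 6), (4, 5, 10), (1, 2, 5), (3, 4, 9)]


def simulate_durations(tasks, n_trials=10_000, seed=42):
    """Monte Carlo simulation of total project duration.

    Each task duration is drawn from a triangular distribution; tasks are
    assumed to run sequentially and independently (a simplifying assumption).
    """
    rng = random.Random(seed)
    return [
        # random.Random.triangular takes (low, high, mode)
        sum(rng.triangular(low, high, mode) for low, mode, high in tasks)
        for _ in range(n_trials)
    ]


durations = simulate_durations(TASKS)
plan = sum(mode for _, mode, _ in TASKS)  # the "most likely" single-point plan
p_on_time = sum(d <= plan for d in durations) / len(durations)
p80 = statistics.quantiles(durations, n=10)[7]  # 80th percentile

print(f"single-point plan: {plan} weeks")
print(f"probability of finishing within plan: {p_on_time:.0%}")
print(f"80th-percentile duration: {p80:.1f} weeks")
```

Because each triangular distribution here is right-skewed, the simulated totals center well above the 14-week single-point plan (the expected total is 18 weeks), so the probability of finishing on plan is small. Reporting a percentile such as P80 alongside the plan is one way to replace intuitive certainty with an explicit probability.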

2.1.3. Anchoring Bias

Anchoring bias occurs when individuals rely disproportionately on initial information when making subsequent judgments, even when that information proves arbitrary or irrelevant to the decision context [81]. In executive decision-making, anchoring significantly impacts financial planning, negotiations, and strategic goal-setting processes [82].

Revenue forecasting exemplifies this phenomenon, where executive teams often remain tied to initial projections despite evolving market conditions that warrant substantial revision [83]. Negotiation contexts reveal similar patterns, with opening offers establishing psychological reference points that disproportionately influence final agreements, regardless of objective valuation metrics [84]. Human resource decisions demonstrate anchoring effects when compensation determinations rely on historical salary levels rather than current market rates or performance metrics [85]. Strategic planning processes frequently exhibit anchoring when organizations set objectives based on competitors’ historical performance rather than conducting independent potential analyses [86,87].

Effective countermeasures require systematic approaches to decision-making. Organizations benefit from obtaining multiple independent estimates before critical decisions, ensuring diverse perspectives challenge initial anchors [88]. Employing first-principles reasoning in budgeting processes helps teams evaluate expenditures based on fundamental requirements rather than incremental adjustments to potentially flawed baselines [89]. AI-based pricing algorithms that analyze current market dynamics rather than historical precedents offer technological solutions to bypass anchoring effects [90,91]. Additionally, conducting systematic post-mortem analyses enables organizations to identify where anchoring influenced past decisions and develop institutional awareness of this bias [92,93].
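Collecting several estimates independently, before anyone sees the initial projection, and comparing their aggregate with that projection gives a simple, auditable anchoring check. The sketch below is hypothetical (function names and figures are illustrative); it uses the median so that a single outlying estimate does not dominate the consensus.

```python
import statistics


def independent_consensus(estimates: list[float]) -> float:
    """Aggregate estimates collected independently (before anyone sees an anchor).

    The median is used because it is robust to a single extreme estimate.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    return statistics.median(estimates)


def anchor_gap(anchor: float, estimates: list[float]) -> float:
    """Relative gap between an initial anchor and the independent consensus."""
    consensus = independent_consensus(estimates)
    return (anchor - consensus) / consensus


# Hypothetical revenue forecasts (in $M): last year's projection vs. five
# analysts who produced estimates without seeing it.
initial_projection = 120.0
blind_estimates = [96.0, 101.0, 99.0, 104.0, 140.0]

gap = anchor_gap(initial_projection, blind_estimates)
print(f"consensus: {independent_consensus(blind_estimates):.1f}, gap: {gap:+.1%}")
```

Here the independent consensus is 101.0 and the inherited projection sits about 18.8% above it, a gap that would prompt a revision discussion rather than an incremental adjustment to the old baseline.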

2.1.4. Availability Heuristic

The availability heuristic leads individuals to overestimate the probability of events that are easily recalled, typically because they are recent, emotionally salient, or widely publicized [49,50]. This cognitive bias causes executives to overweight memorable events in decision-making while undervaluing comprehensive statistical information that may provide more accurate risk assessments [51,52].

Business contexts reveal multiple manifestations of this bias. Risk assessment processes often become distorted when executives base probability estimates on vivid events such as highly publicized cyber-attacks or market crashes, rather than systematic analysis of actual occurrence frequencies [49,50]. Strategic planning suffers when recent successes or failures disproportionately influence future strategies, causing organizations to overreact to temporary fluctuations while missing long-term trends [51]. Resource allocation decisions driven by anecdotal evidence rather than comprehensive data analysis lead to suboptimal investment patterns [52]. These patterns create organizational feedback loops where memorable narratives override data-driven insights, perpetuating decision-making based on salience rather than statistical relevance [51,52].

Mitigation strategies leverage both technological and organizational approaches. AI-driven analytics shift focus from anecdotal evidence to statistical patterns by processing comprehensive datasets that human cognition cannot effectively synthesize [53,54,55]. Structured decision-making techniques such as pre-mortem analyses force consideration of multiple scenarios beyond those readily available to memory [56]. Organizations that foster data-driven cultures prioritizing empirical evidence over memorable instances demonstrate improved calibration in risk assessment and strategic planning [57].
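Shifting attention from memorable incidents to occurrence frequencies can start with something as simple as counting a historical incident log. The sketch below uses a hypothetical five-year log in which the widely publicized event type is the rarest; the function name and data are illustrative assumptions.

```python
from collections import Counter


def empirical_rates(incidents: list[str], years: float) -> dict[str, float]:
    """Average annual frequency of each incident type, most frequent first."""
    counts = Counter(incidents)
    return {kind: n / years for kind, n in counts.most_common()}


# Hypothetical five-year incident log: the widely publicized event
# ("ransomware") is rare compared with mundane operational failures.
log = (["supplier_delay"] * 40 + ["equipment_failure"] * 25
       + ["data_entry_error"] * 30 + ["ransomware"] * 1)

for kind, rate in empirical_rates(log, years=5).items():
    print(f"{kind:18s} {rate:5.1f} per year")
```

Frequency alone ignores severity, so in practice such base rates would be combined with impact estimates; the point is that the probability side of the assessment comes from recorded data rather than from what is easiest to recall.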

2.1.5. Framing Effect

The framing effect demonstrates how identical information presented in different formats can lead to opposing decisions, violating fundamental principles of rational choice [58]. According to prospect theory, individuals exhibit risk-averse behavior when outcomes are framed as gains but become risk-seeking when identical outcomes are framed as losses [59].
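The prospect-theory pattern above can be checked numerically with the theory's value function, which is concave for gains and convex, and steeper, for losses. The sketch assumes the parameter estimates commonly cited from Tversky and Kahneman's 1992 cumulative prospect theory (α = 0.88, λ = 2.25) and, as a simplification, omits the theory's nonlinear probability weighting.

```python
ALPHA = 0.88   # curvature for gains and losses (commonly cited 1992 estimate)
LAMBDA = 2.25  # loss-aversion coefficient (same source)


def value(x: float) -> float:
    """Prospect-theory value function: concave for gains, convex and steeper for losses."""
    return x ** ALPHA if x >= 0 else -LAMBDA * (-x) ** ALPHA


def prospect_value(outcomes: list[tuple[float, float]]) -> float:
    """Value of a prospect given (probability, outcome) pairs.

    Simplification: probabilities enter linearly (no probability weighting).
    """
    return sum(p * value(x) for p, x in outcomes)


# Same expected value, framed as gains vs. losses.
sure_gain, risky_gain = [(1.0, 50)], [(0.5, 100), (0.5, 0)]
sure_loss, risky_loss = [(1.0, -50)], [(0.5, -100), (0.5, 0)]

prefers_sure_gain = prospect_value(sure_gain) > prospect_value(risky_gain)
prefers_risky_loss = prospect_value(risky_loss) > prospect_value(sure_loss)
print(prefers_sure_gain, prefers_risky_loss)
```

Both comparisons print True: with equal expected values, the sure gain is valued above the gamble, while the gamble is valued above the sure loss. The reversal comes from the curvature α alone, since λ scales both loss-domain options equally.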

Executive communications and strategic choices reveal substantial framing effects. Budget proposals characterized as “efficiency enhancements” consistently receive greater organizational support than identical measures framed as “cost reductions,” despite equivalent fiscal impacts [60]. Strategic initiatives described in terms of growth opportunities generate fundamentally different responses than those emphasizing threat mitigation, even when the underlying economic considerations remain constant [61,62].

Addressing framing effects requires deliberate cognitive strategies. Decision-makers must consciously evaluate choices from multiple perspectives, actively considering how different presentations might influence judgment [63,64]. Using precise numerical terminology rather than emotionally loaded language helps minimize linguistic framing effects [65]. Organizations benefit from implementing standardized decision frameworks that present information in consistent formats, reducing the influence of presentation variations on strategic choices [66].
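A standardized presentation format can be as simple as a template that always states both frames of an outcome in counts and percentages. The helper below is a hypothetical illustration of that idea, not a framework from the cited work.

```python
def standard_summary(option: str, base: int, kept: int,
                     kept_label: str, lost_label: str) -> str:
    """Describe an outcome in one fixed format that states both frames numerically.

    Presenting the favourable and unfavourable counts side by side, with
    percentages, leaves no room for showing only the more persuasive frame.
    """
    if base <= 0 or not 0 <= kept <= base:
        raise ValueError("need base > 0 and 0 <= kept <= base")
    lost = base - kept
    return (f"{option}: {kept:,} of {base:,} {kept_label} ({kept / base:.0%}); "
            f"{lost:,} of {base:,} {lost_label} ({lost / base:.0%})")


print(standard_summary("Plan A", 1000, 600, "jobs retained", "jobs lost"))
```

For Plan A the template yields “Plan A: 600 of 1,000 jobs retained (60%); 400 of 1,000 jobs lost (40%)”, so neither the gain frame nor the loss frame is presented alone.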

2.2. Big Data Analytics: Framework and Applications

Big data analytics represents a comprehensive methodological framework for collecting, processing, and analyzing vast, complex datasets to extract actionable insights that enhance organizational decision-making [67]. This field has undergone rapid evolution alongside digital transformation, enabling organizations to leverage diverse data sources for strategic advantage [68,69].

2.2.1. Fundamental Characteristics: The 5 V’s Framework

The conceptual foundation of big data analytics rests upon five key dimensions that distinguish it from traditional data processing approaches. Volume encompasses the unprecedented scale of modern data generation, with organizations routinely managing petabyte and exabyte-level datasets that exceed conventional storage and processing capabilities [67]. Variety reflects the heterogeneous nature of contemporary data, spanning structured formats conforming to predefined schemas, semi-structured data such as XML and JSON, and unstructured information including text, images, and multimedia content [67]. Velocity captures the accelerating pace of data generation and the corresponding requirement for real-time or near-real-time processing capabilities [67]. Veracity addresses critical concerns regarding data quality, accuracy, and trustworthiness, recognizing that analytical insights depend fundamentally on underlying data integrity [68]. Value represents the ultimate objective of big data initiatives: transforming raw information into meaningful insights that drive business success and competitive advantage [68].
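Veracity is commonly operationalized through data-quality metrics. The sketch below computes two of them for one field: completeness (share of records with a value) and validity (share of records whose value falls in a plausible range). The function name, field, range, and records are hypothetical.

```python
def veracity_report(records: list[dict], field: str,
                    low: float, high: float) -> dict[str, float]:
    """Completeness and validity of one numeric field across a batch of records.

    completeness: share of records where the field is present (not missing or None)
    validity:     share of records where the value also lies in [low, high]
    """
    if not records:
        raise ValueError("no records to assess")
    n = len(records)
    present = [r[field] for r in records if r.get(field) is not None]
    valid = [v for v in present if low <= v <= high]
    return {"completeness": len(present) / n, "validity": len(valid) / n}


# Hypothetical quarterly revenue records (in $M) from several source systems.
records = [
    {"revenue": 120.0},
    {"revenue": None},   # missing value
    {"revenue": -5.0},   # present but implausible
    {},                  # field absent
    {"revenue": 98.5},
]
print(veracity_report(records, "revenue", low=0.0, high=1e6))
```

For these five records, completeness is 0.6 and validity 0.4, since the negative revenue value is present but implausible.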

2.2.2. Infrastructure and Technologies

Modern big data infrastructure comprises sophisticated technological ecosystems designed to manage the scale and complexity of contemporary data environments [69,70]. Storage systems have evolved from traditional relational databases to distributed architectures capable of handling massive, heterogeneous datasets. The Hadoop Distributed File System (HDFS) provides fault-tolerant storage by distributing data across multiple nodes, ensuring high availability through redundancy mechanisms [71,72]. NoSQL databases offer specialized solutions for different data management requirements: document stores accommodate schema-less data, column-family databases store wide, sparse tables across clusters at high throughput, key-value stores provide fast retrieval by key, and graph databases excel at mapping complex relationships between entities [73].

Processing frameworks have similarly evolved to meet big data demands. While MapReduce initially revolutionized parallel processing, Apache Spark has emerged as the dominant framework, offering in-memory processing capabilities that dramatically improve performance for iterative algorithms and real-time analytics [71,72]. Stream processing platforms such as Apache Kafka and Apache Flink enable organizations to analyze data in motion, supporting use cases that require immediate insights [69].
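Analyzing data in motion typically means evaluating each new event against a sliding window of recent ones. The plain-Python sketch below illustrates that pattern with a rolling z-score anomaly check; it does not use the Kafka or Flink APIs, and the class name, window size, and threshold of three standard deviations are illustrative assumptions.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a sliding window of recent values.

    A plain-Python stand-in for windowed stream processing; a production
    pipeline would express the same logic inside a stream processor.
    """

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.values = deque(maxlen=window)  # oldest values drop out automatically
        self.threshold = threshold

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to the current window, then add it."""
        is_anomaly = False
        if len(self.values) >= 2:  # stdev needs at least two points
            mean = statistics.fmean(self.values)
            sd = statistics.stdev(self.values)
            is_anomaly = sd > 0 and abs(x - mean) / sd > self.threshold
        self.values.append(x)
        return is_anomaly


detector = RollingAnomalyDetector(window=5, threshold=3.0)
stream = [100, 102, 98, 101, 99, 100, 180, 101]
flags = [detector.update(x) for x in stream]
print([x for x, flagged in zip(stream, flags) if flagged])
```

Only the value 180 is flagged. One design choice is worth noting: flagged values still enter the window, which widens the baseline afterwards; a system that should not adapt to anomalies would exclude them.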

Cloud computing has fundamentally transformed the accessibility of big data infrastructure. Platforms from Amazon Web Services, Microsoft Azure, and Google Cloud provide scalable, cost-effective solutions that lower the barriers to entry for sophisticated analytics [74,75]. Edge computing complements cloud architectures by processing data near its source, reducing latency for time-sensitive applications while improving data privacy [74,75]. Orchestration tools such as Apache Airflow (for data workflows) and Kubernetes (for containerized services) automate pipeline and deployment management, while security frameworks help ensure compliance with regulations including the GDPR and industry-specific requirements [76,77].

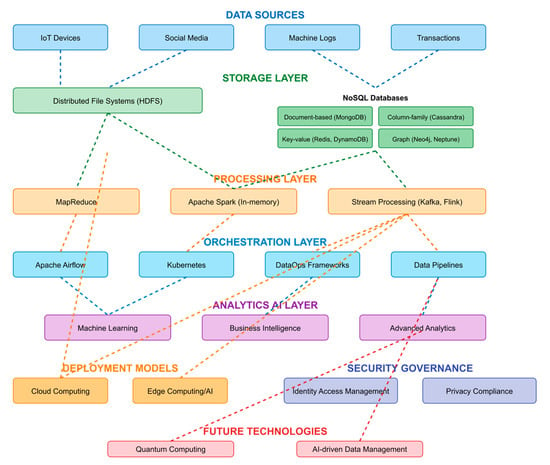

The architectural diagram (Figure 3) presents the multi-layered infrastructure supporting big data analytics for executive decision-making and bias mitigation. The visualization employs a hierarchical structure that traces data flow from initial collection through final analytical outputs, demonstrating the technological complexity underlying modern bias mitigation systems.

Figure 3.

Big Data Infrastructure and Technologies Ecosystem.

The Data Sources layer (top) identifies primary inputs including IoT devices, social media streams, machine logs, and transactional records, representing the diverse data ecosystem that feeds analytical processes. The Storage Layer illustrates the dual architecture of distributed file systems (HDFS) for large-scale data storage and specialized NoSQL databases optimized for different data structures—document-based systems for unstructured content, column-family databases for analytical workloads, key-value stores for rapid retrieval, and graph databases for relationship mapping [71,72,73].

The Processing Layer showcases the evolution from batch-oriented MapReduce to real-time processing frameworks. Apache Spark (version 3.5.0) provides in-memory capabilities that enable the iterative machine learning algorithms essential for bias detection, while stream processing platforms (Kafka, Flink) support real-time bias identification in ongoing decisions [71,72]. The Orchestration Layer demonstrates how workflow management tools coordinate complex analytical pipelines, ensuring systematic bias assessment across multiple data streams.

The Analytics and AI Layer represents where bias mitigation actually occurs: machine learning algorithms identify patterns beyond human perception, business intelligence tools visualize bias indicators, and advanced analytics prescribe corrective actions. The diagram shows these analytical capabilities deployed through Cloud Computing and Edge Computing/AI models, with the former providing scalable processing power and the latter enabling real-time bias detection at decision points [74,75].

Critical to the framework are Security and Governance mechanisms that ensure data integrity and regulatory compliance, since biased or compromised data would undermine mitigation efforts [76,77]. The Future Technologies component acknowledges emerging capabilities: quantum computing for complex optimization problems and AI-driven data management for automated bias detection in data preparation stages.

The interconnecting arrows demonstrate data flow patterns, emphasizing that bias mitigation requires seamless integration across all layers. This comprehensive infrastructure enables the analytical methodologies discussed in Section 2.2.3 and supports the AI/ML applications detailed in Section 2.2.4, forming the technological foundation for systematic cognitive bias mitigation in executive decision-making.

2.2.3. Analytical Methodologies

Organizations employ six primary analytical approaches to extract value from big data, each serving distinct purposes within the analytical continuum [78]. Descriptive analytics provides historical perspective by summarizing past events and identifying patterns within existing data [79]. Diagnostic analytics extends this foundation by investigating causal relationships and uncovering factors driving observed outcomes [80]. Predictive analytics leverages statistical modeling and machine learning to forecast future trends based on historical patterns [81]. Prescriptive analytics advances beyond prediction to recommend optimal actions given specific constraints and objectives [82]. Real-time analytics enables immediate response to changing conditions through continuous data processing [83]. AI-driven analytics automates the discovery of complex patterns and relationships that may elude human analysis [84].

2.2.4. Artificial Intelligence and Machine Learning Integration

Artificial intelligence and machine learning technologies constitute the analytical engine of modern big data systems, enabling automated extraction of sophisticated insights from massive datasets [85,86]. Classification algorithms categorize data into predefined groups, supporting applications ranging from fraud detection to medical diagnosis [87,88]. Regression models predict continuous outcomes, facilitating sales forecasting, risk assessment, and demand planning [89]. Ensemble methods and deep learning architectures uncover complex, non-linear relationships within high-dimensional data [90].

Natural language processing has emerged as a critical capability for analyzing the vast quantities of unstructured text data generated by modern organizations [94]. Sentiment analysis extracts emotional content from customer feedback, social media, and other textual sources [95]. Named entity recognition identifies and extracts key information elements from documents [96]. Conversational AI enables natural language interactions between humans and analytical systems [97,98,99].
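Sentiment analysis can be illustrated with a minimal lexicon-based scorer that sums word polarities and flips the polarity of a word directly preceded by a negator. The lexicon and negator list below are tiny hypothetical examples; production systems typically rely on curated lexicons or trained models.

```python
import re

# Tiny hypothetical lexicon; real systems use curated lexicons or trained models.
LEXICON = {"great": 1, "reliable": 1, "love": 1, "slow": -1, "broken": -1, "refund": -1}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum lexicon polarities, flipping the sign of a word preceded by a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = LEXICON.get(tok, 0)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


feedback = [
    "Great product, very reliable.",
    "Support was slow and the unit arrived broken.",
    "Not great, but not broken either.",
]
print([sentiment_score(t) for t in feedback])
```

The three feedback strings score 2, -2, and 0.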

Advanced applications build on these foundational technologies to create sophisticated analytical capabilities. Recommendation systems employ collaborative filtering and deep learning to personalize user experiences [100,101,102,103]. Cognitive computing platforms such as IBM Watson and Microsoft Azure AI aim to emulate aspects of human reasoning, supporting complex decisions in domains including healthcare, finance, and legal services [104,105,106,107,108,109,110,111]. These systems can learn adaptively, improving their performance through continued interaction with data and users [98,99].
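The collaborative-filtering idea mentioned above can be sketched in its simplest memory-based form: predict a user's rating for an item from other users' ratings, weighted by how similar their rating vectors are. The ratings, names, and the choice of cosine similarity with unrated entries treated as zero are illustrative assumptions; the deep-learning variants cited above are not shown.

```python
import math

# Hypothetical ratings on a 1-5 scale; 0 means "not rated".
RATINGS = {
    "ana":   [5, 4, 0],
    "ben":   [4, 5, 1],
    "chloe": [1, 0, 5],
}
ITEMS = ["report_a", "report_b", "report_c"]


def cosine(u: list[int], v: list[int]) -> float:
    """Cosine similarity; unrated entries count as 0 (a common simplification)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def predict(user: str, item_index: int) -> float:
    """User-based collaborative filtering: similarity-weighted neighbour ratings."""
    num = den = 0.0
    for other, other_ratings in RATINGS.items():
        r = other_ratings[item_index]
        if other == user or r == 0:  # skip the user and neighbours who did not rate it
            continue
        s = cosine(RATINGS[user], other_ratings)
        num += s * r
        den += abs(s)
    return num / den if den else 0.0


print(f"predicted rating of {ITEMS[2]} for ana: {predict('ana', 2):.2f}")
```

Because ana's ratings resemble ben's far more than chloe's, the prediction for report_c (about 1.55) is pulled toward ben's low rating.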

2.2.5. Implications for Bias Mitigation

The integration of big data analytics with executive decision-making offers several mechanisms for cognitive bias mitigation. AI systems are not subject to the emotional influences or hierarchical pressures that affect human judgment, although they can inherit biases from their training data, as discussed below. Machine learning algorithms identify patterns within datasets too large and complex for human cognitive processing, revealing insights that challenge existing assumptions. Real-time analytics provides rapid feedback on decision outcomes, enabling faster identification and correction of biased judgment patterns. Statistical models quantify uncertainty explicitly, countering human tendencies toward overconfidence and, when well calibrated, providing reliable probability assessments.
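Whether probability forecasts are in fact calibrated can be checked against outcomes rather than assumed. A standard tool is the Brier score, the mean squared difference between forecast probabilities and 0/1 outcomes, which penalizes confident misses heavily. The track record below is hypothetical.

```python
def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between probability forecasts and 0/1 outcomes (lower is better)."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equal-length, non-empty inputs")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical track record: did five initiatives hit their target (1) or not (0)?
outcomes = [1, 0, 0, 1, 0]
overconfident = [0.95, 0.9, 0.9, 0.95, 0.85]  # near-certain success every time
model_based = [0.7, 0.3, 0.4, 0.8, 0.2]

print(brier_score(overconfident, outcomes), brier_score(model_based, outcomes))
```

The near-certain forecasts score roughly 0.47 and the model-based ones roughly 0.08, so the score makes the cost of overconfidence visible in a single number.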

However, these technologies also present significant challenges. Algorithmic bias may emerge when models trained on historical data perpetuate past discriminatory patterns. Model interpretability remains a critical concern, as complex algorithms may produce accurate predictions without transparent reasoning. Organizational resistance to data-driven approaches can limit the practical impact of analytical insights. These considerations underscore the importance of thoughtful implementation strategies that leverage technological capabilities while addressing inherent limitations.
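One common screening check for the algorithmic bias described above compares selection rates across groups. The sketch computes a disparate impact ratio (lower group rate divided by higher group rate) and compares it with 0.8, the threshold of the “four-fifths rule”, a rule of thumb from US employment-selection guidelines used for screening rather than as proof of discrimination. The function names and decisions are hypothetical.

```python
def selection_rate(decisions: list[int]) -> float:
    """Share of positive decisions (1 = approved) in a group."""
    if not decisions:
        raise ValueError("empty group")
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(group_a: list[int], group_b: list[int]) -> float:
    """Ratio of the lower group selection rate to the higher one (1.0 = parity)."""
    ra, rb = selection_rate(group_a), selection_rate(group_b)
    hi = max(ra, rb)
    return min(ra, rb) / hi if hi else 1.0


# Hypothetical model recommendations (1 = approved) for two applicant groups.
group_a = [1, 1, 0, 1, 1, 0, 1, 1, 1, 0]  # 7 of 10 approved
group_b = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]  # 3 of 10 approved

ratio = disparate_impact_ratio(group_a, group_b)
flag = ratio < 0.8  # four-fifths screening threshold
print(f"disparate impact ratio: {ratio:.2f}, flagged: {flag}")
```

With 7 of 10 approvals in one group and 3 of 10 in the other, the ratio is about 0.43, well below the threshold, which would warrant examining the training data and features before the model informs decisions.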

2.3. Theoretical Integration

This theoretical foundation establishes a comprehensive framework for understanding the intersection between cognitive biases and big data analytics in executive decision-making. The systematic biases that affect human judgment create predictable distortions in strategic choices, while big data analytics offers powerful tools for introducing objectivity and empirical rigor into decision processes. The convergence of these domains presents both unprecedented opportunities and complex challenges for organizations seeking to enhance decision quality.

The effectiveness of analytics-based bias mitigation depends critically on recognizing that technology alone cannot eliminate cognitive distortions. Rather, successful implementation requires integrating analytical capabilities with organizational processes, cultural change, and human expertise. The subsequent sections of this manuscript examine specific mechanisms through which big data analytics can address cognitive biases, evaluate empirical evidence for effectiveness, and identify key implementation challenges that organizations must navigate to realize the full potential of data-driven decision enhancement.

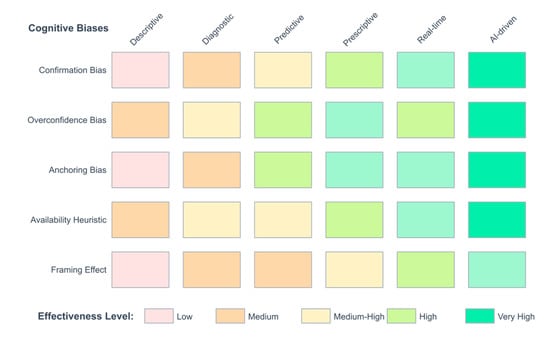

The heat map in Figure 4 illustrates the differential effectiveness of six analytical methodologies in mitigating the five key cognitive biases identified in executive decision-making. The matrix synthesizes empirical evidence from the literature into a visual guide for selecting appropriate analytical interventions based on the specific bias being addressed.

Figure 4.

Bias-analytics effectiveness matrix.

The color gradient represents effectiveness levels ranging from low (light red) to very high (dark green). AI-driven analytics shows the highest overall effectiveness across the five biases, particularly for confirmation bias and overconfidence, where its capacity to evaluate large datasets systematically, rather than through a decision-maker’s prior expectations, adds the most value. Prescriptive and real-time analytics perform strongly against anchoring and availability biases because they supply current, optimization-based recommendations that do not depend on historical reference points.

Notably, descriptive analytics shows limited effectiveness across most biases, as it primarily reports historical patterns without challenging underlying assumptions. This finding underscores that merely presenting data is insufficient for bias mitigation; rather, advanced analytical techniques that actively identify patterns, generate predictions, and recommend actions are necessary to counteract deeply ingrained cognitive distortions.

The matrix reveals that no single analytical approach provides universal bias mitigation, suggesting that organizations should implement comprehensive analytical ecosystems combining multiple methodologies. The effectiveness ratings are based on empirical studies referenced in Section 2.1 (references [17–66] for bias characteristics) and Section 2.2 (references [78–84] for analytics capabilities), providing evidence-based guidance for practitioners designing bias mitigation strategies.

Finally, the conceptual mind map in Figure 5 visualizes the interconnected domains that constitute the theoretical framework for bias mitigation through big data analytics. The central node represents the convergence of cognitive psychology and data science, and four primary branches illustrate the essential components of this integration.

Figure 5.

Key concepts mind map in bias mitigation through big data analytics.

The Cognitive Biases branch (red, upper left) identifies the five key biases examined in this study, with associated reference clusters indicating the depth of literature supporting each bias’s characterization. The Analytics Technologies branch (blue, upper right) maps the technological infrastructure required for bias mitigation, from foundational big data systems to advanced cognitive computing platforms. Each sub-branch includes reference ranges demonstrating the empirical basis for these technological capabilities.

The Mitigation Mechanisms branch (green, lower left) explicates the four primary pathways through which analytics addresses cognitive biases: comprehensive pattern detection that reveals hidden relationships, objective processing free from emotional influence, real-time feedback enabling rapid bias recognition, and probabilistic reasoning that counters overconfidence. The Implementation Challenges branch (red, lower right) acknowledges critical barriers including algorithmic bias risks, interpretability requirements, organizational resistance, and data quality concerns.

The radial structure emphasizes that effective bias mitigation requires simultaneous attention to all four domains. The connecting lines represent dynamic interactions between domains: for instance, organizational resistance (a challenge) can undermine the deployment of analytics technologies, and specific mitigation mechanisms directly address particular cognitive biases. This holistic view underscores that technological solutions alone are insufficient; successful bias mitigation demands integrated approaches that address psychological, technological, methodological, and organizational dimensions together.

3. Cognitive Biases in Strategic Decision-Making

Cognitive biases systematically impede effective executive decision-making, affecting strategic choices and investment allocations before their consequences become apparent. Despite access to unprecedented data volumes, modern organizations frequently base strategic decisions on mental shortcuts and systematic judgment distortions. This section examines how cognitive biases manifest in executive environments, their effects on business strategy formulation, and the substantial challenges organizations face in detecting and mitigating these biases [112].

3.1. Challenges in Identifying and Mitigating Biases in Executive Contexts

Research consistently demonstrates the pervasive influence of cognitive biases on executive decision-making, yet these biases prove very difficult to identify and correct in live operational contexts [113]. Strategic decisions present unique challenges because of their long-term implications, multifaceted external influences, and inherent unpredictability, which make it difficult to distinguish genuine strategic errors from the natural risks of operating in uncertain business environments [114].

Table 3 summarizes five fundamental barriers to detecting and managing biases within organizational decision processes:

Table 3.

The main challenges in identifying and mitigating biases.

The “bias blind spot” represents the most fundamental challenge—individuals’ tendency to recognize cognitive distortions in others while remaining oblivious to identical patterns in their own thinking [115]. The combination of successful career progression and industry experience frequently leads executives to develop profound confidence in their judgment, substantially reducing receptiveness to the possibility of cognitive distortion affecting their reasoning processes. Self-attribution bias compounds this problem, as executives typically attribute failures to external market conditions rather than evaluating their own cognitive blind spots [116].

Organizational culture and groupthink dynamics further complicate bias identification at executive levels. High-stakes corporate decisions typically occur within executive teams characterized by dominant personalities and hierarchical structures that implicitly discourage dissent. Without mechanisms actively promoting cognitive diversity, structured red-team analysis, and formalized devil’s advocacy, executive teams develop tunnel vision that renders them oblivious to their cognitive distortions [117].

The feedback mechanisms within executive decision processes operate through fundamentally ambiguous and delayed pathways. Strategic decisions typically unfold over extended timeframes during which multiple external factors influence outcomes [118]. This complex causal environment makes it very difficult to isolate which specific cognitive biases contributed to a strategy’s failure. Researchers face similar challenges when measuring the effects of cognitive biases on firm performance, because isolating specific biases from complex organizational outcomes presents significant methodological difficulties [119].

Unlike fields such as medicine and aviation that employ structured error-reduction protocols, executive decision-making has historically lacked formal debiasing procedures. While some organizations have begun implementing debiasing interventions, including pre-mortem exercises, cognitive bias checklists, and AI-driven decision-support tools, formal bias detection and correction mechanisms remain rare [120].

3.2. Real-World Examples of Executive Biases

Historical corporate records reveal numerous strategic missteps and missed innovation opportunities attributable to cognitive distortions. These cases provide concrete evidence of how biases operate in practice and their substantial organizational costs [121].

3.2.1. Hindsight Bias—The Case of Missed Innovations

Hindsight bias manifests as the “I-knew-it-all-along” effect, causing individuals to retrospectively perceive events as more predictable than they appeared during their initial occurrence. The failure of established companies to capitalize on photocopying technology represents a well-documented example [122].

During the mid-20th century, Haloid Company (later Xerox) developed revolutionary photocopying technology. Industry leaders IBM and Kodak declined opportunities to invest in or acquire this technology, dismissing its potential as either insufficiently profitable or peripheral to their core operations. Contemporary observers often express bewilderment regarding these companies’ failure to recognize the breakthrough potential. However, the decision context was considerably more complex, as prevailing mental models and cognitive biases constrained executives’ technological evaluations toward innovations aligned with established business operations [123].

The episode reflects more than negligence. At the time of the decision, confirmation bias shaped how IBM and Kodak executives evaluated the technology: confident in their existing product portfolios, they selectively processed information that reinforced current strategies while overlooking disruptive alternatives [124]. Hindsight bias, in turn, shapes how later observers judge that decision, making the outcome appear more foreseeable than it was. The combination of selective attention to established business models and resistance to challenging fundamental assumptions led both firms to decline an innovation that transformed the office equipment industry.

3.2.2. Representativeness Bias—Misjudging Market Patterns

Representativeness bias involves assuming that prior occurrences and familiar patterns will accurately predict future developments despite fundamental environmental changes [122]. Montgomery Ward’s experience following World War II demonstrates this phenomenon’s strategic impact.

Montgomery Ward’s leadership predicted a post-World War II economic depression, assuming conditions would mirror the aftermath of World War I. On this basis, the company adopted an extremely conservative strategy, avoiding expansion and maintaining a defensive operational posture. By contrast, competitor Sears recognized fundamental differences between the two post-war economic environments and pursued aggressive expansion, correctly anticipating growth in consumer spending [124].

Montgomery Ward committed a fundamental strategic error by treating historical events as definitive guidance while disregarding substantial economic and social transformations. The United States experienced a severe economic depression following World War I, but the post-World War II era presented an entirely different landscape characterized by rapidly growing consumer demand, industrial expansion, and government-supported economic growth [125,126]. This misjudgment had irreversible consequences: Sears capitalized on the economic boom, while Montgomery Ward permanently lost its competitive standing [127,128].

3.2.3. Overconfidence and Hubris—Value-Destroying Acquisitions

Overconfidence bias creates significant challenges during mergers and acquisitions, as executives frequently maintain unrealistic beliefs regarding their ability to extract value from large transactions [129]. Behavioral finance research consistently demonstrates that overconfident executives typically overpay for acquisitions while underestimating integration challenges [130,131].

The AOL–Time Warner merger, completed in 2001, exemplifies how overconfidence bias can produce catastrophic acquisition failures. AOL pursued a $165 billion acquisition based on executive conviction that the combined entity would dominate emerging digital media markets [132]. However, its fundamental assumptions about growth trajectory and business synergies proved deeply flawed. The merger led to a write-off of roughly $99 billion, at the time the largest in corporate history, and the combined company was dismantled within a decade [133].

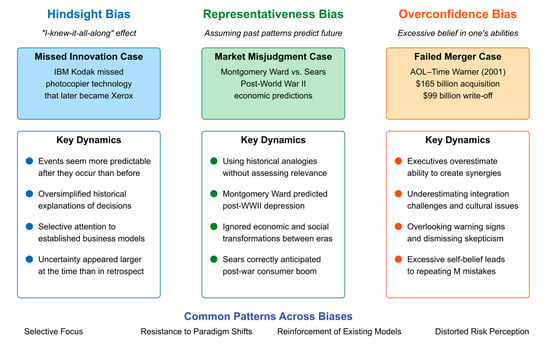

This pattern persists in contemporary technology acquisitions, where executives extrapolate from past accomplishments and overestimate their capacity to integrate and expand new business operations [134]. Recent artificial intelligence and fintech acquisitions show similar patterns, with leadership teams curtailing due diligence because strong commitment to a success narrative leads them to overlook warning signs [135]. Figure 6 synthesizes the three historical cases examined above, illustrating how different cognitive biases manifest in executive decision-making through distinct yet interconnected patterns.

Figure 6.

Real-world Examples of Executive Biases in Strategic Decision-Making.

Each bias demonstrates specific dynamics while contributing to four common patterns that transcend individual biases: selective focus, resistance to paradigm shifts, reinforcement of existing models, and distorted risk perception.

The figure reveals how cognitive distortions systematically undermine strategic judgment across diverse business contexts. The hindsight bias panel illustrates how IBM and Kodak’s failure to recognize photocopying technology’s revolutionary potential only appears “obvious” in retrospect, while uncertainty was substantially greater during the actual decision moment [122,123,124]. The representativeness bias panel demonstrates Montgomery Ward’s critical error in assuming post-WWII conditions would mirror post-WWI patterns, contrasting sharply with Sears’ accurate assessment of the transformed economic landscape [124,125,126,127]. The overconfidence bias panel captures how executive hubris in the AOL–Time Warner merger led to catastrophic value destruction through systematic underestimation of integration challenges [132,133].

These patterns—selective focus on confirming information, resistance to paradigm shifts that challenge existing models, reinforcement of established frameworks, and systematically distorted risk perception—represent fundamental cognitive vulnerabilities that persist across different bias types and business contexts. Understanding these common patterns is essential for developing effective bias mitigation strategies through data-driven approaches.

3.3. Implications for Data-Driven Decision Making

The historical cases examined reveal four recurring patterns across the biases studied: selective information processing, resistance to paradigm shifts, reinforcement of existing models, and distorted risk perception. These patterns help explain why intuition-based decision-making alone proves inadequate for the complexity of modern business [136].

3.3.1. Why Analytics Matter

Each examined failure (IBM/Kodak, Montgomery Ward, and AOL–Time Warner) arguably occurred despite available information that could have averted the strategic misstep. This paradox highlights the critical gap between having information and processing it objectively. Modern organizations face far greater complexity than their historical counterparts, creating decision contexts in which human cognitive limitations become increasingly problematic [137].

Big data analytics offers specific countermeasures to each bias pattern:

- Against Selective Processing: Analytics can examine the full dataset rather than a pre-filtered subset, revealing patterns that confirmation bias would obscure

- Against Paradigm Rigidity: Predictive models generate future scenarios based on emerging trends rather than historical analogies

- Against Echo Chambers: Data democratization enables evidence-based challenges to senior assumptions

- Against Risk Distortion: Probabilistic modeling quantifies uncertainty, replacing subjective optimism with empirical ranges
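To make the last countermeasure concrete, the following is a minimal sketch of a Monte Carlo valuation in Python. The deal price, the normal distribution for synergies, the uniform distribution for integration costs, and the function name `simulate_deal_value` are all hypothetical illustrations, not figures from the cases above. The point is only that the output is a distribution of outcomes, including the share of scenarios in which the deal destroys value, rather than a single optimistic point estimate.

```python
import random
import statistics


def simulate_deal_value(price, standalone_value, synergy_mean, synergy_sd,
                        integration_cost_low, integration_cost_high,
                        n_trials=10_000, seed=42):
    """Monte Carlo estimate of the net value created by an acquisition.

    All inputs are hypothetical and in the same currency unit. Synergies are
    drawn from a normal distribution floored at zero; integration costs are
    drawn from a uniform distribution.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    outcomes = []
    for _ in range(n_trials):
        synergy = max(0.0, rng.gauss(synergy_mean, synergy_sd))
        integration_cost = rng.uniform(integration_cost_low, integration_cost_high)
        # Net value created = what the buyer obtains minus what it pays.
        outcomes.append(standalone_value + synergy - integration_cost - price)
    outcomes.sort()
    return {
        "p_value_destroyed": sum(v < 0 for v in outcomes) / n_trials,
        "p5": outcomes[int(0.05 * n_trials)],   # approximate 5th percentile
        "median": statistics.median(outcomes),
        "p95": outcomes[int(0.95 * n_trials)],  # approximate 95th percentile
    }


if __name__ == "__main__":
    # Hypothetical deal: pay 100 for a business worth 80 on its own,
    # betting on synergies that average 30 but are highly uncertain.
    result = simulate_deal_value(price=100, standalone_value=80,
                                 synergy_mean=30, synergy_sd=15,
                                 integration_cost_low=5, integration_cost_high=20)
    for key, value in result.items():
        print(f"{key}: {value:.2f}")
```

An executive anchored on the average synergy figure sees one number; the simulation instead reports how often the deal loses value and how wide the spread is between the pessimistic and optimistic tails.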

3.3.2. Implementation Requirements

Analytical tools alone cannot mitigate biases without corresponding organizational changes:

- Cultural Shift: Organizations must value empirical evidence over hierarchical opinion, replacing “HIPPO” (Highest-Paid Person’s Opinion) dynamics with data-driven cultures [136]

- Process Integration: Analytics must be embedded throughout decision cycles, not treated as optional validation

- Executive Literacy: Leaders need sufficient analytical understanding to interpret insights appropriately

- Clear Governance: Frameworks must define when analytical insights override intuition and when human judgment remains primary [137]

3.3.3. Toward Augmented Decision-Making

The objective is augmenting rather than replacing human judgment. Humans excel at contextual understanding and ethical reasoning; analytics provides objective pattern recognition and bias detection. This symbiosis creates decision-making capabilities exceeding either approach alone. The following sections examine how big data analytics and AI systems operationalize these bias mitigation principles, transforming theoretical frameworks into practical decision enhancement tools.

4. Big Data Analytics as a Tool for Bias Mitigation

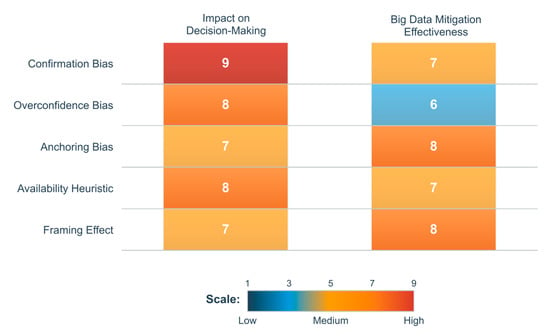

Big data analytics offers considerable potential to counteract cognitive biases in executive decision-making by supplanting subjective intuition with empirical, data-driven insights, enabling leaders to make more objective, evidence-based strategic choices. Cognitive biases, including confirmation bias, overconfidence, anchoring, and hindsight bias, frequently distort perception and risk assessment, resulting in suboptimal business decisions, inefficient resource allocation, and missed opportunities [138]. Through leveraging advanced artificial intelligence and machine learning algorithms, statistical modeling techniques, and real-time data processing capabilities, big data analytics can identify hidden patterns, challenge entrenched assumptions, and provide decision-makers with probabilistic rather than intuitive assessments of risks and opportunities. This section explores how analytics mitigates cognitive biases, examines AI’s role in identifying and correcting distorted judgment patterns, and discusses structured frameworks that enhance decision quality by integrating big data into executive workflows. We analyze how descriptive, predictive, and prescriptive analytics collectively enhance objectivity, enabling executives to identify trends, model future scenarios, and receive data-driven recommendations that minimize reliance on cognitive shortcuts [139]. Additionally, we address ethical considerations and limitations of AI-driven bias mitigation, including data reliability concerns, algorithmic bias risks, and integration challenges within traditionally intuition-driven decision environments. By understanding how big data and AI transform decision-making processes, organizations can reduce susceptibility to biases, improve strategic foresight, and cultivate analytical rigor that enhances long-term business resilience and competitive advantage [140]. 

Figure 7 presents a heatmap evaluating both the impact of five cognitive biases on decision-making and big data’s effectiveness in mitigating each bias on a 1–9 scale.

Figure 7.

Cognitive biases and Big Data mitigation effectiveness.

The visualization suggests that the biases with the greatest impact on decision-making are not necessarily the easiest to mitigate. Confirmation bias, for example, has the highest impact score (9) but a lower mitigation score (7), whereas anchoring and framing combine a lower impact score (7) with a higher mitigation score (8). These contrasts highlight where big data interventions may be most effective.

4.1. Mechanisms for Reducing Bias via Big Data Analytics

Big data analytics plays a crucial role in reducing the influence of cognitive biases on executive decision-making, functioning as a corrective mechanism that counterbalances intuition and subjective judgment with empirical, data-driven insights [141]. Cognitive biases frequently emerge because humans rely on heuristics, mental shortcuts that simplify decision-making but introduce systematic errors, particularly in high-stakes, uncertain environments. By contrast, big data analytics processes vast quantities of structured and unstructured information, identifies complex patterns, and provides objective insights that challenge biased reasoning. By offering quantifiable, evidence-based perspectives rather than intuition-driven assumptions, analytics helps executives recognize flawed mental models, reevaluate preconceived notions, and make more rational strategic choices [142]. Whether applied to investment decisions, market expansion strategies, product innovation initiatives, or risk assessments, data-driven approaches provide a safeguard against human error and reinforce analytical rigor throughout executive workflows [143].

4.1.1. Evidence-Based Insights vs. Intuition

Big data analytics can reduce cognitive biases by shifting decision-making from intuitive judgments toward evidence-based insights derived from comprehensive datasets. Executives traditionally rely heavily on personal experience or anecdotal evidence, which can introduce biases such as availability bias, the overweighting of recent or memorable events. For instance, executives may perceive strong organizational performance based solely on recent successes while ignoring objective indicators such as declining market share or operational inefficiencies [144,145]. Data analytics challenges these perceptions by providing detailed analyses of market trends, financial metrics, and competitor benchmarks, compelling leaders to confront realities that might contradict their preconceived notions. Another critical bias addressed through big data is confirmation bias, in which executives selectively accept information that aligns with existing strategic beliefs or visions while neglecting contradictory evidence [146,147]. For example, CEOs pursuing market expansion might overlook critical data on customer dissatisfaction or regulatory risk and focus instead on supportive evidence. AI-driven analytics can mitigate this tendency by surfacing the full range of relevant evidence, including disconfirming data, and thereby encouraging objective evaluation rather than selective interpretation. Additionally, automated decision-support systems, including predictive analytics platforms and AI-based risk assessment tools, reduce the risk that human bias distorts the interpretation of analytical results or leads to their manipulation [148].
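As a minimal illustration of how analytics can counter the recency impression described above, the Python sketch below contrasts revenue growth over the last two quarters with the least-squares trend in market share over the full period. The quarterly figures and the helper name `trend_slope` are hypothetical; an ordinary least-squares slope is used here only as one transparent way to summarize direction over the whole window.

```python
def trend_slope(values):
    """Ordinary least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    var = sum((x - x_mean) ** 2 for x in range(n))
    return cov / var


# Hypothetical quarterly data: revenue jumped in the last two quarters,
# but market share has eroded across the full eight-quarter window.
revenue = [100, 98, 97, 99, 98, 100, 106, 112]
market_share = [0.24, 0.235, 0.23, 0.226, 0.22, 0.216, 0.212, 0.209]

recent_growth = (revenue[-1] - revenue[-3]) / revenue[-3]
share_slope = trend_slope(market_share)

print(f"Revenue growth over last two quarters: {recent_growth:.1%}")
print(f"Market-share trend per quarter: {share_slope:+.4f}")
if recent_growth > 0 and share_slope < 0:
    print("Warning: recent revenue gains coincide with a declining share trend.")
```

The design choice is deliberately simple: placing a short-window metric next to a full-window trend makes the conflict between them explicit instead of leaving it to memory.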

Furthermore, predictive modeling and scenario-based simulations help prevent overconfidence and reliance on simplistic heuristics by compelling executives to explore multiple potential futures rather than anchoring exclusively on past successes [149]. For example, AI-powered modeling in mergers and acquisitions can generate extensive valuation scenarios that account for economic shifts, competitive dynamics, and integration complexities, guarding against overly optimistic or biased acquisition decisions [150,151]. Similarly, Monte Carlo simulations in corporate risk management provide insight into potential financial, geopolitical, or operational disruptions, supporting risk assessment that goes beyond simplistic optimism. Real-time analytics systems also mitigate hindsight bias, the tendency to view outcomes retroactively as having been predictable [152]. Continuous monitoring and immediate alerts regarding emerging trends, such as declining revenues or customer churn, facilitate timely corrective action and reduce post-event rationalization of strategic missteps [153].
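Continuous monitoring of this kind can be sketched as a simple statistical alert on a streaming metric. The Python example below keeps an exponentially weighted moving average (EWMA) of past weekly churn rates as a baseline and flags any week that exceeds it by a relative margin. The smoothing factor, the 25% tolerance, the function name `ewma_alerts`, and the data are illustrative assumptions, not a recommended configuration.

```python
def ewma_alerts(series, alpha=0.3, tolerance=0.25):
    """Flag points that exceed the EWMA of earlier points by more than `tolerance`.

    alpha: weight given to the newest observation when updating the baseline.
    tolerance: relative rise over the baseline that triggers an alert (0.25 = 25%).
    Returns a list of (index, value, baseline) tuples for flagged points.
    """
    if not series:
        return []
    baseline = series[0]  # seed the baseline with the first observation
    alerts = []
    for i, value in enumerate(series[1:], start=1):
        if value > baseline * (1 + tolerance):
            alerts.append((i, value, baseline))
        # Update the baseline only after the check, so a spike is compared
        # against history rather than against itself.
        baseline = alpha * value + (1 - alpha) * baseline
    return alerts


# Hypothetical weekly churn rates (% of customers lost per week).
weekly_churn = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 2.9, 3.4, 3.6]
for week, value, baseline in ewma_alerts(weekly_churn):
    print(f"week {week}: churn {value:.1f}% vs baseline {baseline:.2f}%")
```

Because each alert is timestamped against the baseline that prevailed at that moment, the record also documents what was knowable when, which is the information hindsight tends to distort.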

However, big data analytics effectiveness in reducing biases remains contingent upon organizational governance structures, structured integration into decision-making processes, and active bias-awareness training [154]. Without appropriate governance mechanisms, subjective judgments or internal politics may continue overshadowing data-driven insights [155]. Finally, ethical considerations regarding algorithmic biases, data integrity, and interpretability of AI recommendations remain essential, as blindly trusting analytics without critical scrutiny could inadvertently perpetuate biases rather than mitigate them [156].

4.1.2. Comprehensive Pattern Detection

One significant advantage of big data analytics in reducing cognitive biases is its capacity for comprehensive pattern detection at a scale far exceeding human cognitive abilities [157]. Regardless of expertise, human executives face inherent limitations in processing extensive, multidimensional datasets, often resulting in misinterpretation, selective attention, and reliance on preconceived notions. By contrast, big data analytics uses machine learning algorithms and statistical methods to uncover hidden patterns and relationships within vast datasets. By systematically analyzing large-scale data across multiple variables and extended time horizons, these models introduce objectivity into strategic decision processes. This approach proves especially effective against confirmation, anchoring, and representativeness biases, keeping decisions grounded in the full range of available data rather than selectively filtered subsets [158]. Specifically, big data-driven pattern detection counters confirmation bias through automated hypothesis testing and anomaly detection. Executives traditionally begin with preconceived assumptions based on personal experience or anecdotal evidence, selectively seeking data that support these beliefs while disregarding conflicting information [159]. Big data models avoid this limitation by systematically evaluating the relevant data and identifying correlations, trends, and outliers that challenge conventional wisdom. For instance, retail executives might presume that certain customer segments are unprofitable based on limited prior evidence, whereas advanced analytics could reveal hidden profitability through granular insight into cross-category spending, seasonal behavior, or cost dynamics [160,161,162]. These analytical capabilities offer critical reality checks, prompting executives to reevaluate outdated assumptions and improving strategic accuracy [163].
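The automated hypothesis testing mentioned above can be illustrated with a permutation test, one standard nonparametric way to check whether an observed difference between two groups could plausibly be due to chance. In the Python sketch below, the per-customer margins for a "presumed unprofitable" segment and a reference segment are hypothetical, as is the function name `permutation_test`; a production system would run such tests across many segments and correct for multiple comparisons.

```python
import random
import statistics


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """One-sided permutation test of H1: mean(group_b) > mean(group_a).

    Returns (observed_difference, p_value), where the p-value is the share of
    random relabelings whose difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = statistics.mean(group_b) - statistics.mean(group_a)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign customers to the two segments
        diff = statistics.mean(pooled[n_a:]) - statistics.mean(pooled[:n_a])
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the p-value strictly positive.
    return observed, (extreme + 1) / (n_permutations + 1)


# Hypothetical annual margin per customer, including cross-category spending.
reference_segment = [42, 38, 45, 40, 37, 44, 41, 39, 43, 40]
presumed_unprofitable = [47, 52, 44, 50, 49, 55, 46, 51, 48, 53]

diff, p = permutation_test(reference_segment, presumed_unprofitable)
print(f"Mean margin difference: {diff:.1f}, one-sided p-value: {p:.4f}")
```

A small p-value here would not prove the executives’ assumption wrong in every respect, but it would flag the assumption as one that the data do not support and that deserves re-examination.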

Additionally, big data analytics provides insights into counterintuitive correlations and complex cause-effect relationships often overlooked by heuristic-driven executive decision-making. Executives traditionally rely on simplified mental models and rules of thumb that inadequately capture the complexity of consumer behavior, financial markets, and technological adoption trends [164]. Machine learning algorithms can examine numerous interactions across diverse data streams, discovering unexpected relationships that challenge established industry norms [165]. For example, detailed data analysis might reveal that extensive product discounting attracts primarily transient, price-sensitive buyers rather than fostering lasting loyalty, thus contradicting prevailing business strategies. Such findings encourage executives to refine strategic approaches based on nuanced, real-world behavioral insights instead of oversimplified assumptions [166].
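A simple cohort comparison can surface the discounting pattern described above. The Python sketch below computes 12-month repeat-purchase rates for customers acquired with and without a discount. The records, the field names `acquired_on_discount` and `repurchased_within_12m`, and the function name are hypothetical; a real analysis would also need to control for confounders such as product category and acquisition channel before drawing causal conclusions.

```python
from collections import defaultdict


def repeat_rate_by_cohort(customers):
    """Share of customers in each acquisition cohort who bought again."""
    totals = defaultdict(int)
    repeaters = defaultdict(int)
    for c in customers:
        cohort = "discount" if c["acquired_on_discount"] else "full_price"
        totals[cohort] += 1
        repeaters[cohort] += int(c["repurchased_within_12m"])
    return {cohort: repeaters[cohort] / totals[cohort] for cohort in totals}


# Hypothetical customer records: ten acquired on discount, ten at full price.
customers = (
    [{"acquired_on_discount": True, "repurchased_within_12m": r}
     for r in [True, False, False, False, True, False, False, False, False, False]]
    + [{"acquired_on_discount": False, "repurchased_within_12m": r}
       for r in [True, True, False, True, False, True, True, False, True, False]]
)

print(repeat_rate_by_cohort(customers))
```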

Moreover, the real-time adaptive capabilities of big data analytics can substantially mitigate biases associated with historical anchoring and inertia-driven decision-making [167]. Executives frequently rely on outdated strategies rooted in past experience or static assumptions that no longer reflect current market dynamics. Real-time processing and streaming analytics continuously update organizational insights, allowing executives to adapt rapidly to evolving conditions such as technological disruption, shifting consumer preferences, and competitive threats [168]. Big data analytics also addresses cognitive distortions at the organizational level, particularly groupthink in collective decision-making. It can act as a neutral arbiter, providing empirical evidence that reduces reliance on hierarchical dynamics and prevailing consensus. For instance, when an executive committee strongly favors a particular strategic initiative, analytics can independently evaluate its feasibility and expected outcomes, ensuring that the decision incorporates rigorous empirical analysis rather than simply reinforcing internal group beliefs [169,170].

4.1.3. Filtering Out Irrelevancies

A fundamental challenge in human decision-making is susceptibility to saliency bias: the tendency to overweight highly noticeable but irrelevant information while undervaluing subtle yet statistically significant variables [171]. This bias is particularly pronounced in executive decision-making, recruitment, financial forecasting, and risk management, frequently leading to suboptimal judgments and outcomes. AI-driven analytics can counteract this bias by systematically isolating critical predictive factors in large datasets while disregarding irrelevant, emotionally charged, or attention-grabbing elements [172]. Unlike human decision-makers, who are influenced by experience, narratives, cognitive shortcuts, and external pressures, AI models weight data according to its estimated predictive relevance. For instance, AI-powered hiring algorithms can reduce unconscious demographic bias by excluding factors such as names, gender, ethnicity, or appearance and focusing on criteria such as skills, qualifications, and past performance. Because the remaining variables can act as proxies for the excluded attributes, such systems still require auditing; when designed and monitored carefully, however, this approach supports merit-based hiring aligned with genuine talent requirements and can improve organizational equity and efficiency [173,174,175].
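The attribute-masking step described above can be sketched as a preprocessing function that removes a configurable set of protected or identifying fields before a candidate record reaches any scoring step. The field names, the contents of `PROTECTED_FIELDS`, and the function name `mask_candidate` are hypothetical. As the docstring notes, masking fields does not by itself remove bias carried by correlated proxy variables, which is why auditing remains necessary.

```python
# Hypothetical set of fields to withhold from any downstream scoring model.
PROTECTED_FIELDS = {"name", "gender", "ethnicity", "date_of_birth", "photo_url"}


def mask_candidate(record, protected=PROTECTED_FIELDS):
    """Return a copy of `record` without protected or identifying fields.

    Other fields (e.g., postcode or school attended) may still act as proxies
    for protected attributes, so masking alone is not a fairness guarantee.
    """
    return {key: value for key, value in record.items() if key not in protected}


candidate = {
    "name": "A. Applicant",
    "gender": "F",
    "ethnicity": "unspecified",
    "years_experience": 6,
    "skills": ["sql", "forecasting"],
    "certifications": 2,
}

print(mask_candidate(candidate))
```

Returning a new dictionary rather than deleting keys in place keeps the original record available for the separate fairness audits that masking cannot replace.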

In financial decision-making and investment analysis, saliency bias often emerges when investors react excessively to short-term market volatility, sensational news, or emotional triggers, neglecting long-term fundamentals and statistically validated indicators [176]. AI-driven investment models counter this bias by using deep learning, time-series forecasting, and sentiment analysis to identify patterns in meaningful data rather than superficial market fluctuations. These methods help investors avoid reactionary decisions, such as panic-selling during financial crises, by providing robust insights into long-term asset performance and economic trends [177]. Similarly, AI-based analytics can strengthen corporate risk assessment and fraud detection by reducing subjective interpretations shaped by intuition or historical biases. AI platforms systematically filter large datasets to identify genuine risk signals, reducing the influence of demographic background, institutional affiliation, or outdated assumptions [178]. For example, AI-powered credit scoring models can prioritize behavioral indicators such as repayment history and spending patterns over demographic factors. This approach can improve fairness and financial inclusion while supporting more accurate, risk-sensitive lending decisions [179,180].

Furthermore, in corporate strategic planning, AI-driven analytics can keep executives from anchoring decisions to outdated or irrelevant benchmarks and past successes. Traditional strategic planning frequently relies on historical metrics or experiences that may no longer reflect current market realities, competitive dynamics, or technological disruption. AI-driven systems mitigate these risks by dynamically evaluating recent consumer behavior, macroeconomic conditions, and competitive pressures specific to the current strategic context, promoting adaptability and precision in decision-making [181,182,183]. Ultimately, AI’s capability to filter out irrelevant information represents not merely an incremental efficiency gain but a shift toward more rational, data-grounded organizational decisions across multiple business domains [184]. However, this potential must be complemented by rigorous oversight, continuous model validation, and ethical governance frameworks, because AI can itself propagate biases if trained on biased datasets or selectively overridden by human judgment. Companies that integrate these bias-filtering mechanisms effectively are well positioned to gain competitive advantage by making more consistent, accurate, and strategically sound decisions in complex, rapidly evolving business environments [185,186].

4.1.4. Consistency and Repetition

A critical limitation of human decision-making is its inconsistency. Executive and professional judgments frequently vary with emotional fluctuations, fatigue, cognitive load, external pressures, and other contextual influences [187]. Even seasoned decision-makers may reach divergent conclusions under identical conditions, introducing randomness, emotional bias, and unpredictability into strategic choices. AI-driven decision models address these limitations by applying the same logical frameworks and reasoning procedures every time, unaffected by emotional states or mental fatigue [188,189]. Unlike human decision-makers, a deterministic model returns the same output whenever it receives the same input, significantly reducing variability and arbitrary bias in decision processes.

This consistency is particularly advantageous in corporate strategy formulation and financial forecasting. For instance, executives reviewing investment opportunities may shift their stance because of transient moods or temporary market fluctuations rather than stable, objective evaluation criteria. AI-powered financial modeling and forecasting systems reduce such inconsistencies, helping ensure that decisions reflect empirical data and probabilistic analysis rather than subjective sentiment or emotional reactions [190,191]. Consequently, organizations implementing AI-driven decision-support tools can achieve more accurate, reliable, and rational long-term strategic planning that is less vulnerable to emotional or transient influences [192].

Human inconsistency also significantly affects hiring and talent management, as recruitment professionals often inadvertently apply varying evaluation standards to similar candidates because of fatigue, cognitive overload, or irrelevant contextual factors [193]. Behavioral research consistently shows that candidate assessments can differ depending on interview timing, the quality of the preceding candidate, or the evaluator's momentary psychological state. AI-driven hiring algorithms remove this form of variability by adhering strictly to predefined selection criteria and evaluating candidate attributes, including experience, skill level, and demonstrated performance, in the same way every time [194]. For example, an AI model that ranks candidates on the same data will produce the same ranking regardless of interview timing or an evaluator's mood. This standardization can enhance fairness, predictability, and efficiency in recruitment and reduce the influence of subconscious evaluator biases, provided the selection criteria themselves are unbiased.
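The consistency property described above can be illustrated with a minimal sketch of rule-based candidate ranking: every candidate is scored by the same fixed formula, and ties are broken deterministically, so the ranking does not depend on the order in which candidates are reviewed. The attributes, weights, experience cap, and names such as `score` and `rank` are all hypothetical and chosen only for illustration; they are not a recommended selection policy.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str
    years_experience: float  # hypothetical criterion
    skill_score: float       # hypothetical assessment result, 0-100
    performance: float       # hypothetical prior-performance rating, 0-100


# Predefined weights, fixed before any candidate is reviewed (illustrative values).
WEIGHTS = {"years_experience": 2.0, "skill_score": 0.5, "performance": 0.3}
MAX_CREDITED_YEARS = 15  # cap so experience cannot dominate the score


def score(c: Candidate) -> float:
    """Apply the same weighted formula to every candidate."""
    return (WEIGHTS["years_experience"] * min(c.years_experience, MAX_CREDITED_YEARS)
            + WEIGHTS["skill_score"] * c.skill_score
            + WEIGHTS["performance"] * c.performance)


def rank(candidates: List[Candidate]) -> List[str]:
    """Rank by score (highest first); ties are broken by name, so the order never
    depends on the sequence in which candidates were reviewed."""
    ordered = sorted(candidates, key=lambda c: (-score(c), c.name))
    return [c.name for c in ordered]


pool = [
    Candidate("cand_A", 5, 80, 70),
    Candidate("cand_B", 10, 60, 90),
    Candidate("cand_C", 2, 90, 80),
]
print(rank(pool))                  # ['cand_B', 'cand_C', 'cand_A']
print(rank(list(reversed(pool))))  # same order regardless of review sequence
```

Note that consistency is not the same as fairness: if the weights or input attributes encode historical bias, this procedure will apply that bias just as consistently.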

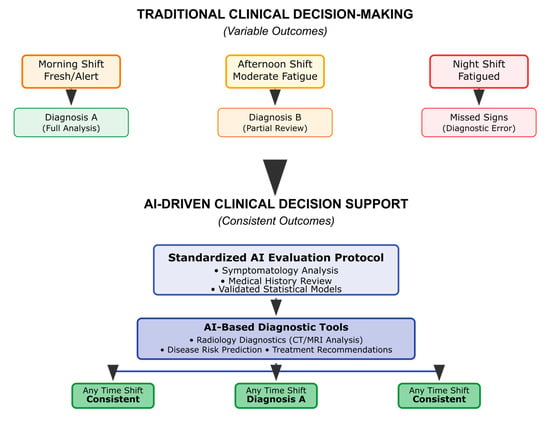

Similarly, clinical decision-making and healthcare diagnostics frequently suffer from variability in human judgment attributable to cognitive fatigue, workload stress, and patient-specific contextual factors. Studies show that clinicians' decisions can differ significantly depending on timing, such as assessments made at the end of tiring shifts or immediately after complex patient cases [195]. AI-driven diagnostic tools and clinical decision-support systems reduce these inconsistencies by applying standardized evaluation protocols based on symptomatology, medical history, and validated statistical models. Technologies including AI-based radiology diagnostics, disease-risk prediction algorithms, and AI-guided treatment recommendation systems promote uniformity and accuracy independent of external contextual or emotional factors (Figure 8). This systematic approach can reduce diagnostic errors and improve patient outcomes by supporting consistent treatment protocols grounded in objective clinical evidence [196].

Figure 8. AI-driven consistency in clinical decision-making.

From corporate governance and leadership perspectives, integrating AI-driven consistency into decision-making frameworks supports sustained organizational stability and strategic coherence [197]. Executives frequently shift strategies impulsively in response to transient events, media narratives, or short-term market volatility, producing erratic strategic trajectories and organizational inefficiencies. AI analytics platforms address this instability by providing structured, algorithmically validated analyses grounded in historical patterns, probabilistic forecasting, and comprehensive risk assessment [198]. Companies adopting AI-enhanced scenario simulations and strategic planning tools can thereby avoid reactionary, short-term thinking and execute data-informed, long-term strategies more steadily, strengthening their competitive position in increasingly dynamic business environments [199].

4.2. Role of AI and Machine Learning in Detecting and Countering Bias

Artificial intelligence (AI) and machine learning (ML) have transformed bias detection and mitigation in decision-making, particularly in high-stakes environments where human cognitive limitations and systemic biases have historically influenced outcomes. Whether unconscious or structural, bias often goes undetected because it is deeply embedded in established business practices, decision frameworks, and historical datasets [200]. AI and ML have introduced data-driven methods that enable organizations to identify, quantify, and mitigate bias in ways that were previously impractical. One of ML's most impactful applications in bias detection is its capacity to analyze past decisions and decision-making processes at scale, statistically identifying patterns that indicate bias [201]. Using pattern recognition techniques, anomaly detection algorithms, and fairness-aware computational frameworks, AI systems can scrutinize historical data, detect systematic disparities, and provide quantifiable evidence of biased trends. This capability is particularly valuable in corporate hiring, financial lending, law enforcement, healthcare diagnostics, and algorithmic governance, where unchecked human bias can lead to inequitable, inefficient, or legally problematic decisions [202].

4.2.1. Bias Detection—Identifying and Correcting Systemic Inequities in Decision-Making Through ML and AI

Machine learning significantly enhances bias detection by analyzing historical decision data to reveal correlations with irrelevant or ethically problematic factors. In financial contexts, ML algorithms can examine historical lending data to identify hidden demographic biases, such as race or gender influencing loan approvals among applicants with identical financial qualifications [203]. Similarly, within corporate environments, AI analytics can examine hiring and promotion records to pinpoint statistical patterns that may signal systemic bias based on characteristics such as gender, age, or ethnicity, where the disparities cannot be justified by objective performance measures [204]. By quantifying these biases clearly and objectively, AI enables organizations to correct discriminatory practices proactively and bring decisions closer to merit-based criteria [205].

ML algorithms can also detect bias proactively by flagging anomalies in real-time decision processes. Whereas human judgments are susceptible to cognitive shortcuts and subjective biases, AI systems can perform real-time anomaly detection that highlights deviations from fair or optimal decisions. For instance, in judicial contexts, AI tools can monitor court decisions to detect disparities promptly, helping reduce biases linked to race, judge fatigue, or personal heuristics [206]. Similarly, in healthcare settings, AI-driven diagnostics can base treatment recommendations on medical data rather than on demographic characteristics historically associated with biased medical decisions, helping correct disparities in treatment and diagnosis among marginalized populations [207,208].
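A common first statistical check in the kind of lending audit described above is to compare approval rates across groups among applicants with matched qualifications. The minimal sketch below computes a disparate impact ratio (protected group's approval rate divided by the reference group's) and flags it against a 0.8 cutoff. The 0.8 value is the "four-fifths" rule of thumb from US employment-selection guidelines and is used here only as an illustrative threshold, not as a legal standard for lending. The records, group labels, and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) records."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact(records, protected: str, reference: str) -> float:
    """Ratio of the protected group's approval rate to the reference group's."""
    rates = approval_rates(records)
    return rates[protected] / rates[reference]


# Hypothetical audit sample: applicants already matched on financial qualifications.
records = ([("group_a", True)] * 45 + [("group_a", False)] * 55
           + [("group_b", True)] * 70 + [("group_b", False)] * 30)

ratio = disparate_impact(records, protected="group_a", reference="group_b")
flagged = ratio < 0.8  # illustrative "four-fifths" threshold
print(f"disparate impact ratio = {ratio:.2f}, flagged for review = {flagged}")
# prints "disparate impact ratio = 0.64, flagged for review = True"
```

A flag of this kind indicates a disparity worth investigating, not proof of discrimination; an audit would follow up with controls for legitimate risk factors.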

However, ML systems are not inherently bias-free; their effectiveness depends heavily on the quality and neutrality of their training data. Historical biases embedded in datasets related to hiring, lending, or criminal sentencing may inadvertently transfer into AI algorithms, perpetuating rather than eliminating discrimination [209]. Rigorous ethical reviews, fairness audits, and algorithmic transparency are therefore crucial safeguards. Techniques including adversarial debiasing, algorithmic auditing, and synthetic data augmentation are actively pursued in fairness-aware machine learning research to prevent AI systems from replicating or exacerbating existing biases [210]. Consequently, as organizations integrate AI more deeply into decision-making, embedding robust fairness measures, continuous bias detection, and ethical oversight frameworks becomes essential if AI is to function as a corrective mechanism against systemic inequities rather than inadvertently reinforcing them [211].
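The techniques named above (adversarial debiasing, auditing, synthetic augmentation) are too involved for a short example, so the following sketch substitutes a simpler, well-known pre-processing method from the same fairness-aware family: reweighing. Each (group, label) combination in the training data receives the weight P(group) × P(label) / P(group, label), which makes group membership and outcome statistically independent in the weighted data before a model is trained on it. This is a swapped-in illustration, not a method the text describes; the data and function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Sample = Tuple[str, int]  # (group, label) with label 1 = favorable outcome


def reweigh(samples: List[Sample]) -> Dict[Sample, float]:
    """Weight each (group, label) cell by P(group) * P(label) / P(group, label),
    so that group and label are independent in the weighted training data."""
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    joint_counts = Counter(samples)
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for (g, y) in joint_counts
    }


def weighted_positive_rate(samples: List[Sample], weights: Dict[Sample, float],
                           group: str) -> float:
    """Share of (weighted) favorable labels within one group."""
    total = sum(weights[s] for s in samples if s[0] == group)
    positive = sum(weights[s] for s in samples if s[0] == group and s[1] == 1)
    return positive / total


# Hypothetical historical hiring data: group_a was hired at 20%, group_b at 50%.
data = ([("group_a", 1)] * 20 + [("group_a", 0)] * 80
        + [("group_b", 1)] * 50 + [("group_b", 0)] * 50)

weights = reweigh(data)
for grp in ("group_a", "group_b"):
    # After reweighing, both groups show the same weighted hiring rate (0.35).
    print(grp, round(weighted_positive_rate(data, weights, grp), 3))
```

Reweighing only adjusts the training distribution; it does not remove proxies for group membership from the features, which is one reason the text stresses continuous auditing alongside such techniques.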

4.2.2. AI-Driven Decision Support Systems—Counteracting Human Biases Through Algorithmic Guidance

AI-driven decision support systems (DSSs) have emerged as critical tools in corporate strategy, financial analysis, and policy-making, offering data-backed alternatives to intuition-based decisions while mitigating cognitive biases such as confirmation, availability, and anchoring bias. Unlike traditional analytical tools, AI-based DSSs do more than provide information: they participate actively in decision-making by generating predictive insights, modeling alternative scenarios, and systematically challenging entrenched assumptions through computational reasoning [212]. By presenting decision-makers with alternative scenarios and outcomes, these systems directly counter tunnel vision and self-reinforcing cognitive distortions. For instance, in strategic planning, AI-powered tools can simulate multiple market entry scenarios, including options that executives initially dismissed because of personal biases or preconceptions about consumer behavior or competition. By grounding these scenarios in robust predictive analytics, economic modeling, and consumer sentiment analysis, AI pushes decision-makers to adopt broader, evidence-based perspectives instead of relying on familiar but biased heuristics [213,214,215].
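The scenario-simulation idea can be sketched with a simple Monte Carlo comparison in which every option, including one that executives might have dismissed, is evaluated under the same explicit assumptions. The three scenarios, their success probabilities, and their payoffs (in $M) are entirely hypothetical, and a real DSS would derive such inputs from the predictive and economic models the text mentions rather than hard-coding them.

```python
import random
import statistics
from typing import Dict, Tuple

# Hypothetical scenarios: (probability of success, payoff if success, payoff if failure), $M.
SCENARIOS: Dict[str, Tuple[float, float, float]] = {
    "expand_home_market": (0.70, 40.0, -10.0),
    "enter_region_x": (0.45, 120.0, -30.0),   # stands in for an initially dismissed option
    "partner_then_enter": (0.60, 70.0, -15.0),
}


def simulate(p_success: float, gain: float, loss: float, trials: int,
             rng: random.Random) -> Tuple[float, float]:
    """Return (mean payoff, probability of a loss) over Monte Carlo trials."""
    outcomes = [gain if rng.random() < p_success else loss for _ in range(trials)]
    return statistics.mean(outcomes), sum(o < 0 for o in outcomes) / trials


def compare(trials: int = 20_000, seed: int = 7) -> Dict[str, Tuple[float, float]]:
    rng = random.Random(seed)  # fixed seed keeps the analysis reproducible
    return {name: simulate(*params, trials, rng) for name, params in SCENARIOS.items()}


results = compare()
for name, (mean_payoff, p_loss) in sorted(results.items(), key=lambda kv: -kv[1][0]):
    print(f"{name:20s} expected payoff {mean_payoff:6.1f}  P(loss) {p_loss:.2f}")
```

Under these made-up inputs the analytical expected payoffs are 25.0, 37.5, and 36.0, so the "dismissed" option has the highest expected value but also the highest probability of loss (0.55). Surfacing both numbers side by side is the point: the simulation does not decide, it makes the trade-off explicit.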

AI-driven DSSs are particularly influential in high-stakes financial environments, such as hedge funds and algorithmic trading firms that prioritize data-driven logic over emotional or hierarchical biases [216]. Bridgewater Associates, a leading hedge fund, uses algorithmic systems to ensure that investment decisions reflect empirical credibility and demonstrated performance (“believability”) rather than executive seniority or organizational influence, preventing social or hierarchical biases from distorting investment choices [217]. Similarly, AI financial modeling systems support less biased portfolio management by forecasting market movements and enabling fund managers to test multiple portfolio strategies rigorously through data-backed simulations. Beyond finance, AI decision-support tools enhance corporate governance and organizational decision-making by counteracting personal favoritism, institutional inertia, and legacy biases. In HR and talent management, AI-based systems can assess promotion and resource allocation decisions using empirical data, including productivity metrics and leadership performance, rather than subjective managerial evaluations [218,219]. Strategic planning is similarly transformed: AI-driven analytics prompt executives to reconsider outdated business models regularly by continuously integrating competitive analysis, consumer behavior insights, and emerging technology trends, supporting forward-looking decisions grounded in objective data [220].
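The believability principle can be illustrated with a toy weighted vote in which each participant's view counts in proportion to a credibility score rather than one-person-one-vote or rank. This is a hypothetical sketch inspired by the principle as described above, not Bridgewater's actual (proprietary) system; the participants, scores, 0.5 threshold, and the function name are all assumptions.

```python
from typing import Dict, Tuple


def believability_weighted_decision(votes: Dict[str, bool],
                                    believability: Dict[str, float],
                                    threshold: float = 0.5) -> Tuple[float, bool]:
    """Weight each vote by the voter's believability instead of by rank.

    votes: person -> True (support) or False (oppose).
    believability: person -> non-negative weight (hypothetically from track record).
    Returns (weighted share of support, whether the proposal passes).
    """
    total = sum(believability[p] for p in votes)
    if total <= 0:
        raise ValueError("total believability must be positive")
    support = sum(believability[p] for p, v in votes.items() if v)
    share = support / total
    return share, share > threshold


votes = {"senior_exec": True, "vp": True, "manager": True,
         "analyst_1": False, "analyst_2": False}
believability = {"senior_exec": 0.2, "vp": 0.3, "manager": 0.4,
                 "analyst_1": 0.9, "analyst_2": 0.8}

headcount_passes = sum(votes.values()) > len(votes) / 2
share, passes = believability_weighted_decision(votes, believability)
print(f"headcount majority: {headcount_passes}; "
      f"weighted support {share:.2f} -> passes: {passes}")
```

Here a simple majority (3 of 5) would approve the proposal, but the two participants with the strongest hypothetical track records oppose it, so the weighted support is about 0.35 and the proposal fails. The hard part in practice is estimating believability fairly, which this sketch takes as given.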

Despite these advantages, AI-driven DSSs also have inherent limitations: AI algorithms can perpetuate biases if they are trained on biased historical datasets or incorporate flawed assumptions [221]. For example, an AI hiring model might unintentionally replicate gender biases present in historical hiring data, mistaking these patterns for meritocratic standards rather than recognizing them as reflections of systemic inequity. Addressing such issues requires fairness-aware machine learning techniques, adversarial debiasing methods, and rigorous, continuous auditing to detect and mitigate embedded biases. Ultimately, AI should function as an augmentative decision-support tool rather than an absolute authority, with human oversight preserved so that AI-driven recommendations receive appropriate scrutiny and ethical consideration [222].

4.2.3. Cognitive Collaboration—AI as a Real-Time Debiasing Partner in Decision-Making

The concept of cognitive collaboration, in which AI actively engages with human decision-makers to identify and mitigate biases during real-time strategic deliberations, represents a significant advance beyond traditional, passive AI decision support. Using natural language processing (NLP), sentiment analysis, and real-time analytics, cognitive AI assistants monitor executive discussions, detect cognitive distortions such as groupthink, overconfidence, and confirmation bias, and intervene when necessary to prompt balanced, empirically informed consideration [223,224]. These interactive systems do not replace human intuition; they act as proactive corrective mechanisms that keep strategic deliberations grounded in rational analysis and comprehensive evaluation rather than subjective bias.

AI-driven cognitive collaboration is particularly effective in executive contexts such as strategic planning meetings, investment discussions, and boardroom decision-making, where bias-driven narratives or reliance on past successes often dominate. AI cognitive assistants function as real-time “debiasing coaches,” analyzing ongoing conversations to identify blind spots and biases and prompting participants to consider overlooked risks or alternative perspectives. For instance, during market expansion planning, if discussion disproportionately emphasizes potential gains without adequately addressing risks or competitive challenges, AI can highlight this imbalance and recommend examining historical precedents and risk factors. Similarly, when executives assume that a new situation will mirror past successes (representativeness bias), AI interventions encourage independent assessment of current market conditions, helping keep strategies data-driven rather than emotionally influenced [225,226,227].
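The gain-versus-risk imbalance example can be reduced to a very small sketch: count gain-framed and risk-framed words in a meeting transcript and emit a nudge when one side dominates. The keyword lexicons, the 3:1 threshold, the prompt wording, and the function names are all hypothetical; an actual cognitive assistant would rely on trained NLP and sentiment models rather than keyword lists, so this only illustrates the detect-then-prompt loop.

```python
import re
from typing import Optional, Tuple

# Hypothetical, deliberately tiny lexicons; a production assistant would rely on a
# trained NLP model rather than keyword lists.
GAIN_TERMS = {"growth", "upside", "revenue", "opportunity", "gain", "profit"}
RISK_TERMS = {"risk", "downside", "competitor", "competition", "loss", "threat", "cost"}


def framing_balance(transcript: str) -> Tuple[int, int]:
    """Count gain-framed and risk-framed words in a meeting transcript."""
    tokens = re.findall(r"[a-z]+", transcript.lower())
    gains = sum(t in GAIN_TERMS for t in tokens)
    risks = sum(t in RISK_TERMS for t in tokens)
    return gains, risks


def debias_prompt(transcript: str, max_ratio: float = 3.0) -> Optional[str]:
    """Return a nudge if gain talk outweighs risk talk by more than max_ratio."""
    gains, risks = framing_balance(transcript)
    if gains > max_ratio * max(risks, 1):
        return (f"Discussion emphasizes upside ({gains} gain terms vs {risks} risk terms). "
                "Consider reviewing historical precedents and key risk factors.")
    return None


one_sided = ("Entering this market is a huge growth opportunity. Revenue upside is strong, "
             "profit margins look great, and the growth opportunity is clear.")
balanced = "Growth is possible, but the main risk is competition and the cost of entry."

print(debias_prompt(one_sided))  # nudge: 7 gain terms vs 0 risk terms
print(debias_prompt(balanced))   # None
```

Keeping the intervention as a suggestion rather than a block reflects the text's framing of AI as a partner that prompts reflection, not a gatekeeper.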