ECG Waveform Segmentation via Dual-Stream Network with Selective Context Fusion

Abstract

1. Introduction

1.1. Traditional ECG Waveform Segmentation Methods

1.2. ECG Waveform Segmentation Based on Fixed-Length Heartbeat Slicing

1.3. Deep Learning-Based ECG Waveform Segmentation Methods

2. Materials and Methods

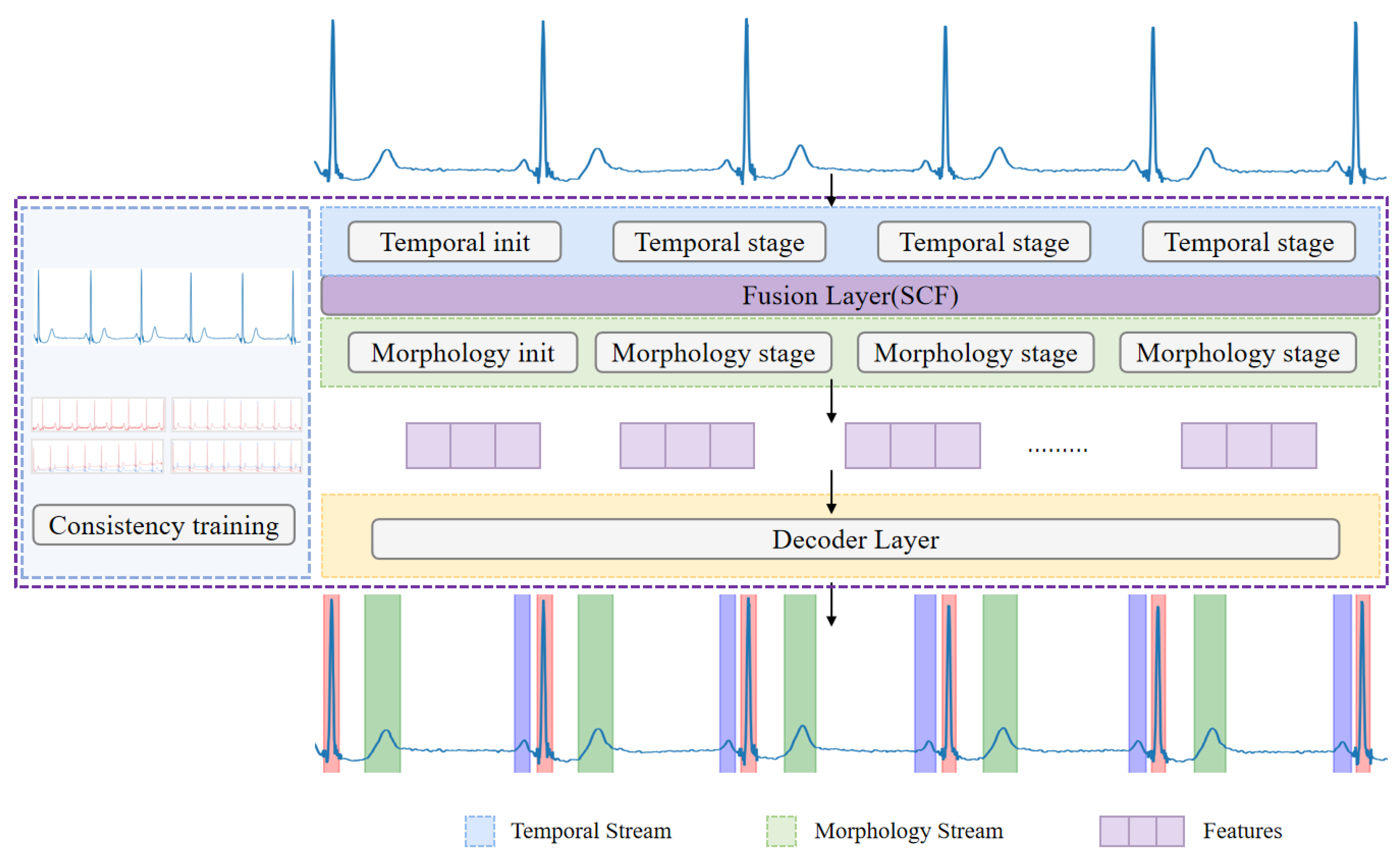

2.1. Model Architecture

2.1.1. Temporal Stream

2.1.2. Morphology Stream

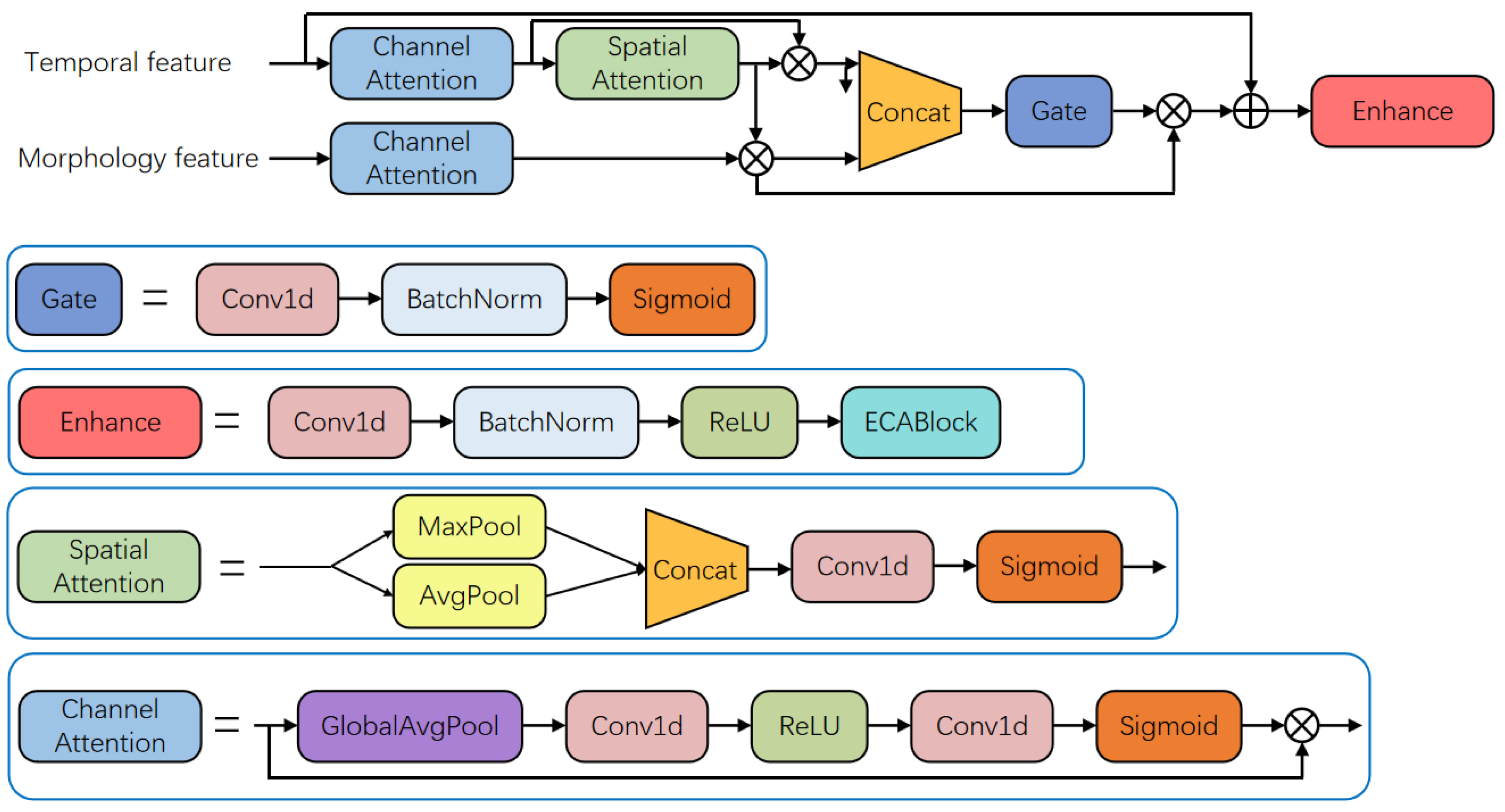

2.1.3. Feature Fusion Module

2.1.4. Decoder

2.1.5. Training Configuration

2.2. Dataset

2.3. Evaluation Metrics
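The result tables report precision (Pre), recall (Rec) and F1, all in percent. The formulas below assume the conventional definitions. TP, FP and FN count true-positive, false-positive and false-negative detections. For waveform delineation, these are usually judged against reference annotations within a tolerance window.

```latex
\mathrm{Pre} = \frac{TP}{TP + FP}, \qquad
\mathrm{Rec} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \mathrm{Pre} \cdot \mathrm{Rec}}{\mathrm{Pre} + \mathrm{Rec}}
```

For example, Pre = 96.79% and Rec = 98.90% give F1 = 2(96.79)(98.90)/(96.79 + 98.90) ≈ 97.83%, which matches the corresponding row of the results.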

3. Results

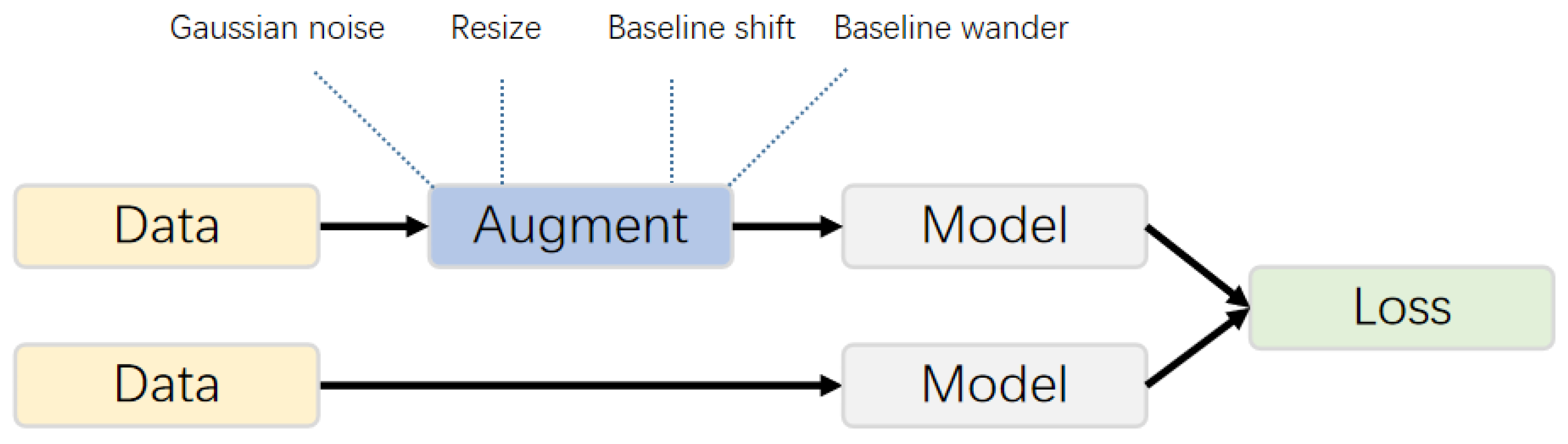

3.1. Data Augmentation and Training

3.2. Ablation Experiment

3.3. Comparison Experiment

| Method | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Sereda [30] | 84.08 | 98.23 | 90.41 |
| Moskalenko [31] | 95.59 | 98.75 | 97.14 |
| Liang [38] | 95.85 | 98.30 | 97.05 |
| Tutuko [37] | 95.53 | 98.22 | 96.86 |
| Joung [27] | 96.23 | 98.73 | 97.46 |
| Our method | 96.79 | 98.90 | 97.83 |
| Sereda [30] + noise | 72.35 | 83.62 | 77.58 |
| Moskalenko [31] + noise | 82.40 | 85.15 | 83.75 |
| Liang [38] + noise | 83.25 | 86.40 | 84.79 |
| Tutuko [37] + noise | 82.31 | 85.26 | 83.76 |
| Joung [27] + noise | 84.10 | 86.85 | 85.45 |
| Our method + noise | 87.60 | 89.40 | 88.49 |
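The F1 column of the first comparison table can be cross-checked against its precision and recall columns. The sketch below assumes that F1 is the harmonic mean 2PR/(P+R) of the reported precision P and recall R; the tables themselves do not state this. The helper names `f1_from_pr` and `check_rows` are illustrative only.

```python
"""Cross-check the F1 column of the first comparison table.

Assumption (not stated in the tables): F1 is the harmonic mean of the
reported precision and recall. Helper names are illustrative only.
"""

# (method, precision %, recall %, reported F1 %) copied from the first comparison table
ROWS = [
    ("Sereda [30]", 84.08, 98.23, 90.41),
    ("Moskalenko [31]", 95.59, 98.75, 97.14),
    ("Liang [38]", 95.85, 98.30, 97.05),
    ("Tutuko [37]", 95.53, 98.22, 96.86),
    ("Joung [27]", 96.23, 98.73, 97.46),
    ("Our method", 96.79, 98.90, 97.83),
    ("Sereda [30] + noise", 72.35, 83.62, 77.58),
    ("Moskalenko [31] + noise", 82.40, 85.15, 83.75),
    ("Liang [38] + noise", 83.25, 86.40, 84.79),
    ("Tutuko [37] + noise", 82.31, 85.26, 83.76),
    ("Joung [27] + noise", 84.10, 86.85, 85.45),
    ("Our method + noise", 87.60, 89.40, 88.49),
]


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units in and out)."""
    return 2.0 * precision * recall / (precision + recall)


def check_rows(rows, tol: float = 0.02):
    """Return (method, recomputed F1, reported F1) for rows differing by more than tol points.

    tol leaves slack for the two-decimal rounding of the published precision and recall.
    """
    mismatches = []
    for name, precision, recall, reported in rows:
        recomputed = f1_from_pr(precision, recall)
        if abs(recomputed - reported) > tol:
            mismatches.append((name, round(recomputed, 2), reported))
    return mismatches


if __name__ == "__main__":
    for name, recomputed, reported in check_rows(ROWS):
        print(f"{name}: 2PR/(P+R) = {recomputed:.2f}, reported F1 = {reported:.2f}")
```

Run as a script, the check reports only the Sereda [30] row: about 90.61 recomputed versus 90.41 reported. Every other row of the comparison and ablation tables agrees with 2PR/(P+R) to within about 0.01 points. The single outlier could come from a different averaging scheme for that baseline or from a typo in the reported values. The check cannot tell which.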

| Method | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Sereda [30] | 90.25 | 96.80 | 93.41 |
| Moskalenko [31] | 96.15 | 97.82 | 96.98 |
| Liang [38] | 96.40 | 97.65 | 97.02 |
| Tutuko [37] | 96.20 | 97.56 | 96.87 |
| Joung [27] | 97.07 | 98.11 | 97.58 |
| Our method | 97.35 | 98.25 | 97.80 |
| Sereda [30] + noise | 75.80 | 82.45 | 78.98 |
| Moskalenko [31] + noise | 80.15 | 84.20 | 82.12 |
| Liang [38] + noise | 81.30 | 85.65 | 83.41 |
| Tutuko [37] + noise | 80.23 | 84.39 | 82.25 |
| Joung [27] + noise | 83.25 | 86.40 | 84.79 |
| Our method + noise | 84.95 | 87.60 | 86.25 |
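One way to read the "+ noise" rows is to compute how much F1 each method loses between the clean and noisy conditions. The sketch below only subtracts the reported F1 values of the two comparison tables and assumes nothing about how the noise was generated. The names `FIRST_TABLE`, `SECOND_TABLE` and `f1_drop` are illustrative.

```python
"""F1 loss under the "+ noise" condition, computed from the two comparison tables.

Only reported numbers are used; nothing is assumed about the noise itself.
Names are illustrative.
"""

# method -> (clean F1 %, noisy F1 %), first comparison table
FIRST_TABLE = {
    "Sereda [30]": (90.41, 77.58),
    "Moskalenko [31]": (97.14, 83.75),
    "Liang [38]": (97.05, 84.79),
    "Tutuko [37]": (96.86, 83.76),
    "Joung [27]": (97.46, 85.45),
    "Our method": (97.83, 88.49),
}

# method -> (clean F1 %, noisy F1 %), second comparison table
SECOND_TABLE = {
    "Sereda [30]": (93.41, 78.98),
    "Moskalenko [31]": (96.98, 82.12),
    "Liang [38]": (97.02, 83.41),
    "Tutuko [37]": (96.87, 82.25),
    "Joung [27]": (97.58, 84.79),
    "Our method": (97.80, 86.25),
}


def f1_drop(table):
    """Return (method, clean F1 - noisy F1) pairs sorted from smallest to largest drop."""
    drops = [(name, round(clean - noisy, 2)) for name, (clean, noisy) in table.items()]
    return sorted(drops, key=lambda pair: pair[1])


if __name__ == "__main__":
    for label, table in (("first table", FIRST_TABLE), ("second table", SECOND_TABLE)):
        for name, drop in f1_drop(table):
            print(f"{label}: {name:<16} -{drop:.2f} points")
```

On the reported values, the proposed method loses the least F1 in both tables (9.34 and 11.55 points). Joung [27] is next, at 12.01 and 12.79 points.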

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Gacek, T. ECG Signal Processing, Classification and Interpretation: A Comprehensive Framework of Computational Intelligence; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011.
2. Pan, J. A real-time QRS detection algorithm. IEEE Trans. Biomed. Eng. 1985, BME-32, 230–236.
3. Li, C. Detection of ECG characteristic points using wavelet transforms. IEEE Trans. Biomed. Eng. 1995, 42, 21–28.
4. Martínez, J. A wavelet-based ECG delineator: Evaluation on standard databases. IEEE Trans. Biomed. Eng. 2004, 51, 570–581.
5. Kalyakulina, A. Finding morphology points of electrocardiographic-signal waves using wavelet analysis. Radiophys. Quantum Electron. 2019, 61, 689–703.
6. Sabherwal, P. Independent detection of T-waves in single lead ECG signal using continuous wavelet transform. Cardiovasc. Eng. Technol. 2019, 14, 167–181.
7. Di Marco, L. A wavelet-based ECG delineation algorithm for 32-bit integer online processing. Biomed. Eng. Online 2011, 10, 23.
8. Benitez, D. A new QRS detection algorithm based on the Hilbert transform. Comput. Cardiol. 2000, 27, 379–382.
9. Mukhopadhyay, S. Time plane ECG feature extraction using Hilbert transform, variable threshold and slope reversal approach. In Proceedings of the International Conference on Communication and Industrial Application, Kolkata, India, 26–28 December 2011; pp. 1–4.
10. Martinez, A. Automatic electrocardiogram delineator based on the phasor transform of single lead recordings. In Proceedings of the Computers in Cardiology, Belfast, UK, 26–29 September 2010; pp. 987–990.
11. Graja, S. Hidden Markov tree model applied to ECG delineation. IEEE Trans. Instrum. Meas. 2005, 54, 2163–2168.
12. Akhbari, M. ECG segmentation and fiducial point extraction using multi hidden Markov model. Comput. Biol. Med. 2016, 79, 21–29.
13. Dubois, R. Automatic ECG wave extraction in long-term recordings using Gaussian mesa function models and nonlinear probability estimators. Comput. Methods Programs Biomed. 2007, 88, 217–233.
14. Kalyakulina, A. LUDB: A new open-access validation tool for electrocardiogram delineation algorithms. IEEE Access 2020, 8, 186181–186190.
15. Cimen, E. Arrhythmia classification via k-means based polyhedral conic functions algorithm. In Proceedings of the CSCI, Las Vegas, NV, USA, 15–17 December 2016; pp. 798–802.
16. Kiranyaz, S. Real-time patient-specific ECG classification by 1-D convolutional neural networks. IEEE Trans. Biomed. Eng. 2015, 63, 664–675.
17. Abrishami, H. Supervised ECG interval segmentation using LSTM neural network. In Proceedings of the IEEE BIOCOMP, Las Vegas, NV, USA, 19–21 March 2018; pp. 71–77.
18. Londhe, A. Semantic segmentation of ECG waves using hybrid channel-mix convolutional and bidirectional LSTM. Biomed. Signal Process. Control 2021, 63, 102162.
19. Camps, J. Deep learning based QRS multilead delineator in electrocardiogram signals. In Proceedings of the CinC, Maastricht, The Netherlands, 23–26 September 2018; Volume 45, pp. 1–4.
20. Peimankar, A. DENS-ECG: A deep learning approach for ECG signal delineation. Expert Syst. Appl. 2021, 165, 113911.
21. Sodmann, P. ECG segmentation using a neural network as the basis for detection of cardiac pathologies. In Proceedings of the Computing in Cardiology, Rimini, Italy, 13–16 September 2020; pp. 1–4.
22. Cai, W. QRS complex detection using novel deep learning neural networks. IEEE Access 2020, 8, 97082–97089.
23. Mitrokhin, M. Deep learning approach for QRS wave detection in ECG monitoring. In Proceedings of the AICT, Moscow, Russia, 20–22 September 2017; pp. 1–3.
24. Wang, J. A knowledge-based deep learning method for ECG signal delineation. Future Gener. Comput. Syst. 2020, 109, 56–66.
25. Jimenez-Perez, G. Delineation of the electrocardiogram with a mixed-quality-annotations dataset using convolutional neural networks. Sci. Rep. 2021, 11, 863.
26. Avetisyan, A. Self-Trained Model for ECG Complex Delineation. In Proceedings of the ICASSP, Hyderabad, India, 6–11 April 2025; pp. 1–5.
27. Joung, C. Deep learning based ECG segmentation for delineation of diverse arrhythmias. PLoS ONE 2024, 19, e0303178.
28. Emrich, J. Physiology-Informed ECG Delineation Based on Peak Prominence. In Proceedings of the EUSIPCO, Lyon, France, 26–30 August 2024; pp. 1402–1406.
29. Jimenez-Perez, G. U-Net architecture for the automatic detection and delineation of the electrocardiogram. In Proceedings of the CinC, Singapore, 8–11 September 2019; pp. 1–4.
30. Sereda, I. ECG segmentation by neural networks: Errors and correction. In Proceedings of the IJCNN, Budapest, Hungary, 14–19 July 2019; pp. 1–7.
31. Moskalenko, V. Deep learning for ECG segmentation. In Proceedings of the International Conference on Neuroinformatics, Dolgoprudny, Russia, 7–11 October 2019; pp. 246–254.
32. Chen, Z. Post-processing refined ECG delineation based on 1D-UNet. Biomed. Signal Process. Control 2023, 79, 104106.
33. Nurmaini, S. Robust electrocardiogram delineation model for automatic morphological abnormality interpretation. Sci. Rep. 2023, 13, 13736.
34. Li, X. SEResUTer: A deep learning approach for accurate ECG signal delineation and atrial fibrillation detection. Physiol. Meas. 2023, 44, 125005.
35. Dong, G. Learning Temporal Distribution and Spatial Correlation Toward Universal Moving Object Segmentation. IEEE Trans. Image Process. 2024, 33, 2447–2461.
36. Laguna, P. A database for evaluation of algorithms for measurement of QT and other waveform intervals in the ECG. In Proceedings of the Computers in Cardiology, Lund, Sweden, 7–10 September 1997; pp. 673–676.
37. Tutuko, B. Short single-lead ECG signal delineation-based deep learning: Implementation in automatic atrial fibrillation identification. Sensors 2022, 22, 2329.
38. Liang, X. ECGSegNet: An ECG delineation model based on the encoder-decoder structure. Comput. Biol. Med. 2022, 145, 105445.

| Method | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Temporal stream | 95.20 | 97.85 | 96.51 |
| Morphology stream | 95.85 | 97.10 | 96.47 |
| DualStream + concat | 96.25 | 98.30 | 97.26 |
| DualStream + SCF | 96.79 | 98.90 | 97.83 |

| Method | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Temporal stream | 95.92 | 97.30 | 96.60 |
| Morphology stream | 96.15 | 96.95 | 96.55 |
| DualStream + concat | 96.75 | 97.65 | 97.20 |
| DualStream + SCF | 97.35 | 98.25 | 97.80 |
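The two ablation tables (Temporal stream, Morphology stream, DualStream + concat, DualStream + SCF) can be summarised by two F1 differences. The first is what the dual-stream model with plain concatenation gains over the better single stream. The second is what SCF gains over concatenation. The sketch reads SCF as the Selective Context Fusion module named in the title, which is an assumption. The names `ABLATION` and `fusion_gains` are illustrative.

```python
"""Summarise the two ablation tables as F1 differences (percentage points).

SCF is read here as the Selective Context Fusion module named in the title
(an assumption). Function and key names are illustrative.
"""

# Reported F1 (%) per ablation row, for the first and second ablation tables.
ABLATION = {
    "first table": {
        "Temporal stream": 96.51,
        "Morphology stream": 96.47,
        "DualStream + concat": 97.26,
        "DualStream + SCF": 97.83,
    },
    "second table": {
        "Temporal stream": 96.60,
        "Morphology stream": 96.55,
        "DualStream + concat": 97.20,
        "DualStream + SCF": 97.80,
    },
}


def fusion_gains(f1):
    """Gain of concat fusion over the better single stream, and of SCF over concat."""
    best_single = max(f1["Temporal stream"], f1["Morphology stream"])
    return {
        "concat_over_best_single": round(f1["DualStream + concat"] - best_single, 2),
        "scf_over_concat": round(f1["DualStream + SCF"] - f1["DualStream + concat"], 2),
    }


if __name__ == "__main__":
    for label, f1 in ABLATION.items():
        print(label, fusion_gains(f1))
```

With the reported numbers, the first table gives +0.75 and +0.57 points, and the second gives +0.60 and +0.60. Each step therefore contributes a gain of roughly half to three quarters of an F1 point.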

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Niu, Y.; Lin, N.; Tian, Y.; Tang, K.; Liu, B. ECG Waveform Segmentation via Dual-Stream Network with Selective Context Fusion. Electronics 2025, 14, 3925. https://doi.org/10.3390/electronics14193925
