1. Introduction

Fully homomorphic encryption (FHE) and zero-knowledge proofs (ZK) are driving cryptographic applications into a new era, enabling secure computing and privacy-preserving techniques [1]. The current mainstream choice for practical implementations of these systems is lattice-based cryptography (LBC), which is selected not only for its security against quantum attacks in post-quantum cryptography (PQC), but also for its underlying algebraic structure. LBC schemes operate over a polynomial ring, which naturally supports the homomorphic addition and multiplication of ciphertexts required by FHE. However, the core operations of LBC are polynomial multiplications, which burden FHE and ZK systems [2] with significant computational overhead [3,4]. The question of how to make FHE and ZK systems run more efficiently on heterogeneous platforms has therefore become a key bottleneck that restricts their large-scale deployment. If this challenge is solved, converting general computation to fully homomorphic form and delegating it to third-party clouds will become feasible in the future.

The number theoretic transform (NTT) is now commonly employed to overcome the prohibitive computational complexity of direct polynomial multiplication, making it a performance-critical kernel in any practical LBC-based system. However, the NTT algorithm itself poses significant hardware design challenges because of its large intrinsic parallelism, intricate memory access patterns, and complex data dependencies across computational stages. These characteristics make achieving high throughput and resource efficiency a major obstacle [3,4]. Ye et al. [5] use a fully unfolded streaming permutation network (SPN) and a compact modular reduction algorithm to achieve ultra-low latency and high throughput. Similarly, innovating in both algorithmic and handcrafted architectural design, Su et al. [6] propose fusing the negative wrapped convolution (NWC) and INTT scaling with unified PE memory cycle sharing. Yang et al. [7] focus on automation, highlighting batch design space exploration (DSE) for automated RTL generation from HDL designs. Cheng et al. [8] focus on access conflicts, proposing a rotation table to reorder tuples in place. These works optimize NTT accelerator performance and adaptability from multiple angles. However, designs that rely on manual patterns and on algorithm-architecture co-design are complex, which makes them difficult for non-specialists to reuse and integrate into application scenarios. What is missing is a portable, user-friendly, automated design methodology that incorporates expert knowledge.

High-level synthesis (HLS) has emerged as a possible solution by raising the abstraction level of hardware design. HLS enables designers to quickly explore design trade-offs and optimize performance [9,10]. Despite these capabilities, HLS faces a fundamental synthesis gap when applied to demanding algorithms such as the NTT. It is challenging for HLS tools to automatically derive optimal hardware architectures from standard algorithmic descriptions, particularly the deeply pipelined memory systems required to support high-bandwidth data streams. This limitation manifests itself in what is called the HLS memory wall, a structural bottleneck that hinders deep pipelining (e.g., a pipeline initiation interval (II) of 1) and impairs performance.

Existing work primarily follows two approaches: one rewrites the HLS source code to optimize loop and memory access structures, while the other employs pragma directives to drive HLS tools toward parallel or pipelined implementations, aiming to approximate the efficiency of handwritten RTL. Ozcan et al. [11] modularized the NTT module and added pragma directives after identifying butterfly stages to achieve area and latency improvements without altering the algorithm. However, this approach did not structurally eliminate memory conflicts or cross-data dependencies. El-Kady et al. [12] restructured the CT/GS code by phasing out inner butterfly stages and combined dual-port RAM with odd/even splitting to separate read and write operations. They also utilized pragma directives to resolve cross-iteration data dependencies, achieving controllable latency reduction at low parallelism (p = 2) in their experiments. However, their approach is limited to p = 2 and lacks broad parameter adaptability, which contradicts the flexibility and scalability expected from HLS implementations. Kawamura et al. [13] proposed that constructing independent pipelines in phases outperforms cross-phase pipelines. Their key contribution lies in providing reusable loop optimization guidelines for HLS that restore II = 1 when necessary through array partitioning. However, they did not address memory conflicts in large-scale parallelism.

All of these approaches rely on manual, experience-based pragma adjustments and source code rewriting. Current research lacks systematic and automated approaches that consolidate this fragmented empirical knowledge. As a result, existing HLS-based NTT designs tend to remain suboptimal, lagging far behind hand-optimized hardware description language (HDL) designs [14,15,16]. They exhibit inefficiencies in arithmetic operations, memory management, and parallelism [11,17].

To bridge this gap, we address each level of the design. At the arithmetic level, we select the modular multiplication algorithm and algebraically unify the butterfly operations. At the microarchitecture level, we avoid memory conflicts. At the system level, we enable template-driven automatic DSE for implementations with different N, q, and d. The goal is to elevate “expert hardware knowledge + pragma parameter tuning” to a portable, reusable, and automatically explorable HLS template framework. Using the NTT algorithm as an example, this paper describes and validates how a disciplined HLS co-design approach can explicitly embed design intent into high-level source code and thereby guide the synthesis tool towards an optimal hardware implementation. The concept of hardware domain expertise introduced in HLS refers to the practice of analyzing an algorithm’s data structures and computational steps, inspired by software libraries, to achieve engineering reuse and innovation in hardware design. HLS accelerator templates are constructed by selecting appropriate resources for the target hardware platform, and embedding these templates into the workflow enables rapid implementation of high-performance hardware modules. This approach is important in an era where algorithms require close collaboration with hardware.

The proposed methodology is based on a hierarchy of co-design principles that systematically address HLS limitations, ranging from the arithmetic level to the system level. The main contributions of this work are summarized as follows:

Contributions:

We conduct a comparative analysis of modular multiplication to demonstrate the effectiveness of the co-design approach, covering a finely tuned general Montgomery modular multiplication and a specialized generalized Mersenne number method. Compared to related HDL-based designs, our design achieves similar cycle performance while using only 34% of the LUT resources in the best case.

We introduce a conflict-free memory architecture that structurally resolves the memory access contentions inherent to NTT, enabling a fully pipelined (II = 1) design and achieving a 5.6× latency reduction over naive HLS [11].

We extend our methodology to the system level, presenting a scalable parallel architecture co-designed with its memory subsystem. A high-parallelism algorithm is developed, along with a compact butterfly structure, enabling the design of unified NTT/INTT architectures. This scalability supports various parameters and parallelism levels, further integrated into an automated framework for design, verification, and implementation [2,4].

Ultimately, this work does more than present a high-performance accelerator: it demonstrates a new paradigm for HLS development that captures expert hardware knowledge across multiple domains. This knowledge includes the selection of modular multiplication algorithms and moduli, the derivation of algebraic butterfly fusion, and parametric HLS pragma insertion. It also includes twiddle factor precomputation, parallel NTT address generation logic, parameterized data types and memory structures suited to (N, d), and thorough array partitioning. Together, these elements constrain the design space to a region of high performance. This not only facilitates efficient DSE but also lays the groundwork for a future ecosystem of reusable HLS templates, aiming to make high-performance FPGA design accessible to non-experts in hardware.

The rest of this article is organized as follows. Section 2 presents the necessary preliminaries and background information. Section 3 focuses on the design and optimization of our method for designing HLS-based NTT. Section 4 demonstrates the application of HLS to improve the performance of the NTT, supported by detailed case studies. Section 5 presents a comparative evaluation of the proposed approach with state-of-the-art implementations and validates the accelerators in an embedded system. Finally, Section 6 concludes the article with a summary of contributions and future directions.

2. Background

2.1. Preliminaries and Notations

In this article, $n$ represents the degree of the polynomial and is a power of 2, while the modulus $q$ is a prime number. The ring of integers modulo $q$ is denoted as $\mathbb{Z}_q$. We define $R_q = \mathbb{Z}_q[x]/(x^n + 1)$ as the polynomial ring, where coefficients are elements of $\mathbb{Z}_q$ and polynomials are reduced modulo $x^n + 1$. Lowercase letters such as $a$ represent integers, and bold lowercase letters like $\mathbf{a}$ represent polynomials. The $i$-th coefficient of polynomial $\mathbf{a}$ is expressed as $a_i$, and the polynomial is expanded as $\mathbf{a} = \sum_{i=0}^{n-1} a_i x^i$, with coefficients stored in natural order unless specified otherwise. The NTT of the polynomial $\mathbf{a}$ is represented as $\hat{\mathbf{a}} = \mathrm{NTT}(\mathbf{a})$, where $\hat{\mathbf{a}} = (\hat{a}_0, \ldots, \hat{a}_{n-1})$. The twiddle factor $\omega$ is an $n$-th primitive root of unity, and $\psi$ is a $2n$-th primitive root of unity. The symbols ·, ×, and ⊙ represent integer multiplication, polynomial multiplication, and pointwise multiplication, respectively.

2.2. Number Theoretic Transform (NTT)

The NTT is a variant of the discrete Fourier transform (DFT) adapted for operations over finite fields. It efficiently computes convolutions, a key process in polynomial multiplication as well as in various cryptographic algorithms. In an $n$-point NTT, the $n$ input polynomial coefficients $a_j$ are transformed into $\hat{a}_i = \sum_{j=0}^{n-1} a_j \omega^{ij} \bmod q$ for $i = 0, 1, \ldots, n-1$. Here, the $n$-th root of unity, $\omega$, meets the condition $\omega^n \equiv 1 \pmod q$, and for any $0 < i < n$, $\omega^i \not\equiv 1 \pmod q$. The inverse NTT (INTT) reconstructs the original polynomial from its transformed version using the formula $a_i = n^{-1} \sum_{j=0}^{n-1} \hat{a}_j \omega^{-ij} \bmod q$, thereby guaranteeing the unique recovery of the coefficients.

Traditional polynomial multiplication using direct convolution has a computational complexity of $O(n^2)$. In contrast, employing the NTT reduces this to $O(n \log n)$ by converting the operation to pointwise multiplication. However, directly applying NTT requires appending $n$ zeros to each input, followed by performing a $2n$-point NTT, $2n$-point pointwise multiplication, and $2n$-point INTT. In the ring $R_q$, the $2n$-point result of the INTT is eventually reduced to $n$-point. This process can be made more efficient by employing negative wrapped convolution (NWC), which utilizes only $n$-point NTT/INTT. When computing $\mathbf{c} = \mathbf{a} \times \mathbf{b}$ in $R_q$ using NWC, the first step involves scaling the coefficients $a_i$ and $b_i$ by $\psi^i$, resulting in $\tilde{\mathbf{a}}$ and $\tilde{\mathbf{b}}$. Specifically, $\tilde{a}_i$ is computed as $a_i \cdot \psi^i \bmod q$. The next step is to compute $\tilde{\mathbf{c}} = \mathrm{INTT}(\mathrm{NTT}(\tilde{\mathbf{a}}) \odot \mathrm{NTT}(\tilde{\mathbf{b}}))$. The final step involves scaling the coefficients $\tilde{c}_i$ by $\psi^{-i}$, yielding $c_i = \tilde{c}_i \cdot \psi^{-i} \bmod q$.
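The three NWC steps can be checked numerically with a small sketch. It uses naive $O(n^2)$ transforms for readability rather than a fast NTT, and the toy parameters ($q = 17$, $n = 8$, $\psi = 3$) as well as all function names are illustrative assumptions, not values taken from this work.

```python
def ntt_naive(a, omega, q):
    """Direct evaluation of a_hat[i] = sum_j a[j] * omega^(i*j) mod q."""
    n = len(a)
    return [sum(a[j] * pow(omega, i * j, q) for j in range(n)) % q
            for i in range(n)]


def intt_naive(a_hat, omega, q):
    """Direct evaluation of a[i] = n^-1 * sum_j a_hat[j] * omega^(-i*j) mod q."""
    n = len(a_hat)
    n_inv = pow(n, -1, q)          # modular inverse (Python 3.8+)
    omega_inv = pow(omega, -1, q)
    return [n_inv * sum(a_hat[j] * pow(omega_inv, i * j, q) for j in range(n)) % q
            for i in range(n)]


def negacyclic_mul_nwc(a, b, psi, q):
    """Multiply a and b modulo (x^n + 1, q) using only n-point transforms."""
    n = len(a)
    omega = psi * psi % q          # omega = psi^2 is an n-th root of unity
    psi_inv = pow(psi, -1, q)
    # Step 1: scale the inputs by psi^i.
    a_t = [a[i] * pow(psi, i, q) % q for i in range(n)]
    b_t = [b[i] * pow(psi, i, q) % q for i in range(n)]
    # Step 2: transform, multiply pointwise, transform back.
    c_hat = [x * y % q for x, y in zip(ntt_naive(a_t, omega, q),
                                       ntt_naive(b_t, omega, q))]
    c_t = intt_naive(c_hat, omega, q)
    # Step 3: scale the result by psi^-i.
    return [c_t[i] * pow(psi_inv, i, q) % q for i in range(n)]


def negacyclic_mul_schoolbook(a, b, q):
    """O(n^2) reference: wrap-around terms pick up a minus sign since x^n = -1."""
    n = len(a)
    c = [0] * n
    for i in range(n):
        for j in range(n):
            k = i + j
            if k < n:
                c[k] = (c[k] + a[i] * b[j]) % q
            else:
                c[k - n] = (c[k - n] - a[i] * b[j]) % q
    return c
```

Comparing `negacyclic_mul_nwc` against `negacyclic_mul_schoolbook` on random inputs is a convenient functional check before moving to a fast butterfly-based implementation.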

Applying NWC to polynomial multiplication involves scaling the coefficients by $\psi^{i}$ prior to the NTT and by $\psi^{-i}$ after the INTT. Previous studies [18,19] have demonstrated that these scaling operations can be integrated into the NTT and INTT processes. Specifically, they can be incorporated into the decimation-in-time NTT using the Cooley-Tukey (CT) butterfly presented in Algorithm 1 and into the decimation-in-frequency INTT using the Gentleman-Sande (GS) butterfly presented in Algorithm 2. With coefficients $a$ and $b$ and a twiddle factor $\omega$, the CT butterfly performs the calculations $a + b \cdot \omega$ and $a - b \cdot \omega$; conversely, the GS butterfly computes $a + b$ and $(a - b) \cdot \omega$. Algorithm 1 efficiently computes the NTT of a polynomial $\mathbf{a}$ using a three-loop structure, where coefficient updates are performed using the CT butterfly operations (lines 6–7).

| Algorithm 1 Cooley-Tukey NTT algorithm [20] |

| Input: A polynomial $\mathbf{a} \in R_q$, and a precomputed twiddle factor table W storing powers of $\psi$ in bit-reversed order |

| Output: NTT($\mathbf{a}$), with coefficients in bit-reversed order |

- 1:
- 2: for ; ; do
- 3:
- 4: for ; ; i++ do
- 5: for ; ; j++ do
- 6: mod q
- 7: mod q
- 8: end for
- 9: end for
- 10: end for
- 11: Return

| Algorithm 2 Gentleman-Sande INTT algorithm [21] |

| Input: A polynomial $\hat{\mathbf{a}} \in R_q$, and a precomputed twiddle factor table W storing powers of $\psi^{-1}$ in natural order |

| Output: INTT($\hat{\mathbf{a}}$), with coefficients in normal order |

- 1:
- 2: for ; ; do
- 3:
- 4: for ; ; i++ do
- 5: for ; ; j++ do
- 6: ▷ Temporary variable for
- 7: mod q
- 8: mod q
- 9: mod q
- 10: end for
- 11: end for
- 12: end for
- 13: Return
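As a reference point for the two listings, the following is a minimal sketch of a widely used textbook formulation of the merged CT NTT and GS INTT, in which the $\psi$ scaling is folded into the twiddle tables. It is not necessarily identical to Algorithms 1 and 2 step by step: here both tables are stored in bit-reversed order and the $n^{-1}$ scaling is applied in a final pass. The function names and the toy parameters used to exercise it are assumptions.

```python
def bit_reverse(x, bits):
    """Reverse the lowest `bits` bits of x."""
    r = 0
    for _ in range(bits):
        r = (r << 1) | (x & 1)
        x >>= 1
    return r


def make_tables(n, psi, q):
    """Twiddle tables of psi and psi^-1 powers, both in bit-reversed order."""
    bits = n.bit_length() - 1
    psi_inv = pow(psi, -1, q)
    w = [pow(psi, bit_reverse(i, bits), q) for i in range(n)]
    w_inv = [pow(psi_inv, bit_reverse(i, bits), q) for i in range(n)]
    return w, w_inv


def ntt_ct(a, w, q):
    """Cooley-Tukey NTT: natural-order input, bit-reversed output."""
    a = list(a)
    n = len(a)
    t, m = n, 1
    while m < n:                       # one pass per stage
        t //= 2
        for i in range(m):             # one group per twiddle factor
            s = w[m + i]
            for j in range(2 * i * t, 2 * i * t + t):
                u, v = a[j], a[j + t] * s % q
                a[j] = (u + v) % q     # CT butterfly: a + b*w
                a[j + t] = (u - v) % q  # CT butterfly: a - b*w
        m *= 2
    return a


def intt_gs(a, w_inv, q):
    """Gentleman-Sande INTT: bit-reversed input, natural-order output."""
    a = list(a)
    n = len(a)
    t, m = 1, n
    while m > 1:
        h = m // 2
        j1 = 0
        for i in range(h):
            s = w_inv[h + i]
            for j in range(j1, j1 + t):
                u, v = a[j], a[j + t]
                a[j] = (u + v) % q          # GS butterfly: a + b
                a[j + t] = (u - v) * s % q  # GS butterfly: (a - b)*w
            j1 += 2 * t
        t *= 2
        m = h
    n_inv = pow(n, -1, q)
    return [x * n_inv % q for x in a]      # final scaling by n^-1
```

Because the forward output and the inverse input share the same bit-reversed ordering, pointwise multiplication can be applied directly between the two transforms without any reordering step.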

2.3. Modular Reduction

Modular reduction is a mathematical operation that computes the remainder of a division by a modulus q. In the context of NTT, it ensures that all arithmetic operations remain within a predefined range, preventing overflow and keeping values within the finite field defined by q. The modulus q in NTT can vary from as few as ten bits to several thousand. Smaller bitwidths for q enhance computational speed and reduce resource consumption, while larger moduli increase security but at the cost of higher computational demands. For larger bit numbers, the residue number system (RNS) can be employed to manage complexity, but this often introduces its own overhead.

Modular reduction is computationally intensive, and several optimization methods have been developed. The Montgomery reduction simplifies the process by transforming numbers into Montgomery form, enabling modular multiplications and reductions through arithmetic shifts and additions, rather than traditional divisions. Abd-Elkader et al. [12] advanced this technique by developing a high-frequency, low-area Montgomery modular multiplier specifically for cryptographic applications. Similarly, Barrett reduction uses a pre-computed approximation of the modular inverse to facilitate rapid modular reductions without direct division. Langhammer et al. [22] enhanced this method by introducing a shallow reduction technique that reduces logic costs. Additionally, optimizations targeted at specific moduli have been pursued to boost performance and efficiency. For instance, Zhang et al. [23] utilized a technique that recursively splits features, improving the area-time efficiency for modulus 12,289.
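To make the Montgomery idea concrete, here is a minimal software sketch of Montgomery reduction and multiplication with $R = 2^k$. The prime $q = 8380417 = 2^{23} - 2^{13} + 1$ used to exercise it is only an illustrative 23-bit modulus chosen for this sketch, and the function names are hypothetical; the hardware multiplier evaluated in this work may be organized differently.

```python
def montgomery_setup(q, k):
    """Precompute constants for R = 2^k (requires q odd and q < R)."""
    r = 1 << k
    q_neg_inv = (-pow(q, -1, r)) % r   # q' = -q^-1 mod R
    r2 = (r * r) % q                   # R^2 mod q, used to enter Montgomery form
    return q_neg_inv, r2


def montgomery_reduce(t, q, k, q_neg_inv):
    """Return t * R^-1 mod q for 0 <= t < q * R, using only shifts and masks."""
    mask = (1 << k) - 1
    m = ((t & mask) * q_neg_inv) & mask   # m = t * q' mod R
    u = (t + m * q) >> k                  # exact division by R
    return u - q if u >= q else u         # single conditional subtraction


def montgomery_mul(a, b, q, k, q_neg_inv, r2):
    """Compute a * b mod q by way of Montgomery form (a, b in [0, q))."""
    a_m = montgomery_reduce(a * r2, q, k, q_neg_inv)   # a * R mod q
    b_m = montgomery_reduce(b * r2, q, k, q_neg_inv)   # b * R mod q
    c_m = montgomery_reduce(a_m * b_m, q, k, q_neg_inv)  # a * b * R mod q
    return montgomery_reduce(c_m, q, k, q_neg_inv)     # leave Montgomery form
```

In an NTT datapath the conversions into and out of Montgomery form are normally amortized, for example by storing the twiddle factors already in Montgomery form, so that each butterfly needs a single reduction.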

2.4. High-Level Synthesis (HLS)

HLS allows designers to specify hardware functions in high-level programming languages such as C, C++, or SystemC, which are then automatically converted into HDL formats like Verilog or VHDL. This method offers a more abstract and efficient design process, reducing the risk of errors and enabling quicker iterations and better optimizations for complex designs. HLS is particularly valuable in the rapidly evolving field of digital technology and has proven successful in various applications [24]. Among the HLS tools available, Vitis HLS is tailored explicitly for Xilinx FPGA platforms, while Catapult HLS supports a broader range of technologies and is recognized for its vendor-neutral approach. We choose Catapult HLS for this work because of its flexibility in targeting both FPGA and ASIC technologies from various manufacturers. Furthermore, it offers advanced optimization techniques that enhance performance and efficiency, which are essential for meeting complex processing needs, and its user-friendly interface makes it adaptable to different design frameworks.

Pragmas and optimization techniques in HLS are vital for efficient hardware design. Pragmas such as ‘unroll’ enhance parallel processing by expanding loops, while the ‘pipeline’ pragma increases throughput by overlapping the execution of successive loop iterations. The initiation interval (II) in pipelining, which is the number of clock cycles between the start of consecutive iterations, is crucial for managing throughput: at II = 1, a new iteration starts every cycle. The HLS tool also incorporates optimization strategies such as resource sharing, dataflow optimizations, and advanced memory management, balancing performance, resource efficiency, and power consumption.
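The effect of II on loop latency can be approximated with the usual first-order model for a pipelined loop: the first iteration takes the full pipeline depth and each later iteration starts II cycles after the previous one. The sketch below is only this generic model; the pipeline depth of 8 used in the example is a hypothetical value, not a figure reported in this work.

```python
def pipelined_loop_cycles(trip_count, ii, depth):
    """First-order latency of a pipelined loop.

    trip_count: number of loop iterations
    ii:         initiation interval in cycles
    depth:      cycles needed by a single iteration (pipeline depth)
    """
    if trip_count <= 0:
        return 0
    return depth + ii * (trip_count - 1)


# Example: 5120 butterfly iterations with a hypothetical depth of 8 cycles.
cycles_ii1 = pipelined_loop_cycles(5120, ii=1, depth=8)
cycles_ii2 = pipelined_loop_cycles(5120, ii=2, depth=8)
```

For long loops the depth term becomes negligible, so halving II roughly halves the cycle count, which is why restoring II = 1 matters so much for NTT kernels.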

4. Experiments

4.1. Setup and Development

The experiments use Catapult HLS 2021.1 and Xilinx Vivado 2019.2. For hardware implementation, the C code and optimization directives are provided to Catapult to generate Verilog code and obtain design results. To ensure accuracy, the Verilog code is taken through the FPGA design flow in Xilinx Vivado 2019.2, targeting the Xilinx Virtex-7 xc7vx690tffg1761-2 device. SCVerify in Catapult is used to simulate the generated Verilog. Input and output interfaces are incorporated to simulate the design and gather implementation results. For consistency with subsequent designs, the target is a fully pipelined design with II = 1.

To evaluate our proposed work, we compare the results with a series of prior works focused on NTT accelerators. The comparison baseline includes both HLS-based and HDL-based designs. These works were selected because they were all deployed on FPGA platforms, explored multiple parameter combinations within NTT, and shared detailed resource conversion methods, enabling fair comparisons of performance and resource overhead. The evaluation metrics include fundamental hardware performance measures such as latency and cycle count. Resource utilization is reported in terms of LUTs, FFs, DSPs, and BRAMs. Normalized area-time efficiency is additionally measured by the area-time product and the area-cycle product. The former reflects the absolute area-time efficiency of the hardware design, while the latter reflects the relative area-time efficiency independent of frequency. In subsequent sections, operations per watt and throughput per watt are used for power efficiency analysis, with details provided at the relevant points.

To ensure a fair comparison with prior work, we first calibrated and validated the resource conversion formula. This was achieved by implementing the DSP and BRAM resources in Vivado 2019.2 for all compared works on the Xilinx Virtex-7, Kintex-7, Zynq UltraScale+, and Spartan-7 series. At a baseline frequency of 100 MHz with no timing violations, the average replacement values were approximately 478 LUTs and 87 FFs per DSP, and approximately 277 LUTs and 32 FFs per 18 Kb BRAM. The calibration results indicate that the estimation formula used in previous work [30,31], where one DSP equals 819.2 LUTs and 409.6 FFs, is more conservative in terms of DSP resource conversion. This imposes a penalty on DSP utilization in the final area calculations. This evaluation approach aims to enhance design portability across various mid- to low-end FPGAs. As it aligns with the objectives of this work, subsequent results are normalized according to the conversion standard of [30,31].

4.2. Experimental Results

The comparison results for modular multiplication are shown in Table 1. Rafferty et al. [32] explored hardware architectures for large integer multiplication and presented results for 32-bit Comba multiplication. Compared to [32], our 32-bit Montgomery multiplication consumes a similar number of LUTs and FFs but utilizes more DSPs. Xing et al. [33] implemented an optimized SAMS2 modular reduction method, a variant of Barrett reduction with low Hamming weight, and optimized 14-bit modular multiplication for the NewHope PQC algorithm. Compared to [33], our 23-bit Mersenne multiplication consumes 87% of the LUTs and 91% of the FFs, and our 23-bit Montgomery multiplication consumes 54% of the LUTs and 74% of the FFs, although our design requires more DSPs. Ye et al. [5] developed a low-latency modular arithmetic implementation that exploits a property of the modulus for different bit sizes of the modulus q. Compared with [5], our 32-bit Montgomery multiplication achieves similar cycle performance while using 34% of the LUTs, 49% of the FFs, and 2.75 times the DSPs. Overall, our HLS-based design achieves cycle performance comparable to traditional hardware design methods, with fewer LUTs and FFs but a higher DSP requirement.

The implementation results of the NTT algorithms are shown in

Table 2. The implementation process and tools are consistent with those described in

Section 3. From

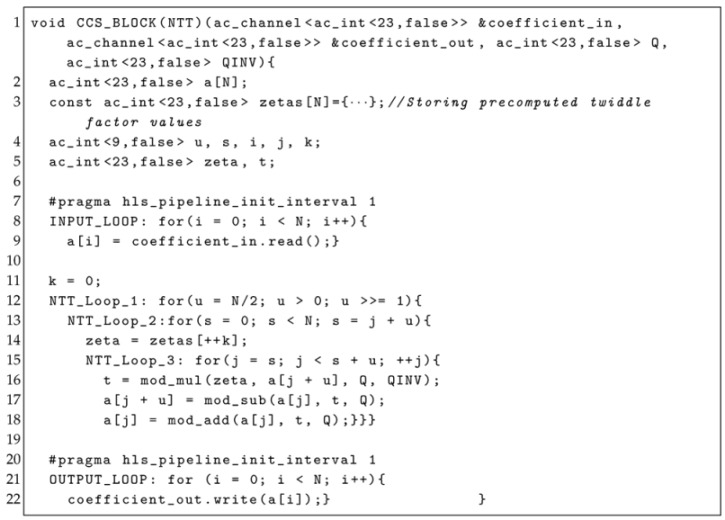

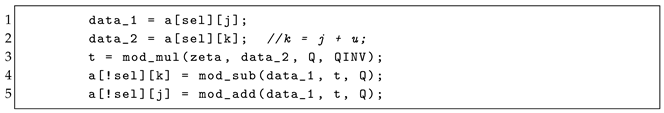

Table 2, we observe that as we gradually apply pipeline optimizations to the NTT algorithm, the area and the resource consumption for FPGA implementation increase slightly, but the cycle performance improves significantly. Notably, the number of BRAMs used when the pipeline II is set to 2 is less than that of the basic NTT, as some memory resources are implemented using LUTs. When comparing II = 2 with II = 1, fewer LUT resources are consumed with II = 1 because more DSPs, FFs, and BRAMs are utilized to improve performance. The NTT design (II = 1) achieves a latency of 11.3 µs. This represents a 5.6× improvement over the basic implementation of HLS. This validates our hypothesis after systematic HLS co-design analysis, designing an appropriate ping-pong cache structure resolves the primary bottleneck of memory access contention.

The unroll pragma is tested to determine whether it can automatically increase algorithm parallelism, using the basic NTT algorithm without pipeline optimization. For NTT_Loop_1, we do not use the unroll pragma because data dependencies between different NTT stages would cause invalid calculations if the loop were expanded. As shown in Table 2, simple loop unrolling does not significantly improve computing performance. Schedule analysis reveals that although unrolling adds computing units, all units access data from the same memory, which limits speed. Therefore, automatic optimization through tool commands is insufficient for achieving higher parallelism.

From Table 2, as the modulus increases from 23-bit to 32-bit, the area and FPGA resource consumption grow proportionally. This is because the primary resource consumption in NTT lies in the butterfly unit, which operates under the modulus Q. With a 23-bit Mersenne prime modulus for modular multiplication, Algorithm 4 reduces the total area presented in Table 3 because of its lower complexity. The reduction in FPGA resources is evident in the decrease in the number of DSPs required, although the number of LUTs and FFs increases. The experimental results align with expectations from special prime analysis, validating that HLS-based designs enable convenient hardware generation for fast comparisons with reasonable initialization. To accommodate arbitrary primes while maintaining comparable design efficiency, a more general Montgomery algorithm design template was subsequently adopted. Different modular multiplication algorithms therefore offer trade-offs tailored to specific requirements.

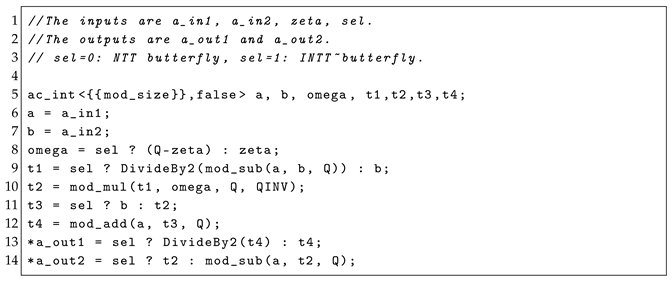

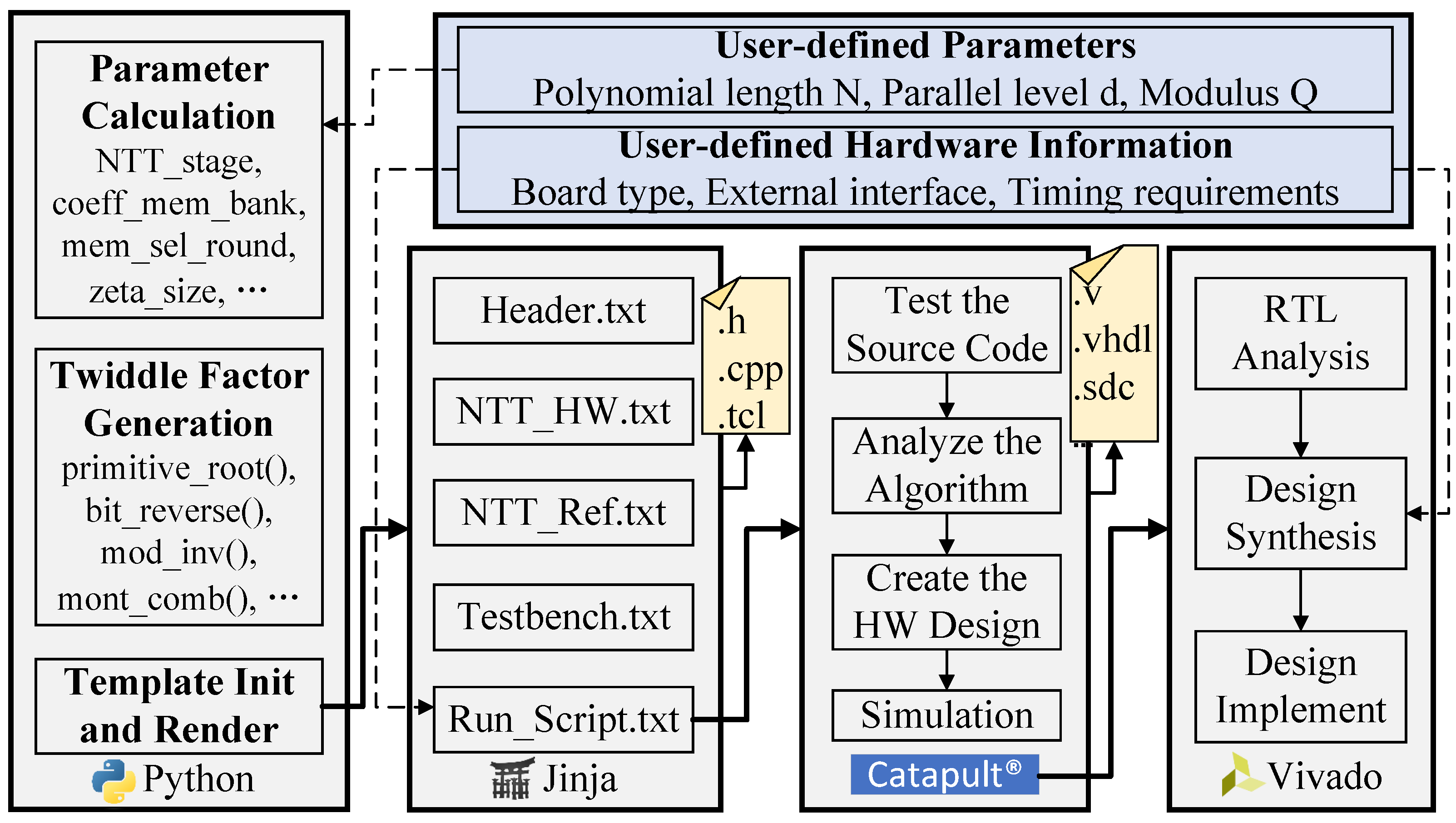

The unified NTT/INTT design integrates into the framework shown in Figure 3. Results for the unified NTT/INTT design with a 32-bit modulus are presented in Table 4. For reference, the resource increase of the unified module relative to the NTT module under the same parameters is listed. As shown in Table 4, the unified NTT/INTT design results in a 17% increase in LUTs and a 38% increase in FFs on average, while DSPs and BRAMs remain unchanged. The increase in LUTs and FFs is due to additional control and selection logic. The reuse of multiplication within the unified butterfly ensures that the number of DSPs remains constant. Additionally, our optimization reuses the twiddle factors for NTT and INTT, maintaining the same number of BRAMs. The cycle performance for NTT and INTT is identical, resulting in a combined NTT + INTT cycle count that is twice the NTT cycle count. Efficient pipeline divisions ensure that the unified NTT/INTT design does not affect timing performance compared to a standalone NTT design.

Our design consumes similar resources and demonstrates comparable cycle performance to Verilog-based designs [15], utilizing more DSPs but fewer BRAMs. The NTT design in [34] was manually fine-tuned in HDL, and its performance and resource overhead are close to those of our HLS design produced by an automated design flow. Our generated design uses more DSPs, while its LUT and FF counts are one-half and one-third of theirs, respectively. This indicates that our approach combines competitive performance with high design efficiency. Furthermore, our design supports different levels of parallelism, enabling a more thorough exploration of trade-offs.

Additionally, the module underwent post-placement power analysis on Virtex-7, with its energy efficiency shown in the table. For the unified NTT/INTT accelerator, total power consumption increased from 22 mW to 73 mW as d rose from 1 to 4. However, this increase in power consumption is outweighed by the substantial reduction in latency achieved by increasing the parallelism level d. Analysis reveals a decreasing trend in energy consumption per operation (NTT or INTT), from 0.97 μJ at d = 1 to 0.81 μJ at d = 4. This indicates that although the parallel architecture requires greater total area and power, its computational speedup results in lower overall task energy consumption. The d = 4 design achieves a throughput of 1239.6 k-trans/s/W, approximately a 20% efficiency improvement over the d = 1 design. This indicates that our system co-design methodology not only constructs scalable, high-performance architectures but also delivers good energy efficiency.
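The relationship between the reported throughput per watt and the energy per transform is a simple reciprocal, which the following sketch makes explicit. Only the figures quoted in the text (1239.6 k-trans/s/W and 0.97 μJ) are used; the helper names are hypothetical.

```python
def energy_per_transform_uj(ktrans_per_s_per_w):
    """Convert throughput per watt (k-transforms/s/W) to energy per transform (uJ).

    Transforms per second per watt equals transforms per joule, so the
    energy per transform is the reciprocal.
    """
    transforms_per_joule = ktrans_per_s_per_w * 1e3
    return 1e6 / transforms_per_joule


def efficiency_gain(energy_before_uj, energy_after_uj):
    """Relative improvement in transforms per joule."""
    return energy_before_uj / energy_after_uj - 1.0


e_d4 = energy_per_transform_uj(1239.6)   # about 0.81 uJ per transform
gain = efficiency_gain(0.97, e_d4)       # about 0.20, i.e. roughly 20%
```

The two numbers quoted for d = 4 are therefore consistent with each other, and the 20% figure follows directly from the ratio of the d = 1 and d = 4 energies.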

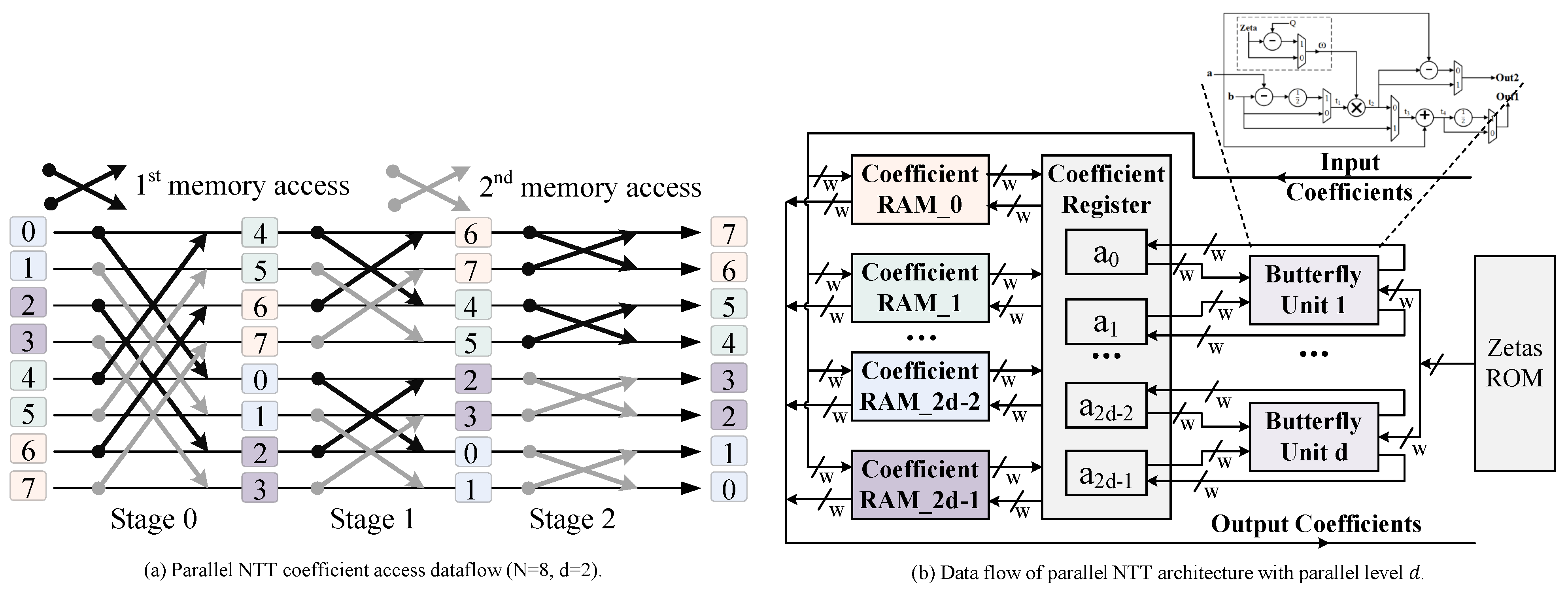

4.3. Design Space Exploration

To evaluate the scalability and adaptability of our proposed architectural template, a design space exploration is performed. The DSE strategy is a grid search across the most impactful architectural parameters: the degree of parallelism (d) and the transform size (N). The parallelism degree d is restricted to powers of two, as this naturally aligns with the inherent radix-2 structure of the NTT algorithm that we employ. This alignment results in highly efficient and straightforward hardware for memory banking, address generation, and twiddle factor distribution, which can be implemented using simple bitwise operations. While supporting non-power-of-two parallelism is theoretically possible, it would necessitate more complex mixed-radix algorithms and a significantly more intricate memory addressing scheme, leading to substantial control logic overhead. Therefore, the focus on power-of-two parallelism is a deliberate design trade-off to maximize performance scalability while minimizing hardware complexity. For each configuration point (N, d), we run the complete HLS and physical implementation flow to obtain precise performance and resource cost (LUTs, FFs, BRAMs, DSPs) data. The goal of this exploration is not to find a single optimal point, but to characterize the Pareto-optimal front of the trade-offs between performance and resources, thereby providing a comprehensive guide for designers to select an implementation that best fits their specific constraints.
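Once each grid point has been implemented, extracting the Pareto-optimal front is a small post-processing step. The sketch below shows one way to do it for two objectives (cycles and area, both minimized); the dictionary keys, function names, and the sample numbers in the usage are hypothetical and do not correspond to measured results.

```python
from itertools import product


def enumerate_grid(n_values, d_values):
    """All (N, d) configuration points of the grid search."""
    return list(product(n_values, d_values))


def dominates(p, o):
    """True if point p is at least as good as o in both objectives and better in one."""
    return (p["cycles"] <= o["cycles"] and p["area"] <= o["area"]
            and (p["cycles"] < o["cycles"] or p["area"] < o["area"]))


def pareto_front(points):
    """Return the non-dominated points, sorted by increasing cycle count."""
    front = [p for p in points if not any(dominates(o, p) for o in points)]
    return sorted(front, key=lambda p: p["cycles"])


# Hypothetical results for a single N; real values would come from the tool flow.
sample = [
    {"d": 1, "cycles": 100, "area": 10},
    {"d": 2, "cycles": 50, "area": 20},
    {"d": 2, "cycles": 60, "area": 25},   # dominated by the point above
    {"d": 4, "cycles": 25, "area": 40},
]
front = pareto_front(sample)
```

A designer with an area budget can then pick the fastest point on the front that fits, which is the selection process the exploration is meant to support.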

Different parameters are configured using the automated NTT code generation framework shown in Figure 3, with implementation results summarized in Table 5. The platform configuration remains consistent with the description in Section 3. Tests are conducted for polynomial lengths N ranging from 256 to 32,768. Depending on N, the modulus Q is either 24 bits or 32 bits. Results are collected with parallelism d set to 1, 2, and 4 to investigate the trade-offs between resources and performance. Theoretically, the NTT for a polynomial length N requires $(N/2)\log_2 N$ butterfly operations. With d butterflies, $(N/(2d))\log_2 N$ parallel butterfly operations are required, consuming approximately d times the resources. Therefore, the product of Cycle and LUT is relatively fixed, and this metric can roughly reflect the design trade-offs.
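The butterfly counts above translate into a lower bound on the cycle count of a fully pipelined design, as the following sketch illustrates. It is an idealized model that ignores pipeline fill, stage switching, and I/O, so measured cycle counts will be somewhat higher; the function names are hypothetical.

```python
def butterfly_ops(n):
    """Total radix-2 butterflies in an n-point NTT: (n / 2) * log2(n)."""
    log2_n = n.bit_length() - 1        # exact for powers of two
    assert 1 << log2_n == n, "n must be a power of two"
    return (n // 2) * log2_n


def ideal_cycles(n, d, ii=1):
    """Lower bound on cycles with d parallel butterflies issuing every ii cycles."""
    ops = butterfly_ops(n)
    return -(-ops // d) * ii           # ceiling division


# Cycle count scales as 1/d while resources scale roughly as d,
# so their product stays approximately constant for a given n.
cycles_by_d = {d: ideal_cycles(1024, d) for d in (1, 2, 4)}
```

For example, a 1024-point transform needs 5120 butterflies, which gives ideal counts of 5120, 2560, and 1280 cycles for d = 1, 2, and 4.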

As shown in Figure 4, for the same N, increasing d leads to higher resource consumption and shorter calculation cycles. However, the tabular data shows that BRAM consumption does not necessarily increase with d. Notably, when N exceeds 2048, the number of BRAMs remains unchanged as d increases for the same N. This is because, for larger N, coefficients are distributed across multiple BRAM blocks even when d = 1, so increasing d does not require additional BRAM blocks. Conversely, for the same d, resource consumption increases as N grows. This increase is partly due to the added complexity of control logic with larger N, but a more significant factor is the implementation of the memory block. Our designs generate general Verilog code via HLS, which is not optimized for specific FPGA IPs. For memory units, the Vivado tool automatically uses a combination of BRAM and LUT to implement them, causing memory requirements to increase proportionally with N. Consequently, LUT resources rise significantly as N increases.

This trend can be clearly observed in Figure 5, where LUTs and BRAMs as a percentage of area overhead increase significantly as N increases. It demonstrates a shift in design resource utilization from logic-intensive to memory-dependent components. For instance, at an NTT length of 256, the DSP area constitutes approximately 79% of the total design footprint. When N = 32,768, BRAMs and LUTs used for storage logic account for about 65% of the total area overhead. This observation underscores the importance of our proposed co-design methodology. For long NTTs, optimizing the memory subsystem should take priority over optimizing the algorithm itself. At this scale, the efficiency of the memory subsystem becomes the primary factor constraining the scalability of NTT design.

The frequency of the NTT hardware implementation ranges from 115 to 119 MHz. Analysis indicates that the critical timing path primarily involves the input and output interfaces. Other critical paths include the coefficient RAM interfaces, modular multiplication operations, NTT loop count and control logic, and the data read interface of the twiddle factor ROM. As d increases, the RAM path becomes an increasingly significant timing bottleneck. In practice, connecting the input and output interfaces to high-speed interfaces can prevent them from becoming timing bottlenecks. For modular multiplications, NTT loop count, and control logic, users can increase the target frequency of the HLS tool and use more pipeline stages to split the critical path, thereby avoiding timing bottlenecks. To address the timing constraints of the coefficient RAM, especially when achieving higher parallelism, users can develop improved memory models to better utilize BRAM in FPGA, enabling more efficient parallel memory usage.

5. Discussion and Evaluation

5.1. Comparisons with HLS-Based and HDL-Based NTT Designs

This work focuses on the optimization and generation of the NTT module, whose performance significantly impacts the efficiency of PQC accelerators. For instance, in many accelerators for PQC schemes such as CRYSTALS-Dilithium, Kyber, or Falcon, a substantial portion of the area and computational overhead is attributable to NTT/INTT. The unified accelerator generated using the HLS framework can serve as an efficient, plug-and-play IP for these applications. This capability is demonstrated through RISC-V system integration in the following subsection. In this subsection, the experimental results of this work and some prior studies are presented and analyzed in detail.

Note that to conduct a fair comparison between designs implemented on different devices, we follow [30, 31] and assign different weights to specific hardware resources (LUT, FF, DSP, and BRAM) to calculate the overall area usage. That is, AREA = LUT/16 + FF/8 + DSP × 102.4 + BRAM × 56. To account for the influence of frequency, the Area-Time Product (ATP) is defined as the product of area and latency. Additionally, in many applications the frequency is determined by the overall system rather than by an individual module, so the product of area and cycle count is also compared. The NTT design is compared with related HLS implementations in

Table 6. Compared with [

35] in

, our design has lower latency and roughly a 1.93× reduction in ATP. For

, our design uses fewer BRAMs to achieve lower latency, resulting in

and

reductions in ATP. Compared with [

13], our design achieves a smaller area and latency, achieving

and

reductions in ATP. Millar et al. [

36] enhanced FFT-based multiplication for HE, using more hardware resources to increase frequency. Our design achieves lower latency through better cycle performance, resulting in

and

improvements in ATP.
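The area and ATP metrics used in these comparisons can be reproduced with a few lines of code. The following is a minimal sketch rather than the evaluation script used for the tables: the class name `FpgaDesign`, the helper names, and the resource figures in `example` are hypothetical and chosen only for illustration, while the weights are the ones given in the AREA formula above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FpgaDesign:
    """Resource and timing figures of one NTT design (hypothetical container)."""
    lut: int
    ff: int
    dsp: int
    bram: float       # BRAM count as reported for the design
    cycles: int       # clock cycles for one transform
    freq_mhz: float   # operating frequency in MHz


def area(d: FpgaDesign) -> float:
    # Weighted area from the text: LUT/16 + FF/8 + DSP x 102.4 + BRAM x 56
    return d.lut / 16 + d.ff / 8 + d.dsp * 102.4 + d.bram * 56


def latency_us(d: FpgaDesign) -> float:
    # cycles / MHz gives microseconds
    return d.cycles / d.freq_mhz


def area_time_product(d: FpgaDesign) -> float:
    # ATP: area multiplied by latency, so it depends on frequency
    return area(d) * latency_us(d)


def area_cycle_product(d: FpgaDesign) -> float:
    # Frequency-independent variant: area multiplied by cycle count
    return area(d) * d.cycles


# Made-up figures, not taken from any table in this paper.
example = FpgaDesign(lut=1600, ff=800, dsp=10, bram=5, cycles=1190, freq_mhz=119.0)
print(area(example), latency_us(example),
      area_time_product(example), area_cycle_product(example))
```

An improvement factor between two designs is then the ratio of their ATP (or area-cycle) values, with the larger value in the numerator.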

Compared to [

11], our design consumes similar resources but exhibits significantly better cycle performance, with a

ATP improvement. Our design consumes resources between the two designs in [

17], yet provides more than a

improvement in cycle performance. When N = 256, due to the deeper pipeline structure, our result exhibits a large area overhead around 45% greater than theirs [

37,

38], but resulting in

and

improvements in cycle performance. Our design consumes resources within the range of [

39], but uses fewer computation cycles and achieves

and

better ATP results. Compared with other HLS-based NTT designs, our design demonstrates superior cycle performance, maintains comparable frequency, consumes fewer resources, and shows clear advantages in ATP comparisons. In summary, our approach consistently outperforms prior HLS-based works in area-time efficiency, underscoring the effectiveness of our co-design methodology.

The comparison results between our HLS-based design and HDL-based design are shown in

Table 7. Ye et al. [

5] proposed a parameterized NTT architecture supporting various polynomial degrees, moduli, and data parallelism levels, using significant resources to achieve high frequency and low computational cycles, reducing latency by more than

compared to ours. However, our design achieves a more balanced use of hardware resources, resulting in

and

improvements in ATP results compared to [

5]. Yang et al. [

7] presented a framework for generating low-latency NTT designs for HE-based applications. Our approach, using simpler logic and more resource-efficient methods, achieves

and

improvements in ATP results despite operating at a lower frequency. Su et al. [

6] developed a reconfigurable multicore NTT/INTT architecture with variable PEs, fully optimizing hardware resource usage with high parallelism. Our design achieves similar ATP results at lower frequencies compared to [

6]. Cheng et al. [

8] presented a radix-4 polynomial multiplication design with an efficient memory access algorithm, enhancing NTT, INTT, and modular multiplication performance. Compared to [

8], our design uses similar hardware resources but achieves better cycle performance, resulting in a

improvement in ATP performance for

.

Overall, compared to HDL-based designs, our HLS-based design utilizes resources more efficiently and achieves reasonable cycle performance. While traditional HDL-based designs can fully leverage FPGA IPs to reduce the latency, HLS-based designs provide greater flexibility for rapid design space exploration. Despite operating at lower frequencies, HLS designs can achieve comparable or superior ATP results. In terms of Area-Cycle efficiency, our HLS-based design outperforms the referenced HDL-based designs by to . This validates the significance of our work, demonstrating a pathway to democratize high-performance hardware module design and foster a broader hardware research and developer community.

5.2. System Integration and Future Direction

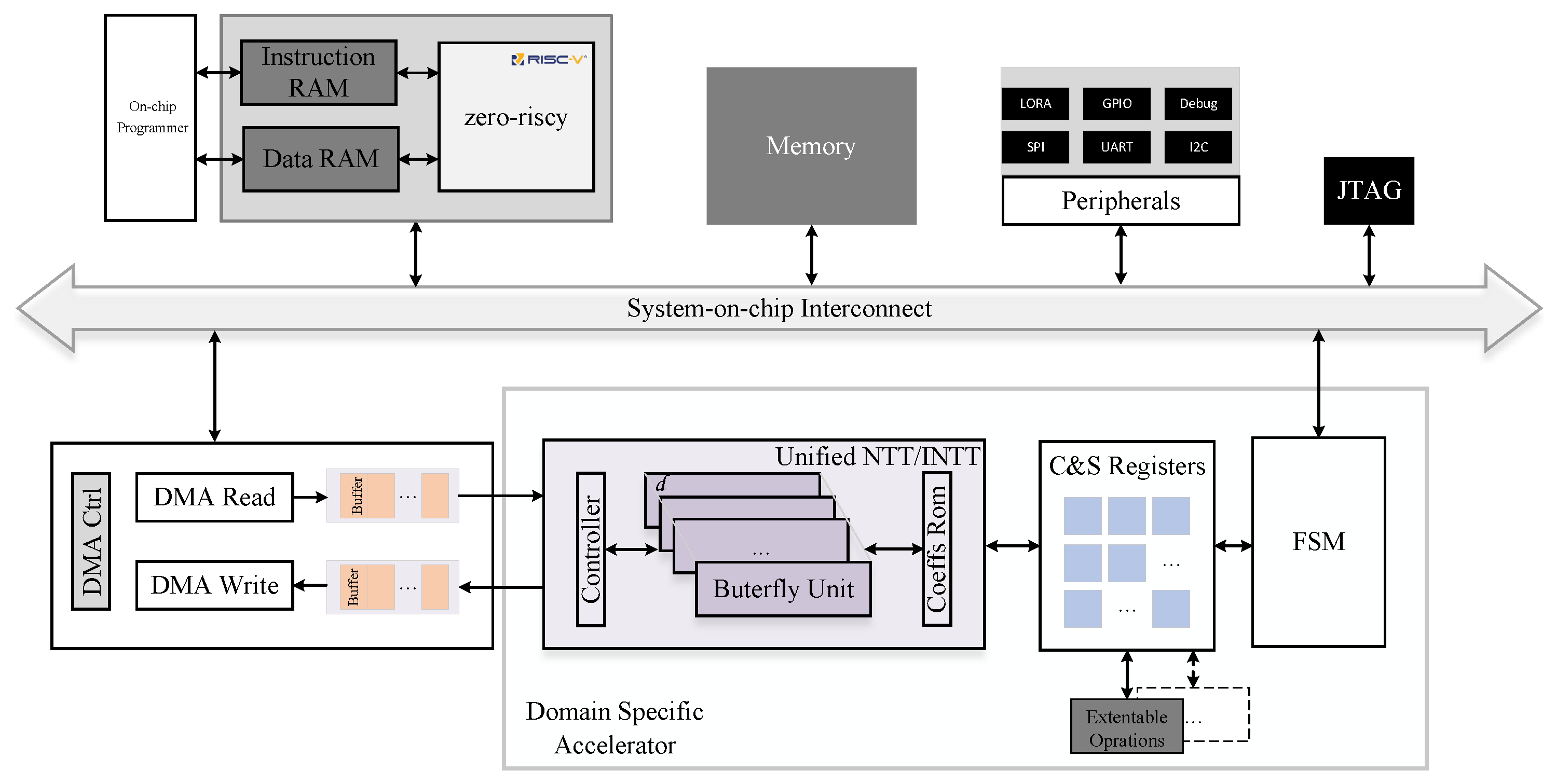

RISC-V is an open-source architecture that offers better reproducibility and flexibility than closed ARM processors, allowing our customized designs to be connected seamlessly at the RTL level. Moreover, our RISC-V platform is derived from the open-source PULPino project, which has a large community and well-established documentation. This allows the design workflow and code in this paper to be open-sourced in the future, so that more researchers and developers can use them freely, explore and implement their designs faster, and optimize lattice-based cryptographic code in different ways. To illustrate the usability, we integrate the unified NTT/INTT module into a RISC-V-based processor system and verify its performance on a real FPGA board. The architecture of the integrated system is shown in Figure 6. This platform is built on the open-source PULPino RISC-V system [40, 41], which has supported several emerging applications [42, 43]. The RISC-V core is zero-riscy, a lightweight core with two pipeline stages. It performs software computations by executing instructions stored in the instruction RAM. The data RAM stores the data used and manipulated during program execution. The on-chip programmer, configured through online programming, handles loading and updating the memory contents. The system is 32-bit and transmits data and control signals through the AXI bus. The DMA controller reads and writes the input and output polynomial coefficients. Additionally, the 1-bit selection signal for NTT and INTT is transferred through the control register.
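To make the role of the 1-bit NTT/INTT selection signal concrete, the sketch below gives a small software reference model in which a single flag switches between the forward and inverse transform. It is an illustration under simplifying assumptions, not the accelerator's algorithm: it uses a naive O(n²) cyclic transform, toy parameters (q = 17, n = 8, ω = 9), and a hypothetical function name `transform`. It requires Python 3.8 or later for modular inverses via `pow`.

```python
def transform(coeffs, q, omega, inverse=False):
    """Naive cyclic NTT (inverse=False) or INTT (inverse=True) over Z_q.

    `omega` must be a primitive n-th root of unity modulo q, where
    n = len(coeffs). The `inverse` flag plays the role of a 1-bit mode select.
    """
    n = len(coeffs)
    # The inverse transform uses omega^-1 and a final scaling by n^-1 mod q.
    w = pow(omega, -1, q) if inverse else omega
    out = [
        sum(c * pow(w, i * j, q) for j, c in enumerate(coeffs)) % q
        for i in range(n)
    ]
    if inverse:
        n_inv = pow(n, -1, q)
        out = [(x * n_inv) % q for x in out]
    return out


# Toy parameters (assumptions for illustration): 9 has order 8 modulo 17.
Q, OMEGA = 17, 9

a = [1, 2, 3, 4, 5, 6, 7, 8]
round_trip = transform(transform(a, Q, OMEGA), Q, OMEGA, inverse=True)

# Cyclic polynomial product via pointwise multiplication in the NTT domain.
f = [1, 1, 0, 0, 0, 0, 0, 0]  # 1 + x
g = [1, 1, 0, 0, 0, 0, 0, 0]  # 1 + x
fg_hat = [(x * y) % Q for x, y in zip(transform(f, Q, OMEGA), transform(g, Q, OMEGA))]
product = transform(fg_hat, Q, OMEGA, inverse=True)  # coefficients of (1 + x)^2
print(round_trip, product)
```

A model of this kind can serve as a golden reference when checking accelerator outputs returned over the bus, since both modes share one entry point just as the hardware shares one datapath.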

The overall design uses HDL, which makes migration across boards convenient. For the integration test, we use the Kintex-7 KC705 evaluation board. Compared to the Virtex-7 board used previously, this board has only 47% of the LUT resources, which may slightly reduce performance. The on-board operating frequency follows the RISC-V platform setting of 100 MHz, and the test results are presented in Table 8. We run the NTT and INTT computations independently and continuously to obtain the cycle counts. The SW cycle count is measured with the RISC-V core computing NTT and INTT in software. The SW-HW cycle count covers the time the RISC-V core takes to obtain the results by invoking the hardware accelerator, including the data transmission time. Code sizes for the software-only and software-hardware co-design approaches are also provided to demonstrate the code size reduction.

The design in [15] presents a polynomial multiplier for qTESLA that incorporates NTT, pointwise multiplication, and INTT, and integrates it into a RISC-V processor via an APB bus. Our design performs similar computations but omits pointwise multiplication and requires additional transmission of the NTT output polynomial and the INTT input polynomial. Table 8 compares the two designs from a system integration perspective. Although our RISC-V processor has lower performance and consumes six times more software cycles for similar computations, our design achieves 1.69×, 2.25×, and 2.71× improvements in latency through software-hardware co-design. Both designs exhibit substantial communication overhead due to the low frequency of the software processor. Higher speeds can be achieved by employing a high-performance processor and a communication bus IP with a higher frequency. The hardware accelerator, which already performs well, can remain at a lower frequency to save power. Additionally, using a wider bus or integrating more hardware modules can reduce the transmission overhead, further improving system performance.

As Figure 7 shows, the HW approach achieves a speed-up of 328.2 to 1303.1 times, while the SW/HW co-design approach achieves a speed-up of 163.6 to 261.2 times. Comparing the SW/HW co-design with the hardware-only configurations of our work at different levels of parallelism, we see that the SW/HW co-design approach, while reducing the amount of code by a factor of 20, gains less from increasing parallelism than the HW approach does on average, which has a speedup ratio of nearly 99.3%. From the I/O overhead depicted in the figure, we observe that as the number of butterfly units increases, the added parallelism yields a roughly proportional improvement in the computation speed of the hardware (HW) approach. In the software-hardware co-design strategy, however, the involvement of the processor in scheduling and I/O operations becomes a bottleneck that constrains overall performance. As shown in Table 8, the actual DMA throughput in the system remains nearly constant (approximately 153 MB/s). This implies that regardless of how fast the accelerator computes (from d = 1 to d = 4, computation time is reduced by a factor of 4), the time spent moving data in and out remains virtually unchanged. Further performance gains therefore depend on more efficient scheduling and DMA mechanisms that raise the data transfer throughput of the bus. Future optimization efforts should explore more effective software/hardware scheduling mechanisms to fully utilize the bus, thereby unlocking the potential of highly parallel hardware accelerators.
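The effect of a constant DMA throughput on the end-to-end speed-up can be illustrated with a simple timing model. The sketch below is a rough approximation under stated assumptions, not a reproduction of Table 8: the function name `sw_hw_time_us`, the polynomial length, and the single-butterfly cycle count are hypothetical, while the 153 MB/s throughput, the 100 MHz clock, and the 32-bit (4-byte) data width come from the text. Processor scheduling overhead is ignored.

```python
def sw_hw_time_us(n_coeffs, d, cycles_d1, freq_mhz=100.0,
                  dma_mb_s=153.0, bytes_per_coeff=4):
    """Return (compute_us, transfer_us, total_us) for one accelerator call.

    Assumes compute time scales as 1/d with d butterfly units and that one
    input and one output polynomial cross the bus at a fixed DMA throughput.
    """
    compute_us = cycles_d1 / d / freq_mhz
    # bytes / (MB/s) yields microseconds when 1 MB = 10^6 bytes
    transfer_us = 2 * n_coeffs * bytes_per_coeff / dma_mb_s
    return compute_us, transfer_us, compute_us + transfer_us


# Hypothetical workload: N = 1024 coefficients, 5120 cycles at d = 1.
N, CYCLES_D1 = 1024, 5120
baseline = sw_hw_time_us(N, 1, CYCLES_D1)
for d in (1, 2, 4):
    compute, transfer, total = sw_hw_time_us(N, d, CYCLES_D1)
    print(f"d={d}: compute={compute:.1f} us, transfer={transfer:.1f} us, "
          f"end-to-end speed-up={baseline[2] / total:.2f}x")
```

In this model the transfer term does not depend on d, so the end-to-end speed-up saturates well below the fourfold gain in computation time, which matches the qualitative behavior described above.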

In summary, our work achieves comparable or superior performance and ATP results compared to existing NTT designs. Through system deployment, we demonstrate that HLS designs can be fully compatible with existing systems, achieving performance levels comparable to Verilog-based designs. Moreover, the proposed approach shows potential for generalization in the horizontal direction. For instance, adapting the NTT design to FFT requires only replacing the top-level butterfly with a complex-valued one, while the phased control logic and the read/write mechanisms of the memory subsystem can be reused. Although a sorting network differs architecturally from NTT, it can be analyzed in the same systematic way: examine the memory access patterns, identify the parallelism bottlenecks, co-design conflict-free accesses, and construct a new description in HLS. For irregular algorithms, such as sparse graph algorithms whose bottlenecks arise from random memory accesses, software-managed caches and prefetching can be designed. These are reasonable inferences for scenarios in which the HLS source code has been migrated. Although not yet experimentally validated, they represent viable extensions of this work that merit thorough exploration. From a vertical perspective, our method can be extended to study RNS-NTT and to explore optimizing it by introducing new dimensions from a hardware design standpoint to identify favorable parallelism. In the future, HLS can be used to rapidly and effectively develop cryptographic libraries for performance evaluation and applications.