Intelligent Trademark Image Segmentation Through Multi-Stage Optimization

Abstract

1. Introduction

- (1)

- A novel image-equalization algorithm for trademarks, color three-channel adaptive histogram equalization (CTCAHE), is introduced. By representing and transforming trademark images in the YCrCb color space, this method applies contrast-limited histogram equalization to achieve adaptive image enhancement, thereby improving clarity and readability.

- (2)

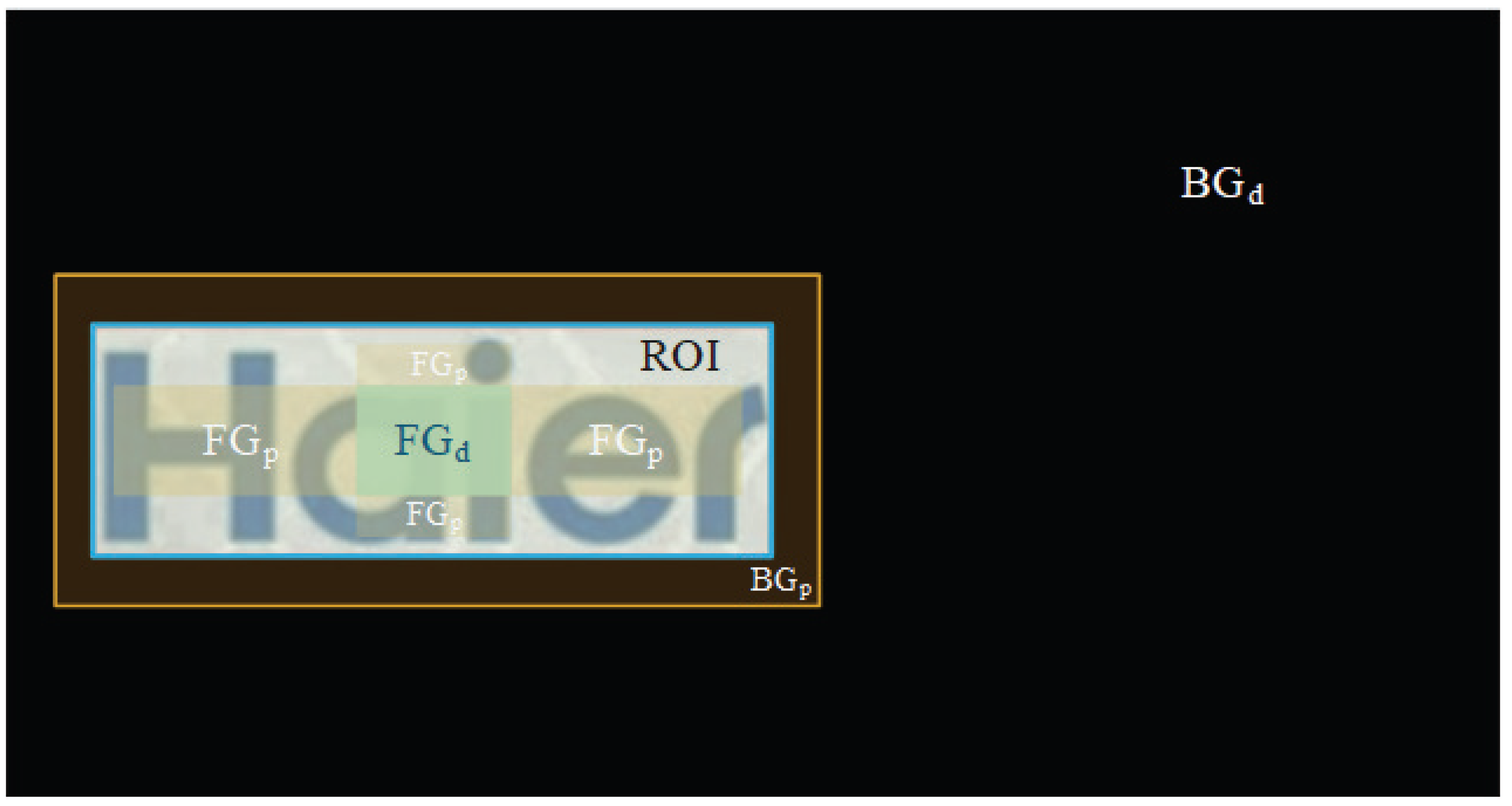

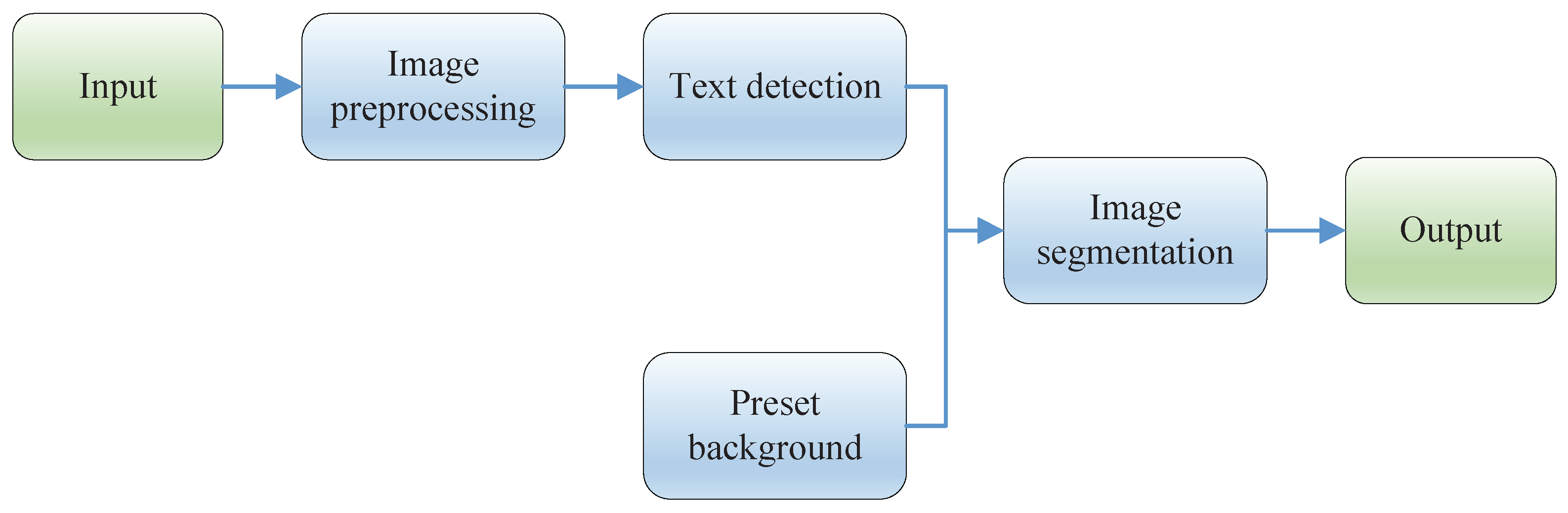

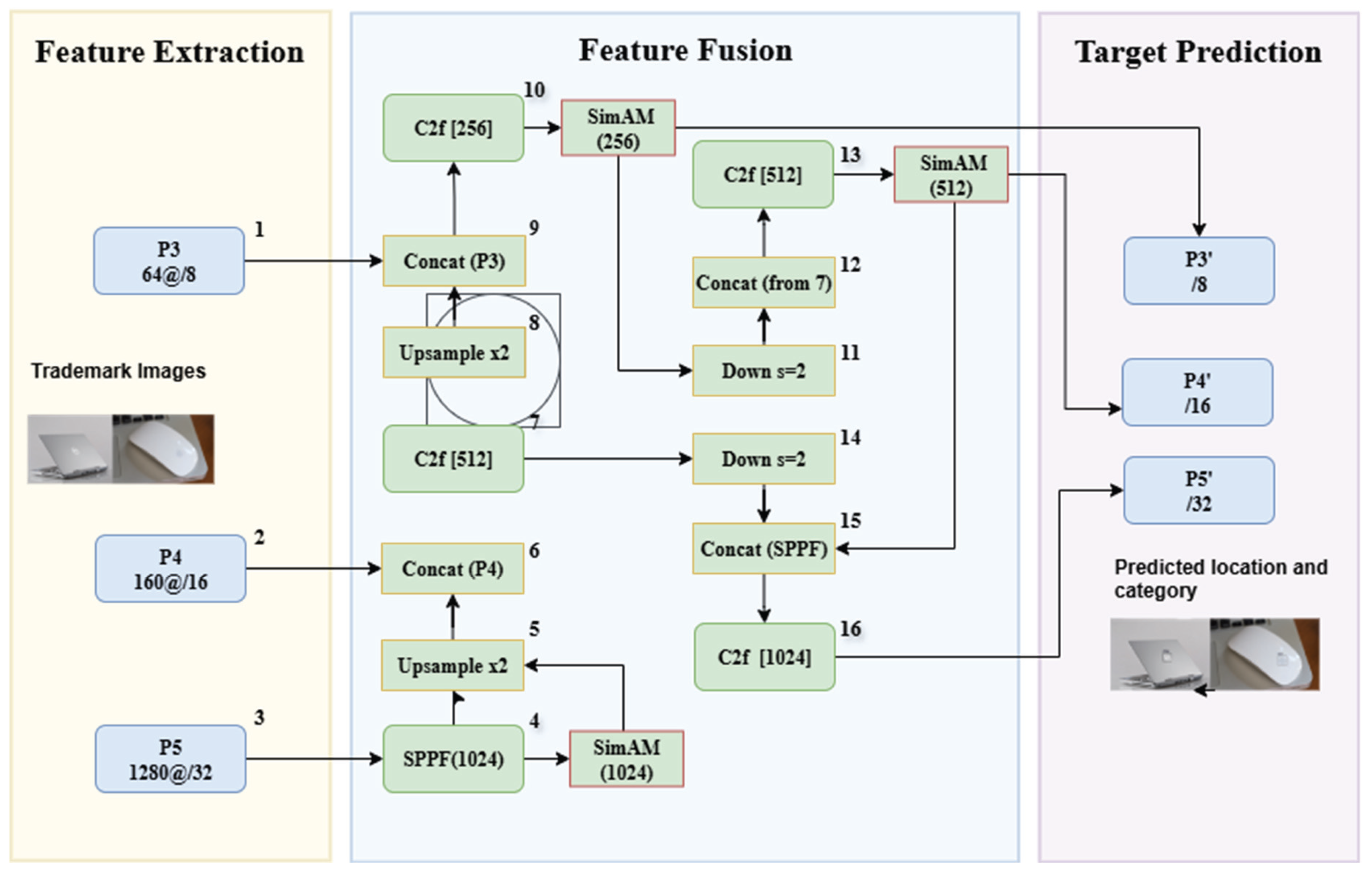

- An automated trademark segmentation algorithm is proposed. The YOLOv8 algorithm replaces manual bounding boxes for automatic localization and pre-judgment of regions of interest (ROI). This step marks reasonable pixel distributions for foreground, background, probable foreground, and probable background before inputting the data into the AT-Cut algorithm for iteration to obtain the optimal segmentation result.

- (3)

- Experimental results on public datasets demonstrate that, compared to the original algorithm, the proposed method achieves significant improvements in segmentation accuracy, validating its effectiveness.
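Contribution (2) does not specify how the four initial labels are derived from a YOLOv8 detection box. The sketch below shows one plausible rule in plain Python, and it is not the authors' method. Pixels outside the box are background. A thin band just inside the box border is probable background. A centred core is foreground, and the rest of the box is probable foreground. The function `init_mask` and its parameters `band` and `core` are hypothetical. The label values follow OpenCV's GrabCut convention (`cv2.GC_BGD` = 0, `cv2.GC_FGD` = 1, `cv2.GC_PR_BGD` = 2, `cv2.GC_PR_FGD` = 3).

```python
# Hypothetical initial-label rule: the paper does not state how AT-Cut derives
# its four pixel classes from the YOLOv8 box. Label values mirror OpenCV's
# GrabCut constants (GC_BGD, GC_FGD, GC_PR_BGD, GC_PR_FGD).
BGD, FGD, PR_BGD, PR_FGD = 0, 1, 2, 3


def init_mask(height, width, box, band=0.1, core=0.2):
    """Build a four-class label mask from a box (x1, y1, x2, y2), x2/y2 exclusive.

    Outside the box -> BGD; inner border band (fraction `band` of the box size)
    -> PR_BGD; centred core (fraction `core` of the box size) -> FGD;
    everything else inside the box -> PR_FGD.
    """
    x1, y1, x2, y2 = box
    bw, bh = x2 - x1, y2 - y1
    bx, by = int(band * bw), int(band * bh)                      # band thickness
    mx, my = int(bw * (1 - core) / 2), int(bh * (1 - core) / 2)  # core inset
    mask = [[BGD] * width for _ in range(height)]
    for y in range(max(0, y1), min(height, y2)):
        for x in range(max(0, x1), min(width, x2)):
            if x < x1 + bx or x >= x2 - bx or y < y1 + by or y >= y2 - by:
                mask[y][x] = PR_BGD          # near the box edge: likely background
            elif x1 + mx <= x < x2 - mx and y1 + my <= y < y2 - my:
                mask[y][x] = FGD             # box centre: assumed foreground
            else:
                mask[y][x] = PR_FGD          # remaining box interior
    return mask
```

If OpenCV were used, the mask could be converted with `numpy.array(mask, dtype=numpy.uint8)` and passed to `cv2.grabCut` with `mode=cv2.GC_INIT_WITH_MASK`. Whether AT-Cut consumes the same encoding is an assumption.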

Preliminaries

2. Related Work

3. Methodology

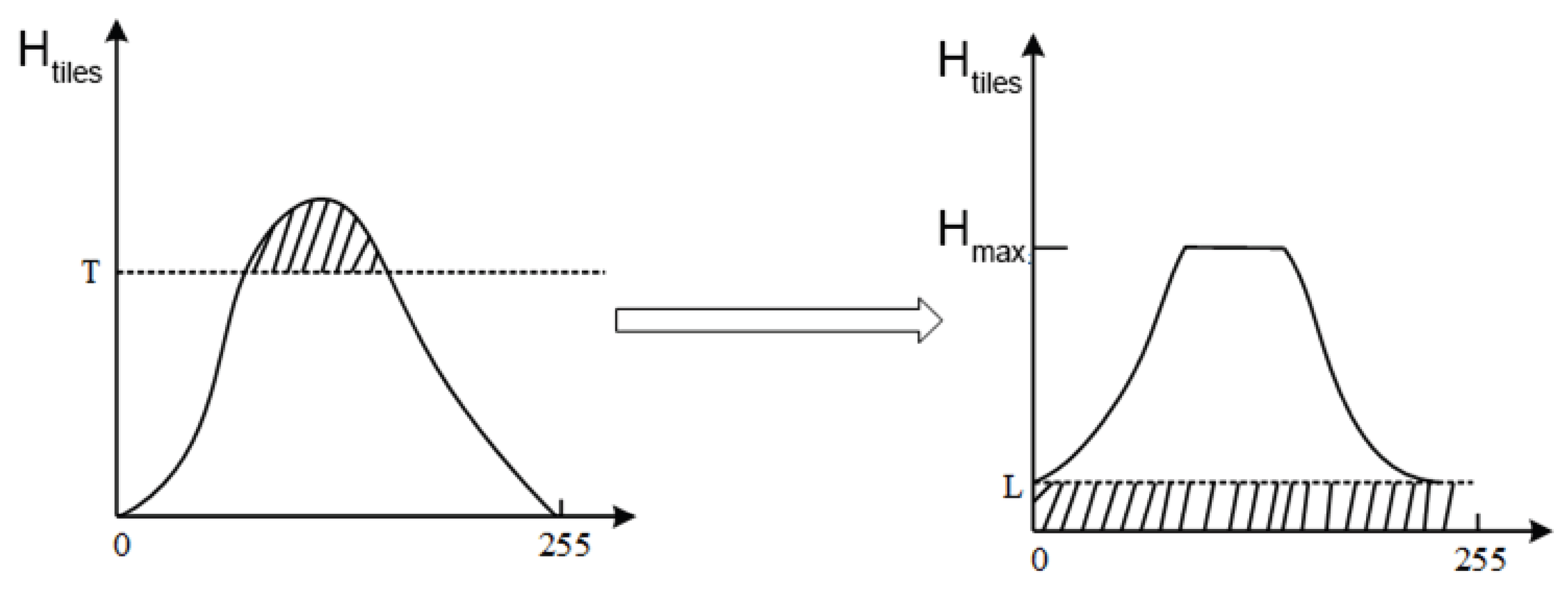

3.1. CTCAHE-Based Image Enhancement Algorithm

- (1) Convert the input image to the YCrCb color space and extract the luminance (Y) channel.

- (2) Divide the Y-channel image into N sub-blocks (tiles) of size M × M. The value of M determines how much local detail remains visible, so adjusting M can reduce detail loss.

- (3) Clip the histogram of each tile at a preset contrast limit and redistribute the clipped pixel counts across the histogram.

- (4) Apply histogram equalization to all redistributed tiles.

- (5) For pixels outside the central region, use linear interpolation to reduce the algorithm's computational cost.

- (6) Merge the processed Y channel back into the YCrCb image and convert the result back to the original color space to complete the image equalization.
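The equalization steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation:

- It uses OpenCV's BT.601 YCrCb coefficients for 8-bit images.
- It treats `clip_limit` (a hypothetical parameter) as a multiple of the mean histogram bin height.
- It spreads clipped counts evenly over all bins.
- It blends the look-up tables of the nearest tile centres. The blend is bilinear in the interior and reduces to linear interpolation along the image border and to a single tile mapping in the corners.

The function names `ctcahe`, `clahe`, `tile_lut` and `neighbors` are illustrative.

```python
import math

# Hypothetical CTCAHE sketch on 8-bit RGB images stored as nested lists.
# Assumptions (not from the paper): OpenCV BT.601 YCrCb coefficients,
# clip limit relative to the mean bin height, even redistribution.


def clamp8(v):
    return max(0, min(255, int(round(v))))


def rgb_to_ycrcb(r, g, b):
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, (r - y) * 0.713 + 128, (b - y) * 0.564 + 128


def ycrcb_to_rgb(y, cr, cb):
    return (clamp8(y + 1.403 * (cr - 128)),
            clamp8(y - 0.714 * (cr - 128) - 0.344 * (cb - 128)),
            clamp8(y + 1.773 * (cb - 128)))


def tile_lut(values, clip_limit):
    """Steps (3)-(4): clip the tile histogram, redistribute, equalize."""
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    limit = max(1, int(clip_limit * len(values) / 256))
    excess = sum(max(0, h - limit) for h in hist)
    bonus, rest = divmod(excess, 256)
    hist = [min(h, limit) + bonus for h in hist]
    for i in range(rest):                      # spread the remainder evenly
        hist[i * 256 // rest] += 1
    lut, cdf = [], 0
    for h in hist:                             # cumulative histogram -> mapping
        cdf += h
        lut.append(clamp8(255 * cdf / len(values)))
    return lut


def neighbors(coord, tile, n):
    """Nearest two tile centres along one axis and the weight of the second."""
    f = (coord + 0.5) / tile - 0.5
    i0 = max(0, min(n - 1, math.floor(f)))
    return i0, min(n - 1, i0 + 1), min(1.0, max(0.0, f - i0))


def clahe(gray, tile=8, clip_limit=2.0):
    """Steps (2)-(5) on an 8-bit luminance image; `tile` plays the role of M."""
    h, w = len(gray), len(gray[0])
    ny, nx = -(-h // tile), -(-w // tile)      # ceil(h / M), ceil(w / M)
    luts = [[tile_lut([gray[y][x]
                       for y in range(ty * tile, min(h, (ty + 1) * tile))
                       for x in range(tx * tile, min(w, (tx + 1) * tile))], clip_limit)
             for tx in range(nx)] for ty in range(ny)]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        y0, y1, wy = neighbors(y, tile, ny)
        for x in range(w):
            x0, x1, wx = neighbors(x, tile, nx)
            v = gray[y][x]
            top = (1 - wx) * luts[y0][x0][v] + wx * luts[y0][x1][v]
            bottom = (1 - wx) * luts[y1][x0][v] + wx * luts[y1][x1][v]
            out[y][x] = clamp8((1 - wy) * top + wy * bottom)
    return out


def ctcahe(rgb, tile=8, clip_limit=2.0):
    ycc = [[rgb_to_ycrcb(*px) for px in row] for row in rgb]             # step (1)
    lum = clahe([[clamp8(p[0]) for p in row] for row in ycc], tile, clip_limit)
    return [[ycrcb_to_rgb(lum[i][j], p[1], p[2]) for j, p in enumerate(row)]
            for i, row in enumerate(ycc)]                                  # step (6)
```

In practice the luminance step would normally use `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` followed by its `apply` method on the Y channel. Note that OpenCV's `tileGridSize` gives the number of tiles per row and column rather than the tile size M.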

3.2. Trademark Detection-Based Coordinate Localization

3.3. AT-Cut Algorithm

Algorithm 1: Image segmentation algorithm.

3.4. Erosion and Dilation

4. Experiments

4.1. Experimental Configuration

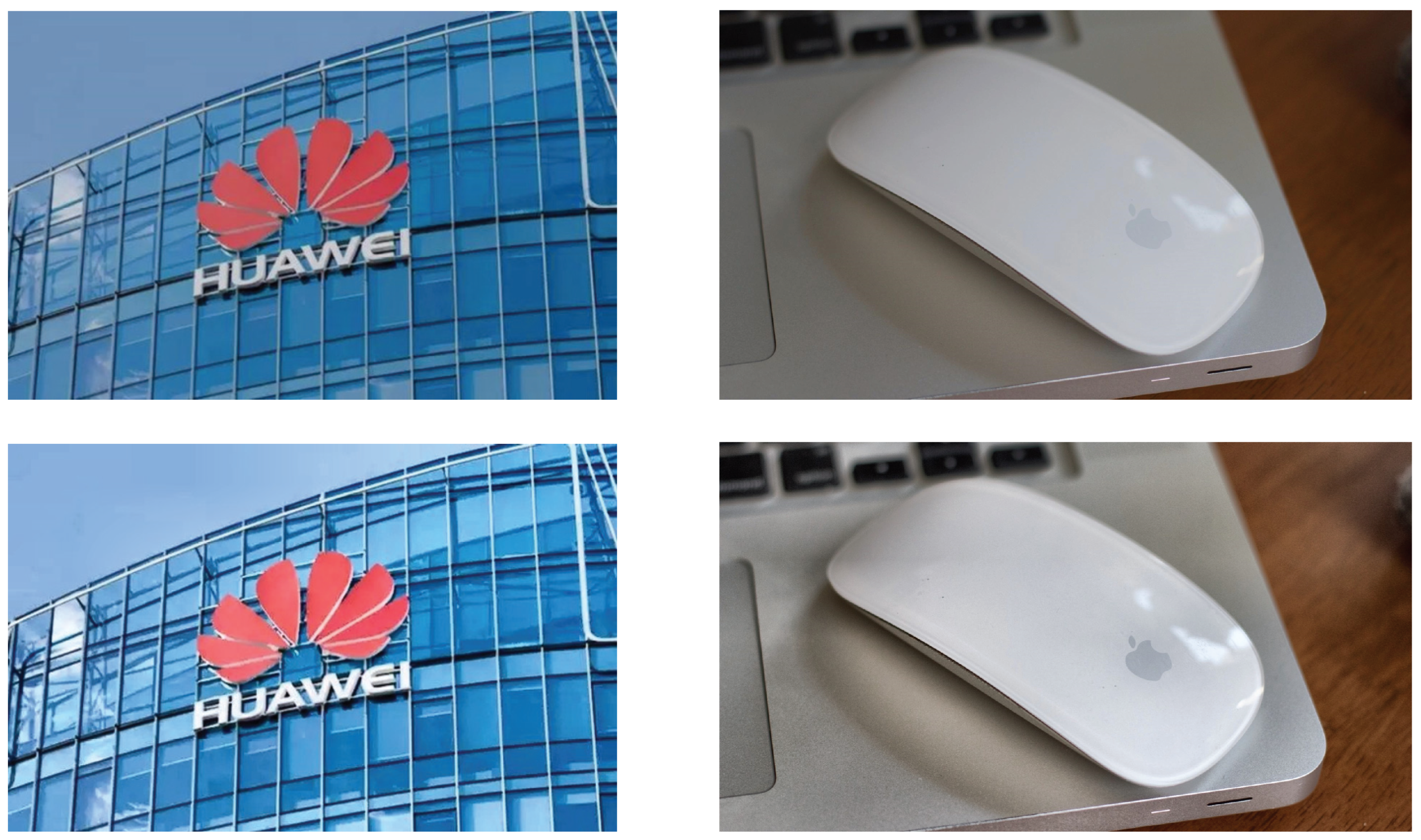

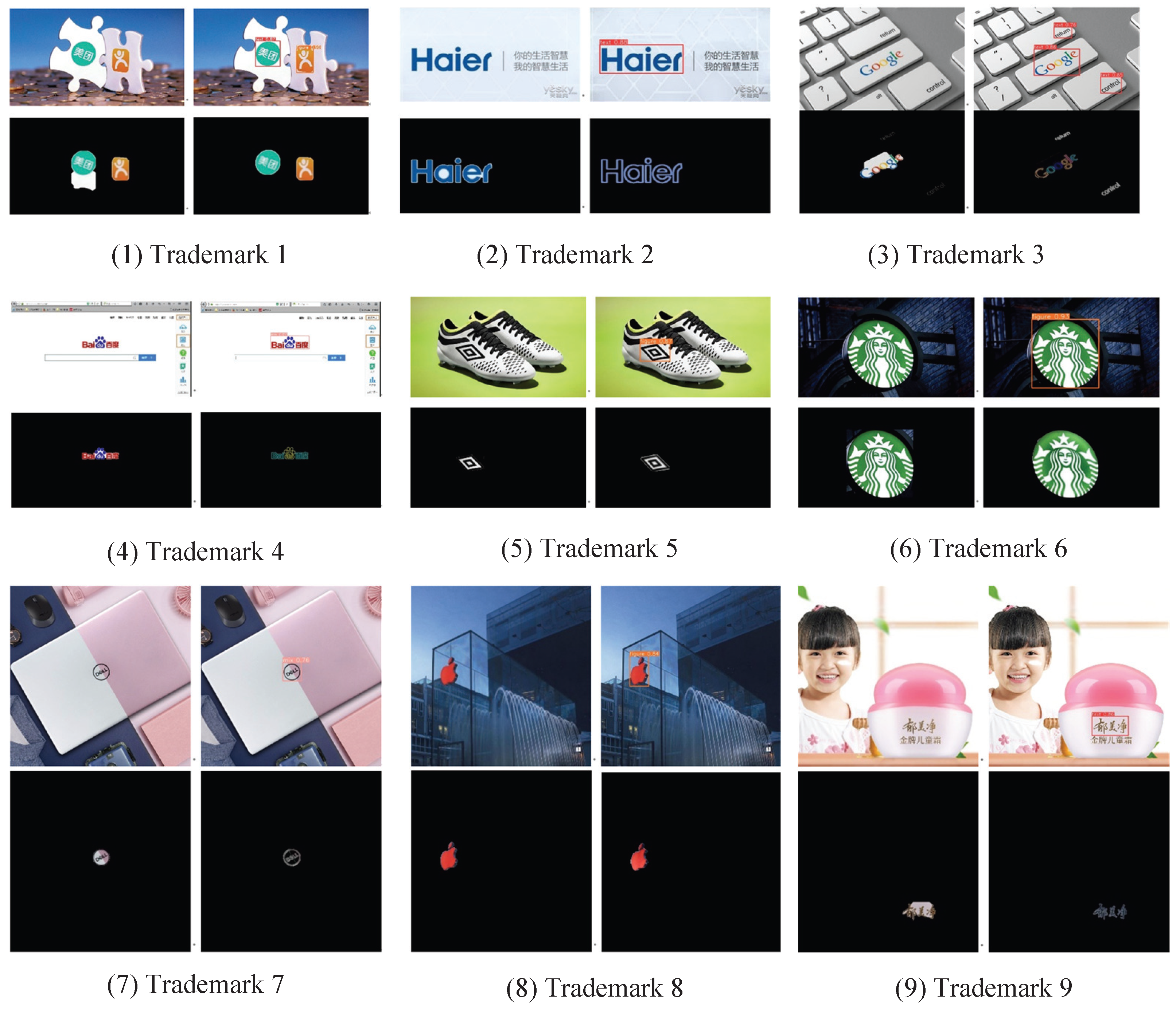

4.2. Qualitative Analysis

- (1) First Group (Figure and Mix Categories): The baseline method produced incomplete segmentation and extracted excessive background. In contrast, the proposed algorithm, benefiting from its image-equalization step, effectively suppressed background interference and extracted nearly complete foreground targets.

- (2) Second Group (Text-Based Logos): Although the baseline method accurately located the rectangular regions of the text-based logos, it introduced white noise around the letters “A” and “E” that was inadvertently included in the segmentation. The proposed algorithm, using mathematical morphology, removed this boundary noise through erosion and separated the intertwined letters “E” and “R”, yielding cleaner and more precise target extraction.

- (3) Third Group (Text-Based Logos): The baseline method failed to detect two of the three text-based targets, misclassifying them as background, and it incorrectly included background elements above the “Google” logo. The proposed algorithm segmented the foreground both accurately and completely.

- (4) Fourth Group: This group posed challenges similar to those of the second group, and the proposed algorithm resolved them.

- (5) Fifth and Sixth Groups (Graphic-Based Logos): In the fifth group, the baseline method lost the outer black rectangle during target extraction; the proposed algorithm retained some background information but segmented the logo shape more completely. In the sixth group, the baseline method preserved all foreground information but, because of poor contrast, also extracted bright background regions. The proposed algorithm reduced the impact of brightness variations and thereby minimized these errors, yielding the better of the two segmentation results.

- (6) Seventh, Eighth, and Ninth Groups (Small Logo Segmentation): These groups focused on small logos from different categories. For the “DELL” logo, the proposed algorithm used morphological erosion and dilation to refine the main elements (the circle and the text) directly, producing a clearer result. The “Apple” logo, with its distinct color contrast, was segmented accurately by both methods, although the proposed algorithm showed slightly better boundary precision. For the “Yujing” logo, the baseline method showed significant shortcomings, whereas the proposed algorithm achieved a more robust and accurate extraction.
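The erosion and dilation credited above with removing boundary noise and refining small logos can be illustrated with a binary opening (erosion followed by dilation). This is a minimal sketch assuming a 0/1 mask stored as nested lists and a square structuring element of side 2·`radius`+1. The paper does not state the element's shape or size, and the function names are hypothetical. Opening removes foreground structures smaller than the structuring element, such as isolated specks or thin connections, while largely preserving larger shapes.

```python
# Hypothetical binary morphology sketch with a (2r+1) x (2r+1) square element.
# Pixels outside the image are ignored (the window is clipped at the border).


def _morph(mask, radius, reduce_fn):
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [mask[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = int(reduce_fn(window))
    return out


def erode(mask, radius=1):
    # Keep a pixel only if its whole window is foreground.
    return _morph(mask, radius, all)


def dilate(mask, radius=1):
    # Set a pixel if any pixel in its window is foreground.
    return _morph(mask, radius, any)


def opening(mask, radius=1):
    """Erosion then dilation: drops specks thinner than the element."""
    return dilate(erode(mask, radius), radius)
```

With OpenCV, the same operation on a NumPy mask is `cv2.morphologyEx(mask, cv2.MORPH_OPEN, kernel)`.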

4.3. Quantitative Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Wang, X. Intelligent Trademark Image Segmentation Through Multi-Stage Optimization. Electronics 2025, 14, 3914. https://doi.org/10.3390/electronics14193914
