Analysis of Sensor Location and Time–Frequency Feature Contributions in IMU-Based Gait Identity Recognition

Abstract

1. Introduction

- We proposed a time–frequency architecture that integrates time-domain and frequency-domain features from multiple IMU sensors, allowing the model to capture both short-term motion dynamics and periodic motion patterns for more accurate identity recognition.

- We conducted a quantitative analysis of the contributions of different sensor positions and signal modalities (time-domain and frequency-domain features) in multi-IMU identity recognition.

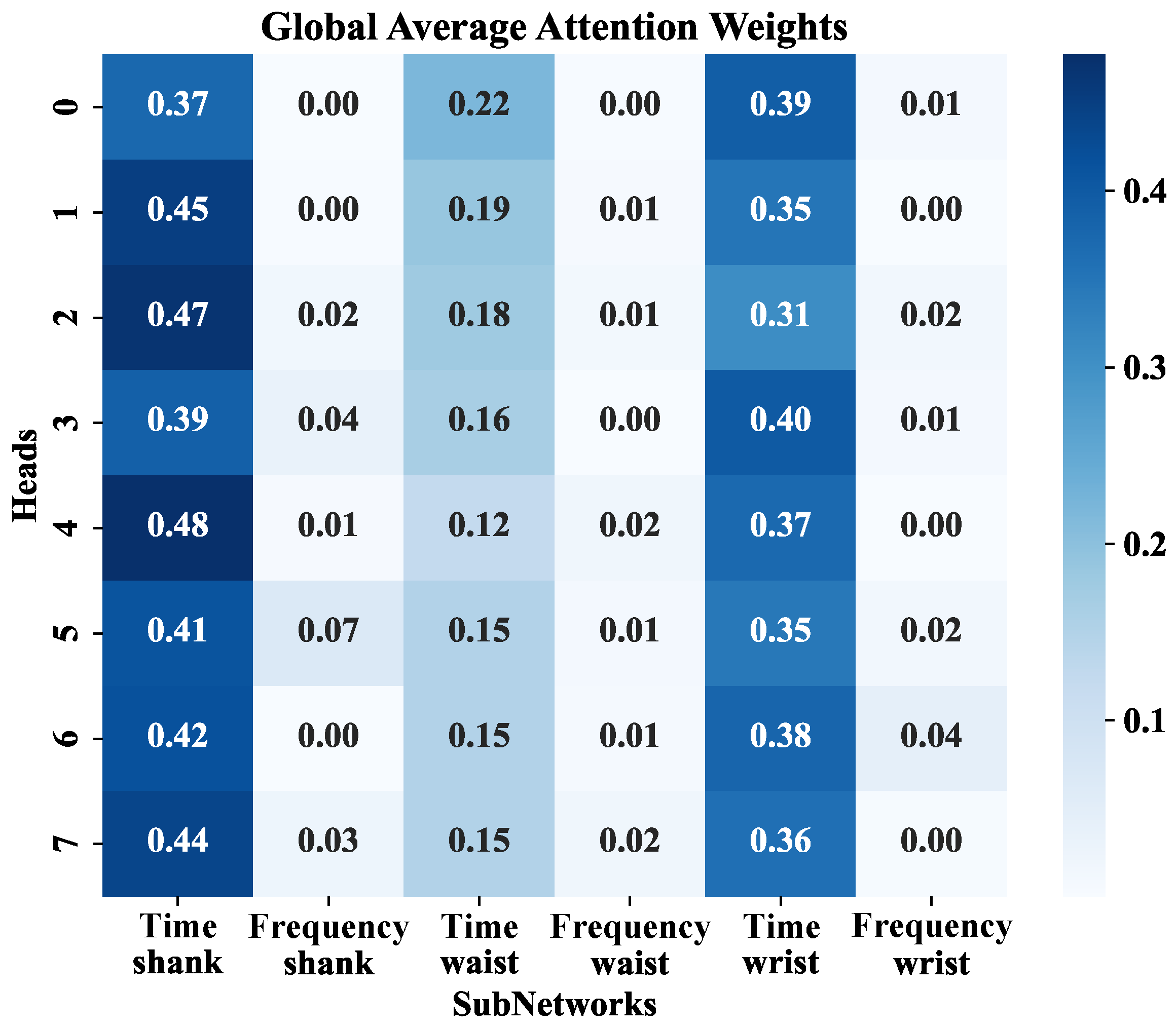

- We demonstrated that lower-limb (shank) IMUs and time-domain features play the dominant role in identification performance, while signals from other positions and frequency-domain features mainly serve as auxiliary or redundant information that enhances system robustness and generalization.
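The time–frequency idea behind the first contribution can be shown with a minimal sketch. It assumes, without confirmation from this text, that a frequency-domain branch receives the one-sided magnitude spectrum of each windowed IMU channel. The sampling rate, window length, signal and helper names (`magnitude_spectrum`, `dominant_bin`) are all hypothetical. A periodic gait component appears as a peak at its frequency bin, while the raw window keeps the short-term dynamics.

```python
import cmath
import math


def magnitude_spectrum(window):
    """One-sided DFT magnitude of a real-valued window (naive O(N^2) DFT).

    Stands in for the input of a hypothetical frequency-domain branch.
    """
    n = len(window)
    spectrum = []
    for k in range(n // 2 + 1):  # bins 0..N/2 suffice for a real signal
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(window))
        spectrum.append(abs(acc))
    return spectrum


def dominant_bin(window):
    """Index of the strongest non-DC frequency bin."""
    mags = magnitude_spectrum(window)
    return max(range(1, len(mags)), key=lambda k: mags[k])


# Hypothetical 1 s shank-acceleration window sampled at 64 Hz (assumed values):
# a 2 Hz step rhythm, a weaker 6 Hz component and a constant offset.
fs = 64
window = [
    1.0 + math.sin(2 * math.pi * 2 * t / fs) + 0.3 * math.sin(2 * math.pi * 6 * t / fs)
    for t in range(fs)
]

# Time-domain view: the raw samples. Frequency-domain view: the spectrum.
peak = dominant_bin(window)
print(peak, peak * fs / len(window), "Hz")  # bin spacing is fs / N = 1 Hz; prints: 2 2.0 Hz
```

The naive DFT keeps the sketch dependency-free. In practice an FFT routine such as `numpy.fft.rfft` would replace the quadratic loop.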

2. Related Work

2.1. IMU-Based Gait and Identity Recognition

2.2. Time–Frequency Feature Extraction in Wearable Biometrics

2.3. Multi-Sensor Position Fusion for Wearable Identity Recognition

2.4. Deep Learning and Attention-Based Fusion in Wearable Sensing

3. Methods

- (1) Six parallel sub-networks, one for each combination of three sensor positions (shank, waist, wrist) and two modalities (time and frequency), each producing a 512-dimensional embedding so that the branches are directly comparable;

- (2) A multi-head attention-gated fusion module that yields a fused representation and per-branch importance scores;

- (3) A lightweight classifier for identity recognition.
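The fusion step in item (2) can be made concrete with a minimal sketch of generic multi-head attention-gated fusion; it is not the authors' module. It assumes that each head scores every branch with its own gating vector (hand-set here, normally learned) over one slice of the embedding, converts the scores into softmax weights across the six branches, and takes the weighted sum. Averaging the head weights then gives one importance score per branch. Embeddings are shortened from 512 to 4 dimensions, and the branch names, gate values and `attention_gated_fusion` helper are hypothetical.

```python
import math

BRANCHES = ["shank_time", "shank_freq", "waist_time", "waist_freq", "wrist_time", "wrist_freq"]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_gated_fusion(embeddings, gates, num_heads):
    """Fuse per-branch embeddings with multi-head attention gating.

    embeddings: B vectors of length D (one per branch).
    gates: num_heads gating vectors, each of length D // num_heads.
    Returns (fused vector of length D, per-branch importance scores summing to 1).
    """
    dim = len(embeddings[0])
    head_dim = dim // num_heads
    fused = [0.0] * dim
    importance = [0.0] * len(embeddings)
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        # Score each branch: dot product of its head slice with this head's gate.
        scores = [sum(g * e for g, e in zip(gates[h], emb[lo:hi])) for emb in embeddings]
        weights = softmax(scores)  # attention over branches for this head
        for b, emb in enumerate(embeddings):
            for d in range(lo, hi):
                fused[d] += weights[b] * emb[d]
            importance[b] += weights[b] / num_heads  # average over heads
    return fused, importance


# Toy 4-dimensional embeddings standing in for the 512-dimensional ones.
embeddings = [
    [2.0, 2.0, 2.0, 2.0],  # shank_time
    [1.0, 1.0, 1.0, 1.0],  # shank_freq
    [0.5, 0.5, 0.5, 0.5],  # waist_time
    [0.0, 0.0, 0.0, 0.0],  # waist_freq
    [1.0, 1.0, 1.0, 1.0],  # wrist_time
    [0.5, 0.5, 0.5, 0.5],  # wrist_freq
]
gates = [[1.0, 1.0], [1.0, 1.0]]  # hypothetical values; learned in a real model
fused, importance = attention_gated_fusion(embeddings, gates, num_heads=2)
for name, score in sorted(zip(BRANCHES, importance), key=lambda item: item[1], reverse=True):
    print(f"{name:<11} {score:.3f}")
```

Because the weights form a convex combination over branches, the fused vector in this sketch keeps the size of a single branch embedding rather than the 6 × 512 = 3072 dimensions that plain concatenation would give. A lightweight classifier, item (3), would then map it to subject logits.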

Algorithm 1. TFAGNet for identity recognition.

3.1. Parallel Sub-Networks for Multi-Modal Feature Extraction

3.2. Attention-Gated Fusion Module

3.3. Classification Layer

4. Experiments

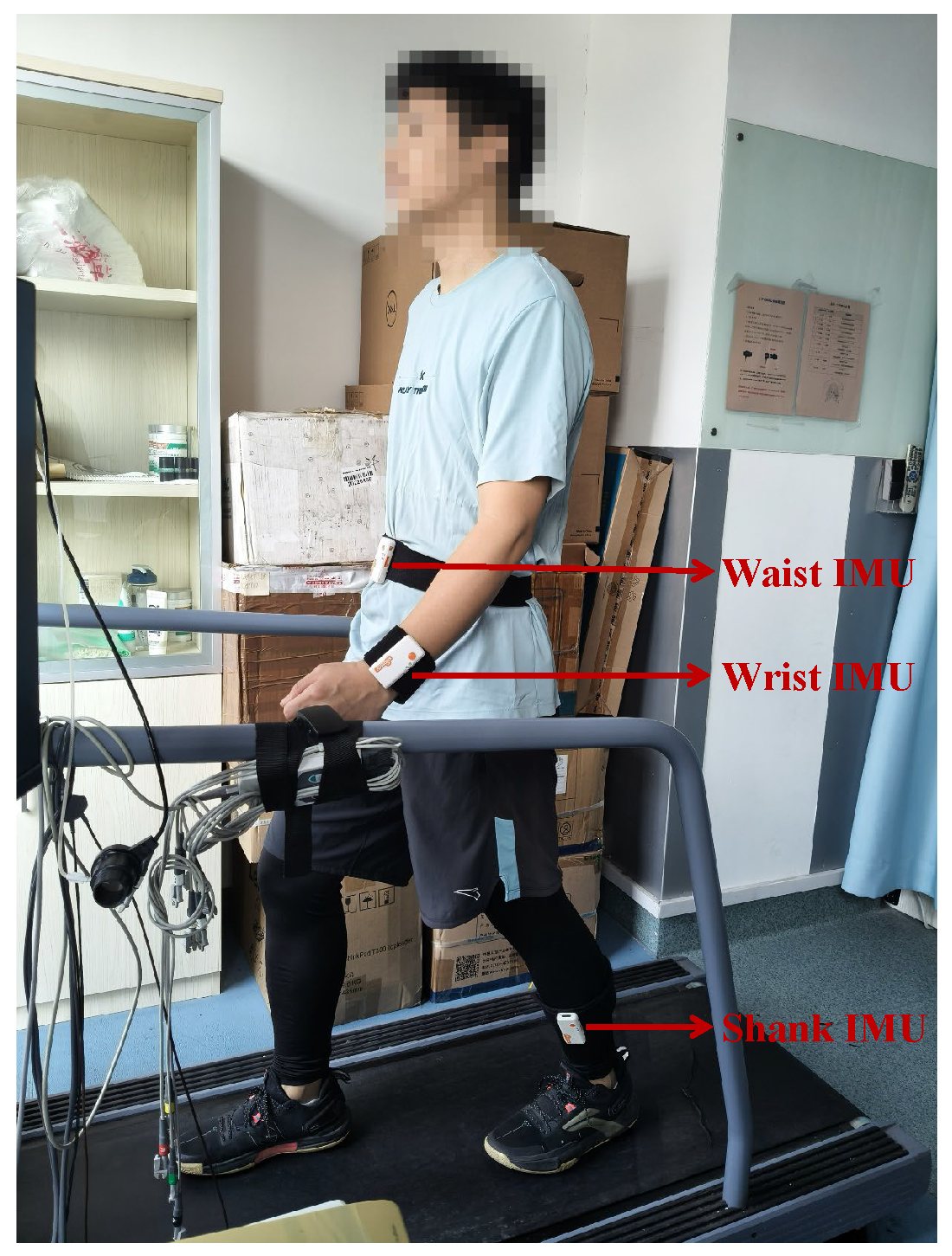

4.1. Data Collection

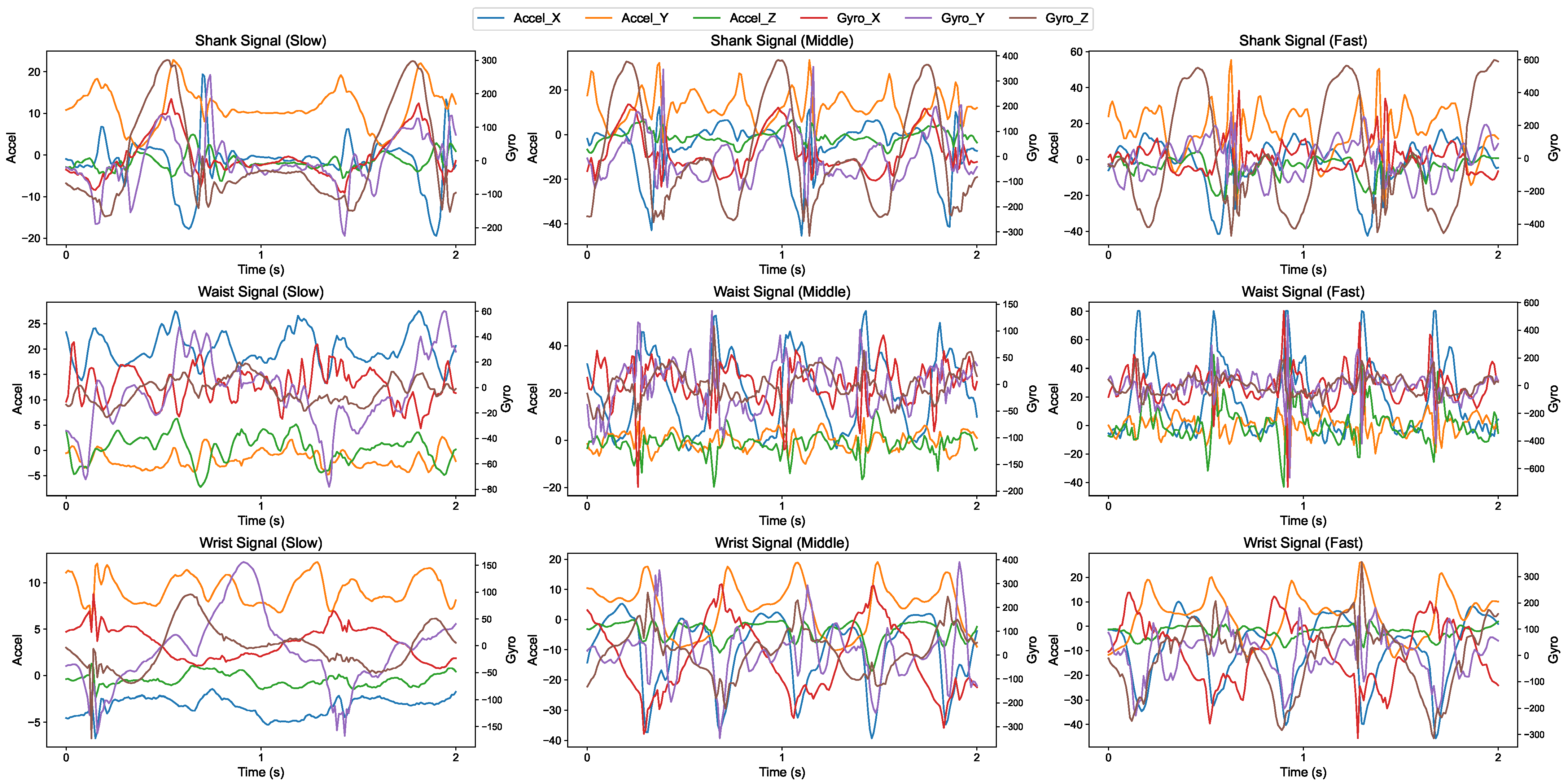

4.2. Data Preprocessing

4.3. Experimental Settings

5. Results and Analysis

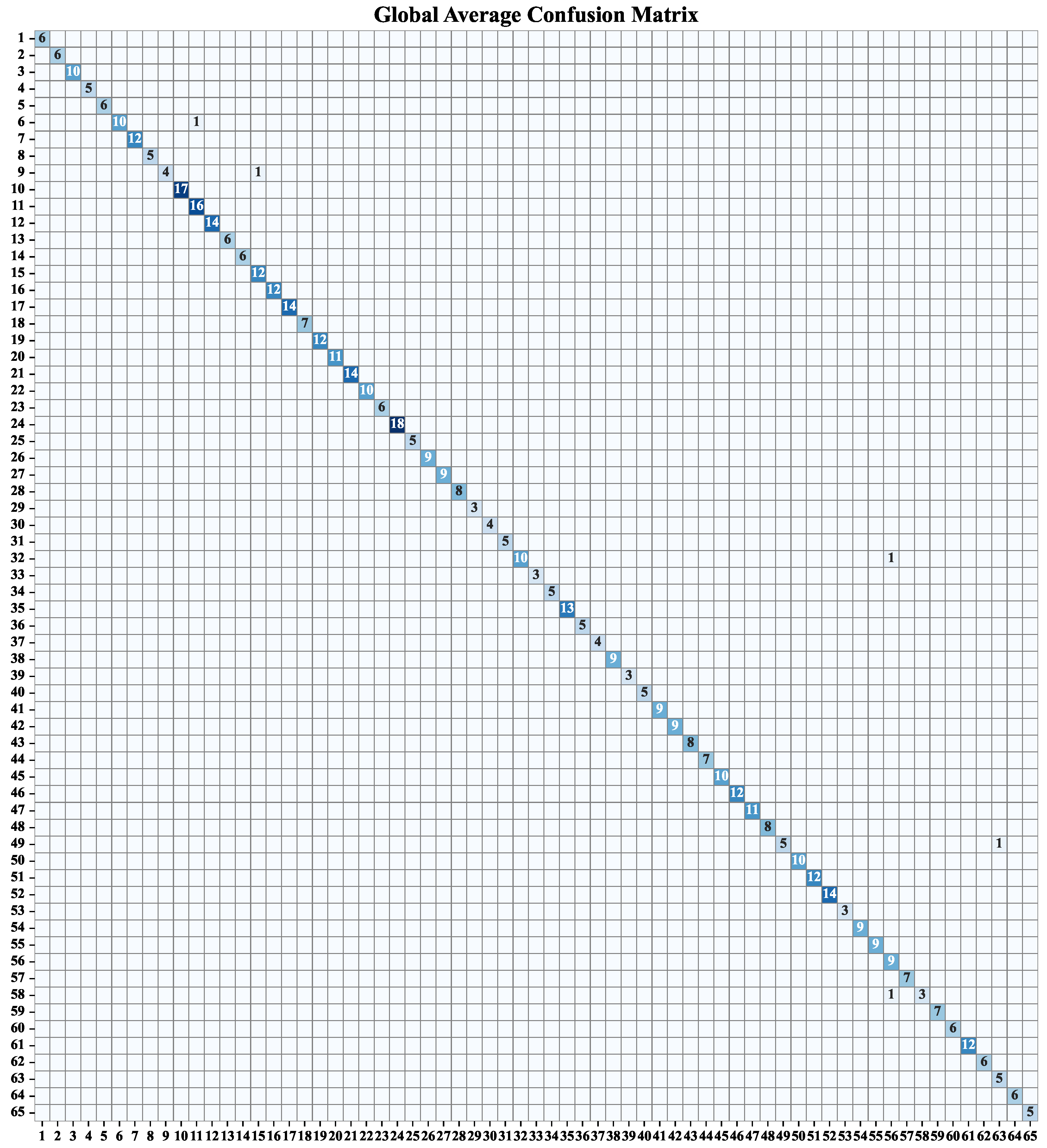

5.1. Gait Identification Performance

5.2. Comparison with Baseline Methods

5.3. Impact of Signal Modalities and Sensor Placements

5.4. Impact of Architectural Components

5.5. Practical Applications

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Liu, Y.; Ivanov, K.; Wang, J.; Xiong, F.; Wang, J.; Wang, M.; Nie, Z.; Wang, L.; Yan, Y. Topological data analysis for robust gait biometrics based on wearable sensors. IEEE Trans. Consum. Electron. 2024, 70, 4910–4921.

2. Zhang, Z.; Ning, H.; Farha, F.; Ding, J.; Choo, K.K.R. Artificial intelligence in physiological characteristics recognition for internet of things authentication. Digit. Commun. Netw. 2024, 10, 740–755.

3. Li, M.; Huang, B.; Tian, G. A comprehensive survey on 3D face recognition methods. Eng. Appl. Artif. Intell. 2022, 110, 104669.

4. Hou, B.; Zhang, H.; Yan, R. Finger-vein biometric recognition: A review. IEEE Trans. Instrum. Meas. 2022, 71, 5020426.

5. Nguyen, K.; Proença, H.; Alonso-Fernandez, F. Deep learning for iris recognition: A survey. ACM Comput. Surv. 2024, 56, 1–35.

6. Li, Y.; Sun, X.; Yang, Z.; Huang, H. Snnauth: Sensor-based continuous authentication on smartphones using spiking neural networks. IEEE Internet Things J. 2024, 11, 15957–15968.

7. Sun, Y.; Lo, B. An artificial neural network framework for gait-based biometrics. IEEE J. Biomed. Health Inform. 2018, 23, 987–998.

8. Manupibul, U.; Tanthuwapathom, R.; Jarumethitanont, W.; Kaimuk, P.; Limroongreungrat, W.; Charoensuk, W. Integration of force and IMU sensors for developing low-cost portable gait measurement system in lower extremities. Sci. Rep. 2023, 13, 10653.

9. Papavasileiou, I.; Qiao, Z.; Zhang, C.; Zhang, W.; Bi, J.; Han, S. GaitCode: Gait-based continuous authentication using multimodal learning and wearable sensors. Smart Health 2021, 19, 100162.

10. Liu, S.; Shao, W.; Li, T.; Xu, W.; Song, L. Recent advances in biometrics-based user authentication for wearable devices: A contemporary survey. Digit. Signal Process. 2022, 125, 103120.

11. Marsico, M.D.; Mecca, A. A survey on gait recognition via wearable sensors. ACM Comput. Surv. (CSUR) 2019, 52, 1–39.

12. Derawi, M.O.; Nickel, C.; Bours, P.; Busch, C. Unobtrusive user-authentication on mobile phones using biometric gait recognition. In Proceedings of the 2010 Sixth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Darmstadt, Germany, 15–17 October 2010; IEEE: New York, NY, USA, 2010; pp. 306–311.

13. Mantyjarvi, J.; Lindholm, M.; Vildjiounaite, E.; Makela, S.M.; Ailisto, H. Identifying users of portable devices from gait pattern with accelerometers. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Philadelphia, PA, USA, 23 March 2005; IEEE: New York, NY, USA, 2005; Volume 2, pp. ii/973–ii/976.

14. Li, G.; Huang, L.; Xu, H. iwalk: Let your smartphone remember you. In Proceedings of the 2017 4th International Conference on Information Science and Control Engineering (ICISCE), Changsha, China, 21–23 July 2017; IEEE: New York, NY, USA, 2017; pp. 414–418.

15. Sudhakar, S.R.V.; Kayastha, N.; Sha, K. ActID: An efficient framework for activity sensor based user identification. Comput. Secur. 2021, 108, 102319.

16. Nickel, C.; Wirtl, T.; Busch, C. Authentication of smartphone users based on the way they walk using k-NN algorithm. In Proceedings of the 2012 Eighth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Piraeus-Athens, Greece, 18–20 July 2012; IEEE: New York, NY, USA, 2012; pp. 16–20.

17. Luca, R.; Bejinariu, S.I.; Costin, H.; Rotaru, F. Inertial data based learning methods for person authentication. In Proceedings of the 2021 International Symposium on Signals, Circuits and Systems (ISSCS), Iasi, Romania, 15–16 July 2021; IEEE: New York, NY, USA, 2021; pp. 1–4.

18. Nickel, C.; Busch, C. Classifying accelerometer data via hidden markov models to authenticate people by the way they walk. IEEE Aerosp. Electron. Syst. Mag. 2013, 28, 29–35.

19. Rong, L.; Jianzhong, Z.; Ming, L.; Xiangfeng, H. A wearable acceleration sensor system for gait recognition. In Proceedings of the 2007 2nd IEEE Conference on Industrial Electronics and Applications, Harbin, China, 23–25 May 2007; IEEE: New York, NY, USA, 2007; pp. 2654–2659.

20. Lu, H.; Huang, J.; Saha, T.; Nachman, L. Unobtrusive gait verification for mobile phones. In Proceedings of the 2014 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 13–17 September 2014; pp. 91–98.

21. Ahmad, M.; Alqarni, M.A.; Khan, A.; Khan, A.; Hussain Chauhdary, S.; Mazzara, M.; Umer, T.; Distefano, S. Smartwatch-Based Legitimate User Identification for Cloud-Based Secure Services. Mob. Inf. Syst. 2018, 2018, 5107024.

22. Hu, M.; Zhang, K.; You, R.; Tu, B. Multisensor-based continuous authentication of smartphone users with two-stage feature extraction. IEEE Internet Things J. 2022, 10, 4708–4724.

23. Middya, A.I.; Roy, S.; Mandal, S. User recognition in participatory sensing systems using deep learning based on spectro-temporal representation of accelerometer signals. Knowl. Based Syst. 2022, 258, 110046.

24. Chen, L.; Zhang, Y.; Peng, L. Metier: A deep multi-task learning based activity and user recognition model using wearable sensors. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–18.

25. Dehzangi, O.; Taherisadr, M.; ChangalVala, R. IMU-based gait recognition using convolutional neural networks and multi-sensor fusion. Sensors 2017, 17, 2735.

26. Asuncion, L.V.R.; De Mesa, J.X.P.; Juan, P.K.H.; Sayson, N.T.; Cruz, A.R.D. Thigh motion-based gait analysis for human identification using inertial measurement units (IMUs). In Proceedings of the 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio City, Philippines, 29 November–2 December 2018; IEEE: New York, NY, USA, 2018; pp. 1–6.

27. Huang, H.; Zhou, P.; Li, Y.; Sun, F. A lightweight attention-based CNN model for efficient gait recognition with wearable IMU sensors. Sensors 2021, 21, 2866.

28. Lee, Y.J.; Wu, C.C. One step of gait information from sensing walking surface for personal identification. IEEE Sens. J. 2023, 23, 5243–5250.

29. Liu, J.; Song, W.; Shen, L.; Han, J.; Ren, K. Secure user verification and continuous authentication via earphone imu. IEEE Trans. Mob. Comput. 2022, 22, 6755–6769.

30. Venkatachalam, S.; Nair, H.; Vellaisamy, P.; Zhou, Y.; Youssfi, Z.; Shen, J.P. Realtime person identification via gait analysis using imu sensors on edge devices. In Proceedings of the 2024 International Conference on Neuromorphic Systems (ICONS), Arlington, VA, USA, 30 July–2 August 2024; IEEE: New York, NY, USA, 2024; pp. 371–375.

31. Yuan, J.; Zhang, Y.; Liu, S.; Zhu, R. Wearable leg movement monitoring system for high-precision real-time metabolic energy estimation and motion recognition. Research 2023, 6, 0214.

32. Cortes, V.M.P.; Chatterjee, A.; Khovalyg, D. Dynamic personalized human body energy expenditure: Prediction using time series forecasting LSTM models. Biomed. Signal Process. Control 2024, 87, 105381.

33. Lee, Y.J.; Wu, Y.S.; Lin, P.C. Utilization of two types of feature datasets with image-based and time series deep learning models in recognizing walking status and revealing personal identification. Adv. Eng. Inform. 2024, 62, 102729.

34. Zhao, C.; Gao, F.; Shen, Z. Multi-motion sensor behavior based continuous authentication on smartphones using gated two-tower transformer fusion networks. Comput. Secur. 2024, 139, 103698.

35. Guo, Z.; Shen, W.; Xiao, M.; Cui, L.; Xie, D. Transformer-Based Biometrics Method for Smart Phone Continuous Authentication. In Proceedings of the 2024 International Conference on Networking, Sensing and Control (ICNSC), Hangzhou, China, 18–20 October 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

36. Nguyen, K.N.; Rasnayaka, S.; Wickramanayake, S.; Meedeniya, D.; Saha, S.; Sim, T. Spatio-temporal dual-attention transformer for time-series behavioral biometrics. IEEE Trans. Biom. Behav. Identity Sci. 2024, 6, 591–601.

37. Lu, Z.; Zhou, H.; Wang, L.; Kong, D.; Lyu, H.; Wu, H.; Chen, B.; Chen, F.; Dong, N.; Yang, G. GaitFormer: Two-Stream Transformer Gait Recognition Using Wearable IMU Sensors in the Context of Industry 5.0. IEEE Sens. J. 2025, 25, 19947–19956.

38. Yi, S.; Mei, Z.; Ivanov, K.; Mei, Z.; He, T.; Zeng, H. Gait-based identification using wearable multimodal sensing and attention neural networks. Sens. Actuators A Phys. 2024, 374, 115478.

39. Huan, R.; Dong, G.; Cui, J.; Jiang, C.; Chen, P.; Liang, R. INSENGA: Inertial sensor gait recognition method using data imputation and channel attention weight redistribution. IEEE Sens. J. 2025.

40. Li, J.; Wen, Y.; He, L. Scconv: Spatial and channel reconstruction convolution for feature redundancy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 6153–6162.

| Statistical Characteristic | All | Males | Females |
|---|---|---|---|
| Number of Subjects | 65 | 30 | 35 |
| Age (years) | 27.78 ± 6.12 | 28.20 ± 6.31 | 27.43 ± 5.93 |
| Height (cm) | 168.55 ± 8.43 | 175.55 ± 5.53 | 162.56 ± 5.28 |
| Weight (kg) | 61.97 ± 11.31 | 70.15 ± 9.84 | 54.97 ± 6.95 |

| Hyperparameters | Value |
|---|---|
| Batch Size | 64 |
| Optimizer | Adam |
| Initial Learning Rate | 0.0001 |
| Learning Rate Scheduler | StepLR (gamma = 0.5, step_size = 20) |
| Epochs | 50 |
| Loss Function | Cross-Entropy Loss |
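Under these settings, and assuming the scheduler is stepped once per epoch (the usual pattern with PyTorch's `torch.optim.lr_scheduler.StepLR`, which multiplies the rate by `gamma` every `step_size` scheduler steps), the learning rate follows lr = 0.0001 × 0.5^⌊epoch / 20⌋. The 50 epochs therefore run at three rates. The sketch below only reproduces that arithmetic; the `step_lr` helper is hypothetical.

```python
INITIAL_LR = 1e-4
GAMMA = 0.5
STEP_SIZE = 20
EPOCHS = 50


def step_lr(epoch, initial_lr=INITIAL_LR, gamma=GAMMA, step_size=STEP_SIZE):
    """Learning rate in effect during a 0-indexed epoch under StepLR-style decay."""
    return initial_lr * gamma ** (epoch // step_size)


schedule = [step_lr(e) for e in range(EPOCHS)]
# Epochs 0-19 use 1e-4, epochs 20-39 use 5e-5, epochs 40-49 use 2.5e-5.
for lr in sorted(set(schedule), reverse=True):
    print(f"{lr:.1e}: {schedule.count(lr)} epochs")
```

If the scheduler were instead stepped per batch, the same `step_size` would decay the rate far more often, so the stepping granularity matters when reproducing this configuration.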

Identity recognition (2800 samples from 65 subjects).

| Year | Method | ACC | PRE | REC | F1 | MCC | AUC | FLOPs | Params |
|---|---|---|---|---|---|---|---|---|---|
| 2021 | CNN + CEDS [27] | 0.81 ± 0.02 | 0.86 ± 0.02 | 0.81 ± 0.02 | 0.81 ± 0.03 | 0.81 ± 0.03 | 0.97 ± 0.00 | 4.29 G | 2.45 M |
| 2022 | Two-direction CNN [29] | 0.88 ± 0.03 | 0.92 ± 0.02 | 0.88 ± 0.03 | 0.88 ± 0.03 | 0.88 ± 0.03 | 0.99 ± 0.00 | 1.24 G | 8.44 M |
| 2023 | SCNN [28] | 0.84 ± 0.05 | 0.90 ± 0.02 | 0.84 ± 0.05 | 0.84 ± 0.04 | 0.84 ± 0.05 | 0.99 ± 0.00 | 8.38 G | 4.78 M |
| 2024 | Efficient CNN [30] | 0.84 ± 0.06 | 0.88 ± 0.04 | 0.84 ± 0.06 | 0.83 ± 0.06 | 0.83 ± 0.06 | 0.99 ± 0.00 | 3.29 G | 4.63 M |
| 2024 | CNN-LSTM [32] | 0.86 ± 0.08 | 0.90 ± 0.05 | 0.86 ± 0.08 | 0.86 ± 0.09 | 0.86 ± 0.09 | 0.99 ± 0.00 | 3.79 G | 3.88 M |
| 2024 | SW-LSTM [33] | 0.89 ± 0.02 | 0.91 ± 0.02 | 0.89 ± 0.02 | 0.89 ± 0.03 | 0.89 ± 0.02 | 0.99 ± 0.00 | 2.63 G | 4.53 M |
| Ours | TFAGNet | 0.96 ± 0.01 | 0.97 ± 0.00 | 0.96 ± 0.01 | 0.96 ± 0.01 | 0.96 ± 0.01 | 0.99 ± 0.00 | 1.12 G | 1.54 M |
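The averaging scheme behind PRE, REC, F1 and MCC over the 65 classes is not stated alongside the table. The sketch below assumes macro averaging for PRE, REC and F1 and the standard multiclass MCC (the generalisation used by scikit-learn's `matthews_corrcoef`). AUC is omitted because it needs per-class scores rather than hard labels. One property holds whatever the data: REC equals ACC in every row, which weighted-average recall always produces and macro recall produces when classes are balanced. The `classification_metrics` helper and the toy labels are hypothetical.

```python
import math
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Accuracy, macro precision/recall/F1 and multiclass MCC from label lists."""
    classes = sorted(set(y_true) | set(y_pred))
    n = len(y_true)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    precisions, recalls, f1s = [], [], []
    for c in classes:
        prec = tp[c] / pred_counts[c] if pred_counts[c] else 0.0
        rec = tp[c] / true_counts[c] if true_counts[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    # Multiclass MCC: (c*s - sum_k p_k*t_k) / sqrt((s^2 - sum_k p_k^2) * (s^2 - sum_k t_k^2)),
    # with c = correct predictions, s = samples, p_k / t_k = predicted / true counts of class k.
    cov = correct * n - sum(pred_counts[c] * true_counts[c] for c in classes)
    var_pred = n * n - sum(pred_counts[c] ** 2 for c in classes)
    var_true = n * n - sum(true_counts[c] ** 2 for c in classes)
    denom = math.sqrt(var_pred * var_true)
    mcc = cov / denom if denom else 0.0

    k = len(classes)
    return {
        "ACC": correct / n,
        "PRE": sum(precisions) / k,
        "REC": sum(recalls) / k,
        "F1": sum(f1s) / k,
        "MCC": mcc,
    }


# Toy example: three hypothetical subjects with balanced classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
metrics = classification_metrics(y_true, y_pred)
print({name: round(value, 3) for name, value in metrics.items()})
```

In the toy example the classes are balanced, so macro REC matches ACC while macro PRE does not, the same pattern as in the table.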

Identity recognition (2800 samples from 65 subjects).

| Components | ACC | PRE | REC | F1 | MCC | AUC | FLOPs | Params |
|---|---|---|---|---|---|---|---|---|
| Without TBC | 0.97 ± 0.00 | 0.97 ± 0.00 | 0.97 ± 0.00 | 0.96 ± 0.00 | 0.96 ± 0.00 | 1.00 ± 0.00 | 3.32 G | 4.12 M |
| Without Multi-head Attention | 0.92 ± 0.01 | 0.93 ± 0.01 | 0.92 ± 0.01 | 0.91 ± 0.01 | 0.91 ± 0.01 | 0.98 ± 0.00 | 1.12 G | 2.30 M |
| Without Time Domain | 0.68 ± 0.02 | 0.72 ± 0.02 | 0.68 ± 0.02 | 0.67 ± 0.02 | 0.68 ± 0.02 | 0.95 ± 0.01 | 0.56 G | 1.29 M |
| Without Frequency Domain | 0.93 ± 0.01 | 0.94 ± 0.01 | 0.93 ± 0.01 | 0.93 ± 0.01 | 0.93 ± 0.01 | 0.99 ± 0.00 | 0.56 G | 1.29 M |
| Without Shank SubNet | 0.89 ± 0.01 | 0.90 ± 0.01 | 0.89 ± 0.01 | 0.88 ± 0.01 | 0.88 ± 0.01 | 0.98 ± 0.00 | 0.75 G | 1.37 M |
| Without Waist SubNet | 0.96 ± 0.01 | 0.97 ± 0.01 | 0.96 ± 0.01 | 0.96 ± 0.02 | 0.96 ± 0.01 | 0.99 ± 0.00 | 0.75 G | 1.37 M |
| Without Wrist SubNet | 0.95 ± 0.01 | 0.96 ± 0.01 | 0.95 ± 0.01 | 0.95 ± 0.01 | 0.95 ± 0.01 | 0.99 ± 0.00 | 0.75 G | 1.37 M |
| Only Shank SubNet | 0.94 ± 0.01 | 0.95 ± 0.01 | 0.94 ± 0.01 | 0.94 ± 0.01 | 0.94 ± 0.01 | 0.98 ± 0.01 | 0.37 G | 1.20 M |
| Only Waist SubNet | 0.78 ± 0.01 | 0.81 ± 0.02 | 0.78 ± 0.01 | 0.77 ± 0.01 | 0.78 ± 0.01 | 0.96 ± 0.01 | 0.37 G | 1.20 M |
| Only Wrist SubNet | 0.84 ± 0.01 | 0.86 ± 0.02 | 0.84 ± 0.01 | 0.83 ± 0.01 | 0.84 ± 0.01 | 0.97 ± 0.01 | 0.37 G | 1.20 M |
| ALL | 0.96 ± 0.01 | 0.97 ± 0.00 | 0.96 ± 0.01 | 0.96 ± 0.01 | 0.96 ± 0.01 | 0.99 ± 0.00 | 1.12 G | 1.54 M |
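The ablation rows can be read as accuracy changes relative to the full model (ALL, ACC = 0.96). The short script below reuses only the mean ACC column of the ablation table and ranks the removed components by how much accuracy falls without them. This makes the gap between the time-domain and shank ablations and the remaining ones explicit. The `accuracy_drops` helper is hypothetical.

```python
# Mean ACC values copied from the ablation table.
FULL_MODEL_ACC = 0.96
WITHOUT = {
    "TBC": 0.97,
    "Multi-head Attention": 0.92,
    "Time Domain": 0.68,
    "Frequency Domain": 0.93,
    "Shank SubNet": 0.89,
    "Waist SubNet": 0.96,
    "Wrist SubNet": 0.95,
}
ONLY = {"Shank SubNet": 0.94, "Waist SubNet": 0.78, "Wrist SubNet": 0.84}


def accuracy_drops(full_acc, ablated):
    """Accuracy lost when each component is removed (negative means accuracy rose)."""
    drops = {name: round(full_acc - acc, 2) for name, acc in ablated.items()}
    return dict(sorted(drops.items(), key=lambda item: item[1], reverse=True))


for name, drop in accuracy_drops(FULL_MODEL_ACC, WITHOUT).items():
    print(f"without {name:<22} drop = {drop:+.2f}")

# Gap between each single-position model and the full model.
for name, acc in sorted(ONLY.items(), key=lambda item: item[1], reverse=True):
    print(f"only {name:<25} gap = {FULL_MODEL_ACC - acc:.2f}")
```

Only one component has a negative drop: removing TBC raises ACC slightly (0.97 vs 0.96). According to the same table, it also increases FLOPs and parameters from 1.12 G / 1.54 M to 3.32 G / 4.12 M.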

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, F.; Wang, H.; Li, X.; Sun, F. Analysis of Sensor Location and Time–Frequency Feature Contributions in IMU-Based Gait Identity Recognition. Electronics 2025, 14, 3905. https://doi.org/10.3390/electronics14193905


Chicago/Turabian Style: Liu, Fangyu, Hao Wang, Xiang Li, and Fangmin Sun. 2025. "Analysis of Sensor Location and Time–Frequency Feature Contributions in IMU-Based Gait Identity Recognition" Electronics 14, no. 19: 3905. https://doi.org/10.3390/electronics14193905
