Abstract

Speaker verification (SV) is a core technology for security and personalized services, and its importance has been growing with the spread of wearables such as smartwatches, earbuds, and AR/VR headsets, where privacy-preserving on-device operation under limited compute and power budgets is required. Recently, self-supervised learning (SSL) models such as WavLM and wav2vec 2.0 have been widely adopted as front ends that provide multi-layer speech representations without labeled data. Lower layers contain fine-grained acoustic information, whereas higher layers capture phonetic and contextual features. However, conventional SV systems typically use only the final layer or a single-step temporal attention over a simple weighted sum of layers, implicitly assuming that frame importance is shared across layers and thus failing to fully exploit the hierarchical diversity of SSL embeddings. We argue that frame relevance is layer dependent, as the frames most critical for speaker identity differ across layers. To address this, we propose Masked Multi-layer Feature Aggregation (MMFA), which first applies independent frame-wise attention within each layer, then performs learnable layer-wise weighting to suppress irrelevant frames such as silence and noise while effectively combining complementary information across layers. On VoxCeleb1, MMFA achieves consistent improvements over strong baselines in both EER and minDCF, and attention-map analysis confirms distinct selection patterns across layers, validating MMFA as a robust SV approach even in short-utterance and noisy conditions.

1. Introduction

Speaker verification (SV) is the task of determining whether a given speech utterance belongs to a claimed speaker. It serves as a fundamental component in a variety of voice-driven applications, including secure biometric authentication systems, personalized voice assistants, smart home devices, and forensic voice analysis [1,2]. The growing adoption of wearable devices such as smartwatches, earbuds and AR/VR headsets has increased the demand for privacy-preserving on-device SV that operates under tight power and compute budgets and remains robust to short, potentially noisy utterances [3,4]. Over the past decade, the field of speaker verification has evolved considerably, transitioning from traditional statistical methods such as i-vector [5] and probabilistic linear discriminant analysis (PLDA) [6] to deep learning-based approaches. In particular, architectures such as x-vector [7] and ECAPA-TDNN [8] have demonstrated strong performance by leveraging deep neural networks to extract speaker-discriminative embeddings from input speech.

These deep models typically consist of convolutional or time-delay layers, followed by pooling and fully connected layers, and are trained on large-scale speaker-labeled datasets. For example, ECAPA-TDNN extends the x-vector architecture by introducing channel attention, multi-layer feature aggregation, and residual connections, achieving excellent performance across many speaker verification benchmarks.

In parallel with the development of speaker-specific architectures, there has been a growing trend toward using self-supervised learning (SSL) models as front-end feature extractors for speaker verification [9,10,11]. Models such as wav2vec 2.0 [12], HuBERT [13], and WavLM [14] are pretrained on large amounts of unlabeled speech using masked prediction objectives. These models learn multi-layer hierarchical representations, where lower layers capture fine-grained acoustic information and higher layers encode phonetic, semantic, and contextual information. Such representations have proven valuable not only for SV but also for a wide range of downstream tasks, including automatic speech recognition, emotion recognition, and audio classification [15,16,17].

However, effectively exploiting these rich hierarchical representations remains challenging. Each layer of an SSL model encodes information at a different level of abstraction, ranging from formants, timbre, and fine acoustic cues in lower layers to phonetic patterns and speaker-specific traits in intermediate layers, and semantic or contextual information in higher layers [18,19]. While aggregating all layers can provide a diverse set of speaker-relevant cues, it can also introduce redundant or task-irrelevant information. For example, linguistic content from higher layers may contribute little to speaker identity and may even obscure the fine-grained acoustic patterns that are crucial for discrimination.

In addition, real-world speech often contains silence, background noise, emotionally altered speech, or pronunciation errors. These segments carry little or no speaker-discriminative information, and indiscriminately including them in the aggregation process dilutes the contribution of informative frames, reduces the signal-to-noise ratio (SNR), and degrades verification accuracy. Environmental noise such as background music or cross-talk can even be embedded into the representation, causing the system to respond to environmental cues rather than speaker-specific characteristics, thereby harming robustness in practical conditions [20,21].

Existing SV systems that rely on either the final layer or simple layer-wise weighted aggregation, followed by a single pooling or temporal attention step, cannot fully resolve these issues. Such approaches implicitly assume that frame relevance is uniform across layers, which overlooks the possibility that different layers may emphasize different frames depending on the information they encode. We suggest that modeling this variability is critical: the frames most relevant to speaker identity may vary by layer, and ignoring this fact restricts the effectiveness of multi-layer aggregation.

To address these challenges, we propose the Masked Multi-layer Feature Aggregation (MMFA) framework. MMFA leverages outputs from all layers: it first performs frame-wise attention independently within each layer to identify speaker-relevant segments, and then integrates the layers through learnable layer-wise weighting. This design suppresses irrelevant or noisy frames while combining complementary information across layers. Unlike weighted-layer aggregation or single-step temporal attention, which assume a layer-invariant notion of frame importance, MMFA models frame relevance at the layer level. It estimates frame importance separately for each layer, explicitly masks non-informative frames within each layer, and fuses the remaining evidence via learnable layer weights, thereby optimizing both which layers matter and which frames matter within each layer.

Experiments with models trained on VoxCeleb2 [23] and evaluated on VoxCeleb1 [22] show that MMFA consistently outperforms conventional aggregation strategies, including last-layer usage and uniform or learnable layer-weighted summation. Furthermore, analysis of the learned attention maps provides empirical support for our hypothesis: layers deemed important for speaker identity tend to distribute attention broadly across frames, whereas less informative layers focus on a few localized regions. These findings validate the effectiveness of MMFA and underscore that explicitly modeling frame relevance at the layer level is crucial for robust and accurate speaker verification.

2. Related Works

2.1. Previous Multi-Layer Aggregation

SSL models such as wav2vec 2.0, HuBERT, and WavLM are pre-trained on large-scale unlabeled speech data and produce hierarchical representations through multiple Transformer layers. Lower layers tend to preserve low-level acoustic cues such as formant structures, energy dynamics, and spectral patterns, while upper layers encode higher-level information such as phonetic boundaries, lexical units, and long-range contextual semantics.

In SV, both fine-grained acoustic details and speaker-specific traits such as timbre and prosody are essential. Relying solely on the final layer, as is common in ASR, risks discarding valuable information contained in earlier layers. For example, lower layers may be more robust to noise but sensitive to phonetic variation, whereas upper layers provide greater abstraction at the cost of losing fine-grained speaker cues. Thus, balanced utilization of complementary information across layers is crucial.

To this end, prior work has explored aggregation strategies including simple averaging, learnable weighted sums, and concatenation of multiple layers [9,10,24]. However, these approaches generally treat layer aggregation as a static process and apply a single pooling step across time, implicitly assigning equal importance to all frames within each layer and overlooking temporal selectivity.

2.2. Masking in Speaker Verification

In SV, it is equally important to suppress irrelevant information such as background noise, silence, or distortions caused by emotional speech. Without proper handling, such non-speaker-specific segments may contaminate embeddings and reduce system robustness, especially in real-world acoustic conditions.

Masking has emerged as an effective way to mitigate this issue by allowing models to attend selectively to informative frames while ignoring irrelevant ones. For instance, Context-Aware Masking (CAM) [25] dynamically removes non-discriminative regions of speech, leading to significant performance improvements by isolating speaker-relevant segments.

When SSL models are employed, the representation space becomes richer due to multi-layer embeddings that capture diverse levels of acoustic and phonetic information. While this abundance of features is beneficial, it also increases the risk of incorporating irrelevant frames such as silence or noise, particularly under challenging conditions.

Existing masking methods, however, mainly focus on frame-level selection without considering the hierarchical structure of SSL embeddings. Conversely, multi-layer aggregation studies exploit complementary information across layers but neglect temporal selectivity within each layer. This gap motivates our approach, which integrates both perspectives by combining multi-layer aggregation with frame-level masking, enabling more effective utilization of SSL representations for SV.

3. Proposed Methods

In downstream SV systems that utilize SSL models, conventional multi-layer aggregation methods generally follow a two-stage process. First, layer-wise aggregation is performed by computing the relative importance of each layer to obtain a single unified representation. Second, frame-level pooling is applied to collapse the time dimension by assigning attention weights to frames and averaging them accordingly. While such methods are effective in capturing global trends, they often fail to fully account for the local and layer-dependent characteristics of speaker-discriminative information.

Speaker-relevant cues such as pitch, prosody, and timbre are not uniformly distributed across time and layers. Some frames may contain silence, noise, or emotionally altered speech that contributes little to speaker identity, while others carry rich discriminative information. Moreover, since each SSL layer captures different types of information, we assume that the contribution of a given frame to speaker verification can vary substantially across layers. Unlike weighted-layer aggregation or single-step temporal attention, which assume a shared notion of frame importance across layers, our approach estimates frame importance separately for each layer and adopts a mask-then-integrate procedure with learnable layer weights, thereby decoupling the layer and frame axes.

To address these limitations, we propose applying attentive pooling [26] independently to each layer in order to estimate frame-wise importance scores. Based on these scores, non-informative frames are selectively masked on a per-layer basis, while the remaining informative embeddings are aggregated through learnable scalar weights assigned to each layer. This process allows irrelevant frames to be suppressed while effectively leveraging complementary information across layers.

Unlike conventional attentive pooling, which typically reduces a [T, D] embedding into a single [1, D] vector, our method retains temporal resolution. Preserving the frame-level structure enables the model to reflect the fact that irrelevant information along the time axis may differ from one layer to another. As a result, the method can suppress layer-specific forms of noise and selectively emphasize the most discriminative speaker-related content at each time step.

3.1. WavLM

WavLM [14] is a self-supervised speech representation learning model built upon the wav2vec 2.0 framework [12] and designed to handle both speech recognition and speaker-related tasks more effectively. It is pretrained on large-scale unlabeled speech using a masked speech denoising and prediction objective and introduces gated relative position bias into the Transformer layers to enhance the modeling of long-range dependencies.

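Given an input waveform $x$, the signal is first passed through a convolutional neural network (CNN) feature encoder, producing a latent frame sequence $\mathbf{z}$. This sequence is then processed by a Transformer encoder, yielding contextualized embeddings $\mathbf{h}^{(l)} \in \mathbb{R}^{T \times D}$ at each layer $l$. The Large configuration uses 24 Transformer layers with $D = 1024$, whereas the Base+ configuration adopted in this work uses 12 layers with $D = 768$.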

WavLM has been shown to generate rich multi-layer embeddings that generalize well to a variety of downstream tasks, including speaker verification, speaker recognition, emotion recognition, and speech classification [14,15]. In particular, compared with earlier SSL models such as wav2vec 2.0 [12] and HuBERT [13], WavLM has demonstrated superior performance on speaker-related tasks, leading many recent studies to adopt it as the front-end module in speaker verification systems.

In this work, we employ WavLM-Base+ as the SSL front end. The Base+ model requires significantly less computation and memory than the Large configuration while maintaining competitive performance in speaker verification, as reported in prior studies. This makes Base+ a practical and appropriate choice for evaluating our proposed framework, which relies on leveraging multi-layer representations. The hierarchical embeddings of WavLM provide complementary acoustic and contextual information across layers, aligning naturally with our design that performs frame-level masking and selective aggregation. By leveraging WavLM-Base+, our method can effectively capture both low-level acoustic cues and high-level speaker traits, thereby achieving robust verification performance even under challenging acoustic conditions.

3.2. MMFA

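For each SSL layer, $\mathbf{h}_t \in \mathbb{R}^{D}$ represents the frame embedding at index $t$, with $t = 1, \dots, T$.

The frame-level score $e_t$ is computed as

$$e_t = \mathbf{v}^{\top} \tanh\left(\mathbf{W} \mathbf{h}_t + \mathbf{b}\right),$$

where $\mathbf{W}$ and $\mathbf{b}$ are learnable parameters and $\mathbf{v}$ is a trainable vector.

The frame-level attention weight $\alpha_t$ is then obtained through a softmax normalization:

$$\alpha_t = \frac{\exp(e_t)}{\sum_{j=1}^{T} \exp(e_j)}.$$

To suppress uninformative segments, a binary mask is applied based on the attention weights:

$$m_t = \begin{cases} 0, & t \in \mathcal{B}_K, \\ 1, & \text{otherwise}, \end{cases}$$

where $\mathcal{B}_K$ is the set of $K$ frames in the layer with the lowest attention weights $\alpha_t$, and $K$ is determined by the Bottom-K mask ratio examined in Section 4.3.5.

Finally, the masked embedding of each layer is obtained as

$$\tilde{\mathbf{h}}_t = m_t \, \alpha_t \, \mathbf{h}_t, \quad t = 1, \dots, T,$$

so that each layer retains its $T \times D$ temporal structure before the layers are combined through learnable scalar weights.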
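
The per-layer scoring, Bottom-K masking, and layer fusion described above can be illustrated with a small, framework-free Python sketch. It is not the authors' implementation: the tanh scoring network with separate parameters per layer, the choice to scale kept frames by their attention weights, rounding K down from an integer mask percentage, and the softmax normalization of the layer weights are all assumptions, and every function name is hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def frame_scores(H, W, b, v):
    """Score each frame h_t of one layer (H is T x D) as e_t = v . tanh(W h_t + b)."""
    scores = []
    for h in H:
        hidden = [math.tanh(sum(w * x for w, x in zip(row, h)) + b_i)
                  for row, b_i in zip(W, b)]
        scores.append(sum(v_i * z for v_i, z in zip(v, hidden)))
    return scores


def bottom_k_mask(alpha, mask_pct):
    """1/0 mask that drops the K = floor(mask_pct * T / 100) lowest-weighted frames."""
    T = len(alpha)
    K = (mask_pct * T) // 100
    ranked = sorted(range(T), key=lambda t: alpha[t])  # ascending attention weight
    mask = [1] * T
    for t in ranked[:K]:
        mask[t] = 0
    return mask


def mmfa(layers, layer_params, layer_logits, mask_pct):
    """Attend and mask frames independently in each layer, then fuse the layers with
    softmax-normalized scalar weights. Returns T x D, so no temporal pooling happens here."""
    layer_weights = softmax(layer_logits)
    T, D = len(layers[0]), len(layers[0][0])
    fused = [[0.0] * D for _ in range(T)]
    for H, (W, b, v), w_l in zip(layers, layer_params, layer_weights):
        alpha = softmax(frame_scores(H, W, b, v))  # this layer's own frame attention
        mask = bottom_k_mask(alpha, mask_pct)      # this layer's own Bottom-K mask
        for t in range(T):
            for d in range(D):
                fused[t][d] += w_l * mask[t] * alpha[t] * H[t][d]
    return fused


if __name__ == "__main__":
    H1 = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # toy layer, T=3, D=2
    H2 = [[0.5, 0.1], [0.2, 0.9], [0.7, 0.3]]
    p = ([[0.1, 0.2], [0.3, 0.4]], [0.0, 0.0], [1.0, -1.0])  # hypothetical toy parameters
    out = mmfa([H1, H2], [p, p], [0.2, -0.2], mask_pct=34)
    print(len(out), len(out[0]))  # 3 2
```

Because each layer computes its own attention and its own mask, the same frame can be kept in one layer and dropped in another, which is the layer-dependent frame relevance the method targets. In a real model these loops would be batched tensor operations trained jointly with the backend.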

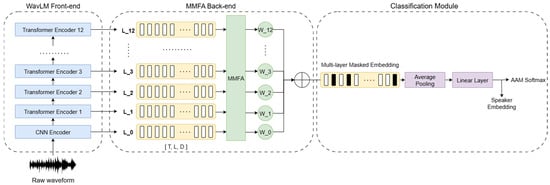

We compare the proposed MMFA with three alternative multi-layer aggregation strategies, as illustrated in Figure 1. The first method uses only the embedding from the final SSL layer. The second method, Weighted Sum, applies learnable scalar weights to each layer and aggregates them into a single representation. The third method, Concatenation, reshapes the embeddings from [L, T, D] into [T, L × D], thereby increasing the input dimension of the backend’s linear layer from D to L × D. These baselines provide reference points for evaluating the benefits of incorporating frame-level masking. In contrast, the proposed MMFA performs frame-wise masking independently within each layer prior to aggregation, enabling the model to capture both frame-level and layer-level importance and achieve more robust speaker verification.
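
The three baselines, together with the conventional attentive pooling step that collapses the time axis, can be sketched on nested lists of shape [L][T][D]. The function names are hypothetical, the Weighted Sum weights are assumed to be softmax-normalized, and attention scores are passed in precomputed to keep the example short.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def last_layer(layers):
    """Baseline 1: keep only the final SSL layer, shape [T][D]."""
    return layers[-1]


def weighted_sum(layers, layer_logits):
    """Baseline 2: one learnable scalar per layer, summed into shape [T][D]."""
    w = softmax(layer_logits)
    T, D = len(layers[0]), len(layers[0][0])
    return [[sum(w_l * H[t][d] for w_l, H in zip(w, layers)) for d in range(D)]
            for t in range(T)]


def concatenate(layers):
    """Baseline 3: reshape [L][T][D] into [T][L*D] by joining each frame across layers."""
    T = len(layers[0])
    return [[x for H in layers for x in H[t]] for t in range(T)]


def attentive_pool(H, scores):
    """Conventional attentive pooling: collapse [T][D] into a single D-dim vector."""
    alpha = softmax(scores)
    D = len(H[0])
    return [sum(a * h[d] for a, h in zip(alpha, H)) for d in range(D)]


if __name__ == "__main__":
    layers = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]  # L=2, T=2, D=2
    print(concatenate(layers))               # [[1.0, 2.0, 5.0, 6.0], [3.0, 4.0, 7.0, 8.0]]
    print(weighted_sum(layers, [0.0, 0.0]))  # [[3.0, 4.0], [5.0, 6.0]]
```

Concatenation multiplies the per-frame dimension by L, whereas the other two baselines keep it at D, which is why the backend's first linear layer must grow from D to L × D inputs in that case.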

Figure 1. SSL-based SV framework with the proposed masked multi-layer feature aggregation (MMFA).

3.3. Regularizing Parameters During Fine-Tuning

Although pre-trained models provide a strong initialization for SV tasks, the fine-tuning process can still fall into local optima, especially when training data is limited. This issue becomes more pronounced for over-parameterized SSL models, which tend to overfit small datasets more easily than conventional speaker embedding models trained from scratch.

To mitigate this problem, we adopt the fine-tuning regularization technique proposed in previous work [10], which applies L2 regularization to constrain the fine-tuned parameters from deviating significantly from their original pre-trained values. This approach encourages a more stable and generalizable fine-tuning process without substantially altering the learned representations. The penalty term is defined as follows:

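$$\Omega(\theta) = \lVert \theta - \theta_0 \rVert_2^2,$$

where $\theta_0$ are the pre-trained weights and $\theta$ are the parameters being updated. The overall loss function is defined as follows:

$$\mathcal{L} = \mathcal{L}_{\text{spk}} + \lambda \, \Omega(\theta),$$

where $\mathcal{L}_{\text{spk}}$ is the speaker classification loss and $\lambda$ is the regularization strength hyperparameter.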
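
The penalty and overall loss described above can be sketched with parameters stored as name-to-list dictionaries. This is a minimal illustration under stated assumptions: the penalty is the squared L2 distance to the pre-trained values, parameters with no pre-trained counterpart (for example, a newly added aggregation head) are left unpenalized, and the parameter names and regularization strength in the usage lines are hypothetical.

```python
def l2_to_pretrained(params, pretrained):
    """Squared L2 distance between fine-tuned parameters and their pre-trained values.

    Parameters absent from `pretrained` (e.g., a newly initialized head) are skipped.
    """
    penalty = 0.0
    for name, values in params.items():
        if name not in pretrained:
            continue
        penalty += sum((p - p0) ** 2 for p, p0 in zip(values, pretrained[name]))
    return penalty


def total_loss(speaker_loss, params, pretrained, lam):
    """Speaker classification loss plus lam times the pull-back penalty."""
    return speaker_loss + lam * l2_to_pretrained(params, pretrained)


if __name__ == "__main__":
    theta0 = {"encoder.w": [1.0, 1.0], "encoder.b": [0.0]}                   # pre-trained
    theta = {"encoder.w": [1.0, 2.0], "encoder.b": [0.5], "head.w": [9.0]}   # being updated
    print(total_loss(2.0, theta, theta0, lam=0.5))  # 2.625
```

Pulling weights back toward their pre-trained values, rather than toward zero as plain weight decay would, is what keeps the fine-tuned model close to the SSL representation it started from.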

3.4. Layer-Wise Learning Rate Decay (LLRD)

In addition to fine-tuning regularization, we also adopt the layer-wise learning rate decay (LLRD) technique to stabilize training. This method is motivated by the observation that lower transformer layers capture general acoustic patterns useful for SV and should be updated less aggressively, whereas higher layers can be more freely adapted to task-specific cues.

Following the formulation in previous work [27], we assign exponentially increasing learning rates from the bottom to the top layers:

$$\eta_l = \eta_1 \, \xi^{\,l-1},$$

where $\eta_l$ is the learning rate for the $l$-th Transformer layer, $\eta_1$ is the base learning rate, and $\xi$ is a scaling factor. Setting $\xi > 1$ encourages greater updates for upper layers, while $\xi = 1$ corresponds to uniform learning rates across all layers.
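
The layer-wise schedule above, in which the rate of layer l is the base rate multiplied by the scaling factor raised to the power l - 1, reduces to a one-line list comprehension. The base rate and scaling factor in the usage lines are hypothetical; 12 layers corresponds to WavLM-Base+.

```python
def layerwise_learning_rates(base_lr, xi, num_layers):
    """Return [eta_1, ..., eta_L] with eta_l = base_lr * xi ** (l - 1), bottom layer first."""
    return [base_lr * xi ** (l - 1) for l in range(1, num_layers + 1)]


if __name__ == "__main__":
    # Hypothetical values: base LR 1e-5 and xi = 1.2 over 12 Transformer layers
    for l, lr in enumerate(layerwise_learning_rates(1e-5, 1.2, 12), start=1):
        print(f"layer {l:2d}: {lr:.2e}")
```

With xi > 1 the top layer's rate exceeds the bottom layer's by a factor of xi ** (L - 1), so the general acoustic patterns in lower layers change least.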

These techniques are widely adopted in prior work and are included here as standard practices to improve stability and generalization in SSL fine-tuning.

4. Experiments

4.1. Datasets and Evaluation Metrics

We evaluate our systems on the VoxCeleb corpus, a large-scale text-independent benchmark widely used in SV research. The training data is derived from VoxCeleb2-dev, which contains over 1 million utterances from 5994 speakers collected from YouTube interview videos under diverse acoustic conditions. The dataset is multilingual, with speakers covering a wide range of nationalities, ages, and professions, and thus provides a realistic setting for SV.

For evaluation, we follow the standard VoxCeleb1 test protocols and report results on three subsets:

- VoxCeleb1-O: The original test set with 40 speakers and 37,720 trials.

- VoxCeleb1-E: An extended set including 1251 speakers and 579,818 trials, covering broader intra- and inter-speaker variations.

- VoxCeleb1-H: A hard subset of VoxCeleb1-E that includes only same-gender and same-nationality trials, designed to evaluate fine-grained discrimination.

To improve robustness during training, we apply data augmentation following the Kaldi recipe [28], using noise segments from MUSAN [29] and simulated room impulse responses (RIRs) [30]. All training utterances are randomly cropped to 3 s.

Performance is evaluated using two metrics. The first is the Equal Error Rate (EER) [31], which corresponds to the operating point where false acceptance and false rejection rates are equal. The second is the minimum Detection Cost Function (minDCF) [32], defined as

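$$\text{minDCF} = \min_{\tau} \frac{C_{\text{miss}} \, P_{\text{miss}}(\tau) \, P_{\text{target}} + C_{\text{fa}} \, P_{\text{fa}}(\tau) \, (1 - P_{\text{target}})}{\min\left(C_{\text{miss}} P_{\text{target}},\, C_{\text{fa}} (1 - P_{\text{target}})\right)},$$

where $C_{\text{miss}}$ and $C_{\text{fa}}$ are the costs of misses and false alarms, respectively, $P_{\text{miss}}(\tau)$ and $P_{\text{fa}}(\tau)$ are the miss and false-alarm rates at decision threshold $\tau$, and $P_{\text{target}}$ is the prior probability of a target trial. Following standard practice, we report minDCF at .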
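
Both metrics can be computed from trial scores by sweeping the decision threshold over the observed scores. The sketch below is a simple quadratic-time illustration, not a reference scorer: it assumes that higher scores mean "same speaker" and that a trial is accepted when its score is at least the threshold, it approximates the EER at the threshold where the miss and false-alarm rates are closest instead of interpolating, and it normalizes the detection cost by the cost of the better trivial system. The target prior is left as an argument.

```python
def error_rates(scores, labels, threshold):
    """Miss rate over target trials and false-alarm rate over non-target trials.

    A trial is accepted when its score is >= threshold; labels are 1 (target) or 0.
    """
    n_target = sum(labels)
    n_nontarget = len(labels) - n_target
    misses = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    false_alarms = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return misses / n_target, false_alarms / n_nontarget


def eer_and_min_dcf(scores, labels, p_target, c_miss=1.0, c_fa=1.0):
    """Return (EER, normalized minDCF) by trying every observed score as a threshold."""
    c_default = min(c_miss * p_target, c_fa * (1.0 - p_target))
    best_gap, eer, min_dcf = float("inf"), None, float("inf")
    for threshold in sorted(set(scores)) + [float("inf")]:
        p_miss, p_fa = error_rates(scores, labels, threshold)
        if abs(p_miss - p_fa) < best_gap:
            best_gap, eer = abs(p_miss - p_fa), (p_miss + p_fa) / 2.0
        dcf = (c_miss * p_miss * p_target + c_fa * p_fa * (1.0 - p_target)) / c_default
        min_dcf = min(min_dcf, dcf)
    return eer, min_dcf


if __name__ == "__main__":
    scores = [0.9, 0.4, 0.6, 0.1]  # toy trial scores
    labels = [1, 1, 0, 0]          # 1 = same speaker
    print(eer_and_min_dcf(scores, labels, p_target=0.5))  # (0.5, 0.5)
```

Production toolkits sort the scores once and read both rates from cumulative counts, which scales to the hundreds of thousands of trials in VoxCeleb1-E and VoxCeleb1-H.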

4.2. Implementation Details

We fine-tune WavLM-Base+ speaker embedding models using the VoxCeleb2-dev set as training data. The Base+ model contains approximately 94 million parameters and provides a good balance between efficiency and performance, making it suitable as the front end for our experiments.

All models are fine-tuned for 20 epochs on a single 48 GB NVIDIA A40 GPU. We use the additive angular margin (AAM) softmax loss [33] with a margin of 0.2 and a scale of 30. The hyperparameter for fine-tuning regularization is set to unless otherwise specified. A batch size of 120 is used for the Base+ model, and all training utterances are randomly cropped to 3 s.

To stabilize training, the learning rate is reduced by 5% after each epoch. In addition, we optionally apply large margin fine-tuning (LM-FT) [34] to further improve performance. During LM-FT, the cropping window is extended to 5 s instead of 3 s, and the angular margin is increased to 0.5. LM-FT is performed for an additional 3 epochs after the initial 20-epoch training. Utterances shorter than 5 s are also repeat-padded to maintain a fixed-length input. Data augmentation is consistently applied throughout all training stages.
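
The per-epoch decay and the fixed-length cropping with repeat padding described above can be sketched as follows. Two points are assumptions rather than details from the text: the 5% reduction is read as multiplicative (the rate is multiplied by 0.95 after every epoch), and audio is taken to be 16 kHz samples. The function names are hypothetical.

```python
import random


def lr_at_epoch(initial_lr, epoch, decay=0.05):
    """Learning rate after `epoch` completed epochs when it is cut by 5% per epoch."""
    return initial_lr * (1.0 - decay) ** epoch


def crop_or_repeat_pad(samples, target_len, rng=random):
    """Return exactly `target_len` samples: tile short clips, randomly crop long ones."""
    if not samples:
        raise ValueError("empty utterance")
    if len(samples) < target_len:
        repeats = -(-target_len // len(samples))  # ceiling division
        return (samples * repeats)[:target_len]
    start = rng.randint(0, len(samples) - target_len)
    return samples[start:start + target_len]


if __name__ == "__main__":
    sr = 16000                 # assumed sampling rate
    clip = [0.0] * (4 * sr)    # a hypothetical 4 s utterance
    print(len(crop_or_repeat_pad(clip, 5 * sr)))  # 80000 (LM-FT window, repeat-padded)
    print(len(crop_or_repeat_pad(clip, 3 * sr)))  # 48000 (main-stage window, cropped)
    print(round(lr_at_epoch(1.0, 20), 4))
```

Repeating the waveform, rather than zero-padding it, avoids feeding the model long stretches of silence that the masking stage would otherwise have to discard.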

While the training window length is fixed for stability and batch processing, the underlying architecture is inherently length-invariant. Therefore, the model can process variable-length utterances directly during inference, which is consistent with standard practice in speaker verification.

4.3. Results

4.3.1. Layer-Wise Frame Attention Analysis

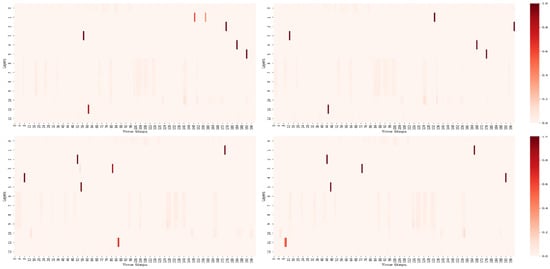

Figure 2 visualizes frame-level attention distributions across layers for different utterances from the same speaker. Some layers spread their importance relatively evenly across many frames, while others concentrate attention on only a few specific frames. In particular, mid-level layers consistently emphasize vowel regions and acoustically stable segments, whereas higher layers often focus on prosodic changes or sentence boundaries. This demonstrates that different layers highlight different types of frames, reflecting the distinct roles of each layer in capturing speaker-relevant information.

Figure 2. Layer-wise frame attention maps for different utterances from the same speaker.

Such observations suggest that treating all frames equally can dilute the contribution of the small subset of frames that are most informative for speaker discrimination. Therefore, suppressing uninformative frames at the frame level, before aggregating across layers, can provide more effective representations. This motivation is further supported by the quantitative results presented in the following subsections.

4.3.2. Comparison of Different Aggregation Methods with the Proposed MMFA

To evaluate the effectiveness of the proposed MMFA, we compare it with several commonly used SSL feature aggregation strategies, including last-layer extraction, weighted sum across all layers, and full-layer concatenation. Table 1 summarizes the results on the VoxCeleb1-O, VoxCeleb1-H, and VoxCeleb1-E evaluation sets.

Table 1. Effect of the proposed MMFA method across VoxCeleb1 subsets.

On VoxCeleb1-O, the standard evaluation set, MMFA achieves an EER of 0.9017% and a minDCF of 0.0661, a clear improvement over the Weighted Sum baseline (1.2547% EER and 0.0934 minDCF). The last-layer and concatenation approaches also provide modest gains over the Weighted Sum baseline but remain inferior to MMFA. These results indicate that treating all frames and layers equally may introduce noise or redundancy, whereas MMFA benefits from selectively emphasizing informative frames and layers.
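
Expressed as relative reductions, the VoxCeleb1-O figures quoted above amount to roughly 28% lower EER and 29% lower minDCF than the Weighted Sum baseline. The arithmetic is:

```python
def relative_reduction(baseline, proposed):
    """Relative error reduction (%) of `proposed` with respect to `baseline`."""
    return (baseline - proposed) / baseline * 100.0


# VoxCeleb1-O: Weighted Sum baseline vs. MMFA
eer_gain = relative_reduction(1.2547, 0.9017)
dcf_gain = relative_reduction(0.0934, 0.0661)
print(f"EER: {eer_gain:.1f}%, minDCF: {dcf_gain:.1f}%")  # EER: 28.1%, minDCF: 29.2%
```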

Furthermore, the performance gain is not limited to VoxCeleb1-O but is consistently observed on the more challenging VoxCeleb1-E and VoxCeleb1-H subsets. VoxCeleb1-E contains a much larger number of speakers and trial pairs, making it well-suited for assessing model generalization. VoxCeleb1-H is even more demanding, as it includes only trials between speakers of the same gender and nationality, requiring fine-grained discrimination. MMFA consistently surpasses competing methods under both of these challenging conditions, which demonstrates that the proposed approach goes beyond incremental baseline improvements and remains effective in more realistic and difficult scenarios.

4.3.3. Ablation Study on MMFA Components

As shown in Table 2, we conducted an ablation on VoxCeleb1-O while fixing the WavLM-Base+ backbone and incrementally adding aggregation components. Adding layer-wise learnable weights (LLW) improved EER and minDCF by about 9% and 32%, respectively. Using layer-wise frame attention (LFA) alone yielded gains of roughly 19% and 40%, and combining LFA with LLW widened the improvements to about 24% and 45%. Layer-wise frame masking (LFM) alone provided the largest single-module boost (about 32% and 49%). With all three modules together, the model achieved approximately 35% and 52% relative improvements. Overall, the performance gains were favorable relative to the small increase in model size, and the complementary combination of the three modules delivered the largest benefit.

Table 2. Ablation study on MMFA components on the VoxCeleb1-O evaluation set.

4.3.4. Ablation Study on Layer Selection Strategies

Table 3 presents an ablation study on layer selection strategies using the VoxCeleb1-O evaluation set. Motivated by prior studies suggesting that layers 3–7 in SSL models tend to capture speaker-relevant information, we evaluated two settings: selecting layers 3, 4, and 5 (Top-3), and selecting layers 3–7 (Top-5). Although these strategies leverage known informative layers, their improvements remained limited compared to the proposed method. In contrast, MMFA utilizes all layers while applying frame-wise masking to suppress uninformative segments, leading to the best performance in terms of both EER and minDCF.

Table 3. Ablation study on layer selection strategies on the VoxCeleb1-O evaluation set.

4.3.5. Ablation Study on Different Bottom-K Mask Ratios

Table 4 presents the results of applying different Bottom-K masking ratios within the proposed MMFA framework on the VoxCeleb1-O evaluation set. The masking ratio was varied from 0% (no masking, all frames retained) to 70%.
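
To make the ratios concrete, the sketch below estimates how many frames each layer keeps for a 3 s training crop. It assumes 16 kHz audio, the wav2vec 2.0-style convolutional feature encoder that WavLM inherits (seven unpadded layers with kernel/stride pairs (10, 5), four times (3, 2), and twice (2, 2)), and K rounded down; the paper does not state its rounding rule, and the function names are hypothetical.

```python
# (kernel, stride) pairs of the convolutional feature encoder (assumed wav2vec 2.0 default)
CONV_LAYERS = [(10, 5)] + [(3, 2)] * 4 + [(2, 2)] * 2


def num_frames(num_samples):
    """Frame count produced by a stack of unpadded 1-D convolutions."""
    length = num_samples
    for kernel, stride in CONV_LAYERS:
        length = (length - kernel) // stride + 1
    return length


def frames_kept(total_frames, mask_pct):
    """Frames left after dropping the bottom floor(mask_pct% of T) frames."""
    return total_frames - (mask_pct * total_frames) // 100


if __name__ == "__main__":
    T = num_frames(3 * 16000)  # 3 s crop at 16 kHz -> 149 frames
    for pct in (0, 30, 50, 70):
        print(f"{pct:2d}% masked: {frames_kept(T, pct)} of {T} frames kept")
```

Under these assumptions, the 70% setting leaves about 45 of 149 frames per layer, so each layer's contribution to the fused sequence rests on under a third of the crop.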

Table 4. Ablation study on different mask ratios on the VoxCeleb1-O evaluation set.

Without masking, the system records an EER of 1.1222% and a minDCF of 0.0824. Removing the bottom 30% of frames, i.e., those assigned the lowest importance scores, slightly improves the EER to 1.0952%, and a similar result (1.0951% EER) is observed at a 50% masking ratio. These results suggest that discarding low-utility frames allows the model to concentrate more effectively on speaker-relevant segments.

The largest improvement is observed at a 70% masking ratio, where the EER drops to 0.9017% and the minDCF to 0.0661. This finding indicates that, although SSL models produce abundant multi-layer frame-level information, in certain layers only a small subset of frames carries meaningful speaker-discriminative cues, while the remaining frames may act as noise. Actively filtering out such frames can therefore improve performance, as the results in Table 4 confirm.

As further illustrated in Figure 2, the distribution of frame-level attention varies substantially across layers. Some layers spread their importance broadly across many frames, while others concentrate it on only a few specific frames. Prior work has suggested that mid-level layers are particularly informative for speaker verification; however, even within these layers, only a limited number of frames are truly critical. Treating all frames equally dilutes their contribution, and conventional weighted-sum aggregation cannot address this issue.

By contrast, the proposed MMFA combines layer-wise weighting with frame-level masking to retain only the most discriminative temporal segments from each layer. When applied on top of the same backend architecture, frame-level masking yields clear improvements over the weighted-sum baseline. In summary, weighted-sum aggregation alone is insufficient to fully exploit the rich multi-layer frame-level representations produced by SSL models, whereas MMFA provides a more effective means of harnessing them for speaker verification.

4.3.6. Comparison Between the Proposed System and SSL Based SV Systems

Table 5 compares the proposed method with SV systems built on SSL models of comparable scale and backends of similar size. The results show stable improvements on VoxCeleb1-H, one of the most challenging benchmarks, highlighting the effectiveness of the proposed approach under difficult conditions. Unlike prior studies that primarily focused on layer-wise weighting, our method accounts for both layer-specific information diversity and frame-level importance, enabling more effective use of SSL features. Notably, these results were obtained with only about 20 epochs of fine-tuning, yet the system remains competitive with speaker verification-oriented baselines. These findings support the value of frame- and layer-aware modeling and indicate that the proposed aggregation strategy is practically applicable, with clear potential for further gains in real-world deployments.

Table 5. Comparison between the proposed system and other SV systems on VoxCeleb1 subsets in terms of EER (%).

5. Discussion

The experiments show that MMFA is effective, and its design is well motivated: it selectively leverages information at both the layer and frame levels within the aggregation process. Conventional single-pooling strategies fail to adequately capture differences across layers and variations in frame importance, whereas MMFA addresses this limitation and yields consistent improvements across various evaluation conditions. Importantly, it achieves this without complex structural modifications or extensive training while remaining competitive with speaker verification systems specifically optimized for the task. This suggests that MMFA is a practical alternative rather than merely a conceptual one and can be readily adopted in real applications. Furthermore, the improvements observed on the challenging VoxCeleb1-H benchmark highlight its robustness under difficult conditions.

The preceding ablation and comparison results support the necessity and performance advantages of MMFA, which come at the cost of only a slight increase in aggregation-head parameters while the backbone is held fixed. However, because it performs layer-wise attention along the time axis, some increase in computational load and memory usage is unavoidable. Accordingly, future work will explore lightweight variants of the aggregation head, such as low-dimensional or low-rank projections, to reduce complexity while preserving accuracy as much as possible. Achieving such model slimming is expected to further enhance applicability in wearable scenarios where real-time operation and resource constraints are critical.

Beyond these findings, MMFA benefits from being aggregation-based, which makes it both structurally simple and highly extensible. This characteristic ensures that the method can be easily applied in diverse environments and system configurations. Future research will further validate its generality and practical value by extending the approach to downstream tasks beyond speaker verification and by integrating it into larger-scale backend architectures. Such extensions are expected not only to yield further performance gains but also to provide deeper insight into how SSL-based embeddings can be most effectively utilized.

6. Conclusions

In this work, we proposed Masked Multi-layer Feature Aggregation (MMFA) to more effectively exploit multi-layer and frame-level information in SSL-based speaker verification by estimating frame importance separately within each layer. Prior studies have typically relied either on the final-layer representation alone or on aggregating all layers into a single embedding followed by one global frame-level pooling step. Such approaches fail to account for the fact that frame importance may vary across layers and thus cannot fully capture layer-dependent speaker cues.

In contrast, MMFA independently estimates frame-level importance within each layer and then integrates the resulting layer-wise embeddings through learnable aggregation. This design suppresses uninformative frames while preserving complementary information across different depths. Experiments on the VoxCeleb1 benchmark confirmed that MMFA consistently achieves lower EER and minDCF than conventional aggregation strategies. Beyond these numerical improvements, a key contribution of this study is its analysis of how multi-layer and frame-level representations contribute to speaker discrimination, which provides deeper insight into the mechanisms underlying SSL-based embeddings.
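The per-layer design summarized above can be sketched as follows, under simplifying assumptions that are ours rather than the paper's: each layer scores its frames with a hypothetical linear vector, a boolean `valid` mask (a stand-in for padding or a voice-activity decision) forces excluded frames to zero weight, and a softmax over learnable logits combines the per-layer embeddings. The attention and masking modules of MMFA itself may be parameterized differently; the function names here are illustrative only.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax; entries equal to -inf get zero weight."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def layerwise_masked_pooling(hidden, valid, w_att, layer_logits):
    """Frame attention inside each layer, then learnable layer weighting.

    hidden:       (L, T, D) stacked SSL hidden states
    valid:        (T,) bool, True where a frame may receive weight (needs >= 1 True)
    w_att:        (L, D) hypothetical per-layer linear scoring vectors
    layer_logits: (L,) learnable layer scores
    """
    scores = np.einsum("ltd,ld->lt", hidden, w_att)       # (L, T) per-layer frame scores
    scores = np.where(valid[None, :], scores, -np.inf)     # exclude masked frames
    frame_w = softmax(scores, axis=-1)                    # (L, T) one distribution per layer
    layer_emb = np.einsum("lt,ltd->ld", frame_w, hidden)   # (L, D) per-layer embeddings
    layer_w = softmax(layer_logits)                       # (L,) layer weights
    return layer_w @ layer_emb, frame_w                   # (D,) embedding, (L, T) weights


rng = np.random.default_rng(1)
L, T, D = 4, 50, 16
hidden = rng.standard_normal((L, T, D))
valid = np.ones(T, dtype=bool)
valid[40:] = False  # e.g. trailing padding or a detected silence region
emb, frame_w = layerwise_masked_pooling(
    hidden, valid, rng.standard_normal((L, D)), rng.standard_normal(L))
```

Because `frame_w` has one row per layer, each layer can emphasize different frames, which is the layer-dependent frame relevance the paper argues for, while masked frames receive exactly zero weight in every layer.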

Author Contributions

Conceptualization, U.L. and S.-P.L.; methodology, U.L.; investigation, U.L.; writing—original draft preparation, U.L.; writing—review and editing, S.-P.L.; project administration, S.-P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the 2025 Research Grant from SangMyung University (2025-A000-0004).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

This work was supported by the 2025 Research Grant from SangMyung University (2025-A000-0004).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tu, Y.; Lin, W.; Mak, M.-W. A Survey on Text-Dependent and Text-Independent Speaker Verification. IEEE Access 2022, 10, 99038–99049.

- Kinnunen, T.; Li, H. A Survey of Speaker Recognition: Fundamental Theories, Recognition Methods and Opportunities. IEEE Trans. Audio Speech Lang. Process. 2010, 18, 312–335.

- Rossi, M.; Amft, O.; Kusserow, M.; Troster, G. Collaborative real-time speaker identification for wearable systems. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications (PerCom 2010), Mannheim, Germany, 29 March–2 April 2010; pp. 180–189.

- Ko, K.; Kim, S.; Kwon, H. Selective Audio Perturbations for Targeting Specific Phrases in Speech Recognition Systems. Int. J. Comput. Intell. Syst. 2025, 18, 103.

- Dehak, N.; Kenny, P.; Dehak, R.; Dumouchel, P.; Ouellet, P. Front-End Factor Analysis for Speaker Verification. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 788–798.

- Ioffe, S. Probabilistic Linear Discriminant Analysis. In Proceedings of the European Conference on Computer Vision (ECCV 2006), Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 531–542.

- Snyder, D.; Garcia-Romero, D.; Sell, G.; Povey, D.; Khudanpur, S. X-Vectors: Robust DNN Embeddings for Speaker Recognition. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018; ISCA: Baixas, France, 2018; pp. 5329–5333.

- Desplanques, B.; Thienpondt, J.; Demuynck, K. ECAPA-TDNN: Emphasized Channel Attention, Propagation and Aggregation in TDNN Based Speaker Verification. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2020), Barcelona, Spain, 4–8 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 7619–7623.

- Miara, V.; Lepage, T.; Dehak, R. Towards Supervised Performance on Speaker Verification with Self-Supervised Learning by Leveraging Large-Scale ASR Models. In Proceedings of the Interspeech 2024, Kos, Greece, 1–5 September 2024; ISCA: Baixas, France, 2024; pp. 2660–2664.

- Peng, J.; Plchot, O.; Stafylakis, T.; Mošner, L.; Burget, L.; Černocký, J. An Attention-Based Backend Allowing Efficient Fine-Tuning of Transformer Models for Speaker Verification. In Proceedings of the IEEE Spoken Language Technology Workshop (SLT 2022), Doha, Qatar, 9–12 January 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

- Aldeneh, Z.; Higuchi, T.; Jung, J.-W.; Seto, S.; Likhomanenko, T.; Shum, S.; Abdelaziz, A.H.; Watanabe, S.; Theobald, B.-J. Can You Remove the Downstream Model for Speaker Recognition with Self-Supervised Speech Features? In Proceedings of the Interspeech 2024, Kos, Greece, 1–5 September 2024; ISCA: Baixas, France, 2024; pp. 2798–2802.

- Baevski, A.; Zhou, H.; Mohamed, A.; Auli, M. wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2020), Virtual, 6–12 December 2020; NeurIPS: Vancouver, BC, Canada, 2020; pp. 12449–12460.

- Hsu, W.-N.; Bolte, B.; Tsai, Y.-H.H.; Lakhotia, K.; Salakhutdinov, R.; Mohamed, A. HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 3451–3460.

- Chen, S.; Wang, C.; Chen, Z.; Wu, Y.; Jia, J.; Chen, X.; Zhou, S.; Cao, C.; Li, Z.; Wang, S.; et al. WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing. IEEE J. Sel. Top. Signal Process. 2022, 16, 1505–1518.

- Yang, S.-W.; Chi, P.-H.; Chuang, Y.-S.; Lai, C.-I.; Lakhotia, K.; Lin, Y.-Y.; Liu, A.T.; Shi, J.; Chang, X.; Lin, G.-T.; et al. SUPERB: Benchmarking Self-Supervised Speech Representation Learning. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; ISCA: Baixas, France, 2021; pp. 1194–1198.

- Kalibhat, N.; Narang, K.; Firooz, H.; Sanjabi, M.; Feizi, S. Measuring Self-Supervised Representation Quality for Downstream Classification Using Discriminative Features. arXiv 2022, arXiv:2203.01881.

- Huang, C.; Chen, W.C.; Yang, S.; Liu, A.T.; Li, C.A.; Lin, Y.X.; Tseng, W.C.; Diwan, A.; Shih, Y.J.; Shi, J.; et al. Dynamic-SUPERB Phase-2: A Collaboratively Expanding Benchmark for Measuring the Capabilities of Spoken Language Models with 180 Tasks. arXiv 2024, arXiv:2411.05361.

- Mohamed, M.; Liu, O.D.; Tang, H.; Goldwater, S. Orthogonality and Isotropy of Speaker and Phonetic Information in Self-Supervised Speech Representations. In Proceedings of the Interspeech 2024, Kos, Greece, 1–5 September 2024; ISCA: Baixas, France, 2024; pp. 2903–2907.

- Pasad, A.; Chou, J.-C.; Livescu, K. Layer-Wise Analysis of a Self-Supervised Speech Representation Model. In Proceedings of the 2021 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU 2021), Cartagena, Colombia, 13–17 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 914–921.

- Xing, X.; Xu, M.; Zheng, F. A Joint Noise Disentanglement and Adversarial Training Framework for Robust Speaker Verification. In Proceedings of the Interspeech 2024, Kos, Greece, 1–5 September 2024; ISCA: Baixas, France, 2024; pp. 2845–2849.

- Li, K.; Akagi, M.; Wu, Y.; Dang, J. Segment-Level Effects of Gender, Nationality and Emotion Information on Text-Independent Speaker Verification. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020; ISCA: Baixas, France, 2020; pp. 2987–2991.

- Nagrani, A.; Chung, J.S.; Zisserman, A. VoxCeleb: A Large-Scale Speaker Identification Dataset. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; ISCA: Baixas, France, 2017; pp. 2616–2620.

- Chung, J.S.; Nagrani, A.; Zisserman, A. VoxCeleb2: Deep Speaker Recognition. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018; ISCA: Baixas, France, 2018; pp. 1086–1090.

- Peng, J.; Mošner, L.; Zhang, L.; Plchot, O.; Stafylakis, T.; Burget, L.; Černocký, J. CA-MHFA: A Context-Aware Multi-Head Factorized Attentive Pooling for SSL-Based Speaker Verification. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2025), Hyderabad, India, 6–11 April 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–5.

- Yu, Y.-Q.; Zheng, S.; Suo, H.; Lei, Y.; Li, W.-J. CAM: Context-Aware Masking for Robust Speaker Verification. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2021), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 6703–6707.

- Okabe, K.; Koshinaka, T.; Shinoda, K. Attentive Statistics Pooling for Deep Speaker Embedding. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018; ISCA: Baixas, France, 2018; pp. 2252–2256.

- Sun, C.; Qiu, X.; Xu, Y.; Huang, X. How to Fine-Tune BERT for Text Classification? In Proceedings of the China National Conference on Chinese Computational Linguistics (CCL 2019), Kunming, China, 18–20 October 2019; Springer: Cham, Switzerland, 2019; pp. 194–206.

- Povey, D.; Ghoshal, A.; Boulianne, G.; Burget, L.; Glembek, O.; Goel, N.; Hannemann, M.; Motlíček, P.; Qian, Y.; Schwarz, P.; et al. The Kaldi Speech Recognition Toolkit. In Proceedings of the IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU 2011), Waikoloa, HI, USA, 11–15 December 2011; IEEE: Piscataway, NJ, USA, 2011.

- Snyder, D.; Chen, G.; Povey, D. MUSAN: A Music, Speech, and Noise Corpus. In Proceedings of the 2015 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6.

- Ko, T.; Peddinti, V.; Povey, D.; Khudanpur, S. A Study on Data Augmentation of Reverberant Speech for Robust Speech Recognition. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; ISCA: Baixas, France, 2017; pp. 3586–3590.

- Martin, A.; Doddington, G.; Kamm, T.; Ordowski, M.; Przybocki, M. The DET Curve in Assessment of Detection Task Performance. In Proceedings of the Eurospeech 1997, Rhodes, Greece, 22–25 September 1997; ISCA: Baixas, France, 1997; pp. 1895–1898.

- Sadjadi, S.O.; Greenberg, C.S.; Reynolds, D.A. The 2016 NIST Speaker Recognition Evaluation. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; ISCA: Baixas, France, 2017; pp. 1353–1357.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4690–4699.

- Thienpondt, J.; Desplanques, B.; Demuynck, K. The IDLAB VoxSRC-20 Submission: Large Margin Fine-Tuning and Quality-Aware Score Calibration in DNN Based Speaker Verification. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2021), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 5814–5818.

- Zhou, T.; Zhao, Y.; Wu, J. ResNeXt and Res2Net Structures for Speaker Verification. In Proceedings of the IEEE Spoken Language Technology Workshop (SLT 2021), Shenzhen, China, 19–22 January 2021; pp. 301–307.

- Rybicka, M.; Villalba, J.; Zelasko, P.; Dehak, N.; Kowalczyk, K. Spine2Net: SpineNet with Res2Net and Time-Squeeze-and-Excitation Blocks for Speaker Recognition. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; ISCA: Baixas, France, 2021; pp. 496–500.

- Chen, Z.; Chen, S.; Wu, Y.; Qian, Y.; Wang, C.; Liu, S.; Qian, Y.; Zeng, M. Large-Scale Self-Supervised Speech Representation Learning for Automatic Speaker Verification. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2022), Singapore, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 6147–6151.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).