Abstract

As Virtual Try-On (VTON) technology matures, 2D VTON methods based on diffusion models can now rapidly generate diverse and high-quality try-on results. However, with rising user demands for realism and immersion, many applications are shifting towards 3D VTON, which offers superior geometric and spatial consistency. Existing 3D VTON approaches commonly face challenges such as barriers to practical deployment, substantial memory requirements, and cross-view inconsistencies. To address these issues, we propose an efficient 3D VTON framework with robust multi-view consistency, whose core design is to decouple the monolithic 3D editing task into a four-stage cascade as follows: (1) We first reconstruct an initial 3D scene using 3D Gaussian Splatting, integrating the SMPL-X model at this stage as a strong geometric prior. By computing a normal-map loss and a geometric consistency loss, we ensure the structural stability of the initial human model across different views. (2) We employ the lightweight CatVTON to generate 2D try-on images that provide visual guidance for the subsequent personalized fine-tuning tasks. (3) To accurately represent garment details from all angles, we partition the 2D dataset into three subsets (front, side, and back) and train a dedicated LoRA module for each subset on a pre-trained diffusion model. This strategy effectively mitigates the issue of blurred details that can occur when a single model attempts to learn global features. (4) An iterative optimization process then uses the generated 2D VTON images and specialized LoRA modules to edit the 3DGS scene, achieving 360-degree free-viewpoint VTON results. All our experiments were conducted on a single consumer-grade GPU with 24 GB of memory, a significant reduction from the 32 GB or more typically required by previous studies under similar data and parameter settings. Our method balances quality against memory requirements, significantly lowering the adoption barrier for 3D VTON technology.

1. Introduction

The importance of Virtual Try-On (VTON) technology has grown substantially with the rise in online shopping and e-commerce. As a critical application at the intersection of computer vision and the fashion industry, it has garnered widespread attention from both academia and industry in recent years. This technology enables users to digitally preview how clothing would look on them without physical fitting. This enhances the personalized shopping experience and reduces product returns due to style mismatches. Furthermore, it demonstrates immense potential in promoting sustainable consumption.

Early 2D VTON methods primarily relied on explicit warping mechanisms and Generative Adversarial Networks (GANs) [1] to deform and overlay garment images onto a target person. To address the challenges posed by complex poses and unconventional apparel, researchers began to introduce innovative designs. For instance, GP-VTON [2] introduced a Local-Flow Global-Parsing strategy to better preserve the semantic information of different garment parts. Subsequently, methods based on diffusion models further elevated the generation quality. IDM-VTON [3], for example, employed a dual-module architecture that fuses high-level semantics from a visual encoder into its cross-attention layers while injecting low-level details extracted by a dual UNet into its self-attention layers, thereby improving garment fidelity. More recently, lightweight architectures like CatVTON [4] have demonstrated that efficient VTON can be achieved through simple spatial concatenation, reducing model parameters and simplifying complex preprocessing workflows. Nevertheless, even sophisticated frameworks such as [3] are fundamentally 2D approaches. Consequently, they face significant challenges in maintaining cross-view consistency and spatial coherence when confronted with multi-view demands, highlighting the necessity of advancing 3D VTON.

Recent advancements in 3D scene representation have opened new avenues for extending 2D VTON to 3D. The introduction of 3D Gaussian Splatting (3DGS) [5], in particular, has enabled real-time rendering through efficient rasterization of 3D Gaussian primitives, unlike the time-consuming volume rendering required by traditional Neural Radiance Fields (NeRF) [6]. This breakthrough has spurred pioneering works in the 3D VTON domain, such as GS-VTON [7] and GaussianVTON [8]. Their core idea is to transfer the results from a pre-trained 2D VTON diffusion model to a 3DGS scene, following a series of consistency-enhancing preprocessing steps, to achieve cross-view-consistent virtual try-on. Such methods typically adopt multi-stage optimization strategies, combining image-based editing with 3D consistency constraints to transfer garment textures and geometric shapes onto a 3D human representation.

Parametric human models, such as SMPL [9] and SMPL-X [10], offer a promising direction for addressing the geometric consistency of the human body in 3D VTON. By generating 3D models that encapsulate body shape and pose, they enable robust skeletal tracking and surface structure representation, which can be used to solidify the structural stability of the human form in cross-view tasks. Although the SMPL-X model was designed to be efficient and lightweight, its application in high-fidelity 3D VTON tasks can still impact the real-time try-on experience due to the complex clothing simulation, rendering, and optimization processes involved.

Despite these advancements, existing 3D VTON methods still face several key limitations. First, the most significant barrier to widespread adoption is the computational demand: most methods require high-end GPUs and substantial memory resources for training, which is prohibitive for practical deployment. Second, integrating 2D priors into 3D representations often leads to texture inconsistencies and geometric distortions across different views. This issue is rooted in the inherent lack of 3D spatial awareness in 2D diffusion models, compelling existing methods to restrict the range of supported viewing angles to prevent quality degradation, thereby severely compromising the user’s immersive experience.

To address the aforementioned challenges, we introduce “Splatting the Cat,” a 3D virtual try-on framework designed to reduce computational requirements, enhance cross-view consistency, and support free-viewpoint observation. Our primary contribution lies not in a single component, but in a streamlined multi-stage pipeline that fundamentally decouples the complex 3D editing task into a series of more manageable and computationally optimizable sub-problems. This design philosophy allows for targeted resource allocation at each stage. Our strategy integrates three key designs: (1) To lower computational demands, we adopt the lightweight CatVTON [4] for generating initial 2D virtual try-on results, which provide high-quality visual guidance for the subsequent 3D editing and LoRA [11] fine-tuning stages. (2) To fundamentally resolve the issues of texture blurring and detail loss in 360-degree virtual try-on, we introduce an innovative view-decomposed LoRA fine-tuning strategy. By training dedicated “expert” LoRA models for the front, side, and back views, we effectively prevent the compromises that occur when a single model attempts to learn global features, significantly improving the clarity of garment textures from each perspective. (3) Unlike methods that consider human geometry only in the later editing stages, we integrate the SMPL-X model as a strong geometric prior during the initial 3DGS scene reconstruction. By introducing explicit normal map and geometric consistency losses, we ensure that the human structure possesses cross-view stability from the outset, providing a clean and reliable foundation for the subsequent garment editing.

Through this series of designs, our framework achieves a deliberate balance among quality, efficiency, and cross-view consistency. All experiments for this 360-degree virtual try-on were completed on a single NVIDIA GeForce RTX 4090 with 24 GB of memory (NVIDIA, Santa Clara, CA, USA). This significantly lowers the entry barrier for 3D VTON technology, making it more accessible for broad application in fields such as e-commerce, virtual reality, and digital fashion.

The contributions of this work can be summarized as follows:

- We propose a computationally efficient 3D VTON framework that integrates a lightweight 2D VTON model architecture. This approach significantly reduces computational costs and memory footprint while maintaining high-quality virtual try-on results, setting a new benchmark for applications in resource-constrained environments.

- We introduce a view-decomposed LoRA fine-tuning strategy. By training specialized modules for the front, side, and back views, respectively, our method avoids the blurring issues that arise when a single pre-trained model learns global features, thereby markedly improving the clarity of garment textures from all perspectives.

- To ensure the stability of the human body structure, we integrate the SMPL-X model as a geometric prior. By introducing explicit constraints through a normal map loss and a geometric consistency loss, we mitigate pose distortions and deformations in the initial 3DGS scene across different viewpoints.

- All experiments were conducted on a single RTX 4090 GPU with 24 GB of memory. In contrast to previous studies, which typically require at least 32 GB, our method has a significantly lower VRAM demand.

2. Related Work

The evolution of Virtual Try-On (VTON) technology has progressed through multiple stages, from early 2D image warping-based methods to contemporary 3D neural rendering. This trajectory reflects the field’s continuous efforts to achieve more realistic, immersive, and geometrically consistent virtual try-on experiences.

2.1. Two-Dimensional Virtual Try-On

2.1.1. Warping-Based Methods

Early 2D VTON methods primarily followed a two-stage process: garment warping followed by blending or refinement. VITON [12] pioneered the paradigm of image-based virtual try-on, while VITON-HD [13] addressed misalignment issues at high resolutions through “misalignment-aware normalization.” These approaches relied on geometric transformations, initially using Thin Plate Spline (TPS) warping techniques [12]. However, TPS was found to have limited capabilities in handling complex non-rigid deformations in practical applications.

To overcome the limitations of TPS, subsequent research shifted towards methods based on “appearance flow.” ClothFlow [14] introduced flow-based modeling for clothed person generation, and Parser-free VTON [15] employed appearance flow distillation to eliminate the need for explicit semantic parsing. GP-VTON [2] further advanced warping techniques with its Local-Flow Global-Parsing (LFGP) strategy, which better preserves the semantics of different garment components under complex poses.

Despite continuous improvements, warping-based methods face a fundamental limitation in generating novel visual content for occluded regions or complex fabric interactions, as they essentially transform existing pixels rather than synthesizing new ones.

2.1.2. GAN-Based Methods

The adoption of Generative Adversarial Networks (GANs) [1] marked a significant advancement in 2D VTON, shifting the paradigm from purely geometric transformations to learned generative modeling. Early GAN-based approaches combined a warping module with adversarial training to achieve more realistic blending results.

VITON [12] utilized a GAN in its person representation module to generate convincing try-on effects, inspiring subsequent works to explore more sophisticated GAN architectures. CP-VTON [16] introduced a “characteristic-preserving mechanism” using a GAN to better maintain person identity during the try-on process. The Attribute-decomposed GAN [17] proposed a more comprehensive approach, enabling controllable person synthesis by decomposing attributes and demonstrating superior handling of complex poses and clothing variations. ClothFlow [14] integrated flow-based warping with GAN-based generation, proving that combining geometric and generative methods can yield superior outcomes.

However, GAN-based methods encountered several persistent challenges, including training instability, mode collapse, and difficulties in achieving fine-grained control over generation quality, all of which limited their effectiveness. Furthermore, GANs struggled with maintaining texture consistency and handling complex occlusions, issues that became increasingly apparent when dealing with fine garment details or unusual poses.

2.1.3. Diffusion-Based Methods

The emergence of more stable and controllable diffusion models [18] revolutionized the research landscape of 2D VTON. The task was redefined as a “conditioned inpainting” problem rather than one of geometric warping. LaDI-VTON [19] was a pioneering work based entirely on diffusion models, and later, TryOnDiffusion [20] introduced a dual-UNet architecture that used separate networks to extract garment and person features. Both studies revealed the immense potential of applying diffusion models to the VTON task.

Recent progress has focused on exploring more diverse architectural designs. IDM-VTON [3] proposed a complex dual-module fusion method that integrates high-level semantic features via a cross-attention mechanism and handles low-level texture details through a self-attention mechanism. StableVITON [21] introduced a zero-cross-attention block, significantly improving its ability to handle misalignment issues and better preserve garment details.

OOTDiffusion [22] introduced a latent diffusion model based on outfitting fusion for controllable virtual try-on. However, a prominent recent trend is the move towards lightweight architectures. CatVTON [4] is a successful example, demonstrating that effective VTON can be achieved by simply concatenating the latent vectors of the person and garment in the spatial dimension. By removing redundant modules such as the ReferenceNet [23], text encoder, and cross-attention layers, ref. [4] showed that the self-attention mechanism within a pre-trained UNet is sufficient to learn the implicit correspondence between the garment and the person, all while substantially reducing computational complexity.

Although 2D VTON technology has now reached a mature state capable of handling most scenarios, the growing demand for realism and immersive experiences, coupled with the needs of AR and VR applications, has spurred research towards 3D VTON.

2.2. Three-Dimensional Virtual Try-On

2.2.1. Traditional Physics-Based Methods

Early 3D VTON methods relied heavily on expensive 3D scanning and physics-based cloth simulation. DRAPE [24] introduced an early “person-agnostic” dressing technique, while Subspace Clothing Simulation [25] employed adaptive bases for efficient cloth modeling, and DeepWrinkles [26] advanced the state of accurate garment modeling. However, the need for expensive infrastructure and complex preprocessing greatly limited the practicality of these methods.

The introduction of parametric human models, particularly SMPL [9] and SMPL-X [10], provided learnable geometric priors for human body representation. Multi-garment Net [27] was an early example of a learning-based method that could predict parametric garment geometry compatible with the SMPL model. Pix2Surf [28] attempted to bridge the gap between 2D and 3D by learning the correspondence between a clothing image and a 3D UV map.

However, these reconstruction-based approaches face a fundamental “information gap”: a single 2D image cannot provide sufficient information about 3D structure, back-side texture, and material properties. This often leads to reconstruction results with low fidelity and geometric artifacts.

2.2.2. The Neural Rendering Paradigm

The latest research in 3D VTON has shifted towards neural rendering methods, reframing the task as 3D scene editing. Three-Dimensional Gaussian Splatting (3DGS) [5] has gradually replaced NeRF as the predominant 3D representation method due to its real-time rendering capabilities and high-fidelity output. The general workflow involves: (1) reconstructing a 3DGS scene from multi-view images of a person; (2) editing the 2D rendered images using a pre-trained 2D VTON diffusion model; and (3) back-propagating the changes from the edited 2D VTON results to iteratively optimize and edit the 3D scene, ultimately accomplishing the VTON task.

Instruct-NeRF2NeRF [29] established a foundational framework for iterative 3D editing using 2D diffusion models and introduced the “Iterative Dataset Update (IterativeDU)” strategy. However, due to the inherent stochasticity of 2D diffusion models and their lack of 3D spatial awareness, this approach is highly susceptible to multi-view inconsistencies.

To tackle the challenge of multi-view consistency, recent 3D VTON methods have proposed a variety of strategies. VTON360 [30] directly enhances the 3D awareness of the 2D VTON model through pseudo-3D pose inputs, a multi-view spatial attention mechanism, and multi-view CLIP embeddings to fundamentally generate cross-view consistent results. GaussianVTON [8] introduced a multi-stage refinement process that includes face consistency restoration, hierarchical sparse editing, and an “Edit Recall Reconstruction (ERR)” strategy to replace the traditional IterativeDU. GS-VTON [7] employed “reference-driven” image editing, fine-tuning a 2D pre-trained diffusion model with LoRA to create a personalized concept, and combined this with a person-aware 3DGS editing stage to achieve high-fidelity 3D results. However, our experiments revealed that this method primarily supports frontal views, with limited effectiveness for back-of-garment try-on.

The application scope of these solutions is not limited to VTON; they are also applicable to other general 3D generation tasks that require multi-view coherence. The diversity of consistency solutions, however, indirectly reflects the profound challenge of integrating the capabilities of 2D diffusion models with the demands of 3D space. Therefore, this work is also dedicated to resolving the cross-view consistency problem as VTON is extended to 360 degrees.

3. Method

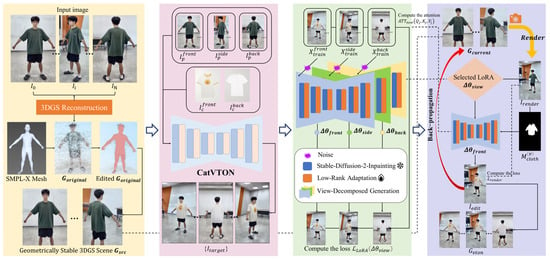

In this study, we propose “Splatting the Cat,” a framework designed to achieve highly efficient and consistent 3D virtual try-on. Our core design decomposes the complex 3D VTON task into a series of well-defined and technically manageable sub-processes. Instead of a single, monolithic end-to-end model, we devise a four-stage cascade in which each stage addresses a specific challenge. As shown in Figure 1, the four stages are as follows: first, we establish a geometrically stable 3DGS scene. Second, we generate high-quality 2D VTON images for visual guidance. Third, we fine-tune a diffusion model using our view-decomposed LoRA strategy. Finally, an iterative optimization process integrates all components. This strategy of decoupling and refinement allows us to achieve on consumer-grade hardware what previously required substantial computational resources.

Figure 1. Overview of our proposed framework, Splatting the Cat.

3.1. Robust Initial 3DGS Scene Reconstruction

The success of 3D virtual try-on is largely contingent on the quality of the initial 3D human model. If the foundational model suffers from geometric distortions or cross-view inconsistencies, any subsequent editing efforts will be futile. Therefore, the goal of this stage is to construct a geometrically robust 3DGS scene of the person. By incorporating the parametric human model SMPL-X as a strong geometric prior, we transform the 3DGS reconstruction task from an ill-posed problem that relies purely on multi-view photometric consistency into a more stable optimization task with strong geometric constraints. In the following equations, we define $c$ as a camera pose randomly sampled from the distribution of training views.

First, given that the human subject remains nearly static in the dataset for 3D scene reconstruction, we utilize SMPLify-X, a single-image 3D human pose estimation tool, to predict the SMPL-X model parameters and reconstruct the human mesh $\mathcal{M}$. Subsequently, we perform a simple manual alignment to roughly overlap the SMPL-X mesh with the person in the 3DGS scene. This is followed by a rigid alignment between this mesh and the set of center points of the initial 3DGS point cloud to compensate for manual inaccuracies, thereby significantly reducing the risk of misalignment. This rigid alignment is achieved by minimizing the Symmetric Chamfer Distance between the two sets, as defined in (1):

$$\mathcal{L}_{\text{align}} = \frac{1}{N_{\mathcal{G}}} \sum_{\mu_i \in \mathcal{G}} \min_{v_j \in \mathcal{M}} \lVert \mu_i - v_j \rVert_2^2 + \frac{1}{N_{\mathcal{M}}} \sum_{v_j \in \mathcal{M}} \min_{\mu_i \in \mathcal{G}} \lVert v_j - \mu_i \rVert_2^2 \tag{1}$$

In Equation (1), $\mathcal{L}_{\text{align}}$ represents the alignment loss, $\mu_i$ is the center point vector of a Gaussian in the set $\mathcal{G}$, and $v_j$ is a vertex vector in the human mesh $\mathcal{M}$. $N_{\mathcal{G}}$ and $N_{\mathcal{M}}$ are the numbers of points in their respective sets. The objective is to minimize $\mathcal{L}_{\text{align}}$, which averages the squared L2 norm of the shortest distance from each Gaussian center to the mesh and vice versa. The aligned SMPL-X model then serves as a reliable “geometric scaffold” to guide the optimization of the 3DGS. We introduce two geometric regularization losses guided by this scaffold:
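As a rough illustration of Equation (1), the plain-Python sketch below computes a symmetric Chamfer distance between two small point sets. The function and variable names are hypothetical, distances are taken to mesh vertices rather than to the mesh surface, and the brute-force nearest-neighbour search is only practical for toy inputs; a real pipeline would presumably use batched tensor operations.

```python
def squared_dist(p, q):
    """Squared Euclidean distance between two 3D points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def symmetric_chamfer(centers, vertices):
    """Mean squared nearest-neighbour distance, summed over both directions."""
    # Gaussian centers -> nearest mesh vertex
    to_mesh = sum(min(squared_dist(mu, v) for v in vertices) for mu in centers)
    # Mesh vertices -> nearest Gaussian center
    to_gauss = sum(min(squared_dist(v, mu) for mu in centers) for v in vertices)
    return to_mesh / len(centers) + to_gauss / len(vertices)


# Toy data (hypothetical): two Gaussian centers, three mesh vertices.
centers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
loss = symmetric_chamfer(centers, vertices)
```

In this toy case every center coincides with a vertex, so only the unmatched vertex contributes to the loss. A rigid alignment would then search over rotations and translations of the mesh to drive this value down.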

3.1.1. Normal Map Loss ($\mathcal{L}_{\text{normal}}$)

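This loss aims to ensure that the surface orientation of the human rendered from the 3DGS aligns with that of the SMPL-X model. We employ cosine distance, which is more robust to variations in lighting and scale compared to the L1 loss. We compute the angular difference between the rendered unit normal map from 3DGS, $N_{\text{gs}}$, and the SMPL-X reference normal map, $N_{\text{smplx}}$, only within the region defined by the binarized silhouette mask $M_{\text{smplx}}$ rendered from the SMPL-X model at the same camera pose $c$, as formulated in (2):

$$\mathcal{L}_{\text{normal}} = \mathbb{E}_{c} \left[ \frac{1}{\lvert M_{\text{smplx}} \rvert} \sum_{p \in M_{\text{smplx}}} \left( 1 - N_{\text{gs}}(p) \cdot N_{\text{smplx}}(p) \right) \right] \tag{2}$$

In Equation (2), $p$ indexes the pixels inside the mask and $\cdot$ denotes the vector dot product. This loss effectively constrains the curvature and smoothness of the human body surface.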
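The masked cosine distance described above can be sketched in a few lines. This is a minimal illustration with hypothetical names, assuming both normal maps are already unit-length and flattened to per-pixel lists, and assuming the loss is averaged over the masked pixels.

```python
def normal_map_loss(rendered, reference, mask):
    """Mean cosine distance (1 - dot product) over pixels where mask is 1."""
    total, count = 0.0, 0
    for n_gs, n_ref, m in zip(rendered, reference, mask):
        if m:
            dot = sum(a * b for a, b in zip(n_gs, n_ref))
            total += 1.0 - dot
            count += 1
    # An empty mask contributes no loss.
    return total / count if count else 0.0


# Toy data (hypothetical): three pixels, the last one outside the silhouette.
rendered = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
reference = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]
mask = [1, 1, 0]
loss = normal_map_loss(rendered, reference, mask)
```

The first pixel agrees with the reference (distance 0), the second is perpendicular to it (distance 1), and the third is ignored because it lies outside the mask.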

3.1.2. Geometric Consistency Loss ($\mathcal{L}_{\text{geo}}$)

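To further ensure that the global geometry of the human body is accurate and consistent, we employ a pixel-wise Intersection over Union (IoU) loss to maximize the overlap between the rendered 3DGS silhouette $S_{\text{gs}}$ and the SMPL-X reference silhouette $S_{\text{smplx}}$, both rendered at the sampled camera pose $c$. The formula is as follows:

$$\mathcal{L}_{\text{geo}} = \mathbb{E}_{c} \left[ 1 - \frac{\sum_{p} S_{\text{gs}}(p)\, S_{\text{smplx}}(p)}{\sum_{p} \left( S_{\text{gs}}(p) + S_{\text{smplx}}(p) - S_{\text{gs}}(p)\, S_{\text{smplx}}(p) \right)} \right] \tag{3}$$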
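A pixel-wise IoU loss of this kind is commonly written in a "soft" form so that it stays differentiable when the rendered silhouette holds opacities in [0, 1]. The sketch below follows that common form; the names and the small `eps` stabilizer are assumptions, not details taken from the paper.

```python
def iou_loss(sil_gs, sil_ref, eps=1e-8):
    """1 - soft IoU between two flattened silhouettes with values in [0, 1]."""
    intersection = sum(a * b for a, b in zip(sil_gs, sil_ref))
    union = sum(a + b - a * b for a, b in zip(sil_gs, sil_ref))
    return 1.0 - intersection / (union + eps)


# Toy data (hypothetical): four pixels, one shared between the silhouettes.
sil_gs = [1.0, 1.0, 0.0, 0.0]
sil_ref = [1.0, 0.0, 1.0, 0.0]
loss = iou_loss(sil_gs, sil_ref)
```

Here the intersection covers one pixel and the union three, so the loss is close to 2/3. Identical silhouettes give a loss near zero.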

By jointly optimizing the 3DGS parameters with these two geometric losses and the photometric reconstruction loss, we obtain an initial 3DGS scene with a structurally stable human body.

3.2. Efficient and Consistent 2D VTON Images

With a stable 3D human model in place, the next step is to generate a set of high-quality 2D VTON images. These images will serve as the “visual blueprints” or targets for the final 3D editing. The core challenge in this stage is to strike a balance between efficiency and cross-view consistency.

To maximize efficiency, we selected the lightweight CatVTON model [4], which represents a paradigm shift in VTON architecture. This model demonstrates that high quality can be achieved by eschewing modules previously considered essential in conventional designs, such as dedicated clothing encoders (e.g., ReferenceNet) and text-conditioned cross-attention mechanisms [3,21,22]. Instead of these complex components, CatVTON adopts a fundamentally simpler approach by directly concatenating the person and garment latent vectors in the spatial dimension. This design proves that the inherent self-attention mechanism within a single UNet is sufficient to learn the implicit correspondence, thereby significantly reducing computational demands while maintaining high-quality outcomes [4].

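Its core mechanism involves passing the person image $I_p$ and the garment image $I_g$ through a shared VAE encoder $\mathcal{E}$ to obtain latent vectors $z_p$ and $z_g$, respectively. These are then directly concatenated along the spatial dimension to form a single input $z_{\text{in}}$ for the UNet, as shown in Equations (4)–(6):

$$z_p = \mathcal{E}(I_p) \tag{4}$$

$$z_g = \mathcal{E}(I_g) \tag{5}$$

$$z_{\text{in}} = \operatorname{Concat}(z_p, z_g) \tag{6}$$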
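To make the shape bookkeeping concrete, the sketch below concatenates two toy latents stored as nested lists in channel, height, width order. The helper names are hypothetical, and the choice of the height axis is an assumption made for illustration; the text only states that concatenation happens along a spatial dimension rather than the channel dimension.

```python
def concat_spatial(z_person, z_garment):
    """Stack two C x H x W latents along the height axis, giving C x 2H x W."""
    assert len(z_person) == len(z_garment), "channel counts must match"
    # Per channel, append the garment rows below the person rows.
    return [rows_p + rows_g for rows_p, rows_g in zip(z_person, z_garment)]


def shape(z):
    """Return (channels, height, width) of a nested-list latent."""
    return (len(z), len(z[0]), len(z[0][0]))


# Toy latents (hypothetical sizes): 4 channels, 8 x 6 spatial grid.
z_p = [[[0.0] * 6 for _ in range(8)] for _ in range(4)]
z_g = [[[1.0] * 6 for _ in range(8)] for _ in range(4)]
z_in = concat_spatial(z_p, z_g)
```

Because the channel count is unchanged, the UNet input layer needs no modification, and self-attention can relate person tokens to garment tokens within the same feature map.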

However, running CatVTON independently for each view would result in visual incoherence due to the stochastic nature of diffusion models. To address this, we adopt the reference-driven strategy proposed in GS-VTON. When generating a series of multi-view images, we designate one view as the reference. During the self-attention computation in the UNet, for any non-reference image $I_i$ (at timestep $t$), its Key matrix $K_i^t$ and Value matrix $V_i^t$ are concatenated with the corresponding matrices from the reference image $I_{\text{ref}}$, as shown in Equations (7) and (8):

$$\tilde{K}_i^t = \left[ K_i^t ; K_{\text{ref}}^t \right] \tag{7}$$

$$\tilde{V}_i^t = \left[ V_i^t ; V_{\text{ref}}^t \right] \tag{8}$$

This ensures that our generated set of 2D visual blueprints, $\{I_{\text{vton}}\}$, exhibits high cross-view consistency in garment texture, color, and pattern.
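The key/value concatenation can be illustrated with a small single-head attention written in plain Python. All names are hypothetical and the matrices are toy-sized; the point is only that the queries of a non-reference view attend over its own tokens and the reference tokens in one softmax.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for one head; rows are tokens."""
    d = len(Q[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


def reference_driven_attention(Q_i, K_i, V_i, K_ref, V_ref):
    """Attend over the view's own tokens followed by the reference tokens."""
    return attention(Q_i, K_i + K_ref, V_i + V_ref)


# Toy data (hypothetical): the query matches the reference key, not its own.
out = reference_driven_attention(
    Q_i=[[10.0, 0.0]],
    K_i=[[0.0, 10.0]], V_i=[[1.0, 0.0]],
    K_ref=[[10.0, 0.0]], V_ref=[[0.0, 1.0]],
)
```

In this example the output is dominated by the reference value, which is the mechanism by which garment appearance from the reference view propagates to the other views.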

3.3. View-Decomposed Expert LoRA Models

In our initial experiments extending the viewpoint to 360 degrees, we observed that fine-tuning a 2D pre-trained model with a single LoRA to learn the complete 360-degree appearance of a person often leads to a “feature averaging” dilemma. Specifically, when the visual information from the front, side, and back views differs significantly, forcing a single model to master all details simultaneously tends to blur them all, including garment edges, patterns, and textures.

To resolve this fundamental problem, we devised a view-decomposed expert LoRA strategy. Instead of training a single, generic personalized model, we manually partition the image set $\{I_{\text{vton}}\}$ generated in the second stage into three independent subsets, $D_{\text{front}}$, $D_{\text{side}}$, and $D_{\text{back}}$, based on their azimuthal angle: front (from −60° to +60°), back (from 120° to 240°), and side (all remaining angles). Subsequently, on a pre-trained Stable Diffusion Inpainting model (with UNet weights $\theta$), we fine-tune a dedicated Low-Rank Adaptation (LoRA) module, $\Delta\theta_k$ with $k \in \{\text{front}, \text{side}, \text{back}\}$, for each subset. During fine-tuning, the original weights $\theta$ remain frozen, and only the low-rank matrices of the LoRA are updated. The training objective is to minimize the standard diffusion model loss, as described in (9):

$$\mathcal{L}_{\text{LoRA}}(\Delta\theta_k) = \mathbb{E}_{x_0 \in D_k,\; \epsilon \sim \mathcal{N}(0, I),\; t} \left[ \lVert \epsilon - \epsilon_{\theta + \Delta\theta_k}(z_t, t, y) \rVert_2^2 \right] \tag{9}$$

In Equation (9), $x_0$ is a clean image sampled from a specific view subset $D_k$, $z_t$ is its noised latent representation at timestep $t$, $y$ is the conditional input, $\epsilon$ is the sampled Gaussian noise, and $\epsilon_{\theta + \Delta\theta_k}$ represents the UNet model with the updated LoRA weights. This “divide and conquer” approach enables each LoRA module to become an expert for its designated viewpoint. While simple and intuitive, this method effectively addresses the challenge of a single model struggling to learn global information.
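The azimuth-based split can be expressed as a small routing function, which also serves later to pick the expert module for a rendering viewpoint. The names below are hypothetical, and treating the range boundaries as inclusive is an assumption, since the text does not say which subset owns an angle of exactly 60° or 120°.

```python
def view_subset(azimuth_deg):
    """Map an azimuth in degrees (0 = facing the camera) to a view subset."""
    a = azimuth_deg % 360.0  # normalize, so -45 becomes 315
    if a <= 60.0 or a >= 300.0:
        return "front"       # -60 deg to +60 deg
    if 120.0 <= a <= 240.0:
        return "back"        # 120 deg to 240 deg
    return "side"            # everything else


def partition_by_view(samples):
    """Group (image_id, azimuth) pairs into front, side, and back subsets."""
    subsets = {"front": [], "side": [], "back": []}
    for image_id, azimuth in samples:
        subsets[view_subset(azimuth)].append(image_id)
    return subsets


# Toy data (hypothetical image identifiers and angles).
samples = [("img_000", 0.0), ("img_001", -45.0), ("img_002", 90.0),
           ("img_003", 180.0), ("img_004", 270.0)]
subsets = partition_by_view(samples)
```

Each subset would then be used to fine-tune its own LoRA module, and the same `view_subset` call can select which module to load at editing time.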

3.4. Iterative 3D Scene Editing

This is the final stage of our framework, where all preparatory work culminates. The objective is to precisely “paint” the visual appearance of the garment, guided by $\{I_{\text{vton}}\}$ and the expert LoRA modules, onto the 3DGS human geometry base ($\mathcal{G}_{\text{init}}$) established in the first stage.

We employ an iterative optimization strategy. The core idea is not to directly compute a loss between the 3D model and the 2D images, but rather to use our fine-tuned diffusion model as a “Quality Assessor.” The principle of this assessor is that a perfect input image should require almost no modification. Therefore, our goal is to continuously adjust the 3DGS scene until its rendered images can “deceive” the assessor into believing that no further changes are necessary. The specific iterative loop is as follows:

3.4.1. Render Current State

Render an image $I_{\text{render}}$ from a random viewpoint $c$ of the current 3DGS scene $\mathcal{G}$.

3.4.2. Consult Expert Opinion

The core objective of this step is to utilize the previously trained view-specific expert models to perform an “idealized edit” on the current 3DGS rendered image, $I_{\text{render}}$, thereby generating a perfect target image, $I_{\text{target}}$. First, based on the azimuthal angle of the current rendering viewpoint, the system selects and loads the corresponding expert module, $\Delta\theta_k$, from the three pre-trained LoRA modules (front, side, and back).

The subsequent key operation occurs within the self-attention computation layers of the diffusion model’s UNet. Instead of a standard self-attention calculation, this process ingeniously fuses two sources of information to ensure the cross-view consistency of the edited result.

As formulated in Equation (10), this fusion is achieved by computing the attention output as a weighted sum of two distinct components, which we detail below:

$$\operatorname{Attn} = \lambda \cdot \operatorname{softmax}\!\left( \frac{Q K^{\top}}{\sqrt{d}} \right) V + (1 - \lambda) \cdot \frac{1}{N_k} \sum_{j=1}^{N_k} \operatorname{softmax}\!\left( \frac{Q K_j^{\top}}{\sqrt{d}} \right) V_j \tag{10}$$

Standard Attention

The first term of the equation, $\operatorname{softmax}(Q K^{\top} / \sqrt{d})\, V$, represents the standard self-attention analysis on the current rendered image, $I_{\text{render}}$, where $Q$, $K$, and $V$ are all derived from $I_{\text{render}}$ and $d$ is the feature dimension of the keys. The function of this term is to allow the model to comprehend the current geometric structure, texture, and lighting distribution of the 3DGS scene, thereby preserving the real-time fidelity of the 3D scene.

Reference Attention

The second term, $\frac{1}{N_k} \sum_{j} \operatorname{softmax}(Q K_j^{\top} / \sqrt{d})\, V_j$, introduces a powerful prior. The model uses the query vector $Q$ from the current image to “query” the entire training subset corresponding to that view, $D_k$. By computing attention with the key $K_j$ and value $V_j$ from every image in the training subset (of size $N_k$) and then averaging the results, the model obtains a highly consistent “average reference” based on all target images. This term essentially answers the question: “Based on all the correct examples I have seen for this view, what should the content at this current position ideally look like?”

The hyperparameter $\lambda$ acts as a balancing coefficient, weighing the model’s confidence in the “current 3D structure” against its reliance on the “2D view-specific prior knowledge.” By blending these two attention outputs, the model not only performs plausible edits based on the current 3D geometry but also ensures that the edited textures, colors, and patterns converge toward the most stable and consistent target for that specific viewpoint.

Finally, after the full denoising process guided by this fused attention mechanism, the diffusion model outputs the idealized edit, $I_{\text{target}}$. This result retains the structural foundation of $I_{\text{render}}$ while incorporating the consistent appearance that the target garment should have from that particular view.
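The blending of standard and reference attention can be sketched as follows. This is a toy, single-head illustration with hypothetical names; in particular, placing the balancing weight `lam` on the self-attention term and `1 - lam` on the averaged reference term is an assumption about how the two components are weighted.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for one head; rows are tokens."""
    d = len(Q[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


def fused_attention(Q, K, V, references, lam):
    """Blend self-attention with attention averaged over reference images."""
    self_out = attention(Q, K, V)
    ref_outs = [attention(Q, K_j, V_j) for K_j, V_j in references]
    fused = []
    for r, row in enumerate(self_out):
        ref_mean = [sum(o[r][c] for o in ref_outs) / len(ref_outs) for c in range(len(row))]
        fused.append([lam * s + (1.0 - lam) * m for s, m in zip(row, ref_mean)])
    return fused


# Toy data (hypothetical): one query token and two single-token references.
Q, K, V = [[1.0, 0.0]], [[1.0, 0.0]], [[2.0, 4.0]]
references = [([[0.0, 1.0]], [[0.0, 0.0]]), ([[1.0, 1.0]], [[4.0, 0.0]])]
fused = fused_attention(Q, K, V, references, lam=0.5)
```

With single-token keys, each attention call simply returns its value row, so the example reduces to an even blend of the self-attention output and the mean of the two reference values.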

3.4.3. Compute Improvement Space

We compute the visual difference between $I_{\text{render}}$ and $I_{\text{target}}$ as the loss. To ensure that edits are confined to the clothing region, we introduce a garment mask $M_g$ to weight the loss, thereby suppressing unnecessary alterations to the body and background. The loss function is defined as (11):

$$\mathcal{L}_{\text{edit}} = \lambda_1 \lVert M_g \odot (I_{\text{render}} - I_{\text{target}}) \rVert_1 + \lambda_2\, \mathcal{L}_{\text{LPIPS}}\!\left( M_g \odot I_{\text{render}},\; M_g \odot I_{\text{target}} \right) \tag{11}$$

In Equation (11), $\lambda_1$ and $\lambda_2$ are loss weights and $\odot$ denotes element-wise multiplication. We incorporate the LPIPS [31] loss because, unlike traditional metrics based solely on pixel differences, it utilizes multi-layer feature representations from a pre-trained convolutional neural network to compute perceptual similarity between images. It is learned with linear weights to align with human subjective evaluation. For a task like VTON, which aims to generate results with high perceptual realism, LPIPS can significantly enhance the outcome.
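A masked loss of this shape can be sketched as below. Several details are assumptions made for illustration: the pixel term is taken to be an L1 difference averaged over the mask area, images are flattened to lists of intensities, and `perceptual_fn` is a hypothetical stand-in for an LPIPS model, which in practice would be a pre-trained network.

```python
def masked_l1(render, target, mask):
    """Mean absolute difference over pixels selected by the garment mask."""
    num = sum(m * abs(r - t) for r, t, m in zip(render, target, mask))
    area = sum(mask)
    return num / area if area else 0.0


def edit_loss(render, target, mask, perceptual_fn, w_pix=1.0, w_perc=1.0):
    """Weighted sum of a masked pixel term and a masked perceptual term."""
    masked_render = [m * r for r, m in zip(render, mask)]
    masked_target = [m * t for t, m in zip(target, mask)]
    pixel_term = masked_l1(render, target, mask)
    perceptual_term = perceptual_fn(masked_render, masked_target)
    return w_pix * pixel_term + w_perc * perceptual_term


def dummy_perceptual(a, b):
    """Placeholder for LPIPS: mean squared difference (not a real LPIPS)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Toy data (hypothetical): the third pixel lies outside the garment mask.
render = [0.2, 0.8, 0.5]
target = [0.6, 0.8, 0.9]
mask = [1, 1, 0]
loss = edit_loss(render, target, mask, dummy_perceptual, w_pix=1.0, w_perc=0.5)
```

Because both terms see only masked pixels, changing the render outside the garment region leaves the loss unchanged, which is what keeps the body and background from being altered.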

3.4.4. Back-Propagate Correction

The calculated loss gradient is back-propagated only through $I_{\text{render}}$ to update the parameters of the Gaussian points in the 3DGS scene. The weights of the pre-trained diffusion model and the LoRA modules remain completely frozen during this process.

After several thousand iterations, the 3DGS scene gradually converges to an ideal state under the collective guidance of all the view-specific, LoRA fine-tuned pre-trained models, ultimately forming our final 3D VTON result, $\mathcal{G}^{*}$.
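The four steps of the loop can be summarized in a deliberately simplified skeleton. Everything here is a stand-in: the "scene" is a short list of numbers, the renderer is the identity, and the editor always returns a fixed goal image, so the sketch only shows the control flow and the fact that the scene parameters are the only thing being updated.

```python
def edit_scene(params, render, ideal_edit, grad_fn, steps=50, lr=0.1):
    """Iteratively pull scene parameters toward the editor's target images."""
    for _ in range(steps):
        image = render(params)          # 3.4.1: render the current state
        target = ideal_edit(image)      # 3.4.2: frozen editor proposes a target
        grad = grad_fn(image, target)   # 3.4.3: loss gradient w.r.t. the render
        # 3.4.4: update scene parameters only; the editor is never modified.
        params = [p - lr * g for p, g in zip(params, grad)]
    return params


# Toy stand-ins (hypothetical): identity renderer, fixed target, squared error.
GOAL = [1.0, 0.5, 0.0]
final = edit_scene(
    params=[0.0, 0.0, 1.0],
    render=lambda p: list(p),
    ideal_edit=lambda img: GOAL,
    grad_fn=lambda img, tgt: [2.0 * (a - b) for a, b in zip(img, tgt)],
)
```

Since the toy renderer is the identity, the gradient with respect to the render equals the gradient with respect to the parameters. In the actual setting, that gradient would flow through a differentiable rasterizer into the Gaussian parameters.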

3.5. Implementation Details

All our experiments were conducted on a Linux system equipped with a single NVIDIA GeForce RTX 4090 (24 GB VRAM), with each stage taking between 20 min and one hour to train. This highlights the feasibility of our framework on consumer-grade hardware. Our implementation is based on the PyTorch (version 2.2.1 with CUDA 11.8) deep learning framework, and we use the Diffusers library from Hugging Face to handle the diffusion models. The SMPL-X mesh was generated using the off-the-shelf tools OpenPose [32] and SMPLify-X. The three expert LoRA modules were fine-tuned for 1000 iterations each on top of the Stable-Diffusion-2-Inpainting model [33]. We chose the inpainting version because it is better suited for local region editing, which aligns with the nature of virtual try-on. For the iterative 3DGS editing and optimization task, we followed the design of GS-VTON with 4000 iterations. Building upon the GaussianEditor [34] methodology, we introduced a 2D garment mask to constrain the editing area, maximally preserving details of the scene and body outside the clothing region. Our person images were uniformly input at a resolution of 432 × 768, supporting a higher resolution compared to the 512 × 512 limit common in previous studies.

4. Experiments and Results Analysis

Given the absence of a widely adopted, open-source benchmark for 3D VTON, we used a self-collected dataset to create the 3DGS scenes and establish a baseline for our experimental comparisons. In this study, we compare our method with two text-prompt-based 3D editing works, EditSplat [35] and Instruct-NeRF2NeRF [29]. For the comparison against GS-VTON [7], we reproduced its experiments on a V100 GPU with 32 GB of memory to circumvent the out-of-memory (OOM) errors encountered on our 24 GB RTX 4090. Because of this difference in hardware, we discuss the results from GS-VTON separately from the other studies. This ensures a fairer comparison by isolating the evaluation to our work and another image-guided approach. A comparison with GaussianVTON was not possible as its code has not been publicly released to date.

4.1. Qualitative Comparison

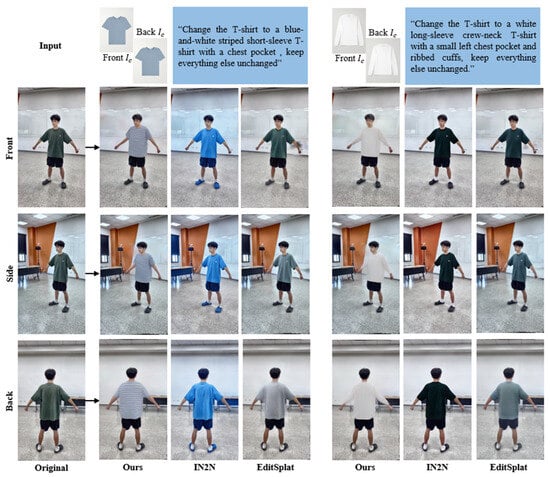

For our experiments, we took the target garments for the VTON task from VITON-HD and from the open-source T-Shirts Dataset on Kaggle. The person images were from a dataset we collected ourselves, consisting of 517 images captured from various angles in an indoor setting with stable lighting. This same person dataset was used as input for all qualitative comparisons. For the text-based methods, we adhered to their specified input requirements, providing information for “object_prompt,” “target_prompt,” “sampling_prompt,” and “target_mask_prompt.” To ensure a fair comparison, these prompts were generated using GPT-5 by feeding it the example prompt dataset from Instruct-Pix2Pix [36] along with an image of the target garment, and instructing it to produce a detailed and precise description of the garment’s appearance and material.

The comparison results are shown in Figure 2. It is evident that even with highly detailed textual descriptions of the target clothing, the performance of text-prompt-based scene editing methods on the try-on task falls far short of expectations. These methods appear to merely adjust the color tone of the original image, with no significant changes to the garment’s geometry or patterns. Even the colors often fail to match our textual descriptions. This validates the importance of using image-based prompts for the VTON task.

Figure 2. Qualitative comparison with text-based methods (1).

We observed that our results do not perfectly preserve the original arm pose. We hypothesize this is because, after view decomposition, each model is trained on and performs inference with a limited number of views. This can produce inaccurate masks that erroneously include the arms, causing slight changes in posture. Another potential cause is that the SMPL-X model, when generating the geometric prior, still cannot reconstruct a mesh that perfectly matches our target subject’s body type. If the subject is leaner or heavier than the model average, the limbs can be incorrectly averaged during loss computation, preventing the complete preservation of the original pose. In future work, we plan to add images from transitional regions to multiple expert LoRA datasets. This would force a single model to make more conservative changes to accommodate a wider range of views, which we hope would reduce pose variations.

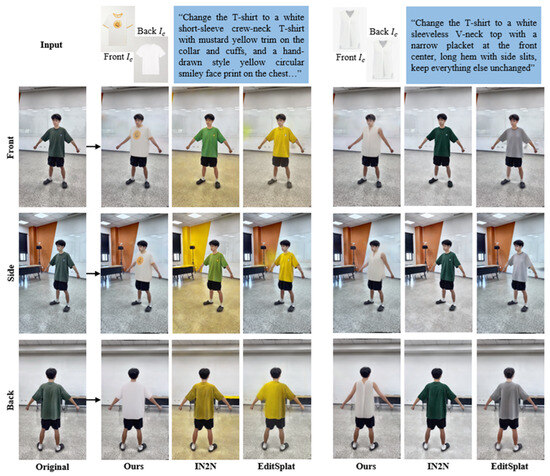

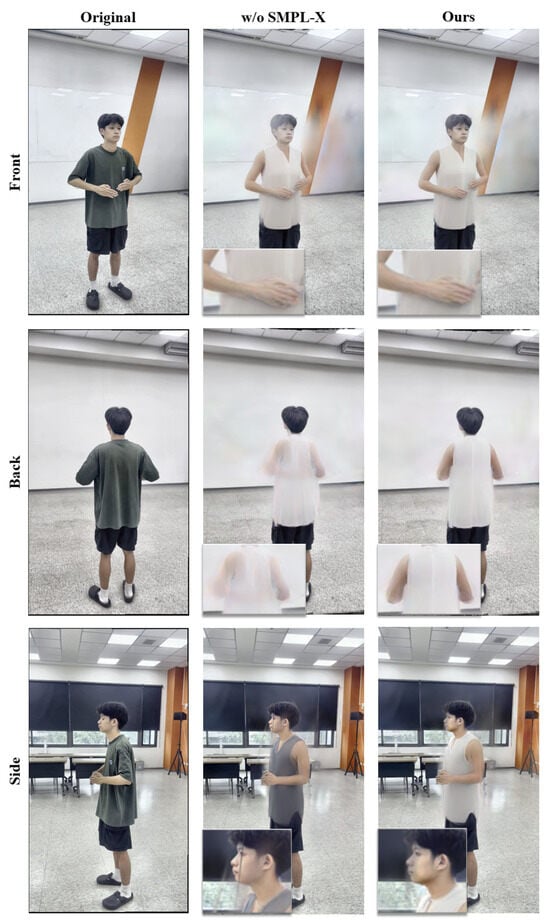

Subsequently, to verify our model’s ability to generate distinct front and back try-on results, we provided different garment inputs for the front and back and also experimented with a more challenging sleeveless sheer garment. The results are shown in Figure 3. It is clear that our model correctly differentiates between the front and back garment information, producing excellent try-on outcomes. In real-world applications, the front and back of most garments are different, so the ability to distinguish them is a crucial feature for enhancing realism. For the sleeveless sheer garment, our model successfully generates a complete human body structure, preserves the V-neck design of the garment, and even captures the semi-transparent quality of the sheer fabric. This confirms that our model can achieve strong try-on results across a variety of garment tasks.

Figure 3. Qualitative comparison with text-based methods (2).

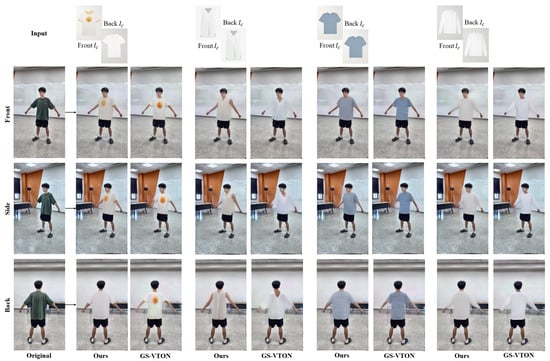

As observed in Figure 4, our method demonstrates a distinct advantage in maintaining the structural integrity of the arms and provides a more faithful reconstruction of challenging apparel, such as the semi-transparent sleeveless garment and inputs with different front and back designs. Conversely, GS-VTON exhibits a slight superiority in rendering fine garment details; for instance, with the white long-sleeve shirt input, their approach successfully generated a subtle chest pocket that was absent in our result. Nevertheless, when considering the memory requirements, we believe that our ability to achieve results largely comparable to GS-VTON while substantially lowering the hardware demands serves as an indirect testament to the effectiveness and value of our proposed method.

Figure 4. Qualitative comparison with the image-based method.

To further demonstrate the effectiveness of our lightweight design, we provide a quantitative comparison of computational complexity using GFLOPs and the number of parameters, as shown in Table 1. Regarding resource consumption, our proposed view-decomposed LoRA strategy exhibits a significant advantage: during a single inference or training process, the additional VRAM occupancy comes from only one LoRA module, thus avoiding a threefold increase in the real-time memory load. The primary cost of this approach is concentrated in the one-time training phase; to generate the three expert modules, the total training time and required storage space are indeed three times that of a single LoRA module. However, given the inherently small parameter count of LoRA models, this increased storage cost is entirely acceptable.
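The memory argument above rests on loading only one expert at a time. A minimal sketch of such a per-view dispatch follows, assuming the camera azimuth of each rendered view is known. The function name `select_expert` and the 45°/135° sector boundaries are hypothetical choices made for illustration; they are not values reported in this work.

```python
def select_expert(azimuth_deg: float) -> str:
    """Map a camera azimuth in degrees (0 = facing the subject's front) to one expert LoRA.

    The sector boundaries below are illustrative assumptions.
    """
    a = azimuth_deg % 360.0            # wrap into [0, 360)
    off_front = min(a, 360.0 - a)      # angular distance from the front view, in [0, 180]
    if off_front <= 45.0:
        return "front"
    if off_front >= 135.0:
        return "back"
    return "side"


# Only the adapter returned for the current view would need to be resident in VRAM.
views = [0, 30, 90, 180, 270, 350]
print([select_expert(v) for v in views])
```

Because exactly one name is returned per view, the extra VRAM at any moment corresponds to a single LoRA module, while the other two stay on disk.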

Table 1. Comparison of computational complexity. Red font indicates the best result.

4.2. Quantitative Comparison

Given that the field currently lacks a universally accepted quantitative metric, we implemented a program to provide more objective comparison data. This program assesses the visual similarity between the generated and input garments from four aspects: semantic features extracted with the CLIP model, multi-space color histograms (RGB/HSV/LAB), LBP texture features, and Canny edge detection. Because human perception is highly sensitive to the semantic features and color of apparel, we assign a weight of 0.3 to each of these two metrics and a slightly lower weight of 0.2 to each of the edge and texture metrics. The final results, presented in Table 2, show that our method achieves better performance, supporting the quality of our work from both subjective and objective perspectives.
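The weighting scheme can be sketched as a plain weighted sum. The snippet assumes each of the four cues has already been reduced to a single similarity in [0, 1] (for example, the three color spaces merged into one color score); how each cue is computed and normalized is not shown here. The names `WEIGHTS` and `composite_similarity` are hypothetical.

```python
from typing import Dict

# Weights as stated in the text: 0.3 for semantics and color, 0.2 for edges and texture.
WEIGHTS: Dict[str, float] = {"clip": 0.3, "color": 0.3, "edge": 0.2, "texture": 0.2}


def composite_similarity(scores: Dict[str, float]) -> float:
    """Weighted sum of per-cue similarities, each assumed to lie in [0, 1]."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise KeyError(f"missing similarity scores: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"{name} similarity must be in [0, 1]")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Placeholder inputs for illustration only; these are not measured values.
example = {"clip": 0.8, "color": 0.6, "edge": 0.5, "texture": 0.7}
print(round(composite_similarity(example), 3))
```

Since the four weights sum to 1, the composite score stays in [0, 1] and can be compared directly across methods.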

Table 2. The mean and standard deviation, calculated across multiple VTON results. Red font indicates the best result.

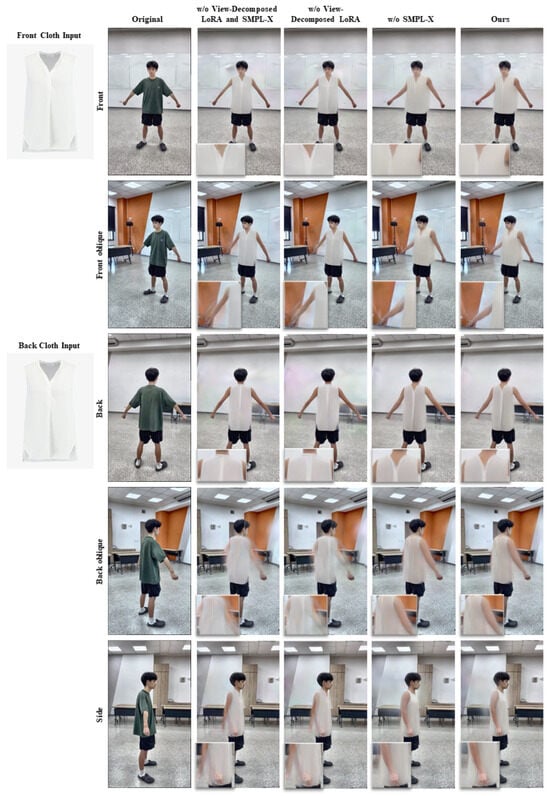

4.3. Ablation Study

To thoroughly validate the effectiveness of our two core designs—the view-decomposed LoRA strategy and the SMPL-X geometric prior—we conducted a comprehensive series of ablation studies. We set up four experimental configurations: “w/o Both,” which removes both designs; “w/o View-Decomposed LoRA,” which only removes the view decomposition strategy; “w/o SMPL-X,” which only removes the SMPL-X prior; and “Ours,” the full model. We present both qualitative visual results and quantitative metrics, evaluating five viewpoints: front, front-oblique, back, back-oblique, and side.

The quantitative results were calculated by using the original 2D person images (without try-on) from the same viewpoints as the ground truth. We chose PSNR, SSIM, and LPIPS as metrics because a successful VTON task should only alter the clothing region while minimizing changes to the background and other body parts. To some extent, these metrics can represent the quality of the VTON results, although the absolute scores are expected to be modest under this evaluation setup, because the garment region differs from the ground truth by design.
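To make the effect of this setup concrete, the following sketch computes PSNR from its standard definition, 10·log10(MAX² / MSE), on flattened images. It is a generic stdlib illustration with a hypothetical helper name `psnr`, not the evaluation script used in this study, and the pixel values are toy numbers.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], rendered: Sequence[float], max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized flattened images."""
    if len(reference) != len(rendered) or len(reference) == 0:
        raise ValueError("images must be non-empty and have the same number of pixels")
    mse = sum((r - t) ** 2 for r, t in zip(reference, rendered)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


# Toy 1-D "images": the try-on edit changes only the two garment pixels.
ground_truth = [50.0, 60.0, 200.0, 210.0, 70.0, 80.0]
try_on = [50.0, 60.0, 40.0, 30.0, 70.0, 80.0]
print(f"{psnr(ground_truth, try_on):.2f} dB")
```

Even when every non-garment pixel is preserved exactly, the intended change inside the garment region raises the mean squared error, so the score mainly reflects how little the rest of the image was disturbed.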

The results are presented in Figure 5 and Table 3. From the quantitative comparison, we found that while the view-decomposed LoRA strategy slightly lowers metric performance on the front and front-oblique views, it brings significant improvements to the back and back-oblique views. This aligns with our design motivation: by training fine-tuned LoRA modules for different views, we enhance the robustness of the global results. Notably, although the side view with the view-decomposed LoRA strategy shows poorer quantitative performance, a qualitative inspection of Figure 5 clearly reveals that the two experimental groups without this strategy suffer from a loss of proper arm geometry. This visually confirms that the view-decomposed strategy indeed yields more stable result quality.

Figure 5. Qualitative comparison results of the ablation study.

Table 3. Quantitative comparison results of the ablation study. Red font indicates the best result, while an underline marks the second-best.

In summary, the ablation study shows that our proposed strategies outperform the baseline without either component (“w/o Both”) in most cases. The qualitative comparison also shows higher global stability, which supports the robustness of the final results.

4.4. Discussion

In our experiments, the introduction of the SMPL-X geometric prior did not lead to a marked improvement, only contributing to more plausible human poses and more robust arm structures in certain views. We believe the effect was not more pronounced because our human dataset does not feature extreme poses, and the subject’s outstretched arms reduce occlusion issues. Therefore, we expect that this human prior model would provide more significant stability and optimization benefits in more challenging pose scenarios. To validate the effectiveness of the SMPL-X method, we tested it on a dataset of self-occluded poses, as presented in Appendix A.

Regarding the decline in quantitative metrics for certain views after introducing the view-decomposed LoRA strategy, we hypothesize that a single LoRA module, in its effort to learn a 360-degree appearance, is exposed to images from all angles, allowing it to develop a more coherent and unified understanding of features like the arms. In contrast, while our view-decomposed LoRA approach reduces the occurrence of feature blurring and artifacts, each module specializes in a distinct perspective. Consequently, during the iterative editing of the 3D Gaussian scene, the edits driven by one expert LoRA can influence the results produced by the others. This can cause the outcome of a frontal edit to be slightly altered during a subsequent back-view edit, which in turn leads to a decrease in PSNR values for certain views.

As our research, which integrates neural rendering with human priors, achieves significantly greater efficiency than previous physics-based simulation studies, our future work will explore the potential of this lightweight framework for biomedical applications. We aim to provide new pathways for improving solutions in resource-constrained telemedicine environments [37].

5. Conclusions

We have presented “Splatting the Cat,” an efficient 3D virtual try-on framework designed to address the barriers to practical deployment, substantial memory requirements, and cross-view inconsistencies prevalent in existing methods. Our approach supports full 360-degree viewing angles, significantly enhancing its practical application value and feasibility for deployment. By decoupling the complex 3D VTON task into a four-stage cascade, introducing a view-decomposed LoRA strategy, and incorporating the SMPL-X model as a human geometric prior, we have achieved cross-view-consistent VTON results while markedly reducing memory demands. Our experiments demonstrate that, compared to text-prompt-based 3D scene editing methods, our approach more accurately restores garment geometry and texture, successfully handling complex tasks such as fitting different front and back garments and rendering semi-transparent materials. Furthermore, in comparison to other image-guided 3D VTON research, our method’s most distinct advantages are the superior cross-view robustness of the human geometry and the unique capability to render 360-degree results using different garments for the front and back. While we have identified a minor issue of slight pose variations in areas like the arms, we plan to address this in future work by incorporating overlapping transitional images into the different expert LoRA modules or by improving the precision of the garment mask to achieve more robust and consistent VTON outcomes. Overall, our research provides an efficient, high-quality solution for the 3D VTON field and represents an important milestone in the pursuit of lightweight 3D VTON research.

Author Contributions

Conceptualization, C.-W.W., H.-K.H., T.-Y.L. and C.-H.C.; methodology, C.-W.W., H.-K.H., T.-Y.L., H.-W.H. and C.-H.C.; software, C.-W.W., H.-K.H. and T.-Y.L.; validation, C.-W.W., H.-K.H. and T.-Y.L.; formal analysis, C.-W.W., H.-K.H. and T.-Y.L.; investigation, C.-W.W., H.-K.H. and T.-Y.L.; resources, H.-K.H. and C.-H.C.; data curation, C.-W.W., H.-K.H. and T.-Y.L.; writing—original draft, C.-W.W.; writing—review and editing, C.-W.W.; visualization, C.-W.W.; supervision, H.-W.H. and C.-H.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study, as the research exclusively used personally captured images of the author, with the author being the sole subject of the dataset. Therefore, no external human subjects were involved.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The dataset generated and analyzed during the current study is not publicly available due to its nature of containing sensitive and personally identifiable information (the author’s own portraits). Sharing of this data is restricted to protect personal privacy.

Conflicts of Interest

The authors declare no conflicts of interest.

Nomenclature

The following explains the context in which each term is used in this study.

| Term | Usage in this study |
| --- | --- |
| Reconstruction | Specifically refers to the process of establishing the initial 3DGS scene from multi-view images. |
| Fine-tuning | Specifically refers to the method of adapting a large pre-trained model using Low-Rank Adaptation (LoRA) modules. |
| Editing | Specifically refers to the process of updating the 3DGS scene through iterative optimization to accomplish the virtual try-on task. |
| Rendering | Specifically refers to the action of generating a 2D image from the 3DGS scene. |
| Optimization | Used as a general term to describe the process of minimizing a loss function. |

Appendix A

Figure A1. Self-occlusion human dataset test.

References

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2014), Montréal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Xie, Z.; Huang, Z.; Dong, X.; Zhao, F.; Dong, H.; Zhang, X.; Zhu, F.; Liang, X. GP-VTON: Towards General Purpose Virtual Try-On via Collaborative Local-Flow Global-Parsing Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2023), Vancouver, BC, Canada, 17–24 June 2023; pp. 23550–23559.

- Choi, Y.; Kwak, S.; Lee, K.; Choi, H.; Shin, J. Improving Diffusion Models for Authentic Virtual Try-On in the Wild. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 206–235.

- Zheng, C.; Dong, X.; Li, H.; Zhang, S.; Zhang, W.; Zhang, X.; Zhao, H.; Jiang, D.; Liang, X. CatVTON: Concatenation Is All You Need for Virtual Try-On with Diffusion Models. In Proceedings of the 13th International Conference on Learning Representations (ICLR 2025), Singapore, 24–28 April 2025.

- Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 2023, 42, 139.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

- Cao, Y.; Hadi, M.; Pan, L.; Liu, Z. GS-VTON: Controllable 3D Virtual Try-On with Gaussian Splatting. arXiv 2024, arXiv:2410.05259.

- Chen, H.; Huang, Y.; Huang, H.; Ge, X.; Shao, D. GaussianVTON: 3D Human Virtual Try-ON via Multi-Stage Gaussian Splatting Editing with Image Prompting. arXiv 2024, arXiv:2405.07472.

- Loper, M.; Mahmood, N.; Romero, J.; Pons-Moll, G.; Black, M.J. SMPL: A Skinned Multi-Person Linear Model. ACM Trans. Graph. 2015, 34, 248.

- Pavlakos, G.; Choutas, V.; Ghorbani, N.; Bolkart, T.; Osman, A.A.; Tzionas, D. Expressive Body Capture: 3D Hands, Face, and Body from a Single Image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 10967–10977.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

- Han, X.; Wu, Z.; Wu, Z.; Yu, R.; Davis, L.S. VITON: An Image-Based Virtual Try-On Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7543–7552.

- Choi, S.; Park, S.; Lee, M.; Choo, J. VITON-HD: High-Resolution Virtual Try-On via Misalignment-Aware Normalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 14126–14135.

- Han, X.; Huang, W.; Hu, X.; Scott, M. ClothFlow: A Flow-Based Model for Clothed Person Generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10470–10479.

- Ge, Y.; Song, Y.; Zhang, R.; Ge, C.; Liu, W.; Luo, P. Parser-free virtual try-on via distilling appearance flows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 8481–8489.

- Wang, B.; Zheng, H.; Liang, X.; Chen, Y.; Lin, L.; Yang, M. Toward Characteristic-Preserving Image-Based Virtual Try-On Network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 589–604.

- Men, Y.; Mao, Y.; Jiang, Y.; Ma, W.-Y.; Lian, Z. Controllable Person Image Synthesis with Attribute-Decomposed GAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 5084–5093.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Morelli, D.; Baldrati, A.; Cartella, G.; Cornia, M.; Bertini, M.; Cucchiara, R. LaDI-VTON: Latent Diffusion Textual-Inversion Enhanced Virtual Try-On. In Proceedings of the 31st ACM International Conference on Multimedia (ACM MM 2023), Ottawa, ON, Canada, 29 October–3 November 2023; pp. 8580–8589.

- Zhu, L.; Yang, D.; Zhu, T.; Reda, F.; Chan, W.; Saharia, C.; Norouzi, M.; Kemelmacher-Shlizerman, I. TryOnDiffusion: A Tale of Two UNets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 4606–4615.

- Kim, J.; Gu, G.; Park, M.; Park, S.; Choo, J. StableVITON: Learning Semantic Correspondence with Latent Diffusion Model for Virtual Try-On. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 8176–8185.

- Xu, Y.; Gu, T.; Chen, W.; Chen, A. OOTDiffusion: Outfitting fusion based latent diffusion for controllable virtual try-on. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–2 March 2025; pp. 8996–9004.

- Hu, L. Animate anyone: Consistent and controllable image-to-video synthesis for character animation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 8153–8163.

- Guan, P.; Reiss, L.; Hirshberg, D.A.; Weiss, A.; Black, M.J. DRAPE: DRessing Any PErson. ACM Trans. Graph. (ToG) 2012, 31, 1–10.

- Hahn, F.; Thomaszewski, B.; Coros, S.; Sumner, R.W.; Cole, F.; Meyer, M.; DeRose, T.; Gross, M. Subspace Clothing Simulation Using Adaptive Bases. ACM Trans. Graph. (TOG) 2014, 33, 1–9.

- Lähner, Z.; Cremers, D.; Tung, T. DeepWrinkles: Accurate and Realistic Clothing Modeling. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 667–684.

- Bhatnagar, B.L.; Tiwari, G.; Theobalt, C.; Pons-Moll, G. Multi-Garment Net: Learning to dress 3D people from images. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5420–5430.

- Mir, A.; Alldieck, T.; Pons-Moll, G. Learning to transfer texture from clothing images to 3D humans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 7023–7034.

- Haque, A.; Tancik, M.; Efros, A.A.; Holynski, A.; Kanazawa, A. Instruct-NeRF2NeRF: Editing 3D Scenes with Instructions. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV 2023), Paris, France, 1–6 October 2023; pp. 19740–19750.

- He, Z.; Ning, Y.; Qin, Y.; Wang, W.; Yang, S.; Lin, L.; Li, G. VTON 360: High-Fidelity Virtual Try-On from Any Viewing Direction. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 26388–26398.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis with Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Chen, Y.; Chen, Z.; Zhang, C.; Wang, F.; Yang, X.; Wang, Y.; Cai, Z.; Yang, L.; Liu, H.; Lin, G. GaussianEditor: Swift and Controllable 3D Editing with Gaussian Splatting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 21476–21485.

- Lee, D.I.; Park, H.; Seo, J.; Park, E.; Park, H.; Baek, H.D.; Shin, S.; Kim, S.; Kim, S. EditSplat: Multi-View Fusion and Attention-Guided Optimization for View-Consistent 3D Scene Editing with 3D Gaussian Splatting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025; pp. 11135–11145.

- Brooks, T.; Holynski, A.; Efros, A.A. InstructPix2Pix: Learning to Follow Image Editing Instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2023), Vancouver, BC, Canada, 17–24 June 2023; pp. 18392–18402.

- Laganà, F.; Pellicanò, D.; Arruzzo, M.; Pratticò, D.; Pullano, S.A.; Fiorillo, A.S. FEM-Based Modelling and AI-Enhanced Monitoring System for Upper Limb Rehabilitation. Electronics 2025, 14, 2268.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).