1. Introduction

Rapid progress in large language models (LLMs) has accelerated the adoption of chatbots in various domains, particularly in the entertainment industry, where immersive and interactive experiences are highly valued [1]. Recent studies increasingly focus on developing character-based chatbots that emulate the personas of fictional characters from novels, films, and animations [2,3], with Character.AI emerging as a notable commercial example [4].

Such systems must not only recall the factual details of a fictional narrative but also maintain the distinctive linguistic style, emotional tone, and behavioral patterns of a specific character [5,6,7]. Achieving this dual objective is essential for sustaining user immersion and delivering an authentic narrative experience.

However, maintaining both factual grounding and persona coherence in literary-based chatbots remains a significant challenge. While Retrieval-Augmented Generation (RAG) approaches have proven effective in improving factual accuracy by referencing narrative events [8], the consistent preservation of character personas across multi-turn dialogues remains unresolved [9,10]. As a result, persona consistency is frequently underdeveloped, leading to responses that may be factually correct but stylistically generic. Without integrating factual knowledge and persona fidelity, chatbots risk being perceived as mere information retrievers rather than as living, breathing characters.

The difficulty is compounded by the nature of literary texts. Unlike conversational datasets, novels rarely provide extensive character-specific dialogue, resulting in data sparsity that limits the effectiveness of fine-tuning approaches [2]. Narrative structures also introduce complexity in entity resolution, with characters referred to through multiple aliases, pronouns, or descriptive expressions [11]. Furthermore, most existing systems require manual curation, character-specific tuning, or external knowledge integration, hindering scalability and automation [12,13].

To address these gaps, we propose Fic2Bot (Fiction-to-Bot), an end-to-end framework that automatically extracts and operationalizes a character’s persona directly from the raw novel text. Our approach integrates three key dimensions of persona representation:

(1) Worldview Knowledge—The raw novel text is segmented into scene-level units and structured with metadata, enabling the selective retrieval of key passages according to query and context. This design enhances narrative coherence while improving factual accuracy.

(2) Speech Style—Character utterances are analyzed through TF-IDF-based lexical profiling to extract characteristic vocabulary, while syntactic patterns such as sentence length and the frequency of interrogatives, negations, and exclamations are also examined.

(3) Emotional Tone—Pre-trained sentiment analysis models are applied to characterize the emotional tendencies of character utterances, which are quantified into a five-level distribution across positive and negative polarities.

The main contributions of this work are as follows:

End-to-end automated framework: We propose Fic2Bot, the first framework that automatically constructs in-character chatbots directly from raw novel text, eliminating the need for manual curation or fine-tuning.

Scene-structured RAG retrieval: We introduce a retrieval strategy that segments narratives into scene-level units with metadata, achieving higher recall and ranking precision compared to semantic chunking.

Comprehensive persona profiling: We develop a multidimensional analysis of lexical, syntactic, and sentiment features, enabling consistent stylistic and emotional expression across dialogues.

Extensive evaluation across genres: We validate the framework on three novels spanning children’s literature, romance comedy, and thriller, demonstrating robust performance in entity identification (F1 > 0.94), retrieval (Recall@3 improvement of up to 46.7 percentage points), and speaker attribution (up to 60.7 percentage points over BookNLP).

This paper introduces the relevant previous research (Section 2), specific methods used in each component of the framework (Section 3), key experiments (Section 4), discussion of the results (Section 5), limitations of our work (Section 6), and conclusions drawn from this research (Section 7) in order.

2. Related Work

2.1. Persona Chatbot

The persona is a key element for delivering consistent interactions by continuously reflecting the unique personality and linguistic characteristics of a specific character and is essential for providing an immersive experience. In particular, character chatbots based on entertainment content aim to go beyond simple question-and-answer exchanges to offer users the experience of interacting with the original character, and active research is being conducted in this area [14,15].

Accordingly, recent efforts toward maintaining persona consistency have primarily focused on fine-tuning pre-trained language models on character-specific dialogue data to internalize the character’s stylistic and personality traits within the model [12,14,16]. However, such approaches face limitations in reconstructing existing characters, as they require separate fine-tuning or incorporation of external knowledge for each character, along with access to carefully curated datasets [17].

Consequently, this study proposes Fic2Bot, a framework that automatically extracts characters’ speaking styles, personalities, and world knowledge solely from original fictional texts and integrates them into an LLM-based chatbot.

2.2. Coreference Resolution

Narrative texts such as novels often intermingle character names, aliases, and pronouns, making it difficult to clearly identify the speaker of each utterance [18]. Therefore, coreference resolution (the task of determining whether different expressions refer to the same entity) is an essential preprocessing step.

Representative models in coreference resolution research include AllenNLP [19], and more recently, BERT-based pre-trained models such as SpanBERT, which learn span boundary information and have achieved high performance in this task [20,21]. However, existing studies have mainly focused on structured texts such as news articles and Wikipedia, leading to performance degradation when applied to literary texts.

To address this, BookNLP [22], which is tailored for literary text analysis, integrates speaker identification, entity extraction, and coreference resolution, and is capable of handling both singular and plural references. Nevertheless, as Bamman et al. [23] pointed out, literary texts clearly distinguish between the narrative domains of narrators and characters, with frequent shifts between general and specific references. Moreover, in long-form novels, characters’ personalities and relationships may evolve throughout the story, and because BookNLP relies on surface-level linguistic cues such as pronoun-based references and name–pronoun associations, it faces limitations in maintaining consistent coreference matching for the same character across the entire narrative.

To overcome these limitations, recent studies have proposed prompt-based coreference resolution approaches leveraging large language models (LLMs). In particular, a method called Major Entity Identification (MEI) has been introduced to selectively identify coreferences referring only to key entities [24]. This approach employs a two-stage prompting strategy, in which key terms are first extracted and then expanded into full phrases. This helps reduce unnecessary coreference links in literary texts with many characters and complex contexts, enabling more accurate resolution focused on key entities.

Building upon these prompt-based approaches, in this paper, we apply a modified MEI prompt structure, tailored to the goal of improving coreference resolution performance in literary texts.

2.3. Retrieval-Augmented Generation (RAG)

RAG is a representative approach designed to overcome the knowledge limitations of large language models (LLMs). It follows a two-stage architecture in which external knowledge is first retrieved and then used to generate a response to a given query [25]. This method is particularly useful in scenarios that demand accurate, fact-based responses, and has been highlighted as an effective means to mitigate the hallucination problem commonly observed in generative models [26].

Naive RAG models simply convert a user query into a vector and retrieve semantically similar documents from the entire corpus before generating a response. However, this approach often suffers from a lack of semantic cohesion between the query and the retrieved context, or the injection of irrelevant information, ultimately degrading the quality of the response. These limitations become more pronounced when dealing with narrative texts such as long-form novels, where the relationships between characters and the progression of events are highly complex [27].

To address these challenges, recent research has proposed Advanced RAG techniques, which refine both the retrieval and generation phases to enhance accuracy and coherence. These include methods such as query optimization through expansion or rewriting, metadata-based targeted retrieval, and scene-level document segmentation. Compared to simple vector similarity-based retrieval, these strategies offer greater contextual relevance and improved response quality [28].

Such Advanced RAG strategies are particularly effective in the context of literature-based character chatbot systems. Scene-level retrieval allows for a more precise reflection of intricately intertwined elements such as characters, events, and settings, while metadata filtering enables targeted retrieval of scenes focused on specific characters. In this study, we move beyond naive retrieval approaches and apply an Advanced RAG framework optimized for the Fic2Bot system, leveraging scene-based structuring and character-centric tagging.

2.4. A Chatbot System Based on Retrieval-Augmented Generation (RAG)

RAG-based chatbot systems have recently been actively investigated for their potential applications across various domains, including healthcare, customer support, education, and entertainment [29,30,31,32,33]. The core principle of RAG lies in retrieving external knowledge sources and injecting them into the context of the generative model, allowing systems to incorporate up-to-date and rich information without relying solely on internal parameters. This approach has shown strong effectiveness in fact-intensive tasks such as open-domain question answering. However, in persona-oriented chatbots, which require consistent reproduction of a character’s speaking style, personality, and narrative worldview, this simple retrieval–injection mechanism remains insufficient [14].

To address these shortcomings, ChatHaruhi [17] combines script-based memory retrieval, persona-aware prompt design, and sentence embedding search to reproduce a character’s tone and behavior. While this approach demonstrates success in maintaining character consistency, it remains restricted to predefined characters. Incorporating new ones requires extensive manual work, including script collection, data curation, and prompt engineering, which severely limits both scalability and automation.

In contrast, the proposed Fic2Bot framework requires only the raw novel text provided by the user. Through coreference resolution, scene-level RAG, and stylistic–sentiment profiling, it automatically extracts each character’s speech style, personality, and world knowledge and integrates them into response generation. As a result, no manual dataset construction or character-specific tuning is needed. More importantly, Fic2Bot is not bound to a fixed set of characters or a specific domain: it can generalize to entirely new fictional characters across different novels and genres, thereby overcoming the generalization limitations of prior approaches. Thus, Fic2Bot achieves scalability, generalization, and minimal human intervention simultaneously, complementing the limitations of the ChatHaruhi approach.

3. Methods

This section details the proposed Fic2Bot framework, an automated pipeline that transforms raw novel text into an in-character chatbot. Given long-form narrative text as the input, the system generates dialogue that reflects each character’s personality, tone, and style. To ensure both factual accuracy and persona consistency, the framework combines scene-level RAG with persona profiling.

We first outline the overall architecture (Section 3.1) and then describe each component in execution order:

Major Entity Identification (MEI) (Section 3.2) resolves ambiguity in character references across names, nicknames, and pronouns by consolidating them into unified entity representations, improving the accuracy and efficiency of subsequent modules.

Scene-level RAG (Section 3.3) restructures the novel into discrete scene units and retrieves only those relevant to a query, reducing irrelevant context and enhancing response accuracy and contextual appropriateness.

Character Style Extraction (Section 3.4) analyzes character utterances to build quantitative profiles of stylistic features (tone, speech patterns, and emotional tendencies), which model each character’s linguistic and affective traits.

Persona-based Response Generation (Section 3.5) integrates retrieved scene context with style profiles to produce responses that are both factually grounded and consistent with how the character would plausibly speak within the narrative world.

For clarity, the module order in this section matches the evaluation order in Section 4.

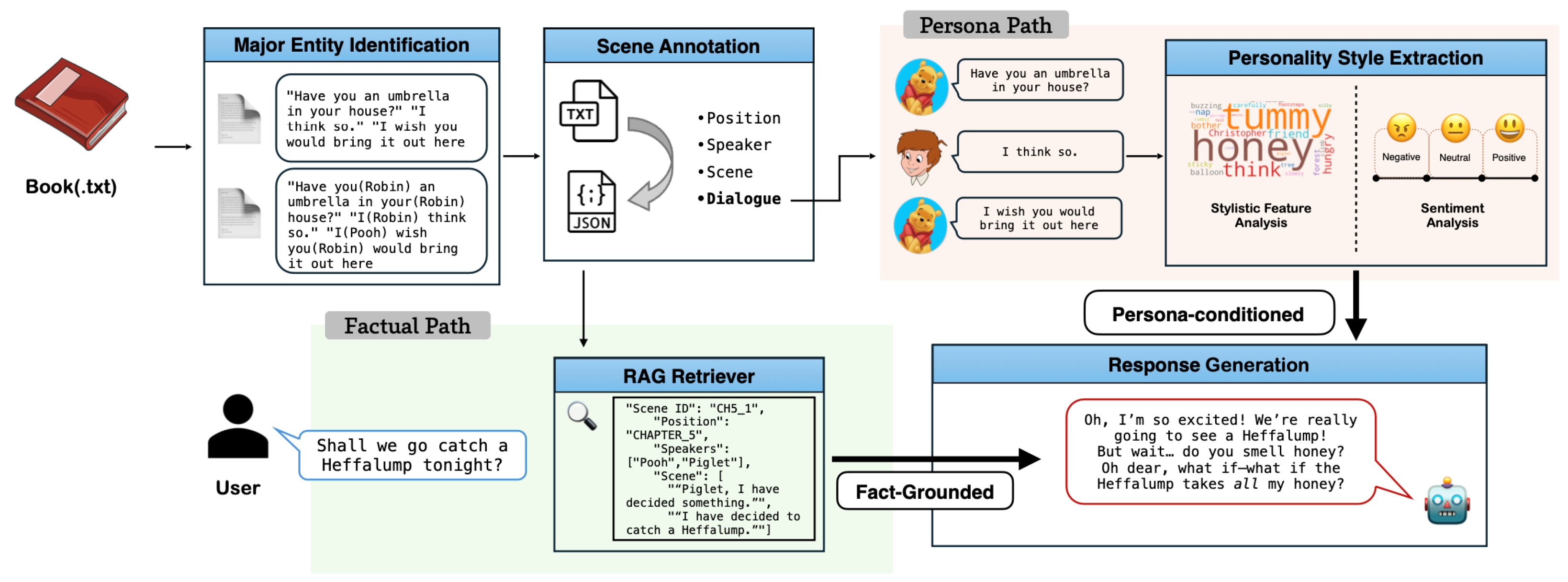

3.1. Overall Pipeline Architecture

Figure 1 illustrates the end-to-end Fic2Bot framework. The input is a raw novel text file (e.g., .txt), which undergoes preprocessing to resolve coreference and segment the story into discrete scenes. The preprocessed data then flows into two parallel, complementary paths:

Factual Path: Constructs a scene-level retrieval database for accurate, fact-based responses. This path supports the chatbot in recalling specific events, settings, and in-world facts from the novel.

Persona Path: Builds a character-specific style and personality profile by aggregating and analyzing all utterances attributed to each character.

The two paths converge in the final chatbot generation module, which integrates retrieved factual content with persona-specific stylistic and emotional features to produce character-consistent, contextually grounded responses.

3.2. Preprocessing: Major Entity Identification and Scene Structuring

The preprocessing stage serves two purposes: (1) link all mentions (names, aliases, pronouns) to their corresponding major characters, and (2) restructure the novel into scene-level JSON objects for downstream retrieval.

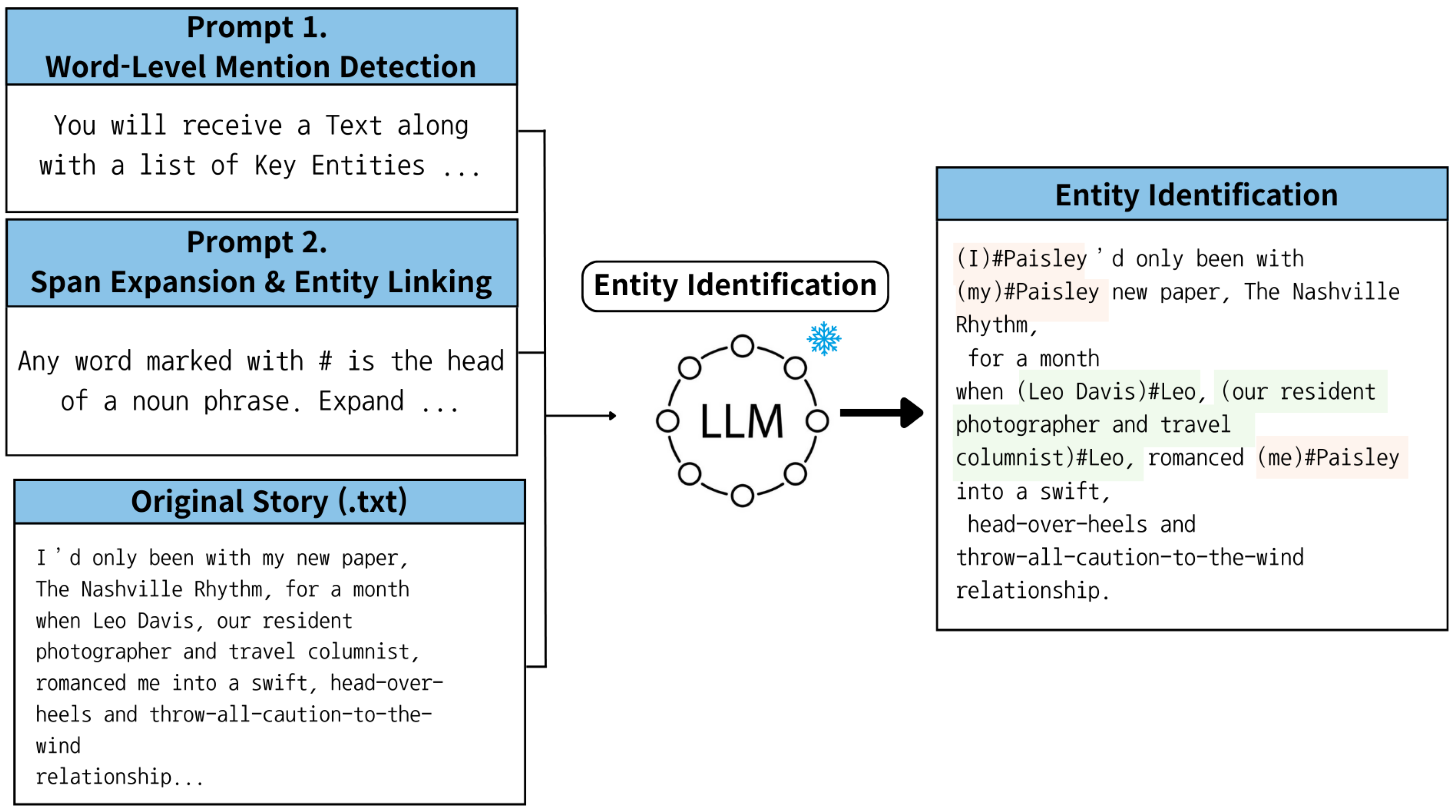

The Major Entity Identification (MEI) task employs a two-stage prompting strategy [24]. The process begins with the selection of major entities and then proceeds through two stages, as illustrated in Figure 2.

Major Entity Selection: Characters that appear five or more times in the novel are designated as primary entities; their names are then supplied to the prompt.

Stage 1—Word-Level Mention Detection: Identify the head word of each mention for all major entities (characters appearing ≥ 5 times in the text).

Stage 2—Span Expansion and Entity Linking: Expand each mention to its full phrase and link all variants to the corresponding entity.
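The major-entity selection rule above (five or more appearances) can be illustrated with a minimal sketch. The function name `select_major_entities`, the candidate name list, and the use of exact whole-word matching are assumptions made for illustration; the source does not specify how candidate names are obtained or how appearances are counted.

```python
import re
from collections import Counter


def select_major_entities(text, candidate_names, min_mentions=5):
    """Keep candidate names that occur at least `min_mentions` times.

    Assumption: an "appearance" is an exact whole-word match of the name.
    Aliases and pronouns are not counted here; the two prompting stages
    are what link those to the selected entities.
    """
    counts = Counter()
    for name in candidate_names:
        pattern = r"\b" + re.escape(name) + r"\b"
        counts[name] = len(re.findall(pattern, text))
    return [name for name in candidate_names if counts[name] >= min_mentions]
```

The returned names would then be supplied to the Stage 1 prompt as the predefined major entities.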

MEI was proposed to address a limitation of conventional coreference resolution models, which perform well in mention clustering but exhibit lower accuracy in mention detection. This approach provides major entities as prior inputs along with the text to guide the reference resolution process. Its generalization ability has been validated across diverse domain datasets, and it has demonstrated robustness in long-form narratives such as novels, where pronouns and aliases frequently occur.

In this study, we adapted the prompts proposed in prior work [24] to fit our research objectives. Specifically, we restricted the recognized entities to character entities within the novel, thereby eliminating irrelevant mentions such as objects or locations, and explicitly incorporated the recognition of plural pronouns. The complete Stage 1 prompt incorporating MEI is presented in Appendix A.1, whereas Appendix A.2 details the subsequent mention-span expansion and the final matching procedure with the primary entities.

Once entity linking is completed, the text is segmented into scenes through LLM-based prompting. The prompt instructs the segmentation of scenes based on changes in time, location, or main characters as defined in SceneML [34]. Each scene is stored as a JSON object containing key metadata, such as a list of speakers, dialogue with matched speaker–utterance pairs, scene ID, and scene position (Chapter). This provides the foundation for the retrieval process in Section 3.3 and the persona analysis in Section 3.4. The complete prompt specification used in this step is presented in Appendix A.3.
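As an illustration of the scene-level JSON object described above, the following sketch shows one plausible layout. All field names (`scene_id`, `chapter`, `speakers`, `dialogue`, `text`) are hypothetical; the actual schema is the one defined by the prompt in Appendix A.3.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Utterance:
    speaker: str  # resolved major entity, not the surface mention
    text: str


@dataclass
class Scene:
    scene_id: str
    chapter: int                                   # scene position
    speakers: list = field(default_factory=list)   # characters who speak
    dialogue: list = field(default_factory=list)   # list of Utterance
    text: str = ""                                 # scene text for retrieval

    def to_json(self):
        # asdict() converts nested dataclasses inside lists as well.
        return json.dumps(asdict(self), ensure_ascii=False)
```

Storing speaker–utterance pairs inside each scene lets the same records serve both paths: the scene text is embedded for retrieval, and the dialogue list is aggregated per character for style profiling.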

3.3. Scene-Level Retrieval-Augmented Generation (RAG)

In the factual path of our framework, we adopt a scene-level RAG approach. Compared to naive chunk-based retrieval, scene segmentation aligns retrieval units with narrative boundaries, thereby preserving contextual coherence [35]. The overall process is schematically illustrated in Figure 3.

The input query is searched against the scene-level structured data constructed during preprocessing. The query is rewritten by the LLM to improve semantic matching. The prompt used for this process is provided in Appendix B. It is designed to eliminate ambiguity within the question while preserving the user’s original intent, enforce a consistent interrogative format, and prevent the model from inferring unsupported context. Moreover, it encourages concise and keyword-rich phrasing to enhance compatibility with retrieval systems. The retrieved scenes are subsequently reranked to improve precision and then passed to the response generation module, thereby ensuring factual accuracy in the final outputs.
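The retrieval step can be sketched as a top-k similarity search over scene embeddings. This is a simplified stand-in, not the pipeline itself: it uses plain-Python cosine similarity instead of a vector store, omits query rewriting and reranking, and assumes each scene is a dict with hypothetical keys `scene_id`, `speakers`, and `embedding`. The optional `character` filter is likewise an assumption about how speaker metadata might be used.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve_scenes(query_vec, scenes, k=3, character=None):
    """Return the ids of the k scenes most similar to the query vector.

    If `character` is given, only scenes listing that speaker are searched
    (a hypothetical metadata filter).
    """
    pool = [s for s in scenes if character is None or character in s["speakers"]]
    ranked = sorted(pool, key=lambda s: cosine(query_vec, s["embedding"]), reverse=True)
    return [s["scene_id"] for s in ranked[:k]]
```

In a fuller setup, the candidates returned here would be passed to a reranker before the top scenes are handed to response generation.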

3.4. Character Style Extraction

The persona path aggregates all utterances of each character from the preprocessed scene data to ensure a consistent persona representation, and then performs two main analyses:

Stylistic Feature Analysis: Extracts signature vocabulary (via TF–IDF) and syntactic patterns (sentence length and the frequency of questions, exclamations, and negations). These features reflect how the character speaks.

Sentiment Analysis: Applies a pre-trained sentiment analysis model from Hugging Face to score each of a character’s utterances on a scale from 1 (very negative) to 5 (very positive). This allows a quantitative measurement of the overall emotional state and tendencies of each character’s speech.

Combining lexical, syntactic, and sentiment features produces a multi-dimensional style profile that captures both linguistic habits and emotional tendencies. This profile directly informs the persona conditioning in the chatbot generation stage.
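The lexical and syntactic parts of such a profile can be sketched as follows. This is an illustrative approximation under stated assumptions: each character's utterances are treated as one document, a smoothed TF–IDF variant is used, and negation is detected with a small hand-picked word list. The source does not specify the exact TF–IDF weighting or the negation lexicon, and the function names are hypothetical.

```python
import math
import re
from collections import Counter

# Assumption: a tiny negation lexicon, for illustration only.
NEGATION = re.compile(r"\b(?:no|not|never|nothing|nobody)\b|n['’]t", re.IGNORECASE)


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def tfidf_top_terms(utterances_by_char, top_n=5):
    """Top TF-IDF terms per character; one 'document' per character."""
    docs = {c: Counter(tokenize(" ".join(u))) for c, u in utterances_by_char.items()}
    n_docs = len(docs)
    doc_freq = Counter()
    for counts in docs.values():
        doc_freq.update(counts.keys())
    top = {}
    for char, counts in docs.items():
        total = sum(counts.values()) or 1
        scores = {
            term: (n / total) * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1)
            for term, n in counts.items()
        }
        # Sort by descending score, then alphabetically for stable output.
        top[char] = sorted(scores, key=lambda t: (-scores[t], t))[:top_n]
    return top


def syntactic_features(utterances):
    """Per-utterance averages and proportions for one character."""
    n = len(utterances) or 1
    return {
        "avg_words": sum(len(u.split()) for u in utterances) / n,
        "question_ratio": sum("?" in u for u in utterances) / n,
        "exclamation_ratio": sum("!" in u for u in utterances) / n,
        "negation_ratio": sum(bool(NEGATION.search(u)) for u in utterances) / n,
    }
```

Terms shared by every character receive the lowest inverse-document-frequency weight, so the top terms tend to be the vocabulary that distinguishes one speaker from the others.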

3.5. Persona-Based Chatbot Generation

In the final stage, the system integrates four components:

Persona Instruction Prompt: Instructs the model to generate responses in the character’s style while referencing retrieved scenes. Additional rules enforce concise outputs and require that a reply is always generated, preventing empty answers.

Relevant Context from RAG: Supplies factually relevant scenes to ground the response.

Persona Analysis Data: Provides the extracted stylistic and sentiment features.

User Query: The incoming question or statement from the end user.

All components are combined into a single structured prompt for the LLM, which generates a response that is both factually accurate and stylistically faithful to the target character.

Figure 4 illustrates the integration of the four components into a single prompt.

Merging the outputs of the factual and persona paths ensures that generated responses are neither generic fact lists nor style-only imitations, but coherent, immersive replies that maintain the integrity of the fictional world.
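The combination of the four components into one prompt can be sketched as simple string assembly. The section titles, the instruction wording, and the function name `build_persona_prompt` are hypothetical; the actual prompt layout is the one shown in Figure 4.

```python
def build_persona_prompt(character, scenes, profile, user_query):
    """Combine the four components into one structured prompt string."""
    context = "\n\n".join(
        f"[Scene {s['scene_id']}] {s['text']}" for s in scenes
    )
    style = "\n".join(f"- {key}: {value}" for key, value in profile.items())
    sections = [
        ("Persona instruction",
         f"You are {character}. Answer in this character's style, "
         "ground the answer in the scenes below, keep it concise, "
         "and always give a reply."),
        ("Relevant context", context or "(no scene retrieved)"),
        ("Persona analysis", style or "(no profile available)"),
        ("User query", user_query),
    ]
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections)
```

Keeping the sections in a fixed order makes it straightforward to drop one of them (for example, the persona analysis) when comparing configurations.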

4. Experiments

This section presents the procedures and results of key experiments conducted to verify whether the proposed Fic2Bot framework can effectively ensure persona consistency and factual accuracy in novel-based chatbots. The primary objective of these experiments is to evaluate the extent to which each module of the framework contributes to the chatbot’s overall performance.

To this end, Section 4.1 introduces the datasets used in our experiments. Section 4.2 details the implementation of the proposed pipeline. Section 4.3 quantitatively evaluates each component of the framework: Section 4.3.1 assesses Entity Identification in the preprocessing stage; Section 4.3.2 evaluates the RAG component, which provides factual context from the novel; Section 4.3.3 examines speaker–utterance matching, which supports character style extraction; and Section 4.3.4 analyzes character speech patterns and emotional expressions based on the extracted corpus. Section 4.4 evaluates the final chatbot’s persona consistency across multi-turn dialogues, and Section 4.5 presents ablation and efficiency analyses, which investigate both the contribution of each component to the framework and the practical feasibility in terms of inference latency and computational cost.

4.1. Dataset

To verify the generality and scalability of our approach, we selected three novels according to two criteria: (1) Popularity diversity—including both widely known bestsellers and lesser-known titles to test performance when the LLM has varying prior exposure; (2) Genre diversity—covering distinct styles (children’s literature, romance comedy, and thriller) to avoid genre-specific overfitting.

In this study, we used three novels as source texts for persona-chatbot generation. Their titles and genres are listed in Table 1, and the original texts were collected exclusively for research purposes.

4.2. Implementation

All experiments were conducted in Python 3.10, with Gemini-2.5-pro serving as the primary LLM backbone. Texts were segmented at the chapter level, and Major Entity Identification (MEI) was performed using a two-stage prompting strategy.

For retrieval, novels were converted into JSON format with metadata fields. Embeddings were generated with Gemini-embedding-001 [36] and searched using the vector store Faiss [37]. To improve ranking quality, we applied the Advanced RAG framework with a reranker (BAAI/bge-reranker-base [38]) and Gemini-based query rewriting.

Speaker attribution was performed with Gemini-2.5-pro, using BookNLP as a baseline, and the resulting speaker–utterance pairs were used for stylistic profiling. For sentiment analysis, we applied the nlptown/bert-base-multilingual-uncased-sentiment model [39], aggregating polarity scores (1–5) by character.

Finally, Gemini-2.5-pro generated multi-turn dialogue responses conditioned on persona profiles and retrieved scenes, while GPT-4 served as an independent evaluator for persona consistency. All experiments were conducted using the default hyperparameters provided by the Gemini-2.5-pro model (temperature = 1.0, max tokens = 8192, top P = 0.95, etc.) and GPT-4 (temperature = 1.0, max tokens = 1024, top P = 1.0, etc.), without additional tuning.

4.3. System Component Evaluation

4.3.1. Entity Identification

Following Section 3.2, we adopt the Major Entity Identification (MEI) task together with a two-stage prompting strategy and evaluate how accurately the preprocessing module links referring expressions (e.g., pronouns, aliases) to predefined major entities. Major entities are restricted to named characters that appear at least five times in each novel, and all texts are partitioned at the chapter level to ensure consistency across evaluation units.

Two metrics were used for the evaluation:

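NER (Named Entity Recognition): Measures the model’s ability to accurately identify referring expressions (mentions) for each character, based on precision and recall. For each entity $e$ in document $d$, let the gold mentions be $G_e$ and the predicted mentions be $P_e$.

MEI (Major Entity Identification): Measures whether each identified mention is correctly linked to the corresponding character across the text. For each entity $e$, let $G_e$ and $P_e$ denote the gold and predicted mention sets. Precision, recall, and F1 are then computed as

$$\mathrm{Precision}_e = \frac{|G_e \cap P_e|}{|P_e|}, \qquad \mathrm{Recall}_e = \frac{|G_e \cap P_e|}{|G_e|}, \qquad \mathrm{F1}_e = \frac{2\,\mathrm{Precision}_e\,\mathrm{Recall}_e}{\mathrm{Precision}_e + \mathrm{Recall}_e}.$$

We then compute document-level performance with both macro and micro averaging:

$$\mathrm{Macro\text{-}F1} = \frac{1}{|E|}\sum_{e \in E} \mathrm{F1}_e, \qquad \mathrm{Micro\text{-}F1} = \frac{2\,TP}{2\,TP + FP + FN},$$

where $E$ is the set of major entities, $TP = \sum_{e \in E} |G_e \cap P_e|$, $FP = \sum_{e \in E} |P_e \setminus G_e|$, and $FN = \sum_{e \in E} |G_e \setminus P_e|$.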

Macro F1 averages entity-level F1 scores with equal weight, reflecting the model’s consistency across both frequent and infrequent characters under class imbalance. Micro F1 aggregates true positives, false positives, and false negatives across all entities before computing a single F1 score, giving more weight to frequent characters and representing overall performance proportional to their occurrence. By reporting both macro and micro F1, we capture not only the model’s overall accuracy but also its ability to generalize fairly across characters of varying frequency.

In the multi-entity case, where a single mention may correspond to multiple characters, we applied the same set-based precision, recall, and F1 metrics as in the single-entity case. However, rather than framing all mentions as a single classification problem, we evaluated each mention independently by comparing its predicted and gold sets. This formulation directly measures how well the predicted and gold mentions overlap for each case.
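The set-based metrics and the two averaging schemes described above can be sketched as follows. The representation of a mention as a hashable id (for example, a token offset) and the function names are assumptions for illustration.

```python
def prf(gold, pred):
    """Set-based precision, recall, and F1 for one entity (or one mention)."""
    tp = len(gold & pred)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_micro_f1(gold_by_entity, pred_by_entity):
    """Macro F1 (mean of per-entity F1) and micro F1 (pooled counts)."""
    entities = sorted(set(gold_by_entity) | set(pred_by_entity))
    f1_scores = []
    tp = fp = fn = 0
    for entity in entities:
        gold = gold_by_entity.get(entity, set())
        pred = pred_by_entity.get(entity, set())
        f1_scores.append(prf(gold, pred)[2])
        tp += len(gold & pred)
        fp += len(pred - gold)
        fn += len(gold - pred)
    macro = sum(f1_scores) / len(f1_scores) if f1_scores else 0.0
    micro = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return macro, micro
```

With one frequent character resolved perfectly and one rare character missed entirely, macro F1 drops to 0.5 while micro F1 stays high, which is the imbalance effect that motivates reporting both. For the multi-entity case, `prf` can be applied per mention by passing the gold and predicted sets of character names for that mention.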

4.3.2. RAG Retrieval with Metadata Structuring

This experiment evaluates the effectiveness of scene-level metadata structuring for retrieval. The evaluation dataset consisted of fact-based questions automatically generated for each scene, where the answer was explicitly contained in the same scene. From this pool, 30 questions were sampled as the test set.

We report Recall@k and MRR@k, comparing against a baseline built with semantic chunking of the entire text. Recall@k evaluates whether the correct scene is included within the top-k retrieved results for a given query. It is calculated as follows:

$$\mathrm{Recall@}k = \frac{1}{N}\sum_{i=1}^{N} \mathbb{1}\left[\mathrm{rank}_i \le k\right]$$

In contrast, MRR@k measures the average reciprocal rank of the first correct result, defined as

$$\mathrm{MRR@}k = \frac{1}{N}\sum_{i=1}^{N} \frac{\mathbb{1}\left[\mathrm{rank}_i \le k\right]}{\mathrm{rank}_i}$$

Here, $N$ is the number of queries and $\mathrm{rank}_i$ denotes the rank position of the correct scene for the i-th query. While Recall@k reflects the system’s ability to retrieve the correct scene, MRR@k captures how early in the ranking the correct result appears. Using both metrics allows us to evaluate the retrieval system in terms of both accuracy and efficiency.
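Both retrieval metrics can be computed directly from ranked result lists, as in the sketch below. It assumes exactly one correct scene per query, which matches the evaluation setup described above; the function names and argument layout are illustrative.

```python
def recall_at_k(ranked_lists, gold_ids, k):
    """Fraction of queries whose correct scene appears in the top k."""
    hits = sum(gold in ranked[:k] for ranked, gold in zip(ranked_lists, gold_ids))
    return hits / len(gold_ids)


def mrr_at_k(ranked_lists, gold_ids, k):
    """Mean reciprocal rank of the correct scene, counting only the top k."""
    total = 0.0
    for ranked, gold in zip(ranked_lists, gold_ids):
        top = ranked[:k]
        if gold in top:
            total += 1.0 / (top.index(gold) + 1)
    return total / len(gold_ids)
```

A query whose correct scene falls outside the top k contributes zero to both metrics, so MRR@k can never exceed Recall@k.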

4.3.3. Speaker Identification

The goal of this experiment is to assess whether the automatically generated speaker–utterance corpus is accurate enough for persona extraction. Evaluation was conducted against the gold-standard dataset using Accuracy and F1 score. Accuracy was computed over the entire set of gold-standard dialogues, including cases where utterances were not recognized as dialogues. F1 was calculated only on successfully recognized quotations, measuring whether speakers were correctly attributed.

4.3.4. Stylistic Feature Analysis

This section presents the stylistic analysis conducted on the speaker–utterance matching corpus generated in Section 4.3.3. First, the top TF–IDF terms for each character were extracted to identify the lexical cues that most strongly characterize their utterances. Figure 5 visualizes these terms as word clouds, which make the contrasts in vocabulary usage and expression tendencies across characters more salient. Through this visualization, one can intuitively observe differences in preferred word choices and recurrent expressions, providing clear evidence of each character’s unique linguistic profile. Importantly, these lexical distinctions are not limited to descriptive purposes but also serve as input for subsequent analyses, including sentiment evaluation. The outcomes of these analyses are then incorporated into the chatbot response generation process, ensuring that each character’s linguistic tendencies are faithfully reproduced in dialogue and that differences in speech styles are more distinctly reflected in the generated responses.

To assess the separability of character-specific speech patterns, we measured both Jaccard similarity and cosine similarity between the extracted word groups. Lower similarity values indicate more distinct linguistic differences between characters.
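The two similarity measures can be sketched as follows. Jaccard similarity is computed over the sets of top terms; for cosine similarity, the sketch assumes each character is represented by a term-to-weight mapping (for example, TF–IDF weights), since the source does not state which vector representation was used.

```python
import math


def jaccard(terms_a, terms_b):
    """Jaccard similarity between two collections of terms."""
    a, b = set(terms_a), set(terms_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cosine_sim(weights_a, weights_b):
    """Cosine similarity between two sparse term -> weight mappings."""
    dot = sum(w * weights_b.get(term, 0.0) for term, w in weights_a.items())
    norm_a = math.sqrt(sum(w * w for w in weights_a.values()))
    norm_b = math.sqrt(sum(w * w for w in weights_b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0
```

Jaccard only registers whether terms are shared, whereas cosine similarity also reflects how heavily each shared term is weighted, so the two can disagree when characters share a few high-weight words.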

In addition, we analyzed syntactic features for each character’s utterances, including the average number of words per utterance, average number of characters, the proportion of exclamation marks, the proportion of question marks, the proportion of negative expressions, and the proportion of first-person pronouns.

4.3.5. Sentiment Analysis

Building on the extracted utterances illustrated in Figure 5, this experiment evaluates the emotional tendencies of characters by analyzing the sentiment of their utterances. Scores were assigned on a 1–5 scale, where lower values indicate a more negative tone and higher values indicate a more positive tone. The average score and distribution were examined to capture each character’s overall emotional atmosphere and expressive patterns.
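The per-character aggregation of 1–5 scores into an average and a five-level distribution can be sketched as below. The model call itself is omitted. `label_to_score` assumes classifier labels of the form "4 stars", which is an assumption about the model's output format; both function names are hypothetical.

```python
from collections import Counter


def label_to_score(label):
    """Convert a label such as '4 stars' to the integer 4 (assumed format)."""
    return int(label.split()[0])


def sentiment_profile(scores):
    """Mean score and five-level distribution for one character.

    `scores` is a list of integers in the range 1..5, one per utterance.
    """
    n = len(scores)
    counts = Counter(scores)
    distribution = {
        level: (counts.get(level, 0) / n if n else 0.0) for level in range(1, 6)
    }
    mean = sum(scores) / n if n else None
    return {"mean": mean, "distribution": distribution}
```

Reporting the distribution alongside the mean distinguishes a character who is consistently neutral from one who alternates between extremes, even when both have the same average.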

4.4. Persona Consistency Evaluation in Multi-Turn Settings

This experiment aims to evaluate whether the chatbot consistently maintains its persona throughout multi-turn conversations. To this end, we constructed three dialogue scenarios: (1) ordinary conversation, (2) emotional conversation, and (3) fact-based conversation grounded in the fictional world of the novel. For ordinary and emotional dialogues, we utilized categories 1 (ordinary) and 4 (emotion and attitude) of the high-quality multi-turn dataset DailyDialog [40]. For fact-based fictional dialogues, we randomly selected scenes from the novel and assumed that the user and the character chatbot were situated within those scenes.

For each scenario, dialogues were generated with 6, 12, and 20 turns to simulate a variety of multi-turn situations. Each dialogue was constructed by alternating responses between a user-role LLM (User LLM) and the character chatbot. The User LLM generated utterances based on pre-defined scenario-specific prompts as shown in Appendix C Table A5. To ensure naturalness and comparability, the User LLM prompts explicitly restricted utterances to 1–2 sentences, preventing the User LLM from producing overly long messages. In addition, scenario-specific response styles were enforced to guarantee consistency and fairness across experimental conditions.

Finally, the persona consistency of the chatbot’s responses was evaluated using an LLM judging prompt (Appendix C Table A6). As reference, examples were drawn from the original corpus of the novel to represent the authentic speaking style of each character. The evaluation considered four aspects (Linguistic Style, Tone, Vocabulary, and Emotional Expression), each scored on a 1–5 scale with an averaged score. In addition, the judge determined whether the chatbot’s utterances sounded as if they were spoken by the same character from the novel (Yes/No), and provided a confidence score (0–100%) along with a concise rationale.

4.5. Module Effectiveness and Efficiency Analysis

4.5.1. Module Effectiveness and Baseline Comparison

To assess the effectiveness of individual components within Fic2Bot, we analyzed both the overall pipeline performance and the contribution of each module. Instead of running a full ablation for every component, we combined a baseline comparison with results from earlier sections that isolate each module’s effect. As a baseline, we implemented a full-text prompting approach, in which the entire novel text was provided as input and the model was given only a simple persona prompt (e.g., “You are [Character]. Respond accordingly.”). This setting removes modular preprocessing and relies solely on the LLM’s ability to infer character style and context. For response evaluation, we adopted the persona consistency evaluation methodology described in Section 4.4, conducting multi-turn dialogues primarily under the Original scenario. In addition, we introduced the Dialogue Coherence metric to provide a more fine-grained assessment of the chatbot’s conversational ability.

Furthermore, we reorganized the RAG performance results from Section 4.3.2 together with the outcomes of this effectiveness study, allowing a direct comparison of the incremental contributions of each module to factual accuracy and persona consistency. This design provides supporting evidence for the relationship between the modular framework and the improvements in persona-driven dialogue generation performance.

4.5.2. Efficiency Analysis

To evaluate the practical applicability of the framework, we conducted an efficiency analysis focusing on chatbot build time, response latency, and computational cost. Specifically, we measured the time required by the entire chatbot construction pipeline (including the MEI procedure, scene-level metadata structuring, embedding generation for vector-based retrieval, and persona extraction) as well as the end-to-end response time covering query rewriting, retrieval, reranking, and response generation. For comparison, we also measured the end-to-end response time of the baseline described in Section 4.5.1.

Chatbot build time was measured in seconds, and response latency was reported as the median latency (p50) and the tail latency (95th percentile, p95). In addition, we quantified the token usage of LLM calls, reporting the average number of input and output tokens consumed per query, thereby providing an estimate of potential costs. This analysis allows the overall cost of the framework in real-world deployment to be assessed, taking into account both chatbot build time and query processing time.
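The latency and cost summary described above can be illustrated with a short sketch. The nearest-rank percentile definition and the per-1k-token prices are assumptions made for illustration; the percentile convention and pricing used in the experiments are not specified in this section.

```python
import math
from statistics import mean
from typing import Dict, Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """Nearest-rank percentile: smallest sample with at least q% of samples at or below it."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(
    latencies_s: Sequence[float],
    input_tokens: Sequence[int],
    output_tokens: Sequence[int],
    usd_per_1k_in: float,   # hypothetical price, not taken from the paper
    usd_per_1k_out: float,  # hypothetical price, not taken from the paper
) -> Dict[str, float]:
    """Summarize per-query latency (p50, p95), average token usage, and estimated cost."""
    avg_in, avg_out = mean(input_tokens), mean(output_tokens)
    return {
        "p50_s": percentile(latencies_s, 50),
        "p95_s": percentile(latencies_s, 95),
        "avg_input_tokens": avg_in,
        "avg_output_tokens": avg_out,
        "usd_per_query": avg_in / 1000 * usd_per_1k_in + avg_out / 1000 * usd_per_1k_out,
    }
```

Interpolating percentile definitions (as used by some numerical libraries) can give slightly different p95 values on small samples, so the convention should be fixed before comparing systems.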

5. Results and Discussion

This section presents the results of each evaluation task introduced in Section 4, following the same order as in the Methods sections.

5.1. Entity Identification Performance

Table 2 reports NER and MEI F1 scores for singular and plural references across the three novels. All models achieved consistently high performance (F1 > 0.90) in both tasks, demonstrating robust mention detection and accurate entity linking.

Performance on plural references and pronouns is particularly notable. As discussed in Section 2.2, although coreference resolution has been studied extensively, plural pronouns have sometimes been excluded from evaluation [41]. Even in studies addressing both singular and plural entities jointly, such as Zhou et al. [42], the best reported scores for plural linking were macro F1 = 0.205 and micro F1 = 0.417.

In contrast, our method attains substantially higher scores without additional neural network training, relying solely on the MEI-focused LLM prompting design described in Section 3.2. These results highlight the method’s effectiveness in handling the challenging problem of plural reference linking, while underscoring its efficiency and practical applicability.

5.2. RAG Retrieval Performance

Table 3 compares retrieval performance between structured scene-level data and the original text chunked using a semantic chunking library.

Across all three books, the proposed structured format consistently outperformed the original on both evaluation metrics. Compared to the original format, Winnie-the-Pooh achieved a 34.45 percentage-point improvement in Recall@3 and a 25.0 percentage-point improvement in MRR@3. Similar improvements were observed in the other datasets, with Off the Record nearly doubling its performance and And What Can We Offer You Tonight showing more moderate but still clear gains. These results indicate that scene segmentation with metadata (Section 3.3) enhances retrieval relevance by preserving narrative structure, thereby boosting both accuracy and ranking.
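For readers less familiar with the two retrieval metrics, the following sketch shows one common way to compute them. It assumes each question is paired with a set of gold scene IDs and a ranked list of retrieved IDs; the function names and the example IDs are illustrative and not taken from the evaluation code.

```python
from typing import Dict, List, Set, Tuple


def recall_at_k(ranked: List[str], relevant: Set[str], k: int = 3) -> float:
    """Fraction of the relevant items that appear among the top-k results."""
    if not relevant:
        raise ValueError("relevant must be non-empty")
    return len(set(ranked[:k]) & relevant) / len(relevant)


def reciprocal_rank_at_k(ranked: List[str], relevant: Set[str], k: int = 3) -> float:
    """1/rank of the first relevant item within the top-k results, or 0.0 if none is found."""
    for rank, doc_id in enumerate(ranked[:k], start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(runs: List[Tuple[List[str], Set[str]]], k: int = 3) -> Dict[str, float]:
    """Average both metrics over (ranked results, gold set) pairs."""
    n = len(runs)
    return {
        f"Recall@{k}": sum(recall_at_k(r, g, k) for r, g in runs) / n,
        f"MRR@{k}": sum(reciprocal_rank_at_k(r, g, k) for r, g in runs) / n,
    }


# Two made-up queries: the first finds its gold scene at rank 2, the second misses it.
runs = [
    (["CH5_1", "CH5_2", "CH6_1"], {"CH5_2"}),
    (["CH1_1", "CH1_2", "CH1_3"], {"CH2_1"}),
]
scores = evaluate(runs)
```

With a single gold scene per question, Recall@3 reduces to a hit rate, while MRR@3 additionally rewards placing the gold scene earlier in the ranking.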

5.3. Speaker Identification Performance

Table 4 compares the performance of Fic2Bot with the BookNLP baseline in terms of Accuracy, macro F1, and micro F1. For Winnie-the-Pooh, the proposed model achieved an Accuracy of 0.9617, representing an improvement of approximately 21.85 percentage points over the baseline. Furthermore, the macro F1 increased from 0.7465 to 0.9995, and the micro F1 improved from 0.7898 to 0.9989, indicating near-perfect attribution performance.

A similar trend was observed for Off the Record, where Fic2Bot achieved an Accuracy of 0.9975 compared to 0.9366 for BookNLP. Macro F1 and micro F1 also improved substantially, by 18.63 and 24.47 percentage points, respectively.

For And What Can We Offer You Tonight, the frequent use of pronouns and implicit speaker references posed significant challenges for speaker attribution, leading to relatively low performance for BookNLP. However, Fic2Bot achieved an Accuracy of 0.9014, representing a 60.71 percentage-point improvement, while macro F1 and micro F1 increased by 58.50 and 65.53 percentage points, respectively, indicating its effectiveness under such challenging narrative conditions.

These findings demonstrate that the LLM-based prompting approach employed by Fic2Bot is highly effective for speaker–dialogue attribution in literary texts. By leveraging the contextual understanding capabilities of LLMs, Fic2Bot robustly handles implicit speaker cues and complex narrative structures.
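As a reference for how such attribution scores are typically obtained, the sketch below computes Accuracy and macro F1 from gold and predicted speaker labels. It assumes one speaker label per utterance; how counts were pooled for micro F1 in the reported results is not stated in this section, so micro F1 is deliberately left out. The labels in the example are invented.

```python
from collections import Counter
from typing import List


def accuracy(gold: List[str], pred: List[str]) -> float:
    """Share of utterances whose predicted speaker equals the gold speaker."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold: List[str], pred: List[str]) -> float:
    """Unweighted mean of per-speaker F1 over all speakers seen in gold or pred."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted speaker receives a false positive
            fn[g] += 1  # gold speaker receives a false negative
    scores = []
    for label in set(gold) | set(pred):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Invented example: one of four utterances is attributed to the wrong speaker.
gold = ["Pooh", "Pooh", "Piglet", "Eeyore"]
pred = ["Pooh", "Piglet", "Piglet", "Eeyore"]
acc, f1 = accuracy(gold, pred), macro_f1(gold, pred)
```

Because macro F1 weights every speaker equally, errors on rarely speaking characters affect it more than they affect Accuracy.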

5.4. Results of Stylistic Feature Analysis

Table 5 and Table 6 present the lexical similarity analysis across characters. Lexical similarity evaluation verifies whether each character employs a distinctive set of vocabulary and expressions. Low similarity indicates that the personas are clearly separable, providing evidence that such differentiated speaking styles can be faithfully incorporated into chatbot responses. The overall averages for each book remain below 0.14, indicating strong lexical separability and confirming that characters’ speech patterns are sufficiently distinct for style profiling. At the same time, a few character pairs with close narrative ties exhibit relatively high overlap; for instance, Jewel and Nero in And What Can We Offer You Tonight reach a cosine similarity of 0.7099. These cases reflect the natural vocabulary sharing that emerges between characters who are closely intertwined throughout the narrative, rather than indicating a lack of separability.

This suggests that the speaker–utterance segmentation technique described in Section 3.4 enables precise characterization of each character’s speech patterns and can be effectively leveraged for style profiling.
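The two similarity measures mentioned in this section can be illustrated with a small sketch. It is a simplification under stated assumptions: tokens are lowercased alphabetic strings, and cosine similarity is computed over raw term counts rather than TF-IDF weights, so the values will not match the reported tables. The example utterances are invented.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase and keep alphabetic tokens (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())


def jaccard(a_tokens: List[str], b_tokens: List[str]) -> float:
    """Vocabulary overlap: |A ∩ B| / |A ∪ B| over the sets of distinct tokens."""
    a, b = set(a_tokens), set(b_tokens)
    return len(a & b) / len(a | b) if a | b else 0.0


def cosine(a_tokens: List[str], b_tokens: List[str]) -> float:
    """Cosine similarity between raw term-count vectors."""
    a, b = Counter(a_tokens), Counter(b_tokens)
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Invented utterances for two characters.
speaker_a = tokenize("Oh, bother. Oh, honey!")
speaker_b = tokenize("Oh dear, oh dear.")
overlap = jaccard(speaker_a, speaker_b)
similarity = cosine(speaker_a, speaker_b)
```

Jaccard ignores word frequency, whereas cosine is sensitive to it, which is one reason the two measures can diverge for characters who share a few very frequent words.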

Table 7 presents an example of syntactic feature analysis results, focusing on representative characters from Winnie-the-Pooh among the three books analyzed. The results indicate that the character Winnie-the-Pooh exhibits the longest average utterance length (9.87 words, 50.86 characters) and the highest proportion of exclamation usage (28.21%), suggesting a more expressive emotional style. These findings imply that each speaker’s linguistic profile can be leveraged for style-based character modeling and maintaining persona consistency.
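A per-speaker profile of this kind can be computed with a few lines of code. The sketch below assumes whitespace tokenization and counts an utterance as exclamatory if it contains at least one exclamation mark; both choices are illustrative assumptions, and the exact definitions behind Table 7 may differ.

```python
from typing import Dict, List


def style_profile(utterances: List[str]) -> Dict[str, float]:
    """Average length in words and characters, and share of utterances containing '!'."""
    if not utterances:
        raise ValueError("utterances must be non-empty")
    n = len(utterances)
    return {
        "avg_words": sum(len(u.split()) for u in utterances) / n,
        "avg_chars": sum(len(u) for u in utterances) / n,
        "exclamation_pct": 100 * sum("!" in u for u in utterances) / n,
    }


profile = style_profile(["Oh, bother!", "I am a Bear of Very Little Brain."])
```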

5.5. Results of Sentiment Analysis

Table 8 presents the sentiment score distribution of the five main characters in Off the Record, classified on a 1–5 scale, where 1 indicates the most negative sentiment and 5 indicates the most positive sentiment, with 3 representing a neutral or moderately balanced tone. The full results for all characters are provided in Appendix D, Table A7.

For instance, Phil Jenkins exhibited the most pronounced positive tendency, with 75% of his utterances rated at the maximum score of 5 and no instances at the intermediate scores 2–4 (Appendix D, Table A7). In contrast, Simone Blake and Paisley McConkie displayed more balanced sentiment profiles, with utterances distributed across negative, neutral, and positive categories. These differences in emotional expression may reflect the distinct narrative roles and personality traits assigned to each character, serving as a key basis for defining emotional consistency and tone in persona construction.
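The per-character distributions are simple percentages over utterance-level scores. The following sketch shows the aggregation step only; it assumes that each utterance has already been assigned an integer score from 1 to 5 by a sentiment classifier, and the four scores in the example are made up.

```python
from collections import Counter
from typing import Dict, List


def sentiment_distribution(scores: List[int]) -> Dict[int, float]:
    """Percentage of utterances at each score from 1 (most negative) to 5 (most positive)."""
    if not scores:
        raise ValueError("scores must be non-empty")
    if any(s not in range(1, 6) for s in scores):
        raise ValueError("scores must be integers from 1 to 5")
    counts = Counter(scores)
    return {star: round(100 * counts[star] / len(scores), 2) for star in range(1, 6)}


# Made-up scores for a character with only four utterances.
distribution = sentiment_distribution([1, 5, 5, 5])
```

Characters with very few utterances produce coarse distributions, so percentages such as 0% or 100% should be read together with the underlying utterance counts.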

5.6. Results of Persona Consistency Evaluation in Multi-Turn Settings

Table 9 presents the results of persona consistency evaluation for chatbot responses assessed using LLMs. The generated multi-turn dialogues were evaluated on a 5-point scale for four dimensions—linguistic style, tone, vocabulary and emotional expression—measuring how closely they resembled the original characters.

The results show that across all novels and scenarios, the chatbot achieved an average score of 3.86 or higher, demonstrating a generally high level of consistency. Moreover, in response to the question, “Do the chatbot-generated utterances clearly match the style of the same character as portrayed in the original novel?”, over 76.7% of cases were judged as Yes, indicating that the chatbot successfully reproduced the characters’ speaking styles.

In particular, Pooh from Winnie-the-Pooh achieved a perfect score of 5.0/5.0 across all scenarios. This outcome suggests that the repetitive and simplified speech patterns of children’s literature characters (e.g., “Oh, bother,” “a Bear of Very Little Brain”) and their exaggerated expressions contributed positively to persona extraction and preservation.

Overall, the proposed framework achieved a high level of persona consistency across diverse genres and characters, with the results indicating that the more salient the linguistic features of a character, the stronger the consistency achieved.

5.7. Results of Module Effectiveness and Efficiency Analysis

5.7.1. Analysis of Module Effectiveness

Table 10 presents the comparison between the baseline and the full Fic2Bot pipeline, showing consistent improvements in persona consistency and dialogue quality across all three novels. To further clarify the source of these gains, we link the baseline comparison with the individual module analyses reported in Section 4.3.

Major Entity Identification (MEI): As reported in Section 5.1, MEI achieved macro F1 scores above 0.94, with notable improvements in handling plural references compared to previous approaches. This module provides a reliable foundation for consistent entity tracking, which is essential for sustaining persona coherence.

Scene-level RAG: Section 5.2 demonstrated that Recall@3 improved by +34–47 percentage points compared to chunk-based retrieval. MRR@3 also increased by +20–44 percentage points, confirming that scene segmentation directly enhances factual grounding in generated responses.

Speaker Attribution and Style Profiling: Section 5.4 showed that our speaker attribution and lexical–syntactic profiling achieved low inter-character similarity (average Jaccard < 0.14), enabling distinct stylistic separability and supporting consistent persona reproduction.

These findings complement the full pipeline comparison in Table 10. For example, And What Can We Offer You Tonight exhibited the most substantial improvement, rising from 3.70 to 4.38 (+0.68), indicating the framework’s effectiveness even in more structurally complex narratives. Winnie-the-Pooh also showed a clear gain, improving from 4.62 to 5.00 (+0.38), suggesting that highly distinctive characters benefit strongly from the integration of all modules. Off the Record showed smaller gains (+0.10), reflecting the difficulty of distinguishing characters in modern conversational settings.

In addition, outputs generated with Fic2Bot tended to contain richer factual details than those from the baseline. This can be attributed to the improved retrieval accuracy of scene-level RAG, which supplied more relevant narrative context to the generation process. However, because the evaluation relied on GPT-4, the scoring may have overemphasized surface-level stylistic features, potentially underestimating factual richness. This limitation highlights the need to complement LLM-based automatic evaluation with human studies to more comprehensively assess both style and factual fidelity.

In summary, the analysis indicates that (i) MEI secures consistent entity tracking, (ii) scene-level RAG strengthens factual accuracy, (iii) style profiling enforces persona distinctiveness, and (iv) their integration within Fic2Bot yields additive improvements in persona consistency and factual grounding across diverse genres.

5.7.2. Efficiency Analysis

For Winnie-the-Pooh, the response latency was the longest, with a median (p50) of 32.43 s and a 95th percentile (p95) of 37.41 s. This latency can be attributed to outputs that were, in tokens, more than ten times longer than those for the other two novels. The verbose and descriptive speaking style of the character led to lengthy outputs, producing more detailed responses but also incurring the highest cost (USD 0.0053).

For Off the Record, the response latency was p50 14.31 s and p95 19.97 s, with a relatively small gap between the two, indicating stable response times. The average input and output tokens were 2149.02 and 22.95, respectively. The character’s direct and concise speaking style kept the outputs short, resulting in efficient operation at the lowest cost (USD 0.0029).

For And What Can We Offer You Tonight, the response latency was p50 16.20 s and p95 20.50 s, remaining within a narrow range. The average input and output tokens were 2654 and 30, respectively, and the character’s compact and poetic style contributed to shorter outputs, resulting in a relatively low cost (USD 0.0036).

Among the three novels, Off the Record required the longest chatbot build time, approximately 110 minutes. This appears to stem from the frequent scene transitions characteristic of modern drama, which likely imposed additional overhead in the scene-level embedding process. In contrast, children’s literature such as Winnie-the-Pooh has a simpler scene structure, resulting in shorter build times. And What Can We Offer You Tonight fell between these two extremes. Since chatbots are built only once when a novel is ingested, such differences do not translate into significant burdens for real-time operation. Finally, the baseline, which relies on the entire text without additional retrieval or compression, exhibited shorter response latencies, with p50 and p95 ranging from a few seconds to ten seconds. However, the number of input tokens per query increased excessively, resulting in high costs across all three novels; because the full text is resent with every query, these costs accumulate rapidly in real-time operation.

6. Limitations

Several limitations remain in this study. First, the evaluation was confined to text-based novels, leaving applicability to multimodal narratives such as scripts or graphic novels untested.

Second, while prompt-based methods avoid additional fine-tuning, their heavy reliance on LLM prompting makes them vulnerable to performance variability across versions and providers. Notably, MultiLLM-Chatbot [43] evaluated five major LLM families across diverse metrics and domains, clearly demonstrating model-level differences. Although the overall architecture is still applicable, subtle variations in reasoning, style adherence, and retrieval alignment may cause inconsistencies, highlighting the need for systematic cross-LLM evaluation.

Third, human evaluation of subjective qualities such as fluency, empathy, and engagement was not included. Automated LLM-based judgments offer scalability but cannot fully capture human perception. Large-scale human studies will therefore be essential for validating persona consistency and understanding user experience in practice.

Finally, character style modeling relied on shallow linguistic features such as TF-IDF statistics, sentence length, and exclamation frequency. While informative, these features fail to capture deeper semantic, rhetorical, or narrative-level styles, limiting the richness of character expression. In addition, potential biases in sentiment analysis were not addressed in this study, which should be systematically examined in future work.

7. Conclusions

This paper presented Fic2Bot, an end-to-end framework that automatically transforms raw novel text into in-character chatbots by extracting character-specific linguistic and emotional profiles and integrating these with scene-grounded retrieval. Unlike conventional approaches, Fic2Bot achieves high performance without task-specific fine-tuning, making it both efficient and scalable.

Experiments across three novels demonstrated that the framework improves entity identification, retrieval precision, and persona consistency in multi-turn dialogues. These results highlight its ability to jointly enhance factual grounding and stylistic coherence. The significance of Fic2Bot lies not only in its empirical performance but also in its broader applicability, as it demonstrates scalability across diverse genres and narrative styles while adopting prompting strategies instead of additional training.

However, several promising directions remain for future research. First, generalizability should be validated beyond text-only novels, extending to diverse modalities and languages. Incorporating visual context, supported by recent advances in vision–language models [44] and multimodal RAG approaches [45], may enable richer narrative forms such as illustrated novels, scripts, and graphic narratives. Second, to mitigate dependence on LLM prompting, hybrid architectures that combine prompt-based methods with task-specific pretrained models should be explored, alongside systematic cross-LLM evaluations to assess robustness across model families. Third, large-scale human evaluations are needed to complement automated LLM-based judgments, particularly for subjective qualities such as fluency, empathy, and engagement. Finally, style modeling should move beyond shallow linguistic features (e.g., TF-IDF, sentence length) toward deeper semantic, rhetorical, and narrative-level modeling, while deployment feasibility must be further investigated with attention to efficiency, scalability, and lightweight implementations for real-world applications.

Author Contributions

Conceptualization, S.K., C.L. and S.J.; Funding acquisition, M.L.; Investigation, S.K., C.L. and S.J.; Methodology, S.K., C.L., S.J. and M.L.; Project administration, M.L.; Software, S.K., C.L. and S.J.; Supervision, M.L.; Writing—original draft, S.K., C.L. and S.J.; Writing—review & editing, M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Sungshin Women’s University Research Grant of 2025.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Appendix A.1

This prompt corresponds to the first stage of the MEI task.

Table A1.

Example prompt and corresponding input/output for the Coreference Resolution task.


| Prompt | You will receive a Text along with a list of Key Entities and their corresponding Cluster IDs as input. Your task is to perform Coreference Resolution on the provided text to categorize “each word belonging to a cluster” with its respective cluster id.

Follow the format below to label a word with its cluster ID:

word#cluster_id

Please keep in mind:

- Do not label general nouns, objects, or places.

- Only tag names, pronouns, or descriptive phrases that refer to characters.

- For example, tag both singular and plural references like: he, she, it, I, you, we, they, them, etc.

- If a pronoun refers to multiple known characters, tag it with all relevant cluster IDs (e.g., they#pooh,#piglet).

- Do not alter the content or order of the original text.

- Ensure the output adheres to the specified format for easy parsing.

- For characters not listed in Key Entities but appearing in the text, assign them the tag: #others

Key Entities: {entity_description}

Text: {text} |

| Input Example | Key Entities:

“Winnie-the-Pooh”: “#pooh”,

“Piglet”: “#piglet”

Text: Pooh went to the forest. He saw Piglet. They talked for hours. |

| Output Example | Pooh#pooh went to the forest. He#pooh saw Piglet#piglet. They#pooh,#piglet talked for hours. |
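Because the prompt requests an output format that is easy to parse, a downstream step can recover the mentions with a regular expression. The sketch below is one possible parser for the `word#cluster_id` format shown in Table A1; the function names are hypothetical and it handles only the single-word tags of this first stage.

```python
import re
from typing import List, Tuple

# A tagged word followed by one or more cluster IDs, e.g. "He#pooh" or "They#pooh,#piglet".
TAG = re.compile(r"([\w'-]+)#(\w+(?:,#\w+)*)")


def parse_mentions(tagged: str) -> List[Tuple[str, List[str]]]:
    """Return (word, [cluster IDs]) pairs in order of appearance."""
    return [(m.group(1), m.group(2).split(",#")) for m in TAG.finditer(tagged)]


def strip_tags(tagged: str) -> str:
    """Remove the cluster tags, which should reproduce the untagged input text."""
    return TAG.sub(r"\1", tagged)


tagged = "Pooh#pooh went to the forest. He#pooh saw Piglet#piglet. They#pooh,#piglet talked for hours."
mentions = parse_mentions(tagged)
plain = strip_tags(tagged)
```

Comparing `strip_tags(tagged)` with the original input offers a simple check that the model followed the instruction not to alter the content or order of the text.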

Appendix A.2

Table A2.

Prompt for expanding head words into full noun phrases.


| Description | This prompt instructs the task of expanding an already tagged head word into its complete noun phrase, marking the full span accordingly. |

| Prompt | Any word marked with # is the head of a noun phrase. Expand this head by including its full span (e.g., determiners, adjectives, and modifiers). Do not add or remove content beyond bracketing. Wrap the full span using the format: (FULL SPAN)#ClusterID. Only bracket the expanded noun phrase, do not modify other parts of the sentence. If the head is a possessive determiner, ONLY bracket the determiner, NOT the noun it modifies, UNLESS the noun itself has a #ClusterID tag.

Text: {text} |

| Input Example | Piglet#piglet wished very much that his#piglet Grandfather T. W.#others were there, instead of elsewhere. |

| Output Example | (Piglet)#piglet wished very much that (his Grandfather T. W.)#others were there, instead of elsewhere. |
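The bracketed spans produced by this second stage can be extracted in a similar way. The following sketch assumes that spans are never nested and that each span carries a single cluster ID, as in the example of Table A2; `parse_spans` is a hypothetical helper name.

```python
import re
from typing import List, Tuple

# A parenthesized span followed by its cluster ID, e.g. "(his Grandfather T. W.)#others".
SPAN = re.compile(r"\(([^()]+)\)#(\w+)")


def parse_spans(text: str) -> List[Tuple[str, str]]:
    """Return (full noun phrase, cluster ID) pairs in order of appearance."""
    return [(m.group(1), m.group(2)) for m in SPAN.finditer(text)]


output = "(Piglet)#piglet wished very much that (his Grandfather T. W.)#others were there, instead of elsewhere."
spans = parse_spans(output)
```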

Appendix A.3

Table A3.

Prompt for scene-based structuring after MEI.


| Description | This prompt instructs the task of segmenting text data, after completing Major Entity Identification (MEI), into distinct scenes and converting them into a structured JSON format. |

| Prompt | You are a Scene Annotator. You will receive a story text input from {position}. Your task is to segment the story into distinct scenes based on any change in one or more of the following elements:

- Location

- Time

- Characters present

For each scene,

- “Scene ID”: e.g., “CH5_1”, “CH5_2”

- “Position”: e.g., “CHAPTER_5”

- “Speakers”: list of speakers

- “Scene”: list of strings (full scene text including narration and dialogue)

- “Dialogue”: list of “Speaker: utterance” lines only

Use consistent and full speaker names in both the “Speakers” list and the “Dialogue” lines. Do not use shortened or partial names.

Refer to the following character list and ID mapping, and always use the full name when writing speaker names:

{character_entities}

Use this output format:

[{“Scene ID”: “CH5_1”, “Position”: “CHAPTER_5”, “Speakers”: [“Winnie-the-Pooh”, “Christopher Robin”], “Scene”: [“Winnie-the-Pooh walked through the forest...”, ““Hello!” said Christopher Robin.”, ““Hi!” said Winnie-the-Pooh.”], “Dialogue”: [“Christopher Robin: Hello!”, “Winnie-the-Pooh: Hi!”]}]

Now annotate the following chapter using SceneML:

{{chapter_text}} |

| Input Example | Position: CHAPTER_5

Character Entities:

“Winnie-the-Pooh”: “pooh”,

“Christopher Robin”: “robin”

Text: Winnie-the-Pooh walked through the forest. “Hello!” said Christopher Robin. “Hi!” said Winnie-the-Pooh. |

| Output Example | [{“Scene ID”: “CH5_1”, “Position”: “CHAPTER_5”, “Speakers”: [“Winnie-the-Pooh”, “Christopher Robin”], “Scene”: [“Winnie-the-Pooh walked through the forest.”, ““Hello!” said Christopher Robin.”, ““Hi!” said Winnie-the-Pooh.”], “Dialogue”: [“Christopher Robin: Hello!”, “Winnie-the-Pooh: Hi!”]}] |
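Since the structured scenes feed the retrieval index, it is useful to validate the model output before indexing. The sketch below checks the fields requested in Table A3 and converts a scene into a record for a vector store; the record layout (`id`, `text`, `metadata`) is an assumption for illustration and is not specified in the paper.

```python
import json
from typing import Any, Dict, List

REQUIRED_KEYS = ("Scene ID", "Position", "Speakers", "Scene", "Dialogue")


def load_scenes(raw: str) -> List[Dict[str, Any]]:
    """Parse the JSON output and check required keys and speaker-name consistency."""
    scenes = json.loads(raw)
    for scene in scenes:
        missing = [key for key in REQUIRED_KEYS if key not in scene]
        if missing:
            raise ValueError(f"scene is missing keys: {missing}")
        for line in scene["Dialogue"]:
            speaker = line.split(":", 1)[0]
            if speaker not in scene["Speakers"]:
                raise ValueError(f"unknown speaker in dialogue line: {speaker!r}")
    return scenes


def to_record(scene: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten a scene into a retrieval record (hypothetical layout)."""
    return {
        "id": scene["Scene ID"],
        "text": " ".join(scene["Scene"]),
        "metadata": {"position": scene["Position"], "speakers": scene["Speakers"]},
    }


# The output example from Table A3, serialized with straight quotes.
raw = json.dumps([{
    "Scene ID": "CH5_1",
    "Position": "CHAPTER_5",
    "Speakers": ["Winnie-the-Pooh", "Christopher Robin"],
    "Scene": [
        "Winnie-the-Pooh walked through the forest.",
        '"Hello!" said Christopher Robin.',
        '"Hi!" said Winnie-the-Pooh.',
    ],
    "Dialogue": ["Christopher Robin: Hello!", "Winnie-the-Pooh: Hi!"],
}])
records = [to_record(scene) for scene in load_scenes(raw)]
```

The speaker check enforces the prompt's requirement that full, consistent names appear in both the "Speakers" list and the "Dialogue" lines.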

Appendix B

Table A4.

Prompt for question rewriting.


| Description | You are a helpful assistant that rewrites user questions to make them more effective for document retrieval systems. |

| Instructions | Please rewrite the question below to: Remove ambiguity while preserving the original intent. Keep the format as a question, not a declarative sentence. Avoid guessing specific contexts not mentioned in the question. Use concise and keyword-rich phrasing suitable for retrieval. |

| Original Question | {original_query} |

| Rewritten Question | {rewritten_query} |

This prompt instructs the model to rewrite the question while preserving its intent, maintaining the question format, and making it suitable for retrieval.

Appendix C

Appendix C provides the prompt templates used in Section 4.4 for persona consistency evaluation. They were applied to simulate user utterances in different scenarios and to guide GPT-4 in judging consistency. Each subsection presents the exact prompt texts given to the models.

Table A5.

User prompt guidelines for each scenario.


| ordinary conversation | You are simulating the USER in a two-person chat. Write the user’s next single message in a neutral tone. Avoid role labels. Keep it short (1–2 sentences). |

| emotional conversation | You are simulating the USER in a two-person chat. Write the user’s next single message with emotional intensity, responding to a conflict or disagreement. Avoid role labels. Keep it short (1–2 sentences). |

| fact-based conversation (first) | You are the USER inside a novel scene. Read the scene excerpt(s) below and write your first chat message as if you are physically present in that situation, addressing {character_name} directly.

– The message should be a curious or probing question about what’s happening in the scene now.

– Use 1–2 short sentences. Avoid role labels.

— Scene Excerpts —

{Scene}

Now write just the single user message (no quotes, no labels). |

| fact-based conversation (follow-up) | You are the USER inside the same ongoing novel scene. Stay fully in-world and speak as if you are physically here with the characters right now.

Rules:

· React directly to the assistant’s last line in-character.

· Use present tense; deictic words like here/now/this are fine if natural.

· Keep it to 1–2 short sentences.

· No role labels, no quotes, no stage directions, no meta-commentary.

· If possible, reference a concrete detail from the assistant’s line. |

Table A6.

Evaluation guidelines for character consistency analysis.


| Role | You are an expert evaluator for character consistency analysis. You will be shown utterances from THREE different characters: Paisley, Hudson, and Leo. Each block contains multiple utterances representing that character’s style. You will then be shown a [Target] block of utterances. |

| Task | 1. Compare [Target] against ALL THREE character styles (Paisley, Hudson, Leo).

2. Based on similarity across four aspects, decide whether [Target] matches Paisley specifically.

3. Output ONLY in the format below. |

| Evaluation Criteria (1–5 scale) | 1. Linguistic Style: Word choice patterns, punctuation usage (periods, ellipses, exclamations, questions), formality level, sentence length

2. Tone: Emotional attitude (formal/casual, warm/cold), confidence level (certain vs hesitant)

3. Vocabulary: Characteristic or idiosyncratic words/phrases, simplicity vs complexity, catchphrases or signature expressions

4. Emotional Expression: How the character expresses feelings (worry, joy, curiosity, etc.)

5. Dialogue Coherence: Appropriateness of the response to preceding user utterance, variability of expression (avoid repetition) |

| Guidelines | - Compare [Target] against ALL THREE characters, not just Paisley.

- “Yes” only if [Target] clearly matches Paisley’s stylistic and tonal patterns better than Hudson or Leo.

- If evidence is weak or ambiguous, reflect lower confidence.

- In the reason, cite specific linguistic evidence. |

| Output Format | “Linguistic Style”: 1–5,

“Tone”: 1–5,

“Vocabulary”: 1–5,

“Emotional Expression”: 1–5,

“Dialogue Coherence”: 1–5,

“average”: (rounded average of the four scores, 1 decimal),

“answer”: “Yes|No”,

“confidence”: “0–100%”,

“reason”: “≤50 words explaining your decision with reference to the criteria” |
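The judge's reply can be post-processed with a small parser. The sketch below is written under several assumptions that are not confirmed by the paper: the model returns straight-quoted key–value pairs that become valid JSON once wrapped in braces, the reported average is recomputed from the four persona aspects rather than trusted from the model, and Dialogue Coherence is kept as a separate score. The sample reply is hypothetical.

```python
import json
from typing import Any, Dict

PERSONA_ASPECTS = ("Linguistic Style", "Tone", "Vocabulary", "Emotional Expression")


def parse_judgement(raw: str) -> Dict[str, Any]:
    """Parse a judge reply and recompute the persona average from the four aspects."""
    text = raw.strip()
    if not text.startswith("{"):
        # The requested format lists key-value pairs without enclosing braces.
        text = "{" + text.rstrip(",") + "}"
    data = json.loads(text)
    scores = [int(data[aspect]) for aspect in PERSONA_ASPECTS]
    if any(not 1 <= s <= 5 for s in scores):
        raise ValueError("aspect scores must lie between 1 and 5")
    return {
        "average": round(sum(scores) / len(scores), 1),
        "dialogue_coherence": data.get("Dialogue Coherence"),
        "same_character": data["answer"].strip().lower() == "yes",
        "confidence_pct": float(str(data["confidence"]).rstrip("%")),
        "reason": data["reason"],
    }


# Hypothetical judge reply in the requested format.
sample = '''"Linguistic Style": 4,
"Tone": 5,
"Vocabulary": 4,
"Emotional Expression": 5,
"Dialogue Coherence": 5,
"average": 4.5,
"answer": "Yes",
"confidence": "85%",
"reason": "Hypothetical rationale citing word choice and tone."'''
result = parse_judgement(sample)
```

Recomputing the average locally avoids depending on the model's arithmetic, which is a design choice of this sketch rather than a documented step of the evaluation.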

Appendix D

This table presents the sentiment score distribution for the characters in Off the Record, derived from the sentiment analysis described in the study.

Table A7.

Sentiment score distribution for the characters in Off the Record (%).


| Character | 1 Star | 2 Stars | 3 Stars | 4 Stars | 5 Stars |
|---|---|---|---|---|---|
| Andrea | 27.78 | 8.33 | 22.22 | 5.56 | 36.11 |
| Carrie | 33.33 | 4.17 | 25.00 | 8.33 | 29.17 |
| Dalton | 33.33 | 16.67 | 33.33 | 0.00 | 16.67 |
| Dorian | 42.86 | 14.29 | 14.29 | 14.29 | 14.29 |
| Hudson Owens | 23.58 | 6.50 | 31.30 | 17.07 | 21.54 |
| Kyla Langford | 0.00 | 0.00 | 100.00 | 0.00 | 0.00 |
| Leo Davis | 20.00 | 5.00 | 15.00 | 5.00 | 55.00 |
| Linus | 28.57 | 14.29 | 14.29 | 14.29 | 28.57 |
| Lucy | 0.00 | 0.00 | 0.00 | 50.00 | 50.00 |
| Luke | 100.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Moria Owens | 50.00 | 50.00 | 0.00 | 0.00 | 0.00 |
| Paisley McConkie | 29.50 | 10.25 | 26.09 | 9.94 | 24.22 |
| Phil Jenkins | 25.00 | 0.00 | 0.00 | 0.00 | 75.00 |
| Prescott | 0.00 | 0.00 | 66.67 | 0.00 | 33.33 |
| Simone Blake | 29.76 | 7.14 | 27.38 | 11.90 | 23.81 |
| Stan | 40.00 | 20.00 | 20.00 | 0.00 | 20.00 |
| Tina | 25.00 | 12.50 | 0.00 | 25.00 | 37.50 |
| Ralph | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |

Appendix E

Figure A1.

Comparative Analysis of Star Ratings and Linguistic Features Across Conversation Types. Abbreviations: Wds = Avg. Words; Chars = Avg. Characters; Excl = Exclamation; Ques = Question; Neg = Negation; 1stP = First-Person.


References

- Mardiana, N.; Da, R.O. The Phenomenon of Use of Chatbot Character AI by ARMY-BTS. In Proceedings of the International Seminar Enrichment of Career by Knowledge of Language and Literature, Tokyo, Japan, 23–25 November 2024; pp. 65–74. [Google Scholar]

- Han, S.; Kim, B.; Yoo, J.Y.; Seo, S.; Kim, S.; Erdenee, E.; Chang, B. Meet your favorite character: Open-domain chatbot mimicking fictional characters with only a few utterances. arXiv 2022, arXiv:2204.10825. [Google Scholar] [CrossRef]

- Wang, X.; Wang, H.; Zhang, Y.; Yuan, X.; Xu, R.; Huang, J.-t.; Yuan, S.; Guo, H.; Chen, J.; Zhou, S. Coser: Coordinating llm-based persona simulation of established roles. arXiv 2025, arXiv:2502.09082. [Google Scholar]

- Character.AI. Available online: https://character.ai (accessed on 9 July 2025).

- Liu, Y.; Wei, W.; Liu, J.; Mao, X.; Fang, R.; Chen, D. Improving personality consistency in conversation by persona extending. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–22 October 2022; pp. 1350–1359.

- Xue, B.; Wang, W.; Wang, H.; Mi, F.; Wang, R.; Wang, Y.; Shang, L.; Jiang, X.; Liu, Q.; Wong, K.-F. Improving Factual Consistency for Knowledge-Grounded Dialogue Systems via Knowledge Enhancement and Alignment. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 7829–7844.

- Kovacevic, N.; Boschung, T.; Holz, C.; Gross, M.; Wampfler, R. Chatbots with attitude: Enhancing chatbot interactions through dynamic personality infusion. In Proceedings of the 6th ACM Conference on Conversational User Interfaces, Waterloo, ON, Canada, 8–10 July 2024; pp. 1–16.

- Wang, Y.; Leung, J.; Shen, Z. RoleRAG: Enhancing LLM Role-Playing via Graph Guided Retrieval. arXiv 2025, arXiv:2505.18541.

- Liu, D.; Wu, Z.; Song, D.; Huang, H. A Persona-Aware LLM-Enhanced Framework for Multi-Session Personalized Dialogue Generation. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2025, Vienna, Austria, 27 July–1 August 2025; pp. 103–123.

- Zhu, S.; Ma, T.; Rong, H.; Al-Nabhan, N. A Personalized Multi-Turn Generation-Based Chatbot with Various-Persona-Distribution Data. Appl. Sci. 2023, 13, 3122.

- Vishnubhotla, K.; Rudzicz, F.; Hirst, G.; Hammond, A. Improving Automatic Quotation Attribution in Literary Novels. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; pp. 737–746.

- Wang, N.; Peng, Z.y.; Que, H.; Liu, J.; Zhou, W.; Wu, Y.; Guo, H.; Gan, R.; Ni, Z.; Yang, J.; et al. RoleLLM: Benchmarking, Eliciting, and Enhancing Role-Playing Abilities of Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 14743–14777.

- Hong, M.; Zhang, C.J.; Chen, C.; Lian, R.; Jiang, D. Dialogue Language Model with Large-Scale Persona Data Engineering. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies, Albuquerque, NM, USA, 29 April–4 May 2025; pp. 961–970.

- Liu, Y.; Zhang, Y.; Patel, S.O.; Zhu, Z.; Guo, S. HonkaiChat: Companions from Anime that feel alive! arXiv 2025, arXiv:2501.03277.

- Lee, O.; Joseph, K. A large-scale analysis of public-facing, community-built chatbots on Character.AI. arXiv 2025, arXiv:2505.13354.

- Lu, K.; Yu, B.; Zhou, C.; Zhou, J. Large Language Models are Superpositions of All Characters: Attaining Arbitrary Role-play via Self-Alignment. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; pp. 7828–7840.

- Li, C.; Leng, Z.; Yan, C.; Shen, J.; Wang, H.; Mi, W.; Fei, Y.; Feng, X.; Yan, S.; Wang, H. Chatharuhi: Reviving anime character in reality via large language model. arXiv 2023, arXiv:2308.09597.

- Roesiger, I.; Schulz, S.; Reiter, N. Towards Coreference for Literary Text: Analyzing Domain-Specific Phenomena. In Proceedings of the Second Joint SIGHUM Workshop on Computational Linguistics for Cultural Heritage, Social Sciences, Humanities and Literature, Santa Fe, NM, USA, 25 August 2018; pp. 129–138.

- Gardner, M.; Grus, J.; Neumann, M.; Tafjord, O.; Dasigi, P.; Liu, N.F.; Peters, M.; Schmitz, M.; Zettlemoyer, L. AllenNLP: A Deep Semantic Natural Language Processing Platform. In Proceedings of the Workshop for NLP Open Source Software (NLP-OSS), Melbourne, Australia, 15–20 July 2018; pp. 1–6.

- Joshi, M.; Levy, O.; Zettlemoyer, L.; Weld, D. BERT for Coreference Resolution: Baselines and Analysis. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5803–5808.

- Joshi, M.; Chen, D.; Liu, Y.; Weld, D.S.; Zettlemoyer, L.; Levy, O. Spanbert: Improving pre-training by representing and predicting spans. Trans. Assoc. Comput. Linguist. 2020, 8, 64–77.

- BookNLP. BookNLP: A Natural Language Processing Pipeline for Books. GitHub Repository. 2023. Available online: https://github.com/booknlp/booknlp (accessed on 9 August 2025).

- Bamman, D.; Lewke, O.; Mansoor, A. An Annotated Dataset of Coreference in English Literature. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 44–54.

- Sundar, K.M.; Toshniwal, S.; Tapaswi, M.; Gandhi, V. Major Entity Identification: A Generalizable Alternative to Coreference Resolution. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 11679–11695.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-t.; Rocktäschel, T. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

- Fan, W.; Ding, Y.; Ning, L.; Wang, S.; Li, H.; Yin, D.; Chua, T.-S.; Li, Q. A survey on rag meeting llms: Towards retrieval-augmented large language models. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 6491–6501.

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997.

- Olsson, F. Beyond the Basics: Advanced Retrieval Techniques for RAG Systems: Assessing the Impact of Sentence-Window Retrieval and Auto-Merging Retrieval on the Performance of a RAG System in a Swedish Management Consulting Company. Master’s Thesis, KTH, School of Electrical Engineering and Computer Science, Stockholm, Sweden, 2024; p. 53.

- Swacha, J.; Gracel, M. Retrieval-Augmented Generation (RAG) Chatbots for Education: A Survey of Applications. Appl. Sci. 2025, 15, 4234.

- Akkiraju, R.; Xu, A.; Bora, D.; Yu, T.; An, L.; Seth, V.; Shukla, A.; Gundecha, P.; Mehta, H.; Jha, A. Facts about building retrieval augmented generation-based chatbots. arXiv 2024, arXiv:2407.07858.

- Khan, U.A.; Khan, F.; Khan, E.; Hasnain, M.A.; Moinuddin, A.A. Towards Efficient Educational Chatbots: Benchmarking RAG Frameworks. In Proceedings of the 2025 International Conference on Emerging Technologies in Computing and Communication (ETCC), Bangalore, India, 26–27 June 2025; pp. 1–6.

- Gargari, O.K.; Habibi, G. Enhancing medical AI with retrieval-augmented generation: A mini narrative review. Digit. Health 2025, 11, 20552076251337177.

- Khasanova Zafar kizi, M.; Suh, Y. Design and Performance Evaluation of LLM-Based RAG Pipelines for Chatbot Services in International Student Admissions. Electronics 2025, 14, 3095.

- Alrashid, T.; Gaizauskas, R.J. A Pilot Study on Annotating Scenes in Narrative Text using SceneML. In Proceedings of the Text2Story Workshop at ECIR, Online, 1 April 2021; pp. 7–14.

- Zeng, N.; Hou, H.; Yu, F.R.; Shi, S.; He, Y.T. SceneRAG: Scene-level Retrieval-Augmented Generation for Video Understanding. arXiv 2025, arXiv:2506.07600.

- Lee, J.; Chen, F.; Dua, S.; Cer, D.; Shanbhogue, M.; Naim, I.; Ábrego, G.H.; Li, Z.; Chen, K.; Vera, H.S. Gemini embedding: Generalizable embeddings from gemini. arXiv 2025, arXiv:2503.07891.

- FAISS. Available online: https://faiss.ai/ (accessed on 8 August 2025).

- BAAI. Bge-Reranker-Base. Hugging Face. 2023. Available online: https://huggingface.co/BAAI/bge-reranker-base (accessed on 9 August 2025).

- NLPTown. BERT Base Multilingual Uncased Sentiment. Available online: https://huggingface.co/nlptown/bert-base-multilingual-uncased-sentiment (accessed on 8 August 2025).

- Li, Y.; Su, H.; Shen, X.; Li, W.; Cao, Z.; Niu, S. DailyDialog: A Manually Labelled Multi-turn Dialogue Dataset. In Proceedings of the Eighth International Joint Conference on Natural Language Processing, Taipei, Taiwan, 27 November–1 December 2017; Volume 1: Long Papers, pp. 986–995.

- Chen, H.Y.; Zhou, E.; Choi, J.D. Robust coreference resolution and entity linking on dialogues: Character identification on tv show transcripts. In Proceedings of the 21st Conference on Computational Natural Language Learning (CoNLL 2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 216–225.

- Zhou, E.; Choi, J.D. They exist! introducing plural mentions to coreference resolution and entity linking. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 24–34.

- Chakraborty, S.; Chowdhury, R.; Shuvo, S.R.; Chatterjee, R.; Roy, S. A scalable framework for evaluating multiple language models through cross-domain generation and hallucination detection. Sci. Rep. 2025, 15, 29981.

- Yu, X.; Yoo, S.; Lin, Y. Clipceil: Domain generalization through clip via channel refinement and image-text alignment. Adv. Neural Inf. Process. Syst. 2024, 37, 4267–4294.

- Joshi, P.; Gupta, A.; Kumar, P.; Sisodia, M. Robust Multi Model RAG Pipeline for Documents Containing Text, Table & Images. In Proceedings of the 2024 3rd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Rajkot, India, 27–29 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 993–999.

Figure 1.

Overall architecture of the Fic2Bot framework, which consists of parallel factual and persona processing paths that are integrated in the final generation stage.

Figure 2.

Two-stage Major Entity Identification process. The original story text is first processed with Prompt 1 for word-level mention detection and Prompt 2 for span expansion and entity linking. Using the LLM, all mentions are linked to major characters, resulting in a structured entity-annotated text for downstream tasks.

Figure 3.

Proposed RAG pipeline optimized for character-consistent chatbot generation.

Figure 4.

Final prompt used for generating chatbot responses, which integrates the results of scene retrieval and persona extraction.

Figure 5.

Character-specific linguistic patterns visualized through distinctive keywords. This word cloud not only highlights distinctive lexical tendencies but also illustrates the extracted utterances that served as input for subsequent analyses, including sentiment evaluation.

Table 1.

Basic statistics of the novels used as experimental data in this study.

| | Winnie-the-Pooh | Off the Record | And What Can We Offer You Tonight |
|---|---|---|---|
| Author | A. A. Milne | Kasey Stockton | Premee Mohamed |
| Genre | Child | Romance Comedy | Revenge Drama |
| Tokens 1 | 28,228 | 24,795 | 29,466 |
| Narrative Perspective | third-person point of view | first-person point of view | first-person point of view |
| Main Characters 2 | 12 | 18 | 9 |

Table 2.

NER and MEI F1 scores for each book.

| Book | NER F1 | MEI F1 (Singular, Macro) | MEI F1 (Singular, Micro) | MEI F1 (Plural, Macro) | MEI F1 (Plural, Micro) |
|---|---|---|---|---|---|
| Winnie-the-Pooh | 0.9933 | 0.9570 | 0.9954 | 0.9460 | 0.9510 |
| Off the Record | 0.9914 | 0.9620 | 0.9908 | 0.9490 | 0.9518 |
| And What Can We Offer You Tonight | 0.9738 | 0.9633 | 0.9650 | 0.9389 | 0.9463 |
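Table 2 reports both macro- and micro-averaged F1 for MEI. As a reading aid, the following minimal sketch shows how the two averages differ under the standard definitions. It assumes per-entity counts of true positives, false positives, and false negatives are available; the function names, entity names, and counts are hypothetical and are not taken from the experiments.

```python
from typing import Dict, Tuple

# (true positives, false positives, false negatives) for one entity
Counts = Tuple[int, int, int]


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 from raw counts; defined as 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(per_entity: Dict[str, Counts]) -> float:
    """Unweighted mean of per-entity F1: every entity counts equally."""
    return sum(f1(*c) for c in per_entity.values()) / len(per_entity)


def micro_f1(per_entity: Dict[str, Counts]) -> float:
    """F1 over pooled counts: frequently mentioned entities dominate."""
    tp = sum(c[0] for c in per_entity.values())
    fp = sum(c[1] for c in per_entity.values())
    fn = sum(c[2] for c in per_entity.values())
    return f1(tp, fp, fn)


if __name__ == "__main__":
    # Hypothetical counts: one frequent entity, one rare entity.
    counts = {"frequent_entity": (90, 5, 5), "rare_entity": (8, 2, 6)}
    print(f"macro F1 = {macro_f1(counts):.4f}")
    print(f"micro F1 = {micro_f1(counts):.4f}")
```

Because micro-averaging pools mention counts, a system that handles frequent characters well can score higher on micro F1 than on macro F1, which is the pattern visible in every row of Table 2.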

Table 3.

Comparison of retrieval performance (Recall@3 and MRR@3) between structured (ours) and original formats for each book.

| Book | Version | Recall@3 | MRR@3 |
|---|---|---|---|
| Winnie-the-Pooh | Structured | 0.7667 | 0.7333 |
| | Original | 0.4222 | 0.4833 |
| Off the Record | Structured | 0.7667 | 0.7000 |
| | Original | 0.3000 | 0.2611 |
| And What Can We Offer You Tonight | Structured | 0.5000 | 0.4389 |
| | Original | 0.2333 | 0.2333 |
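For readers unfamiliar with the metrics in Table 3, the sketch below computes Recall@k and MRR@k under their standard definitions. It is an illustration only: it assumes each query has a set of gold scene identifiers and a ranked list of retrieved scene identifiers, and the function names and scene IDs are hypothetical rather than taken from the Fic2Bot evaluation code.

```python
from typing import List, Sequence, Set, Tuple


def recall_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 3) -> float:
    """Fraction of the relevant items that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(ranked[:k]) & relevant) / len(relevant)


def mrr_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 3) -> float:
    """Reciprocal rank of the first relevant item in the top-k, else 0."""
    for rank, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(runs: List[Tuple[Sequence[str], Set[str]]], k: int = 3) -> Tuple[float, float]:
    """Average Recall@k and MRR@k over all (ranked, relevant) query pairs."""
    n = len(runs)
    recall = sum(recall_at_k(r, g, k) for r, g in runs) / n
    mrr = sum(mrr_at_k(r, g, k) for r, g in runs) / n
    return recall, mrr


if __name__ == "__main__":
    # Hypothetical queries: (retrieved scene IDs in rank order, gold scene IDs).
    runs = [
        (["s3", "s1", "s7"], {"s1"}),        # gold scene found at rank 2
        (["s2", "s9", "s4"], {"s2", "s5"}),  # one of two gold scenes at rank 1
        (["s8", "s6", "s0"], {"s1"}),        # gold scene missed
    ]
    recall, mrr = evaluate(runs, k=3)
    print(f"Recall@3 = {recall:.4f}, MRR@3 = {mrr:.4f}")
```

Under these definitions, MRR@3 can exceed Recall@3 only when some queries have more than one relevant scene, so a row where that happens (such as the Original version of Winnie-the-Pooh) would be consistent with multi-scene gold labels.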

Table 4.

Accuracy and F1 scores of speaker attribution models across books. BookNLP is used as the baseline, while Fic2Bot represents our proposed model. All models are evaluated on the task of matching utterances to their corresponding speakers.

Table 4.